DPIBP: Dining Philosophers Problem-Inspired Binary Patterns for Facial Expression Recognition

Abstract

1. Introduction

- Inspired by the Dining Philosophers Problem, a novel feature extraction method named Dining Philosophers Problem-Inspired Binary Patterns (DPIBP) is proposed to extract robust features in a local 5 × 5 neighborhood.

- Four variants of the DPIBP method, namely DPIBP1, DPIBP2, DPIBP3, and DPIBP4, are proposed, each corresponding to a different angle.

- Each DPIBP method generates three feature codes by considering the positions of the philosophers, chopsticks, and noodles in a local 5 × 5 neighborhood, with fewer dimensions than traditional variants of Local Binary Patterns (LBPs).

- The proposed DPIBP methods are evaluated on the standard FER datasets JAFFE, CK+, MUG, and TFEID under a person-independent protocol to validate their efficiency in real-time scenarios.
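The idea of deriving several short binary codes from one 5 × 5 neighborhood can be sketched as follows. This is a minimal illustration, not the DPIBP definition: the three offset groups (`group_a`, `group_b`, `group_c`) and the threshold-against-center rule are assumptions chosen for the example, and the actual philosopher, chopstick, and noodle positions are not reproduced here.

```python
from typing import Dict, List, Sequence, Tuple

Offset = Tuple[int, int]  # (row, col) relative to the patch centre

# HYPOTHETICAL offset groups inside a 5x5 patch. They only illustrate splitting
# the neighbourhood into three sets; they are NOT the philosopher, chopstick,
# and noodle positions defined by DPIBP.
GROUPS: Dict[str, List[Offset]] = {
    "group_a": [(-2, 0), (0, 2), (2, 0), (0, -2)],
    "group_b": [(-2, -2), (-2, 2), (2, 2), (2, -2)],
    "group_c": [(-1, 0), (0, 1), (1, 0), (0, -1)],
}


def binary_code(patch: Sequence[Sequence[int]], offsets: Sequence[Offset]) -> int:
    """Threshold each selected neighbour against the centre pixel (LBP-style)."""
    centre = patch[2][2]
    code = 0
    for bit, (dr, dc) in enumerate(offsets):
        if patch[2 + dr][2 + dc] >= centre:
            code |= 1 << bit  # neighbour at this position sets its own bit
    return code


def patch_codes(patch: Sequence[Sequence[int]]) -> Dict[str, int]:
    """Return one binary code per offset group for a single 5x5 patch."""
    if len(patch) != 5 or any(len(row) != 5 for row in patch):
        raise ValueError("expected a 5x5 patch")
    return {name: binary_code(patch, offs) for name, offs in GROUPS.items()}


patch = [
    [10, 20, 30, 20, 10],
    [20, 40, 60, 40, 20],
    [30, 60, 50, 60, 30],
    [20, 40, 60, 40, 20],
    [10, 20, 30, 20, 10],
]
codes = patch_codes(patch)
print(codes)
```

In this sketch each group contributes a 4-bit code, so three histograms need 3 × 16 = 48 bins, compared with 256 bins for a single 8-bit LBP code. The bit widths of the real DPIBP codes may differ.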

2. Related Work

3. Proposed Method

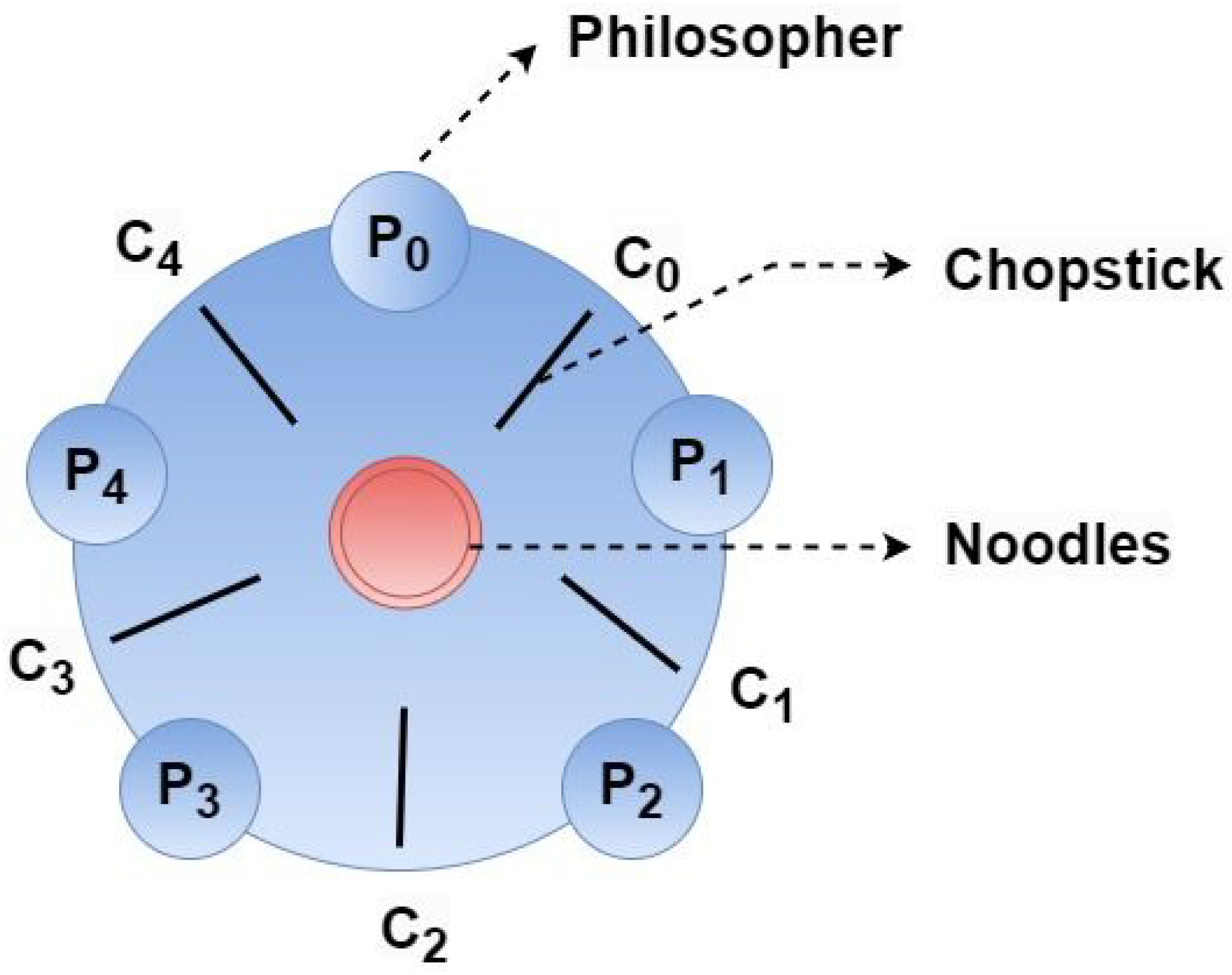

3.1. Dining Philosophers Problem

3.2. Theoretical Grounding of the Dining Philosophers Analogy

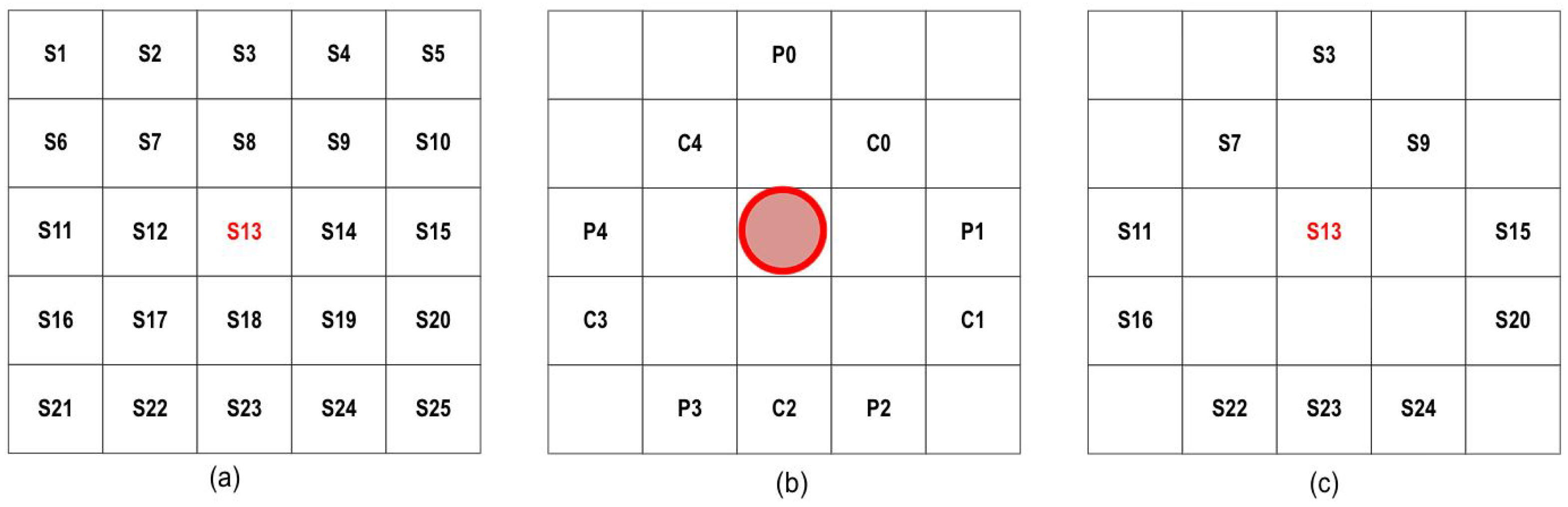

3.3. Feature Extraction Using DPIBP Method

3.3.1. DPIBP1 Feature Extraction

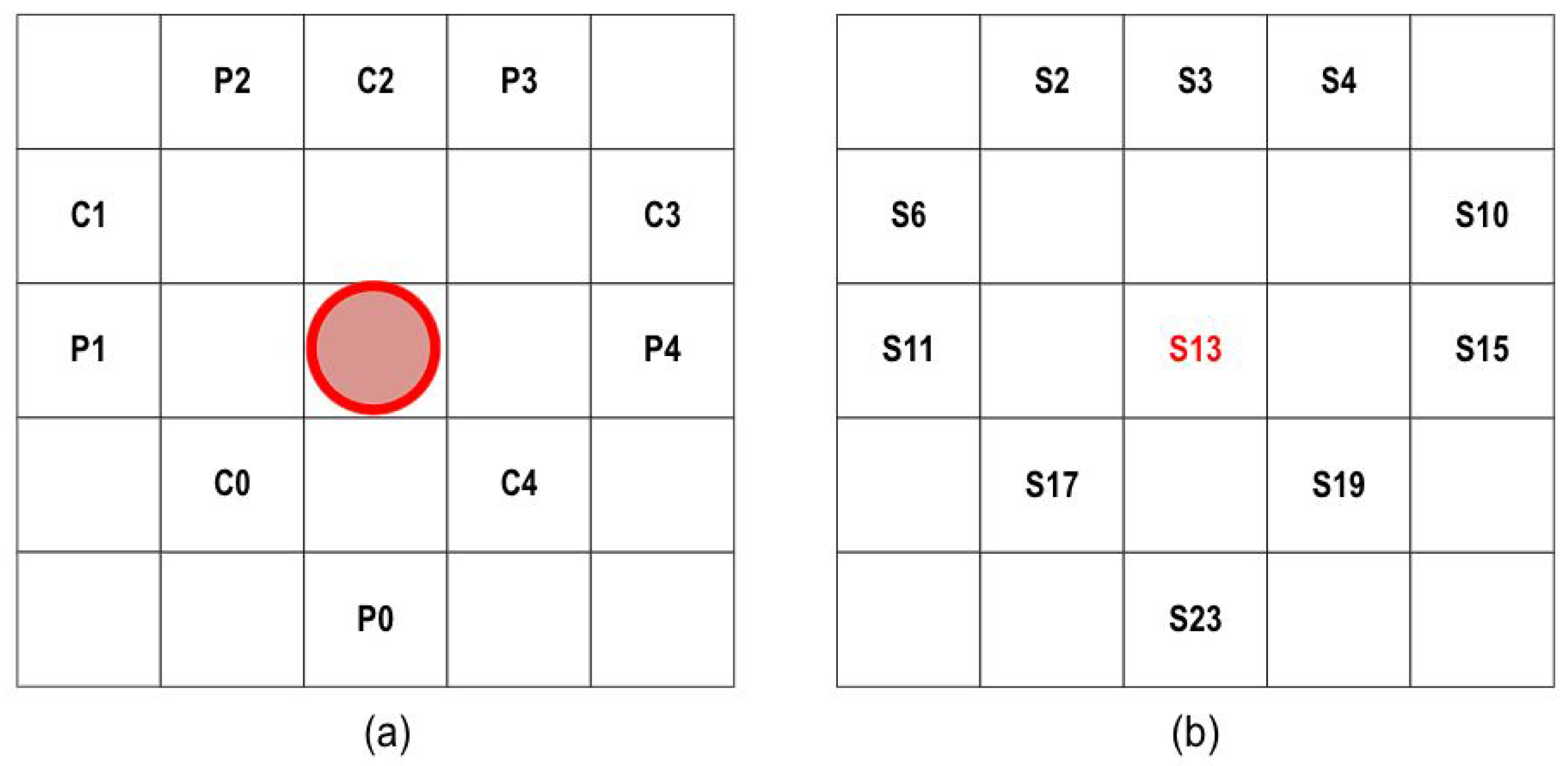

3.3.2. DPIBP2

3.3.3. DPIBP3

3.3.4. DPIBP4

3.4. Distinctiveness of DPIBP4 and Its Contribution

4. Experimental Results and Analysis

4.1. Datasets

4.2. Experimental Setup

4.3. Comparison Analysis

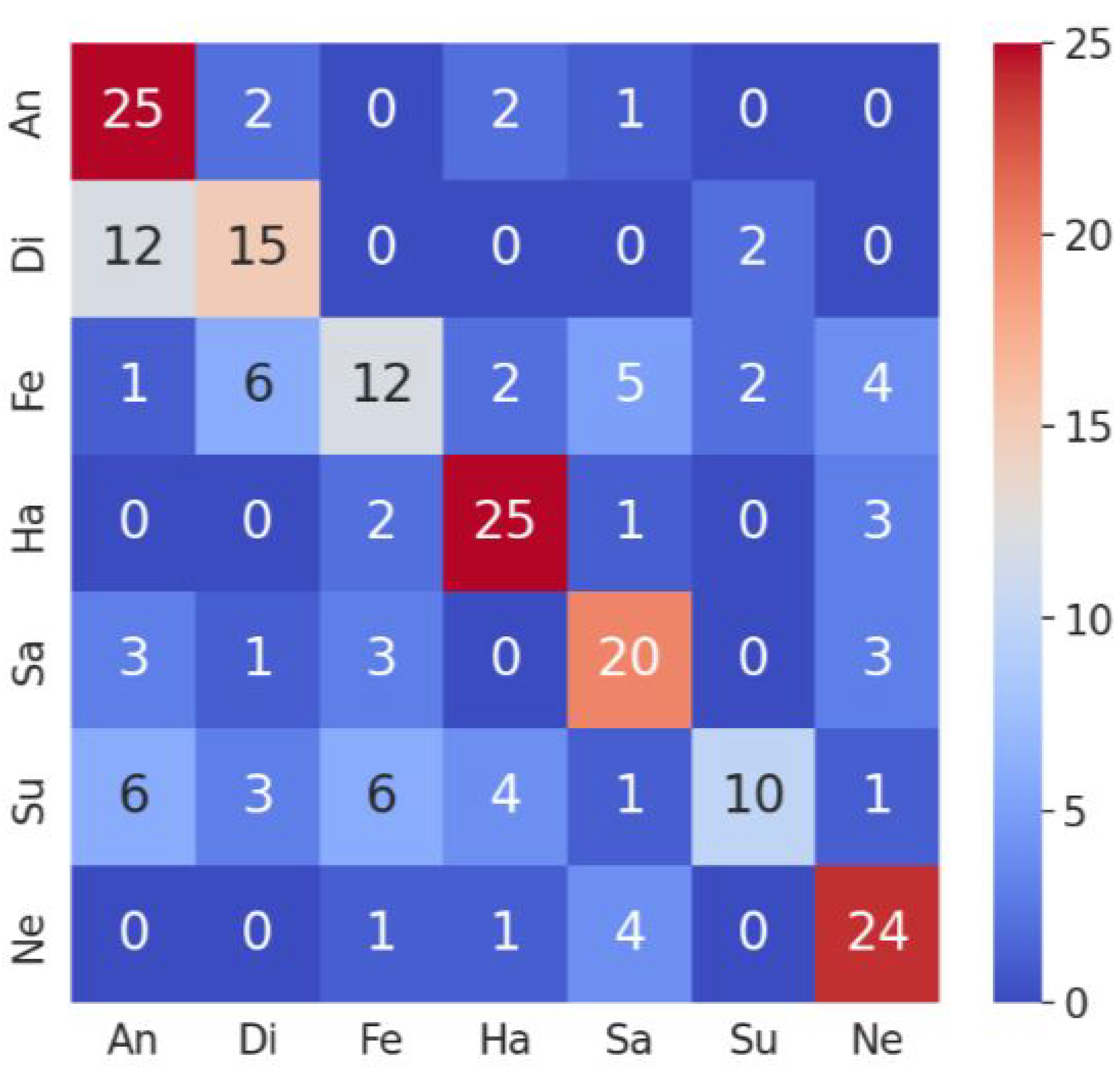

4.4. JAFFE Dataset

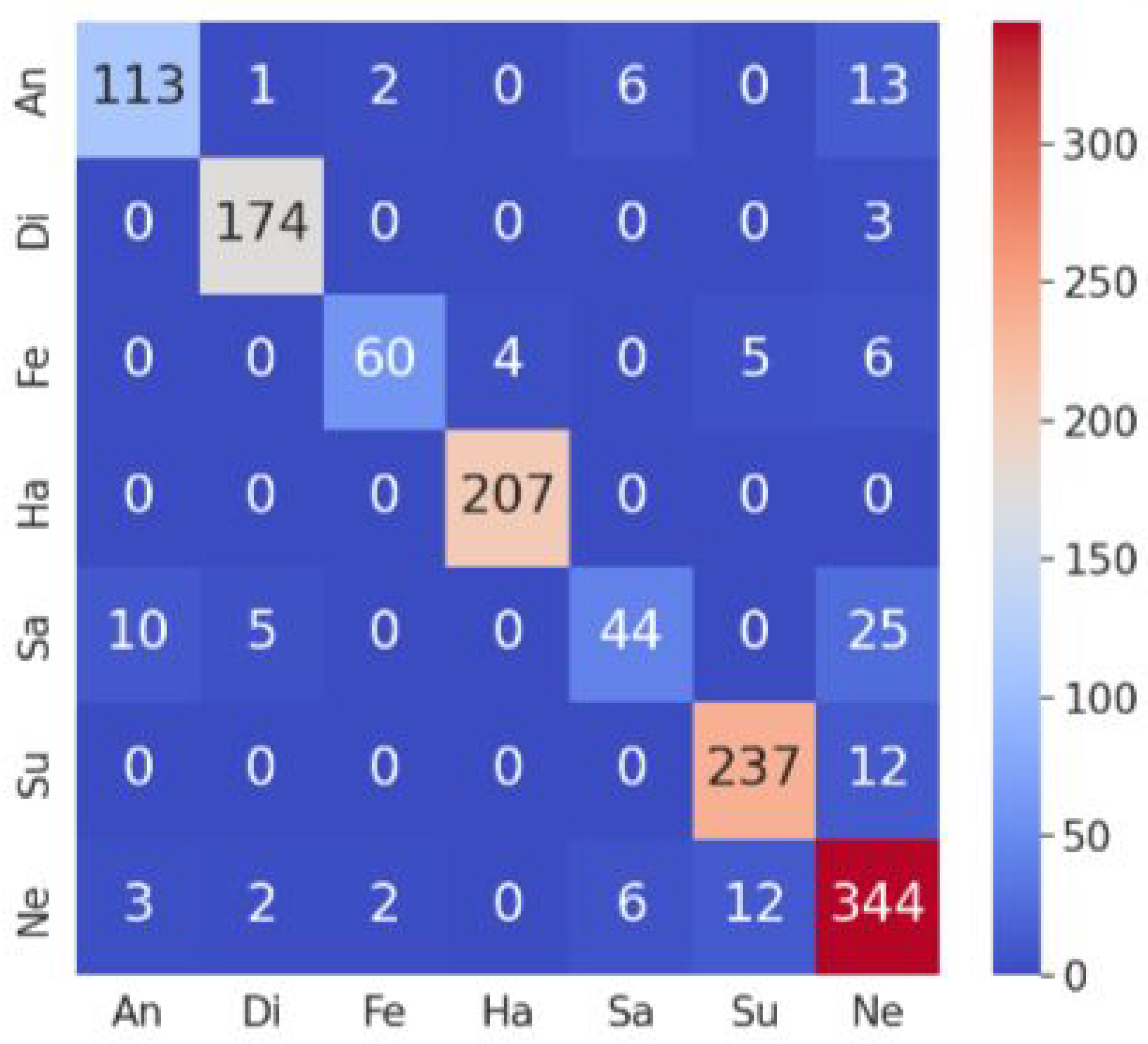

4.5. MUG Dataset

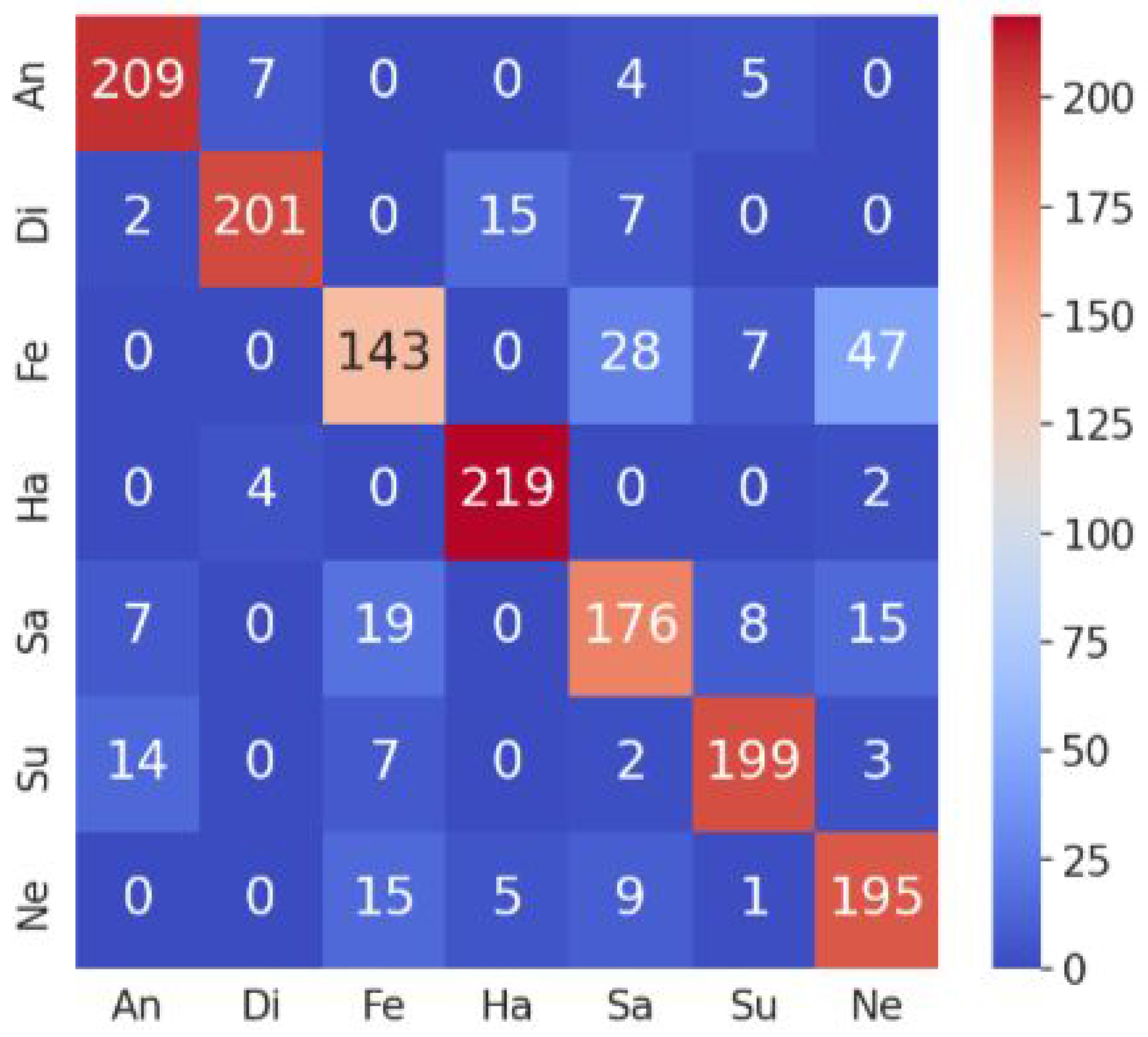

4.6. CK+ Dataset

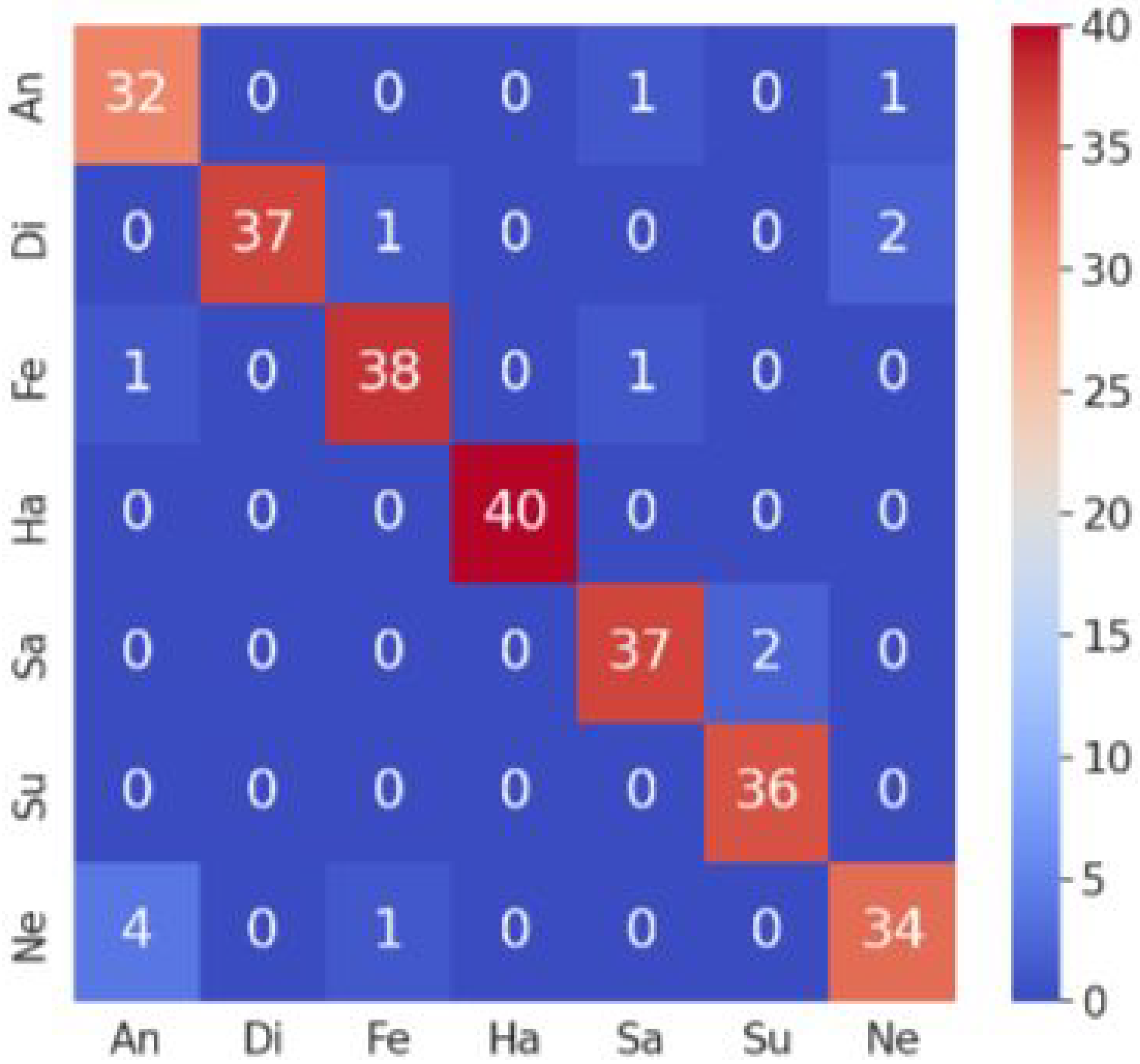

4.7. TFEID Dataset

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ramakrishnan, S.; Upadhyay, N.; Das, P.; Achar, R.; Palaniswamy, S.; Kumaar, A.N. Emotion recognition from facial expressions using images with arbitrary poses using siamese network. In Proceedings of the 2021 2nd International Conference on Smart Electronics and Communication (ICOSEC), Trichy, India, 7–9 October 2021; pp. 268–273.

- Gupta, P.K.; Varadharajan, N.; Ajith, K.; Singh, T.; Patra, P. Facial Emotion Recognition Using Computer Vision Techniques. In Proceedings of the 2024 15th International Conference on Computing Communication and Networking Technologies (ICCCNT), Kamand, India, 24–28 June 2024; pp. 1–7.

- Niu, B.; Gao, Z.; Guo, B. Facial expression recognition with LBP and ORB features. Comput. Intell. Neurosci. 2021, 2021, 8828245.

- Kartheek, M.N.; Prasad, M.V.; Bhukya, R. Radial mesh pattern: A handcrafted feature descriptor for facial expression recognition. J. Ambient. Intell. Humaniz. Comput. 2023, 14, 1619–1631.

- Kola, D.G.R.; Samayamantula, S.K. Facial expression recognition using singular values and wavelet-based LGC-HD operator. IET Biom. 2021, 10, 207–218.

- Russo, S.; Tibermacine, I.E.; Randieri, C.; Rabehi, A.; Alharbi, A.H.; El-kenawy, E.S.M.; Napoli, C. Exploiting facial emotion recognition system for ambient assisted living technologies triggered by interpreting the user’s emotional state. Front. Neurosci. 2025, 19, 1622194.

- Mandal, M.; Verma, M.; Mathur, S.; Vipparthi, S.K.; Murala, S.; Kranthi Kumar, D. Regional adaptive affinitive patterns (RADAP) with logical operators for facial expression recognition. IET Image Process. 2019, 13, 850–861.

- Kola, D.G.R.; Samayamantula, S.K. A novel approach for facial expression recognition using local binary pattern with adaptive window. Multimed. Tools Appl. 2021, 80, 2243–2262.

- Kumar Tataji, K.N.; Kartheek, M.N.; Prasad, M.V. CC-CNN: A cross connected convolutional neural network using feature level fusion for facial expression recognition. Multimed. Tools Appl. 2024, 83, 27619–27645.

- Selvin, S.; Vinayakumar, R.; Gopalakrishnan, E.; Menon, V.K.; Soman, K. Stock price prediction using LSTM, RNN and CNN-sliding window model. In Proceedings of the 2017 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Udupi, India, 13–16 September 2017; pp. 1643–1647.

- Priya, S.S.; Sanjana, P.S.; Yanamala, R.M.R.; Amar Raj, R.D.; Pallakonda, A.; Napoli, C.; Randieri, C. Flight-Safe Inference: SVD-Compressed LSTM Acceleration for Real-Time UAV Engine Monitoring Using Custom FPGA Hardware Architecture. Drones 2025, 9, 494.

- Yeddula, L.R.; Pallakonda, A.; Raj, R.D.A.; Yanamala, R.M.R.; Prakasha, K.K.; Kumar, M.S. YOLOv8n-GBE: A Hybrid YOLOv8n Model with Ghost Convolutions and BiFPN-ECA Attention for Solar PV Defect Localization. IEEE Access 2025, 13, 114012–114028.

- Randieri, C.; Perrotta, A.; Puglisi, A.; Grazia Bocci, M.; Napoli, C. CNN-Based Framework for Classifying COVID-19, Pneumonia, and Normal Chest X-Rays. Big Data Cogn. Comput. 2025, 9, 186.

- Osheter, T.; Campisi Pinto, S.; Randieri, C.; Perrotta, A.; Linder, C.; Weisman, Z. Semi-Autonomic AI LF-NMR Sensor for Industrial Prediction of Edible Oil Oxidation Status. Sensors 2023, 23, 2125.

- Makhmudkhujaev, F.; Iqbal, M.T.B.; Ryu, B.; Chae, O. Local directional-structural pattern for person-independent facial expression recognition. Turk. J. Electr. Eng. Comput. Sci. 2019, 27, 516–531.

- Iqbal, M.T.B.; Ryu, B.; Rivera, A.R.; Makhmudkhujaev, F.; Chae, O.; Bae, S.H. Facial expression recognition with active local shape pattern and learned-size block representations. IEEE Trans. Affect. Comput. 2020, 13, 1322–1336.

- Turk, M.A.; Pentland, A. Face recognition using eigenfaces. In Proceedings of the 1991 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Maui, HI, USA, 3–6 June 1991; Volume 91, pp. 586–591.

- Belhumeur, P.N.; Hespanha, J.P.; Kriegman, D.J. Eigenfaces vs. fisherfaces: Recognition using class specific linear projection. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 711–720.

- Li, S.; Deng, W. Deep facial expression recognition: A survey. IEEE Trans. Affect. Comput. 2020, 13, 1195–1215.

- Kong, C.; Chen, B.; Li, H.; Wang, S.; Rocha, A.; Kwong, S. Detect and locate: Exposing face manipulation by semantic-and noise-level telltales. IEEE Trans. Inf. Forensics Secur. 2022, 17, 1741–1756.

- Kartheek, M.N.; Prasad, M.V.; Bhukya, R. Chess pattern with different weighting schemes for person independent facial expression recognition. Multimed. Tools Appl. 2022, 81, 22833–22866.

- Kartheek, M.N.; Madhuri, R.; Prasad, M.V.; Bhukya, R. Knight tour patterns: Novel handcrafted feature descriptors for facial expression recognition. In Proceedings of the International Conference on Computer Analysis of Images and Patterns, Virtual, 28–30 September 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 210–219.

- Kartheek, M.N.; Prasad, M.V.; Bhukya, R. Modified chess patterns: Handcrafted feature descriptors for facial expression recognition. Complex Intell. Syst. 2021, 7, 3303–3322.

- Shanthi, P.; Nickolas, S. An efficient automatic facial expression recognition using local neighborhood feature fusion. Multimed. Tools Appl. 2021, 80, 10187–10212.

- Rivera, A.R.; Castillo, J.R.; Chae, O.O. Local directional number pattern for face analysis: Face and expression recognition. IEEE Trans. Image Process. 2012, 22, 1740–1752.

- Rivera, A.R.; Castillo, J.R.; Chae, O. Local directional texture pattern image descriptor. Pattern Recognit. Lett. 2015, 51, 94–100.

- Ryu, B.; Rivera, A.R.; Kim, J.; Chae, O. Local directional ternary pattern for facial expression recognition. IEEE Trans. Image Process. 2017, 26, 6006–6018.

- Iqbal, M.T.B.; Abdullah-Al-Wadud, M.; Ryu, B.; Makhmudkhujaev, F.; Chae, O. Facial expression recognition with neighborhood-aware edge directional pattern (NEDP). IEEE Trans. Affect. Comput. 2018, 11, 125–137.

- Guo, S.; Feng, L.; Feng, Z.B.; Li, Y.H.; Wang, Y.; Liu, S.L.; Qiao, H. Multi-view laplacian least squares for human emotion recognition. Neurocomputing 2019, 370, 78–87.

- Verma, M.; Vipparthi, S.K.; Singh, G. Hinet: Hybrid inherited feature learning network for facial expression recognition. IEEE Lett. Comput. Soc. 2019, 2, 36–39.

- Sun, Z.; Chiong, R.; Hu, Z.p. Self-adaptive feature learning based on a priori knowledge for facial expression recognition. Knowl.-Based Syst. 2020, 204, 106124.

- Reddy, A.H.; Kolli, K.; Kiran, Y.L. Deep cross feature adaptive network for facial emotion classification. Signal Image Video Process. 2022, 16, 369–376.

- Wang, Y.; Li, M.; Wan, X.; Zhang, C.; Wang, Y. Multiparameter space decision voting and fusion features for facial expression recognition. Comput. Intell. Neurosci. 2020, 2020, 8886872.

- Mohan, K.; Seal, A.; Krejcar, O.; Yazidi, A. FER-net: Facial expression recognition using deep neural net. Neural Comput. Appl. 2021, 33, 9125–9136.

- Han, Z.; Huang, H. Gan based three-stage-training algorithm for multi-view facial expression recognition. Neural Process. Lett. 2021, 53, 4189–4205.

- Shi, C.; Tan, C.; Wang, L. A facial expression recognition method based on a multibranch cross-connection convolutional neural network. IEEE Access 2021, 9, 39255–39274.

- Al Masum Molla, M.; Manjurul Ahsan, M. Artificial Intelligence and Journalism: A Systematic Bibliometric and Thematic Analysis of Global Research. arXiv 2025, arXiv:2507.10891.

- Gaya-Morey, F.X.; Buades-Rubio, J.M.; Palanque, P.; Lacuesta, R.; Manresa-Yee, C. Deep Learning-Based Facial Expression Recognition for the Elderly: A Systematic Review. arXiv 2025, arXiv:2502.02618.

- Dell’Olmo, P.V.; Kuznetsov, O.; Frontoni, E.; Arnesano, M.; Napoli, C.; Randieri, C. Dataset Dependency in CNN-Based Copy-Move Forgery Detection: A Multi-Dataset Comparative Analysis. Mach. Learn. Knowl. Extr. 2025, 7, 54.

- MK, L.M.; Modepalli, D.; Shaik, M.B.; Busi, M.; Venkataiah, C.; Y, M.R.; Alkhayyat, A.; Rawat, D. Efficient Feature Extraction for Recognition of Human Emotions through Facial Expressions Using Image Processing Algorithms. E3S Web Conf. 2023, 391, 01182.

- Yue, K.B. Dining philosophers revisited, again. ACM Sigcse Bull. 1991, 23, 60–64.

- Davidrajuh, R. Verifying solutions to the dining philosophers problem with activity-oriented petri nets. In Proceedings of the 2014 4th International Conference on Artificial Intelligence with Applications in Engineering and Technology, Kota Kinabalu, Malaysia, 3–5 December 2014; pp. 163–168.

- Tuncer, T.; Dogan, S.; Ataman, V. A novel and accurate chess pattern for automated texture classification. Phys. A Stat. Mech. Its Appl. 2019, 536, 122584.

- Happy, S.; Routray, A. Automatic facial expression recognition using features of salient facial patches. IEEE Trans. Affect. Comput. 2014, 6, 1–12.

- Majumder, A.; Behera, L.; Subramanian, V.K. Emotion recognition from geometric facial features using self-organizing map. Pattern Recognit. 2014, 47, 1282–1293.

- Dubey, S.R. Local directional relation pattern for unconstrained and robust face retrieval. Multimed. Tools Appl. 2019, 78, 28063–28088.

- Lyons, M.; Akamatsu, S.; Kamachi, M.; Gyoba, J. Coding facial expressions with gabor wavelets. In Proceedings of the Third IEEE International Conference on Automatic Face and Gesture Recognition, Nara, Japan, 14–16 April 1998; pp. 200–205.

- Aifanti, N.; Papachristou, C.; Delopoulos, A. The MUG facial expression database. In Proceedings of the 11th International Workshop on Image Analysis for Multimedia Interactive Services WIAMIS 10, Desenzano del Garda, Italy, 12–14 April 2010; pp. 1–4.

- Lucey, P.; Cohn, J.F.; Kanade, T.; Saragih, J.; Ambadar, Z.; Matthews, I. The extended cohn-kanade dataset (CK+): A complete dataset for action unit and emotion-specified expression. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition-Workshops, San Francisco, CA, USA, 13–18 June 2010; pp. 94–101.

- Yang, T.; Yang, Z.; Xu, G.; Gao, D.; Zhang, Z.; Wang, H.; Liu, S.; Han, L.; Zhu, Z.; Tian, Y.; et al. Tsinghua facial expression database–A database of facial expressions in Chinese young and older women and men: Development and validation. PLoS ONE 2020, 15, e0231304.

- Chandra Sekhar Reddy, P.; Vara Prasad Rao, P.; Kiran Kumar Reddy, P.; Sridhar, M. Motif shape primitives on fibonacci weighted neighborhood pattern for age classification. In Soft Computing and Signal Processing: Proceedings of ICSCSP 2018, Volume 1; Springer: Berlin/Heidelberg, Germany, 2019; pp. 273–280.

- Yang, J.; Adu, J.; Chen, H.; Zhang, J.; Tang, J. A facial expression recongnition method based on dlib, ri-lbp and resnet. J. Phys. Conf. Ser. 2020, 1634, 012080.

- Dong, F.; Zhong, J.; Wang, W.; Han, J.; Chen, T. A Novel Facial Expression Recognition Algorithm Based on LBP and Improved ResNet. In Proceedings of the 2024 7th International Conference on Sensors, Signal and Image Processing, Shenzhen, China, 22–24 November 2024; pp. 103–108.

- Mukhopadhyay, M.; Dey, A.; Shaw, R.N.; Ghosh, A. Facial emotion recognition based on textural pattern and convolutional neural network. In Proceedings of the 2021 IEEE 4th International Conference on Computing, Power and Communication Technologies (GUCON), Kuala Lumpur, Malaysia, 24–26 September 2021; pp. 1–6.

- Xie, S.; Hu, H.; Wu, Y. Deep multi-path convolutional neural network joint with salient region attention for facial expression recognition. Pattern Recognit. 2019, 92, 177–191.

- Randieri, C.; Ganesh, S.V.; Raj, R.D.A.; Yanamala, R.M.R.; Pallakonda, A.; Napoli, C. Aerial Autonomy Under Adversity: Advances in Obstacle and Aircraft Detection Techniques for Unmanned Aerial Vehicles. Drones 2025, 9, 549.

Recognition accuracy (%) of the four DPIBP variants on each dataset.

| Dataset | DPIBP1 | DPIBP2 | DPIBP3 | DPIBP4 |
|---|---|---|---|---|
| JAFFE | 60.36 | 61.32 | 61.50 | 58.93 |
| MUG | 85.14 | 85.21 | 83.37 | 84.25 |
| CK+ | 89.90 | 90.79 | 89.87 | 90.30 |
| TFEID | 94.29 | 93.51 | 94.23 | 94.78 |
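The per-variant accuracies above can be summarized with a few lines of Python. This is a small sketch added for illustration: the numbers are copied from the table, while the helper name `best_variant` and the "spread" measure (best minus worst variant) are assumptions introduced here, not quantities reported in the paper.

```python
# Accuracy (%) per dataset and DPIBP variant, copied from the table above.
accuracy = {
    "JAFFE": {"DPIBP1": 60.36, "DPIBP2": 61.32, "DPIBP3": 61.50, "DPIBP4": 58.93},
    "MUG":   {"DPIBP1": 85.14, "DPIBP2": 85.21, "DPIBP3": 83.37, "DPIBP4": 84.25},
    "CK+":   {"DPIBP1": 89.90, "DPIBP2": 90.79, "DPIBP3": 89.87, "DPIBP4": 90.30},
    "TFEID": {"DPIBP1": 94.29, "DPIBP2": 93.51, "DPIBP3": 94.23, "DPIBP4": 94.78},
}


def best_variant(scores):
    """Return (variant, accuracy) for the highest-scoring variant."""
    return max(scores.items(), key=lambda item: item[1])


best = {dataset: best_variant(scores) for dataset, scores in accuracy.items()}

# Spread = best minus worst variant, a rough indicator of how much the
# choice of variant matters on a given dataset (illustrative measure only).
spread = {
    dataset: round(max(scores.values()) - min(scores.values()), 2)
    for dataset, scores in accuracy.items()
}

for dataset in accuracy:
    variant, value = best[dataset]
    print(f"{dataset}: best = {variant} ({value:.2f}%), spread = {spread[dataset]:.2f}")
```

No single variant is best everywhere: DPIBP3 leads on JAFFE, DPIBP2 on MUG and CK+, and DPIBP4 on TFEID. These maxima are the values listed as "Proposed DPIBP" in the comparison tables.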

Comparison with existing methods on the JAFFE dataset.

| Method | Accuracy (%) |
|---|---|
| RADAP-LO [7] | 56.21 |
| WGC [5] | 58.20 |
| CSP [21] | 59.94 |
| RDMP [4] | 60.02 |
| KP [22] | 60.99 |
| LBP in [40] | 50.01 |
| LBP in [21] | 53.65 |
| Proposed DPIBP | 61.50 |

Comparison with existing methods on the MUG dataset.

| Method | Accuracy (%) |
|---|---|
| RADAP-LO [7] | 80.16 |
| DCFA-CNN [51] | 83.09 |
| CSP [21] | 82.80 |
| RDMP [4] | 83.47 |
| KP [22] | 83.11 |
| LBP+SVM [40] | 80.01 |
| LBP+KNN [40] | 71.43 |
| LBP [21] | 76.16 |
| Proposed DPIBP | 85.21 |

Comparison with existing methods on the CK+ dataset.

| Method | Accuracy (%) |
|---|---|
| HiNet [30] | 88.61 |
| WGC [5] | 70.61 |
| CSP [21] | 86.24 |
| RDMP [4] | 86.54 |
| KP [22] | 87.22 |
| LBP in [52] | 88.07 |
| LBP in [53] | 86.71 |
| LBP+CNN [54] | 79.56 |
| Proposed DPIBP | 90.79 |

Comparison with existing methods on the TFEID dataset.

| Method | Accuracy (%) |
|---|---|
| DAMCNN [55] | 93.36 |
| MSDV [33] | 93.50 |
| CSP [21] | 94.40 |
| RDMP [4] | 94.64 |
| LBP [21] | 92.02 |
| Proposed DPIBP | 94.78 |

| Dataset | Precision | Recall | F1-Score | Accuracy | Total Runtime (min) | Per-Image Runtime (s) | Memory Footprint |
|---|---|---|---|---|---|---|---|
| JAFFE | 0.6123 | 0.6173 | 0.6020 | – | 20 | 5.63 | Low (∼50 MB) |
| MUG | 0.8526 | 0.8519 | 0.8503 | – | 20 | 2.29 | Low (∼50 MB) |
| CK+ | 0.9010 | 0.8530 | 0.8720 | 0.906 | 20 | 2.02 | Low (∼50 MB) |
| TFEID | 0.9550 | 0.9480 | 0.9510 | 0.947 | 20 | 2.50 | Low (∼50 MB) |
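The runtime columns above can be cross-checked with simple arithmetic. The sketch below assumes that the per-image runtime equals the total runtime divided by the number of images processed; under that assumption it derives an implied image count per dataset. These counts are derived here for illustration and are not stated in the table, and the reported 20 min total may itself be rounded.

```python
TOTAL_RUNTIME_MIN = 20  # reported total runtime, identical for all four datasets

# Per-image runtime in seconds, copied from the table above.
per_image_runtime_s = {"JAFFE": 5.63, "MUG": 2.29, "CK+": 2.02, "TFEID": 2.50}


def implied_image_count(total_min: float, per_image_s: float) -> int:
    """Images processed, ASSUMING per-image runtime = total runtime / image count."""
    return round(total_min * 60 / per_image_s)


implied = {
    name: implied_image_count(TOTAL_RUNTIME_MIN, seconds)
    for name, seconds in per_image_runtime_s.items()
}

for name, count in implied.items():
    print(f"{name}: about {count} images")
```

Because the total runtime is the same for every dataset, the per-image runtime varies inversely with the implied number of images, which is why JAFFE shows the largest per-image value.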

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pallakonda, A.; Yanamala, R.M.R.; Raj, R.D.A.; Napoli, C.; Randieri, C. DPIBP: Dining Philosophers Problem-Inspired Binary Patterns for Facial Expression Recognition. Technologies 2025, 13, 420. https://doi.org/10.3390/technologies13090420
