1. Introduction

Although pilotless aircraft have a long history of use, it is only with recent technological advances that drones have become prevalent, accessible, and affordable [1]. These advances have made Remotely Piloted Aircraft Systems (RPAS) available to hobbyists and professional users, from agriculture to surveying and from mining to search and rescue operators, all at appropriate size, ease of purchase, and use. RPAS of much larger size and sophistication can be used for security and defence purposes along with burgeoning commercial applications. These larger Remotely Piloted Aircraft (RPA) are ready for use in National Airspace Systems (NAS), with NASA conducting research during the second decade of this century into the integration of RPAS into NAS [2]. In Australian aviation, the ever-increasing use of RPAS is evidenced by the surging number of Remote Pilot Licenses (RePLs) being issued by the Civil Aviation Safety Authority. The increase in licenses issued points to increased use of RPA. Drone activity is projected to grow by 20% each year. Currently it is estimated that there are 1.5 million drone flights annually in Australia, rising to a predicted 60.4 million flights in 2043. Of those flights, it is estimated that over 70% will be conducted by goods delivery operations [3]. This growth may be increasingly constrained by regulatory considerations rather than technological limitations. With such a rapid increase in activity in and around the urban environments where most recipients of the delivered goods reside, an increase in accidents and incidents can be expected. There is an obvious and growing need to recognize and regulate specific RPA pilot skills for specific flying tasks [4]. Without appropriate skills to navigate in obstacle-strewn environments such as urban settings, the growth of RPA activity will also be constrained by the human element, which remains vital in RPAS.

Although the sector is driven by technological innovation, most RPAS accidents have been identified as arising from failures in this same technology [5]. Notwithstanding the technology failures, researchers have become concerned about the role of the human pilot in RPAS accidents [6,7]. RPA operator skills have been identified by these researchers as a leading cause of safety occurrences. Lack of depth perception is a greater contributor to RPA accidents than it is for general aviation and all aviation operations in Australia [7]. The predicted increase in urban drone activity for delivery of goods will increase the importance of obstacle avoidance. Moving safely through an environment is summarized by Lester et al. [8] as “A particularly complex behavior relying on a range of perceptual, mnemonic, and executive computations. It requires the integration of different types of spatial information, the selection of the appropriate navigation strategy, and, if circumstances change, switching between strategies.” (p. 1021).

Exercising accurate depth perception skills can be problematic for RPA pilots owing to the unique challenges they face. These can include generic challenges for most flights, such as not being co-located with the drone and not always being aligned with the direction of travel. Flying an RPA can be a challenging task. Compared to flying a conventionally crewed aircraft, the RPA pilot is deprived of four of their five senses; only vision remains available [9]. Further, not being co-located with the aircraft can make perceptual skills and establishing accurate depth perception more difficult with the loss of supporting cues such as motion feedback [10]. There can also be specific challenges for the sortie being undertaken, e.g., as Hartley et al. [11] describe, issues unique to flying around forests. Distance estimation difficulties appear to increase with viewing distance. Lappin et al. [12] identified studies that indicated the foreshortening of estimations at greater viewing distances. All of these factors challenge the depth perception abilities of RPA pilots.

When operating in third person view, it is difficult to maintain depth perception and to judge distances to objects and thus avoid them [13], difficulties that only increase with increasing distance between the drone and the pilot [14,15]. The difficulty increases further when the drone pilot is stationary (as is often the case) whilst conducting operations, because motion parallax is then not available to help in depth perception. Further difficulties in perceptual awareness arise for the remote pilot when the aircraft is not orientated in the same direction as the pilot, as the principle of motion compatibility is eroded [16]. When this happens, the control inputs from the pilot may not move the aircraft in the desired direction, making it easier for the pilot to fly the aircraft into an object.

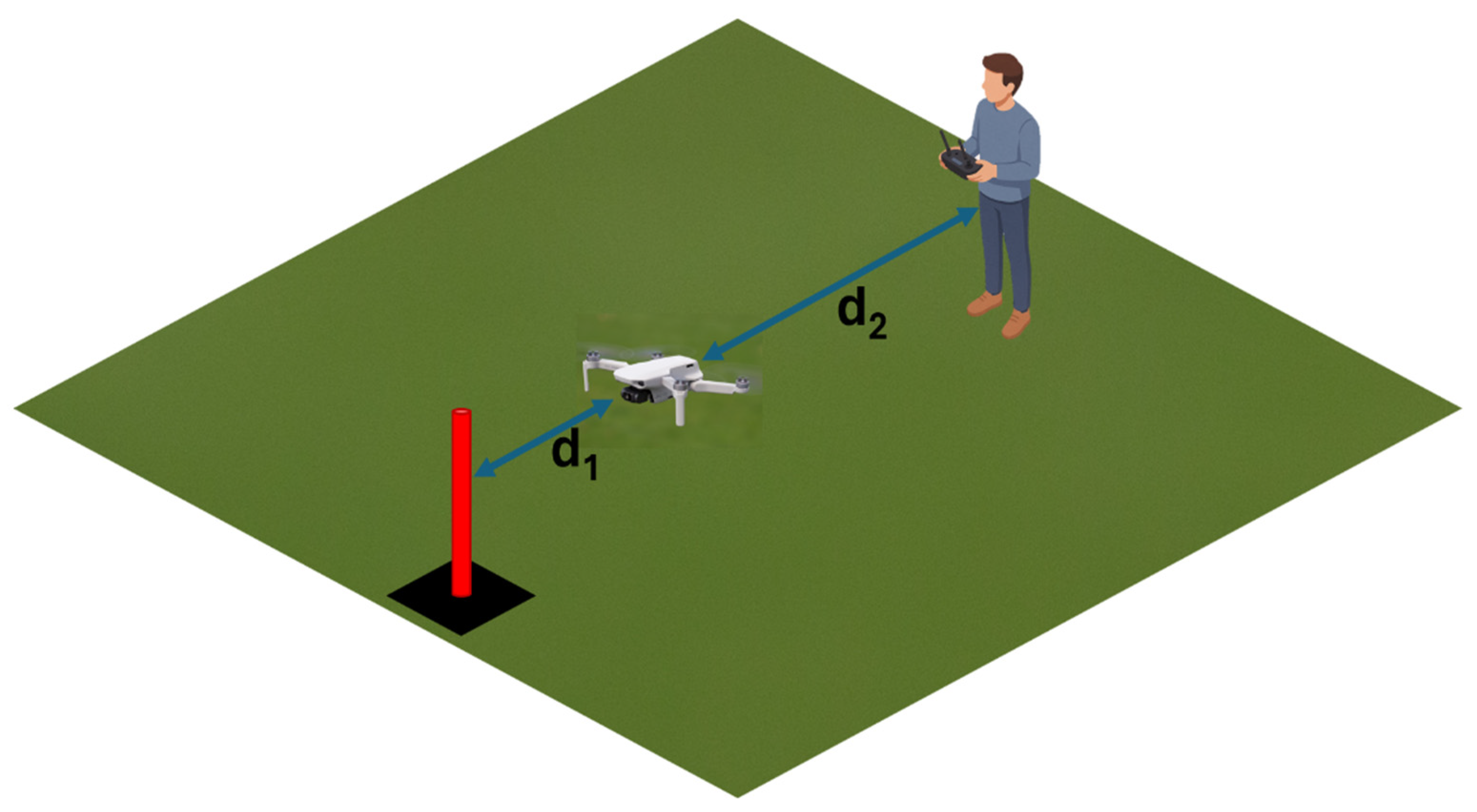

An important skill in depth perception is the ability of the drone pilot to envisage themselves in a different position, that is, a change in egocentric perspective [17]. This has been identified as “essential for navigation in large-scale environments” [18] (p. 414). This required egocentric perspective is one of the components of depth perception. Establishing this perspective requires estimation of distances between the RPAS pilot and objects in the environment being viewed, including the drone itself. The exocentric distance estimation between the drone and obstacles is also part of depth perception (Figure 1). The lack of, or inaccurate, distance estimation is a causal factor in incidents and accidents for RPAS operations.

Solutions can be provided by both technology and increased depth perception skills of RPA operators. In response, drone manufacturers are developing technical solutions to enhance pilots’ depth perception. These solutions include long-standing technologies such as primary radar, which can be used to detect other aircraft and objects on the ground that could be a risk. LIDAR is also available for the detection of obstacles [19]. With the more recent development of machine learning, Electro-Optical (EO) sensors can become a viable option to help detect collision threats, although the authors concede some issues need to be overcome to make this option truly viable. Along with the emergence of fully automated flights, these solutions would seem to reduce the need for the remote pilot to be able to perceive distances between obstacles in the flying field and the drone. However, the solutions can be cumbersome for light drones, expensive to have installed and operated, and not available to RPA pilots of smaller RPA in the micro, very small, and small categories. One technical solution offered by drone manufacturers for the identification of potential obstacles is onboard cameras. While their primary purpose is videography, they can also be used for safety purposes. Cameras are ubiquitous and available on even the most basic off-the-shelf prosumer drones, from sub 250 g drones to larger drones. The cameras offer high-definition views of the flight path and surrounding environments with a monoscopic image projected onto a 2D screen at the ground control station.

While cameras are readily available, the difficulty of implementing other supporting aids means there is likely to remain a need for VLOS pilots to be able to establish and maintain depth perception skills without the support of aids.

With the ease of use and availability of onboard cameras, this research examines the use of the image from a camera to help with establishing better depth perception amongst ab initio RPA VLOS pilots as measured by exocentric distance estimation. Hence, the specific research objective of this study was to evaluate whether the use of onboard camera imagery can improve the accuracy of exocentric distance estimation among ab initio RPA pilots operating under VLOS conditions, at varying operational distances.

2. Literature Review

While photography would seem to be the primary purpose of having a camera onboard, it can be put to other uses. These include using the images transmitted to the Ground Control Station (GCS) for navigation purposes and obstacle avoidance. Alvarez et al. [20] used a small multi-copter to demonstrate the efficacy of a camera-based obstacle avoidance system by flying it through narrow corridors littered with obstacles. The ability to use a camera for this task has come about because of the development of greater computing power and better algorithms [21]. They also identified that camera vision was more advantageous for VLOS pilots than LIDAR.

The cameras used for obstacle avoidance can be monocular, stereo, or depth cameras [22], although Mohta et al. [21] do not believe the latter are suitable for use in outdoor, sunny settings. Stereo was the preferred option over monocular [21] because of the ease of initialization prior to flight. The benefits of camera vision have been identified as the range the camera can provide, low power requirements, and low weight [23]. The image from the camera can be used to enhance the depth perception skills of the RPA pilot.

Although humans appear to have an innate ability to perceive depth, depth perception and the ability to perceive in three dimensions have been described as something of a paradox for humans [24]. Walk and Gibson [25] experimented with infants on a centre board with a shallow drop-off on one side and a much larger fall on the other, described as a “visual cliff”. The infants consistently refused to crawl towards the side of the centre board with the large fall, even with the inducement of having their mother on that side. The researchers concluded that the “average human infant discriminates depth as soon as it can crawl.” [25] (p. 23). This innate ability to perceive depth develops as children get older, aided by environmental experiences [24].

Non-visual information can also be used to detect depth in the environment. Perception of environmental elements may also be established through physical stimuli such as heat, electrical fields, or audible cues [26]. When subjects had audio cues presented to them, they were more readily able to visually detect objects when the audio and visual stimuli were presented in unison. If the stimuli were presented at different times, there was no improvement in detection [27]. This characteristic was also noted in RPAS pilots, where Dunn [28] found that audio stimuli improved students’ horizontal distance accuracy when they flew in simulated wind conditions, i.e., a more challenging flight condition.

Hing et al. [10] identified problems with using the camera for flying in cluttered environments, including the reduction in the field of view, which can reduce the pilot’s ability to know where the extremities of the aircraft are located. As the camera angles changed, there was a requirement for more mental effort from the drone pilot to establish a picture of the flying environment. This effort can induce vertigo. While it is difficult to gain depth perception for VLOS flying, Beyond Visual Line of Sight (BVLOS) operations further increase the difficulty as the aircraft is out of the pilot’s sight. As the aircraft flies further away, the accuracy of depth judgement decreases [29]. The depth perception must be gained through the image sent from the onboard camera to the ground control screen. Objects outside the view captured by the camera remain unseen by the pilot.

To help overcome limitations of using a single camera, pilots were tested wearing a VR Head Mounted Display with monoscopic and stereoscopic views of a drone flight [14]. The subjects were asked to estimate the height above ground level of the camera carried by the drone. Consistently, when viewed in monoscopic view, the subjects overestimated the distance. In stereoscopic view, the estimations of height made by the participants were closer to the actual height of the camera on the drone but were still not considered accurate.

Hing et al. [10] examined the use of what they described as “chase viewpoint” where the drone pilot was provided with perceptual assistance by being presented with a 3D map of the flying environment, “as they were following a fixed distance behind the vehicle” (p. 5642). Results indicated that chase view-assisted flights were conducted in a more stable manner with a reduced number of large angular accelerations, allowing for a smoother, safer flight through the flying environment compared to the flights conducted using the onboard camera only.

These technological developments improve RPAS safety elements such as collision avoidance and will be useful for future developments such as urban air mobility. For RPAS tasks such as photography being conducted by a real estate agent, these technological developments have been described as “overkill” [15]. This is because these technologies add expense, increase the difficulty in operating the drone, and require larger, heavier equipment, costs which outweigh the intended benefits. Simple solutions would benefit broad industry adoption.

Augmented reality (AR), in providing pilots with computer-generated graphical cues and other stimuli on images of the physical world, has also been suggested for enhancing pilots’ ability to accurately judge distance and height [30]. The efficacy of an AR overlay in enhancing the depth perception of drone pilots has been tested [31]. Subjects were asked to estimate the position of a stationary drone by marking its position on a map. Half of the subjects used AR while the second half of the cohort merely observed the aircraft. Using AR provided more accurate estimation of the aircraft’s location than observing the aircraft. The subjects overall found the AR to be easy to use. For BVLOS flights, the use of AR was tested when operating at mid-level altitudes. The drone pilots found it useful in enhancing their situational awareness during long-range flights, especially for route planning and target identification [32].

A further enhancement in using AR is the presentation of information to pilots wearing goggles. The pilot can interact with the ground control station whilst viewing the aircraft and its positioning. This reduces the amount of time the drone pilot redirects their view away from the aircraft to the ground control station. This reduction, in turn, can be expected to help pilots establish and maintain depth perception. An observational study of pilots using AR and not using AR showed there was over twice as much viewing of the aircraft when using the AR goggles as against not using them during an automated flight. The researchers concluded that this was beneficial for the situational awareness levels of drone pilots [33].

Dunn [28] conducted an accuracy and timeliness experiment where student groups tracked a drone by flying VLOS using a monitor and goggles for BVLOS. The goggles slowed the students down, as they took much longer to complete the flying course. Dunn attributed this to “redundant cues such as two visual forms of feedback presented together, may become burdensome if oppositely oriented” (p. 105).

Inoue et al. [30] proposed an enhanced third-person view (TPV) to provide the AR overlay to aid depth perception when flying BVLOS by having a follower drone fly above and behind the camera ship with both aircraft spatially coupled by being controlled by the same stick inputs from the pilot. The researchers found that the enhanced TPV with its AR overlay allowed the subjects to have better depth perception than a first-person view (FPV), with subjects also commenting on the difficulties of establishing depth perception with unaided TPV.

Another technical solution to aid pilots with depth perception is assisted landing systems to aid accuracy when landing the drone [13]. Problems arising from using assisted landing systems included excluding the pilot from the control loop. The aircraft is thus not able to adapt to changing pilot goals, nor can it adapt to changes in the physical environment that may make the planned landing unsuitable. A suggested solution was to utilize shared autonomy. Using a simulated environment, subjects were required to land the drone at specific landing sites when assisted by shared autonomous mode or unassisted mode. Novice pilots were often found to try to land between landing sites, which was attributed by the researchers to a lack of depth perception. This in turn negatively affected the ability of the assistance from the shared autonomy to correctly gauge the pilot’s intentions. Overall, the assisted landings were more accurate, and the landing site closest to the drone pilot was more accurately landed upon as against sites further away [13]. Further work was performed on shared autonomy within a real-world environment by the researchers. Subjects were required to land the drone on pre-set landing sites being either assisted by shared autonomy or having no assistance. The assisted landings were more accurate, helping the pilots to gauge depth. Unassisted landings produced evidence of undershooting the target landing site [34].

Haptic control of a drone has also been shown to help pilots in visually challenging environments. These systems provide tactile feedback to inform the pilot about conditions within the flying environment, including obstacles that need to be avoided. When subjects were provided with haptic control, it helped overcome reduced or missing visual information, likely reducing the collision risk [35]. Macchini et al. [36] found visual systems augmented with haptic control allowed the subjects to hover the drone closer to objects without collisions. The use of haptic control can allow for the reduction in “head-down” behaviour of the drone pilot, as they need to view the ground control station less. This can improve the perception of the relationship between the drone and obstacles [37]. Ramachandran et al. [38] trialled a haptic control sleeve so that when the drone was in the vicinity of an obstacle, it locked the pilot’s elbow and wrist joints. In simulated exercises where visual feedback for the subjects was compromised, the use of this sleeve led to fewer collisions when attempting to fly the drone through a hole in a wall.

Cacan et al. [39] demonstrated that the human operator can be better than automation when conducting airdrops of a parafoil onto designated landing spots. Using an unmanned parafoil system to drop a payload onto a designated spot, a fully autonomous system had 50% of the impact points within 17.7 m of the desired landing spot (90% of the impact points were within 38 m of the landing spot). While the researchers were pleased with this accuracy, they noted that the autonomous system “was blind to objects around the impact point” [39] (p. 1145) and human intervention was sometimes required to stop the parafoil hitting objects surrounding the landing site. When a human operator was inserted into the system for the landing process, using conventional remote control while in visual contact with the desired landing spot, the parafoil, and the surrounding environment, accuracy of landing improved to 10.4 m for 50% of the landing attempts (90% of the impact points were within 21 m of the landing spot). The researchers noted that depth perception when using this system was more difficult when the operator was not aligned with the impact point. Overall, the researchers noted an improvement in landing performance, including depth perception, when there was a human in the control loop [36].

The limitations of technology in being able to assist drone pilots with depth perception were also noted by Eiris et al. [40] when drones are employed for indoor building inspections, an obvious location for accurate depth perception and collision avoidance. The technologies suggested for safe flying in this environment are expensive and not easy to implement, resulting in the human operator being relied on to operate the drone in a very challenging environment. The development of technological devices would appear at this stage of the journey to be supportive and enhance the pilot’s depth perception abilities rather than providing a complete replacement solution. Thus, while there are technical solutions available to drone pilots to assist with depth perception, the pilot remains an important component for accurate flying.

3. Materials and Methods

With cameras so readily available on drones of every size and type, from multi-rotor to vertical take-off, they are a potentially useful tool for enhancing the depth perception abilities of RPA pilots. This research examines the use of the transmitted image from the camera to a screen on the Ground Control Station (GCS) for improved distance estimation. A group of ab initio RPA students flew a sub 250 g drone with no assistance from a camera and a second group of ab initio RPA students were helped with distance estimation by having access to a camera image on the GCS. The null hypothesis can be stated that the use of camera vision results in no improvement in distance estimation for ab initio drone pilots.

The utilized methodology is a pre-experimental design of double static group comparison, illustrated in Figure 2. Two independent groups of ab initio drone pilots were tasked with flying the drone towards two obstacles, one placed 20 m from the pilot and the other at 50 m. The obstacle direction was 11 o’clock for the 20 m obstacle and 1 o’clock for the 50 m obstacle.

As the drone was flown towards each obstacle, the researcher called for the drone to be stopped and hovered at an approximately random time for each participant. The stopping distances ranged between 1.7 m and 6.2 m at the 20 m obstacle and between 1.6 m and 18.9 m at the 50 m obstacle. The student was asked to estimate the exocentric distance between the drone and the obstacle.

The drone used was a DJI Mini (2 and 3). The actual distance of the drone was ascertained using a laser range finder. The flight path had the drone first flown to the 20 m obstacle and then continued to the 50 m obstacle. An illustrative example of the flight path is given in Figure 3.

The subjects were first-year undergraduate students enrolled in various degree courses who, for familiarity with aviation operations and technologies, had to participate in a tutorial on the operation and control of a very light drone (sub 250 g). The unassisted group (n = 38) used a ground control station that did not have any supporting aids to assist the students in estimating the distance between the drone and the respective obstacle. The assisted group (n = 55) was provided with a GCS with a screen containing the image of the flight surroundings transmitted from the camera onboard the drone. The students in this group were able to directly view both the wider environment and the screen of the GCS. The activity took each participant approximately 5 min to complete. The unassisted group spent the entire time looking at the drone in flight. The assisted group was required to spend much of the time looking at the drone, applying visual flight protocols, and used the camera for approximately 60–90 s for each measurement, looking at the screen to help their exocentric distance estimation. This procedure was intended to limit visual overload and conflicting perceptual cues from direct vision and the GCS screen that could interfere with performance.

No distance information was available to the students on the ground control station screen. All flights were flown in clear visibility conditions.

4. Results

The unassisted group (n = 38) were given no assistance in their task to estimate distances. The assisted group (n = 55) used a ground control station with a screen showing the onboard camera view. The absolute estimation error was calculated as the absolute difference between the actual distance of the drone from each obstacle and the estimate made by the student, and is presented in a box-and-whisker plot in Figure 4a. The whiskers are shown based on quartiles. Outliers are shown in the figure as ‘o’ and were calculated by MATLAB 2024A as instances exceeding the limits of the box plus or minus 1.5 interquartile ranges, referred to as the quartile method.
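The quartile rule described above can be sketched in a few lines. This is a minimal illustration rather than the authors' MATLAB code: the function name `iqr_outliers` and the sample values are hypothetical, and the quartile convention used here (Python's "inclusive" method) is an assumption, since different packages interpolate quartiles slightly differently and can place the fences in marginally different positions.

```python
import statistics

def iqr_outliers(values):
    """Return (lower_fence, upper_fence, outliers) using the 1.5 x IQR rule."""
    # Quartile convention is an assumption; MATLAB and other tools may
    # interpolate differently and give slightly different fences.
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower = q1 - 1.5 * iqr
    upper = q3 + 1.5 * iqr
    outliers = [v for v in values if v < lower or v > upper]
    return lower, upper, outliers

# Hypothetical absolute estimation errors in metres (not study data).
errors = [0.5, 0.8, 1.0, 1.2, 1.5, 1.7, 2.0, 2.2, 9.0]
lower, upper, outliers = iqr_outliers(errors)
print(lower, upper, outliers)
```

Any value beyond the fences would be drawn as an individual ‘o’ marker rather than being covered by a whisker.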

A Kolmogorov–Smirnov test for normality was conducted to confirm the appropriateness of this range of presentations, and to determine the appropriate statistical tests of difference. At 20 m the absolute estimation error was not sufficiently normal either for the Unassisted Group, D(38) = 0.1434, p > 0.05, or for the Assisted Group, D(55) = 0.1486, p > 0.05. However, at 50 m the absolute estimation error was normal for both the Unassisted Group, D(38) = 0.1908, p < 0.05, and the Assisted Group, D(55) = 0.1527, p < 0.05. This can be seen in the violin plots given in Figure 4b.
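For readers unfamiliar with the D statistic reported above, the following sketch shows how it can be computed: D is the largest vertical gap between the empirical distribution of the sample and a reference normal distribution. The function names and data are hypothetical. Two assumptions are made here that the text does not confirm: the reference normal uses the sample mean and standard deviation, and the critical value is the standard large-sample approximation for a fully specified distribution. When the parameters are estimated from the same sample, a corrected (Lilliefors) critical value is smaller.

```python
import math
import statistics

def ks_statistic_normal(values):
    """One-sample KS statistic D against a normal with the sample mean and SD."""
    data = sorted(values)
    n = len(data)
    dist = statistics.NormalDist(statistics.mean(data), statistics.stdev(data))
    d = 0.0
    for i, x in enumerate(data, start=1):
        cdf = dist.cdf(x)
        # The empirical CDF steps from (i - 1)/n to i/n at x; check both sides.
        d = max(d, i / n - cdf, cdf - (i - 1) / n)
    return d

def ks_critical_5pct(n):
    """Large-sample 5% critical value (assumes a fully specified distribution)."""
    return 1.358 / math.sqrt(n)

# Hypothetical absolute errors in metres (not study data).
sample = [0.4, 0.9, 1.1, 1.3, 1.4, 1.6, 1.8, 2.1, 2.5, 3.9]
d_stat = ks_statistic_normal(sample)
print(round(d_stat, 4), round(ks_critical_5pct(len(sample)), 4))
```

A D value above the critical value counts as evidence against normality at the 5% level; a D value below it does not.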

The difference in mean performance between the groups was much larger for the 50 m obstacle. This was also true for the standard deviation, albeit with a marginally higher standard deviation for the Assisted Group at the 20 m obstacle compared to the Unassisted Group at 20 m. These results are summarized in Table 1.

The proportion of students overestimating the distance varied between the two groups at different obstacle distances. The percentage of students overestimating the distance between the drone and the 20 m obstacle was 34.2% for the Unassisted Group and 25.5% for the Assisted Group. At the 50 m obstacle, the percentage of students overestimating the distance was 7.9% for the Unassisted Group and 25.5% for the Assisted Group. This can be seen in Figure 5, where the dumbbell plot contrasts the length of the bars: the two assisted plots are balanced, the 50 m unassisted plot is skewed towards underestimation, and the 20 m unassisted plot is skewed towards overestimation, relative to the assisted plots.
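The two metrics used in this section, absolute estimation error and the proportion of over- and underestimates, can be illustrated with a short sketch. The function name and the sample distances are hypothetical and are not taken from the study data. As an arithmetic check on the reported percentages, 13 of 38 is 34.2%, 3 of 38 is 7.9%, and 14 of 55 is 25.5%.

```python
def summarize_estimates(actual, estimated):
    """Summarize signed errors as mean absolute error and over/under percentages."""
    # Positive signed error: the student judged the gap larger than it was.
    signed = [e - a for a, e in zip(actual, estimated)]
    n = len(signed)
    return {
        "mean_abs_error": sum(abs(s) for s in signed) / n,
        "pct_over": sum(s > 0 for s in signed) / n * 100,
        "pct_under": sum(s < 0 for s in signed) / n * 100,
    }

# Hypothetical drone-to-obstacle distances in metres (not study data).
actual = [4.0, 5.0, 6.0, 3.0]
estimated = [5.0, 4.0, 6.0, 2.0]
summary = summarize_estimates(actual, estimated)
print(summary)
```

Exact estimates count as neither over nor under, so the two percentages need not sum to 100.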

Levene’s test for the equality of variances across both groups was conducted, the results of which are summarized in Table 2. The requirement for homogeneity of variance at the 20 m obstacle was met, and there was no significance at the p < 0.05 level. At the 50 m obstacle, the requirement of homogeneity of variance was not met and there was significance at the p < 0.05 level. Aside from informing the choice of statistical test for the difference in means, Levene’s test shows the Assisted Group is statistically significantly more consistent in their error estimation than the Unassisted Group at the 50 m obstacle. Due to the homogeneity of variance outcomes, Welch’s t-test was conducted for the two groups at the 50 m obstacle, while a t-test for equal variance was conducted for the 20 m obstacle.
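A minimal sketch of the Levene statistic may help show what the test measures: it is a one-way analysis of variance carried out on each observation's absolute deviation from its group centre. The version below centres on the group mean; whether the study used mean or median centring is not stated, so that choice is an assumption, and the function name and data are hypothetical. Converting W to a p-value requires the F distribution with (k − 1, N − k) degrees of freedom, which is left out here.

```python
import statistics

def levene_w(*groups):
    """Levene's W statistic using absolute deviations from each group mean."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    # z[i][j] is the absolute deviation of observation j from its group mean.
    z = [[abs(x - statistics.mean(g)) for x in g] for g in groups]
    z_means = [statistics.mean(zi) for zi in z]
    z_grand = sum(sum(zi) for zi in z) / n_total
    between = sum(len(zi) * (zm - z_grand) ** 2 for zi, zm in zip(z, z_means))
    within = sum((v - zm) ** 2 for zi, zm in zip(z, z_means) for v in zi)
    return (n_total - k) / (k - 1) * between / within

# Hypothetical groups: same spread, then clearly different spread.
same_spread = levene_w([1, 2, 3, 4, 5], [11, 12, 13, 14, 15])
diff_spread = levene_w([1, 2, 3, 4, 5], [0, 5, 10, 15, 20])
print(same_spread, round(diff_spread, 4))
```

A W near zero indicates similar spread in the groups and supports a pooled-variance t-test; a large W points towards Welch's test.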

A one-tailed t-test (Table 3) for independent samples (equal variance assumed) showed the difference between the two groups at the 20 m obstacle was not statistically significant at the 95% level, with t(91) = 0.2798, p = 0.7803, and 95% confidence intervals of (1.072, 1.775) for the Unassisted Group and (1.334, 1.675) for the Assisted Group. Thus, the null hypothesis cannot be rejected at this distance.

A one-tailed t-test (Table 4) for independent samples (equal variance not assumed) showed that the difference between the two groups at the 50 m obstacle was statistically significant at the 95% level, with t(44) = 3.0823, p < 0.004, and 95% confidence intervals of (3.594, 7.206) for the Unassisted Group and (1.903, 2.973) for the Assisted Group. Thus, the null hypothesis can be rejected at this 50 m distance, and the result is statistically significant.
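The difference between the two tests lies in how the standard error and degrees of freedom are formed. The sketch below computes both from summary statistics; the function names and the example means and standard deviations are hypothetical and are not the values in Table 1. With group sizes of 38 and 55, the pooled test has 38 + 55 − 2 = 91 degrees of freedom, matching the reported t(91), while the Welch–Satterthwaite approximation gives a smaller, generally non-integer value when the variances differ, in line with the reported t(44).

```python
import math

def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student's t statistic and degrees of freedom, equal variances assumed."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df
    t = (mean1 - mean2) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t, df

def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic with Welch-Satterthwaite degrees of freedom."""
    v1, v2 = sd1**2 / n1, sd2**2 / n2
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))
    return t, df

# Hypothetical summary statistics in metres (not the values in Table 1).
t_pooled, df_pooled = pooled_t(5.0, 4.0, 38, 3.0, 2.0, 55)
t_welch, df_welch = welch_t(5.0, 4.0, 38, 3.0, 2.0, 55)
print(round(t_pooled, 3), df_pooled, round(t_welch, 3), round(df_welch, 1))
```

When the two groups have equal sizes and equal standard deviations the two functions return the same t and the same degrees of freedom, so the choice only matters when spread or group size differs.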

Hedges’ g was used as the measure of effect size. At 20 m, g = 0.034, indicating a small effect size. At 50 m, g = 0.751, indicating a medium effect size.
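Hedges’ g is Cohen's d (the mean difference divided by the pooled standard deviation) multiplied by a small-sample correction factor. The sketch below uses the common approximation 1 − 3/(4·df − 1) for that factor; the text does not state which form of the correction was applied, so this is an assumption, and the function name and input values are hypothetical.

```python
import math

def hedges_g(mean1, sd1, n1, mean2, sd2, n2):
    """Hedges' g: pooled-SD standardized mean difference with bias correction."""
    df = n1 + n2 - 2
    pooled_sd = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df)
    cohens_d = (mean1 - mean2) / pooled_sd
    # Approximate small-sample correction (assumed form).
    correction = 1 - 3 / (4 * df - 1)
    return cohens_d * correction

# Hypothetical summary statistics in metres (not the values in Table 1).
g_example = hedges_g(5.0, 2.0, 10, 3.0, 2.0, 10)
print(round(g_example, 4))
```

With group sizes of 38 and 55 the correction factor is about 0.992, so g and d differ only in the third decimal place.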

5. Discussion

5.1. Findings

While depth perception is a challenge for pilots of all aircraft types, it is especially so for RPA pilots [7]. As a technology-driven sector there have been different technological solutions provided in attempts to solve this issue. These solutions, however, can come at a financial and operational cost that places them beyond many operators. A simple aid to safe flying can be the onboard camera, which is freely available on most RPA from sub-250 g drones to larger craft. The utility of the camera in aiding the depth perception of ab initio students was tested, measured by exocentric distance estimation. At the shorter distance of 20 m there was no significant difference between the means or variances of the two groups. The use of the image from the camera did not provide for more accurate estimations. However, at the greater distance of 50 m, the students in the Assisted Group who had the use of the camera image were significantly more accurate and consistent in their estimations. The difference in the accuracy of estimations at the different distances could reflect operating within the egocentric action space. Within this space of 20–30 m, distance judgement by people is very accurate [41]. The difficulty in accurate distance estimation at greater distances is consistent with that identified in the literature [12].

While the use of technology to aid the accurate flying of RPA has been questioned [14,40], this new finding suggests that having even straightforward technology such as an onboard camera and using the image provided by it may be advantageous for safety. As cameras are ubiquitous on even the smallest drones, using a GCS with an appropriate screen during ab initio training could be beneficial for enhancing depth perception skills.

The greater accuracy and consistency of the students who had access to the camera image have implications for the training of ab initio drone pilots (discussed below). At the shorter tested distance of 20 m, there would appear to be little if any advantage to training students to use the camera image. It is at this distance that the initial training takes place as ab initio students are introduced to handling exercises such as horizontal and vertical rectangles, simulated bridge inspections, and figures of 8. Using a GCS with a transmitted camera image would not appear to increase effectiveness over 20 m. As humans are accurate with depth perception over this distance [41], the provision of an aid such as a camera to help distance estimation may be redundant.

At longer distances, implementing items within the training curriculum dealing with the use of a camera could improve operational safety through better distance estimation and less likelihood of collisions. A possible negative outcome from such a camera use may be an inappropriate level of confidence for inexperienced RPA pilots who operate at distances greater than their abilities support. That is, the pilot may believe in the camera image providing an extra layer of safety and security that may not be warranted. It could be tempting for VLOS operations to become quasi-BVLOS flights.

A second metric tested the proportions of under- or over-estimation of the distance between the drone and the obstacle. In making exocentric distance estimations, the students in both groups markedly underestimated the distance of the drone to the obstacle, consistent with the literature for these distances. For the Unassisted Group, the proportion of underestimation was greater at the 50 m obstacle. For the Assisted Group, the proportion of underestimation was more constant between the two obstacles.

The higher proportion of underestimation of these exocentric distances is somewhat surprising. Research into RPA accidents [7] indicates there were many collisions between a drone and an obstacle, suggesting pilots did not accurately perceive the distance between them. In underestimating the distance between the drone and the obstacle, the pilot perceives the drone as closer to the obstacle than it is. Such a perception could be thought to protect the flight, as there would be a reduced chance of the drone colliding with obstacles. More analysis of the incident database is required to determine at what distances each misjudgement was made, related as these are to each drone’s speed and purpose.

5.2. Limitations

While the Assisted Group students did have the use of the camera image on the GCS to aid their distance estimations, they also were able to look up from the GCS and view the actual environment and the drone’s location within it. No measurement was made about the division, if any, of viewing between the environment and the GCS made by these students. For future studies, the identification of whether the students spent longer looking at the GCS or at the actual environment would be of use. Endsley [42] noted that the allocation of visual attention across multiple sources of information is a critical component of situation awareness in dynamic environments.

This study used ab initio students from an elite university program. The extrapolation of the results to students of different backgrounds, as well as more experienced operators, can be queried; that is, the results may have limited generalizability beyond the demographics involved. It may be that as pilots gain more flying experience, their depth perception skills increase, and they do not rely as much on supporting aids such as camera vision. Wickens and McCarley [43] have shown that the ways in which individuals integrate and prioritize perceptual cues can change significantly with increased experience, suggesting that the observed benefits of camera use may be specific to novice operators. Further work with experienced RPA pilots would help clarify how much of the benefit of the camera may have been due to the inexperience of ab initio pilots in depth perception. Similarly, if such perception practice does improve the skill, to what extent does the skill atrophy and therefore need currency training? Importantly, the potentially limited generalizability does not discount the statistically significant findings, which show that for new operators learning to use drones for the first time, significant safety gains can be achieved by using the readily available technology correctly to prevent controlled flight into terrain and other associated aviation accidents [7]. This has the potential to reduce operating costs for the industry.

A monocular image has been identified as not always providing enough visual information for accurate distance estimation [44], which could explain this result. However, research found that people with monocular vision (loss of vision in one eye) were just as accurate in large exocentric distance perception as those with binocular vision [45]. Critically, the limitation of monocular vision using a camera at 50 m is not a significant concern, because the onboard camera was applied to an exocentric distance estimation. That is, while the drone was 50 m from the student, it was only a few meters from the obstacle.

Stationary obstacles were used for this study, as they are a large part of the operational reality faced by small drone users such as photographers. Further, previous research indicated a number of accidents involving drones colliding with non-dynamic objects such as buildings and trees [7].

5.3. Operational Implications

The results of this study indicate that the use of the onboard camera may provide increased accuracy and consistency in exocentric distance estimations by ab initio RPA pilots, particularly at greater distances. These findings have implications for the design of RPA training programs and standard operating procedures. While traditional VLOS training focuses on visual awareness and spatial judgement without assistance, incorporating the use of a ground control station with a live video feed from the onboard camera could support skill development, especially in operations extending beyond 20 m. The improved consistency observed in the assisted group suggests that camera use may reduce the variability in pilot performance, which is a desirable outcome for safety-critical tasks. This aligns well with the work of Backman et al. [13], which was in the context of landing assistance.

Consideration can therefore be given to including camera-based perceptual assistance during the training period, not as a substitute for visual judgement, but as a tool to reinforce spatial understanding. This may be particularly relevant for scenarios involving obstacle-rich environments, such as infrastructure inspection or low-level urban operations. However, it must be emphasized that this should not lead to an erosion of regulatory boundaries between VLOS and BVLOS operations. Rather, the camera can be used as a supplementary aid in controlled settings during training to enhance perception without substituting core visual competencies. Consideration can be given to incorporating practical guidance on the need for following regulatory standards to prevent inappropriate overreliance on visual aids in VLOS operations.

5.4. Future Research

While the findings of this study point to improved accuracy and consistency in distance estimation from camera assistance, further work is needed to explore how these findings extend beyond the specific training context used. Future studies could investigate whether the improved performance results from enhanced perceptual support or whether it reflects a reliance on the camera image that does not translate into improved unassisted performance. Identifying whether the benefit leads to a transferable perceptual skill or is task-dependent will inform how camera use should be integrated into training curricula.

Longitudinal research could also examine whether repeated exposure to camera-supported tasks contributes to more lasting improvements in depth perception and spatial judgement, even when the camera is removed. This would help determine whether camera-based interventions have a training effect or simply function as a situational aid.

Additionally, expanding the participant pool beyond ab initio students to include experienced RPA operators would provide insight into whether the benefits observed are specific to novice users or whether experienced pilots could also benefit from using a camera. The current working hypothesis is that the camera would have less impact on exocentric distance estimation for experienced pilots; however, this warrants experimentation to determine whether it is supported by measurements.

Studies incorporating more ecologically valid scenarios, including dynamic flight paths, cluttered environments, and stress factors, would assist in understanding how these perceptual skills are deployed in more operationally realistic conditions. Although stationary obstacles were used for the study, future research should examine the potential of using camera-assisted distance estimation with dynamic obstacles. This has been done with unmanned ground vehicles [44].

A crossover design will be implemented with future students, as the course from which the students were drawn runs annually. The research can be further improved by using eye-tracking devices to measure how much time participants devote to watching the camera image compared with gazing at the natural environment.

6. Conclusions

Depth perception is a challenge for RPA pilots, who do not experience the same level of feedback as conventionally controlled aircraft pilots. Examination of the accident database indicated a higher proportion of accidents from depth perception failings in RPAS operations than in other sectors of aviation [7]. With a predicted 60.4 million RPAS flights by 2043 [3], there will be ever greater opportunity for the number of depth perception-related occurrences to increase.

To help improve depth perception skills, this study evaluated whether the use of the image from a camera onboard an RPA would improve the accuracy of exocentric distance estimations made by ab initio pilots. While there are different technologies available to assist in this skill, they can be difficult or expensive to install and use. Cameras are ubiquitous pieces of equipment that are included on most light, prosumer drones, and are thus easily available to VLOS ab initio pilots.

Two groups of ab initio students flew a drone towards obstacles at different distances from themselves. One group of participants used only direct viewing of the drone and its relationship with the obstacle it was approaching, while the second group had both a direct view of the drone and the obstacle, and assistance from the camera image. The findings identified benefits of using a camera to enhance distance estimation. For the group assisted by the camera, the overall mean of the estimation error and the consistency of estimation improved. However, the improvements were noted only at the further tested distance of 50 m, not at the closer tested distance of 20 m. The closer distance is part of a sphere within which humans are remarkably accurate in their depth perception skills [41]. It may be that using a camera at shorter distances of 20–30 m does not provide improvements in depth perception. It was also noted that both groups of ab initio pilots had high proportions of pilots who underestimated the distance between the drone and the obstacles. How this finding pertains to collision avoidance incidents involving drones is uncertain until further incident analysis is undertaken.

Further study is required to determine whether these findings extend from a cohort of ab initio pilots to more experienced RPAS operators. Does the benefit of using the camera to assist with depth perception decrease as experience is gained? Identified benefits in using a camera for both ab initio and more experienced RPA pilots can lead to consideration for inclusion in RPAS training syllabi.