Abstract

Digital maturity is increasingly recognised as a determinant of competitiveness for small and medium-sized enterprises (SMEs), yet empirical evidence from advanced economies remains limited. Here, we evaluate a sample of Luxembourgish manufacturing SMEs across the six dimensions of the EU's Digital Maturity Assessment Tool (DMAT) to quantify their overall maturity: Digital Business Strategy (DBS), Digital Readiness (DR), Human-Centric Digitalisation (HCD), Data Governance/Connectedness (DG), Automation and AI (AAI), and Green Digitalisation (GD). Because the DMAT's overall Digital Maturity Assessment (DMA) score is a composite of these dimensions, we introduce leave-one-out correlation (LOOC) to assess the association between each focal dimension and the DMA score without compositional artefacts. Within-firm disparities were tested via repeated-measures ANOVA, and firm profiles were examined using Principal Component Analysis (PCA) followed by hierarchical clustering (HCPC). The results converged across methods: HCD (r = 0.717) and DBS (r = 0.652) exhibited the strongest links to maturity, DG, AAI and GD were moderate contributors (r ≈ 0.50–0.58), and DR was weak (r = 0.298). The ANOVA indicated substantial between-dimension differences (partial η² ≈ 0.41), with DG and DBS leading and AAI and GD lagging. PCA–HCPC revealed two coherent cluster profiles, Leaders and Laggards, arrayed along a general maturity axis, with the largest gaps in DBS and HCD. Practically, firms that prioritise DBS and HCD exhibit higher DMA scores, which creates a foundation for industrialising and automating manufacturing processes. Given the small, single-country, cross-sectional design, longitudinal and adequately powered studies with objective performance outcomes are warranted to validate and generalise these findings.

1. Introduction

1.1. Background and Contextual Settings

Digital transformation has become a cornerstone of industrial competitiveness in the era of Industry 4.0 [1,2,3]. Across Europe, policymakers have set ambitious targets for technology adoption, aiming to have 75% of enterprises use advanced digital technologies by 2030 [4]. Manufacturing small- and medium-sized enterprises (SMEs) are a particular focus [5,6], as their digital uptake is crucial for economic resilience and innovation. As a consequence, the European Commission and Member States have launched numerous initiatives to boost SME digital maturity. The Digital Europe Programme (2021–2027) is investing over EUR 8 billion to strengthen Europe’s digital capacity and infrastructure (e.g., supercomputing, AI, cybersecurity) and to enable the widespread use of digital technologies [7,8]. A key element is the establishment of European Digital Innovation Hubs (EDIHs)—a network of 200+ one-stop shops [9,10] that provide SMEs with access to expertise, testbeds, and funding for digital projects [11,12,13,14]. Within this framework, a Digital Maturity Assessment Tool (DMAT) [15,16] has been rolled out to help SMEs assess their Digital Readiness, raise awareness and benchmark against peers [17].

In digitally advanced countries, significant gaps persist between “Leader” and “Laggard” firms. This disparity is evident in Luxembourg: the country boasts world-class digital infrastructure, yet it faces persistent challenges in SME digital uptake [18], owing largely to skill shortages and limited data portability and interoperability [19]. According to the latest Digital Intensity Index data [20], about 57.8% of Luxembourg’s SMEs have attained at least a basic level of digital intensity, on par with the EU average (57.7%). This moderate performance suggests that Luxembourg has yet to fully translate its potential into broad uptake by SMEs. Indeed, Luxembourg currently trails the EU’s frontrunners: around 90–93% of SMEs in Denmark and Finland have a basic level of digital intensity, while some lagging countries stand at around 50% [21]. Recognising this gap, the European Commission cautions that, at the current pace, neither Luxembourg nor the EU overall will reach the 2030 goal of 90% of SMEs achieving at least a basic level of digital intensity [20]. Alongside Luxembourg’s own national initiatives and strategies [22,23], a central conduit for implementing these strategies in the industrial sector is the Luxembourg Digital Innovation Hub (L-DIH).

Against this backdrop, systematic evidence on the digital maturity of Luxembourgish SMEs remains scarce. While policy instruments such as the DMAT are widely promoted across the EU, most existing analyses are either cross-country comparisons or conceptual discussions, leaving a gap in sector-specific and empirically grounded assessments. This absence is problematic given Luxembourg’s dual ambition: to close the adoption gap between frontrunners and Laggards, and to harness digitalisation as a lever for sustainability within the twin transition. A timely, targeted study is therefore needed to quantify SMEs’ maturity profiles, identify the organisational levers that most strongly drive advancement, and generate actionable insights for both national and European initiatives. By providing empirical evidence at the manufacturing SME level, this study aims to inform the policy agenda and support the practical mission of L-DIH as Luxembourg’s conduit for accelerating digital transformation.

1.2. Literature Review

The concept of digital maturity has become a recurrent theme in European policy and research discourse, especially in relation to the competitiveness and resilience of SMEs. Despite this, the literature and empirical evidence remain fragmented and often fail to align with the needs of policymakers and practitioners. Our rationale stems from a systematic review [24] of the existing literature, using the search string (“European Digital Innovation Hub”) in the first run and (“digital maturity assessment” AND SMEs) in the second run, as we were primarily interested in identifying studies resulting from the DIH network. Applying the eligibility criteria (manufacturing SMEs; empirical studies), we identified only two closely related studies in the first run and 18 additional studies from the second run and a snowballing search. Based on these results, three main limitations were identified: (i) methodological drift, (ii) a lack of empirical studies, and (iii) misalignment with the manufacturing scope of SMEs.

Methodological drift characterises much of the existing work. Numerous studies invoke digital maturity yet operationalise it through highly diverse, alternative frameworks, producing incompatible results. For instance, Sobczak [25] assesses the digital maturity of SMEs across countries using EU indicators. Tanhua et al. [26] propose a digital maturity scan leveraging open-ended questions, while Kljajic-Borstnar et al. [27] test a multi-attribute model approach in Slovenia. Similarly, Volf et al. [28] propose the SBRI (Small Business Digital Maturity Assessment and Road to Industry 4.0) methodology to assess the digital maturity of Czech manufacturing SMEs. Others reinvent existing concepts or offer customised, non-validated digital maturity solutions [29,30,31]. Although many DMA tools have been proposed, they either overlap or fragment the landscape, preventing comparability. Such models exemplify the problem that the EU sought to solve with DMAT: a proliferation of bespoke tools lacking interoperability.

Second, conceptual studies outnumber empirical ones. A considerable share of the literature remains review-based, descriptive, or conceptual [32,33]. While these works clarify frameworks and ecosystems, they do not provide empirical assessments of SME maturity in practice and thus add little evidence on the actual state of manufacturing SMEs. Among loosely related studies, some address digital transformation broadly but without performing maturity assessments. For instance, Crupi et al. [34] investigate the DIH’s role as a knowledge broker, Kääriäinen et al. [35] examine how “digital evangelists” support SMEs’ positioning, and Rahamaddulla et al. [30] investigate the value of digital transformation initiatives. While valuable, these perspectives remain adjacent to, rather than direct assessments of, digital maturity, and some even lean towards AI capability assessments [37]. Evidently, empirical studies that would establish validity are lacking [25,36].

Lastly, there is a misalignment with the manufacturing scope. Many empirical studies extend to public institutions [38], service firms [39], or generic “businesses” [40], as in the DMAT adaptation [16], or Sobczak’s [25] EU-level employment analyses. Such inclusions dilute the manufacturing focus and overlook the sector-specific challenges of production-oriented SMEs [41]. Even where DMAT itself is mentioned, it is often positioned instrumentally rather than as the object of the study. For instance, Mazgajczyk et al. [42] illustrate how DMAT results feed into EDIH service mapping, and Lorencin et al. [43] report “Test Before Invest” case studies in Croatia. These studies underscore DMAT’s institutional role but do not interrogate its empirical outcomes for manufacturing SMEs themselves.

The most precise point of reference is Krcelic et al. [38], who applied EU DMAT within private and public organisations in Croatia. Yet even here, the sample spans multiple organisation types, diluting insights specific to manufacturing SMEs. This highlights the core gap: there is still no focused empirical body of evidence that systematically applies DMAT to manufacturing SMEs. Given that only two related studies were identified from the systematic review, a backward and forward snowballing search was performed to locate additional DMA studies. Identified studies are reported in Table 1.

Furthermore, our focus is exclusively on DMA models, as they offer a comprehensive, strategy-driven and SME-relevant assessment of Digital Readiness, whereas Industry 4.0 models (e.g., the Acatech I4.0 Model [44]), while important, are primarily technology-centric, process-specific and less aligned with the broader organisational challenges SMEs face in digital transformation. In addition, some DMA tools, such as the PwC DMA, provide reports for the oil and gas sector [45] rather than for manufacturing, while others, such as the Deloitte-TM Forum DMA [46], report only a single case study. Thus, we primarily focus on empirical studies demonstrating the applicability of DMA in SMEs; more exhaustive and comparative studies of specific DMA tools are described elsewhere [47,48,49].

Table 1.

Meta-data of studies from the systematic review.

| Reference | Survey Tool | Region | Sample Pool | Sample Size |
|---|---|---|---|---|
| [50] | Custom DM tool | Canada | SMEs | 30 |
| [37] | FAIR EDIH AI | Finland | SMEs | >60 |
| [27] | MADM model | Slovenia | SMEs | 7 |
| [51] | DM and Readiness | Kazakhstan | SMEs | 12 (managers) |
| [28] | SBRI DMA | Czech | SMEs | 23 |
| [52] | DMAM | Kenya | SMEs | 382 |
| [53] | Digital retrofitting | UK | SMEs | 32 |
| [54] | OSME Tool | Finland | SMEs + Large | 9 |
| [26] | DM Scan | EU (Mixed) | SMEs + Large | 70 |
| [31] | Custom DM tool | Czech | SMEs | >100 |
| [55] | 6P Maturity Model | Italy | SMEs | 9 |
| [56] | MCDA DMA | Croatia | SMEs | 3 |
| [57] | Custom DMA | Croatia | SMEs | 6 |
| [30] | Smart Readiness | Global | SMEs | Concept * |
| [58] | TOE-based survey | Portugal | SMEs + Large | 9 |
| [25] | EU indicators | EU | Enterprises | Countries † |
| [59] | DREAMY | Italy | SMEs + Large | 1 (380 test) |
| [35] | VTT’s VMoD | Finland | SMEs | 19 |
| [36] | VTT’s VMoD | Finland | SMEs | Concept * |
| [29] | Custom DM tool | Spain | SMEs + Large | 30 SMEs (72) |
| [38] | EU DMAT | Croatia | SMEs/PSOs | 48 SMEs/62 PSOs |
| This study | EU DMAT | Luxembourg | SMEs | 30 |

* The survey tool is not demonstrated on a sample of firms, or data are provided without stating the sample size. † The DMA analysis is performed on a sample of EU countries.

Taken together, the literature paints a paradoxical picture. On the one hand, models, frameworks and ecosystem analyses surrounding SME digitalisation proliferate; on the other, the few DMA-based assessments of manufacturing SMEs that exist are largely incomparable. This inconsistency creates three gaps: (i) empirical scarcity (very few studies directly apply EU DMAT in a manufacturing context, and even fewer offer sector-specific analysis), (ii) fragmentation (alternative models proliferate without alignment, undermining comparability), and (iii) ecosystem bias (much of the literature examines DIHs, AI adoption, or transformation enablers but falls short of measuring SME baseline maturity with validated instruments). The present study addresses these shortcomings by applying the official EU DMAT survey systematically within Luxembourg’s manufacturing ecosystem. In doing so, it contributes evidence where the literature is weakest: sector-specific, comparable, and policy-relevant assessments of SME digital maturity.

1.3. Questions, Aims and Objectives

Building on contextual challenges and identified literature gaps, this study formulates a set of research questions. Namely, we set the core research question as follows:

RQ1: What is the current state of digital maturity among Luxembourgish manufacturing SMEs, and how do its dimensions interrelate in shaping overall maturity?

The overarching question is further broken down into the following sub-questions:

SQ1: Which dimensions represent the strongest predictors of overall digital maturity?

Strategy and human-centric capabilities often function as “meta-capabilities” that shape prioritisation, change readiness, and absorptive capacity. In contrast, technical and sustainability pillars typically realise their value once these foundations are in place [60]. To avoid compositional inflation when relating a dimension to the overall DMA score, we introduce leave-one-out correlation (LOOC) analysis to reduce bias in assessing predictors of DMA by excluding the focal dimension.

SQ2: Do firm characteristics such as sector, size, or age moderate digital maturity levels?

Prior studies often highlight firm age and size as relevant characteristics: younger firms tend to exhibit greater flexibility and a higher likelihood of adopting digital technologies. Such firms are more compatible with emerging innovations [61] because they were established in a digitally intensive environment (so-called “born digital” companies [62]), whereas older firms tend to remain persistently non-digital [63]. Younger managers are also more likely to advocate for digital adoption [64], and top management support is crucial in the transformation [65]. In a small, advanced economy, scale and longevity may serve as proxies for capability; conversely, legacy systems could burden data practices in older firms. Hence, we consider that characteristics such as age and size may skew our findings [66].

SQ3: Do specific digital maturity dimensions systematically lag or lead compared to others?

Within firms, capabilities are unlikely to develop uniformly. We expect DG and DBS to lead, reflecting data foundations and strategic alignment, and AAI to lag, given the weak adoption of advanced technologies that stems from complexity, staff shortages and the resource constraints that often hold back smaller firms [63]. To test these patterns, we treat the six DMAT dimensions as repeated measures within firms, allowing us to assess whether specific dimensions have advanced more than others or remained underdeveloped.

SQ4: Are there natural clusters of SMEs based on their maturity profiles?

Digital transformation pathways are rarely uniform, and SMEs often exhibit different strengths and weaknesses across maturity dimensions. Studies from the systematic review suggest that some firms advance strategically while lagging operationally, whereas others selectively prioritise automation, data, or human-centric practices. Although the DMAT report translates the DMA score into linear maturity levels (e.g., basic, average, moderately advanced, advanced), SME profiles rarely follow a purely linear scoring system. To capture these nuances, we adopt an unsupervised clustering approach, which allows us to move beyond predefined score brackets and identify empirically grounded clusters of SMEs.

Building on these questions, the main aim of the study is to generate robust, sector-specific, and policy-relevant evidence on the digital maturity of Luxembourgish manufacturing SMEs using the official EU DMAT framework. In support of this aim, we set the following objectives: (1) conduct a DMAT-based survey analysis of Luxembourgish manufacturing SMEs, producing dimension-level and overall maturity profiles; (2) conduct rigorous hypothesis testing to identify structural patterns and provide robust evidence for inferential findings; and (3) interpret the results in light of EU and national digitalisation agendas regarding the current state of manufacturing SMEs in Luxembourg.

The rest of the manuscript is structured as follows. Section 2 outlines the methodology, including the study protocol, survey instrument, scoring system, and analytical strategy for performing statistical and multivariate data analysis. Section 3 presents and discusses strictly statistical results, covering descriptives, dimension-level predictors, moderator effect analysis, within-firm disparities, and identified clusters. Section 4 discusses the findings in light of the existing literature, highlighting the importance of strategic and human-centric enablers, dimension-specific imbalances, and the clustering of SMEs into distinct maturity profiles. Lastly, we conclude the study by highlighting key implications for policy and practice, acknowledging the study’s limitations, and outlining future research directions.

2. Methodology

2.1. Study Protocol

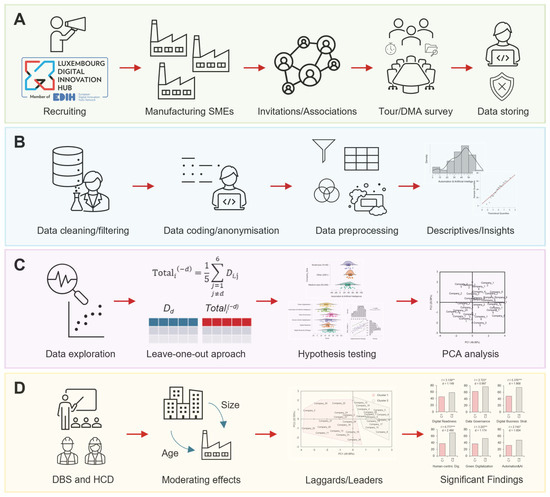

The study implements a four-module research protocol (Figure 1). Module A operationalises the DMAT through firm-level data collection. This stage encompasses SME recruitment, invitation to associations, on-site tours, and the administration of structured surveys, followed by secure data storage. Module B addresses data integrity and preparation, comprising cleaning, coding, and anonymisation, as well as preprocessing and descriptive statistics. Module C focuses on analytical procedures, including exploratory analysis, a leave-one-out approach, and hypothesis testing to identify the most influential predictors. Subsequently, PCA–HCPC (Principal Component Analysis with Hierarchical Clustering on Principal Components) is applied to delineate distinct SME cluster profiles. Module D extends the analysis by interpreting statistically significant results, assessing moderating effects, differentiating between digital Leaders and Laggards, and evaluating the relative importance of DMA dimensions across clusters.

Figure 1.

Data analysis protocol. SMEs were recruited via the L-DIH and assessed with the EU’s DMAT (A). Survey data were anonymised, cleaned, and preprocessed before descriptive profiling (B). Analytical steps included leave-one-out correlations, hypothesis testing, and PCA–HCPC clustering to identify natural firm profiles (C). Findings revealed the pivotal role that strategy and human resources play in digital maturity; moderator analysis revealed limited effects of firm size and age; and clustering distinguished Leaders from Laggards (D).

The data collection centred on the DMAT administered to manufacturing companies in Luxembourg. Participating firms were recruited through the L-DIH’s outreach channels—including direct invitations to companies that had engaged with L-DIH events and an open call via industry associations (e.g., FEDIL—Fédération entrepreneuriale multisectorielle)—aiming for a broad cross-section of the manufacturing ecosystem (from small family-owned businesses to larger industrial players). In addition, a census-based invitation was issued to all eligible SMEs, and participation was voluntary, ensuring that selection did not rely on convenience sampling. The final sample is proportionally aligned with structural characteristics of Luxembourg’s manufacturing sector, particularly with respect to SME size classes, dominant subsectors (metals, plastics, machinery), and the typical age distribution of industrial firms.

To encourage busy SME owners and managers to participate, the L-DIH offered facilitated sessions. In many cases, an L-DIH expert worked with the company’s representative (typically a production manager, technical director, or CEO) to guide them through the DMAT questionnaire, either in person or via video meeting. This approach ensured that the questions were clearly understood and that responses were interpreted consistently across firms. Each company was assured of confidentiality: individual results would be seen only by the company and the L-DIH assessment team, and any data used for research or policy purposes would be anonymised and aggregated.

The assessment procedure was performed in facilitated sessions. Two assessors from L-DIH would typically conduct the DMAT assessment. The session began with a brief interview and a company visit to gain an understanding of the company’s context, followed by a review of the DMAT questions one by one. The assessors ensured that the respondent understood each question in the context of their business (translating any abstract concepts into concrete examples). The respondent then selected the option that best described their company’s current practice, with the assessors recording the answers in the DMAT survey. This process typically took about 1–2 h per company, depending on the depth of the discussion. Upon completion, the company immediately received a DMAT results report generated by the platform, showing their scores in each dimension and an overall maturity level. The L-DIH experts reviewed the report with the participant, highlighting areas of strength and weakness. For instance, a firm might score high in Automation (having deployed some connected machinery) but low in Data Management (lacking data analytics capabilities). Such feedback not only validated the responses but also provided direct value to the company, which aligns with the “assessment and advice” mission of the EDIH programme. From a research perspective, these sessions also served to improve data quality, as the dialogue helped clarify any ambiguities in how questions applied to each firm.

All response data from the DMAT platform were exported to a secure database accessible to the research team. The dataset consisted of anonymised company profiles with their item-level responses and dimension scores. Before analysis, the data were checked for completeness and consistency. The analysis plan combined descriptive and comparative techniques. We calculated aggregate maturity scores for each dimension by converting the categorical responses to numeric scores (as defined by the DMAT scoring scheme) and normalising them to a 0–100 scale. All statistical analyses were performed on aggregated data, so individual company identities remain confidential. The European Commission stipulates that DMAT data be used in aggregated form for statistical purposes, a guideline we strictly followed. This protocol was carried out in close collaboration with ongoing L-DIH operations; as such, it benefited from the standardised methodology provided by the EDIH network while also allowing us to integrate research-specific goals into the data collection process. Any deviations from standard DMAT usage (for research measurement purposes) were minimal and were communicated transparently to participants. In sum, the study protocol ensured a high level of rigour by combining a validated assessment instrument, consistent implementation with expert support, and careful data management.

2.2. DMAT Survey and Scoring System

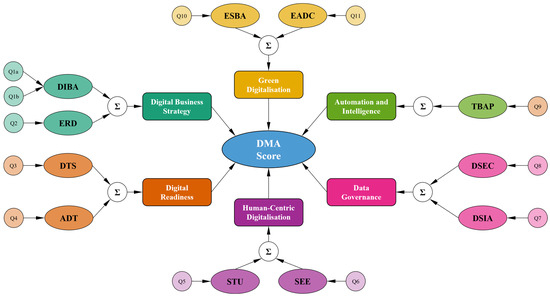

The DMAT survey [67,68] comprised a comprehensive set of questions grouped under six main dimensions (Figure 2): (1) Digital Business Strategy (DBS), (2) Digital Readiness (DR), (3) Human-Centric Digitalisation (HCD), (4) Data Governance/Connectedness (DG), (5) Automation and AI (AAI), and (6) Green Digitalisation (GD). In total, the survey comprised 11 questions across these dimensions, primarily in multiple-choice format, where each choice corresponded to a specific maturity level or practice. Companies also provided basic profile information (sector, number of employees, annual turnover range, and respondent’s role) to allow analysis by size class or industry segment. No names or uniquely identifying details of companies were recorded in the dataset; instead, each respondent was assigned an anonymous code.

Figure 2.

DMAT Tool (reworked illustration from [68]). The survey consists of the following subdimensions: DIBA = Digitalisation per Business Area; ERD = Enterprise Preparedness for Digitalisation; DTS = Digital Technologies Uptake; ADT = Advanced Digital Technologies used; STU = Staff Upskilling; SEE = Staff Engagement and Empowerment; DSIA = Data Storage, Organisation, Access and Exploitation; DSEC = Data Security; TBAP = Technologies Applied in Business Applications; EADC = Environmental Consideration of Digital Choices; ESBA = Enterprise use of Digital Technologies for Sustainability.

DMAT responses are scored as specified in the survey instrument [15]. To formalise the model, let $i \in \{1,\dots,n\}$ be the enterprise index, where $n = 30$ is the sample size, and let $j \in \{1,\dots,d\}$ be the DMAT dimension index, with $d = 6$. Let $q \in \{1,\dots,Q_j\}$ be the question index within dimension $j$, and let $o \in \{1,\dots,O_{j,q}\}$ be the option index within question $q$ of dimension $j$. For item-level scoring, let $w_{j,q,o} \ge 0$ be the points DMAT assigns to option $o$ of item $(j, q)$, and let $g_{i,j,q,o} \in [0, 1]$ be the graded response of enterprise $i$ (often a 0/1 indicator). Raw points per item are then

$$P_{i,j,q} = \sum_{o=1}^{O_{j,q}} w_{j,q,o}\, g_{i,j,q,o}.$$

The maximum attainable points per item are

$$W_{j,q} = \max_{g \in \mathcal{G}_{j,q}} \sum_{o=1}^{O_{j,q}} w_{j,q,o}\, g_{o},$$

where $\mathcal{G}_{j,q}$ denotes the set of admissible response patterns for item $(j, q)$. Normalisation to a bounded 0–10 scale gives

$$Q_{i,j,q} = 10\,\frac{P_{i,j,q}}{W_{j,q}}.$$

This formulation ensures that $0 \le Q_{i,j,q} \le 10$ across all question types and guarantees correct up- or down-scaling regardless of $W_{j,q}$. It also implements the European Commission’s requirement that items with fewer than ten possible points are proportionally up-scaled and items with more than ten points (if present) are proportionally down-scaled, yielding strict commensurability. Dimension scores are then aggregated on a 0–100 scale following the official multipliers. Note that in some special cases the graded response $g$ is non-dichotomous and is defined by the instrument’s scales.

To estimate each dimension’s aggregate score, let $\alpha_{j,q} \ge 0$ be the official item weights for dimension $j$, with $\sum_{q=1}^{Q_j} \alpha_{j,q} = 1$. The dimension score is then

$$D_{i,j} = 10 \sum_{q=1}^{Q_j} \alpha_{j,q}\, Q_{i,j,q}.$$

In the special case of Dimension 5 (i.e., AAI), the single question satisfies $Q_j = 1$ and $\alpha_{j,1} = 1$. Each dimension score $D_{i,j}$ of enterprise $i$ is thus bounded in $[0, 100]$, providing a standardised maturity score per dimension. Lastly, the total DMA score for enterprise $i$ is the average of all dimension scores:

$$\mathrm{DMA}_i = \frac{1}{d} \sum_{j=1}^{d} D_{i,j}.$$

The summary index is strictly derived from the official DMAT and provides a single measure of enterprise digital maturity that is consistent with the Commission’s framework.
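The scoring chain described in this subsection can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the official DMAT implementation: all function names are hypothetical. We assume that the maximum attainable points W are the sum of all option points for multi-select items and the largest single option for single-choice items. The option points in the usage lines are invented for illustration only.

```python
from typing import Sequence


def max_points(points: Sequence[float], multi_select: bool) -> float:
    """W: largest attainable total (assumed: all options if multi-select, best option otherwise)."""
    return sum(points) if multi_select else max(points)


def item_score(points: Sequence[float], responses: Sequence[float], multi_select: bool) -> float:
    """Q = 10 * P / W, where P is the point-weighted sum of graded responses g in [0, 1]."""
    if len(points) != len(responses):
        raise ValueError("one graded response is needed per option")
    if any(not 0.0 <= g <= 1.0 for g in responses):
        raise ValueError("graded responses must lie in [0, 1]")
    raw = sum(w * g for w, g in zip(points, responses))  # P for this firm and item
    return 10.0 * raw / max_points(points, multi_select)  # up- or down-scaled to 0-10


def dimension_score(item_scores: Sequence[float], weights: Sequence[float]) -> float:
    """D = 10 * sum(alpha_q * Q_q); weights must sum to 1, so D lies in [0, 100]."""
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("item weights must sum to 1")
    return 10.0 * sum(a * q for a, q in zip(weights, item_scores))


def dma_score(dimension_scores: Sequence[float]) -> float:
    """Overall DMA: unweighted mean of the d dimension scores."""
    return sum(dimension_scores) / len(dimension_scores)


# Hypothetical single-choice item: options worth 0..4 points; the firm picks the 3-point option.
q1 = item_score([0, 1, 2, 3, 4], [0, 0, 0, 1, 0], multi_select=False)  # 7.5 (up-scaled)
# Hypothetical multi-select item: 12 one-point options; the firm ticks 6 of them.
q2 = item_score([1] * 12, [1] * 6 + [0] * 6, multi_select=True)  # 5.0 (down-scaled)
d_example = dimension_score([q1, q2], [0.5, 0.5])  # 62.5
```

The two hypothetical items show both directions of the rescaling: a 4-point item is stretched to the 0–10 range, and a 12-point item is compressed into it, so every item contributes on the same scale before weighting.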

2.3. Analytical Strategy

2.3.1. Correlation and Regression Analysis

The analytical strategy is structured to align directly with the research sub-questions (SQ1–SQ4), progressing from dimension-level associations to multivariate clustering. First, we estimated correlations and fitted regressions using a leave-one-out (LOO) approach to identify the dimensions that most strongly predict overall digital maturity (SQ1). To avoid tautology, the focal dimension is left out of the dependent variable, and the LOO total used to assess the association of dimension $j$ with the remaining dimensions is

$$\mathrm{Total}_i^{(-j)} = \sum_{l \neq j} \beta_l\, D_{i,l},$$

with equal weights $\beta_l = 1/(d-1) = 1/5$, so that the LOO total is the average of the remaining five dimensions:

$$\mathrm{Total}_i^{(-j)} = \frac{1}{d-1} \sum_{l \neq j} D_{i,l}.$$

For each dimension $j$, we assess the unique link between the dimension score $D_{i,j}$ and $\mathrm{Total}_i^{(-j)}$ using Pearson’s correlation $r(D_j, \mathrm{Total}^{(-j)})$ and the simple regression $\mathrm{Total}^{(-j)} \sim D_j$, reporting the standardised slope and $R^2$. Defining the dependent variable as the average of the remaining five dimensions avoids the compositional inflation that would otherwise bias the correlations. To stabilise inference under the small-sample condition, 2000 bootstrap replications were performed for each correlation, yielding bias-corrected confidence intervals. Model diagnostics include the Shapiro–Wilk (SW) test for residual normality and the root mean squared error (RMSE) as a measure of predictive accuracy. This approach allowed us to evaluate the unique predictive strength of each dimension relative to the composite maturity index.
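A minimal Python sketch of the LOO correlation and its bootstrap interval follows. It assumes equal weights over the remaining dimensions and implements a bias-corrected (BC) percentile bootstrap without the acceleration term of BCa, because the text does not state which variant was used. The function names and the default seed are hypothetical. Since the regression of the LOO total on a single dimension has one predictor, its standardised slope equals r and its R² equals r², so one correlation serves all three quantities.

```python
import math
import random
from statistics import NormalDist, mean


def pearson(x, y):
    """Pearson's r; returns NaN when either variable has zero variance."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return math.nan
    return sxy / math.sqrt(sxx * syy)


def loo_total(scores, j):
    """Equal-weight average of every dimension except the focal dimension j."""
    return [sum(v for l, v in enumerate(row) if l != j) / (len(row) - 1) for row in scores]


def loo_correlation(scores, j):
    """r(D_j, Total^(-j)); also the standardised slope of the simple regression (R^2 = r^2)."""
    return pearson([row[j] for row in scores], loo_total(scores, j))


def bc_bootstrap_ci(scores, j, reps=2000, alpha=0.05, seed=2024):
    """Bias-corrected percentile bootstrap CI for the LOO correlation, resampling firms (rows)."""
    rng = random.Random(seed)
    n = len(scores)
    r_hat = loo_correlation(scores, j)
    boot = []
    for _ in range(reps):
        sample = [scores[rng.randrange(n)] for _ in range(n)]
        r = loo_correlation(sample, j)
        if not math.isnan(r):  # skip degenerate resamples with zero variance
            boot.append(r)
    boot.sort()
    nd, b = NormalDist(), len(boot)
    # Bias-correction constant z0 from the share of bootstrap r below the point estimate
    # (clamped so that inv_cdf stays finite).
    share = min(max(sum(r < r_hat for r in boot) / b, 1 / (b + 1)), b / (b + 1))
    z0, z = nd.inv_cdf(share), nd.inv_cdf(1 - alpha / 2)
    lo_idx = min(b - 1, int(nd.cdf(2 * z0 - z) * b))
    hi_idx = min(b - 1, int(nd.cdf(2 * z0 + z) * b))
    return r_hat, boot[lo_idx], boot[hi_idx]
```

With the firm-by-dimension score matrix as `scores`, calling `bc_bootstrap_ci(scores, j)` for each `j` in `range(6)` would give one point estimate and interval per dimension. Resampling whole rows keeps each firm's six scores together, which preserves the within-firm dependence that the LOO total relies on.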

2.3.2. Moderator Analysis

After the LOOC tests, we examined whether maturity levels vary systematically by firm size, age, or sector. Company size was operationalised categorically according to the European Commission’s SME size classes and analysed with one-way ANOVA followed by Holm-adjusted pairwise comparisons. Organisational age (years since foundation) was treated as continuous and examined via Pearson’s correlation with maturity scores.
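For the size comparison, the omnibus F statistic and the Holm adjustment can be sketched as below. The function names are hypothetical. The Python standard library has no F distribution, so turning F into a p-value would require a statistics package. The Holm step follows the same step-down rule as R's `p.adjust(p, method = "holm")`. For the age analysis, Pearson's r is available in Python 3.10+ as `statistics.correlation`.

```python
from typing import List, Sequence, Tuple


def one_way_anova(groups: Sequence[Sequence[float]]) -> Tuple[float, int, int]:
    """F statistic and degrees of freedom for a one-way ANOVA across size classes."""
    values = [v for g in groups for v in g]
    grand = sum(values) / len(values)
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    df_between, df_within = len(groups) - 1, len(values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within), df_between, df_within


def holm_adjust(pvalues: Sequence[float]) -> List[float]:
    """Holm step-down: scale the r-th smallest p by (m - r + 1), keep it monotone, cap at 1."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):  # rank is 0-based, so the multiplier is m - rank
        running_max = max(running_max, min(1.0, (m - rank) * pvalues[idx]))
        adjusted[idx] = running_max
    return adjusted
```

Holm controls the family-wise error rate like Bonferroni but is uniformly more powerful. With only a few size classes, and therefore few pairwise contrasts, the difference is modest but never unfavourable.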

2.3.3. Within-Subject Comparisons Across Dimensions

To test whether specific dimensions systematically lag or lead, we performed a repeated-measures ANOVA treating the dimensions as a within-subject factor. This approach leverages the paired design, as each enterprise contributes scores for all dimensions. Assumptions were verified using Mauchly’s test of sphericity, with the Greenhouse–Geisser correction applied if violated. QQ-plots of standardised residuals were inspected to confirm normality. Post hoc contrasts between dimension means were performed with Holm–Bonferroni correction. Effect sizes were reported as partial η2 for omnibus tests and Cohen’s d for pairwise comparisons. This analysis examines whether baseline maturity systematically varies across conceptual domains and quantifies the magnitude of such differences.
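The omnibus quantities in this paragraph can be computed directly from an n × k score matrix (rows are firms, columns are dimensions). The sketch below uses hypothetical function names and the standard library only. It returns F with its degrees of freedom, partial η² = SS_dimension / (SS_dimension + SS_error), and the Greenhouse–Geisser ε computed from the double-centred covariance matrix. The corrected degrees of freedom would then be ε(k − 1) and ε(n − 1)(k − 1). The text does not say which Cohen's d variant was used for pairwise contrasts, so the paired d_z shown here is an assumption. Mauchly's test and p-values are omitted.

```python
from statistics import mean, stdev


def rm_anova(X):
    """One-way repeated-measures ANOVA: returns F, df_effect, df_error, partial eta squared."""
    n, k = len(X), len(X[0])
    grand = mean(v for row in X for v in row)
    col_means = [mean(row[j] for row in X) for j in range(k)]
    row_means = [mean(row) for row in X]
    ss_cond = n * sum((m - grand) ** 2 for m in col_means)  # between dimensions
    ss_subj = k * sum((m - grand) ** 2 for m in row_means)  # between firms, removed from error
    ss_total = sum((v - grand) ** 2 for row in X for v in row)
    ss_err = ss_total - ss_cond - ss_subj  # dimension-by-firm residual
    df_cond, df_err = k - 1, (n - 1) * (k - 1)
    f = (ss_cond / df_cond) / (ss_err / df_err)
    return f, df_cond, df_err, ss_cond / (ss_cond + ss_err)


def greenhouse_geisser_epsilon(X):
    """Box/Greenhouse-Geisser epsilon from the double-centred covariance of the k columns."""
    n, k = len(X), len(X[0])
    cols = [[row[j] for row in X] for j in range(k)]
    mu = [mean(c) for c in cols]
    S = [[sum((cols[a][i] - mu[a]) * (cols[b][i] - mu[b]) for i in range(n)) / (n - 1)
          for b in range(k)] for a in range(k)]
    row_m = [mean(r) for r in S]  # equal to column means because S is symmetric
    grand = mean(row_m)
    Sc = [[S[a][b] - row_m[a] - row_m[b] + grand for b in range(k)] for a in range(k)]
    trace = sum(Sc[a][a] for a in range(k))
    return trace ** 2 / ((k - 1) * sum(v * v for r in Sc for v in r))


def cohens_dz(a, b):
    """Paired Cohen's d_z: mean of the differences divided by their standard deviation."""
    diffs = [y - x for x, y in zip(a, b)]
    return mean(diffs) / stdev(diffs)
```

Epsilon ranges from 1/(k − 1), the maximal violation of sphericity, to 1, when sphericity holds. With k = 6 dimensions, the correction can therefore shrink the degrees of freedom by up to a factor of five.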

2.3.4. PCA with Agglomerative Hierarchical Clustering

Given that classical inferential statistics (e.g., correlation, ANOVA) methods are standardised and extensively documented in the literature, reporting their results does not require formal derivations, as readers are generally familiar with their mechanisms and assumptions. However, PCA combined with hierarchical clustering is the core modelling framework of this study, transforming the six dimensions into a lower-dimensional space and partitioning SMEs into clusters. Because this process involves several successive mathematical transformations—data standardisation, eigen decomposition, and distance metrics used—explicit equations were introduced to make the process transparent and reproducible. We rely on mathematical formulation and assumptions described by [69], as the analysis is performed in RStudio (v.R-4.5.1), package FactoMineR (v.2.12) [70]. Formally, we start by expressing the dataset as

where n indexes enterprises, and Dj are column-wise score of dimension j. Thus, column means and standard deviation are determined:

Standardised matrix is then

with μj being the mean and sj as the standard deviation of dimensions D. The standardised data matrix is then:

The covariance matrix is

Eigen decomposition gives

where λk is the eigenvalue and vk is the eigenvector of the k-th component. The principal component scores for enterprise i on component k are

Next, we perform agglomerative hierarchical clustering on principal components k. To do so, let F = (fi,k)n×K be the score matrix for the K retained components. The squared Euclidean distance between enterprises i and u is

Ward’s agglomerative clustering merges clusters A and B by minimising the increase in within-cluster inertia:

where |A| and |B| are the cluster sizes and gA and gB are the cluster centroids in the K-dimensional component space. Cluster interpretation was based on the quality measures defined in FactoMineR [70]. The quality of representation of individuals on PCs was assessed using the squared cosine (cos2), which quantifies the proportion of an individual’s squared distance from the origin that is captured by a given PC axis. The contribution (ctr%) was used to evaluate the extent to which each individual contributes to the inertia of an axis, defined as the proportion of the axis variance attributable to a particular enterprise. Analytically, the squared cosine is defined as

$$\cos^2_{ik} = \frac{f_{ik}^2}{\sum_{k'=1}^{K} f_{ik'}^2}.$$

Here, the numerator f²i,k is the squared projection coordinate of enterprise i on axis k, while the denominator is the squared distance of the point from the origin in the K-dimensional PCA space. High values (close to 1) indicate that axis k represents enterprise i accurately. The contribution (ctr%) is

$$\mathrm{ctr}_{ik} = \frac{f_{ik}^2}{n\,\lambda_k} \times 100,$$

where n is the number of enterprises and λk is the eigenvalue of axis k. This is the percentage of the variance of axis k attributable to enterprise i. Cluster characterisation is performed using a t-statistic, which tests whether the mean of a dimension in cluster A differs significantly from its global mean:

$$t_A = \frac{\bar{x}_A - \bar{x}}{s / \sqrt{n_A}},$$

where x̄A is the cluster mean, x̄ is the global mean, s is the global standard deviation, and nA is the cluster size. The cluster representation is used to define digital maturity levels by characterising each cluster through its scores. Specifically, each cluster is described by the individuals specific to it. Two kinds of specific individuals are suggested: paragons (individuals closest to the centre of their class) and specific individuals (those furthest from the centres of the other classes) [69]. We therefore rely on both paragon and specificity metrics for cluster characterisation.
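The chain of steps above (standardisation, eigendecomposition, scores, cos2, ctr, and Ward merging) can be traced in a short NumPy sketch. It is an illustration under stated assumptions, not the FactoMineR implementation used in the study:

- It assumes the 1/n standard-deviation convention.
- It uses hypothetical data.
- It implements Ward's criterion naively, which is adequate only for small n.
- It omits FactoMineR's optional k-means consolidation of the HCPC partition.

All function names are hypothetical.

```python
import numpy as np

def pca_standardised(X):
    """PCA of z-scored columns (1/n convention); returns eigenvalues, eigenvectors, scores."""
    n = X.shape[0]
    mu = X.mean(axis=0)
    s = X.std(axis=0)                     # ddof=0, i.e. the 1/n standard deviation
    Z = (X - mu) / s
    C = Z.T @ Z / n                       # equals the correlation matrix of the dimensions
    eigvals, eigvecs = np.linalg.eigh(C)  # eigh returns eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]     # sort components by decreasing variance
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    F = Z @ eigvecs                       # scores f_ik = z_i^T v_k
    return eigvals, eigvecs, F

def cos2_and_ctr(F, eigvals, K):
    """Squared cosines and contributions (%) on the first K components."""
    Fk = F[:, :K]
    cos2 = Fk**2 / (Fk**2).sum(axis=1, keepdims=True)
    ctr = 100 * Fk**2 / (F.shape[0] * eigvals[:K])
    return cos2, ctr

def ward_cost(FA, FB):
    """Increase in within-cluster inertia if clusters A and B are merged."""
    diff = FA.mean(axis=0) - FB.mean(axis=0)
    return len(FA) * len(FB) / (len(FA) + len(FB)) * float(diff @ diff)

def ward_clusters(Fk, n_clusters):
    """Naive agglomerative Ward clustering on retained PC scores (fine for small n)."""
    clusters = [[i] for i in range(len(Fk))]
    while len(clusters) > n_clusters:
        # Find the pair of clusters whose merge increases inertia the least.
        _, p, q = min(
            (ward_cost(Fk[a], Fk[b]), p, q)
            for p, a in enumerate(clusters)
            for q, b in enumerate(clusters)
            if p < q
        )
        clusters[p] = clusters[p] + clusters[q]
        del clusters[q]
    labels = np.empty(len(Fk), dtype=int)
    for c, members in enumerate(clusters):
        labels[members] = c
    return labels

# Hypothetical data: 30 firms x 6 dimensions sharing a common "maturity" level.
rng = np.random.default_rng(42)
level = rng.normal(55, 12, size=(30, 1))
X = np.clip(level + rng.normal(0, 10, size=(30, 6)), 0, 100)

eigvals, eigvecs, F = pca_standardised(X)
K = 2
cos2, ctr = cos2_and_ctr(F, eigvals, K)
labels = ward_clusters(F[:, :K], n_clusters=2)
print("variance explained (%):", np.round(100 * eigvals / eigvals.sum(), 1))
print("cluster sizes:", np.bincount(labels))
```

Two identities make useful checks. First, with the 1/n convention the eigenvalues sum to the number of dimensions. Second, each eigenvalue equals the mean squared score on its axis, which is why the contributions on every axis sum to 100%.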

2.3.5. Post Hoc Power Analysis

To assess the statistical power of the correlations between each dimension Dd and the LOO overall score (Totali(−j)), we first applied Fisher’s z-transformation to the correlation coefficient [71,72]. The transformation yields an approximately normal sampling distribution for correlation coefficients. Let δ denote the true population correlation and r the sample correlation. The Fisher z-transformation is defined as

$$z = \operatorname{atanh}(r) = \frac{1}{2}\ln\!\left(\frac{1 + r}{1 - r}\right),$$

which stabilises the variance of the correlation coefficient. Under the null hypothesis H0: δ = 0, the transformed statistic approximately follows a normal distribution with mean zero and variance 1/(n − 3), where n is the sample size. Under the alternative H1: δ ≠ 0, its mean becomes atanh(δ), so the standardised statistic z√(n − 3) is shifted by

$$\psi = \sqrt{n - 3}\;\operatorname{atanh}(\delta).$$

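For a two-sided test with significance level α, the critical value is z1−α/2. The statistical power is obtained as

$$1 - \beta = \Phi\!\left(|\psi| - z_{1-\alpha/2}\right) + \Phi\!\left(-|\psi| - z_{1-\alpha/2}\right),$$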

where Φ(·) is the standard normal cumulative distribution function. Note that atanh(·) denotes the inverse hyperbolic tangent, so Fisher’s transformation of the sample correlation is z = atanh(r), and atanh(δ) is its population counterpart. With our sample of n = 30 SMEs, the analysis shows that, at the conventional α = 0.05 threshold, correlations of magnitude |δ| ≥ 0.50 can be detected with approximately 80% power, while |δ| ≥ 0.55 achieves ~90% power. When applying a Bonferroni adjustment for six simultaneous tests (α* = 0.05/6 ≈ 0.0083), the minimum detectable effect size increases: 80% power requires |δ| ≈ 0.59, and 90% power requires |δ| ≈ 0.64. The study is therefore sufficiently powered to detect only moderate-to-large correlations [71,72] between dimensions and the LOO-DMA score, and it is underpowered for smaller effects. Consequently, non-significant results are interpreted cautiously, as they may reflect limited sensitivity rather than the absence of an effect. The calculation was cross-validated using G*Power software (v3.1.9.7) [72].
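The power calculation above can be reproduced with Python's standard library. This sketch covers only the normal-approximation formula, and the function names are hypothetical. Because it ignores the small bias in atanh(r), its results may differ from G*Power's exact computation in the second decimal.

```python
from math import atanh, sqrt
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal: mean 0, sd 1

def fisher_z_power(delta, n, alpha=0.05):
    """Approximate two-sided power of the test of H0: rho = 0 via Fisher's z."""
    psi = sqrt(n - 3) * atanh(abs(delta))        # standardised shift under H1
    z_crit = _STD_NORMAL.inv_cdf(1 - alpha / 2)  # critical value z_{1-alpha/2}
    return _STD_NORMAL.cdf(psi - z_crit) + _STD_NORMAL.cdf(-psi - z_crit)

def min_detectable_r(target_power, n, alpha=0.05, tol=1e-6):
    """Smallest |rho| reaching target_power, found by bisection (power rises with |rho|)."""
    lo, hi = 0.0, 0.999
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if fisher_z_power(mid, n, alpha) < target_power:
            lo = mid
        else:
            hi = mid
    return hi

n = 30
for alpha in (0.05, 0.05 / 6):  # unadjusted, then Bonferroni for six tests
    print(f"alpha = {alpha:.4f}: "
          f"80% power needs |r| >= {min_detectable_r(0.80, n, alpha):.2f}, "
          f"90% power needs |r| >= {min_detectable_r(0.90, n, alpha):.2f}")
```

Bisection is used because the formula has no closed-form inverse once both tails are included. In practice the second tail is negligible for moderate effects.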

3. Results

3.1. Descriptive Analysis

The sample consisted of 30 manufacturing SMEs located in Luxembourg, spanning multiple subsectors of the NACE Rev. 2 classification [73]. In terms of size distribution, 23.3% were classified as small (10–49 employees), 46.7% as medium (50–249 employees), and 30% as other (≥250 employees, but classified as SMEs on the basis of their balance sheet), consistent with European Commission SME definitions [74]. The mean organisational age was 29.86 years (SD = 16.23, range = 4–64 years). Table 2 presents descriptive statistics for the six dimensions and the overall maturity score.

Table 2.

Descriptive statistics of the sample.

Across enterprises, mean scores were highest for Data Governance (M = 70.3, SD = 15.9), Digital Business Strategy (M = 62.2, SD = 18.0), and Human-Centric Digitalisation (M = 54.6, SD = 21.4), while Automation and Artificial Intelligence showed the lowest mean maturity level (M = 40.5, SD = 15.4). Variability was moderate across dimensions, with standard deviations ranging from 13.6 to 21.4. There was no violation of normality based on the Shapiro–Wilk test (p > 0.05). These descriptive statistics provide an overview of baseline maturity patterns, setting the foundation for subsequent inferential analyses.

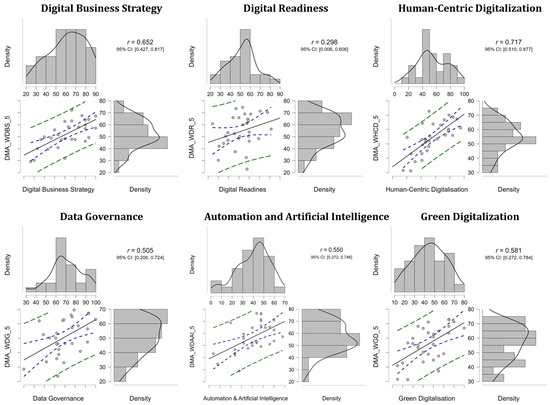

3.2. Predictive Strength of Maturity Dimensions

To examine how well each dimension predicts the overall maturity score, we first computed a leave-one-out (LOO) index for each dimension: the average of the five remaining dimensions, excluding the one being tested (Totali(−j)). Because the overall score is itself the mean of all six dimensions, correlating a dimension with it would place that dimension on both sides of the association and inflate the correlation; the LOO index avoids this compositional bias. To stabilise inference in the small sample, 95% confidence intervals (CIs) were estimated from 2000 bootstrap resamples. For each dimension Dd, a correlation (r) and a simple linear regression (R2) were computed with Total(−d) as the dependent variable, which removes the mechanical overlap between Dd and the dependent variable. Results are summarised in Table 3.
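The leave-one-out logic can be illustrated with a small NumPy sketch on hypothetical data. It assumes a percentile bootstrap, since the text does not state which bootstrap interval was used. It also relies on the fact that, for a single predictor, the regression R² equals r². The function names are hypothetical. The first demonstration shows why the LOO index matters: with six mutually independent dimensions, correlating one dimension with the full six-dimension mean yields roughly 1/√6 ≈ 0.41 purely by construction, whereas the LOO version stays near zero.

```python
import numpy as np

def loo_total(X, j):
    """Total_i(-j): per-enterprise mean of the dimensions other than column j."""
    return np.delete(X, j, axis=1).mean(axis=1)

def loo_correlation(X, j, n_boot=2000, seed=0):
    """Pearson r (and R^2) between D_j and Total(-j), with a percentile bootstrap 95% CI."""
    x, y = X[:, j], loo_total(X, j)
    r = np.corrcoef(x, y)[0, 1]
    rng = np.random.default_rng(seed)
    n = len(x)
    boot = np.empty(n_boot)
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample enterprises with replacement
        boot[b] = np.corrcoef(x[idx], y[idx])[0, 1]
    lo, hi = np.nanpercentile(boot, [2.5, 97.5])  # nan-safe if a resample is degenerate
    return r, r**2, (lo, hi)

# Compositional inflation: six independent hypothetical dimensions.
rng = np.random.default_rng(1)
X_indep = rng.normal(size=(5000, 6))
r_full = np.corrcoef(X_indep[:, 0], X_indep.mean(axis=1))[0, 1]  # inflated by overlap
r_loo = np.corrcoef(X_indep[:, 0], loo_total(X_indep, 0))[0, 1]  # close to zero
print(f"full-total r = {r_full:.3f}, LOO r = {r_loo:.3f}")

# Hypothetical 30 x 6 score matrix (not the study data).
X = np.clip(rng.normal(55, 12, size=(30, 1)) + rng.normal(0, 10, size=(30, 6)), 0, 100)
r, r2, (lo, hi) = loo_correlation(X, j=0)
print(f"r = {r:.3f}, R^2 = {r2:.3f}, 95% CI [{lo:.2f}, {hi:.2f}]")
```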

Table 3.

Results of leave-one-out correlation and single-model regression coefficients.

HCD exhibits the strongest correlation with overall maturity (r = 0.717, 95% CI [0.51, 0.88], R2 = 0.514), followed by DBS (r = 0.652, 95% CI [0.43, 0.82], R2 = 0.425) and GD (r = 0.581, 95% CI [0.27, 0.78], R2 = 0.337), all showing strong effect sizes (Fisher’s z(HCD) = 0.901, z(DBS) = 0.779, z(GD) = 0.664). The AAI dimension showed moderate predictive power (r = 0.550, 95% CI [0.28, 0.74]), as did DG (r = 0.505, 95% CI [0.21, 0.72]). In contrast, the DR dimension displayed only a weak, non-significant correlation with the maturity score (r = 0.298, p = 0.110). The Shapiro–Wilk normality tests were all non-significant (p > 0.2), suggesting that normality is not violated.

Furthermore, for illustrative purposes, Figure 3 shows the linear dependency between each dimension Di,d and Totali(−j) as correlation scatter plots with marginal densities, confidence intervals, and prediction intervals. The scatterplots visually reaffirm the results presented in Table 3. HCD and DBS display a strong linear dependency, with narrow 95% confidence intervals and relatively narrow prediction intervals, indicating both a strong correlation and a stable predictive capacity. In contrast, DR shows wide dispersion of points, a shallow slope, and a broad prediction region, consistent with its non-significant estimate (r = 0.298). Its marginal density further illustrates broad variation across enterprises, which weakens its alignment with the overall maturity score.

Figure 3.

Bivariate correlation analysis between LOO total score (Total(−j)) and all DMA dimensions. Each panel is supplemented with an associated distribution plot. The x-axes represent dimensions, and the y-axes represent Total(−j) scores. Each panel displays a scatterplot with a fitted regression line (solid black), the 95% confidence interval for the mean estimate (dashed blue), and the 95% prediction interval (dashed green line). Density plots on the margins illustrate the distributions of both predictor and outcome variables. Pearson correlation coefficients (r) with corresponding 95% confidence intervals quantify the strength and direction of each association.

The technical enablers—DG, AAI, GD—occupy an intermediate position. Scatterplots exhibit moderate slopes with visible dispersion, as reflected in correlations ranging from r = 0.50 to r = 0.58. The density curves suggest a relatively balanced distribution across SMEs, but with greater variability compared to HCD and DBS. The variability presumably contributes to wider confidence intervals in the regression fits. Ultimately, the visual description reinforces the conclusions: human- and strategy-oriented capabilities drive maturity, while technical and sustainability dimensions contribute moderately, and basic readiness exerts little independent effect.

3.3. Structural Moderators of Maturity

To examine whether companies’ characteristics have a moderating effect on the overall maturity, we focused on company size and organisational age. Company size was operationalised categorically (small, medium, and other) following the European Commission classification and tested using one-way ANOVA. Organisational age, measured continuously in years, was analysed using Pearson’s correlation and linear regression to estimate whether maturity scores vary systematically with age.

Sectoral analysis was not pursued as a moderator for two reasons. Firstly, the sample distribution was highly unbalanced across sectors. Although more than seven sectors were represented, several categories included only one to three companies, rendering both parametric and non-parametric group comparisons statistically unreliable. Secondly, given the limited national context (30 SMEs in Luxembourg), reporting sectoral breakdowns would compromise confidentiality obligations, since the identification of companies within niche sectors could lead to indirect disclosure of individual respondents. For both methodological and ethical reasons, the sector was excluded from testing.

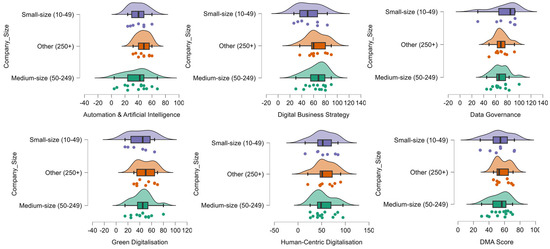

Figure 4 illustrates the group comparisons underlying the ANOVA results. None of the comparisons between size categories showed statistically significant differences. Only the DBS comparison showed slight skewness across size groups, again without a significant effect (p > 0.05). Hence, we conclude that company size has no moderating effect on the individual dimensions or on the overall DMA score.

Figure 4.

Visual representation of company size comparison (y-axis) and individual dimensions (x-axis).

The moderating role of organisational age was tested using both Pearson’s correlation coefficient (r) and Spearman’s rank correlation (ρ) to capture linear and monotonic relationships, respectively. As shown in Table 4, organisational age had no significant association with the overall maturity score (r = −0.04, p = 0.832; ρ = −0.081, p = 0.669). Similarly, none of the six dimensions correlated significantly with age. The closest to an effect was a negative, non-significant trend for Data Governance (r = −0.263, p = 0.16; ρ = −0.310, p = 0.095), hinting that older SMEs may score lower on this dimension; however, the evidence is insufficient to support this effect. Although size and age effects are commonly reported in the literature, neither characteristic showed an effect on digital maturity in this sample of manufacturing SMEs.

Table 4.

Evaluating potential moderating effect of age on dimensions and overall DMA score.

In summary, neither company size nor organisational age emerged as a significant moderator of digital maturity in this sample of Luxembourgish SMEs. The absence of differences across size categories suggests that the resource availability associated with firm scale does not directly translate into higher maturity levels. Likewise, the lack of correlation with organisational age indicates that digital maturity is not a function of firm longevity in this sample, although such a relationship has been reported previously [61]. The slight negative trend observed for Data Governance in older firms may reflect challenges in modernising legacy infrastructure, though this pattern remains inconclusive. Taken together, these findings suggest that structural attributes such as size and age exert limited influence on digital maturity outcomes, reinforcing the central role of strategic orientation and human-centric practices identified in RQ1.

3.4. Dimension-Specific Performance

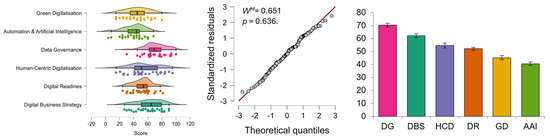

We performed a repeated-measures ANOVA (within-subject comparison) to test whether the dimensions’ mean scores differ (Figure 5). Mauchly’s test was non-significant (WM = 0.651, p = 0.636), so the sphericity assumption was retained and no Greenhouse–Geisser correction was needed; QQ-plots of standardised residuals did not suggest deviations from normality. The analysis revealed a significant effect of dimension on digital maturity scores (F(5, 145) = 20.36, p < 0.001, partial η2 = 0.413), indicating that maturity levels differed substantially across the six dimensions.
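The omnibus test can be sketched as a one-way repeated-measures ANOVA in NumPy. This is a hypothetical illustration, not the authors' R code, and it applies no sphericity correction. With 30 enterprises and 6 dimensions the degrees of freedom are (6 − 1, 5 × 29) = (5, 145). The helper functions also recover partial η² and Cohen's f from the reported F statistic, the quantities used in the power discussion. All function names are hypothetical.

```python
import numpy as np

def rm_anova(Y):
    """One-way repeated-measures ANOVA on an n (enterprises) x k (dimensions) array.

    Returns F, (df_effect, df_error) and partial eta squared; no sphericity correction.
    """
    n, k = Y.shape
    grand = Y.mean()
    ss_dim = n * ((Y.mean(axis=0) - grand) ** 2).sum()   # between-dimension variation
    ss_firm = k * ((Y.mean(axis=1) - grand) ** 2).sum()  # between-enterprise variation
    ss_total = ((Y - grand) ** 2).sum()
    ss_error = ss_total - ss_dim - ss_firm               # dimension x enterprise residual
    df_dim, df_error = k - 1, (k - 1) * (n - 1)
    F = (ss_dim / df_dim) / (ss_error / df_error)
    eta_p2 = ss_dim / (ss_dim + ss_error)
    return F, (df_dim, df_error), eta_p2

def partial_eta2_from_F(F, df1, df2):
    """Partial eta squared recovered from a reported F statistic."""
    return F * df1 / (F * df1 + df2)

def cohens_f(eta_p2):
    """Cohen's f effect size from partial eta squared."""
    return (eta_p2 / (1 - eta_p2)) ** 0.5

# Reported omnibus test: F(5, 145) = 20.36.
eta = partial_eta2_from_F(20.36, 5, 145)
print(f"partial eta^2 = {eta:.3f}, Cohen's f = {cohens_f(eta):.2f}")

# Hypothetical scores with different dimension means (not the study data).
rng = np.random.default_rng(7)
means = np.array([62, 52, 55, 70, 40, 45], dtype=float)
Y = means + rng.normal(0, 8, size=(30, 1)) + rng.normal(0, 12, size=(30, 6))
F, dfs, eta_p2 = rm_anova(Y)
print(f"F{dfs} = {F:.2f}, partial eta^2 = {eta_p2:.3f}")
```

Removing the between-enterprise sum of squares from the error term is what makes the paired design more sensitive than a between-subject ANOVA. Adding a constant to all of one firm's scores leaves F unchanged.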

Figure 5.

Raincloud plot of the repeated-measures ANOVA for the within-subject comparison of DMA dimensions (left), and QQ plot of standardised residuals vs. theoretical quantiles, including Mauchly’s sphericity statistic WM (middle). Dimensions’ average scores are presented from the highest to the lowest mean, along with standard errors (right).

Post hoc pairwise comparisons with Holm–Bonferroni correction (Table 5) indicate several robust differences. DG scored significantly higher than DR (mean difference = 18.16, |d| = 1.07, pholm < 0.001), HCD (mean difference = 15.65, |d| = 0.92, pholm < 0.001), AAI (mean difference = 29.77, d = 1.75, pholm < 0.001), and GD (mean difference = 25.13, d = 1.48, pholm < 0.001). DBS scored higher than DR (difference = 10.02, 95% CI [0.43, 19.60], d = 0.59, pholm = 0.018), AAI (difference = 21.63, 95% CI [10.56, 32.70], d = 1.27, pholm = 0.001), and GD (difference = 16.99, 95% CI [5.86, 28.13], d = 1.00, pholm < 0.001). The pattern indicates that data foundations and strategic alignment (DG and DBS) are the most developed dimensions of digital maturity in the sample.

Table 5.

Post hoc comparison test between individual dimensions.

DR lagged behind DBS and DG but scored higher than AAI. AAI itself scored significantly lower than DBS (mean difference = 21.63, d = 1.27) and DG (mean difference = 29.77, d = 1.75). HCD occupied a middle ground: it outperformed AAI but fell behind DG (mean difference = −15.65, d = −0.920, pholm < 0.001). GD likewise scored low: significantly lower than DBS and DG, but not different from HCD or AAI after correction. Taken together, the post hoc analyses indicate a clear ranking of dimensions: DG and DBS emerged as the strongest performers, GD and AAI as the weakest, and HCD and DR occupied an intermediate position.

Regarding the pairwise comparisons, the large effects (e.g., DG–AAI, DBS–AAI, DG–GD, DBS–GD) are well-powered, and the confidence intervals of their Cohen’s d values exclude small effects, so we consider these findings robust. Moderate effects (e.g., HCD–AAI, HCD–DG, DR–DG, DBS–DR, DR–AAI) remain significant after Holm correction but have wider CIs (for DBS–DR, the interval extends down to d = −0.03), so they are likely genuine but less precisely estimated. For the non-significant pairs, effects are likely to be small, and the design is underpowered to detect them after multiplicity control. These results are therefore inconclusive rather than evidence of no difference, and resolving them would require a larger sample.

Given the observed omnibus effect (partial η2 ≈ 0.41; f ≈ 0.84), achieved power for the global test was ≥ 0.99 at α = 0.05, whereas pairwise power depended on the contrast magnitude: large effects were well-powered and precise; moderate effects (e.g., DBS–DR, DR–AAI) were significant but less precisely estimated. In repeated-measures settings, such sensitivity is expected to vary with the correlation structure among measurements [75], so future work with larger samples and theory-driven contrasts is warranted to refine estimates for the borderline pairs.

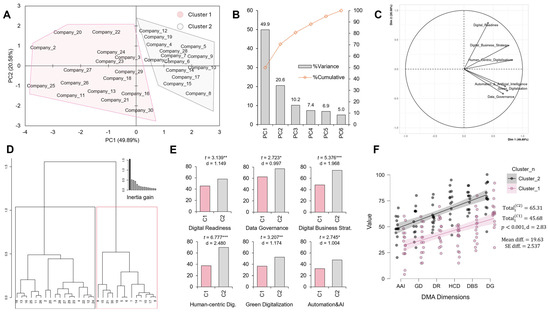

3.5. Emergence of Maturity Profiles Through Clustering

To explore whether Luxembourgish manufacturing SMEs form natural profiles based on their digital maturity, a Principal Component Analysis (PCA) followed by Hierarchical Clustering on Principal Components (HCPC) was performed. The PCA factor map (Figure 6A) revealed two distinct clusters of SMEs distributed along the first two PCs. Together with the correlation map (Figure 6C), it also revealed a general maturity gradient on PC1: all dimensions loaded positively, indicating that high scores on one dimension tend to co-occur with high scores on the others. PC1 (49.9%) and PC2 (20.6%) together accounted for 70.5% of the total variance (Figure 6B), suggesting that the projection adequately captures the major structure in the data.

Figure 6.

Principal component analysis with hierarchical clustering of digital maturity dimensions in Luxembourgish SMEs. PCA factor map (A) identified two cluster profiles of SMEs. The PCA scree plot (B) shows that the first two PCs account for 70.5% of the variance. The correlation map (C) reflects the projections of DMA dimensions (loadings) on the first two PCs. The dendrogram (D) illustrates the results of agglomerative hierarchical clustering (Euclidean distance and Ward clustering). Comparative analysis (E) revealed that differences (* p < 0.05, ** p < 0.01, *** p < 0.001) between clusters were most pronounced in Digital Business Strategy and Human-Centric Digitalisation, positioning these as pivotal levers for advancing SME digital maturity. The overall DMA score difference (F) showed significant mean differences (Totali(C1) = 45.68; Totali(C2) = 65.31; p < 0.001).

Agglomerative hierarchical clustering confirmed a two-cluster solution, consistent with the inertia gain (Figure 6D). Cluster 1 (n = 16 SMEs) exhibits systematically lower maturity scores across dimensions, whereas Cluster 2 (n = 14) performs consistently higher. Between-cluster differences (Figure 6E) were large and robust, most notably for DBS (t = 5.38, d = 1.97, p < 0.001) and HCD (t = 6.78, d = 2.48, p < 0.001). Significant differences were also observed for DR (t = 3.14, d = 1.15, p < 0.01) and GD (t = 3.21, d = 1.17, p < 0.01). Smaller yet significant differences were found for AAI (t = 2.75, d = 1.00, p < 0.05) and DG (t = 2.72, d = 0.99, p < 0.05).

The overall DMA scores of the two clusters (Figure 6F) revealed a highly significant gap, with Cluster 2 averaging 65.31 compared to 45.68 in Cluster 1 (p < 0.001, d = 2.83, mean difference = 19.63, SE = 2.54). This reinforces the cluster solution, highlighting that SMEs in Cluster 2 consistently outperform those in Cluster 1 across digital maturity measures. In sum, the PCA-HCPC results suggest that DG and DBS act as core strengths of the more mature profile, while HCD emerges as a pivotal lever, differentiating the clusters at least as strongly as DBS (d = 2.48 vs. 1.97). Because clustering is performed on the same information later compared between clusters, we treat p-values as descriptive confirmation of the profiles rather than as independent hypothesis tests.
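The reported effect sizes can be cross-checked against the t statistics. This check assumes pooled-standard-deviation Cohen's d and Student's (equal-variance) t tests, in which case d = t·√(1/n1 + 1/n2) with n1 = 16 and n2 = 14. For the overall score, t is first recovered as the mean difference divided by its standard error. The sketch is a consistency check under those assumptions, not the authors' procedure, and the helper name is hypothetical.

```python
from math import sqrt

N1, N2 = 16, 14  # cluster sizes reported for Cluster 1 and Cluster 2

def d_from_t(t, n1=N1, n2=N2):
    """Pooled-SD Cohen's d implied by a two-sample Student t statistic."""
    return t * sqrt(1 / n1 + 1 / n2)

# (t, reported d) per dimension, as given in the text.
reported = {
    "DBS": (5.38, 1.97), "HCD": (6.78, 2.48), "DR": (3.14, 1.15),
    "GD": (3.21, 1.17), "AAI": (2.75, 1.00), "DG": (2.72, 0.99),
}
for dim, (t, d) in reported.items():
    print(f"{dim}: implied d = {d_from_t(t):.2f}, reported d = {d:.2f}")

# Overall DMA score: recover t from the mean difference and its standard error.
t_overall = 19.63 / 2.54
print(f"Overall: t = {t_overall:.2f}, implied d = {d_from_t(t_overall):.2f} (reported 2.83)")
```

The implied and reported values agree to within rounding of the published t statistics. This is consistent with the pooled-SD interpretation.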

4. Discussion

4.1. Strategic and Human-Centric Enablers as Core Maturity Drivers

The findings provide evidence that strategy (DBS) and people (HCD) act as the primary drivers of digital maturity in Luxembourgish manufacturing SMEs. Using the proposed leave-one-out approach, which removes the mechanical overlap between each dimension and the overall digital maturity score, Human-Centric Digitalisation shows the strongest association with overall maturity (r = 0.717, R2 = 0.514), followed by Digital Business Strategy (r = 0.652, R2 = 0.425). The bootstrap confidence intervals (HCD 95% CI [0.510, 0.877]; DBS 95% CI [0.427, 0.817]) exclude zero, and diagnostic checks showed no deviations from normality. These “meta-capabilities” appear to shape how SMEs prioritise, absorb, and scale digital initiatives. This pattern is corroborated by the cluster analysis and repeated-measures results: the higher-maturity profile outperforms sharply on DBS and HCD.

Substantively, this implies a sequencing logic. A clear digital strategy (e.g., digital priorities, Data Governance) and human enablement (e.g., digital upskilling, data-driven decision-making) form the core on which digital progress compounds. In other words, the maturity “engine” is organisational rather than purely technological. This is supported both by our in-person shop-floor inspections of the SMEs and, statistically, by the weak, non-significant correlation between Digital Readiness and overall DMA (r = 0.298, p = 0.110). Consequently, a practical roadmap would be (i) to consolidate DBS and HCD as foundations, and (ii) to scale Data Governance (connectedness and digitisation) and Automation and AI, where bottlenecks and value pools are clearest. Overall, our findings suggest that the soft dimensions of maturity (human-centric practices, Digital Business Strategy) are more decisive predictors than purely technical readiness measures.

4.2. Limited Influence of Structural SME Attributes

Neither company size nor organisational age moderated maturity in our SME sample. Size groups showed no reliable differences in any dimension or in the overall score, and age was not significantly correlated with the overall score (r = −0.04) or with any dimension. The absence of size effects suggests that access to capabilities, rather than scale per se, differentiates maturity in the Luxembourg context. Similarly, longevity does not automatically translate into digital advantage; if anything, the (non-significant) negative tendency for DG in older firms hints at legacy-system drag. These null results should be interpreted as non-differentiating within this sample rather than as universal claims; with n = 30, tests are well-powered for large effects but less so for small ones. Still, the pattern reinforces the conclusion that what firms do (strategy and people systems) matters more than what they are (size and age) for digital advancement.

4.3. Internal Disparities Between Maturity Dimensions

Within firms, dimensions differ meaningfully. The repeated-measures ANOVA shows a strong effect (partial η2 ≈ 0.413), and post hoc contrasts consistently rank DG and DBS at the top, while AAI and GD lag and HCD and DR occupy the middle. The largest gaps (e.g., DG–AAI, DBS–AAI, DG–GD) have large effect sizes whose CIs exclude small effects, implying robust, actionable disparities. Moderate effects (e.g., HCD–AAI, DBS–DR) are significant but estimated with wider CIs and should be interpreted cautiously. As expected in repeated-measures designs, sensitivity depends on the correlation structure among measures; thus, some borderline contrasts may require larger samples or pre-specified contrasts to sharpen estimates.

Managerially, these within-firm gaps reveal priority imbalances: many SMEs have comparatively stronger data foundations and strategic alignment than Automation and AI or Green Digitalisation capabilities. Closing these gaps likely requires integrated programmes that connect use cases to strategy, couple human-centred work redesign with automation, and embed energy/resource performance indicators into digital operating models.

4.4. Divergent SME Maturity Profiles

PCA-HCPC reveals two coherent maturity profiles: Leaders and Laggards. PC1 captures a general maturity gradient (all dimensions load positively), while PC2 differentiates firms by Digital Readiness relative to their overall level. Clustering on the retained PCs yields a lower-maturity Cluster 1 and a higher-maturity Cluster 2, with significant between-cluster differences (especially in DBS and HCD) and a substantial overall-score gap (~20 points; see Figure 6F). As noted above, p-values here are treated as descriptive confirmation rather than hypothesis tests; nevertheless, the PCA separation, effect sizes, and consistency across dimensions indicate a stable segmentation.

The two-profile segmentation supports differentiated capability-building pathways tailored to each starting point. Our future project objectives involve working alongside SMEs to raise their level of digitalisation proactively. For Cluster 1 (Laggards), we therefore plan to prioritise the DBS and HCD dimensions (strategy deployment, portfolio selection, and workforce upskilling), paired with data basics (e.g., Data Governance and interoperability) and targeted AAI pilots linked to pain points and measurable value. For Cluster 2 (Leaders), the focus shifts from experimentation to industrialisation of AAI (scaling beyond pilots with model operations), advancing DG (semantic integration and real-time analytics), and operational GD (e.g., energy and resource optimisation). For these firms, the empirical results show adequate digital infrastructure but limited AI uptake, suggesting under-exploitation of existing readiness; scaling AI beyond pilots therefore represents a logical progression. Lastly, for both clusters, instituting a measurement discipline with clear KPIs (Key Performance Indicators) will help determine which interventions are effective in closing internal disparities between dimensions.

The recommendations to standardise KPIs are grounded directly in the empirical patterns observed across the assessed enterprises. The analysis showed substantial heterogeneity in the selection, definition and granularity of performance indicators. This dispersion was reflected in high within-dimension variance, the inconsistent maturity profiles of enterprises belonging to the same cluster, and the limited alignment between strategic objectives and available measurement systems. Such inconsistency reduces the comparability of maturity scores and constrains the ability of enterprises to benchmark progress or track digital transformation outcomes over time. Standardising a core set of KPIs follows logically from these observations, because it provides a common measurement baseline that aligns operational behaviour with strategic objectives and supports longitudinal monitoring of digital maturity.

The identification of divergent SME maturity profiles offers not only a diagnostic classification but also a forward-looking pathway for national initiatives and/or collaboration with the Luxembourg AI Factory. For Laggards, the findings highlight the necessity of targeted support that builds strategic alignment and human-centric capabilities before AI-driven solutions can be effectively adopted. For Leaders, the results underscore the potential to scale advanced analytics and AI applications, transforming isolated use cases into systematic gains in productivity and sustainability. At the policy level, this segmentation provides evidence to inform differentiated instruments: foundational interventions address structural deficits in weaker firms, while advanced firms are incentivised to become AI frontrunners. Thus, the clustering analysis does not merely describe heterogeneity; it provides a roadmap for aligning AI adoption trajectories with the twin transition agenda, ensuring that Luxembourg’s SMEs can simultaneously enhance competitiveness and contribute to the EU’s broader digital and sustainability targets.

5. Conclusions

5.1. Concluding Remarks

This study assessed digital maturity within Luxembourgish manufacturing SMEs across six dimensions (Digital Business Strategy, Digital Readiness, Human-Centric Digitalisation, Data Governance, Automation and Artificial Intelligence, and Green Digitalisation). It aimed to (i) identify which dimensions most strongly predict overall maturity and (ii) determine whether SMEs form maturity profiles. We performed leave-one-out correlations to avoid compositional bias, a repeated-measures ANOVA with Holm-adjusted post hoc tests to examine within-firm disparities, and, finally, Principal Component Analysis followed by agglomerative hierarchical clustering to uncover profiles. Assumption checks were satisfactory, multiplicity was controlled, and small-sample robustness was addressed through bootstrapping and achieved-power diagnostics.

Two coherent SME profiles, Leaders and Laggards, emerge along a dominant “overall maturity” axis, with secondary contrasts driven by DR. Across analytic lenses (leave-one-out regressions, repeated-measures contrasts, and cluster analysis), the higher-maturity profile is distinguished most strongly by strategy and people (Digital Business Strategy and Human-Centric Digitalisation). Automation and AI and Green Digitalisation, by contrast, mark salient upgrade opportunities in lower-maturity SMEs. Data Governance is consistently strong but, like AAI and GD, is amplified when DBS/HCD foundations are in place. The principal effects are large in magnitude, remain significant under multiplicity control, and align with the clustering solution. Together they yield a convergent conclusion: strategy and people are the core drivers of digital maturity, while technology and sustainability act as accelerators once those organisational foundations are established.

5.2. Implications and Limitations

Considering managerial and policy implications, the evidence points to a clear sequencing logic: build organisational foundations, then scale technology, and target support by maturity profile. Specifically, SMEs should prioritise a Digital Business Strategy (clarifying roadmaps, governance, and investments) together with Human-Centric Digitalisation (skills development, participatory change, and empowered work design), because these “meta-capabilities” are likely to increase the return on downstream technical initiatives. Once these foundations are set, organisations can industrialise Data Governance and Automation and AI by moving beyond pilots and instituting MLOps (Machine Learning Operations). Green Digitalisation should be embedded into day-to-day operations by tying energy/resource KPIs to digital workflows so that efficiency and sustainability co-evolve, i.e., by adopting “circularity analytics” [72]. The two-profile segmentation provides a practical targeting mechanism. Cluster 1 (lower maturity) benefits from DBS/HCD plus data basics and tightly scoped, value-linked AAI pilots. Cluster 2 (higher maturity) requires scale-up, namely industrialised AAI, advanced DG (real-time analytics), and operational GD (energy/resource optimisation). Across both profiles, SMEs should strengthen measurement discipline (using a compact set of KPIs) to ensure that maturity gains translate into measurable operational outcomes.

Several limitations should temper the interpretation of these findings. Results derived from 30 Luxembourgish SMEs limit external validity beyond this setting. The cross-sectional design precludes causal claims, so the proposed path ordering (DBS/HCD → DG/AAI/GD → outcomes) remains theoretical. Despite the leave-one-out approach to reduce compositional overlap, measurement commonality may persist among dimensions; reliance on a single instrument also introduces potential self-report bias. Power is asymmetric (high for large effects but limited for small-to-moderate contrasts), so non-significant results are best treated as inconclusive rather than as evidence of equivalence. The clustering is exploratory and sample-dependent. Moderator coverage is incomplete because sector effects were not analysed owing to privacy constraints and severe imbalance, which leaves potential sector idiosyncrasies untested. Finally, the overall maturity index is a methodological construct. Stronger external validity would be achieved by complementing it with objective performance outcomes not used in its development (e.g., productivity growth, on-time delivery, defect rates, energy intensity) and by estimating a dimension-to-outcome regression that demonstrates predictive validity in practice. Regarding sample size, the studies summarised in Table 1 report on average 29.19 ± 27.53 SMEs (mean ± SD) when one outlier study with 382 SMEs is excluded, and 49.94 ± 89.63 when it is included. Hence, we consider the sample of 30 SMEs reasonably adequate, given that most comparable studies report results based on smaller samples.

5.3. Future Research

Future work should move from descriptive mapping to causally credible evaluation of maturity pathways. Because our data cover only an exploratory assessment at baseline (T0), with the T1 and T2 waves still to follow, our first priority is longitudinal and quasi-experimental designs (e.g., stepped-wedge, difference-in-differences) to test whether strengthening DBS/HCD catalyses gains in DG/AAI/GD and, in turn, improves objective outcomes such as productivity and defect rates. Second, a larger, multi-sector sample with a priori power and sensitivity analyses is needed to detect small-to-moderate effects, as some differences may have gone undetected in our sample of SMEs, particularly given the question items underpinning the tested dimensions. Third, latent-variable and causal modelling approaches, such as structural equation modelling, Bayesian mediation analysis, and dynamic cross-lagged panel models, would be particularly suitable in our context for identifying mechanisms and boundary conditions (e.g., whether Digital Readiness acts as a moderator or an early-warning indicator). Collectively, we believe that these steps will convert maturity diagnostics into actionable, predictive guidance for policy and management, while strengthening generalisability beyond the Luxembourg SME setting.
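The a priori power and sample-size analyses proposed above can be sketched for the simplest case, a two-sided test of a population correlation δ against zero. This is a minimal illustration, assuming the Fisher z normal approximation and α = 0.05; dedicated tools that use the exact distribution may differ by an enterprise or so. The function names `power_correlation` and `required_n` are hypothetical, and the sketch does not cover the repeated-measures ANOVA contrasts.

```python
import math
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal N(0, 1)


def _check_delta(delta):
    if not 0 < abs(delta) < 1:
        raise ValueError("delta must satisfy 0 < |delta| < 1")


def power_correlation(delta, n, alpha=0.05):
    """Approximate two-sided power to detect population correlation delta with n enterprises.

    Fisher z approximation: atanh(r) is roughly normal with SD 1 / sqrt(n - 3).
    """
    _check_delta(delta)
    if n <= 3:
        raise ValueError("n must exceed 3")
    z_crit = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    shift = math.atanh(abs(delta)) * math.sqrt(n - 3)
    # Probability of rejecting H0 (delta = 0) in either tail under the alternative
    return _STD_NORMAL.cdf(shift - z_crit) + _STD_NORMAL.cdf(-shift - z_crit)


def required_n(delta, power=0.80, alpha=0.05):
    """Smallest n reaching the target power (Fisher z approximation, two-sided test)."""
    _check_delta(delta)
    z_crit = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_power = _STD_NORMAL.inv_cdf(power)
    return math.ceil(((z_crit + z_power) / math.atanh(abs(delta))) ** 2 + 3)


if __name__ == "__main__":
    for delta in (0.1, 0.3, 0.5):
        print(f"delta={delta}: power at n=30 = {power_correlation(delta, 30):.2f}, "
              f"n for 80% power = {required_n(delta)}")
```

Under this approximation, 30 enterprises give a power of roughly 0.81 for δ = 0.5 but only about 0.36 for δ = 0.3, and about 85 enterprises would be needed to reach 80% power at δ = 0.3. This mirrors the power asymmetry noted among the limitations.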

Author Contributions

Conceptualisation, M.O. and S.K.; Methodology, M.O. and M.D.; Validation, M.O., S.K. and M.D.; Formal Analysis, M.O.; Investigation, M.O. and M.D.; Resources, M.D. and S.K.; Data Curation, M.O. and M.D.; Writing—original draft preparation, M.O., S.K. and M.D.; Writing—review and editing, M.O., S.K. and M.D.; Visualisation, M.O.; Supervision, S.K. and M.D.; Project Administration, M.D. and S.K.; Funding Acquisition, M.D. and S.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research has been carried out in the framework of the L-DIH (Luxembourg Digital Innovation Hub) project (Grant Agreement No. 101120714) funded by the European Union’s Digital Europe Programme (DIGITAL) under the topic DIGITAL-2022-EDIH-03-INITIAL.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data can be made available upon request.

Acknowledgments

Large language models and related tools were used in the manuscript writing process for language editing assistance only. Grammarly was used for spelling, grammar, and style checks, while OpenAI's GPT-5 was employed to refine phrasing and improve clarity. These tools were used solely as writing aids and were not used to generate ideas, analyses, interpretations, or original intellectual content. All concepts, the study design, results, conclusions, figures, and tables originate from, and are solely the work of, the authors of this manuscript. All suggested edits were reviewed, revised where necessary, and approved by the authors, who take full responsibility for the accuracy and integrity of the work.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following notations and abbreviations are used in this manuscript:

| Symbol/Abbreviation | Description |
|---|---|
| $i$ | index for enterprises |
| $n$ | sample size (number of enterprises) |
| $j$ | index for dimensions of digital maturity ($j = 1,\dots,6$) |
| $q$ | index for questions within a dimension ($q = 1,\dots,Q_j$) |
| $o$ | index for response options within question $q$ |
| $k$ | index for principal component axes |
| $w_{j,q,o}$ | weight assigned to option $o$ of question $q$ in dimension $j$ |
| $g_{i,j,q,o}$ | graded response value of enterprise $i$ for option $o$ of question $q$ in dimension $j$ |
| $R_{i,j,q}$ | raw points accrued by enterprise $i$ on question $q$ in dimension $j$ |
| $W_{j,q}$ | maximum attainable points for question $q$ in dimension $j$ |
| $Q_{i,j,q}$ | normalised score of enterprise $i$ on question $q$ in dimension $j$, on a 0–10 scale |
| $D_{i,j}$ | score of enterprise $i$ in dimension $j$, on a 0–100 scale |
| $\mathrm{Total}_i$ | total digital maturity score of enterprise $i$, on a 0–100 scale |
| $X$ | data matrix of size $n \times 6$ (30 enterprises × 6 dimensions) used in PCA |
| $Z$ | standardised data matrix used in PCA |
| $z_{i,j}$ | standardised score of enterprise $i$ on dimension $j$ |
| $C$ | correlation matrix used in PCA |
| $\lambda_k$ | eigenvalue of axis $k$, i.e., the variance explained by component $k$ |
| $v_k$ | eigenvector (loadings) associated with axis $k$ |
| $f_{i,k}$ | principal component score of enterprise $i$ on axis $k$ |
| $\cos^2_{i,k}$ | squared cosine (quality of representation) of enterprise $i$ on axis $k$ |
| $\mathrm{ctr}_{i,k}$ | contribution of enterprise $i$ to axis $k$ |
| $t_{\mathrm{test}}$ | independent Student's t-test statistic |
| $s$ | sample standard deviation |
| $r$ | Pearson's sample correlation coefficient |
| $\delta$ | Pearson's population correlation coefficient |
| $R$ | Regression coefficient |
| $\beta$ | Regression weight coefficient |
| $SE$ | Standard error |
| $\Delta(A,B)$ | Within-cluster inertia (Ward) criterion for merging clusters $A$ and $B$ |
| $W_M$ | Mauchly's test of sphericity statistic |
| AAI | Automation and Artificial Intelligence |
| ANOVA | Analysis of Variance |
| CEO | Chief Executive Officer |
| CoV | Coefficient of Variation |
| DBS | Digital Business Strategy |
| DG | Data Governance/Data Management and Connectedness |
| DMA | Digital Maturity Assessment |
| DMAM | Digital Maturity Assessment Model |
| DMAT | Digital Maturity Assessment Tool |
| DR | Digital Readiness |
| EC | European Commission |
| EDIH | European Digital Innovation Hub |
| EIB | European Investment Bank |
| EU | European Union |
| GD | Green Digitalisation |
| GDPR | General Data Protection Regulation |
| HCD | Human-Centric Digitalisation |
| HCPC | Hierarchical Clustering on Principal Components |
| IT | Information Technology |
| KPI | Key Performance Indicator |
| L-DIH | Luxembourg Digital Innovation Hub |
| LNDS | Luxembourg National Data Service |
| LOO/LOOC | Leave-One-Out Correlation |
| MADM | Multi-Attribute Decision-Making |
| ML | Machine Learning |
| MLOps | Machine Learning Operations |
| NACE | Statistical Classification of Economic Activities in the European Community |
| NR | Non-Related (NR1/NR2 in context) |
| PCA | Principal Component Analysis |
| PSO | Public Sector Organisation |
| RMSE | Root Mean Square Error |
| RQ | Research Question |
| SD | Standard Deviation |
| SLR | Systematic Literature Review |
| SME | Small and Medium-Sized Enterprise |
| SW | Shapiro–Wilk test |
| TBI | Test Before Invest |

References

- Milošević, I.M.; Plotnic, O.; Tick, A.; Stanković, Z.; Buzdugan, A. Digital Transformation in Manufacturing: Enhancing Competitiveness Through Industry 4.0 Technologies. Precis. Mech. Digit. Fabr. 2024, 1, 31–40.

- Yaqub, M.Z.; Alsabban, A. Industry-4.0-Enabled Digital Transformation: Prospects, Instruments, Challenges, and Implications for Business Strategies. Sustainability 2023, 15, 8553.

- Sharma, M.; Paliwal, T.; Baniwal, P. Challenges in Digital Transformation and Automation for Industry 4.0. In AI-Driven IoT Systems for Industry 4.0; CRC Press: Boca Raton, FL, USA, 2024; pp. 143–163.

- European Commission. 2030 Digital Compass: The European Way for the Digital Decade; European Commission: Brussels, Belgium, 2021.

- Brodny, J.; Tutak, M. Digitalization of Small and Medium-Sized Enterprises and Economic Growth: Evidence for the EU-27 Countries. J. Open Innov. Technol. Mark. Complex. 2022, 8, 67.

- Strilets, V.; Frolov, S.; Datsenko, V.; Tymoshenko, O.; Yatsko, M. State Support for the Digitalization of SMEs in European Countries. Probl. Perspect. Manag. 2022, 20, 290–305.

- European Commission. The Digital Europe Programme. Available online: https://digital-strategy.ec.europa.eu/en/activities/digital-programme#:~:text=The%20Digital%20Europe%20Programme%20,EDIH (accessed on 19 September 2025).

- Belciu, A.-C. Performance in Accessing Funding through Digital Europe Programme. East. Eur. J. Reg. Stud. 2025, 11, 45–62.

- Kanellopoulou, D.; Giannakopoulos, G.; Terlixidis, P.; Karkaletsis, V. Smart Attica EDIH: A Paradigm for DIH Governance and a Novel Methodology for AI-Powered One-Stop-Shop Projects Design. In Proceedings of the Springer Proceedings in Business and Economics; 33rd IAMOT Conference, Porto, Portugal, 24–26 August 2025; Springer Nature: Berlin/Heidelberg, Germany, 2025; pp. 151–159.

- Rudawska, J. The One Stop Shop Model—A Case Study of a Digital Innovation Hub. Zesz. Nauk. Politech. Częstochowskiej. Zarządzanie 2022, 47, 31–42.

- Carpentier, E.; D’Adda, D.; Nepelski, D.; Stake, J. European Digital Innovation Hubs Network’s Activities and Customers; Publications Office of the European Union: Luxembourg, 2025.

- Maurer, F. Business Intelligence and Innovation: A Digital Innovation Hub as Intermediate for Service Interaction and System Innovation for Small and Medium-Sized Enterprises. In IFIP Advances in Information and Communication Technology; Springer Science+Business Media: Berlin/Heidelberg, Germany, 2021; pp. 449–459. ISSN 1868-4238.

- Sassanelli, C.; Terzi, S. The D-BEST Based Digital Innovation Hub Customer Journey Analysis Method: Configuring DIHs Unique Value Proposition. Int. J. Eng. Bus. Manag. 2022, 14, 18479790221124634.

- Gyulai, T.; Nagy, M.; Cibu-Buzac, R. Smart Development with Digital Intelligent Cities in Cross-Border Regions. In Pandémia—Fenntartható Gazdálkodás—Környezettudatosság; Soproni Egyetem Kiadó: Sopron, Hungary, 2022; pp. 264–677.

- Kalpaka, A.; Rissola, G.; De Nigris, S.; Nepelski, D. Digital Maturity Assessment (DMA) Framework & Questionnaires for SMEs/PSOs: A Guidance Document for EDIHs; European Commission: Seville, Spain, 2023.

- Kulchytsky, O. Assessment of Digital Maturity of Business Companies According to the Methodology of European Digital Innovation Hubs. Econ. Time Realities 2024, 5, 117–122.

- European Commission. Commission Unveils New Tool to Help SMEs Self-Assess Their Digital Maturity. Available online: https://digital-strategy.ec.europa.eu/en/news/commission-unveils-new-tool-help-smes-self-assess-their-digital-maturity (accessed on 19 September 2025).

- European Commission. Luxembourg 2025 Digital Decade Country Report. Available online: https://digital-strategy.ec.europa.eu/en/factpages/luxembourg-2025-digital-decade-country-report (accessed on 19 September 2025).

- The Government of the Grand Duchy of Luxembourg. Digital Decade: National Strategic Roadmap for Luxembourg 2.0; The Government of the Grand Duchy of Luxembourg: Luxembourg, 2024.

- European Commission. State of the Digital Decade 2024; European Commission: Brussels, Belgium, 2024.

- Eurostat. Digitalisation in Europe—2025 Edition. Available online: https://ec.europa.eu/eurostat/web/interactive-publications/digitalisation-2025#:~:text=The%20proportion%20of%20SMEs%20with,in%20Finland (accessed on 25 September 2025).

- The Government of the Grand Duchy of Luxembourg. Luxembourg’s AI Strategy: Accelerating Digital Sovereignty 2030; The Government of the Grand Duchy of Luxembourg: Luxembourg, 2025.

- Luxembourg National Data Service (LNDS). Annual Report 2024: Progress and Impact; Luxembourg National Data Service: Esch-sur-Alzette, Luxembourg, June 2025.

- Sobczak, E. Digital Transformation of Enterprises and Employment in Technologically Advanced and Knowledge-Intensive Sectors in the European Union Countries. Sustainability 2025, 17, 5868.

- Tanhua, D.; Tuomi, E.O.; Kesti, K.; Ogilvie, B.; Delgado Sahagún, C.; Nicolas, J.; Rodríguez, A.; Pajares, J.; Banville, L.; Arcusin, L.; et al. Digital Maturity of the Companies in Smart Industry Era. Sci. Pap. Ser. Manag. Econ. Eng. Agric. Rural Dev. 2024, 24, 855–876.

- Kljajić Borštnar, M.; Pucihar, A. Multi-Attribute Assessment of Digital Maturity of SMEs. Electronics 2021, 10, 885.

- Volf, L.; Dohnal, G.; Beranek, L.; Kyncl, J. Navigating the Fourth Industrial Revolution: SBRI—A Comprehensive Digital Maturity Assessment Tool and Road to Industry 4.0 for Small Manufacturing Enterprises. Manuf. Technol. 2024, 24, 668–680.

- Novoa, R. Training Needs in Digital Technologies in Companies in the Valencian Region: An Empirical Study Carried out in the Framework of the European Network of EDIHs (European Digital Innovation Hubs). TechRxiv 2024.

- Rahamaddulla, S.R.B.; Leman, Z.; Baharudin, B.T.H.T.B.; Ahmad, S.A. Conceptualizing Smart Manufacturing Readiness-Maturity Model for Small and Medium Enterprise (SME) in Malaysia. Sustainability 2021, 13, 9793.

- Semrádová Zvolánková, S.; Krajčík, V. Digital Maturity of Czech SMEs Concerning the Demographic Characteristics of Entrepreneurs and Enterprises. Equilibrium. Q. J. Econ. Econ. Policy 2024, 19, 1363–1404.

- Di Felice, P.; Paolone, G.; Di Valerio, D.; Pilotti, F.; Sciamanna, M. Transforming DIGROW into a Multi-Attribute Digital Maturity Model. Formalization and Implementation of the Proposal. In Proceedings of the Lecture Notes in Computer Science–Computational Science and Its Applications–ICCSA 2022, Malaga, Spain, 4–7 July 2022; Springer Science and Business Media Deutschland GmbH: Berlin/Heidelberg, Germany, 2022; Volume 13378 LNCS, pp. 541–557.

- Serrano-Ruiz, J.C.; Ferreira, J.; Jardim-Gonçalves, R.; Ortiz Bas, Á. Relational Network of Innovation Ecosystems Generated by Digital Innovation Hubs: A Conceptual Framework for the Interaction Processes of DIHs from the Perspective of Collaboration within and between Their Relationship Levels. J. Intell. Manuf. 2025, 36, 1505–1545.