Abstract

We introduce a novel, privacy-preserving AI authoring copilot designed for educational content creation, which uniquely combines a Markov Decision Process (MDP) as a reinforcement learning orchestrator with a locally deployed Qwen3-1.7B-ONNX large language model to iteratively refine text for clarity, unity, and engagement—all running on a modest CPU-only system (Intel i7, 16 GB RAM). Unlike cloud-dependent models, our agent treats writing as a sequential decision problem, selecting refinement actions (e.g., simplification, elaboration) based on real-time LLM and sentiment feedback, ensuring pedagogically sound outputs without internet dependency. Evaluated across five diverse topics, our MDP-orchestrated agent achieved an overall average quality score of 4.23 (on a 0–5 scale), statistically equivalent to leading cloud-based LLMs like ChatGPT and DeepSeek. This performance was validated through blind evaluations by four independent LLMs and human raters, supported by statistical consistency analysis. Our work demonstrates that lightweight local LLMs, when guided by principled MDP policies, can deliver high-quality, context-aware educational content, bridging the gap between powerful AI generation and ethical, on-device deployment. This advancement empowers educators, researchers, and curriculum designers with a trustworthy, accessible tool for intelligent content augmentation aligning with the Quality Education Sustainable Development Goal through innovations in educational technology, inclusive education, equity in education, and lifelong learning.

1. Introduction

The rapid evolution of artificial intelligence has moved beyond mere automation, entering a new era of intelligent augmentation, where AI systems do not simply generate text but actively refine, adapt, and enhance it with purpose. In educational contexts—where clarity, engagement, and accessibility are critical to effective knowledge transfer—the demand for such intelligent support has never been greater. Teachers, curriculum designers, and academic researchers frequently face the challenge of distilling complex ideas into coherent, pedagogically sound content, often under tight time constraints. While large language models (LLMs) such as ChatGPT and DeepSeek have demonstrated remarkable generative capabilities, their outputs often suffer from inconsistencies in tone, structure, and rhetorical coherence, necessitating extensive manual editing. More importantly, their reliance on cloud-based infrastructure raises significant concerns regarding data privacy, latency, and lack of control—barriers that hinder their integration into sensitive or real-time educational settings.

To address these limitations, this paper introduces a novel AI authoring agent designed specifically for educational content creation. The system integrates advanced AI methodologies into a unified, on-device framework: Markov Decision Processes (MDPs) for orchestrating iterative refinement actions, a locally deployed Qwen3-1.7B model in ONNX format for efficient and private inference, and sentiment analysis for real-time quality assessment. Unlike conventional “prompt-and-generate” approaches, our agent treats content improvement as a sequential decision-making process. Each action—such as enhancing clarity, improving unity, or adjusting tone—is selected by an MDP policy that maximizes long-term quality metrics, ensuring coherent and purposeful evolution of the text. This decision-theoretic foundation enables the agent to reason over trade-offs, maintain narrative consistency, and adapt dynamically to user-defined goals.

The agent is designed to empower diverse educational stakeholders: teachers can rapidly generate lesson summaries and personalized feedback, researchers can refine technical writing for broader audiences, and curriculum developers can produce adaptive, emotionally resonant materials. By operating entirely on-device through ONNX optimization, the system guarantees data privacy and low-latency interaction—key requirements for deployment in classrooms and field settings. We evaluate its performance across multiple topics, comparing outputs from our agent with those from leading LLMs. To ensure objective assessment, we employ multiple LLMs—ChatGPT, Copilot, Qwen, and DeepSeek—as blind evaluators, rating generated paragraphs on clarity, unity, and overall quality. Results show that the MDP-orchestrated agent consistently produces content that is more coherent, pedagogically effective, and engaging, underscoring the advantages of combining structured reasoning with generative power.

Despite growing interest in combining reinforcement learning with large language models for structured generation, existing approaches primarily focus on code synthesis or task completion in cloud-based settings, with limited attention to pedagogical text refinement or on-device privacy-preserving operation. Crucially, these works do not explicitly map MDP components (states, actions, rewards) to measurable linguistic attributes like clarity, unity, or sentiment—nor do they enforce iterative quality control grounded in educational best practices. In contrast, our work introduces a quantitatively defined orchestration mechanism where (1) states correspond to discrete stages of textual evaluation (e.g., initial production, clarity check, unity enhancement); (2) actions are linguistically grounded operations (e.g., simplification, elaboration, coherence repair); and (3) rewards are computed from interpretable, sentiment-augmented LLM assessments of specific rhetorical dimensions. We hypothesize that this tight coupling between decision-theoretic control and localized linguistic feedback enables more coherent, engaging, and pedagogically sound outputs than single-pass or cloud-dependent alternatives.

This work contributes to the emerging paradigm of human-centered AI in education—not as a replacement for human expertise, but as a cognitively aware co-author that enhances the capabilities of educators and knowledge creators through technically robust, ethically responsible, and pedagogically intelligent support. The paper is organized as follows: Section 2 reports the related works while Section 3 presents the materials and methods, detailing the architecture of the agent, the use of LLMs, and the MDP-based orchestration mechanism. Section 4 reports the experimental results and evaluation outcomes. Finally, Section 5 concludes the paper by summarizing key findings and discussing future directions.

2. Related Work

Recent advances in AI-driven content generation have demonstrated the growing role of large language models (LLMs) and conversational agents in educational and creative domains. Yusuf et al. [1] introduced a framework for pedagogical AI agents in higher education, highlighting their potential in personalized instruction and administrative automation, while Schorcht et al. [2] showed how communicative agents can collaboratively refine mathematical tasks through iterative dialogue. These works emphasize the adaptability of AI agents in structured workflows, yet they primarily focus on task-specific applications rather than long-form, coherent content creation. Akçapınar and Sidan [3] further explored the dual role of AI chatbots in programming education, finding that while LLMs enhance productivity, they may encourage over-reliance without sufficient safeguards—raising concerns about quality control and pedagogical integrity. This reveals a critical gap: current systems lack mechanisms to ensure alignment with domain-specific best practices during generative processes, especially in complex, multi-stage tasks like eBook authoring.

Markov Decision Processes (MDPs) offer a principled approach to sequential decision-making in dynamic environments and have been successfully applied in planning and control. Sarsur et al. [4] used MDPs with modular supervisors for efficient planning, while Chen et al. [5] demonstrated their effectiveness in robotic path planning under uncertainty. Bäuerle and Jaśkiewicz [6] extended MDPs to risk-sensitive settings, enabling robust policy optimization. However, these applications are largely confined to physical or abstract decision spaces and do not incorporate semantic knowledge. Our work bridges this gap by embedding MDPs within a knowledge map, where states represent stages of the writing process and actions correspond to LLM-driven refinements guided by best practices.

Knowledge graphs (KGs) provide a structured means of encoding domain expertise, enhancing both interpretability and reasoning in AI systems. Ibrahim et al. [7] surveyed KG–LLM integration, demonstrating improved transparency in decision-making, while Schenker et al. [8] pioneered graph-based web mining by leveraging topological features for classification. More recently, Khan et al. [9] proposed a Distance-Driven GNN to mitigate feature sparsity using global structural information. Although these works highlight the potential of KGs, they primarily treat them as static knowledge repositories rather than dynamic process guides.

The integration of large language models (LLMs) into structured workflows has gained significant traction, especially in domains like code generation and human–agent collaboration. Fernandes et al. [10] demonstrated DeepSeek-R1’s strong reasoning capabilities in generating correct LoRaWAN code, while Ghamati et al. [11] introduced a personalized LLM for context-aware human–robot interaction. However, these approaches typically depend on cloud-based models, which can introduce latency, raise privacy concerns, and offer limited fine-grained control. In contrast, our framework operates with a locally hosted instance of DeepSeek-R1, fine-tuned to adhere to the constraints derived from the knowledge graph discussed in the literature review (though the graph itself is not used in our implementation). To address well-documented fine-tuning challenges—such as catastrophic forgetting and reward misalignment, as highlighted by Wu et al. [12]—we employ MDP-driven reinforcement learning that explicitly prioritizes outputs conforming to the graph-defined quality standards.

Designing trustworthy and usable agentic AI remains a challenge. Diebel et al. [13] found that excessive autonomy can reduce users’ perceived competence, underscoring the need for balanced human–AI collaboration. Catak and Kuzlu [14] quantified LLM uncertainty via convex hull analysis, revealing sensitivity to prompt complexity—a vulnerability our iterative MDP-based validation loop helps mitigate. Aylak [15] proposed SustAI-SCM for sustainable supply chains, but its static workflows lack the adaptability required for creative tasks. Zota et al. [16] advanced hyper-automated IT operations, though their focus on incident resolution does not extend to the nuanced demands of content generation.

Our work introduces a novel CPU-only, privacy-preserving AI authoring copilot that uniquely combines Markov Decision Process (MDP)-driven orchestration with a locally deployed Qwen3-1.7B-ONNX large language model. Unlike prior research—which either lacks formal sequential decision-making frameworks for text refinement, relies on cloud-based models, or targets non-pedagogical domains—our system operates entirely on modest consumer hardware (Intel i7 CPU, 16 GB RAM) without GPU acceleration or internet dependency. This enables secure, on-device generation and iterative enhancement of educational content, grounded in measurable quality metrics such as clarity, unity, and sentiment. The Table 1 below systematically contrasts our contribution against representative works in the literature across three key criteria: (1) use of an MDP to guide text refinement, (2) deployment of a local/on-device LLM, and (3) successful execution on CPU-only hardware with less than 16 GB RAM.

Table 1.

Comparison of our approach with related works.

3. Materials and Methods

This study presents a structured AI agent design that integrates pre-trained AI models as skill providers, and Markov Decision Process (MDP) for orchestration. The approach aims to create an intelligent system capable of reasoning, executing tasks, and optimizing its actions through sequential decision-making. This section is organized into distinct areas covering the role of graph-based knowledge representation in structuring AI understanding, the integration of pre-trained models as skill providers for language generation and evaluation, and the use of MDP to orchestrate tasks efficiently.

3.1. Materials

We used a laptop with an Intel i7 CPU, 16 GB RAM, and 1 TB SSD to host the Qwen3-1.7B-ONNX model for processing and the bert-base-multilingual-uncased-sentiment model for sentiment analysis, adapted via JavaScript to output an integer from zero to five stars. Both models were converted to ONNX for web integration. This setup provided sufficient power and storage for efficient and stable system performance.

3.2. Methods

This section describes the orchestration engine that controls our authoring copilot. The Markov Decision Process (MDP) framework is employed to guide the sequential generation of paragraphs, ensuring coherence, engagement, and uniform reading flow across the article. Within this approach, states represent different stages in structuring content, actions correspond to stylistic or structural choices, and rewards reflect improvements in clarity and logical progression. By systematically transitioning between states, the MDP optimizes the ordering and composition of paragraphs, ensuring that each section builds upon the previous one in an intuitive and compelling manner. The framework enhances readability by dynamically adjusting the writing flow, reinforcing thematic consistency, and maintaining a balance between depth and accessibility. We model the chapter writing process as a finite MDP with quality-weighted rewards. The model captures the trade-offs between different writing quality dimensions during paragraph composition.

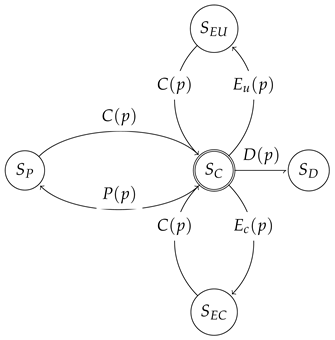

3.2.1. State Space

Let’s define as the set of MDP states. represents production state that triggers the MDP to produce a paragraph p or deliver the whole chapter and close the process. represents the check state on which the MDP checks the clarity and unity of the produced paragraph p. is the state that marks that the paragraph p clarity was enhanced while state marks that the paragraph p unity was enhanced. marks the end of the process and flags the paragraph delivery.

3.2.2. Action Space

This subsection defines the action space, where structural choices guide transitions between states defined above as the following:

- : Produce the paragraph p (From state).

- : Check the paragraph p (From state).

- : Enhance the clarity of the paragraph p (From state).

- : Enhance the unity of the paragraph p (From state).

- : Deliver the paragraph p (From state).

3.2.3. MDP State Machine

This subsection focuses on the Markov Decision Process (MDP) state machine that governs the behavior of our authoring agent. The MDP is defined over a finite set of states , representing key stages in the paragraph generation and refinement process. Transitions between these states are driven by a structured action space, including paragraph production , clarity and unity checks , and enhancement actions and . The state machine orchestrates the flow from initial production through iterative evaluation and refinement in , with optional detours to and for targeted improvements, until the final delivery state is reached, concluding the authoring process. The diagram visually captures these transitions, illustrating how the agent navigates through states to produce a polished, coherent paragraph.

3.2.4. Transition Probabilities

This subsection defines the transition functions taking into consideration the states and action spaces defined above. Transition function defines how the agent will move from a state to another.

- : This transition represents the probability of moving from the check state back to the production state by performing the action —producing a new paragraph p. The Kronecker delta ensures that this transition occurs only if no valid paragraph has been produced (i.e., p is null). Thus, the agent returns to production when the current paragraph is incomplete, undefined or in the first time when we still do not have any paragraph yet ().

- : After production, we always move to checking state. During the check state, a check action is performed to evaluate the clarity and unity of the paragraph p, and then, the reward is computed.

- : Represents the probability to move from when we are in by performing the action , enhancing the clarity of the paragraph p when the clarity check is less than the ceil (it is 4 out of 5 in our case).

- : Represents the probability to move from when we are in by performing the action , enhancing the unity of the paragraph p when the clarity check is less than the ceil (it is 4 out of 5 in our case).

- After enhancing the clarity or the unity, the agent comes back to check the paragraph p by acting and coming back to from and , respectively. Since the transition is automatic and deterministic, then we conclude that and .

- : This transition represents the probability of moving from the check state to the delivery state by performing the action —delivering the paragraph p. Delivery occurs when the evaluations of clarity and unity during exceed predefined thresholds and , respectively. At , the agent has three equally likely outcomes: (1) deliver the paragraph, (2) enhance clarity, or (3) enhance unity. Assuming uniform likelihood among these decisions, each has a probability of approximately , justifying the use of a Bernoulli distribution with mean for this discrete choice in the transition model. Although we might achieve the clarity threshold in iteration n, we may then focus on improving unity in iteration . However, this adjustment can unintentionally disrupt the previously satisfied clarity criterion. Consequently, even if clarity was met in a prior step, it must be re-verified in every subsequent iteration. This continual need for re-evaluation introduces a persistent, uniform uncertainty across all iterations, as no outcome remains guaranteed once other dimensions are modified.

3.2.5. Reward Function

The reward function is designed to guide the authoring agent toward producing high-quality paragraphs by providing feedback based on key textual attributes: clarity, unity, and sentiment. Specifically, the reward is defined as a weighted sum of quality metrics evaluated through a combination of LLM-based assessment and sentiment analysis. Let and denote the evaluations of paragraph p’s clarity and unity, respectively, performed by the LLM Qwen. These evaluations are further processed by a sentiment analysis model (bert-base-multilingual-uncased-sentiment in our case), which maps textual quality to a numerical score ranging from 0 (low sentiment/quality) to 5 (highly positive sentiment/quality). The composed function thus quantifies the perceived quality of the paragraph: that means that the sentiment analyser will analyze the answer of the LLM about the clarity of the paragraph p. This answer is given by (the same for the unity). The reward then is defined as

where and are configurable weights that balance the importance of clarity and unity during enhancement actions. This formulation ensures that only enhancement actions—those that improve the text—receive non-zero rewards, encouraging the agent to refine the paragraph until it meets desired quality standards. In our experiments, we explicitly set the default weight values to 0.6 for clarity and 0.4 for unity to reflect their relative importance in the evaluation framework. Users are free to adjust these values according to their specific priorities or contextual requirements.

3.3. Q-Function Definition

The Q-function, , represents the expected cumulative reward for taking action a in state s and following an optimal policy thereafter. It is defined by the Bellman Equation (2).

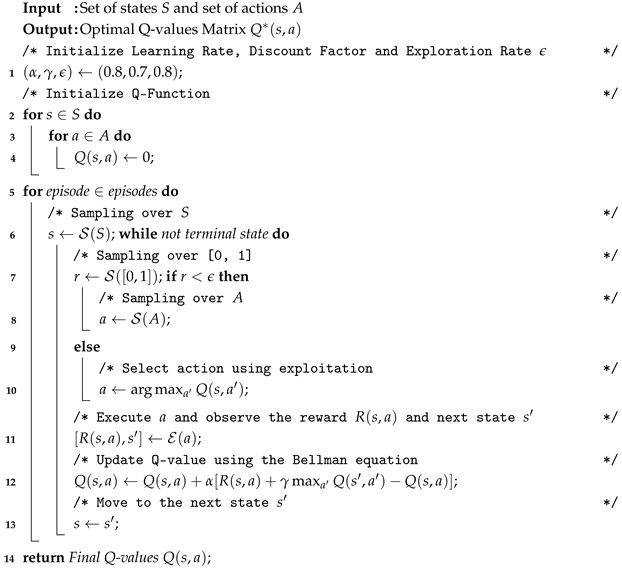

where is the immediate reward for action a in state s, is the discount factor, is the transition probability, and estimates the best future reward. The reward function guides the agent toward high-quality text. Q-learning (Algorithm 1) updates Q-values using this rule, with an -greedy policy balancing exploration and exploitation.

| Algorithm 1: Q-Learning algorithm with -greedy policy |

|

3.4. Q-Learning Algorithm

This Algorithm 1 allows reinforcement learning agents to refine their decision-making process while balancing clarity, engagement, and flow coherence. In Algorithm 1, we fix the learning rate , discount factor , and exploration rate based on empirical pilot experiments. These values reflect a deliberate trade-off between rapid policy convergence and sufficient exploration of the refinement action space.

A high learning rate () enables the Q-function to quickly incorporate feedback from LLM-based quality assessments—an important feature given the strict latency constraints of on-device operation and the limited number of refinement iterations per paragraph (typically ).

Although aggressive learning rates can destabilize training under noisy rewards, our reward function combines deterministic LLM evaluations with sentiment analysis which stabilizes learning despite the high . The moderate discount factor () prioritizes immediate improvements in clarity and unity while still accounting for cumulative effects across sequential edits.

3.5. Implementation

We designed an authoring agent governed by a Markov Decision Process (MDP) to generate a paragraph and iteratively enhance its clarity and unity. The agent first produces an initial draft and then enters a refinement loop, where the paragraph is evaluated and improved based on learned quality metrics. The agent is developed using Node.js platform and leverages the transformers.js to load the Qwen3-1.7B model locally. When deploying large language models like Qwen3-1.7B in a server-side JavaScript environment, leveraging the ONNX format can significantly enhance inference performance and cross-platform compatibility. ONNX (Open Neural Network Exchange) enables models trained in frameworks like PyTorch or TensorFlow to be exported into a standardized, optimized representation that can be executed efficiently using high-performance runtimes such as ONNX Runtime. For server-side applications built with Node.js, this is particularly valuable because it allows access to hardware-accelerated inference (via CPU optimizations or GPU backends like CUDA) without relying on Python-based serving stacks. While Transformers.js is primarily designed for browser and lightweight Node.js use cases, it does not natively support ONNX execution. Instead, Transformers.js uses its own WebAssembly (WASM) or native JavaScript tensor operations, which are convenient but generally slower than optimized ONNX runtimes for larger models like Qwen3-1.7B. Therefore, to achieve optimal performance when serving Qwen3-1.7B on the server, it is recommended to bypass Transformers.js for inference and instead integrate ONNX Runtime for Node.js (onnxruntime-node) directly. This package provides bindings to the native ONNX Runtime, enabling fast CPU inference (with optional AVX2/AVX-512 acceleration) and support for quantized models. To evaluate the effectiveness of our approach, we selected five diverse topics (see prompts in Appendix A). For each topic, we generated a paragraph using our MDP-based agent, DeepSeek, ChatGPT, Copilot X, Grok-3 and our MDP Agent using identical prompts to ensure a fair comparison. This resulted in a total of 25 paragraphs—five from each system. All paragraphs were anonymized and assigned unique identifiers to prevent bias during evaluation. We then employed several state-of-the-art large language models such as OpenAI’s ChatGPT, Alibaba’s Qwen, and DeepSeek as independent evaluators, in addition to human evaluators. Each LLM was instructed to rate the clarity and unity/completeness of every paragraph on a 5-point scale, from 0 (very poor) to 5 (excellent), without knowledge of the generating model.

4. Results and Discussion

4.1. Obtained Paragraphs

For completeness and transparency, all generated paragraphs—covering the five topics—are included in Appendix B. Each paragraph is presented with its corresponding identifier, topic, and generating model (ChatGPT, DeepSeek, or the MDP-based agent), allowing for direct inspection and qualitative assessment of the content. This enables readers to contextualize the evaluation scores and to examine the stylistic and structural differences among the systems. Now, we will ask existing LLMs to evaluate the clarity and unity of all generated paragraphs. See the evaluation results in the section below.

4.2. Evaluation Results

Evaluation results from ChatGPT (GPT-4o), Qwen (Qwen3), and DeepSeek (DeepSeek-V3) are presented in Table 2, Table 3 and Table 4, respectively. Each table compares the performance of paragraphs generated by five models, including our MDP generator (running on CPU with low memory) in ChatGPT, DeepSeek, Copilot and Grok.

Table 2.

Clarity and unity ChatGPT evaluation results of generated paragraphs.

Table 3.

Clarity and unity Qwen evaluation results of generated paragraphs.

Table 4.

Clarity and unity DeepSeek evaluation results of generated paragraphs.

4.2.1. Consolidated Evaluation Summary Across Models

To facilitate cross-model comparison, the following Table 5 aggregates the average scores ((Clarity + Unity)/2) for each paragraph ID from ChatGPT, DeepSeek, and Qwen evaluations. Overall model averages are computed at the bottom.

Table 5.

Cross-model average score comparison overview.

4.2.2. Cronbach’s Statistical Consistency Analysis

Cronbach’s alpha () is a measure of internal consistency reliability, reflecting how consistently multiple items—in this case, the three large language models (ChatGPT, DeepSeek, and Qwen)—assess the same underlying construct (clarity and unity across 25 paragraphs). A high indicates that the models function as coherent raters, justifying the use of their mean score as a reliable composite metric. Formally, Cronbach’s is defined as

where is the number of models (items), is the variance of scores from model i, and is the variance of the total scores (sum of the three model scores) across paragraphs. The pairwise Pearson correlations between model scores across the 25 paragraphs are shown in Table 6.

Table 6.

Pairwise Pearson correlations between evaluation model scores.

The values yield a mean inter-model correlation of . Substituting into the formula above gives

The Cronbach’s indicates good internal consistency among the three models (ChatGPT, DeepSeek, and Qwen), satisfying the conventional threshold () for acceptable reliability in group-level research. Despite minor systematic differences in absolute scoring—such as Qwen’s slightly lower mean (4.17) compared to ChatGPT’s (4.27)—the models exhibit consistent relative judgments, meaning they rank paragraphs similarly in terms of clarity and unity. Because Cronbach’s assesses the reliability of the composite score rather than absolute agreement, these small calibration biases do not compromise the validity of the aggregate measure. Consequently, the Overall Average column in Table 5 can be treated as a trustworthy and stable proxy for paragraph quality in downstream analyses.

4.2.3. Human Evaluation Summary

To complement automated LLM-based assessments and validate perceptual quality from an end-user perspective, we conducted a small-scale human evaluation study with two participants. Each participant independently rated all 25 generated paragraphs and focus on clarity and unity. The following Table 7 reports individual scores and their average, providing insight into human judgment consistency.

Table 7.

Human evaluation scores across 25 paragraphs (Clarity + Unity averaged per participant).

4.2.4. Overall Assessment

The table below presents a summary of paragraph quality ratings across different generators, as evaluated independently by ChatGPT, DeepSeek, and the Human Average. The (Average) column reports the mean of the two evaluator scores for each generator, while the final row shows the overall evaluator averages. Notably, the MDP Agent is the only system run locally on CPU-only hardware, whereas all other generators leveraged cloud-based GPU resources.

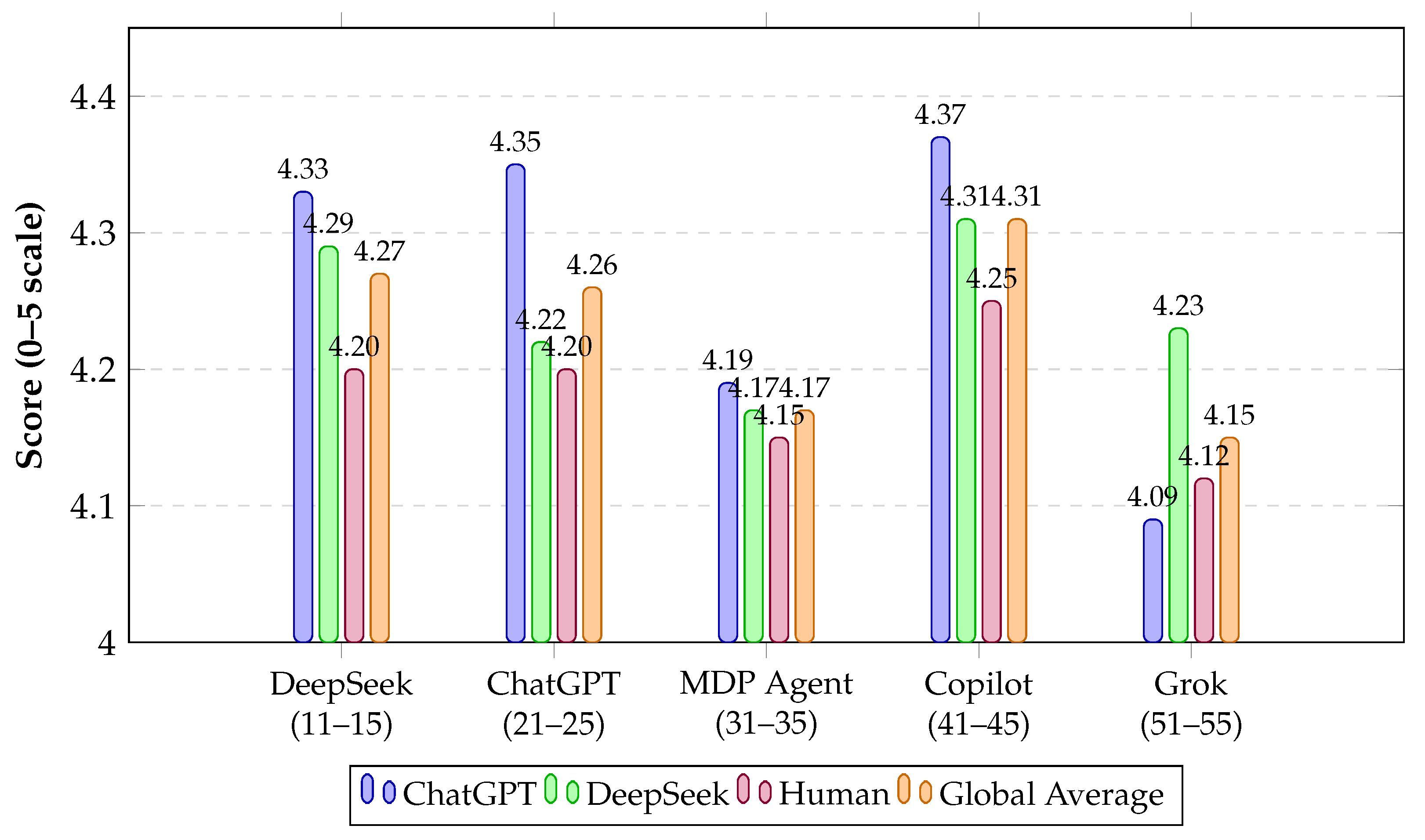

Figure 1 visually reports the data summarized in Table 8. The plot highlights the Generator Average.

Figure 1.

Evaluation of generators across LLMs, Human Raters, and Global Average (mean of all three). All scores are on a 0–5 scale. MDP Agent runs on local CPU (i7, 16GB RAM) only.

Table 8.

Summary of generator performance (evaluated by ChatGPT, DeepSeek, and Human Raters).

4.3. Discussion

4.3.1. Generation Sensitivity Across Topics

The design of generation prompts, the formulation of the reward function, and the choice of LLM inference parameters (e.g., temperature, top-p, repetition penalty) significantly influence the quality and consistency of the generated text, and this influence is highly sensitive to the nature of the topic. For technical subjects, dense prompts combined with low temperature () and a clarity-weighted reward () yielded coherent, accurate explanations. In contrast, for more subjective or narrative topics, the same settings occasionally produced overly rigid prose, suggesting a need for adaptive reward tuning (e.g., higher weight on unity) and slight stochasticity () to preserve stylistic nuance. Our current reward, which relies on LLM-based evaluations of clarity and unity filtered through a fixed sentiment model, works well for structured informational tasks but may under-penalize vagueness or over-penalize creative phrasing in open-ended domains. The MDP Agent’s performance is not uniform across topic categories, and future iterations should incorporate topic-aware reward shaping and dynamic parameter adjustment to balance factual fidelity with expressive flexibility.

4.3.2. Supporting Teachers in Educational Material Design

The integration of AI into teaching practices presents a transformative opportunity to alleviate the cognitive and temporal burdens associated with educational content creation. Our MDP-orchestrated authoring agent is specifically designed to empower educators by enhancing the clarity, and unity of instructional materials through structured, iterative refinement. By leveraging local inference with Qwen3-1.7B-ONNX, the system enables teachers to generate high-quality lesson summaries from complex source texts, such as research papers or technical textbooks, without relying on external cloud services. The MDP framework allows for strategic decision-making in text enhancement, where actions like simplification, elaboration, or tone modulation are applied in a sequence that maximizes pedagogical effectiveness. This capability supports intentional material design, enabling instructors to focus on curricular goals rather than mechanical rewriting. Furthermore, the agent can assist in developing adaptive course content tailored to diverse student populations, including varying reading levels and learning preferences, thereby promoting inclusive and differentiated instruction in higher education.

4.3.3. Enabling Privacy-Preserving AI in Educational Settings

A critical advantage of our approach lies in its on-device deployment via ONNX optimization, which ensures that all text generation and enhancement processes occur locally. This design directly addresses growing concerns about data privacy and security in educational AI applications. Unlike cloud-based large language models that require user inputs to be transmitted to remote servers—potentially exposing sensitive information such as student feedback, assessment comments, or unpublished research—our system keeps all data within the user’s environment. This feature is particularly valuable in institutional contexts governed by strict data protection regulations such as GDPR. It also enables safe deployment in low-bandwidth or restricted-network settings, including schools in resource-constrained regions. By demonstrating that powerful AI assistance can be delivered without compromising user confidentiality, our work contributes to the development of trustworthy, ethically grounded tools that align with the principles of responsible innovation in education.

4.3.4. Promoting Ethical and Trustworthy AI Use

The deployment of AI in education must be accompanied by robust mechanisms to ensure fairness, transparency, and accountability. Our agent addresses these concerns through its interpretable MDP-based orchestration and integrated sentiment analysis module. The decision policy operates over a defined action space—such as simplifying language, enriching explanations, or adjusting tone—allowing educators to understand and audit the system’s behavior. This level of transparency stands in contrast to the opaque, end-to-end outputs of conventional LLMs. Moreover, the reward function within the MDP can be explicitly designed to penalize undesirable traits, including overly complex syntax, biased language, or negative sentiment, thus promoting equitable and constructive communication.

5. Conclusions and Perspectives

This paper has presented an AI authoring agent that leverages the Qwen3-1.7B-ONNX language model and a Markov Decision Process (MDP) framework to enhance the clarity and unity of educational content. By treating text refinement as a sequential decision-making process, the system moves beyond static generation to deliver purposeful, pedagogically guided improvements. Integrated sentiment analysis ensures that outputs maintain a constructive and motivating tone, making the agent particularly suitable for use by teachers, academic writers, and course designers. The local deployment of the LLM via ONNX guarantees data privacy and enables use in secure or low-bandwidth environments—critical advantages for real-world educational settings.

Despite its benefits, the system faces a notable limitation: the iterative nature of the MDP-orchestrated—that runs on CPU only—enhancement process results in a longer total production time compared to single-pass generation models like ChatGPT or DeepSeek. While the initial text generation is fast, each refinement step—guided by policy evaluation, action selection, and quality feedback—adds computational overhead. This latency may affect usability in time-sensitive contexts, such as live classroom preparation or rapid feedback drafting. However, this trade-off reflects a deliberate design choice: sacrificing speed for greater control, transparency, and output quality. The structured enhancement process allows educators to trust the agent’s decisions and understand the rationale behind each edit, aligning with the principles of trustworthy AI in education.

Looking ahead, several directions can reduce this latency and support scalable deployment. First, the MDP policy can be optimized through model distillation or approximate inference to minimize decision-making overhead. Second, parallelization of enhancement actions—such as simultaneous grammar correction and tone adjustment—could reduce sequential steps. Third, adaptive stopping criteria could allow the agent to terminate refinement early when quality thresholds are met, balancing speed and performance. Future work will also explore human-in-the-loop evaluation with educators to measure perceived value versus time cost. As AI becomes more embedded in teaching and learning, systems like ours demonstrate that the future of educational AI lies not in speed alone, but in responsible, interpretable, and pedagogically meaningful augmentation.

To support reproducibility, the source code for the MDP Agent and associated components will be made available upon request by contacting the corresponding author. This approach allows us to share the implementation with appropriate documentation while facilitating direct communication with interested researchers. We hope this hybrid approach —combining locally LLMs with MDP-driven control—can offer a useful reference for future work on authoring agents and educational technology.

Funding

This work is funded by the Euromed University of Fez.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

The author thanks the reviewers for their constructive feedback and insightful suggestions, which greatly improved the quality and clarity of this research.

Conflicts of Interest

The author declares no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| ONNX | Open Neural Network Exchange |

| MDP | Markov Decision Process |

| LLM | Large Language Model |

| GPT | Generative Pre-training Transformer |

| CPU | Central Processing Unit |

| GPU | Graphics Processing Unit |

Appendix A. Summary of Generation and Evaluation Prompts

Appendix A.1. Generation Prompts

Three AI generators (ChatGPT, DeepSeek, Copilot, Grok, and the MDP Agent) were prompted with the following instructions to produce original paragraphs on five distinct topics:

- Act as a writer and produce a small paragraph related to the following: How do quantum computers work?

- Act as a writer and produce a small paragraph related to the following: How to write a good biography?

- Act as a writer and produce a small paragraph related to the following: How does blockchain work?

- Act as a writer and produce a small paragraph related to the following: What is the meaning of inflation?

- Act as a writer and produce a small paragraph related to the following: Can AI replace humans in programming?

- Act as a writer and produce a small paragraph related to the following: Give me a historical snapshot of Europe!

All prompts were identical across generators to ensure fair comparison. Each system produced one paragraph per topic, resulting in five paragraphs per generator. Outputs were anonymized and assigned unique identifiers for blind evaluation.

Appendix A.2. Evaluation Prompts

Four independent LLM-based evaluators (ChatGPT, DeepSeek, and Qwen) assessed all generated paragraphs using one of the following two variants of the evaluation prompt:

- Attached is a PDF containing produced paragraphs by multiple generators. Each paragraph has an ID (e.g., Paragraph 12). Evaluate the clarity (ease of understanding) and unity (informational completeness) of each paragraph. Provide scores for both dimensions on a scale from 0 (very weak) to 5 (very strong).

All evaluators returned numerical scores per paragraph, which were later aggregated to compute average ratings for clarity, unity, and overall quality as reported in the main paper.

Appendix A.3. Enhancement Prompts

The following prompts are designed to systematically check and improve the quality of written paragraphs along three core dimensions: clarity, and unity/completeness. Each prompt assigns a specific editorial role to the model and provides clear, actionable instructions to enhance the input text while preserving its original meaning.

- Prompt for Improving Clarity: You are a precision-focused editor with expertise in technical and explanatory writing. Revise the following paragraph to maximize clarity. Use straightforward language, eliminate ambiguity, and ensure that each sentence builds logically upon the last. Define any complex terms in context and maintain a coherent focus on the topic. Your goal is to make the content immediately understandable to a general audience without oversimplifying or omitting key details. The paragraph to enhance is {{ paragraph }}.

- Prompt for Improving Unity: You are a subject-matter expert and structural editor. Revise the paragraph to ensure it is unified and complete. Confirm that it fully addresses the topic with all necessary components, develops each idea sufficiently, and maintains a consistent focus from start to finish. Fill any logical or informational gaps, remove irrelevant content, and ensure the final version is self-contained and comprehensive. The paragraph to enhance is {{ paragraph }}.

Appendix B. Generated Paragraphs by Topic and Generator

This appendix presents all generated paragraphs used in the evaluation, grouped by the five source prompts (topics) and organized by generator: DeepSeek, ChatGPT, MDP Agent (our system), Copilot, and Grok. Each paragraph is labeled with its corresponding ID (e.g., Paragraph 11) as referenced in the evaluation tables.

Appendix B.1. Topic 1: How Do Quantum Computers Work?

- Paragraph 11 (DeepSeek)

At its heart, a quantum computer harnesses the strange laws of quantum mechanics to process information in a fundamentally new way. Instead of using traditional bits that are either 0 or 1, it uses quantum bits, or qubits. A qubit can exist in a state of superposition, effectively being both 0 and 1 simultaneously. This allows a quantum computer to explore a vast number of possibilities at once. Furthermore, through a phenomenon called entanglement, qubits can be linked so that the state of one instantly influences another, no matter the distance. By manipulating these interconnected qubits with precise quantum gates, a quantum computer can perform complex calculations on all these potential states in parallel, offering the potential to solve certain problems exponentially faster than even the most powerful classical supercomputers.

- Paragraph 21 (ChatGPT)

A quantum computer operates by harnessing the principles of quantum mechanics to process information in ways impossible for classical machines. Instead of using bits that represent either 0 or 1, quantum computers use qubits, which can exist in multiple states simultaneously thanks to superposition. Qubits can also become entangled, meaning the state of one instantly influences the state of another, even at a distance. These properties allow quantum computers to perform complex calculations with immense parallelism, solving certain problems—like cryptography, optimization, and molecular simulation—much faster than traditional computers.

- Paragraph 31 (MDP Agent)

Quantum computers leverage the principles of quantum mechanics, such as superposition and entanglement, to perform calculations at speeds unattainable by classical computers. Unlike traditional bits that exist in only one state (0 or 1), qubits can be in multiple states simultaneously. This allows quantum computers to process vast amounts of information parallelly, significantly reducing computation time for certain tasks like factoring large numbers or searching through complex datasets. However, quantum computing is still an emerging technology with challenges in error correction and scalability.

- Paragraph 41 (Copilot)

Quantum computers operate by harnessing the strange and powerful principles of quantum mechanics to process information in fundamentally new ways. Unlike classical computers that use bits—binary units of 0 s and 1 s—quantum computers use qubits, which can exist in multiple states simultaneously thanks to a phenomenon called superposition. This allows quantum systems to explore many possible solutions at once. Additionally, qubits can be entangled, meaning the state of one qubit is directly related to the state of another, no matter how far apart they are. This entanglement enables quantum computers to perform complex calculations with remarkable speed and efficiency. Instead of following a single path like classical machines, quantum computers operate more like a symphony of probabilities, collapsing into a solution when measured.

- Paragraph 51 (Grok)

A quantum computer harnesses the bizarre principles of quantum mechanics to solve problems far beyond the reach of classical machines. Instead of bits representing 0 or 1, it uses qubits that can exist in superpositions—simultaneously 0, 1, or any blend—thanks to wave-like probability. Qubits entangle, linking their fates so measuring one instantly influences others, no matter the distance. Algorithms like Shor’s exploit interference patterns in these overlapping states, amplifying correct answers while canceling wrongs through gates that rotate probabilities on a Bloch sphere. Cooled to near absolute zero to fend off decoherence, the system evolves as a unified quantum wave, collapsing only at readout to deliver solutions in exponentially fewer steps for tasks like factoring primes or simulating molecules.

Appendix B.2. Topic 2: How to Write a Good Biography?

- Paragraph 12 (DeepSeek)

To write a compelling biography, one must first immerse themselves in exhaustive research, unearthing not just the landmark achievements but the telling nuances of a subject’s character—the private struggles, formative moments, and human contradictions. The art lies in weaving these facts into a coherent narrative, moving beyond a mere chronology to construct a story with a central thesis. A great biography doesn’t just inform the reader of what a person did; it offers a window into why they did it, capturing their essence with both scholarly integrity and the empathetic heart of a storyteller.

- Paragraph 22 (ChatGPT)

Writing a good biography requires balancing factual accuracy with engaging storytelling. Start by researching thoroughly—collecting reliable information about the person’s life, achievements, and challenges. Organize the narrative chronologically or thematically to maintain clarity and flow. Focus on key moments that reveal character, motivation, and growth, rather than listing events mechanically. Use vivid details, quotes, and anecdotes to bring the subject to life while maintaining an objective and respectful tone. Ultimately, a strong biography not only informs readers about who the person was but also captures the essence of why their story matters.

- Paragraph 32 (MDP Agent)

Writing a good biography requires careful research, attention to detail, and an understanding of the subject’s life story. Start by selecting the right individual—someone with significant impact or legacy. Next, gather comprehensive information about their early life, career, achievements, challenges, and personal relationships. Use credible sources and maintain a clear, chronological structure. Remember to highlight the person’s unique qualities and contributions, while presenting facts objectively. Finally, edit carefully to ensure clarity and flow, capturing both the triumphs and complexities of the subject’s journey.

- Paragraph 42 (Copilot)

A good biography blends factual accuracy with compelling storytelling, offering readers both insight and inspiration. To write a strong biography, start with thorough research—gather details from interviews, archives, and trusted sources to build a complete picture of the subject’s life. Focus on key milestones, challenges, and achievements that shaped their journey, and present them in a clear chronological or thematic structure. Use vivid anecdotes and quotes to bring the person to life, but maintain a respectful and balanced tone. Whether you’re writing about a public figure or a loved one, the goal is to reveal not just what they did, but who they were and why their story matters.

- Paragraph 52 (Grok)

To write a good biography, begin with thorough research into your subject’s life, gathering primary sources like letters, interviews, and diaries alongside secondary accounts to ensure accuracy and depth. Focus on a compelling narrative arc—birth, challenges, triumphs, and legacy—while weaving in vivid anecdotes that reveal personality rather than dry facts. Maintain an objective yet engaging voice, balancing admiration with honesty about flaws, and structure the piece chronologically or thematically to guide readers seamlessly through the journey, ultimately illuminating why this life matters.

Appendix B.3. Topic 3: How Does Blockchain Work?

- Paragraph 13 (DeepSeek)

At its core, blockchain is a revolutionary digital ledger that operates on principles of decentralization and cryptographic security. Imagine a chain of digital “blocks,” each containing a list of transactions. When a new transaction occurs, it is broadcast to a peer-to-peer network of computers. These computers, known as nodes, then compete to validate and bundle these transactions into a new block by solving a complex cryptographic puzzle. Once solved, this new block is cryptographically linked to the previous one, creating an immutable and tamper-evident chain. This decentralized consensus means no single entity controls the data, and altering any single record would require an improbable amount of computational power to change every subsequent block across all copies of the ledger, making it inherently trustworthy and transparent.

- Paragraph 23 (ChatGPT)

Blockchain works as a decentralized digital ledger that records transactions across a network of computers. Instead of relying on a single authority, every transaction is grouped into a block, linked to the previous one, and secured using cryptographic techniques. This chain of blocks is distributed among participants, making it transparent and nearly impossible to alter without consensus from the network. Each new transaction must be verified by multiple participants, ensuring trust, security, and accuracy. In essence, blockchain creates a secure, transparent, and tamper-proof system for storing and sharing data.

- Paragraph 33 (MDP Agent)

Blockchain is a decentralized digital ledger that records transactions across multiple computers in such a way that the historical record cannot be altered without the consensus of the entire network. Each block contains a list of transactions along with a cryptographic hash of the previous block, ensuring integrity and security. Blocks are linked and maintained by miners or validators who verify transactions through proof-of-work or other consensus mechanisms. Once added to the blockchain, a transaction is permanent and transparent, making it resistant to fraud and forgery. This technology underpins cryptocurrencies like Bitcoin and enables applications in finance, supply chain management, and more.

- Paragraph 43 (Copilot)

Blockchain is a decentralized digital ledger that securely records transactions across a network of computers, making data tamper-resistant and transparent. At its core, blockchain operates by grouping data into blocks, each containing a set of transactions. Once a block is filled, it is cryptographically linked to the previous one, forming a continuous chain. This structure ensures that any attempt to alter a block would require changing all subsequent blocks, which is nearly impossible due to the distributed nature of the network. Each participant, or node, maintains a copy of the entire blockchain, and consensus mechanisms like proof-of-work or proof-of-stake validate new entries. This decentralized verification process eliminates the need for a central authority, making blockchain ideal for applications ranging from cryptocurrencies to supply chain management.

- Paragraph 53 (Grok)

Blockchain operates as a decentralized digital ledger that records transactions across a network of computers, ensuring transparency and security without a central authority. When a transaction occurs, it is grouped with others into a “block,” which contains a cryptographic hash of the previous block, a timestamp, and the transaction data. This block is then verified by network nodes through consensus mechanisms like proof-of-work or proof-of-stake, where miners or validators solve complex mathematical puzzles to confirm its validity. Once approved, the block is appended to the existing chain, creating an immutable sequence that cannot be altered retroactively without redoing the work for all subsequent blocks, thus preventing fraud and double-spending.

Appendix B.4. Topic 4: What Is the Meaning of Inflation?

- Paragraph 14 (DeepSeek)

In its essence, inflation is the gradual erosion of your purchasing power. It represents the broad increase in prices for goods and services across the economy, which means that over time, a single unit of currency simply doesn’t stretch as far as it once did. Where a dollar might have bought a loaf of bread one year, it may only cover half the cost the next. This silent, persistent rise in the cost of living is measured by tracking the average price of a basket of common items, and it is the fundamental reason why the cost of everything from a gallon of milk to a new car tends to climb higher year after year.

- Paragraph 24 (ChatGPT)

Inflation refers to the sustained increase in the general level of prices for goods and services within an economy over a period of time. When inflation occurs, the purchasing power of money decreases, meaning that each unit of currency buys fewer goods and services than before. It is usually measured by indicators such as the Consumer Price Index (CPI). Moderate inflation is a normal sign of a growing economy, but high or unpredictable inflation can reduce savings, distort investments, and create economic uncertainty.

- Paragraph 34 (MDP Agent)

Inflation refers to the general increase in prices for goods and services over time, which reduces the purchasing power of money. It occurs when demand for certain items exceeds their supply or when production costs rise faster than consumer spending. Inflation can be measured using indices like the Consumer Price Index (CPI) or the Producer Price Index (PPI). This phenomenon affects individuals by eroding buying power and can lead to both short-term benefits, such as higher interest rates, and long-term challenges, including reduced real incomes and potential economic instability if it becomes too high or persistent.

- Paragraph 44 (Copilot)

Inflation is the gradual rise in the overall price level of goods and services, which reduces the purchasing power of money over time. In economic terms, inflation reflects how the cost of living changes as prices increase across a wide range of products and services. When inflation occurs, each unit of currency buys fewer items than before, making everyday expenses more costly for consumers. This phenomenon is typically measured by tracking the price changes of a standardized basket of goods over a set period, often a year. While moderate inflation is considered a sign of a growing economy, excessive inflation can erode savings and destabilize financial systems.

- Paragraph 54 (Grok)

Inflation is the gradual erosion of money’s purchasing power, where the same dollar buys fewer loaves of bread or gallons of gas as prices climb across the economy. It stems primarily from an expanding money supply outpacing the growth of goods and services, often fueled by central bank policies, excessive government spending, or supply shocks like oil embargoes. In essence, it’s a hidden tax that redistributes wealth from savers to debtors, rewarding those who borrow early and punishing the prudent.

Appendix B.5. Topic 5: Can AI Replace Humans in Programming?

- Paragraph 15 (DeepSeek)

While AI has rapidly evolved into a sophisticated tool capable of generating functional code and automating routine tasks, it remains a long way from truly replacing the human programmer. Its prowess lies in pattern recognition and speed, excelling at turning clear specifications into syntax. However, the essence of programming—the creative architecture of systems, the intuitive leaps to solve novel problems, and the nuanced understanding of user experience and ethical implications—is deeply human. AI is a powerful collaborator and an exceptional assistant, but the vision, ingenuity, and responsibility at the heart of creation still belong to us.

- Paragraph 25 (ChatGPT)

Artificial Intelligence has made remarkable progress in automating many aspects of programming, from writing simple code snippets to debugging and optimizing software. However, while AI can assist and speed up development, it cannot fully replace human programmers. Creativity, critical thinking, and understanding complex real-world problems still require human insight. AI lacks true comprehension of context and intent—qualities essential for designing innovative solutions. Therefore, instead of replacing humans, AI serves as a powerful tool that enhances human productivity and transforms the way software is developed.

- Paragraph 35 (MDP Agent)

AI can augment human capabilities in programming, offering efficiency and speed in tasks like code generation and bug detection. However, the nuanced understanding of context, ethics, and system design remains a domain where human oversight is essential. While AI may handle repetitive or data-driven aspects of coding, it cannot fully replace the creative problem-solving and critical thinking that humans bring to complex software development. Thus, collaboration between AI and humans will likely be the future of programming.

- Paragraph 45 (Copilot)

The question of whether AI can replace humans in programming stirs both excitement and concern. While AI has made remarkable strides in automating code generation, debugging, and even learning from vast repositories of software, it still lacks the nuanced understanding, creativity, and ethical judgment that human developers bring to the table. Programming isn’t just about writing code—it’s about solving problems, designing systems, and collaborating with others to build meaningful solutions. AI can certainly augment and accelerate the work of programmers, but replacing them entirely would mean replicating human intuition, empathy, and adaptability—traits that remain uniquely human.

- Paragraph 55 (Grok)

AI cannot fully replace humans in programming, though it excels at accelerating routine tasks like generating boilerplate code, debugging syntax errors, or optimizing algorithms based on vast training data. Yet programming demands creative problem-solving, ethical judgment, and contextual understanding—qualities rooted in human experience—that AI lacks. A developer might use AI to draft a function, but only a human can grasp the unspoken needs of users, anticipate edge cases in real-world systems, or decide when a “good enough” solution risks long-term harm. The future lies in symbiosis: AI as a tireless apprentice, humans as the architects of intent.

References

- Yusuf, H.; Money, A.; Daylamani-Zad, D. Pedagogical AI conversational agents in higher education: A conceptual framework and survey of the state of the art. Educ. Technol. Res. Dev. 2025, 73, 815–874. [Google Scholar] [CrossRef]

- Schorcht, S.; Peters, F.; Kriegel, J. Communicative AI Agents in Mathematical Task Design: A Qualitative Study of GPT Network Acting as a Multi-professional Team. Digit. Exp. Math. Educ. 2025, 11, 77–113. [Google Scholar] [CrossRef]

- Akçapınar, G.; Sidan, E. AI chatbots in programming education: Guiding success or encouraging plagiarism. Discov. Artif. Intell. 2024, 4, 87. [Google Scholar] [CrossRef]

- Sarsur, D.; Alves, L.V.; Pena, P.N. Using Markov Decision Process over Local Modular Supervisors for Planning Problems. IFAC-PapersOnLine 2024, 58, 126–131. [Google Scholar] [CrossRef]

- Chen, Y.J.; Jhong, B.G.; Chen, M.Y. A Real-Time Path Planning Algorithm Based on the Markov Decision Process in a Dynamic Environment for Wheeled Mobile Robots. Actuators 2023, 12, 166. [Google Scholar] [CrossRef]

- Bäuerle, N.; Jaśkiewicz, A. Markov decision processes with risk-sensitive criteria: An overview. Math. Methods Oper. Res. 2024, 99, 141–178. [Google Scholar] [CrossRef]

- Ibrahim, N.; Aboulela, S.; Ibrahim, A.; Kashef, R. A survey on augmenting knowledge graphs (KGs) with large language models (LLMs): Models, evaluation metrics, benchmarks, and challenges. Discov. Artif. Intell. 2024, 4, 76. [Google Scholar] [CrossRef]

- Schenker, A.; Bunke, H.; Last, M.; Kandel, A. A Graph-Based Framework for Web Document Mining. In Document Analysis Systems VI; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2004; Volume 3163, pp. 401–412. [Google Scholar] [CrossRef]

- Khan, I.; Bokhari, M.U.; Afzal, S.; Alam, S. Distance driven graph neural network for advanced node classification through feature augmentation. Discov. Comput. 2025, 28, 70. [Google Scholar] [CrossRef]

- Fernandes, D.; Matos-Carvalho, J.P.; Fernandes, C.M.; Fachada, N. DeepSeek-V3, GPT-4, Phi-4, and LLaMA-3.3 Generate Correct Code for LoRaWAN-Related Engineering Tasks. Electronics 2025, 14, 1428. [Google Scholar] [CrossRef]

- Ghamati, K.; Banitalebi Dehkordi, M.; Zaraki, A. Towards AI-Powered Applications: The Development of a Personalised LLM for HRI and HCI. Sensors 2025, 25, 2024. [Google Scholar] [CrossRef] [PubMed]

- Wu, X.K.; Chen, M.; Li, W.; Wang, R.; Lu, L.; Liu, J.; Hwang, K.; Hao, Y.; Pan, Y.; Meng, Q.; et al. LLM Fine-Tuning: Concepts, Opportunities, and Challenges. Big Data Cogn. Comput. 2025, 9, 87. [Google Scholar] [CrossRef]

- Diebel, C.; Goutier, M.; Adam, M.; Benlian, A. When AI-Based Agents Are Proactive: Implications for Competence and System Satisfaction in Human–AI Collaboration. Bus. Inf. Syst. Eng. 2025. [Google Scholar] [CrossRef]

- Catak, F.O.; Kuzlu, M. Uncertainty quantification in large language models through convex hull analysis. Discov. Artif. Intell. 2024, 4, 90. [Google Scholar] [CrossRef]

- Aylak, B.L. SustAI-SCM: Intelligent Supply Chain Process Automation with Agentic AI for Sustainability and Cost Efficiency. Sustainability 2025, 17, 2453. [Google Scholar] [CrossRef]

- Zota, R.D.; Bărbulescu, C.; Constantinescu, R. A Practical Approach to Defining a Framework for Developing an Agentic AIOps System. Electronics 2025, 14, 1775. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).