Skeleton-Based Real-Time Hand Gesture Recognition Using Data Fusion and Ensemble Multi-Stream CNN Architecture

Abstract

1. Introduction

- Enhanced Data-Level Fusion: A refined transformation process for converting 3D skeleton data into high-quality 2D spatiotemporal representations, reducing noise and improving gesture classification accuracy.

- Optimized Multi-Stream CNN Architecture: A multi-stream CNN with a fully trainable ensemble tuner mechanism that strengthens the semantic connections between multiple gesture representations, improving classification performance.

- Real-Time Efficiency: The framework reduces computational complexity and achieves real-time performance on standard devices while maintaining accuracy comparable to state-of-the-art methods.

- Extensive Benchmarking and Validation: Empirical evaluations conducted on five widely used benchmark datasets (SHREC2017, DHG1428, FPHA, LMDHG, and CNR) validate the framework’s robustness and generalizability across diverse gesture categories.

2. Related Work

2.1. Skeleton-Based Hand Gesture Recognition

2.2. Data-Level Fusion

2.3. Multi-Stream Network Architectures

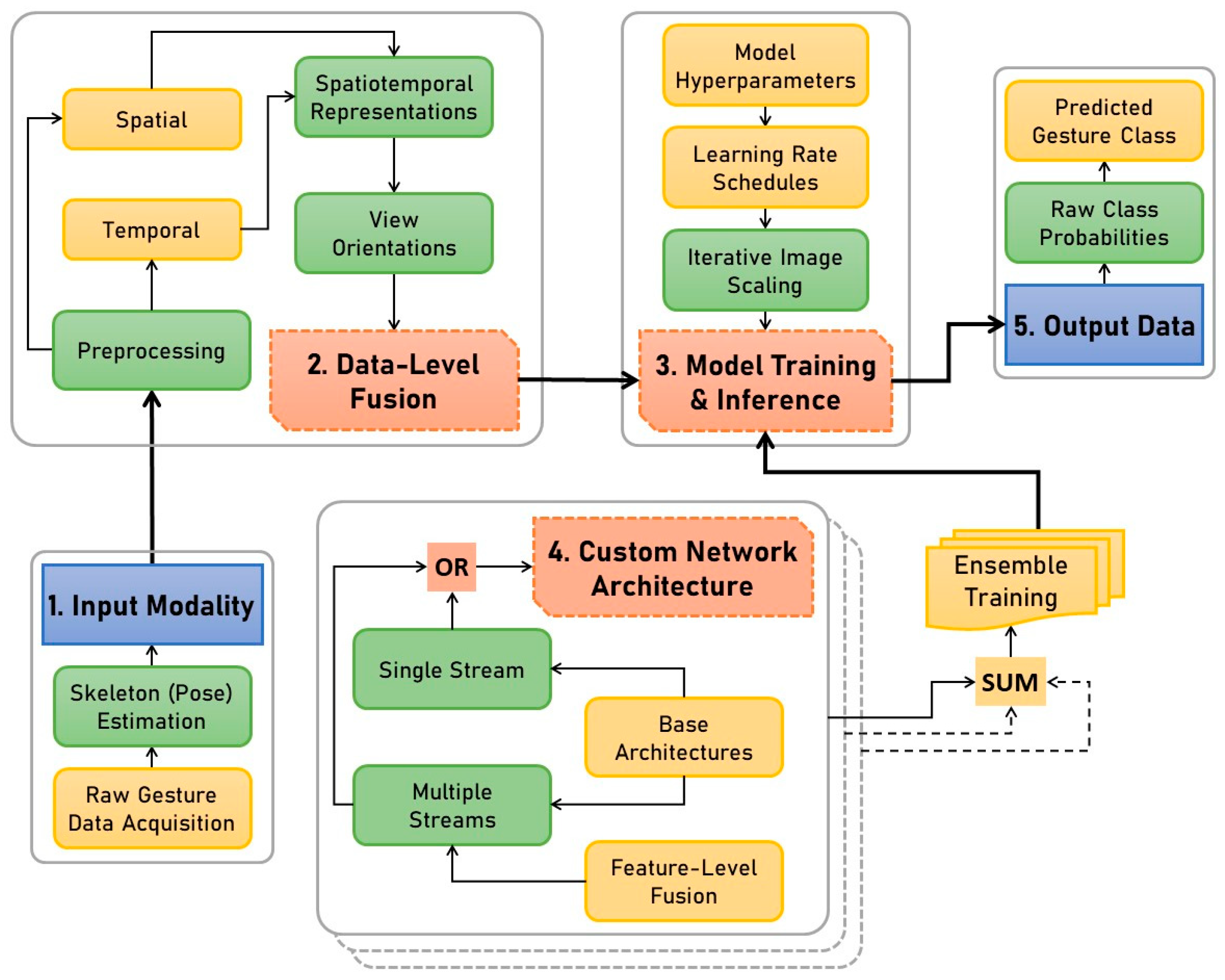

3. Skeleton-Based Hand Gesture Recognition Framework

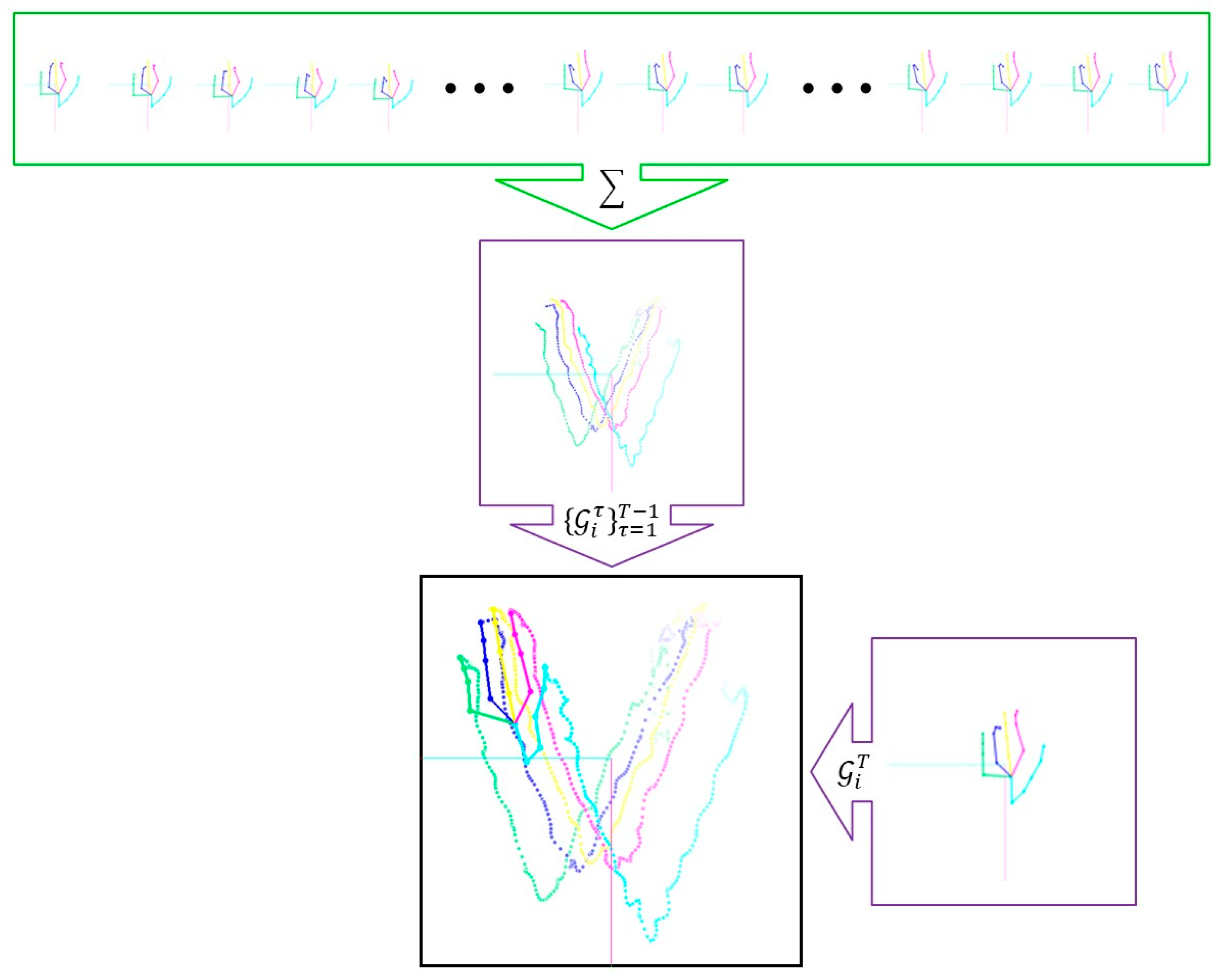

3.1. Generation of Static Spatiotemporal Images

- This process smooths out inaccuracies present in individual frames that may occur during pose estimation.

- It also reduces minor variations in motion trajectories and sequence durations caused by individual differences in gesture performance.
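The section does not spell out the exact operation behind these two effects. As a minimal sketch, assuming it combines fixed-length temporal resampling (linear interpolation between neighbouring frames) with a short centered moving average over the per-frame joint coordinates, the idea could look like this. The helpers `resample` and `moving_average`, the frame layout, the window size and the target length are all hypothetical choices, not the authors' settings.

```python
from typing import List, Sequence

# One frame = flattened joint coordinates (x1, y1, z1, x2, ...). Hypothetical layout.
Frame = List[float]


def resample(seq: Sequence[Frame], target_len: int) -> List[Frame]:
    """Linearly interpolate a variable-length sequence to exactly target_len frames.

    Normalizing every gesture to one length removes duration differences
    between performers (an assumed preprocessing step, not the paper's exact method).
    """
    if len(seq) < 2 or target_len < 2:
        raise ValueError("need at least two input frames and two output frames")
    n = len(seq)
    out: List[Frame] = []
    for i in range(target_len):
        t = i * (n - 1) / (target_len - 1)  # position on the input time axis
        lo = int(t)
        hi = min(lo + 1, n - 1)
        w = t - lo  # interpolation weight toward the next frame
        out.append([(1.0 - w) * a + w * b for a, b in zip(seq[lo], seq[hi])])
    return out


def moving_average(seq: Sequence[Frame], window: int = 3) -> List[Frame]:
    """Centered moving average per coordinate; the window shrinks at the edges.

    This damps single-frame jitter from the pose estimator.
    """
    half = window // 2
    out: List[Frame] = []
    for i in range(len(seq)):
        span = seq[max(0, i - half): min(len(seq), i + half + 1)]
        out.append([sum(vals) / len(span) for vals in zip(*span)])
    return out


if __name__ == "__main__":
    noisy = [[0.0], [1.0], [5.0], [3.0], [4.0]]  # one coordinate with a spike at frame 2
    print(moving_average(resample(noisy, 8)))
```

Resampling first and smoothing second keeps the filter width constant in normalized time, whatever the original gesture duration was.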

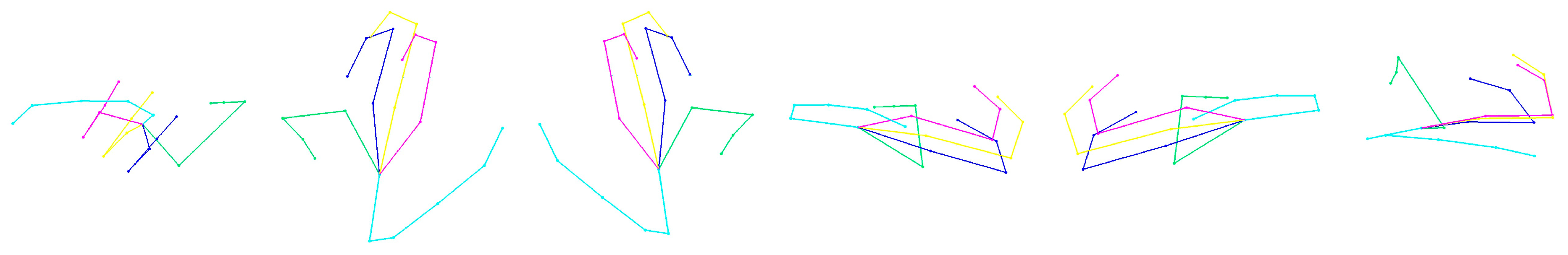

- Spatial: This channel represents changes in hand pose across frames as a 3D model of the hand skeleton. Each finger is displayed in a unique CSS color, chosen for high contrast with the background and with the others.

- Temporal: This channel visualizes hand motion through 3D “temporal trails” traced by the five fingertips from the gesture’s start to its end. Each trail consists of sequential markers drawn in the distinctive CSS color of its finger. The alpha channel sets each marker’s transparency: markers earlier in the sequence are more transparent and later ones more opaque, thus encoding the gesture’s temporal progression.
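A minimal sketch of how the temporal channel’s per-marker colors could be assigned: each fingertip gets a fixed CSS named color, and opacity rises linearly from a faint first marker to a fully opaque last marker. The specific color choices, the linear ramp and the `min_alpha` value are hypothetical, since the text does not list them.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical finger-to-color mapping using standard CSS named colors (RGB 0-255).
FINGER_COLORS: Dict[str, Tuple[int, int, int]] = {
    "thumb": (255, 0, 0),      # CSS "red"
    "index": (255, 165, 0),    # CSS "orange"
    "middle": (0, 255, 0),     # CSS "lime"
    "ring": (0, 0, 255),       # CSS "blue"
    "pinky": (255, 0, 255),    # CSS "magenta"
}


def trail_alphas(num_markers: int, min_alpha: float = 0.1, max_alpha: float = 1.0) -> List[float]:
    """Linearly increasing opacity: early markers faint, late markers opaque."""
    if num_markers < 1:
        return []
    if num_markers == 1:
        return [max_alpha]
    return [min_alpha + (max_alpha - min_alpha) * i / (num_markers - 1)
            for i in range(num_markers)]


def build_trails(fingertips: Dict[str, Sequence[Tuple[float, float, float]]]):
    """Return (x, y, z, r, g, b, a) markers, with RGB scaled to [0, 1]."""
    markers = []
    for finger, points in fingertips.items():
        r, g, b = (c / 255.0 for c in FINGER_COLORS[finger])
        for (x, y, z), a in zip(points, trail_alphas(len(points))):
            markers.append((x, y, z, r, g, b, a))
    return markers
```

The resulting RGBA tuples can be handed to any 3D scatter renderer; only the alpha value carries time, so color stays free to identify the finger.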

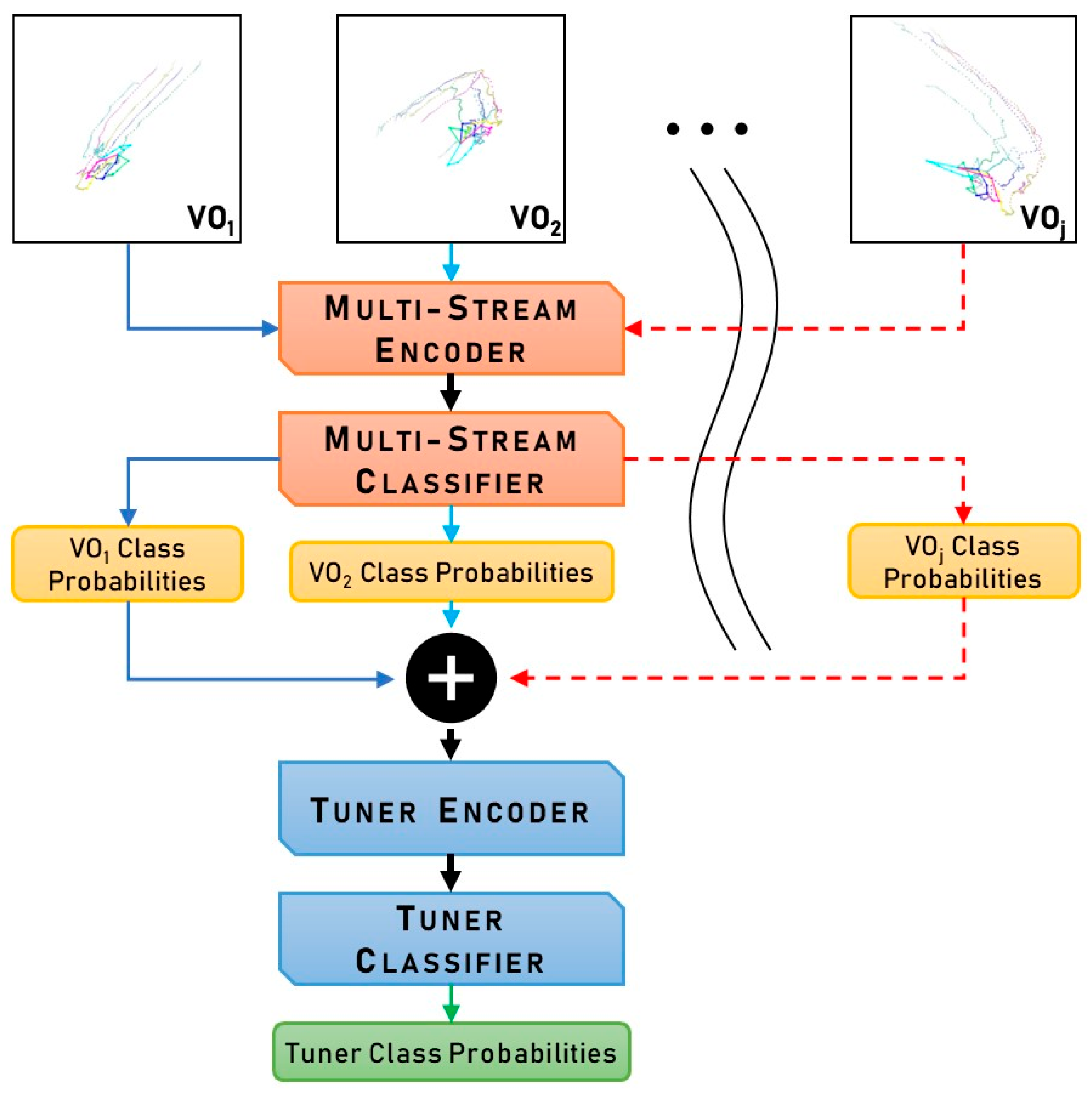

3.2. Multi-View Ensemble-Tuned CNN for Spatiotemporal Gesture Image Classification

4. Experiments & Results

4.1. Overview of Datasets

- CNR: The CNR dataset [25] comprises spatiotemporal images captured from a top-down perspective, totaling 1925 gesture sequences across 16 gesture classes. The data is partitioned into a training set of 1348 images and a validation set of 577 images. Notably, this dataset does not include raw skeleton data, limiting our framework’s training to a single viewpoint.

- LMDHG: The LMDHG dataset [50] consists of 608 gesture sequences spanning 13 classes, divided into 414 sequences for training and 194 for validation. It features minimal overlap in subjects between subsets and provides comprehensive 3D skeleton data with 46 hand joints per hand.

- FPHA: Containing 1175 gesture sequences distributed across 45 classes, the FPHA dataset [51] offers a diverse range of styles, perspectives, and scenarios. The main challenges include highly similar motion patterns, varied object interactions, and a relatively low ratio of gestures to classes. It is split into 600 gestures for training and 575 for validation, with 3D skeleton data available for 21 joints per subject.

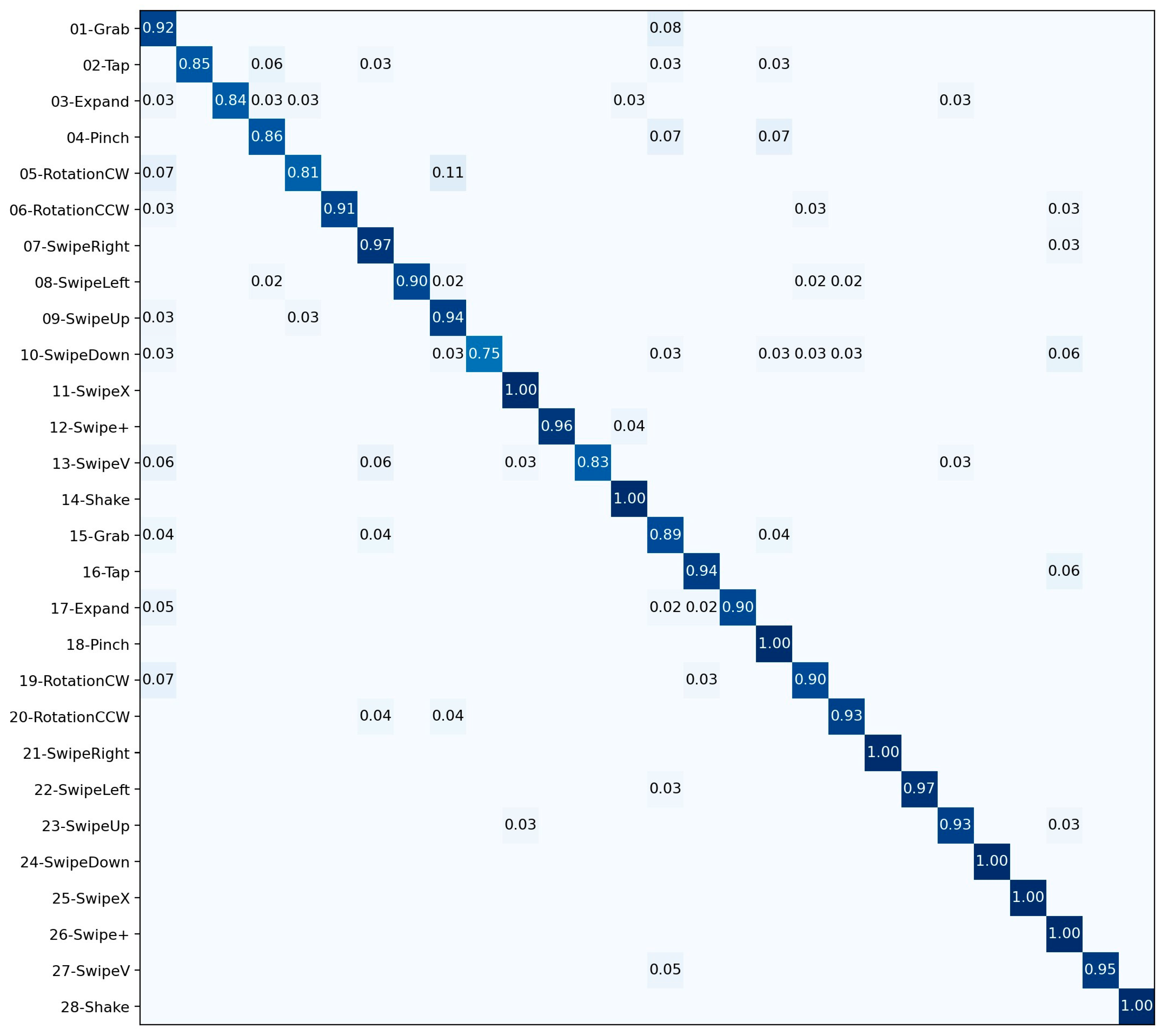

- SHREC2017: This dataset [8] features 2800 gesture sequences performed by 28 subjects, designed for both coarse- and fine-grained classification via 14-gesture (14 G) and 28-gesture (28 G) benchmarks. The data provides 3D skeleton information for 22 hand joints and follows a 70:30 random-split protocol for training (1960 gestures) and validation (840 gestures).

- DHG1428: Structured similarly to SHREC2017, the DHG1428 dataset [52] comprises 2800 sequences executed by 20 subjects for both the 14 G and 28 G tasks. It provides the same 22-joint skeleton data and follows a 70:30 split into training and validation sets.

- SBUKID: The SBUKID dataset [53], a smaller human action recognition (HAR) collection, includes 282 action sequences across eight classes of two-person interactions. It provides skeleton data for 15 joints per subject and uses a five-fold cross-validation protocol, reporting the average accuracy across all folds.
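The two generic protocols named above (a 70:30 random split for SHREC2017 and DHG1428, five-fold cross-validation for SBUKID) can be sketched at the index level as follows. This is an illustration under stated assumptions: it does not reproduce any official split lists shipped with the datasets, it ignores the subject-wise and fixed splits used for LMDHG and FPHA, and the helper names and seed are hypothetical. Under five-fold cross-validation, the reported figure is the mean of the five per-fold validation accuracies.

```python
import random
from typing import Iterator, List, Tuple


def random_split(n_items: int, train_frac: float = 0.7, seed: int = 0) -> Tuple[List[int], List[int]]:
    """Shuffle sequence indices and cut them into train/validation parts."""
    idx = list(range(n_items))
    random.Random(seed).shuffle(idx)
    n_train = round(n_items * train_frac)
    return idx[:n_train], idx[n_train:]


def k_fold(n_items: int, k: int = 5, seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train, val) index lists; every item is validated exactly once."""
    idx = list(range(n_items))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]


if __name__ == "__main__":
    train, val = random_split(2800)               # SHREC2017 / DHG1428 sequence count
    print(len(train), len(val))                   # 1960 840
    print([len(v) for _, v in k_fold(282)])       # SBUKID: [57, 57, 56, 56, 56]
```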

4.2. Generalized HGR Framework Evaluation

4.3. Implementation and Training Details

4.3.1. Environment and Software Setup

- PyTorch 1.8.1 + cu102 and FastAI 2.5.3 for model design, training, and evaluation.

- OpenCV 4.0.1 and Vispy 0.9.2 for generating pseudo-images and visualizations during data-level fusion.

- TensorBoard 2.8.0, integrated into FastAI, for visualizing metrics and logging experiments.

- torchvision.models

- FastAI 2.5.3

- pytorchcv.model_provider

- geffnet (GenEfficientNet 1.0.2)

4.3.2. Model Training and Hyperparameters

4.3.3. Reproducibility and Evaluation Protocol

4.3.4. Inference Speed and Performance

4.3.5. Reproducibility and Evaluation Protocol

4.4. Results on the CNR Dataset

4.5. Results on the LMDHG Dataset

4.6. Results on the FPHA Dataset

4.7. Results on the SHREC2017 Dataset

4.8. Results on the DHG1428 Dataset

4.9. Ablation Study on the SBUKID Dataset

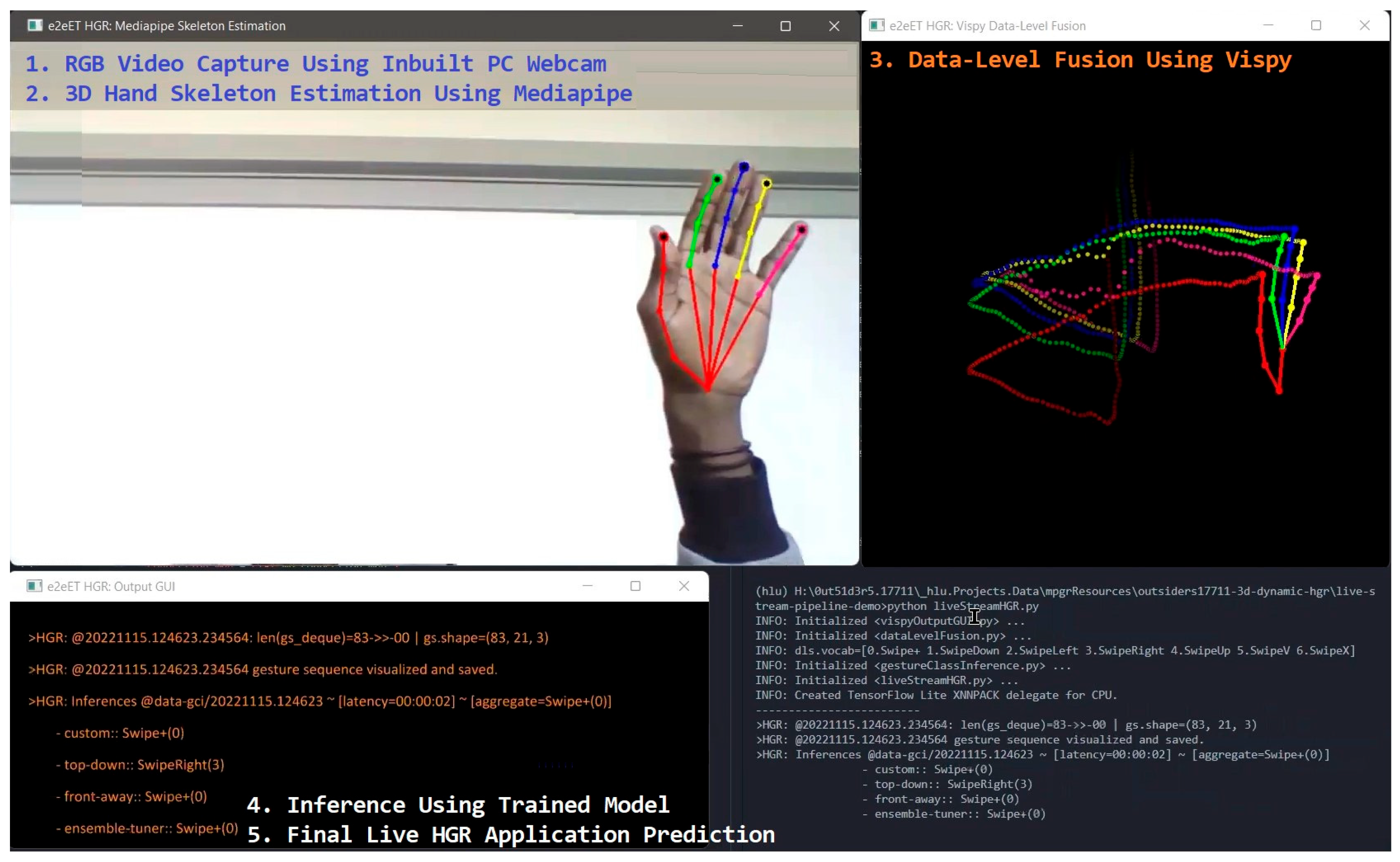

5. Real-Time HGR Application

- (1) OpenCV captures the raw gesture data as RGB videos from the built-in PC webcam.

- (2) The video frames are processed for hand detection and pose estimation using MediaPipe Hands.

- (3) Data-level fusion is performed on the skeleton data, resulting in three spatiotemporal images from [custom, top-down, front-away] view orientations.
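The steps above run as a sequential pipeline, so per-stage and end-to-end latency can be measured by timing each stage in turn. A minimal sketch with `time.perf_counter`; the stage functions are hypothetical stand-ins for the OpenCV capture, MediaPipe Hands pose estimation and data-level fusion calls, which are not reproduced here.

```python
import time
from typing import Any, Callable, Dict, List, Tuple

Stage = Tuple[str, Callable[[Any], Any]]


def run_timed(stages: List[Stage], data: Any) -> Tuple[Any, Dict[str, float]]:
    """Run stages in order, recording each stage's latency and the total in ms."""
    timings: Dict[str, float] = {}
    start = time.perf_counter()
    for name, fn in stages:
        t0 = time.perf_counter()
        data = fn(data)
        timings[name] = (time.perf_counter() - t0) * 1000.0
    timings["end_to_end"] = (time.perf_counter() - start) * 1000.0
    return data, timings


# Hypothetical stand-ins; a real application would call OpenCV, MediaPipe Hands
# and the data-level fusion renderer here.
def capture(_: Any) -> List[str]:
    return ["frame"] * 30


def estimate_pose(frames: List[str]) -> List[List[float]]:
    return [[0.0] * 63 for _ in frames]  # 21 hand landmarks x (x, y, z) per frame


def fuse(skeleton: List[List[float]]) -> Dict[str, int]:
    return {view: len(skeleton) for view in ("custom", "top-down", "front-away")}


if __name__ == "__main__":
    images, ms = run_timed([("capture", capture), ("pose", estimate_pose), ("fusion", fuse)], None)
    print(sorted(images), {k: round(v, 3) for k, v in ms.items()})
```

Timing each stage separately makes it easy to see whether capture, pose estimation or rendering dominates the end-to-end figure.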

Demonstration of the Real-Time HGR Application

- Data Acquisition. OpenCV captures live gesture data as RGB video streams from the built-in PC webcam.

- Hand Pose Estimation. Video frames are processed with MediaPipe Hands to detect the hand and estimate its pose.

- Data-Level Fusion. The skeleton data from the detected hand is used to generate three spatiotemporal images corresponding to the [custom, top-down, front-away] view orientations.

- Gesture Classification. The fused images are fed into the model trained on DHG1428, which returns four class predictions: three from the multi-stream sub-network and one from the ensemble tuner sub-network.

- Results Display. The application’s graphical user interface (GUI) presents the predicted gesture classes, relevant details about the gesture sequence, and the framework’s end-to-end latency.
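The classification step returns four predictions: one per stream plus one from the ensemble tuner. This section does not describe the tuner’s internals, so the sketch below assumes, hypothetically, that it is a single trainable linear layer over the concatenated per-stream softmax outputs. In the usage example the weights are hand-set to reproduce soft voting rather than learned, and all helper names are illustrative.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def argmax(values: Sequence[float]) -> int:
    return max(range(len(values)), key=lambda i: values[i])


def linear(x: Sequence[float], weights: Sequence[Sequence[float]], bias: Sequence[float]) -> List[float]:
    """Dense layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def predict_four(stream_logits, tuner_weights, tuner_bias) -> List[int]:
    """Three per-stream predictions plus one from the (hypothetical) tuner layer."""
    probs = [softmax(logits) for logits in stream_logits]
    stream_preds = [argmax(p) for p in probs]
    concat = [p for stream in probs for p in stream]  # stream-major concatenation
    tuner_pred = argmax(linear(concat, tuner_weights, tuner_bias))
    return stream_preds + [tuner_pred]


if __name__ == "__main__":
    n_classes, n_streams = 2, 3
    # Hand-set weights that add up each class's probability across streams (soft voting).
    W = [[1.0 if j % n_classes == c else 0.0 for j in range(n_classes * n_streams)]
         for c in range(n_classes)]
    print(predict_four([[2.0, 0.0], [0.0, 1.0], [3.0, 0.0]], W, [0.0] * n_classes))  # [0, 1, 0, 0]
```

Because the tuner sees every stream’s full class distribution, a trained version can learn which view to trust for which class, something fixed voting cannot do.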

6. Conclusions and Future Work

6.1. Conclusions

6.2. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Morris, M.R. AI and Accessibility. Commun. ACM 2020, 63, 35–37.

- Benitez-Garcia, G.; Olivares-Mercado, J.; Sanchez-Perez, G.; Yanai, K. IPN Hand: A Video Dataset and Benchmark for Real-Time Continuous Hand Gesture Recognition. arXiv 2020, arXiv:2005.02134.

- Gammulle, H.; Denman, S.; Sridharan, S.; Fookes, C. TMMF: Temporal Multi-Modal Fusion for Single-Stage Continuous Gesture Recognition. IEEE Trans. Image Process. 2021, 30, 7689–7701.

- Lai, K.; Yanushkevich, S. An Ensemble of Knowledge Sharing Models for Dynamic Hand Gesture Recognition. arXiv 2020, arXiv:2008.05732.

- Li, C.; Li, S.; Gao, Y.; Zhang, X.; Li, W. A Two-stream Neural Network for Pose-based Hand Gesture Recognition. arXiv 2021, arXiv:2101.08926.

- Liu, J.; Liu, Y.; Wang, Y.; Prinet, V.; Xiang, S.; Pan, C. Decoupled Representation Learning for Skeleton-Based Gesture Recognition. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 5751–5760. Available online: https://openaccess.thecvf.com/content_CVPR_2020/html/Liu_Decoupled_Representation_Learning_for_Skeleton-Based_Gesture_Recognition_CVPR_2020_paper.html (accessed on 14 October 2025).

- Wang, Z.; She, Q.; Chalasani, T.; Smolic, A. CatNet: Class Incremental 3D ConvNets for Lifelong Egocentric Gesture Recognition. arXiv 2020, arXiv:2004.09215.

- Yang, F.; Sakti, S.; Wu, Y.; Nakamura, S. Make Skeleton-based Action Recognition Model Smaller, Faster and Better. arXiv 2020, arXiv:1907.09658.

- Zhang, C.; Zou, Y.; Chen, G.; Gan, L. PAN: Towards Fast Action Recognition via Learning Persistence of Appearance. arXiv 2020, arXiv:2008.03462.

- Yusuf, O.; Habib, M. Development of a Lightweight Real-Time Application for Dynamic Hand Gesture Recognition. In Proceedings of the 2023 IEEE International Conference on Mechatronics and Automation (ICMA), Harbin, China, 6–9 August 2023; pp. 543–548.

- Chen, J.; Zhao, C.; Wang, Q.; Meng, H. HMANet: Hyperbolic Manifold Aware Network for Skeleton-Based Action Recognition. IEEE Trans. Cogn. Dev. Syst. 2023, 15, 602–614.

- De Smedt, Q.; Wannous, H.; Vandeborre, J.-P.; Guerry, J.; Le Saux, B.; Filliat, D. 3D Hand Gesture Recognition Using a Depth and Skeletal Dataset. In Proceedings of the 3Dor ’17: Proceedings of the Workshop on 3D Object Retrieval, Lyon, France, 23–24 April 2017; Eurographics Association: Goslar, Germany, 2017; pp. 33–38.

- Kacem, A.; Daoudi, M.; Amor, B.B.; Berretti, S.; Alvarez-Paiva, J.C. A Novel Geometric Framework on Gram Matrix Trajectories for Human Behavior Understanding. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 1–14.

- Liu, J.; Wang, G.; Duan, L.Y.; Abdiyeva, K.; Kot, A.C. Skeleton-Based Human Action Recognition with Global Context-Aware Attention LSTM Networks. IEEE Trans. Image Process. 2018, 27, 1586–1599.

- Shi, L.; Zhang, Y.; Cheng, J.; Lu, H. Decoupled Spatial-Temporal Attention Network for Skeleton-Based Action Recognition. arXiv 2020, arXiv:2007.03263.

- Gao, S.; Zhang, D.; Tang, Z.; Wang, H. Deep fusion of skeleton spatial–temporal and dynamic information for action recognition. Sensors 2024, 24, 7609.

- Yin, R.; Yin, J. A Two-Stream Hybrid CNN-Transformer Network for Skeleton-Based Human Interaction Recognition. In Proceedings of the Pattern Recognition and Computer Vision, Proceedings of the 7th Chinese Conference (PRCV 2024), Urumqi, China, 18–20 October 2024; PRCV 2024, Part VII.; Springer: Berlin/Heidelberg, Germany, 2024; Volume 15037, pp. 395–408.

- Molchanov, P.; Gupta, S.; Kim, K.; Kautz, J. Hand gesture recognition with 3D convolutional neural networks. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Boston, MA, USA, 7–12 June 2015; pp. 1–7.

- Zhang, P.; Lan, C.; Xing, J.; Zeng, W.; Xue, J.; Zheng, N. View Adaptive Neural Networks for High Performance Skeleton-Based Human Action Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 1963–1978.

- Zhang, X.; Li, S.; Zeng, X.; Lu, P.; Sun, W. A Novel Multimodal Hand Gesture Recognition Model Using Combined Approach of Inter-Frame Motion and Shared Attention Weights. Computers 2025, 14, 432.

- Deng, Z.; Gao, Q.; Ju, Z.; Yu, X. Skeleton-Based Multifeatures and Multistream Network for Real-Time Action Recognition. IEEE Sens. J. 2023, 23, 7397–7409.

- Akremi, M.S.; Slama, R.; Tabia, H. SPD Siamese Neural Network for Skeleton-based Hand Gesture Recognition. In Proceedings of the 17th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2022), Volume 4: VISAPP, Online, 6–8 February 2022; SciTePress: Setúbal, Portugal, 2022; pp. 394–402.

- Dang, T.L.; Pham, T.H.; Dao, D.M.; Nguyen, H.V.; Dang, Q.M.; Nguyen, B.T.; Monet, N. DATE: A video dataset and benchmark for dynamic hand gesture recognition. Neural Comput. Appl. 2024, 36, 17311–17325.

- Neverova, N.; Wolf, C.; Taylor, G.W.; Nebout, F. Multi-scale Deep Learning for Gesture Detection and Localization. In Computer Vision – ECCV 2014 Workshops; Agapito, L., Bronstein, M., Rother, C., Eds.; Springer: Berlin/Heidelberg, Germany, 2015; Volume 8925.

- Liu, X.; Shi, H.; Hong, X.; Chen, H.; Tao, D.; Zhao, G. 3D Skeletal Gesture Recognition via Hidden States Exploration. IEEE Trans. Image Process. 2020, 29, 4583–4597.

- Nguyen, X.S.; Brun, L.; Lézoray, O.; Bougleux, S. A neural network based on SPD manifold learning for skeleton-based hand gesture recognition. arXiv 2019, arXiv:1904.12970.

- Liu, Y.; Jiao, J. Fusing Skeleton-Based Scene Flow for Gesture Recognition on Point Clouds. Electronics 2025, 14, 567.

- Akrem, M.S. Manifold-Based Approaches for Action and Gesture Recognition/Approches Basées sur les Variétés Pour la Reconnaissance des Actions et des Gestes. Ph.D. Dissertation, IBISC Lab, Paris-Saclay, Paris, France, 2025.

- Sahbi, H. Skeleton-based Hand-Gesture Recognition with Lightweight Graph Convolutional Networks. arXiv 2021, arXiv:2104.04255.

- Rehan, M.; Wannous, H.; Alkheir, J.; Aboukassem, K. Learning Co-occurrence Features Across Spatial and Temporal Domains for Hand Gesture Recognition. In Proceedings of the 19th International Conference on Content-based Multimedia Indexing (CBMI ’22), Graz, Austria, 14–16 September 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 36–42.

- Hu, J.; Wu, C.; Xu, T.; Wu, X.-J.; Kittler, J. Spatio-Temporal Domain-Aware Network for Skeleton-Based Action Representation Learning. In Proceedings of the Pattern Recognition: 27th International Conference, ICPR 2024, Kolkata, India, 1–5 December 2024; Proceedings, Part XXIX. Springer: Cham, Switzerland, 2024; pp. 148–163.

- Sabater, A.; Alonso, I.; Montesano, L.; Murillo, A.C. Domain and View-point Agnostic Hand Action Recognition. arXiv 2021, arXiv:2103.02303.

- Narayan, S.; Mazumdar, A.P.; Vipparthi, S.K. SBI-DHGR: Skeleton-based intelligent dynamic hand gestures recognition. Expert Syst. Appl. 2023, 232, 120735.

- Chen, Y.; Zhao, L.; Peng, X.; Yuan, J.; Metaxas, D.N. Construct Dynamic Graphs for Hand Gesture Recognition via Spatial-Temporal Attention. arXiv 2019, arXiv:1907.08871.

- Mohammed, A.A.Q.; Gao, Y.; Ji, Z.; Lv, J.; Islam, S.; Sang, Y. Automatic 3D Skeleton-based Dynamic Hand Gesture Recognition Using Multi-Layer Convolutional LSTM. In Proceedings of the 7th International Conference on Robotics and Artificial Intelligence (ICRAI’21), Guangzhou, China, 19–22 November 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 8–14.

- Shanmugam, S.; A, L.S.; Dhanasekaran, P.; Mahalakshmi, P.; Sharmila, A. Hand Gesture Recognition using Convolutional Neural Network. In Proceedings of the 2021 Innovations in Power and Advanced Computing Technologies (i-PACT), Kuala Lumpur, Malaysia, 27–29 November 2021; pp. 1–5.

- Min, Y.; Zhang, Y.; Chai, X.; Chen, X. An Efficient PointLSTM for Point Clouds Based Gesture Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 5761–5770. Available online: https://openaccess.thecvf.com/content_CVPR_2020/html/Min_An_Efficient_PointLSTM_for_Point_Clouds_Based_Gesture_Recognition_CVPR_2020_paper.html (accessed on 14 October 2025).

- Maghoumi, M.; LaViola, J.J., Jr. DeepGRU: Deep Gesture Recognition Utility. arXiv 2019, arXiv:1810.12514.

- Song, J.-H.; Kong, K.; Kang, S.-J. Dynamic Hand Gesture Recognition Using Improved Spatio-Temporal Graph Convolutional Network. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 6227–6239.

- Molchanov, P.; Yang, X.; Gupta, S.; Kim, K.; Tyree, S.; Kautz, J. Online Detection and Classification of Dynamic Hand Gestures with Recurrent 3D Convolutional Neural Networks. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4207–4215.

- Köpüklü, O.; Köse, N.; Rigoll, G. Motion Fused Frames: Data Level Fusion Strategy for Hand Gesture Recognition. arXiv 2018, arXiv:1804.07187.

- Zhou, H.; Le, H.T.; Zhang, S.; Phung, S.L.; Alici, G. Hand Gesture Recognition from Surface Electromyography Signals with Graph Convolutional Network and Attention Mechanisms. IEEE Sens. J. 2025, 25, 9081–9092.

- Wang, B.; Lu, R.; Zhang, L. Efficient Hand Gesture Recognition Using Multi-Stream CNNs with Feature Fusion Strategies. IEEE Access 2021, 9, 23567–23578.

- Abavisani, M.; Joze, H.R.V.; Patel, V.M. Improving the Performance of Unimodal Dynamic Hand-Gesture Recognition with Multimodal Training. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 1165–1174. Available online: https://openaccess.thecvf.com/content_CVPR_2019/html/Abavisani_Improving_the_Performance_of_Unimodal_Dynamic_Hand-Gesture_Recognition_With_Multimodal_CVPR_2019_paper.html (accessed on 14 October 2025).

- Liu, W.; Lu, B. Multi-Stream Convolutional Neural Network-Based Wearable, Flexible Bionic Gesture Surface Muscle Feature Extraction and Recognition. Front. Bioeng. Biotechnol. 2022, 10, 833793.

- Yang, X.; Molchanov, P.; Kautz, J. Making Convolutional Networks Recurrent for Visual Sequence Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 6469–6478. Available online: https://openaccess.thecvf.com/content_cvpr_2018/html/Yang_Making_Convolutional_Networks_CVPR_2018_paper.html (accessed on 14 October 2025).

- Yu, Z.; Zhou, B.; Wan, J.; Wang, P.; Chen, H.; Liu, X.; Li, S.Z.; Zhao, G. Searching Multi-Rate and Multi-Modal Temporal Enhanced Networks for Gesture Recognition. IEEE Trans. Image Process. 2021, 30, 5626–5640.

- Li, L.; Qin, S.; Lu, Z.; Zhang, D.; Xu, K.; Hu, Z. Real-time one-shot learning gesture recognition based on lightweight 3D Inception-ResNet with separable convolutions. Pattern Anal. Appl. 2021, 24, 1173–1192.

- Kendall, A.; Gal, Y.; Cipolla, R. Multi-Task Learning Using Uncertainty to Weigh Losses for Scene Geometry and Semantics. arXiv 2018, arXiv:1705.07115.

- Boulahia, S.Y.; Anquetil, E.; Multon, F.; Kulpa, R. Dynamic hand gesture recognition based on 3D pattern assembled trajectories. In Proceedings of the Seventh International Conference on Image Processing Theory, Tools and Applications (IPTA), Montreal, QC, Canada, 28 November–1 December 2017; pp. 1–6.

- Devineau, G.; Xi, W.; Moutarde, F.; Yang, J. Deep Learning for Hand Gesture Recognition on Skeletal Data. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018.

- De Smedt, Q.; Wannous, H.; Vandeborre, J.-P. Skeleton-Based Dynamic Hand Gesture Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1206–1214.

- Kim, S.; Jung, J.; Lee, K.J. A Real-Time Sparsity-Aware 3D-CNN Processor for Mobile Hand Gesture Recognition. IEEE Trans. Circuits Syst. I Regul. Pap. 2024, 71, 3695–3707.

- Lupinetti, K.; Ranieri, A.; Giannini, F.; Monti, M. 3D dynamic hand gestures recognition using the Leap Motion sensor and convolutional neural networks. arXiv 2020, arXiv:2003.01450.

- Zhang, C.; Zou, Y.; Chen, G.; Gan, L. PAN: Persistent Appearance Network with an Efficient Motion Cue for Fast Action Recognition. In Proceedings of the MM ’19: Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 500–509.

- Miah, A.S.M.; Hasan, A.M.; Shin, J. Dynamic Hand Gesture Recognition Using Multi-Branch Attention Based Graph and General Deep Learning Model. IEEE Access 2023, 11, 4703–4716.

- Lai, K.; Yanushkevich, S.N. CNN+RNN Depth and Skeleton based Dynamic Hand Gesture Recognition. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 3451–3456.

- Chen, X.; Guo, H.; Wang, G.; Zhang, L. Motion Feature Augmented Recurrent Neural Network for Skeleton-based Dynamic Hand Gesture Recognition. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 2881–2885.

- Weng, J.; Liu, M.; Jiang, X.; Yuan, J. Deformable Pose Traversal Convolution for 3D Action and Gesture Recognition. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 136–152. Available online: https://openaccess.thecvf.com/content_ECCV_2018/html/Junwu_Weng_Deformable_Pose_Traversal_ECCV_2018_paper.html (accessed on 14 October 2025).

- Nguyen, X.S.; Brun, L.; Lezoray, O.; Bougleux, S. Skeleton-Based Hand Gesture Recognition by Learning SPD Matrices with Neural Networks. arXiv 2019, arXiv:1905.07917.

- Song, S.; Lan, C.; Xing, J.; Zeng, W.; Liu, J. Spatio-Temporal Attention-Based LSTM Networks for 3D Action Recognition and Detection. IEEE Trans. Image Process. 2018, 27, 3459–3471.

- Liu, J.; Wang, Y.; Xiang, S.; Pan, C. HAN: An Efficient Hierarchical Self-Attention Network for Skeleton-Based Gesture Recognition. arXiv 2021, arXiv:2106.13391.

- Ke, Q.; Bennamoun, M.; An, S.; Sohel, F.; Boussaid, F. Learning Clip Representations for Skeleton-Based 3D Action Recognition. IEEE Trans. Image Process. 2018, 27, 2842–2855.

- Mucha, W.; Kampel, M. Beyond Privacy of Depth Sensors in Active and Assisted Living Devices. In Proceedings of the 15th International Conference on PErvasive Technologies Related to Assistive Environments, Corfu, Greece, 29 June–1 July 2022; ACM: Corfu, Greece, 2022; pp. 425–429.

| Dataset | Sequences (Train/Val) | Gesture Classes | Subjects | Skeleton Data | Validation Protocol |
|---|---|---|---|---|---|
| CNR | 1925 (1348/577) | 16 | 10 | Not available (depth-based, continuous gesture) | Fixed split (top-view) |
| LMDHG | 608 (414/194) | 13 | 14 | 46 joints | Subject-wise split |
| FPHA | 1175 (600/575) | 45 | 6 | 21 joints | Fixed split (per activity) |
| SHREC2017 | 2800 (1960/840) | 14 G/28 G | 28 | 22 joints | Random 70:30 split |
| DHG1428 | 2800 (1960/840) | 14 G/28 G | 20 | 22 joints | Random 70:30 split |
| SBUKID | 282 (Cross-val) | 8 | 7 pairs | 15 joints | 5-fold cross-validation |

| View Orientation | DHG1428 | SHREC2017 | FPHA | LMDHG |
|---|---|---|---|---|
| top-down | (0.0, 0.0) | (0.0, 0.0) | (90.0, 0.0) | (0.0, 0.0) |
| front-to | (90.0, 180.0) | (90.0, 180.0) | (0.0, 180.0) | (−90.0, −180.0) |
| front-away | (−90.0, 0.0) | (−90.0, 0.0) | (0.0, 0.0) | (90.0, 0.0) |
| side-right | (0.0, −90.0) | (0.0, −90.0) | (0.0, 90.0) | (0.0, 90.0) |
| side-left | (0.0, 90.0) | (0.0, 90.0) | (0.0, −90.0) | (0.0, −90.0) |
| custom | (30.0, −132.5) | (30.0, −132.5) | (25.0, 115.0) | (−15.0, −135.0) |
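The table does not label the two numbers in each pair. If they are read as (elevation, azimuth) in degrees, which is an assumption (Vispy, listed in Section 4.3.1, parameterizes its turntable camera by elevation and azimuth), a view can be approximated by rotating the 3D joints and projecting orthographically. The rotation convention below (azimuth about the z-axis, then elevation about the x-axis, depth dropped) is a hypothetical simplification and is not guaranteed to match Vispy’s camera.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point3 = Tuple[float, float, float]

# DHG1428 column of the table, read (by assumption) as (elevation, azimuth) in degrees.
DHG1428_VIEWS: Dict[str, Tuple[float, float]] = {
    "top-down": (0.0, 0.0),
    "front-to": (90.0, 180.0),
    "front-away": (-90.0, 0.0),
    "side-right": (0.0, -90.0),
    "side-left": (0.0, 90.0),
    "custom": (30.0, -132.5),
}


def project(points: Sequence[Point3], elevation: float, azimuth: float) -> List[Tuple[float, float]]:
    """Rotate by azimuth (about z), then elevation (about x); drop depth (orthographic)."""
    a, e = math.radians(azimuth), math.radians(elevation)
    ca, sa, ce, se = math.cos(a), math.sin(a), math.cos(e), math.sin(e)
    out = []
    for x, y, z in points:
        x1, y1 = x * ca - y * sa, x * sa + y * ca  # azimuth rotation
        y2 = y1 * ce - z * se                       # elevation rotation; z2 would be depth
        out.append((x1, y2))
    return out


if __name__ == "__main__":
    fingertip = [(1.0, 0.0, 0.5)]
    for view, (elev, azim) in DHG1428_VIEWS.items():
        print(view, project(fingertip, elev, azim))
```

Whatever the exact convention, per-dataset angle tables like this one are needed because each capture setup places the hand in a different world frame.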

| CNN Family | Variant | TS1 Accuracy | TS2 Accuracy | Variant Average | Family Average |
|---|---|---|---|---|---|
| ResNet | ResNet18 | 0.8083 | 0.7762 | 0.79225 | 0.8149 |
| | ResNet34 | 0.8190 | 0.7952 | 0.8071 | |
| | ResNet50 | 0.8417 | 0.8012 | 0.82145 | |
| | ResNet101 | 0.8226 | 0.8286 | 0.8256 | |
| | ResNet152 | 0.8310 | 0.8250 | 0.828 | |
| Inception | Inception-v3 | 0.8155 | 0.7762 | 0.79585 | 0.8124 |
| | Inception-v4 | 0.8310 | 0.7964 | 0.8137 | |
| | Inception-ResNet-v1 | 0.8179 | 0.8262 | 0.82205 | |
| | Inception-ResNet-v2 | 0.8167 | 0.8190 | 0.81785 | |
| EfficientNet | EfficientNet-B0 | 0.8143 | 0.7964 | 0.80535 | 0.8083 |
| | EfficientNet-B3 | 0.8107 | 0.8107 | 0.8107 | |
| | EfficientNet-B5 | 0.8143 | 0.8131 | 0.8137 | |
| | EfficientNet-B7 | 0.7893 | 0.8179 | 0.8036 | |
| ResNeXt | ResNeXt26 | 0.8048 | 0.7726 | 0.7887 | 0.8042 |
| | ResNeXt50 | 0.8298 | 0.7952 | 0.8125 | |
| | ResNeXt101 | 0.8226 | 0.8000 | 0.8113 | |
| SE-ResNeXt | SE-ResNeXt50 | 0.8143 | 0.7595 | 0.7869 | 0.8033 |
| | SE-ResNeXt101 | 0.8405 | 0.7988 | 0.81965 | |
| SE-ResNet | SE-ResNet18 | 0.7881 | 0.7774 | 0.78275 | 0.8032 |
| | SE-ResNet26 | 0.8048 | 0.7726 | 0.7887 | |
| | SE-ResNet50 | 0.8179 | 0.8024 | 0.81015 | |
| | SE-ResNet101 | 0.8310 | 0.8131 | 0.82205 | |
| | SE-ResNet152 | 0.8060 | 0.8190 | 0.8125 | |
| xResNet | xResNet50 | 0.7440 | 0.7333 | 0.73865 | 0.7379 |
| | xResNet50-Deep | 0.7226 | 0.7357 | 0.72915 | |
| | xResNet50-Deeper | 0.7405 | 0.7512 | 0.74585 | |
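The averaged columns of the backbone comparison follow directly from the per-split values: each Variant Average is the mean of the TS1 and TS2 accuracies, and each Family Average is the mean of that family’s Variant Averages (this holds for every row of the table). A short Python check for the ResNet and xResNet rows, with values copied from the table as fractions:

```python
from statistics import mean
from typing import Dict, Tuple

# (TS1, TS2) accuracies copied from the table, as fractions.
RESULTS: Dict[str, Dict[str, Tuple[float, float]]] = {
    "ResNet": {
        "ResNet18": (0.8083, 0.7762),
        "ResNet34": (0.8190, 0.7952),
        "ResNet50": (0.8417, 0.8012),
        "ResNet101": (0.8226, 0.8286),
        "ResNet152": (0.8310, 0.8250),
    },
    "xResNet": {
        "xResNet50": (0.7440, 0.7333),
        "xResNet50-Deep": (0.7226, 0.7357),
        "xResNet50-Deeper": (0.7405, 0.7512),
    },
}


def variant_average(ts1: float, ts2: float) -> float:
    """Mean of the two test-split accuracies for one backbone variant."""
    return (ts1 + ts2) / 2


def family_average(variants: Dict[str, Tuple[float, float]]) -> float:
    """Unweighted mean of a family's variant averages."""
    return mean(variant_average(*v) for v in variants.values())


if __name__ == "__main__":
    for family, variants in RESULTS.items():
        print(family, round(family_average(variants), 4))  # ResNet 0.8149, then xResNet 0.7379
```

Because the family figure is an unweighted mean over variants, a family with one weak member (for example, ResNet18) is pulled down even if its best variant is competitive.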

| Method | Classification Accuracy (%) |
|---|---|
| Proposed Framework | 97.05 |
| Lupinetti et al. [54] | 98.78 |

| Method | Classification Accuracy (%) |
|---|---|
| Boulahia et al. [50] | 84.78 |
| Lupinetti et al. [25] | 92.11 |
| Mohammed et al. [37] | 93.81 |
| Proposed Framework | 98.97 |

| Method | Classification Accuracy (%) |
|---|---|
| Sahbi [29] | 86.78 |
| Liu et al. [14] | 89.04 |
| Li et al. [5] | 90.26 |
| Liu et al. [6] | 90.96 |
| Proposed Framework | 91.83 |
| Nguyen et al. [26] | 93.22 |
| Rehan et al. [30] | 93.91 |
| Sabater et al. [32] | 95.93 |

| Method | 14 G Accuracy (%) | 28 G Accuracy (%) | Average (%) |
|---|---|---|---|
| Sabater et al. [32] | 93.57 | 91.43 | 92.50 |
| Chen et al. [34] | 94.40 | 90.70 | 92.55 |
| Yang et al. [8] | 94.60 | 91.90 | 93.25 |
| Liu et al. [6] | 94.88 | 92.26 | 93.57 |
| Liu et al. [14] | 95.00 | 92.86 | 93.93 |
| Rehan et al. [30] | 95.60 | 92.74 | 94.17 |
| Mohammed et al. [37] | 95.60 | 93.10 | 94.35 |
| Deng et al. [21] | 96.40 | 93.30 | 94.85 |
| Min et al. [56] | 95.90 | 94.70 | 95.30 |
| Shi et al. [15] | 97.00 | 93.90 | 95.45 |
| Proposed Framework | 97.86 | 95.36 | 96.61 |

| Method | 14 G Accuracy (%) | 28 G Accuracy (%) | Average (%) |
|---|---|---|---|
| Lai et al. [57] | 85.46 | 74.19 | 79.83 |
| Chen et al. [58] | 84.68 | 80.32 | 82.50 |
| Weng et al. [59] | 85.80 | 80.20 | 83.00 |
| Devineau et al. [22] | 91.28 | 84.35 | 87.82 |
| Nguyen et al. [26] | 92.38 | 86.31 | 89.35 |
| Chen et al. [34] | 91.90 | 88.00 | 89.95 |
| Mohammed et al. [37] | 91.64 | 89.46 | 90.55 |
| Liu et al. [6] | 92.54 | 88.86 | 90.70 |
| Liu et al. [14] | 92.71 | 89.15 | 90.93 |
| Nguyen et al. [60] | 94.29 | 89.40 | 91.85 |
| Shi et al. [15] | 93.80 | 90.90 | 92.35 |
| Proposed Framework | 95.83 | 92.38 | 94.11 |
| Li et al. [5] | 96.31 | 94.05 | 95.18 |
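In the SHREC2017 and DHG1428 comparisons, the Average column is the arithmetic mean of the 14 G and 28 G accuracies, rounded half-up to two decimals. This matches every row, including exact ties such as 94.105 → 94.11 and 79.825 → 79.83. A small Python check; `Decimal` is used because binary floats cannot represent values like 94.105 exactly and may round such ties either way.

```python
from decimal import Decimal, ROUND_HALF_UP


def benchmark_average(acc_14g: str, acc_28g: str) -> Decimal:
    """Mean of the 14 G and 28 G accuracies, rounded half-up to two decimals."""
    avg = (Decimal(acc_14g) + Decimal(acc_28g)) / 2
    return avg.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


if __name__ == "__main__":
    # Proposed Framework rows from the SHREC2017 and DHG1428 tables.
    print(benchmark_average("97.86", "95.36"))  # 96.61
    print(benchmark_average("95.83", "92.38"))  # 94.11
```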

| Method | Average Cross-Validation Classification Accuracy (%) |
|---|---|
| Song et al. [61] | 91.50 |
| Liu et al. [62] | 93.50 |
| Kacem et al. [13] | 93.70 |
| Proposed Framework | 93.96 |
| Ke et al. [63] | 94.17 |
| Mucha & Kampel [64] | 94.90 |
| Maghoumi et al. [38] | 95.70 |
| Zhang et al. [19] | 98.30 |
| Lupinetti et al. [54] | 98.33 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Habib, M.K.; Yusuf, O.; Moustafa, M. Skeleton-Based Real-Time Hand Gesture Recognition Using Data Fusion and Ensemble Multi-Stream CNN Architecture. Technologies 2025, 13, 484. https://doi.org/10.3390/technologies13110484
