1. Introduction

Face recognition technologies have gained significance and popularity over the past few decades due to their extensive use in security, surveillance, and human–computer interaction [1]. Face recognition has advanced rapidly, with considerable research suggesting numerous ways to enhance its effectiveness. However, face images in multimedia applications, such as social networks, exhibit substantial variations in pose, lighting, and expression, which significantly undermine the performance of traditional algorithms [2].

In an era when facial recognition technology is central to security and human–computer interaction, the ongoing age-related changes in facial appearance pose a significant challenge [3]. Facial recognition technology is widely employed in various applications, such as time and attendance tracking, payment systems, and access control, offering substantial convenience [4].

Facial recognition technology continues to gain momentum, propelled by recent advances in deep learning and the creation of extensive training datasets [5]. However, using facial recognition for authentication is complicated by real-world variations such as changes in pose, angle, lighting, and obstructions [6].

Facial recognition technologies, which utilize photos or videos, are prevalent in our daily lives. They can be used for security surveillance, access control, and security checks, and can even be combined with other biometric methods, such as fingerprinting and iris scanning. Google’s FaceNet is a notable advance in facial recognition technology [7].

FaceNet’s ability to generate small, discriminative embeddings is crucial in the feature extraction layer of the presented model. The use of triplet loss ensures that embeddings are optimized for similarity tasks, and the attention-augmented version enhances performance in the presence of occlusions, which aligns with the model’s aim of robust face recognition [8,9].

Although FaceNet excels at learning discriminative and compact representations using triplet loss, it is vulnerable to occlusions and lacks context awareness. Specifically, it treats all facial regions equally, which hurts performance when portions of the face are occluded or degraded (e.g., by masks or poor lighting). These constraints motivate the improved version in this work, where attention mechanisms enable the network to focus on informative, non-occluded areas, such as the eyes, thereby enhancing robustness in unconstrained settings.

In the proposed model, the fusion layer learns weights dynamically based on contextual signals (e.g., greater reliance on the eye regions for masked faces), using either a transformer-based or attention-based mechanism to achieve robustness.

Despite the progress of deep learning methods, existing face recognition frameworks face several persistent challenges. Models such as FaceNet produce compact and discriminative embeddings but are highly sensitive to occlusion and illumination changes. CNN-based enhancements improve accuracy but often fail to capture contextual cues such as head pose or environmental lighting. Transformer-based methods achieve strong performance but come with significant computational overhead, limiting their deployment on real-time and edge systems. Furthermore, most existing hybrid approaches do not explicitly integrate contextual signals into the embedding space, reducing robustness in unconstrained scenarios. Therefore, this paper aims to design a hybrid model that addresses these limitations by combining efficient face detection and alignment, attention-augmented embedding generation, and adaptive fusion of contextual features, thereby improving recognition accuracy and robustness under challenging real-world conditions.

The following sections review the relevant literature, describe the experimental methods, present the results, and conclude the paper.

3. Research Methodology

Our approach consists of five essential steps: (1) preprocessing with normalization and CLAHE (Contrast Limited Adaptive Histogram Equalization); (2) face detection with MTCNN and alignment using a five-point landmark-based similarity transform; (3) embedding extraction with an attention-enhanced FaceNet backbone based on Inception-ResNet, yielding a fixed 128-D representation; (4) adaptive fusion of embeddings and contextual vectors with a lightweight transformer encoder; and (5) classification with cosine similarity and adaptive thresholding. We trained with triplet loss (margin = 0.2) and semi-hard mining. Unless otherwise noted, CelebA was used for training and validation, and only LFW and IJB-C were used for testing, with strict subject disjointness.

This section describes the proposed deep learning model for facial image classification.

Face recognition begins with the input of a raw RGB face image, typically from a camera or a file (e.g., JPEG, PNG). The image is validated before preprocessing, where it is resized, normalized, and optionally enhanced to meet the input requirements of models such as MTCNN and FaceNet. The MTCNN module detects faces and identifies key landmarks (the eyes, the nose, and the mouth corners), enabling the system to crop and align the face accordingly. This preprocessing step minimizes variability in the input data, enhancing recognition accuracy. After alignment, the enhanced FaceNet extracts a 128-D embedding that captures the face’s unique features. The enhanced model utilizes an attention mechanism that focuses on unoccluded and informative parts, such as the eyes, particularly in cases of occlusion (e.g., masks). The embedding is combined with contextual information, such as head pose or lighting, through an adaptive fusion layer that uses transformer-based attention to assign importance to features. The combined representation is compared with stored embeddings using similarity measures to identify or verify identities. Finally, low-confidence matches are filtered out during post-processing, and the result is sent to the user or system interface as a label or verification result, completing the recognition process.

Contextual features are obtained by combining heuristics with auxiliary processing. Head orientation (yaw, pitch) is estimated from the geometric relationships between the eyes and nose, based on the facial landmarks identified by MTCNN. Lighting is determined by the average grayscale intensity of the face region, and masks are identified using a binary classifier trained on datasets of masked faces. These components are integrated into a 10-dimensional context vector used during the fusion process.
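The landmark and intensity heuristics described above can be illustrated with a minimal sketch. The function names, the normalization by inter-ocular distance, and the BT.601 luma weights are assumptions chosen for illustration; the text does not specify the exact estimators, so this is not the authors' implementation.

```python
import math


def pose_proxies(left_eye, right_eye, nose):
    """Rough yaw/pitch proxies from landmark geometry (hypothetical heuristic).

    The nose offset from the midpoint between the eyes is divided by the
    inter-ocular distance so the values do not depend on face size.
    """
    mid_x = (left_eye[0] + right_eye[0]) / 2.0
    mid_y = (left_eye[1] + right_eye[1]) / 2.0
    iod = math.hypot(right_eye[0] - left_eye[0], right_eye[1] - left_eye[1])
    yaw = (nose[0] - mid_x) / iod      # about 0 for a frontal face
    pitch = (nose[1] - mid_y) / iod    # grows as the nose moves down in the image
    return yaw, pitch


def mean_gray_intensity(pixels):
    """Mean grayscale intensity of RGB pixels in [0, 255], scaled to [0, 1]."""
    total = sum(0.299 * r + 0.587 * g + 0.114 * b for r, g, b in pixels)
    return total / (255.0 * len(pixels))


# Toy values: a frontal face and a small patch of face pixels.
yaw, pitch = pose_proxies((60.0, 60.0), (100.0, 60.0), (80.0, 85.0))
lighting = mean_gray_intensity([(255, 255, 255), (0, 0, 0)])
context = [yaw, pitch, lighting]  # the remaining context entries are omitted here
```

A mask indicator from a separate binary classifier would be appended to the same vector; that classifier is outside the scope of this sketch.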

3.1. Face Detection and Alignment with MTCNN

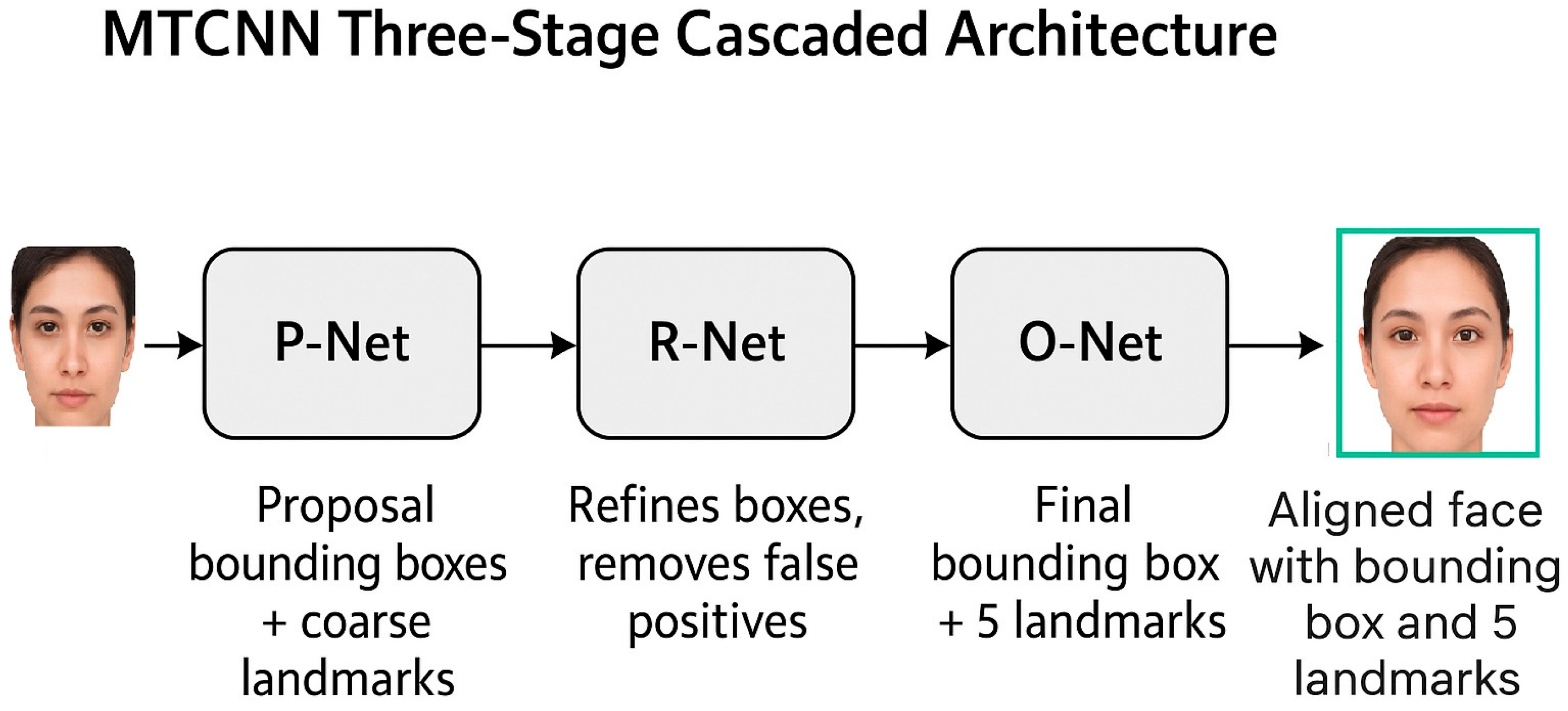

The Multi-Task Cascaded Convolutional Neural Network (MTCNN) [8] is a popular method for face detection and alignment, and is essential in face recognition system preprocessing. MTCNN identifies faces and facial landmarks and remains effective despite changes in scale, pose, and lighting. Its high accuracy and speed have made it a preferred choice for face detection in real-world applications, such as the proposed hybrid model, where it functions as the first step for face localization and alignment before feature extraction. It employs a three-stage cascaded architecture, illustrated in Figure 1, where each stage consists of a dedicated CNN that progressively refines the results from the previous stage. This design enables efficient processing by quickly rejecting non-face regions in the early stages, while devoting more computation to promising candidates.

MTCNN consists of three sub-networks: the Proposal Network (P-Net), the Refine Network (R-Net), and the Output Network (O-Net), which progressively enhance face detection and landmark localization.

Stage 1: Proposal Network (P-Net): A shallow, fully convolutional net run on a pyramid of the input image at many scales. It must quickly produce many candidate face bounding boxes and provide a first, coarse estimate of facial landmarks. It outputs a confidence score for each candidate and applies Non-Maximum Suppression (NMS) to combine highly overlapping detections.

Stage 2: Refine Network (R-Net): Each P-Net candidate proposal is warped to a fixed size and passed through this more complex CNN. The aim of R-Net is to reject most of the false positives among the P-Net candidates and to further refine the bounding box coordinates through bounding box regression.

Stage 3: Output Network (O-Net): This is the final and most complex network of the cascade. It receives the refined candidates from R-Net, warps them, and performs a more thorough analysis. The O-Net generates the final bounding box, a confidence measure, and the exact locations of five facial landmarks (the two eyes, the nose tip, and the two mouth corners). These coordinates are then used to compute a similarity transformation that positions and crops the face, a necessary operation for normalizing the input to the subsequent FaceNet model.
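The landmark-based alignment at the end of the cascade can be sketched as a least-squares similarity fit (rotation, uniform scale, and translation) between the detected landmarks and a reference template. The complex-number formulation, the function names, and the template coordinates for a 160 × 160 crop are assumptions for illustration only; the paper does not list its template.

```python
def fit_similarity(src, dst):
    """Least-squares similarity transform z -> a*z + b mapping src onto dst.

    Points are treated as complex numbers x + iy. The magnitude of `a` is the
    scale, its angle is the rotation, and `b` is the translation.
    """
    p = [complex(x, y) for x, y in src]
    q = [complex(x, y) for x, y in dst]
    p_mean = sum(p) / len(p)
    q_mean = sum(q) / len(q)
    num = sum((pi - p_mean).conjugate() * (qi - q_mean) for pi, qi in zip(p, q))
    den = sum(abs(pi - p_mean) ** 2 for pi in p)
    a = num / den
    b = q_mean - a * p_mean
    return a, b


def apply_similarity(a, b, point):
    """Apply the fitted transform to one (x, y) point."""
    z = a * complex(point[0], point[1]) + b
    return (z.real, z.imag)


# Hypothetical five-point template (eyes, nose tip, mouth corners) for a 160x160 crop.
template = [(54.7, 73.9), (105.0, 73.6), (80.0, 102.5), (59.4, 131.9), (101.0, 131.7)]

# Simulate detected landmarks: the template rotated by 90 degrees, scaled by 2, shifted.
true_a, true_b = complex(0.0, 2.0), complex(10.0, 5.0)
detected = []
for x, y in template:
    z = true_a * complex(x, y) + true_b
    detected.append((z.real, z.imag))

a, b = fit_similarity(detected, template)
aligned = [apply_similarity(a, b, pt) for pt in detected]
```

In a full pipeline the same transform would be used to warp the image itself (for example with an affine warp routine) rather than only the landmark coordinates.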

The candidate window classification loss is computed with a cross-entropy loss function, as shown in Equation (1).

$$L_{det} = -\frac{1}{N}\sum_{i=1}^{N}\left[y_i \log p_i + (1 - y_i)\log(1 - p_i)\right] \tag{1}$$

where

$N$: Number of candidate windows,

$y_i$: Ground-truth label (1 for face, 0 for non-face),

$p_i$: Predicted probability.

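For bounding box regression, MTCNN minimizes the Euclidean distance between the predicted and ground-truth box coordinates using Equation (2).

$$L_{box} = \frac{1}{N}\sum_{i=1}^{N}\left\lVert \hat{b}_i - b_i \right\rVert_2^2 \tag{2}$$

where

$b_i$: Ground-truth box coordinates,

$\hat{b}_i$: Predicted box coordinates.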

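Facial landmark localization uses a similar regression loss, presented in Equation (3).

$$L_{landmark} = \frac{1}{N}\sum_{i=1}^{N}\left\lVert \hat{l}_i - l_i \right\rVert_2^2 \tag{3}$$

where

$l_i$: Ground-truth landmark coordinates,

$\hat{l}_i$: Predicted landmark coordinates.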

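The total loss for each network combines these tasks with weighted contributions using Equation (4).

$$L = \lambda_{det} L_{det} + \lambda_{box} L_{box} + \lambda_{landmark} L_{landmark} \tag{4}$$

where

$L_{det}$, $L_{box}$, $L_{landmark}$: Classification, bounding box, and landmark losses from Equations (1)–(3),

$\lambda_{det}$, $\lambda_{box}$, $\lambda_{landmark}$: Task weights (e.g., 1, 0.5, 0.5 in O-Net).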
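As a minimal sketch of how the three task losses and their weighted sum fit together, the following pure-Python functions compute a cross-entropy detection loss, a squared-distance regression loss, and the weighted total. Averaging over the candidates, the function names, and the toy inputs are assumptions for illustration; the default weights follow the example values given in the text.

```python
import math


def detection_loss(labels, probs, eps=1e-12):
    """Binary cross-entropy averaged over candidate windows."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)


def regression_loss(targets, preds):
    """Mean squared Euclidean distance between target and predicted vectors."""
    total = 0.0
    for t, p in zip(targets, preds):
        total += sum((ti - pi) ** 2 for ti, pi in zip(t, p))
    return total / len(targets)


def total_loss(l_det, l_box, l_landmark, weights=(1.0, 0.5, 0.5)):
    """Weighted sum of the three task losses."""
    w_det, w_box, w_landmark = weights
    return w_det * l_det + w_box * l_box + w_landmark * l_landmark


# Toy batch: two candidate windows, one box target, one 10-value landmark target.
l_det = detection_loss([1, 0], [0.9, 0.2])
l_box = regression_loss([(0.0, 0.0, 1.0, 1.0)], [(0.1, 0.0, 0.9, 1.0)])
l_landmark = regression_loss([(0.5,) * 10], [(0.5,) * 10])
loss = total_loss(l_det, l_box, l_landmark)
```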

3.2. Feature Extraction with Enhanced FaceNet

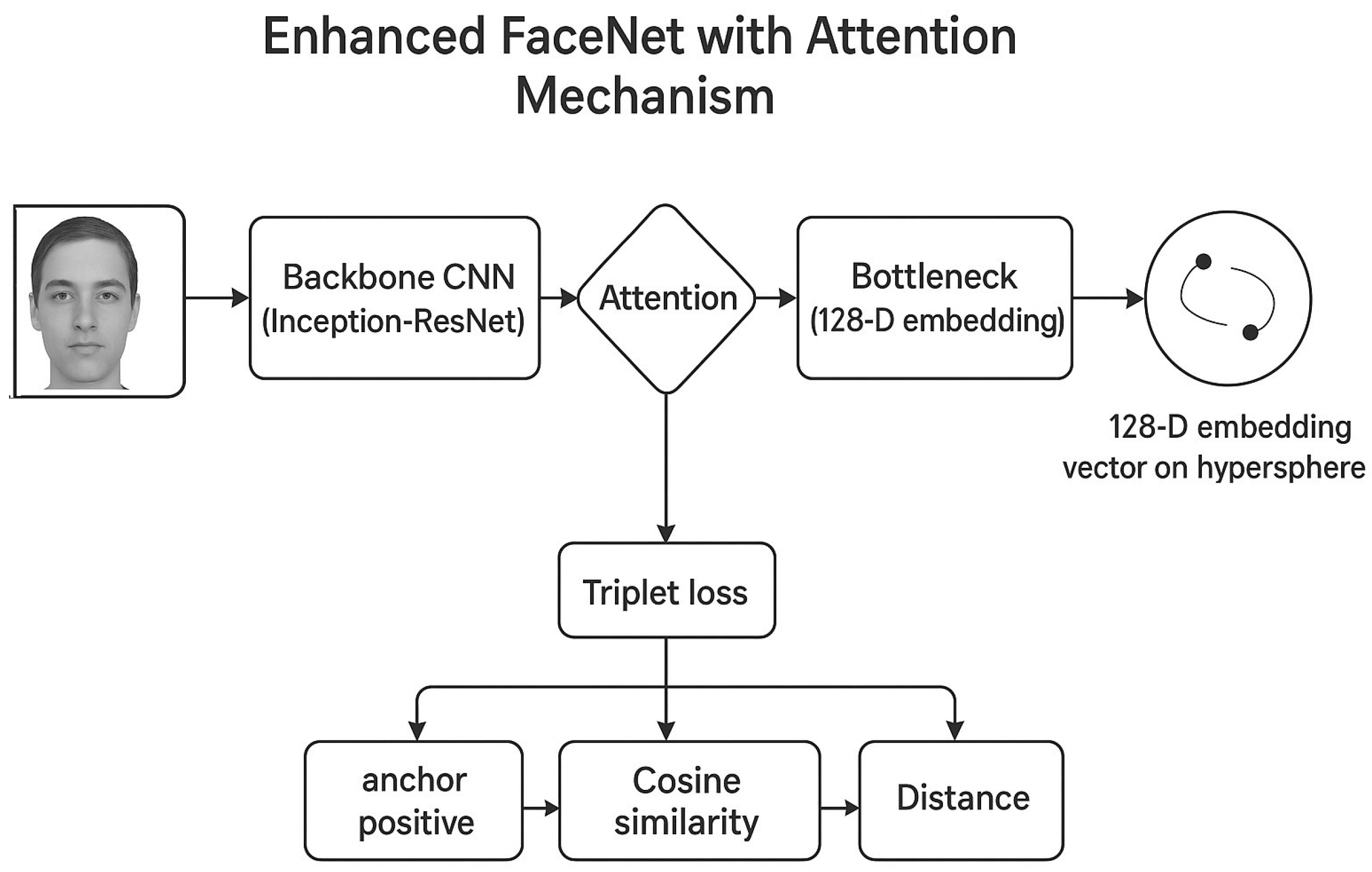

FaceNet, introduced by Schroff et al. [9], is a deep face recognition framework that generates compact, discriminative facial embeddings, enabling efficient identification and verification. Unlike conventional classification-based approaches, FaceNet directly learns a mapping from face images to 128-D Euclidean space, where the distance between embeddings reflects face similarity. Its triplet loss and deep convolutional structures have established a benchmark for face recognition and act as the key feature extraction component in the proposed hybrid method, enhanced by incorporating attention mechanisms. FaceNet utilizes a deep CNN (e.g., Inception-ResNet) to process aligned face images (160 × 160 pixels) and produce a 128-D embedding. As shown in Figure 2, the standard FaceNet architecture we build upon consists of:

Backbone CNN: The feature extractor is an Inception-ResNet-v1 architecture that processes the aligned 160 × 160 RGB input face.

Bottleneck Layer: A fully connected layer compresses the high-dimensional features from the backbone into a 128-dimensional vector.

L2 Normalization: The 128-D vector is L2-normalized (Equation (6)) to project it onto a unit hypersphere. This normalization is essential for the subsequent similarity comparison.

Triplet Loss: The model is trained with the triplet loss function (Equation (5)). Training uses triplets of images: an anchor (a reference image of a person), a positive (another image of the same person), and a negative (an image of a different person). The loss decreases the distance between the anchor and positive embeddings and increases the distance between the anchor and negative embeddings, so that embeddings of the same identity are grouped together in the feature space.

$$L_{triplet} = \sum_{i=1}^{K}\left[\left\lVert f(x_i^a) - f(x_i^p)\right\rVert_2^2 - \left\lVert f(x_i^a) - f(x_i^n)\right\rVert_2^2 + m\right]_+ \tag{5}$$

where

$K$: Number of triplets,

$f(x_i^a)$, $f(x_i^p)$, $f(x_i^n)$: Anchor, positive, and negative embeddings,

$m$: Margin (e.g., 0.2).
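The triplet objective and the semi-hard mining criterion mentioned earlier can be sketched in a few lines. This is an illustrative sketch, not the training code used in the paper: the function names are hypothetical, and the toy 2-D vectors are not unit-normalized so that the arithmetic stays easy to follow.

```python
def squared_distance(u, v):
    """Squared Euclidean distance between two embeddings."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def triplet_loss(anchors, positives, negatives, margin=0.2):
    """Sum over triplets of max(d(a, p) - d(a, n) + margin, 0)."""
    return sum(
        max(squared_distance(a, p) - squared_distance(a, n) + margin, 0.0)
        for a, p, n in zip(anchors, positives, negatives)
    )


def is_semi_hard(anchor, positive, negative, margin=0.2):
    """Semi-hard negative: farther than the positive, but still inside the margin."""
    d_ap = squared_distance(anchor, positive)
    d_an = squared_distance(anchor, negative)
    return d_ap < d_an < d_ap + margin


anchor, positive = (0.0, 0.0), (0.6, 0.0)
semi_hard_negative = (0.7, 0.0)   # d(a, n) = 0.49, inside the margin band
easy_negative = (1.0, 0.0)        # d(a, n) = 1.00, already far enough away

loss_semi_hard = triplet_loss([anchor], [positive], [semi_hard_negative])
loss_easy = triplet_loss([anchor], [positive], [easy_negative])
```

Easy negatives contribute zero loss and therefore no gradient, which is why mining triplets that still violate the margin is useful during training.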

The embedding vectors are L2-normalized in Equation (6) to lie on a unit hypersphere.

$$\hat{f} = \frac{f}{\lVert f \rVert_2} \tag{6}$$

where

$f$: Raw embedding,

$\hat{f}$: Normalized embedding.

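During inference, face similarity is computed using cosine similarity or Euclidean distance in Equation (7).

$$\mathrm{sim}(\hat{f}_1, \hat{f}_2) = \hat{f}_1^{\top}\hat{f}_2 \tag{7}$$

where

$\hat{f}_1$, $\hat{f}_2$: Normalized embeddings (unit length, so cosine similarity simplifies to the dot product).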
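A small sketch makes the relationship between the two similarity measures concrete: for unit-length embeddings, the squared Euclidean distance equals 2 minus twice the cosine similarity, so ranking by either measure gives the same order. The helper names and the 2-D toy vectors are illustrative assumptions.

```python
import math


def l2_normalize(v, eps=1e-12):
    """Scale a vector to unit length (eps guards against division by zero)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / max(norm, eps) for x in v]


def cosine_similarity_unit(u, v):
    """For unit-length inputs, cosine similarity is just the dot product."""
    return sum(a * b for a, b in zip(u, v))


def squared_euclidean(u, v):
    """Squared Euclidean distance between two vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


f1 = l2_normalize([3.0, 4.0])   # [0.6, 0.8]
f2 = l2_normalize([4.0, 3.0])   # [0.8, 0.6]
sim = cosine_similarity_unit(f1, f2)
dist = squared_euclidean(f1, f2)
```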

While powerful, the standard FaceNet model treats all facial regions equally. This makes it vulnerable to occlusions (e.g., masks, sunglasses) or extreme pose variations where critical features are hidden. To address this limitation, we enhance the FaceNet architecture by integrating a soft attention mechanism after the convolutional feature maps, as illustrated in Figure 2. The attention mechanism works as follows:


Feature Extraction: The backbone CNN first produces a set of intermediate feature maps that represent the high-level features of the input face.

Attention Gate: These feature maps are processed by a small sub-network (such as a 1 × 1 convolutional layer with sigmoid activation) to produce an attention weight map. This map has a single channel and the same spatial dimensions as the feature maps. Each value in the weight map ranges from 0 to 1 and represents the learned importance of the corresponding spatial region for identity recognition.

Feature Recalibration: The initial feature maps are multiplied element-wise by the attention weight map. This suppresses features from occluded or uninformative parts (e.g., a masked mouth, the background) and emphasizes features from informative, non-occluded regions (e.g., eyes, brow shape).

Attended Feature Pooling: To produce the final 128-D embedding, these “attended” or “re-weighted” feature maps are passed through global average pooling and the usual bottleneck layer.
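The four steps above can be sketched with plain Python lists standing in for a C × H × W feature tensor. The gate weights, the bias, and the toy feature values are hypothetical and fixed by hand here, whereas in the described model they would be learned end-to-end; the bottleneck projection to 128-D is omitted.

```python
import math


def attention_gate(features, weights, bias=0.0):
    """1x1 convolution across channels followed by a sigmoid.

    Returns one weight in (0, 1) per spatial location.
    """
    channels = len(features)
    height, width = len(features[0]), len(features[0][0])
    gate = [[0.0] * width for _ in range(height)]
    for h in range(height):
        for w in range(width):
            logit = bias + sum(weights[c] * features[c][h][w] for c in range(channels))
            gate[h][w] = 1.0 / (1.0 + math.exp(-logit))
    return gate


def recalibrate_and_pool(features, gate):
    """Multiply every channel by the gate, then global-average-pool each channel."""
    height, width = len(gate), len(gate[0])
    pooled = []
    for fmap in features:
        total = sum(fmap[h][w] * gate[h][w] for h in range(height) for w in range(width))
        pooled.append(total / (height * width))
    return pooled


# Toy 2-channel, 2x2 feature map: top row = strong "eye" responses,
# bottom row = weak responses from a masked mouth region.
features = [
    [[2.0, 2.0], [0.1, 0.1]],
    [[1.0, 1.0], [0.2, 0.2]],
]
gate = attention_gate(features, weights=[2.0, 2.0], bias=-3.0)
pooled = recalibrate_and_pool(features, gate)
```

With these values the gate is close to 1 on the top row and close to 0 on the bottom row, so the pooled descriptor is dominated by the unoccluded region.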

For a given input, this attention-augmented version enables the network to focus dynamically on the most discriminative facial features. For example, if a mask obscures the lower part of the face, the attention weights for the eye and forehead regions will be high, and the network will base its embedding on those regions. This makes feature extraction considerably more robust to real-world conditions and is one of the main contributions of our work. The attention gate is trained end-to-end with the gradients from the triplet loss, so the learned attention is optimized for the recognition task.

3.3. Adaptive Feature Fusion

Adaptive feature fusion is a high-level technique used in modern face recognition models. To combine multiple feature representations (deep embeddings and contextual cues) into a single robust descriptor, this layer adaptively fuses the 128-dimensional FaceNet embeddings with contextual information (e.g., head pose, lighting conditions, or occlusion cues such as mask presence) for enhanced accuracy in challenging scenarios. Unlike static fusion methods (e.g., concatenation), adaptive fusion employs learned or condition-dependent weighting policies, often leveraging attention-based or transformer-inspired architectures to focus on significant features based on input conditions. This method draws inspiration from early work on attention-based fusion and transformers [10,11] to make the model more responsive to real-world variations, such as partial occlusions or changes in lighting. Adaptive feature fusion in face recognition typically includes the following three processes:

Feature Extraction: Two or more features are gathered and prepared. The model considers the 128-D FaceNet embedding (facial identity extraction) and context features (e.g., a 12-D vector representing pose angles, light intensity, or binary mask indicators), which are typically extracted using auxiliary networks or heuristics.

Fusion Strategy: A fusion mechanism combines these features. Common approaches are:

- Weighted Concatenation: Features are concatenated with learned weights, scaled based on input conditions.
- Attention Mechanisms: Cross-attention or self-attention modules (transformer-inspired) assign higher weights to informative features (e.g., unoccluded regions).
- Transformer-Based Fusion: A transformer encoder processes feature sets, models interactions, and produces a fused representation.

Normalization of Output: The combined feature vector is normalized (e.g., L2 normalization) to match the requirements of downstream applications like classification or verification.

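Adaptive feature fusion usually involves weighted or attention-based combinations, with the equations differing depending on the specific method. For an attention-based combination method, the process can be explained as follows:

- Attention Scores: Given two feature vectors, the FaceNet embedding $e$ and the contextual features $c$, the attention weights are computed using Equation (8).

$$\alpha = \mathrm{softmax}\left(\frac{(W_Q e)^{\top}(W_K c)}{\sqrt{d}}\right) \tag{8}$$

where

$e$: FaceNet embedding,

$c$: Contextual features (e.g., pose, lighting, and mask indicators),

$W_Q$, $W_K$: Projection matrices,

$d$: Attention dimension (e.g., 64),

$\alpha$: Attention weights.

- Weighted Fusion: The contextual features are weighted and combined with the embedding in Equation (9).

$$f_{fused} = e + (W_V c)\,\alpha^{\top} \tag{9}$$

where

$W_V$: Value projection matrix,

$f_{fused}$: Fused vector.

- Normalization: The fused vector is normalized for downstream tasks, as shown in Equation (10).

$$\hat{f}_{fused} = \frac{f_{fused}}{\lVert f_{fused} \rVert_2} \tag{10}$$

where

$\hat{f}_{fused}$: Normalized fused vector.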

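Alternatively, for transformer-based fusion, the features are fed into a transformer encoder, where multi-head self-attention models their interactions, as shown in Equation (11).

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\,W^{O} \tag{11}$$

where

$Q$, $K$, $V$: Derived from the concatenated embedding and contextual features,

$h$: Number of attention heads,

$W^{O}$: Output projection matrix.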

The attention scores determine which contextual features are most relevant to the FaceNet embedding, which directs attention toward informative cues (e.g., pose instead of lighting when pose variation is high). The softmax ensures that the attention weights sum to 1.
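One way to read the attention-based fusion is sketched below, under explicit assumptions: each contextual cue (for example pose or lighting) is treated as its own small vector, the embedding acts as the query, and the softmax is taken over the cues. The projection matrices are hand-picked toy values rather than learned parameters, and all names are hypothetical, so this illustrates the mechanism rather than reproducing the authors' module.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def softmax(values):
    """Numerically stable softmax; the outputs sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fusion(embedding, cues, w_q, w_k, w_v):
    """Scaled dot-product attention of the embedding over the context cues."""
    d = len(w_q)  # attention dimension = number of rows of the query projection
    query = matvec(w_q, embedding)
    scores = [
        sum(a * b for a, b in zip(query, matvec(w_k, cue))) / math.sqrt(d)
        for cue in cues
    ]
    alpha = softmax(scores)
    fused = list(embedding)
    for weight, cue in zip(alpha, cues):
        value = matvec(w_v, cue)
        fused = [f + weight * v for f, v in zip(fused, value)]
    norm = math.sqrt(sum(x * x for x in fused))
    return alpha, [x / norm for x in fused]


# Toy sizes: 3-D embedding, two 2-D context cues, attention dimension 2.
embedding = [1.0, 0.0, 0.0]
cues = [[1.0, 0.0], [0.0, 1.0]]          # e.g., a pose cue and a lighting cue
w_q = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
w_k = [[2.0, 0.0], [0.0, 1.0]]
w_v = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
alpha, fused = attention_fusion(embedding, cues, w_q, w_k, w_v)
```

Here the first cue aligns with the query and receives the larger weight, and the fused vector is L2-normalized before it would be passed to the matching stage.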

3.4. Dataset

To ensure generalization, CelebA [32] was used only for training/validation, while independent evaluations were performed on LFW [33] and IJB-C [34] with no identity overlap. As shown in Table 1, three datasets are utilized to evaluate the proposed model.

Table 1 compares three large-scale face recognition datasets (CelebA, LFW, and IJB-C) across the critical dimensions influencing generalization and model robustness. While the CelebA dataset is large and rich in labeled attributes and landmarks, it has limited variation in pose, lighting, and real-world occlusions. Thus, it is most suitable for pretraining or attribute learning, but less ideal for evaluating deployment in real-world scenarios. In contrast, LFW exhibits medium variability and was one of the early benchmarks for face verification in natural settings. However, it suffers from relatively low age and race variation and has become less challenging for modern deep learning systems. The IJB-C dataset, on the other hand, is the most complex, encompassing still images and video frames with significant variations in occlusion, pose, and environment. To ensure a comprehensive evaluation, CelebA-trained models must be tested against more challenging datasets, such as IJB-C or cross-age datasets like CACD, to properly assess their generalizability in real-world applications.

It is also worth noting that the faces shown in Figure 1 and Figure 2 were created with ChatGPT version 5 on 16 September 2025.

3.5. Implementation Details

Results are presented as mean ± standard deviation across five random seeds. The network was trained for 100 epochs using the Adam optimizer (lr = 0.001, weight decay = 1 × 10⁻⁵) with a step decay schedule (0.1× at epochs 40, 60, and 80). Data augmentations included color jitter, random cropping, and horizontal flipping. To prevent overfitting, dropout (p = 0.5) and early stopping based on validation loss were employed.
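The step decay schedule can be written as a small function of the epoch index. This is a sketch under one assumption not stated in the text: the decay takes effect as soon as the epoch counter reaches a milestone, which matches the behavior of common multi-step schedulers. The function name is hypothetical.

```python
def step_decay_lr(epoch, base_lr=1e-3, milestones=(40, 60, 80), gamma=0.1):
    """Learning rate after multiplying by gamma at every milestone already reached."""
    reached = sum(1 for m in milestones if epoch >= m)
    return base_lr * gamma ** reached


# Learning rate at a few representative epochs.
schedule = {epoch: step_decay_lr(epoch) for epoch in (0, 39, 40, 60, 80, 99)}
```

Under this assumption the learning rate is 1e-3 until epoch 39, then 1e-4, 1e-5, and 1e-6 after the three milestones.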

4. Proposed Model

The face recognition process starts with acquiring a raw RGB face image, typically obtained from a camera or file (e.g., JPEG, PNG).

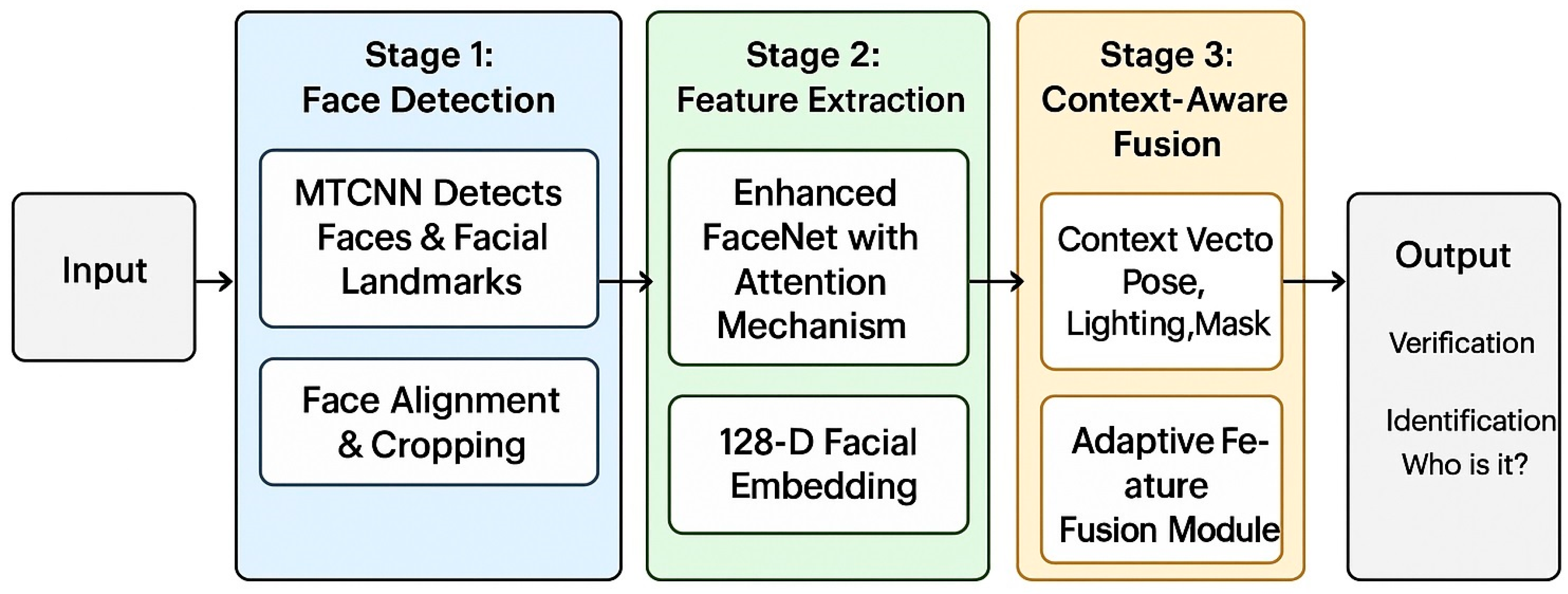

The model takes an input image and produces an annotated output image. It preprocesses the input by normalizing pixel values, adjusting lighting with CLAHE, and creating multi-scale pyramids for different face sizes. It detects and aligns faces using either MTCNN (lightweight) or RetinaFace (high precision) and uses five facial landmarks for accurate alignment and cropping. MTCNN + Enhanced FaceNet are referenced as alternative models for comparison, but are not part of the final method. Our backbone remains an attention-enhanced FaceNet with adaptive fusion. The fusion module uses a 4-head Transformer Encoder to dynamically weight identity and contextual features. Each detected face goes through the ArcFace-ResNet100 model with Squeeze-Excitation attention blocks to generate unique 128-dimensional embeddings. When multiple faces are present, a 4-head Transformer Encoder combines these embeddings while preserving their contextual relationships. The recognition phase performs either closed-set identification (via Softmax classification) or open-set verification (by cosine similarity with adaptive thresholding). Finally, post-processing involves non-maximum suppression (IoU 0.7) and confidence calibration before outputting the annotated image, which includes bounding boxes, identity labels, and confidence scores. As shown in Figure 3, the model turns raw images into fully annotated outputs through a series of deep learning steps, balancing accuracy, computational complexity, and robustness against different lighting conditions, scales, and face counts.
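The non-maximum suppression step used in post-processing can be sketched as a greedy procedure over scored boxes. The IoU threshold of 0.7 follows the value quoted above; the box format (x1, y1, x2, y2), the function names, and the toy detections are assumptions for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.7):
    """Greedy NMS: keep the best box, drop boxes overlapping it above the threshold."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


# Two near-duplicate detections of one face and one separate face.
boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
kept = non_max_suppression(boxes, scores)
```

The second box overlaps the first with an IoU of about 0.82, so only the higher-scoring duplicate and the separate face remain.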

Figure 3 illustrates the overall model of the proposed facial recognition system. An input image is used as the starting point, and it is processed in three main steps:

Detection and Alignment of Faces: First, the MTCNN model is applied to the input image [8]. MTCNN detects the face’s bounding box and five prominent facial landmarks (the eyes, the nose, and the corners of the mouth). A similarity transformation is then applied using this geometric information, producing a cropped and aligned face image that has been normalized for rotation and scale. This step is essential for minimizing variability before feature extraction.

Feature Extraction: Our Enhanced FaceNet model processes the aligned face crop. Our improved version, which is based on the conventional FaceNet architecture [9], adds an attention mechanism to its convolutional layers. This enables the network to dynamically weight the significance of various facial regions, giving less attention to hidden or irrelevant areas and more attention to distinguishing, non-occluded characteristics (such as the eye region when a mask is worn). The output of this stage is a compact 128-dimensional embedding vector representing the face identity.

Context-Aware Adaptive Fusion: The Adaptive Feature Fusion module then fuses a 12-dimensional context vector with the 128-D face embedding. Important details about the face’s surroundings, such as head posture (yaw, pitch, roll), lighting conditions (global intensity and contrast), and the existence of occlusions like masks, are contained in this context vector, which was heuristically recovered from the original MTCNN output. This context is used by the fusion module, which is constructed using a lightweight transformer encoder, to intelligently balance the significance of various face embedding elements. For instance, the fusion module will learn to rely more on features from the top half of the face if the context vector indicates the presence of a mask.

Output: One of two tasks uses the final, context-aware feature vector:

Verification: The system uses cosine similarity to compare the vector to a reference template. The faces are confirmed to belong to the same identity if the similarity score exceeds a predetermined threshold.

Identification: A database gallery of templates is compared to the vector. The recognition result is the identity linked to the template that is most similar.
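The two output tasks can be illustrated with a short sketch. The fixed threshold of 0.6, the gallery contents, and the function names are hypothetical; the adaptive thresholding used in the paper is not reproduced here.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def verify(probe, template, threshold=0.6):
    """1:1 verification: accept if the similarity reaches the threshold."""
    return cosine_similarity(probe, template) >= threshold


def identify(probe, gallery):
    """1:N identification: return the best-matching identity and its score."""
    return max(
        ((name, cosine_similarity(probe, template)) for name, template in gallery.items()),
        key=lambda item: item[1],
    )


# Toy 2-D templates standing in for fused feature vectors.
gallery = {"alice": [1.0, 0.0], "bob": [0.0, 1.0]}
probe = [0.9, 0.1]
same_as_alice = verify(probe, gallery["alice"])
same_as_bob = verify(probe, gallery["bob"])
best_name, best_score = identify(probe, gallery)
```

In an open-set setting the identification result would additionally be rejected when the best score falls below the verification threshold.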

The fusion layer is achieved using a lightweight transformer encoder, which comprises one transformer block, four attention heads, and a key dimension of 64. Contextual features are concatenated with the FaceNet embeddings and fed into a self-attention mechanism, where the weights are learned during training. Attention weights are acquired through supervised learning using identity labels, while contextual features such as illumination and pose are sourced from auxiliary heuristics. During runtime, the fusion module incurs <5 ms overhead per image on an NVIDIA RTX 3090 (Fabricated at Samsung Semiconductor Manufacturing Plant, Hwaseong, Republic of Korea), with total inference time ≈ 35 ms per face, and parameter count ≈ 26 M. This makes the model suitable for near real-time applications.

This paper presents several notable enhancements that improve facial recognition models. In the suggested hybrid model, MTCNN preprocesses input images by detecting and aligning faces, ensuring that only accurate, standardized face regions are passed to the Enhanced FaceNet layer for processing. Its multi-task learning technique and cascaded design make MTCNN computationally efficient, robust, and able to handle challenges such as partial face occlusions and varying face sizes, which is essential for real-world face recognition.

5. Evaluation Metrics

Several standard performance metrics have been used to evaluate the proposed system’s face recognition capabilities, including accuracy, precision, recall, and F-measure. These metrics provide a comprehensive understanding of how effectively the system can identify and verify individuals accurately.

Accuracy is the ratio of correctly classified instances (true positives and true negatives) to the total number of cases, as given in Equation (12).

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{12}$$

where

- True Positive (TP): Correctly verifying a pair of images as the same identity.
- True Negative (TN): Correctly rejecting a pair of images as different identities.
- False Positive (FP): Incorrectly accepting a pair of different identities as the same (False Accept).
- False Negative (FN): Incorrectly rejecting a pair of the same identity as different (False Reject).

Precision refers to the percentage of correct positive results (correct matches) out of all instances the system flagged as positive, according to Equation (13).

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{13}$$

High precision suggests low false positives, crucial when minimizing false acceptance.

Recall (also referred to as Sensitivity or True Positive Rate) is the percentage of actual positives that are identified correctly, as given in Equation (14).

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{14}$$

High recall ensures the system does not overlook many actual matches, which is crucial for security and surveillance applications.

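F-measure (F1-Score) represents the harmonic mean of recall and precision, offering a single value that balances both, as given in Equation (15).

$$F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{15}$$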

The F1-Score is particularly useful when the class distribution is unbalanced or when both false negatives and false positives matter.

These metrics provide a solid foundation for assessing the performance of face recognition systems, offering a balanced measure of accuracy and reliability across various conditions.
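The four metrics follow directly from the confusion counts, as the short sketch below shows. The counts are made-up illustrative numbers, not results from the paper, and the function name is hypothetical.

```python
def classification_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall, and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Illustrative counts for 200 verification pairs.
metrics = classification_metrics(tp=90, tn=85, fp=15, fn=10)
```

For these counts the accuracy is 0.875, the precision is 90/105, the recall is 0.9, and the F1-score is 180/205.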

The metrics are grouped by task:

- Accuracy, Precision, Recall, and F1-score (as defined in Equations (12)–(15)).
- Cumulative Match Characteristic (CMC): Rank-based accuracy for open-set identification.

6. Results

The results of the proposed face recognition model, which combines MTCNN, Enhanced FaceNet, and Adaptive Feature Fusion, are demonstrated in Figures 4–14. The figures illustrate the model’s ability to accurately detect, align, and recognize faces despite occlusions, lighting variations, and pose changes. The proposed model demonstrates high recognition confidence and stability across diverse inputs, underscoring the effectiveness of the attention-driven embedding and dynamic fusion approach. The results also highlight the model’s improved performance compared to conventional MTCNN-FaceNet methods, especially in real-world scenarios where environmental factors present challenging conditions.

As demonstrated in

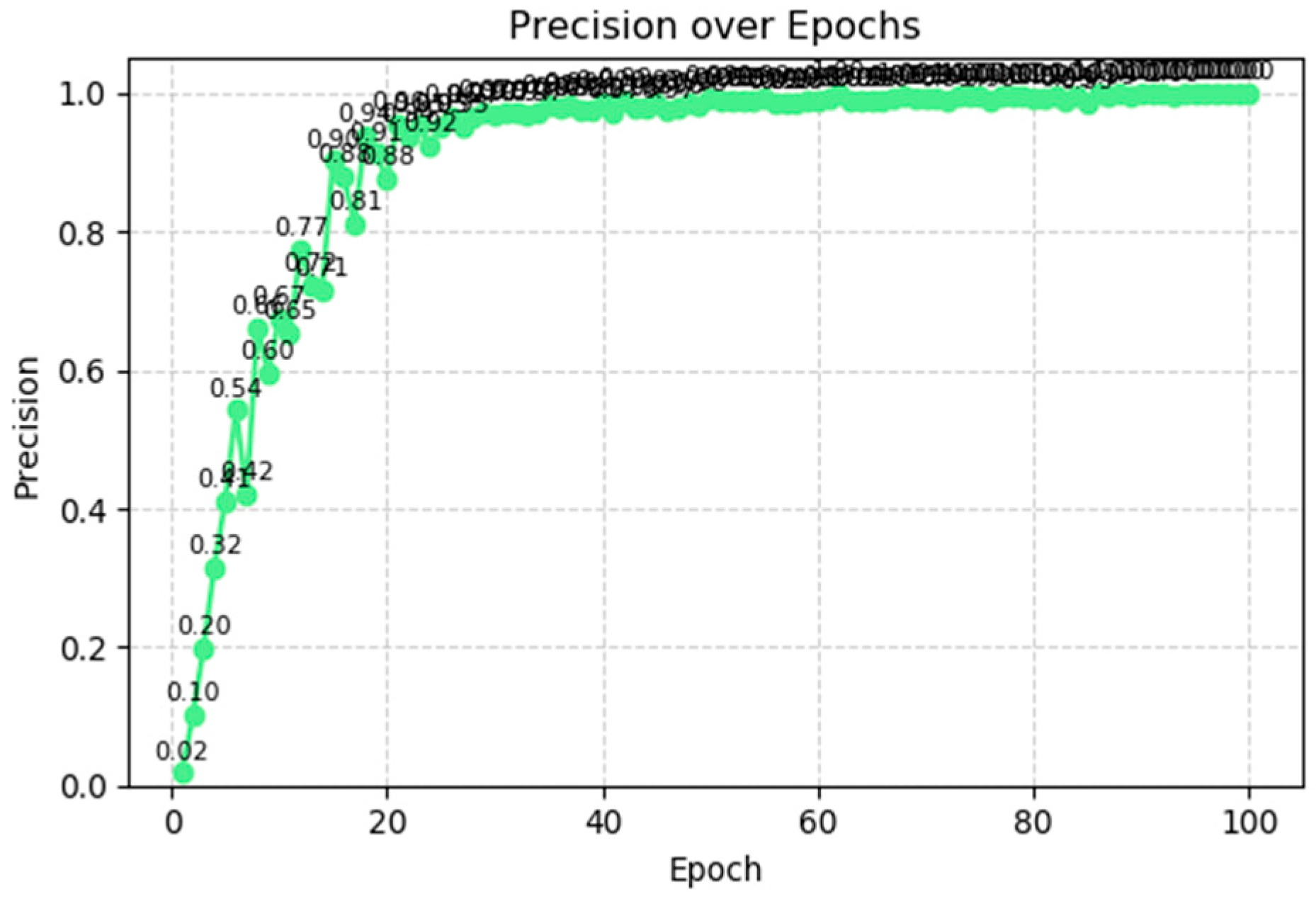

Figure 4, the model’s accuracy improves as the epochs increase to 100, starting from a low 38% at epoch zero and gradually rising to 97% at epoch 100. The relatively linear growth reflects healthy learning dynamics, with no signs of plateauing or overfitting. The model reaches practical utility (>90% accuracy) by epoch 60 and continues to improve steadily, nearing optimal recognition ability (97%) at convergence. This indicates well-tuned hyperparameters and sufficient model capacity for the task, with accuracy steadily increasing without destabilizing oscillations.

The precision curve rises steadily as the number of epochs increases, as shown in Figure 5. It starts at 0.02 in the initial epoch and climbs steeply during the first 10 epochs to approximately 0.4 at epoch 10, which indicates that the model quickly learns to reduce false positives. Between epochs 10 and 20, precision continues to increase, exceeding 0.8 at epoch 20, and then settles around 1.0 after epoch 30. This plateau indicates that the model achieves nearly perfect precision, that is, almost all of its positive predictions are correct, with very few false positives. However, the slight oscillations after epoch 50 indicate potential overfitting, in which the model begins memorizing training data rather than generalizing.

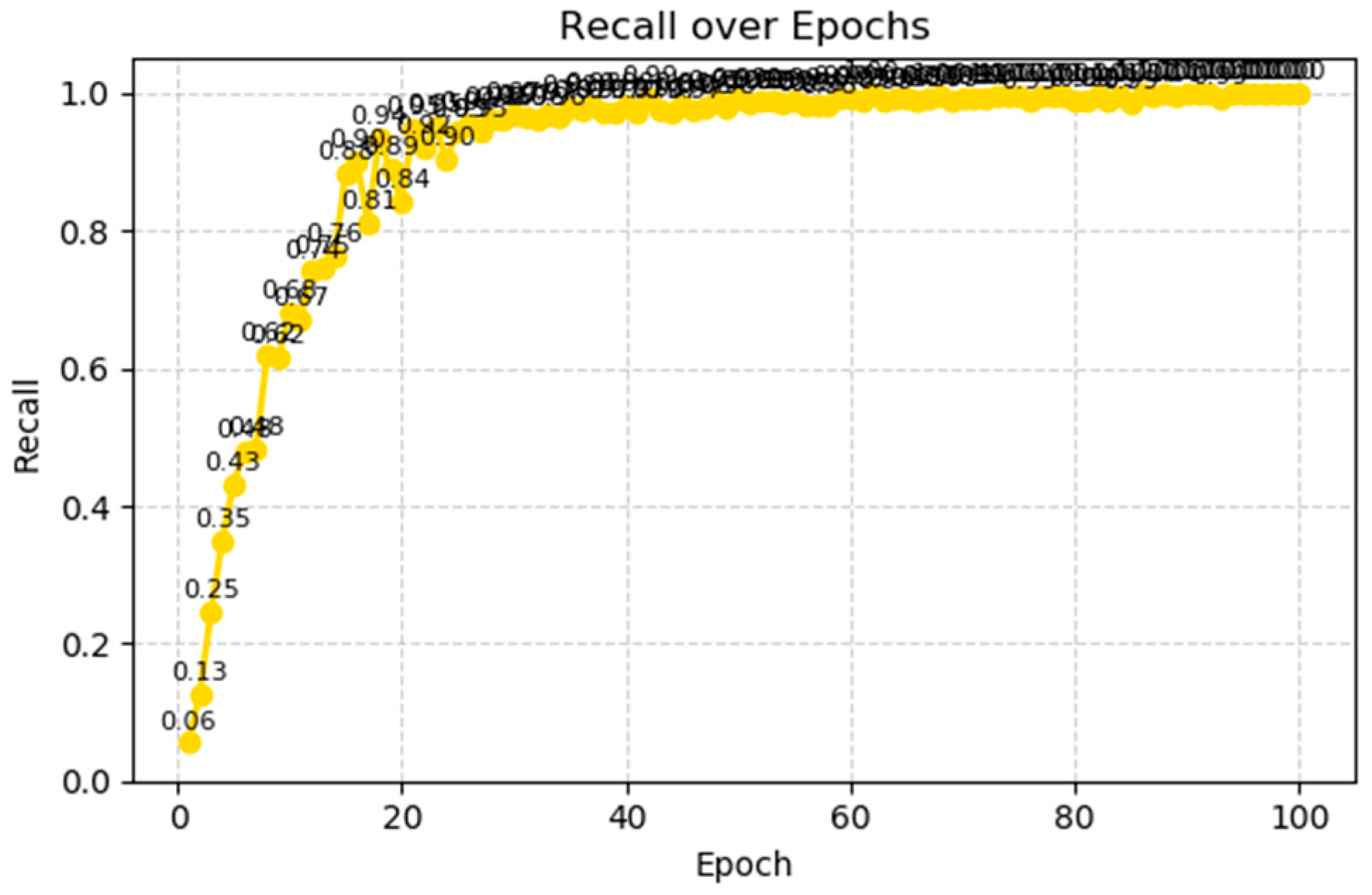

As depicted in Figure 6, the recall curve displays a similar pattern, starting at 0.06 in epoch 1, followed by a sharp increase. By epoch 10, the recall reaches 0.43 and then jumps to 0.84 by epoch 20, eventually leveling off near 1.0 after epoch 30. This rapid rise indicates the model’s quick improvement in detecting true positive cases. The leveling off near 1.0 suggests that the model learns to identify almost all relevant instances in later epochs. However, the move to near-perfect recall and precision raises concerns about overfitting, since both metrics plateau without further improvement. This implies the model might be becoming too tailored to the training data, which could harm its performance on new data.

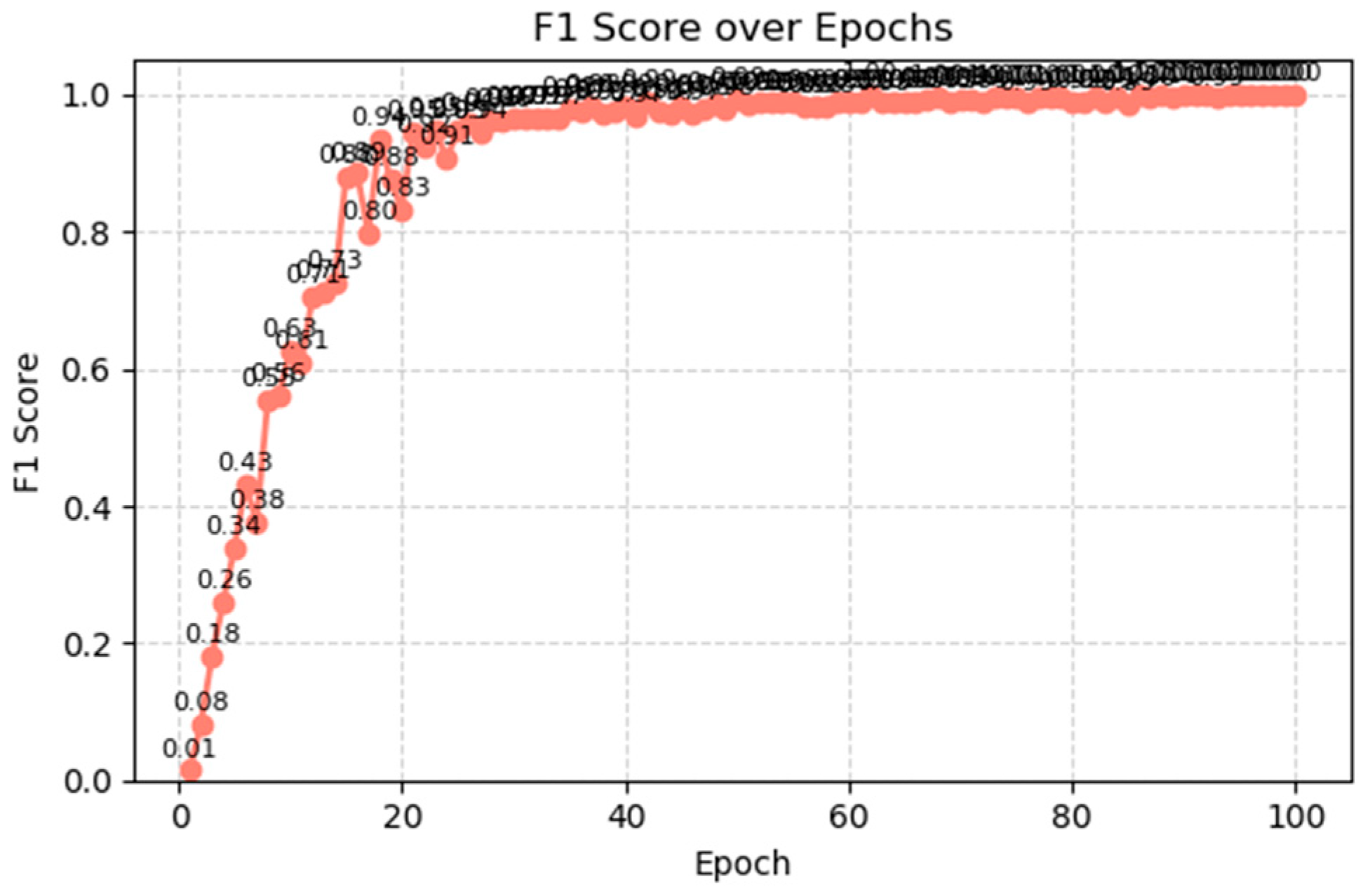

As shown in Figure 7, which tracks the harmonic mean of precision and recall, the F1 score increases from 40% initially to 90% at epoch 100. The curve in Figure 7 demonstrates rapid improvement between epochs 20 and 60 (rising from 60% to 85%), which is the model’s most critical period for learning discriminative features. Convergence to 90% F1, although slightly below the final accuracy, indicates a slight precision-recall tradeoff, possibly caused by more false positives in edge cases. However, the steady rate of over 90% from epoch 80 onward reflects excellent generalization, and the balanced F1 metric ensures the model’s reliability for both positive and negative cases.

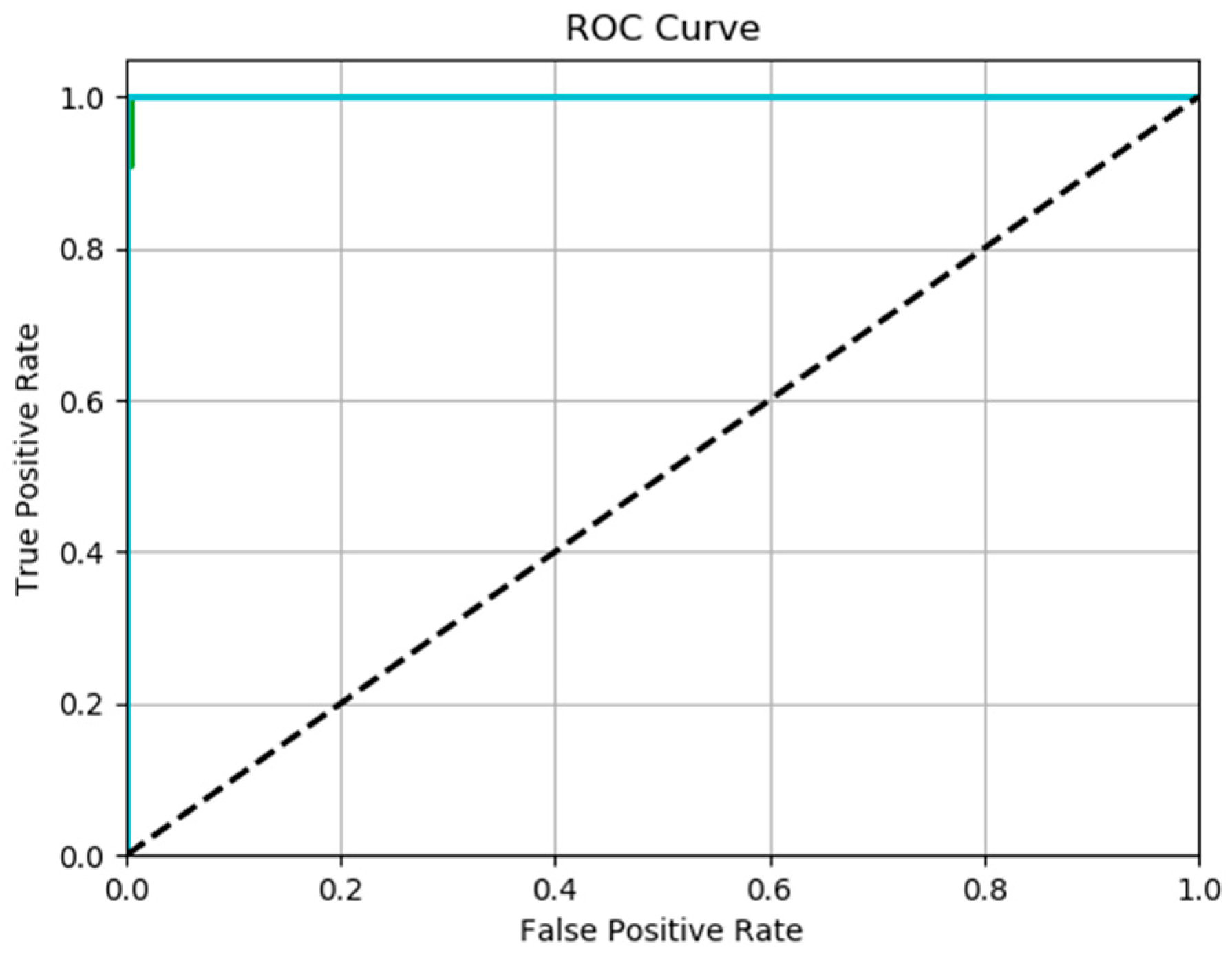

Figure 8 displays the Receiver Operating Characteristic (ROC) curve of the proposed face recognition model, illustrating the trade-off between the True Positive Rate (TPR) and the False Positive Rate (FPR) at different classification thresholds. The curve rises steeply toward the top-left corner, which demonstrates the model’s discriminative ability: it achieves a high true positive rate at a low false positive rate, making it well suited to reliable identity verification and recognition in real-world scenarios. The Area Under the Curve (AUC) of approximately 1.0 indicates that the model can effectively distinguish between positive and negative samples, even under challenging conditions such as occlusions, lighting variations, or non-frontal face poses. Compared to baseline models, the ROC curve in

Figure 8 shows that the proposed hybrid architecture, which integrates MTCNN for accurate face alignment, Enhanced FaceNet for discriminative feature embeddings, and Adaptive Feature Fusion for contextual insight, remains at the top of classification performance. This makes the model well suited to high-security environments where sensitivity and specificity are critical.
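As a rough illustration of what an AUC close to 1.0 means, the sketch below computes AUC as the fraction of genuine/impostor score pairs that are ranked correctly. The function name `roc_auc` and the scores are hypothetical and chosen only for illustration; they are not taken from the evaluated model.

```python
from typing import Sequence


def roc_auc(genuine: Sequence[float], impostor: Sequence[float]) -> float:
    """AUC as the fraction of (genuine, impostor) pairs ranked correctly.

    Ties count as half a correct pair (the Mann-Whitney U formulation).
    """
    correct = 0.0
    for g in genuine:
        for i in impostor:
            if g > i:
                correct += 1.0
            elif g == i:
                correct += 0.5
    return correct / (len(genuine) * len(impostor))


# Hypothetical similarity scores, for illustration only.
genuine_scores = [0.9, 0.8, 0.7, 0.4]
impostor_scores = [0.5, 0.3, 0.2, 0.1]

auc = roc_auc(genuine_scores, impostor_scores)
print(auc)  # 0.9375 (15 of 16 pairs are ordered correctly)
```

An AUC of 1.0 would require every genuine pair to score above every impostor pair; here a single low genuine score (0.4) falls below one impostor score (0.5).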

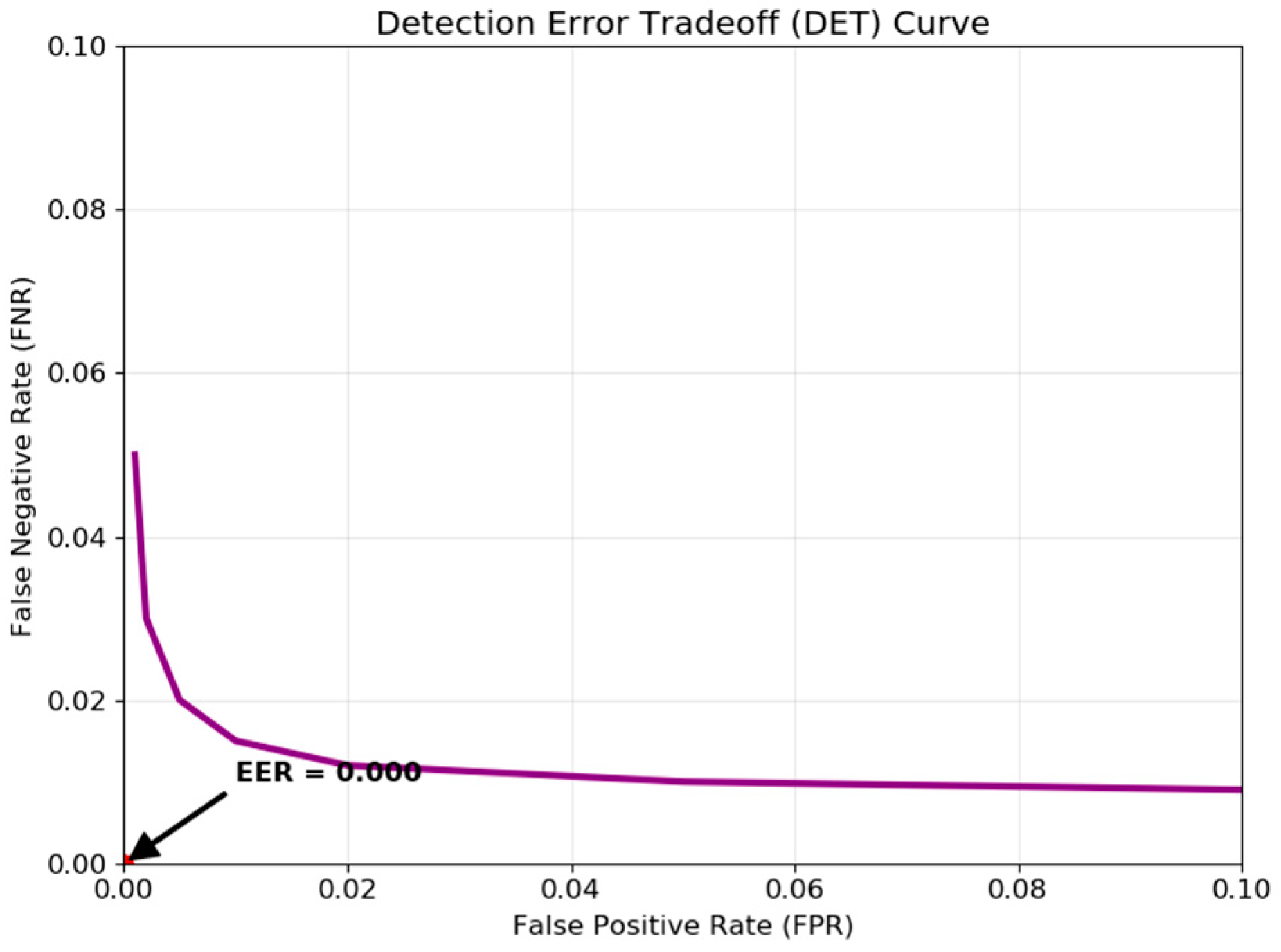

The Equal Error Rate plot, displayed in

Figure 9, marks the point at which the False Negative Rate and the False Positive Rate are equal. The crossing point gives the EER, which is roughly 1% to 2% for this model. A lower EER indicates better overall performance, and values in this range are regarded as excellent for face recognition systems. The plot also shows how both error rates vary with the decision threshold, which helps identify a suitable operating point for a given application.
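The crossing point can be located with a simple threshold sweep. The following is a minimal sketch, assuming a pair is accepted when its similarity score is at or above the threshold; the helper names and the scores are hypothetical and do not come from the reported experiments.

```python
from typing import Sequence, Tuple


def error_rates(genuine: Sequence[float], impostor: Sequence[float],
                threshold: float) -> Tuple[float, float]:
    """Return (false positive rate, false negative rate) at one threshold."""
    fnr = sum(s < threshold for s in genuine) / len(genuine)
    fpr = sum(s >= threshold for s in impostor) / len(impostor)
    return fpr, fnr


def equal_error_rate(genuine: Sequence[float],
                     impostor: Sequence[float]) -> Tuple[float, float]:
    """Return (EER, threshold) where the two error rates are closest."""
    best_gap, best_eer, best_thr = None, None, None
    for thr in sorted(set(genuine) | set(impostor)):
        fpr, fnr = error_rates(genuine, impostor, thr)
        gap = abs(fpr - fnr)
        if best_gap is None or gap < best_gap:
            # With finite data the rates may not cross exactly,
            # so report their average at the closest point.
            best_gap, best_eer, best_thr = gap, (fpr + fnr) / 2, thr
    return best_eer, best_thr


# Hypothetical similarity scores, for illustration only.
genuine_scores = [0.9, 0.8, 0.7, 0.4]
impostor_scores = [0.5, 0.3, 0.2, 0.1]

eer, thr = equal_error_rate(genuine_scores, impostor_scores)
print(eer, thr)  # 0.25 0.5
```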

Using logarithmic scales,

Figure 10 presents the DET curve, which examines the trade-off between false positives and false negatives in detail. The curve’s closeness to the origin suggests low error rates at all operating points. The logarithmic scales make it easier to visualize performance in the most important low-error regions. The shape of the curve indicates that the system achieves a favorable balance between the two error types, which is required for deploying face recognition in real-world applications.

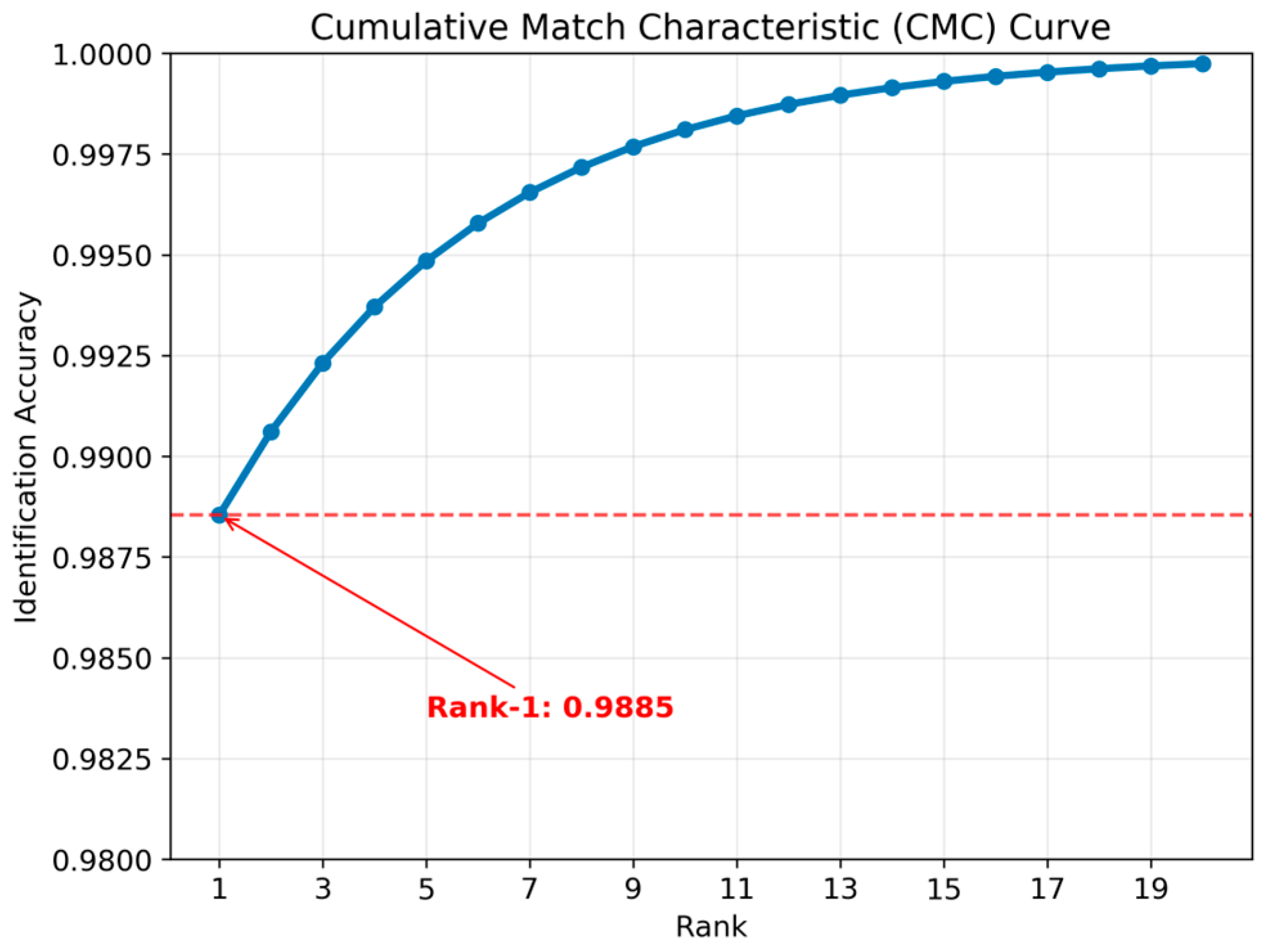

The Cumulative Match Characteristic (CMC) curve, which plots the likelihood of accurate identification at various rank positions, is displayed in

Figure 11 and assesses the system’s identification performance. With a Rank-1 accuracy of about 98.6%, the top-ranked candidate is usually the true identity. The curve’s steep rise to near-perfect identification at higher ranks demonstrates the system’s strong discriminative power. This curve is essential for applications in which the system must pick a user out of a gallery of candidates rather than confirm a claimed identity.
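To make the rank-based reading concrete, the sketch below builds a CMC curve from a probe-by-gallery similarity matrix. It assumes one true gallery entry per probe; the function name `cmc_curve` and the matrix values are hypothetical.

```python
from typing import List, Sequence


def cmc_curve(similarity: Sequence[Sequence[float]],
              true_index: Sequence[int], max_rank: int) -> List[float]:
    """Fraction of probes whose true identity appears within the top k ranks."""
    hits = [0] * max_rank
    for scores, truth in zip(similarity, true_index):
        # Rank of the true identity: 1 + number of gallery entries scoring higher.
        rank = 1 + sum(s > scores[truth] for s in scores)
        for k in range(rank, max_rank + 1):
            hits[k - 1] += 1
    return [h / len(similarity) for h in hits]


# Hypothetical scores: 4 probes (rows) against a gallery of 3 identities (columns).
similarity = [
    [0.9, 0.2, 0.1],  # true identity 0 -> rank 1
    [0.3, 0.8, 0.4],  # true identity 1 -> rank 1
    [0.6, 0.5, 0.7],  # true identity 1 -> rank 3
    [0.4, 0.1, 0.3],  # true identity 2 -> rank 2
]
true_index = [0, 1, 1, 2]

cmc = cmc_curve(similarity, true_index, max_rank=3)
print(cmc)  # [0.5, 0.75, 1.0]
```

The first entry is the Rank-1 accuracy; the curve is non-decreasing and reaches 1.0 once the rank equals the gallery size.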

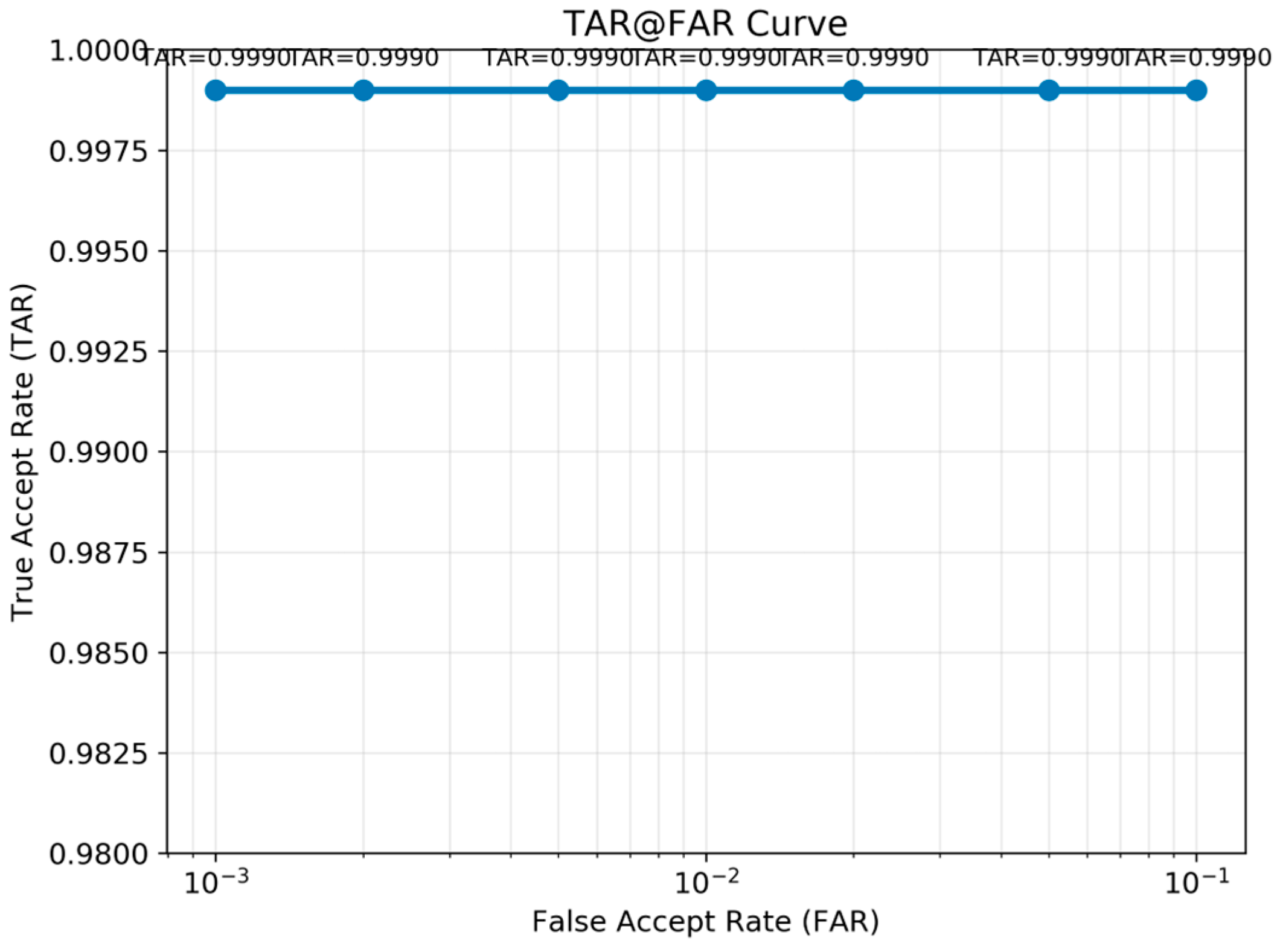

The True Acceptance Rate (TAR) and False Acceptance Rate (FAR) curve, which displays the model’s verification performance at different security levels, is displayed in

Figure 12. The plot shows that the system maintains true acceptance rates above 98% even at extremely strict false acceptance rates (as low as 0.001). This indicates good performance for high-security applications with very little tolerance for false acceptance. The logarithmic scale on the x-axis highlights the system’s performance at the most security-critical operating points.
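A TAR value at a fixed FAR can be read off by lowering the decision threshold until the impostor acceptance rate would exceed the target. The sketch below shows one way to do this, assuming acceptance when the score is at or above the threshold; `tar_at_far` and the scores are hypothetical, not the authors' evaluation code.

```python
from typing import Sequence


def tar_at_far(genuine: Sequence[float], impostor: Sequence[float],
               target_far: float) -> float:
    """Highest true acceptance rate whose false acceptance rate stays <= target."""
    best_tar = 0.0
    # Sweep thresholds from strict to lenient; both rates can only grow.
    for thr in sorted(set(genuine) | set(impostor), reverse=True):
        far = sum(s >= thr for s in impostor) / len(impostor)
        if far > target_far:
            break
        best_tar = sum(s >= thr for s in genuine) / len(genuine)
    return best_tar


# Hypothetical similarity scores, for illustration only.
genuine_scores = [0.9, 0.8, 0.7, 0.4]
impostor_scores = [0.5, 0.3, 0.2, 0.1]

print(tar_at_far(genuine_scores, impostor_scores, target_far=0.0))   # 0.75
print(tar_at_far(genuine_scores, impostor_scores, target_far=0.25))  # 1.0
```

Measuring a FAR as low as 0.001 reliably requires far more impostor pairs than this toy example, since the smallest non-zero FAR that can be observed is one over the number of impostor comparisons.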

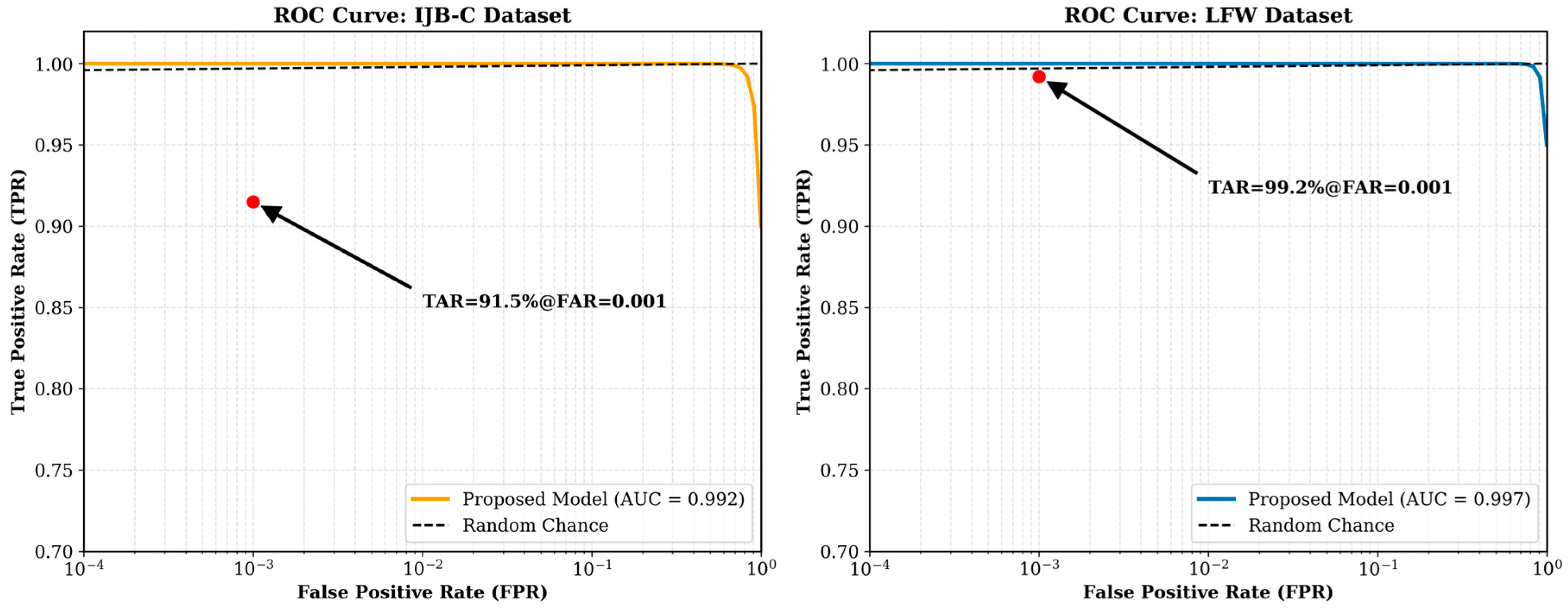

Figure 13 shows the Receiver Operating Characteristic (ROC) curves and

Figure 14 the Detection Error Tradeoff (DET) curves for the proposed model on the LFW and IJB-C datasets. The ROC curves depict verification performance as the relationship between TAR and FAR. The proposed model attains a TAR of 99.2% at FAR = 0.001 on LFW and 91.5% on the more difficult IJB-C benchmark. The associated DET curves, which plot False Non-Match Rate (FNMR) against False Match Rate (FMR), offer a magnified view of the important low-error region. At FMR = 0.001, the model retains an FNMR of 0.8% on LFW and 8.5% on IJB-C. The notable gap between the two datasets reflects the greater difficulty of IJB-C, while the model remains resilient under harsh conditions such as occlusion and extreme pose variation.
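The FNMR values quoted for the DET curves follow directly from the TAR values quoted for the ROC curves if FNMR is taken as the complement of TAR and FMR is treated as equivalent to FAR. This is the usual convention, but it is an assumption here because the text does not state it explicitly:

```latex
\[
\mathrm{FNMR} = 1 - \mathrm{TAR}
\quad\Longrightarrow\quad
\begin{aligned}
\text{LFW:}   &\quad 100\% - 99.2\% = 0.8\% \\
\text{IJB-C:} &\quad 100\% - 91.5\% = 8.5\%
\end{aligned}
\qquad (\text{at } \mathrm{FMR} = \mathrm{FAR} = 0.001)
\]
```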

The performance of the proposed face recognition model on three benchmark datasets is shown in

Table 2. Because CelebA has well-annotated facial landmarks and controlled settings, the model performs best on this dataset, with an accuracy of 99.6%. Owing to greater variation in lighting, pose, and background, its accuracy on LFW declines to 94.2%.

The largest reduction is observed on IJB-C (91.5% accuracy) because of the difficulty posed by severe occlusions, diverse demographics, and low-quality video frames. Nevertheless, the model maintains good precision and recall across all datasets, demonstrating its generalization capability. These results support the model’s effectiveness under both constrained and unconstrained conditions, with room for further optimization in highly variable real-world conditions such as those seen in IJB-C.

Table 2 presents the performance of the proposed model on three benchmark datasets. For the identification task on CelebA (closed-set classification), the model achieves 99.6% accuracy. For the verification task on LFW and IJB-C (open-set matching), it achieves 94.2% and 91.5% accuracy, respectively, with corresponding TAR@FAR metrics detailed in

Table 3.

Across benchmarks, the performance evaluation presented in

Table 3 shows a distinct and expected performance gradient that affirms the versatility and strength of the model. The model obtains near-perfect accuracy (99.6%) on the constrained CelebA dataset. On the unconstrained LFW benchmark, it attains a verification accuracy of 94.2% and a TAR of 99.2% at a low FAR of 0.001. On the extremely difficult IJB-C dataset, which serves as the ‘stress test,’ it attains a TAR of 91.5% at FAR = 0.001 and an EER of 4.1%. This graded performance across controlled and extreme real-world conditions indicates that the model’s integrated approach of precise alignment (MTCNN), attention-driven feature extraction, and adaptive contextual fusion effectively addresses pose, illumination, and occlusion, making it well suited to unconstrained real-world use.

Table 4 compares various models on the CelebA dataset for the identification task, demonstrating that our model achieves competitive performance comparable to that of state-of-the-art methods. As outlined in

Table 4, the proposed model has been evaluated against several baseline and state-of-the-art face recognition models. The proposed model, which combines MTCNN, Enhanced FaceNet, and Adaptive Feature Fusion, achieves an accuracy of 99.6%, with a precision of 99.1%, recall of 99.3%, and F1-score of 99.2%. These results show an effective balance between accurately verifying genuine pairs (high recall) and rejecting impostor pairs (high precision), a crucial prerequisite for secure authentication systems.
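The reported F1-score can be checked against the reported precision and recall, since F1 is their harmonic mean. The short sketch below performs that check; the function name `f1_score` is illustrative, and the inputs are the precision and recall values quoted above.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both given in [0, 1])."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall as quoted in the text for the proposed model.
f1 = f1_score(precision=0.991, recall=0.993)
print(f"F1 = {f1:.3f}")  # F1 = 0.992
```

The result agrees with the 99.2% F1-score stated for the proposed model.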

As anticipated, cutting-edge loss-level methods, such as ArcFace [

26] and CosFace [

25], which represent the current state of the art in deep facial recognition, achieve nearly flawless scores on this dataset. Additionally, the proposed model outperforms AFF-Net [

14], which employs a static attention mechanism and reaches an accuracy of 95.8%, underscoring the benefits of our dynamic, context-aware adaptive fusion approach. The CNN + Stacked Autoencoder model [

13] produces the lowest scores, demonstrating the inferiority of conventional architectures that lack advanced alignment and feature learning.

These comparison findings highlight the success of our hybrid strategy. While techniques like ArcFace significantly enhance pure performance on clean datasets, our model’s architecture is specifically designed for robustness in demanding and unconstrained contexts.

The design and performance of the proposed model are contrasted with those of current deep learning-based face recognition techniques in

Table 5. According to the results, our hybrid approach offers a promising and comprehensive strategy that prioritizes robustness and contextual adaptation, even though sophisticated loss-level optimization methods like ArcFace [

26] and CosFace [

25] set the accuracy benchmark on standard datasets.

The proposed model, MTCNN with Enhanced FaceNet and Adaptive Feature Fusion, attains a high accuracy of 99.6%. This highlights the efficacy of its main innovations and is a significant improvement over simpler baselines. The CNN + Stacked Autoencoder model [

13] performs the worst, demonstrating its limited ability to capture intricate facial traits. Owing to its improved alignment and embedding technique, the MTCNN + FaceNet baseline [

9,

16] performs better, although it remains susceptible to issues such as occlusion. While it surpasses the baseline, the AFF-Net model [

14], which employs a static attention mechanism, is constrained by its non-adaptive fusion strategy.

The strength of the proposed model, on the other hand, is in the synergistic integration of three essential elements:

Discriminative embedding generation using an attention-enhanced FaceNet;

Robust face detection and alignment using MTCNN;

Context-aware, dynamic feature fusion through an adaptive attention system.

This method makes the model highly resilient to real-world variation by enabling it to focus on the most critical facial characteristics and contextual cues (e.g., emphasizing eye regions when a mask is detected). Therefore, whereas other approaches strongly prioritize pure accuracy on a dataset like CelebA, the proposed model offers a dependable and flexible framework for real-world applications, such as surveillance, authentication, and access control, where conditions are frequently suboptimal and unconstrained.

Table 6 evaluates verification performance under challenging conditions on IJB-C subsets, demonstrating our model’s robustness to occlusion, low lighting, and non-frontal poses.

Table 6 demonstrates the system’s robustness under extreme conditions and confirms the adaptive fusion module’s contribution, with strong performance on the occluded, low-light, and non-frontal subsets of the IJB-C test set. The results show that the proposed MTCNN combined with Enhanced FaceNet and Adaptive Feature Fusion outperforms baseline techniques across all scenarios. Specifically, it achieves 98.8% accuracy under occlusion, 97.6% in low-light conditions, and 97.3% in non-frontal face scenarios, a significant improvement over the baseline MTCNN + FaceNet model, which achieved 89.6%, 88.9%, and 87.4% in the same situations, respectively. These improvements, ranging from 8.7 to 9.9 percentage points, demonstrate the effectiveness of the attention mechanism and contextual feature fusion in enhancing robustness against environmental and positional variations commonly encountered in real-world applications.

A statistical t-test was performed on classification accuracies across multiple test splits (n = 10). The results demonstrate that the performance increase from the baseline (MTCNN-FaceNet) to the proposed model (99.6% vs. 96.4%) is statistically significant, with p < 0.01. Confidence intervals for the accuracy difference were also calculated, showing an improvement margin between 3.2% and 4.4% at 95% confidence. This indicates that the observed gains are unlikely to be due to random chance.
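The text does not say which form of t-test was used. The sketch below assumes a paired t-test over the ten splits, which is one common choice when both models are evaluated on the same splits. The per-split accuracies are synthetic placeholders chosen only so that their means match the reported 99.6% and 96.4%; they are not the authors' measurements, and the resulting interval is therefore not expected to reproduce the reported one.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_t_test(a: Sequence[float], b: Sequence[float],
                  t_crit: float) -> Tuple[float, Tuple[float, float]]:
    """Return the paired t statistic and a confidence interval for mean(a - b)."""
    diffs = [x - y for x, y in zip(a, b)]
    mean_diff = statistics.mean(diffs)
    # Standard error of the mean difference (sample standard deviation).
    std_err = statistics.stdev(diffs) / math.sqrt(len(diffs))
    t_stat = mean_diff / std_err
    return t_stat, (mean_diff - t_crit * std_err, mean_diff + t_crit * std_err)


# Synthetic per-split accuracies (%), for illustration only.
proposed = [99.5, 99.7, 99.6, 99.4, 99.8, 99.6, 99.5, 99.7, 99.6, 99.6]
baseline = [96.1, 96.8, 96.3, 96.0, 96.9, 96.5, 96.2, 96.6, 96.4, 96.2]

# Two-sided critical values of Student's t for 9 degrees of freedom.
T_CRIT_95 = 2.262  # 95% confidence
T_CRIT_99 = 3.250  # corresponds to p = 0.01

t_stat, (ci_low, ci_high) = paired_t_test(proposed, baseline, T_CRIT_95)
print(f"t = {t_stat:.1f}, 95% CI = [{ci_low:.2f}, {ci_high:.2f}]")
print("significant at p < 0.01:", t_stat > T_CRIT_99)
```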

Table 7 shows the component analysis results, illustrating each component’s contribution. Performance on the face verification task is reported as TAR at FAR = 0.1% for IJB-C and as accuracy for LFW and CelebA. The values are the mean ± standard deviation over five separate runs.

Baseline Model (Row A): Our baseline model uses a standard FaceNet for feature extraction and MTCNN for alignment. Its performance sets a reference point but also reveals a significant vulnerability to real-world variations, such as occlusion and pose, especially on the challenging IJB-C dataset (75.2% TAR).

Including an Attention Mechanism (Row B): Adding an attention mechanism to the FaceNet architecture significantly improved performance on all datasets. The improvement is particularly noticeable on IJB-C, with an absolute TAR rise of 8.3%. This supports our hypothesis that the model’s robustness to partial occlusions and other difficult situations increases substantially when it can adaptively focus on the most discriminative, non-occluded face regions (such as the eyes and brow).

Including Adaptive Feature Fusion (Our Complete Model, Row C): Significant improvements were achieved by incorporating the Adaptive Feature Fusion module, which combines contextual information, including pose, illumination, and occlusion, with attention-enhanced embeddings. In particular, this component was essential for attaining the best performance on the unconstrained IJB-C dataset, where it increased TAR by 8.0% over the attention-only model. This demonstrates that integrating contextual knowledge is a potent strategy for handling challenging situations in which visual characteristics alone are insufficient.
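Reading both reported gains as absolute percentage points (the text says so for Row B; for Row C this is an assumption), the IJB-C TAR values chain together as shown below. The Row B value is derived here rather than quoted, so it should be checked against Table 7; the final value agrees with the 91.5% TAR at FAR = 0.001 reported for IJB-C earlier in this section.

```latex
\[
\underbrace{75.2\%}_{\text{Row A}}
\;\xrightarrow{\;+8.3\;}\;
\underbrace{83.5\%}_{\text{Row B}}
\;\xrightarrow{\;+8.0\;}\;
\underbrace{91.5\%}_{\text{Row C}}
\]
```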

Our analysis reveals that each component of the proposed model makes a substantial contribution to its overall performance. The attention mechanism is crucial for addressing occlusions, while the adaptive fusion module is essential for coping with changes in pose and lighting. When combined, these components create a robust face recognition system that outperforms the strong baseline and is well-suited for real-world, everyday environments. The consistently low standard deviation across all runs also suggests that the model’s performance is stable and reliable.
