Abstract

Human activity recognition (HAR) in real-world settings has gained importance with the growth of Internet of Things (IoT) devices such as smartphones and smartwatches. However, fluctuating environmental conditions and complex behavioral patterns limit the accuracy of current approaches. This research introduces a modified deep residual network, called 1D-ResNeXt, for IoT-enabled HAR in uncontrolled environments. The network combines feature fusion with a multi-kernel block design, and its residual connections and split–transform–merge strategy mitigate accuracy degradation while reducing the number of parameters. We evaluated the proposed model on three publicly available datasets, mHealth, MotionSense, and Wild-SHARD, using accuracy, cross-entropy loss, and F1-score. The model showed substantial improvements in recognition performance, reaching 99.97% on mHealth, 98.77% on MotionSense, and 97.59% on Wild-SHARD and outperforming contemporary methods. Notably, it achieved these results with considerably fewer parameters (24,130–26,118) than competing models, several of which exceed 700,000 parameters. 1D-ResNeXt performed well across varied environmental conditions, addressing a major obstacle in practical HAR applications. These findings indicate that the modified deep residual network is a viable approach for improving the reliability and usability of IoT-based HAR systems in dynamic, uncontrolled settings while retaining the computational efficiency that IoT devices require. The results are relevant to several sectors, including healthcare monitoring, smart homes, and personalized assistive devices.

1. Introduction

Human activity recognition (HAR) has become a crucial area of research in the era of ubiquitous computing and the Internet of Things (IoT). The ability to identify and categorize human behavior accurately and in real time has broad implications for several fields, such as healthcare monitoring, smart homes, safety technologies, and personalized assistive devices [1].

Studies in medical care and wellness have focused explicitly on identifying activities of daily living (ADLs), since the ability to perform ADLs is a key indicator of everyday wellness and health [2]. HAR can support the health monitoring of elderly individuals, whose data can then be shared with caregivers, family members, or medical professionals. Because the behavior of older individuals is unpredictable, the way they perform ADLs can vary throughout daily life [3]. Security and privacy remain complex topics in HAR: sensor-based HAR systems that monitor individual behaviors and locations are now widely deployed, which raises concerns about privacy infringement [4]. Moreover, the shift from controlled laboratory conditions to operational settings poses substantial obstacles that have impeded the widespread use of HAR systems [5].

Conventional HAR techniques face substantial difficulties in real-life situations where behaviors change unpredictably. Recognition performance is influenced by various factors, such as environmental uncertainty, individual variations in motion patterns, and concurrent actions occurring nearby [6]. Moreover, integrating HAR models with IoT equipment raises additional issues concerning computational efficiency, battery consumption, and real-time processing [7]. These issues require developing and evaluating HAR systems on data gathered in uncontrolled surroundings, because system performance frequently differs considerably between controlled and real-world conditions [5].

Convolutional neural networks (CNNs), a class of deep learning models, have shown encouraging results in tackling some of these problems [8,9], and researchers have increasingly used them to implement HAR models, as exemplified by [10,11]. By sharing weights across the input sequence, CNNs learn local patterns that higher layers combine into more abstract, global features, so they can analyze raw activity signals directly to identify informative features [12]. Deep residual networks (ResNets) have shown outstanding performance in many computer vision tasks because they allow very deep neural networks to be trained efficiently [13]. A common way to improve a model’s accuracy is to make the network deeper or wider. However, the network’s complexity and computational cost grow significantly as the number of hyperparameters (including channels and filter size) increases. Xie et al. [14] therefore introduced ResNeXt, an enhanced framework derived from the residual network. ResNeXt improves accuracy through grouped convolutions without increasing parameter complexity, while also reducing the number of hyperparameters. Furthermore, repeating a basic building block that aggregates a set of transformations with identical topology reduces the effort of designing the network structure [14]. Nevertheless, directly applying conventional ResNet structures to HAR in IoT settings does not always meet the particular demands of real-world activity recognition.
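To make the parameter argument concrete, the following sketch counts the weights of a standard one-dimensional convolution and of a grouped convolution, the operation ResNeXt uses to split a layer into parallel paths. The channel counts, kernel size, and number of groups are hypothetical values chosen for illustration, not the configuration of 1D-ResNeXt, and bias terms are ignored.

```python
def conv1d_params(in_ch: int, out_ch: int, kernel: int, groups: int = 1) -> int:
    """Number of weights in a 1D convolution (bias terms omitted).

    With groups > 1 the channels are split into `groups` independent paths,
    so each output channel only connects to in_ch // groups input channels.
    """
    if in_ch % groups or out_ch % groups:
        raise ValueError("in_ch and out_ch must be divisible by groups")
    return out_ch * (in_ch // groups) * kernel


# Hypothetical layer: 64 input and 64 output channels, kernel size 5.
standard = conv1d_params(64, 64, 5)            # 64 * 64 * 5 = 20480 weights
grouped = conv1d_params(64, 64, 5, groups=32)  # 64 * 2 * 5 = 640 weights
print(standard, grouped, standard // grouped)  # 20480 640 32
```

Grouping divides the weight count by the number of groups; ResNeXt typically spends these savings on wider layers, which is how accuracy can improve at a comparable overall cost.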

This study introduces a new IoT-enabled HAR method based on a one-dimensional ResNet with aggregated transformations [14]. We address significant constraints of current approaches through key architectural modifications to the ResNet structure, designed specifically for the characteristics of human activity data and the limitations of IoT devices. The key contributions of this study are as follows.

- We present a novel deep learning model named 1D-ResNeXt, which adapts the ResNeXt design to one-dimensional time-series sensor data. The architecture integrates convolutional blocks, residual blocks, and aggregated transformations, improving the model’s capacity to capture intricate activity patterns while preserving computational efficiency.

- The 1D-ResNeXt structure is not only a theoretical concept but also a practical solution for efficient deployment on IoT devices. It addresses significant limitations, such as restricted processing resources and power consumption, making real-time HAR feasible in resource-constrained settings without compromising performance.

- The proposed model substantially improves the accuracy and robustness of HAR in real-world, uncontrolled environments. By integrating advanced feature extraction methods and an attention mechanism, our methodology effectively handles the unpredictability and complexity of everyday situations.

- We comprehensively evaluate our model against state-of-the-art HAR techniques on three uncontrolled HAR datasets: mHealth, MotionSense, and Wild-SHARD. This analysis demonstrates the effectiveness and generalizability of our method across sensing modalities and conditions in uncontrolled environments.

Our study combines high-performance HAR with realistic IoT deployment, making substantial progress toward real-life activity recognition. The proposed methodology improves recognition accuracy while remaining practical for widespread deployment in IoT environments, with the potential to transform applications in health tracking, smart homes, and personalized assistive devices.

The subsequent sections of this work are structured as follows: Section 2 presents an extensive examination of previous research in HAR, the incorporation of IoT, and deep learning methodologies. Section 3 details the recommended methodology, which involves the 1D-ResNeXt design and its implementation. Section 4 describes the setting for the experiment and its outcomes. Section 5 provides an analysis and interpretation of our results. Finally, Section 6 serves as the conclusion of the work and delineates potential areas for additional investigation.

2. Related Works

This section presents a summary of the current research that is pertinent to our study, with a specific emphasis on three main domains: IoT-enabled HAR, deep learning networks, and HAR in uncontrolled environments.

2.1. IoT-Enabled HAR

The combination of HAR with IoT technology has enabled substantial progress in real-time, continuous tracking of human behavior. Recent studies in this field have concentrated on improving accuracy, efficiency, and flexibility in practical situations. From this perspective, we review recent and relevant research on IoT-enabled HAR.

Bianchi et al. [15] introduced a HAR approach that combines wearable technology with deep learning to monitor everyday behaviors in domestic settings. The system includes a custom wearable sensor with an inertial measurement unit (IMU) and Wi-Fi connectivity, which supports user configuration and cloud-based data communication. At its core, the method uses a CNN tuned for practical inference, making it suitable in principle for deployment on low-cost or embedded machines. It detects nine distinct everyday behaviors with an accuracy of 97%, showing the promise of integrating IoT devices with powerful machine learning for effective, user-centric HAR solutions.

Kolkar et al. [16] investigated the use of smartphones in HAR, relying on their built-in sensors to identify and assess an individual’s motions. The research highlights the importance of HAR technologies that detect inertial motion and whole-body activity from wearable sensors using real-time signal processing. Integrating IoT technology in this way aims to open new opportunities for medical solutions. The study acknowledges the difficulty of achieving consistent activity identification and focuses on improving HAR accuracy with deep learning models, particularly CNNs, long short-term memory (LSTM) networks, and gated recurrent units (GRUs). It compares these models on the well-established UCI-HAR and WISDM datasets. The findings help improve HAR accuracy and support the development of smartphone-based HAR solutions and their prospective uses in medical monitoring and IoT-enabled settings.

Yu et al. [17] achieved comprehensive path tracking using only force sensors embedded in footwear, removing the need for additional infrastructure and site surveys. The device uses five force-sensing resistors in each insole and applies a machine learning method (a support vector machine) to estimate changes in walking trajectory and stride length from force patterns. The study shows that accurate indoor path tracking can be achieved with IoT-enabled HAR using in-shoe force sensors alone, offering a viable alternative where wireless infrastructure is lacking.

The work in [18] examines the growing importance of HAR with wearable and mobile sensors across several domains, including medical treatment, monitoring, education, and entertainment. It highlights the recent development of edge computing as a way to mitigate communication delays and network congestion. However, edge devices have limited processing power, which restricts their capacity to run complex processes; although deep learning methods provide exceptional accuracy in HAR, their computational requirements make them impractical for edge deployment. To address this, [18] proposes a compact deep learning model for HAR that requires less computational capability, making it appropriate for edge equipment. The model was evaluated on data from the everyday activities of six individuals, and the findings indicate that it outperforms most existing machine learning and deep learning methods, offering a viable option for effective HAR on resource-limited edge systems. Ouyang et al. [19] tackle the challenges of edge computing in federated learning for HAR by introducing a similarity-aware method that minimizes computational and communication burdens while preserving high accuracy. The technique is explicitly engineered for resource-limited edge environments. Nevertheless, this research predominantly used in-laboratory datasets, which limits its ability to recognize real-world events and may undermine the system’s usability.

Although these developments expand the potential of IoT-HAR infrastructure, significant challenges persist in achieving high accuracy in varied, uncontrolled situations while keeping computation within the limits of IoT devices. Our objective is to address these issues with a deep learning architecture tailored for IoT-enabled HAR in practical environments.

2.2. Deep Learning Networks

HAR researchers have employed deep learning methodologies to recognize human movements. With high-quality data, even shallow and ensemble learning methods [20] can produce favorable outcomes, but they require domain knowledge and extensive data pre-processing. This dependence on manual feature engineering and pre-processing has led researchers to adopt deep learning models, which learn features automatically and deliver reliable outcomes.

The ability of neural networks to capture temporal characteristics supports the development of end-to-end deep learning models, streamlining feature learning and recognition. In deep learning approaches, feature extraction and model building are typically performed jointly, allowing features to be learned automatically. Deep neural networks are adept at deriving high-level representations from low-level data, making them suitable for complex human activity recognition tasks. Various deep-learning-based techniques, including CNNs, recurrent neural networks (RNNs), and hybrid architectures, have been used for time-series feature extraction. The key benefit of applying deep learning to HAR is this automatic feature extraction capability [11].

More advanced HAR models use deeper and more intricate architectures to improve accuracy beyond the feature learning approaches described above. These models employ CNNs to extract features automatically, and some researchers have proposed more sophisticated backbone models as alternatives to plain CNNs. Dong et al. [21] introduced an inception module integrated with hand-crafted features (HCF). Long et al. [22] trained large-scale and small-scale networks separately and then combined them; the essence of their approach is to incorporate residual blocks of two different scales. Tuncer et al. [23] used several ResNet architectures with different numbers of layers as feature extractors, cascading the extracted features to form the backbone. Ronald et al. [24] introduced the iSPLInception backbone, derived from Inception-ResNet, which employs a multichannel residual compound structure for HAR. Mehmood et al. [25] used DenseNet as the foundational architecture and exploited its dense connections for HAR.

2.3. Available HAR Datasets

The advancement and assessment of HAR applications depend significantly on the accessibility of high-quality datasets. These datasets encompass diverse actions individuals take across multiple situations, supplying experts with essential data to train and evaluate their models. Although many sensor-based HAR datasets are publicly accessible, they exhibit considerable variation regarding the number of actions, individuals, sensor types, sensor placements, and the environments in which the data were gathered.

In the HAR literature, existing datasets can be classified into two major categories based on their data-collection setting: controlled and uncontrolled [26]. In an uncontrolled environment, data are collected in natural, real-world circumstances with substantial variation along three principal dimensions: user diversity (distinct physical attributes and behavioral patterns), environmental variables (diverse locations, conditions, and ambient noise), and equipment variability (varied sensor types and configurations) [27]. Among existing HAR datasets, only a limited number include time-series data of ADLs gathered under uncontrolled conditions [28]. This scarcity of naturally obtained data is a considerable obstacle to developing robust real-time activity recognition systems and significantly hinders their real-world deployment.

Controlled datasets such as UCI-HAR [29] and WISDM [30] have been crucial to HAR research, offering well-defined benchmarks for algorithm development and evaluation. The UCI-HAR dataset, obtained from 30 individuals performing six basic activities (walking, ascending stairs, descending stairs, sitting, standing, and lying down), contains smartphone accelerometer and gyroscope data sampled at 50 Hz. It provides a balanced depiction of activities in a regulated environment, which makes it a popular choice for preliminary model evaluation. The WISDM dataset, in its newest iteration (v2.0), includes 18 activities from 51 individuals collected with smartphone and smartwatch sensors. It is distinguished by its wide variety of activities and its use of different sensor placements (pocket and wrist), covering a broader range of HAR situations. Both datasets contain clean, accurately labeled data gathered under controlled conditions, which simplifies pre-processing and model training. However, their controlled nature is also a limitation, because they capture only part of the heterogeneity and complexity of real-world conditions.

Unlike controlled datasets, uncontrolled datasets capture the intrinsic complexity and variability of real-world HAR applications. These datasets exhibit natural fluctuations in user behavior, ambient factors, and equipment settings. mHEALTH [31] gathers data from individuals performing activities in their everyday contexts without rigid protocols, allowing spontaneous variability in how activities are executed. MotionSense [32] explicitly addresses device heterogeneity by collecting data from diverse smartphone models and orientations in multiple real-world environments. Wild-SHARD [28] emphasizes environmental variety by incorporating data gathered at several locations and times, reflecting natural changes in activity performance and environmental settings. These uncontrolled datasets pose more realistic challenges for HAR systems, including fluctuating sensor noise levels, irregular activity patterns, and varied surrounding conditions that more closely reflect real-world deployment.

As Table 1 shows, most publicly accessible datasets were gathered in controlled settings [29,30,33,34,35] and may therefore fail to capture the complexities of real-world HAR applications. Only a few datasets, including mHEALTH [31], MotionSense [32], and Wild-SHARD [28], provide time-series data for ADLs gathered in uncontrolled situations.

Table 1.

Overview of commonly used HAR datasets along with their main features.

3. Methodology

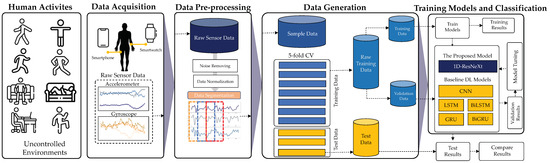

This section outlines our methodology for IoT-enabled HAR in uncontrolled situations, covering the complete workflow from data collection to model evaluation, as depicted in Figure 1. We start by collecting data from three distinct datasets, mHealth, MotionSense, and Wild-SHARD, each illustrating different facets of real-world HAR. The raw sensor data then undergo a pre-processing phase comprising denoising, normalization, and segmentation to prepare them for deep learning. Central to our methodology is the 1D-ResNeXt architecture, designed to handle the intricacies of time-series sensor data while remaining computationally efficient for IoT devices. We compare our model with several standard deep learning architectures: CNN, LSTM, bidirectional LSTM (BiLSTM), GRU, and bidirectional GRU (BiGRU). Training uses the Adam optimizer with carefully tuned parameters and minimizes cross-entropy loss with L2 regularization. Finally, we assess the model with several metrics, including accuracy, loss, and F1-score, on all three datasets to demonstrate its efficacy and generalizability in uncontrolled HAR scenarios.
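The training objective mentioned above can be sketched as the mean categorical cross-entropy over a batch plus an L2 penalty on the weights. This is a minimal NumPy illustration; the function name `cross_entropy_with_l2` and the regularization strength are hypothetical, since the text does not give the value used, and the Adam update itself is left to the training framework.

```python
import numpy as np


def cross_entropy_with_l2(probs, labels, weights, l2_lambda=1e-4, eps=1e-12):
    """Mean categorical cross-entropy plus an L2 penalty on the model weights.

    probs:     (batch, classes) softmax outputs
    labels:    (batch,) integer class indices
    weights:   iterable of weight arrays to regularize
    l2_lambda: regularization strength (hypothetical default)
    """
    batch = probs.shape[0]
    # Probability the model assigns to the true class of each sample.
    p_true = probs[np.arange(batch), labels]
    ce = -np.mean(np.log(p_true + eps))  # eps avoids log(0)
    l2 = l2_lambda * sum(np.sum(w ** 2) for w in weights)
    return ce + l2


probs = np.array([[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]])
labels = np.array([0, 1])
weights = [np.ones((2, 2))]
loss = cross_entropy_with_l2(probs, labels, weights, l2_lambda=0.01)
```

Here the cross-entropy term is the average of -ln 0.7 and -ln 0.8, and the penalty adds 0.01 times the sum of squared weights (4), that is, 0.04.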

Figure 1.

Framework for IoT-enabled HAR in uncontrolled environments.

3.1. Data Acquisition

The effectiveness of our IoT-enabled HAR solution depends on the quality and variety of the data used for training and evaluation. To ensure the reliability and generality of the proposed 1D-ResNeXt model, we employ three datasets covering a broad spectrum of individual behaviors in diverse uncontrolled circumstances: mHealth, MotionSense, and Wild-SHARD. These datasets were selected for their complementary attributes, reflecting diverse sensor arrangements, activity categories, and real-world contexts. Using data from several sources lets us address the challenges of HAR across different uncontrolled environments and improves the real-world relevance of our approach. Each dataset has distinct characteristics that support a thorough assessment of our methodology, ranging from the multi-sensor configuration of mHealth to the smartphone-centric data acquisition of Wild-SHARD. The following subsections describe each dataset in detail, including its collection methodology, sensor specifications, and the range of activities it covers.

3.1.1. mHEALTH Dataset

The mHEALTH (mobile HEALTH) dataset [31] comprises acceleration, angular velocity, magnetic field orientation, and ECG readings from ten individuals performing various activities, including cycling, jogging, running, and forward waist bends. Sensors placed on each participant’s chest, right wrist, and left ankle record their movement, and the chest sensor also provides 2-lead ECG readings. All signals are sampled at 50 Hz. The dataset comprises twelve activities related to daily living and sports, which participants performed to the best of their ability in an uncontrolled setting. We allocate three participants, or 30% of the dataset, for validation.
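Holding out whole participants, rather than random windows, keeps every person’s data in a single split so that validation measures performance on unseen people. The text does not say which three mHealth participants are held out; the sketch below assumes a seeded random choice, and the helper `split_by_subject` is hypothetical.

```python
import random


def split_by_subject(subject_ids, n_val_subjects=3, seed=0):
    """Hold out whole participants for validation.

    Every window from a given participant ends up in exactly one split,
    so the validation score reflects performance on unseen people.
    """
    subjects = sorted(set(subject_ids))
    rng = random.Random(seed)  # fixed seed: hypothetical, for reproducibility
    val = set(rng.sample(subjects, n_val_subjects))
    train = set(subjects) - val
    return train, val


train_ids, val_ids = split_by_subject(range(1, 11))  # mHealth: 10 participants
print(len(train_ids), len(val_ids))  # 7 3
```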

3.1.2. MotionSense Dataset

Experts at the MIT Media Lab developed the MotionSense dataset [32], which comprises data on six different tasks conducted by 24 volunteers, including climbing upstairs, descending stairs, sitting, standing, walking, and jogging, all within identical environmental conditions. Smartwatches’ accelerometer and gyroscope sensors were utilized to quantify attitude, acceleration, gravitational force, and rotational velocity. A total of 1,412,865 samples were gathered, encompassing 12 characteristics: attitude-roll, attitude-pitch, attitude-yaw, gravity-x, gravity-y, gravity-z, rotation rate-x, rotation rate-y, rotation rate-z, acceleration-x, acceleration-y, and acceleration-z.

3.1.3. Wild-SHARD Dataset

The Wild-SHARD dataset [28] comprises HAR data gathered in an uncontrolled, real-world context using several smartphone models, including the Samsung Galaxy F62, Samsung Galaxy A30s, Poco X2, and OnePlus 9 Pro. The sensor module, comprising accelerometers and gyroscopes, was placed in the front pocket (vertically, with the earpiece side facing up) of 40 adult participants of varying ages, genders, weights, and heights. Participants performed activities naturally, both indoors and outdoors, to provide genuine ADL data. The data were sampled at 100 Hz and cover six activities of daily living: sitting, walking, standing, running, ascending stairs, and descending stairs. The recorded features include gravitational acceleration, linear acceleration, gravity, rotation rate, rotation vector, and the cosine of the rotation vector. By providing high-quality, non-simulated sensor data, the dataset aims to improve real-time activity recognition and overall system efficacy.

3.2. Data Pre-Processing

The raw sensor data obtained from the mHealth, MotionSense, and Wild-SHARD datasets require careful preparation to ensure the best performance of our 1D-ResNeXt model. This pre-processing phase is essential for handling the diverse characteristics of the data and the difficulties of uncontrolled situations. Our pre-processing pipeline comprises three primary stages: denoising, normalization, and segmentation.

3.2.1. Data Denoising

To minimize the influence of noise and artifacts in sensor data, a sixth-order zero-phase Butterworth IIR bandpass filter is utilized. The parameters for this filter are adjusted based on the specific sensor type:

- For accelerometer and gyroscope readings in all datasets, the passband runs from 1 Hz (high-pass cutoff) to 20 Hz (low-pass cutoff).

- In the mHealth dataset, the magnetometer uses a bandpass filter ranging from 5 Hz to 15 Hz.

- For ECG data within the mHealth dataset, a bandpass filter is applied with a range between 0.5 Hz and 100 Hz.

This filtering technique effectively eliminates both high-frequency noise and low-frequency drift while retaining essential motion and physiological details.
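The denoising step can be sketched with SciPy’s Butterworth design and forward-backward filtering, which gives the zero-phase behavior described above. This is a minimal illustration on a synthetic signal, not the authors’ code; the helper name and the reading of "sixth-order" as `n=3` (SciPy’s bandpass design has order 2n) are assumptions. Because SciPy requires both cutoffs to lie below the Nyquist frequency (25 Hz for 50 Hz signals), the sketch validates the band and raises an error otherwise.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def bandpass_zero_phase(x, low_hz, high_hz, fs, n=3):
    """Zero-phase Butterworth bandpass filter along axis 0 (time).

    butter(n, ..., btype="bandpass") designs a bandpass filter of order 2n,
    so n=3 gives a sixth-order design (this reading of "sixth-order" is an
    assumption). sosfiltfilt runs it forward and backward, cancelling the
    phase shift.
    """
    if not 0 < low_hz < high_hz < fs / 2:
        raise ValueError("cutoffs must satisfy 0 < low < high < fs / 2 (Nyquist)")
    sos = butter(n, [low_hz, high_hz], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, x, axis=0)


# Synthetic accelerometer-like channel: constant offset plus a 5 Hz motion component.
fs = 50.0
t = np.arange(500) / fs  # 10 s of samples
motion = np.sin(2 * np.pi * 5 * t)
raw = 1.0 + motion
clean = bandpass_zero_phase(raw, low_hz=1.0, high_hz=20.0, fs=fs)
```

The 1 Hz high-pass edge removes the constant offset (drift), while the 5 Hz component passes essentially unchanged and without a phase shift. Second-order sections (`output="sos"`) are used because they are numerically more stable than transfer-function coefficients for higher filter orders.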

3.2.2. Data Normalization

As illustrated in Equation (1), the sensor data are scaled to a range of 0 to 1. This normalization process helps address challenges in model training by ensuring that all data points are within a consistent range. As a result, gradient descent algorithms can achieve faster convergence.

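$$x_i^{\text{norm}} = \frac{x_i - x_i^{\min}}{x_i^{\max} - x_i^{\min}}, \quad i = 1, 2, \ldots, n \qquad (1)$$

where $x_i^{\text{norm}}$ represents the normalized data of the $i$th channel, $n$ denotes the number of channels, and $x_i^{\max}$ and $x_i^{\min}$ are the maximum and minimum values of the $i$th channel, respectively.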
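A per-channel version of Equation (1) in NumPy is sketched below. The text does not specify which data the minimum and maximum are taken from; computing them on the training split and reusing them for validation data, as done here, is an assumption that avoids leaking validation statistics into training.

```python
import numpy as np


def fit_min_max(train):
    """Per-channel min and max of training data shaped (samples, channels)."""
    return train.min(axis=0), train.max(axis=0)


def apply_min_max(x, ch_min, ch_max, eps=1e-8):
    """Scale each channel to [0, 1] with (x - min) / (max - min).

    eps keeps constant channels from causing a division by zero.
    Values outside the training range map slightly outside [0, 1].
    """
    return (x - ch_min) / (ch_max - ch_min + eps)


train = np.array([[0.0, 10.0], [5.0, 20.0], [10.0, 30.0]])
ch_min, ch_max = fit_min_max(train)
scaled = apply_min_max(train, ch_min, ch_max)
```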

3.2.3. Data Segmentation

A sliding window technique is used to divide the continuous sensor data into segments of fixed length. Considering both the properties of our datasets and findings from prior research, the following parameters are applied:

- Window length: 2 s, which equates to 100 samples for mHealth and Wild-SHARD datasets at a sampling rate of 50 Hz, and 200 samples for MotionSense at 100 Hz.

- Overlap: 50% overlap between consecutive windows.

This segmentation method ensures that each activity’s temporal context is adequately captured, while also allowing for smooth transitions between activities.
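The segmentation parameters above can be sketched as follows: with a 2 s window at 50 Hz (100 samples) and 50% overlap, the window start advances by 50 samples. The helper name is hypothetical, and labelling each window with its most frequent per-sample label is an assumed rule, since the text does not state how windows are labelled.

```python
import numpy as np


def sliding_windows(signal, labels, window, overlap=0.5):
    """Split a (time, channels) recording into fixed-length, overlapping windows.

    step = window * (1 - overlap); with 50% overlap, consecutive windows share
    half their samples. Each window takes its most frequent label (an assumed
    labelling rule, not one stated in the text).
    """
    step = int(window * (1 - overlap))
    starts = range(0, len(signal) - window + 1, step)
    X = np.stack([signal[s:s + window] for s in starts])
    y = np.array([np.bincount(labels[s:s + window]).argmax() for s in starts])
    return X, y


# 20 s of synthetic 3-channel data at 50 Hz: activity 0, then activity 1.
fs, seconds = 50, 20
signal = np.random.default_rng(0).normal(size=(fs * seconds, 3))
labels = np.repeat([0, 1], fs * seconds // 2)
X, y = sliding_windows(signal, labels, window=2 * fs)  # 2 s -> 100 samples
print(X.shape)  # (19, 100, 3)
```

A 1,000-sample recording yields (1000 - 100) / 50 + 1 = 19 windows.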

3.3. The Proposed 1D-ResNeXt Architecture

This research introduces a multi-branch aggregation model called 1D-ResNeXt, inspired by ResNeXt [36]. The primary difference is that 1D-ResNeXt adapts the well-established ResNeXt model, which is typically applied in computer vision, to one-dimensional time-series sensor data for activity recognition. Our approach modifies the convolutional blocks for one-dimensional processing, uses causal convolutions to prevent data leakage, and exploits temporal depth to capture distinctive patterns in smartphone sensor data that reflect the biomechanics of human movement. In contrast, the original ResNeXt model is designed for two-dimensional images and does not address sequential or temporal data, which are crucial for sensor-based recognition tasks.

The 1D-ResNeXt model adapts both the core building blocks and the overall structure to extract discriminative features from multi-channel time-series data rather than from spatial images.

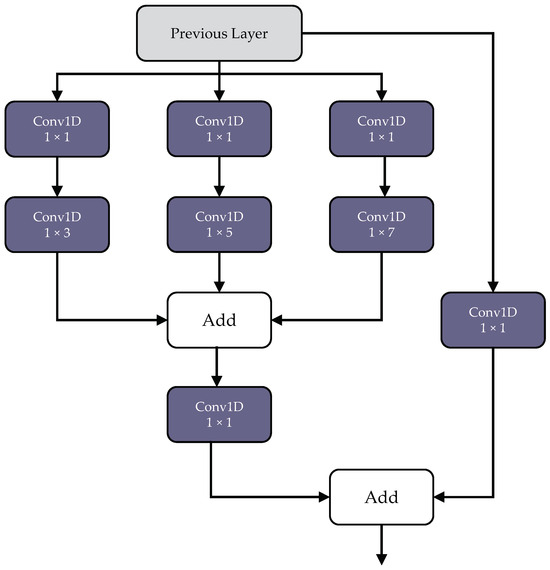

The proposed 1D-ResNeXt comprises a convolutional block and multi-kernel blocks that aggregate feature maps, in contrast to InceptionNet [14], which concatenates the feature maps produced by different kernel sizes. This design reduces the model’s parameter count, making it more suitable for edge computing and low-latency applications. Figure 2 gives a detailed depiction of the 1D-ResNeXt model.

Figure 2.

The architecture of the 1D-ResNeXt model.

3.3.1. Convolutional Block

The convolutional block of the proposed model consists of a convolutional layer (Conv), a batch normalization layer (BN), a rectified linear unit layer (ReLU), and a max-pooling layer (MP). The Conv layer uses trainable convolutional kernels to capture various features and produce the corresponding feature maps; these kernels are one-dimensional, matching the temporal structure of the input signals. The BN layer accelerates and stabilizes training, the ReLU layer improves the model’s capacity to represent complex features, and the MP layer reduces the feature map size while retaining essential information.

The one-dimensional convolutional (Conv1D) layer serves as the primary feature extractor. It uses learnable one-dimensional filters that slide along the time axis of the input sequence; at each position, a filter multiplies its weights element-wise with the corresponding input segment and sums the products. This process can be represented mathematically as follows:

$$y_i = f\left(w_i * x + b_i\right), \quad i = 1, 2, \ldots, n$$

Here, $y_i$ denotes the activated output, $w_i$ represents the weight of the $i$-th filter, the sensing data $x$ are convolved with $w_i$, and $b_i$ is the bias associated with the $i$-th kernel. The total number of kernels, $n$, determines the depth of feature extraction, and $f$ is a non-linear activation function that adds expressive power to the model. These kernels are designed to recognize diverse temporal patterns and activity characteristics in the input data, so the richness of the resulting feature maps depends on the number of kernels used.
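The Conv1D operation described above can be written out explicitly in NumPy to show the slide, multiply, and sum steps: for each filter $i$ it computes $f(w_i * x + b_i)$. This is an illustrative sketch rather than the model’s implementation; it takes ReLU as the activation $f$ (an assumption), omits batch normalization, and, like deep learning frameworks, slides the filter without flipping it (cross-correlation). The helper name is hypothetical.

```python
import numpy as np


def conv1d_relu(x, w, b):
    """Valid 1D convolution followed by ReLU: y_i = f(w_i * x + b_i).

    x: (time, in_channels) sensor window
    w: (n_kernels, kernel_size, in_channels) filters
    b: (n_kernels,) biases
    Returns (time - kernel_size + 1, n_kernels) activated feature maps.
    """
    n, k, _ = w.shape
    steps = x.shape[0] - k + 1
    y = np.empty((steps, n))
    for t in range(steps):
        segment = x[t:t + k]  # (k, in_channels)
        # Element-wise product with every filter, summed over time and channels.
        y[t] = np.tensordot(w, segment, axes=([1, 2], [0, 1])) + b
    return np.maximum(y, 0.0)  # f = ReLU


x = np.arange(6, dtype=float).reshape(6, 1)  # one channel: 0, 1, ..., 5
w = np.array([[[1.0], [1.0]], [[-1.0], [0.0]]])  # two filters of length 2
b = np.array([0.0, 0.5])
y = conv1d_relu(x, w, b)
```

The first filter sums neighbouring samples (1, 3, 5, 7, 9), while the second produces mostly negative responses that ReLU clips to zero, which illustrates how the activation sparsifies the feature map.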

After the convolution step, the BN layer standardizes the activation outputs by rescaling and shifting them for each batch. This normalization technique, integrated into the training phase, helps speed up the convergence of gradient descent. As a result, it reduces the overall training time of neural networks. The computation can be expressed as follows:

$$\hat{x}^{(k)} = \frac{x^{(k)} - \mu^{(k)}}{\sigma^{(k)}}$$

where $x^{(k)}$ represents the $k$-th dimensional component of $x$ within the training set $\mathcal{X}$. The parameter $\mu^{(k)}$ denotes the mean of the $k$-th dimensional component across all samples in the training set, and $\sigma^{(k)}$ is the corresponding standard deviation. This normalization improves training by reducing internal covariate shift. It also allows higher learning rates and acts as a form of regularization, which helps mitigate overfitting.
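In practice, the mean and standard deviation are estimated per mini-batch during training, followed by the learnable rescaling and shifting mentioned above. The sketch below shows this training-mode computation for inputs shaped (batch, features); for Conv1D feature maps, frameworks typically compute the statistics per channel over both the batch and time axes, and inference uses running averages, neither of which is shown. The helper name and synthetic data are hypothetical.

```python
import numpy as np


def batch_norm(x, gamma=1.0, beta=0.0, eps=1e-5):
    """Training-mode batch normalization for inputs shaped (batch, features).

    Each feature k is standardized with the batch mean and standard deviation,
    then rescaled by gamma and shifted by beta (learnable in a real network).
    eps guards against division by zero for near-constant features.
    """
    mu = x.mean(axis=0)
    var = x.var(axis=0)
    x_hat = (x - mu) / np.sqrt(var + eps)
    return gamma * x_hat + beta


acts = np.random.default_rng(0).normal(loc=4.0, scale=3.0, size=(256, 8))
out = batch_norm(acts)
```

After normalization, each feature has approximately zero mean and unit standard deviation, regardless of the offset and scale of the incoming activations.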

The ReLU layer adds non-linearity to the network using a straightforward transformation: $f(x) = \max(0, x)$. This activation function allows the model to capture complex patterns and relationships within the data. It also helps address the vanishing gradient issue often encountered in deep neural networks. Furthermore, ReLU encourages sparse activations and is computationally more efficient than many other activation functions, making it well suited for applications with limited resources.

The final MP layer reduces the temporal dimension of the feature maps by selecting the highest value in each pooling window. This downsampling lowers computational demands and provides a degree of invariance to small temporal shifts. It also helps the network handle inputs of varying length while preserving key features. Together, these four layers form a streamlined feature-extraction pipeline that processes time-series data for HAR efficiently and yields robust feature representations.
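The last two stages of the convolutional block can be sketched in a few lines of NumPy: ReLU clips negative activations, and non-overlapping max pooling keeps the largest value in each window along the time axis. The pool size of 2 is a hypothetical choice; the text does not state the pooling parameters.

```python
import numpy as np


def relu(x):
    """f(x) = max(0, x), applied element-wise."""
    return np.maximum(x, 0.0)


def max_pool1d(x, pool=2):
    """Non-overlapping max pooling along the time axis of a (time, channels) map.

    Trailing samples that do not fill a complete window are dropped,
    mirroring 'valid' pooling.
    """
    steps = x.shape[0] // pool
    trimmed = x[:steps * pool]
    return trimmed.reshape(steps, pool, x.shape[1]).max(axis=1)


feature_map = np.array([[-1.0], [3.0], [2.0], [-4.0], [5.0]])
pooled = max_pool1d(relu(feature_map), pool=2)
```

Here ReLU turns the map into 0, 3, 2, 0, 5; pooling pairs (0, 3) and (2, 0) gives 3 and 2, and the unpaired final sample is dropped, halving the temporal length.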

3.3.2. Multi-Kernel Block

Another model component is the multi-kernel (MK) block. Each MK module contains kernels of three different sizes. Before these kernels are applied, convolutions reduce the number of channels and thus the overall model complexity and the number of trainable parameters. The three kernel sizes in the multi-kernel block were deliberately selected to balance performance and computational efficiency for HAR tasks, and each size plays a distinct role in a hierarchical feature extraction framework. The smallest kernel captures short-term dependencies and local features, the mid-sized kernel targets medium-range temporal patterns, and the largest kernel identifies longer-range temporal relationships within the sensor data. This combination provides thorough coverage of temporal features at a low computational cost, which is essential for IoT-based applications. More information on the MK module is available in Figure 3.
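Simple arithmetic shows the parameter savings from placing cheap channel-reducing convolutions before the larger kernels. This sketch makes several hypothetical assumptions, none taken from the paper: 1 × 1 bottleneck convolutions (one per branch), kernel sizes of 3, 5, and 7, 64 input channels, a bottleneck width of 16, and 32 output channels per branch.

```python
def conv1d_params(kernel_size, c_in, c_out, bias=True):
    """Trainable weights of a 1D convolution: k * C_in * C_out (+ C_out biases)."""
    return kernel_size * c_in * c_out + (c_out if bias else 0)


def mk_block_params(kernel_sizes, c_in, c_out, bottleneck=None):
    """Parameter count of parallel branches, optionally each preceded by a 1x1 reduction."""
    total = 0
    for k in kernel_sizes:
        if bottleneck is None:
            total += conv1d_params(k, c_in, c_out)
        else:
            total += conv1d_params(1, c_in, bottleneck)   # 1x1 channel reduction
            total += conv1d_params(k, bottleneck, c_out)  # wide kernel on reduced channels
    return total


KERNELS = (3, 5, 7)  # hypothetical sizes; the paper's actual sizes are shown in Figure 3
print(mk_block_params(KERNELS, 64, 32))                 # 30816
print(mk_block_params(KERNELS, 64, 32, bottleneck=16))  # 10896
```

With these assumed sizes, the bottleneck variant needs roughly a third of the weights (10,896 versus 30,816), and the gap widens as the number of input channels grows.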

Figure 3.

Details of the multi-kernel module.

3.3.3. Classification Block

The classification block is the final part of our network design and converts extracted features into activity predictions. It comprises two primary layers: global average pooling (GAP) and a dense layer, followed by a softmax activation for multi-class classification.

The GAP layer collapses the temporal dimension of the feature maps by averaging the values in each feature map, producing a single value per channel. Unlike conventional flattening, GAP has no trainable parameters and greatly reduces the number of weights in the subsequent dense layer, while still summarizing the information in every feature map. This reduction lowers the risk of overfitting and improves computational efficiency, making GAP particularly suitable for IoT devices with limited resources.
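The following sketch shows what GAP computes and why it shrinks the classifier. The feature-map length T = 50, channel count C = 64, and K = 12 classes are illustrative assumptions, not values reported for 1D-ResNeXt.

```python
def global_average_pool(feature_maps):
    """feature_maps: T time steps x C channels -> one average per channel (length C)."""
    steps = len(feature_maps)
    channels = len(feature_maps[0])
    return [sum(step[c] for step in feature_maps) / steps for c in range(channels)]


def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


T, C, K = 50, 64, 12  # hypothetical feature-map length, channel count, number of classes
flatten_params = dense_params(T * C, K)  # dense layer fed by a flattened T x C map
gap_params = dense_params(C, K)          # dense layer fed by the GAP output
print(flatten_params, gap_params)        # 38412 780
```

Under these assumptions, the dense layer shrinks from 38,412 to 780 parameters, and its size no longer depends on the window length T.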

Following GAP, the dense layer maps the pooled features to activity classes, with the number of neurons equal to the total number of activity classes in the HAR task. The softmax activation function at the end converts the dense layer outputs into a probability distribution over all classes, calculated as

$$\mathrm{softmax}(z_i) = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}, \qquad i = 1, \ldots, K$$

where $z_i$ is the dense-layer output for class i and K is the number of activity classes.

This yields interpretable confidence scores for every activity class, allowing the model to make probabilistic predictions about the performed activity. The class with the highest probability is taken as the final prediction.
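A minimal sketch of the softmax equation and the arg-max decision rule follows. Subtracting the largest logit before exponentiating is a standard numerical-stability trick. It leaves the probabilities unchanged because the common factor cancels in the ratio.

```python
import math


def softmax(logits):
    """Convert K dense-layer outputs z_i into a probability distribution."""
    m = max(logits)                       # stability shift; cancels in the ratio
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits):
    """Return (index of the most probable class, class probabilities)."""
    probs = softmax(logits)
    return max(range(len(probs)), key=probs.__getitem__), probs


cls, probs = predict([2.0, 1.0, 0.1])  # hypothetical logits for three classes
print(cls)  # 0
```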

3.4. Model Training

3.4.1. Optimizer

The Adam algorithm is used as the optimizer [37]. It optimizes a stochastic objective function iteratively, using adaptive estimates of low-order moments of the gradients. It is computationally efficient and requires little memory. By estimating the first and second moments of the gradients, Adam adapts the learning rate of each parameter individually. Because its updates are invariant to diagonal rescaling of the gradients, Adam is well suited to problems involving large-scale data or numerous parameters.

The advantages of Adam include ease of implementation, computational efficiency, low memory usage, invariance to diagonal rescaling of the gradients, and minimal hyperparameter tuning. Table 2 shows the parameter settings for the Adam optimizer. Here, Lr denotes the learning rate, which sets the step size of each gradient-descent update. Decay refers to a weight decay factor, which helps prevent overfitting by adding a regularization term to the loss function.
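To make the moment estimates concrete, here is a minimal sketch of the Adam update rule from Kingma and Ba [37] for a flat list of scalar parameters. The defaults (learning rate 0.001, β1 = 0.9, β2 = 0.999, ε = 1e-8) are the values suggested in that paper. They are assumptions here, not necessarily the settings listed in Table 2, and the sketch omits weight decay.

```python
import math


class Adam:
    """Minimal Adam optimizer for a list of scalar parameters (no weight decay)."""

    def __init__(self, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = None  # first-moment (mean) estimates
        self.v = None  # second-moment (uncentered variance) estimates
        self.t = 0     # time step, used for bias correction

    def step(self, params, grads):
        if self.m is None:
            self.m = [0.0] * len(params)
            self.v = [0.0] * len(params)
        self.t += 1
        new_params = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)  # bias-corrected moments
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            new_params.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return new_params


# Toy usage: minimize (theta - 3)^2, whose gradient is 2 * (theta - 3).
opt = Adam(lr=0.1)
theta = [0.0]
for _ in range(300):
    theta = opt.step(theta, [2 * (theta[0] - 3.0)])
```

On the first step the bias-corrected ratio is close to the sign of the gradient, so each parameter moves by roughly the learning rate regardless of the raw gradient scale. This is the per-parameter adaptivity described above.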

Table 2.

Adam optimizer parameters.

3.4.2. Loss Function and Evaluation Index

The loss function combines the cross-entropy function with L2 regularization (weight decay). The cross-entropy loss is

$$L_0 = -\frac{1}{n}\sum_{i=1}^{n}\sum_{c=1}^{K} y_{i,c}\,\log \hat{y}_{i,c}$$

Here, $y_{i,c}$ denotes the actual (one-hot) label of instance i for class c, $\hat{y}_{i,c}$ represents the predicted probability for that instance and class, and K is the number of activity classes. To help prevent overfitting, regularization is applied to the loss function, as shown in the equation below:

$$L = L_0 + \frac{\lambda}{2n}\sum_{w} w^{2}$$

In this expression, $L_0$ is the initial loss function, based on the cross-entropy formula. The term $\lambda$ serves as the regularization coefficient, n indicates the total number of training samples, and $w$ represents a parameter (weight) within the network. L2 regularization, also known as weight decay, reduces the likelihood of overfitting by penalizing large weights.
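The two loss equations can be evaluated directly with a short sketch. It assumes one-hot labels and clips probabilities at a small `eps` to avoid log(0). The regularization coefficient and weights in the usage lines are arbitrary. Frameworks differ in how they scale the penalty (the Keras `l2` regularizer, for example, adds λ·Σw² without the 1/(2n) factor), so a λ value is only meaningful together with its convention.

```python
import math


def cross_entropy(y_true, y_prob, eps=1e-12):
    """Mean categorical cross-entropy L0 over n samples with one-hot labels."""
    n = len(y_true)
    total = sum(t * math.log(max(p, eps))
                for ys, ps in zip(y_true, y_prob)
                for t, p in zip(ys, ps))
    return -total / n


def l2_regularized_loss(base_loss, weights, lam, n):
    """L = L0 + lam / (2n) * sum(w^2)."""
    return base_loss + lam / (2 * n) * sum(w * w for w in weights)


ce = cross_entropy([[1, 0], [0, 1]], [[0.5, 0.5], [0.5, 0.5]])  # ln 2 for uninformative predictions
total = l2_regularized_loss(ce, weights=[1.0, 2.0], lam=0.1, n=2)
```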

3.5. Performance Measurement Criteria

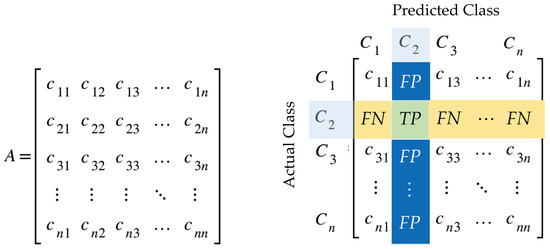

HAR is a multi-class classification problem. Accuracy and F1-score are commonly used metrics to assess and compare the performance of classification models. Both are derived from a confusion matrix, which shows how well the model distinguishes between the activity classes.

In a multi-class classification problem, let A be a set of n different class labels $C_i$ (where $i = 1, 2, \ldots, n$), represented as $A = \{C_1, C_2, \ldots, C_n\}$. The confusion matrix for this problem is an $n \times n$ matrix, as shown in Figure 4. Each row corresponds to instances of an actual class, while each column represents instances of a predicted class. The element $c_{ij}$, located at row i and column j, indicates the number of cases where the actual class is i and the predicted class is j.

Figure 4.

Confusion matrix for a multi-class classification problem.

To determine performance metrics, the confusion matrix provides the true positive ($TP$), false positive ($FP$), true negative ($TN$), and false negative ($FN$) counts. For a given class label $C_i$, the values of $TP$, $FP$, $TN$, and $FN$ can be computed using the following equations:

$$TP(C_i) = c_{ii}$$

$$FP(C_i) = \sum_{j=1}^{n} c_{ji} - c_{ii}$$

$$FN(C_i) = \sum_{j=1}^{n} c_{ij} - c_{ii}$$

$$TN(C_i) = \sum_{j=1}^{n}\sum_{k=1}^{n} c_{jk} - TP(C_i) - FP(C_i) - FN(C_i)$$

We define accuracy, precision, recall, and F1-score for a multi-class confusion matrix, as presented in Table 3.

Table 3.

Performance metrics for a multi-class confusion matrix.
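A minimal sketch that applies the TP, FP, TN, and FN definitions to a confusion matrix (rows are actual classes, columns are predicted classes) and derives accuracy and an F1-score. Macro-averaging F1 over classes is an assumption here. The paper's own metric definitions in Table 3 may average differently, for example by weighting each class by its support.

```python
def per_class_counts(cm, i):
    """TP, FP, TN, FN for class i from an n x n confusion matrix (rows = actual)."""
    n = len(cm)
    tp = cm[i][i]
    fp = sum(cm[j][i] for j in range(n)) - tp  # predicted i, actually another class
    fn = sum(cm[i][j] for j in range(n)) - tp  # actually i, predicted another class
    tn = sum(map(sum, cm)) - tp - fp - fn
    return tp, fp, tn, fn


def metrics(cm):
    """Overall accuracy and macro-averaged F1-score."""
    n = len(cm)
    accuracy = sum(cm[i][i] for i in range(n)) / sum(map(sum, cm))
    f1_scores = []
    for i in range(n):
        tp, fp, _, fn = per_class_counts(cm, i)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1_scores.append(2 * precision * recall / (precision + recall) if precision + recall else 0.0)
    return accuracy, sum(f1_scores) / n


cm = [[5, 1],
      [2, 2]]  # hypothetical two-class confusion matrix
acc, macro_f1 = metrics(cm)
print(acc)  # 0.7 (macro_f1 is about 0.670)
```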

4. Experiments and Results

To assess the effectiveness of our proposed 1D-ResNeXt model for HAR in IoT-enabled, uncontrolled settings, we performed comprehensive experiments on three publicly available datasets: mHealth, MotionSense, and Wild-SHARD. This section outlines the experimental setup, provides an overview of the datasets, and shares the findings from our comparative analysis.

4.1. Experimental Setup

In this study, we used GPU-accelerated cloud computing and standard deep learning software frameworks to implement and evaluate the 1D-ResNeXt model alongside the other deep learning models. The experimental setup was structured to ensure efficient model training and a thorough analysis of the results.

4.1.1. Hardware and Software Infrastructure

We employed Google Colab Pro+ and a Tesla V100-SXM2-16GB GPU (Hewlett Packard Enterprise, Los Angeles, CA, USA) to accelerate the training process for our deep learning models. This high-performance computing setup enabled us to efficiently handle the large sensor data volumes from the mHealth, MotionSense, and Wild-SHARD datasets.

The model was implemented in Python 3.6.9, with TensorFlow 2.2.0 serving as the main deep learning framework. To optimize GPU computations, we used CUDA 10.2 as the backend.

4.1.2. Software Libraries

Our methodology utilized a range of Python libraries, each playing a unique role within the data pipeline and model development:

- Data management and analysis with NumPy and Pandas: These libraries facilitated the efficient retrieval, processing, and analysis of sensor data.

- Visualization with Matplotlib and Seaborn: Used to generate visualizations for presenting the outcomes of data analysis and model evaluation.

- Machine learning and data preparation with Scikit-learn (Sklearn): Applied for data preparation tasks such as splitting data into train and test sets, conducting cross-validation, and calculating performance metrics.

- Deep learning framework with TensorFlow: Served as the primary library for building and training the 1D-ResNeXt model and the baseline deep learning models.

4.1.3. Training Process

Our training approach aimed to ensure robust performance and adaptability across the three datasets. A five-fold cross-validation strategy was applied to each dataset, allowing us to evaluate the consistency of the model across data splits and to reduce the risk that results depend on a single, possibly overfitted, split.
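Five-fold cross-validation can be sketched in plain Python as below. In practice, `KFold` (or `StratifiedKFold`) from scikit-learn's `sklearn.model_selection` module provides the same splitting. The shuffling seed and the interleaved fold assignment are illustrative choices, not details from the paper.

```python
import random


def k_fold_indices(n_samples, k=5, seed=42):
    """Yield (train_indices, test_indices) for each of k shuffled folds."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    folds = [idx[f::k] for f in range(k)]  # interleaved assignment gives near-equal fold sizes
    for f in range(k):
        test = sorted(folds[f])
        train = sorted(i for g in range(k) if g != f for i in folds[g])
        yield train, test


splits = list(k_fold_indices(23, k=5))  # every sample is used for testing exactly once
```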

Before training, we used a thorough pre-processing pipeline as described in Section 3.2. This included denoising to eliminate signal artifacts, normalization to standardize input data scales, and segmentation with a 2-s sliding window to capture temporal dependencies.
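The 2 s sliding-window segmentation can be sketched as follows. The 50 Hz sampling rate and 50% overlap are assumptions for illustration. The window length in samples is simply the window duration multiplied by the sampling rate of each dataset.

```python
def sliding_windows(signal, fs_hz=50, window_s=2.0, overlap=0.5):
    """Split a 1D signal into fixed-length windows; trailing samples that do not fill a window are dropped."""
    size = int(round(fs_hz * window_s))               # samples per window
    step = max(1, int(round(size * (1 - overlap))))   # hop between window starts
    return [signal[start:start + size] for start in range(0, len(signal) - size + 1, step)]


windows = sliding_windows(list(range(250)))  # 5 s at 50 Hz -> 100-sample windows every 50 samples
```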

For optimization, we employed the Adam algorithm with hyperparameters specified in Table 2. The loss function combined cross-entropy loss with L2 regularization, effectively reducing classification errors while controlling overfitting.

Each training fold was allowed to run for up to 200 epochs. However, an early stopping mechanism based on validation performance halted training if the model converged before the maximum number of epochs. This strategy ensured efficient use of resources and reduced the risk of overfitting.
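The early stopping rule can be sketched as a loop over per-epoch validation losses. The patience value is hypothetical because the paper does not state it here. In TensorFlow, the same behavior is available through `tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=...)`.

```python
def early_stopping_epoch(val_losses, patience=10, max_epochs=200):
    """Return (epoch at which training stops, epoch with the best validation loss)."""
    best, best_epoch, wait = float("inf"), 0, 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if loss < best:
            best, best_epoch, wait = loss, epoch, 0  # improvement: reset the counter
        else:
            wait += 1
            if wait >= patience:                     # no improvement for `patience` epochs
                return epoch, best_epoch
    return min(len(val_losses), max_epochs), best_epoch


stop, best = early_stopping_epoch([1.0, 0.8, 0.7, 0.72, 0.71, 0.73], patience=3)
print(stop, best)  # 6 3
```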

4.2. Results and Analysis

Table 4, Table 5 and Table 6 provide a comparison of the performance of our proposed 1D-ResNeXt model with other deep learning models on the mHealth, MotionSense, and Wild-SHARD datasets, respectively.

Table 4.

Performance comparison of models based on accuracy, loss, and F1-score using mHEALTH dataset.

Table 5.

Performance comparison of models based on accuracy, loss, and F1-score using the MotionSense dataset.

Table 6.

Performance comparison of models based on accuracy, loss, and F1-score using the Wild-SHARD dataset.

The findings in Table 4, based on the mHealth dataset, highlight the exceptional performance of our 1D-ResNeXt model for HAR. Achieving an impressive accuracy of 99.97% (±0.06%), our model outperforms all other models tested, with BiGRU as the closest competitor at 98.14%. Additionally, the 1D-ResNeXt model achieved the lowest loss at 0.00 (±0.00) and the highest F1-score at 99.95% (±0.11%), indicating both high precision and balanced performance across various activity classes.

Remarkably, these results were obtained using only 26,118 parameters, far fewer than other models such as the CNN, which has 799,948 parameters. This combination of superior accuracy and a reduced parameter count makes our model well suited for deployment on IoT devices with limited resources. The low standard deviations across all metrics further indicate the model’s consistent and reliable recognition capabilities. These outcomes demonstrate that our 1D-ResNeXt architecture provides an effective solution for IoT-based HAR applications, offering substantial improvements in both accuracy and computational efficiency compared to other deep learning models.

The findings in Table 5, based on the MotionSense dataset, further confirm the effectiveness of our 1D-ResNeXt model for HAR. Our model achieved the highest accuracy at 98.77% (±0.11%) and F1-score at 98.63% (±0.13%), surpassing all other models tested. GRU and BiGRU were the next best performers, both with an accuracy of 98.46%, which emphasizes the clear advantage of our model. Notably, the 1D-ResNeXt model also showed the lowest loss at 0.06 (±0.01), indicating highly reliable predictions.

These superior results were obtained with only 24,130 parameters, a significant reduction compared to other models like CNN, which has 495,558 parameters, and BiLSTM, which has 171,910 parameters. This combination of high accuracy and a low parameter count is particularly valuable for IoT applications, where computational efficiency is as critical as performance. The consistent results in terms of accuracy, F1-score, and loss, along with low standard deviations, suggest that our model is both robust and stable across various activities in the MotionSense dataset.

These findings highlight the adaptability of our 1D-ResNeXt architecture across different HAR scenarios and its strong potential for real-world IoT-based activity recognition applications.

The results in Table 6, based on the Wild-SHARD dataset, further confirm the effectiveness of our 1D-ResNeXt model for HAR in uncontrolled settings. Our model achieved the highest accuracy at 97.59% (±0.53%) and F1-score at 97.53% (±0.55%), outperforming all other deep learning models tested. The closest competitor, BiGRU, reached an accuracy of 96.81% and an F1-score of 96.73%. Notably, our model also had the lowest loss at 0.17 (±0.05), indicating more reliable predictions.

The superior performance of the 1D-ResNeXt model is especially noteworthy given the challenging Wild-SHARD dataset, which reflects real-world, uncontrolled conditions. Furthermore, our model achieved these results with only 24,976 parameters, significantly fewer than models like CNN, with 385,478 parameters, and BiLSTM, with 181,126 parameters. This combination of high accuracy and low parameter count demonstrates the model’s capacity to effectively capture complex activity patterns in diverse, real-life scenarios while remaining computationally efficient. These findings underscore the robustness and adaptability of our approach, making it particularly well suited for IoT-enabled HAR applications in dynamic and uncontrolled environments.

4.3. Comparison Results with State-of-the-Art Models

To demonstrate the importance and effectiveness of the proposed 1D-ResNeXt model for IoT-based HAR in uncontrolled environments, this study compared its performance with that of leading state-of-the-art methods. The evaluation used the same benchmark HAR datasets employed in this work: mHEALTH, MotionSense, and Wild-SHARD. Achieving high accuracy and computational efficiency is essential for deploying HAR systems on IoT devices with limited resources, which often operate in dynamic, real-world conditions with varied sensor data and user behaviors. The results of the comparison are presented in Table 7.

Table 7.

Comparison of the proposed 1D-ResNeXt model’s performance with state-of-the-art models across three datasets.

The results summarized in Table 7 highlight the exceptional performance of the proposed 1D-ResNeXt model compared to state-of-the-art methods for HAR using sensor data from the mHEALTH, MotionSense, and Wild-SHARD datasets.

For the mHEALTH dataset, the 1D-ResNeXt model achieved an impressive accuracy of 99.97%, surpassing other advanced deep learning methods. These include the ensemble of hybrid DL models (99.34%), the ensemble of autoencoders (94.80%), the deep belief network (93.33%), and the fully convolutional network (92.50%). The dataset comprises multi-modal sensor data, including accelerometer, gyroscope, magnetometer, and ECG signals. The 1D-ResNeXt model’s superior accuracy demonstrates its ability to extract and learn intricate patterns from diverse sensory inputs efficiently.

On the MotionSense dataset, which uses inertial sensor data from smartphones, the 1D-ResNeXt model achieved an accuracy of 98.77%. This performance exceeds that of other models, such as DySan (92.00%), CNN (95.05%), and random forest (96.96%). These results confirm the adaptability of the 1D-ResNeXt architecture to various sensor types and device configurations.

For the Wild-SHARD dataset, representing a more complex and uncontrolled environment, the 1D-ResNeXt model achieved an accuracy of 97.59%. This result outperformed the CNN-LSTM model (97.00%), showcasing the robustness and effectiveness of the 1D-ResNeXt approach in handling real-world, dynamic settings.

5. Discussion

The outcomes of our experiments highlight the exceptional performance of the proposed 1D-ResNeXt model for HAR in uncontrolled settings, evaluated across three diverse datasets: mHealth, MotionSense, and Wild-SHARD. This thorough assessment demonstrates the robustness and versatility of our model in various real-world contexts.

5.1. Performance Analysis

In the mHealth dataset, our 1D-ResNeXt model achieved a remarkable accuracy of 99.97%, significantly surpassing all other models tested. This near-perfect accuracy, combined with the lowest recorded loss (0.0017) and the highest F1-score (99.95%), underscores the model’s capability to accurately capture and differentiate between a wide array of physical activities and physiological signals.

The results on the MotionSense dataset further confirm the effectiveness of our model, with the highest accuracy (98.77%) and F1-score (98.63%) among all evaluated models. This strong performance on a smartphone-based sensing dataset illustrates the model’s adaptability to various sensor configurations and types of activities.

Most notably, on the Wild-SHARD dataset, which represents the most challenging uncontrolled environment, our model maintained impressive performance, achieving 97.59% accuracy and an F1-score of 97.53%. This resilience in complex, real-world settings is essential for practical applications in IoT-enabled HAR.

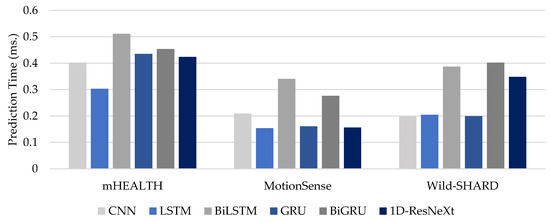

5.2. Computational Efficiency

A notable strength of our model is its outstanding computational efficiency, which is achieved without compromising accuracy. The 1D-ResNeXt model consistently utilized far fewer parameters (between 24,130 and 26,118) compared to other models, some of which required over 700,000 parameters, as shown in Figure 5. This efficiency is crucial for IoT applications, where limited computational resources and low energy consumption are key considerations.

Figure 5.

Comparison of model parameters across different deep learning architectures for the mHEALTH, MotionSense, and Wild-SHARD datasets.

5.3. Prediction Time Analysis

To better understand the resource demands of the models, we compared their prediction speeds. Specifically, we calculated the average time each model required to process a set of samples from the test dataset. This metric, the mean prediction time, is crucial for assessing the computational efficiency of models intended for real-time IoT-based HAR tasks.
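A minimal sketch of how a mean per-sample prediction time could be measured follows. The warm-up call, the number of repeats, and per-sample (rather than batched) timing are assumptions, because the paper does not detail its exact timing protocol. Batched inference on a GPU can yield considerably lower per-sample times.

```python
import time


def mean_prediction_time_ms(predict_fn, samples, repeats=3):
    """Average wall-clock milliseconds per call of predict_fn over all samples."""
    predict_fn(samples[0])  # warm-up call, excluded from timing
    start = time.perf_counter()
    for _ in range(repeats):
        for x in samples:
            predict_fn(x)
    elapsed = time.perf_counter() - start
    return elapsed * 1000.0 / (repeats * len(samples))


# Hypothetical stand-in for a trained model's single-sample predict function.
t_ms = mean_prediction_time_ms(lambda x: sum(x), [[1, 2, 3]] * 100)
```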

As illustrated in Figure 6, comparing prediction times across the models provides valuable insights into their computational performance. For the mHEALTH dataset, BiLSTM recorded the longest prediction time at 0.5114 ms, while LSTM achieved the fastest time at 0.3035 ms. Our proposed 1D-ResNeXt model exhibited a competitive prediction time of 0.4238 ms, positioning it within the middle range among the tested models.

Figure 6.

Mean prediction time in milliseconds of deep learning models used in this work.

In the MotionSense dataset, all models demonstrated faster prediction times. LSTM and 1D-ResNeXt stood out, achieving times of 0.1538 ms and 0.1561 ms, respectively. For the Wild-SHARD dataset, CNN offered the quickest prediction at 0.1988 ms, whereas BiGRU required the longest time, taking 0.4024 ms.

It is worth noting that bidirectional models such as BiLSTM and BiGRU consistently exhibited higher prediction times across all datasets, likely because they process each input sequence in both directions. In contrast, our 1D-ResNeXt model maintained reasonable prediction speeds while providing advanced feature extraction capabilities.

These findings highlight that the proposed 1D-ResNeXt model effectively balances computational demands and model complexity. This balance makes it well suited for real-time IoT-based HAR applications, where speed and accuracy are essential.

5.4. Implications for IoT-Enabled HAR

The combination of high accuracy with low computational demands makes our 1D-ResNeXt model a promising candidate for real-time HAR in IoT contexts. This balance directly addresses a core challenge in the field: achieving high performance while ensuring suitability for deployment on devices with limited resources.

5.5. Limitations and Future Work

While our 1D-ResNeXt model shows encouraging results, there are areas for further research to enhance its applicability in real-world settings. Future studies should investigate the model’s long-term performance to determine its adaptability to changing user behaviors and environments. Additionally, detailed evaluations of energy efficiency on actual IoT hardware are necessary to confirm its practical deployment feasibility. Given the sensitive nature of activity data, integrating privacy-preserving methods into the model architecture is an essential next step.

Exploring the model’s potential applications in related fields, such as gesture recognition or anomaly detection, could also extend its impact. Despite these areas for further exploration, the 1D-ResNeXt model represents a substantial advancement in IoT-enabled HAR by offering a balance of high accuracy and efficiency across diverse, uncontrolled settings. These findings lay the groundwork for more reliable and practical HAR solutions in applications like healthcare monitoring, smart home systems, and personalized assistive technologies, marking a significant step forward in real-world HAR.

6. Conclusions and Future Works

This research introduces an innovative 1D-ResNeXt model designed for HAR in real-world environments, specifically tailored for IoT applications. Through comprehensive evaluation on three distinct datasets—mHealth, MotionSense, and Wild-SHARD—we have demonstrated that our model delivers outstanding performance and efficiency when compared to traditional deep learning models.

The proposed 1D-ResNeXt consistently outperformed the other architectures, achieving high accuracy (99.97% for mHealth, 98.77% for MotionSense, and 97.59% for Wild-SHARD) while significantly reducing the number of parameters. This favorable balance between accuracy and computational efficiency addresses a critical challenge in IoT-based HAR, making our model well suited for deployment on resource-constrained devices.

While our 1D-ResNeXt model represents a substantial advancement in IoT-enabled HAR, several areas for future research remain. Long-term studies are necessary to evaluate the model’s adaptability to changing user behaviors and environmental conditions over time. Additionally, further exploration of the model’s energy efficiency on real IoT devices is crucial for optimizing practical deployments. Given the sensitive nature of activity data, incorporating privacy-preserving mechanisms into the model architecture is a priority for future development.

Expanding the model’s applicability to related areas like gesture recognition or anomaly detection could broaden its impact. Further optimization for real-time processing on edge devices, along with research into transfer learning, could improve the model’s utility by enabling adaptation to new users or activities with minimal retraining. These future research directions aim to address ongoing challenges in HAR and help bridge the gap between lab-based results and real-world effectiveness in various IoT scenarios.

Author Contributions

Conceptualization, S.M. and A.J.; methodology, S.M.; software, A.J.; validation, A.J.; formal analysis, S.M.; investigation, S.M.; resources, A.J.; data curation, A.J.; writing—original draft preparation, S.M.; writing—review and editing, A.J.; visualization, S.M.; supervision, A.J.; project administration, A.J.; funding acquisition, S.M. and A.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by University of Phayao; Thailand Science Research and Innovation Fund (Fundamental Fund 2025, Grant No. 5014/2567); National Science, Research and Innovation Fund (NSRF); and King Mongkut’s University of Technology North Bangkok (contract no. KMUTNB-FF-68-B-02).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

To clarify, our research utilizes a pre-existing, publicly available dataset collected and made available by licensed under Creative Commons Attribution 4.0 International. The dataset has been anonymized and does not contain any personally identifiable information. We have cited the source of the dataset in our manuscript and have complied with the terms of use set forth by the dataset provider.

Data Availability Statement

The original data presented in the study are openly available for the mHEALTH dataset in the UCI Machine Learning Repository at https://archive.ics.uci.edu/dataset/319/mhealth+dataset (accessed on 12 September 2024), the MotionSense dataset at https://www.kaggle.com/datasets/malekzadeh/motionsense-dataset (accessed on 12 September 2024), and Wild-SHARD dataset in the IEEEDataport at https://ieee-dataport.org/documents/wild-shard-smartphone-sensor-based-human-activity-recognition-dataset-wild (accessed on 15 September 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Gilmore, J.; Nasseri, M. Human Activity Recognition Algorithm with Physiological and Inertial Signals Fusion: Photoplethysmography, Electrodermal Activity, and Accelerometry. Sensors 2024, 24, 3005.

- Karayaneva, Y.; Sharifzadeh, S.; Jing, Y.; Tan, B. Human Activity Recognition for AI-Enabled Healthcare Using Low-Resolution Infrared Sensor Data. Sensors 2023, 23, 478.

- Onthoni, D.D.; Sahoo, P.K. Artificial-Intelligence-Assisted Activities of Daily Living Recognition for Elderly in Smart Home. Electronics 2022, 11, 4129.

- Sakka, S.; Liagkou, V.; Stylios, C. Exploiting Security Issues in Human Activity Recognition Systems (HARSs). Information 2023, 14, 315.

- Cherian, J.; Ray, S.; Taele, P.; Koh, J.I.; Hammond, T. Exploring the Impact of the NULL Class on In-the-Wild Human Activity Recognition. Sensors 2024, 24, 3898.

- Stojchevska, M.; De Brouwer, M.; Courteaux, M.; Ongenae, F.; Van Hoecke, S. From Lab to Real World: Assessing the Effectiveness of Human Activity Recognition and Optimization through Personalization. Sensors 2023, 23, 4606.

- Bouchabou, D.; Nguyen, S.M.; Lohr, C.; LeDuc, B.; Kanellos, I. A Survey of Human Activity Recognition in Smart Homes Based on IoT Sensors Algorithms: Taxonomies, Challenges, and Opportunities with Deep Learning. Sensors 2021, 21, 6037.

- Islam, M.M.; Nooruddin, S.; Karray, F.; Muhammad, G. Human activity recognition using tools of convolutional neural networks: A state of the art review, data sets, challenges, and future prospects. Comput. Biol. Med. 2022, 149, 106060.

- Chen, Z.; Cai, C.; Zheng, T.; Luo, J.; Xiong, J.; Wang, X. RF-Based Human Activity Recognition Using Signal Adapted Convolutional Neural Network. IEEE Trans. Mob. Comput. 2023, 22, 487–499.

- Doniec, R.; Konior, J.; Sieciński, S.; Piet, A.; Irshad, M.T.; Piaseczna, N.; Hasan, M.A.; Li, F.; Nisar, M.A.; Grzegorzek, M. Sensor-Based Classification of Primary and Secondary Car Driver Activities Using Convolutional Neural Networks. Sensors 2023, 23, 5551.

- Zhongkai, Z.; Kobayashi, S.; Kondo, K.; Hasegawa, T.; Koshino, M. A Comparative Study: Toward an Effective Convolutional Neural Network Architecture for Sensor-Based Human Activity Recognition. IEEE Access 2022, 10, 20547–20558.

- Krichen, M. Convolutional Neural Networks: A Survey. Computers 2023, 12, 151.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5987–5995.

- Bianchi, V.; Bassoli, M.; Lombardo, G.; Fornacciari, P.; Mordonini, M.; De Munari, I. IoT Wearable Sensor and Deep Learning: An Integrated Approach for Personalized Human Activity Recognition in a Smart Home Environment. IEEE Internet Things J. 2019, 6, 8553–8562.

- Kolkar, R.; Singh Tomar, R.P.; Vasantha, G. IoT-based Human Activity Recognition Models based on CNN, LSTM and GRU. In Proceedings of the 2022 IEEE Silchar Subsection Conference (SILCON), Silchar, India, 4–6 November 2022; pp. 1–7.

- Yu, T.; Jin, H.; Nahrstedt, K. ShoesLoc: In-Shoe Force Sensor-Based Indoor Walking Path Tracking. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2019, 3, 31.

- Agarwal, P.; Alam, M. A Lightweight Deep Learning Model for Human Activity Recognition on Edge Devices. Procedia Comput. Sci. 2020, 167, 2364–2373.

- Ouyang, X.; Xie, Z.; Zhou, J.; Huang, J.; Xing, G. ClusterFL: A similarity-aware federated learning system for human activity recognition. In Proceedings of the 19th Annual International Conference on Mobile Systems, Applications, and Services, MobiSys ’21, New York, NY, USA, 24 June–2 July 2021; pp. 54–66.

- Choudhury, N.A.; Moulik, S.; Roy, D.S. Physique-Based Human Activity Recognition Using Ensemble Learning and Smartphone Sensors. IEEE Sens. J. 2021, 21, 16852–16860.

- Dong, M.; Han, J. HAR-Net: Fusing Deep Representation and Hand-crafted Features for Human Activity Recognition. arXiv 2018, arXiv:1810.10929.

- Long, J.; Sun, W.; Yang, Z.; Raymond, O.I. Asymmetric Residual Neural Network for Accurate Human Activity Recognition. Information 2019, 10, 203.

- Tuncer, T.; Ertam, F.; Dogan, S.; Aydemir, E.; Pławiak, P. Ensemble residual network-based gender and activity recognition method with signals. J. Supercomput. 2020, 76, 2119–2138.

- Ronald, M.; Poulose, A.; Han, D.S. iSPLInception: An Inception-ResNet Deep Learning Architecture for Human Activity Recognition. IEEE Access 2021, 9, 68985–69001.

- Mehmood, K.; Imran, H.A.; Latif, U. HARDenseNet: A 1D DenseNet Inspired Convolutional Neural Network for Human Activity Recognition with Inertial Sensors. In Proceedings of the 2020 IEEE 23rd International Multitopic Conference (INMIC), Bahawalpur, Pakistan, 5–7 November 2020; pp. 1–6.

- Demrozi, F.; Pravadelli, G.; Bihorac, A.; Rashidi, P. Human Activity Recognition Using Inertial, Physiological and Environmental Sensors: A Comprehensive Survey. IEEE Access 2020, 8, 210816–210836.

- Ramanujam, E.; Perumal, T.; Padmavathi, S. Human Activity Recognition With Smartphone and Wearable Sensors Using Deep Learning Techniques: A Review. IEEE Sens. J. 2021, 21, 13029–13040.

- Choudhury, N.A.; Soni, B. An Adaptive Batch Size-Based-CNN-LSTM Framework for Human Activity Recognition in Uncontrolled Environment. IEEE Trans. Ind. Inform. 2023, 19, 10379–10387.

- Anguita, D.; Ghio, A.; Oneto, L.; Parra, X.; Reyes-Ortiz, J.L. A Public Domain Dataset for Human Activity Recognition using Smartphones. In Proceedings of the European Symposium on Artificial Neural Networks, Bruges, Belgium, 24–26 April 2013; pp. 437–442.

- Weiss, G.M.; Yoneda, K.; Hayajneh, T. Smartphone and Smartwatch-Based Biometrics Using Activities of Daily Living. IEEE Access 2019, 7, 133190–133202. [Google Scholar] [CrossRef]

- Banos, O.; Garcia, R.; Holgado-Terriza, J.A.; Damas, M.; Pomares, H.; Rojas, I.; Saez, A.; Villalonga, C. mHealthDroid: A Novel Framework for Agile Development of Mobile Health Applications. In Proceedings of the International Workshop on Ambient Assisted Living and Daily Activities, Belfast, UK, 2–5 December 2014; pp. 91–98. [Google Scholar]

- Malekzadeh, M.; Clegg, R.G.; Cavallaro, A.; Haddadi, H. Mobile sensor data anonymization. In Proceedings of the International Conference on Internet of Things Design and Implementation, IoTDI ’19, New York, NY, USA, 15–18 April 2019; pp. 49–58. [Google Scholar] [CrossRef]

- Vavoulas, G.; Chatzaki, C.; Malliotakis, T.; Pediaditis, M.; Tsiknakis, M. The MobiAct Dataset: Recognition of Activities of Daily Living using Smartphones. In Proceedings of the International Conference on Information and Communication Technologies for Ageing Well and e-Health—Volume 1: ICT4AWE, (ICT4AGEINGWELL 2016), Rome, Italy, 21–22 April 2016; pp. 143–151. [Google Scholar] [CrossRef]

- Attal, F.; Mohammed, S.; Dedabrishvili, M.; Chamroukhi, F.; Oukhellou, L.; Amirat, Y. Physical Human Activity Recognition Using Wearable Sensors. Sensors 2015, 15, 31314–31338.

- Chavarriaga, R.; Sagha, H.; Calatroni, A.; Digumarti, S.T.; Tröster, G.; del R. Millán, J.; Roggen, D. The Opportunity challenge: A benchmark database for on-body sensor-based activity recognition. Pattern Recognit. Lett. 2013, 34, 2033–2042.

- Ismail Fawaz, H.; Lucas, B.; Forestier, G.; Pelletier, C.; Schmidt, D.F.; Weber, J.; Webb, G.I.; Idoumghar, L.; Muller, P.A.; Petitjean, F. InceptionTime: Finding AlexNet for time series classification. Data Min. Knowl. Discov. 2020, 34, 1936–1962.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, 7–9 May 2015.

- Semwal, V.B.; Gupta, A.; Lalwani, P. An optimized hybrid deep learning model using ensemble learning approach for human walking activities recognition. J. Supercomput. 2021, 77, 12256–12279.

- Garcia, K.D.; de Sá, C.R.; Poel, M.; Carvalho, T.; Mendes-Moreira, J.; Cardoso, J.M.; de Carvalho, A.C.; Kok, J.N. An ensemble of autonomous auto-encoders for human activity recognition. Neurocomputing 2021, 439, 271–280.

- Burns, D.; Boyer, P.; Arrowsmith, C.; Whyne, C. Personalized Activity Recognition with Deep Triplet Embeddings. Sensors 2022, 22, 5222.

- Singh, S.P.; Sharma, M.K.; Lay-Ekuakille, A.; Gangwar, D.; Gupta, S. Deep ConvLSTM With Self-Attention for Human Activity Decoding Using Wearable Sensors. IEEE Sens. J. 2021, 21, 8575–8582.

- Boutet, A.; Frindel, C.; Gambs, S.; Jourdan, T.; Ngueveu, R.C. DySan: Dynamically Sanitizing Motion Sensor Data Against Sensitive Inferences through Adversarial Networks. In Proceedings of the 2021 ACM Asia Conference on Computer and Communications Security, ASIA CCS ’21, New York, NY, USA, 7–11 June 2021; pp. 672–686.

- Sharshar, A.; Fayez, A.; Ashraf, Y.; Gomaa, W. Activity With Gender Recognition Using Accelerometer and Gyroscope. In Proceedings of the 2021 15th International Conference on Ubiquitous Information Management and Communication (IMCOM), Seoul, Republic of Korea, 4–6 January 2021; pp. 1–7.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).