Abstract

The precise and prompt identification of skin cancer is essential for effective treatment. Variations in colour within skin lesions are critical signs of malignancy; however, differences in imaging conditions can reduce the efficacy of deep learning models. Many previous studies have neglected this problem and have frequently relied on deep features from a single layer of a single deep learning model. This study presents a new hybrid deep learning model that integrates the discrete cosine transform (DCT) with multiple convolutional neural network (CNN) architectures to improve skin cancer classification. First, DCT is applied to dermoscopic images to enhance them and correct their colour distortions. Next, several CNNs are trained separately on the original dermoscopic images and on the DCT-enhanced images, and deep features are extracted from two deep layers of each CNN. The proposed hybrid model then performs a triple deep feature fusion. In the first stage, the discrete wavelet transform (DWT) merges the high-dimensional features obtained from the first layer of each CNN, which lowers their dimension and provides a time–frequency representation; in addition, for each CNN, the deep features of the second layer are concatenated. In the second stage, for each CNN, the merged first-layer features are combined with the second-layer features to create an effective feature vector. Finally, in the third stage, these bi-layer features of the various CNNs are integrated. Training multiple CNNs on both the original dermoscopic images and the DCT-enhanced images, retrieving features from two separate layers, and integrating the features of the multiple CNNs yields a comprehensive feature representation. Experimental results showed an accuracy of 96.40% after the trio-deep feature fusion. This shows that merging DCT-enhanced images and dermoscopic images can improve diagnostic accuracy. The hybrid trio-deep feature fusion model outperforms the individual CNN models and most recent studies, demonstrating its effectiveness.

1. Introduction

Skin cancer is widely recognised as one of the most prevalent and significant types of cancer worldwide, and its incidence has been increasing steadily in recent years. In 2023, an estimated 97,610 individuals in the United States were projected to be diagnosed with skin cancer [1]. Of the various types of skin cancer, melanoma is the most fatal [2]: it is responsible for 75% of skin cancer deaths despite comprising only 7% of cases [3]. Fortunately, the likelihood of survival is high when the disease is detected and treated at an early stage [4]. Without treatment, the disease progressively spreads to other parts of the body, significantly reducing the likelihood of successful treatment [5,6]. Early detection is therefore crucial.

The traditional approach for detecting skin cancer involves a physical examination followed by a biopsy. Although biopsy is an effective way to identify skin cancer, the procedure is time-consuming and its results are not always conclusive. Dermoscopic imaging has emerged as the predominant non-invasive technique used by dermatologists to identify skin lesions [7]. Nevertheless, the main drawbacks of dermoscopy are the extensive training it requires, its subjectivity, and the shortage of experienced dermatologists. Furthermore, different types of skin cancer share similar characteristics, which can lead to misdiagnosis [8].

Advances in digital image processing and artificial intelligence, including machine learning and deep learning, have led to the development of several Computer-Aided Diagnosis (CAD) systems for diagnosing various diseases over the last twenty years [9,10,11,12,13,14]. Conventional machine learning techniques require substantial prior knowledge and time-consuming preprocessing and handcrafted feature extraction phases. Deep learning-based CAD systems, on the other hand, learn features directly from the data and do not need these steps. Multiple deep learning schemes have been developed for classification, segmentation, and detection [15,16,17,18]. Among these methods, convolutional neural networks (CNNs) are particularly well-known approaches for image recognition and classification [19,20,21], and CNN-based CAD systems have been reported to identify skin cancer with accuracy surpassing that of dermatologists.

Colour plays a crucial role in identifying malignant lesions. The presence of different shades within a skin lesion can indicate irregularities that help in identifying skin cancer [4,5], and timely detection of changes in a lesion's hue is crucial for prompt diagnosis and effective treatment [6]. Image quality is therefore an important consideration when constructing deep neural network models and requires appropriate handling; however, most previous studies did not consider this issue when building their models. Applying the Discrete Cosine Transform (DCT) to dermoscopic images can improve image quality and correct colour distortions, resulting in a more precise depiction of skin features. Moreover, combining features derived from CNNs trained on both the enhanced and the original images can improve diagnostic accuracy. This approach exploits the complementary information offered by the different image representations, capturing subtle image attributes that could be missed when relying on a single image representation.

Taking into account the significance of precise diagnosis and the difficulties caused by variations in lighting and colour, this study proposes a DCT-based framework that enhances dermoscopic images and corrects their colour distortions. The framework examines how using both the original and the enhanced dermoscopic images affects the diagnostic efficacy for skin cancer. It is built upon three CNNs with different architectures. The CNNs are first trained on the original skin cancer images and, separately, on the DCT-enhanced images. Features are then extracted from two layers of each deep learning model and combined. This study investigates whether combining the features gathered from these two layers of each network can enhance performance. Additionally, the bi-layer deep features of the three CNNs trained on the original and DCT-enhanced images are merged to explore whether integrating the features retrieved from networks fed with the original images and with the DCT-enhanced images can improve classification accuracy. The novelty and contributions of this study are as follows:

- Utilising a diverse set of deep networks with different architectures, in contrast to existing deep learning-based frameworks for skin cancer classification that rely on a single deep network.

- Extracting features from two distinct layers (layer 1 and layer 2) of each CNN, rather than relying solely on the features of a single deep layer.

- Merging information from the original dermoscopic images and the DCT-enhanced images by extracting deep features from CNNs trained on each type of image.

- Fusing the high-dimensional layer 1 features of each CNN (trained on the original and DCT-enhanced images) using the discrete wavelet transform (DWT), which reduces their size and provides a time–frequency representation that improves performance.

- Concatenating the layer 2 features of each CNN (trained on the original and DCT-enhanced images) and then integrating them with the fused layer 1 deep features to further improve classification accuracy.

- Integrating the bi-layer deep features of the three CNNs to combine the benefits of each CNN architecture, which typically achieves better results than relying on a single architecture.

2. Related Works

Several studies have demonstrated that CAD systems can improve skin cancer diagnosis rates. The literature offers a wide range of machine learning-based automated methods to address this challenge, which fall into two categories: classic and deep learning-based automated skin cancer diagnosis. Classic approaches require extensive preprocessing and processing steps, such as segmentation and handcrafted feature extraction, which are prone to error. Deep learning-based approaches, in contrast, do not require these steps and can automatically extract features from the input and produce a decision. Deep learning approaches, particularly pre-trained CNN models, show potential for improving both the accuracy and the efficiency of skin cancer detection, segmentation, and classification. This section focuses on previous studies that have used deep learning methods, specifically CNNs, to classify skin cancer. Some studies addressed binary skin cancer classification. For example, the paper [22] presented a web-based CAD model that classified skin lesions as benign or malignant. The CAD system utilised several CNNs to identify malignant melanoma; ResNet-50 achieved the highest accuracy among all tested models, 94%, with an F1-score of 93.9%. The study [23] introduced a new, efficient CNN named TurkerNet, which consists of four key components: the input block, the residual bottleneck block, the efficient block, and the output block. TurkerNet achieved an accuracy of 92.12% in binary skin cancer classification.

Other studies addressed multi-class skin cancer classification. For instance, the authors of [24] created a CAD system for the early-stage multi-class categorisation of skin cancer using the HAM10000 dataset. They employed a hair removal image processing algorithm and an Ensemble Visual Geometry Group-16 (EVGG-16) CNN. The primary concept was to assign distinct initialisations to the VGG-16 model in three separate scenarios and then combine the outcomes through an ensemble method. The VGG-16 model reached an average accuracy of 87% and an F1-score of 86%. Without the hair removal step, the EVGG-16 model achieved an average accuracy of 88% and an F1-score of 88%; with hair removal, the same model attained an average accuracy of 89% and an F1-score of 88%. The authors of [1] employed median filtering and random sampling to enhance the images and increase their number, and then fed these images into a Gated Recurrent Unit (GRU) network optimised by the Orca Predation Algorithm (OPA), achieving a sensitivity of 0.95, a specificity of 0.97, and an accuracy of 0.98. The study [25] provided a CAD framework that employed a customised CNN design along with a variant of a generative adversarial network (GAN) for preprocessing. The preprocessing greatly enhanced the model's effectiveness, yielding an accuracy exceeding 98% on the HAM10000 dataset.

The authors of [26] applied traditional image enhancement techniques and various CNN structures, reaching a maximum accuracy of 92.08% with ResNet-50. The authors of [27] tackled data imbalance using augmentation techniques and employed three CNN models, namely InceptionV3, AlexNet, and RegNetY-320, of which RegNetY-320 performed best. The researchers in [28] combined image filtering, segmentation, and CNN-based classification using ResNet-50 and DenseNet-169. The authors of [29] used the wavelet transform to extract features from skin cancer images, followed by classification with a deep residual neural network (R-CNN) and an Extreme Learning Machine, reaching an F1-score of 93.44% and an accuracy of 95.73%. Another study [30] integrated a feature pyramid network (FPN) and Mask R-CNN for segmentation with a CNN for classification, resulting in an accuracy of 86.5%. Khan et al. [31] employed Mask R-CNN and DenseNet to derive features and a support vector machine (SVM) for classification, achieving an accuracy of 88.5%. In [32], DenseNet and EfficientNet were used for skin cancer classification, with EfficientNet achieving an accuracy of 85.8%.

In addition, the study [33] investigated Inception-ResNet-v2, obtaining an accuracy of 95.09% on the HAM10000 dataset. In [34], a novel contrast enhancement approach was introduced together with several CNN topologies, and features were integrated using serial–harmonic mean fusion and marine predator optimisation; the study attained accuracies of 85.4% and 98.80% on two versions of the ISIC dataset, respectively. In [35], a CAD system was introduced that used a CNN model and feature selection, exploiting transfer learning, genetic algorithms for hyperparameter optimisation, and feature combination strategies; the technique achieved accuracies of 96.1% and 99.9% on the ISIC2018 and ISIC2019 datasets, respectively. The study [36] combined deep features extracted from three CNNs with handcrafted features to recognise skin lesion subcategories. In [37], three CNNs were employed to obtain multiple deep features, which were then fused, and a feature selection approach was applied to select among them, reaching an accuracy of 0.965. In [38], a CAD system was constructed based on multi-directional CNNs trained with Gabor wavelet images, reaching an accuracy of 91.70% on the HAM10000 dataset.

The results of these investigations emphasise the significance of image preprocessing, feature extraction, and reliable classification models for the precise identification of skin cancer. Nevertheless, there is still room for improvement in managing varying image conditions, improving feature representation, and investigating innovative fusion approaches. A review of the literature shows that, despite the many existing diagnostic and classification systems for skin cancer, several gaps still need attention. First, many CAD systems in the literature perform well at differentiating benign from malignant skin lesions, but this does not hold when distinguishing among different types of skin cancer.

Second, most studies have concentrated on obtaining deep features from a single deep layer. Recent studies have shown that different layers of a CNN capture different forms of information [39,40,41]: CNN layers progressively learn more complex characteristics, with the lower layers capturing fundamental characteristics such as edges and textures and the deeper layers acquiring more intricate, disease-specific patterns. Prior investigations have shown that combining data from several layers often produces better classification accuracy than using information from a single layer [39,40,41], because each layer captures a different aspect of the image and merging them gives the classifier a more consistent interpretation. The proposed hybrid model therefore integrates features from multiple layers to build a multi-level feature hierarchy that improves the characterisation of skin diseases.

Third, previous CAD systems usually rely on individual CNNs for classification, whereas combining the more intricate characteristics learned by several CNNs with distinct structures may improve diagnostic accuracy. Fourth, most current CAD systems have not taken the correction of colour distortion into account when constructing their models, although this could improve the identification performance of deep networks. Finally, most existing CAD systems rely exclusively on spatial information to construct their models, whereas incorporating time–frequency information alongside spatial data has the potential to enhance performance.

This study presents a framework that exploits DCT to improve image quality and resolve colour distortions in dermoscopic images, taking into consideration the importance of accurate diagnosis and the challenges posed by variations in lighting and colour. The framework assesses the impact of using both the original and the enhanced dermoscopic images on the diagnostic effectiveness for skin cancer. It is constructed using three CNNs with distinct architectures, which are first trained on the original skin cancer images and then, separately, on the DCT-enhanced images. Features are then extracted from two layers of each CNN and combined, to investigate whether merging the features of each CNN's two layers can enhance performance. In addition, the bi-layer deep features of the three CNNs trained on the original and DCT-enhanced images are combined to investigate whether merging the deep features obtained from CNNs trained on the original images and on the DCT-enhanced images can improve classification accuracy.

3. Materials and Methods

3.1. HAM10000 Skin Cancer Dataset

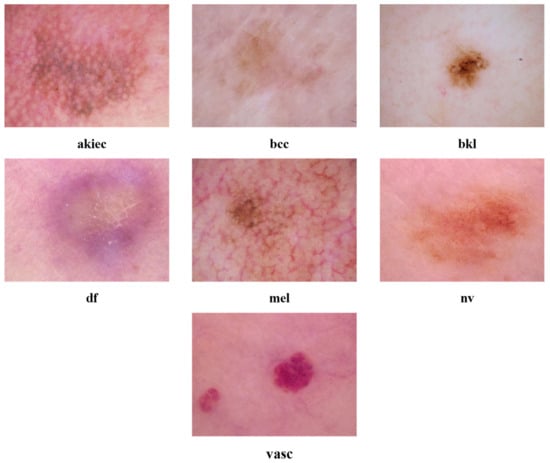

Deep learning methods require a significant amount of data to generate acceptable outcomes. Obtaining a varied collection of skin cancer images is difficult, and the limited availability of training data is a major concern when employing deep learning techniques. To tackle these problems, the current research adopts the HAM10000 dataset, which comprises 10,015 dermoscopic images of diverse skin lesions [42]. The images are classified into seven categories according to their particular features: Melanocytic nevi (nv), Melanoma (mel), Benign keratosis-like lesions (bkl), Basal cell carcinoma (bcc), Actinic keratoses (akiec), Vascular lesions (vasc), and Dermatofibroma (df). This dataset is extensively used in dermatological studies for skin lesion classification. It encompasses a wide variety of skin disorders commonly seen in clinical practice, which makes it an appropriate choice for assessing the performance of dermoscopic image classification models. Figure 1 shows a representative example from each category. The HAM10000 dataset is imbalanced, with certain classes having notably fewer instances than others; Table 1 shows the uneven distribution of images among the classes.

Figure 1.

A representative example from every skin cancer category of the HAM10000 dataset.

Table 1.

Photo distribution among different skin cancer categories of the HAM10000 dataset.

3.2. Discrete Cosine Transform

The DCT is a fundamental method in image processing, used in tasks such as image compression and colour correction. By decomposing an image into its frequency components, it allows the colour content of the image to be manipulated effectively. The image is first divided into small blocks, for example, 8 × 8 pixels, and the DCT converts each block into a matrix of coefficients. The top-left (DC) coefficient is proportional to the mean pixel value of the block, and the remaining (AC) coefficients represent its various frequency components. The coefficients reflect the extent to which each frequency component contributes to the original block: the low-frequency components represent the global colour and brightness, and the high-frequency components capture intricate details and textures. To correct colour imbalances, particular frequency coefficients can be adjusted, for example by scaling or shifting their values. By adjusting these coefficients strategically, colour corrections can be made without a noticeable decrease in image quality [43]. The altered coefficients are then transformed back to the spatial domain by applying the inverse DCT, which produces a colour-corrected image [44]. This strategy provides a mathematically simple and computationally efficient technique for colour correction, making it a useful approach in image processing applications.
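The block-wise procedure described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the correction rule of [43] or [44]: the helper name `block_dct_adjust` and the uniform `dc_scale` and `ac_scale` factors are hypothetical, and the channel height and width are assumed to be multiples of the block size. It uses the orthonormal DCT-II, for which the DC coefficient of an 8 × 8 block equals 8 times the block mean.

```python
import numpy as np

def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II matrix C: C @ x is the 1-D DCT of x, and C.T inverts it."""
    k = np.arange(n)[:, None]  # frequency index (rows)
    i = np.arange(n)[None, :]  # sample index (columns)
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)  # the DC basis vector uses a smaller normalisation
    return c

def block_dct_adjust(channel: np.ndarray, block: int = 8,
                     dc_scale: float = 1.0, ac_scale: float = 1.0) -> np.ndarray:
    """Hypothetical helper: per-block 2-D DCT, scale DC and AC coefficients, inverse DCT."""
    h, w = channel.shape
    if h % block or w % block:
        raise ValueError("pad the channel to a multiple of the block size first")
    c = dct_matrix(block)
    out = np.empty((h, w), dtype=float)
    for r in range(0, h, block):
        for s in range(0, w, block):
            tile = channel[r:r + block, s:s + block].astype(float)
            coeffs = c @ tile @ c.T          # forward 2-D DCT-II of the tile
            dc = coeffs[0, 0]                # equals block * mean(tile) here
            coeffs *= ac_scale               # AC terms: local contrast and texture
            coeffs[0, 0] = dc * dc_scale     # DC term: mean brightness or colour of the block
            out[r:r + block, s:s + block] = c.T @ coeffs @ c  # inverse 2-D DCT
    return out
```

With both scales set to 1.0 the input is reconstructed up to floating-point error, because the orthonormal DCT matrix is inverted by its transpose; raising `dc_scale` changes only the block means, whereas `ac_scale` controls local contrast and texture.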

3.3. Discrete Wavelet Transform

DWT is a popular signal-processing approach that provides a time–frequency representation of its input. It decomposes the input data into a set of wavelet coefficients by applying high-pass and low-pass filters at different scales. At the first decomposition level, the input is split into approximation (CA) and detail (CD) coefficients. The CA component captures the overall characteristics, whereas the CD component describes the high-frequency elements. This process is then repeated on the CA coefficients, decomposing them into progressively coarser scales, with each level contributing its own detail components. The result is a hierarchical, multi-resolution representation of the input [45].
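A minimal sketch of a multi-level Haar DWT on a 1-D signal, such as a deep feature vector, is shown below. The function names are hypothetical, and padding odd-length inputs by repeating the last sample is an assumption; wavelet libraries offer several boundary modes. Each level halves the length of the approximation, which is why the DWT can also reduce dimensionality.

```python
import numpy as np

def haar_dwt_1d(x):
    """One level of the orthonormal Haar DWT: returns (cA, cD)."""
    x = np.asarray(x, dtype=float)
    if x.size % 2:                     # odd length: repeat the last sample (assumed boundary rule)
        x = np.append(x, x[-1])
    even, odd = x[0::2], x[1::2]
    cA = (even + odd) / np.sqrt(2.0)   # low-pass branch: local averages (coarse trend)
    cD = (even - odd) / np.sqrt(2.0)   # high-pass branch: local differences (fine detail)
    return cA, cD

def haar_wavedec(x, levels):
    """Re-apply the DWT to the approximation; returns [cA_L, cD_L, ..., cD_1]."""
    coeffs = []
    cA = np.asarray(x, dtype=float)
    for _ in range(levels):
        cA, cD = haar_dwt_1d(cA)
        coeffs.insert(0, cD)           # coarser detail bands go to the front
    coeffs.insert(0, cA)
    return coeffs
```

For example, `haar_wavedec([4, 2, 6, 8], levels=2)` gives a level-2 approximation of 10, a level-2 detail of -4, and level-1 details of ±√2; because the Haar filters are orthonormal, the total energy of the coefficients equals that of the input (120 in this case).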

3.4. Proposed Hybrid Model

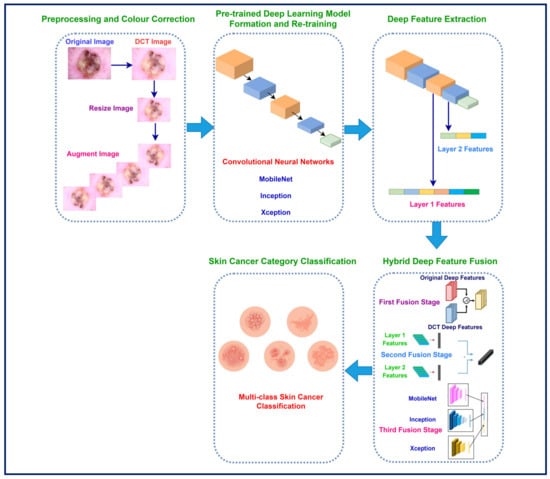

The proposed hybrid model classifies skin cancer into seven categories in five steps: preprocessing and colour correction, pre-trained deep learning model formation and retraining, deep feature extraction, hybrid deep feature fusion, and skin cancer category classification. First, in the preprocessing and colour correction step, DCT is applied to the dermoscopic images to correct colour distortions, and the original dermoscopic images and the DCT-enhanced images are resized and augmented. In the second step, three pre-trained CNNs with different architectures are constructed and retrained using the original dermoscopic and DCT-enhanced images. In the third step, deep features are extracted from two layers of each CNN trained on each type of image. In the fourth step, the deep features are fused in three fusion stages. Finally, skin cancer is classified into seven categories using support vector machine (SVM) classifiers. The steps of the proposed hybrid model are illustrated in Figure 2.

Figure 2.

The proposed hybrid model steps for improved skin cancer classification.

3.4.1. Preprocessing and Colour Correction

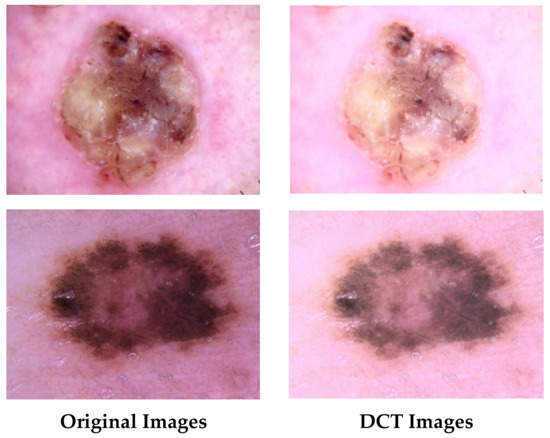

The DCT colour enhancement procedure employed in this study is based on the technique described in [44]. The procedure starts by converting the image from the RGB colour space to the YCbCr colour space, which separates the luminance (brightness) and chrominance (colour) information. DCT is then applied to each colour component, decomposing it into frequency coefficients. Next, the DCT coefficients are adjusted using a predetermined function, which commonly emphasises specific frequency bands linked to colour characteristics. The algorithm assigns distinct scaling parameters to the DC coefficient, which represents the mean intensity, and to the AC coefficients, which describe the details, of each colour component. The altered coefficients are converted back to the spatial domain through the inverse DCT, and the resulting enhanced image is converted back to the RGB colour space. Figure 3 shows examples of the original and colour-enhanced images.
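The colour enhancement pipeline described above can be outlined as follows. This sketch is an illustration under explicit assumptions rather than the exact algorithm of [44]: it uses the full-range BT.601 (JPEG) RGB-to-YCbCr matrix with chroma centred at zero, applies a single DCT to each whole channel instead of 8 × 8 blocks, and replaces the predetermined adjustment function with hypothetical constant `(dc_gain, ac_gain)` pairs per channel.

```python
import numpy as np

# Full-range BT.601 (JPEG/JFIF) RGB -> YCbCr matrix. Cb and Cr come out centred at 0;
# JPEG stores them with a +128 offset, which is omitted here for processing.
RGB2YCC = np.array([[0.299, 0.587, 0.114],
                    [-0.168736, -0.331264, 0.5],
                    [0.5, -0.418688, -0.081312]])
YCC2RGB = np.linalg.inv(RGB2YCC)

def dct_matrix(n):
    """Orthonormal DCT-II matrix; its transpose is its inverse."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)
    return c

def dct_colour_enhance(rgb, gains=((1.0, 1.0), (1.0, 1.0), (1.0, 1.0))):
    """Hypothetical sketch: gains[c] = (dc_gain, ac_gain) for the Y, Cb and Cr channels."""
    rgb = np.asarray(rgb, dtype=float)             # H x W x 3, values in [0, 255]
    h, w, _ = rgb.shape
    ch, cw = dct_matrix(h), dct_matrix(w)
    ycc = rgb @ RGB2YCC.T                          # separate luminance and chrominance
    out = np.empty_like(ycc)
    for c, (dc_gain, ac_gain) in enumerate(gains):
        coeffs = ch @ ycc[..., c] @ cw.T           # 2-D DCT of the whole channel
        dc = coeffs[0, 0]                          # proportional to the channel mean
        coeffs *= ac_gain                          # detail and contrast terms
        coeffs[0, 0] = dc * dc_gain                # mean intensity or mean colour
        out[..., c] = ch.T @ coeffs @ cw           # inverse 2-D DCT
    return np.clip(out @ YCC2RGB.T, 0.0, 255.0)    # back to RGB, clipped to the valid range
```

With all gains equal to 1.0 the image is returned unchanged; a luminance DC gain above 1 brightens the image uniformly, and chroma gains below 1 desaturate it (gains of 0 yield a greyscale image).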

Figure 3.

Examples of the original and DCT-enhanced images.

Both the original dermoscopic and the DCT-enhanced images are then resized to 299 × 299 × 3 for Xception and Inception and to 224 × 224 × 3 for MobileNet, to match the input sizes of the CNNs. Subsequently, both sets of images are split into training and testing partitions with a 70:30 ratio, and the training partitions are augmented using several augmentation techniques, which are detailed in Table 2.
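One way to implement the 70:30 split is sketched below. The text does not state whether the split was stratified by class or how the paired images were kept aligned, so both are assumptions here: the hypothetical helper `stratified_split` keeps roughly 70% of every class in training, and the same index arrays would be applied to the original and DCT-enhanced image sets.

```python
import numpy as np

def stratified_split(labels, train_fraction=0.7, seed=0):
    """Hypothetical helper: per-class shuffled split returning (train_idx, test_idx)."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    train_parts, test_parts = [], []
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)          # indices of this class
        rng.shuffle(idx)                             # random order within the class
        n_train = int(round(train_fraction * idx.size))
        train_parts.append(idx[:n_train])
        test_parts.append(idx[n_train:])
    return np.sort(np.concatenate(train_parts)), np.sort(np.concatenate(test_parts))

# The same index arrays are applied to both image sets, e.g.
# x_orig_train, x_dct_train = x_orig[train_idx], x_dct[train_idx]
```

Sharing the indices between the two image sets ensures that no test image has its DCT-enhanced counterpart in the training partition, and stratifying preserves the class imbalance of HAM10000 in both partitions.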

Table 2.

Augmentation techniques applied to the training partitions.

3.4.2. Pre-Trained Deep Learning Model Formation and Retraining

This phase uses transfer learning to build three CNNs pre-trained on images from ImageNet [46]: Inception, MobileNet, and Xception. Inception and Xception focus on improving the learned features through the efficient use of convolutional filters [47,48]. Inception uses inception modules, which incorporate filters of different sizes within a single layer, allowing a broad spectrum of visual information to be captured. Xception builds upon Inception by using depthwise separable convolutions, which improve efficiency while maintaining feature learning capability. Both architectures preserve intricate feature representations, but their design choices may affect training time and memory requirements. MobileNet, on the other hand, offers several benefits over Xception and Inception. Its depthwise separable convolutions significantly reduce the computational cost compared with conventional convolutional layers, making it efficient in terms of storage and processing power. Furthermore, MobileNet can be scaled to achieve different trade-offs between accuracy and model size, making it adaptable to diverse hardware limitations [49].
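The efficiency argument for depthwise separable convolutions can be made concrete by counting weights. The sketch below ignores biases and uses hypothetical layer sizes (a 3 × 3 convolution with 256 input and 256 output channels); it is a worked count, not a measurement of the networks used in this study.

```python
def standard_conv_weights(k: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (biases ignored)."""
    return k * k * c_in * c_out

def separable_conv_weights(k: int, c_in: int, c_out: int) -> int:
    """Depthwise k x k filter per input channel, followed by a 1 x 1 pointwise convolution."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise

std = standard_conv_weights(3, 256, 256)   # 589,824 weights
sep = separable_conv_weights(3, 256, 256)  # 67,840 weights
ratio = sep / std                          # equals 1/256 + 1/9, about 0.115
```

For a k × k kernel the ratio of separable to standard weights is 1/C_out + 1/k², and the same ratio applies to multiply-accumulate operations at a fixed output resolution, which is the source of the roughly eight- to nine-fold saving for 3 × 3 kernels.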

In this study, the number of outputs of the final fully connected layer of each CNN was changed to 7, corresponding to the seven skin cancer classes in the HAM10000 dataset. Several hyper-parameters were then tuned, as described in the experimental setup section, and the three networks were retrained. Each of the three CNNs was trained independently on the DCT-enhanced images and on the original dermoscopic images; in other words, every deep learning model was trained separately on each of the two image types.

3.4.3. Deep Feature Extraction

After each CNN has been trained on both the DCT-enhanced and the dermoscopic images, transfer learning is used to extract deep features from every CNN. Rather than obtaining deep features from only one layer, this stage extracts them from two distinct layers of each CNN: the final pooling layer, referred to as layer 1, and the fully connected layer, referred to as layer 2. Table 3 shows the length of the deep features acquired from both layers of each CNN.

Table 3.

The length of the deep features acquired from both layers of each CNN.

3.4.4. Hybrid Deep Feature Fusion

The deep feature fusion step is a crucial component of the hybrid model and consists of three fusion stages. As noted in Table 3, the layer 1 features are high-dimensional. Therefore, in the first stage, the layer 1 features of each CNN obtained from the DCT-enhanced images and from the original dermoscopic images are fused using DWT, which reduces their dimensionality and provides a time–frequency representation, improving the capacity to distinguish between the various types of skin cancer. This study uses the Haar mother wavelet with two decomposition levels. Also in the first fusion stage, for each CNN trained independently on the DCT-enhanced images and the original dermoscopic images, the layer 2 deep features are concatenated. In the second stage, the fused layer 1 features of each CNN are combined with its fused layer 2 features to generate a highly efficient feature vector. Finally, in the third stage, the fused bi-layer features of the three CNNs are combined. The length of the feature vector after each fusion stage is shown in Table 4.
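The three fusion stages can be sketched as array operations on per-image feature matrices of shape (n_samples, n_features). Two details are assumptions because the text leaves them open: the two layer 1 matrices are concatenated before the two-level Haar DWT, and only the level-2 approximation coefficients are kept, so each layer 1 block shrinks by a factor of four. The helper names are hypothetical, and the feature sizes used in the usage note are illustrative, not the values in Table 3 or Table 4.

```python
import numpy as np

def haar_approx(x, levels=2):
    """Row-wise Haar approximation (low-pass) coefficients after `levels` levels."""
    a = np.asarray(x, dtype=float)
    for _ in range(levels):
        if a.shape[-1] % 2:                                # odd length: repeat the last column
            a = np.concatenate([a, a[..., -1:]], axis=-1)
        a = (a[..., 0::2] + a[..., 1::2]) / np.sqrt(2.0)   # each level halves the length
    return a

def fuse_cnn(l1_orig, l1_dct, l2_orig, l2_dct, levels=2):
    """Stages 1 and 2 for one CNN trained separately on original and DCT-enhanced images."""
    l1 = haar_approx(np.concatenate([l1_orig, l1_dct], axis=1), levels)  # stage 1: DWT fusion of layer 1
    l2 = np.concatenate([l2_orig, l2_dct], axis=1)                       # stage 1: concatenate layer 2
    return np.concatenate([l1, l2], axis=1)                              # stage 2: bi-layer vector

def fuse_all(per_cnn):
    """Stage 3: concatenate the bi-layer vectors of all CNNs."""
    return np.concatenate(per_cnn, axis=1)
```

With illustrative sizes of 1280 layer 1 features and 7 layer 2 features per image type, `fuse_cnn` returns 640 + 14 = 654 features per image. When each layer 1 length is a multiple of 4, concatenating before or after the DWT gives the same approximation coefficients, so the ordering assumption does not change the result in that case.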

Table 4.

The length of the feature vector after each fusion stage.

3.4.5. Skin Cancer Category Classification

The last step of the proposed hybrid model is skin cancer category classification. In this step, three SVM classifiers with different kernels are built: linear SVM, quadratic SVM, and cubic SVM. These classifiers are used in each of the three fusion stages to classify skin cancer into seven categories, and their performance is assessed with five-fold cross-validation.
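The three SVM variants differ only in their kernel. The sketch below computes the kernel (Gram) matrices and a five-fold index split in NumPy; it assumes the common polynomial form (1 + x·y)^p for the quadratic (p = 2) and cubic (p = 3) kernels, since kernel scale and offset settings are not given in the text, and the helper names are hypothetical. Training the SVMs themselves would normally be delegated to an SVM library.

```python
import numpy as np

def svm_kernel(X, Y, degree):
    """Gram matrix: degree 1 -> linear x.y; degree 2 or 3 -> polynomial (1 + x.y)**degree."""
    G = np.asarray(X, dtype=float) @ np.asarray(Y, dtype=float).T
    return G if degree == 1 else (1.0 + G) ** degree

def kfold_indices(n, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs for k folds of a shuffled index range."""
    order = np.random.default_rng(seed).permutation(n)
    folds = np.array_split(order, k)   # fold sizes differ by at most one
    for i in range(k):
        val = folds[i]
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train, val
```

Each sample appears in exactly one validation fold, so the five validation scores together cover the whole dataset; the polynomial kernels remain valid (positive semi-definite) Gram matrices for any feature vectors.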

4. Experimental Setting and Evaluation Metrics

This section presents the evaluation metrics used to assess the proposed hybrid model, along with the tuned hyper-parameter settings. Several hyper-parameters of each CNN were modified; their values are shown in Table 5. The mini-batch size is 10, the maximum number of epochs is 40, the learning rate is 0.001, and the validation frequency is 701. All other parameters were kept at their default values. The networks were trained with SGDM (stochastic gradient descent with momentum).
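Two details of this configuration can be checked with a short calculation. Assuming the validation frequency is counted in iterations and was chosen to validate once per epoch on the un-augmented training partition (an assumption the text does not state), 70% of the 10,015 HAM10000 images gives 7,010 training images, which with a mini-batch size of 10 is 701 iterations per epoch. The SGDM update is also sketched; the momentum value of 0.9 is an assumed default, as the text does not list it.

```python
import math

N_IMAGES = 10_015        # HAM10000 size
TRAIN_FRACTION = 0.70    # 70:30 split
MINI_BATCH = 10          # mini-batch size from Table 5

n_train = math.floor(N_IMAGES * TRAIN_FRACTION)   # 7010 images (before augmentation)
iters_per_epoch = n_train // MINI_BATCH           # 701 iterations per epoch

def sgdm_step(w, v, grad, lr=1e-3, momentum=0.9):
    """One SGD-with-momentum update (momentum=0.9 is an assumption):
    v <- momentum * v - lr * grad, then w <- w + v."""
    v = momentum * v - lr * grad
    return w + v, v
```

The velocity term `v` accumulates past gradients, which smooths the updates from small mini-batches such as the batch size of 10 used here.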

Table 5.

The modified hyper-parameters information for the deep learning models.

Evaluation measures are essential for evaluating the effectiveness of deep learning methods, providing useful information on how well they perform. The main indicators employed to assess networks are accuracy, precision, sensitivity, specificity, Mathew correlation coefficient (MCC), F1-score, receiving characteristics curve (ROC), and the area under this curve (AUC). Accuracy is a metric that quantifies the proportion of accurate predictions out of the overall number of predictions, offering a comprehensive assessment of the efficacy of a given model. Precision measures how accurate the model is in properly recognising positive cases, whereas sensitivity, also known as the true positive rate (TPR), evaluates how well it can obtain all positive examples. The F1-score provides a comprehensive measure of the model’s efficiency by taking into account both precision and recall. These metrics are essential for identifying overfitting, fine-tuning model settings, and guaranteeing reliable performance. Specificity, or the true negative rate (TNR), is the percentage of correctly categorised negative examples out of the overall number of negative observations. It is additionally referred to as the opposite of sensitivity. In the classification of multiple classes, the specificity is determined by calculating the specificity for each individual category followed by finding the mean among these specificities. The AUC measure, which is frequently employed in binary classification purposes, may additionally be expanded to encompass multiple class cases. It is a metric used to assess the effectiveness of a model in ranking examples of various categories in multiclass classification. The MCC metric quantifies the degree of correlation among the predicted and the actual classification labels. In the case of multi-class classification, it is determined by computing a one-vs.-rest MCC for every category and subsequently averaging the outcomes. 
These metrics are calculated using Formulas (1)–(7).

In these formulas, TP is the number of positive (P) cases correctly predicted as positive, TN is the number of negative (N) cases correctly predicted as negative, FP is the number of negative cases incorrectly predicted as positive, and FN is the number of positive cases incorrectly predicted as negative.
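As a concrete illustration of the one-vs.-rest computation described above, the sketch below derives TP, FP, FN, and TN for every class from a multi-class confusion matrix and macro-averages the resulting metrics. It is a minimal sketch assuming NumPy and a confusion matrix with true classes in rows and predicted classes in columns; the function names `one_vs_rest_metrics` and `_safe_div` are hypothetical and not taken from the study.

```python
import numpy as np

def _safe_div(num, den):
    """Element-wise num / den, returning 0 where den == 0."""
    num = np.asarray(num, dtype=float)
    den = np.asarray(den, dtype=float)
    out = np.zeros_like(num)
    np.divide(num, den, out=out, where=den != 0)
    return out

def one_vs_rest_metrics(cm):
    """Macro-averaged metrics from a K x K confusion matrix (rows: true, cols: predicted)."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp   # predicted as class k, truly another class
    fn = cm.sum(axis=1) - tp   # truly class k, predicted as another class
    tn = total - tp - fp - fn  # neither truly nor predicted as class k

    precision = _safe_div(tp, tp + fp)
    sensitivity = _safe_div(tp, tp + fn)   # recall / TPR
    specificity = _safe_div(tn, tn + fp)   # TNR
    f1 = _safe_div(2 * precision * sensitivity, precision + sensitivity)
    mcc = _safe_div(tp * tn - fp * fn,
                    np.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)))
    # Per-class values are averaged with equal weight (macro averaging)
    return {
        "accuracy": tp.sum() / total,
        "precision": precision.mean(),
        "sensitivity": sensitivity.mean(),
        "specificity": specificity.mean(),
        "f1": f1.mean(),
        "mcc": mcc.mean(),
    }

m = one_vs_rest_metrics([[8, 1, 1], [2, 7, 1], [0, 0, 10]])
print(round(m["accuracy"], 4), round(m["specificity"], 4))  # 0.8333 0.9167
```

Micro-averaging, which pools TP, FP, FN, and TN over all classes before dividing, generally gives different values from the macro average computed here.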

5. Experimental Results

This section presents the classification results of the hybrid three-stage deep feature fusion. The first subsection covers the outcomes of the first fusion stage, in which the layer 1 features of each CNN, trained separately on the dermoscopic and the DCT-enhanced images, are merged using the DWT. It also presents and discusses the results of concatenating the layer 2 deep features of the same network. The second subsection presents the results of combining the layer 1 and layer 2 deep features of each CNN trained separately on the dermoscopic and DCT-enhanced images. Finally, the third subsection reports the outcomes of merging the dual-layered deep features of the three networks.

5.1. First Fusion Stage Results

The results of fusing the layer 1 deep features of each CNN and of concatenating the layer 2 deep features are displayed in Table 6 and Table 7, respectively. Table 6 shows the outcomes of the first fusion stage, in which the deep features obtained from layer 1 of the CNNs trained on the dermoscopic and DCT-enhanced images are merged using the DWT. The results show a clear improvement in classification accuracy when the deep features of the CNN trained on the original images are combined with the DCT image-based deep features, compared with using the DCT image-based deep features alone. MobileNet reaches an accuracy of 88.9% using deep features from the DCT images, which rises to 91.3% when these are combined with the dermoscopic image features. Comparable improvements are observed for the Inception and Xception CNNs. The highest accuracy, 95.5%, is attained by the cubic SVM with the fused features of the Xception model. All three CNNs benefit from the feature fusion. For instance, MobileNet's accuracy increases by 2.6% for the linear SVM, 2.6% for the quadratic SVM, and 2.7% for the cubic SVM. Inception shows notable gains of 2.8%, 2.9%, and 2.1% for the linear, quadratic, and cubic SVMs, respectively, whereas Xception displays a more modest improvement of 1.6%, 1.5%, and 1.6%, respectively. These results highlight the efficacy of integrating dermoscopic and DCT-based features: combining information from both the dermoscopic and the DCT-enhanced images offers a more complete depiction of the skin lesion and, in turn, improves the accuracy of skin lesion classification.
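The DWT-based merging of the layer 1 features can be illustrated with a minimal sketch. How the two vectors are arranged before the transform is an assumption here: the dermoscopic and DCT feature vectors are stacked as a 2 × N matrix, a single-level 2-D Haar DWT is applied, and only the approximation (LL) sub-band is kept, which halves the length. Under this assumption, two 2048-dimensional pooled vectors yield a 1024-dimensional fused vector, which is consistent with the layer 1 size reported for Xception and Inception. The function name `haar_dwt_fuse` is hypothetical.

```python
import numpy as np

def haar_dwt_fuse(f_derm, f_dct):
    """Fuse two equal-length feature vectors via a single-level 2-D Haar DWT.

    Hypothetical construction: stack the vectors as a 2 x N matrix and keep
    only the approximation (LL) sub-band, giving a vector of length N / 2.
    """
    x = np.vstack([np.asarray(f_derm, dtype=float), np.asarray(f_dct, dtype=float)])
    if x.shape[1] % 2:
        raise ValueError("feature length must be even")
    # Low-pass Haar filter across the two rows (dermoscopic vs DCT features)
    low_rows = (x[0] + x[1]) / np.sqrt(2.0)
    # Low-pass Haar filter along the feature axis (adjacent pairs)
    return (low_rows[0::2] + low_rows[1::2]) / np.sqrt(2.0)

rng = np.random.default_rng(0)
fused = haar_dwt_fuse(rng.random(2048), rng.random(2048))
print(fused.shape)  # (1024,)
```

For even N, the same LL band should be obtainable with PyWavelets as `pywt.dwt2(x, "haar")[0]`; the explicit version above avoids the extra dependency.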

Table 6.

The accuracies (%) of the SVM classifiers after fusing layer 1 deep features using DWT.

Table 7.

The accuracies (%) of the SVM classifiers after concatenating layer 2 deep features.

Table 7 displays the accuracies obtained by concatenating the layer 2 deep features extracted from the CNNs trained on the dermoscopic and DCT-enhanced images and then classifying them with SVMs. The findings show an overall improvement in classification performance when features from both the DCT and dermoscopic images are used instead of the features of either image type alone. More specifically, the Inception model benefits considerably from the feature combination, reaching accuracies of 92.8%, 92.8%, and 91.8% for the linear, quadratic, and cubic SVMs, respectively. MobileNet also improves markedly, achieving accuracies of 91.0%, 91.2%, and 90.0%, and Xception attains accuracies of 95.0%, 94.9%, and 94.2% for the same classifiers. These accuracies surpass those achieved using the DCT image-based features alone. The results indicate that combining features from different image representations can considerably improve the ability of classification models to distinguish between categories, especially given the intricate and nuanced visual patterns found in skin lesions. The feature combination improves accuracy for all CNN models and SVM classifiers, highlighting the value of colour correction and of fusing the enhanced images with the original dermoscopic images.
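The layer 2 concatenation and the kernels behind the "quadratic" and "cubic" SVMs can be sketched as follows. It is assumed that each network's layer 2 output is a 7-dimensional vector (one value per HAM10000 class), which is presumably why the concatenated layer 2 size is 14. The polynomial kernel form (γ a·b + c₀)^d with γ = 1 and c₀ = 1 is also an assumption (MATLAB's `fitcsvm` polynomial kernel, for example, has the form (1 + a·b)^d); the helper names are hypothetical.

```python
import numpy as np

def concat_layer2(fc_derm, fc_dct):
    """Concatenate the 7-d layer 2 outputs of the two networks into 14-d vectors."""
    return np.concatenate([np.asarray(fc_derm, dtype=float),
                           np.asarray(fc_dct, dtype=float)], axis=-1)

def poly_kernel(a, b, degree, gamma=1.0, coef0=1.0):
    """Gram matrix of the polynomial kernel (gamma * a.b + coef0) ** degree.

    degree=2 gives a quadratic SVM kernel and degree=3 a cubic one;
    a linear SVM corresponds to degree=1 with coef0=0.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return (gamma * a @ b.T + coef0) ** degree

# Hypothetical batch of 5 images, each with 7 outputs per network
X = concat_layer2(np.zeros((5, 7)), np.ones((5, 7)))
print(X.shape)                       # (5, 14)
print(poly_kernel(X, X, degree=3).shape)  # (5, 5)
```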

5.2. Second Fusion Stage Results

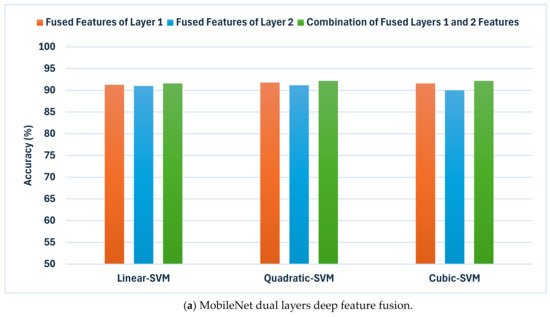

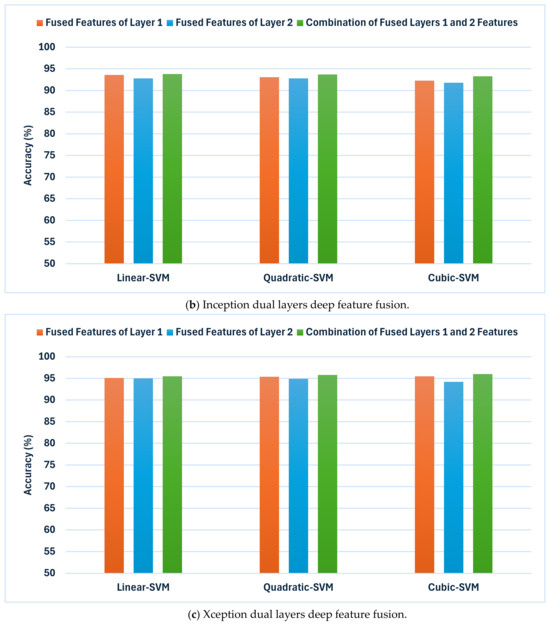

Figure 4 presents the results of the second fusion stage, in which the dual-layered deep features of each CNN trained with the dermoscopic and DCT-enhanced images are combined. As indicated in the data description section, the HAM10000 dataset consists of 10,015 images belonging to seven skin cancer categories. To produce the results in Figure 4, five-fold cross-validation was employed, as described in the Skin Cancer Category Classification section of the proposed model: the data are split into five equal folds, four of which are used to train the classification models and the fifth to test them. This process is repeated so that a different fold serves as the test set each time. The deep features used to train the classification models are extracted from the last pooling layer and the fully connected layer of each CNN. As illustrated in Table 4, after the first fusion stage the deep features from the two layers have dimensions of 1024 and 14 (layer 1 and layer 2, respectively) for the Xception and Inception CNNs, and 640 and 14 for MobileNet. After the second fusion stage, the feature dimensions are 1038, 1038, and 654 for Inception, Xception, and MobileNet, respectively.
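The five-fold protocol described above can be sketched as an index generator. Shuffling with a fixed seed and using plain (non-stratified) folds are assumptions; with 10,015 images, each test fold holds 2003 images. The name `five_fold_indices` is hypothetical. scikit-learn's `StratifiedKFold` is an alternative that also keeps the class proportions equal across folds, which matters for an imbalanced dataset such as HAM10000.

```python
import numpy as np

def five_fold_indices(n_samples, n_folds=5, seed=0):
    """Yield (train_idx, test_idx) pairs; each fold is the test set exactly once."""
    rng = np.random.default_rng(seed)
    perm = rng.permutation(n_samples)          # shuffle sample indices once
    folds = np.array_split(perm, n_folds)      # near-equal folds
    for k in range(n_folds):
        test_idx = folds[k]
        train_idx = np.concatenate([folds[j] for j in range(n_folds) if j != k])
        yield train_idx, test_idx

for train_idx, test_idx in five_fold_indices(10015):
    print(len(train_idx), len(test_idx))  # 8012 2003 on every fold
```

Reported accuracies would then be averaged over the five test folds.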

Figure 4.

The results of the second fusion phase: the classification accuracies (%) achieved by combining deep features extracted from both layer 1 and layer 2 of each CNN model trained with dermoscopic and DCT-enhanced images. (a) MobileNet, (b) Inception, and (c) Xception.

The outcomes shown in Figure 4 demonstrate a notable improvement in classification accuracy over the first fusion stage (Table 6 and Table 7) for all CNNs. This implies that incorporating features from multiple layers improves the model's capacity to differentiate between skin lesion categories. Inception attains accuracies of 93.8%, 93.7%, and 93.3% for the linear, quadratic, and cubic SVMs, respectively. MobileNet achieves accuracies of 91.6%, 92.2%, and 92.2%, and Xception reaches 95.5%, 95.8%, and 96.0% for the same classifiers. These results are higher than those obtained using either deep layer separately.

These results indicate that including features from several layers can substantially improve the discriminative ability of the classification models. Nevertheless, the degree of improvement differs among CNN designs: Inception consistently benefits from this approach, whereas MobileNet shows a more modest gain in accuracy, and Xception improves only slightly when the layer 2 features are added. These results emphasise the value of investigating different feature combination strategies to enhance the classification performance of specific CNN architectures.

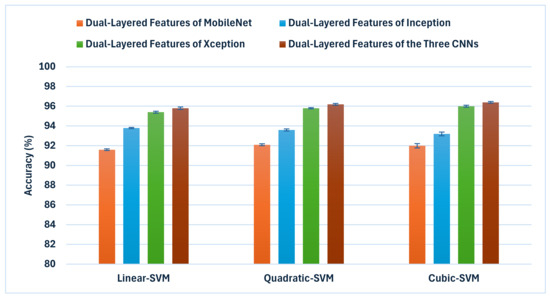

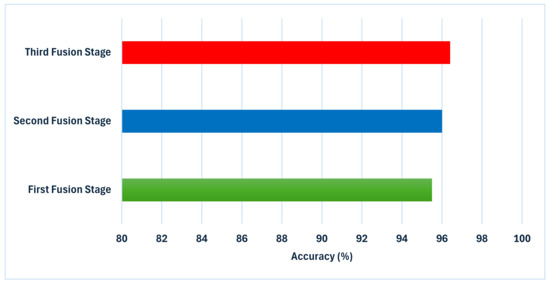

5.3. Third Fusion Stage Results

This subsection examines whether fusing the features of the different CNNs can further boost performance. It presents the results of the third fusion stage, in which the dual-layered deep features of the three CNNs trained with the dermoscopic and DCT-enhanced images are merged. Figure 5 compares the classification accuracies of this third fusion stage with those obtained using the dual-layered deep features of each CNN independently. The objective of this integration is to obtain a thorough representation of the image by merging information from several CNNs and layers. The findings indicate a clear improvement in classification performance compared with exploiting the features of a single CNN: integrating the features of all three CNNs increases the accuracy of every SVM classifier. The combination of dual-layered features from all three CNNs yields the highest accuracy of 96.4% with the cubic SVM, followed by 96.2% and 95.8% with the quadratic and linear SVMs, respectively, exceeding the accuracy of each individual CNN. The narrow confidence intervals in Figure 5 indicate that these accuracies are consistent, reflecting a precise and dependable model.
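The feature dimensions of the second and third fusion stages can be checked with a short sketch. The second stage concatenates the fused layer 1 and layer 2 features of each CNN; treating the third stage as a plain concatenation of the three bi-layer vectors is an assumption (the text describes the features as "merged"), under which the final vector has 1038 + 1038 + 654 = 2730 dimensions. Zero vectors stand in for real features, and the function names are hypothetical.

```python
import numpy as np

def second_stage(layer1_fused, layer2_concat):
    """Stage 2: append the concatenated layer 2 features to the DWT-fused layer 1 features."""
    return np.concatenate([np.asarray(layer1_fused, dtype=float),
                           np.asarray(layer2_concat, dtype=float)], axis=-1)

def third_stage(*bilayer_vectors):
    """Stage 3 (assumed concatenation): join the bi-layer vectors of all CNNs."""
    return np.concatenate([np.asarray(v, dtype=float) for v in bilayer_vectors], axis=-1)

# Layer 1 (after DWT) and layer 2 (after concatenation) sizes reported in the text
dims = {"Inception": (1024, 14), "Xception": (1024, 14), "MobileNet": (640, 14)}
bilayer = {name: second_stage(np.zeros(d1), np.zeros(d2)) for name, (d1, d2) in dims.items()}
for name, vec in bilayer.items():
    print(name, vec.shape[0])  # Inception 1038, Xception 1038, MobileNet 654
fused = third_stage(*bilayer.values())
print(fused.shape[0])  # 2730
```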

Figure 5.

A comparison of the classification accuracies of the third feature fusion stage, in which the dual-layered deep features of the three CNNs trained with the dermoscopic and the DCT-enhanced images are merged, with those of the dual-layered deep features of each CNN independently.

A one-way analysis of variance (ANOVA) test was conducted to ascertain whether the differences between the models are statistically significant; the results, including the corresponding p-values, are shown in Table 8. The null hypothesis (H₀) states that there is no statistically significant difference in the mean accuracies of the classification approaches. The ANOVA test was applied to the accuracies of the classifiers trained on the features of the three fusion stages of the proposed model. As Table 8 shows, the obtained p-values were well below the significance level of α = 0.05, so H₀ is rejected: there is a statistically significant difference in the accuracy of the classifiers trained on the features of the three fusion stages.
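The test statistic behind Table 8 can be sketched as follows: the one-way ANOVA F ratio compares the variability between groups with the variability within groups. The numbers in the usage line are toy values chosen only to exercise the function and are not results from this study; `one_way_anova_f` is a hypothetical name. The p-value is the upper tail of the F distribution with (k − 1, n − k) degrees of freedom, available for example as `scipy.stats.f.sf(F, df_between, df_within)`, and `scipy.stats.f_oneway` runs the complete test.

```python
import numpy as np

def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA over the given groups."""
    groups = [np.asarray(g, dtype=float) for g in groups]
    k = len(groups)
    n = sum(g.size for g in groups)
    grand_mean = np.concatenate(groups).mean()
    # Variability of group means around the grand mean
    ss_between = sum(g.size * (g.mean() - grand_mean) ** 2 for g in groups)
    # Variability of observations around their own group mean
    ss_within = sum(((g - g.mean()) ** 2).sum() for g in groups)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

F, d1, d2 = one_way_anova_f([1, 2, 3], [4, 5, 6])
print(F, d1, d2)  # 13.5 1 4
```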

Table 8.

One-way analysis of variance test outcomes for the comparison between classification accuracies of the three fusion stages.

Other performance metrics, namely sensitivity, precision, F1-score, specificity, and MCC, were used to evaluate the three SVM classifiers of the proposed hybrid deep feature fusion model. Table 9 shows these evaluation metrics for the three SVMs trained with the fused dual-layered features of the three CNNs. The findings suggest that combining the dual-layered deep features of all three CNNs improves effectiveness across all the assessment metrics. The model exhibits high sensitivity and specificity of (0.95796, 0.99299), (0.96106, 0.99351), and (0.96347, 0.99391) for the linear, quadratic, and cubic SVM classifiers, respectively, indicating its capacity to correctly recognise the different skin cancer categories. The F1-score, the harmonic mean of precision and recall, reaches 0.95796, 0.96106, and 0.96347 for the same classifiers, confirming the model's efficacy in accurately categorising skin lesions. The MCC, which accounts for all four entries of the confusion matrix (TP, TN, FP, and FN), further supports these results, with values of 0.95095, 0.95457, and 0.95738 for the same classifiers. The performance metrics in Table 9 therefore provide strong evidence for the efficacy of the proposed hybrid deep feature fusion model in classifying skin lesions.

Table 9.

The evaluation metrics for the three SVMs trained with the dual-layered fused features of the three CNNs.

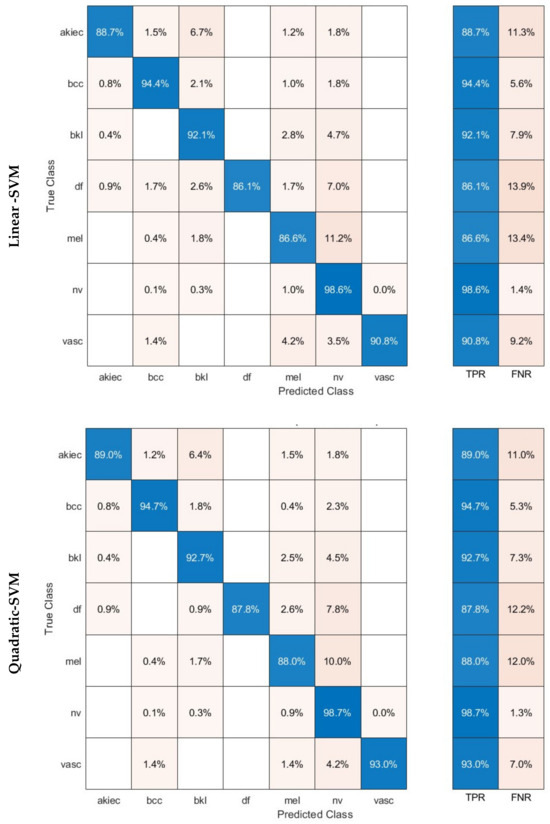

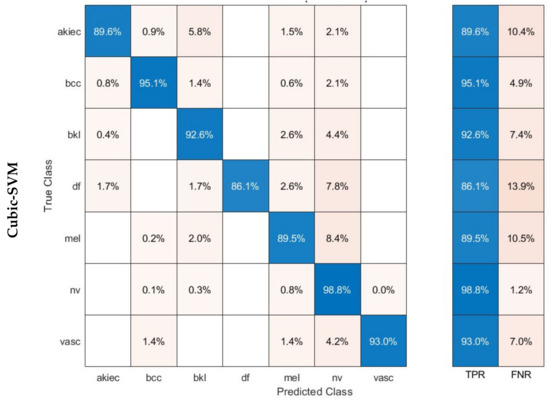

Figure 6 shows the confusion matrices of the three SVM classifiers trained with the combined dual-layered deep features extracted from the three CNNs, which were themselves trained on the dermoscopic and DCT-enhanced images. The matrices show that the linear, quadratic, and cubic SVMs of the proposed hybrid model are capable of identifying all categories of the HAM10000 dataset. The linear, quadratic, and cubic SVM classifiers achieved the following per-class sensitivities: akiec (88.7%, 89.0%, 89.6%), bcc (94.4%, 94.7%, 95.1%), bkl (92.1%, 92.7%, 92.6%), df (86.1%, 87.8%, 86.1%), mel (88.6%, 88.0%, 89.5%), nv (98.6%, 98.7%, 98.8%), and vasc (90.8%, 93.0%, 93.0%).

Figure 6.

Confusion matrices achieved using the three SVM classifiers learned by the combined dual-layered deep features of the three CNNs.

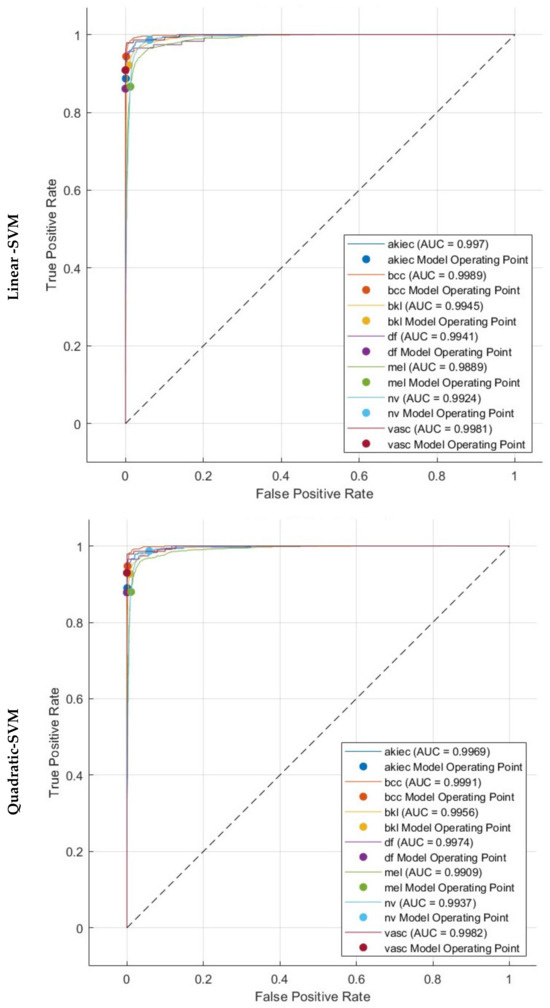

Figure 7 displays the receiver operating characteristic (ROC) curves for the HAM10000 dataset. These curves were generated using the three SVMs trained with the fused dual-layered deep features extracted from the three CNNs, which were trained on both the original dermoscopic images and the DCT-enhanced images. The horizontal axis of each plot shows the false positive rate (1 − specificity) and the vertical axis the sensitivity. Each ROC curve is generated by regarding one skin cancer class as the positive class and all remaining classes as negative. The ROC curves show that the linear, quadratic, and cubic SVM classifiers obtain an AUC exceeding 0.99 for every class of HAM10000, demonstrating that the proposed hybrid model performs reliably across all categories and can accurately distinguish between the various skin cancer subtypes.
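The per-class AUC values can be computed from classifier scores with the one-vs.-rest scheme described above. The sketch below uses the fact that the AUC equals the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one (the Mann–Whitney formulation), counting ties as one half. Which SVM outputs serve as scores (decision values or posterior probabilities) is an assumption, and `one_vs_rest_auc` is a hypothetical name.

```python
import numpy as np

def one_vs_rest_auc(scores, labels, positive_class):
    """One-vs-rest AUC for one class via pairwise (Mann-Whitney) comparisons.

    scores: (n_samples, n_classes) classifier scores.
    labels: (n_samples,) true class indices.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels)
    s = scores[:, positive_class]
    pos = s[labels == positive_class]   # scores of the positive class
    neg = s[labels != positive_class]   # scores of all remaining classes
    if pos.size == 0 or neg.size == 0:
        raise ValueError("need at least one positive and one negative sample")
    diff = pos[:, None] - neg[None, :]  # every positive-negative pair
    wins = np.count_nonzero(diff > 0) + 0.5 * np.count_nonzero(diff == 0)
    return wins / (pos.size * neg.size)

S = [[0.9, 0.1], [0.35, 0.65], [0.3, 0.7], [0.4, 0.6]]
y = [0, 0, 1, 1]
print([one_vs_rest_auc(S, y, c) for c in (0, 1)])  # [0.75, 0.75]
```

The pairwise comparison uses memory proportional to the number of positive-negative pairs; for large test sets a rank-based formulation gives the same value more cheaply.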

Figure 7.

ROC and AUC realised with the three SVM classifiers learned by the combined dual-layered deep features of the three CNNs.

6. Discussion

The present research introduces a hybrid model that employs DCT to enhance image quality and correct colour defects in dermoscopic images, acknowledging the importance of precise diagnosis and the difficulties caused by variations in illumination and hue. The study evaluates the influence of using both the original and the enhanced dermoscopic images on the diagnostic performance for skin cancer. The hybrid model was built using three CNNs with different architectures. The CNNs were first trained with the original skin cancer images and then, separately, with the DCT-enhanced images. Features were then extracted from two deep layers of each CNN and combined. In addition, the dual-layered features generated by the three CNNs trained on the original and DCT-enhanced images were merged. The purpose of this investigation was to determine whether combining the deep features extracted from CNNs trained on the original images with those obtained from DCT-enhanced images could increase classification accuracy. The research also investigated whether integrating features from both layers of each CNN could further enhance performance.

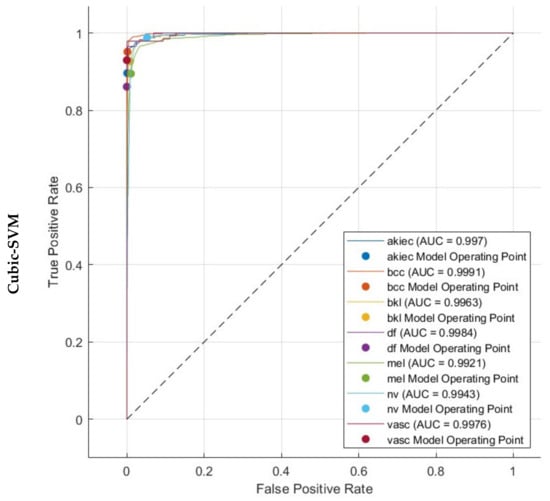

To achieve these goals, the hybrid model performs three deep feature fusion stages. In the first stage, the high-dimensional layer 1 features of each CNN, obtained from the DCT-enhanced and dermoscopic images, are combined using the DWT to reduce their dimensions and provide a time–frequency representation, improving their ability to distinguish skin cancer types. Also in the first stage, the layer 2 deep features of each CNN trained separately on the DCT-enhanced and dermoscopic images are concatenated. The second stage merges the fused layer 1 features of each CNN with its fused layer 2 features to create a highly efficient feature vector. In the third stage, the fused bi-layer features of the three CNNs trained separately on the DCT-enhanced and dermoscopic images are combined. Figure 8 shows and compares the highest accuracy reached in each fusion stage. As Figure 8 shows, each fusion stage enhanced the accuracy of the hybrid model. These findings indicate that merging the deep features captured from the DCT-enhanced images with those of the original dermoscopic images can boost performance. Integrating spatial and time–frequency information also provides better representations of the input and improves classification performance. Moreover, relying on deep features gathered from multiple deep layers is superior to employing a single deep layer. Finally, integrating deep features from several CNNs with different structures further improves the accuracy of skin cancer classification.

Figure 8.

The highest accuracy achieved in each fusion stage of the proposed hybrid model.

6.1. Cutting-Edge CAD Comparisons

Table 10 presents a thorough comparison of the proposed hybrid model with various state-of-the-art methods for classifying skin lesions on the HAM10000 dataset. The results indicate that the proposed hybrid model attains a substantial improvement in performance across all evaluation metrics. The success of the proposed model depends significantly on the DCT approach. The DCT separates the image into frequency components, which enables noise reduction and selective enhancement of salient features. This procedure is especially helpful for dermoscopic images, where diagnosis depends critically on subtle colour and texture changes. Improving these aspects gives the CNNs clearer, more diagnostically relevant input, which directly helps to raise classification accuracy. Moreover, the proposed hybrid model takes advantage of three different CNN architectures: MobileNet, Inception, and Xception. Each of these networks is distinct: Inception uses parallel convolutional filters of different sizes, allowing it to capture features at several scales concurrently; Xception uses depthwise separable convolutions, which learn cross-channel correlations and spatial correlations separately; and MobileNet performs efficient feature extraction with fewer parameters while capturing general features effectively. Combining these architectures allows the hybrid model to capture features at different levels of abstraction and scale, yielding a more complete feature set that includes features any single model might miss.
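The way a DCT separates an image into frequency components that can be adjusted selectively is illustrated below. This is a hypothetical sketch of one common DCT-domain colour correction and is not necessarily the procedure used in this study: each RGB channel is transformed with an orthonormal 2-D DCT, its DC coefficient (which is proportional to the channel mean) is reset so that all channels share the image-wide mean (a gray-world assumption), the AC coefficients are optionally scaled by a gain to emphasise detail, and the channel is transformed back. The names `dct_matrix`, `dct_colour_correct`, and `ac_gain` are illustrative.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix C, so that C @ x is the 1-D DCT of x."""
    k = np.arange(n)[:, None]   # frequency index (rows)
    i = np.arange(n)[None, :]   # sample index (columns)
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)  # DC row
    return c

def dct_colour_correct(img, ac_gain=1.0):
    """Hypothetical DCT-domain colour correction of an H x W x 3 image in [0, 1]."""
    img = np.asarray(img, dtype=float)
    h, w, n_channels = img.shape
    ch, cw = dct_matrix(h), dct_matrix(w)
    target_mean = img.mean()              # mean over all pixels and channels
    out = np.empty_like(img)
    for c in range(n_channels):
        coeffs = ch @ img[:, :, c] @ cw.T  # forward 2-D DCT
        coeffs *= ac_gain                  # scale detail (AC) coefficients
        # DC coefficient equals channel mean * sqrt(h * w) for this normalisation
        coeffs[0, 0] = target_mean * np.sqrt(h * w)
        out[:, :, c] = ch.T @ coeffs @ cw  # inverse 2-D DCT
    return np.clip(out, 0.0, 1.0)

# A reddish cast: channel means 0.6, 0.4, 0.2 are equalised to 0.4
cast = np.zeros((4, 6, 3))
cast[..., 0], cast[..., 1], cast[..., 2] = 0.6, 0.4, 0.2
print(dct_colour_correct(cast).mean(axis=(0, 1)))
```

`scipy.fft.dctn(x, norm="ortho")` computes the same forward transform as the explicit matrix product used here; the matrix form keeps the sketch dependent on NumPy only.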

Table 10.

Comparison between the proposed hybrid model and the state-of-the-art techniques based on the HAM10000 dataset.

Furthermore, the proposed hybrid model extracts features from two layers of every CNN. This method captures low-level attributes (such as edges and textures) as well as high-level semantic features (such as complex structures unique to particular lesion types). Combined, these multi-level attributes offer a better picture of the skin lesions, enabling more nuanced classification. The three-stage fusion approach is essential for properly combining these features. The first stage uses the DWT to combine the features from the first layer of every CNN, lowering dimensionality while preserving time–frequency information. The second fusion stage generates an extensive feature set for every CNN by concatenating these combined features with those from the second layer of the same CNN. The last fusion stage aggregates the features of all three CNNs to generate a highly informative feature vector that draws on the strengths of every network and layer. This sequential fusion approach promotes the effective integration of complementary information and helps to control the dimensionality of the merged feature space. Taken together, DCT enhancement, the multi-CNN architecture, and multi-layer feature fusion make the proposed hybrid model more robust to the natural variability of dermoscopic images. This variability, which arises from differences in imaging conditions, equipment, or lesion presentation, is a prevalent difficulty in the classification of skin lesions.

In contrast, several of the compared methods rely on a single CNN architecture or on the original dermoscopic images alone, which restricts their capacity to capture intricate patterns and variations in skin lesions. The proposed hybrid model addresses these limitations, leading to a substantial improvement in performance. The results highlight the potential of integrating colour correction, multiple deep feature fusion strategies, and various CNN designs to improve the accuracy of skin cancer diagnosis.

6.2. Limitations and Future Work

It is important to acknowledge the limitations of the proposed hybrid model for skin cancer classification. An important concern is the potential impact of the imbalanced distribution of skin cancer categories in the HAM10000 dataset. This imbalance may leave insufficient training data for some skin cancer subclasses, potentially compromising classification performance. In addition, further investigation is necessary to determine how far the proposed hybrid model can be applied to a broader spectrum of skin-related problems; in particular, future research could evaluate how accurately the model identifies and diagnoses other skin diseases and abnormalities. Additional research is also required to investigate how well the model performs for patients with unique attributes. Another limitation of this study is the high feature dimensionality obtained in the third fusion stage of the proposed model. Finally, this study did not employ explainable artificial intelligence (XAI) techniques to interpret how the predictions are made.

To enhance the reliability and suitability of the proposed model for clinical use in a wider population, it is crucial to engage with experts and perform external validation. Subsequent studies will explore sampling methodologies to tackle the problem of class imbalance. In addition, future work will consider feature selection and reduction techniques to lower feature dimensionality. There are plans to gather data from a larger cohort of patients across various imaging sites to improve the applicability and effectiveness of this study. Further investigations will assess the effectiveness of the hybrid model on a wider spectrum of skin disorders and abnormalities, as well as on individuals with distinctive characteristics. Ultimately, the proposed model will be assessed by expert dermatologists in controlled clinical settings. Future work will also consider XAI approaches to help dermatologists understand how the deep learning models make decisions.

The generalisability of the proposed model to other skin cancer datasets is a critical factor to evaluate. Although the highest accuracy achieved by the proposed model on the HAM10000 dataset (96.40%) is outstanding, the performance of deep learning models may vary considerably across datasets owing to differences in image quality, tumour appearance, and dataset characteristics. The ISIC dataset, for instance, may pose distinct challenges regarding image diversity, image quality, and class distribution compared with HAM10000. Consequently, some performance variability is expected when applying this model to the ISIC dataset or other skin cancer image repositories.

Nevertheless, several elements of the proposed methodology suggest possible resilience across datasets. The use of DCT for colour correction and enhancement may alleviate the impact of diverse image acquisition conditions, a common challenge in skin lesion datasets. The multi-CNN construction offers a wide variety of features, improving the model's capacity to identify complex structures in skin cancer images. In addition, integrating features from different layers of the CNNs enables the model to use both local and global information, thereby enhancing its classification quality. Furthermore, the three deep feature fusion stages may yield a more thorough and generalisable feature description that adapts better to the characteristics of different datasets. To comprehensively evaluate the generalisability of this methodology, extensive experiments across various datasets, including the ISIC skin cancer datasets, are recommended, possibly with the model parameters refined for each dataset. Such assessments would yield valuable insights into the model's suitability across different skin cancer imaging scenarios and its promise as a widely applicable technique in dermatological research. In future studies, additional experiments on the ISIC skin cancer dataset will be conducted to confirm the generalisability of the proposed model.

7. Conclusions

This study focused on identifying and categorising different types of skin cancer by developing a highly efficient hybrid model. It also aimed to overcome the shortcomings of prior approaches, improve accuracy, and evaluate the proposed hybrid method on the HAM10000 dataset. The study presented an innovative hybrid deep learning model that detects intricate patterns and distinguishes various skin cancer categories in dermoscopic images by combining DCT, multiple CNN architectures, and a multi-stage feature fusion approach. The experimental findings establish the merits of this method compared with current state-of-the-art methods. The use of DCT-enhanced images addresses image quality issues, while the combination of deep features gathered from different CNN layers and the use of DWT for dimensionality reduction together contributed to the model's strong performance. In addition, the results emphasised the importance of integrating data from different sources, namely dermoscopic and DCT-enhanced images, to improve classification accuracy. Combining features from different CNN architectures yielded a more complete representation of the input data, improving the ability to differentiate between classes. Nevertheless, the individual CNNs may contribute differently to the overall performance, indicating the need for further research on feature selection and weighting techniques to refine the fusion procedure. This study offers a promising path toward robust and precise automated skin cancer diagnosis.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The HAM10000 dataset analysed during the current study is available in the Harvard Dataverse repository, https://dataverse.harvard.edu/dataset.xhtml?persistentId=doi:10.7910/DVN/DBW86T (accessed 30 May 2023).

Conflicts of Interest

The author declares no conflicts of interest.

References

- Zhang, L.; Zhang, J.; Gao, W.; Bai, F.; Li, N.; Ghadimi, N. A Deep Learning Outline Aimed at Prompt Skin Cancer Detection Utilizing Gated Recurrent Unit Networks and Improved Orca Predation Algorithm. Biomed. Signal Process. Control. 2024, 90, 105858. [Google Scholar] [CrossRef]

- Adegun, A.; Viriri, S. Deep Learning Techniques for Skin Lesion Analysis and Melanoma Cancer Detection: A Survey of State-of-the-Art. Artif. Intell. Rev. 2021, 54, 811–841. [Google Scholar] [CrossRef]

- Rao, N.R.; Vasumathi, D. Segmentation and Detection of Skin Cancer Using Deep Learning-Enabled Artificial Namib Beetle Optimization. Biomed. Signal Process. Control. 2024, 96, 106605. [Google Scholar]

- Rundle, C.W.; Militello, M.; Barber, C.; Presley, C.L.; Rietcheck, H.R.; Dellavalle, R.P. Epidemiologic Burden of Skin Cancer in the US and Worldwide. Curr. Dermatol. Rep. 2020, 9, 309–322. [Google Scholar] [CrossRef]

- Wei, L.; Ding, K.; Hu, H. Automatic Skin Cancer Detection in Dermoscopy Images Based on Ensemble Lightweight Deep Learning Network. IEEE Access 2020, 8, 99633–99647. [Google Scholar] [CrossRef]

- Xin, C.; Liu, Z.; Zhao, K.; Miao, L.; Ma, Y.; Zhu, X.; Zhou, Q.; Wang, S.; Li, L.; Yang, F. An Improved Transformer Network for Skin Cancer Classification. Comput. Biol. Med. 2022, 149, 105939. [Google Scholar] [CrossRef]

- Goodson, A.G.; Grossman, D. Strategies for Early Melanoma Detection: Approaches to the Patient with Nevi. J. Am. Acad. Dermatol. 2009, 60, 719–735. [Google Scholar] [CrossRef]

- Kittler, H.; Pehamberger, H.; Wolff, K.; Binder, M. Diagnostic Accuracy of Dermoscopy. Lancet Oncol. 2002, 3, 159–165. [Google Scholar] [CrossRef]

- Attallah, O. GabROP: Gabor Wavelets-Based CAD for Retinopathy of Prematurity Diagnosis via Convolutional Neural Networks. Diagnostics 2023, 13, 171. [Google Scholar] [CrossRef]

- Sharkas, M.; Attallah, O. Color-CADx: A Deep Learning Approach for Colorectal Cancer Classification through Triple Convolutional Neural Networks and Discrete Cosine Transform. Sci. Rep. 2024, 14, 6914. [Google Scholar] [CrossRef]

- Attallah, O. RADIC: A Tool for Diagnosing COVID-19 from Chest CT and X-Ray Scans Using Deep Learning and Quad-Radiomics. Chemom. Intell. Lab. Syst. 2023, 233, 104750. [Google Scholar] [CrossRef] [PubMed]

- Attallah, O. Cervical Cancer Diagnosis Based on Multi-Domain Features Using Deep Learning Enhanced by Handcrafted Descriptors. Appl. Sci. 2023, 13, 1916. [Google Scholar] [CrossRef]

- Attallah, O. ECG-BiCoNet: An ECG-Based Pipeline for COVID-19 Diagnosis Using Bi-Layers of Deep Features Integration. Comput. Biol. Med. 2022, 142, 105210. [Google Scholar] [CrossRef]

- Pacal, I.; Alaftekin, M.; Zengul, F.D. Enhancing Skin Cancer Diagnosis Using Swin Transformer with Hybrid Shifted Window-Based Multi-Head Self-Attention and SwiGLU-Based MLP. J. Imaging Inform. Med. 2024, 1–19. [Google Scholar] [CrossRef]

- Mijwil, M.M. Skin Cancer Disease Images Classification Using Deep Learning Solutions. Multimed. Tools Appl. 2021, 80, 26255–26271. [Google Scholar] [CrossRef]

- Attallah, O. An Intelligent ECG-Based Tool for Diagnosing COVID-19 via Ensemble Deep Learning Techniques. Biosensors 2022, 12, 299. [Google Scholar] [CrossRef]

- Attallah, O. CerCan· Net: Cervical Cancer Classification Model via Multi-Layer Feature Ensembles of Lightweight CNNs and Transfer Learning. Expert Syst. Appl. 2023, 229, 120624. [Google Scholar] [CrossRef]

- Pacal, I. MaxCerVixT: A Novel Lightweight Vision Transformer-Based Approach for Precise Cervical Cancer Detection. Knowl.-Based Syst. 2024, 289, 111482.

- Kandhro, I.A.; Manickam, S.; Fatima, K.; Uddin, M.; Malik, U.; Naz, A.; Dandoush, A. Performance Evaluation of E-VGG19 Model: Enhancing Real-Time Skin Cancer Detection and Classification. Heliyon 2024, 10, e31488.

- Attallah, O. Acute Lymphocytic Leukemia Detection and Subtype Classification via Extended Wavelet Pooling Based-CNNs and Statistical-Texture Features. Image Vis. Comput. 2024, 147, 105064.

- Attallah, O. MonDiaL-CAD: Monkeypox Diagnosis via Selected Hybrid CNNs Unified with Feature Selection and Ensemble Learning. Digit. Health 2023, 9, 20552076231180054.

- Sivakumar, M.S.; Leo, L.M.; Gurumekala, T.; Sindhu, V.; Priyadharshini, A.S. Deep Learning in Skin Lesion Analysis for Malignant Melanoma Cancer Identification. Multimed. Tools Appl. 2023, 83, 17833–17853.

- Tuncer, T.; Barua, P.D.; Tuncer, I.; Dogan, S.; Acharya, U.R. A Lightweight Deep Convolutional Neural Network Model for Skin Cancer Image Classification. Appl. Soft Comput. 2024, 162, 111794.

- Mushtaq, S.; Singh, O. A Deep Learning Based Architecture for Multi-Class Skin Cancer Classification. Multimed. Tools Appl. 2024.

- Mukadam, S.B.; Patil, H.Y. Skin Cancer Classification Framework Using Enhanced Super Resolution Generative Adversarial Network and Custom Convolutional Neural Network. Appl. Sci. 2023, 13, 1210.

- Kassani, S.H.; Kassani, P.H. A Comparative Study of Deep Learning Architectures on Melanoma Detection. Tissue Cell 2019, 58, 76–83.

- Alam, T.M.; Shaukat, K.; Khan, W.A.; Hameed, I.A.; Almuqren, L.A.; Raza, M.A.; Aslam, M.; Luo, S. An Efficient Deep Learning-Based Skin Cancer Classifier for an Imbalanced Dataset. Diagnostics 2022, 12, 2115.

- Gururaj, H.L.; Manju, N.; Nagarjun, A.; Aradhya, V.N.M.; Flammini, F. DeepSkin: A Deep Learning Approach for Skin Cancer Classification. IEEE Access 2023, 11, 50205–50214.

- Alenezi, F.; Armghan, A.; Polat, K. Wavelet Transform Based Deep Residual Neural Network and ReLU Based Extreme Learning Machine for Skin Lesion Classification. Expert Syst. Appl. 2023, 213, 119064.

- Khan, M.A.; Zhang, Y.-D.; Sharif, M.; Akram, T. Pixels to Classes: Intelligent Learning Framework for Multiclass Skin Lesion Localization and Classification. Comput. Electr. Eng. 2021, 90, 106956.

- Khan, M.A.; Akram, T.; Zhang, Y.-D.; Sharif, M. Attributes Based Skin Lesion Detection and Recognition: A Mask RCNN and Transfer Learning-Based Deep Learning Framework. Pattern Recognit. Lett. 2021, 143, 58–66.

- Huang, H.-W.; Hsu, B.W.-Y.; Lee, C.-H.; Tseng, V.S. Development of a Light-Weight Deep Learning Model for Cloud Applications and Remote Diagnosis of Skin Cancers. J. Dermatol. 2021, 48, 310–316.

- Bozkurt, F. Skin Lesion Classification on Dermatoscopic Images Using Effective Data Augmentation and Pre-Trained Deep Learning Approach. Multimed. Tools Appl. 2023, 82, 18985–19003.

- Bibi, S.; Khan, M.A.; Shah, J.H.; Damaševičius, R.; Alasiry, A.; Marzougui, M.; Alhaisoni, M.; Masood, A. MSRNet: Multiclass Skin Lesion Recognition Using Additional Residual Block Based Fine-Tuned Deep Models Information Fusion and Best Feature Selection. Diagnostics 2023, 13, 3063.

- Hussain, M.; Khan, M.A.; Damaševičius, R.; Alasiry, A.; Marzougui, M.; Alhaisoni, M.; Masood, A. SkinNet-INIO: Multiclass Skin Lesion Localization and Classification Using Fusion-Assisted Deep Neural Networks and Improved Nature-Inspired Optimization Algorithm. Diagnostics 2023, 13, 2869.

- Attallah, O.; Sharkas, M. Intelligent Dermatologist Tool for Classifying Multiple Skin Cancer Subtypes by Incorporating Manifold Radiomics Features Categories. Contrast Media Mol. Imaging 2021, 2021, 7192016.

- Attallah, O. Skin-CAD: Explainable Deep Learning Classification of Skin Cancer from Dermoscopic Images by Feature Selection of Dual High-Level CNNs Features and Transfer Learning. Comput. Biol. Med. 2024, 178, 108798.

- Attallah, O. Skin Cancer Classification Leveraging Multi-Directional Compact Convolutional Neural Network Ensembles and Gabor Wavelets. Sci. Rep. 2024, 14, 20637.

- Amin, S.U.; Alsulaiman, M.; Muhammad, G.; Mekhtiche, M.A.; Hossain, M.S. Deep Learning for EEG Motor Imagery Classification Based on Multi-Layer CNNs Feature Fusion. Future Gener. Comput. Syst. 2019, 101, 542–554.

- Zhang, P.; Wang, D.; Lu, H.; Wang, H.; Ruan, X. Amulet: Aggregating Multi-Level Convolutional Features for Salient Object Detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 202–211.

- Soleymani, S.; Dabouei, A.; Kazemi, H.; Dawson, J.; Nasrabadi, N.M. Multi-Level Feature Abstraction from Convolutional Neural Networks for Multimodal Biometric Identification. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; IEEE: New York, NY, USA, 2018; pp. 3469–3476.

- Tschandl, P.; Rosendahl, C.; Kittler, H. The HAM10000 Dataset, a Large Collection of Multi-Source Dermatoscopic Images of Common Pigmented Skin Lesions. Sci. Data 2018, 5, 180161.

- Gonzalez, R.C. Digital Image Processing; Pearson Education India: Delhi, India, 2009.

- Mukherjee, J.; Mitra, S.K. Enhancement of Color Images by Scaling the DCT Coefficients. IEEE Trans. Image Process. 2008, 17, 1783–1794.

- Shensa, M.J. The Discrete Wavelet Transform: Wedding the a Trous and Mallat Algorithms. IEEE Trans. Signal Process. 1992, 40, 2464–2482.

- Huh, M.; Agrawal, P.; Efros, A.A. What Makes ImageNet Good for Transfer Learning? arXiv 2016, arXiv:1608.08614.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Ali, K.; Shaikh, Z.A.; Khan, A.A.; Laghari, A.A. Multiclass Skin Cancer Classification Using EfficientNets—A First Step towards Preventing Skin Cancer. Neurosci. Inform. 2022, 2, 100034.

- Imam, M.H.; Nahar, N.; Rahman, M.A.; Rabbi, F. Enhancing Skin Cancer Classification Using a Fusion of Densenet and Mobilenet Models: A Deep Learning Ensemble Approach. Multidiscip. Sci. J. 2024, 6, 2024117.

- Jain, S.; Singhania, U.; Tripathy, B.; Nasr, E.A.; Aboudaif, M.K.; Kamrani, A.K. Deep Learning-Based Transfer Learning for Classification of Skin Cancer. Sensors 2021, 21, 8142.

- Gottumukkala, V.S.S.P.R.; Kumaran, N.; Sekhar, V.C. BLSNet: Skin Lesion Detection and Classification Using Broad Learning System with Incremental Learning Algorithm. Expert Syst. 2022, 39, e12938.

- Siva, P.B. SLDCNet: Skin Lesion Detection and Classification Using Full Resolution Convolutional Network-Based Deep Learning CNN with Transfer Learning. Expert Syst. 2022, 39, e12944.

- Kassem, M.A.; Hosny, K.M.; Fouad, M.M. Skin Lesions Classification into Eight Classes for ISIC 2019 Using Deep Convolutional Neural Network and Transfer Learning. IEEE Access 2020, 8, 114822–114832.

- El-Khatib, H.; Popescu, D.; Ichim, L. Deep Learning–Based Methods for Automatic Diagnosis of Skin Lesions. Sensors 2020, 20, 1753.

- Khan, M.A.; Muhammad, K.; Sharif, M.; Akram, T.; Kadry, S. Intelligent Fusion-Assisted Skin Lesion Localization and Classification for Smart Healthcare. Neural Comput. Appl. 2024, 36, 37–52.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).