A Review of Deep Transfer Learning and Recent Advancements

Abstract

1. Introduction

2. Deep Learning

3. Deep Transfer Learning (DTL)

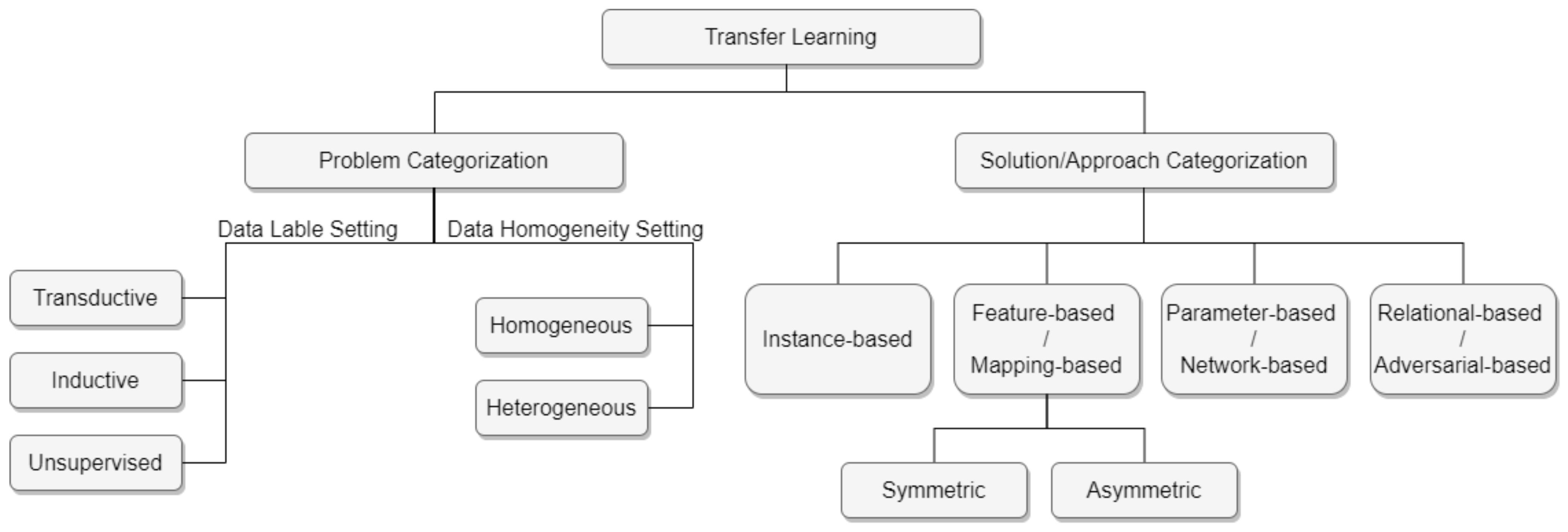

4. From Transfer Learning to Deep Transfer Learning: Taxonomy

5. Review of Recent Advancements in DTL

6. Experimental Analysis of Deep Transfer Learning

7. Discussion

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Iman, M.; Arabnia, H.R.; Branch, R.M. Pathways to Artificial General Intelligence: A Brief Overview of Developments and Ethical Issues via Artificial Intelligence, Machine Learning, Deep Learning, and Data Science; Springer: Cham, Switzerland, 2021; pp. 73–87.

2. Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A comprehensive survey on transfer learning. Proc. IEEE 2020, 109, 43–76.

3. Farahani, A.; Voghoei, S.; Rasheed, K.; Arabnia, H.R. A brief review of domain adaptation. In Advances in Data Science and Information Engineering; Springer: Cham, Switzerland, 2021; pp. 877–894.

4. Voghoei, S.; Tonekaboni, N.H.; Wallace, J.G.; Arabnia, H.R. Deep learning at the edge. In Proceedings of the 2018 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 12–14 December 2018; pp. 895–901.

5. Chang, H.S.; Fu, M.C.; Hu, J.; Marcus, S.I. Google Deep Mind’s AlphaGo. OR/MS Today 2016, 43, 24–29.

6. Das, N.N.; Kumar, N.; Kaur, M.; Kumar, V.; Singh, D. Automated Deep Transfer Learning-Based Approach for Detection of COVID-19 Infection in Chest X-rays. IRBM 2022, 43, 114–119.

7. Jaiswal, A.; Gianchandani, N.; Singh, D.; Kumar, V.; Kaur, M. Classification of the COVID-19 infected patients using DenseNet201 based deep transfer learning. J. Biomol. Struct. Dyn. 2020, 39, 5682–5689.

8. Yosinski, J.; Clune, J.; Bengio, Y.; Lipson, H. How transferable are features in deep neural networks? Adv. Neural Inf. Process. Syst. 2014, 4, 3320–3328.

9. Tan, C.; Sun, F.; Kong, T.; Zhang, W.; Yang, C.; Liu, C. A survey on deep transfer learning. Lect. Notes Comput. Sci. (Incl. Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinform.) 2018, 11141, 270–279.

10. Rusu, A.A.; Rabinowitz, N.C.; Desjardins, G.; Soyer, H.; Kirkpatrick, J.; Kavukcuoglu, K.; Pascanu, R.; Hadsell, R. Progressive neural networks. arXiv 2016, arXiv:1606.04671.

11. Yosinski, J.; Clune, J.; Nguyen, A.; Fuchs, T.; Lipson, H. Understanding neural networks through deep visualization. arXiv 2015, arXiv:1506.06579.

12. Ravishankar, H.; Sudhakar, P.; Venkataramani, R.; Thiruvenkadam, S.; Annangi, P.; Babu, N.; Vaidya, V. Understanding the mechanisms of deep transfer learning for medical images. In Deep Learning and Data Labeling for Medical Applications; Springer: Cham, Switzerland, 2016; pp. 188–196.

13. Kitchenham, B.; Pearl Brereton, O.; Budgen, D.; Turner, M.; Bailey, J.; Linkman, S. Systematic literature reviews in software engineering – A systematic literature review. Inf. Softw. Technol. 2009, 51, 7–15.

14. Wan, L.; Liu, R.; Sun, L.; Nie, H.; Wang, X. UAV swarm based radar signal sorting via multi-source data fusion: A deep transfer learning framework. Inf. Fusion 2022, 78, 90–101.

15. Albayrak, A. Classification of analyzable metaphase images using transfer learning and fine tuning. Med. Biol. Eng. Comput. 2022, 60, 239–248.

16. Kumar, S. MCFT-CNN: Malware classification with fine-tune convolution neural networks using traditional and transfer learning in internet of things. Future Gener. Comput. Syst. 2021, 125, 334–351.

17. Wang, Y.; Feng, Z.; Song, L.; Liu, X.; Liu, S. Multiclassification of endoscopic colonoscopy images based on deep transfer learning. Comput. Math. Methods Med. 2021, 2021, 2485934.

18. Akhand, M.A.H.; Roy, S.; Siddique, N.; Kamal, M.A.S.; Shimamura, T. Facial Emotion Recognition Using Transfer Learning in the Deep CNN. Electronics 2021, 10, 1036.

19. Jha, D.; Choudhary, K.; Tavazza, F.; Liao, W.; Choudhary, A.; Campbell, C.; Agrawal, A. Enhancing materials property prediction by leveraging computational and experimental data using deep transfer learning. Nat. Commun. 2019, 10, 1–12.

20. Talo, M.; Baloglu, U.B.; Yıldırım, Ö.; Acharya, U.R. Application of deep transfer learning for automated brain abnormality classification using MR images. Cogn. Syst. Res. 2019, 54, 176–188.

21. Wu, Z.; Jiang, H.; Zhao, K.; Li, X. An adaptive deep transfer learning method for bearing fault diagnosis. Measurement 2020, 151, 107227.

22. Mao, W.; Ding, L.; Tian, S.; Liang, X. Online detection for bearing incipient fault based on deep transfer learning. Meas. J. Int. Meas. Confed. 2020, 152, 107278.

23. Phan, H.; Chén, O.Y.; Koch, P.; Lu, Z.; McLoughlin, I.; Mertins, A.; De Vos, M. Towards more accurate automatic sleep staging via deep transfer learning. IEEE Trans. Biomed. Eng. 2020, 68, 1787–1798.

24. Perera, P.; Patel, V.M. Deep Transfer Learning for Multiple Class Novelty Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 11544–11552.

25. Xu, Y.; Sun, Y.; Liu, X.; Zheng, Y. A Digital-Twin-Assisted Fault Diagnosis Using Deep Transfer Learning. IEEE Access 2019, 7, 19990–19999.

26. Han, K.; Vedaldi, A.; Zisserman, A. Learning to Discover Novel Visual Categories via Deep Transfer Clustering. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8401–8409.

27. Geng, M.; Wang, Y.; Xiang, T.; Tian, Y. Deep Transfer Learning for Person Re-identification. arXiv 2016, arXiv:1611.05244.

28. Sabatelli, M.; Kestemont, M.; Daelemans, W.; Geurts, P. Deep Transfer Learning for Art Classification Problems. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

29. George, D.; Shen, H.; Huerta, E.A. Deep transfer learning: A new deep learning glitch classification method for Advanced LIGO. arXiv 2017, arXiv:1706.07446.

30. Ding, R.; Li, X.; Nie, L.; Li, J.; Si, X.; Chu, D.; Liu, G.; Zhan, D. Empirical study and improvement on deep transfer learning for human activity recognition. Sensors 2019, 19, 57.

31. Zeng, M.; Li, M.; Fei, Z.; Yu, Y.; Pan, Y.; Wang, J. Automatic ICD-9 coding via deep transfer learning. Neurocomputing 2019, 324, 43–50.

32. Kaya, H.; Gürpınar, F.; Salah, A.A. Video-Based emotion recognition in the wild using deep transfer learning and score fusion. Image Vis. Comput. 2017, 65, 66–75.

33. Ay, B.; Tasar, B.; Utlu, Z.; Ay, K.; Aydin, G. Deep transfer learning-based visual classification of pressure injuries stages. Neural Comput. Appl. 2022, 34, 16157–16168.

34. Li, P.; Cui, H.; Khan, A.; Raza, U.; Piechocki, R.; Doufexi, A.; Farnham, T.M. Deep transfer learning for WiFi localization. In Proceedings of the 2021 IEEE Radar Conference (RadarConf21), Atlanta, GA, USA, 8–14 May 2021; IEEE: Piscataway, NJ, USA, 2021.

35. Celik, Y.; Talo, M.; Yildirim, O.; Karabatak, M.; Acharya, U.R. Automated invasive ductal carcinoma detection based using deep transfer learning with whole-slide images. Pattern Recognit. Lett. 2020, 133, 232–239.

36. Liu, C.; Wei, Z.; Ng, D.W.K.; Yuan, J.; Liang, Y.C. Deep Transfer Learning for Signal Detection in Ambient Backscatter Communications. IEEE Trans. Wirel. Commun. 2020, 20, 1624–1638.

37. Deepak, S.; Ameer, P.M. Brain tumor classification using deep CNN features via transfer learning. Comput. Biol. Med. 2019, 111, 103345.

38. Mormont, R.; Geurts, P.; Marée, R. Comparison of Deep Transfer Learning Strategies for Digital Pathology. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2262–2271.

39. Zhi, Y.; Yu, W.; Liang, P.; Guo, H.; Xia, L.; Zhang, F.; Ma, Y.; Ma, J. Deep transfer learning for military object recognition under small training set condition. Neural Comput. Appl. 2019, 31, 6469–6478.

40. Gao, Y.; Mosalam, K.M. Deep Transfer Learning for Image-Based Structural Damage Recognition. Comput. Civ. Infrastruct. Eng. 2018, 33, 748–768.

41. Yu, Y.; Lin, H.; Meng, J.; Wei, X.; Guo, H.; Zhao, Z. Deep Transfer Learning for Modality Classification of Medical Images. Information 2017, 8, 91.

42. Wang, S.; Li, Z.; Yu, Y.; Xu, J. Folding Membrane Proteins by Deep Transfer Learning. Cell Syst. 2017, 5, 202–211.e3.

43. Joshi, D.; Mishra, V.; Srivastav, H.; Goel, D. Progressive Transfer Learning Approach for Identifying the Leaf Type by Optimizing Network Parameters. Neural Process. Lett. 2021, 53, 3653–3676.

44. Moeed, A.; Hagerer, G.; Dugar, S.; Gupta, S.; Ghosh, M.; Danner, H.; Mitevski, O.; Nawroth, A.; Groh, G. An evaluation of progressive neural networks for transfer learning in natural language processing. In Proceedings of the 12th Language Resources and Evaluation Conference, Marseille, France, 11–16 May 2020; pp. 1376–1381.

45. Gu, Y.; Ge, Z.; Bonnington, C.P.; Zhou, J. Progressive Transfer Learning and Adversarial Domain Adaptation for Cross-Domain Skin Disease Classification. IEEE J. Biomed. Health Inform. 2020, 24, 1379–1393.

46. Gideon, J.; Khorram, S.; Aldeneh, Z.; Dimitriadis, D.; Provost, E.M. Progressive Neural Networks for Transfer Learning in Emotion Recognition. Proc. Annu. Conf. Int. Speech Commun. Assoc. Interspeech 2017, 2017, 1098–1102.

47. Loey, M.; Manogaran, G.; Khalifa, N.E.M. A deep transfer learning model with classical data augmentation and CGAN to detect COVID-19 from chest CT radiography digital images. Neural Comput. Appl. 2020, 1–13.

48. Li, X.; Zhang, W.; Ding, Q.; Li, X. Diagnosing Rotating Machines with Weakly Supervised Data Using Deep Transfer Learning. IEEE Trans. Ind. Inform. 2020, 16, 1688–1697.

49. Wen, L.; Gao, L.; Li, X. A new deep transfer learning based on sparse auto-encoder for fault diagnosis. IEEE Trans. Syst. Man, Cybern. Syst. 2019, 49, 136–144.

50. Simon, M.; Rodner, E.; Denzler, J. ImageNet Pre-Trained Models with Batch Normalization. arXiv 2016, arXiv:1612.01452.

51. Neyshabur, B.; Sedghi, H.; Zhang, C. What is being transferred in transfer learning? Adv. Neural Inf. Process. Syst. 2020, 33, 512–523.

52. Mensink, T.; Uijlings, J.; Kuznetsova, A.; Gygli, M.; Ferrari, V. Factors of Influence for Transfer Learning across Diverse Appearance Domains and Task Types. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 9298–9314.

53. Iman, M.; Miller, J.A.; Rasheed, K.; Branch, R.M.; Arabnia, H.R. EXPANSE: A Continual and Progressive Learning System for Deep Transfer Learning. In Proceedings of the 2022 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 14–16 December 2022; IEEE: Piscataway, NJ, USA, 2022.

| Ref. | Year | Title | Data Type | Time Series | Approach | CNN | Known Models Used | Dataset Field |
|---|---|---|---|---|---|---|---|---|
| [14] | 2022 | UAV swarm-based radar signal sorting via multi-source data fusion: A deep transfer learning framework | Image | No | Fine-tuning | Yes | YOLO, Faster R-CNN, Cascade R-CNN | Radar image |
| [15] | 2022 | Classification of analyzable metaphase images using transfer learning and fine tuning | Image | No | Fine-tuning | Yes | VGG16, Inception-v3 | Medical image |
| [16] | 2021 | MCFT-CNN: Malware classification with fine-tune convolution neural networks using traditional and transfer learning in Internet of Things | Image | No | Fine-tuning | Yes | ResNet50 | Malware classification |
| [17] | 2021 | Multiclassification of Endoscopic Colonoscopy Images Based on Deep Transfer Learning | Image | No | Fine-tuning | Yes | AlexNet, VGG, ResNet | Medical image |
| [18] | 2021 | Facial Emotion Recognition Using Transfer Learning in the Deep CNN | Image | No | Fine-tuning | Yes | VGGs, ResNets, Inception-v3, DenseNet-161 | Facial emotion recognition (FER) |
| [6] | 2020 | Automated Deep Transfer Learning-Based Approach for Detection of COVID-19 Infection in Chest X-rays | Image | No | Fine-tuning | Yes | Inception-Xception | Medical image |
| [7] | 2020 | Classification of the COVID-19 infected patients using DenseNet201 based deep transfer learning | Image | No | Fine-tuning | Yes | ImageNet, DenseNet | Medical image |
| [19] | 2019 | Enhancing materials property prediction by leveraging computational and experimental data using deep transfer learning | Tabular/big data | Yes | Fine-tuning | No | None | Quantum mechanics |
| [20] | 2019 | Application of deep transfer learning for automated brain abnormality classification using MR images | Image | No | Fine-tuning | Yes | ResNet | Medical image |
| [21] | 2019 | An adaptive deep transfer learning method for bearing fault diagnosis | Tabular/big data | Yes | Fine-tuning | No | LSTM RNN | Mechanical engineering |
| [22] | 2019 | Online detection for bearing incipient fault based on deep transfer learning | Image | Yes | Fine-tuning | Yes | VGG16 | Mechanical engineering |
| [23] | 2019 | Towards More Accurate Automatic Sleep Staging via Deep Transfer Learning | Tabular/big data | Yes | Fine-tuning | Yes | None | Medical data |
| [24] | 2019 | Deep Transfer Learning for Multiple Class Novelty Detection | Image | No | Fine-tuning | Yes | AlexNet, VGGNet | Vision |
| [25] | 2019 | A Digital-Twin-Assisted Fault Diagnosis Using Deep Transfer Learning | Tabular/big data | No | Fine-tuning | No | None | Mechanical engineering |
| [26] | 2019 | Learning to Discover Novel Visual Categories via Deep Transfer Clustering | Image | No | Fine-tuning | Yes | None | Vision |
| [27] | 2018 | Deep Transfer Learning for Person Re-identification | Image | No | Fine-tuning | Yes | None | Identification/security |
| [28] | 2018 | Deep Transfer Learning for Art Classification Problems | Image | No | Fine-tuning | Yes | None | Art |
| [29] | 2018 | Classification and unsupervised clustering of LIGO data with Deep Transfer Learning | Image | No | Fine-tuning | Yes | None | Physics/astrophysics |
| [30] | 2018 | Empirical Study and Improvement on Deep Transfer Learning for Human Activity Recognition | Tabular/big data | Yes | Fine-tuning | Yes | None | Human activity recognition |
| [31] | 2018 | Automatic ICD-9 coding via deep transfer learning | Tabular/big data | No | Fine-tuning | Yes | None | Medical |
| [32] | 2017 | Video-based emotion recognition in the wild using deep transfer learning and score fusion | Video (audio and visual) | Yes | Fine-tuning | Yes | VGG-Face | Human science/psychology |
| [33] | 2022 | Deep transfer learning-based visual classification of pressure injuries stages | Image | No | Freezing CNN layers | Yes | DenseNet121, Inception-v3, MobileNetV2, ResNets, VGG16 | Medical image |
| [34] | 2021 | Deep Transfer Learning for WiFi Localization | Tabular/big data | No | Freezing CNN layers | Yes | None | WiFi localization |
| [35] | 2020 | Automated invasive ductal carcinoma detection based using deep transfer learning with whole-slide images | Image | No | Freezing CNN layers | Yes | ResNet, DenseNet | Medical image |
| [36] | 2019 | Deep Transfer Learning for Signal Detection in Ambient Backscatter Communications | Tabular/big data | No | Freezing CNN layers | Yes | None | Telecommunication |
| [37] | 2019 | Brain tumor classification using deep CNN features via transfer learning | Image | No | Freezing CNN layers | Yes | GoogLeNet | Medical image |
| [38] | 2018 | Comparison of Deep Transfer Learning Strategies for Digital Pathology | Image | No | Freezing CNN layers | Yes | None | Medical image |
| [39] | 2018 | Deep transfer learning for military object recognition under small training set condition | Image | No | Freezing CNN layers | Yes | None | Military |
| [40] | 2018 | Deep Transfer Learning for Image-Based Structural Damage Recognition | Image | No | Freezing CNN layers | Yes | VGGNet | Civil engineering |
| [41] | 2017 | Deep Transfer Learning for Modality Classification of Medical Images | Image | No | Freezing CNN layers | Yes | VGGNet, ResNet | Medical image |
| [42] | 2017 | Folding Membrane Proteins by Deep Transfer Learning | Tabular/big data | No | Freezing CNN layers | Yes | ResNet | Chemistry |
| [43] | 2021 | Progressive Transfer Learning Approach for Identifying the Leaf Type by Optimizing Network Parameters | Image | No | Progressive learning | Yes | ResNet50 | Plant science |
| [44] | 2020 | An Evaluation of Progressive Neural Networks for Transfer Learning in Natural Language Processing | NLP/text | No | Progressive learning | No | None | NLP |
| [45] | 2020 | Progressive Transfer Learning and Adversarial Domain Adaptation for Cross-Domain Skin Disease Classification | Image | No | Progressive learning | Yes | None | Medical image |
| [46] | 2017 | Progressive Neural Networks for Transfer Learning in Emotion Recognition | Image and audio | Yes | Progressive learning | No | None | Paralinguistics |
| [47] | 2020 | A deep transfer learning model with classical data augmentation and CGAN to detect COVID-19 from chest CT radiography digital images | Image | No | Adversarial-based | Yes | AlexNet, VGG16, VGG19, GoogLeNet, ResNet50 | Medical image |
| [48] | 2019 | Diagnosing Rotating Machines with Weakly Supervised Data Using Deep Transfer Learning | Tabular/big data | Yes | Adversarial-based | Yes | None | Mechanical engineering |
| [49] | 2017 | A New Deep Transfer Learning Based on Sparse Auto-Encoder for Fault Diagnosis | Tabular/big data | Yes | Sparse autoencoder | No | None | Mechanical engineering |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Iman, M.; Arabnia, H.R.; Rasheed, K. A Review of Deep Transfer Learning and Recent Advancements. Technologies 2023, 11, 40. https://doi.org/10.3390/technologies11020040