Specific Electronic Platform to Test the Influence of Hypervisors on the Performance of Embedded Systems

Abstract

1. Introduction

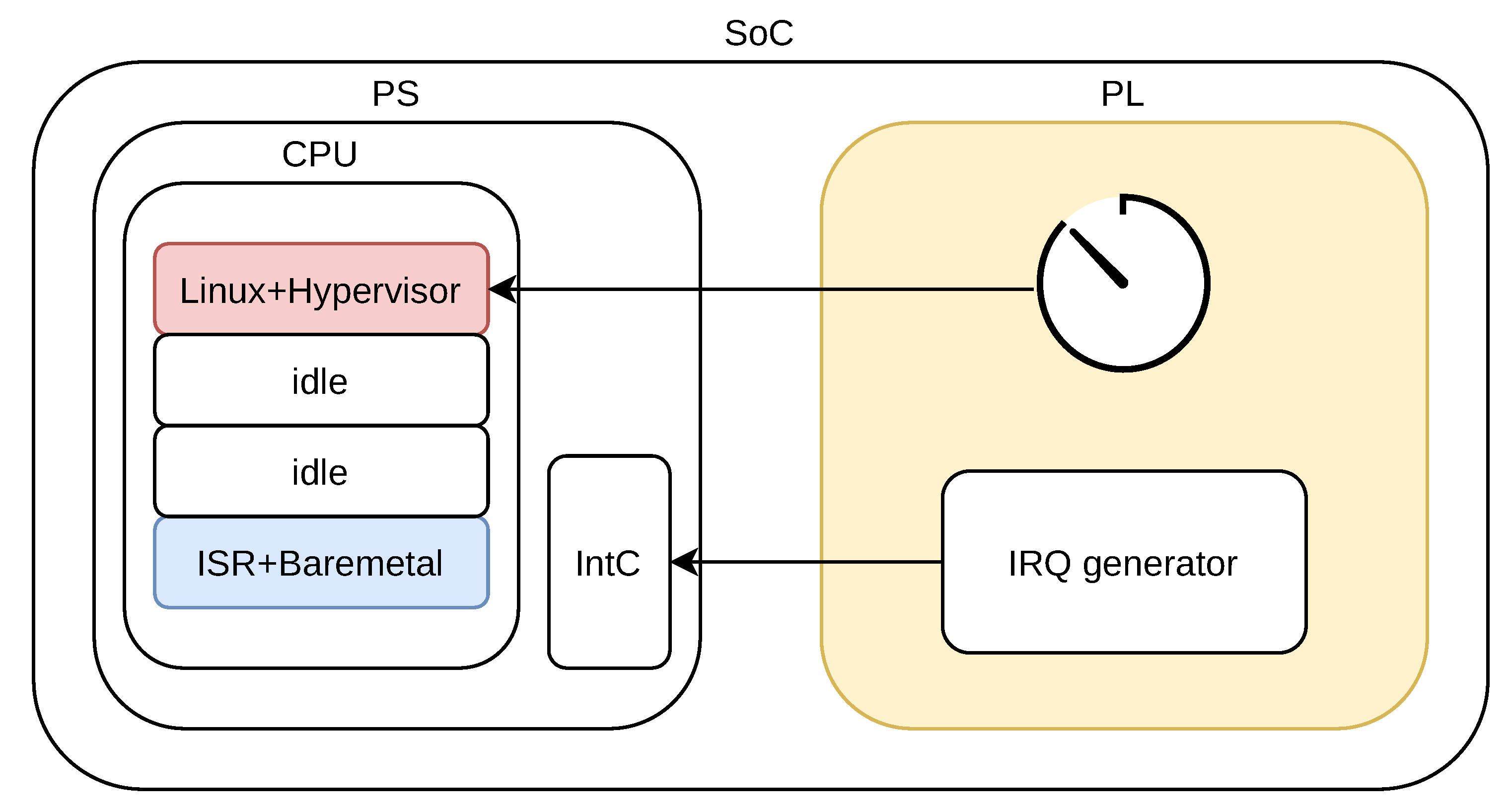

- Flexibility of a higher-level operating system;

- Careful control of latencies of a baremetal application;

- Security by separation of hardware and software between both elements.

2. Hardware-Testbenches for Hypervisors

3. The Electronic Platform

- Two of the most widely used hypervisors, Xen and Jailhouse, were selected as the reference for the design requirements. Each project maintains a list of the hardware systems on which it has been tested; the Xen list is longer because Xen is the older project. For the sake of generality, the selected hardware should appear in both lists. If it does not, running both hypervisors on it should at least be considered feasible;

- To test the virtualized systems, tools are required to generate the Linux cell (with its kernel, device tree and file system) and the baremetal cell. The selected solution must provide this software; availability of the source code is also valuable in case modifications are needed.

- UltraScale+ MPSoC XZU3EG-1SFVA625E, which includes:

  - 4 ARM Cortex-A53 (ARMv8) cores (up to 1.2 GHz)

  - 2 ARM Cortex-R5 cores (up to 500 MHz)

  - Mali-400 MP2 graphic processor (up to 600 MHz)

  - 154K Logic Cells

  - 141K flip-flops

  - 7.6 Mb RAM

  - 360 DSP blocks

- 2 GB DDR4 SDRAM

- 64 MB QSPI flash

- 8 GB eMMC flash

- 1 Gigabit Ethernet

- 1 SD card

- 12 Peripheral Module (PMOD) interfaces in PL

- 2 USB-UARTs

- 1 JTAG

- 8 switches and 8 LEDs in PL

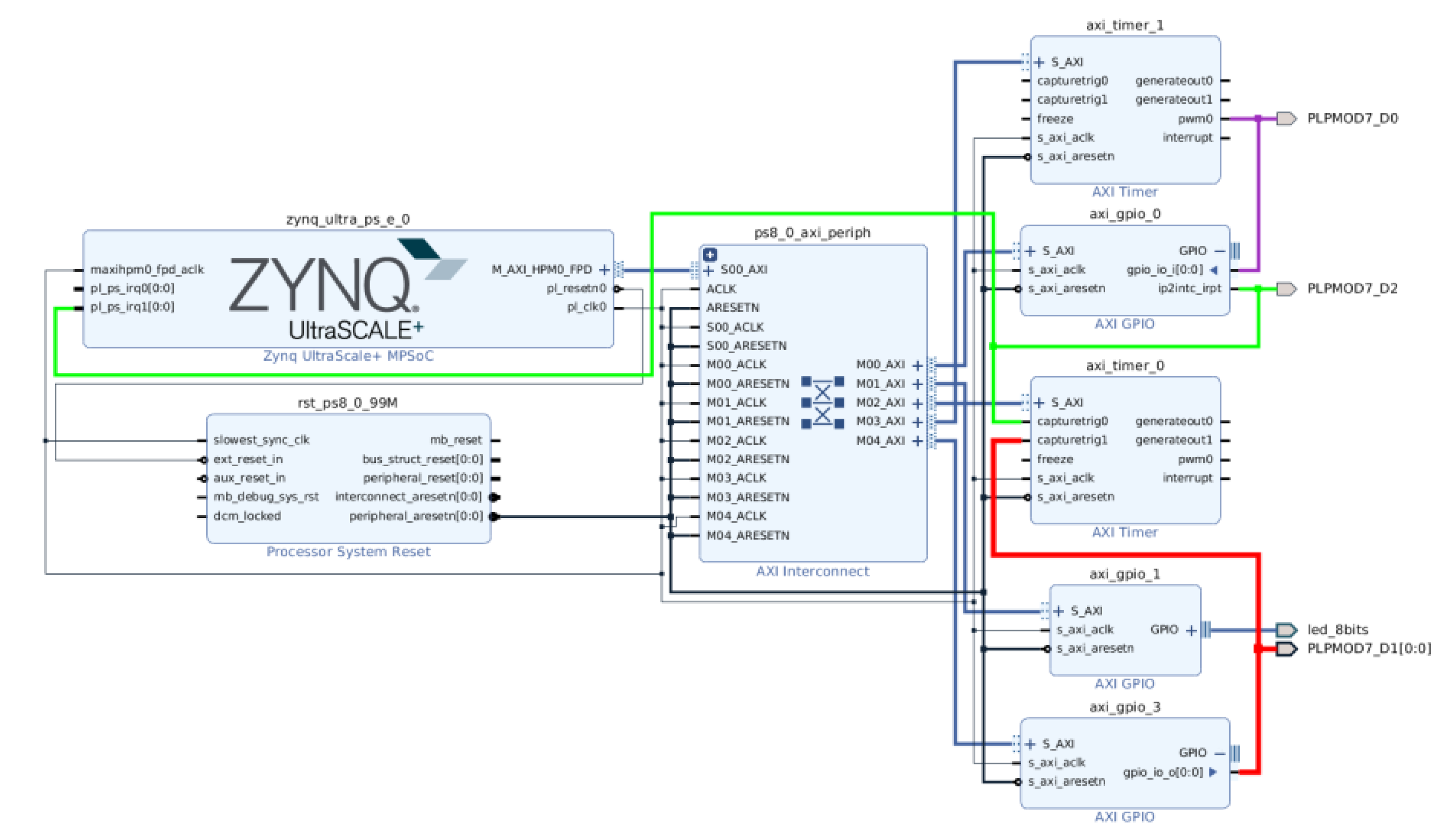

Hardware System Description for the UltraScale+ MPSoC

- axi_gpio_0 [33]: generates an interrupt whenever its input changes. This interrupt, generated in the PL, is routed to the PS as an interrupt input;

- axi_timer_1 [34]: configured in pulse-width modulation (PWM) mode; its output is the input signal of axi_gpio_0;

- axi_gpio_3: its output signal is activated from the interrupt service routine;

- axi_timer_0: captures two events: the interrupt generated by axi_gpio_0 and the activation of axi_gpio_3, which occurs when the interrupt is serviced. The difference between the two captured times is therefore the time needed to service the interrupt;

- axi_gpio_1: its output is connected to an LED so that execution can be followed visually.
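The latency computation implied by the axi_timer_0 description can be sketched as follows. This is a minimal illustration, not the authors' code: the 32-bit counter width, the up-counting free-running counter, and the 100 MHz clock used in the usage note are assumptions, and the function names are hypothetical.

```python
COUNTER_BITS = 32  # assumed width of the free-running capture counter


def latency_ticks(capture_irq: int, capture_isr: int, bits: int = COUNTER_BITS) -> int:
    """Ticks elapsed between the interrupt capture and the ISR capture.

    The modulo keeps the result correct if the counter wraps once
    between the two captures (assumes an up-counting counter).
    """
    return (capture_isr - capture_irq) % (1 << bits)


def ticks_to_ns(ticks: int, clock_hz: int) -> float:
    """Convert counter ticks to nanoseconds for a given timer clock."""
    return ticks * 1e9 / clock_hz
```

For example, with an assumed 100 MHz timer clock, `ticks_to_ns(latency_ticks(100, 219), 100_000_000)` gives 1190.0 ns. Because both events are captured in hardware, the measurement itself adds no software overhead to the interval being measured.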

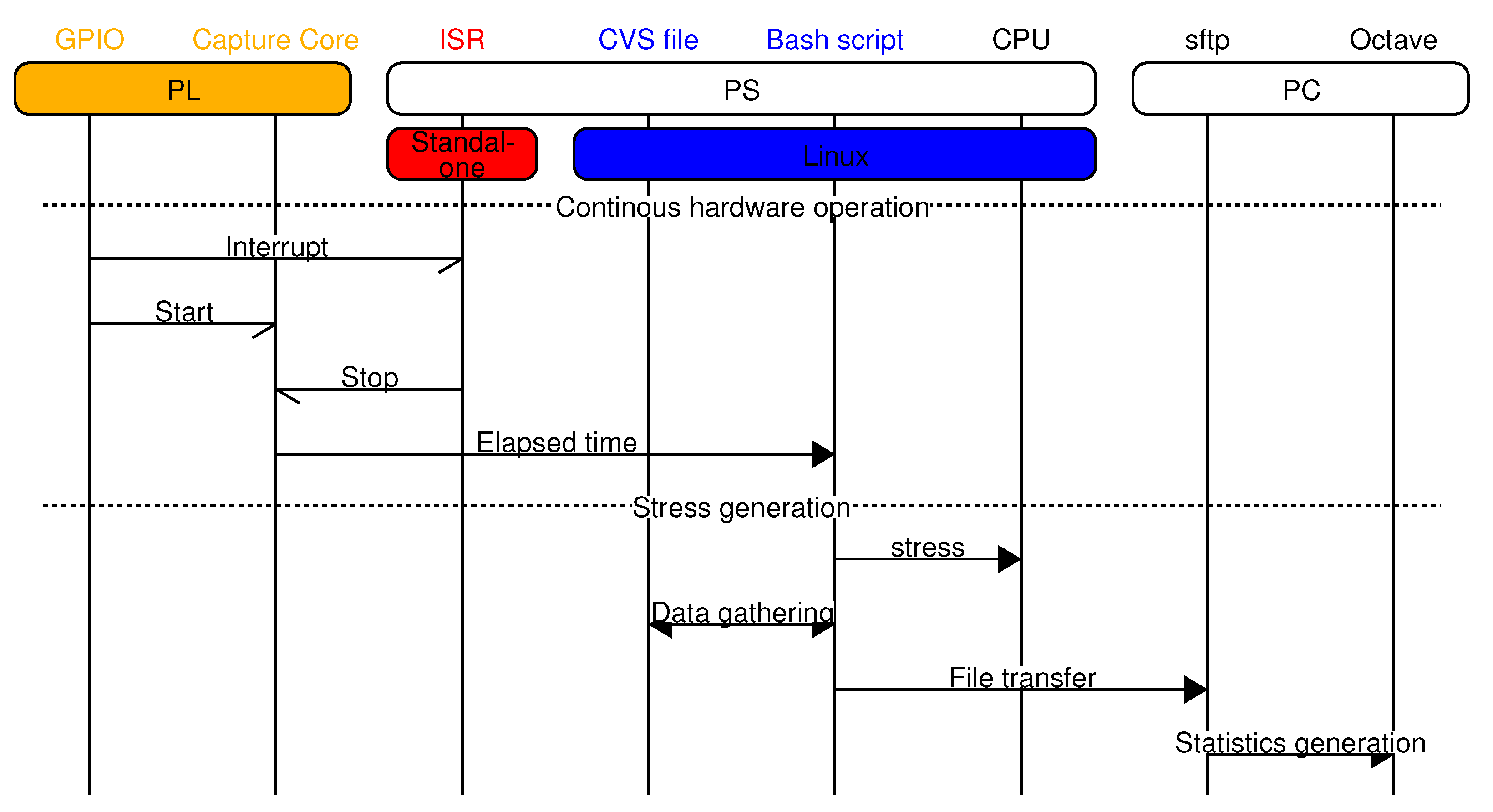

4. Test Procedure

- Create a base application-level operating system (Linux, in our case). This requires:

  - Building a kernel with hypervisor support;

  - Adding the hypervisor executable and configuration;

- Create a base low-latency application. In our case, a baremetal application has been created for the interrupt service function. More generally, any real-time application could be used, either baremetal or RTOS-based;

- Generate a periodic interrupt using the hardware timer;

- Capture the time taken to serve the interrupt using the hardware capture module;

- Repeat the operation a statistically significant number of times to obtain data under different CPU loads. The operating system running under the hypervisor is stressed to test the impact of the hypervisor on the high-priority baremetal application.
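The repeat-under-load step of the procedure above might be organized along these lines on the host that collects the data. This is only a sketch under stated assumptions: `run_campaign`, `apply_load` and `read_latency` are hypothetical names, and how the load is started or how a capture value is read back depends on the actual setup.

```python
from typing import Callable, Dict, Iterable, List


def run_campaign(
    loads: Iterable[str],
    samples_per_load: int,
    apply_load: Callable[[str], None],
    read_latency: Callable[[], float],
) -> Dict[str, List[float]]:
    """Collect `samples_per_load` latency readings under each load profile."""
    results: Dict[str, List[float]] = {}
    for load in loads:
        # Hypothetical hook: start the given stress workload in the Linux cell.
        apply_load(load)
        # Hypothetical hook: return one interrupt latency from the capture module.
        results[load] = [read_latency() for _ in range(samples_per_load)]
    return results
```

Passing the two hardware-dependent operations in as callables keeps the collection loop identical for every hypervisor and load profile, so only the hooks change between runs.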

4.1. General Flow

4.2. Validation Test

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Toumassian, S.; Werner, R.; Sikora, A. Performance measurements for hypervisors on embedded ARM processors. In Proceedings of the 2016 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Jaipur, India, 21–24 September 2016; IEEE: Piscataway, NJ, USA, 2016.

- Kou, E. Virtualization for Embedded Industrial Systems. 2018. SPRY317A. Available online: https://www.ti.com/lit/wp/spry317b/spry317b.pdf (accessed on 25 April 2022).

- Greenbaum, J.; Garlati, C. Hypervisors in Embedded Systems. Applications and Architectures. In Proceedings of the Embedded World Conference, Nuremberg, Germany, 27 February–1 March 2018.

- IEC 61850-1 ed2.0; Communication Networks and Systems for Power Utility Automation—Part 1: Introduction and Overview. IEC: Geneva, Switzerland, 2013.

- Polenov, M.; Guzik, V.; Lukyanov, V. Hypervisors Comparison and Their Performance Testing. In Advances in Intelligent Systems and Computing; Springer International Publishing: Berlin/Heidelberg, Germany, 2018; pp. 148–157.

- Poojara, S.R.; Ghule, V.B.; Birje, M.N.; Dharwadkar, N.V. Performance Analysis of Linux Container and Hypervisor for Application Deployment on Clouds. In Proceedings of the 2018 International Conference on Computational Techniques, Electronics and Mechanical Systems (CTEMS), Belgaum, India, 21–22 December 2018; IEEE: Piscataway, NJ, USA, 2018.

- Ferreira, R.D.F.; de Oliveira, R.S. Cloud IEC 61850: DDS Performance in Virtualized Environment with OpenDDS. In Proceedings of the 2017 IEEE International Conference on Computer and Information Technology (CIT), Helsinki, Finland, 21–23 August 2017; IEEE: Piscataway, NJ, USA, 2017.

- Graniszewski, W.; Arciszewski, A. Performance analysis of selected hypervisors (Virtual Machine Monitors—VMMs). Int. J. Electron. Telecommun. 2016, 62, 231–236.

- Pesic, D.; Djordjevic, B.; Timcenko, V. Competition of virtualized ext4, xfs and btrfs filesystems under type-2 hypervisor. In Proceedings of the 2016 24th Telecommunications Forum (TELFOR), Belgrade, Serbia, 22–23 November 2016; IEEE: Piscataway, NJ, USA, 2016.

- Vojtesek, J.; Pipis, M. Virtualization of Operating System Using Type-2 Hypervisor. In Advances in Intelligent Systems and Computing; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 239–247.

- Bujor, A.C.; Dobre, R. KVM IO profiling. In Proceedings of the 2013 RoEduNet International Conference 12th Edition: Networking in Education and Research, Iasi, Romania, 26–28 September 2013; IEEE: Piscataway, NJ, USA, 2013.

- Yang, Z.; Fang, H.; Wu, Y.; Li, C.; Zhao, B.; Huang, H.H. Understanding the effects of hypervisor I/O scheduling for virtual machine performance interference. In Proceedings of the 4th IEEE International Conference on Cloud Computing Technology and Science Proceedings, Taipei, Taiwan, 3–6 December 2012; IEEE: Piscataway, NJ, USA, 2012.

- Binu, A.; Kumar, G.S. Virtualization Techniques: A Methodical Review of XEN and KVM. In Advances in Computing and Communications; Springer: Berlin/Heidelberg, Germany, 2011; pp. 399–410.

- Zhang, L.; Bai, Y.; Luo, C. idsocket: API for Inter-domain Communications Base on Xen. In Algorithms and Architectures for Parallel Processing; Springer: Berlin/Heidelberg, Germany, 2010; pp. 324–336.

- Xu, X.; Zhou, F.; Wan, J.; Jiang, Y. Quantifying Performance Properties of Virtual Machine. In Proceedings of the 2008 International Symposium on Information Science and Engineering, Shanghai, China, 20–22 December 2008; IEEE: Piscataway, NJ, USA, 2008.

- Dordevic, B.; Timcenko, V.; Kraljevic, N.; Davidovic, N. File system performance comparison in full hardware virtualization with ESXi and Xen hypervisors. In Proceedings of the 2019 18th International Symposium INFOTEH-JAHORINA (INFOTEH), East Sarajevo, Bosnia and Herzegovina, 20–22 March 2019; IEEE: Piscataway, NJ, USA, 2019.

- Yang, Z.; Liu, C.; Zhou, Y.; Liu, X.; Cao, G. SPDK Vhost-NVMe: Accelerating I/Os in Virtual Machines on NVMe SSDs via User Space Vhost Target. In Proceedings of the 2018 IEEE 8th International Symposium on Cloud and Service Computing (SC2), Paris, France, 18–21 November 2018; IEEE: Piscataway, NJ, USA, 2018.

- Sok, S.W.; Jung, Y.W.; Lee, C.H. Optimizing System Call Latency of ARM Virtual Machines. J. Phys. Conf. Ser. 2017, 787, 012032.

- Zhang, D.; Wu, H.; Xue, F.; Chen, L.; Huang, H. High Performance and Scalable Virtual Machine Storage I/O Stack for Multicore Systems. In Proceedings of the 2017 IEEE 23rd International Conference on Parallel and Distributed Systems (ICPADS), Shenzhen, China, 15–17 December 2017; IEEE: Piscataway, NJ, USA, 2017.

- Ul Ain, Q.; Anwar, U.; Mehmood, M.A.; Waheed, A. HTTM—Design and Implementation of a Type-2 Hypervisor for MIPS64 Based Systems. J. Phys. Conf. Ser. 2017, 787, 012006.

- Twardowski, M.; Pastucha, E.; Kolecki, J. Performance of the Automatic Bundle Adjustment in the Virtualized Environment. In Proceedings of the 2016 Baltic Geodetic Congress (BGC Geomatics), Gdansk, Poland, 2–4 June 2016; IEEE: Piscataway, NJ, USA, 2016.

- Borislav, Đ.; Nemanja Maček, V.T. Performance Issues in Cloud Computing: KVM Hypervisor’s Cache Modes Evaluation. Acta Polytech. Hung. 2015, 12, 147–165.

- Ye, K.; Wu, Z.; Zhou, B.B.; Jiang, X.; Wang, C.; Zomaya, A.Y. Virt-B: Towards Performance Benchmarking of Virtual Machine Systems. IEEE Internet Comput. 2014, 18, 64–72.

- Tang, X.; Zhang, Z.; Wang, M.; Wang, Y.; Feng, Q.; Han, J. Performance Evaluation of Light-Weighted Virtualization for PaaS in Clouds. In Algorithms and Architectures for Parallel Processing; Springer International Publishing: Berlin/Heidelberg, Germany, 2014; pp. 415–428.

- Cacheiro, J.L.; Fernández, C.; Freire, E.; Díaz, S.; Simón, A. Providing Grid Services Based on Virtualization and Cloud Technologies. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2010; pp. 444–453.

- André Bögelsack, H.K.; Wittges, H. Performance Overhead of Paravirtualization on an Exemplary Erp System. In Proceedings of the 12th International Conference on Enterprise Information Systems, Funchal, Portugal, 8–12 June 2010; SciTePress—Science and Technology Publications: Setubal, Portugal, 2010.

- Kloda, T.; Solieri, M.; Mancuso, R.; Capodieci, N.; Valente, P.; Bertogna, M. Deterministic Memory Hierarchy and Virtualization for Modern Multi-Core Embedded Systems. In Proceedings of the 2019 IEEE Real-Time and Embedded Technology and Applications Symposium (RTAS), Montreal, QC, Canada, 16–18 April 2019; IEEE: Piscataway, NJ, USA, 2019.

- Heiser, G.; Leslie, B. The OKL4 microvisor. In Proceedings of the First ACM Asia-Pacific Workshop on Workshop on Systems—APSys’10, New Delhi, India, 30 August 2010; ACM Press: New York, NY, USA, 2010.

- Danielsson, J.; Seceleanu, T.; Jagemar, M.; Behnam, M.; Sjodin, M. Testing Performance-Isolation in Multi-core Systems. In Proceedings of the 2019 IEEE 43rd Annual Computer Software and Applications Conference (COMPSAC), Milwaukee, WI, USA, 15–19 July 2019; IEEE: Piscataway, NJ, USA, 2019.

- Bansal, A.; Tabish, R.; Gracioli, G.; Mancuso, R.; Pellizzoni, R.; Caccamo, M. Evaluating memory subsystem of configurable heterogeneous MPSoC. In Proceedings of the 14th Annual Workshop on Operating Systems Platforms for Embedded Real-Time Applications, Barcelona, Spain, 3 July 2018.

- Xilinx. Zynq UltraScale+ MPSoC ZCU102; Technical Report; Xilinx: San Jose, CA, USA, 2020.

- Xilinx. Zynq UltraScale+ MPSoC Data Sheet: Overview; Technical Report; Xilinx: San Jose, CA, USA, 2019.

- Xilinx. AXI GPIO v2.0 LogiCORE IP Product Guide; Technical Report; Xilinx: San Jose, CA, USA, 2016.

- Xilinx. AXI Timer v2.0 LogiCORE IP Product Guide; Technical Report; Xilinx: San Jose, CA, USA, 2016.

| Platform | References |
|---|---|
| Computer | [5,6,7,8,9,10,11,12,13,14,15] |
| Servers | [16,17,18,19,20,21,22,23,24,25,26] |
| Embedded | [1,27,28] |
| FPGA | [29] |

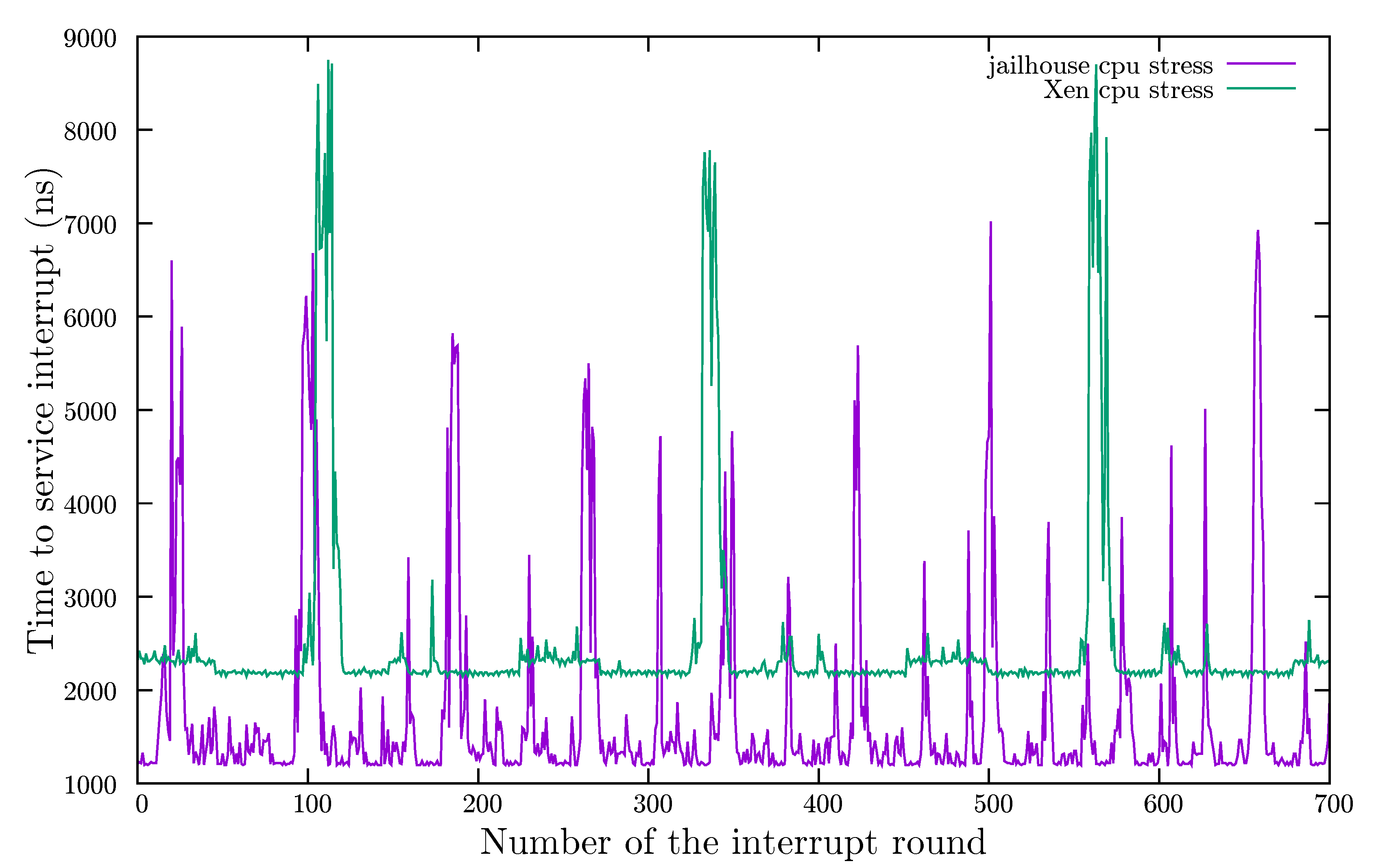

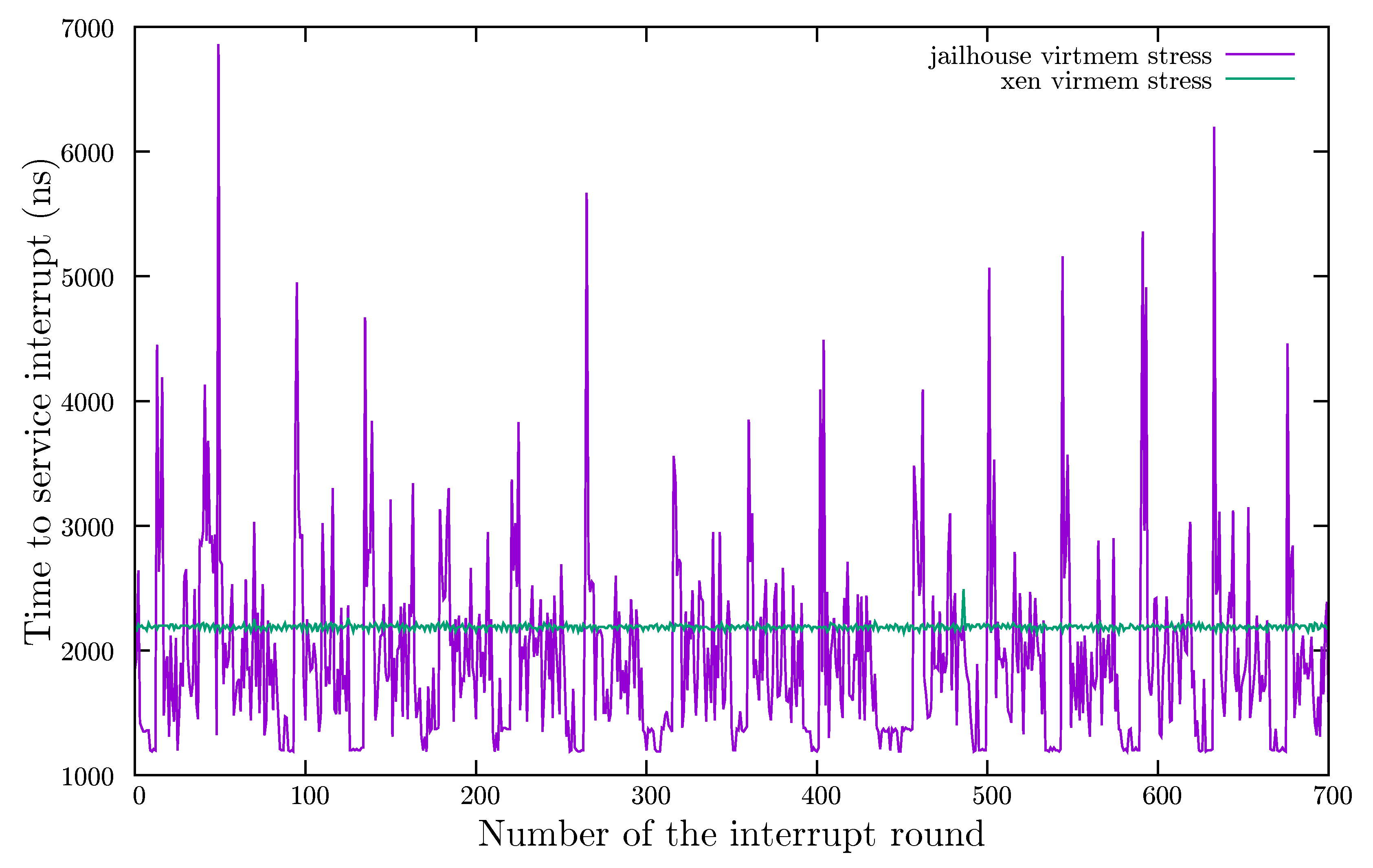

| Statistics | CPU Stress (Jailhouse) | CPU Stress (Xen) | Virtmem Stress (Jailhouse) | Virtmem Stress (Xen) | All Stress (Jailhouse) | All Stress (Xen) |
|---|---|---|---|---|---|---|
| min | 1190.0000 | 2140.0000 | 1190.0000 | 2140.0000 | 1190.0000 | 2140.0000 |
| Q1 | 1220.0000 | 2190.0000 | 1420.0000 | 2180.0000 | 1200.0000 | 2190.0000 |
| median | 1320.0000 | 2210.0000 | 1790.0000 | 2190.0000 | 1310.0000 | 2220.0000 |
| Q3 | 1580.0000 | 2310.0000 | 2230.0000 | 2200.0000 | 1460.0000 | 2310.0000 |
| max | 7020.0000 | 8750.0000 | 6860.0000 | 2490.0000 | 7050.0000 | 8750.0000 |
| mean | 1705.7489 | 2481.8545 | 1934.0942 | 2188.4593 | 1611.0699 | 2337.4608 |
| StdDev | 1033.8568 | 1022.1210 | 721.2251 | 21.4788 | 943.2967 | 556.9779 |
| skewness | 3.0235 | 4.5639 | 2.2143 | 3.7068 | 3.5246 | 7.8376 |
| kurtosis | 11.8676 | 23.2601 | 11.0569 | 57.1830 | 15.4045 | 71.8205 |
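The rows of this table are standard descriptive statistics and could be computed from a list of latency samples roughly as shown below. This is a sketch rather than the authors' analysis script: the inclusive quartile method, the population (divide-by-n) moments, and the Pearson (non-excess) kurtosis convention are all assumptions, since the conventions used for the table are not stated here, and `summarize` is a hypothetical name.

```python
import math
import statistics
from typing import Dict, Sequence


def summarize(samples: Sequence[float]) -> Dict[str, float]:
    """Descriptive statistics with the same row labels as the results table.

    Requires at least two samples that are not all equal.
    """
    q1, median, q3 = statistics.quantiles(samples, n=4, method="inclusive")
    mean = statistics.fmean(samples)
    n = len(samples)
    # Central moments in population form (dividing by n): an assumption.
    m2 = sum((x - mean) ** 2 for x in samples) / n
    m3 = sum((x - mean) ** 3 for x in samples) / n
    m4 = sum((x - mean) ** 4 for x in samples) / n
    return {
        "min": min(samples),
        "Q1": q1,
        "median": median,
        "Q3": q3,
        "max": max(samples),
        "mean": mean,
        "StdDev": math.sqrt(m2),
        "skewness": m3 / m2 ** 1.5,
        "kurtosis": m4 / m2 ** 2,  # Pearson form: 3.0 for a normal distribution
    }
```

Positive skewness together with a large kurtosis, as in every column of the table, indicates a long right tail: most interrupts are served close to the minimum time, while a small number take much longer.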

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jiménez, J.; Muguira, L.; Bidarte, U.; Largacha, A.; Lázaro, J. Specific Electronic Platform to Test the Influence of Hypervisors on the Performance of Embedded Systems. Technologies 2022, 10, 65. https://doi.org/10.3390/technologies10030065
