Does One Size Fit All? A Case Study to Discuss Findings of an Augmented Hands-Free Robot Teleoperation Concept for People with and without Motor Disabilities

Abstract

1. Introduction

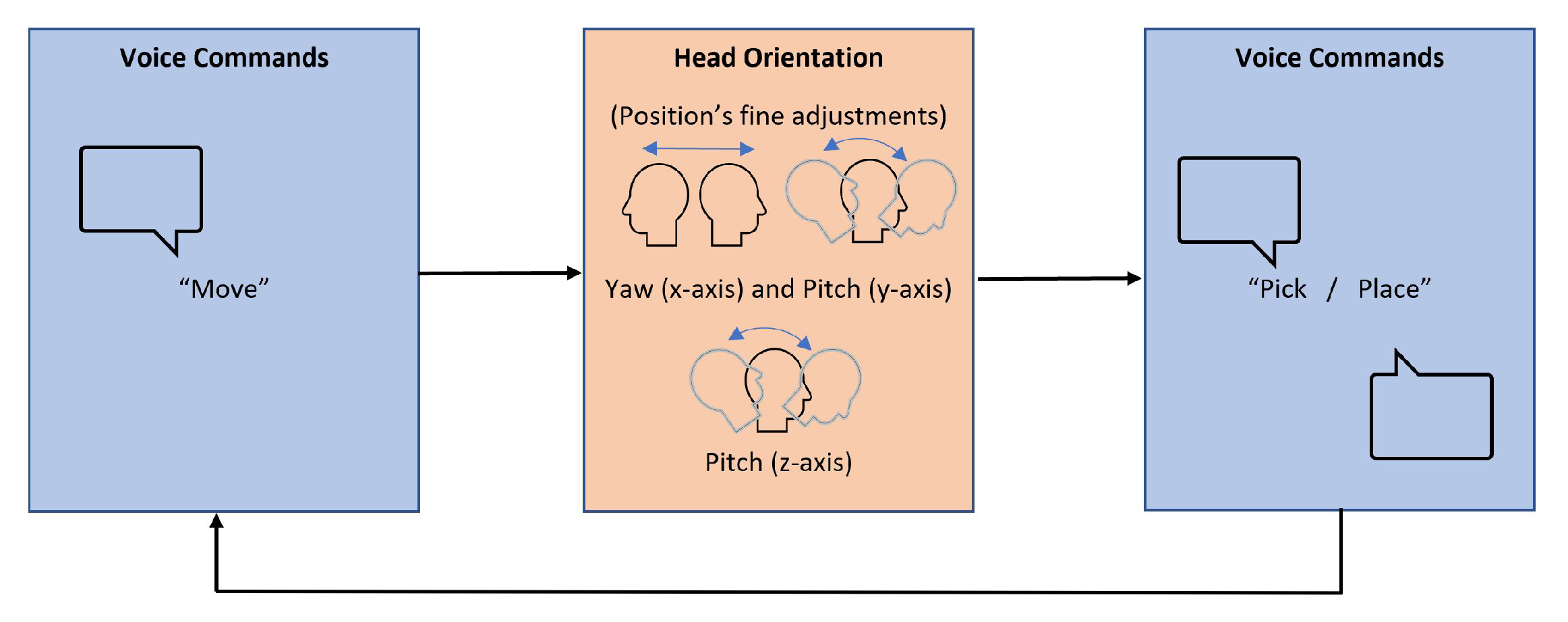

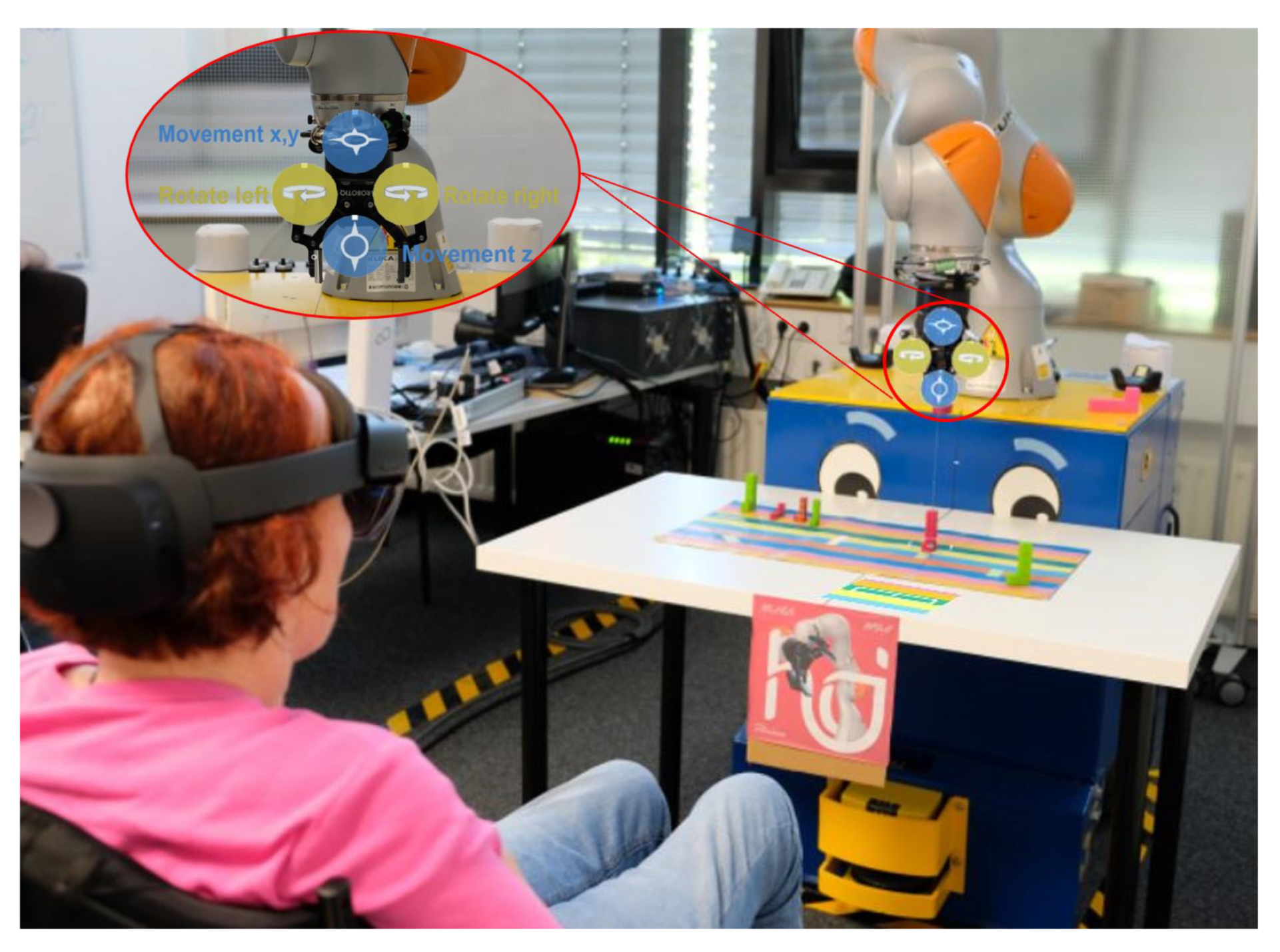

2. An Augmented Hands-Free Teleoperation Concept

3. A Case Study—Miss L.

3.1. A Personal Background

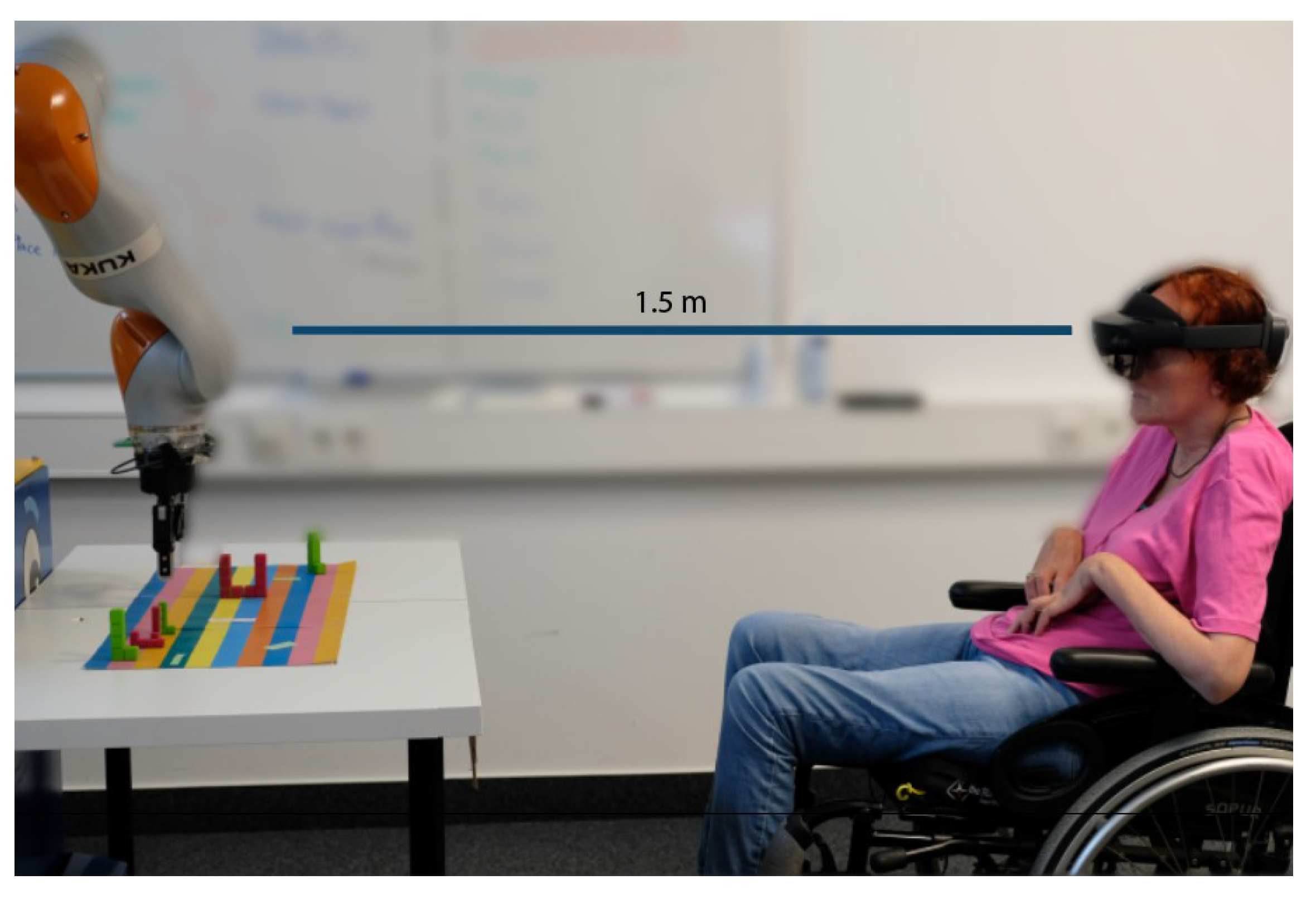

3.2. Study

3.3. Findings

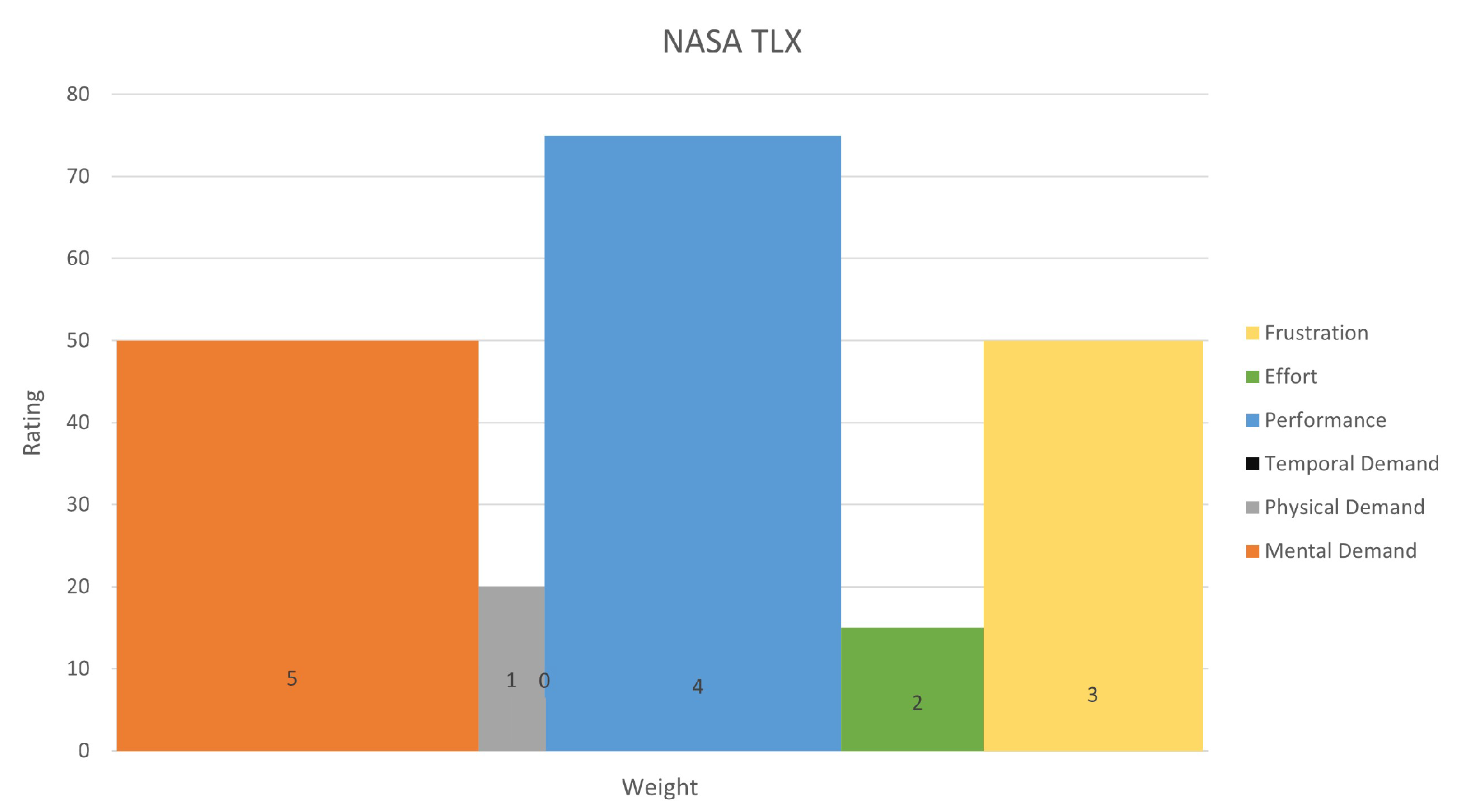

3.3.1. Questionnaire Results

3.3.2. Interview

“In my opinion there were never problems because there is always an emergency button, so I am not afraid of robotic arms. I do not get easily scared but it is important to have an emergency button. The hardest part is always to understand how everything works and what I should do. I do not always know what to do and I take some time until I fully understand everything.”

“Usually, I like voice commands, but today I do not have a lot of energy to speak. So that is why the voice commands were not working today for me. The voice commands are fantastic, but today I am missing energy.

I had another experiment where I had to move my head a lot, and it was very very very exhausting. But here I did not have to do that. I liked that everything felt compact.”

“I think it’s really cool! That is of course rather imprecise. But I felt like a part that contributed to the development. I thought that it was great.

I was also feeling good, I found it interesting, but I felt frustrated. The buttons were constantly activating, and I was not able to deactivate them, thus, I could not use them. When the robot was following the movements of my head, I was not sure where to look. And I was not able to see where the cursor was. I am almost blind from the left eye, so maybe that is the reason behind it.”

“No, I did not feel any pain or discomfort.”

“When I need to look at the visual cues and then use the buttons to control the robot it feels like working with a different display. However, I found those elements easy to understand. What I really liked was having the buttons just in front of me, it was like a screen within the screen. I like that they are attached to the robot.”

“At the beginning, I was not using any of those things. But when you started asking me about them, I realized that I could actually use them. I found the colors useful, it was easier to find where objects were with the colored stripes. I liked the virtual rectangle with the colors at the front. It showed me where the gripper is. Once I realized that, it got clear to me. But at the beginning I was just ignoring all of them.

In other experiments when I have teleoperated a robot, I never knew how far the robot’s gripper was relative to an object. However, when I saw that small virtual rectangle at the front showing me the color, it helped a lot to realize where I was in the workspace.”

“I think it is very good, interesting, and easy to understand. I have positive feelings towards it but I also felt frustrated because I could not see the cursor, so I could not activate the menu and then I could not perform the task at all.”

3.3.3. Discussion

4. Comparing Findings from People with and without Motor Disabilities

4.1. Hands-Free Multimodal Interaction

4.1.1. Similarities

4.1.2. Differences

4.2. Augmented Visual Cues and the Use of AR

4.2.1. Similarities

4.2.2. Differences

5. Participants with and without Motor Disabilities: Learning Points

5.1. Re-Evaluate the Experimental Metrics

5.2. Beware of the Bias

5.3. Consider Variability in Abilities Evoking Different Experiences

6. Limitations

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| AR | Augmented Reality |
| DIX | Disability Interaction |
| GUI | Graphical User Interface |
| HMD | Head-Mounted Display |
| HRI | Human–Robot Interaction |
| IMD | Individual with Motor Disabilities |
| PMD | People with Motor Disabilities |
| SUS | System Usability Scale |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Arévalo Arboleda, S.; Becker, M.; Gerken, J. Does One Size Fit All? A Case Study to Discuss Findings of an Augmented Hands-Free Robot Teleoperation Concept for People with and without Motor Disabilities. Technologies 2022, 10, 4. https://doi.org/10.3390/technologies10010004
