Abstract

Hands-free robot teleoperation and augmented reality have the potential to create an inclusive environment for people with motor disabilities. They may allow them to teleoperate robotic arms to manipulate objects. However, the experiences evoked by the same teleoperation concept and augmented reality can vary significantly for people with motor disabilities compared to those without disabilities. In this paper, we report the experiences of Miss L., a person with multiple sclerosis, when teleoperating a robotic arm in a hands-free multimodal manner using a virtual menu and visual hints presented through the Microsoft HoloLens 2. We discuss our findings and compare her experiences to those of people without disabilities using the same teleoperation concept. Additionally, we present three learning points from comparing these experiences: re-evaluating the metrics used to measure performance, being aware of bias, and considering that variability in abilities evokes different experiences. We believe these learning points can be extrapolated to carrying out human–robot interaction evaluations with mixed groups of participants with and without disabilities.

1. Introduction

Robots and assistive devices have taken a caregiving role for the aging population and people with disabilities [1,2,3]. For people with physical impairments, such as motor disabilities, assistive robotics provides a set of opportunities that can enhance their abilities. People with Motor Disabilities (PMD) have restricted mobility of the upper and/or lower limbs and could perform reaching and manipulation tasks using robotic arms. An example of this is the Lio robot, which is presented as a “multifunctional arm designed for human-robot interaction and personal care assistant tasks” and has been used in different assistive environments and health care facilities [4].

Many PMD maintain their ability to use eye gaze, head movements, and speech, and they use these abilities to interact with other people and the environment. These abilities can serve as hands-free input modalities suitable for robot control [5]. Further, in a multimodal approach to robot teleoperation, modalities may complement one another. For instance, head movements complement eye gaze for pointing and can be coordinated with speech [6]. Park et al. [7] proposed using eye gaze and head movements as a hands-free interaction to perform manipulation tasks with a robotic arm. In addition to multimodality in teleoperation, augmented reality (AR) has gained popularity in robotics as a medium to exchange information, primarily using Head-Mounted Displays (HMDs) [8]. Moreover, using AR in robot teleoperation can enhance the operator’s visual space and provide visual feedback within the operator’s line of sight.

The experiences of people with and without disabilities may vary greatly, even when an experiment is conducted under the same environmental and instrumental conditions. These variations derive from the differences in abilities between the groups. Culen and Velden [9] discuss methodological challenges, arising from differences in cognitive and physical abilities, when using participatory design with vulnerable groups. Moreover, Shinohara et al. [10] identified crucial differences between people with and without disabilities in the use and perception of technology. PMD may have different needs and expectations even when sharing a diagnosis [11] or having a common goal, e.g., at sheltered workshops [12]. We build upon those findings to present the perspective of an Individual with Motor Disabilities (IMD) on a teleoperation concept that was already tested with participants without disabilities [13].

The contribution of this paper is to provide insights from an IMD about a teleoperation concept for a robotic arm using AR (Microsoft HoloLens 2 [14]) in a pick-and-place task. We briefly present our interaction concept to allow for a detailed description of our participant’s experience. Further, we put that experience in context with those of people without disabilities. This allows us to present reflections on similarities and differences in their experiences, as well as learning points from carrying out such an analysis. Our goal is to disseminate the perspectives of PMD and add to the body of knowledge about Disability Interaction (DIX), specifically to the third point in Holloway’s DIX action plan, by reporting in-the-wild studies testing solutions with people with disabilities [15].

2. An Augmented Hands-Free Teleoperation Concept

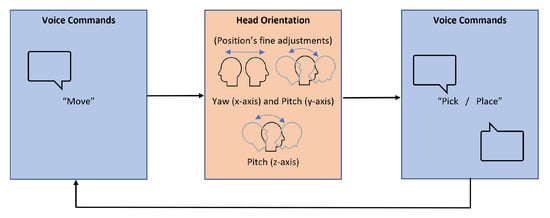

We use a multimodal hands-free approach through head orientation (yaw and pitch) and voice commands to teleoperate a robotic arm in a co-located space to perform pick-and-place tasks. This interaction concept, akin to “Put-that-there” [16], combines speech with deictic gestures. However, to adapt to the abilities of PMD, we used head pose for pointing instead of hand gestures and combined it with voice commands. The interaction flow starts with head pointing to mark a position on the workspace, followed by voice commands to commit an action, e.g., moving the robotic arm to an intended position and grasping/releasing objects, see Figure 1. We describe our interaction concept as follows:

Figure 1.

Diagram of the multimodal hands-free teleoperation.

1. The operator sees a cursor that follows the movements of the head (head pointing).

2. The operator uses head pointing to determine a position on the workspace; the voice command “move” then directs the robotic arm above the pointed position. The operator hears a click and the pointer changes size to indicate that the command has been recognized. The operator then has 1 second to keep or modify the position.

3. Once the operator has determined that the gripper is above a target, the command “pick” commits the grasping action, i.e., the gripper moves along the robot’s z-axis toward the tabletop, closes its fingers, and moves back to its initial height on the z-axis.

4. To release a grasped object, the command “place” moves the gripper along the z-axis toward the tabletop, opens its fingers to release the object, and moves back to its initial height on the z-axis.
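The flow in steps 1 to 4 can be summarized as a small event-driven controller. The following Python sketch is hypothetical and is not the system’s actual implementation: the class and method names, the 300 mm hover height, and the reading of the 1-second window (the target keeps following the head cursor until the window elapses, after which the move is committed) are assumptions, and robot motion is only logged rather than executed.

```python
from dataclasses import dataclass, field


@dataclass
class Gripper:
    x: float = 0.0
    y: float = 0.0
    hover_z: float = 300.0  # assumed hover height above the tabletop (mm)
    closed: bool = False
    log: list = field(default_factory=list)


class HeadVoiceTeleop:
    """Hypothetical sketch of steps 1-4: head pointing plus "move"/"pick"/"place"."""

    CONFIRM_WINDOW_S = 1.0  # time to keep or modify the pointed position

    def __init__(self, table_z: float = 0.0):
        self.gripper = Gripper()
        self.table_z = table_z
        self.pending = None  # (target_xy, time at which "move" was recognized)

    def on_voice(self, command: str, cursor_xy, now: float) -> None:
        if command == "move":
            # The click sound and the pointer resize would be triggered here.
            self.pending = (cursor_xy, now)
        elif command in ("pick", "place"):
            self._vertical_cycle(close=(command == "pick"))

    def update(self, cursor_xy, now: float) -> None:
        """Per-frame update: track the head cursor during the window, then commit."""
        if self.pending is None:
            return
        _, t0 = self.pending
        if now - t0 < self.CONFIRM_WINDOW_S:
            self.pending = (cursor_xy, t0)  # operator may still adjust the target
        else:
            target, _ = self.pending
            self.gripper.x, self.gripper.y = target
            self.gripper.log.append(("move", target))
            self.pending = None

    def _vertical_cycle(self, close: bool) -> None:
        # Descend along z, close or open the fingers, return to the hover height.
        self.gripper.log.append(("descend_to", self.table_z))
        self.gripper.closed = close
        self.gripper.log.append(("close" if close else "open",))
        self.gripper.log.append(("ascend_to", self.gripper.hover_z))


teleop = HeadVoiceTeleop()
teleop.on_voice("move", (100, 50), now=0.0)
teleop.update((110, 55), now=0.5)  # inside the 1 s window: target follows the head
teleop.update((110, 55), now=1.2)  # window elapsed: the move is committed
teleop.on_voice("pick", (110, 55), now=2.0)
```

Separating voice events from the per-frame update keeps the confirmation window independent of how often the speech recognizer fires.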

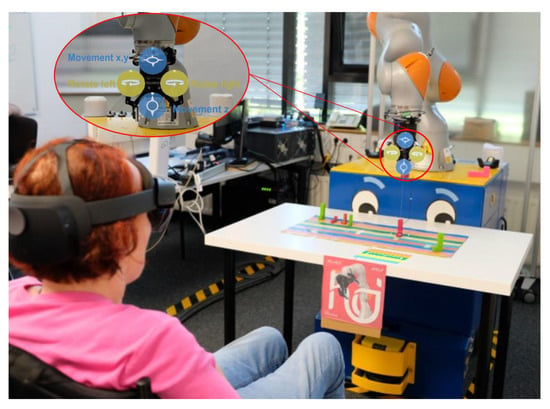

Additionally, our control interface presents two sets of virtual buttons anchored to the real gripper, which are activated by dwelling (Figure 2). The first set of buttons (yellow) rotates the robot’s gripper (10 degrees per second) to the left or right. The second set of virtual buttons (blue) activates a precision mode in which the robotic arm follows the operator’s head yaw and pitch. The top blue button uses the head’s yaw to move the robotic arm left or right and its pitch to move it forward or backward, i.e., in the x-y plane. The lower blue button uses the head’s pitch to move the gripper toward or away from the workspace, i.e., along the z-axis. This mode is intended to allow for fine adjustment of the robot’s position above a target object. The speed ranges from 0 to 800 mm/s, and the operator can control it by directing the cursor farther from the gripper (faster movement) or closer to it (slower movement). For instance, if the operator moves the cursor to the workspace’s borders, the gripper moves faster. When any of the buttons is activated, the others are hidden to avoid Midas touch problems [17].
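The precision mode and the dwell buttons can be illustrated with a short Python sketch; it is not the system’s actual implementation. It assumes a linear mapping from cursor-to-gripper distance to speed that saturates at the stated 800 mm/s once the cursor is 500 mm away (the 500 mm value is an assumption), a 1-second dwell time (the text does not specify one), and hypothetical names throughout.

```python
import math

MAX_SPEED_MM_S = 800.0   # upper bound stated in the text
MAX_DISTANCE_MM = 500.0  # hypothetical distance at which the maximum speed is reached


def precision_velocity(gripper_xy, cursor_xy):
    """Velocity (mm/s) in the x-y plane pointing from the gripper toward the cursor.

    Speed grows linearly with the cursor-gripper distance (assumed mapping)
    and is clamped to MAX_SPEED_MM_S.
    """
    dx = cursor_xy[0] - gripper_xy[0]
    dy = cursor_xy[1] - gripper_xy[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        return (0.0, 0.0)
    speed = MAX_SPEED_MM_S * min(dist / MAX_DISTANCE_MM, 1.0)
    return (speed * dx / dist, speed * dy / dist)


class DwellButton:
    """Virtual button activated by resting the cursor on it for dwell_s seconds."""

    def __init__(self, name: str, dwell_s: float = 1.0):  # dwell time is assumed
        self.name = name
        self.dwell_s = dwell_s
        self.enter_time = None

    def update(self, hovered: bool, now: float) -> bool:
        """Return True once the cursor has stayed on the button long enough."""
        if not hovered:
            self.enter_time = None  # leaving the button resets the timer
            return False
        if self.enter_time is None:
            self.enter_time = now
        return now - self.enter_time >= self.dwell_s


def visible_buttons(buttons, active):
    """While one button is active, hide the others (Midas touch mitigation)."""
    return list(buttons) if active is None else [active]
```

A cursor 250 mm to the right of the gripper yields `(400.0, 0.0)`, i.e., half the maximum speed; any cursor 500 mm or more away moves the gripper at 800 mm/s.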

Figure 2.

Study scenario with a view of the interface from the HoloLens.

We added shortcut commands for some actions: the command “turn” rotates the gripper on/to 90°, and the command “down” executes the same action as the z-movement button but reduces the time needed to execute it. It moves the gripper half of the calculated distance from its current position to the tabletop.
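Because the “down” shortcut halves the remaining distance each time, after n consecutive commands the gripper is d/2^n above the tabletop, where d is the initial height; it approaches the surface without reaching it. A minimal sketch, assuming heights in millimetres and ignoring object height and gripper geometry (both assumptions; the function name is hypothetical):

```python
def down_target_z(current_z_mm: float, table_z_mm: float = 0.0) -> float:
    """Target height after one "down" command: halfway to the tabletop."""
    return current_z_mm - (current_z_mm - table_z_mm) / 2.0


# Three consecutive "down" commands starting 300 mm above the tabletop.
heights = [300.0]
for _ in range(3):
    heights.append(down_target_z(heights[-1]))
# heights == [300.0, 150.0, 75.0, 37.5]
```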

We have previously reported the conceptualization and the experiences of people without motor disabilities using two types of augmented visual cues (basic and advanced) [13]. This case study builds upon that work and further investigates the use of basic augmented visual cues for PMD. Participants without disabilities did not report problems with the input modalities (voice commands, head movements) or with identifying the virtual elements in the environment.

3. A Case Study—Miss L.

We report on the experience of an IMD, Miss L., when teleoperating a robotic arm using our multimodal hands-free teleoperation concept. Miss L. has been involved in testing different assistive technologies for over 10 years and therefore possesses a certain level of experience. She has teleoperated different robotic arms, e.g., Kinova [18], Kuka iiwa [19], UR5 [20], tested different devices and sensors, and tried out various teleoperation interaction concepts. This allows her to provide broader insights and to draw comparisons between different technologies and interactions grounded in her experience. Additionally, her assessments may be more critical and realistic than those of a novice user. In fact, we believe that her experience minimizes the influence of novelty and therefore allows a more ecologically valid evaluation of the teleoperation interaction concept.

3.1. A Personal Background

Miss L. is an optimistic and kind person who enjoys reading and being outdoors. She studied journalism and worked as an editor. In 1988, she was diagnosed with multiple sclerosis. Today, she cannot move her legs and arms but maintains her energy and joyful personality. She describes her diagnosis as “the disease of 1000 faces” and says “I am lucky that I can still see, but I am shortsighted”. Around 10 years ago, the German Department of Labour contacted her since they were looking for a person with motor disabilities to join a research team at the University of Bremen. That led her to move from Berlin to Bremen, where she joined the Institute for Automation Technology. Since then, she has been testing different assistive technologies developed at the University of Bremen and the Westphalian University of Applied Sciences.

She recalls her first experiences with the FRIEND (Functional Robot arm with user frIENdly interface for Disabled people) robot, a robotic arm mounted on a wheelchair [21]. It was designed to support people with motor disabilities in performing manipulation tasks in a workplace setting. She describes her experience with it: “I was a bit hesitant because it was very big and I was always sitting in it.” She adds that, around that time, she was asked if she wanted to have a robotic arm at home, which she declined as she preferred to have a “human assistant.” However, she expressed, “Honestly, nowadays after all my experiences with robots, I would like to have a robotic arm at home. Caregivers have sometimes crazy mood swings, and a robotic arm does not have moods. It will not have good mood but there is no bad mood either. I would like very much to work with a neutral robotic arm”.

3.2. Study

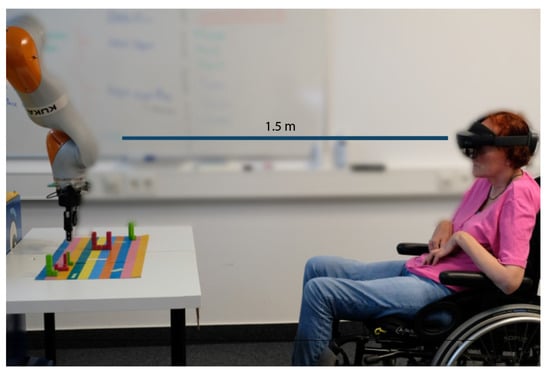

We present a study in which Miss L. teleoperated a robotic arm to perform a basic pick-and-place task using our augmented hands-free teleoperation concept, as seen in Figure 3. The goal was to collect impressions to identify the strengths and downsides of the overall interaction concept and the technology used for a person with motor disabilities.

Figure 3.

Side view of the scenario with Miss L. wearing the Microsoft HoloLens 2 while teleoperating the robotic arm.

The task comprised teleoperating the robotic arm to pick up an L-shaped object, rotate it, and place it at a specific position on the workspace. The task was performed wearing the Microsoft HoloLens 2 at a distance of 1.5 m from the workspace, see Figure 3. We collected subjective impressions using the NASA-TLX [22] to evaluate workload, the System Usability Scale (SUS) [23] to evaluate usability, and the Godspeed Questionnaire Series [24] to determine how the robot was perceived across four dimensions (animacy, likeability, perceived intelligence, and safety). We also carried out an interview. All the information was collected and analyzed in German and then translated into English for this paper.

The session lasted approximately three hours with several breaks in between. First, we introduced the session, the devices to be used, the goal of the study, asked for verbal consent, and carried out a short interview. Then, we showed an introductory video and proceeded to calibrate the HoloLens. Next, we performed a training task to familiarize her with the devices, modalities, and overall interaction. After that, we proceeded to perform the task, followed by the subjective questionnaires and a final interview.

3.3. Findings

We aimed to collect objective and subjective measures of performance of our teleoperation concept. However, we encountered a series of unexpected challenges that influenced Miss L.’s experiences. She reported having difficulties visually identifying the cursor that follows head movements, which was needed to activate the robot controls on the virtual menu (see Figure 2). Additionally, she mentioned that on that particular day, her voice was rather soft and she was not able to speak very loudly. This resulted in failures to recognize the voice commands.

3.3.1. Questionnaire Results

We present the questionnaire results together with some comments from Miss L., who explained the reasoning behind her answers to some of the items.

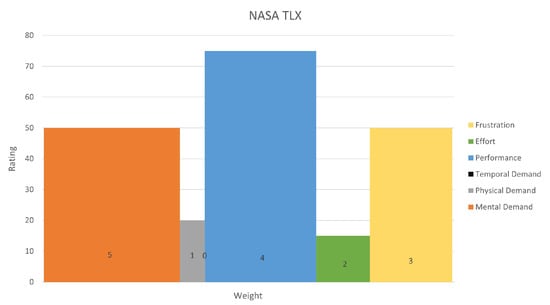

The NASA-TLX workload results were: mental demand = 50, weight (5); physical demand = 20, weight (1); temporal demand = 5, weight (0); performance = 75, weight (4); effort = 15, weight (2); frustration = 50, weight (3); yielding a weighted total score of 50. These results show relatively high frustration and mental demand compared to the other dimensions, see Figure 4.

Figure 4.

Participant’s workload (NASA TLX plot).
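The weighted total can be checked from the values reported above: in the weighted NASA-TLX, each rating is multiplied by the number of times its dimension was chosen in the 15 pairwise comparisons, and the sum is divided by 15, giving 750 / 15 = 50. A short Python check (the dictionary keys are illustrative labels):

```python
# Ratings (0-100) and pairwise-comparison weights (0-5) reported above.
RATINGS = {"mental": 50, "physical": 20, "temporal": 5,
           "performance": 75, "effort": 15, "frustration": 50}
WEIGHTS = {"mental": 5, "physical": 1, "temporal": 0,
           "performance": 4, "effort": 2, "frustration": 3}


def nasa_tlx_weighted(ratings: dict, weights: dict) -> float:
    """Weighted NASA-TLX workload: sum(rating * weight) / sum(weights)."""
    total_weight = sum(weights.values())
    if total_weight != 15:  # 15 pairwise comparisons among six dimensions
        raise ValueError("weights should sum to 15")
    return sum(ratings[d] * weights[d] for d in ratings) / total_weight


score = nasa_tlx_weighted(RATINGS, WEIGHTS)  # 750 / 15 = 50.0
```

A dimension with weight 0, such as temporal demand here, does not contribute to the weighted total regardless of its rating.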

The Godspeed Questionnaire yielded the following scores (5-point Likert scale). On the animacy scale (4.83), Miss L. emphasized the positive characteristics, e.g., “very alive”, “very lively”, “very lifelike”, “very interactive”. When Miss L. was asked whether the robotic arm was mechanical or organic, she added, “Well, I always give a robot a soul, so I think the robot was indeed organic”. Regarding the likeability dimension (5), she also expressed a rather positive attitude, e.g., “I like the robot very much”, “It is a nice robot, it gets a 5”, “It was a nice and spontaneous experience”. In the perceived intelligence dimension (5), she added, “I do not think that in any situation the robot was unreasonable”. Concerning safety (4), she mentioned, “Well, today I have not been on my greatest mood so I have not been relaxed”.

The SUS Questionnaire (5-point Likert scale) yielded an overall score of 82.5 out of 100. During the questionnaire, she mentioned, “I do not think the system is unnecessarily complex. I sometimes just need a while to understand something but after doing the task for a few times I understand it”.
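For reference, the standard SUS scoring rule converts ten 1 to 5 responses into a 0 to 100 score: odd-numbered items contribute (response − 1), even-numbered items contribute (5 − response), and the sum is multiplied by 2.5. Item-level responses are not reported here, so the example inputs below are invented solely to show the calculation; the reported 82.5 corresponds to summed item contributions of 33 out of 40. The function name is hypothetical.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """System Usability Scale score (0-100) from ten 1-5 Likert responses.

    Odd-numbered items contribute (response - 1), even-numbered items
    contribute (5 - response); the 0-40 sum is multiplied by 2.5.
    """
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("expected ten responses in the range 1-5")
    total = 0
    for i, r in enumerate(responses, start=1):
        total += (r - 1) if i % 2 == 1 else (5 - r)
    return total * 2.5


neutral = sus_score([3] * 10)  # all-neutral answers give 50.0
best = sus_score([5, 1] * 5)   # strongest positive pattern gives 100.0
```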

3.3.2. Interview

We present Miss L.’s answers from the interview verbatim (translated from German). The interview took place after she teleoperated the robotic arm.

Question 1. What do you think about working with robotic arms? Have you had any problems in the past?

“In my opinion there were never problems because there is always an emergency button, so I am not afraid of robotic arms. I do not get easily scared but it is important to have an emergency button. The hardest part is always to understand how everything works and what I should do. I do not always know what to do and I take some time until I fully understand everything.”

Question 2. What do you think about controlling the robot by moving your head and using voice commands?

“Usually, I like voice commands, but today I do not have a lot of energy to speak. So that is why the voice commands were not working today for me. The voice commands are fantastic, but today I am missing energy.

I had another experiment where I had to move my head a lot, and it was very very very exhausting. But here I did not have to do that. I liked that everything felt compact.”

Question 3. How did you feel controlling the robot? Can you mention three feelings that you experience while controlling the robot?

“I think it’s really cool! That is of course rather imprecise. But I felt like a part that contributed to the development. I thought that it was great.

I was also feeling good, I found it interesting, but I felt frustrated. The buttons were constantly activating, and I was not able to deactivate them, thus, I could not use them. When the robot was following the movements of my head, I was not sure where to look. And I was not able to see where the cursor was. I am almost blind from the left eye, so maybe that is the reason behind it.”

Question 4. Did you feel any pain or were you uncomfortable when using the HoloLens or teleoperating the robot?

“No, I did not feel any pain or discomfort.”

Question 5. We know that in previous experiments, you have used an external display to control the robot. How does it feel to have the robot controls within your line of sight?

“When I need to look at the visual cues and then use the buttons to control the robot it feels like working with a different display. However, I found those elements easy to understand. What I really liked was having the buttons just in front of me, it was like a screen within the screen. I like that they are attached to the robot.”

Question 6. You had virtual elements that indicated to you the position on the workspace such as different colors. Which element helped you the most?

“At the beginning, I was not using any of those things. But when you started asking me about them, I realized that I could actually use them. I found the colors useful, it was easier to find where objects were with the colored stripes. I liked the virtual rectangle with the colors at the front. It showed me where the gripper is. Once I realized that, it got clear to me. But at the beginning I was just ignoring all of them.

In other experiments when I teleoperated a robot, I never knew how far the robot’s gripper was relative to an object. However, when I saw that small virtual rectangle at the front showing me the color, it helped a lot to realize where I was on the workspace.”

Question 7. Can you mention three words to describe the system and three words to describe your feelings while teleoperating the robotic arm?

“I think it is very good, interesting, and easy to understand. I have positive feelings towards it but I also felt frustrated because I could not see the cursor, so I could not activate the menu and then I could not perform the task at all.”

3.3.3. Discussion

The NASA-TLX results showed relatively high frustration and mental demand compared to the other dimensions. These results align with the comments expressed during the interview, where “frustrated” was one of the words used to describe the experience. We believe that this reflects the challenges that Miss L. experienced when teleoperating the robotic arm, which played a decisive role in her workload.

The SUS score indicates high usability of our overall interaction concept, which, according to Bangor et al. [25], can be interpreted as high acceptability. These results align with those from the Godspeed questionnaire and hint at positive impressions of the overall interaction concept. However, we note the high scores on animacy and perceived intelligence, as we had expected a collaborative industrial robotic arm and the teleoperation setting to evoke low scores on both dimensions.
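The acceptability interpretation amounts to a simple range lookup. The sketch below assumes the approximate ranges commonly attributed to Bangor et al. (below 50 not acceptable, 50 to 70 marginal, above 70 acceptable); the exact handling of the boundaries and the function name are assumptions.

```python
def sus_acceptability(score: float) -> str:
    """Approximate acceptability range for a SUS score (assumed cut-offs 50 and 70)."""
    if not 0.0 <= score <= 100.0:
        raise ValueError("SUS scores lie between 0 and 100")
    if score >= 70.0:
        return "acceptable"
    if score >= 50.0:
        return "marginal"
    return "not acceptable"


label = sus_acceptability(82.5)  # the score reported above
```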

Industrial robotic arms have anthropomorphic characteristics that relate to their functionality rather than their sociality, such as end-effectors similar to hands and fingers [26]. We believe that these human-like features, e.g., an arm with soft edges and a gripper as end-effector, can indeed evoke high animacy scores. Bartneck et al. [24] argue that animacy can be attributed to robots because they react to stimuli, and industrial robotic arms show physical behavior when manipulating objects in the environment, similar to that of a human hand. Additionally, we expected teleoperation itself to decrease the perceived intelligence of the robot due to its lack of autonomy. However, perceived intelligence is more closely related to competence [24]. Although Miss L. experienced challenges performing the task, she did not attribute them to the robot but to her own situation on that particular day. Further, having witnessed the evolution of robots and related technology over the years may have influenced her perception of the robot’s intelligence. In her previous experiences, Miss L. teleoperated a larger robot with an additional external display and used a more demanding teleoperation concept, as she expressed in the interview (Question 2). The use of AR to present basic robot controls within the operator’s line of sight may have also simplified teleoperation and influenced the perceived intelligence of the robot.

4. Comparing Findings from People with and without Motor Disabilities

We designed an interaction concept that both people with and without disabilities could use. We aimed for simplicity in design and presented basic robot controls that novice users could quickly learn without further training in robotics. However, we encountered new challenges faced by an IMD that we had not considered in previous studies with people without disabilities [13]. We present these findings and the challenges that Miss L. experienced under two topics: hands-free multimodal teleoperation and augmented visual cues.

In [13], participants often used the terms “useful” and “precise” to describe the system. Miss L. also mentioned these words. However, we believe that they need to be put in context. Miss L. found certain virtual elements useful, especially for improving depth perception, as can be seen in her answer to Question 6. She did not describe the whole teleoperation concept as useful; instead, she compared it with other teleoperation concepts that she found exhausting and described ours as compact (Question 2). Regarding precision, Miss L. described the concept as rather lacking in precision. We believe that this comment reflects the challenges she experienced when trying to move the robot using the virtual basic controls. However, she also emphasized the improvements in depth perception provided by the augmented visual cues.

4.1. Hands-Free Multimodal Interaction

4.1.1. Similarities

People without motor disabilities and Miss L. did not experience problems using their head orientation (yaw and pitch) to make the robot move in the workspace. Additionally, both groups easily remembered the words chosen as voice commands.

4.1.2. Differences

The voice commands presented challenges for Miss L. She commented that she felt she had to speak louder but lacked the energy to do so. This constrained her ability to move the robotic arm around the workspace. Voice volume had not previously been identified as an issue. This aspect invites reflection on the wide variability in the physical abilities of PMD [11]. Similarly, an individual might be comfortable moving their head far to the left or right on one day but less so on the next.

4.2. Augmented Visual Cues and the Use of AR

4.2.1. Similarities

Both groups commented on the helpfulness of the visual cues for perceiving the position of the robot relative to a target. Moreover, both groups expressed positive and negative subjective impressions of the augmented visual cues. Overall, both groups reported that the augmented visual cues improved their depth perception.

4.2.2. Differences

For our participant with motor disabilities, the lack of visual acuity in one eye did not allow her to identify the virtual cursor clearly. This element was crucial for using the dwell buttons and making fine adjustments to the gripper’s position. We believe that increasing the size of the cursor might mitigate this issue, although further testing is needed to confirm this. As a consequence of this problem, the virtual menu, which contains the basic robot controls, was unintentionally activated several times, possibly pointing to a downside of having the menu within the operator’s line of sight. We had assumed that having the basic controls in the line of sight at all times would reduce the workload evoked by gaze shifts. However, we realized that it also led to unintentional activations because the operator was unaware of the cursor’s location. We noticed that this led to higher levels of frustration expressed by the IMD, which could evoke a feeling of lack of agency.

5. Participants with and without Motor Disabilities: Learning Points

We present learning points from comparing the experiences of people with and without disabilities with our robot teleoperation concept. We grouped them into three topics that may provide a bigger picture of pragmatic factors to consider when conducting experiments with these two groups of participants.

5.1. Re-Evaluate the Experimental Metrics

Conducting experiments with people with and without disabilities raises a pertinent question about which metrics to collect when evaluating a given concept. Using exactly the same objective metrics for both groups might not provide a real picture of the experience of people with disabilities. For instance, in our study, the lack of acuity in one eye did not always allow our participant to identify the virtual cursor and activate the basic robot controls. This led us not to consider metrics such as time or distance in the evaluation and to emphasize instead the subjective impressions of our teleoperation concept. Hence, we highlight the relevance of collecting subjective measures and conducting qualitative analysis in studies with people with disabilities. We believe that qualitative analysis and subjective measures can point to specific design decisions that may challenge the usability of a solution for people with disabilities.

5.2. Beware of the Bias

PMD may often feel excluded from design decisions in technology, and most of them are eager to participate in design sessions as well as user studies; however, this eagerness could also lead to a positive bias. For instance, Miss L. made several positive comments about our augmented hands-free teleoperation concept, reflected in the questionnaires and the interview. However, during our observations, she struggled to teleoperate the robot because of the factors mentioned previously (voice volume and not being able to see the cursor at all times). This leads us to consider that her extensive and positive experience with robotic arms, together with her involvement in design sessions, might have played a determining role. Although we call for caution regarding this positive bias, it also shows the influence of including participants in design sessions as a way to reduce fear or negative bias toward robots, and it even contributes to the notion of “design for empowerment” of people with disabilities [27].

Miss L.’s experience leads us to consider that individual and situational characteristics have a major influence on the impressions of interaction concepts. This aligns with previous findings on the role of such factors in modality choice [28], which may even apply to interaction concepts in general. That is why we suggest performing periodic sessions to familiarize participants not only with the technology but also with the interaction concepts. We believe that this would reduce the influence of external and internal factors, e.g., individual characteristics of participants.

5.3. Consider Variability in Abilities Evoking Different Experiences

We believe that our findings emphasize the importance of usability testing and of performing studies with people with disabilities, as these identify problems that only PMD may experience. Our results regarding hands-free multimodal teleoperation lead us to recommend designs that allow for multiple options in teleoperation. This can reduce the impact of differences in participants’ abilities and could even mitigate fatigue. For instance, in the morning, voice commands and head movements could be used as input modalities for teleoperation, and by the end of the day, when the voice may be feeble, the GUI could allow the use of head movements alone, with a context menu displayed at the cursor. Therefore, we believe that presenting a base interaction that allows for multiple adaptations could improve the subjective experience of teleoperation and possibly performance.
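One possible way to realize such adaptations is to switch input configurations when the current one appears to be failing. The Python sketch below is purely hypothetical: it uses the recent voice-recognition failure rate as the trigger (the window of five attempts and the 60% threshold are assumptions, not values from the study) to fall back from voice plus head input to head-only input with a dwell context menu at the cursor.

```python
from collections import deque
from enum import Enum


class InputMode(Enum):
    VOICE_AND_HEAD = "voice commands + head pointing"
    HEAD_ONLY = "head pointing + dwell context menu at the cursor"


class ModalitySelector:
    """Falls back to head-only input when recent voice commands keep failing.

    The window size and failure threshold are hypothetical parameters.
    """

    def __init__(self, window: int = 5, max_failure_rate: float = 0.6):
        self.results = deque(maxlen=window)  # True = command was recognized
        self.max_failure_rate = max_failure_rate
        self.mode = InputMode.VOICE_AND_HEAD

    def record_voice_attempt(self, recognized: bool) -> InputMode:
        self.results.append(recognized)
        # Only decide once the window is full, to avoid reacting to one miss.
        if len(self.results) == self.results.maxlen:
            failure_rate = self.results.count(False) / len(self.results)
            if failure_rate >= self.max_failure_rate:
                self.mode = InputMode.HEAD_ONLY
        return self.mode

    def reset_to_voice(self) -> None:
        """E.g., when the operator explicitly re-enables voice input."""
        self.results.clear()
        self.mode = InputMode.VOICE_AND_HEAD


selector = ModalitySelector()
modes = [selector.record_voice_attempt(r) for r in [True, False, False, True, False]]
```

With three failures in the last five attempts, the selector switches to head-only input; a time-of-day schedule, as in the example above, would be an equally plausible trigger.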

6. Limitations

Our main limitation is that we present a single-subject study. We initially planned to carry out the study with more PMD. However, the impact of SARS-CoV-2 prevented us from inviting more participants, as most of them belong to the high-risk group for this disease. Nevertheless, we agree with Busso et al. [29], who note the relevance of single-case studies in indicating intersubject variability.

In retrospect, we could have rescheduled the appointment for the experiment to minimize the effects of Miss L.’s situational characteristics. However, this would have added more burden to our participant, as she needs special assistance and transportation to attend experiments outside her home. Therefore, we decided to carry out the experiment and report the subjective measures collected together with some reflections on her experience.

7. Conclusions

All in all, our findings align with Holloway’s philosophy of “one size fits one” [15], as they point toward the absence of a design or interaction concept that allows people with and without disabilities to achieve the same level of performance or experience. In fact, there might not even be a singular design for a group of people sharing a diagnosis.

In this paper, we present the impressions of an augmented multimodal teleoperation concept from the perspective of a person with motor disabilities, Miss L. These suggest the potential of including AR in HRI for PMD and the challenges that may arise from certain design decisions, e.g., presenting robot controls within the line of sight and the use of voice commands. Further, we put Miss L.’s experiences in context with those of people without motor disabilities. This led us to provide three learning points for evaluating an interaction concept with people with and without disabilities: (1) re-evaluating the metrics used to measure performance, (2) being aware of bias, and (3) considering that variability in abilities evokes different experiences. Finally, our findings are not intended to present a definite conclusion about interaction design decisions but aim to enable discussions about the perspectives of people with disabilities in HRI design.

Author Contributions

Conceptualization, S.A.A.; methodology, S.A.A.; formal analysis, S.A.A., M.B.; investigation, S.A.A.; experiment, S.A.A. and M.B.; writing—original draft preparation, S.A.A.; writing—review and editing, S.A.A. and J.G.; supervision, J.G.; project administration, S.A.A. and J.G.; funding acquisition, J.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported by the German Federal Ministry of Education and Research (BMBF, FKZ: 13FH011IX6).

Institutional Review Board Statement

Ethical reviews by an “Ethics Committee/Board” are waived in Germany as they are not compulsory for fields outside medicine.

Informed Consent Statement

Our participant gave informed consent before participating in the study and approved the text concerning the background in this manuscript.

Data Availability Statement

Not applicable.

Acknowledgments

We would like to thank our participant for her openness and willingness to participate in our study. We further would like to thank Henrik Dreisewerd for his support with the translations.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AR | Augmented Reality |
| DIX | Disability Interaction |
| GUI | Graphical User Interface |
| HMD | Head-Mounted Display |
| HRI | Human–Robot Interaction |
| IMD | Individual with Motor Disabilities |
| PMD | People with Motor Disabilities |
| SUS | System Usability Scale |

References

- Fraunhofer Institute for Manufacturing Engineering and Automation. Care-O-bot 4. Available online: https://www.care-o-bot.de/en/care-o-bot-4.html (accessed on 4 June 2021).

- Guerreiro, J.; Sato, D.; Asakawa, S.; Dong, H.; Kitani, K.M.; Asakawa, C. CaBot: Designing and Evaluating an Autonomous Navigation Robot for Blind People. In Proceedings of the 21st International ACM SIGACCESS Conference on Computers and Accessibility—ASSETS’19, Pittsburgh, PA, USA, 28–30 October 2019; Bigham, J.P., Azenkot, S., Kane, S.K., Eds.; ACM Press: New York, NY, USA, 2019; pp. 68–82.

- Kyrarini, M.; Lygerakis, F.; Rajavenkatanarayanan, A.; Sevastopoulos, C.; Nambiappan, H.R.; Chaitanya, K.K.; Babu, A.R.; Mathew, J.; Makedon, F. A Survey of Robots in Healthcare. Technologies 2021, 9, 8.

- Miseikis, J.; Caroni, P.; Duchamp, P.; Gasser, A.; Marko, R.; Miseikiene, N.; Zwilling, F.; de Castelbajac, C.; Eicher, L.; Fruh, M.; et al. Lio-A Personal Robot Assistant for Human-Robot Interaction and Care Applications. IEEE Robot. Autom. Lett. 2020, 5, 5339–5346.

- Chadalavada, R.T.; Andreasson, H.; Krug, R.; Lilienthal, A.J. That’s on my mind! Robot to human intention communication through on-board projection on shared floor space. In Proceedings of the 2015 European Conference on Mobile Robots, Lincoln, UK, 2–4 September 2015; Robots, E.C.O.M., Duckett, T., Eds.; IEEE: Piscataway, NJ, USA, 2015; pp. 1–6.

- Qvarfordt, P. Gaze-informed multimodal interaction. In The Handbook of Multimodal-Multisensor Interfaces: Foundations, User Modeling, and Common Modality Combinations—Volume 1; Oviatt, S., Schuller, B., Cohen, P.R., Sonntag, D., Potamianos, G., Krüger, A., Eds.; ACM: New York, NY, USA, 2017; pp. 365–402.

- Park, K.B.; Choi, S.H.; Lee, J.Y.; Ghasemi, Y.; Mohammed, M.; Jeong, H. Hands-Free Human–Robot Interaction Using Multimodal Gestures and Deep Learning in Wearable Mixed Reality. IEEE Access 2021, 9, 55448–55464.

- Makhataeva, Z.; Varol, H. Augmented Reality for Robotics: A Review. Robotics 2020, 9, 21.

- Culén, A.L.; van der Velden, M. The Digital Life of Vulnerable Users: Designing with Children, Patients, and Elderly. In Nordic Contributions in IS Research; van der Aalst, W., Mylopoulos, J., Rosemann, M., Shaw, M.J., Szyperski, C., Aanestad, M., Bratteteig, T., Eds.; Lecture Notes in Business Information Processing; Springer: Berlin/Heidelberg, Germany, 2013; Volume 156, pp. 53–71.

- Shinohara, K.; Bennett, C.L.; Wobbrock, J.O. How Designing for People With and Without Disabilities Shapes Student Design Thinking. In Proceedings of the 18th International ACM SIGACCESS Conference on Computers and Accessibility—ASSETS’16, Reno, NV, USA, 24–26 October 2016; Feng, J.H., Huenerfauth, M., Eds.; ACM Press: New York, NY, USA, 2016; pp. 229–237.

- Arevalo Arboleda, S.; Pascher, M.; Baumeister, A.; Klein, B.; Gerken, J. Reflecting upon Participatory Design in Human-Robot Collaboration for People with Motor Disabilities: Challenges and Lessons Learned from Three Multiyear Projects. In Proceedings of the 14th PErvasive Technologies Related to Assistive Environments Conference, Corfu, Greece, 29 June–2 July 2021; ACM: New York, NY, USA, 2021; pp. 147–155.

- Arboleda, S.A.; Pascher, M.; Lakhnati, Y.; Gerken, J. Understanding Human-Robot Collaboration for People with Mobility Impairments at the Workplace, a Thematic Analysis. In Proceedings of the 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Naples, Italy, 31 August–4 September 2020; pp. 561–566.

- Arevalo Arboleda, S.; Rücker, F.; Dierks, T.; Gerken, J. Assisting Manipulation and Grasping in Robot Teleoperation with Augmented Reality Visual Cues. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; Kitamura, Y., Quigley, A., Isbister, K., Igarashi, T., Bjørn, P., Drucker, S., Eds.; ACM: New York, NY, USA, 2021; pp. 1–14.

- Microsoft. Microsoft HoloLens. Available online: https://www.microsoft.com/en-us/hololens/hardware (accessed on 31 July 2018).

- Holloway, C. Disability interaction (DIX). Interactions 2019, 26, 44–49.

- Bolt, R.A. “Put-that-there”: Voice and gesture at the graphics interface. ACM SIGGRAPH Comput. Graph. 1980, 14, 262–270.

- Jacob, R.J.K. What You Look at Is What You Get: Eye Movement-Based Interaction Techniques; ACM Press: New York, NY, USA, 1990; pp. 11–18.

- Kinova. Professional Robots for Industry. Available online: https://www.kinovarobotics.com/en/products/gen2-robot (accessed on 23 April 2020).

- KUKA. LBR iiwa | KUKA AG. Available online: https://www.kuka.com/en-de/products/robot-systems/industrial-robots/lbr-iiwa (accessed on 21 April 2020).

- Universal Robots. UR5—The Flexible and Collaborative Robotic Arm. Available online: https://www.universal-robots.com/products/ur5-robot/ (accessed on 30 June 2020).

- Graser, A.; Heyer, T.; Fotoohi, L.; Lange, U.; Kampe, H.; Enjarini, B.; Heyer, S.; Fragkopoulos, C.; Ristic-Durrant, D. A Supportive FRIEND at Work: Robotic Workplace Assistance for the Disabled. IEEE Robot. Autom. Mag. 2013, 20, 148–159.

- Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research. In Human Mental Workload; Hancock, P.A., Meshkati, N., Eds.; Advances in Psychology; North-Holland: Amsterdam, The Netherlands; Oxford, UK, 1988; Volume 52, pp. 139–183.

- Brooke, J. SUS: A quick and dirty usability scale. In Usability Evaluation in Industry; CRC Press: Boca Raton, FL, USA, 1996; Volume 189.

- Bartneck, C.; Kulić, D.; Croft, E.; Zoghbi, S. Measurement Instruments for the Anthropomorphism, Animacy, Likeability, Perceived Intelligence, and Perceived Safety of Robots. Int. J. Soc. Robot. 2009, 1, 71–81.

- Bangor, A.; Kortum, P.; Miller, J. Determining What Individual SUS Scores Mean: Adding an Adjective Rating Scale. J. Usability Stud. 2009, 4, 114–123.

- Millo, F.; Gesualdo, M.; Fraboni, F.; Giusino, D. Human Likeness in Robots: Differences between Industrial and Non-Industrial Robots. In Proceedings of the ECCE 2021: European Conference on Cognitive Ergonomics 2021, Siena, Italy, 26–29 April 2021.

- Ladner, R.E. Design for user empowerment. Interactions 2015, 22, 24–29.

- Arevalo, S.; Miller, S.; Janka, M.; Gerken, J. What’s behind a choice? Understanding Modality Choices under Changing Environmental Conditions. In Proceedings of the ICMI’19; Gao, W., Ling Meng, H.M., Turk, M., Fussell, S.R., Schuller, B., Song, Y., Yu, K., Meng, H., Eds.; The Association for Computing Machinery: New York, NY, USA, 2019; pp. 291–301.

- Busso, C.; Narayanan, S.S. Interrelation Between Speech and Facial Gestures in Emotional Utterances: A Single Subject Study. IEEE Trans. Audio Speech Lang. Process. 2007, 15, 2331–2347.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).