Forecasting Credit Ratings of EU Banks

Abstract

1. Introduction

2. Literature Review

3. Data and Methodology

3.1. The Data

3.2. Support Vector Machines

4. Empirical Findings

4.1. Feature Selection

4.2. Ordered Probit Model Results

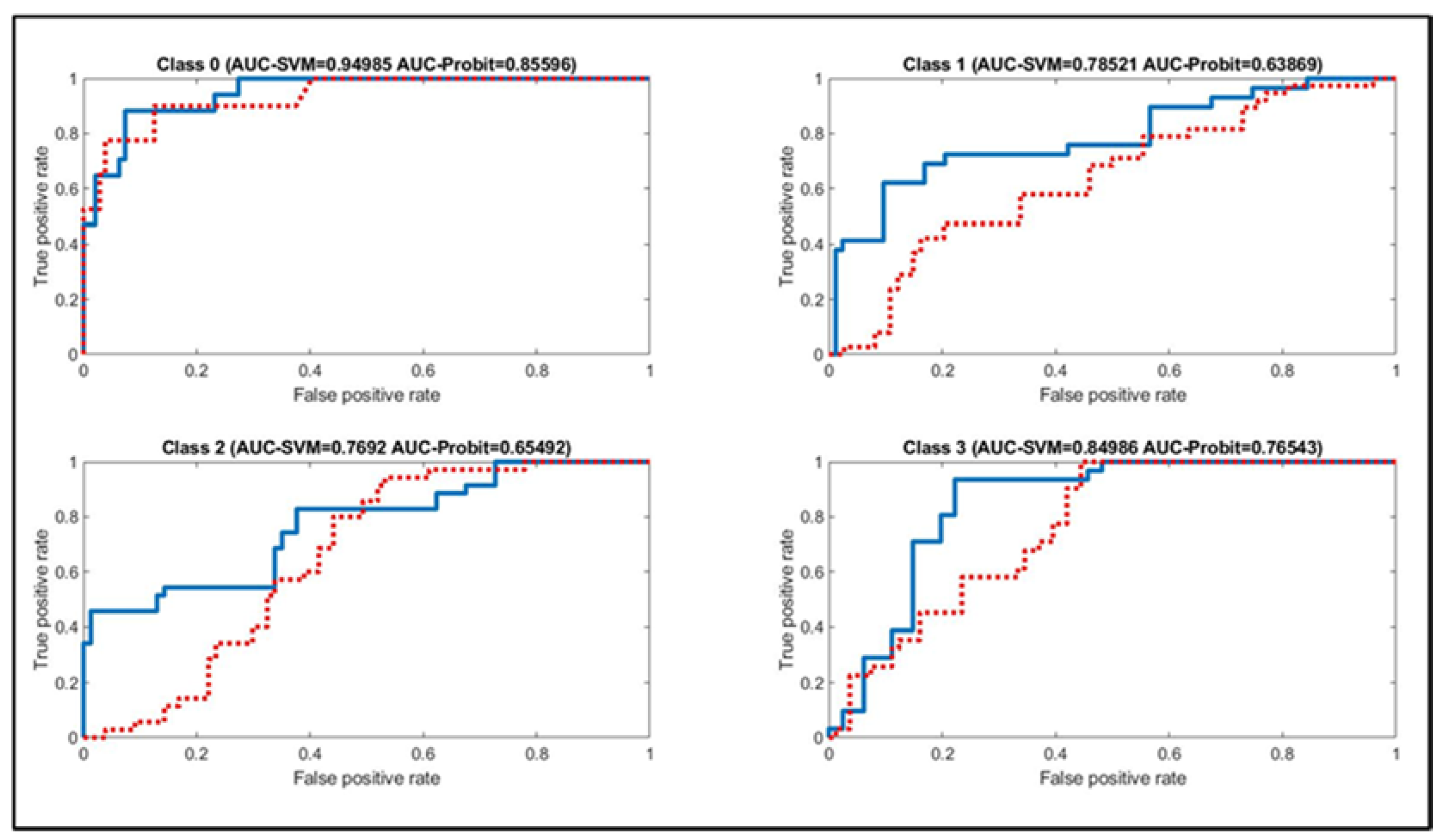

4.3. Support Vector Machines Model Results

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Belkaoui, Ahmed. 1980. Industrial bond ratings: A new look. Financial Management 9: 44–51.

- Bissoondoyal-Bheenick, Emawtee, and Sirimon Treepongkaruna. 2011. Analysis of the determinants of bank ratings: Comparison across agencies. Australian Journal of Management 36: 405–24.

- Chang, Chih-Chung, and Chih-Jen Lin. 2011. LIBSVM: A library for support vector machines. ACM Transactions on Intelligent Systems and Technology 2: 1–27.

- Cortes, Corinna, and Vladimir Vapnik. 1995. Support-vector networks. Machine Learning 20: 273–97.

- Dutta, Soumitra, and Shashi Shekhar. 1988. Bond rating: A non-conservative application of neural networks. Paper presented at the IEEE International Conference on Neural Networks, San Diego, CA, USA, July 21–24; pp. 1443–450.

- Ederington, Louis H. 1985. Classification models and bond ratings. Financial Review 20: 237–62.

- European Central Bank (ECB). 2006. Debt Securities. Available online: https://www.ecb.europa.eu/stats/financial_markets_and_interest_rates/securities_issues/debt_securities/html/index.en.html (accessed on 20 July 2020).

- Gogas, Periklis, Theophilos Papadimitriou, and Anna Agrapetidou. 2014. Forecasting bank credit ratings. The Journal of Risk Finance 15: 195–209.

- Hau, Harald, Sam Langfield, and David Marques-Ibanez. 2013. Bank ratings: What determines their quality? Economic Policy 28: 289–333.

- He, Jie, Jun Qian, and Philip E. Strahan. 2012. Are all ratings created equal? The impact of issuer size on the pricing of mortgage-backed securities. The Journal of Finance 67: 2097–137.

- Huang, Zan, Hsinchun Chen, Chia-Jung Hsu, Wun-Hwa Chen, and Soushan Wu. 2004. Credit rating analysis with support vector machines and neural networks: A market comparative study. Decision Support Systems 37: 543–58.

- Kim, Jun Woo, H. Roland Weistroffer, and Richard T. Redmond. 1993. Expert systems for bond rating: A comparative analysis of statistical, rule-based and neural network systems. Expert Systems 10: 167–72.

- Kraft, Pepa. 2015. Do rating agencies cater? Evidence from rating-based contracts. Journal of Accounting and Economics 59: 264–83.

- Kwon, Young S., Ingoo Han, and Kun Chang Lee. 1997. Ordinal pairwise partitioning (OPP) approach to neural networks training in bond rating. International Journal of Intelligent Systems in Accounting Finance and Management 6: 23–40.

- Maher, John J., and Tarun K. Sen. 1997. Predicting bond ratings using neural networks: A comparison with logistic regression. Intelligent Systems in Accounting, Finance and Management 6: 59–72.

- Moody, John, and Joachim Utans. 1995. Architecture selection strategies for neural networks application to corporate bond rating. In Neural Networks in the Capital Markets. Edited by Apostolos Refenes. New York: John Wiley & Sons, Inc., pp. 277–300.

- Pagratis, Spyros, and M. Stringa. 2007. Modelling Bank Credit Ratings: A Structural Approach to Moody’s Credit Risk Assessment. London: Bank of England.

- Papadimitriou, Theophilos. 2012. Financial institutions clustering based on their financial statements using multiple correspondence analysis. Economics and Financial Notes 1: 119–33.

- Parnes, Dror. 2018. Observed Leniency among the Credit Rating Agencies. The Journal of Fixed Income 28: 48–60.

- Parnes, Dror, and Sagi Akron. 2016. Rating the Credit Rating Agencies. Applied Economics 48: 4799–812.

- Pinches, George E., and Kent A. Mingo. 1973. A Multivariate Analysis of Industrial Bond Ratings. Journal of Finance 28: 1–18.

- Ravi, Vadlamani, H. Kurniawan, Peter Nwee Kok Thai, and P. Ravi Kumar. 2008. Soft computing system for bank performance prediction. Applied Soft Computing 8: 305–15.

- Surkan, Alvin J., and J. Clay Singleton. 1990. Neural networks for bond rating improved by multiple hidden layers. Paper presented at the IEEE International Conference on Neural Networks, San Diego, CA, USA, June 17–21; pp. 157–162.

| 1 | In the SVM jargon. |

| 2 | Our implementation of SVR models is based on LIBSVM (Chang and Lin 2011). The software is available at http://www.csie.ntu.edu.tw/~cjlin/libsvm/. |

| No | Abbreviation | Description |
|---|---|---|
| Panel A: Assets | | |
| 1 | TASSET | Total assets |
| 2 | LO | Loans |
| 3 | GRLO | Gross loans |
| 4 | CBCB | Cash & balances at central bank |
| 5 | LASSET | Liquid assets |
| Panel B: Liabilities | | |
| 6 | DSF | Deposits and short-term funding |
| 7 | EQ | Equity |
| 8 | TCDE | Total customer deposits |
| 9 | OIBL | Other interest-bearing liabilities |
| 10 | BDE | Bank deposits |
| Panel C: Income and Expenses | | |
| 11 | NI | Net income |
| 12 | NIM | Net interest margin |
| 13 | NIR | Net interest revenue |
| 14 | PBT | Profit before tax |
| 15 | OPIN | Operating income |
| 16 | ITEX | Income tax expense |
| 17 | OPPR | Operating profit |
| 18 | TOE | Total operating expenses |
| 19 | NOR | Net operating revenues |
| 20 | TIP | Total interest paid |
| 21 | TIR | Total interest received |
| Panel D: Financial Ratios | | |
| 22 | NLTA | Net loans/Total assets |
| 23 | NLDSF | Net loans/Deposits and short-term funding |
| 24 | NLTDB | Net loans/Total deposits and borrowed |
| 25 | LADSF | Liquid assets/Deposits and short-term funding |
| 26 | LATDB | Liquid assets/Total deposits and borrowed |
| 27 | NIRAA | Net interest revenues/Average assets |
| 28 | OOPIAA | Other operating income/Average assets |
| 29 | NOEAA | Non-interest expenses/Average assets |
| 30 | ROAE | Return on average equity |
| 31 | ROAA | Return on average assets |
| 32 | ETA | Equity/Total assets |
| 33 | ENL | Equity/Net loans |
| 34 | EL | Equity/Liabilities |

| Class Identification | Rating Category | Number of Banks |
|---|---|---|
| 3 | AAA, AA–, AA, A+ | 24 |
| 2 | A–, A, BBB+ | 34 |
| 1 | BBB–, BBB | 32 |
| 0 | BB+, BB–, BB, B+, B– | 22 |
| Total | | 112 |
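
The class assignment in the table above can be written as a simple lookup. The following is a minimal Python sketch, not the authors' code: the names `RATING_CLASSES`, `BANKS_PER_CLASS`, and `rating_to_class` are hypothetical, and normalizing dash characters to an ASCII hyphen is an assumption made for convenience.

```python
# Rating buckets and bank counts copied from the table above.
# All identifiers here are illustrative, not taken from the paper.
RATING_CLASSES = {
    3: ["AAA", "AA-", "AA", "A+"],
    2: ["A-", "A", "BBB+"],
    1: ["BBB-", "BBB"],
    0: ["BB+", "BB-", "BB", "B+", "B-"],
}
BANKS_PER_CLASS = {3: 24, 2: 34, 1: 32, 0: 22}

# Invert the buckets into a rating -> class lookup.
RATING_TO_CLASS = {
    rating: cls
    for cls, ratings in RATING_CLASSES.items()
    for rating in ratings
}


def rating_to_class(rating: str) -> int:
    """Return the class label (0-3) for a rating string listed in the table."""
    # Assumption: en dashes and minus signs are treated as ASCII hyphens.
    normalized = (
        rating.strip().upper().replace("\u2013", "-").replace("\u2212", "-")
    )
    return RATING_TO_CLASS[normalized]
```

A rating that does not appear in the table raises `KeyError`, which seems preferable to guessing a class for it.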

| Group 1 | Group 2 | Group 3 | Group 4 | Group 5 | Group 6 |
|---|---|---|---|---|---|
| 18 variables | 20 variables | 30 variables | 30 variables | 10 variables | 136 variables |

| Regressor Selection | Group 1 | Group 2 | Group 3 | Group 4 | Group 5 | Group 6 |
|---|---|---|---|---|---|---|
| Combinatorial 4 | TASSET14 | TASSET14 | TASSET14 | TIR16 | TASSET14 | TIR16 |
| | TIR16 | TIR16 | TASSET13 | DSF14 | TIR16 | DSF14 |
| | NOEAA13 | NIRAA13 | OPPR13 | NIRAA13 | OIBL13 | TASSET15 |
| | NIM13 | NOEAA13 | OIBL16 | NOEAA13 | TASSET13 | NOR14 |
| Combinatorial 8 | TASSET14 | TASSET14 | TASSET14 | DSF14 | TASSET14 | TIR16 |
| | TIR16 | TIR16 | TASSET13 | NIRAA13 | TIR16 | TASSET13 |
| | TASSET13 | NIRAA13 | OIBL16 | EQ16 | OIBL13 | ETA16 |
| | TIR14 | NOEAA13 | LADSF16 | TIR15 | TASSET13 | LO15 |
| | NOEAA13 | TASSET13 | LADSF14 | GRLO14 | TIR14 | GRLO15 |
| | NIM13 | TIR14 | LADSF13 | LO14 | NOEAA14 | NOEAA16 |
| | NOEAA15 | NOEAA15 | OPPR14 | OOPIAA13 | TIR15 | OOPIAA13 |
| | NOEAA16 | NOEAA16 | NI13 | OOPIAA16 | TASSET16 | NLTA14 |
| Stepwise-forward | TASSET14 | TASSET14 | TASSET14 | TIR15 | TASSET14 | TASSET14 |
| | TIR16 | TIR16 | TASSET13 | DSF14 | TIR16 | TIR16 |
| | NIRAA14 | NIRAA14 | OPPR13 | NIRAA13 | OIBL13 | DSF14 |
| | NOEAA14 | NOEAA14 | OIBL16 | NOEAA13 | TASSET13 | PBT13 |
| | (4) | (4) | (4) | EQ16 | (4) | NIR13 |
| | | | | (5) | | CBCB16 |
| | | | | | | CBCB13 |
| | | | | | | OOPIAA14 |
| | | | | | | TIR14 |
| | | | | | | NIR14 |
| | | | | | | (10) |

| Regressor Selection | Group 1 | Group 2 | Group 3 | Group 4 | Group 5 | Group 6 |
|---|---|---|---|---|---|---|
| Combinatorial 4 | 57.14 | 54.46 | 50.89 | 49.11 | 51.79 | 54.46 |
| Combinatorial 8 | 57.14 | 56.25 | 58.04 | 53.57 | 55.36 | 66.07 |
| Stepwise-forward | 57.14 | 57.14 | 50.89 | 46.43 | 51.79 | 57.14 |

| | Correct | Incorrect | % Correct | % Incorrect |
|---|---|---|---|---|
| predicted 0 | 15 | 7 | 68.18% | 31.82% |
| predicted 1 | 20 | 12 | 62.50% | 37.50% |
| predicted 2 | 24 | 10 | 70.59% | 29.41% |
| predicted 3 | 15 | 9 | 62.50% | 37.50% |
| Total | 74 | 38 | 66.07% | 33.93% |

| Regressor Selection | Group 1 | Group 2 | Group 3 | Group 4 | Group 5 | Group 6 |
|---|---|---|---|---|---|---|
| Panel A: Linear kernel (k-fold cross validation) | | | | | | |
| Combinatorial 4 | 62.50 | 63.39 | 60.71 | 63.39 | 61.61 | 60.71 |
| Combinatorial 8 | 58.04 | 64.29 | 58.04 | 50.00 | 63.39 | 53.57 |
| Stepwise-forward | 59.82 | 62.50 | 59.82 | 63.39 | 67.86 | 66.96 |
| Panel B: Nonlinear RBF kernel (k-fold cross validation) | | | | | | |
| Combinatorial 4 | 64.29 | 63.39 | 61.61 | 62.50 | 63.39 | 61.61 |
| Combinatorial 8 | 72.32 | 76.79 | 72.32 | 91.07 | 50.89 | 66.96 |
| Stepwise-forward | 68.75 | 60.71 | 68.75 | 61.61 | 62.50 | 79.46 |
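
Both panels above report accuracies obtained under k-fold cross validation. As a rough illustration of how such a split partitions the 112 banks, here is a minimal Python sketch. It is not the authors' procedure: the helper name `k_fold_indices`, the choice k = 5, and the fixed shuffling seed are all assumptions, since the table does not state the value of k that was used.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train, test) index lists for k-fold cross validation.

    Hypothetical helper: every sample lands in exactly one test fold,
    and the remaining folds form the training set for that round.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # fixed seed for reproducibility
    folds = [indices[i::k] for i in range(k)]  # k roughly equal folds
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


# Example: 112 banks, assumed k = 5.
for train, test in k_fold_indices(112, 5):
    assert len(train) + len(test) == 112
```

The cross-validated accuracy would then be the share of banks classified correctly when each is predicted by the model trained without its fold.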

| | Correct | Incorrect | % Correct | % Incorrect |
|---|---|---|---|---|
| predicted 0 | 22 | 0 | 100% | 0% |
| predicted 1 | 26 | 6 | 81.25% | 18.75% |
| predicted 2 | 31 | 3 | 91.18% | 8.82% |
| predicted 3 | 23 | 1 | 95.83% | 4.17% |
| Total | 102 | 10 | 91.07% | 8.93% |

| | Actual 0 | Actual 1 | Actual 2 | Actual 3 |
|---|---|---|---|---|
| predicted 0 | 22 | 1 | 0 | 0 |
| predicted 1 | 0 | 26 | 0 | 0 |
| predicted 2 | 0 | 4 | 31 | 1 |
| predicted 3 | 0 | 1 | 3 | 23 |
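
The per-class and overall accuracies can be recomputed from the confusion matrix above. The sketch below is a small Python check rather than part of the original analysis; it reads rows as predicted classes and columns as actual classes, as the labels indicate, and the function names are hypothetical.

```python
# Confusion matrix from the table above: rows = predicted, columns = actual.
CONFUSION = [
    [22, 1, 0, 0],
    [0, 26, 0, 0],
    [0, 4, 31, 1],
    [0, 1, 3, 23],
]


def per_class_accuracy(matrix):
    """Share of each actual class that was predicted correctly."""
    n = len(matrix)
    shares = []
    for actual in range(n):
        column_total = sum(matrix[pred][actual] for pred in range(n))
        shares.append(matrix[actual][actual] / column_total)
    return shares


def overall_accuracy(matrix):
    """Diagonal sum divided by the total number of banks."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


print([round(100 * s, 2) for s in per_class_accuracy(CONFUSION)])
print(round(100 * overall_accuracy(CONFUSION), 2))
```

The column totals (22, 32, 34, 24) match the number of banks in classes 0 to 3, and the diagonal sums to 102 of 112 banks.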

| Regressor Selection | Group 1 | Group 2 | Group 3 | Group 4 | Group 5 | Group 6 |
|---|---|---|---|---|---|---|
| Panel A: Linear kernel (bootstrap) | | | | | | |
| Combinatorial 4 | 64.29 [61.61, 66.96] | 70.54 [67.86, 73.21] | 63.39 [60.71, 66.52] | 64.29 [61.61, 66.96] | 70.54 [66.96, 73.21] | 63.40 [61.61, 66.96] |
| Combinatorial 8 | 61.61 [58.04, 65.18] | 71.43 [68.75, 73.21] | 59.82 [57.15, 63.39] | 58.04 [55.36, 60.71] | 70.54 [67.86, 70.66] | 62.95 [60.27, 66.07] |
| Stepwise-forward | 62.50 [59.82, 65.18] | 66.96 [64.29, 69.64] | 62.50 [59.82, 64.89] | 65.18 [62.95, 68.75] | 74.11 [71.43, 76.79] | 80.36 [77.68, 85.04] |
| Panel B: Nonlinear RBF kernel (bootstrap) | | | | | | |
| Combinatorial 4 | 81.25 [79.46, 83.93] | 85.71 [84.82, 87.50] | 78.13 [75.90, 80.36] | 78.57 [75.89, 81.25] | 86.60 [83.93, 87.50] | 78.57 [75.89, 81.25] |
| Combinatorial 8 | 82.15 [80.36, 83.93] | 91.96 [89.29, 93.75] | 82.14 [80.36, 83.93] | 91.52 [89.29, 93.75] | 97.32 [96.43, 98.21] | 95.54 [94.64, 97.32] |
| Stepwise-forward | 81.25 [79.46, 83.04] | 88.39 [84.82, 90.18] | 81.25 [79.46, 83.04] | 97.32 [95.54, 97.32] | 98.21 [97.32, 100] | 95.42 [92.11, 98.57] |
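
Each cell above pairs an accuracy with a bracketed interval from a bootstrap. One common way to obtain such an interval is the percentile bootstrap over per-bank correctness indicators, sketched below in Python. This is an assumption for illustration only: the table does not say which bootstrap scheme, number of resamples, or confidence level the authors used, and the function name and defaults are hypothetical.

```python
import random


def bootstrap_accuracy_interval(correct_flags, n_resamples=1000, alpha=0.05, seed=0):
    """Percentile-bootstrap interval for classification accuracy.

    correct_flags: list of 1/0 values, one per bank (1 = classified correctly).
    Returns (point_estimate, lower, upper) as fractions in [0, 1].
    Assumed scheme: resample banks with replacement, no model retraining.
    """
    rng = random.Random(seed)
    n = len(correct_flags)
    accuracies = []
    for _ in range(n_resamples):
        sample = [correct_flags[rng.randrange(n)] for _ in range(n)]
        accuracies.append(sum(sample) / n)
    accuracies.sort()
    lower = accuracies[int((alpha / 2) * n_resamples)]
    upper = accuracies[min(n_resamples - 1, int((1 - alpha / 2) * n_resamples))]
    return sum(correct_flags) / n, lower, upper


# Example: 102 of 112 banks classified correctly.
flags = [1] * 102 + [0] * 10
point, low, high = bootstrap_accuracy_interval(flags)
print(round(100 * point, 2), round(100 * low, 2), round(100 * high, 2))
```

A scheme that retrains the classifier on each resample would generally give different intervals, so the numbers printed here are not expected to reproduce the table.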

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Plakandaras, V.; Gogas, P.; Papadimitriou, T.; Doumpa, E.; Stefanidou, M. Forecasting Credit Ratings of EU Banks. Int. J. Financial Stud. 2020, 8, 49. https://doi.org/10.3390/ijfs8030049
