Consideration of Passenger Interactions for the Prediction of Aircraft Boarding Time

Abstract

1. Introduction

1.1. Status Quo

1.2. Scope and Structure of the Document

2. Aircraft Boarding Model

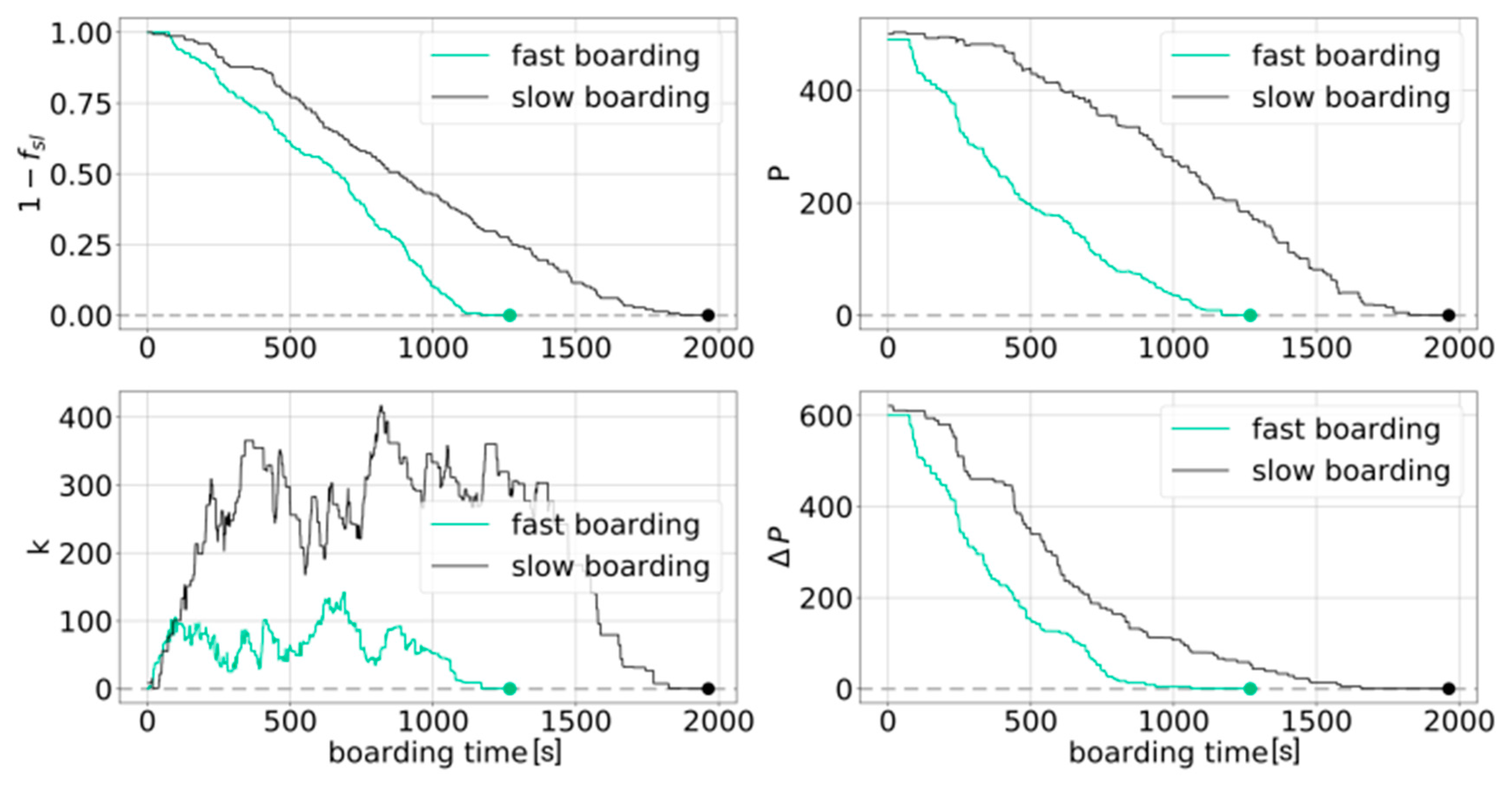

Complexity Metric

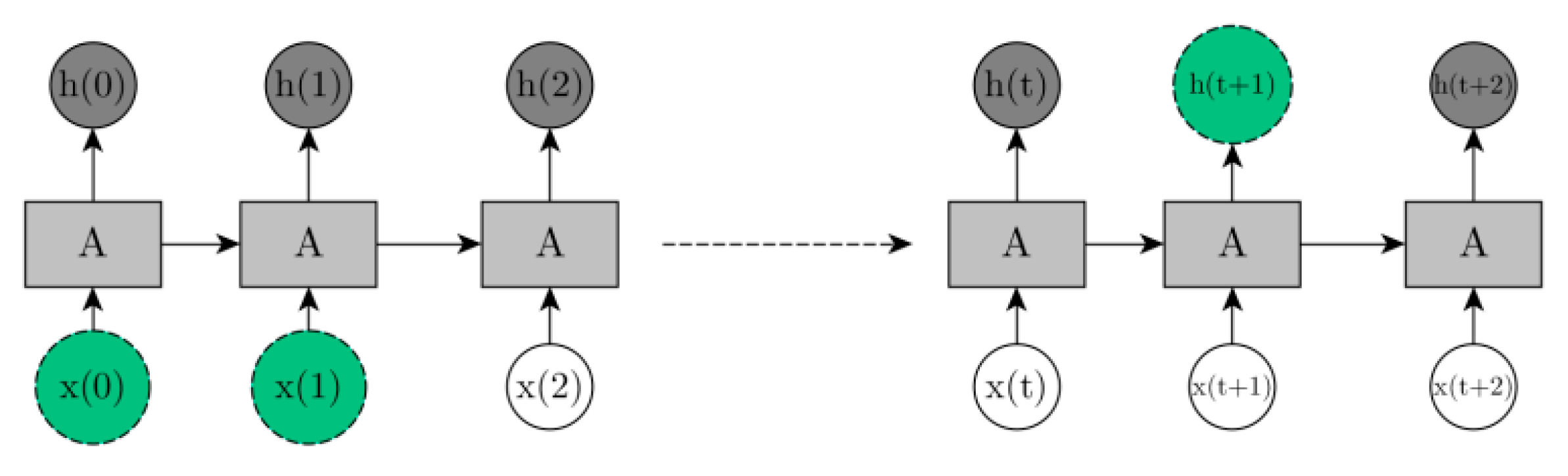

3. Machine Learning

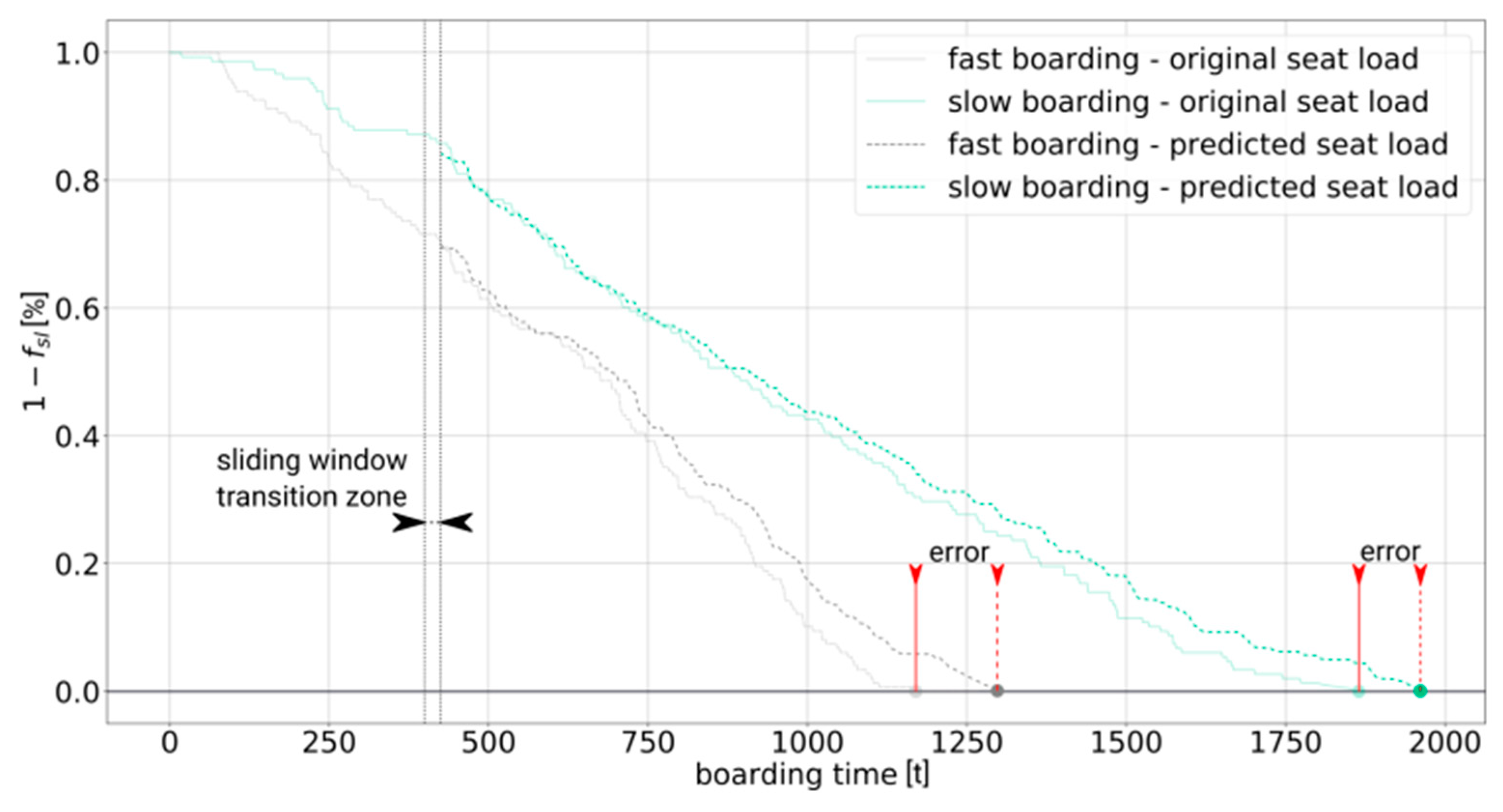

3.1. Data Transformation

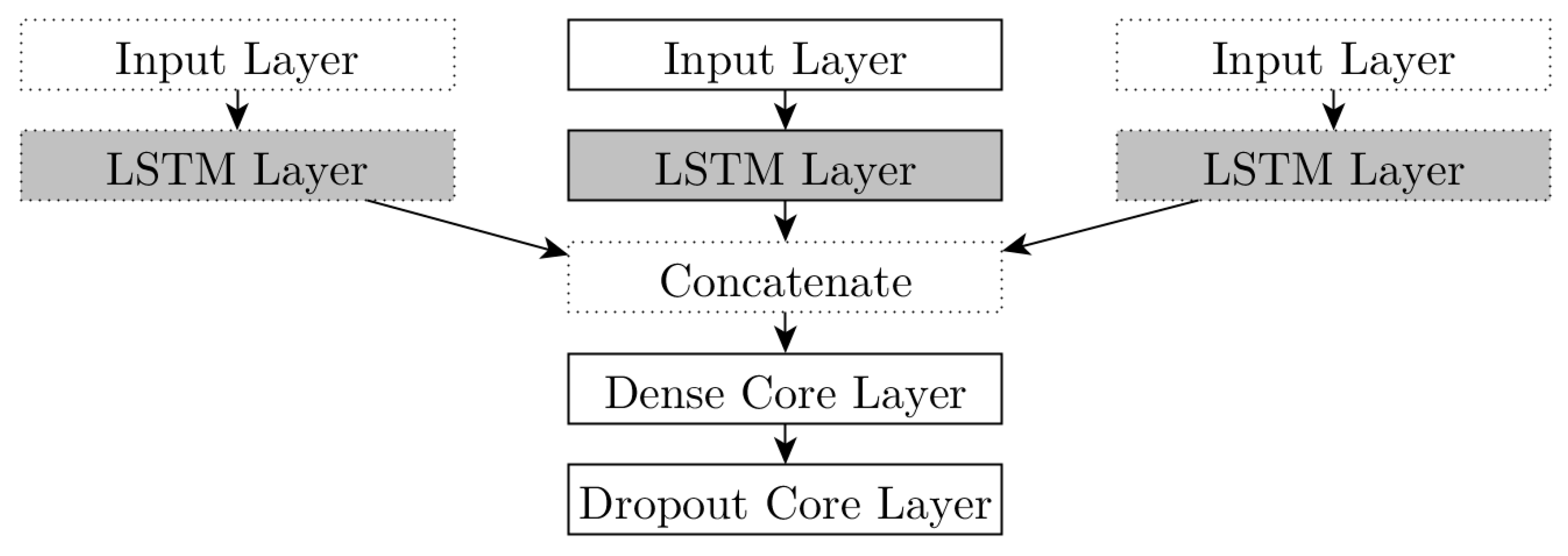

3.2. Neural Network Models

4. Simulation Framework and Application

4.1. Simulation Framework

4.2. Scenario Definition

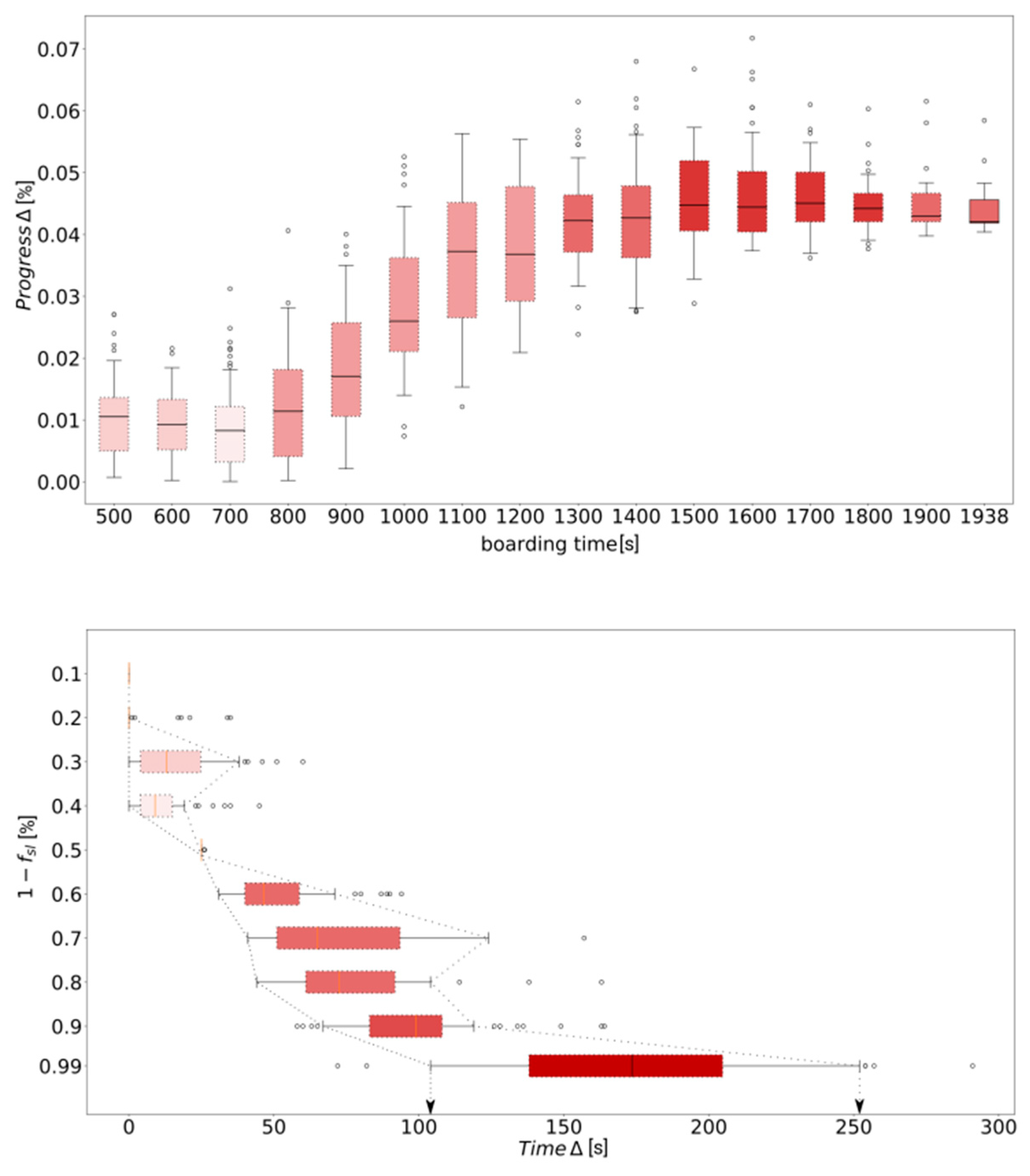

4.3. Results

5. Summary and Outlook

Author Contributions

Funding

Conflicts of Interest


| Time | Input A | Input B | Input C | Output |
|---|---|---|---|---|
| t | X_A | X_B | X_C | Y |
| 0 | - | - | P(0) | 1 − f_sl(1) |
| 1 | P(0) | 1 − f_sl(1) | P(1) | 1 − f_sl(2) |
| 2 | P(1) | 1 − f_sl(2) | P(2) | 1 − f_sl(3) |
| 3 | P(2) | 1 − f_sl(3) | P(3) | prediction |
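
The transformation table can be read as a lag-1 sliding window. At step t, Input A is P(t − 1), Input B is the previous output 1 − f_sl(t), Input C is P(t), and the output is 1 − f_sl(t + 1). The last row has no known target and is the value to be predicted. The Python sketch below reproduces that layout under this reading. The helper name `to_supervised` and the sample values are hypothetical and purely illustrative, not data from the study; `None` stands for the "-" cells and for the target still to be predicted.

```python
from typing import List, Optional, Sequence, Tuple

# One supervised row: (t, X_A, X_B, X_C, Y). None marks a "-" cell or a target still to predict.
Row = Tuple[int, Optional[float], Optional[float], float, Optional[float]]


def to_supervised(p: Sequence[float], y: Sequence[float]) -> List[Row]:
    """Frame two aligned series as lag-1 supervised rows (hypothetical helper).

    p[t] holds P(t) for t = 0..n-1.
    y[t] holds the target 1 - f_sl(t + 1); it is only known up to the
    prediction start, so len(y) <= len(p).
    """
    if len(y) > len(p):
        raise ValueError("more targets than P samples")
    rows: List[Row] = []
    for t in range(len(p)):
        x_a = p[t - 1] if t >= 1 else None            # Input A: P(t - 1)
        x_b = y[t - 1] if 1 <= t <= len(y) else None  # Input B: previous output 1 - f_sl(t)
        x_c = p[t]                                    # Input C: P(t)
        target = y[t] if t < len(y) else None         # Output: 1 - f_sl(t + 1); None = to predict
        rows.append((t, x_a, x_b, x_c, target))
    return rows


# Illustrative values only (not measurements from the study).
for row in to_supervised([0.0, 0.1, 0.25, 0.4], [0.95, 0.9, 0.8]):
    print(row)
```

Rows with missing lagged inputs (t = 0) would usually be dropped or padded before training a recurrent network; which option applies is not specified here.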

| Scenario | Boarding Strategy Learned | Boarding Strategy Predicted | Input from Complexity Metric | Prediction Start Time t (s) |
|---|---|---|---|---|
| A | random | random | {P, 1 − f_sl, [P, 1 − f_sl]} | {300, 400, 500} |
| B | all (random, block, back-to-front, outside-in, reverse pyramid, individual) | individual |
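
The scenario table leaves the input and start-time cells of scenario B empty, but the results table reports scenario B for the same three input configurations and the same start times of 300, 400, and 500 s. Assuming that reading, each scenario expands into 3 × 3 = 9 prediction runs, 18 in total, one per result cell. The Python sketch below enumerates that grid; the constant names and the `experiment_grid` helper are hypothetical.

```python
from itertools import product
from typing import Dict, List, Tuple

# Hypothetical encoding of the scenario table: scenario -> (strategies learned, strategy predicted).
SCENARIOS: Dict[str, Tuple[Tuple[str, ...], str]] = {
    "A": (("random",), "random"),
    "B": (
        ("random", "block", "back-to-front", "outside-in", "reverse pyramid", "individual"),
        "individual",
    ),
}

# Input configurations from the complexity metric: two uni-variate, one multi-variate.
INPUTS: Tuple[Tuple[str, ...], ...] = (("1 - f_sl",), ("P",), ("P", "1 - f_sl"))

# Prediction start times in seconds; assumed to apply to both scenarios.
START_TIMES_S: Tuple[int, ...] = (300, 400, 500)


def experiment_grid() -> List[Dict[str, object]]:
    """Expand every scenario into one run per (input configuration, start time)."""
    runs: List[Dict[str, object]] = []
    for name, (learned, predicted) in SCENARIOS.items():
        for features, start_s in product(INPUTS, START_TIMES_S):
            runs.append(
                {
                    "scenario": name,
                    "learned": learned,
                    "predicted": predicted,
                    "inputs": features,
                    "multivariate": len(features) > 1,
                    "start_s": start_s,
                }
            )
    return runs


if __name__ == "__main__":
    print(len(experiment_grid()), "prediction runs")
```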

| Scenario | Prediction Start | Input (Uni-Variate) [1 − f_sl] | Input (Uni-Variate) [P] | Input (Multi-Variate) [P, 1 − f_sl] |
|---|---|---|---|---|
| A | 300 s | NaN (t = 1h 28min) | NaN (t = 1h 43min) | 289.8 s (t = 3h 02min) |
| A | 400 s | 536.1 s (t = 0h 30min) | NaN (t = 1h 01min) | 143.7 s (t = 2h 38min) |
| A | 500 s | 301.8 s (t = 0h 32min) | NaN (t = 0h 49min) | 122.3 s (t = 2h 12min) |
| B | 300 s | 603.1 s (t = 1h 13min) | NaN (t = 1h 19min) | 168.1 s (t = 7h 38min) |
| B | 400 s | 292.1 s (t = 1h 12min) | 389.9 s (t = 1h 10min) | 77.3 s (t = 8h 01min) |
| B | 500 s | 246.0 s (t = 1h 08min) | 412.4 s (t = 1h 11min) | 73.3 s (t = 7h 54min) |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Schultz, M.; Reitmann, S. Consideration of Passenger Interactions for the Prediction of Aircraft Boarding Time. Aerospace 2018, 5, 101. https://doi.org/10.3390/aerospace5040101
