1. Introduction

It took almost four decades before the statistical usefulness and attractiveness of copulas was widely recognized after the seminal papers by

Frees and Valdez (1998),

Li (2000), and

Embrechts et al. (2002). Copulas are now a standard tool for modeling a dependence structure of multivariate iid data in applied science. The foundation of the copula theory was laid by the famous Sklar’s theorem (see

Sklar 1959), which states that any multivariate distribution can be represented through its copula and marginal distributions. If marginal distributions are continuous, then the copula of a multivariate distribution is unique. This approach is particularly flexible, since margins and the dependence structure—which is dictated by the copula—can be modeled independently.

To our knowledge,

Darsow et al. (1992) initiated a theoretical application of copulas to specify univariate Markov processes of first order. Thus, conditional independence can be stated in terms of copulas, and this results in a copula counterpart of the Chapman–Kolmogorov equations for the transition probabilities of a Markov process.

Ibragimov (2009) generalized their approach for univariate Markov processes of higher order as well as for non-Markov processes. Furthermore, he introduced new classes of copulas for modeling univariate time series. Estimation of copula-based stationary time series models can still be pursued in the classical framework as for iid data. For example,

Chen and Fan (2006) investigated theoretical aspects of the two-step estimation when marginal distributions are fitted non-parametrically in the first step and copula parameters are then estimated by maximum likelihood.

The first non-Gaussian VAR models were introduced by

Biller and Nelson (2003), where smartly chosen Gaussian VAR time series were transformed to achieve desired autocorrelation structure and marginal distributions. Recently,

Brechmann and Czado (2015),

Beare and Seo (2015), and

Smith (2015) simultaneously developed copula-based models for stationary multivariate time series. Although these models differ from each other, their generality consists of an underlying R-vine pair-copula construction (see

Aas et al. 2009) to describe the cross-sectional and temporal dependence jointly. To capture the cross-sectional dependence,

Brechmann and Czado (2015) employ C-Vine, while

Beare and Seo (2015) and

Smith (2015) consider D-vine. Further,

Brechmann and Czado (2015) and

Beare and Seo (2015) assume the existence of a key variable, whose temporal dependence was explicitly modeled, and this assumption combined with C- or D-vine for the cross-sectional dependence results in a corresponding R-vine for a multivariate time series. In contrast,

Smith (2015) explicitly modeled the temporal dependence between multivariate observations and constructed a general D-vine for them consisting of D-vines for the cross-sectional dependence.

In the time of big data, factor models offer an elegant solution to describe a high-dimensional panel data with a few unobservable (latent) variables, called factors. The idea behind factor models is that observable variables are driven by two orthogonal, hidden processes: one captures their co-movements and arises from a linear combination of the latent factors, whereas the other covers their individual nature in the form of idiosyncratic shocks. The dimension of the observed data usually significantly exceeds the number of factors, and so a reduction in dimension takes place.

In the seminal works of

Stock and Watson (

1999,

2002a,

2002b), factor models supported the forecasting of univariate time series. It was shown for large panel data that the unobserved factors can be consistently estimated, and this served for a consistent forecasting framework. In particular,

Stock and Watson (2002a) illustrated that the forecasting of several macroeconomic variables based on factor models can outperform those obtained from competing models such as autoregression (AR), VAR, and leading indicators. In the literature, factor models are classified as static or dynamic with respect to the stochastic dynamics of the unobserved factors. Static factor models suppose iid normally distributed factors, while dynamic factor models assume that the factor obeys a VAR model of order

.

In this paper, we apply the copula autoregressive (COPAR) model of

Brechmann and Czado (2015) to quantify the dependence structure of estimated unobserved factors in dynamic factor models. More precisely, we consider two dynamic factor models and estimate them separately with the maximum likelihood by employing the Kalman filter and smoother. The estimated factors are then combined with a COPAR model, from which latent factors are simulated for a forecasting purpose. Thus, our approach allows several estimated dynamic factor models to be coupled with a copula and admits a non-Gaussian dependence structure of simulated latent factors. To gain information from the estimated factors, a forecasted variable of each market is regressed on the corresponding simulated factors and its previous value.

It should be noted that our modeling approach is different from the factor copula modeling of

Krupskii and Joe (2013) and

Oh and Patton (2017). We first estimate unobserved factors and then fit copula for them as for observable data. With factor models, we reduce the data dimension, and using autoregressive structure of factors we decrease the number of copula parameters essentially. In contrast,

Krupskii and Joe (2013) and

Oh and Patton (2017) treat iid data and reduce the dimension of copula parameters by considering conditional independence with respect to unobserved factors. Numerically integrating the unobserved factors, a copula with low dimensional parameters for observed iid data is obtained. For multivariate time series,

Oh and Patton (2016) extended the factor copula models with time-varying parametric copulas.

In the empirical application, we consider monthly U.S. financial and macroeconomic panel data to filter driving factors later employed for a mean-variance portfolio optimization. Our main contribution is a new method to improve portfolio performance using factor predictions sampled from the COPAR model. In contrast to dynamic factor models, we explicitly allow for non-Gaussian cross-sectional and temporal dependence between factors. The forecasted factors with non-linear dependence structure are used to assess the future variability of multivariate asset returns. This allows us to construct an optimal portfolio in the mean-variance framework. For comparison, three benchmark portfolios are constructed using dynamic factor models as well as empirical moments of observed asset returns. Thus, our optimal portfolio outperforms the benchmark portfolios according to several risk-adjusted performance measures.

The rest of the paper is organized as follows.

Section 2 outlines dynamic factor models and their estimation in the maximum likelihood framework.

Section 3 briefly considers vine copulas.

Section 4 reviews COPAR models and discusses an algorithm to extend COPAR(1) model for another multivariate observation.

Section 5 presents our proposed methodology for an optimal asset allocation of 35 industrial stocks from S&P500 listed in

Appendix A and compares it with three benchmark portfolios. Finally, we conclude and discuss further research.

Appendix B gives an overview on the considered monthly panel data.

Appendix C presents bivariate pair-copulas considered in a model selection procedure for cross-sectional dependence. Appendices

Appendix D and

Appendix E contain detailed numerical results for portfolio comparisons.

Appendix F summarizes testing results of Granger causality between estimated factors.

2. Factor Models

In our application, we deal only with dynamic factor models (DFMs). Further, we restrict our exposition to the simplest factor dynamics of order 1, since any VAR

can be written in VAR

form (see

Lütkepohl 2005, p. 15).

Definition 1. (Dynamic Factor Model) For any point in time t, let be a stationary vector process with zero mean. Let denote the multivariate factor at time t, and let the vector collect all idiosyncratic shocks. Then, a dynamic factor model of order 1 is given bywith constant matrices and . The idiosyncratic shocks are iid Gaussian with zero mean and covariance matrix and the error vectors are iid Gaussian with zero mean and covariance matrix . Since

is a stationary process, Definition 1 implicitly assumes that unobserved factor process

is also stationary. The stationarity of

can be ensured if the roots of the characteristic polynomial

lie outside of the complex unit circle. In this case, the moving average representation for

yields its stationary

dimensional zero-mean Gaussian distribution (see

Lütkepohl 2005, pp. 18–21) given by

For known or estimated

Q and

A, the factors can be drawn from (

1) by truncating the infinite sum for a pre-specified error tolerance of

for all entries of

.

For the convenience of the reader, we outline the estimation procedure of

Bork (2009) and

Bańbura and Modugno (2014). and refer to the original works for further details. Ignoring the unobservability of the factors, the log-likelihood function of the model in Definition 1 for a data sample of length

T can be derived by iterative conditioning on observations (e.g., in

Bork 2009, p. 45 or

Bańbura and Modugno 2014, p. 156). However, the factors

are unobservable, and therefore the log-likelihood is integrated out with respect to the factor distribution. This results in the expected log-likelihood conditioned on the observed panel data, which constitutes the expectation step of the EM-Algorithm. In contrast to the unconditioned log-likelihood, here factors are replaced by their corresponding conditional moments of first and second order, which a single run of the Kalman filter and smoother given in

(Bork 2009, p.43) can provide.

In the maximization step of the EM-Algorithm,

Bork (2009) and

Bańbura and Modugno (2014) treat the conditional factor moments as constants, when the partial derivatives of the conditional expectation of the log-likelihood with respect to the model parameters are computed. Next, they search for the zeros of the arising system of linear equations to determine the maximum of the expected log-likelihood function. The iterative parameter updates of the EM-Algorithm from

Bork (2009) and

Bańbura and Modugno (2014) is summarized in Theorem 1.

Theorem 1. (EM-Algorithm as in Bork (2009) and Bańbura and Modugno (2014)) Assume the dynamic factor model in Definition 1 and let the matrix collect all panel data. Let the index indicate the current loop of the EM-Algorithm and let denote the expectation conditioned on the panel data and the parameters estimated in loop l. Then, the parameter updates in loop are given as follows:where stands for the inverse matrix and conditional factor moments are computed using the Kalman filter and smoother for each loop l. The iterative estimation procedure in Theorem 1 requires a termination criterion. In our application, we terminate the above EM-Algorithm as soon as the change in log-likelihood is smaller than . Finally, note that the estimated factors are unique up to rotation with orthogonal matrices. For forecasting purposes, one can ignore this fact, since estimated parameters of factors in forecasting equations will then be transformed correspondingly with no effect on forecasting variable. We illustrate this point later in our application.

3. Vine Copulas

Since the statistical applicability of vine copulas with non-Gaussian building bivariate copulas was recognized by

Aas et al. (2009), vine copulas became a standard tool to describe the dependence structure of multivariate data (see

Aas 2016 for a recent review). Moreover,

Brechmann and Czado (2015),

Beare and Seo (2015), and

Smith (2015) have applied vine copulas to model temporal dependence of multivariate time series as well as the cross-sectional dependence between univariate time series. In this section, we review the COPAR model of

Brechmann and Czado (2015), which is used to describe the stochastic dynamics and the dependence structure of the estimated factors from

Section 2. We start with the concept of regular vines from

Kurowicka and Cooke (2006). We do not consider illustrating examples on pair-copula constructions, and refer instead to

Aas (2016) or

Czado (2010) for more intuition on them.

Definition 2. (Regular vine)

A collection of trees is a regular vine on d elements if

- 1.

is a tree with nodes connected by a set of non-looping edges .

- 2.

For , is a connected tree with edge set and node set , where and are the number of edges and nodes, respectively.

- 3.

For , : (two nodes are connected by an edge e in if the corresponding edges a and b in share one node (proximity condition)).

A tree

is an acyclic graph, where

N is its set of nodes and

E is its set of edges. Acyclic means that there exits no path such that it cycles. In a connected tree we can reach each node from all other nodes on this tree. R-vine is simply a sequence of connected trees such that the edges of

are the nodes of

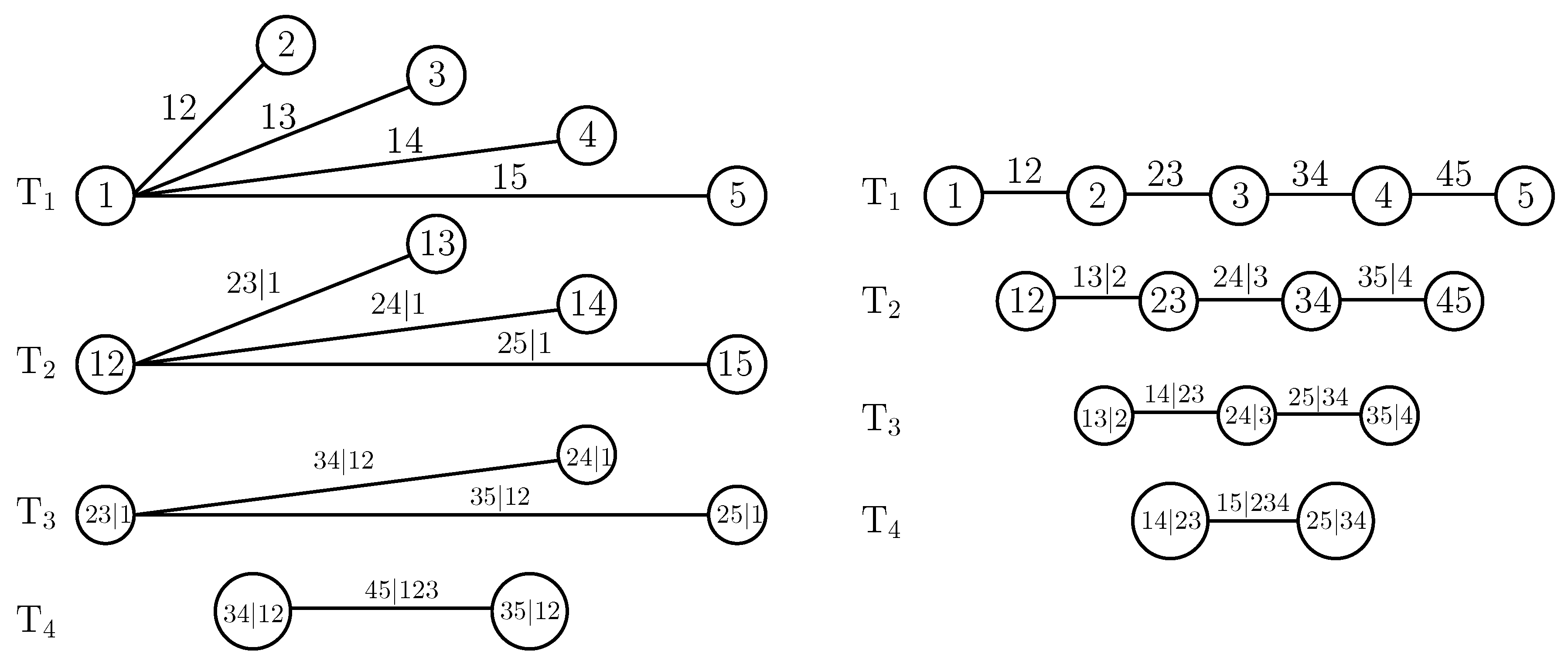

. A traditional example of these structures are canonical vines (C-vines) and drawable vines (D-vines) (see

Czado (2010) and

Aas et al. (2009)) in

Figure 1. Every tree of a C-vine is defined by a root node, which has

incoming edges, in each tree

,

, whereas a D-vine is solely defined through its first tree, where each node has at most two incoming edges.

Regular vines are a powerful tool to systemize all possible factorizations of a

dimensional density as a product of

d univariate marginal densities with a product of

conditional and unconditional bivariate copulas (see Theorem 4.2 in

Kurowicka and Cooke (2006)). Thus, the unconditional and conditional bivariate copulas—called pair-copulas—of a given factorization can be uniquely mapped onto the edge set

E of a particular regular vine, and vice versa. Then, the conditional copulas and their arguments depend on conditioned values. The dependence of conditional copulas on conditioned values is crucial, and allows for statistical applications only for a subclass of elliptical distributions (see

Stöber et al. (2013)), since in this case, conditioned pair-copulas depend on conditioned values only through their arguments.

Aas et al. (2009) first developed this observation further and went beyond the elliptical world. Thus, they considered regular vine factorizations with arbitrary fixed conditional copulas and showed that this results in valid multivariate distributions and copulas. In this paper, we also assume that conditional copulas depend on conditioned values only through their arguments, and so they can be chosen from bivariate copula families.

The number of possible vine structures on

d random variables can be immense. For

, only C- and D-vines are possible. For

, there are

different C- and D-vines. The total amount of regular vine structures has been computed by

Morales-Nápoles et al. (2010), and is equal to

. To select conditional copulas on these graphical structures, we define the following sets as in

Czado (2010).

Definition 3. (Conditioned and conditioning sets) For any edge of a regular vine , the complete union of e is the subsetThe conditioning set

associated with e isThe conditioned sets

associated with e areThe copula for this edge will be denoted by Given a regular vine, we specify a regular vine copula by assigning a (conditional) pair copula (with parameters) to each edge of the regular vine. In doing so, we follow

Czado et al. (2012).

Definition 4. (Regular vine copula). A regular vine copula in d dimensions is a multivariate distribution function such that for a random vector with uniform margins

is a regular vine on d elements,

is a set of copula families identifying the unconditional distributions of as well as the conditional distributions of

is the set of parameter vectors corresponding to the copulas in

To facilitate statistical inference, a matrix representation of R-vines was proposed by

Morales-Nápoles et al. (2010) and further developed by

Dissmann et al. (2013). To specify a

d-dimensional R-vine in matrix form, one needs several lower triangular

matrices: one that stores the structure of the R-vine, one with copula families, and another two with the first and second parameters.

For a

d-dimensional R-vine, the matrix with the structure has the following form

where

. The rules for reading from this matrix are as follows. The conditioned set for an entry

is the entry itself and the diagonal entry of the column

, whereas the conditioning set is composed of variables under the entry; i.e., for

, the conditioned set will be

, the conditioning set is

. Thus,

denotes the node

. We will assume that the diagonal of

M is sorted in descending order, which can always be achieved by reordering the node labels, so that we have

. To illustrate the R-vine matrix notation, we consider the C-vine from

Figure 1 and give below its R-vine matrix representation.

The first column encodes the following nodes

Node is saved through given and ;

Node is saved through given and ;

Node is saved through given ;

Node 51 is saved through .

In the sequel, we utilize C-vines to capture the cross-sectional dependence of a multivariate time series at any time point

t. To capture the dependence between multivariate observations, the first tree of the C-vine for multivariate observation at time point

t is connected to the first trees of the C-vines for existing neighboring multivariate observations at time points

and

with one edge, correspondingly. This results in the first tree of an R-vine for all multivariate observations treated as one huge sample point. Depending on the choice of C-vines and the connection of the first trees, the copula autoregressive model of

Brechmann and Czado (2015) from the next section can be obtained.

Finally, note that the information on copula families and their parameters is similarly stored in lower triangular matrices. Each element of the R-vine matrix below the diagonal specifies a conditional or unconditional pair-copula depending on the diagonal entry above it. The family and parameters of this pair-copula are now entered at the same entry place of matrices for copula families and parameters. Since the diagonal entries of the R-vine matrix alone do not determine any pair-copulas, no entries for copula family and parameters matrices are needed on the diagonal. To avoid confusion with space character, we fill the main diagonal with * sign.

4. Copula Autoregressive Model

R-vines have been mostly used to model contemporaneous dependence. We now present a special R-vine structure called copula autoregressive (COPAR) model from

Brechmann and Czado (2015) which is designed to capture cross-sectional, serial, and cross-serial dependence in multivariate time series, and allows a general Markovian structure. Let

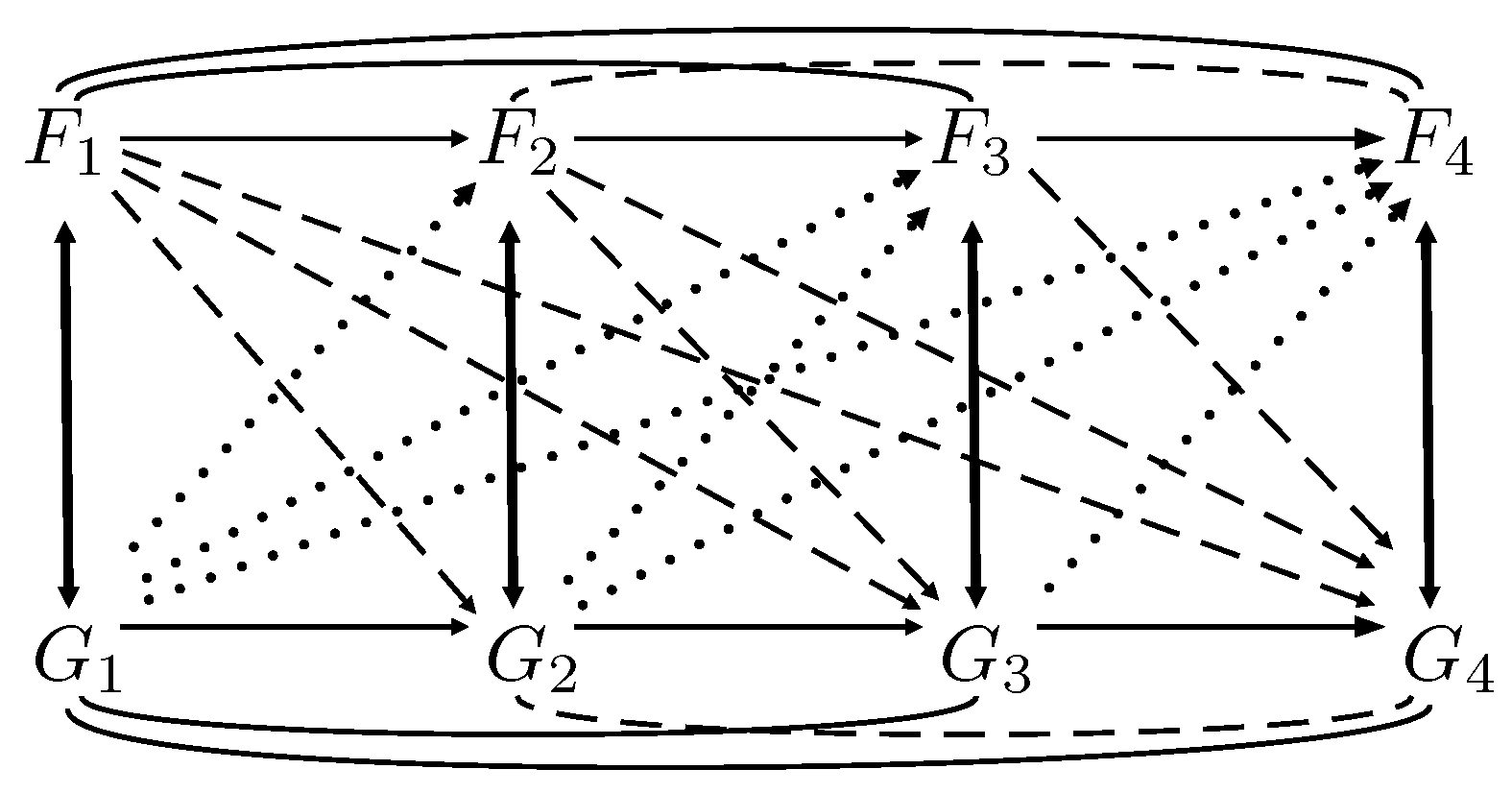

be an observable bivariate stationary time series. To illustrate how the two individual time series are interdependent, consider the mapping of dependencies for

in

Figure 2. Vertical solid lines represent the cross-sectional dependence, horizontal solid lines and curved lines represent the serial dependence for each time series, and dotted and dashed lines represent the cross-serial dependence.

Traditionally, R-vines were used to model only the cross-sectional dependence (pictured by the vertical solid lines in

Figure 2), but under the assumption that other dependencies are absent (i.e., data is iid). The COPAR model is designed to additionally capture serial and cross-serial dependence. The following definition of a COPAR model without Markovian structure for two time series adopted to our notation is taken from

Brechmann and Czado (2015). The vectors

and

are denoted as

and

, respectively.

Definition 5. (COPAR model for the bivariate case) The COPAR model for stationary continuous time series and has the following components.

- (i)

Unconditional marginal distributions of each time series are independent of time.

- (ii)

An R-vine for the serial and between-series dependence of and , where the following pairs are selected.

- 1.

Serial dependence of : The pairs of serial D-vine copula for ; that is, - 2.

Between-series dependence:and - 3.

Conditional serial dependence of : The pairs of a serial D-vine copula for conditioned on all previous values of ; that is,

Pair copulas of the same lag length , , are identical. We associate

- 1.

copula with (2), - 2.

copulas and with (3) and (4), respectively, and - 3.

copula with (5).

Let us explain the above definition on an example of a bivariate time series

with four observations. Using a D-vine, Equation (

2) captures the dependence structure of

,

,

, and

expressed by the top lines connecting them in

Figure 2. For

and

, Equation (

3) describes the conditional dependence between

and

conditioned on

,

, and

. Thus, Equation (

3) captures the conditional dependence between

and

for

reflected by the dashed lines in

Figure 2. For

, Equation (

3) models the unconditional dependence between

and

expressed by the vertical lines. Similarly, Equation (

4) describes the conditional serial dependence between univariate time series illustrated by the dotted lines in

Figure 2. However, Equations (

3) and (

4) are not symmetric with respect to conditioned sets. In particular, the conditional distribution of

and

for

is independent of

. This already indicates that the first time series

plays a key role in the stochastic dynamics of the time series, since it is sufficient to describe the conditional dependence between

and

for

. Therefore, the univariate time series are not interchangeable. Using a D-vine, Equation (

5) finally describes the dependence structure of

(connecting bottom lines in

Figure 2) conditioned on

. Due to the property that copulas of same lag length are identical, the COPAR model defines a stationary bivariate time series.

Now, we expand the COPAR model to an arbitrary number of variables with Markov structure. We consider

q univariate time series observed at

T time points; that is,

. We denote a random vector of

q variables observed at times

as

, time series of individual variables for

as

, and also introduce a vector

. Finally, we define a COPAR

model, which is COPAR model for a multivariate time series with a Markovian structure of the

k-th order. Since unobservable factors in

Section 2 are also denoted by

, our notation above should not lead to a confusion. Our modeling approach utilizes estimated factors even if they are not observed. Therefore, for reader convenience, we denote here multivariate time series with

and present COPAR models in terms of

.

Definition 6. (COPAR model for q time series of length T) The COPAR model for a q-dimensional stationary time series , has the following components.

- (i)

Unconditional marginal distributions of each time series are independent of time.

- (ii)

An R-vine for the serial and between-series dependence of , , where the following pairs are selected.

- 1.

Serial dependence of : The pairs of serial D-vine copula for ; that is, - 2.

Between-series dependence of and for , :and - 3.

Conditional serial dependence of for :

whereas

- (a)

copulas and for lag length are independent copulas,

- (b)

copulas for for the same lag length , are identical.

Definition 6 introduces the COPAR model, if a) is neglected. The dependence of

is modeled conditioned on

, and consequently, the order of variables cannot be simply interchanged. The number of pair copulas in COPAR models for time series with Markovian structure does not depend on

T, and is less than the number needed for a general R-vine. With respect to the number of pair-copulas, the following result from

Brechmann and Czado (2015) holds.

Lemma 1. The number of copulas needed for a COPAR model of q univariate time series is .

Note, the number of parameters in a VAR for the between-series dependence of q time series—not counting parameters for marginal distributions—is equal to the number of pair-copulas in a COPAR model. Therefore, the number of parameters in a COPAR model is bounded by a multiple of the number of VAR parameters, depending on the number of parameters of the involved copula families. In contrast, a general R-vine requires pair-copulas, resulting in a huge number of parameters.

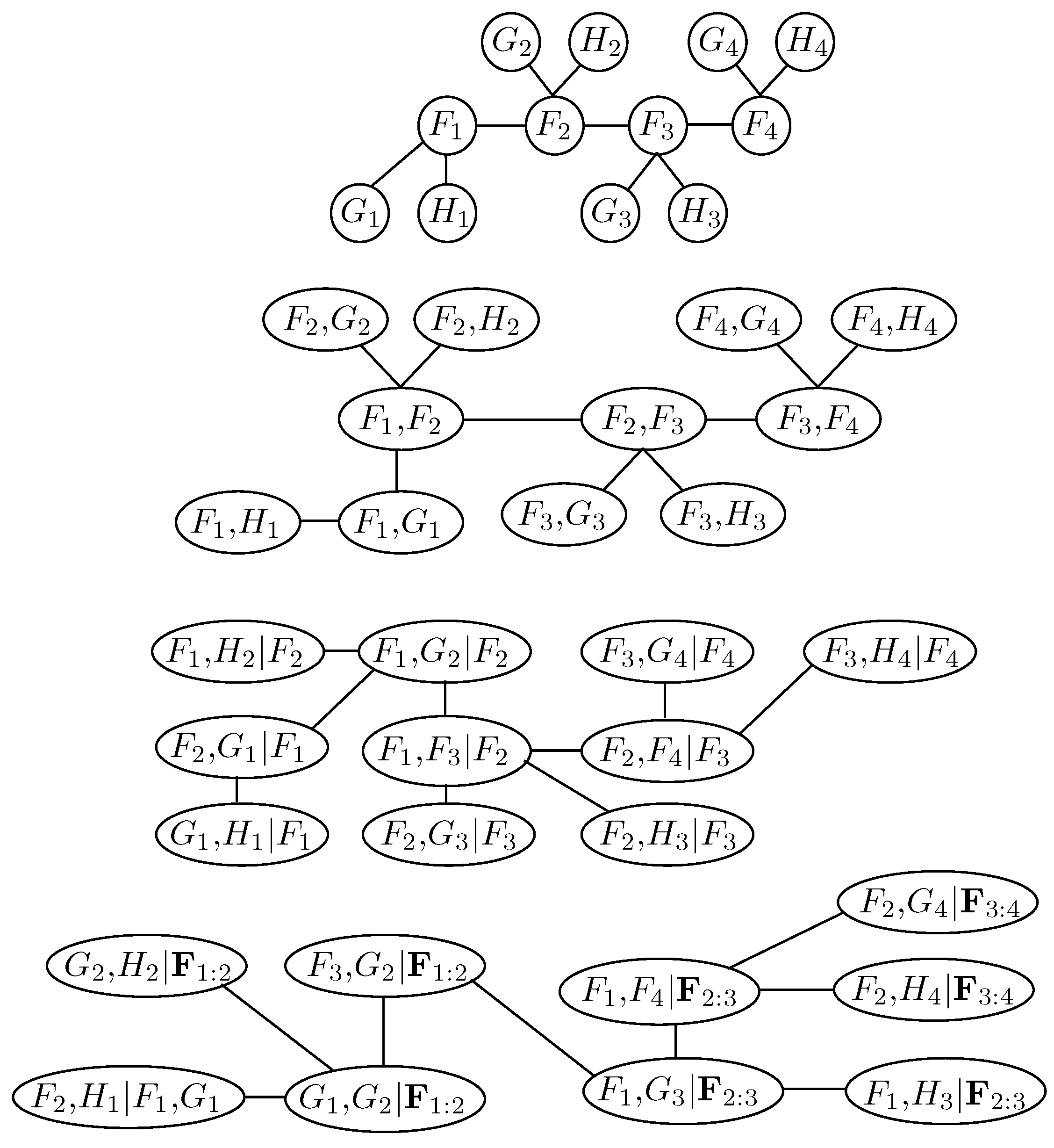

Figure 3 illustrates the first 4 trees of a COPAR model for three univariate time series

,

, and

with

(i.e., for four observations). Examining the first tree, we observe that it is a sequence of connected C-vines, where the central nodes are the time points of the first factor

. Thus, unconditional contemporaneous dependence is modeled with a C-vine.

The matrix representation of the R-vine structure of this COPAR model is given by

and the matrix of copulas for three-dimensional COPAR model is given by

If a COPAR

model is estimated based on the first

T multivariate observations, then it can easily be extended to

observations. This allows us to sample the

-th observation according to the estimated dependence structure between the

th subsequent multivariate observations. Let us illustrate this point for a COPAR

model and the above example with three univariate time series. The matrix representation of the R-vine structure of this COPAR

model remains unchanged, and the matrix of copulas for three-dimensional COPAR

model is now simplified to the matrix in (

6), where 0 stands for independence copula

Now, if we want to expand this COPAR model by adding a new time point (i.e., —in our case, ), the matrix representation will be changed as follows

Add three blank columns to the left of the matrix and add to the diagonal;

Under comes , then and ;

Under comes , then and ;

Under comes , then and .

The expanded matrix representation is as follows, where the new columns are marked in bold:

We also expand the matrix of copulas by adding three columns to the left of (

6), as follows:

Thus, the three new columns are just replications of the previous three columns by expanding the uninterrupted sequences of zeros with an additional zero.

5. Empirical Application

The idea to model asset returns with factors arising from some observed data and idiosyncratic components is quite popular in modern finance theory. The most prominent example is the capital asset-pricing model (CAPM) of

Sharpe (1964),

Litner (1965),

Mossin (1966), and

Treynor (2012), which is a one-factor model with the market return as the only common driver of asset prices. Another well-known approach is the arbitrage pricing theory (APT) of

Ross (1976). In this case, a multi-factor model describes the return of an asset as the sum of an asset-specific return, an exposure to systematic risk factors, and an error term. A third example is

Stock and Watson (2002a), who extracted factors from a large number of predictors to forecast the log-returns of the Federal Reserve Board’s Index of Industrial Production.

Ando and Bai (2014) provide further empirical evidence that stock returns are related to macro- and microeconomic factors. In this section, latent factors from U.S. macroeconomic data are extracted and then used for portfolio optimization. We consider 35 assets from S&P 500, which are classified as “Industrials” according to Global Industry Classification Standard. We assume that the estimated factors have the most prediction power for these assets.

The U.S. panel data includes such economic indicators as government bond yields along the curve, currency index, main commodity prices, indicators of money stock, inflation, consumer consumption, and industrial production gauges. Altogether, we have 22 time series listed in

Appendix B. Each series contains monthly data from 31 January 1986 to 30 November 2016, 371 data points in total. Next, we split the panel data into financial and macroeconomic groups according to

Table A2 and

Table A3 in

Appendix B. In the sequel, each time series is transformed in order to eliminate trends and achieve its stationarity.

Table A2 and

Table A3 in

Appendix B also contain information on considered data transformations. Further, we consider three factor models: separately for the two groups of the panel data, and one joint model for all monthly indicators. Thereby, we aim to illustrate that modeling nonlinear dependence between estimated factors of different groups of panel data with COPAR may lead to a better asset allocation.

We consider the following three DFMs for

:

where

collects observed macroeconomic data and

is the vector of factors. The dimension

of the panel data for

and

is equal to

and 22, respectively.

In the first step, we estimate three DFMs—one for each group (macro and financial) and for the full panel data. The starting time frame is from January 1986 to December 2005, and expands successively by one month until November 2016 is reached. For the three DFMs, we apply the EM-Algorithm from

Section 2 to the panel data from each expanding time window and obtain a monthly sequence of estimated factors for each month of the considered time period. For

, and

, the EM-Algorithm requires the factor dimension

, which is not known. In what follows, we perform the model selection for the factor dimension.

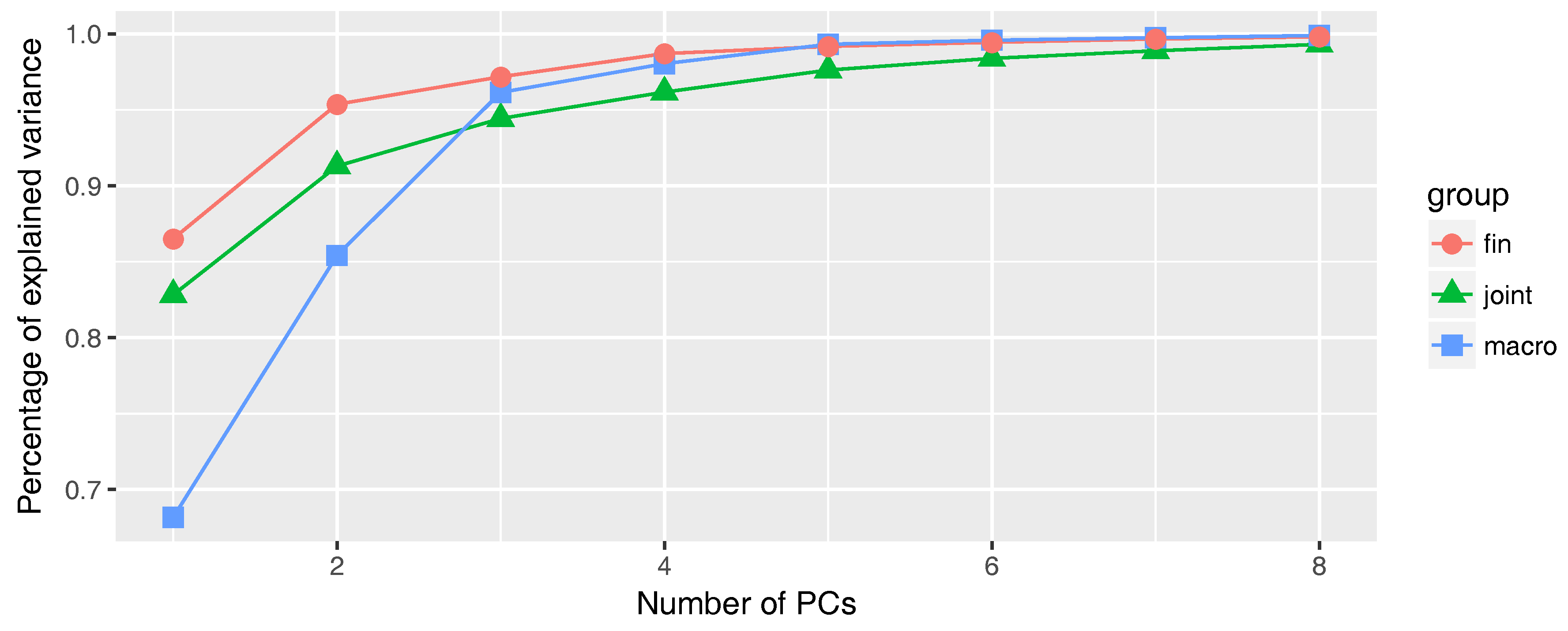

The dimension of factors is selected using principal components analysis (see

Jolliffe 2002). We choose the number of principal components (PCs) such that we capture more than 95% of the empirical data variance, based on the initial time frame from January 1986 to December 2005.

Figure 4 illustrates the fraction of variance captured by eight or fewer PCs for the considered time window. Thus, two factors are sufficient for the financial group to capture 95.4% of the corresponding data variability. For macroeconomic indicators, we need three factors (96.5% of variability), while four factors are enough for the whole panel data (96.2% of variability).

In general, the latent factors of DFMs may follow a VAR model of order

p. Initiated by a referee’s comment, we also consider autoregressive order

for the joint DFM with all monthly indicators and compare its forecasting performance for

and

. Our decision criterion is based on the root-mean-square error (RMSE) of point predictions defined for univariate observations

as

where

are predicted values. Thus, we compute the RMSE for each time series and take their average value. Thereby, our first prediction is done for January 2006 and ends in November 2016, resulting in 131 point forecasts. The averaged values of the RMSE for

and for

are

and

, respectively. Therefore, our initial choice of

is justified.

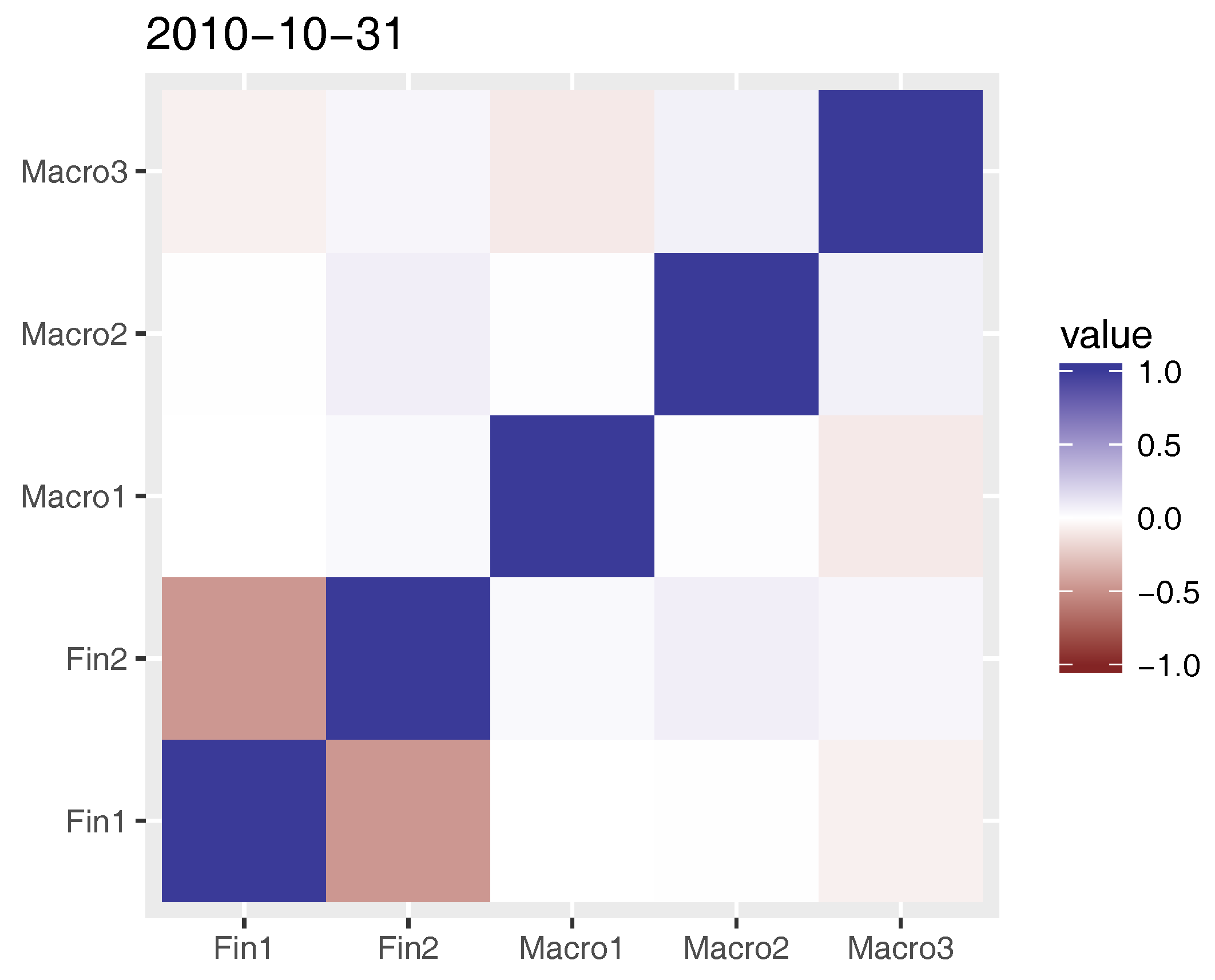

Figure 5 illustrates the correlation coefficients of the financial and macro factors filtered from data up to October 2010. The estimated factors show some moderate linear dependence within groups and a weak linear dependence between groups. Note that dynamic factor models assume factors to be multivariate normally distributed. Nevertheless, the estimated factors could exhibit non-Gaussian dependence, which we aim to capture using the COPAR model.

To improve the joint forecasting of asset returns at month t, for a subsequent month, we link the two factor models; namely, we capture the dependence structure of the estimated factors with a COPAR model. Thereby, we combine the estimated factors from two groups as a single five-dimensional vector for each data time window. We put the financial factors first, then the macroeconomic. Using fitted marginal normal distribution functions, the filtered factors are translated to copula data.

We consider a COPAR(1) model, since the transition equation of the DFMs is supposed to be a VAR(1). Further,

Brechmann and Czado (2015) discuss a sophisticated copula family selection procedure for two time series. Here, we follow a more simple approach consisting of three steps. In the first step, the selection of copulas for contemporaneous cross-sectional dependence of the filtered factors is done by neglecting serial dependence. Note that the cross-sectional dependence is described by a C-vine with fixed order of variables. Therefore, we only have to select copula families of pair-copulas. Thus, we consider the estimated five-dimensional factors as iid data and perform sequential selection of copula families for each pair-copula using Akaike information criterion. Copula families for the pair-copulas associated with the first tree of the C-vine are selected first, then with the second tree, and so on. For more details on model selection for C-vines, we refer to

Czado et al. (2012). Equation (

9) presents the selected copula families for cross-sectional dependence in R-vine matrix notation until December 2005 (for copula families in (

9), see

Appendix C):

It is remarkable that none of the selected copula families in (

9) is Gaussian. Moreover, only 0.5% of families selected for cross-sectional dependence for all expending time windows are the Gaussian copula.

In the second step, we use the Gaussian copulas as conditional pair-copulas to model the temporal dependence of the filtered factors. Note that this simple approach does not imply that the joint distribution of the time-shifted factors is Gaussian except for the first component of

; i.e.,

. Non-Gaussian pair-copulas for cross-sectional dependence destroy the Gaussianity of the time-shifted factors, and only the joint distribution of the first component of

remains Gaussian. In the third step, the R-vine matrix of the COPAR(1) model for the estimated factors

is constructed, and the maximum likelihood estimation is performed. Copula selection and parameter estimation of the COPAR model for the factors is done for every expanding window of data; that is, as soon as the DFMs for both groups are estimated and the latent factors are filtered out. Finally, note that we also test Granger causality between components of the multivariate time series

in the whole time period, and can confirm it. For this, we regress univariate series of

on the lagged values of

.

Table A11 in

Appendix F summarizes all five linear regressions. For example, the first component of

Granger causes almost all other univariate time series at least at

of significance level with the exception of the second component of

.

Next, we present a conditional method for forecasting using COPAR, which follows

Brechmann and Czado (2015) with a small modification. For a prediction time point, we simulate factors from the estimated COPAR model, but conditioned on the past values of the factors. The sampled factors are further used to forecast assets returns and to construct an optimal mean-variance portfolio. Since we assume an autoregressive order of one, it is enough to condition only on the last value of factors; i.e., we condition only on

and

to make a forecast for time point

. The full algorithm for both markets is given in Algorithm 1, employing notation

.

| Algorithm 1: Conditional method of forecasting using COPAR. |

Estimate COPAR model based on the first T observations and get factor estimates ; Set ; Repeat the following steps

- (a)

If then Stop; - (b)

Determine the estimated conditional density of on the equidistant grid on with step , i.e., for , ;

where and are the estimated Gaussian density and distribution function of latent factor ; - (c)

Determine the estimated conditional cumulative distribution function on the above grid; - (d)

Simulate 10,000 from the estimated conditional cumulative distribution function and set forecast to their empirical mean; - (e)

Set and go to (a);

|

To illustrate the idea behind this method, we consider a small-scale example of two variables

and

. Let us assume that we observe some values of these two random variables at time point

t; i.e.,

,

. We want to find the distribution of

and

conditioned on the observed values that we have observed. For this purpose, we consider the decomposition of the conditional distribution of

given by

where

is some forecast. One has a free choice for forecast methods for

. As

Brechmann and Czado (2015), we opt for the conditional mean estimated by the sample mean. Next, we first estimate the conditional density, and then, the conditional distribution function. For this simple case of two variables

and

, the estimated density

of

on a grid from 0 to 1 with step 0.0001 is estimated. Then, we search for the estimated distribution function

. With

and

, one can estimate the mean.

In the next step, we use the estimated factors to model the asset returns of 35 industrial stocks from S&P 500 and the sampled factors to construct an optimal portfolio in the mean-variance framework for each expanding time window starting from January 2006 up to November 2016. We consider and estimate the following model for each asset return

:

where the constants

,

, and the vector

constitute unknown regression parameters and the errors

are assumed to be iid Gaussian with respect to

t. The error terms

are assumed to be independent across

j due to the dimensionality of the error covariance matrix. Moreover, we treat all linear regression separately for each asset

j due to the dimensionality of regression parameters and couple them with the common estimated factors. In

Section 2, we have pointed out that the estimated factors are unique up to a rotation with an orthogonal matrix

R; i.e.,

is an identity matrix. Since

holds, the impact of the rotated and unrotated estimated factors together with their regression coefficients on asset returns remains the same.

To construct an optimal portfolio in the mean-variance framework, we determine portfolio weights

at month

t by solving the following optimization problem with respect to

:

where

is a vector of asset returns at

t,

and

are the expectation and covariance matrix of

. Thus, our optimal portfolio does not allow short-selling.

Since

and

are unknown at month

t, we estimate them with four different methods, resulting in four portfolios. The first portfolio is the COPAR portfolio, which is constructed using asset returns modeled with (

10). In this case,

and

are empirically estimated at time point

t based on 10,000 sampled factors from the COPAR model and 10,000 sampled errors from the estimated univariate Gaussian distribution. The second portfolio is the DFM portfolio similarly constructed with sampled factors from the estimated stationary distribution (

1) for (

7)–(

8) and

(i.e., the DFM for the full panel data). The third portfolio—called independent DFM—uses sampled factors from the estimated stationary distribution (

1) for (

7)–(

8) and

; i.e., assets returns are driven by

. The fourth portfolio is the historical portfolio, which employs the empirical mean and covariance matrix based on the data up to time

t. We consider the historical portfolio as a benchmark. Finally note that the comparison of these four portfolios enables the assessment of the economic relevance of factors.

For the above mean-variance optimization problem, we choose three monthly volatilities

, which correspond to annual volatilities of

. These choices of

’s are practically reasonable, and the optimizer diversifies the portfolio for them. For higher values of

, the optimal portfolio consists of only one stock, if no constraints on maximal weights are imposed. We start to determine an optimal portfolio for the panel data up to January 2006. Then, we sequentially expand the time window by one month and find optimal weights for the considered four portfolios and chosen level of volatilities. The performance of the four portfolios with initial investment of 1 USD during the out-of-sample time period up to November 2016 is illustrated in

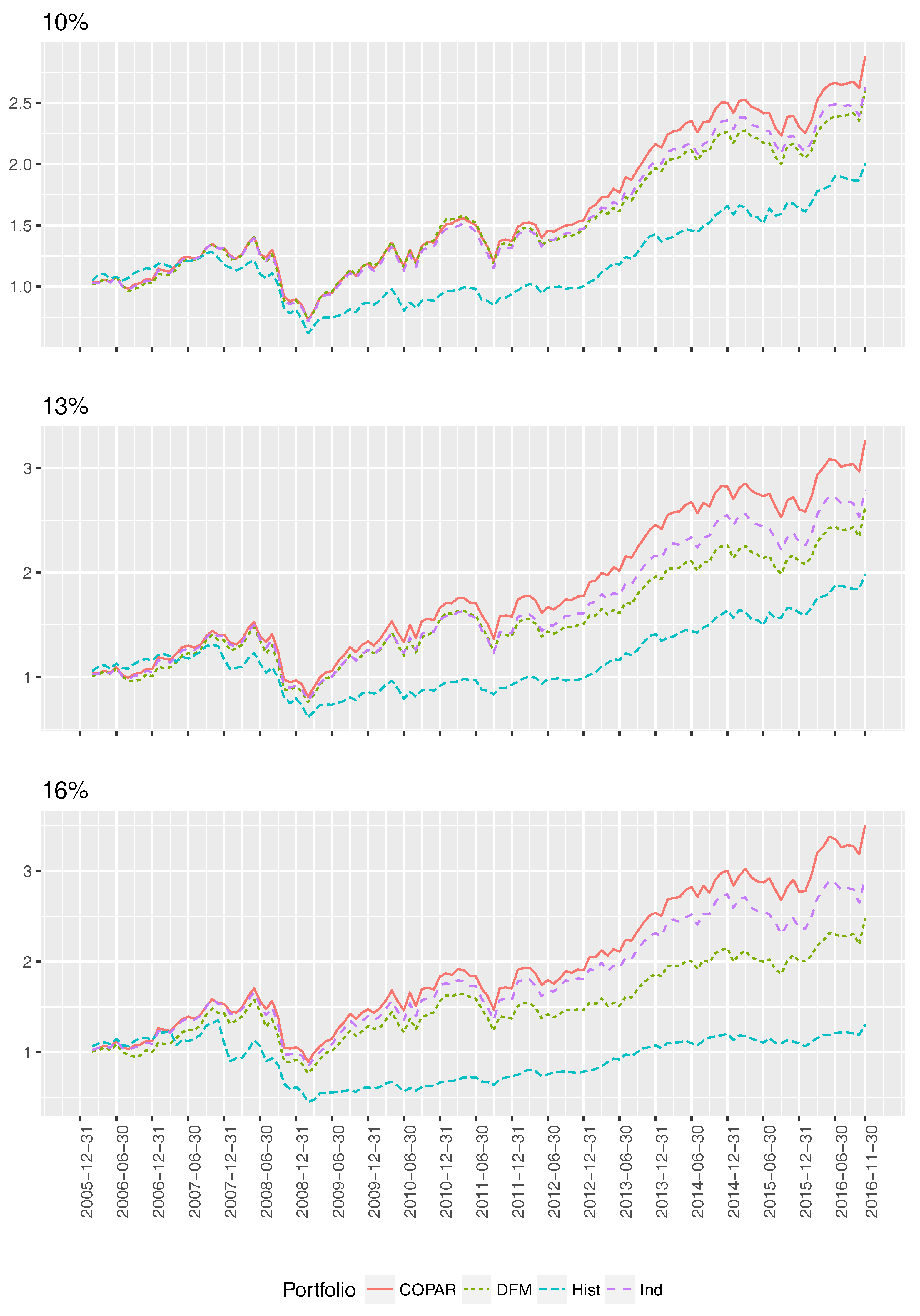

Figure 6.

We observe that the COPAR portfolio outperforms the DFM, independent DFM, and historical portfolios. Further, two portfolios based on DFMs deliver a higher overall return than the historical portfolio, and the independent DFM (abbreviated in Figures and Tables as ind) is preferred over the DFM portfolio.

To compare the four portfolios, we consider several portfolio performance and risk measures summarized in

Appendix D. First, note that observed standard deviations of portfolio returns are higher than prespecified ones. This is natural due to the prediction error. According to the Sharpe and Omega ratio, the COPAR portfolio is preferred over all remaining portfolios. For both risk measures, the independent DFM portfolio outperforms the DFM portfolio. We explain this finding with a fortunate split of the panel data. Further, if we consider

Value at Risk (VaR) and

Conditional Value at Risk (CVaR), then the historical portfolio outperforms the others except for one case. For

and

VaR, the COPAR portfolio is slightly superior.

To statistically assess the differences in the Sharpe ratios, we perform the Jobson–Korkie Test from

Jobson and Korkie (1981). The null hypothesis of this test is that the Sharpe ratios of the two considered portfolios are equal. The normalized and centered test statistics are asymptotically Gaussian distributed with mean 0 and variance 1 under the null hypothesis. If the null hypothesis is rejected, then there is significant statistical evidence for different Sharpe ratios.

Appendix E presents results of the Jobson–Korkie Test for all pairs of portfolios and

. The COPAR portfolio significantly outperforms the independent DFM portfolio, and this advocates our approach to model estimated factors with a COPAR model. For

and

, we do not see a statistically significant difference in Sharpe ratios for historical and COPAR portfolios. We explain this finding with lower standard deviations of the historical portfolio returns.