A Calibration Report for Wireless Sensor-Based Weatherboards

Abstract

1. Introduction

- (a) What is the accuracy of the sensors?

- (b) What is the variability of measurements in a network containing such sensors?

- (c) What change, or bias, will there be in the data provided by a sensor if its siting location is changed?

- (d) What change, or bias, will there be in the data if the sensor replaces a different sensor measuring the same weather element(s)?

- (1)

- The sensors boards’ lag errors; these result from: delay statements used for stabilizing power supply after waking up the sensor boards; the process of storing the readings in secure digital (SD) cards; print and println statements; and general packet radio service (GPRS) commands;

- (2)

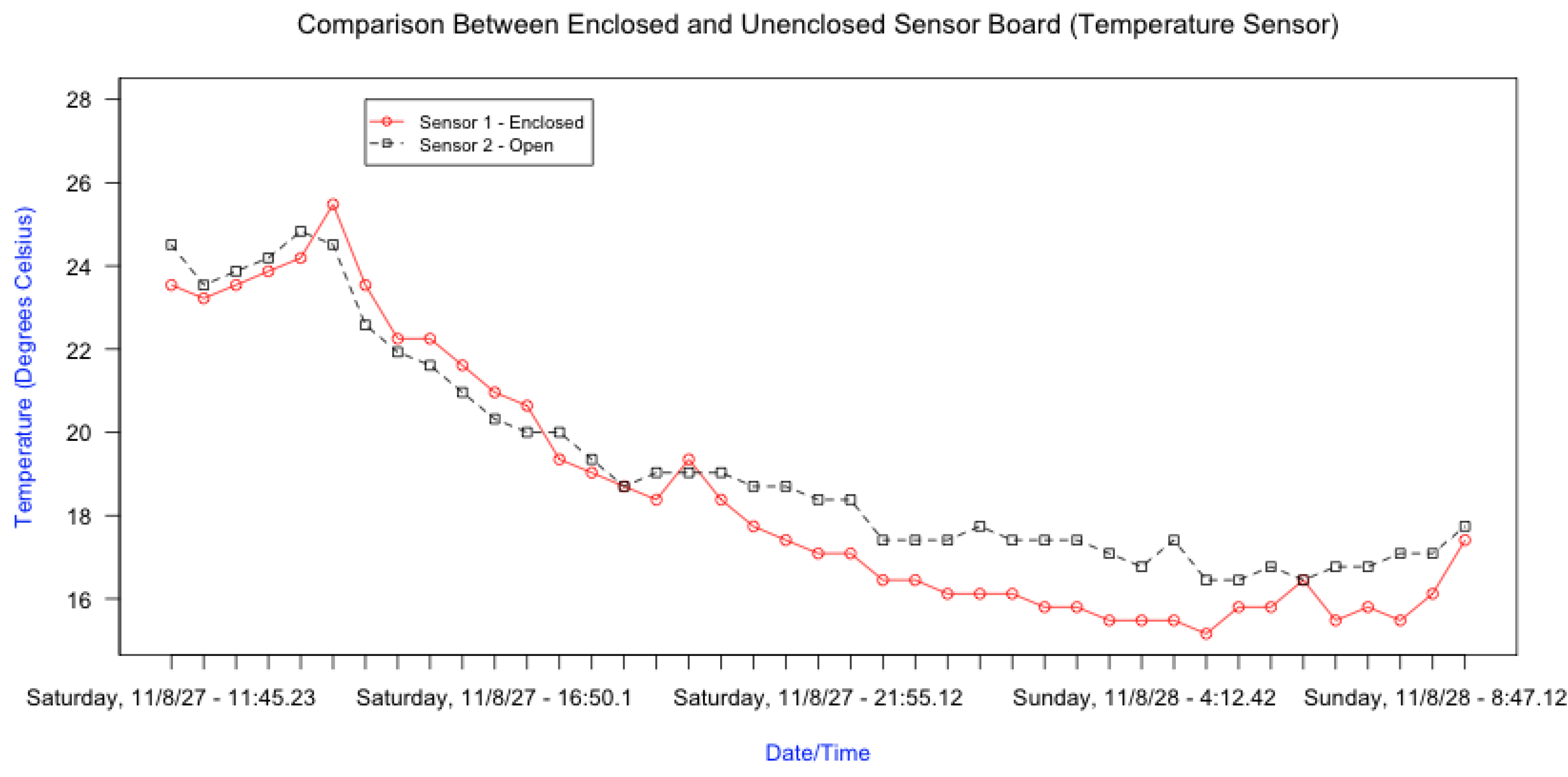

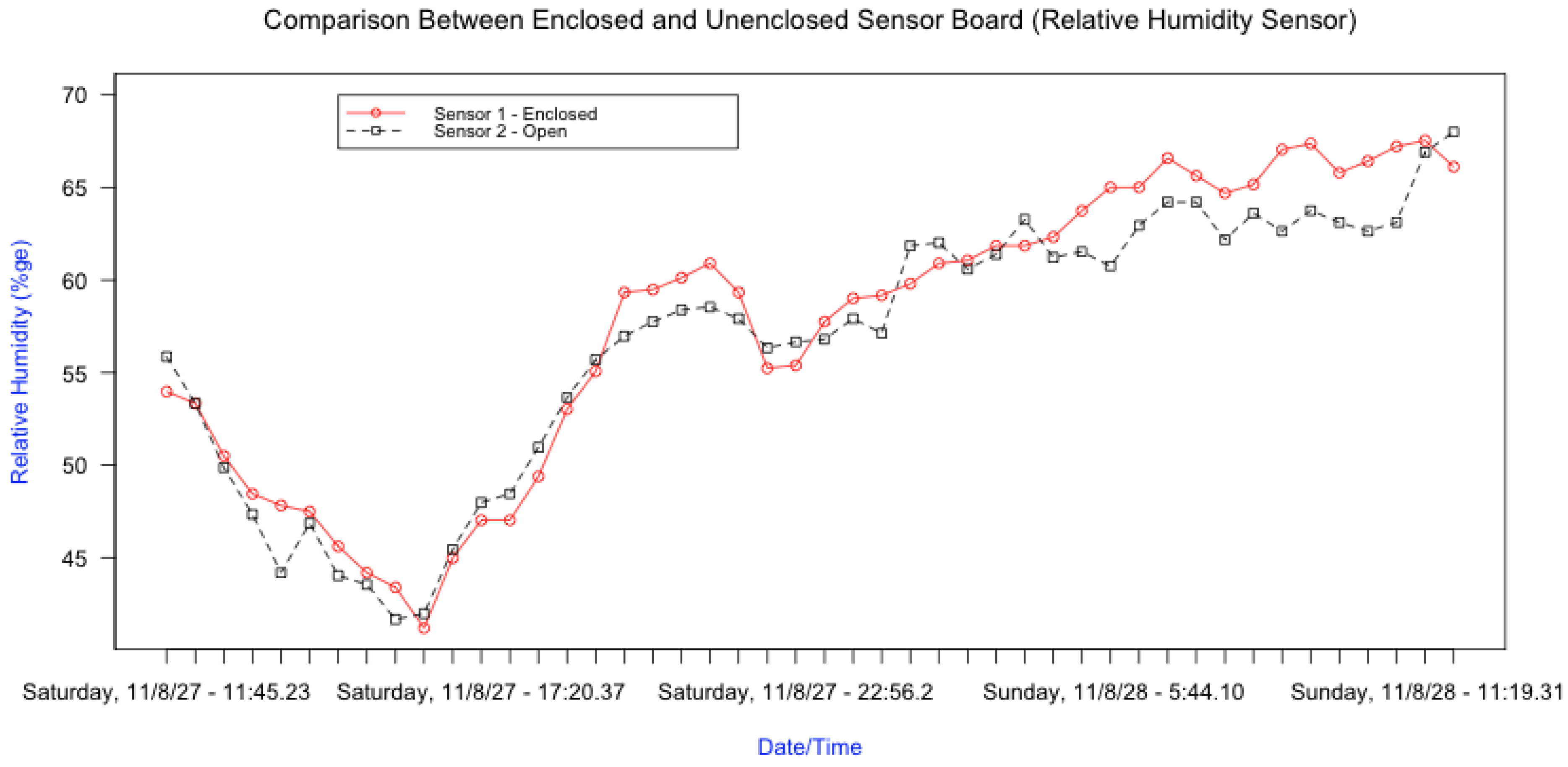

- The effects of enclosing the sensor boards in a Perspex enclosure;

- (3)

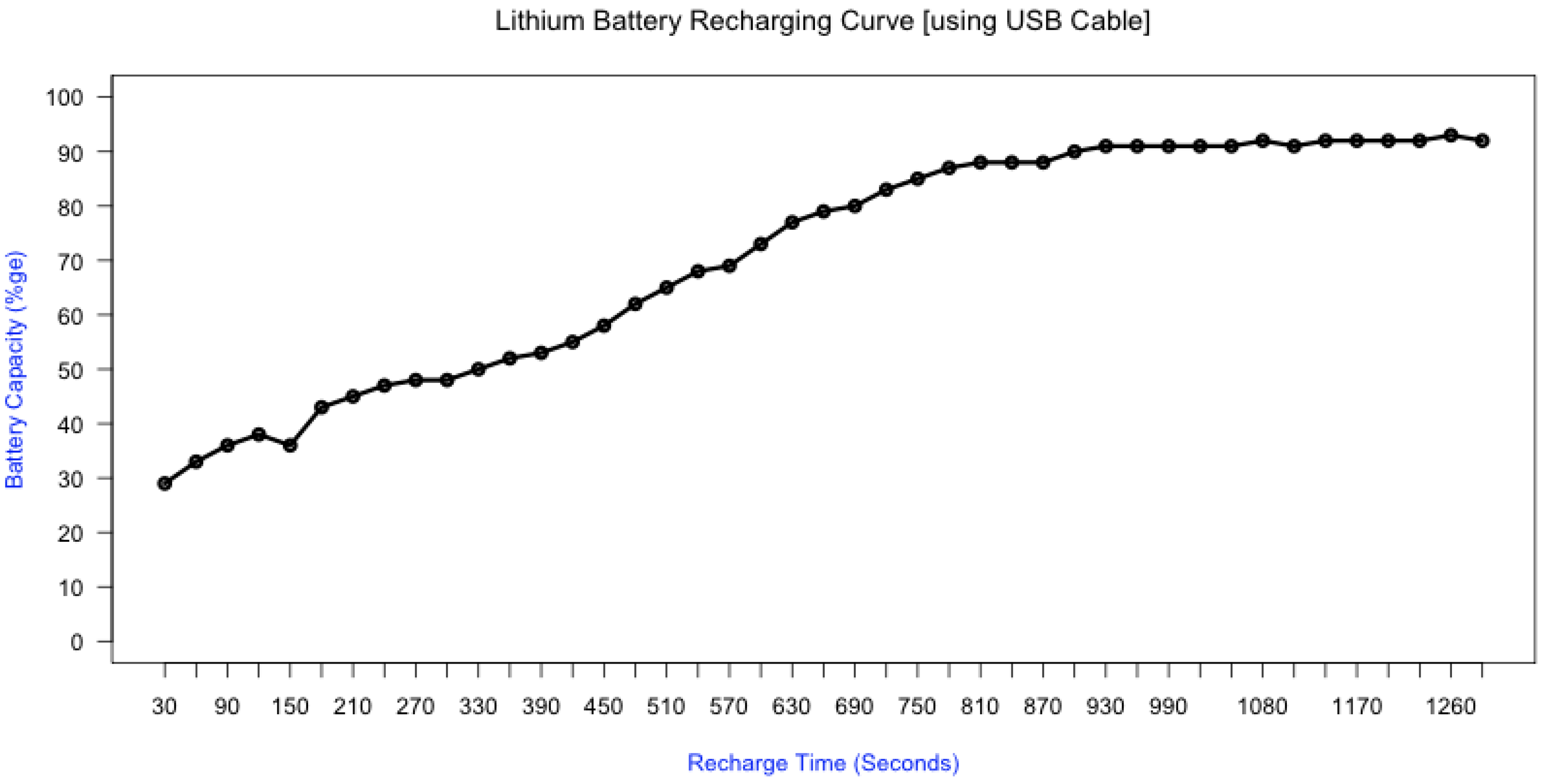

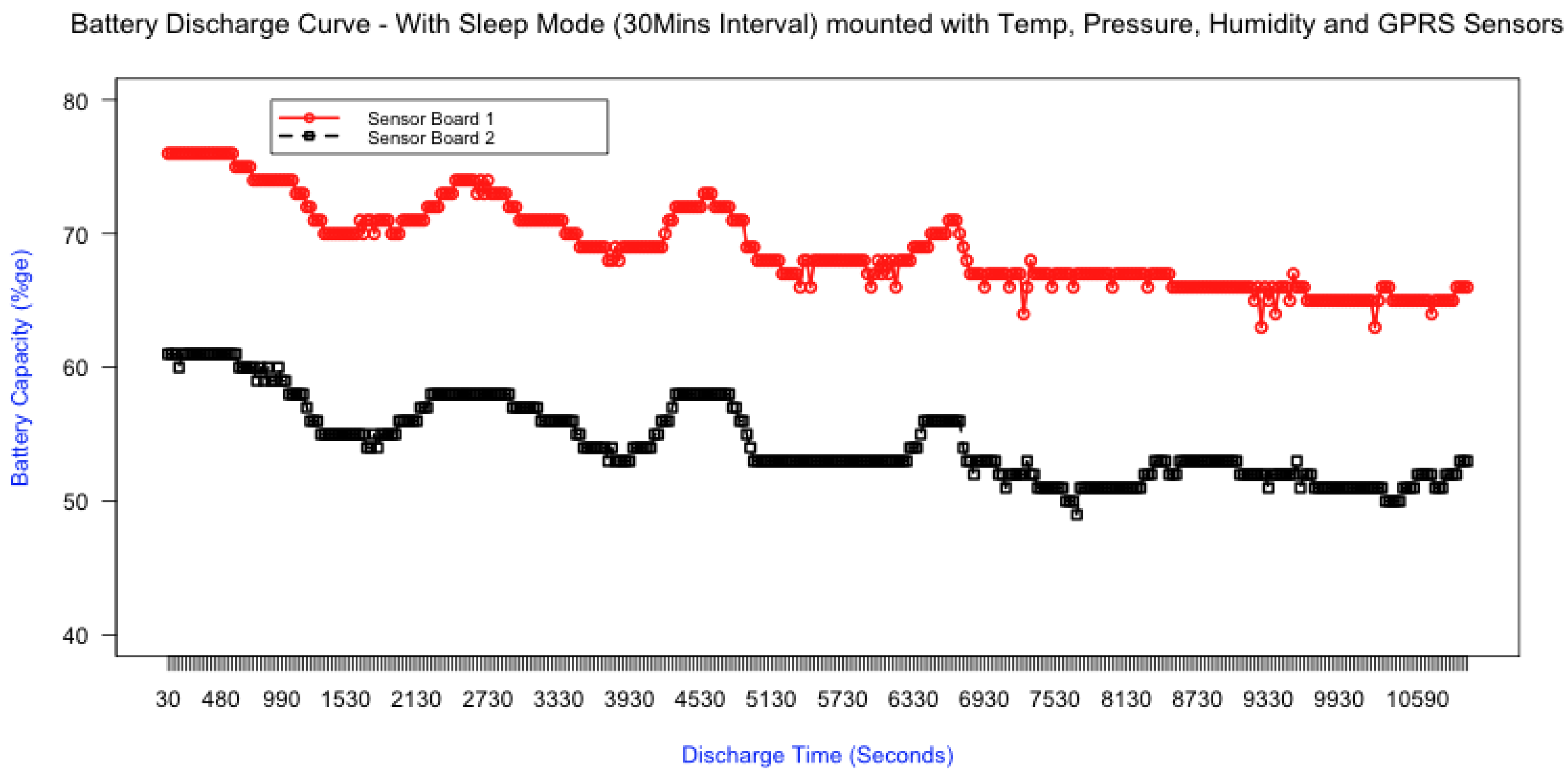

- The sensors boards’ battery discharge and recharge curves; this would enable one to know the frequency with which the deployed sensors’ batteries needed to be recharged/replaced.
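As a rough complement to the discharge curves in item (3), the interval between recharges can be estimated from the battery capacity and the board's average current over a wake/sleep cycle. This is a minimal sketch assuming a simple two-state (awake/asleep) duty cycle; the function names, the 0.8 usable-capacity fraction and all figures in the usage example are hypothetical placeholders, not measurements from these boards.

```python
# Hypothetical duty-cycle model: the board is awake for `awake_s` seconds in every
# `period_s`-second cycle and asleep for the remainder.


def average_current_ma(awake_ma: float, sleep_ma: float,
                       awake_s: float, period_s: float) -> float:
    """Time-weighted mean current (mA) over one wake/sleep cycle."""
    if period_s <= 0 or not 0 <= awake_s <= period_s:
        raise ValueError("need period_s > 0 and 0 <= awake_s <= period_s")
    sleep_s = period_s - awake_s
    return (awake_ma * awake_s + sleep_ma * sleep_s) / period_s


def hours_until_recharge(capacity_mah: float, avg_current_ma: float,
                         usable_fraction: float = 0.8) -> float:
    """Hours of operation from one charge. `usable_fraction` is an assumed
    allowance for capacity that cannot be drawn before the board stops working."""
    return capacity_mah * usable_fraction / avg_current_ma


if __name__ == "__main__":
    # Placeholder figures only, not SenseWeather measurements.
    avg = average_current_ma(awake_ma=60.0, sleep_ma=0.1, awake_s=30.0, period_s=1800.0)
    print(f"average current: {avg:.3f} mA")
    print(f"days between recharges: {hours_until_recharge(6600.0, avg) / 24:.1f}")
```

A measured discharge curve captures what this constant-current model ignores, such as current peaks during GPRS transmissions.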

- (1) The reliability and stability of the sensors;

- (2) The convenience of operating and maintaining the sensor boards;

- (3) The sensors’ durability; and

- (4) The acceptability of the sensors in terms of their initial cost and the cost of their consumables and spare parts.

2. Background Literature

2.1. About the Kenya Meteorological Department

2.2. Weather Instruments Calibration Guidelines

2.3. Calibrating for Uncertainty of Meteorological Measurements

3. Experimental Section

3.1. Pilot, Exploratory and Confirmatory Experiments

3.1.1. Pilot Experiments

3.1.2. Exploratory Experiments

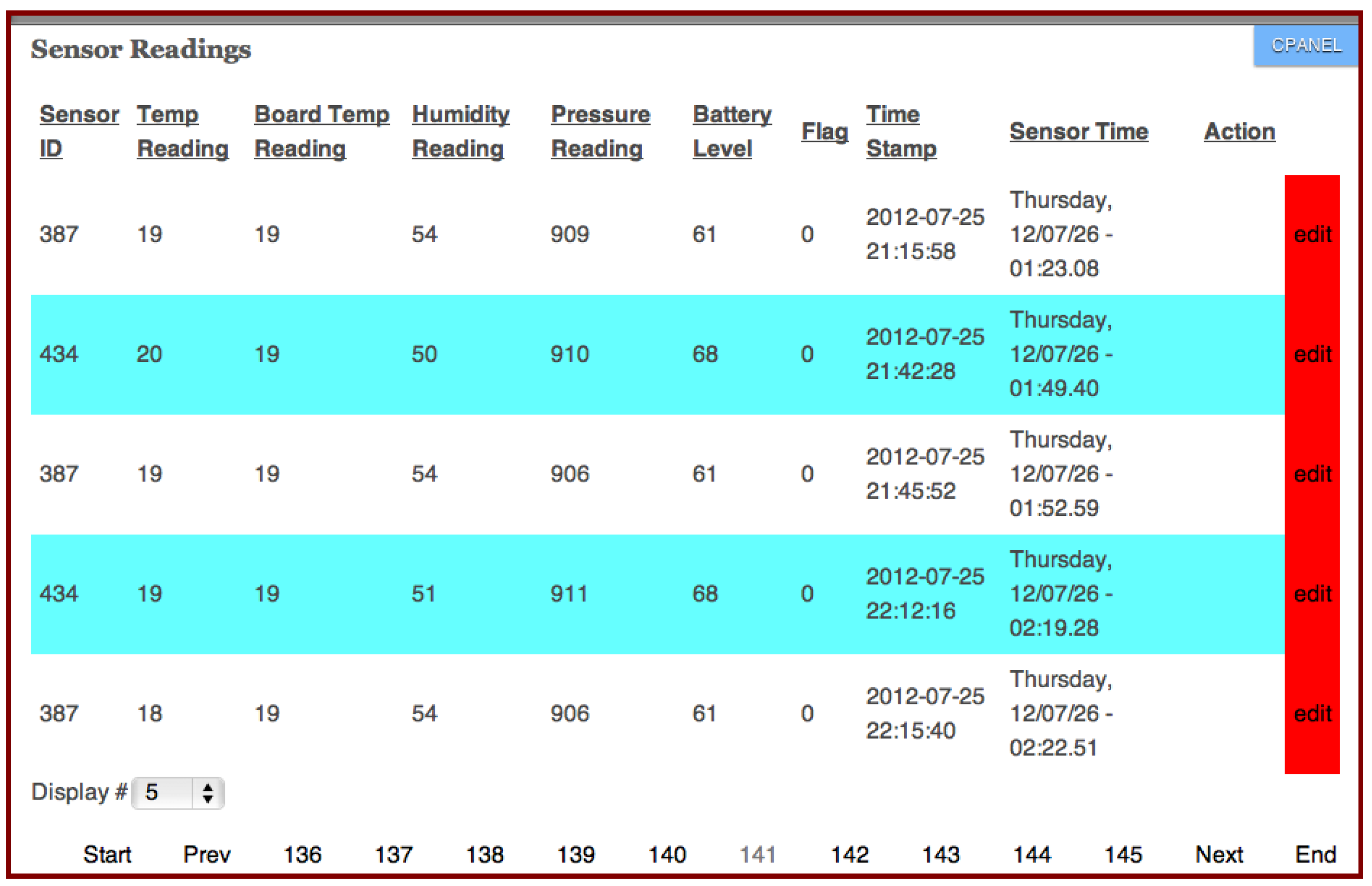

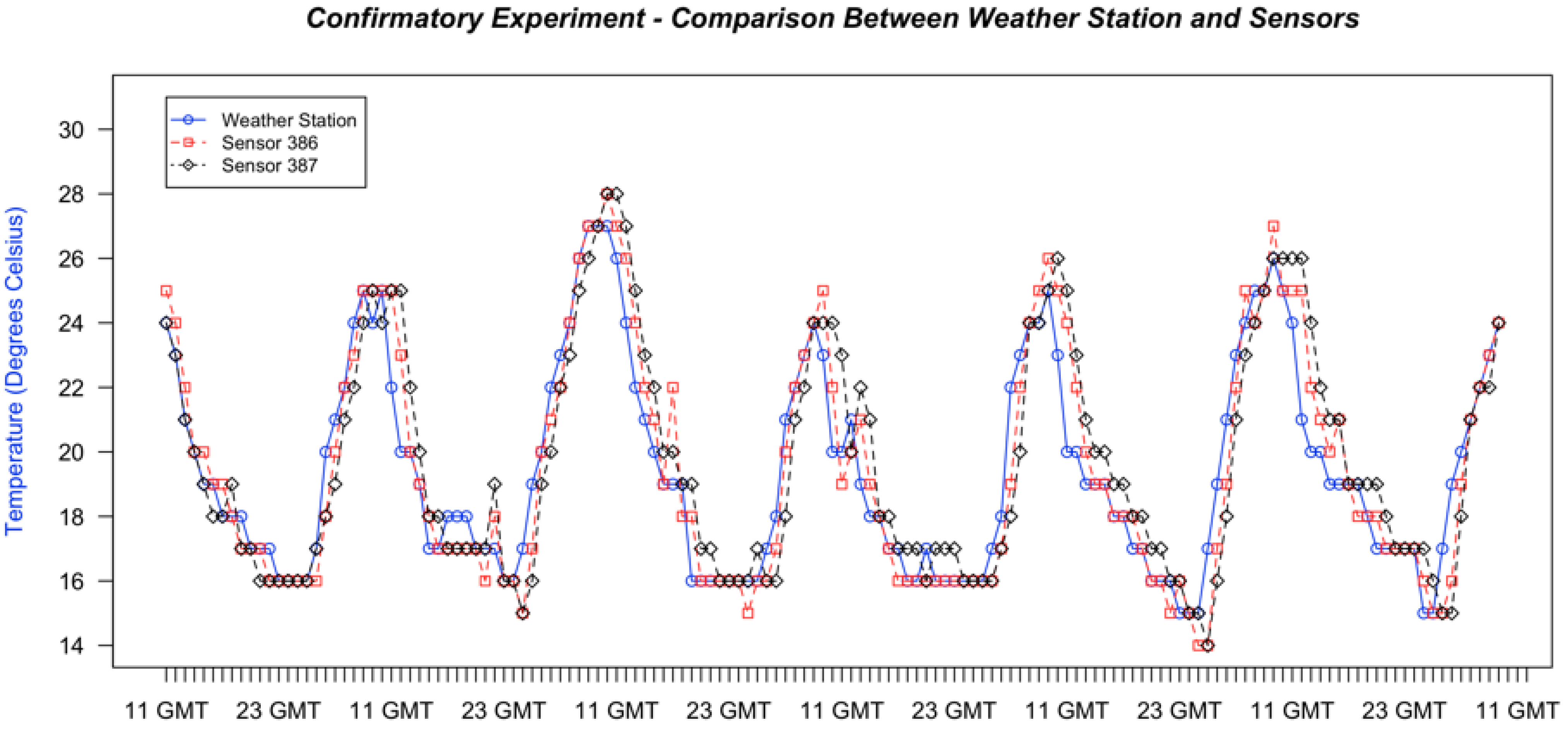

3.1.3. Confirmatory Experiments

3.1.4. Systematic Error Analysis

3.2. Sensor Boards’ Inherent Errors

3.2.1. Sensor vs. Board Temperature Differences

3.2.2. Lag Errors
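The introduction attributes lag errors to delay statements, SD-card writes, print statements and GPRS commands. One way such overheads can become a growing timing error is if a board sleeps for a fixed interval after finishing its work, so that each cycle's overhead pushes every later reading further from its nominal time. The sketch below illustrates only that mechanism; the fixed-sleep schedule, the 12 s overhead and the function names are assumptions, not a description of the SenseWeather firmware.

```python
from datetime import datetime, timedelta


def reading_times(start: datetime, sleep: timedelta,
                  overheads: list[timedelta]) -> list[datetime]:
    """Timestamps of successive readings when the board takes a reading, does its
    per-cycle work (overhead), then sleeps for a fixed `sleep` interval."""
    times = []
    t = start
    for overhead in overheads:
        times.append(t)            # reading taken as soon as the board wakes
        t = t + overhead + sleep   # per-cycle work, then a fixed-length sleep
    return times


def lag_seconds(times: list[datetime], start: datetime,
                nominal: timedelta) -> list[float]:
    """How far each reading trails its nominal slot, start + k * nominal."""
    return [(t - (start + k * nominal)).total_seconds()
            for k, t in enumerate(times)]


if __name__ == "__main__":
    start = datetime(2015, 1, 1, 0, 0)
    period = timedelta(minutes=30)
    # Hypothetical 12 s of overhead per cycle (stabilisation delay, SD write,
    # print statements and a GPRS upload combined).
    overheads = [timedelta(seconds=12)] * 5
    print(lag_seconds(reading_times(start, period, overheads), start, period))
    # -> [0.0, 12.0, 24.0, 36.0, 48.0]
```

Under this model, sleeping until an absolute wake-up time rather than for a fixed duration keeps the overhead from accumulating from one cycle to the next.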

3.2.3. Similarity Tests

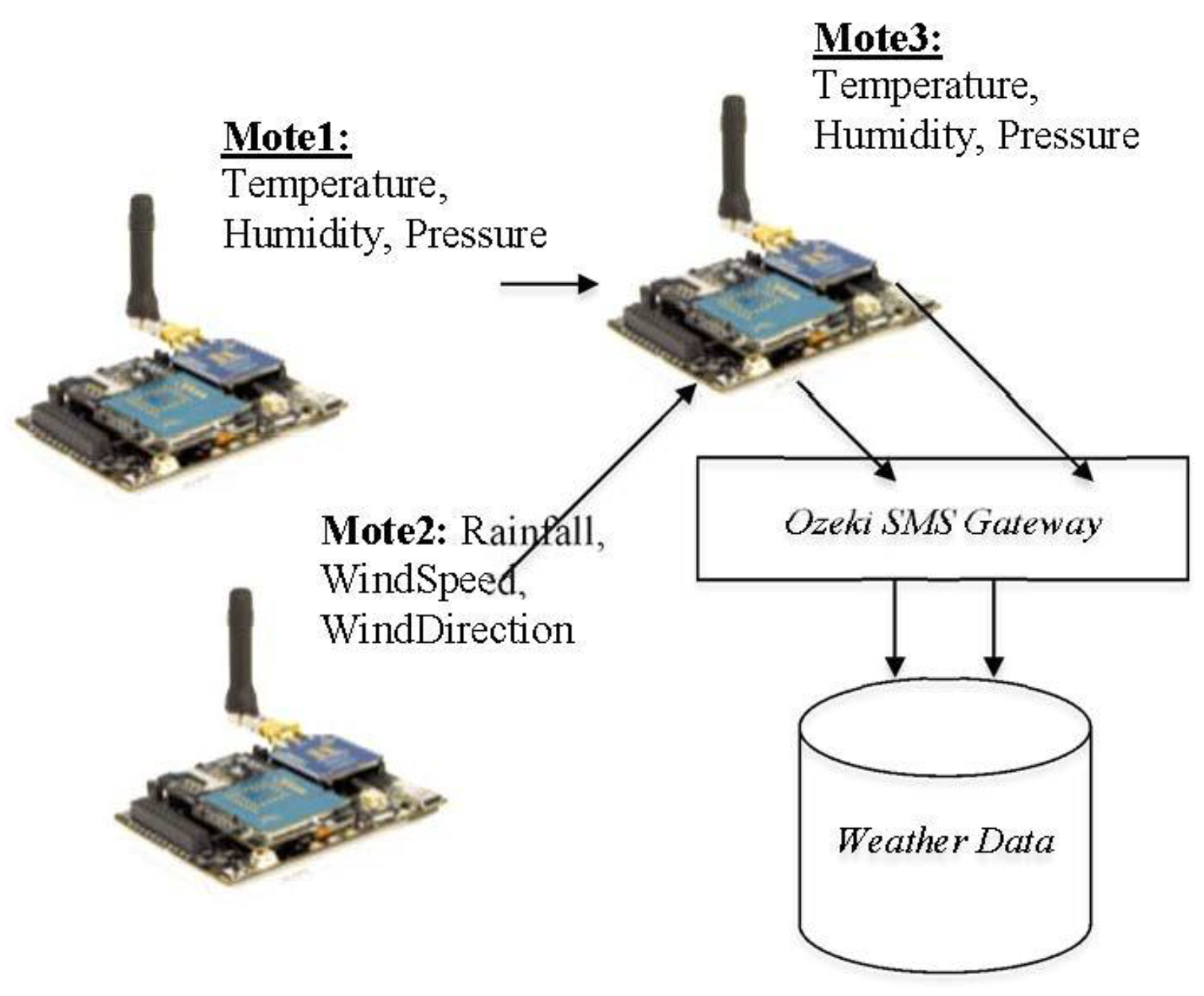

3.3. Aggregating Sensor Readings

- (I) Option 1: average all sensor readings taken within the hour. Here $S_s$ is the aggregated reading for Sensor $S$ (for example, a temperature or humidity sensor), and $S_{i1}$ and $S_{i2}$ are the readings of Sensor $S$ on Sensor Board $i$ (see Equation (1) above). For instance, with five sensor boards, the aggregated reading for the temperature sensors would be computed as shown in Equation (2).

- (II) Option 2: average the readings taken closest to the hour.

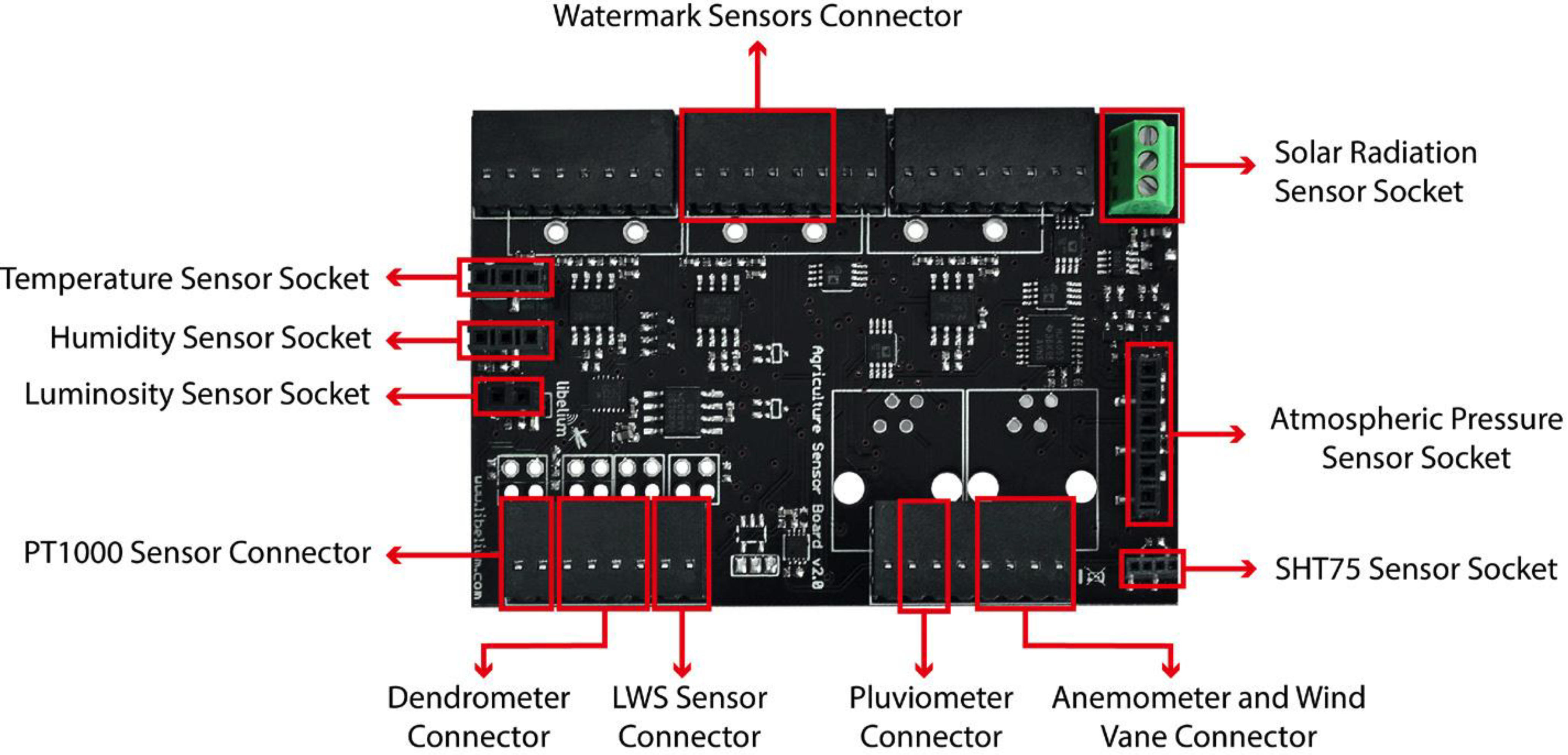
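The two aggregation options can be sketched as follows. The data layout (one list of timestamped readings per sensor board), the function names, and the choice of the hour ending at the observation time as the averaging window are assumptions for illustration; Option 2 is read here as taking, from each board, the single reading nearest the hour and averaging those across boards.

```python
from datetime import datetime, timedelta
from statistics import mean

# One (timestamp, value) pair per reading of a given sensor on one board.
Reading = tuple[datetime, float]


def option1_all_in_hour(boards: list[list[Reading]], hour: datetime) -> float:
    """Option 1: mean of every reading, from every board, taken in the hour
    ending at `hour` (the window (hour - 1 h, hour] is an assumption)."""
    window_start = hour - timedelta(hours=1)
    values = [v for board in boards for t, v in board if window_start < t <= hour]
    return mean(values)


def option2_closest_to_hour(boards: list[list[Reading]], hour: datetime) -> float:
    """Option 2: per board, the single reading nearest `hour`; then the mean across boards."""
    closest = [min(board, key=lambda r: abs((r[0] - hour).total_seconds()))[1]
               for board in boards if board]
    return mean(closest)


if __name__ == "__main__":
    h = datetime(2015, 1, 1, 9, 0)
    board_a = [(datetime(2015, 1, 1, 8, 30), 20.0), (datetime(2015, 1, 1, 9, 0), 21.0)]
    board_b = [(datetime(2015, 1, 1, 8, 31), 19.0), (datetime(2015, 1, 1, 8, 59), 22.0)]
    print(option1_all_in_hour([board_a, board_b], h))      # -> 20.5
    print(option2_closest_to_hour([board_a, board_b], h))  # -> 21.5
```

Option 1 smooths short-term fluctuations across the hour, while Option 2 tracks the instantaneous value that a manual reading at the hour would record.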

3.4. Equipment Selection

- Temperature sensor MCP9700A by Microchip;

- Humidity sensor 808H5V5 by Sencera;

- Temperature and humidity sensor SHT75 by Sensirion;

- Soil moisture sensor Watermark by Irrometer;

- Atmospheric pressure sensor MPX4115A by Freescale;

- Leaf wetness sensor (LWS);

- Solar radiation sensor SQ-110 by Apogee;

- DC2, DD and DF dendrometers by Ecomatik;

- Soil temperature sensor PT1000;

- EWeather Station (anemometer, wind vane and pluviometer).

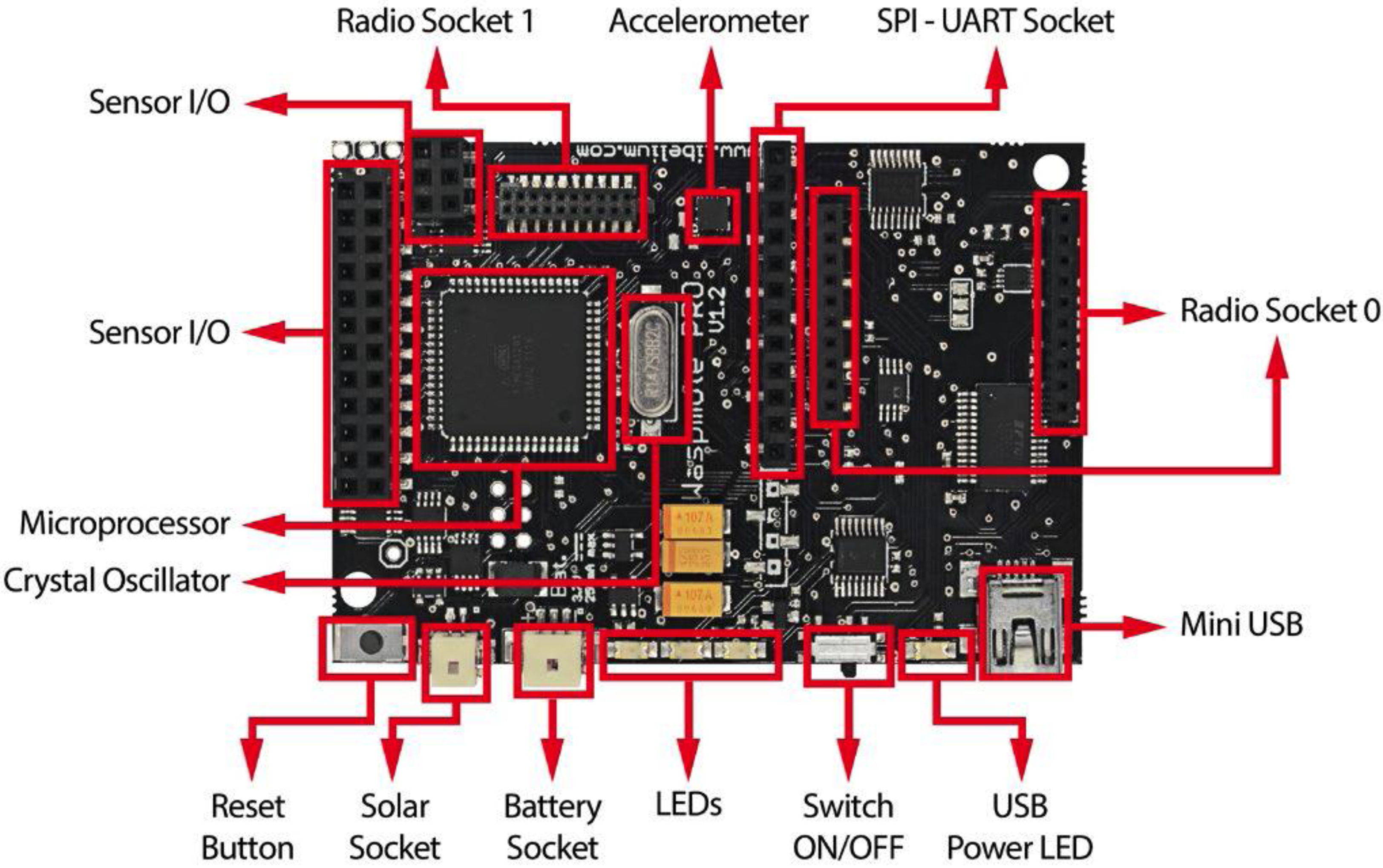

- Lithium batteries, for power supply;

- Global System for Mobile Communications (GSM)/general packet radio service (GPRS) module, for sending data via the mobile telephone network;

- GPS receiver, for obtaining GPS coordinates (latitude, longitude, height, speed, direction, date/time and ephemerides); this enables support for mobile data sensing;

- SD cards, for data storage;

- USB cables, for uploading programs to the Waspmotes;

- Waspmote gateway, XBee radio and XBee antennas (2 dBi/5 dBi) for networking the sensor nodes.

| Weather Parameter | Sensor Board | Weather Instrument |
|---|---|---|
| Temperature | Temperature sensor MCP9700A by Microchip | Mercury-in-glass thermometer |
| Relative Humidity | Humidity sensor 808H5V5 by Sencera | Computed using a humidity slide rule |
| Atmospheric Pressure | Atmospheric pressure sensor MPX4115A by Freescale | Kew-type station barometer |

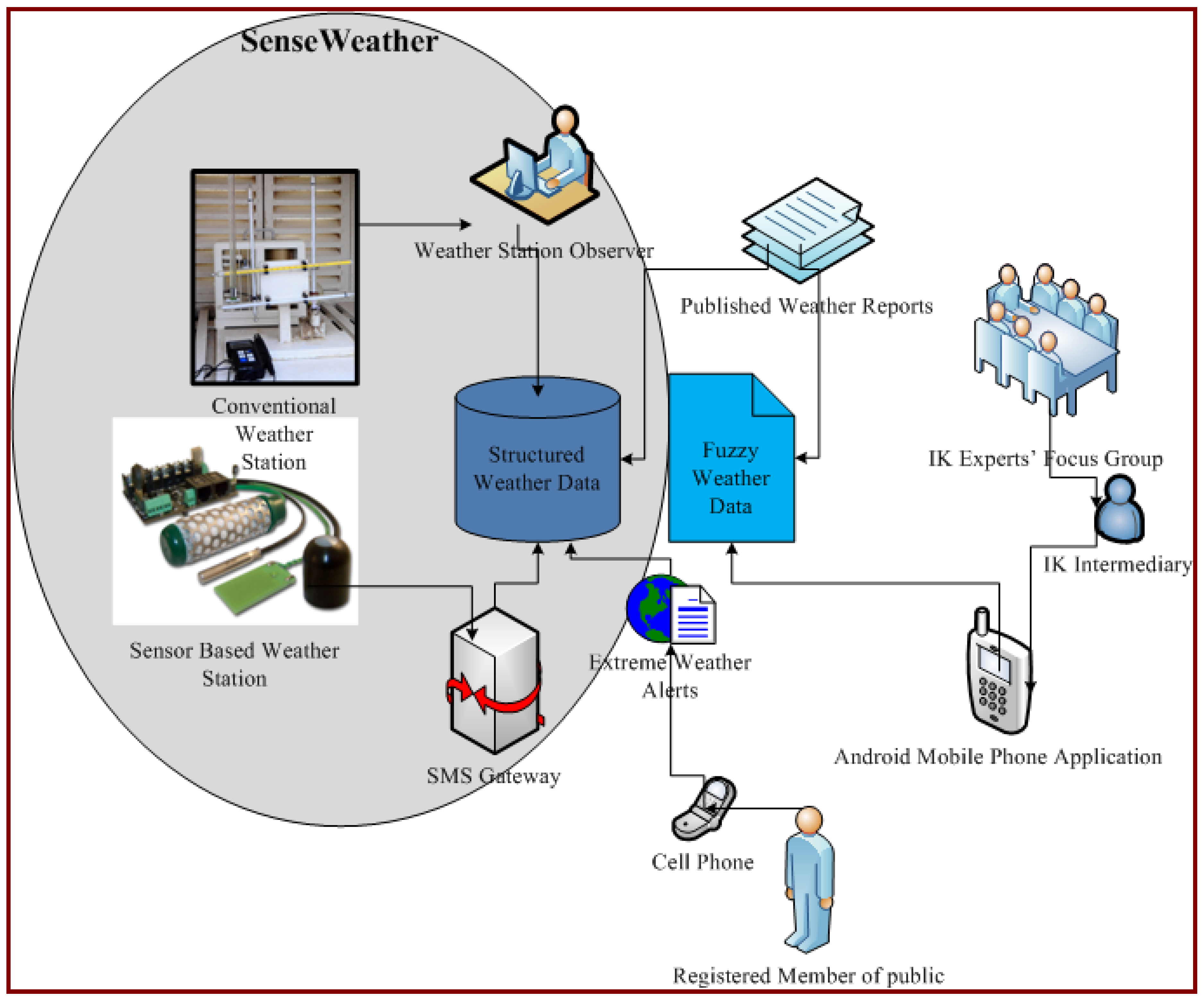
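The table lists the reference relative humidity as computed with a humidity slide rule; such slide rules typically convert dry- and wet-bulb thermometer readings into relative humidity, and the same conversion can be written out with the psychrometric equation. This sketch assumes an Assmann-type ventilated psychrometer and uses the saturation vapour pressure formula and psychrometer coefficient given for that instrument in the WMO Guide (WMO-No. 8). The coefficient and the function names are assumptions to check against the Guide before use; a naturally ventilated screen psychrometer needs a different coefficient.

```python
import math


def saturation_vapour_pressure_hpa(t_c: float) -> float:
    """Saturation vapour pressure over water (hPa), Magnus-type formula from
    WMO-No. 8; the small pressure enhancement factor is omitted."""
    return 6.112 * math.exp(17.62 * t_c / (243.12 + t_c))


def psychrometer_coefficient(wet_c: float) -> float:
    """Psychrometer coefficient A (1/K). Assumes an Assmann-type ventilated
    psychrometer; other instruments need a different value."""
    return 6.53e-4 * (1.0 + 0.000944 * wet_c)


def relative_humidity_pct(dry_c: float, wet_c: float, pressure_hpa: float) -> float:
    """Relative humidity (%) from dry- and wet-bulb temperatures (deg C)
    and station pressure (hPa)."""
    vapour_pressure = (saturation_vapour_pressure_hpa(wet_c)
                       - psychrometer_coefficient(wet_c) * pressure_hpa * (dry_c - wet_c))
    return 100.0 * vapour_pressure / saturation_vapour_pressure_hpa(dry_c)


if __name__ == "__main__":
    # Hypothetical readings: 25 deg C dry bulb, 20 deg C wet bulb, 1013.25 hPa.
    print(f"{relative_humidity_pct(25.0, 20.0, 1013.25):.1f} %")
```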

3.5. SenseWeather System Design and Implementation

- (1) Sensor boards next to weather stations;

- (2) Stand-alone sensor boards.

4. Results and Discussion

4.1. Reading Time Lags (Lag Errors)

4.2. Sensor vs. Weather Station Data Analysis

(a) Error Analysis

| Error Type | Temperature Sensor | Humidity Sensor | Pressure Sensor |
|---|---|---|---|
| ME (mean error) | 1.54 | 6.52 | −12.80 |
| MAPE (mean absolute percentage error) | 8.21% | 9.58% | 1.35% |
| RMSE (root mean square error) | 1.63 | 8.31 | 12.83 |
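The three error measures in the table can be computed as in the sketch below. The sign convention for ME (sensor reading minus weather-station reading, so a positive ME means the sensor reads high) is an assumption, since the analysis may define it the other way round; the function name is hypothetical.

```python
import math


def error_metrics(sensor: list[float], station: list[float]) -> dict[str, float]:
    """ME, MAPE (%) and RMSE of sensor readings against paired weather-station
    readings. MAPE is undefined if any station reading is zero."""
    if len(sensor) != len(station) or not sensor:
        raise ValueError("need equal-length, non-empty series")
    diffs = [s - w for s, w in zip(sensor, station)]   # assumed sign: sensor - station
    n = len(diffs)
    me = sum(diffs) / n
    mape = 100.0 * sum(abs(d / w) for d, w in zip(diffs, station)) / n
    rmse = math.sqrt(sum(d * d for d in diffs) / n)
    return {"ME": me, "MAPE": mape, "RMSE": rmse}


if __name__ == "__main__":
    # Hypothetical paired temperature readings (deg C).
    print(error_metrics([21.0, 23.0, 25.0], [20.0, 22.0, 25.0]))
```

Because MAPE is relative to the size of the readings, a sensor can combine a small MAPE with a large RMSE when its readings are large in absolute terms, which is the pattern in the pressure column.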

(b) Sensor Reading Adjustments

| Error Type | Temperature Sensor | Humidity Sensor | Pressure Sensor |
|---|---|---|---|
| ME | 0.08 | 1.04 | 0.17 |
| MAPE | 3.35% | 6.14% | 0.08% |
| RMSE | 0.74 | 4.30 | 0.87 |
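This section does not spell out how the readings were adjusted. One simple possibility, shown purely as an illustration, is to estimate the mean error over a calibration period and subtract it from later readings; on the calibration data itself this drives ME to zero without changing the spread of the errors. The function names and data are hypothetical. By contrast, the statement used in Section 4.3 scales readings by a fixed percentage rather than shifting them by a constant.

```python
from statistics import mean


def estimate_bias(sensor: list[float], station: list[float]) -> float:
    """Mean error (sensor minus station) over a calibration period."""
    if len(sensor) != len(station) or not sensor:
        raise ValueError("need equal-length, non-empty series")
    return mean(s - w for s, w in zip(sensor, station))


def adjust(readings: list[float], bias: float) -> list[float]:
    """Remove a constant additive bias from raw sensor readings."""
    return [r - bias for r in readings]


if __name__ == "__main__":
    # Hypothetical calibration-period data (deg C), not values from this study.
    calib_sensor = [21.5, 23.4, 25.6, 22.1]
    calib_station = [20.0, 22.0, 24.0, 20.5]
    bias = estimate_bias(calib_sensor, calib_station)
    print(f"estimated bias: {bias:.3f}")
    print(adjust([24.0, 26.2], bias))
```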

(c) Similarity Tests

4.3. Confirmatory Experiments

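Temperature readings are adjusted with the statement below; the factor 0.0821 equals the temperature sensor's MAPE (8.21%) reported in Section 4.2.

```
value_temp = value_temp + (value_temp * 0.0821)
```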

(a) Error Analysis

| Error Type | Option | Temperature | Humidity | Pressure |
|---|---|---|---|---|
| MAPE (%) | Option 1 | 8.55 | 12.54 | 1.47 |
| MAPE (%) | Option 2 | 8.53 | 11.90 | 1.47 |
| RMSE | Option 1 | 1.96 | 10.94 | 12.06 |
| RMSE | Option 2 | 1.89 | 10.56 | 12.10 |

(b) Correlation Coefficients

| Option | Temperature | Humidity | Pressure |
|---|---|---|---|
| Option 1 | 0.924 | 0.920 | 0.723 |
| Option 2 | 0.940 | 0.936 | 0.657 |
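The section does not name the correlation coefficient; assuming it is Pearson's r between the aggregated sensor readings and the matching weather-station readings, a minimal implementation is sketched below (the function name and sample data are hypothetical). On Python 3.10 and later, `statistics.correlation` computes the same coefficient.

```python
import math


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation between paired series x and y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length series with at least two points")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Hypothetical hourly aggregates (sensor) against station readings.
    sensor = [18.2, 20.1, 23.4, 25.0, 24.1]
    station = [17.0, 19.5, 22.0, 24.2, 23.5]
    print(f"r = {pearson_r(sensor, station):.3f}")
```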

4.4. Calibrating the Sensors

4.5. Battery Tests

4.6. Sensor Board Enclosure

5. Conclusions and Further Work

5.1. Inherent Sensor Board Facts

5.2. Appropriate Material for Building Sensor Enclosures

5.3. Future Work

Acknowledgments

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).


Masinde, M.; Bagula, A. A Calibration Report for Wireless Sensor-Based Weatherboards. J. Sens. Actuator Netw. 2015, 4, 30-49. https://doi.org/10.3390/jsan4010030
