Abstract

Artificial intelligence is a branch of computer science that attempts to understand the essence of intelligence and to produce intelligent machines capable of responding in a manner similar to human intelligence. Research in this area includes robotics, language recognition, image identification, natural language processing, and expert systems. In recent years, the availability of large datasets, the development of effective algorithms, and access to powerful computers have led to unprecedented success in artificial intelligence. This powerful tool has been used in numerous scientific and engineering fields, including mineral identification. This paper summarizes the artificial intelligence methods and techniques that have been applied to intelligent mineral identification in the literature, classifying them as artificial neural networks, machine learning, and deep learning. On this basis, a visualization analysis of the literature on artificial-intelligence-based mineral identification is conducted from three angles: the development path of the field, research hot spots, and keyword detection. Finally, based on trend analysis and keyword analysis, we propose possible future research directions for intelligent mineral identification.

1. Introduction

Recent years have seen artificial intelligence gain significant attention and provide new and effective solutions to problems in many areas, such as image recognition, natural language processing, self-driving cars, and malware identification. The geosciences have also devoted a great deal of effort to combining traditional problems with artificial intelligence, for example by using artificial intelligence methods to identify rocks and minerals. Traditional methods of mineral identification take a considerable amount of time and energy and rely on expensive, specialized instruments to obtain the data needed to ensure accurate identification. Several early experiments showed that artificial intelligence has the potential to contribute to intelligent mineral identification. Nevertheless, adopting artificial intelligence methods for mineral identification proved difficult, and progress in that direction was limited. In recent years, breakthroughs in artificial intelligence, including remarkable advances in deep learning methods and the development of more and easier-to-use toolkits, have rekindled interest among geoscientists, and numerous exploratory studies on mineral identification have been undertaken. Mineral identification is of great importance in mineral selection, exploration, and separation, and in the protection of artifacts in archaeology. Granite [1,2] is commonly used for monuments and, in certain conservation activities such as laser cleaning, identifying mineral deposits on the granite surface is of great importance. By adapting the treatment to the different granite-forming minerals, processing can be improved and damage prevented.
Based on this development trend, this paper summarizes the intelligent mineral identification methods using artificial intelligence and provides a new division of the intelligent mineral identification methods; then, it analyzes its development trend with visualization methods, expecting that mineral researchers can quickly determine the available discriminatory routes and methods and find the most effective way to solve the problems of different scenarios. Furthermore, it is expected that researchers in the field of artificial intelligence will be able to comprehend the scenarios in which existing methods are applied and identify problems and challenges that may arise during the development of technology in this area.

The most crucial part of intelligent mineral identification is the determination of mineral species, and there are currently two types of methods: (1) Expert systems for mineral identification. Expert systems are computer programs that simulate the behavior of human experts to solve practical problems related to a specific domain of knowledge [3]. A mineral identification expert system is essentially a computer system that contains expertise in mineral identification, and its main goal is to provide that expertise to the user. A well-established expert system alleviates the shortage of experts and reduces costs, but it cannot identify minerals that are not covered by the expert system. Rule-driven mineral identification expert systems are classified into five categories in [4]. This approach is beyond the scope of this paper. (2) Identification methods based on artificial intelligence models. These methods are the focus of this paper. Model-based identification uses a large amount of mineral data to train a model for identifying minerals. During identification, the data are input into the model, and the model outputs the mineral category corresponding to the data. Because the model “learns” how to identify minerals directly from the mineral data, it can identify mineral data that are not already in the system, which effectively improves identification accuracy. The development of this technique has greatly extended the reach of mineral identification.
In recent years, most of the scholars performing intelligent mineral identification favor the model identification method; therefore, many scholars have used this method to carry out exploratory work in mineral-related geological studies, such as mineral processing [5,6], mineral prediction [4], mineral exploration mapping [7,8], chemical exploration anomaly mapping, geological mapping, core-drilling mapping [9,10,11,12], and mineral phase segmentation of X-ray microcomputer tomography data [13]. The use of artificial intelligence for intelligent mineral identification has received increasing attention and interest from researchers, and significant progress has been made.

The birth and development of intelligent mineral identification methods have greatly facilitated and simplified the process of mineral identification by learning the characteristic patterns of mineral samples. The most traditional method of mineral identification is manual identification based on the shape and physical properties of the mineral. This method is simple and inexpensive, but it is time-consuming, its accuracy is low, and the identifier requires a high level of expertise. Mineral identification based on data such as X-ray diffraction, electron microprobe, and Raman spectroscopy provides better accuracy, but it also requires advanced experimental instrumentation and relevant identification knowledge. Intelligent mineral identification methods can handle large volumes of the data types mentioned above while achieving high accuracy. Besides greatly reducing the labor consumed in the identification process, they also make it possible to identify simpler data types with high accuracy. Typically, photo-type data of ores can be accurately identified, which greatly reduces the reliance on specialized instruments for data acquisition.

Some typical methods of mineral identification using photo-type data are briefly described in this paragraph. Ref. [14] selected rock thin sections and segmented the samples using Goodchild and Fueten’s edge detection algorithm. A standard three-layer feedforward neural network with backpropagation error correction was used, and a genetic algorithm was used to find a near-optimal solution. Ref. [15] captured and selected images, which were median-filtered for noise reduction and then histogram-equalized. Experiments were initially conducted using the RGB values of pixels; in a second series of experiments, the RGB images were converted to the hue, saturation, and value (HSV) space, which is closer to the human conceptual understanding of color. A feedforward MLPNN with a backpropagation training algorithm was used. The activation function f is a tangent sigmoid in the hidden layer and a logarithmic sigmoid in the output layer, and the neural network was implemented using MATLAB’s neural network toolbox. Ref. [16] acquired images and marked a random set of points on the analyzed images. For each point, its position (XY coordinates) was recorded, as well as the classification determined by the researcher. Based on pattern recognition methods (NN, KNN) and an artificial neural network algorithm (multilayer perceptron, MLP), a multidimensional feature space was defined to achieve an automatic classification of the structures.
Ref. [17] collected sample image sets; used photo-editing software such as Photoshop for uniform parameter adjustment, image segmentation, and annotation of rock images; and then applied data augmentation methods such as mirror flipping and random cropping to the images. Based on the deep learning framework TensorFlow, a targeted U-Net convolutional neural network model was designed to effectively and automatically extract the deep feature information of ore minerals and realize the intelligent recognition and classification of ore minerals under the microscope. Ref. [18] acquired a large amount of data with diverse features and expanded the original dataset using both image flipping and image scale transformation. ResNet-18 was selected as the convolutional neural network and SGD was used as the optimizer to implement a deep-learning-based intelligent mineral recognition method. Ref. [19] selected the more important and common rock and mineral images, extracted internal square slices from the raw rock images, and expanded the dataset with operations such as image flipping and rotation. The ResNet-50 model was used as the base model and, on this basis, a Python- and HTML5-based rock and mineral intelligent recognition tool was developed. This recognition tool uses a cloud+end service model, with a front-end service in the user’s browser and a back-end service on the cloud server.
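The preprocessing and dataset-expansion steps mentioned above (conversion from RGB to HSV, mirror flipping, rotation) can be illustrated with a minimal Python sketch. It is not the code of any cited study: the image representation (a list of rows of RGB tuples) and the function names `mirror_flip`, `rotate_90`, `to_hsv`, and `augment` are assumptions made purely for illustration.

```python
import colorsys

def mirror_flip(image):
    """Flip an image (a list of pixel rows) left to right."""
    return [list(reversed(row)) for row in image]

def rotate_90(image):
    """Rotate an image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]

def to_hsv(image):
    """Convert 8-bit RGB pixels to HSV, each component scaled to [0, 1]."""
    return [
        [colorsys.rgb_to_hsv(r / 255, g / 255, b / 255) for (r, g, b) in row]
        for row in image
    ]

def augment(image):
    """Return the original image plus a mirrored and a rotated copy."""
    return [image, mirror_flip(image), rotate_90(image)]

# Hypothetical 2x2 image: red, green / blue, white.
sample = [[(255, 0, 0), (0, 255, 0)],
          [(0, 0, 255), (255, 255, 255)]]
expanded = augment(sample)
hsv_sample = to_hsv(sample)
```

In practice these operations are normally performed with an image library on array data; the nested lists here only keep the sketch self-contained.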

This paper attempts to introduce the research advances in this field, analyze the research methods and basic paths of identification of minerals based on artificial intelligence, show the existing specific research works, summarize these works, and provide an outlook on the research in this field in an attempt to provide a reference for scholars to carry out relevant research.

Our contributions are shown below.

- In this paper, artificial-intelligence-based mineral identification models are classified into three categories. (1) Artificial neural network. Mineral identification models based on artificial neural networks are accurate and have a potential advantage over other methods; for example, when Raman spectroscopy datasets are used for mineral identification, there is no need to remove fluorescence. However, artificial neural networks require considerable expertise and experience to avoid overtraining and undertraining. (2) Machine learning. In this paper, machine learning is divided into statistical-based machine learning and rule-based machine learning. In both cases, the model acquires the ability to identify minerals during training. In rule-based machine learning, certain rules are adopted during training to improve efficiency by influencing how the model is trained. Statistical-based machine learning, on the other hand, requires little mineral expertise, relies mainly on the quality of the dataset, and requires large amounts of data for training. (3) Deep learning. Deep learning is an extension of artificial neural networks whose emergence broke a deadlock in artificial intelligence. Deep learning models have more hidden layers, which gives them greater learning power and better performance. However, the accuracy of deep learning is highly dependent on data: the larger the amount of data, the higher the accuracy. In addition, the nonlinear function mapping capability of deep learning models reduces the computation time for extracting features from images and improves accuracy.

- Visualization analysis is performed to explore the panorama of developments in the field based on literature related to intelligent mineral identification. Specifically, we first analyzed the development paths of the field to obtain information on the evolution of academic hot spots and themes over time. Then, recent literature was analyzed to explore what topics are of concern to recent scholars. Finally, based on the taxonomy established above, we perform keyword detection analysis on the literature related to each of the three intelligent identification methods and summarize the focus and development trend of the research of different identification methods. The visualization results show that a wider range of application scenarios with more accurate identification results become the main goal pursued by scholars.

The rest of the paper is organized as follows. In Section 2, some works related to artificial intelligence for mineral identification are given. In Section 3, the basic process of intelligent mineral identification and the main models that are applied are presented. In Section 4, three main models for intelligent mineral identification and other selected models are presented. In Section 5, we collect field-related literature and perform visualization analysis. We conclude the paper in Section 6.

2. Related Work

In this section, existing surveys related to intelligent mineral identification are presented.

To date, there have been several reviews of work on mineral identification for specific dataset types. For example, Ref. [20] summarized automatic analysis systems for minerals based on scanning electron microscopy. Ref. [21] found that, for soot identification, ultrasonic and electromagnetic wave detection techniques were the least limited and infrared imaging identification techniques were the most limited; the working environment imposed the most obvious limitations on infrared imaging identification, and coal rock characteristics imposed the most obvious limitations on reflection spectrum identification and process signal monitoring identification. However, these works are limited to the form of scanning electron microscopy data and do not detail how the dataset is preprocessed by the automated mineral analysis system or what type of classifier is used.

Other reviews discuss which identification methods and techniques are most appropriate for different forms of mineral datasets. Ref. [22] discusses the application of machine learning to intelligent mineral identification, but it neither subdivides the methods according to their differences and commonalities nor addresses the application of other artificial intelligence approaches to mineral identification. Ref. [6] describes mineral processing challenges that may be addressed by artificial intelligence, including increased productivity, minimal ecological impact, and identification in mineral flotation.

In this paper, we propose to classify artificial intelligence methods and techniques for mineral identification into three categories: artificial neural networks, machine learning, and deep learning, where machine learning is further divided into statistical-based and rule-based machine learning. The datasets covered include Raman spectroscopy, laser-induced breakdown spectroscopy, X-ray fluorescence logging, spectral images, and grayscale images, among others.

3. Preliminaries

3.1. Basic Process of Intelligent Mineral Identification

Despite the variety of research methods used to carry out intelligent identification of minerals, the basic process is broadly consistent and can be summarized in four stages: (1) Acquisition of mineral datasets. Instruments are used to acquire descriptive data of minerals, including images, graphics, and physical data. Multiangle photographic images of minerals, microscopic images of thin sections, and spectral images of minerals are all important datasets for intelligent identification. (2) Preprocessing of mineral datasets. The dataset is preprocessed, for example by partitioning SEM images, dimensionality reduction, and image noise reduction, to improve the accuracy of the classifier in the next step. (3) Training the mineral identification model. Artificial intelligence is used to train the discriminative model for mineral identification. Although artificial intelligence has developed only recently, it has been widely used in the exploration of intelligent mineral identification, and important milestones have been achieved (see Section 4). (4) Validating the accuracy of the mineral identification model. The model is used to discriminate the data to be identified and obtain the category of minerals. Different discriminative methods are used for different mineral datasets; current discriminative models are diverse, and their accuracy varies greatly.
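The four stages can be sketched end to end in a few lines of Python. This is only a toy illustration under loud assumptions: the data are synthetic two-feature measurements for two hypothetical classes labeled "quartz" and "feldspar", the preprocessing is min-max scaling, and the model is a nearest-centroid classifier chosen for brevity rather than any model used in the surveyed studies.

```python
import random

def acquire(n_per_class, rng):
    """Stage 1: generate synthetic two-feature samples for two hypothetical minerals."""
    centers = {"quartz": (0.2, 0.8), "feldspar": (0.8, 0.2)}
    data = []
    for label, (cx, cy) in centers.items():
        for _ in range(n_per_class):
            data.append(([rng.gauss(cx, 0.05), rng.gauss(cy, 0.05)], label))
    rng.shuffle(data)
    return data

def fit_scaler(features):
    """Stage 2a: learn per-feature (min, max) from the training features only."""
    return [(min(col), max(col)) for col in zip(*features)]

def scale(x, ranges):
    """Stage 2b: min-max scale one sample with the learned ranges."""
    return [(v - lo) / (hi - lo) for v, (lo, hi) in zip(x, ranges)]

def train(data):
    """Stage 3: nearest-centroid model, i.e. the mean feature vector of each class."""
    sums, counts = {}, {}
    for x, label in data:
        acc = sums.setdefault(label, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}

def predict(model, x):
    """Assign the class whose centroid is closest in squared Euclidean distance."""
    return min(model, key=lambda lab: sum((a - b) ** 2 for a, b in zip(x, model[lab])))

def run_pipeline(seed=0):
    """Stage 4: hold out 30 of 100 samples and report accuracy on them."""
    rng = random.Random(seed)
    data = acquire(50, rng)
    train_set, test_set = data[:70], data[70:]
    ranges = fit_scaler([x for x, _ in train_set])
    model = train([(scale(x, ranges), y) for x, y in train_set])
    correct = sum(predict(model, scale(x, ranges)) == y for x, y in test_set)
    return correct / len(test_set)

held_out_accuracy = run_pipeline()
```

The scaler is fitted on the training split only, so that no information from the held-out samples leaks into the model before validation.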

3.2. Network Architecture Overview

In this paper, we mainly classify the models used for intelligent mineral identification into three categories. Artificial Neural Network (ANN) is a simplified model based on the abstraction of human brain neural network. Machine Learning (ML) can find patterns in the data, which in turn enables decision-making for uncertain scenarios. Deep Learning (DL) can learn features directly from the data without human intervention and interpret the data by simulating human brain mechanisms.

There are some differences between the three models in accomplishing the task of mineral identification. ANN has good self-learning and associative storage functions and has the ability to find optimized solutions at high speed. However, it requires large amounts of mineral image data and can only perform accurately on trained tasks; so, it cannot generalize well. Meanwhile, the neural network is opaque, and it is difficult to determine the logic behind its decisions. The main use of ML in mineral identification is supervised learning, where statistical learning, neural networks, and linear regression are all important methods applied in the field of mineral identification. However, at the same time, ML is still in the early exploration stage in the field of mineral identification, and there are relatively few mature methods with high accuracy and wide acceptance. DL can analyze a large amount of mineral data, but the generated models are very difficult to interpret. Moreover, when the amount of data for mineral identification is limited, deep learning cannot provide unbiased estimation of data patterns; thus, good accuracy can only be guaranteed when the amount of data is large enough.

3.2.1. Artificial Neural Network (ANN)

An artificial neural network (ANN) is a set of multilayer perceptrons/neurons consisting of three layers: an input layer, a hidden layer, and an output layer—essentially, each layer tries to learn certain weights.

Artificial neural networks are capable of learning any nonlinear function. Therefore, these networks are commonly referred to as Universal Function Approximators (UFAs). Artificial neural networks have the ability to learn the weights that map any input to an output. One of the main reasons for universal approximation is the activation function. The activation function introduces nonlinear properties into the network. This helps the network to learn any complex relationship between inputs and outputs.
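The role of the activation function can be seen in a minimal, hand-built example. The sketch below is an illustration with weights chosen by hand (nothing is trained, and the network is not taken from any cited work): with a ReLU activation, two hidden units are enough to reproduce XOR, a function that no purely linear model can represent; with the activation removed, the same network collapses to a linear function of its inputs.

```python
def relu(z):
    """Rectified linear unit: passes positive values, zeroes out negatives."""
    return max(0.0, z)

def identity(z):
    """No activation: the layer stays linear."""
    return z

def tiny_network(x1, x2, activation):
    """Two hidden units with hand-picked weights, one linear output unit."""
    h1 = activation(x1 + x2)          # hidden unit 1
    h2 = activation(x1 + x2 - 1.0)    # hidden unit 2
    return h1 - 2.0 * h2              # output layer

inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
with_relu = [tiny_network(a, b, relu) for a, b in inputs]
without_activation = [tiny_network(a, b, identity) for a, b in inputs]
```

With ReLU the outputs follow the XOR truth table; without it the output equals `2 - (x1 + x2)`, which is linear in the inputs.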

3.2.2. Convolutional Neural Network (CNN)

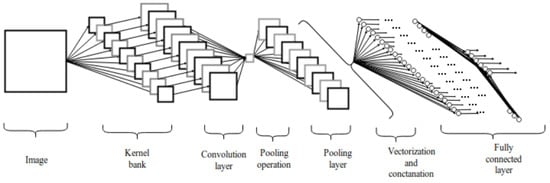

CNN is a deep feedforward neural network and one of the representative algorithms of deep learning. Research on convolutional neural networks started in the last century; the first convolutional neural networks were time-delay networks and LeNet-5. Later, with the continuous improvement of technology and the proposal of deep learning theory, CNNs developed rapidly and were gradually applied to natural language processing, computer vision, and other fields. The main principle of CNN is to extract the spatial features of images with convolutional kernels, adjust the kernel parameters with the backpropagation algorithm, and finally obtain a model that can extract effective information from images. The structure of a convolutional neural network can be divided into an input layer, convolutional layers, pooling layers, fully connected layers, and an output layer. The structure of a CNN is shown in Figure 1.

Figure 1. CNN network structure [23].

Input Layer: As convolutional neural networks use gradient descent algorithm for learning, their input data need to be normalized, which can effectively improve the learning efficiency of convolutional neural networks.
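Two common ways of normalizing input data are min-max scaling and standardization. The sketch below is a minimal illustration on plain Python lists; the function names and the assumption of 8-bit pixel values in the range 0 to 255 are illustrative choices, not details taken from the cited works.

```python
import statistics

def min_max_normalize(pixels, low=0, high=255):
    """Map values from [low, high] to [0, 1]."""
    return [(p - low) / (high - low) for p in pixels]

def standardize(values):
    """Shift to zero mean and scale to unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        # A constant input carries no contrast; return zeros instead of dividing by zero.
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]

scaled = min_max_normalize([0, 51, 255])
z_scores = standardize([10, 20, 30])
```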

Hidden Layer: The hidden layer of convolutional neural network mainly includes the convolutional layer, pooling layer, and fully connected layer. Among them, the convolutional layer is mainly used to extract the features of the image, the pooling layer is mainly used for feature selection and information filtering, and the fully connected layer is mainly used for classification.

- Convolutional Layer: As the central layer of the convolutional neural network, the convolutional layer extracts different features of the input data by convolutional operations, and it also reduces the number of parameters to prevent overfitting caused by too many parameters. The convolutional layer can have multiple convolutional kernels, and each element of each convolutional kernel has a corresponding weight coefficient and bias. When the convolutional kernel slides to each position, it performs an operation with the input image and projects the information in its receptive field onto the feature map. The parameters of the convolutional layer mainly consist of the convolution kernel size, padding, and step size (stride). The size of the convolution kernel needs to be smaller than the size of the input image, and as the convolution kernel gets larger, the input features that can be extracted become more and more complex. Padding artificially increases the size of the feature map before it passes through the convolution kernel to counteract the size shrinkage that occurs during the computation. The step size of the convolution defines the distance between two adjacent positions of the convolution kernel during the scanning of the feature map. When its value is 1, the convolution kernel scans every element of the entire feature map; when its value is n, it skips n-1 pixels after each scan to continue scanning. The dimensionality of the convolutional layer can be calculated from the filter of size $(k_1, k_2, c)$, the input image of fixed size $(H, W, C)$, the step size $S$, and the number of zero padding $P$. The calculation formula is as follows:

  $$\left(\left\lfloor \frac{H + 2P - k_1}{S} \right\rfloor + 1,\ \left\lfloor \frac{W + 2P - k_2}{S} \right\rfloor + 1,\ c\right)$$

  The convolutional layer is usually followed by an activation layer, and the two together are often simply called the “convolutional layer”. The activation layer applies a nonlinear mapping to the output of the convolutional layer, and the activation function used is usually the ReLU function.

- Pooling Layer: Pooling layers are inserted periodically between successive convolutional layers and are mainly used for feature selection and information filtering. Feature selection reduces the number of training parameters by reducing the dimensionality of the feature vector output by the convolutional layer, while information filtering retains only useful information, which reduces the transmission of noise and also helps prevent overfitting. The two main pooling methods are Max Pooling, which picks the maximum value of the sliding window, and Average Pooling, which picks the average value of the sliding window. For an input feature map of size $(H, W, C)$, a pooling window of size $(k_1, k_2)$, and a step size $S$, the dimensionality of the pooling layer can be calculated as

  $$\left(\left\lfloor \frac{H - k_1}{S} \right\rfloor + 1,\ \left\lfloor \frac{W - k_2}{S} \right\rfloor + 1,\ C\right)$$

- Fully Connected Layer: The fully connected layer is located in the last part of the hidden layers of the convolutional neural network and only passes signals to other fully connected layers. The role of the fully connected layer is to perform a nonlinear combination of the extracted features to obtain the output for classification, i.e., the features obtained from the convolutional and pooling layers are classified by the fully connected layer. During training, the error fed back to each neuron is used to adjust the weights of the network until the final classification results are obtained.
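The way kernel size, padding, and step size determine the size of each feature map can be checked numerically. The sketch below assumes the standard relation, output = floor((input + 2 × padding − kernel) / stride) + 1, and includes a plain-Python max pooling over a 2D feature map; the function names and the example layer sizes are illustrative assumptions rather than values from a cited model.

```python
def conv_output_shape(h, w, k_h, k_w, n_filters, stride=1, padding=0):
    """Output (height, width, channels) of a convolution under the standard formula."""
    out_h = (h + 2 * padding - k_h) // stride + 1
    out_w = (w + 2 * padding - k_w) // stride + 1
    return out_h, out_w, n_filters

def pool_output_shape(h, w, c, window, stride):
    """Output shape of an unpadded pooling layer; the channel count is unchanged."""
    return (h - window) // stride + 1, (w - window) // stride + 1, c

def max_pool_2d(feature_map, window=2, stride=2):
    """Max pooling over a single-channel feature map given as a list of rows."""
    rows = range(0, len(feature_map) - window + 1, stride)
    cols = range(0, len(feature_map[0]) - window + 1, stride)
    return [
        [
            max(feature_map[r + i][c + j] for i in range(window) for j in range(window))
            for c in cols
        ]
        for r in rows
    ]

# A 5x5 kernel without padding shrinks a 32x32 input to 28x28 (a LeNet-style first layer).
first_conv = conv_output_shape(32, 32, 5, 5, n_filters=6)
# 2x2 pooling with stride 2 halves each spatial dimension.
first_pool = pool_output_shape(*first_conv, window=2, stride=2)
# A 3x3 kernel with padding 1 and stride 1 preserves the spatial size.
same_size = conv_output_shape(224, 224, 3, 3, n_filters=64, stride=1, padding=1)

pooled = max_pool_2d([[1, 3, 2, 4],
                      [5, 6, 7, 8],
                      [9, 2, 1, 0],
                      [3, 4, 5, 6]])
```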

Output Layer: The output layer uses a loss function, such as the categorical cross-entropy, to calculate the error of the prediction. Once forward propagation is completed, backpropagation updates the weights and biases to reduce the loss. For image classification problems, the output layer uses a logistic function or a normalized exponential function (softmax) to output the classification labels. For image semantic segmentation problems, the output layer can directly output the classification result for each pixel.
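The normalized exponential function and the categorical cross-entropy can be written in a few lines. The sketch below is a generic illustration with made-up scores for three hypothetical mineral classes; it is not tied to any particular framework.

```python
import math

def softmax(logits):
    """Normalized exponential: turns raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtracting the maximum avoids overflow in exp()
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def categorical_cross_entropy(probs, true_index):
    """Loss for one sample: negative log-probability assigned to the true class."""
    return -math.log(probs[true_index])

# Hypothetical raw scores for three classes.
probs = softmax([2.0, 1.0, 0.1])
loss_if_class_0 = categorical_cross_entropy(probs, 0)
loss_if_class_2 = categorical_cross_entropy(probs, 2)
```

The loss is small when the true class receives a high probability and grows as that probability shrinks, which is the error signal that backpropagation reduces.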

3.2.3. Difference between ANN and CNN

ANN has only an input layer, hidden layers, and an output layer; the number of hidden layers depends on the task. Each neuron is fully connected to the neurons of the next layer, and there are no connections within a layer or across non-adjacent layers. When the input data are images, an ANN can only process smaller images because the whole image is treated as a single input from which to learn patterns. As the image size increases, the number of parameters to be trained increases dramatically. In addition, ANNs do not possess translation invariance, which means that if the image is rotated or shifted, the network must be retrained.

In contrast, each neuron in a CNN is connected to only some of the neurons in the previous layer and perceives only a local region rather than the whole image. In addition, each neuron can be considered a filter: the same neuron uses a fixed convolution kernel to convolve the whole image, and the CNN extracts multiple features by using multiple convolution kernels. Once the CNN learns to recognize a pattern in one location, it can recognize the same pattern in any other location. In short, the learned weights can be reused when the pattern is shifted; robustness to rotation is usually obtained through data augmentation rather than from the convolution itself.
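Both points, the growth in parameters and the reuse of weights across locations, can be checked with a short sketch. The layer sizes (a 224 × 224 RGB input, 64 units or filters) and the function names are assumptions chosen for illustration; the one-dimensional sliding filter stands in for a convolution kernel.

```python
def dense_params(n_inputs, n_units):
    """Weights plus biases of a fully connected layer."""
    return n_inputs * n_units + n_units

def conv_params(k_h, k_w, in_channels, n_filters):
    """Weights plus one bias per filter; independent of the image size."""
    return (k_h * k_w * in_channels + 1) * n_filters

def correlate_1d(signal, kernel):
    """Slide one shared kernel over the signal (no padding, stride 1)."""
    n = len(signal) - len(kernel) + 1
    return [sum(signal[i + j] * kernel[j] for j in range(len(kernel))) for i in range(n)]

# Fully connected: every pixel of a 224x224 RGB image feeds each of 64 units.
fc_count = dense_params(224 * 224 * 3, 64)
# Convolutional: 64 filters of size 3x3 over 3 input channels.
conv_count = conv_params(3, 3, 3, 64)

# The same pattern at two positions, two samples apart.
pattern_left = [0, 1, 2, 1, 0, 0, 0, 0]
pattern_right = [0, 0, 0, 1, 2, 1, 0, 0]
kernel = [1, 2, 1]
response_left = correlate_1d(pattern_left, kernel)
response_right = correlate_1d(pattern_right, kernel)
```

The response to the shifted pattern is the original response moved by the same two positions, which is why the filter does not need to be relearned for each location.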

4. Mineral Identification Method Based on Artificial Intelligence

The development of artificial intelligence technology will lead to a new round of industrial and technological changes with machine learning as its core technology. Machine learning learns and recognizes complex patterns and relationships from empirical data, extracting implicit knowledge and the ability to make inferences. For decades, scientists have been using machine learning methods to try to solve the problem of intelligent identification of rocks and minerals. Early experimental results validate that machine learning holds some promise for mineral identification, but progress has been slow in adopting machine learning methods for intelligent mineral identification more broadly. This situation has been changing rapidly as breakthroughs in machine learning methods on multiple levels, including tremendous advances in deep learning methods and the emergence of more and easier-to-use toolkits, have rekindled the interest of geoscientists in machine learning, and a growing number of exploratory studies of mineral intelligence identification methods have emerged. This paper presents a summary of machine learning methods applied to the intelligent identification of minerals, with the expectation that mineral researchers can quickly identify the discriminatory paths and methods available for adoption and find the most effective ways to solve problems in different scenarios. Likewise, it is hoped that researchers in the field of machine learning can understand the scenarios in which existing methods are used and identify problems and challenges that are likely to arise from technological developments in the field.

In this paper, artificial intelligence techniques in intelligent mineral identification are divided into three main categories, namely, artificial neural networks, machine learning, and deep learning. Among them, machine learning is divided into statistical-based machine learning and rule-based machine learning, as shown in Table 1.

Table 1. Comparison of different machine learning methods for intelligent identification of minerals.

Machine learning techniques in intelligent mineral identification are mainly supervised: class labels (classification) or target values (regression) are available for the training data, and models are developed to predict the classes or values of new observations. The mainstream machine learning methods applied to intelligent mineral identification include principal component analysis (PCA), partial least squares regression (PLS), decision trees, random forests (RF), and distance metric models. Each of them is briefly described below.

4.1. Artificial Neural Network

An Artificial Neural Network (ANN) is a network composed of a large number of extensively connected simple processing units, which abstracts and simulates some basic features of the human brain or of biological neural networks. Neural network theory has provided new ideas for the study of many problems, such as machine learning, and has been successfully applied to intelligent mineral identification. By extracting features from the data and mimicking the structure and function of biological neural networks, artificial neural networks are able to outperform decision tree classifiers in identifying mineral species. Reported results show that an average accuracy of 83% can be achieved when classifying by mineral group and 73% when classifying by individual mineral. The technique is also very attractive when Raman spectroscopy is used for mineral identification, as there is no need to remove fluorescence, and its presence has been shown to actually improve classification performance. However, ANN implementation requires expertise and experience on the part of the user in order to avoid overtraining and undertraining. In addition, ANNs must be retrained for data obtained on different spectrometers (due to expected differences in noise, background/fluorescence levels, Raman peak line shape, and spectrometer resolution) and whenever new spectra are added to the database, which can be a major inconvenience.

The artificial neural network models used in the intelligent identification of minerals include the perceptron, the autoencoder, the BP neural network, the Kohonen network (also called SOM), the multilayer perceptron (MLP), and feedforward network structures. Each of them is described below and shown in Table 2.

Table 2. Comparison of different artificial neural network models for intelligent identification of minerals.

Perceptron: Ref. [7] trained a multilayer perceptron on texture features of plane-polarized and cross-polarized images to identify 23 test minerals in igneous rocks. Compared with other networks, artificial neural networks [14] are ideal for applications requiring repetitive identification of a limited number of minerals because they are less susceptible to changes such as lighting. This work reaches 90% accuracy for the identification of colored and colorless minerals, and accuracy increases when the training set contains more data. Reference [1] used a backpropagation algorithm with mean square error minimization to optimize a conventional three-layer perceptron to identify the mineral types contained in granite images. It was experimentally verified that the artificial neural network with 10 hidden neurons performed best as a recognition model for granite minerals, reaching a success rate of 90%. In [16], two artificial-intelligence-based approaches are compared. One is based on a pattern recognition method, more precisely the nearest neighbor (NN) method; the other is based on an artificial neural network algorithm (multilayer perceptron, MLP). The experimental results show that both methods have a high correct classification rate, that pattern recognition methods have great potential for the identification of coal maceral groups, and that the best results can be obtained with the most classical pattern recognition method, i.e., the nearest neighbor method. Reference [2] demonstrated the ability of a laboratory-scale hyperspectral reflectance imaging system combined with an artificial neural network to accurately identify the constituent minerals of Hessian granites in Haixi.
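The nearest neighbor idea used as a pattern recognition baseline can be sketched as follows. This is a generic k-nearest-neighbor classifier, not the implementation of [16]; the feature vectors (three color-like values per labeled point) and the class names "quartz" and "biotite" are invented for illustration.

```python
import math
from collections import Counter

def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    neighbors = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]

# Hypothetical labeled points: (feature vector, class assigned by the researcher).
TRAINING_POINTS = [
    ([0.90, 0.88, 0.85], "quartz"),
    ([0.86, 0.84, 0.80], "quartz"),
    ([0.88, 0.90, 0.86], "quartz"),
    ([0.20, 0.15, 0.10], "biotite"),
    ([0.25, 0.18, 0.12], "biotite"),
    ([0.22, 0.20, 0.15], "biotite"),
]

bright_point = knn_predict(TRAINING_POINTS, [0.85, 0.85, 0.82])
dark_point = knn_predict(TRAINING_POINTS, [0.20, 0.20, 0.10], k=1)
```

Setting `k=1` gives the plain nearest neighbor rule; larger odd values of `k` make the vote less sensitive to a single mislabeled point.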

Autoencoder: Nonlinear mixing of minerals is common in geological scenes, and many spectral unmixing methods have been developed for mineral identification and quantitative analysis. Zhou Qiu et al. (2022) [24] designed an autoencoder neural network with dropout-based noise reduction and a sparsity strategy, resulting in a sparse, fully connected neural network.

BP Neural Network: Stratigraphic element interpretation relies on optimization algorithms that use core analysis data to determine a mineral model reflecting the distribution of mineral content. Mineral identification in wells without cores is therefore very difficult, and artificial neural networks can address this problem through their ability to learn from samples. Wang Q et al. (2021) [13] trained and optimized a BP neural network for mineral identification, and a network trained on a known well successfully predicted an unknown well; however, because of the diversity of elements in the XRF measurements, elemental analysis had to be performed before training. The same work [13] used XRF to analyze the elemental content of rock chips and a BP neural network (BPNN) model to identify the rocks, and constructed an evaluation system based on accuracy, kappa, recall, and training speed; the improved model showed significant advantages in recognition performance and training speed. Reference [25] applied both the spectral angle mapping method and the BP neural network technique to different iron ores and found that each has its own advantages.

Reference [26] designed a multilayer perceptron and applied 5-fold cross-validation. After clustering mineral pixels using their RGB and HSI color-space properties and a newly proposed ART-based clustering algorithm, the artificial neural network identified the minerals in each image. This intelligent system achieved high accuracy and precision for mineral identification.

4.2. Machine Learning

Machine learning is a part of artificial intelligence, a field of computational science that analyzes and interprets patterns and structures in data to enable learning, reasoning, and decision-making without human interaction. In brief, users feed large amounts of data to computer algorithms, and the computer analyzes the data and gives data-driven recommendations and decisions based on the input data alone. If the algorithm identifies any corrections, it integrates the corrected information to improve future decisions. Intelligent mineral identification methods based on machine learning are shown in Table 3.

Table 3.

Comparison of different statistical-based machine learning algorithms for intelligent identification of minerals.

Statistical-based machine learning is introduced first. It is machine learning based on data rules and includes statistical learning and clustering.

Statistical Learning: Statistical learning discriminates the class of a mineral by calculating the probability that the measured mineral belongs to each class. Aligholi et al. (2015) [27] selected seven mineral optical properties in the CIELab color space, calculated the probability that the test sample belonged to a specific class, and used a majority voting scheme to determine the class of the mineral.
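A probability-plus-majority-vote scheme of this general kind can be sketched as below. The source does not state how the per-property probabilities were computed, so the Gaussian likelihood used here is an assumption, as are the three CIELab-style features, the class statistics, and the function names.

```python
import math
from collections import Counter


def gaussian_pdf(x, mean, std):
    """Likelihood of x under a normal distribution (assumed model)."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2.0 * math.pi))


def classify_by_vote(sample, class_stats):
    """Each property votes for its most probable class; the majority wins.

    sample:      {property: measured value}
    class_stats: {class name: {property: (mean, std)}}
    """
    votes = []
    for prop, value in sample.items():
        best = max(class_stats,
                   key=lambda c: gaussian_pdf(value, *class_stats[c][prop]))
        votes.append(best)
    return Counter(votes).most_common(1)[0][0]


# Hypothetical per-class statistics for three color properties.
class_stats = {
    "quartz":  {"L": (80.0, 5.0), "a": (0.0, 2.0), "b": (2.0, 2.0)},
    "biotite": {"L": (30.0, 8.0), "a": (5.0, 3.0), "b": (15.0, 4.0)},
}
sample = {"L": 75.0, "a": 1.0, "b": 12.0}
label = classify_by_vote(sample, class_stats)
print(label)
```

Here two of the three properties favor quartz and one favors biotite, so the vote resolves a disagreement between individual properties.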

Clustering: Unsupervised mineral detection relies on clustering, in which similar spectral features are grouped into one class of minerals. The main idea of unsupervised learning is to extract useful information from unlabeled data. Clusters are divided into hard and soft clusters according to how they are formed. In hard clustering, each data point belongs to exactly one cluster, whereas in soft clustering each data point can belong to more than one cluster, usually with a membership degree for each cluster. Prabhavathy P et al. (2019) [8] performed unsupervised training on hyperspectral image (HSI) data: principal component analysis (PCA) served as a band selection technique for dimensionality reduction, and hard clustering (K-means) and soft clustering (PFCM) algorithms then classified the data. Judged by DBI values, PFCM performed better than K-means on both the original HSI images and the reduced bands. Reference [28] used KSOM for training, with clustering centers as input, enabling the system to identify six classes of minerals and to give the number of possible occurrences in each class. Reference [29] used the K-means clustering algorithm in Matlab with a known number of clusters of interest, together with the FCC-K-means method, for unsupervised mineral identification with significantly improved performance.
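The difference between hard and soft assignment can be illustrated with a small sketch. It uses the standard fuzzy c-means membership formula as a simpler stand-in for the PFCM algorithm mentioned above; the cluster centers, the sample point, and the fuzzifier value `m=2` are illustrative assumptions.

```python
import math


def euclidean(p, q):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def hard_assign(point, centers):
    """Hard clustering: the point belongs to exactly one (the nearest) center."""
    return min(range(len(centers)), key=lambda k: euclidean(point, centers[k]))


def soft_memberships(point, centers, m=2.0):
    """Soft clustering: fuzzy c-means membership degrees that sum to 1."""
    dists = [euclidean(point, c) for c in centers]
    if any(d == 0.0 for d in dists):
        # The point coincides with one or more centers: split membership there.
        hits = [1.0 if d == 0.0 else 0.0 for d in dists]
        return [h / sum(hits) for h in hits]
    power = 2.0 / (m - 1.0)
    return [1.0 / sum((d_j / d_k) ** power for d_k in dists) for d_j in dists]


# Two hypothetical cluster centers in a reduced two-band feature space.
centers = [[0.0, 0.0], [4.0, 0.0]]
point = [1.0, 0.0]
cluster = hard_assign(point, centers)
memberships = soft_memberships(point, centers)
print(cluster, memberships)
```

A pixel lying between two spectral classes keeps a non-zero membership in both under the soft scheme, which is useful for mixed pixels in hyperspectral data.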

Rule-based machine learning is introduced next. Rule-based machine learning is statistical machine learning based on rules, which includes principal component analysis (PCA), partial least squares regression (PLS), decision trees, random forests (RF), and distance metric models, as shown in Table 4.

Table 4.

Comparison of different rule-based machine learning algorithms for intelligent identification of minerals.

PCA: Principal component analysis is often used to reduce the dimensionality of a dataset while retaining the features that contribute most to its variance. Reference [29] grouped mineral regions into different classes using K-means clustering combined with principal component analysis (PCA) of hue, saturation, value (HSV) data.

PLS: PLS overcomes the undesirable effects of multicollinearity among variables in system modeling and also accounts for the correlation between inputs and outputs. PLSDA is a commonly used chemometric technique for statistical regression of high-dimensional data. Remus et al. (2012) [49] used this method to identify obsidian provenance with over 90% accuracy in the Coso Volcanic Belt, Bodie Massif, and other obsidian-producing areas of north-central California, USA. El Haddad et al. (2019) [32] characterized 10 minerals in rocks using SEM/EDS instruments and applied multivariate curve resolution-alternating least squares (MCR-ALS) to the resulting LIBS data to train prediction models, then compared the predictions on test data with quantitative mineral analysis (QMA). The root mean square error for the primary minerals was less than 10%, in good agreement with the QMA results.

PCA and PLS extract latent variables to represent a system with a set of variables of reduced complexity. Ideally, the extracted latent variables correspond to the physical properties of the model. PLS differs from PCA in that, in addition to extracting variables, it also performs regression on the expected response of the system for a defined set of inputs. To extract values for mineral phase presence or abundance, the latent variables used by PCA and PLS must be classified and/or calibrated. When classifying high-dimensional data such as spectra, PCA and PLS have the advantage of not requiring a separate dimensionality reduction step before classification [31].

Decision Tree: A decision tree is a predictive model that represents a mapping between object attributes and object values. Data are classified from top to bottom according to how well the attributes discriminate; leaf nodes represent specific categories, and the path from the root node to a leaf node forms a specific classification rule. Decision trees are easy to understand and interpret, can handle both numerical and categorical data, and are highly robust on large or noisy datasets. Reference [33] extended the application of decision trees to the optical identification of common opaque minerals.

Random Forest: A random forest (RF) is a classifier made up of multiple decision trees. Each tree is trained with a random subset of candidate features, and the output class is the plurality vote of the trees. It can handle a large number of input variables, produce highly accurate classifiers, and reduce the classification error on class-imbalanced datasets. Xuefeng Liu et al. (2022) [50] used a random forest model to train a classifier for SEM grayscale anomaly image segmentation. Ref. [10] compared three classification methods: linear discriminant analysis (LDA), SVM, and random forest. LDA projects the dataset into a lower-dimensional space with maximum separability between classes by maximizing the ratio of interclass variance to intraclass variance. SVM classification relies only on the observations located at or beyond the margins. Random forest classification, a development of classification trees, introduces two modifications: it constructs a collection of n unpruned trees, and it uses only a subset of m descriptors. The three methods performed similarly, with random forest producing slightly higher accuracy. Ref. [34] developed a random-forest-based model to classify different stages of kerogen (organic component) and minerals (inorganic component).
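The LDA projection criterion and the random forest vote discussed in this paragraph can be written compactly. The notation below (scatter matrices $S_B$ and $S_W$, class means $\boldsymbol{\mu}_c$, overall mean $\boldsymbol{\mu}$, class sizes $n_c$, and trees $h_1, \dots, h_T$) follows the usual textbook convention and is an assumption introduced here, not notation taken from the cited works.

```latex
% Fisher criterion maximized by LDA: between-class scatter over within-class scatter
J(\mathbf{w}) = \frac{\mathbf{w}^{\top} S_B\, \mathbf{w}}{\mathbf{w}^{\top} S_W\, \mathbf{w}},
\qquad
S_B = \sum_{c} n_c\, (\boldsymbol{\mu}_c - \boldsymbol{\mu})(\boldsymbol{\mu}_c - \boldsymbol{\mu})^{\top},
\qquad
S_W = \sum_{c} \sum_{i \in c} (\mathbf{x}_i - \boldsymbol{\mu}_c)(\mathbf{x}_i - \boldsymbol{\mu}_c)^{\top}

% Plurality vote of a random forest with T trees
\hat{y}(\mathbf{x}) = \arg\max_{c} \sum_{t=1}^{T} \mathbf{1}\!\left[ h_t(\mathbf{x}) = c \right]
```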

Distance Metric Model: The distance metric model determines the similarity of test data to other minerals based on a distance function (metric) between elements in the set. The model is simple and scalable, but the classification features need to be highly distinguishable. Baklanova et al. (2014) [51] used K-means cluster analysis for mineral identification, grouping the dataset into categories by similarity, which is computed with a distance such as the Euclidean distance.
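As a minimal sketch of a distance metric model, the snippet below assigns a sample to the reference mineral with the smallest Euclidean distance. The three-component feature vectors and mineral names are hypothetical values chosen for illustration only.

```python
import math


def euclidean(p, q):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def nearest_mineral(sample, references):
    """Return (name, distance) of the reference vector closest to the sample."""
    name = min(references, key=lambda n: euclidean(sample, references[n]))
    return name, euclidean(sample, references[name])


# Hypothetical normalized feature vectors for three reference minerals.
references = {
    "quartz":    [0.9, 0.1, 0.1],
    "calcite":   [0.6, 0.6, 0.2],
    "magnetite": [0.1, 0.1, 0.1],
}
name, distance = nearest_mineral([0.85, 0.15, 0.10], references)
print(name, round(distance, 4))
```

The approach works only as well as the features separate the classes: if two reference vectors lie close together, small measurement noise can flip the result.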

4.3. Deep Learning

Deep neural networks are extensions of neural networks that contain multiple hidden layers to extract features of complex structured data layer by layer. Deep learning is not a single algorithm but an approach with different network architectures, and several architectures exist to date, such as convolutional neural networks, residual networks, and Siamese networks. The intelligent mineral identification methods based on deep learning are shown in Table 5 and Table 6.

Table 5.

Intelligent mineral identification methods based on Transfer learning and convolutional neural networks.

Table 6.

Intelligent mineral identification methods based on Inception-v3 and ResNet.

Convolutional Neural Networks (CNNs): CNNs consist of one or more convolutional layers with a fully connected layer at the top. This structure lets CNNs exploit the two-dimensional structure of the input data and gives better results in image and speech recognition. When CNN models are applied to the semantic segmentation of spectral images, repeated convolution and pooling operations reduce the feature map resolution, which loses the detailed structure and edge information of the spectral images. Tian et al. (2022) [43] addressed this problem with dilated convolution and proposed a mineral spectral classification method based on a one-dimensional dilated convolutional neural network (1D-DCNN). The method extracts spectral features with dilated convolutions, adjusts the model parameters by backpropagation combined with a stochastic gradient descent optimizer, and outputs spectral classification results to achieve end-to-end detection of mineral categories. Latif, G. et al. (2022) [38] first applied simple linear iterative clustering (SLIC) to SEM images of mineral particles smaller than 50 µm for high-quality segmentation, and then employed a CNN-based model, ResNet, which overcomes the vanishing gradient problem in deep networks with hundreds or thousands of layers, thereby improving performance and reducing the associated training error. Cai Y. et al. (2022) [42] constructed a multiscale dilated convolution attention network for Raman spectroscopy to identify unknown minerals. Dilated convolution is used to extract multiscale features from the mineral spectrum and to broaden the receptive field of feature extraction.
To increase the sensitivity of the convolutional network to informative features, a squeeze-and-excitation block (SE block) and the multiscale dilated convolution module are combined to form a channel attention mechanism. Zeng, X. et al. (2022) [44] proposed a method that identifies minerals by combining mineral photo image features with mineral hardness features, using the deep convolutional neural network EfficientNet-b4 to extract image features. Reference [5] proposed an automatic mineral identification system that combines hyperspectral imaging and deep learning to identify mineral types before the mineral processing stage. Reference [41] developed and evaluated a new method for automated mineral identification that combines measurements with two complementary spectroscopic methods, using a CNN for Raman and VNIR (visible and near-infrared) spectra and cosine similarity for LIBS. Reference [45] used CNN techniques to automatically extract optical features of minerals for mineral identification. Reference [11] explored CNNs as a tool to accelerate and automate microfacies classification, using transfer learning based on a robust and reliable CNN model trained on a large number of nongeological images.
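How dilation widens the receptive field without adding kernel weights can be seen in a small sketch. This is a plain-Python illustration of a one-dimensional dilated convolution, not the 1D-DCNN of [43] or the network of [42]; the toy signal, the kernel, and the function names are assumptions.

```python
def dilated_conv1d(signal, kernel, dilation=1):
    """Valid-mode 1D dilated convolution (cross-correlation, as used in CNNs)."""
    # Number of input samples spanned by one output value.
    span = (len(kernel) - 1) * dilation + 1
    out = []
    for start in range(len(signal) - span + 1):
        out.append(sum(w * signal[start + j * dilation]
                       for j, w in enumerate(kernel)))
    return out


def receptive_field(kernel_size, dilations):
    """Receptive field of stacked stride-1 dilated convolution layers."""
    rf = 1
    for d in dilations:
        rf += (kernel_size - 1) * d
    return rf


spectrum = [1, 2, 3, 4, 5, 6, 7]   # toy spectrum intensities
kernel = [1, 1, 1]

dense = dilated_conv1d(spectrum, kernel, dilation=1)
dilated = dilated_conv1d(spectrum, kernel, dilation=2)
print(dense, dilated, receptive_field(3, [1, 2, 4]))
```

With the same three weights, a dilation of 2 makes each output depend on five consecutive input positions instead of three, and stacking layers with dilations 1, 2, and 4 covers 15 positions, which is why dilated convolutions can capture broad spectral features without pooling.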

Inception-v3: Zhang et al. (2019) [46] extracted image features of four minerals (potassium feldspar, feldspar, plagioclase, and quartz) with the Inception-v3 architecture and built identification models with six machine learning methods: logistic regression (LR), support vector machine (SVM), random forest (RF), k-nearest neighbor (KNN), multilayer perceptron (MLP), and Gaussian naive Bayes (GNB). The results show that LR, SVM, and MLP are the strongest single models for high-dimensional feature analysis. The LR model was also used as the metaclassifier in the final prediction, and the fusion of models effectively improved performance. Peng et al. (2019) [47] used images of 16 common types of mineral crystals to build an Inception-v3 mineral identification model; the overall accuracy was around 86%, the top-5 accuracy reached 99%, and the final results showed strong robustness. Ref. [35] selected the Inception-v3 model as a pretrained model for rock mineral image identification.

ResNet: Guo et al. (2020) [18] trained a mineral identification model on images of five minerals (quartz, hornblende, biotite, garnet, and olivine) using the ResNet-18 neural network as the base model and achieved an accuracy of 89%, realizing intelligent mineral identification based on deep learning. Ren et al. (2021) [48] reached the highest accuracy when using ResNet-50 as the base model for intelligent identification of rock mineral image samples. Reference [38] achieved a validation accuracy of 90.5% using a 47-layer ResNet-2 architecture.

Transfer learning: Zhang et al. (2021) [52] proposed a multiproduct coal image classification method that combines a convolutional neural network with transfer learning. They built a deep learning model on the Inception-v3 convolutional neural network using the TensorFlow and Keras frameworks and applied transfer learning to train and test on different coal product image datasets until the loss and accuracy of the training process converged. They concluded that the test accuracy and validation accuracy of the model exceed 90%. Ref. [53] combined transfer learning with Siamese neural networks to improve the extraction of multielement geochemical anomalies and used multiscale geochemical data to improve model performance. The accuracy of the model using both transfer learning and Siamese neural networks reached 85%, indicating that the improved deep learning approach can greatly improve the model's ability to identify anomalies. Reference [36] initialized the network with parameters pretrained on the larger ImageNet dataset to achieve high accuracy at low computational cost. Reference [11] explored CNNs as a tool to accelerate and automate microfacies classification, using transfer learning based on a robust and reliable CNN model trained on a large number of nongeological images.

Zhou et al. (2022) [24] proposed combining neural networks with physical models to address the common problem of learning from few samples in hyperspectral remote sensing geological investigations. Their domain-knowledge-based data augmentation method combines the classical Hapke radiative transfer model with a small number of ground truth points to augment the training label data, and a sparse, fully connected neural network then assesses the mineral content.

Reference [39] proposed hierarchical spatial-spectral feature extraction with long short-term memory (HSS-LSTM) to explore the correlation between spatial and spectral features and to obtain hierarchical intrinsic features for mineral identification.

4.4. Other Models

In addition to the three main categories of models above, some other models are worth introducing.

The Unet model used in [17] differs from a general convolutional neural network. In a general CNN, the input image data become progressively smaller after each layer of pooling and convolution and finally enter the fully connected layer for training, whereas the Unet model adds an upsampling layer after the fully connected layer to restore the image to its original size. The Unet model therefore requires fewer training samples and achieves higher segmentation accuracy than other convolutional neural networks. Compared with AlexNet, VGGNet, and GoogleNet, ResNet [18] is significantly better in both accuracy and efficiency because its residual units link different layers through connections and thereby preserve the integrity of the information. It can reach the same accuracy as other networks with fewer parameter settings and achieve the desired effect in fewer iterations, which greatly improves training efficiency. Meanwhile, unlike GoogleNet, ResNet [38] achieves satisfactory accuracy by training a deeper network while overcoming the model complexity and vanishing gradient problems. According to [14], artificial neural networks are well suited to applications that require repeated identification of a limited number of minerals because they are less sensitive to variations such as lighting. They can reach 90% accuracy for the identification of colored and colorless minerals, and accuracy improves with a larger training set. In the 1D-CNN developed in [42], multiscale features in mineral spectra are extracted with multiscale dilated convolution, which extends the conventional convolution operation by increasing the spacing between points in the convolutional kernel.
The ResNet-18 model, as a type of CNN, performs poorly in mineral spectrum classification, and AlexNet, which is shallower and has a more direct structure, achieves much higher classification accuracy than ResNet-18. In addition, the LSTM-based hierarchical spatial-spectral feature extraction method (HSS-LSTM) [39] outperforms many other deep learning methods by considering the correlation between the main spatial features and the spectral features. The other deep learning methods compared have the following limitations: the five-layer CNN uses too few spatial statistical features to obtain sufficiently complete features; the spectral–spatial feature-based classification method (SSFC) has no unified objective function for optimization; the recurrent neural network (RNN)-based model does not consider spectral features when obtaining spatial-spectral features; the convolutional long short-term memory (ConvLSTM) method pays less attention to recognizing pixel spectra and cannot capture the correlation between spatial and spectral features; the spectral–spatial unified network (SSUN) does not further explore the correlation between spatial and spectral features to obtain hierarchical features; and the multiscale CNN greatly reduces the focus on recognizing pixels.

5. Visualization and Analysis

5.1. Data and Visualization Tools

We searched the academic literature in Scopus. Using the “TITLE-ABS-KEY” search condition in the advanced search box, we retrieved all relevant literature on intelligent mineral identification up to 19 June 2022 with the search formula (“mineral” OR “ore”) AND (“identification” OR “recognition”) AND (“learning”). After restricting the document types to “Article”, “Conference Paper”, “Conference Review”, and “Review” and manually eliminating irrelevant literature, we obtained 201 literature records. These records were published between 1975 and 2022 in 142 sources (journals and conferences) and involve 693 authors from 28 countries and regions.

VOSviewer [54] is a scientific knowledge visualization software developed by Van Eck and Waltman at Leiden University in the Netherlands; it is commonly used for large-scale data analysis and has strong visualization mapping capability. Bibliometrix [55] is an R-based bibliometric software developed by the Italian researcher Massimo Aria. It can perform complete scientometric and visual analysis of literature exported from the Scopus database, including statistical analysis of scientific literature indices, construction of data matrices, and cocitation, coupling, coauthorship, and coword analysis, with excellent visualization performance.

This paper focuses on the visual analysis of the retrieved literature records using a combination of VOSviewer and Bibliometrix in order to explore the development path, hot spots, and trends in the field of intelligent mineral identification and to further analyze the development of taxonomy-based mineral identification approaches.

5.2. Field of Mineral Identification

In this section, we first analyze the development path of the field of intelligent mineral identification; then, we explore the emergence of field keywords, and analyze and discuss the present field keywords.

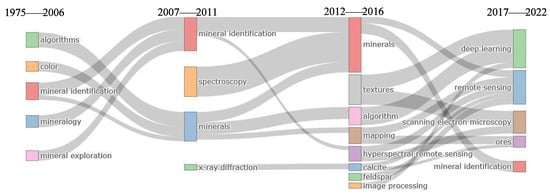

The thematic development path in the field of intelligent mineral identification is shown in Figure 2, which clearly shows the thematic development and evolution in different periods. According to the retrieved records, Pooley et al. [56] first proposed that pure mineral powders of known chemical composition could be used as standards to calibrate detection equipment, and that the calibration results could be used to obtain the chemical composition of unknown particles and thereby identify unknown minerals. As a possible pioneering work in intelligent mineral identification, it attracted the research interest of the academic community, but only about 10% (21 papers) of the total literature records had been published as of 2006. Before 2006, research addressed basic mineral identification, including more efficient identification algorithms, with a main focus on the color characteristics of minerals [57], and related work aimed at more efficient mineral exploration of unknown rocks [58]. Since 2007, more attention has been paid to the use of spectroscopic information obtained by X-ray diffraction [59,60] for precise mineral identification. After 2012, research turned to mineral image processing [61] and hyperspectral remote sensing [62] for mineral identification and focused more on the textures [63] of minerals. At this stage, mineral types such as calcite and feldspar [64] received major attention because their samples are easy to collect and their datasets are rich and reliable for further research. From 2017 to the present, intelligent mineral identification based on deep learning has become a major direction in academic research, different data sources such as remote sensing and scanning electron microscopy [65] have been actively explored, and more ore types have been targeted for identification.

Figure 2.

Thematic evolution diagram for the field of intelligent mineral identification.

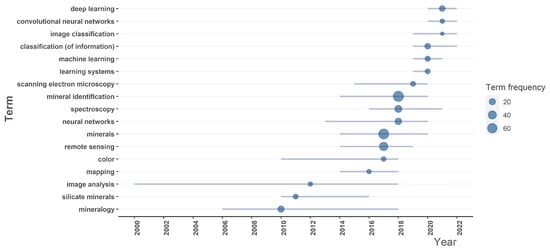

In order to better explore the hot spots of intelligent mineral identification research in recent years, we detected the emergence of field keywords and plotted them as shown in Figure 3.

Figure 3.

Keyword emergence detection results.

As Figure 3 shows, image analysis and image classification of mineral data have been studied for years. Over time, the network form used for intelligent mineral recognition has evolved from artificial neural networks to machine learning and then to deep neural networks. In recent years, the convolutional neural network [66,67], which mainly focuses on image data, has gained much attention and achieved excellent recognition performance. Studies of mineral species recognition show that scholars have shifted from studying mainly silicate minerals [68] to studying a wider range of minerals, indicating that intelligent mineral identification research is expanding towards a wider range of uses. Color, spectroscopy, and other information have become the main training content for acquiring mineral features.

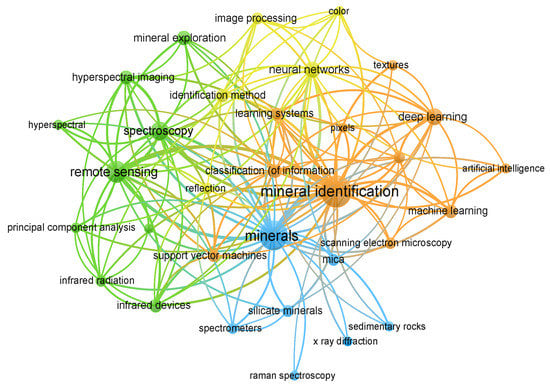

Then, we perform a detailed keyword detection analysis for the existing literature, which is used to explore the current research hot spots in the field, plotted as shown in Figure 4.

Figure 4.

Field keyword detection.

In this keyword map, the node size represents the frequency of the keyword, a connection between two nodes represents the co-occurrence of the two keywords, and a node with more connections has higher centrality. The figure contains four clusters. The orange cluster is characterized by “mineral identification”, “machine learning”, “deep learning”, and “support vector machines”, which represent the existing choices of network in the field of intelligent mineral identification and constitute a complete and effective learning system. In the learning process, the model pays more attention to the texture of the ore and the pixels [69] of the image. The blue cluster consists of “minerals”, “mica”, “silicate minerals”, and “sedimentary rocks” [70], which represent the main mineral types currently of interest for mineral identification. So far, the identification of common rock and mineral species is still the goal of most scholars. The green cluster is represented by “remote sensing”, “spectroscopy”, “infrared radiation”, and “hyperspectral”, indicating that current research relies mainly on spectral and remote sensing information as the discriminatory basis for mineral identification and uses the results to predict potential mineral exploration targets. Finally, the yellow cluster, represented by the keywords “image processing”, “color”, “reflection”, etc., corresponds to image-based mineral identification, which is now becoming popular. Image-based identification methods focus on image features such as color, reflection, and illumination [71], which are used for model training, and thus rely less on specialized instruments.
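The quantities that such a keyword map encodes (keyword frequency for node size, pairwise co-occurrence for links, and the number of links per node) can be computed with a few lines of code. The sketch below is only an illustration of the counting step and is not how VOSviewer or Bibliometrix is implemented; the three keyword records are hypothetical.

```python
from collections import Counter
from itertools import combinations


def cooccurrence(records):
    """Count keyword frequencies and pairwise co-occurrences.

    records: list of keyword lists, one list per publication.
    """
    freq = Counter()
    links = Counter()
    for keywords in records:
        # Deduplicate within a record and sort so each pair has one canonical order.
        unique = sorted(set(k.lower() for k in keywords))
        freq.update(unique)
        links.update(combinations(unique, 2))
    return freq, links


def node_degree(links):
    """Number of distinct keywords each keyword is linked to."""
    degree = Counter()
    for a, b in links:
        degree[a] += 1
        degree[b] += 1
    return degree


# Hypothetical author-keyword records.
records = [
    ["mineral identification", "deep learning", "image processing"],
    ["mineral identification", "remote sensing", "hyperspectral"],
    ["Deep learning", "mineral identification"],
]
freq, links = cooccurrence(records)
degree = node_degree(links)
print(freq.most_common(2), degree["mineral identification"])
```

Clustering the resulting weighted graph, which produces the colored groups in the map, is a separate step that the visualization tools perform with their own algorithms.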

5.3. Mineral Identification Methods Based on Taxonomy

In this section, we detect and analyze keywords for intelligent mineral identification methods based on artificial neural networks, machine learning, and deep learning, respectively, and discuss the development preferences and trends under different methods.

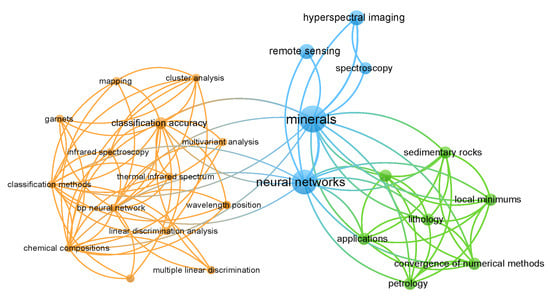

Figure 5 shows the keyword detection results for intelligent mineral identification methods related to artificial neural networks. In terms of classification, linear discrimination [71] of minerals accounts for part of this research, with the perceptron as the main model. However, the perceptron cannot handle linearly inseparable cases, so multilayer network structures such as the “BP Neural Network” [72] and the “Multilayer Perceptron” [2] have received attention. The main ways to obtain identification data include “remote sensing”, “hyperspectral imaging”, “spectroscopy”, “chemical composition”, and “thermal infrared spectrum”, which usually require specialized experimental instruments. Although “cluster analysis” is not classified under this taxonomy, some scholars still cluster the data during network training [73], which indicates that the different mineral identification taxonomies are not completely distinct from each other and that scholars combine different optimization methods to achieve their identification goals. During model training, the optimal solution is usually sought with derivative-based optimization algorithms such as gradient descent and Newton’s method. Because local minima occur in practice, many scholars have attempted to convert the problem into a convex optimization problem so that the algorithm converges to the global optimum. In addition, by identifying minerals (mainly related to “sedimentary rocks” and “petrology”), scholars have tried to put their research results into applications such as mapping.
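The role of convexity in derivative-based optimization can be illustrated on one-dimensional functions. In the sketch below, the objective functions, step size, and starting points are assumptions chosen for illustration; they are not taken from any of the cited works.

```python
def gradient_descent(grad, x0, lr=0.1, steps=100):
    """Repeatedly step against the first derivative."""
    x = x0
    for _ in range(steps):
        x -= lr * grad(x)
    return x


def newton(grad, hess, x0, steps=10):
    """Newton's method: scale the step by the inverse second derivative."""
    x = x0
    for _ in range(steps):
        x -= grad(x) / hess(x)
    return x


# Convex case: f(x) = (x - 3)^2 has a single minimum at x = 3.
convex_grad = lambda x: 2.0 * (x - 3.0)
convex_hess = lambda x: 2.0
x_gd = gradient_descent(convex_grad, x0=0.0)
x_newton = newton(convex_grad, convex_hess, x0=0.0)

# Non-convex case: f(x) = x^4 - 3x^2 + x has two minima, so the result
# of gradient descent depends on where the search starts.
nonconvex_grad = lambda x: 4.0 * x ** 3 - 6.0 * x + 1.0
x_right = gradient_descent(nonconvex_grad, x0=2.0, lr=0.01, steps=500)
x_left = gradient_descent(nonconvex_grad, x0=-2.0, lr=0.01, steps=500)

print(x_gd, x_newton, x_right, x_left)
```

On the convex function both methods reach the same point from any start, whereas on the non-convex function the two starting points end in different minima, only one of which is the global one. This is the practical motivation for convex reformulations.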

Figure 5.

Keyword detection of artificial-neural-network-based methods.

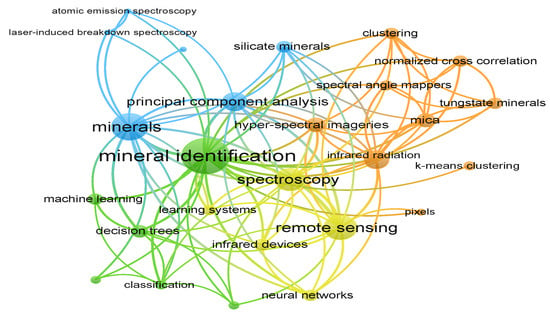

Figure 6 shows the keyword detection results for intelligent mineral identification methods related to machine learning. Statistical-based machine learning, represented by clustering, and rule-based machine learning, represented by principal component analysis [74] and decision trees, are the main research directions for this type of method. As with artificial-neural-network-based methods, data acquisition based on spectroscopy and remote sensing, such as infrared devices, Raman spectroscopy [75], atomic emission spectroscopy, or laser-induced breakdown spectroscopy [76], is also dominant. The minerals selected for identification are more diverse and mainly include mica, silicate minerals, tungstate minerals, and other mineral types [77]. In addition, due to the increase in the number of layers in the network, machine-learning-related methods are better able to handle sample data with more features, such as images; for example, they focus on information such as the “pixel” of the image and use algorithms such as normalized cross correlation [78] to calculate the correlation between sample data (pixels). In general, machine-learning-based mineral identification methods use more algorithms and methods than artificial-neural-network-based methods to obtain more data rules for mineral samples, in order to increase the variety of minerals identified and improve classification accuracy.
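Normalized cross correlation between two pixel sequences can be computed as in the following sketch. It uses the standard zero-mean definition; the pixel values are hypothetical, and the cited work [78] may apply the measure in a different form (for example, over sliding image windows).

```python
import math


def normalized_cross_correlation(a, b):
    """Zero-mean normalized cross correlation of two equal-length sequences.

    Returns a value in [-1, 1]; 1 means the sequences differ only by a
    positive gain and an offset.
    """
    if len(a) != len(b):
        raise ValueError("sequences must have the same length")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0.0:
        raise ValueError("a constant sequence has no defined correlation")
    return sum(x * y for x, y in zip(da, db)) / denom


patch = [10.0, 20.0, 30.0, 40.0]      # hypothetical pixel intensities
brighter = [25.0, 45.0, 65.0, 85.0]   # same pattern with gain 2 and offset 5
inverted = [40.0, 30.0, 20.0, 10.0]   # reversed contrast

ncc_same = normalized_cross_correlation(patch, brighter)
ncc_inverted = normalized_cross_correlation(patch, inverted)
print(ncc_same, ncc_inverted)
```

Because the measure removes the mean and divides by the signal energy, it is insensitive to uniform changes in brightness and contrast, which is useful when comparing mineral pixels acquired under different illumination.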

Figure 6.

Keyword detection of machine-learning-based methods.

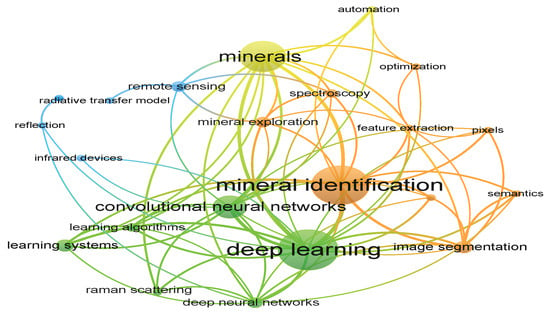
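
As an illustration of the pixel-level correlation mentioned in the discussion of Figure 6, the following is a minimal sketch of normalized cross correlation between two equally sized grayscale patches. It assumes the common zero-mean formulation; the cited work [78] may use a different variant, and the function name and the toy patches are hypothetical.

```python
import math


def normalized_cross_correlation(patch_a, patch_b):
    """Zero-mean normalized cross correlation of two equally sized patches.

    Each patch is a list of rows of pixel intensities. The result lies in
    [-1, 1]; 1 means the patches differ only by brightness gain and offset.
    """
    flat_a = [v for row in patch_a for v in row]
    flat_b = [v for row in patch_b for v in row]
    if not flat_a or len(flat_a) != len(flat_b):
        raise ValueError("patches must be non-empty and of equal size")

    mean_a = sum(flat_a) / len(flat_a)
    mean_b = sum(flat_b) / len(flat_b)
    dev_a = [v - mean_a for v in flat_a]
    dev_b = [v - mean_b for v in flat_b]

    numerator = sum(x * y for x, y in zip(dev_a, dev_b))
    denominator = math.sqrt(sum(x * x for x in dev_a) * sum(y * y for y in dev_b))
    if denominator == 0:
        # A perfectly flat patch has no variance; report 0 by convention here.
        return 0.0
    return numerator / denominator


# Toy 2x2 patches (illustrative values, not real mineral images).
reference = [[10, 20], [30, 40]]
brighter = [[2 * v + 5 for v in row] for row in reference]  # same pattern, new gain/offset
inverted = [[40, 30], [20, 10]]                             # reversed pattern

ncc_same = normalized_cross_correlation(reference, brighter)
ncc_inverted = normalized_cross_correlation(reference, inverted)
print(ncc_same, ncc_inverted)
```

Because the means are subtracted and the result is divided by the product of the standard deviations, the score is insensitive to uniform changes in illumination, which is one reason this measure is often used to compare image regions.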

Figure 7 presents the keyword detection results for intelligent mineral identification methods based on deep learning. The figure clearly shows that deep neural networks, represented by convolutional neural networks, are an important component of this type of method. Hierarchical feature extraction from complex structured data often relies not on a single algorithm but on a learning system that combines different network architectures with different optimization strategies [79]. This type of identification is likewise concerned with sample data obtained through traditional mineral identification aids, such as infrared devices and remote sensing, in order to obtain spectroscopic information. However, the effectiveness of convolutional neural network architectures in image processing has drawn more attention to the recognition of mineral images, which are less costly and more versatile, and to image segmentation within a single image in order to identify minerals of different pixel sizes. Additionally, because training data for some minerals are insufficient due to scarcity, transfer learning is employed to address the problem of having only a few samples. Owing to the good accuracy and the range of minerals covered by deep-learning-based methods, academia is also committed to applying intelligent mineral identification in industry to automate mineral identification and mineral exploration [38].

Figure 7.

Keyword detection of deep-learning-based methods.
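
To make the role of the convolutional layer in the discussion of Figure 7 more concrete, the sketch below applies a single convolution followed by a ReLU to a tiny grayscale image. It is an illustrative assumption, not a method from the cited studies: the image values, the function names, and the hand-picked vertical-edge kernel are hypothetical, and in a real convolutional neural network the kernel weights are learned and many such layers are stacked.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).

    This is the cross-correlation form that CNN layers conventionally use.
    """
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = len(image) - k_h + 1
    out_w = len(image[0]) - k_w + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(k_h)
                for dj in range(k_w)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Element-wise ReLU activation: negative responses are set to zero."""
    return [[max(0, v) for v in row] for row in feature_map]


# Toy 4x5 image: a dark region (1) next to a bright region (9),
# standing in for a boundary between two mineral grains.
IMAGE = [
    [1, 1, 1, 9, 9],
    [1, 1, 1, 9, 9],
    [1, 1, 1, 9, 9],
    [1, 1, 1, 9, 9],
]

# Hand-picked kernel that responds to dark-to-bright vertical edges.
VERTICAL_EDGE = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = relu(conv2d_valid(IMAGE, VERTICAL_EDGE))
print(feature_map)
```

The response is zero inside the uniform region and large where the window overlaps the boundary, which is the kind of low-level feature that deeper layers combine into grain-level and mineral-level representations.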

6. Conclusions

Mineral identification is a fundamental task in geology, mining engineering, and other related fields. Intelligent identification of minerals reflects both the frontier needs of scientific research and the demands of industry. The combination of computer science and earth science is a current trend, and the application of artificial intelligence against the background of deep-time digital earth science has gained widespread attention. The strong potential that artificial intelligence has demonstrated in the intelligent identification of minerals deserves close attention, since it represents an important direction for future development.

However, a wide gulf still separates geology and artificial intelligence: the identification processes and criteria of artificial intelligence are often difficult to interpret directly, and recognized, unified benchmark mineral datasets are lacking. These foundations still need to be built progressively.

In this paper, we provide an in-depth and comprehensive summary of intelligent mineral identification. We group the methods into three categories: artificial neural networks, machine learning, and deep learning. On this basis, we visualize the relevant literature and perform a trend analysis using keyword detection in order to better explore the trends in the field. Finally, we make some suggestions for possible future research directions.

We intend this paper to provide guidance to researchers in computing and the earth sciences who study intelligent mineral identification. Owing to the limited scope of the study, several aspects are not fully analyzed and remain for future work, including dataset preprocessing and the different scenarios, scientific questions, data, and applications that correspond to different objectives.

Author Contributions

Conceptualization, T.L. and Z.Z.; methodology, T.L. and Z.Z.; data curation, Y.B.; writing—original draft preparation, T.L.; writing—review and editing, G.H. and Z.Z.; visualization, Q.G.; supervision, G.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China under Grant Nos. 62002332 and 62072443.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

Thanks are given to Xinyi Diao for her help in data collection.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ramil, A.; López, A.; Pozo-Antonio, J.; Rivas, T. A computer vision system for identification of granite-forming minerals based on RGB data and artificial neural networks. Measurement 2018, 117, 90–95.

- López, A.; Ramil, A.; Pozo-Antonio, J.; Fiorucci, M.; Rivas, T. Automatic Identification of Rock-Forming Minerals in Granite Using Laboratory Scale Hyperspectral Reflectance Imaging and Artificial Neural Networks. J. Nondestruct. Eval. 2017, 36, 52.

- DeTore, A.W. An introduction to expert systems. J. Insur. Med. 1989, 21, 233–236.

- Folorunso, I.; Abikoye, O.; Jimoh, R.; Raji, K. A rule-based expert system for mineral identification. J. Emerg. Trends Comput. Inf. Sci. 2012, 3, 205–210.

- Okada, N.; Maekawa, Y.; Owada, N.; Haga, K.; Shibayama, A.; Kawamura, Y. Automated identification of mineral types and grain size using hyperspectral imaging and deep learning for mineral processing. Minerals 2020, 10, 809.

- Mishra, K.A. AI4R2R (AI for Rock to Revenue): A Review of the Applications of AI in Mineral Processing. Minerals 2021, 11, 1118.

- Izadi, H.; Sadri, J.; Bayati, M. An intelligent system for mineral identification in thin sections based on a cascade approach. Comput. Geosci. 2017, 99, 37–49.

- Prabhavathy, P.; Tripathy, B.; Venkatesan, M. Unsupervised learning method for mineral identification from hyperspectral data. In Proceedings of the International Conference on Innovations in Bio-Inspired Computing and Applications, Gunupur, India, 16–18 December 2019; Springer: Cham, Switzerland, 2019; pp. 148–160.

- Chen, Z.; Liu, X.; Yang, J.; Little, E.; Zhou, Y. Deep learning-based method for SEM image segmentation in mineral characterization, an example from Duvernay Shale samples in Western Canada Sedimentary Basin. Comput. Geosci. 2020, 138, 104450.

- Lobo, A.; Garcia, E.; Barroso, G.; Martí, D.; Fernandez-Turiel, J.L.; Ibáñez-Insa, J. Machine-learning for mineral identification and ore estimation from hyperspectral imagery in tin-tungsten deposits. Remote Sens. 2021, 13, 3258.

- De Lima, R.P.; Duarte, D.; Nicholson, C.; Slatt, R.; Marfurt, K.J. Petrographic microfacies classification with deep convolutional neural networks. Comput. Geosci. 2020, 142, 104481.

- Tang, D.G.; Milliken, K.L.; Spikes, K.T. Machine learning for point counting and segmentation of arenite in thin section. Mar. Pet. Geol. 2020, 120, 104518.

- Wang, Q.; Zhang, X.; Tang, B.; Ma, Y.; Xing, J.; Liu, L. Lithology identification technology using BP neural network based on XRF. Acta Geophys. 2021, 69, 2231–2240.

- Thompson, S.; Fueten, F.; Bockus, D. Mineral identification using artificial neural networks and the rotating polarizer stage. Comput. Geosci. 2001, 27, 1081–1089.

- Baykan, N.A.; Yılmaz, N. Mineral identification using color spaces and artificial neural networks. Comput. Geosci. 2010, 36, 91–97.

- Mlynarczuk, M.; Skiba, M. The application of artificial intelligence for the identification of the maceral groups and mineral components of coal. Comput. Geosci. 2017, 103, 133–141.

- Xu, S.T.; Zhou, Y.Z. Artificial intelligence identification of ore minerals under microscope based on deep learning algorithm. Acta Petrol. Sin. 2018, 34, 3244–3252.

- Guo, Y.J.; Zhou, Z.; Lin, H.X.; Liu, X.H.; Chen, D.Q.; Zhu, J.Q.; Wu, J.Q. The mineral intelligence identification method based on deep learning algorithms. Earth Sci. Front. 2020, 27, 39–47.

- Ren, W.; Zhang, S.; Huang, J.Q. The rock and mineral intelligence identification method based on deep learning. Geol. Rev. 2021, 67, 2.

- Wen, L.; Jia, M.; Wang, Q.; Fu, Q.; Zhao, J. Automated mineralogy part I. advances and applications in quantitative, automated mineralogical methods. China Min. Mag. 2020, 341–349.

- Qiang, Z.; Runxin, Z.; Junming, L.; Cong, W.; Hezhe, Z.; Ying, T. Review on coal and rock identification technology for intelligent mining in coal mines. Coal Sci. Technol. 2022.

- Huizhen, H.; Qing, G.; Xiumian, H. Research Advances and Prospective in Mineral Intelligent Identification Based on Machine Learning. Editor. Comm. Earth-Sci.-J. China Univ. Geosci. 2021, 46, 3091–3106.

- Murugan, P. Feed forward and backward run in deep convolution neural network. arXiv 2017, arXiv:1711.03278.

- Mi, Z.; Kai, Q.; Ling, Z.; Yuechao, Y. Deep Learning Method for Mineral Spectral Unmixing. Uranium Geol. 2022.

- Jiang, G.; Zhou, K.; Wang, J.; Cui, S.; Zhou, S.; Tang, C. Identification of iron-bearing minerals based on HySpex hyperspectral remote sensing data. J. Appl. Remote. Sens. 2019, 13, 047501.

- Izadi, H.; Sadri, J.; Mehran, N.A. Intelligent mineral identification using clustering and artificial neural networks techniques. In Proceedings of the 2013 First Iranian Conference on Pattern Recognition and Image Analysis (PRIA), Birjand, Iran, 6–8 March 2013; pp. 1–5.

- Aligholi, S.; Khajavi, R.; Razmara, M. Automated mineral identification algorithm using optical properties of crystals. Comput. Geosci. 2015, 85, 175–183.

- Anigbogu, P.; Odunayo, D.; Okwudili, S.; Olanloye. An Intelligent System for Mineral Prospecting Using Supervised and Unsupervised Learning Approach. Int. J. Eng. Tech. Res. (IJETR) 2015, 3, 200–208.

- Yousefi, B.; Castanedo, C.I.; Maldague, X.P.; Beaudoin, G. Assessing the reliability of an automated system for mineral identification using LWIR Hyperspectral Infrared imagery. Miner. Eng. 2020, 155, 106409.

- Khajehzadeh, N.; Haavisto, O.; Koresaar, L. On-stream and quantitative mineral identification of tailing slurries using LIBS technique. Miner. Eng. 2016, 98, 101–109.

- Cochrane, C.J.; Blacksberg, J. A fast classification scheme in Raman spectroscopy for the identification of mineral mixtures using a large database with correlated predictors. IEEE Trans. Geosci. Remote Sens. 2015, 53, 4259–4274.

- El Haddad, J.; de Lima Filho, E.S.; Vanier, F.; Harhira, A.; Padioleau, C.; Sabsabi, M.; Wilkie, G.; Blouin, A. Multiphase mineral identification and quantification by laser-induced breakdown spectroscopy. Miner. Eng. 2019, 134, 281–290.

- Domínguez-Olmedo, J.L.; Toscano, M.; Mata, J. Application of classification trees for improving optical identification of common opaque minerals. Comput. Geosci. 2020, 140, 104480.

- Tiwary, A.K.; Ghosh, S.; Singh, R.; Mukherjee, D.P.; Shankar, B.U.; Dash, P.S. Automated coal petrography using random forest. Int. J. Coal Geol. 2020, 232, 103629.

- Liu, C.; Li, M.; Zhang, Y.; Han, S.; Zhu, Y. An enhanced rock mineral recognition method integrating a deep learning model and clustering algorithm. Minerals 2019, 9, 516.

- Liu, X.; Song, H. Automatic identification of fossils and abiotic grains during carbonate microfacies analysis using deep convolutional neural networks. Sediment. Geol. 2020, 410, 105790.

- Anderson, T.I.; Vega, B.; Kovscek, A.R. Multimodal imaging and machine learning to enhance microscope images of shale. Comput. Geosci. 2020, 145, 104593.

- Latif, G.; Bouchard, K.; Maitre, J.; Back, A.; Bédard, L.P. Deep-Learning-Based Automatic Mineral Grain Segmentation and Recognition. Minerals 2022, 12, 455.

- Zhao, H.; Deng, K.; Li, N.; Wang, Z.; Wei, W. Hierarchical Spatial-Spectral Feature Extraction with Long Short Term Memory (LSTM) for Mineral Identification Using Hyperspectral Imagery. Sensors 2020, 20, 6854.

- Tanaka, S.; Tsuru, H.; Someno, K.; Yamaguchi, Y. Identification of alteration minerals from unstable reflectance spectra using a deep learning method. Geosciences 2019, 9, 195.

- Jahoda, P.; Drozdovskiy, I.; Payler, S.J.; Turchi, L.; Bessone, L.; Sauro, F. Machine learning for recognizing minerals from multispectral data. Analyst 2021, 146, 184–195.

- Cai, Y.; Xu, D.; Shi, H. Rapid identification of ore minerals using multi-scale dilated convolutional attention network associated with portable Raman spectroscopy. Spectrochim. Acta Part A Mol. Biomol. Spectrosc. 2022, 267, 120607.

- Qing-Lin, T.; Bang-Jie, G.; Fa-Wang, Y.; Yao, L.; Peng-Fei, L.; Xue-Jiao, C. Mineral Spectra Classification Based on One-Dimensional Dilated Convolutional Neural Network. Spectrosc. Spectr. Anal. 2022, 42, 873–877.

- Zeng, X.; Xiao, Y.; Ji, X.; Wang, G. Mineral identification based on deep learning that combines image and Mohs hardness. Minerals 2021, 11, 506.