Trends in Intelligent Communication Systems: Review of Standards, Major Research Projects, and Identification of Research Gaps

Abstract

1. Introduction

1.1. Related Survey Papers and Contributions of This Survey

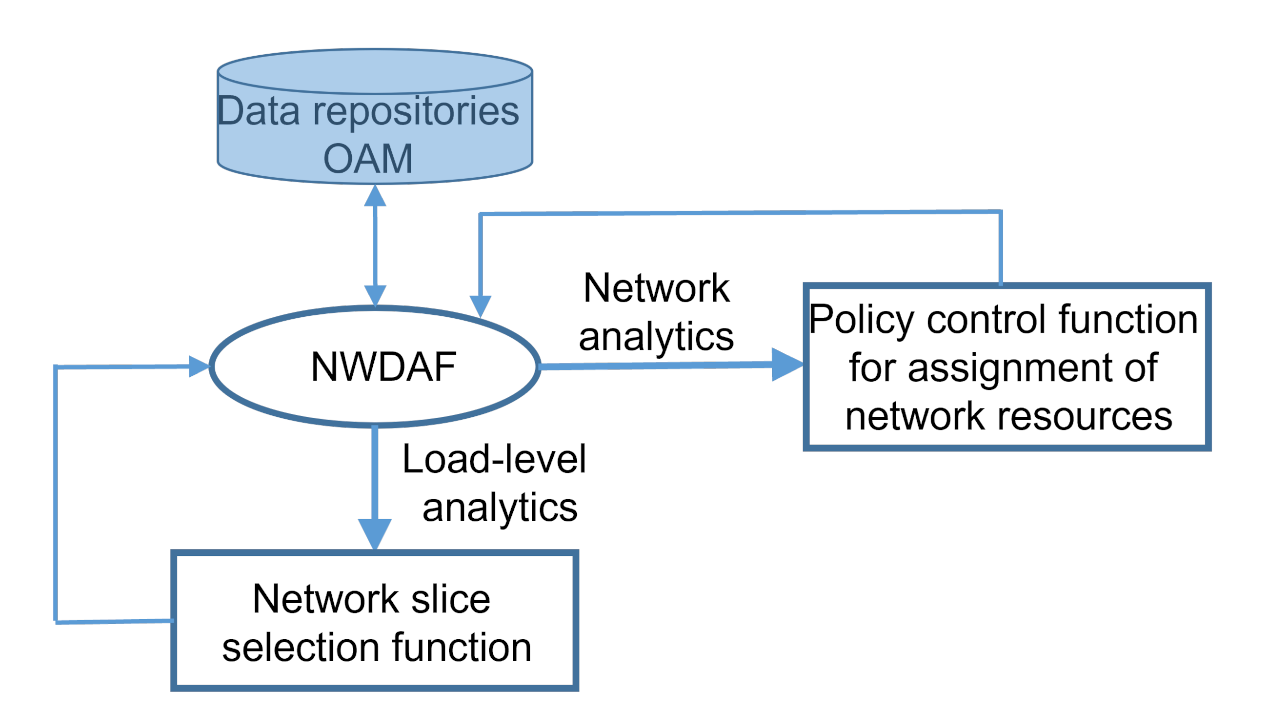

- The O-RAN Alliance and the 3GPP standardisation body have already made significant progress in recommending how to perform data collection and intelligent control at the RAN and the core network, respectively. In our approach, at the RAN, we categorise ML-based technology components for transceiver design according to their operational timescales, as suggested by the O-RAN Alliance: real-time (less than 10 ms), near-real-time (between 10 ms and 1 s), and non-real-time (greater than 1 s) intelligent control. For ML models operating at the edge and core networks, we summarise the salient features of the network data analytics function (NWDAF) developed and standardised by 3GPP. Notably, only a few research contributions are cognisant of the data collection and analytics architecture suggested by 3GPP.

- We highlight the use cases for AI-enabled communication networks recommended by ITU, the O-RAN Alliance and ongoing research projects, in order to guide future research activities with significant business potential.
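The O-RAN timescale categorisation used in this survey amounts to a simple mapping from the latency budget of a control loop to a control category. The sketch below is illustrative only: the function name is hypothetical, and the handling of the exact boundaries (10 ms and 1 s) is an assumption, since the thresholds are stated only as "less than", "between" and "greater than".

```python
def oran_timescale(latency_ms: float) -> str:
    """Map a control-loop latency budget (ms) to an O-RAN timescale category.

    Assumed boundary handling (not taken from the O-RAN specifications):
    below 10 ms is real-time, 10 ms to 1000 ms inclusive is near-real-time,
    and above 1000 ms is non-real-time.
    """
    if latency_ms < 0:
        raise ValueError("latency must be non-negative")
    if latency_ms < 10:
        return "real-time"
    if latency_ms <= 1000:
        return "near-real-time"
    return "non-real-time"


# Usage with hypothetical latency budgets for three kinds of control loop.
for name, budget_ms in [("per-slot scheduling", 1.0),
                        ("interference coordination", 100.0),
                        ("policy and model updates", 60_000.0)]:
    print(f"{name}: {oran_timescale(budget_ms)}")
```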

1.2. Summary of the Paper

2. Background on ML-Based Optimisation of Wireless Networks

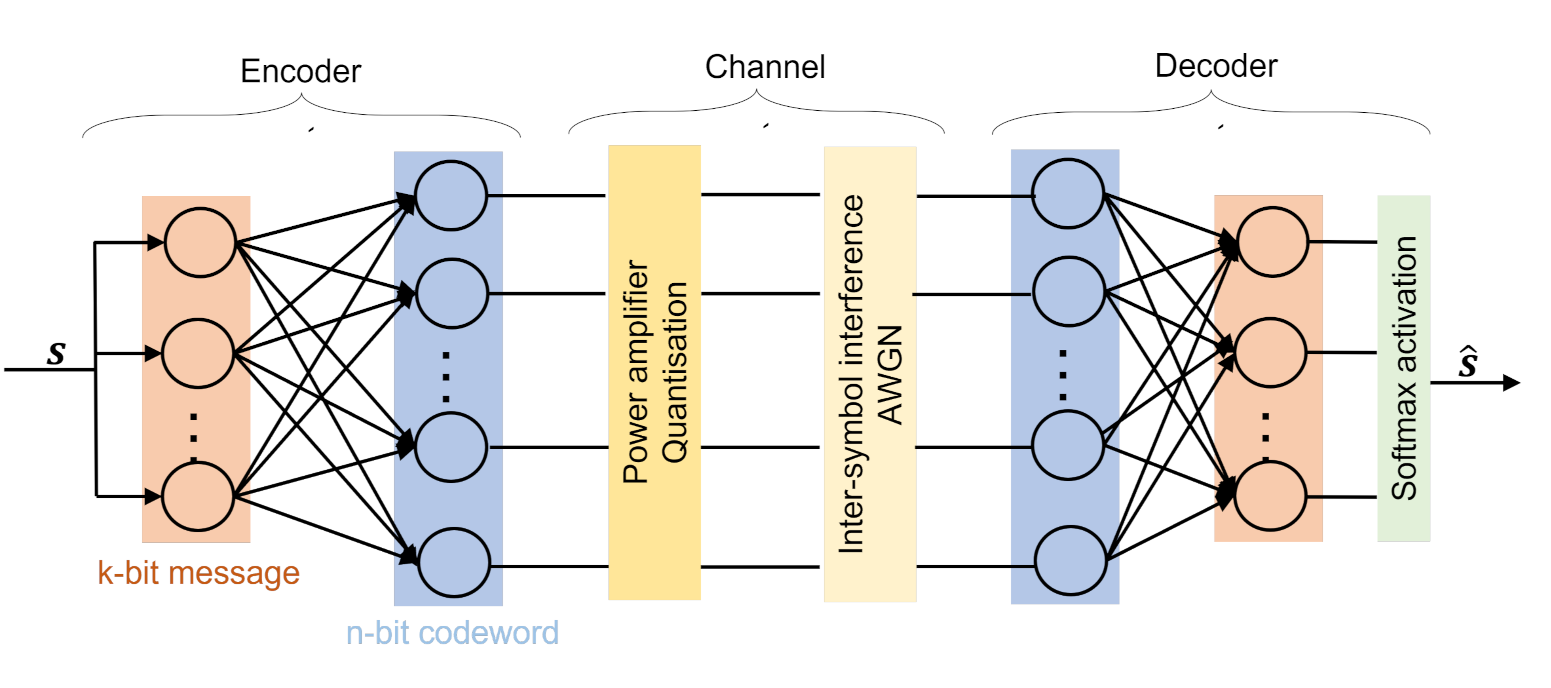

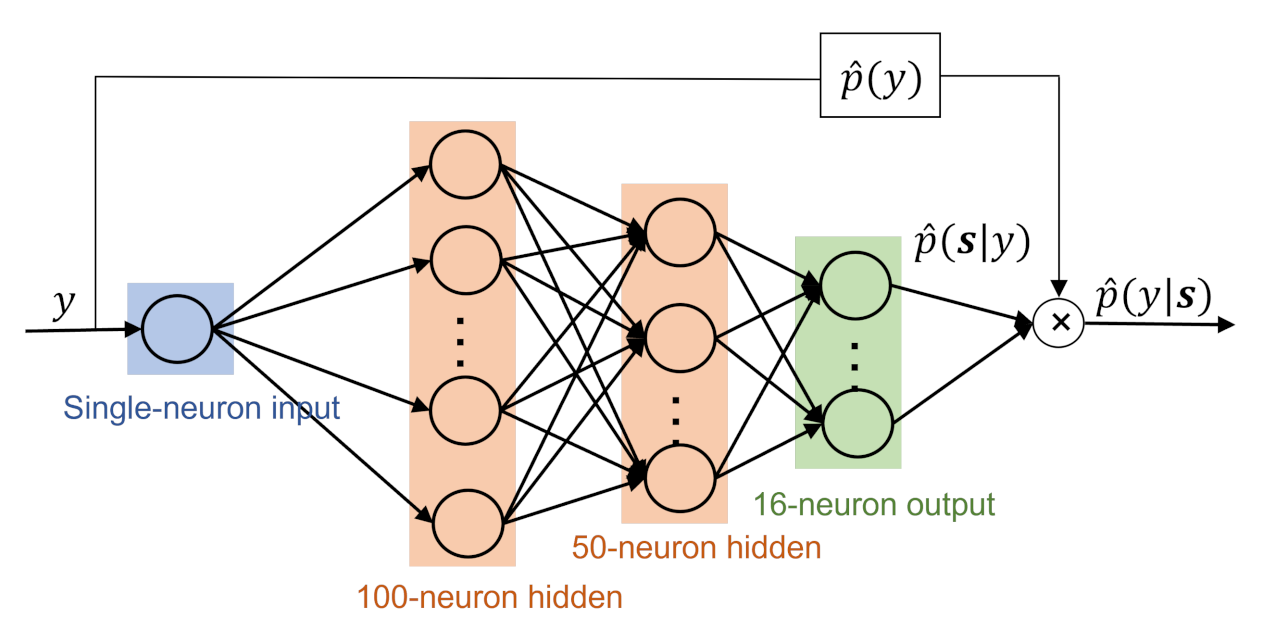

- The auto-encoder must be trained over all possible source messages, which quickly becomes impractical for long code words because the number of messages grows exponentially with the number of information bits. Additionally, it was observed that training at a low SNR does not necessarily generalise well to high SNRs [22], while training at multiple SNRs prohibitively increases the size of the required labelled datasets and the time needed to train the NN.

- The channel and all impairments between the transmitter and receiver must have a known, deterministic functional form and be differentiable, which is seldom the case. For instance, the fading channel varies randomly over space and time. Furthermore, some impairments are not differentiable (e.g., quantisation), have inaccurate mathematical models (e.g., the power amplifier response), or are poorly understood (e.g., channel models for molecular and underwater communications). In such cases, it is unclear how to backpropagate gradients from the receiver to the transmitter [24]. In addition, small discrepancies between the actual impairment and the model used for training may significantly degrade performance during testing.

- It is usually difficult to understand the relation between the design of the NN (e.g., the number of layers and the activation and loss functions) and the performance of the transceiver. Lack of explainability is a common problem hindering the adoption of AI/ML techniques in some application areas, although it may not be a significant obstacle in transceiver optimisation. Thus, efforts are needed not only to design new AI/ML models, but also to explain their working principles. Fortunately, such research works are slowly emerging; for example, [25] develops a parallel model to explain the behaviour of a recurrent neural network (RNN).
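The first limitation above is a matter of simple arithmetic. Assuming the one-hot message formulation commonly used for channel auto-encoders, a block of k information bits is one of M = 2^k messages, and every message should be seen during training, ideally at each training SNR. The helper below is a hypothetical illustration; `snr_points` and `repeats_per_message` are assumed training-grid parameters, not values from [22].

```python
def autoencoder_training_examples(k_bits: int, snr_points: int = 1,
                                  repeats_per_message: int = 1) -> int:
    """Examples needed to present each of the 2**k_bits messages at every training SNR.

    snr_points and repeats_per_message are hypothetical training-grid parameters.
    """
    if k_bits < 0 or snr_points < 1 or repeats_per_message < 1:
        raise ValueError("invalid training grid")
    num_messages = 2 ** k_bits  # size of the one-hot message set M = 2^k
    return num_messages * snr_points * repeats_per_message


# Growth of the training set with the block length, for 10 training SNRs.
for k in (4, 8, 16, 32, 64):
    print(f"k = {k:2d} bits -> {autoencoder_training_examples(k, snr_points=10):,} examples")
```

Already at k = 32 the grid exceeds 4 × 10^10 examples, which is why training over all messages does not scale to long code words.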
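The differentiability problem raised in the second point can be seen numerically. The sketch below compares the finite-difference derivative of a hard one-bit quantiser (as in a one-bit ADC, cf. [24]) with that of an additive-noise channel. All function names are hypothetical. It only illustrates why end-to-end backpropagation stalls: the quantiser's derivative is zero almost everywhere and undefined at the threshold, so no useful gradient reaches the transmitter. It is not a remedy.

```python
def one_bit_quantiser(x: float) -> float:
    """Hard one-bit quantiser (e.g., a one-bit ADC): maps x to +1 or -1."""
    return 1.0 if x >= 0.0 else -1.0


def additive_noise_channel(x: float, noise: float = 0.1) -> float:
    """Additive-noise channel with a fixed noise sample: y = x + noise, so dy/dx = 1."""
    return x + noise


def central_difference(f, x: float, h: float = 1e-6) -> float:
    """Central finite-difference estimate of df/dx at x."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


for x in (-0.7, 0.3):
    print(f"x = {x:+.1f}: "
          f"d(quantiser)/dx = {central_difference(one_bit_quantiser, x):.3f}, "
          f"d(channel)/dx = {central_difference(additive_noise_channel, x):.3f}")
```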

3. AI/ML in the Standards and Industry

3.1. Data Analytics and AI/ML in 3GPP

3.1.1. Network Data Analytics Function (NWDAF)

NWDAF in Releases 15 and 16

NWDAF in Release 17

Federated Learning

3.1.2. European Telecommunications Standards Institute (ETSI)

ETSI ENI

ETSI ZSM

3.2. International Telecommunication Union (ITU)

3.3. Open RAN

3.3.1. RAN Intelligent Controllers

3.3.2. Use Cases and Challenges in Open RANs

3.4. TinyML

4. Overview of Research Projects on AI/ML for Communications and Networking

4.1. Europe—H2020 Research Framework

4.1.1. ARIADNE

4.1.2. 5GENESIS

4.1.3. 5GROWTH

4.1.4. 5G-CARMEN

4.1.5. Smart Connectivity beyond 5G

4.2. U.K.-EPSRC and U.S.-NSF

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| API | Application Programming Interface |
| AWGN | Additive White Gaussian Noise |
| CPU | Central Processing Unit |
| CQI | Channel Quality Indicator |
| CSI | Channel State Information |
| DL | Deep Learning |
| DNN | Deep Neural Network |
| DRL | Deep Reinforcement Learning |
| E2E | End-to-End |
| eMBB | Enhanced Mobile Broadband |
| KPI | Key Performance Indicator |
| LSTM | Long Short-Term Memory |
| MCU | Micro-Controller Unit |
| MDAF | Management Data Analytics Function |
| MEC | Multi-access Edge Computing |
| ML | Machine Learning |
| MNO | Mobile Network Operator |
| NFV | Network Function Virtualisation |
| NN | Neural Network |
| NSSI | Network Slice Subnet Instance |
| NWDAF | Network Data Analytics Function |
| QoE | Quality of Experience |
| RAN | Radio Access Network |
| RIC | RAN Intelligent Controller |
| RIS | Reflecting Intelligent Surfaces |
| RNN | Recurrent Neural Network |
| SDN | Software-Defined Networking |
| UE | User Equipment |

References

- Li, R.; Zhao, Z.; Zhou, X.; Ding, G.; Chen, Y.; Wang, Z.; Zhang, H. Intelligent 5G: When Cellular Networks Meet Artificial Intelligence. IEEE Wirel. Commun. 2017, 24, 175–183.

- Letaief, K.B.; Chen, W.; Shi, Y.; Zhang, J.; Zhang, Y.J.A. The Roadmap to 6G: AI Empowered Wireless Networks. IEEE Commun. Mag. 2019, 57, 84–90.

- Jiang, W.; Han, B.; Habibi, M.A.; Schotten, H.D. The Road Towards 6G: A Comprehensive Survey. IEEE Open J. Commun. Soc. 2021, 2, 334–366.

- Zhao, Y.; Zhai, W.; Zhao, J.; Zhang, T.H.; Sun, S.; Niyato, D.; Lam, K.Y. A Comprehensive Survey of 6G Wireless Communications. arXiv 2021, arXiv:2101.03889.

- Bhat, J.R.; Alqahtani, S.A. 6G Ecosystem: Current Status and Future Perspective. IEEE Access 2021, 9, 43134–43167.

- Hossain, M.A.; Noor, R.M.; Yau, K.L.A.; Azzuhri, S.R.; Zaba, M.R.; Ahmedy, I. Comprehensive Survey of Machine Learning Approaches in Cognitive Radio-Based Vehicular Ad Hoc Networks. IEEE Access 2020, 8, 78054–78108.

- Praveen Kumar, D.; Amgoth, T.; Annavarapu, C.S.R. Machine learning algorithms for wireless sensor networks: A survey. Inform. Fusion 2019, 49, 1–25.

- Mahdavinejad, M.S.; Rezvan, M.; Barekatain, M.; Adibi, P.; Barnaghi, P.; Sheth, A.P. Machine learning for internet of things data analysis: A survey. Digit. Commun. Netw. 2018, 4, 161–175.

- Khalil, R.A.; Saeed, N.; Masood, M.; Fard, Y.M.; Alouini, M.S.; Al-Naffouri, T.Y. Deep Learning in the Industrial Internet of Things: Potentials, Challenges, and Emerging Applications. IEEE Internet Things J. 2021, 8, 11016–11040.

- Mao, Q.; Hu, F.; Hao, Q. Deep Learning for Intelligent Wireless Networks: A Comprehensive Survey. IEEE Commun. Surv. Tutor. 2018, 20, 2595–2621.

- Zhang, C.; Ueng, Y.L.; Studer, C.; Burg, A. Artificial Intelligence for 5G and Beyond 5G: Implementations, Algorithms, and Optimizations. IEEE J. Emerg. Sel. Top. Circ. Syst. 2020, 10, 149–163.

- Ali, S.; Saad, W.; Steinbach, D.E. White Paper on Machine Learning in 6G Wireless Communication Networks; 6G Research Visions, No. 7; University of Oulu: Oulu, Finland, 2020.

- Gündüz, D.; de Kerret, P.; Sidiropoulos, N.D.; Gesbert, D.; Murthy, C.R.; van der Schaar, M. Machine Learning in the Air. IEEE J. Sel. Areas Commun. 2019, 37, 2184–2199.

- Sun, Y.; Peng, M.; Zhou, Y.; Huang, Y.; Mao, S. Application of Machine Learning in Wireless Networks: Key Techniques and Open Issues. IEEE Commun. Surv. Tutor. 2019, 21, 3072–3108.

- Boutaba, R.; Salahuddin, M.A.; Limam, N.; Ayoubi, S.; Shahriar, N.; Estrada-Solano, F.; Caicedo, O.M. A comprehensive survey on machine learning for networking: Evolution, applications and research opportunities. J. Internet Ser. Appl. 2018, 9.

- Shafin, R.; Liu, L.; Chandrasekhar, V.; Chen, H.; Reed, J.; Zhang, J.C. Artificial Intelligence-Enabled Cellular Networks: A Critical Path to Beyond-5G and 6G. IEEE Wirel. Commun. 2020, 27, 212–217.

- Zhang, C.; Patras, P.; Haddadi, H. Deep Learning in Mobile and Wireless Networking: A Survey. IEEE Commun. Surv. Tutor. 2019, 21, 2224–2287.

- Wang, J.; Jiang, C.; Zhang, H.; Ren, Y.; Chen, K.C.; Hanzo, L. Thirty Years of Machine Learning: The Road to Pareto-Optimal Next-Generation Wireless Networks. IEEE Commun. Surv. Tutor. 2020, 22, 1472–1514.

- Zappone, A.; Di Renzo, M.; Debbah, M.; Lam, T.T.; Qian, X. Model-Aided Wireless Artificial Intelligence: Embedding Expert Knowledge in Deep Neural Networks for Wireless System Optimization. IEEE Veh. Technol. Mag. 2019, 14, 60–69.

- Wang, T.; Wen, C.K.; Wang, H.; Gao, F.; Jiang, T.; Jin, S. Deep learning for wireless physical layer: Opportunities and challenges. China Commun. 2017, 14, 92–111.

- Qin, Z.; Ye, H.; Li, G.Y.; Juang, B.H.F. Deep Learning in Physical Layer Communications. IEEE Wirel. Commun. 2019, 26, 93–99.

- O’Shea, T.; Hoydis, J. An Introduction to Deep Learning for the Physical Layer. IEEE Trans. Cogn. Commun. Netw. 2017, 3, 563–575.

- O’Shea, T.J.; Karra, K.; Clancy, T.C. Learning to communicate: Channel auto-encoders, domain specific regularizers, and attention. In Proceedings of the 2016 IEEE International Symposium on Signal Processing and Information Technology (ISSPIT), Limassol, Cyprus, 12–14 December 2016; pp. 223–228.

- Balevi, E.; Andrews, J.G. One-Bit OFDM Receivers via Deep Learning. IEEE Trans. Commun. 2019, 67, 4326–4336.

- Mulvey, D.; Foh, C.H.; Imran, M.A.; Tafazolli, R. Improved Neural Network Transparency for Cell Degradation Detection Using Explanatory Model. In Proceedings of the ICC 2020—2020 IEEE International Conference on Communications (ICC), Dublin, Ireland, 7–11 June 2020; pp. 1–6.

- Shlezinger, N.; Eldar, Y.C.; Farsad, N.; Goldsmith, A.J. ViterbiNet: Symbol Detection Using a Deep Learning Based Viterbi Algorithm. In Proceedings of the 2019 IEEE 20th International Workshop on Signal Processing Advances in Wireless Communications (SPAWC), Cannes, France, 2–5 July 2019; pp. 1–5.

- Ye, H.; Li, G.Y.; Juang, B.H. Power of Deep Learning for Channel Estimation and Signal Detection in OFDM Systems. IEEE Wirel. Commun. Lett. 2018, 7, 114–117.

- He, H.; Wen, C.K.; Jin, S.; Li, G.Y. Model-Driven Deep Learning for MIMO Detection. IEEE Trans. Signal Process. 2020, 68, 1702–1715.

- Soltani, M.; Pourahmadi, V.; Mirzaei, A.; Sheikhzadeh, H. Deep Learning-Based Channel Estimation. IEEE Commun. Lett. 2019, 23, 652–655.

- Burse, K.; Yadav, R.N.; Shrivastava, S.C. Channel Equalization Using Neural Networks: A Review. IEEE Trans. Syst. Man Cybern. Part C 2010, 40, 352–357.

- Mao, H.; Lu, H.; Lu, Y.; Zhu, D. RoemNet: Robust Meta Learning Based Channel Estimation in OFDM Systems. In Proceedings of the ICC 2019—2019 IEEE International Conference on Communications (ICC), Shanghai, China, 20–24 May 2019; pp. 1–6.

- Wen, C.K.; Shih, W.T.; Jin, S. Deep Learning for Massive MIMO CSI Feedback. IEEE Wirel. Commun. Lett. 2018, 7, 748–751.

- Yang, Y.; Gao, F.; Li, G.Y.; Jian, M. Deep Learning-Based Downlink Channel Prediction for FDD Massive MIMO System. IEEE Commun. Lett. 2019, 23, 1994–1998.

- Arnold, M.; Dorner, S.; Cammerer, S.; Hoydis, J.; ten Brink, S. Towards Practical FDD Massive MIMO: CSI Extrapolation Driven by Deep Learning and Actual Channel Measurements. In Proceedings of the 2019 53rd Asilomar Conference on Signals, Systems, and Computers, Pacific Grove, CA, USA, 3–6 November 2019; pp. 1972–1976.

- Jiang, W.; Schotten, H.D. Multi-Antenna Fading Channel Prediction Empowered by Artificial Intelligence. In Proceedings of the 2018 IEEE 88th Vehicular Technology Conference (VTC-Fall), Chicago, IL, USA, 27–30 August 2018; pp. 1–6.

- Gruber, T.; Cammerer, S.; Hoydis, J.; Brink, S.t. On deep learning-based channel decoding. In Proceedings of the 2017 51st Annual Conference on Information Sciences and Systems (CISS), Baltimore, MD, USA, 22–24 March 2017; pp. 1–6.

- Cammerer, S.; Gruber, T.; Hoydis, J.; ten Brink, S. Scaling Deep Learning-Based Decoding of Polar Codes via Partitioning. In Proceedings of the GLOBECOM 2017—2017 IEEE Global Communications Conference, Singapore, 4–8 December 2017; pp. 1–6.

- He, Y.; Zhang, J.; Jin, S.; Wen, C.K.; Li, G.Y. Model-Driven DNN Decoder for Turbo Codes: Design, Simulation, and Experimental Results. IEEE Trans. Commun. 2020, 68, 6127–6140.

- Blanquez-Casado, F.; Aguayo Torres, M.d.C.; Gomez, G. Link Adaptation Mechanisms Based on Logistic Regression Modeling. IEEE Commun. Lett. 2019, 23, 942–945.

- Luo, F.L. (Ed.) Machine Learning for Future Wireless Communications; John Wiley & Sons Ltd.: Hoboken, NJ, USA, 2020.

- Liaskos, C.; Nie, S.; Tsioliaridou, A.; Pitsillides, A.; Ioannidis, S.; Akyildiz, I. A New Wireless Communication Paradigm through Software-Controlled Metasurfaces. IEEE Commun. Mag. 2018, 56, 162–169.

- Elbir, A.M.; Papazafeiropoulos, A.; Kourtessis, P.; Chatzinotas, S. Deep Channel Learning for Large Intelligent Surfaces Aided mm-Wave Massive MIMO Systems. IEEE Wirel. Commun. Lett. 2020, 9, 1447–1451.

- Taha, A.; Alrabeiah, M.; Alkhateeb, A. Enabling Large Intelligent Surfaces With Compressive Sensing and Deep Learning. IEEE Access 2021, 9, 44304–44321.

- Thilina, K.M.; Choi, K.W.; Saquib, N.; Hossain, E. Machine Learning Techniques for Cooperative Spectrum Sensing in Cognitive Radio Networks. IEEE J. Sel. Areas Commun. 2013, 31, 2209–2221.

- Lee, W.; Kim, M.; Cho, D.H. Deep Cooperative Sensing: Cooperative Spectrum Sensing Based on Convolutional Neural Networks. IEEE Trans. Veh. Technol. 2019, 68, 3005–3009.

- Wang, Y.; Liu, M.; Yang, J.; Gui, G. Data-Driven Deep Learning for Automatic Modulation Recognition in Cognitive Radios. IEEE Trans. Veh. Technol. 2019, 68, 4074–4077.

- Ahmed, K.I.; Tabassum, H.; Hossain, E. Deep Learning for Radio Resource Allocation in Multi-Cell Networks. IEEE Network 2019, 33, 188–195.

- Ghadimi, E.; Davide Calabrese, F.; Peters, G.; Soldati, P. A reinforcement learning approach to power control and rate adaptation in cellular networks. In Proceedings of the 2017 IEEE International Conference on Communications (ICC), Paris, France, 21–25 May 2017; pp. 1–7.

- Sun, H.; Chen, X.; Shi, Q.; Hong, M.; Fu, X.; Sidiropoulos, N.D. Learning to Optimize: Training Deep Neural Networks for Interference Management. IEEE Trans. Signal Process. 2018, 66, 5438–5453.

- Challita, U.; Dong, L.; Saad, W. Proactive Resource Management for LTE in Unlicensed Spectrum: A Deep Learning Perspective. IEEE Trans. Wirel. Commun. 2018, 17, 4674–4689.

- Bonati, L.; D’Oro, S.; Polese, M.; Basagni, S.; Melodia, T. Intelligence and Learning in O-RAN for Data-driven NextG Cellular Networks. arXiv 2020, arXiv:2012.01263.

- Zhou, P.; Fang, X.; Wang, X.; Long, Y.; He, R.; Han, X. Deep Learning-Based Beam Management and Interference Coordination in Dense mmWave Networks. IEEE Trans. Veh. Technol. 2019, 68, 592–603.

- Li, X.; Ni, R.; Chen, J.; Lyu, Y.; Rong, Z.; Du, R. End-to-End Network Slicing in Radio Access Network, Transport Network and Core Network Domains. IEEE Access 2020, 8, 29525–29537.

- Abbas, K.; Afaq, M.; Ahmed Khan, T.; Rafiq, A.; Song, W.C. Slicing the Core Network and Radio Access Network Domains through Intent-Based Networking for 5G Networks. Electronics 2020, 9, 1710.

- Zappone, A.; Di Renzo, M.; Debbah, M. Wireless Networks Design in the Era of Deep Learning: Model-Based, AI-Based, or Both? IEEE Trans. Commun. 2019, 67, 7331–7376.

- El Hammouti, H.; Ghogho, M.; Raza Zaidi, S.A. A Machine Learning Approach to Predicting Coverage in Random Wireless Networks. In Proceedings of the 2018 IEEE Globecom Workshops (GC Wkshps), Abu Dhabi, United Arab Emirates, 9–13 December 2018; pp. 1–6.

- Mulvey, D.; Foh, C.H.; Imran, M.A.; Tafazolli, R. Cell Fault Management Using Machine Learning Techniques. IEEE Access 2019, 7, 124514–124539.

- GSM Association. An Introduction to Network Slicing; Technical Report; GSMA Head Office: London, UK, 2017.

- Available online: https://www.thefastmode.com/self-organizing-network-son-vendors (accessed on 10 October 2021).

- Kibria, M.G.; Nguyen, K.; Villardi, G.P.; Zhao, O.; Ishizu, K.; Kojima, F. Big Data Analytics, Machine Learning, and Artificial Intelligence in Next-Generation Wireless Networks. IEEE Access 2018, 6, 32328–32338.

- Technical Specification Group Services and System Aspects; Architecture Enhancements for 5G System (5GS) to Support Network Data Analytics Services; 3GPP TS 23.288 Version 16.3.0 Release 16; Technical Report; 3GPP Mobile Competence Centre: Sophia Antipolis, France, March 2021.

- 5G System; Network Data Analytics Services 3GPP TS 29.520 V15.3.0; Technical Report; 3GPP Mobile Competence Centre: Sophia Antipolis, France, April 2019.

- 5G System; Network Data Analytics Services 3GPP TS 29.520 V16.7.0; Technical Report; 3GPP Mobile Competence Centre: Sophia Antipolis, France, March 2021.

- Potluri, R.; Young, K. Analytics Automation for Service Orchestration. In Proceedings of the 2020 International Symposium on Networks, Computers and Communications (ISNCC), Montreal, QC, Canada, 20–22 October 2020; pp. 1–4.

- I, C.L.; Sun, Q.; Liu, Z.; Zhang, S.; Han, S. The Big-Data-Driven Intelligent Wireless Network: Architecture, Use Cases, Solutions, and Future Trends. IEEE Veh. Technol. Mag. 2017, 12, 20–29.

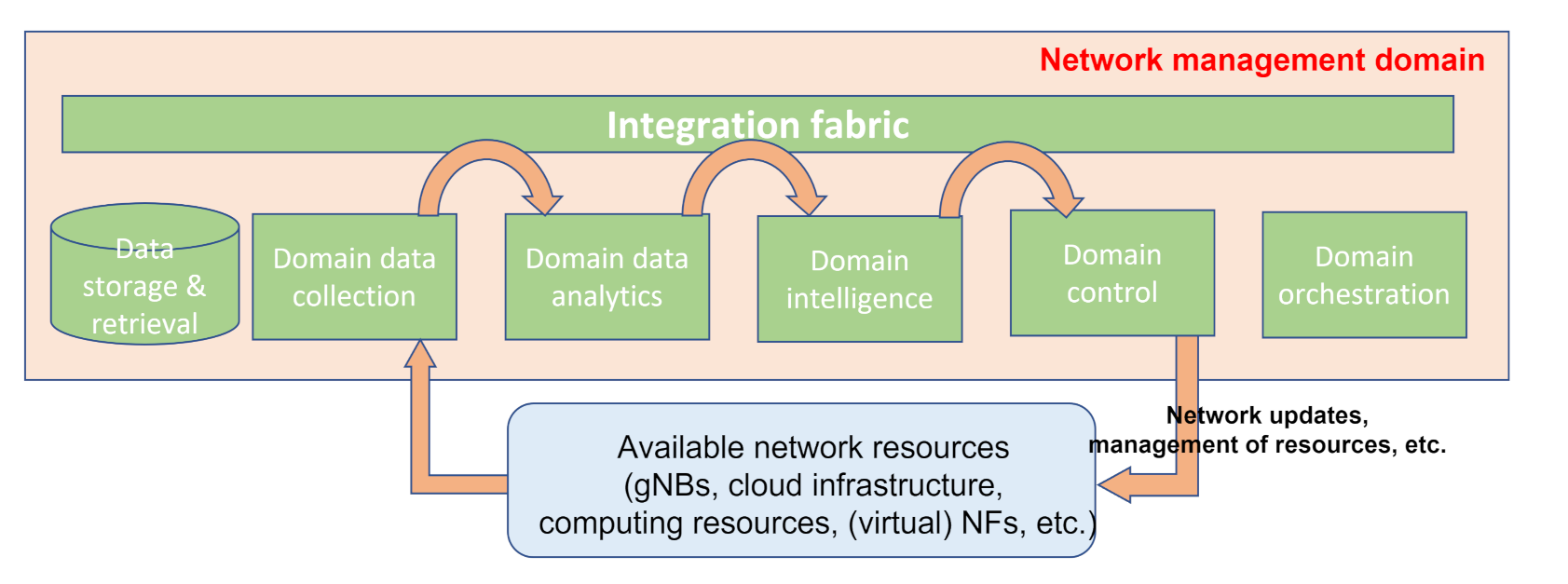

- Pateromichelakis, E.; Moggio, F.; Mannweiler, C.; Arnold, P.; Shariat, M.; Einhaus, M.; Wei, Q.; Bulakci, Ö.; De Domenico, A. End-to-End Data Analytics Framework for 5G Architecture. IEEE Access 2019, 7, 40295–40312.

- Sevgican, S.; Turan, M.; Gokarslan, K.; Yilmaz, H.B.; Tugcu, T. Intelligent network data analytics function in 5G cellular networks using machine learning. J. Commun. Netw. 2020, 22, 269–280.

- Study on Enablers for Network Automation for the 5G System (5GS); Phase 2 (Release 17), 3GPP TR 23.700-91 V17.0.0; Technical Report; 3GPP Mobile Competence Centre: Sophia Antipolis, France, December 2020.

- McMahan, H.B.; Moore, E.; Ramage, D.; Hampson, S.; Agüera y Arcas, B. Communication-Efficient Learning of Deep Networks from Decentralized Data. In Artificial Intelligence and Statistics; AISTATS: Ft. Lauderdale, FL, USA, 2017.

- Thapa, C.; Chamikara, M.; Camtepe, S. SplitFed: When Federated Learning Meets Split Learning. arXiv 2020, arXiv:2004.12088.

- Niknam, S.; Dhillon, H.S.; Reed, J.H. Federated Learning for Wireless Communications: Motivation, Opportunities, and Challenges. IEEE Commun. Mag. 2020, 58, 46–51.

- Jeong, E.; Oh, S.; Kim, H.; Park, J.; Bennis, M.; Kim, S.L. Communication-Efficient On-Device Machine Learning: Federated Distillation and Augmentation under Non-IID Private Data. arXiv 2018, arXiv:1811.11479.

- Chen, M.; Yang, Z.; Saad, W.; Yin, C.; Poor, H.V.; Cui, S. A Joint Learning and Communications Framework for Federated Learning Over Wireless Networks. IEEE Trans. Wirel. Commun. 2021, 20, 269–283.

- Park, J.; Samarakoon, S.; Elgabli, A.; Kim, J.; Bennis, M.; Kim, S.L.; Debbah, M. Communication-Efficient and Distributed Learning Over Wireless Networks: Principles and Applications. Proc. IEEE 2021, 109, 796–819.

- Lim, W.Y.B.; Luong, N.C.; Hoang, D.T.; Jiao, Y.; Liang, Y.C.; Yang, Q.; Niyato, D.; Miao, C. Federated Learning in Mobile Edge Networks: A Comprehensive Survey. IEEE Commun. Surv. Tutor. 2020, 22, 2031–2063.

- Liu, Y.; Yuan, X.; Xiong, Z.; Kang, J.; Wang, X.; Niyato, D. Federated learning for 6G communications: Challenges, methods, and future directions. China Commun. 2020, 17, 105–118.

- ETSI. Artificial Intelligence and Future Directions for ETSI; [ETSI White Paper], No. 32; Technical Report; ETSI 06921 Sophia Antipolis CEDEX: Valbonne, France, June 2020.

- ETSI ENI. Available online: https://www.etsi.org/technologies/experiential-networked-intelligence (accessed on 10 October 2021).

- ENI System Architecture, ETSI GS ENI 005 V1.1.1; Technical Report; ETSI 06921 Sophia Antipolis CEDEX: Valbonne, France, September 2019.

- ENI Use Cases, ETSI GS ENI 001 V3.1.1; Technical Report; ETSI 06921 Sophia Antipolis CEDEX: Valbonne, France, December 2020.

- ETSI. ENI Requirements, ETSI GS ENI 002 V3.1.1; Technical Report; ETSI 06921 Sophia Antipolis CEDEX: Valbonne, France, December 2020.

- ETSI ENI. Available online: https://eniwiki.etsi.org/index.php?title=Ongoing_PoCs (accessed on 10 October 2021).

- ETSI. ENI Vision: Improved Network Experience Using Experiential Networked Intelligence; [ETSI White Paper No. 44]; Technical Report; ETSI 06921 Sophia Antipolis CEDEX: Valbonne, France, March 2021.

- ETSI. Requirements Based on Documented Scenarios ETSI GS ZSM 001 V1.1.1; Technical Report; ETSI 06921 Sophia Antipolis CEDEX: Valbonne, France, October 2019.

- ETSI ZSM. Available online: https://www.etsi.org/technologies/zero-touch-network-service-management (accessed on 10 October 2021).

- ETSI. Zero-Touch Network and Service Management [Introductory White Paper]; Technical Report; ETSI 06921 Sophia Antipolis CEDEX: Valbonne, France, 2017.

- ETSI. ZSM Reference Architecture ETSI GS ZSM 002 V1.1.1; Technical Report; ETSI 06921 Sophia Antipolis CEDEX: Valbonne, France, August 2019.

- ITU-T. Available online: https://www.itu.int/en/ITU-T/focusgroups/ml5g/Pages/default.aspx (accessed on 10 October 2021).

- [ITU-T Y.3170]. Use Cases for Machine Learning in Future Networks Including IMT-2020, Supplement 55; Technical Report; Telecommunication Standardization Sector of ITU: Geneva, Switzerland, October 2019.

- [ITU-T Y.3172]. Architectural Framework for Machine Learning in Future Networks including IMT-2020; Technical Report; Telecommunication Standardization Sector of ITU: Geneva, Switzerland, June 2019.

- Wilhelmi, F.; Barrachina-Munoz, S.; Bellalta, B.; Cano, C.; Jonsson, A.; Ram, V. A Flexible Machine-Learning-Aware Architecture for Future WLANs. IEEE Commun. Mag. 2020, 58, 25–31.

- Wilhelmi, F.; Carrascosa, M.; Cano, C.; Jonsson, A.; Ram, V.; Bellalta, B. Usage of Network Simulators in Machine-Learning-Assisted 5G/6G Networks. IEEE Wirel. Commun. 2021, 28, 160–166.

- [ITU-T Y.3173]. Framework for Evaluating Intelligence Levels of Future Networks Including IMT-2020; Technical Report; Telecommunication Standardization Sector of ITU: Geneva, Switzerland, 2020.

- ACUMOS. Available online: https://www.acumos.org/ (accessed on 10 October 2021).

- ITU Challenge. Available online: https://www.itu.int/en/ITU-T/AI/challenge/2020/Pages/PROGRAMME.aspx (accessed on 10 October 2021).

- ITU-T. Available online: https://www.itu.int/en/ITU-T/focusgroups/an/Pages/default.aspx (accessed on 10 October 2021).

- O-RAN: Towards an Open and Smart RAN, [White Paper]; Technical Report; O-RAN Alliance: Alfter, Germany, October 2018.

- TIP. Available online: https://telecominfraproject.com/ (accessed on 10 October 2021).

- Masur, P.; Reed, J. Artificial Intelligence in Open Radio Access Network. arXiv 2021, arXiv:2104.09445.

- O-RAN Use Cases and Deployment Scenarios, [White Paper]; Technical Report; O-RAN Alliance: Alfter, Germany, February 2020.

- Niknam, S.; Roy, A.; Dhillon, H.S.; Singh, S.; Banerji, R.; Reed, J.H.; Saxena, N.; Yoon, S. Intelligent O-RAN for Beyond 5G and 6G Wireless Networks. arXiv 2020, arXiv:2005.08374.

- Open RAN Integration: Run with It, [White Paper]; Technical Report; O-RAN Alliance: Alfter, Germany, 2021.

- Warden, P.; Situnayake, D. Tiny ML. Machine Learning with TensorFlow Lite on Arduino and Ultra-Low-Power Microcontrollers; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2019.

- Branco, S.; Ferreira, A.G.; Cabral, J. Machine Learning in Resource-Scarce Embedded Systems, FPGAs, and End-Devices: A Survey. Electronics 2019, 8, 1289.

- Qualcomm. Available online: https://rebootingcomputing.ieee.org/images/files/pdf/iccv-2019_edwin-park.pdf (accessed on 10 October 2021).

- Lin, J.; Chen, W.M.; Lin, Y.; Cohn, J.; Gan, C.; Han, S. MCUNet: Tiny Deep Learning on IoT Devices. arXiv 2020, arXiv:2007.10319.

- David, R.; Duke, J.; Jain, A.; Janapa Reddi, V.; et al. TensorFlow Lite Micro: Embedded Machine Learning for TinyML Systems. In Proceedings of Machine Learning and Systems; Smola, A., Dimakis, A., Stoica, I., Eds.; Systems and Machine Learning Foundation: Indio, CA, USA, 2021; Volume 3, pp. 800–811.

- Lin, J.; Chen, W.M.; Lin, Y.; Cohn, J.; Gan, C.; Han, S. Benchmarking TinyML Systems: Challenges and Direction. arXiv 2020, arXiv:2007.10319.

- Tiny ML. Available online: https://www.tinyml.org/ (accessed on 10 October 2021).

- The 5G Infrastructure Association. European Vision for the 6G Wireless Ecosystem [White Paper]; Technical Report; The 5G Infrastructure Association: Heidelberg, Germany, 2021.

- Kaloxylos, A.; Gavras, A.; Camps Mur, D.; Ghoraishi, M.; Hrasnica, H. AI and ML—Enablers for Beyond 5G Networks. Zenodo 2020.

- 5G PPP Partnership. Phase 3 [Brochure]; Technical Report; The 5G Infrastructure Public Private Partnership: Heidelberg, Germany, 2021.

- Windmill. Available online: https://windmill-itn.eu/research/ (accessed on 10 October 2021).

- Ariadne Vision and System Concept, [Newsletter]; Technical Report; The 5G Infrastructure Public Private Partnership: Heidelberg, Germany, January 2021.

- Ariadne Deliverable 1.1: ARIADNE Use Case Definition and System Requirements; Technical Report; The 5G Infrastructure Public Private Partnership: Heidelberg, Germany, January 2021.

- Ariadne Deliverable 2.1: Initial Results in D-Band Directional Links Analysis, System Performance Assessment, and Algorithm Design; Technical Report; The 5G Infrastructure Public Private Partnership: Heidelberg, Germany, January 2021.

- 5Genesis Deliverable 2.1: Requirements of the Facility; Technical Report; The 5G Infrastructure Public Private Partnership: Heidelberg, Germany, January 2021.

- 5Growth Deliverable 1.1: Business Model Design; Technical Report; The 5G Infrastructure Public Private Partnership: Heidelberg, Germany, January 2021.

- Study on Management and Orchestration of Network Slicing for Next Generation Network; (Release 15), 3GPP TR 28.801 V15.1.0; Technical Report; 3GPP Mobile Competence Centre: Sophia Antipolis, France, January 2018.

- 5Growth Deliverable 2.1: Initial Design of 5G End-to-End Service Platform; Technical Report; The 5G Infrastructure Public Private Partnership: Heidelberg, Germany, January 2021.

- 5G-Carmen Deliverable 2.1: 5G-Carmen Use Cases and Requirements; Technical Report; The 5G Infrastructure Public Private Partnership: Heidelberg, Germany, January 2021.

- 5G-Carmen Deliverable 5.1: 5G-Carmen Pilot Plan; Technical Report; The 5G Infrastructure Public Private Partnership: Heidelberg, Germany, January 2021.

- Hexa-X Deliverable 1.2: Expanded 6G Vision, Use Cases and Societal Values Including Aspects of Sustainability, Security and Spectrum; Technical Report; 2021. Available online: https://hexa-x.eu/ (accessed on 10 October 2021).

- EPSRC. Available online: https://epsrc.ukri.org/research/ourportfolio/researchareas/ait/ (accessed on 10 October 2021).

- Deep Learning Based Solutions for the Physical Layer of Machine Type Communications. Available online: https://gow.epsrc.ukri.org/NGBOViewGrant.aspx?GrantRef=EP/S028455/1 (accessed on 10 October 2021).

- Haykin, S. Cognitive radio: Brain-empowered wireless communications. IEEE J. Sel. Areas Commun. 2005, 23, 201–220.

- Secure Wireless Agile Networks. Available online: https://gow.epsrc.ukri.org/NGBOViewGrant.aspx?GrantRef=EP/T005572/1 (accessed on 10 October 2021).

- Communication Aware Dynamic Edge Computing. Available online: https://www.chistera.eu/projects/connect (accessed on 10 October 2021).

- Transforming Networks—Building an Intelligent Optical Infrastructure. Available online: https://gow.epsrc.ukri.org/NGBOViewGrant.aspx?GrantRef=EP/R035342/1 (accessed on 10 October 2021).

- The Mathematics of Deep Learning. Available online: https://gow.epsrc.ukri.org/NGBOViewGrant.aspx?GrantRef=EP/V026259/1 (accessed on 10 October 2021).

- Graph Neural Networks for Explainable Artificial Intelligence. Available online: https://www.chistera.eu/projects/graphnex (accessed on 10 October 2021).

- Partnership between Intel and the National Science Foundation. Available online: https://www.nsf.gov/pubs/2019/nsf19591/nsf19591.htm (accessed on 10 October 2021).

- Partnership between Intel and the National Science Foundation. Available online: https://newsroom.intel.com/wp-content/uploads/sites/11/2020/06/MLWiNS-Fact-Sheet.pdf (accessed on 10 October 2021).

- NSF New Partnership with Private Industries on Intelligent and Resilient Next-Generation Systems. Available online: https://www.nsf.gov/funding/pgm_summ.jsp?pims_id=505904 (accessed on 10 October 2021).

- NSF New Partnership with Private Industries on Intelligent and Resilient Next-Generation Systems. Available online: https://www.nsf.gov/attachments/302634/public/RINGSv6-2.pdf (accessed on 10 October 2021).

- Accelercomm. Available online: https://www.accelercomm.com/news/193m-savings-with-improvements-in-5g-radio-signal-processing (accessed on 10 October 2021).

| Network Functions | Examples of Key Research Studies |
|---|---|
| Symbol detection | DNN-based receiver design for joint channel equalisation and symbol detection in OFDM systems [27]. DNN-based MIMO detector combining deep unfolding with linear MMSE channel equalisation [28]. LSTM-based learning of the log-likelihood ratios (LLRs) in Viterbi decoding [26]. |
| Channel estimation | Estimating the channel state information (CSI) under time- and frequency-selective fading using DNNs [Yang2019] and convolutional neural networks (CNNs) [29]. Adaptive channel equalisation using recurrent neural networks (RNNs) [30]. Channel estimation using meta-learning [31]. Reducing the CSI feedback overhead in FDD massive MIMO systems by compressing the fed-back CSI with autoencoders [32]. |
| Channel prediction | DNN-based prediction of the downlink CSI from the measured uplink CSI in FDD MIMO systems [33,34]. RNN-based downlink channel prediction exploiting correlations in space and time to mitigate outdated CSI [35]. |
| Channel coding | DNN-based decoding of short polar codes with rate ½ and block length N = 16 [36]. DNN-based decoding of polar codes with block length N = 128 that leverages the structure of the belief propagation decoding algorithm [37]. Integrating supervised learning for estimating the extrinsic LLRs into the max-log-MAP turbo decoder [38]. |
| Link adaptation | Deep reinforcement learning (DRL)-based selection of the modulation and coding scheme (MCS) using the measured SNR as the environmental state and the experienced throughput as the reward [39]. Supervised learning techniques for adaptive modulation and coding (AMC), including k-nearest-neighbours and support vector machines (SVMs) [40]. |
| Reflecting intelligent surfaces (RIS) | DNN-based design and control of phase shifters [41]. Using supervised learning to estimate the direct and the cascaded (base station to RIS and RIS to user) channels in RIS-based communication [42]. Combining compressive sensing with deep learning (DL) to reduce the training overhead, caused by the large number of reflecting elements, in the design of RIS phase shifts [43]. |
| Spectrum sensing | Unsupervised (k-means, Gaussian mixture models) and supervised (k-nearest-neighbours and SVMs) learning methods for binary spectrum sensing [44]. CNN-based multi-band cooperative binary spectrum sensing leveraging spatial and spectral correlations [45]. Image-based automatic modulation identification using the received constellation diagrams, signal distributions and spectrograms [46]. |
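The link-adaptation row frames MCS selection as a learning problem with the measured SNR as the state and the experienced throughput as the reward [39]. A minimal sketch of that loop follows. It is not the method of [39]: it swaps the deep network for a lookup table, uses a one-step sample-average value update, and assumes a hypothetical five-entry MCS table with illustrative SNR thresholds and a 1 dB standard deviation between the measured and the actual SNR. All names and numbers are illustrative assumptions.

```python
import numpy as np

# Hypothetical MCS table (illustrative values, not taken from [39]): spectral
# efficiency in bits/s/Hz and the SNR in dB above which each MCS is assumed to decode.
MCS_EFFICIENCY = np.array([0.5, 1.0, 2.0, 3.0, 4.5])
MCS_THRESHOLD_DB = np.array([0.0, 5.0, 10.0, 15.0, 20.0])
SNR_BIN_EDGES_DB = np.arange(0.0, 30.0, 5.0)  # states: [0,5), [5,10), ..., [25,30) dB


def snr_to_state(snr_db):
    """Quantise the measured SNR into one of the discrete environment states."""
    idx = np.digitize(snr_db, SNR_BIN_EDGES_DB) - 1
    return int(np.clip(idx, 0, len(SNR_BIN_EDGES_DB) - 1))


def train_mcs_selector(num_slots=20000, epsilon=0.1, seed=0):
    """Epsilon-greedy tabular value learning.

    State = measured SNR bin, action = MCS index,
    reward = throughput actually experienced in the slot (0 if decoding fails).
    """
    rng = np.random.default_rng(seed)
    n_states, n_actions = len(SNR_BIN_EDGES_DB), len(MCS_EFFICIENCY)
    q = np.zeros((n_states, n_actions))       # estimated mean throughput per (state, MCS)
    visits = np.zeros((n_states, n_actions))  # how often each (state, MCS) was tried
    for _ in range(num_slots):
        measured_snr_db = rng.uniform(0.0, 30.0)
        s = snr_to_state(measured_snr_db)
        if rng.random() < epsilon:
            a = int(rng.integers(n_actions))  # explore: random MCS
        else:
            a = int(np.argmax(q[s]))          # exploit: best MCS estimated so far
        # Assumed gap between the measured SNR and the SNR seen during transmission.
        actual_snr_db = measured_snr_db + rng.normal(0.0, 1.0)
        reward = MCS_EFFICIENCY[a] if actual_snr_db >= MCS_THRESHOLD_DB[a] else 0.0
        visits[s, a] += 1
        q[s, a] += (reward - q[s, a]) / visits[s, a]  # incremental sample average
    return q


q = train_mcs_selector()
policy = q.argmax(axis=1)  # chosen MCS index for each SNR bin
print(policy)
```

Because the reward in one slot does not depend on earlier choices in this toy setting, no look-ahead is needed; the learned policy steps up from the most robust MCS in the lowest SNR bin to the highest-rate MCS in the top bins.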

| Operational Timescales | Network Functions | Examples of Key Research Studies |
|---|---|---|
| Near-real-time RIC | Resource allocation | DL for joint subcarrier allocation and power control in the downlink of multi-cell networks [47]. Joint downlink power control and bandwidth allocation in multi-cell networks using NNs and Q-learning [48]. DNNs for interference-limited power control that maximises the sum-rate under a power budget constraint at the base station [49]. Predictive models of spectrum availability for distributed, proactive dynamic channel allocation and carrier aggregation that maximise the throughput of LTE small cells operating in unlicensed spectrum bands [50]. A DRL agent that learns to select the best scheduling policy, including water-filling, round-robin, proportional-fair or max-min, for each base station and RAN slice [51]. |
| | Interference management | Centralised joint beam management and inter-cell interference coordination in dense mm-wave networks using DNNs [52]. |
| Non-real-time RIC | E2E network slicing | Predicting the capacity of RAN slices and the congestion of network slices using NNs, and optimally combining them to sustain the quality of experience (QoE) [53]. An AI/ML module that predicts the states of network resources at runtime and autonomously stitches together RAN and core slices to satisfy all user intents [54]. |
| | Network dimensioning and planning: SINR-based coverage evaluation using stochastic geometry | Deep transfer learning for selecting the downlink transmit power level that optimises energy efficiency in cellular networks given the base station density [55]. NN-based prediction of the coverage probability given the base station density, the propagation pathloss and the shadowing correlation model [56]. |
| | Network maintenance: fault detection and compensation | Supervised learning method to detect performance degradation and identify the root cause of the fault [57]. |
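The scheduling policies listed for [51] are classical algorithms. Water-filling, for example, can be sketched as the textbook power allocation over parallel channels, $p_i = \max(0, \mu - N_i/g_i)$ with $\sum_i p_i = P$. The noise-to-gain inputs, the power budget and the function names below are illustrative assumptions; [51] may apply water-filling to a different resource or objective.

```python
import numpy as np


def water_filling(noise_to_gain, total_power):
    """Textbook water-filling: p_i = max(0, mu - N_i/g_i) with sum(p_i) = total_power.

    noise_to_gain: per-channel noise-to-gain ratios N_i / g_i (the "floor" of each channel).
    Returns the per-channel powers and the water level mu.
    """
    levels = np.asarray(noise_to_gain, dtype=float)
    sorted_levels = np.sort(levels)
    # Try activating the k best channels, from all channels down to one, and keep
    # the largest k whose water level lies strictly above the k-th best floor.
    for k in range(len(sorted_levels), 0, -1):
        mu = (total_power + sorted_levels[:k].sum()) / k
        if mu > sorted_levels[k - 1]:
            break
    powers = np.maximum(mu - levels, 0.0)
    return powers, mu


def sum_rate(powers, noise_to_gain):
    """Sum of Shannon rates log2(1 + p_i * g_i / N_i) in bits/s/Hz."""
    return float(np.sum(np.log2(1.0 + np.asarray(powers) / np.asarray(noise_to_gain))))


powers, mu = water_filling([1.0, 2.0, 4.0], total_power=3.0)
print(powers, mu)  # [2. 1. 0.] 3.0
```

Channels whose floor lies above the water level (here the third one) receive no power at all.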

| Categories | Use Cases |
|---|---|
| Network slice and other network service-related use cases | |
| User-plane-related use cases | |
| Application-related use cases | |
| Signalling- or management-related use cases | |
| Security-related use cases | |

| Use Cases | Key Benefits and Enablers |

|---|---|

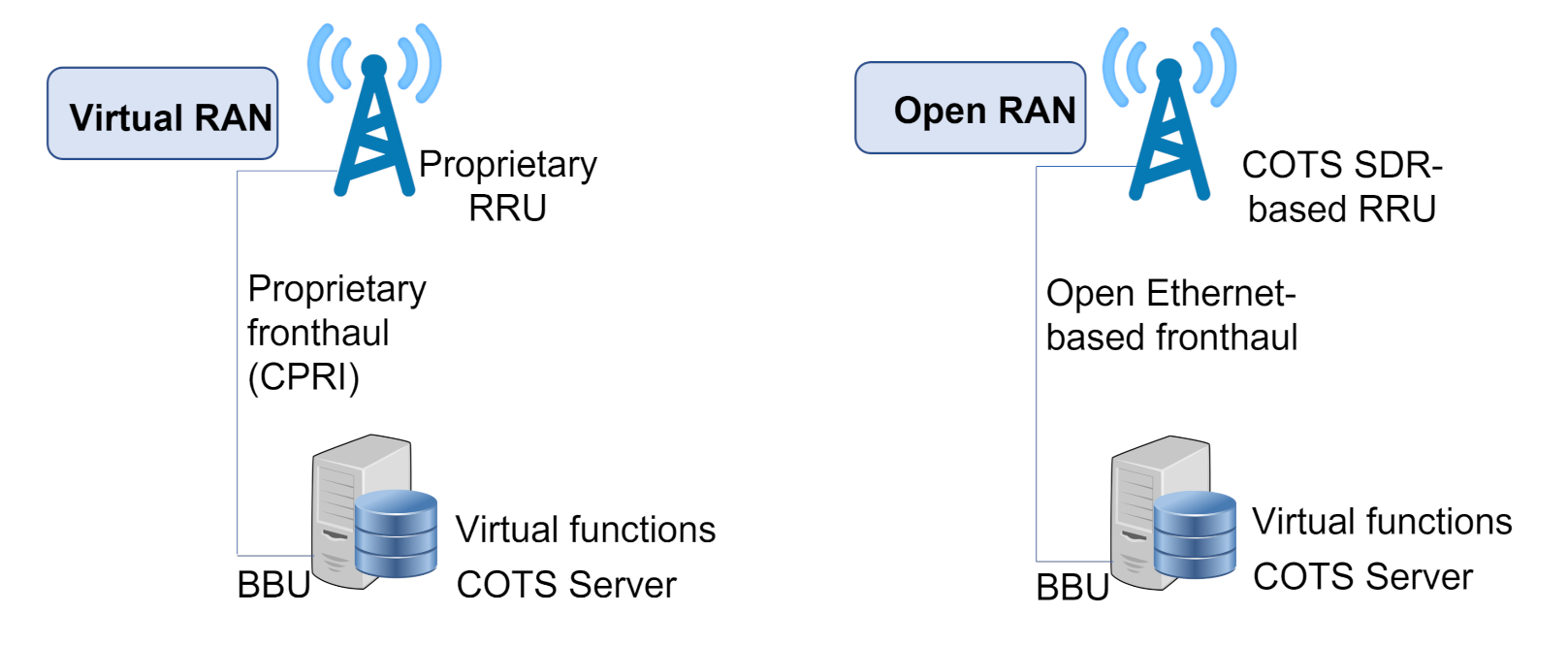

| Low-cost RAN White-box Hardware | COTS hardware reduces CapEx. It is easier to upgrade and inter-operable with network functions developed by different vendors. |

| Traffic Steering | Faster response to data traffic variations using AI/ML-based proactive load-balancing yielding reduced OpEx, better network efficiency and user experience. |

| QoE Optimisation | Prediction of degraded QoE for a UE using AI/ML and proactive allocation of radio resources to the UE. |

| QoS-based Resource Optimisation | AI/ML-based allocation of radio resources to ensure that at least certain prioritised users maintain their QoS under data traffic congestion. |

| Massive MIMO Optimisation | Adapting beam configuration and related policies, e.g., packet scheduling, for enhancing the network capacity. |

| RAN slice SLA assurance | Maximise revenue with AI/ML-based management of network slices. |

| Context-based dynamic handover management for vehicle-to-everything (V2X) | AI/ML-based handovers using historical road traffic and navigation data resulting to better user-experience. |

| Dynamic resource allocation based on the flight-path for unmanned aerial vehicles (UAV) | AI/ML-based resource allocation using historical flight data and UAV measurement reports. |

| Radio resource allocation for UAV applications | AI/ML-based resource allocation under asymmetric uplink/downlink data traffic. |

| RAN sharing | Reduced CapEx due to multi-vendor deployments. |

| Use Cases | Scenarios | Mobility |
|---|---|---|
| Outdoor backhaul and fronthaul networks of fixed topology | Long-range line-of-sight (LoS) rooftop point-to-point backhauling without RIS | Stationary |
| | Street-level point-to-point and point-to-multipoint backhauling and fronthauling with RIS for non-LoS (NLoS) | Stationary |
| Advanced NLoS connectivity based on meta-surfaces | Indoor advanced NLoS connectivity based on meta-surfaces | Stationary or low |
| | Data-kiosk communication for downloading large amounts of data in a short time | Stationary, low or moderate |
| Ad hoc connectivity in a moving network topology | Dynamic fronthaul and backhaul connectivity for mobile 5G access nodes and repeaters, e.g., using drones | Low or moderate |
| | LoS vehicle-to-vehicle (V2V) and vehicle-to-everything (V2X) connectivity | Low or moderate |

| Use Cases | Requirements Ticked (among Latency, Coverage, Service Creation Time, Capacity, Availability, Reliability, User Density) | Service Types |
|---|---|---|
| Big event | ✓ ✓ ✓ | eMBB |
| Eye in the sky | ✓ ✓ ✓ | eMBB, uRLLC |
| Security as a service at the edge | ✓ ✓ | All |
| Wireless video in large scale event | ✓ ✓ ✓ | eMBB |
| Multimedia mission critical services | ✓ ✓ ✓ ✓ | eMBB, uRLLC |
| MEC-based mission critical services | ✓ ✓ ✓ ✓ | eMBB |
| Maritime communications | ✓ ✓ ✓ ✓ | eMBB |
| Capacity on demand and rural IoT | ✓ ✓ ✓ | eMBB, mMTC |
| Massive IoT for large-scale public events | ✓ ✓ ✓ ✓ | mMTC |
| Dense urban 360 degrees virtual reality | ✓ ✓ ✓ ✓ | eMBB |

| Project Name | Target Areas Using AI/ML |
|---|---|
| 6G Brains | Implements a self-learning agent based on deep reinforcement learning that performs intelligent and dynamic resource allocation for future industrial IoT at massive scale. |
| AI@Edge | Designs reusable, trustworthy and secure AI solutions at the network edge for autonomous decision-making and E2E quality assurance. The targeted use cases are AI-based smart content pre-selection for in-flight infotainment, AI-assisted edge computing for infrastructure monitoring using drones, and AI for intrusion detection in industrial IoT. |
| Daemon | Implements AI/ML algorithms for real-time network control and for intelligent orchestration and management. Specifically, the project investigates the use of the real-time RIC for embedding intelligence in RIS, radio resource allocation, distribution of computational resources at the edge/fog, and backhaul traffic control. At longer timescales, it examines energy-aware network slicing, capacity forecasting, anomaly detection and self-learning network orchestration. |
| Dedicat 6G | Aspires to demonstrate distributed network intelligence for dynamic coverage extension, indoor positioning, data caching, and energy-efficient distribution of computational loads across the network. Representative use cases demonstrating the developed solutions include smart warehousing, augmented and virtual reality applications, public safety and disaster relief using automated guided vehicles and drones, and connected autonomous mobility. |
| Hexa-X | Applies AI to network orchestration from the end devices through the edge to the cloud and the core network. This includes inter-connecting intelligent agents based on federated learning, proactive network slice management, instantiation of network functions, zero-touch automation, explainable AI, intelligent spectrum usage and intelligent air interface design, to name a few. |
| Marsal | Integrates blockchain technology with ML-based mechanisms to foster privacy and security in multi-tenant network slicing scenarios. It also studies ML-based orchestration and management of radio and computational resources. |
| Reindeer | Develops an experimental testbed for the RadioWeaves technology. RadioWeaves leverages the ideas of RIS and cell-free wireless access to offer zero-latency and high-capacity connectivity in short-range indoor applications such as immersive entertainment, health care, and smart factories. Intelligence is distributed near the end devices for efficient use of spectral, energy and computational resources. |
| Rise-6G | Designs and prototypes intelligent control of radio-wave propagation using RIS. |
| Teraflow | Implements a novel SDN controller with a cloud-native architecture, AI-based security, and zero-touch automation features. The demonstrated use cases are autonomous networks beyond 5G, cybersecurity and automotive. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Koufos, K.; El Haloui, K.; Dianati, M.; Higgins, M.; Elmirghani, J.; Imran, M.A.; Tafazolli, R. Trends in Intelligent Communication Systems: Review of Standards, Major Research Projects, and Identification of Research Gaps. J. Sens. Actuator Netw. 2021, 10, 60. https://doi.org/10.3390/jsan10040060
