Enhanced Detection of Algal Leaf Spot, Tea Brown Blight, and Tea Grey Blight Diseases Using YOLOv5 Bi-HIC Model with Instance and Context Information

Abstract

1. Introduction

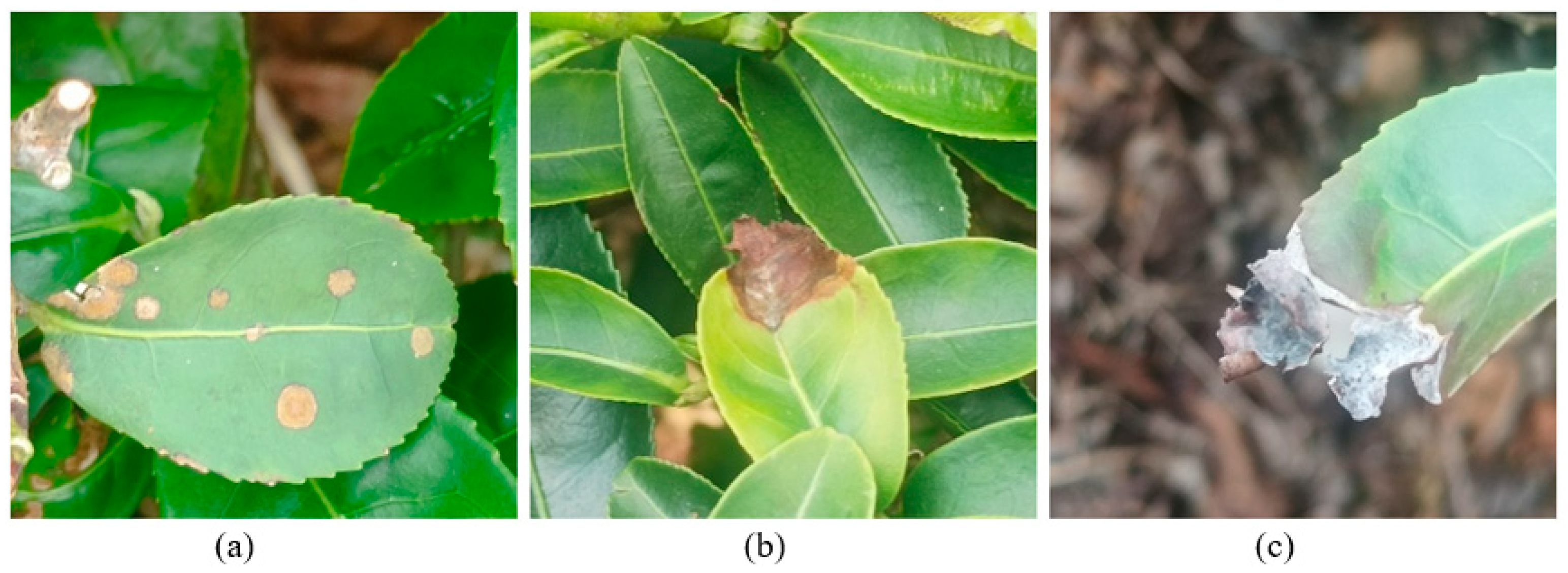

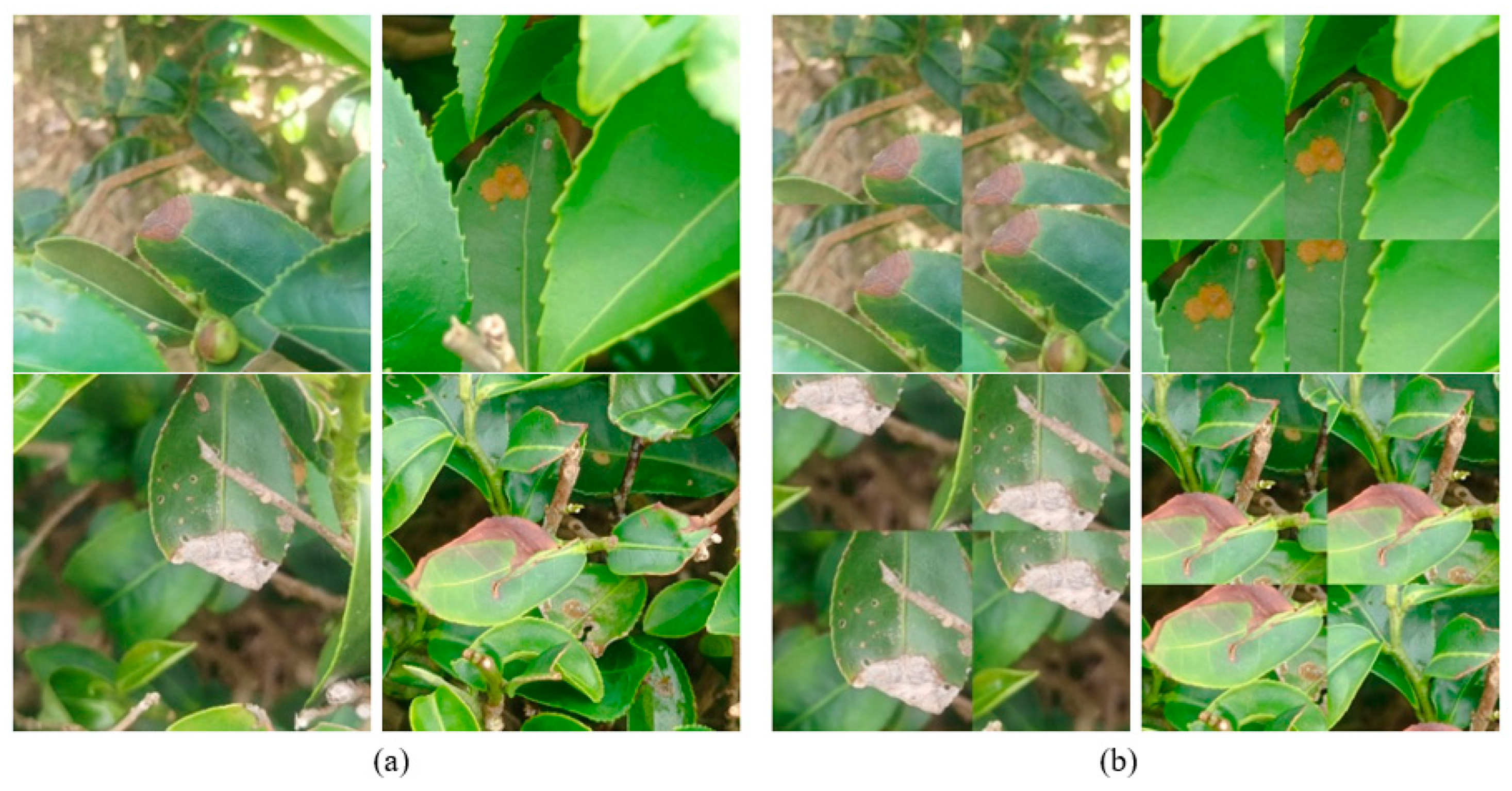

2. Data Acquisition

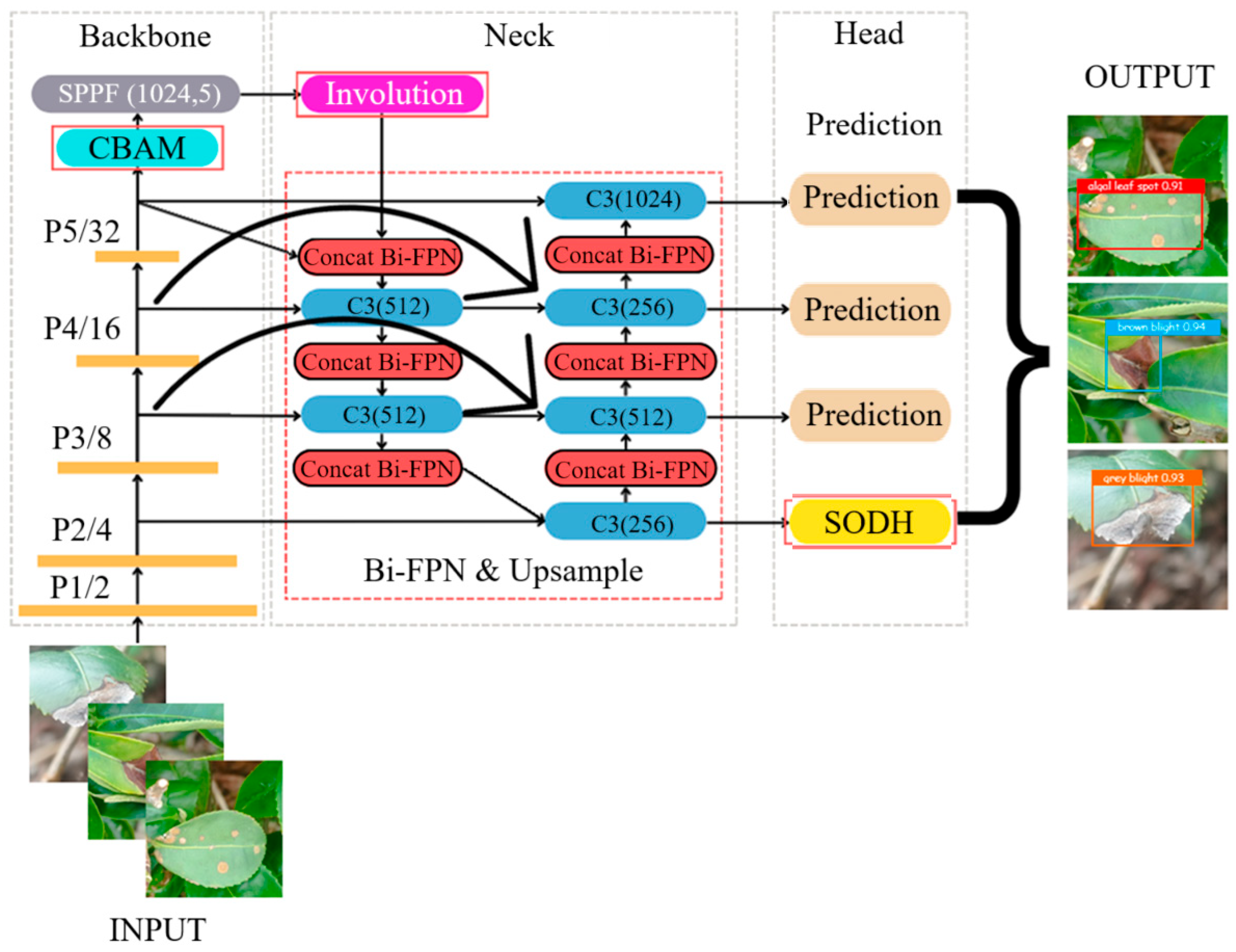

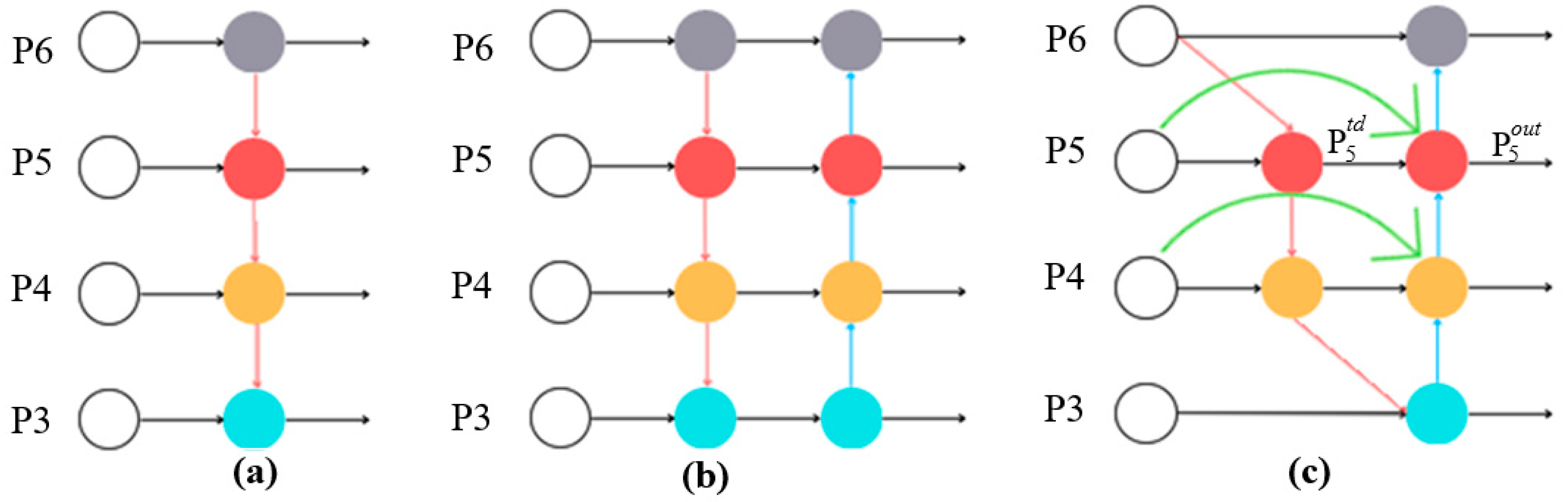

3. Customized YOLOv5 Bi-HIC Model for Tea Leaf Disease Detection

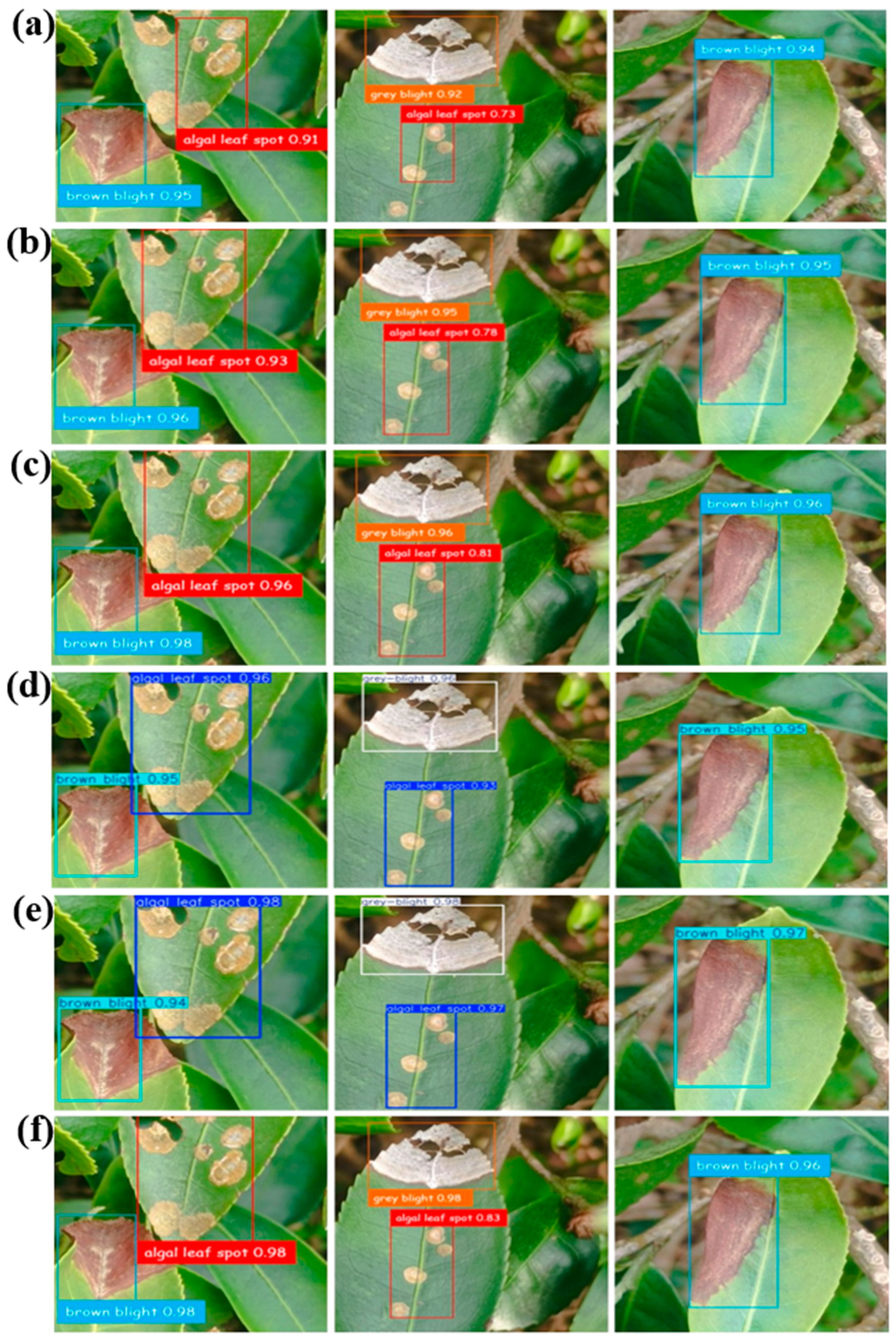

4. Results and Discussion

4.1. Evaluation Metric

4.2. Experimental Setup and Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Diseases | Training Set | Test Set |
|---|---|---|
| Algal leaf spot | 839 | 94 |
| Tea brown blight | 803 | 90 |
| Tea grey blight | 806 | 91 |
| Total | 2448 | 275 |
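
The totals and the train/test proportions in the table above can be checked with a few lines of arithmetic. The following is a minimal sketch, not the authors' code: the dictionary `counts` and the helper `split_summary` are hypothetical names introduced here, and the numbers are copied directly from the table.

```python
# Image counts per class, copied from the table above: (training, test).
counts = {
    "Algal leaf spot": (839, 94),
    "Tea brown blight": (803, 90),
    "Tea grey blight": (806, 91),
}


def split_summary(counts):
    """Return total train/test counts and the training fraction per class."""
    total_train = sum(train for train, _ in counts.values())
    total_test = sum(test for _, test in counts.values())
    train_fraction = {
        name: train / (train + test) for name, (train, test) in counts.items()
    }
    return total_train, total_test, train_fraction


total_train, total_test, train_fraction = split_summary(counts)
print(total_train, total_test)
for name, frac in train_fraction.items():
    print(f"{name}: {frac:.3f} of images used for training")
```

Under these counts, each class is divided in roughly a 90:10 ratio between the training and test sets, and the per-class rows sum to the reported totals.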

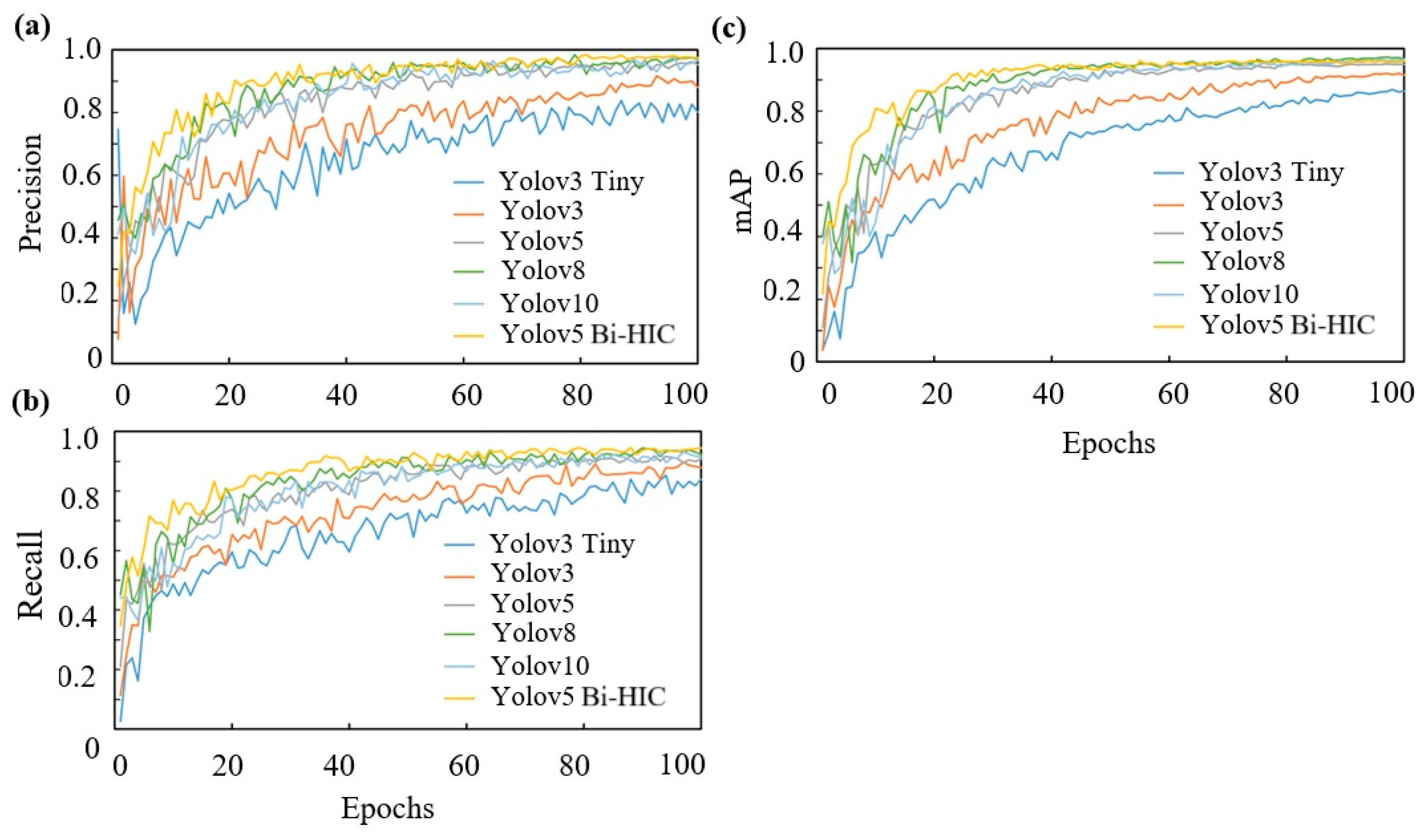

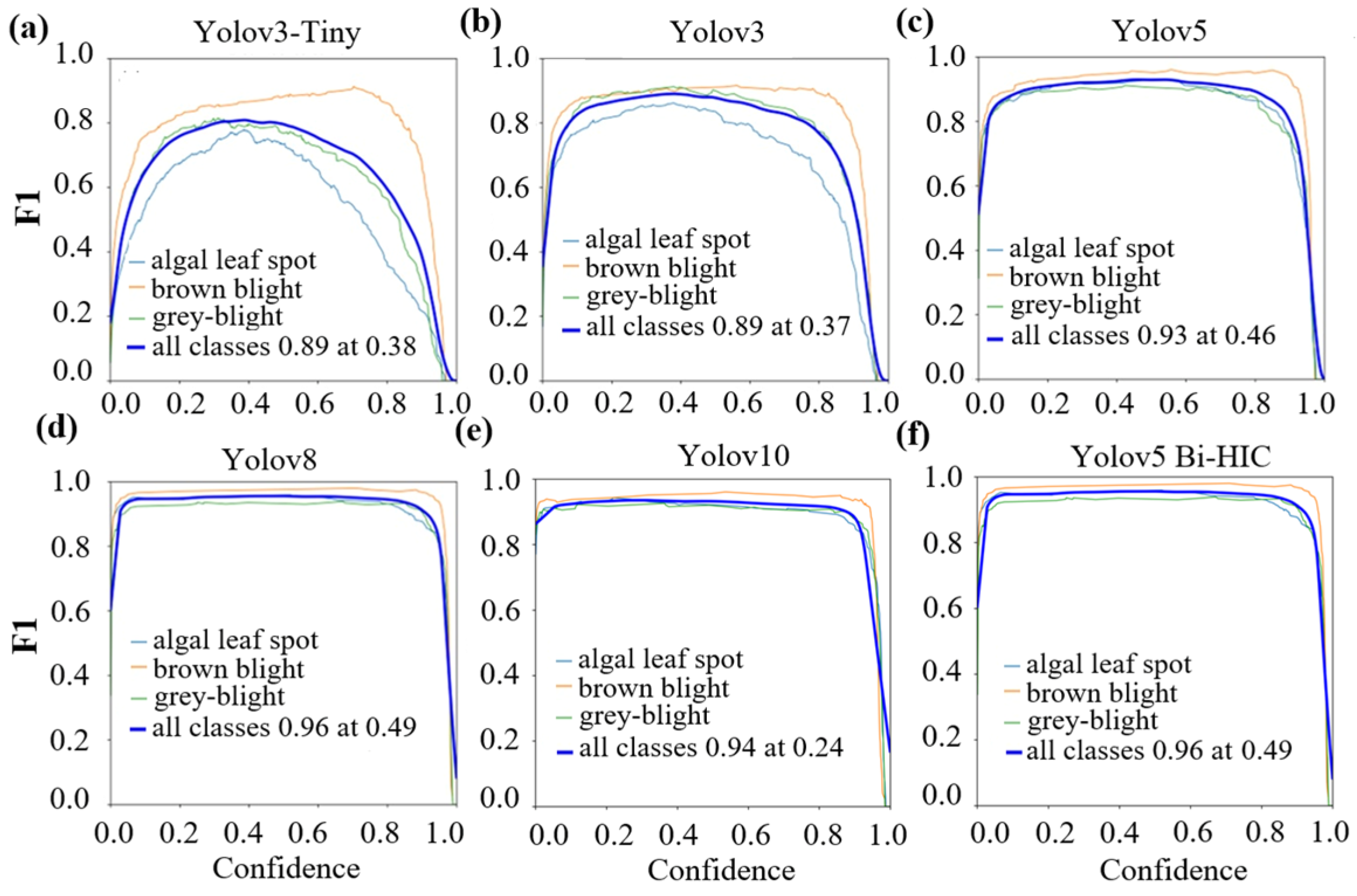

| Model | Precision (P) | Recall (R) | mAP | F1 Score |
|---|---|---|---|---|
| YOLOv3 Tiny | 0.779 | 0.831 | 0.869 | 0.81 |
| YOLOv3 | 0.896 | 0.886 | 0.916 | 0.89 |
| YOLOv5 | 0.951 | 0.909 | 0.950 | 0.93 |
| YOLOv8 | 0.960 | 0.930 | 0.967 | 0.94 |
| YOLOv10 | 0.955 | 0.917 | 0.963 | 0.94 |
| YOLOv5 Bi-HIC | 0.977 | 0.943 | 0.968 | 0.96 |
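
As a rough consistency check on the table above, the F1 column can be recomputed from the precision and recall columns. The sketch below assumes the standard definition of F1 as the harmonic mean of precision and recall, F1 = 2PR / (P + R); how the authors actually derived their F1 values is not stated in this excerpt, so small differences from the reported figures are possible. The names `results` and `f1_score` are hypothetical and introduced only for illustration.

```python
# (precision, recall, reported F1) per model, copied from the table above.
results = {
    "YOLOv3 Tiny": (0.779, 0.831, 0.81),
    "YOLOv3": (0.896, 0.886, 0.89),
    "YOLOv5": (0.951, 0.909, 0.93),
    "YOLOv8": (0.960, 0.930, 0.94),
    "YOLOv10": (0.955, 0.917, 0.94),
    "YOLOv5 Bi-HIC": (0.977, 0.943, 0.96),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall (assumed standard definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Recompute F1 for every model and print it next to the reported value.
recomputed = {name: f1_score(p, r) for name, (p, r, _) in results.items()}
for name, (_, _, reported) in results.items():
    print(f"{name}: recomputed F1 = {recomputed[name]:.3f}, reported = {reported}")
```

With this definition, the recomputed values stay within 0.01 of the reported F1 scores for every model, and YOLOv5 Bi-HIC has the highest recomputed F1 of the six.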

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Phan, Q.-H.; Setyawan, B.; Duong, T.-P.; Tsai, F.-T. Enhanced Detection of Algal Leaf Spot, Tea Brown Blight, and Tea Grey Blight Diseases Using YOLOv5 Bi-HIC Model with Instance and Context Information. Plants 2025, 14, 3219. https://doi.org/10.3390/plants14203219