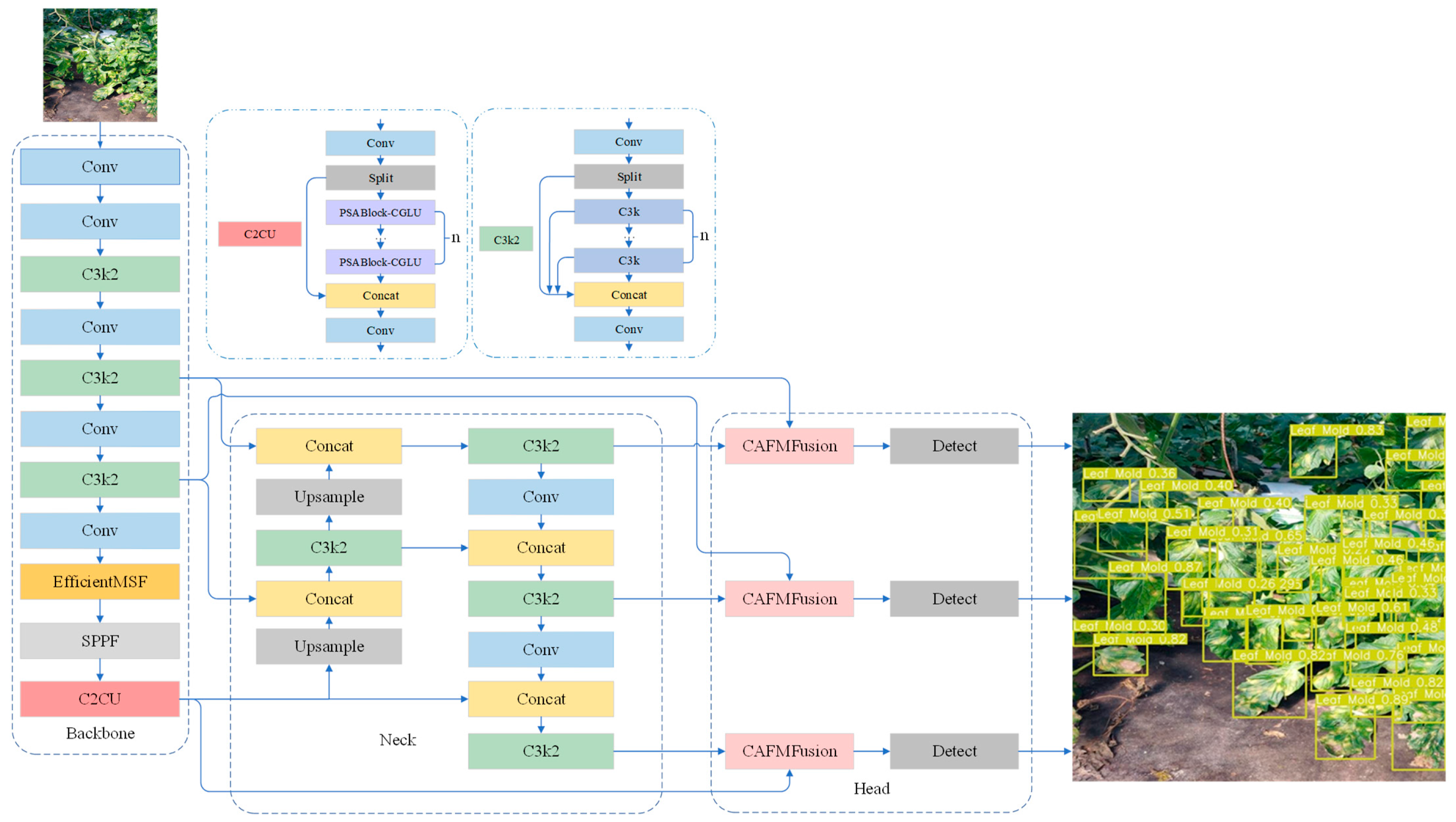

Tomato Leaf Disease Detection Method Based on Multi-Scale Feature Fusion

Abstract

1. Introduction

- •

- EfficientMSF Module: Enhances multi-scale feature extraction, enabling the model to more effectively identify lesions of varying sizes and shapes, thereby improving detection robustness under diverse environmental conditions.

- •

- C2CU Module: Strengthens global contextual modeling by capturing long-range dependencies among lesions, effectively reducing confusion between diseases with similar visual characteristics.

- •

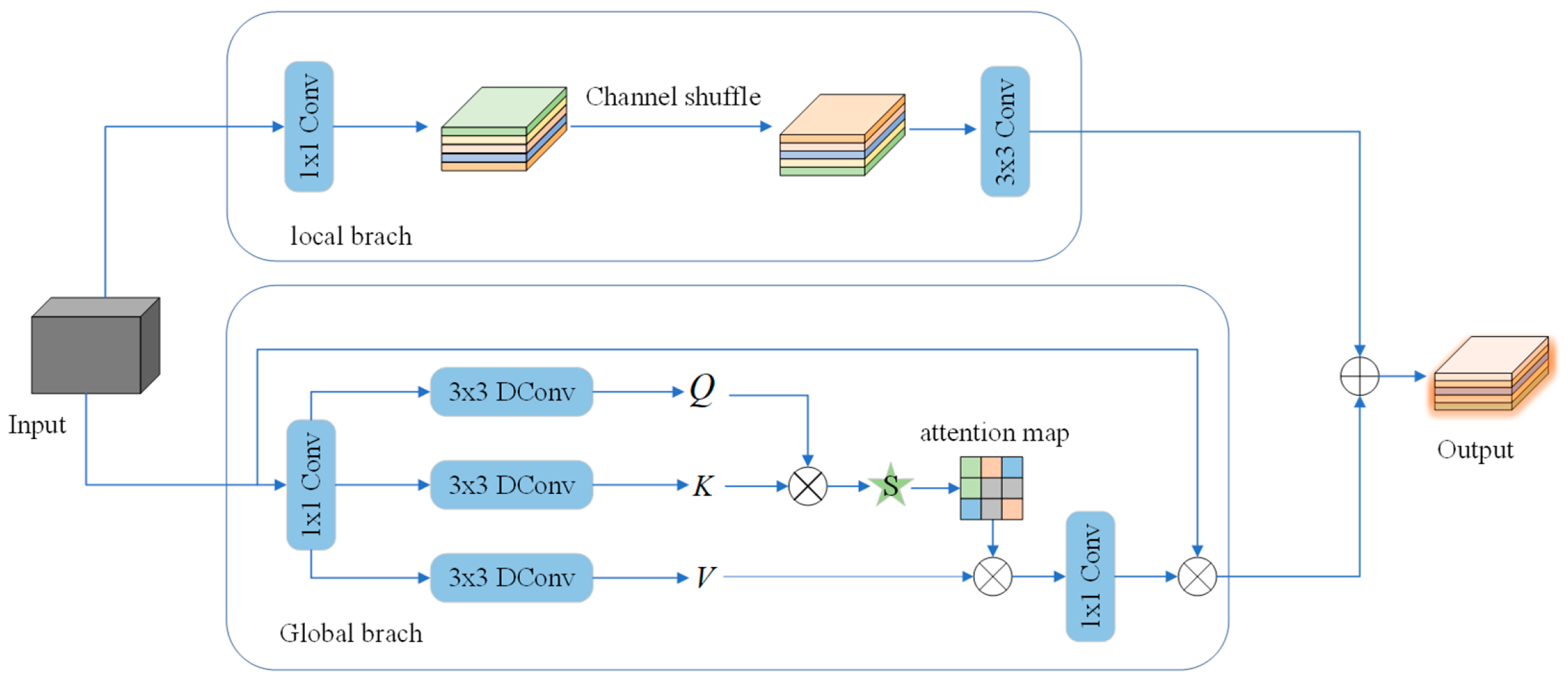

- CAFMFusion Module: Achieves efficient fusion of local details and global semantic information, enhancing overall feature representation while preserving fine-grained sensitivity, which significantly improves the detection of small lesions and complex background scenes.
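
The idea of fusing fine local detail with coarser semantic context, which the contributions above build on, can be illustrated with a generic top-down fusion step in the style of a feature pyramid. The sketch below is not the EfficientMSF, C2CU, or CAFMFusion module; the function names `upsample2x` and `fuse`, the toy maps, and the choice of nearest-neighbour upsampling with element-wise addition are all assumptions made for illustration.

```python
# Generic top-down multi-scale fusion on toy 2D feature maps.
# Illustrative only: this is NOT the paper's EfficientMSF or CAFMFusion module.

def upsample2x(fmap):
    """Nearest-neighbour upsampling: repeat every value in a 2x2 block."""
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]  # duplicate each column
        out.append(wide)
        out.append(list(wide))                     # duplicate each row
    return out

def fuse(fine, coarse):
    """Element-wise sum of a fine map and the upsampled coarse map."""
    up = upsample2x(coarse)
    if len(up) != len(fine) or len(up[0]) != len(fine[0]):
        raise ValueError("fine map must be exactly twice the coarse map size")
    return [[f + c for f, c in zip(frow, crow)] for frow, crow in zip(fine, up)]

# Hypothetical inputs: a 4x4 fine map with two small activations
# and a 2x2 coarse map carrying region-level context.
fine = [[1, 0, 0, 0],
        [0, 0, 0, 0],
        [0, 0, 0, 2],
        [0, 0, 0, 0]]
coarse = [[10, 20],
          [30, 40]]

fused = fuse(fine, coarse)
for row in fused:
    print(row)
```

In the fused map, the two small activations from the fine map survive on top of the region-level values from the coarse map, which is the property that matters when small lesions have to be detected against a broader context.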

2. Related Work

2.1. Evolution and Optimization of Feature Pyramid Networks

2.2. GhostConv Module

2.3. YOLO11 Model

3. Method Design

3.1. EfficientMSF Module

3.2. C2CU Module

3.3. CAFMFusion Module

4. Experiment

4.1. Dataset

4.2. Experimental Platform and Hyperparameter Setting

4.3. Evaluation Indicators

5. Experimental Analysis

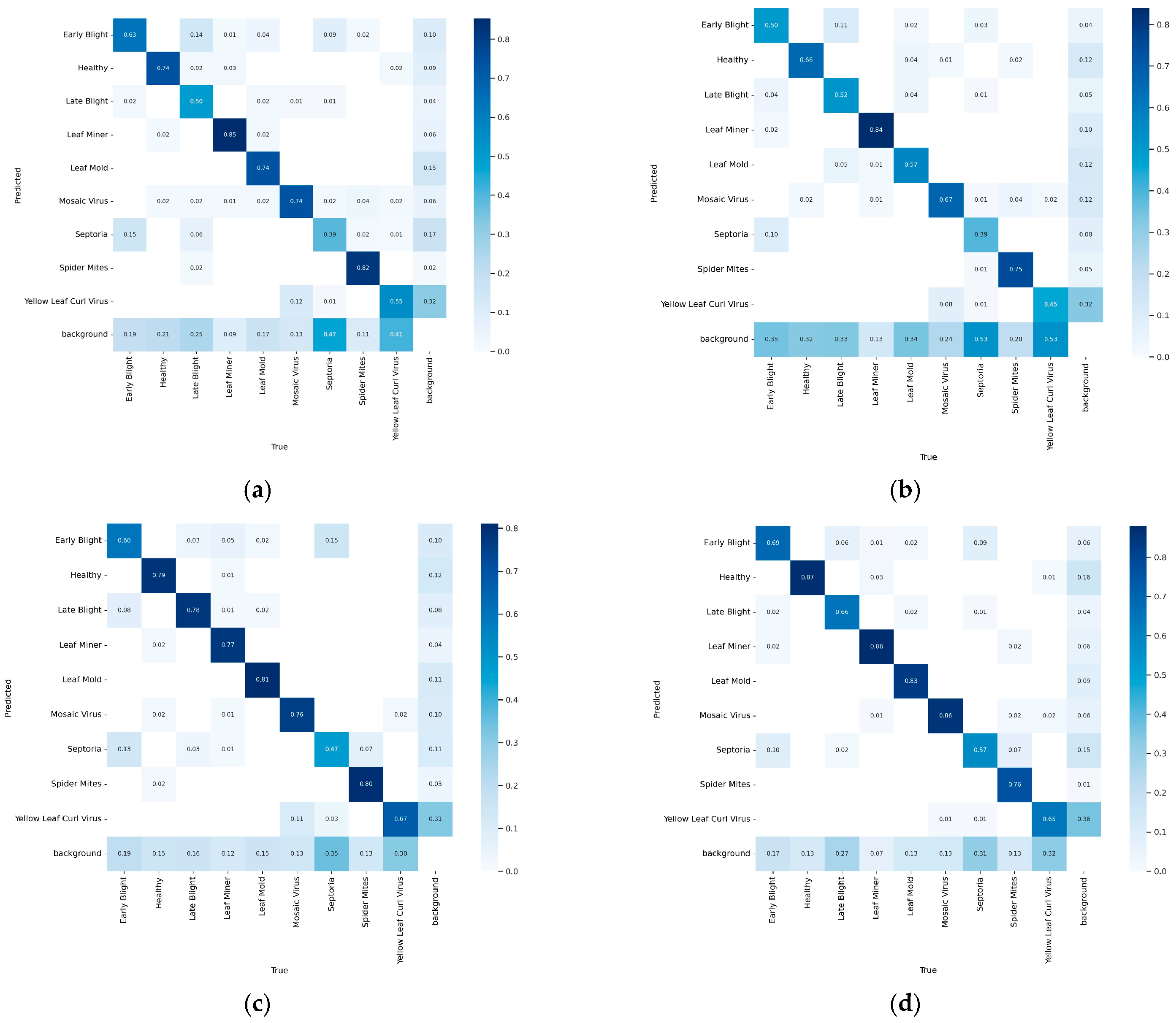

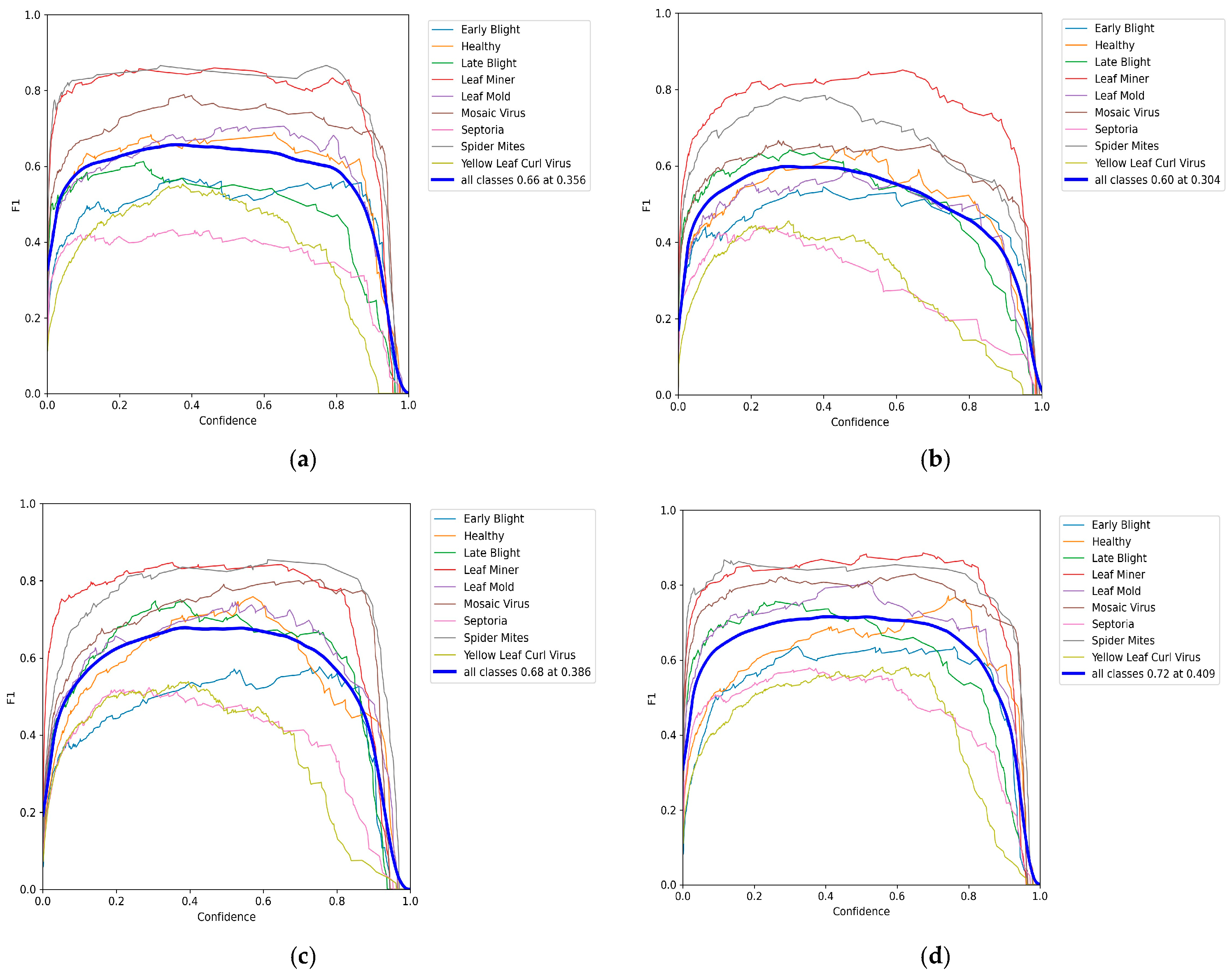

5.1. Algorithm Comparison Results

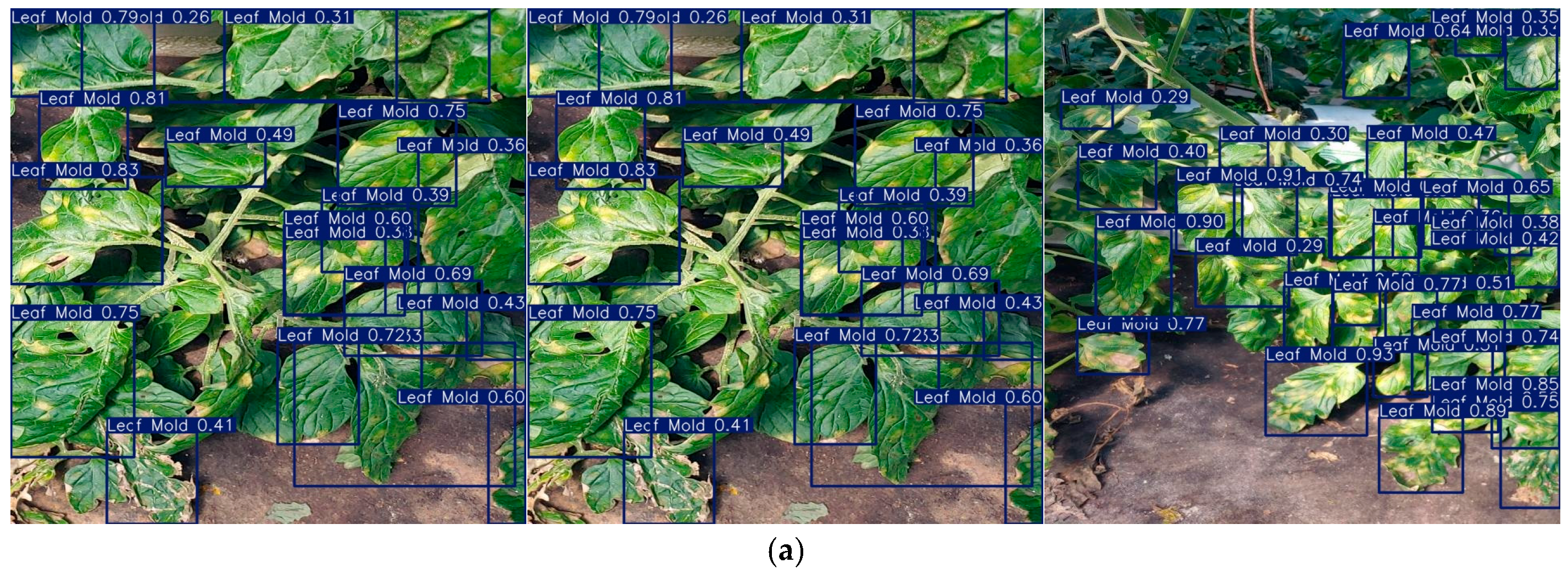

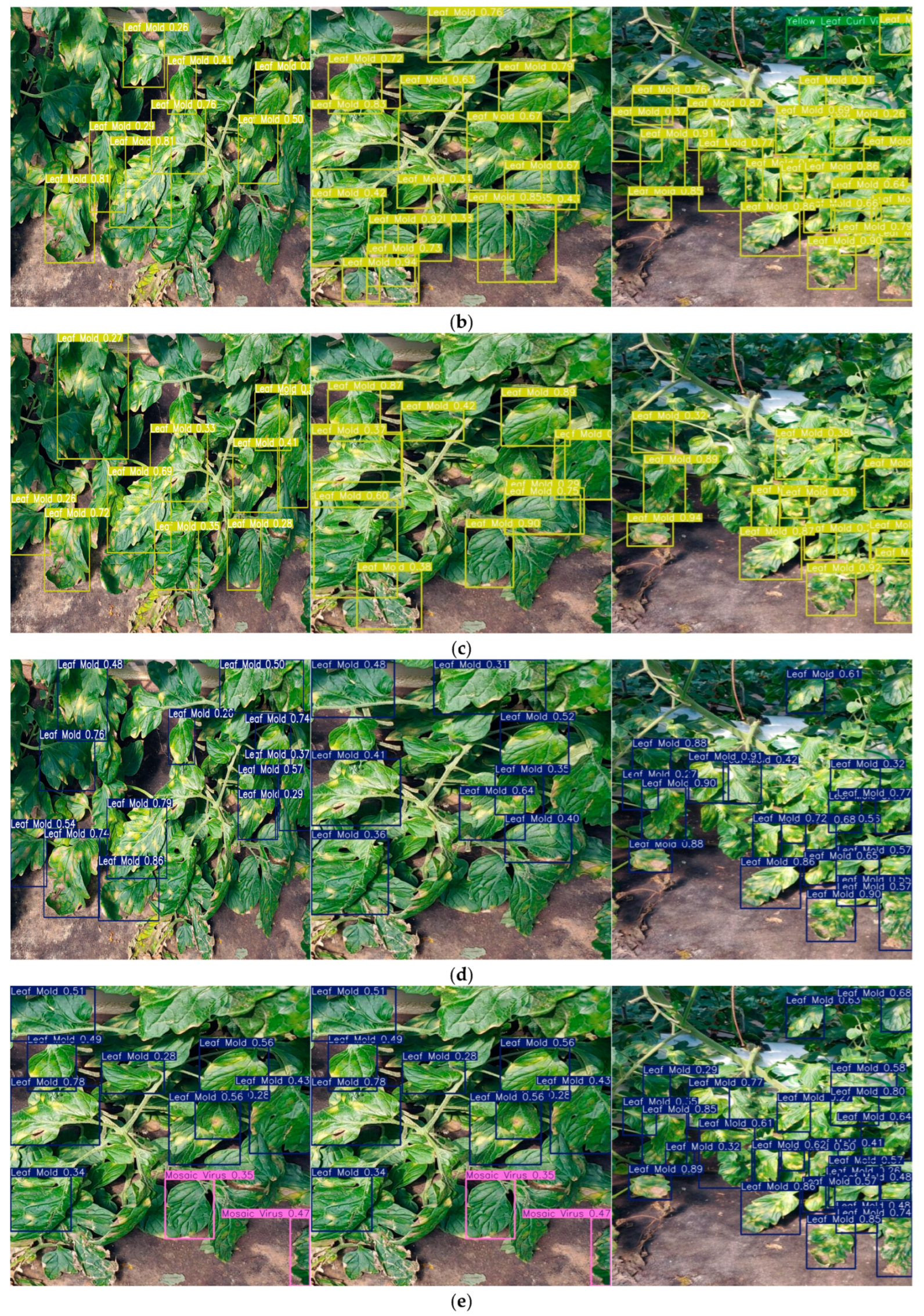

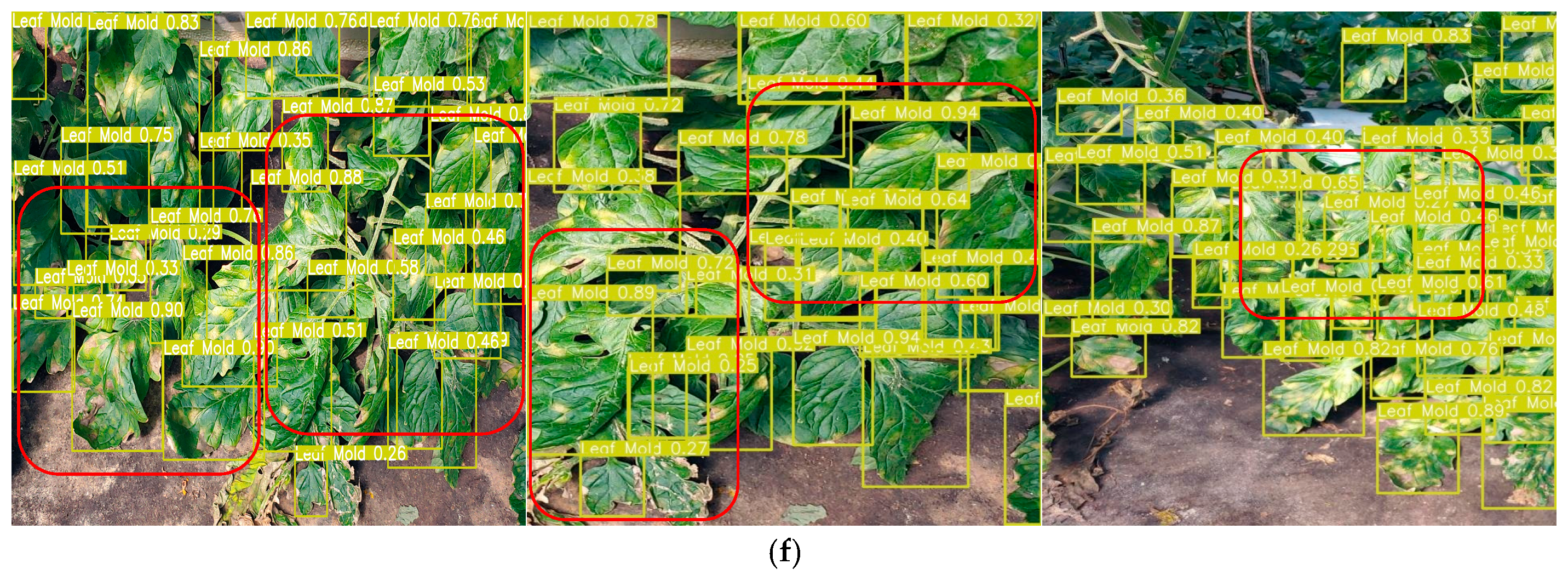

5.2. Visualization of Results

5.3. Ablation Experiment

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| YOLO11 | You Only Look Once 11 |
| CBAM | Convolutional Block Attention Module |
| CAFM | Convolution and Attention Fusion Module |
| EfficientMSF | Efficient Multi-Scale Feature |
| FPN | Feature Pyramid Network |
| PANet | Path Aggregation Network |
| BiFPN | Bidirectional Feature Pyramid Network |
| CNNs | Convolutional Neural Networks |


| Classes | Image Count | Target Count |
|---|---|---|
| Early Blight | 387 | 782 |
| Healthy | 197 | 736 |
| Late Blight | 244 | 473 |
| Leaf Miner | 383 | 836 |
| Leaf Mold | 224 | 791 |
| Mosaic Virus | 430 | 654 |
| Septoria | 140 | 598 |
| Spider Mites | 121 | 498 |
| Yellow Leaf Curl Virus | 86 | 1213 |
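
The class table above mixes two quantities, images and annotated targets, so the number of targets per image differs considerably between classes. As a minimal sketch, the ratio can be computed directly from the listed counts; the variable names are illustrative, and the ratio is only a rough density indicator because the table does not say whether one image can contribute to several classes.

```python
# (image count, target count) per class, copied from the dataset table.
counts = {
    "Early Blight": (387, 782),
    "Healthy": (197, 736),
    "Late Blight": (244, 473),
    "Leaf Miner": (383, 836),
    "Leaf Mold": (224, 791),
    "Mosaic Virus": (430, 654),
    "Septoria": (140, 598),
    "Spider Mites": (121, 498),
    "Yellow Leaf Curl Virus": (86, 1213),
}

# Average number of annotated targets per image for each class.
density = {name: targets / images for name, (images, targets) in counts.items()}

densest = max(density, key=density.get)
sparsest = min(density, key=density.get)

for name, value in sorted(density.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<24} {value:5.2f} targets/image")
```

With these counts, Yellow Leaf Curl Virus has by far the densest annotation (about 14.1 targets per image from only 86 images), while Mosaic Virus has the sparsest (about 1.5 targets per image).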

| Algorithms | Recall/% | mAP@0.5/% | mAP@0.5–0.95/% | FPS |
|---|---|---|---|---|
| Faster R-CNN | 72.13 | | | |
| SSD | 70.79 | 73.52 | | |
| RT-DETR-r18 | 67.7 | 71.2 | 56.8 | 133.3 |
| YOLOv8n | 65.0 | 68.7 | 52.7 | 303.0 |
| YOLOv10n | 55.9 | 61.5 | 46.4 | 303.0 |
| YOLO11n | 67.6 | 75.2 | 58.5 | 454.5 |
| YOLOv12n | 68.5 | 72.5 | 55.1 | 454.5 |
| Ours | 71.0 | 76.5 | 60.5 | 400.0 |
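
The FPS column of the comparison table can be read more intuitively as time per image. The following sketch converts the reported throughput into milliseconds per image using latency = 1000 / FPS; it assumes the FPS figures refer to single-image inference, which the table itself does not state.

```python
# FPS values copied from the comparison table (rows without FPS are omitted).
fps = {
    "RT-DETR-r18": 133.3,
    "YOLOv8n": 303.0,
    "YOLOv10n": 303.0,
    "YOLO11n": 454.5,
    "YOLOv12n": 454.5,
    "Ours": 400.0,
}

# Per-image latency in milliseconds, assuming one image per forward pass.
latency_ms = {name: 1000.0 / value for name, value in fps.items()}

# Extra time per image of the proposed model relative to the YOLO11n baseline.
overhead_ms = latency_ms["Ours"] - latency_ms["YOLO11n"]

for name, ms in latency_ms.items():
    print(f"{name:<12} {ms:4.1f} ms/image")
print(f"Overhead vs. YOLO11n: {overhead_ms:.1f} ms/image")
```

Under that assumption, the proposed model takes about 2.5 ms per image against about 2.2 ms for YOLO11n, an overhead of roughly 0.3 ms per image in exchange for the higher recall and mAP reported in the same table.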

| Classes | RT-DETR-r18 | YOLOv8n | YOLOv10n | YOLO11n | YOLOv12n | Ours |
|---|---|---|---|---|---|---|
| Healthy | 60.3 | 73.2 | 66.3 | 76.5 | 75.7 | 79.5 |
| Late Blight | 67.2 | 60.1 | 62.7 | 77.5 | 77.3 | 78.1 |
| Leaf Miner | 92.0 | 92.3 | 89.2 | 93.6 | 90.4 | 94.5 |
| Leaf Mold | 66.4 | 72.6 | 61.1 | 81.2 | 80.0 | 84.6 |
| Mosaic Virus | 88.0 | 79.0 | 69.8 | 87.9 | 83.0 | 89.3 |
| Septoria | 50.1 | 39.3 | 36.0 | 55.5 | 49.8 | 56.0 |
| Spider Mites | 83.4 | 87.1 | 77.3 | 87.4 | 85.3 | 88.8 |

| Classes | RT-DETR-r18 | YOLOv8n | YOLOv10n | YOLO11n | YOLOv12n | Ours |
|---|---|---|---|---|---|---|
| Healthy | 58.2 | 68.5 | 63.8 | 72.3 | 76.6 | 83.9 |
| Leaf Miner | 85.7 | 85.7 | 84.0 | 82.3 | 78.7 | 87.1 |
| Leaf Mold | 64.1 | 75.5 | 52.8 | 75.5 | 81.1 | 79.2 |
| Septoria | 47.7 | 37.1 | 31.5 | 44.9 | 41.6 | 50.8 |

| Datasets | Algorithms | Recall | mAP@0.5 | mAP@0.5–0.95 |
|---|---|---|---|---|
| VisDrone | YOLOv8n | 33.4 | 32.7 | 18.9 |
| VisDrone | YOLOv10n | 29.9 | 29.7 | 16.5 |
| VisDrone | YOLO11n | 33.4 | 32.4 | 18.7 |
| VisDrone | YOLOv12n | 30.9 | 30.3 | 17.4 |
| VisDrone | Ours | 34.4 | 34.2 | 19.8 |
| PASCAL VOC | YOLOv8n | 33.6 | 33.7 | 19.0 |
| PASCAL VOC | YOLOv10n | 27.9 | 24.9 | 14.2 |
| PASCAL VOC | YOLO11n | 44.3 | 46.7 | 27.9 |
| PASCAL VOC | YOLOv12n | 43.4 | 44.0 | 26.4 |
| PASCAL VOC | Ours | 45.1 | 48.2 | 29.3 |

| Algorithms | GFLOPS | GPU Mem (GB) | FPS |
|---|---|---|---|
| RT-DETR-r18 | 57.0 | 13.0 | 133.3 |
| YOLOv8n | 8.1 | 10.1 | 303.0 |
| YOLOv10n | 8.2 | 11.5 | 303.0 |
| Ours | 7.9 | 9.0 | 400.0 |

| Number | Experiments | Recall/% | mAP@0.5/% | mAP@0.5–0.95/% |
|---|---|---|---|---|
| 1 | YOLO11n | 67.6 | 75.2 | 58.5 |
| 2 | YOLO11n + EfficientMSF | 69.2 | 76.0 | 59.3 |
| 3 | YOLO11n + CAFMFusion | 70.0 | 76.2 | 59.5 |
| 4 | YOLO11n + C2CU | 70.6 | 76.4 | 59.8 |
| 5 | YOLO11n + EfficientMSF + C2CU + CAFMFusion | 71.0 | 76.5 | 60.5 |
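
The ablation rows above are easiest to compare as gains over the YOLO11n baseline in percentage points. The sketch below derives those gains from the table values only; the short row labels and variable names are illustrative.

```python
# (Recall, mAP@0.5, mAP@0.5-0.95) in percent, copied from the ablation table.
rows = {
    "YOLO11n": (67.6, 75.2, 58.5),
    "+ EfficientMSF": (69.2, 76.0, 59.3),
    "+ CAFMFusion": (70.0, 76.2, 59.5),
    "+ C2CU": (70.6, 76.4, 59.8),
    "+ all three": (71.0, 76.5, 60.5),
}

baseline = rows["YOLO11n"]

# Gain of each variant over the baseline, in percentage points.
gains = {
    name: tuple(round(value - base, 1) for value, base in zip(values, baseline))
    for name, values in rows.items()
    if name != "YOLO11n"
}

# Sum of the three single-module recall gains, to compare with the combined model.
single_modules = ["+ EfficientMSF", "+ CAFMFusion", "+ C2CU"]
summed_recall_gain = round(sum(gains[name][0] for name in single_modules), 1)

for name, (recall, map50, map5095) in gains.items():
    print(f"{name:<16} recall +{recall}  mAP@0.5 +{map50}  mAP@0.5-0.95 +{map5095}")
print(f"Sum of single-module recall gains: +{summed_recall_gain}")
```

The single-module recall gains sum to 7.0 points, whereas the combined model gains 3.4 points, so the contributions of the three modules overlap rather than add up. The combined model still achieves the best value on all three metrics.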

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Meng, X.; Chen, C.; Dong, W.; Wang, K. Tomato Leaf Disease Detection Method Based on Multi-Scale Feature Fusion. Plants 2025, 14, 3174. https://doi.org/10.3390/plants14203174
