2.4. EfficientNet Network

EfficientNet is selected as the backbone network for EN-YOLO because of its efficient feature extraction for small object detection and its multi-scale scalability. Compared with standard architectures such as ResNet, EfficientNet achieves a better accuracy-to-computation ratio at similar parameter counts. Experiments have demonstrated its enhanced robustness in environments with high insect infestation density, low light, and occlusion, as well as its potential for edge deployment. During feature extraction, the YOLOv8 model does not use the shallow feature map F2, which carries fewer semantic features; instead, it sends the feature maps F3, F4, and F5 from the backbone network to the neck for feature fusion. Because of the down-sampling in the convolutional layers, the receptive field gradually expands, and the deep feature maps contain richer semantic information, which is sufficient for detecting general objects. However, the durian pest and disease dataset contains a large number of small targets. Small targets carry little information, so the prediction head obtains insufficient feature information from the feature maps, which leads to inaccurate localization and a low recognition rate. In addition, many targets are similar, overlapping, or occluded, which further increases the difficulty of detection. This study therefore introduces the EfficientNet network, which balances the depth, width, and input resolution of the network through the compound scaling method and thereby improves model performance. It has multiple convolutional layers with the same structure [20]. If multiple convolutional layers with the same structure are called one level, the convolutional network G can be expressed as Equation (2):

$$G = \bigodot_{m} F_m^{L_m}\left(X_{\langle H_m, W_m, C_m \rangle}\right) \tag{2}$$

In Equation (2), $m$ represents the serial number of the level, $F_m$ represents the convolution operation of the $m$-th level, and $F_m^{L_m}$ indicates that $F_m$ is repeated as $L_m$ layers with the same structure in the $m$-th level. $X_{\langle H_m, W_m, C_m \rangle}$ indicates the input form of the $m$-th level, where $H_m$ and $W_m$ are the resolution of the image, $C_m$ represents the number of channels, and $L_m$ represents the depth of the network. By adjusting and balancing the coefficients of these three dimensions (depth, channel number, and resolution), a network model with higher accuracy can be obtained with the same amount of computation. The changes in the coefficients of the three dimensions are unified by introducing a mixing coefficient, as shown in Equation (3):

In Equation (3), the basic module of MnasNet, MBConv, is used as the search space; the baseline network EfficientNet-A1 is searched with e = 0.8 fixed. The network search finds the best combination to be x = 1.5, y = 1.6, and z = 1.55. With these three coefficients fixed, e is gradually increased to obtain the network structures A1–A7. The baseline structure of the EfficientNet network is shown in Table 2.
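The effect of enlarging the mixing coefficient e with x, y, and z held fixed can be illustrated with a short sketch. It assumes the usual compound scaling form, in which each dimension is multiplied by its base coefficient raised to the power e; the assignment of x to depth, y to width (channels), and z to resolution is an assumption made for illustration, and the function name `compound_multipliers` is hypothetical.

```python
def compound_multipliers(e, x=1.5, y=1.6, z=1.55):
    """Return per-dimension multipliers for a mixing coefficient e.

    Assumption: x scales depth, y scales width (channels), and z scales
    input resolution, each as base ** e. The default base values are the
    ones reported in the text.
    """
    return {"depth": x ** e, "width": y ** e, "resolution": z ** e}


# Sweep the mixing coefficient while x, y, and z stay fixed.
for e in (0.8, 1.0, 1.5, 2.0):
    m = compound_multipliers(e)
    print(f"e={e:.1f}  depth x{m['depth']:.2f}  "
          f"width x{m['width']:.2f}  resolution x{m['resolution']:.2f}")
```

All three multipliers grow together as e increases, which is what lets a single coefficient generate a family of progressively larger networks.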

Table 2 shows that the performance of the Darknet-53 network is similar to that of the ResNet-152 network, whereas the EfficientNet A1–A7 networks outperform the ResNet networks. In addition, compared with the 18-layer convolutional structure of EfficientNet-A0, the network structure of Darknet-53 is relatively complex. The compound scaling method in the EfficientNet network tends to focus on areas related to target details [21]. This is useful here because some durian pests and diseases have very similar visual characteristics; for example, leaves affected by durian leaf blight closely resemble other blight-damaged leaves. The EfficientNet network is therefore applied to the YOLO algorithm as the backbone network, which is beneficial for extracting the characteristics of durian pests and diseases. Inspired by the convolutional block attention module (CBAM) [22], this study introduces a channel attention mechanism into the backbone feature extraction network and performs multi-scale max pooling using a set of kernels of sizes 1 × 1, 8 × 8, and 14 × 14. These kernels perform max pooling on the same feature map and remove redundant information, which reduces network complexity and the number of parameters while capturing more meaningful features. The process of optimizing the YOLO algorithm structure using the EfficientNet network is shown in Figure 4.

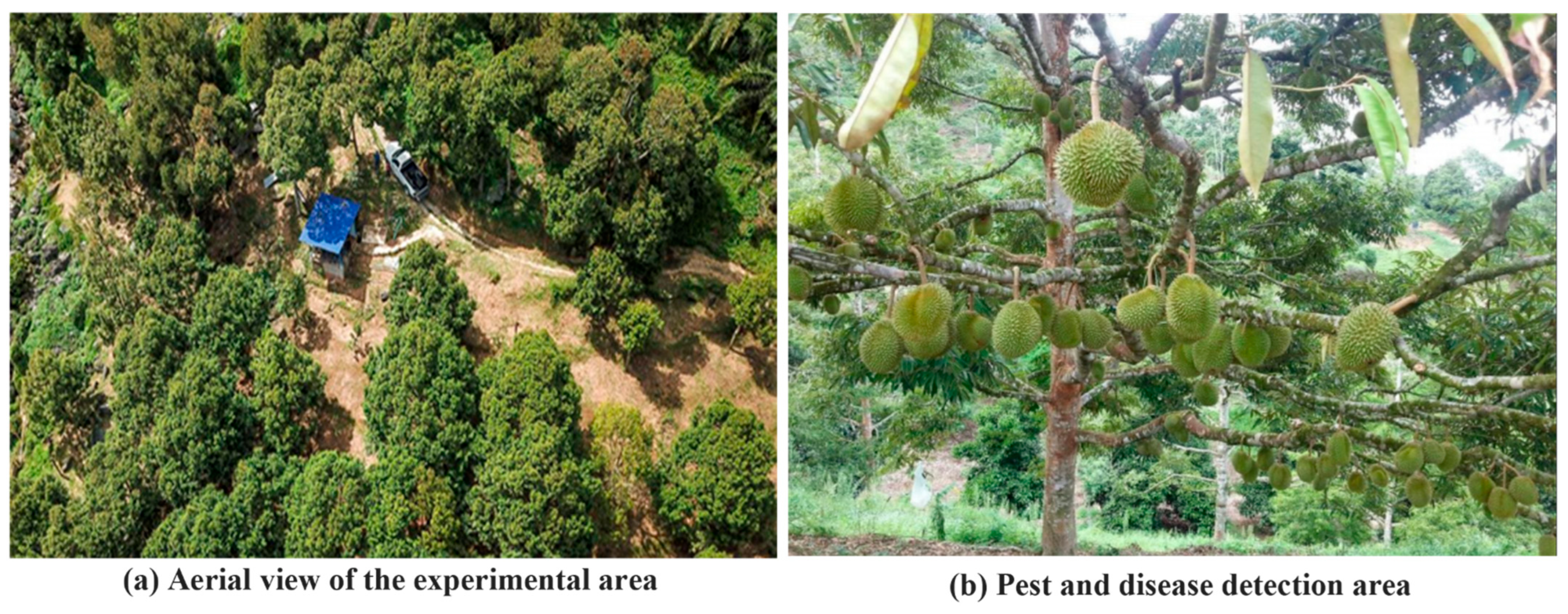

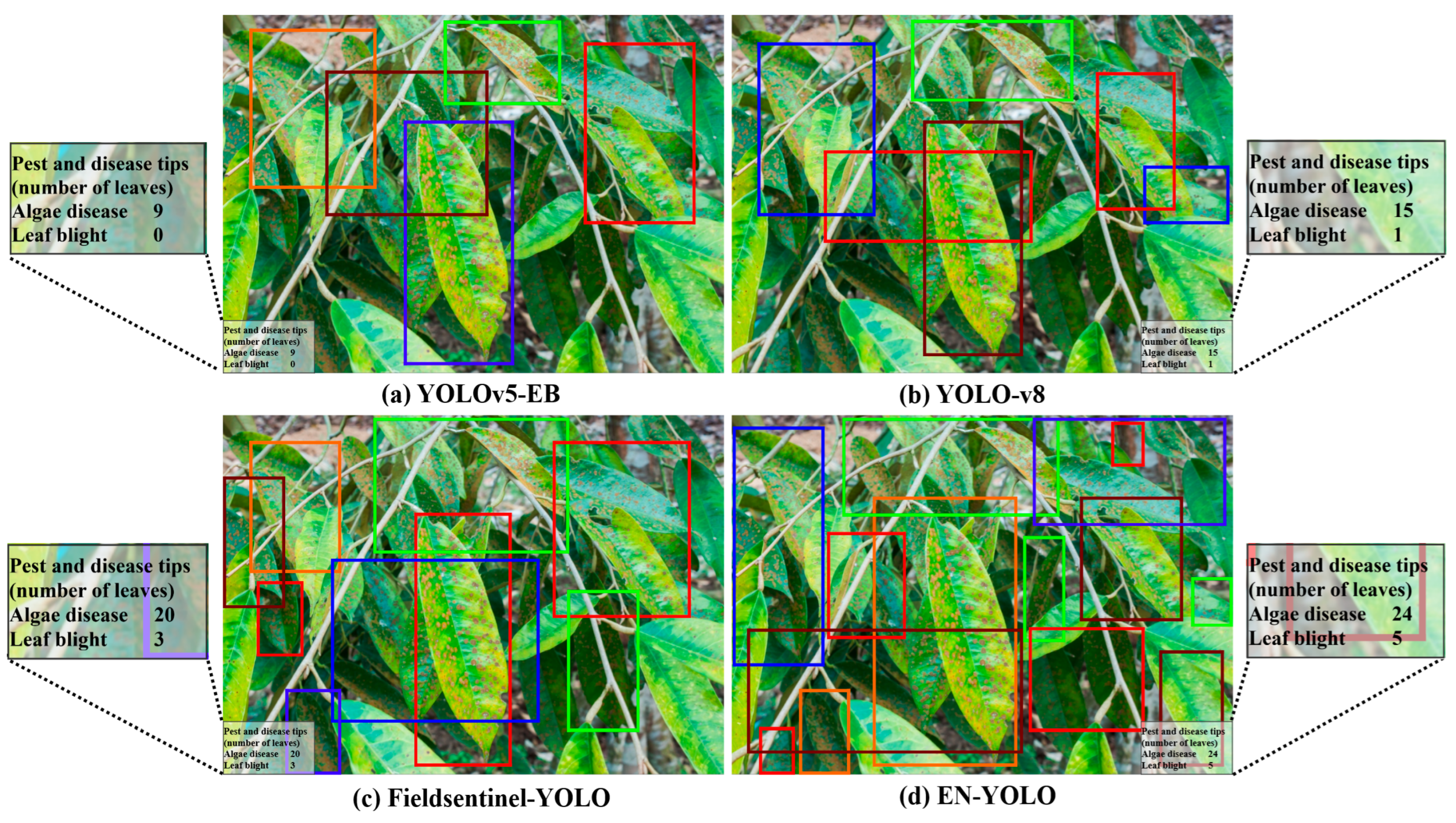
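The multi-scale max pooling described above, with 1 × 1, 8 × 8, and 14 × 14 kernels applied to the same feature map, can be sketched in plain Python. This is a minimal illustration and not the paper's implementation: the stride is assumed to equal the kernel size, the feature map is assumed to be a single 56 × 56 channel (a size that all three kernels divide evenly), and the helper names `max_pool2d` and `multi_scale_max_pool` are hypothetical.

```python
def max_pool2d(fmap, k):
    """Non-overlapping k x k max pooling (stride = k) over a 2-D list."""
    h, w = len(fmap), len(fmap[0])
    if h % k or w % k:
        raise ValueError("feature map size must be divisible by k")
    return [
        [max(fmap[r + dr][c + dc] for dr in range(k) for dc in range(k))
         for c in range(0, w, k)]
        for r in range(0, h, k)
    ]


def multi_scale_max_pool(fmap, kernel_sizes=(1, 8, 14)):
    """Pool the same feature map at several scales; returns {k: pooled map}."""
    return {k: max_pool2d(fmap, k) for k in kernel_sizes}


# Toy single-channel 56 x 56 feature map with deterministic values.
fmap = [[(r * 56 + c) % 97 for c in range(56)] for r in range(56)]
pooled = multi_scale_max_pool(fmap)
for k, out in pooled.items():
    print(f"kernel {k} x {k} -> output {len(out)} x {len(out[0])}")
```

Under these assumptions the three branches have different spatial sizes, so a real network would have to resize or pad them before fusing them; how EN-YOLO does this is not specified in the text.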

To improve the adaptability of YOLOv8 to small object detection for orchard pests and diseases, this paper designs an improved model, EN-YOLO. While maintaining the fundamental architecture of the YOLOv8 detection framework, the model replaces the backbone network and introduces several lightweight modules to enhance expressiveness and robustness. In the backbone feature extraction module, EN-YOLO replaces the default C2f module used in YOLOv8 with the EfficientNet-B4 network. This network uses a compound scaling strategy, which improves multi-scale feature extraction while maintaining a low parameter count, and it performs particularly well for small objects with severe occlusion and large scale variations. Compared with traditional backbones such as ResNet and DarkNet, EfficientNet demonstrates higher accuracy and better inference efficiency in this study’s task. To mitigate information loss and vanishing gradients in deep neural networks, EN-YOLO introduces long-span cross-layer residual connections between the 14 × 14 and 7 × 7 feature maps.

Considering the size of the durian pest and disease areas, the 28 × 28 feature layer is removed; only the 14 × 14 and 56 × 56 feature layers are used, in order to better identify durian disease areas and smaller durian pests. Because the pest targets in this task are generally small, have blurred boundaries, and appear in complex scenes, the shallow texture features extracted by the 28 × 28 layer are strongly affected by background interference, making it difficult to form highly discriminative detection features. The structural design follows the network pruning strategies of YOLOv8-lite and YOLOv6-s, which commonly eliminate high-resolution outputs in lightweight designs. The 28 × 28 feature layer is therefore pruned in EN-YOLO to improve the balance between model accuracy and inference efficiency. The durian pest and disease identification box is shown in Figure 5.

Constructing a feature pyramid to extract the characteristics of durian pests and diseases dilutes the basic surface features in the network. This study therefore adds a large residual edge between the 14 × 14 and 56 × 56 feature layers to retain some basic surface features, which improves the performance of YOLO’s prediction network. The features of the 4 × 4 convolutional layer are integrated, and the 1 × 1 convolutional layer adjusts the number of channels [23]. To meet the requirements for expressing multi-scale small object information during feature fusion, EN-YOLO sets the number of channels in the backbone output feature map to 256. This value was selected by comparing the performance of several channel numbers (e.g., 128, 256, and 512) on the validation set, balancing detection accuracy and model complexity. This setting also ensures compatibility with the channel structure of the feature fusion module in YOLOv8, facilitating subsequent detection head sharing and migration. The confidence level is shown in Equation (4):

$$C = P_r(\text{object}) \times \mathrm{COR} \tag{4}$$

In Equation (4), $C$ represents the confidence level in judging durian pests and diseases, and $P_r(\text{object})$ represents the probability that the target box contains durian pests and diseases. COR represents the ratio of the intersection of the real and predicted boxes to their union. The confidence is used to select the appropriate bounding box for marking the durian pest and disease target; the box with the highest confidence is determined through non-maximum suppression. A value of 4 represents the location information of durian pest and disease targets ($t_x$, $t_y$, $t_w$, $t_h$); 59 represents the number of identification categories of healthy durian leaves and fruits. The identification box parameters of the actual target are shown in Equation (5):

In Equation (5), ($c_x$, $c_y$) represents the upper-left corner coordinate of the grid cell in the feature layer. In YOLO, the width and height of each grid cell in the feature layer are 1. ($p_w$, $p_h$) represents the width and height of the preset prior box mapped to the feature layer. w and h represent the size of the feature layer, and the final box coordinates are ($b_x$, $b_y$, $b_w$, $b_h$).
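The confidence score, the box decoding, and the non-maximum suppression step described above can be sketched together in plain Python. The decoding below assumes the standard YOLO-style form (sigmoid offsets added to the grid cell corner, exponential scaling of the prior box), which may differ in detail from Equation (5), for example in how the result is normalized by the feature layer size. The function names, the center-format box layout, and the suppression threshold of 0.5 are all illustrative assumptions.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def decode_box(t, cell, prior):
    """Map raw outputs (tx, ty, tw, th) to (bx, by, bw, bh) in grid units.

    Assumption: standard YOLO-style decoding. `cell` is the upper-left
    corner (cx, cy) of the grid cell and `prior` is (pw, ph).
    """
    tx, ty, tw, th = t
    cx, cy = cell
    pw, ph = prior
    return (sigmoid(tx) + cx, sigmoid(ty) + cy,
            pw * math.exp(tw), ph * math.exp(th))


def cor(a, b):
    """Intersection over union of two center-format (x, y, w, h) boxes."""
    iw = min(a[0] + a[2] / 2, b[0] + b[2] / 2) - max(a[0] - a[2] / 2, b[0] - b[2] / 2)
    ih = min(a[1] + a[3] / 2, b[1] + b[3] / 2) - max(a[1] - a[3] / 2, b[1] - b[3] / 2)
    inter = max(0.0, iw) * max(0.0, ih)
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def confidence(p_object, predicted_box, true_box):
    """Confidence as object probability times the overlap ratio (COR)."""
    return p_object * cor(predicted_box, true_box)


def nms(boxes, scores, threshold=0.5):
    """Keep the highest-scoring boxes; drop boxes overlapping a kept box.

    The threshold of 0.5 is a hypothetical value, not taken from the paper.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(cor(boxes[i], boxes[j]) <= threshold for j in keep):
            keep.append(i)
    return keep


# Zero raw outputs place the box at the cell centre with the prior's size.
box = decode_box((0.0, 0.0, 0.0, 0.0), cell=(3, 4), prior=(2.0, 2.0))
boxes = [box, (3.6, 4.5, 2.0, 2.0), (10.0, 10.0, 2.0, 2.0)]
kept = nms(boxes, scores=[0.9, 0.8, 0.7])
print(box, kept)
```

In the toy example the second box overlaps the first almost completely and is suppressed, while the distant third box is kept.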

Figure 6 shows the pest and disease identification process of the EN-YOLO algorithm. Its core modules include the following.

- (1) Multi-source data perception layer

This layer integrates visible light, multi-spectral, and thermal imaging inputs and achieves cross-modal feature alignment through an adaptive fusion module (AFM).

- (2) Dynamic feature parsing network

In this study, the dynamic feature parsing network adopts the composite scaling structure of EfficientNet-B4 as the backbone and constructs a lightweight feature extractor using depthwise separable convolution. A large-span residual connection is established between the 14 × 14 and 7 × 7 feature layers to alleviate gradient vanishing, and a spatial-channel dual-path attention mechanism is introduced to effectively suppress background interference. For interpretability enhancement, the model integrates a Grad-CAM++-based feature heat map generator to visualize pest response regions, a decision path tracker to record the activation status of key nodes during feature propagation, and a molecular feature parser that connects to a plant pathology database to output the correlation degree of pathogen molecular features, thereby improving both decision transparency and biological interpretability.

As shown in Figure 6, the recognition process of the EN-YOLO algorithm is divided into three stages as follows:

- (1) Data perception stage:

Multimodal data input: Synchronous collection of visible light (leaf texture), near-infrared (chlorophyll distribution), and thermal imaging (lesion temperature field) data.

Adaptive preprocessing: Apply an illumination invariance transformation to eliminate environmental interference, as shown in Equation (6):

In Equation (6), $\mu$ represents the image pixel mean, which is used to eliminate differences in illumination intensity; $\sigma$ represents the standard deviation, which describes the dispersion of the illumination distribution; and α and β represent scene adaptation parameters, which can be dynamically adjusted through online learning.
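One plausible reading of this preprocessing step is an affine standardization of pixel intensities: subtract the mean, divide by the standard deviation, then rescale with α and shift with β. The sketch below assumes that form, which may differ from the exact expression in Equation (6). The function name, the flat pixel list, and the small `eps` stabilizer are illustrative assumptions, and α and β are passed as fixed values instead of being learned online.

```python
import statistics


def illumination_normalize(pixels, alpha=1.0, beta=0.0, eps=1e-8):
    """Standardize intensities, then apply scene parameters alpha and beta.

    Assumed form: alpha * (p - mean) / (std + eps) + beta.
    `pixels` is a flat list of intensity values.
    """
    mu = statistics.fmean(pixels)
    sigma = statistics.pstdev(pixels)
    return [alpha * (p - mu) / (sigma + eps) + beta for p in pixels]


# The same scene under dim light and under brighter, higher-contrast light.
dim = [10.0, 20.0, 30.0, 40.0]
bright = [2.0 * p + 50.0 for p in dim]

print(illumination_normalize(dim))
print(illumination_normalize(bright))
```

Both inputs map to nearly identical normalized values, which is the invariance to illumination intensity and spread that the transformation is meant to provide.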

- (2) Feature analysis stage:

In Equation (7), $H$ represents the hue component in the HSV color space; $S$ represents the saturation component and quantifies the degree of abnormal color of the lesion; $V$ represents the brightness component, reflecting the light absorption characteristics of the lesion area; and $\nabla H$ represents the hue space gradient, capturing the diffusion direction of the lesion edge.
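The four quantities named here (hue, saturation, brightness, and the spatial gradient of hue) can be computed with the Python standard library, as in the sketch below. How Equation (7) combines them is not reproduced; the sketch only extracts the components. The forward-difference gradient, the wrap-around handling of hue, and the function names are assumptions made for illustration.

```python
import colorsys


def hue_difference(a, b):
    """Signed difference b - a on the circular hue axis, in [-0.5, 0.5)."""
    return ((b - a + 0.5) % 1.0) - 0.5


def hsv_features(rgb_image):
    """Return H, S, V planes and a forward-difference hue gradient.

    `rgb_image` is a 2-D list of (r, g, b) tuples with values in 0..255.
    The gradient at each pixel is a (dx, dy) pair; borders are clamped.
    """
    hsv = [[colorsys.rgb_to_hsv(r / 255, g / 255, b / 255) for r, g, b in row]
           for row in rgb_image]
    h = [[px[0] for px in row] for row in hsv]
    s = [[px[1] for px in row] for row in hsv]
    v = [[px[2] for px in row] for row in hsv]
    rows, cols = len(h), len(h[0])
    grad = [[(hue_difference(h[i][j], h[i][min(j + 1, cols - 1)]),
              hue_difference(h[i][j], h[min(i + 1, rows - 1)][j]))
             for j in range(cols)]
            for i in range(rows)]
    return {"H": h, "S": s, "V": v, "grad_H": grad}


# Toy 2 x 2 patch: healthy green on the left, yellowing tissue on the right.
patch = [[(0, 255, 0), (255, 255, 0)],
         [(0, 255, 0), (255, 255, 0)]]
features = hsv_features(patch)
print(features["H"][0], features["grad_H"][0][0])
```

The horizontal hue gradient is non-zero only across the green-to-yellow boundary, which is the kind of edge response the hue gradient term is described as capturing.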

In Equation (8), $F$ represents the multi-scale feature map of EfficientNet; $\mathrm{GAP}$ represents global average pooling, extracting channel statistical features; $\mathrm{MLP}$ represents the multi-layer perceptron (including ReLU activation), learning channel weights; and $\sigma$ represents the Sigmoid function, generating 0–1 attention weights.
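The chain of operations listed for Equation (8), global average pooling followed by a multi-layer perceptron with ReLU and a Sigmoid, can be sketched without any deep learning framework. The sketch assumes a two-layer perceptron and assumes that the resulting weights rescale each channel of the feature map, in the style of squeeze-and-excitation attention; neither detail is confirmed by the text. The random weights stand in for learned parameters, and all function names are hypothetical.

```python
import math
import random


def global_average_pool(fmap):
    """fmap: list of C channels, each a 2-D list. Returns C channel means."""
    return [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in fmap]


def dense(vec, weights, bias):
    """Fully connected layer: one output per row of `weights`."""
    return [sum(w * x for w, x in zip(row, vec)) + b
            for row, b in zip(weights, bias)]


def channel_attention(fmap, w1, b1, w2, b2):
    """Sigmoid(MLP(GAP(F))) with a ReLU hidden layer; one weight per channel."""
    pooled = global_average_pool(fmap)
    hidden = [max(0.0, h) for h in dense(pooled, w1, b1)]  # ReLU
    logits = dense(hidden, w2, b2)
    return [1.0 / (1.0 + math.exp(-z)) for z in logits]


def apply_attention(fmap, weights):
    """Rescale every channel by its attention weight (assumed usage)."""
    return [[[v * a for v in row] for row in ch] for ch, a in zip(fmap, weights)]


# Toy feature map: 4 channels of 3 x 3, with a hidden layer of 2 units.
rng = random.Random(0)
channels, hidden_units = 4, 2
fmap = [[[rng.random() for _ in range(3)] for _ in range(3)] for _ in range(channels)]
w1 = [[rng.uniform(-1, 1) for _ in range(channels)] for _ in range(hidden_units)]
w2 = [[rng.uniform(-1, 1) for _ in range(hidden_units)] for _ in range(channels)]
b1, b2 = [0.0] * hidden_units, [0.0] * channels

attention = channel_attention(fmap, w1, b1, w2, b2)
reweighted = apply_attention(fmap, attention)
print([round(a, 3) for a in attention])
```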

- (3) Decision verification stage:

Multi-dimensional verification: Output bounding box, disease probability, and pathogen type confidence at the same time.

Traceable decision: Trace back the contribution of key features through the decision tree.

Expert system interaction: Connect to the agricultural expert knowledge base to generate a prevention and control recommendation report.

Table 3 shows the results of the interpretability verification experiment for the EN-YOLO model. The comparison between the feature heat map and the pathological section shows that the response area overlap rate of the EN-YOLO model for anthracnose spores reaches 89%. The decision path tracing shows that the EN-YOLO model preferentially activates the texture analysis channel (weighted at 78%) in occluded scenes, and the molecular feature parser successfully associates the detected lesions with the characteristics of the anthracnose pathogen Colletotrichum gloeosporioides. The molecular feature parser was implemented by integrating the NCBI Plant Pathogen Genome Database (release 2024.09) and the PHI-base v5.4 molecular phenotype repository. Molecular features were extracted using BLASTn alignment with a 95% identity threshold and mapped to image-derived lesion regions through a keypoint-based spatial registration algorithm. The parser’s output was quantitatively validated against 120 expert-labeled pathogen–symptom pairs, achieving a top-1 matching accuracy of 92.3%.