AFBF-YOLO: An Improved YOLO11n Algorithm for Detecting Bunch and Maturity of Cherry Tomatoes in Greenhouse Environments

Abstract

1. Introduction

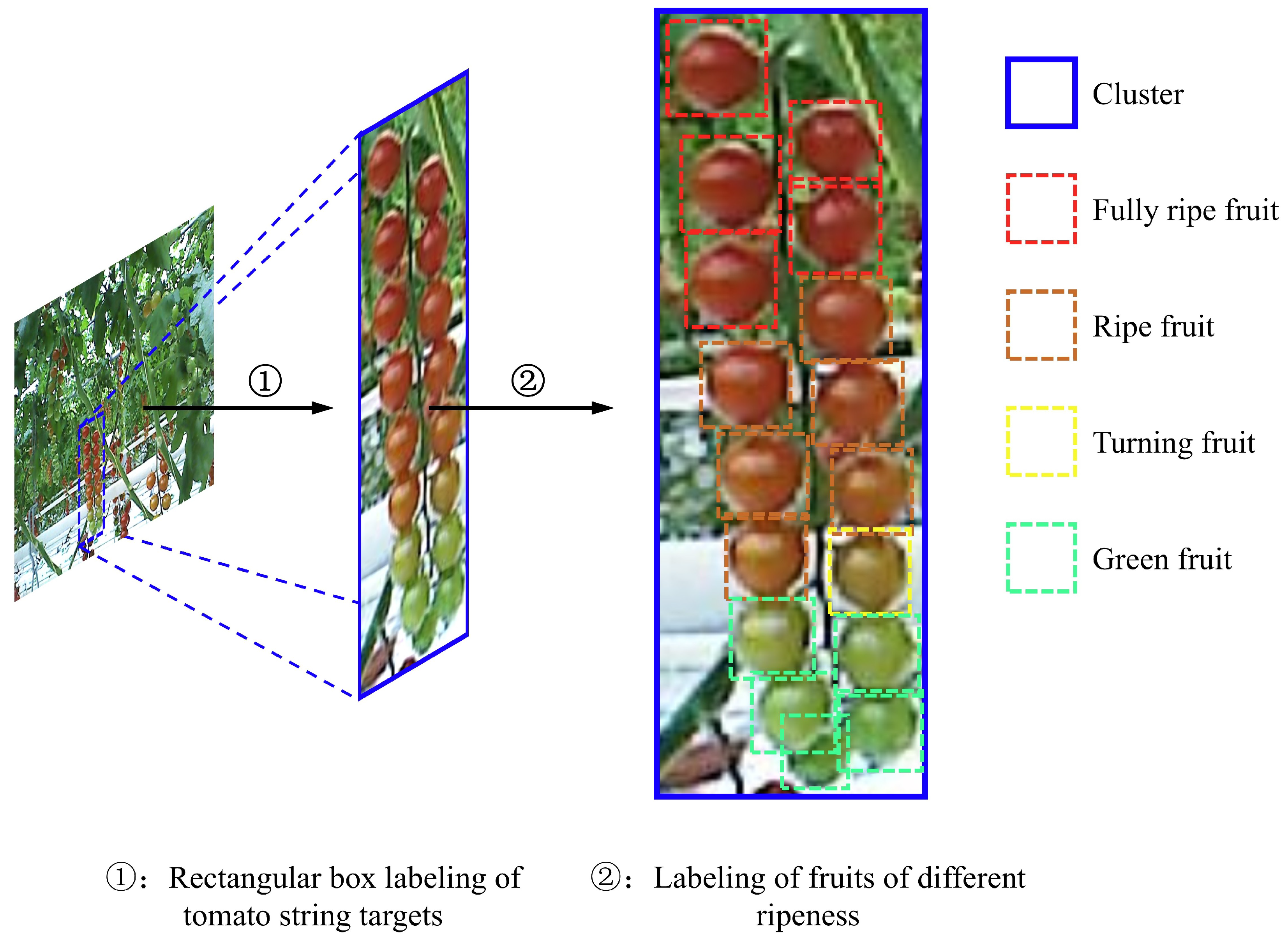

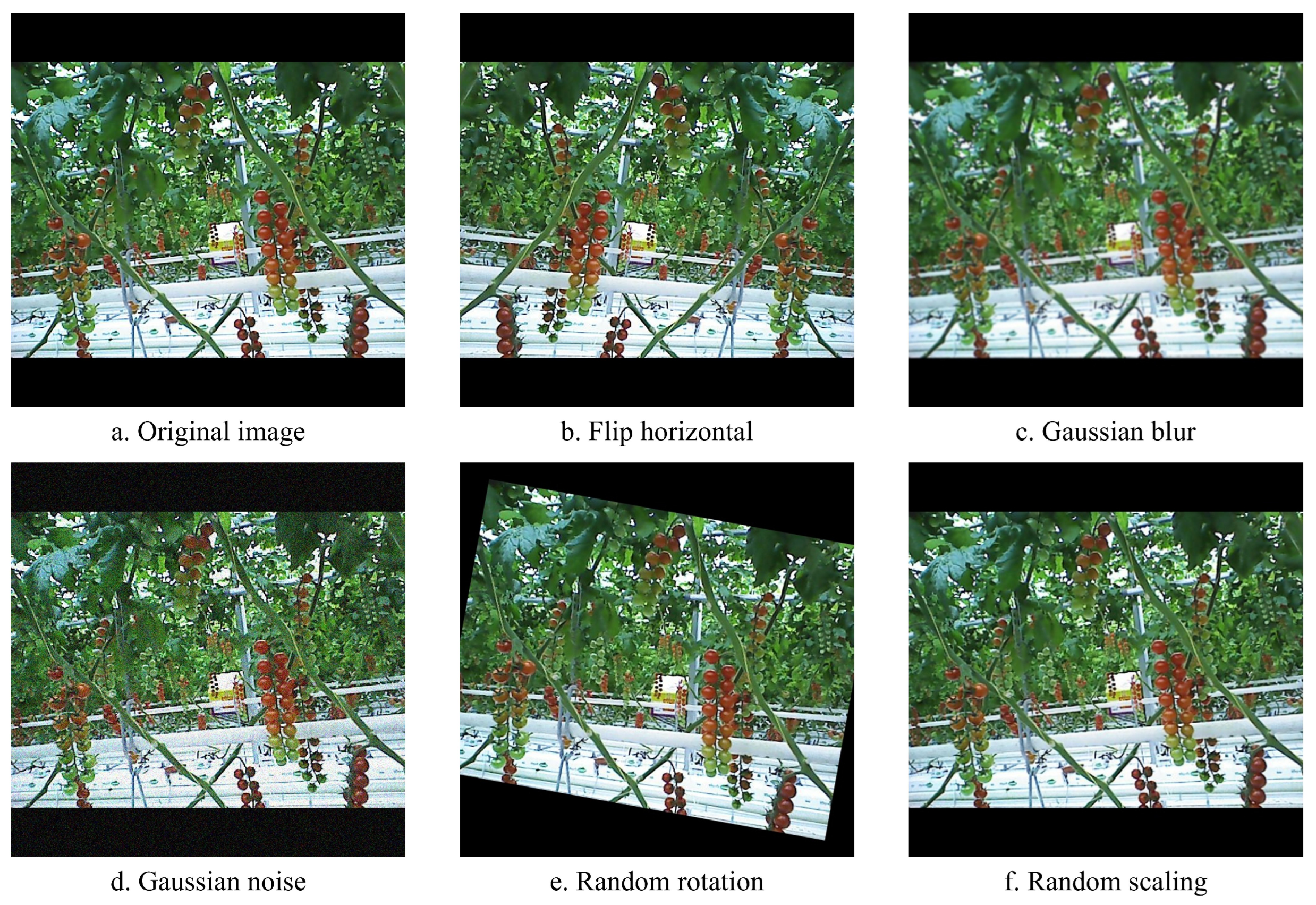

- A high-quality cherry tomato dataset was constructed and annotated with a cluster class and four maturity stages (green, turning, ripe, and fully ripe). Data augmentation was applied to simulate varied environmental conditions.

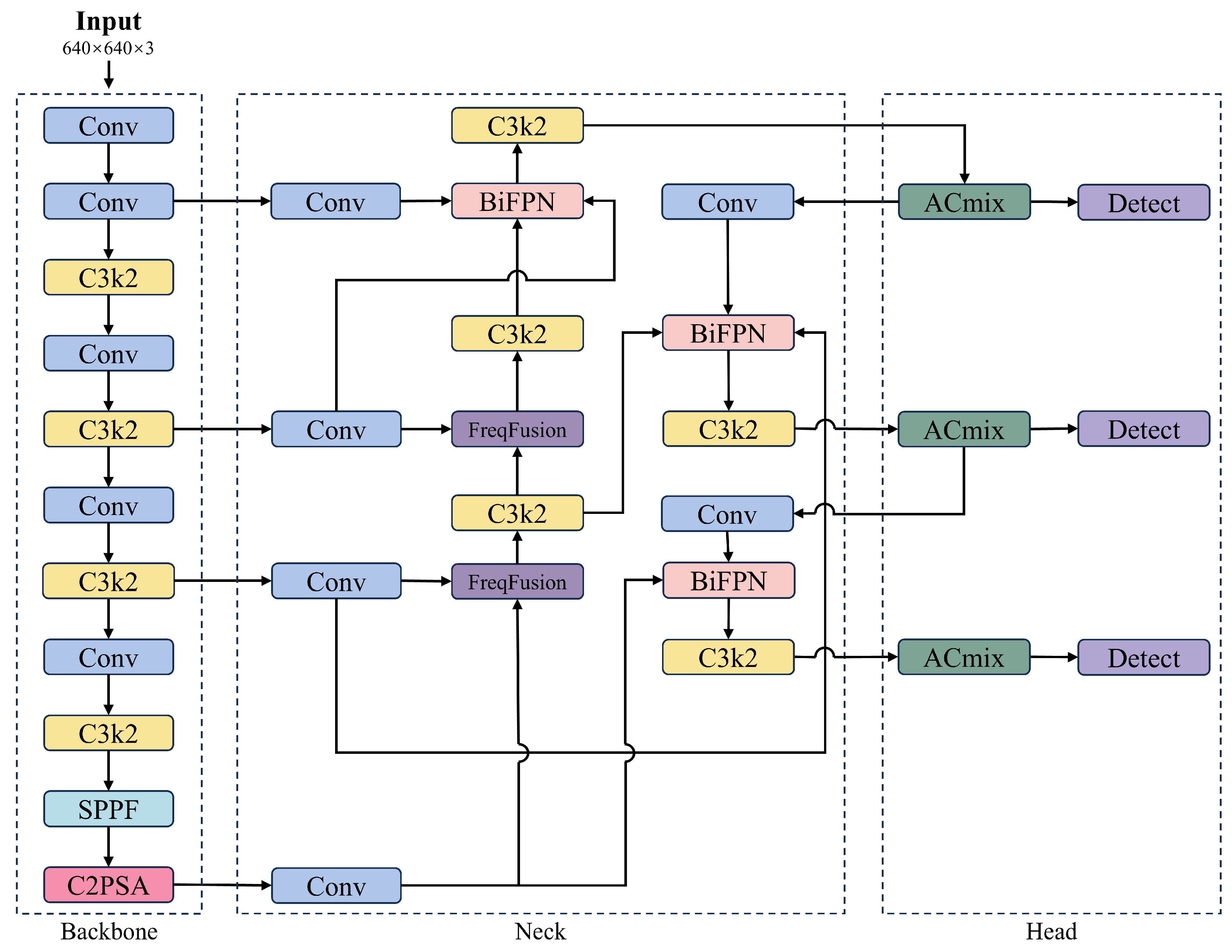

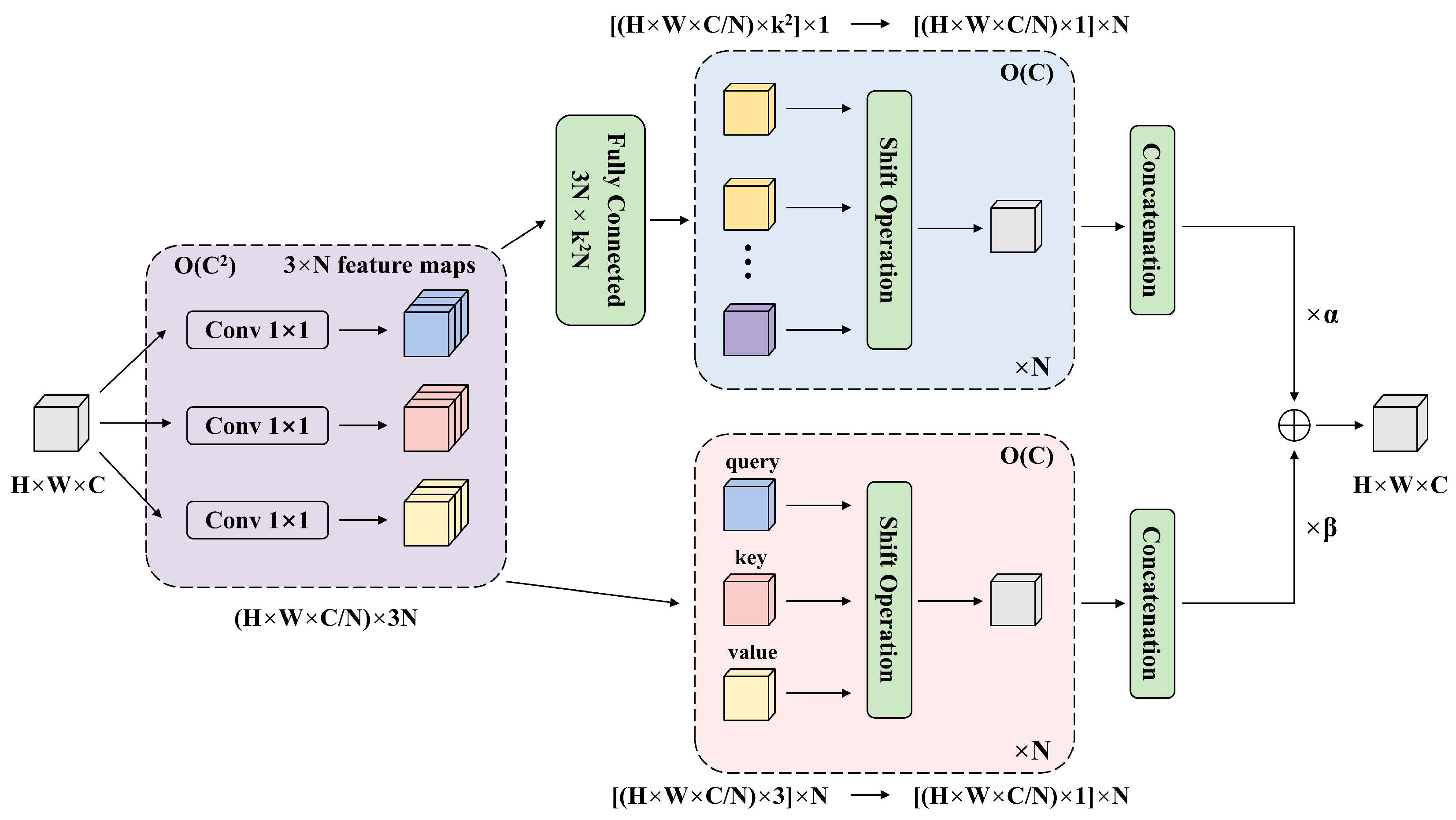

- The AFBF-YOLO network was developed by integrating ACmix, FreqFusion-BiFPN, and Inner-Focaler-IoU, aiming to enhance detection accuracy and robustness under challenging scenarios.

- Extensive experiments and ablation studies were carried out, demonstrating that AFBF-YOLO significantly outperforms mainstream YOLO networks in both detection precision and computational efficiency.

- A practical and scalable solution was established for the simultaneous detection of cherry tomato clusters and their maturity stages in real time, providing a foundational step toward the integration of AI-based perception systems into robotic harvesting platforms.
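
The Inner-Focaler-IoU loss named in the contributions builds on two published ideas: Inner-IoU computes IoU on auxiliary boxes scaled about each box centre, and Focaler-IoU linearly remaps IoU onto an interval [d, u]. The following is a minimal sketch of one plausible way to compose them, assuming boxes in (cx, cy, w, h) format. The function names and the defaults `ratio=0.75`, `d=0.0`, and `u=0.95` are illustrative assumptions, not settings reported in this paper.

```python
def inner_iou(box, gt, ratio=0.75):
    """IoU of auxiliary boxes scaled by `ratio` about each box centre.

    Boxes are (cx, cy, w, h). ratio < 1 shrinks both boxes, ratio > 1 enlarges them.
    """
    (cx, cy, w, h), (gx, gy, gw, gh) = box, gt
    # Edges of the scaled (auxiliary) predicted box.
    left, right = cx - w * ratio / 2, cx + w * ratio / 2
    top, bottom = cy - h * ratio / 2, cy + h * ratio / 2
    # Edges of the scaled (auxiliary) ground-truth box.
    g_left, g_right = gx - gw * ratio / 2, gx + gw * ratio / 2
    g_top, g_bottom = gy - gh * ratio / 2, gy + gh * ratio / 2
    inter_w = max(0.0, min(right, g_right) - max(left, g_left))
    inter_h = max(0.0, min(bottom, g_bottom) - max(top, g_top))
    inter = inter_w * inter_h
    union = (w * h + gw * gh) * ratio ** 2 - inter
    return inter / union if union > 0 else 0.0


def focaler(iou, d=0.0, u=0.95):
    """Linear interval mapping: 0 below d, 1 above u, linear in between."""
    if iou < d:
        return 0.0
    if iou > u:
        return 1.0
    return (iou - d) / (u - d)


def inner_focaler_iou_loss(box, gt, ratio=0.75, d=0.0, u=0.95):
    """Assumed composition: apply the Focaler mapping to the Inner-IoU value."""
    return 1.0 - focaler(inner_iou(box, gt, ratio), d, u)
```

In this sketch a perfectly aligned prediction yields a loss of 0 and a non-overlapping one yields 1. Choosing `ratio < 1` makes the auxiliary boxes smaller, so the same positional offset produces a lower IoU than it would on the full-size boxes.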

2. Materials and Methods

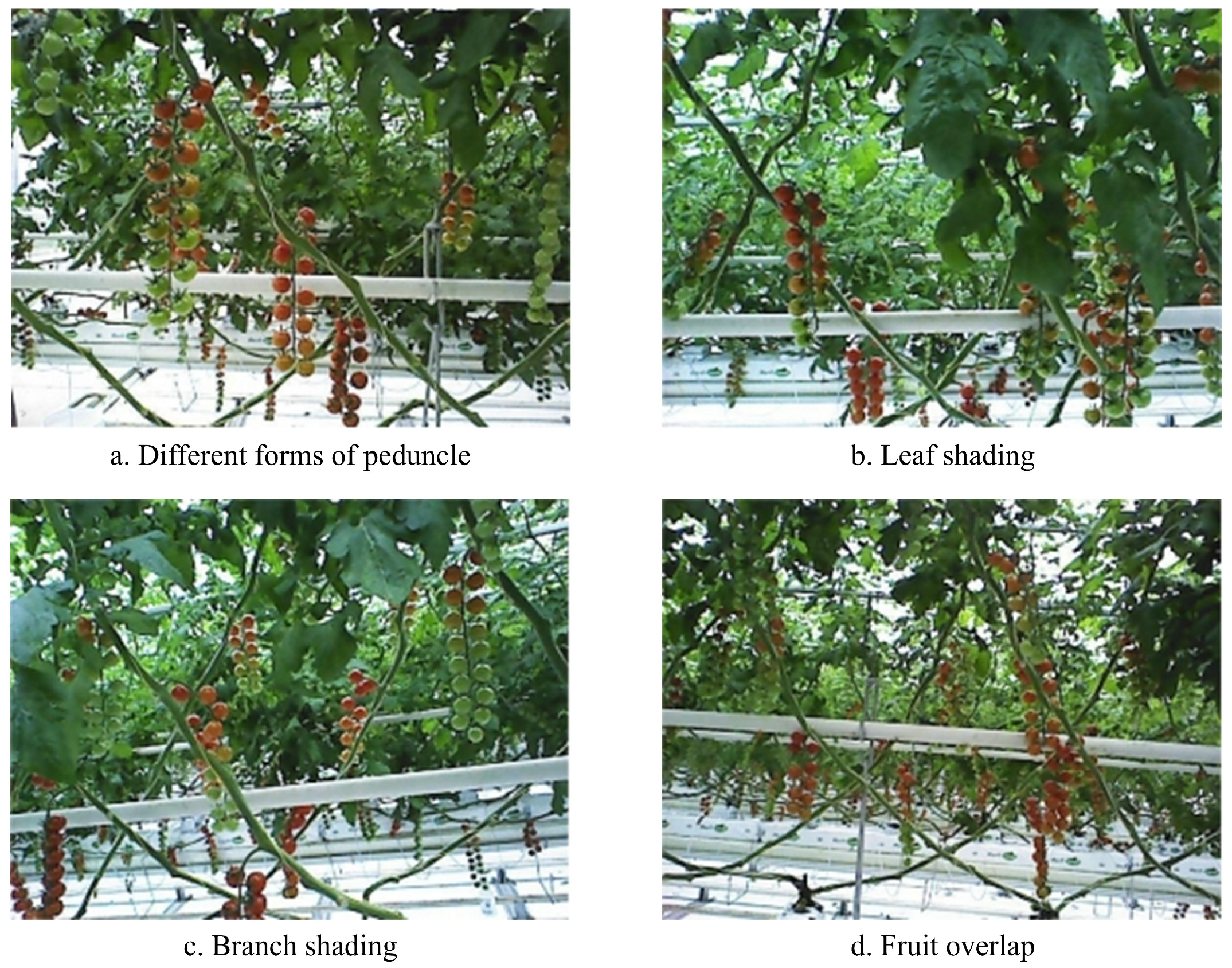

2.1. Data Collection and Dataset Construction

2.1.1. Data Sample Collection

2.1.2. Dataset Processing and Annotation

2.1.3. Data Augmentation

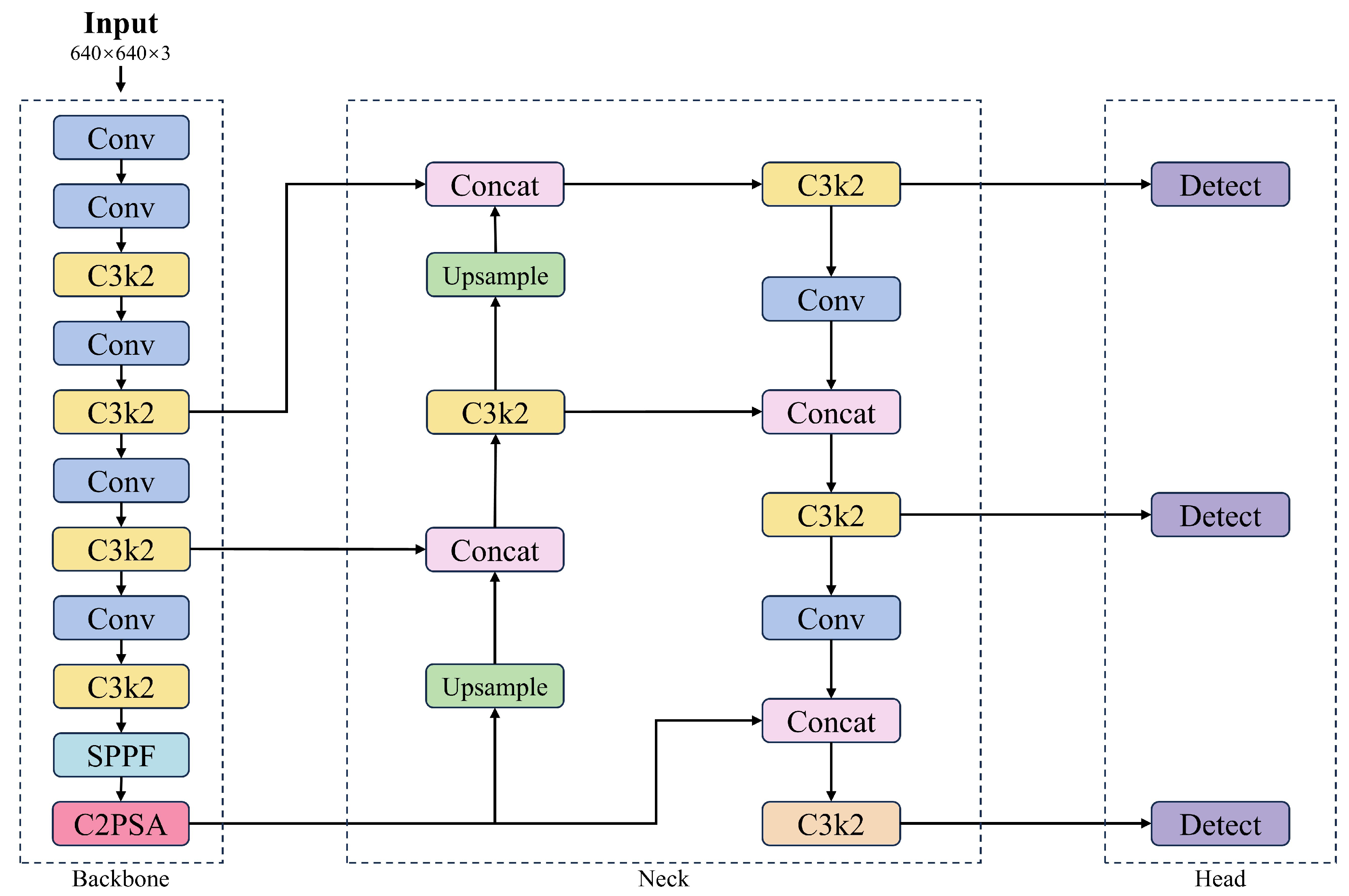

2.2. YOLO11 Model

2.3. Improvements to YOLO11

2.3.1. Attention Module

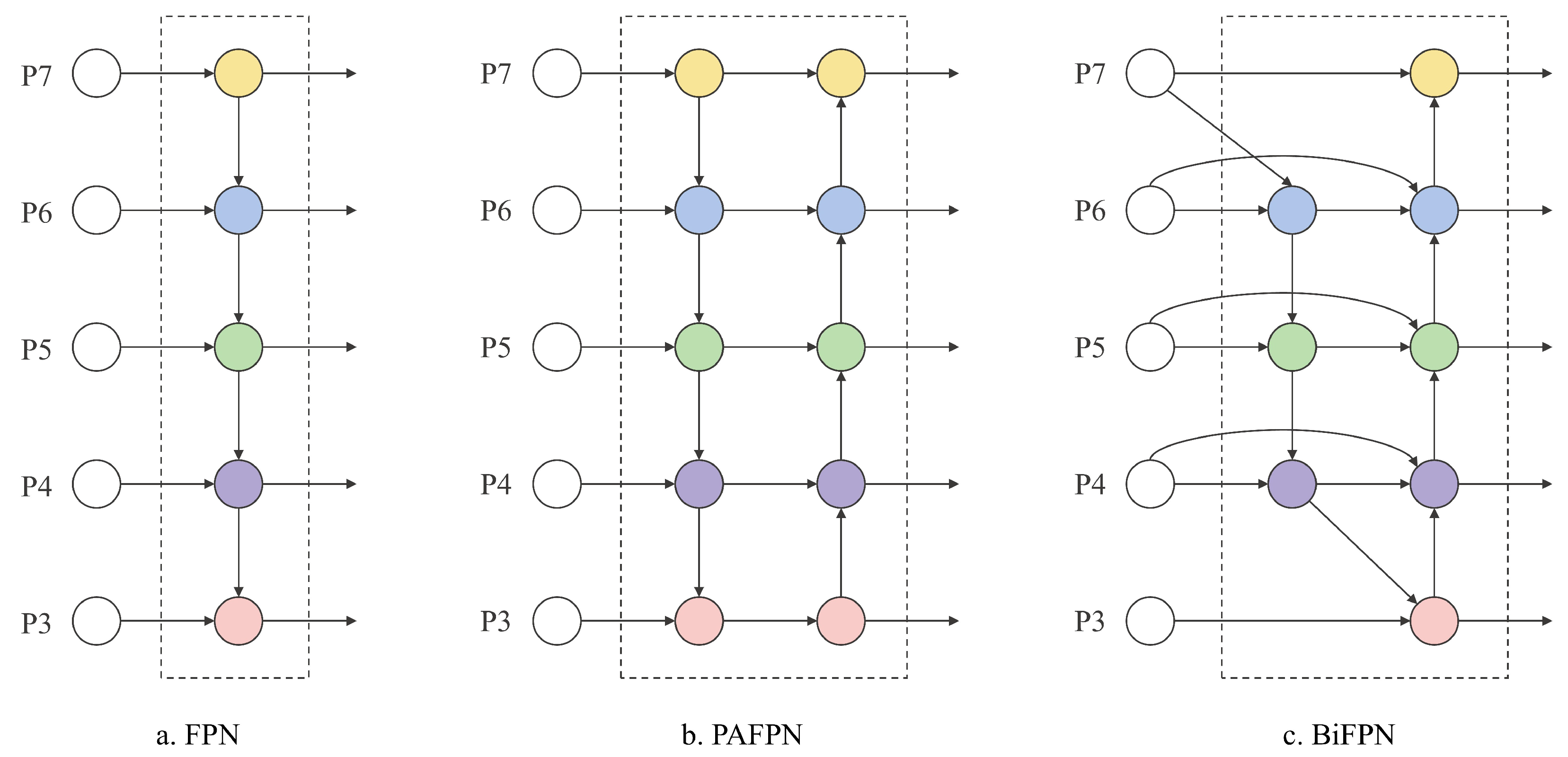

2.3.2. Neck

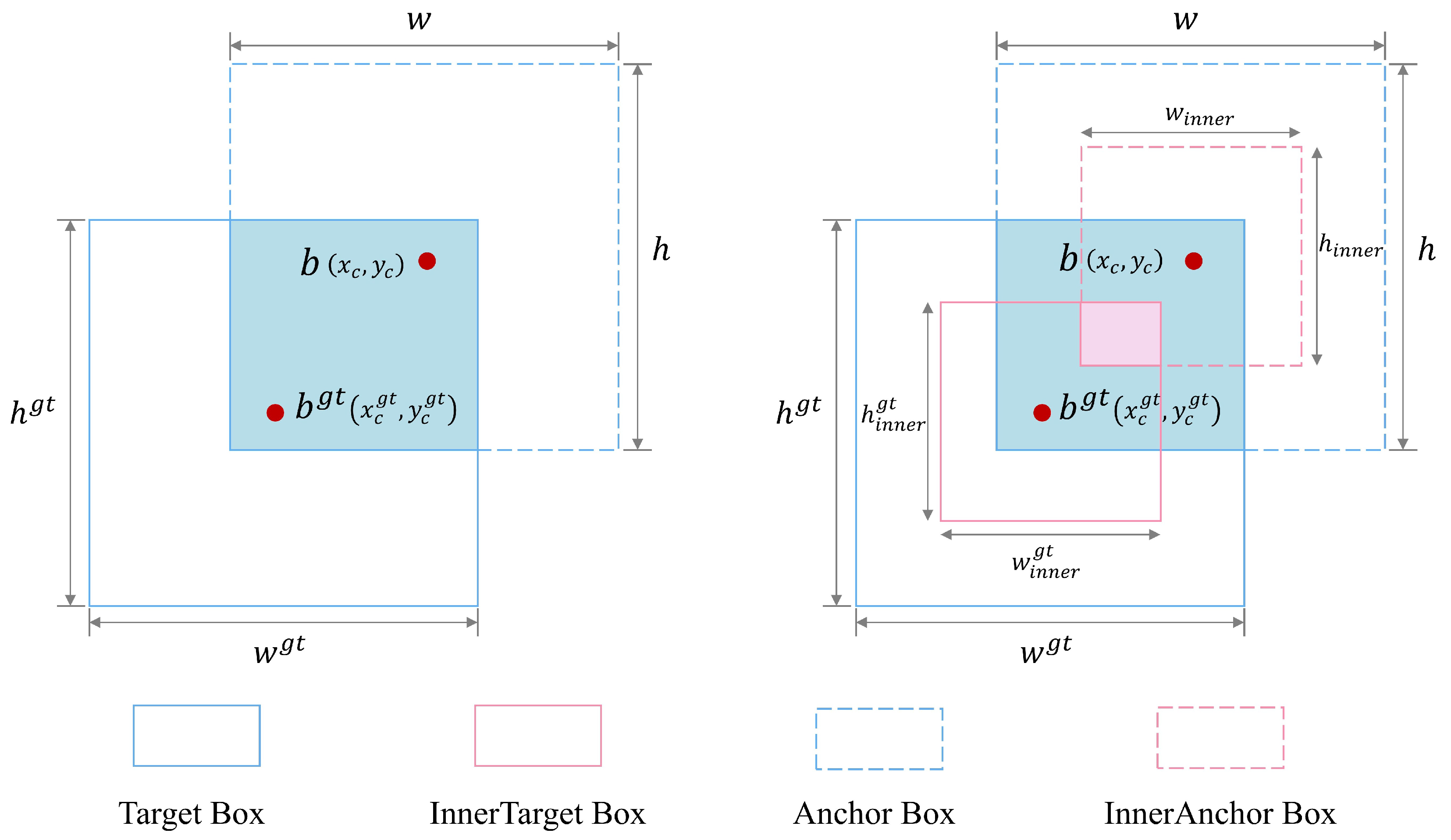

2.3.3. Loss Function

2.4. Evaluation Metrics

3. Experiments and Results

3.1. Experimental Platform and Parameter Settings

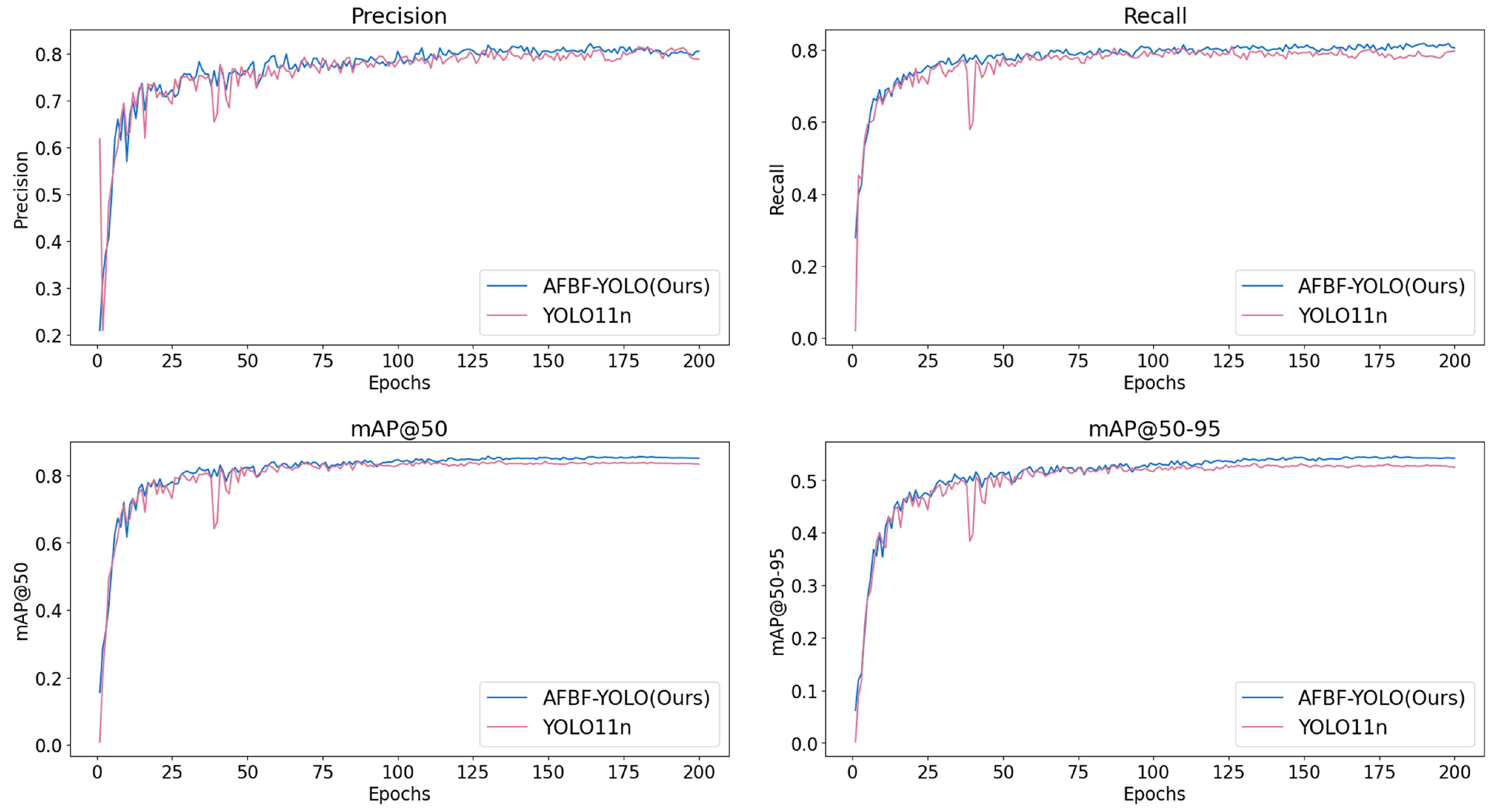

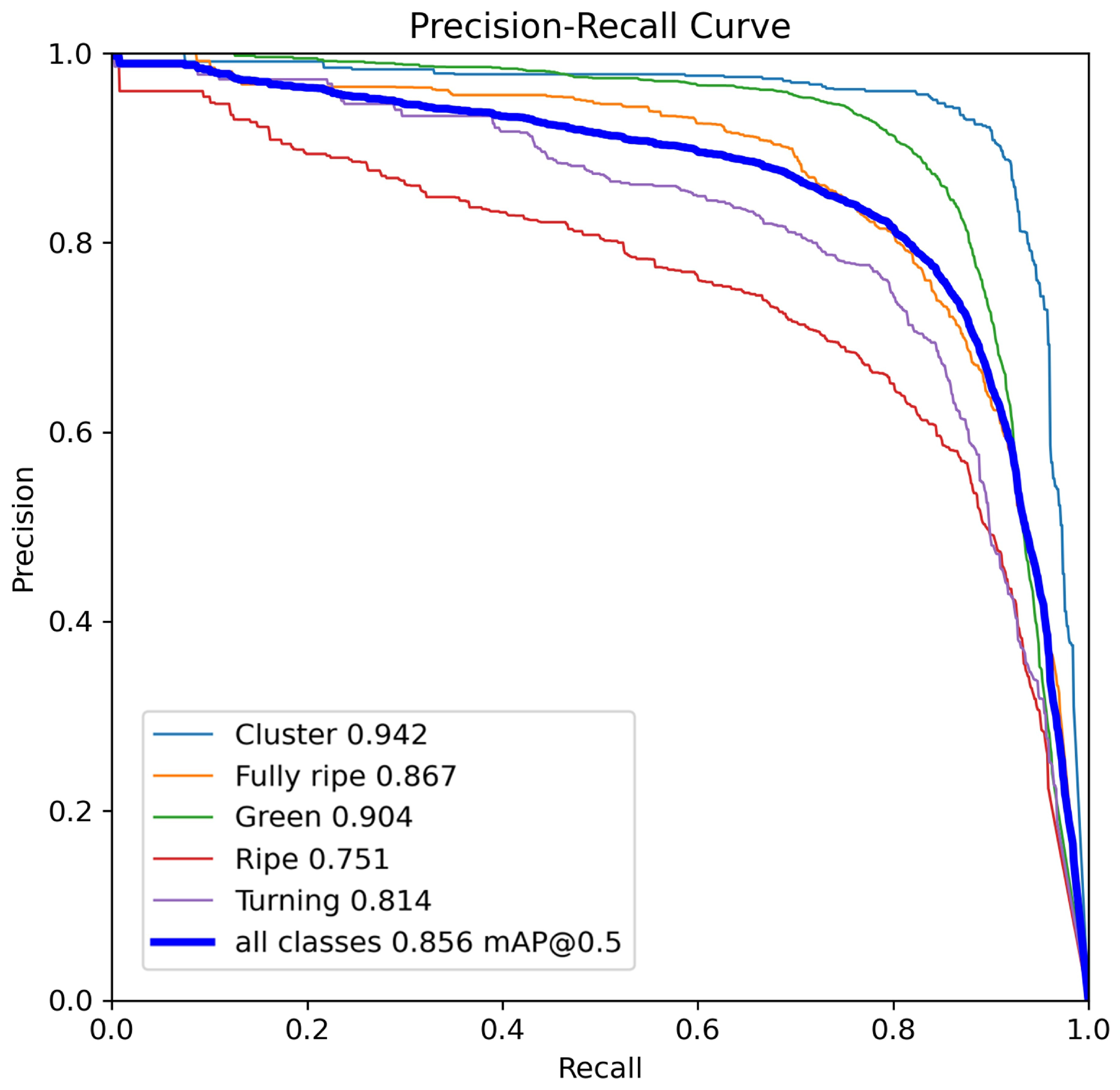

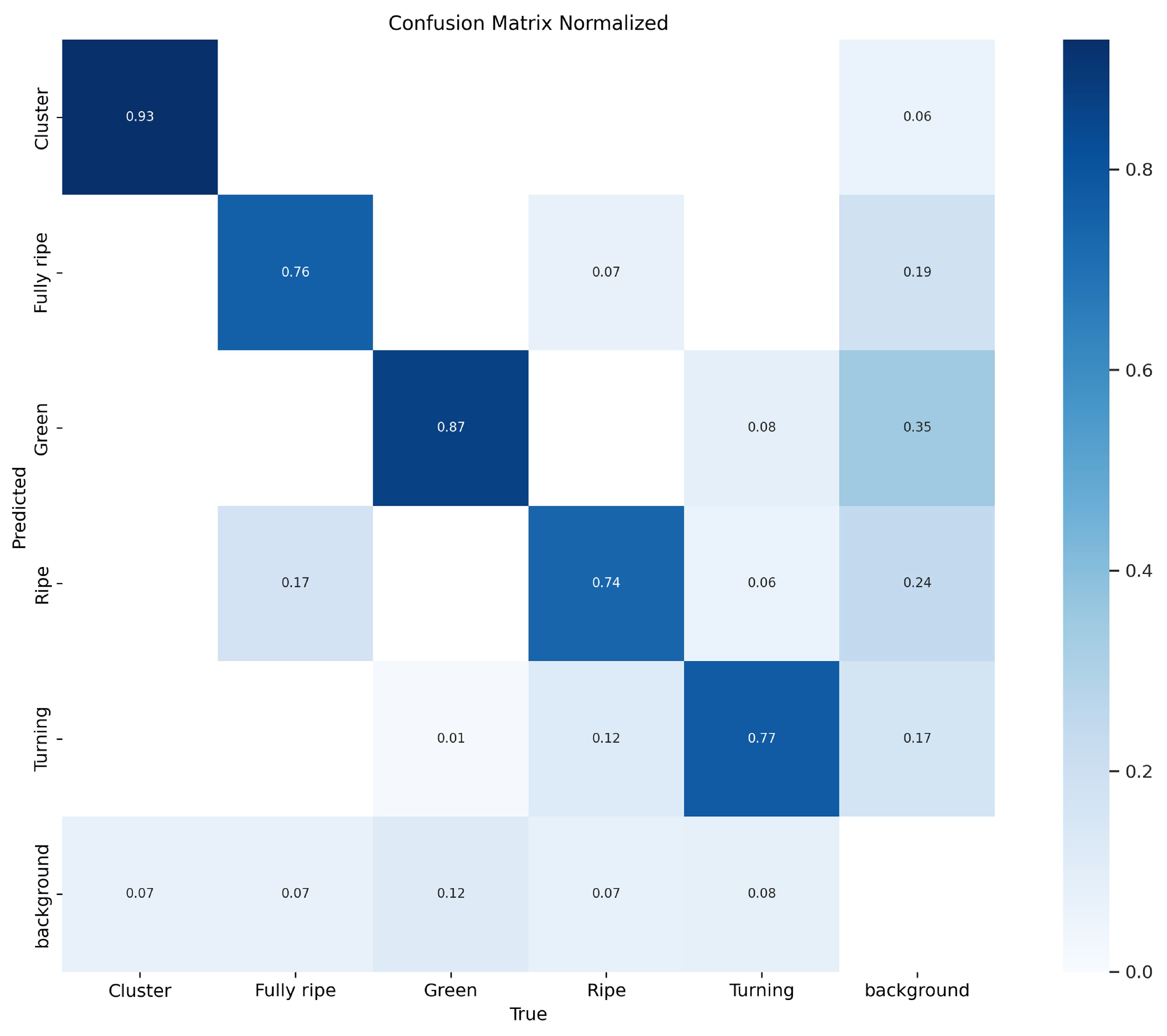

3.2. Performance of AFBF-YOLO Network

3.3. Visual Analysis

3.4. Ablation Experiments

3.5. Performance Comparison with YOLO Series Models

4. Discussion

4.1. Discussion of the Current Research Status

4.2. Limitations and Future Work

- (1)

- (2) Dense fruit clustering and interference from leaves or stems often cause partial or complete occlusion, leading to incomplete feature representation and increased false negatives and localization errors.

- (3) Inconsistent illumination and shadowing introduce variability in color and texture, distorting the visual appearance of fruit surfaces, especially under partial shading or backlighting.

- (4) In the early “green” stage, fruit color and texture often blend with the surrounding vegetation. The small size and low signal-to-background contrast impair fruit contour discrimination, leading to missed detections.

- (5) Labeling noise and human subjectivity during manual annotation may contribute to boundary inconsistencies and marginal misclassifications.

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Class | Training Set | Test Set | Total |
|---|---|---|---|
| Cluster | 12,624 | 525 | 13,149 |
| Fully ripe | 29,340 | 1217 | 30,557 |
| Green | 59,032 | 2612 | 61,694 |
| Ripe | 28,356 | 1031 | 29,387 |
| Turning | 19,842 | 796 | 20,638 |
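
The class distribution in the table above can be summarized with a few lines of arithmetic. The sketch below copies the instance counts from the table, computes each class's share of the training instances, and checks whether each row's training and test counts add up to its listed total. The variable and function names are illustrative.

```python
# Instance counts copied from the table: (training set, test set, listed total).
counts = {
    "Cluster": (12624, 525, 13149),
    "Fully ripe": (29340, 1217, 30557),
    "Green": (59032, 2612, 61694),
    "Ripe": (28356, 1031, 29387),
    "Turning": (19842, 796, 20638),
}


def summarize(counts):
    """Return per-class training share (%) and rows where train + test != total."""
    train_total = sum(train for train, _, _ in counts.values())
    shares = {name: 100 * train / train_total
              for name, (train, _, _) in counts.items()}
    mismatches = {name: train + test - total
                  for name, (train, test, total) in counts.items()
                  if train + test != total}
    return shares, mismatches


shares, mismatches = summarize(counts)
for name, share in sorted(shares.items(), key=lambda kv: -kv[1]):
    print(f"{name:<11}{share:5.1f}% of training instances")
print("train + test minus listed total:", mismatches)
```

On these figures, Green is the largest class at roughly 40% of training instances and Cluster the smallest at roughly 8%. The check also reports a difference of 50 for the Green row, which suggests that one of its three entries contains a typographical error. Which entry is affected cannot be determined from the table alone.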

| Environment Configuration | Parameter |
|---|---|
| Operating system | Windows 11 |
| CPU | Intel(R) Xeon(R) Gold 8481 |
| GPU | NVIDIA RTX 4090 16 GB |
| Development environment | Visual Studio Code 1.98 |
| Programming language | Python 3.10.8 |
| Deep learning framework | PyTorch 2.1.0 |
| Operating platform | CUDA 12.1 |

| A | B | C | P (%) | R (%) | F1 Score (%) | (%) | (%) | Parameters | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|---|
| × | × | × |  |  |  |  |  | 2,590,815 |  |
| ✓ | × | × |  |  |  |  |  | 2,887,161 |  |
| × | ✓ | × |  |  |  |  |  | 2,755,683 |  |
| × | × | ✓ |  |  |  |  |  | 2,590,815 |  |
| ✓ | ✓ | × |  |  |  |  |  | 2,730,749 |  |
| ✓ | × | ✓ |  |  |  |  |  | 2,879,473 |  |
| × | ✓ | ✓ |  |  |  |  |  | 2,434,403 |  |
| ✓ | ✓ | ✓ |  |  |  |  |  | 2,730,749 |  |
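
The P, R, and F1 Score columns follow the usual detection metrics, where F1 is conventionally the harmonic mean of precision and recall. A minimal sketch of that standard definition is given below. The function name and the example counts are illustrative and are not values from this study.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1 from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Harmonic mean of precision and recall; defined as 0 when both are 0.
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return precision, recall, f1


# Hypothetical counts: 90 correct detections, 10 false alarms, 30 missed fruits.
p, r, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
print(f"P = {100 * p:.1f}%, R = {100 * r:.1f}%, F1 = {100 * f1:.1f}%")
```

Because the harmonic mean is pulled toward the lower of its two inputs, F1 penalizes a detector that gains precision by missing many fruits, or gains recall by raising many false alarms.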

| Model | P (%) | R (%) | F1 Score (%) | (%) | (%) | Parameters | FLOPs (G) | Time (ms) |
|---|---|---|---|---|---|---|---|---|
| YOLOv5n |  |  |  |  |  | 2,503,919 |  |  |
| YOLOv8n |  |  |  |  |  | 3,006,623 |  |  |
| YOLOv9t |  |  |  |  |  | 2,006,383 |  |  |
| YOLOv10n |  |  |  |  |  | 2,696,366 |  |  |
| YOLO11n |  |  |  |  |  | 2,590,815 |  |  |
| AFBF-YOLO (Ours) |  |  |  |  |  | 2,730,749 |  |  |
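
The parameter counts in the comparison table are easier to compare when expressed in millions and relative to the YOLO11n baseline. The sketch below performs that conversion using the counts copied from the table. The helper name `relative_to` is illustrative.

```python
# Parameter counts copied from the model comparison table.
params = {
    "YOLOv5n": 2503919,
    "YOLOv8n": 3006623,
    "YOLOv9t": 2006383,
    "YOLOv10n": 2696366,
    "YOLO11n": 2590815,
    "AFBF-YOLO": 2730749,
}


def relative_to(baseline, params):
    """Percentage change in parameter count of each model versus the baseline."""
    base = params[baseline]
    return {name: 100 * (count - base) / base for name, count in params.items()}


rel = relative_to("YOLO11n", params)
for name, count in params.items():
    print(f"{name:<10} {count / 1e6:.2f} M  {rel[name]:+.1f}% vs YOLO11n")
```

With these counts, AFBF-YOLO has about 5.4% more parameters than the YOLO11n baseline (2.73 M versus 2.59 M), while YOLOv9t is the smallest model in the table.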

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, B.-J.; Bu, J.-Y.; Xia, J.-L.; Li, M.-X.; Su, W.-H. AFBF-YOLO: An Improved YOLO11n Algorithm for Detecting Bunch and Maturity of Cherry Tomatoes in Greenhouse Environments. Plants 2025, 14, 2587. https://doi.org/10.3390/plants14162587
