A Detection Approach for Wheat Spike Recognition and Counting Based on UAV Images and Improved Faster R-CNN

Abstract

1. Introduction

1.1. Wheat Yield Estimation Significance and Technological Evolution

1.2. Critical Limitations in Current Detection Methodologies

1.3. Systematic Limitations in Current Research Paradigms

1.4. Proposed Innovations and Contributions

2. Results

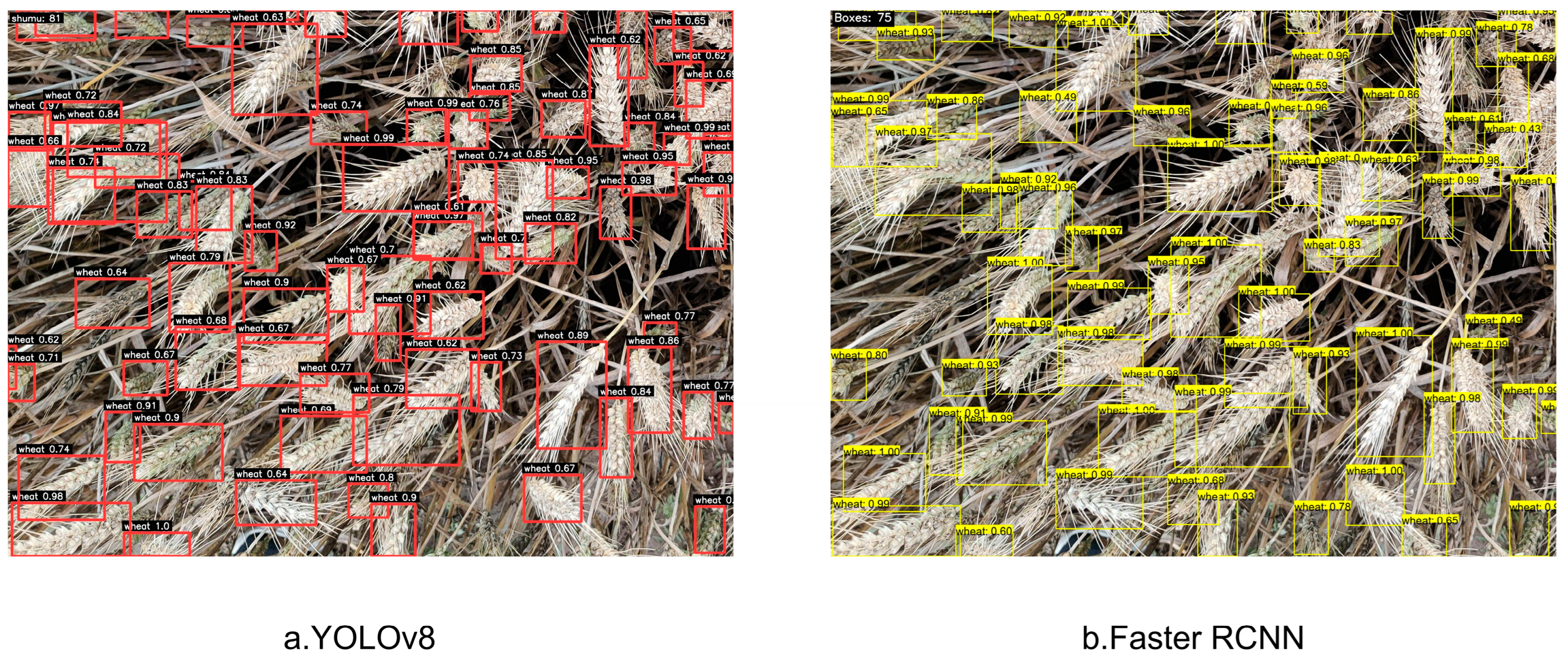

2.1. Performance Comparison of Faster R-CNN and YOLOv8

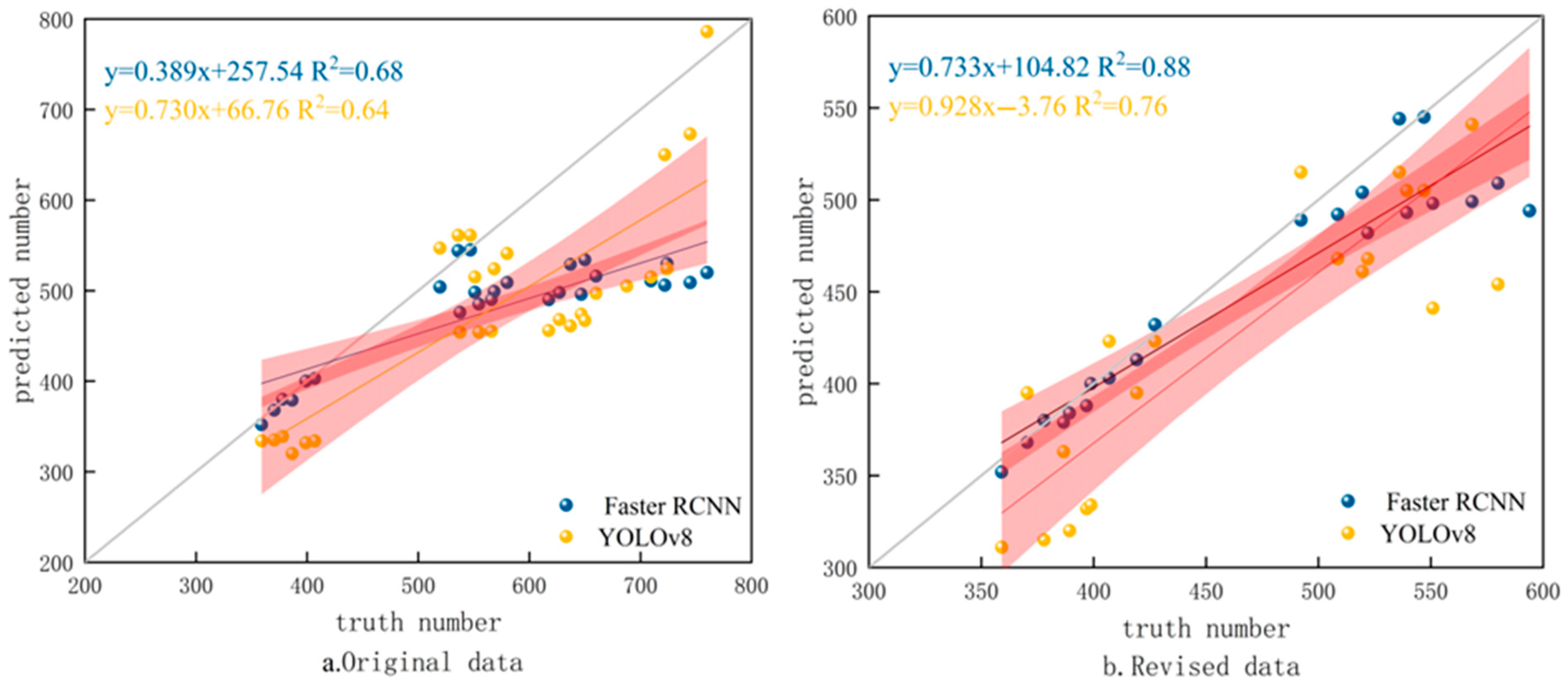

2.2. Correlation Analysis of Test and Actual Values

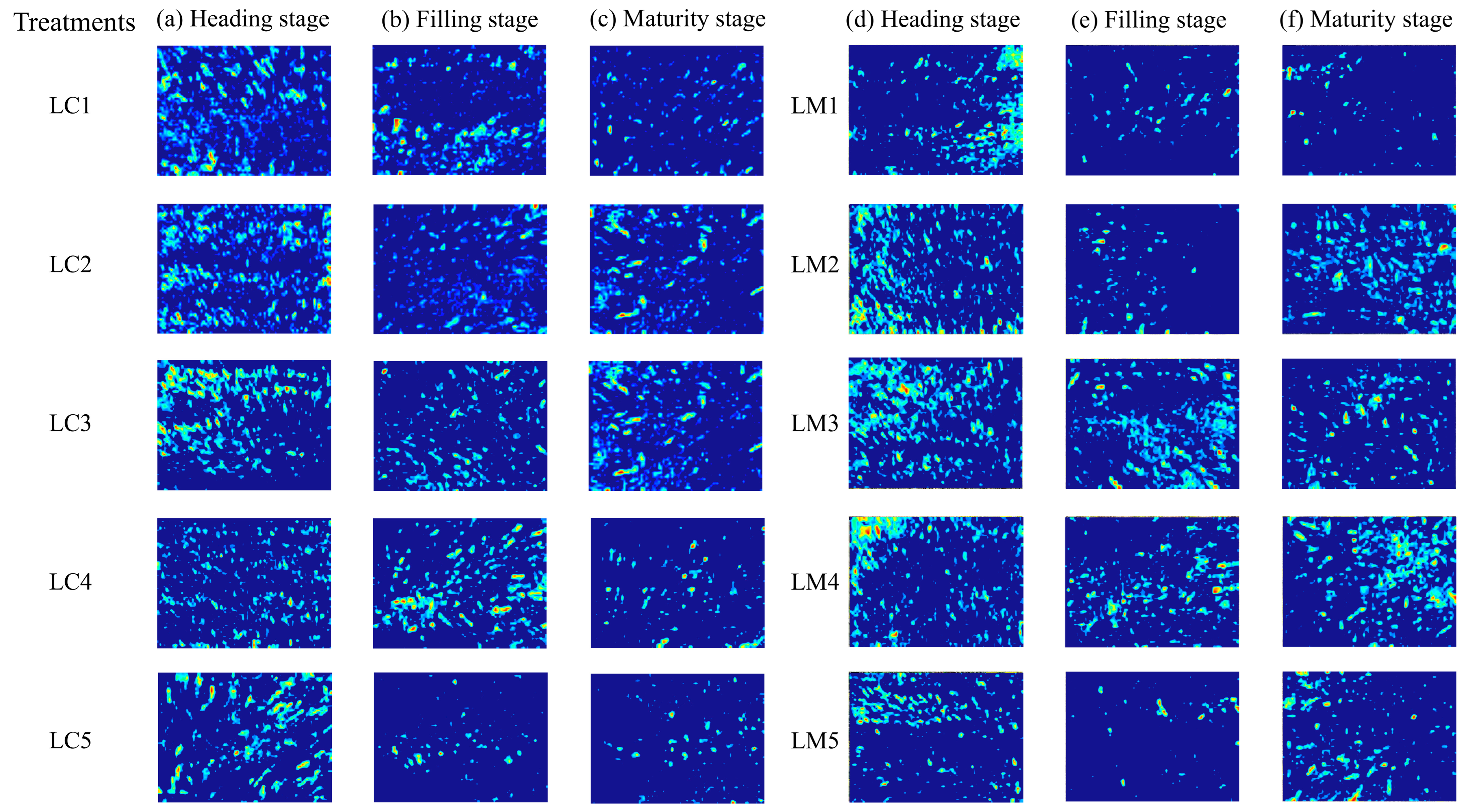

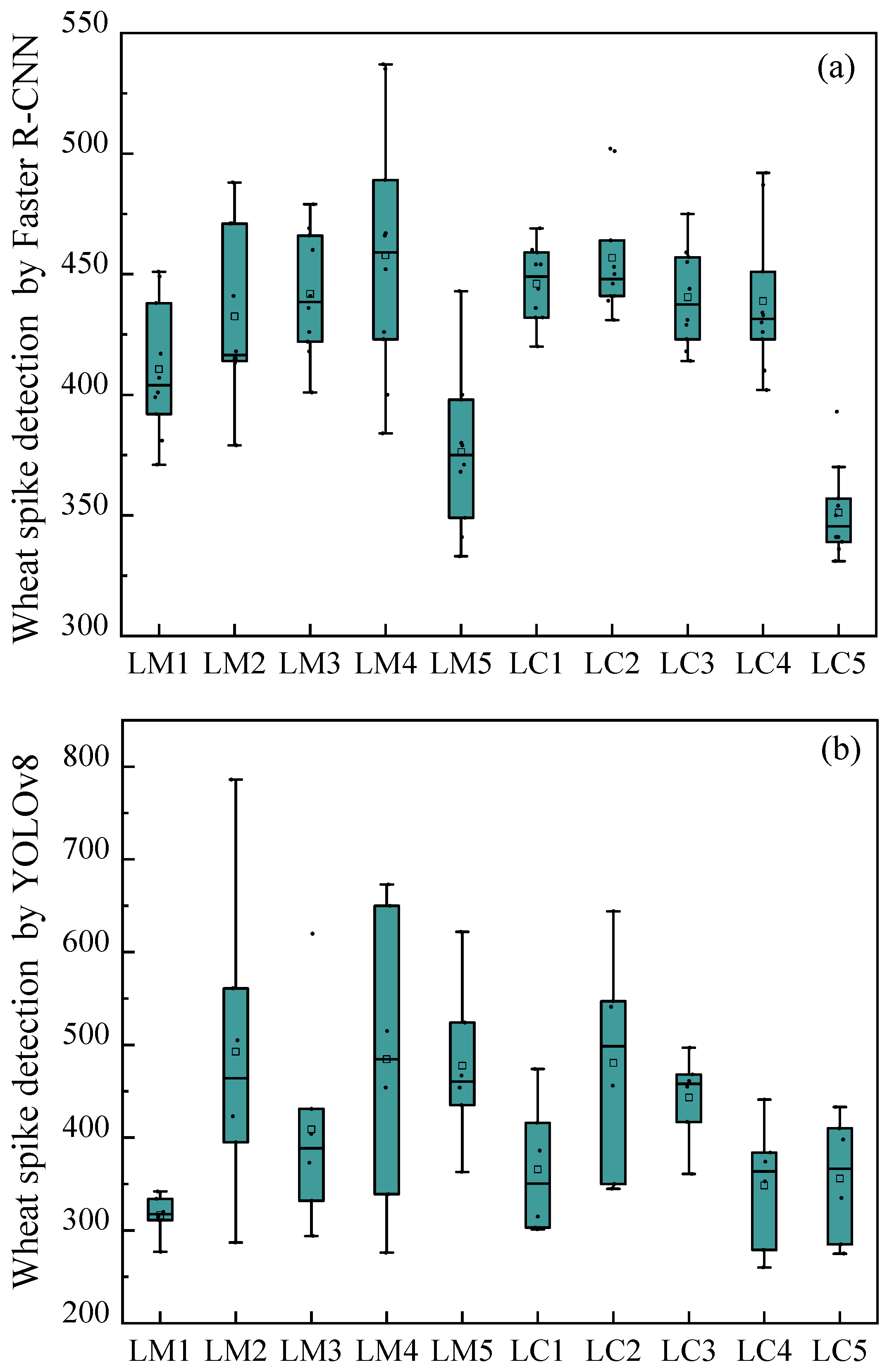

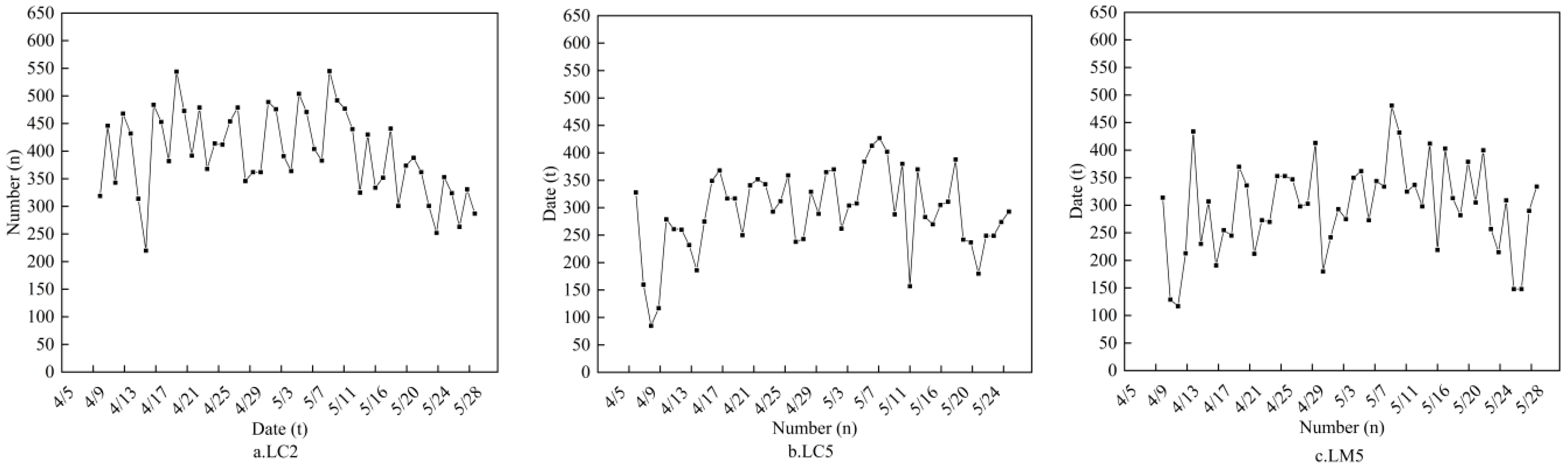

2.3. Generalization Analysis of the Fluctuation of Wheat Spike Number in Different Periods

3. Discussion

3.1. Advantages and Limitations of the Improved Faster R-CNN Model

3.2. The Relationship Between Different Processing Methods of Fields and Recognition Accuracy

3.3. Correlation Analysis of the Model

4. Materials and Methods

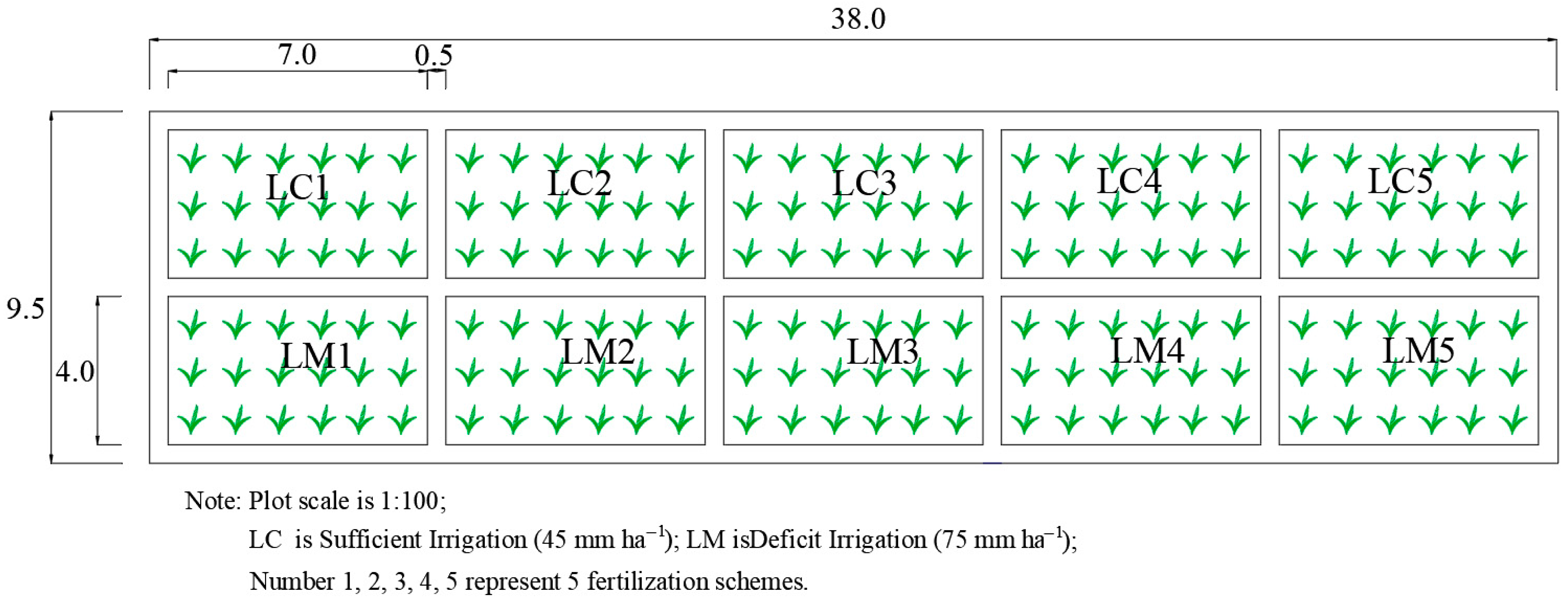

4.1. Field Experimental Site and Design

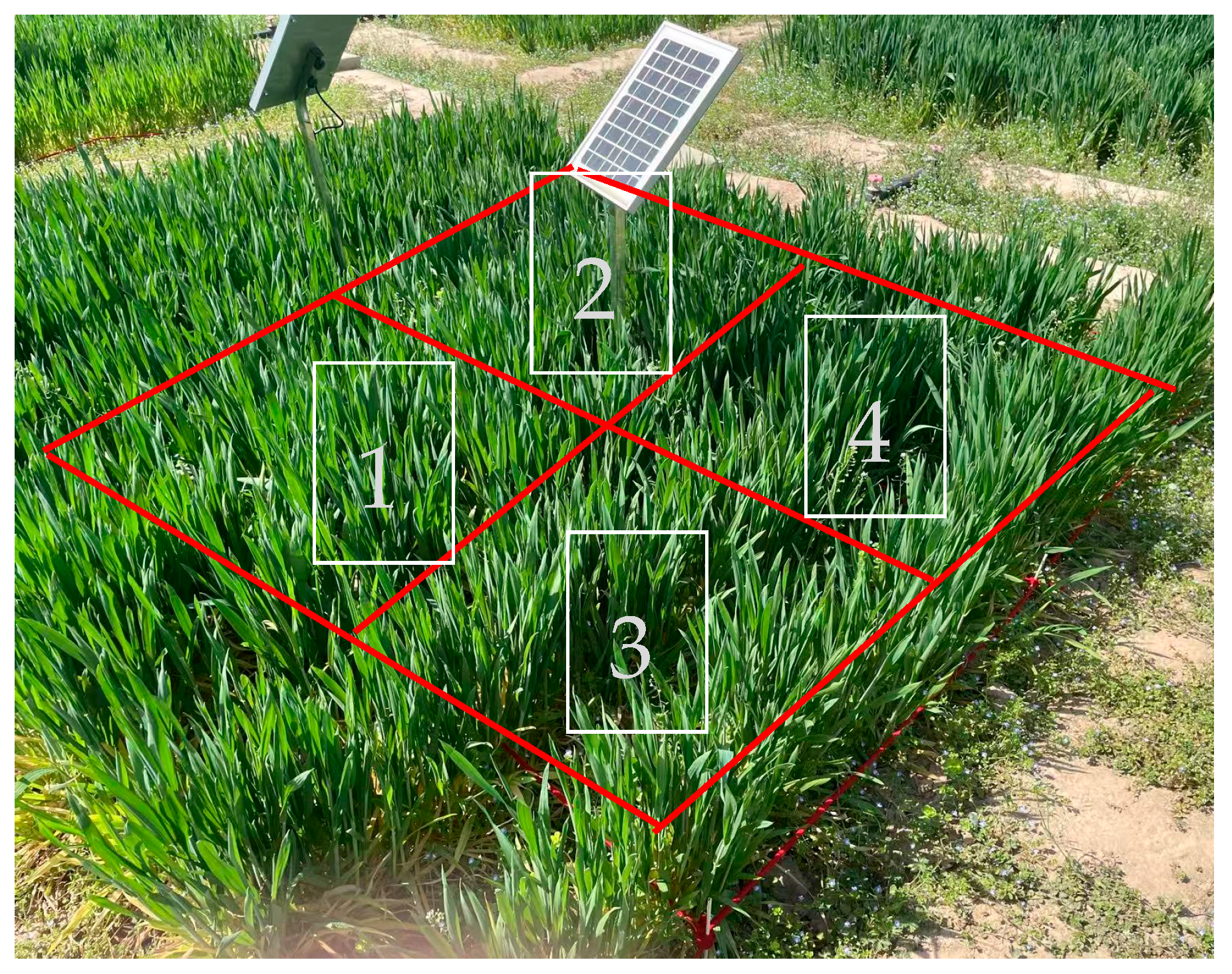

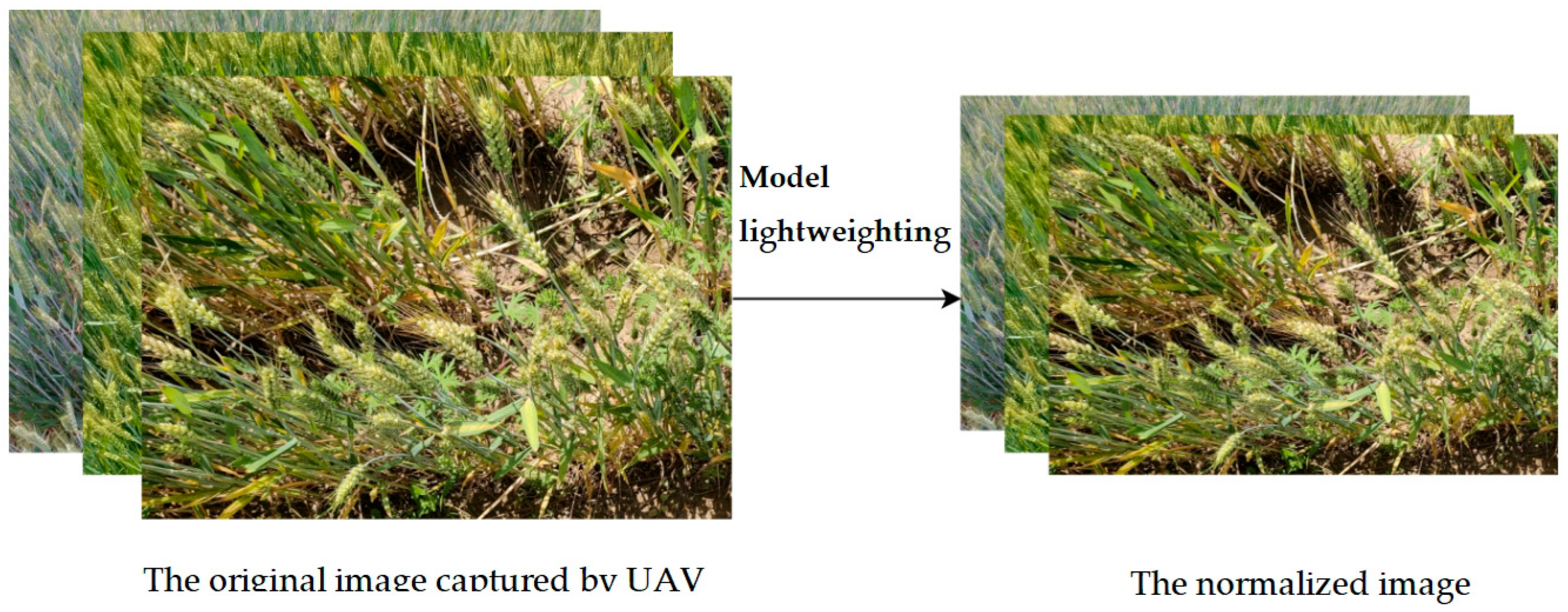

4.2. A Dual-Dataset Collaboration Strategy for Public Datasets and Experimental Datasets

4.3. Annotation Protocol and Quality Control for Training Details

4.4. Faster R-CNN Model for Wheat Spike Detection

4.4.1. Faster R-CNN Network Structure

4.4.2. ResNet-50 Module Details

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Parameter | Value |
|---|---|
| Optimizer | SGD |
| Momentum | 0.937 |
| Label smoothing | 0.0001 |
| Batch size | 4 |
| Epochs | 100 |
| Learning rate | 0.001 |
| Workers | 8 |
| Loss | 0.6291 |
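The optimizer settings in the table above (SGD, momentum 0.937, learning rate 0.001) can be read as the classical momentum update. The following is a minimal sketch in plain Python, assuming the common formulation in which the velocity accumulates gradients and the weights move against the velocity. The function name `sgd_momentum_step` and the toy objective are illustrative assumptions, not part of the original training code.

```python
def sgd_momentum_step(weights, grads, velocity, lr=0.001, momentum=0.937):
    """One SGD-with-momentum update; returns the new (weights, velocity).

    Assumed update rule (hypothetical helper, not the authors' code):
        v <- momentum * v + g
        w <- w - lr * v
    """
    new_velocity = [momentum * v + g for v, g in zip(velocity, grads)]
    new_weights = [w - lr * v for w, v in zip(weights, new_velocity)]
    return new_weights, new_velocity


# Toy example: minimise f(w) = w^2, whose gradient is 2w.
w, v = [1.0], [0.0]
for _ in range(3):
    g = [2.0 * w[0]]
    w, v = sgd_momentum_step(w, g, v)
print(w, v)
```

With a momentum this close to 1, past gradients decay slowly, so the effective step grows over consecutive iterations that point in the same direction.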

| Model | Training Dataset | Precision (%) | Batch Size | Inference GFLOPs | Training Time (min) | Epochs | Training GFLOPs | Recall | F1-Score | IoU |
|---|---|---|---|---|---|---|---|---|---|---|
| Faster R-CNN | global-wheat-detection | 92.1 ± 0.012 a | 4 | 1.7 | 714 | 100 | 6.5 | 0.8872 | 0.9038 | 0.5 |
| YOLOv8 | global-wheat-detection | 89.1 ± 0.015 ab | 4 | 0.7 | 53 | 100 | 0.9 | 0.8825 | 0.8867 | 0.5 |
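The F1-scores in the comparison table follow from the precision and recall columns through the harmonic mean. The sketch below recomputes them, assuming the precision values (92.1 and 89.1) are percentages and are therefore entered as 0.921 and 0.891. The function name `f1_score` and the `reported` dictionary are illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both as fractions in [0, 1])."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) taken from the comparison table;
# precision is converted from percent to a fraction (assumption).
reported = {
    "Faster R-CNN": (0.921, 0.8872, 0.9038),
    "YOLOv8": (0.891, 0.8825, 0.8867),
}

for name, (p, r, f1_reported) in reported.items():
    print(f"{name}: F1 = {f1_score(p, r):.4f} (reported {f1_reported})")
```

Under that assumption, the recomputed values agree with the reported F1-scores to four decimal places.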

| Treatments | Spike Density (spikes/m²) | Effective Spike Density (spikes/m²) | Faster R-CNN-Based Wheat Spike Count | Revised Number of Wheat Spikes | Precision (%) | Relative Error (%) |
|---|---|---|---|---|---|---|
| LM1 | 601 ± 13 b | 566 ± 11 b | 411 ± 20 b | 446 ± 30 b | 74.18 | 25.82 |
| LM2 | 747 ± 24 ab | 724 ± 14 ab | 433 ± 34 ab | 467 ± 37 b | 62.86 | 37.14 |
| LM3 | 800 ± 16 ab | 760 ± 15 a | 442 ± 26 ab | 480 ± 28 a | 59.96 | 40.04 |
| LM4 | 773 ± 16 a | 739 ± 13 ab | 458 ± 52 a | 497 ± 57 a | 64.32 | 35.68 |
| LM5 | 459 ± 9 c | 407 ± 8 c | 376 ± 17 c | 408 ± 35 bc | 88.99 | 11.01 |
| LC1 | 612 ± 12 b | 580 ± 11 a | 446 ± 16 a | 484 ± 17 a | 79.13 | 20.87 |
| LC2 | 561 ± 11 ab | 547 ± 10 ab | 457 ± 25 a | 496 ± 27 a | 88.41 | 11.59 |
| LC3 | 702 ± 14 a | 660 ± 13 a | 441 ± 20 ab | 478 ± 22 b | 68.13 | 31.87 |
| LC4 | 697 ± 13 ab | 650 ± 16 a | 439 ± 30 ab | 476 ± 32 b | 68.36 | 31.64 |
| LC5 | 409 ± 8 c | 378 ± 7 c | 351 ± 19 c | 381 ± 20 c | 93.23 | 6.77 |
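In every row of the table above, the precision and relative error columns sum to 100%. The sketch below shows one way such a pair can be computed, assuming the relative error is the absolute difference between the revised count and the measured spike density, divided by the measured spike density. This formula is inferred from the table values and is not stated in the source; the function name `counting_accuracy` is illustrative.

```python
def counting_accuracy(predicted, ground_truth):
    """Return (accuracy %, relative error %) for a predicted count.

    Assumed definition (inferred from the table, not confirmed by the source):
        relative error = |ground_truth - predicted| / ground_truth * 100
        accuracy       = 100 - relative error
    """
    relative_error = abs(ground_truth - predicted) / ground_truth * 100.0
    return 100.0 - relative_error, relative_error


# LC2 row: revised count 496, measured spike density 561 spikes/m^2.
accuracy, error = counting_accuracy(496, 561)
print(f"LC2: accuracy = {accuracy:.2f}%, relative error = {error:.2f}%")
```

For LC2 this reproduces the tabulated 88.41% and 11.59%. Other rows are matched only approximately when recomputed from the rounded means shown in the table, presumably because the published percentages were derived from unrounded data.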

Soil physical properties by depth:

| Soil Depth (cm) | Soil Bulk Density (g/cm³) | Field Capacity (cm³/cm³) | Nitrate Nitrogen (mg/cm³) | Ammonium Nitrogen (mg/cm³) | Soil Organic Matter (g·kg⁻¹) | Total N (g·kg⁻¹) |
|---|---|---|---|---|---|---|
| 0–20 | 1.35 | 32 | 0.0368 | 0.0104 | 9.16 | 0.5665 |
| 20–40 | 1.56 | 34 | 0.0204 | 0.0033 | 6.67 | 0.3635 |
| 40–60 | 1.41 | 34 | 0.0132 | 0.0018 | 2.79 | 0.1945 |

| Parameter | Value | Rationale |
|---|---|---|
| Batch Size | 16 | Balanced GPU memory utilization |
| Learning Rate | 0.001 (Adam optimizer) | Stable convergence for detection |
| Training Epochs | 100 | Early stopping at plateau (patience = 10) |
| Hardware | NVIDIA RTX 3090 (24 GB) | Mixed-precision training enabled |
| Training Time | 8.5 h | 2.1 iterations/sec on full dataset |
| Data Augmentation | Horizontal flip (p = 0.5) | Improved orientation invariance |
| Loss Weights | 1:3 (background: spike) | Address class imbalance |
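The table above caps training at 100 epochs with early stopping at a plateau (patience = 10). The following is a minimal sketch of that stopping rule in plain Python, assuming the monitored quantity is a validation loss that should decrease. The monitored metric, the class name `EarlyStopping`, and the helper `epochs_run` are assumptions for illustration, not details given in the source.

```python
class EarlyStopping:
    """Stop when the monitored loss has not improved for `patience` epochs."""

    def __init__(self, patience=10, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss      # improvement: reset the counter
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1      # plateau or regression
        return self.bad_epochs >= self.patience


def epochs_run(val_losses, patience=10, max_epochs=100):
    """Number of epochs executed before early stopping or the epoch cap."""
    stopper = EarlyStopping(patience=patience)
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if stopper.step(loss):
            return epoch
    return min(len(val_losses), max_epochs)


# Loss improves for 3 epochs, then stays flat: training stops 10 epochs later.
print(epochs_run([1.0, 0.8, 0.7] + [0.7] * 20))
```

In this sketch a run whose loss keeps improving is never interrupted and ends at the 100-epoch cap.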

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, D.; Shi, L.; Yin, H.; Cheng, Y.; Liu, S.; Wu, S.; Yang, G.; Dong, Q.; Ge, J.; Li, Y. A Detection Approach for Wheat Spike Recognition and Counting Based on UAV Images and Improved Faster R-CNN. Plants 2025, 14, 2475. https://doi.org/10.3390/plants14162475
