Intelligent Identification of Tea Plant Seedlings Under High-Temperature Conditions via YOLOv11-MEIP Model Based on Chlorophyll Fluorescence Imaging

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Samples

2.2. Fluorescence Image Acquisition Equipment

2.3. Data Acquisition and Processing

2.3.1. Correlation Analysis of Fluorescence Parameters

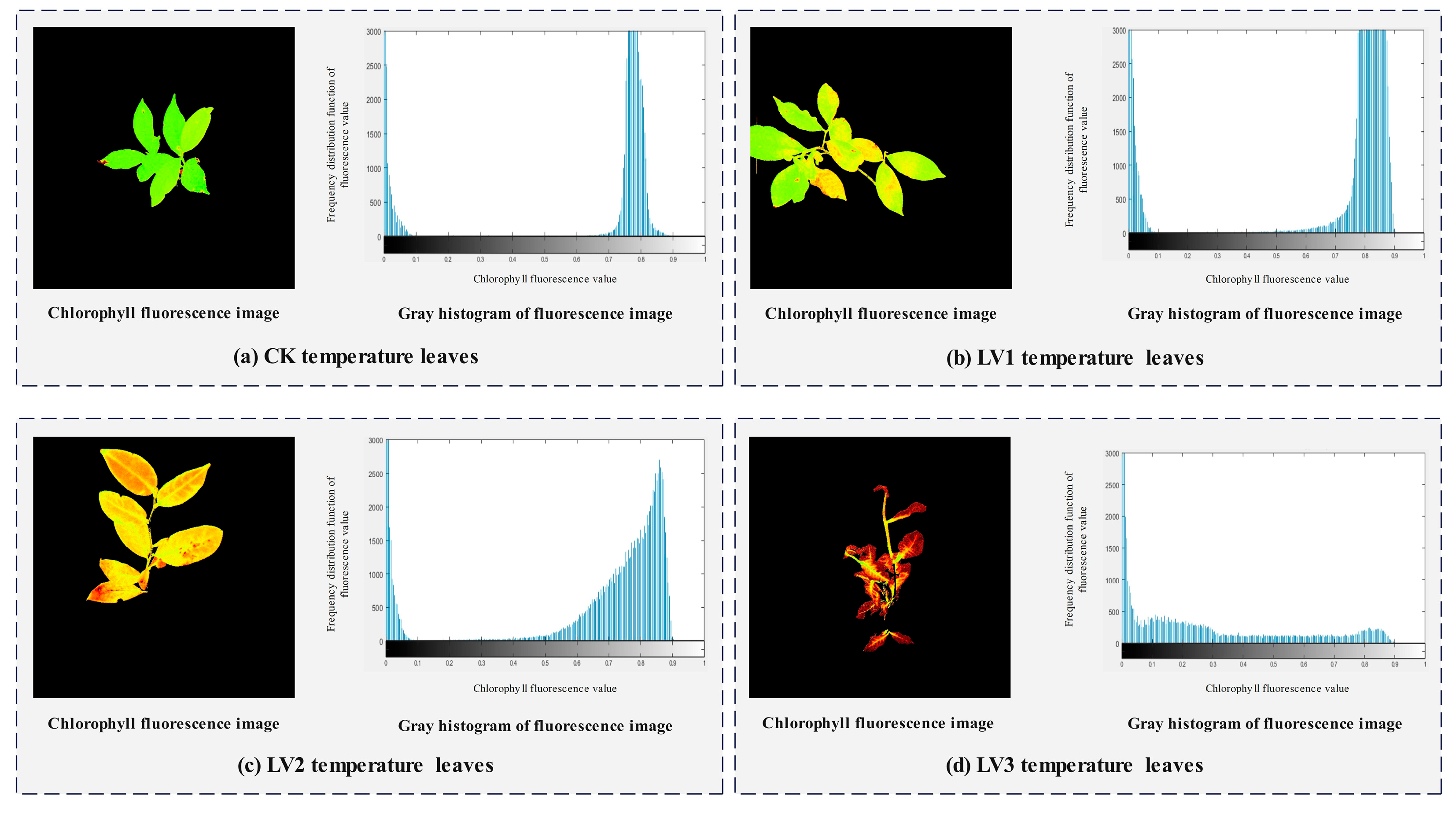

2.3.2. Fluorescence Image Analysis

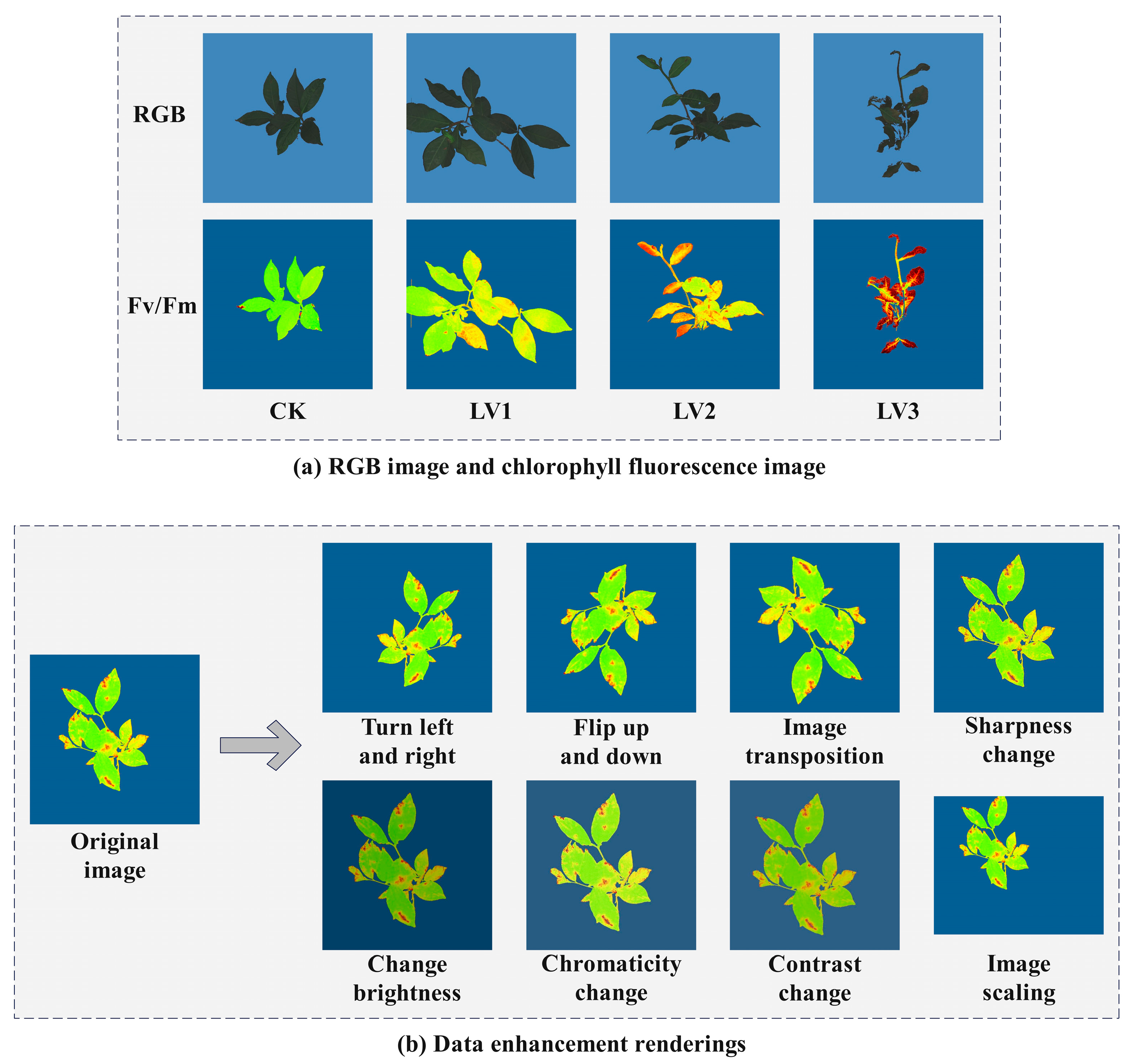

2.3.3. Establishment of the Image Dataset

2.4. Improvement of the YOLOv11 Network

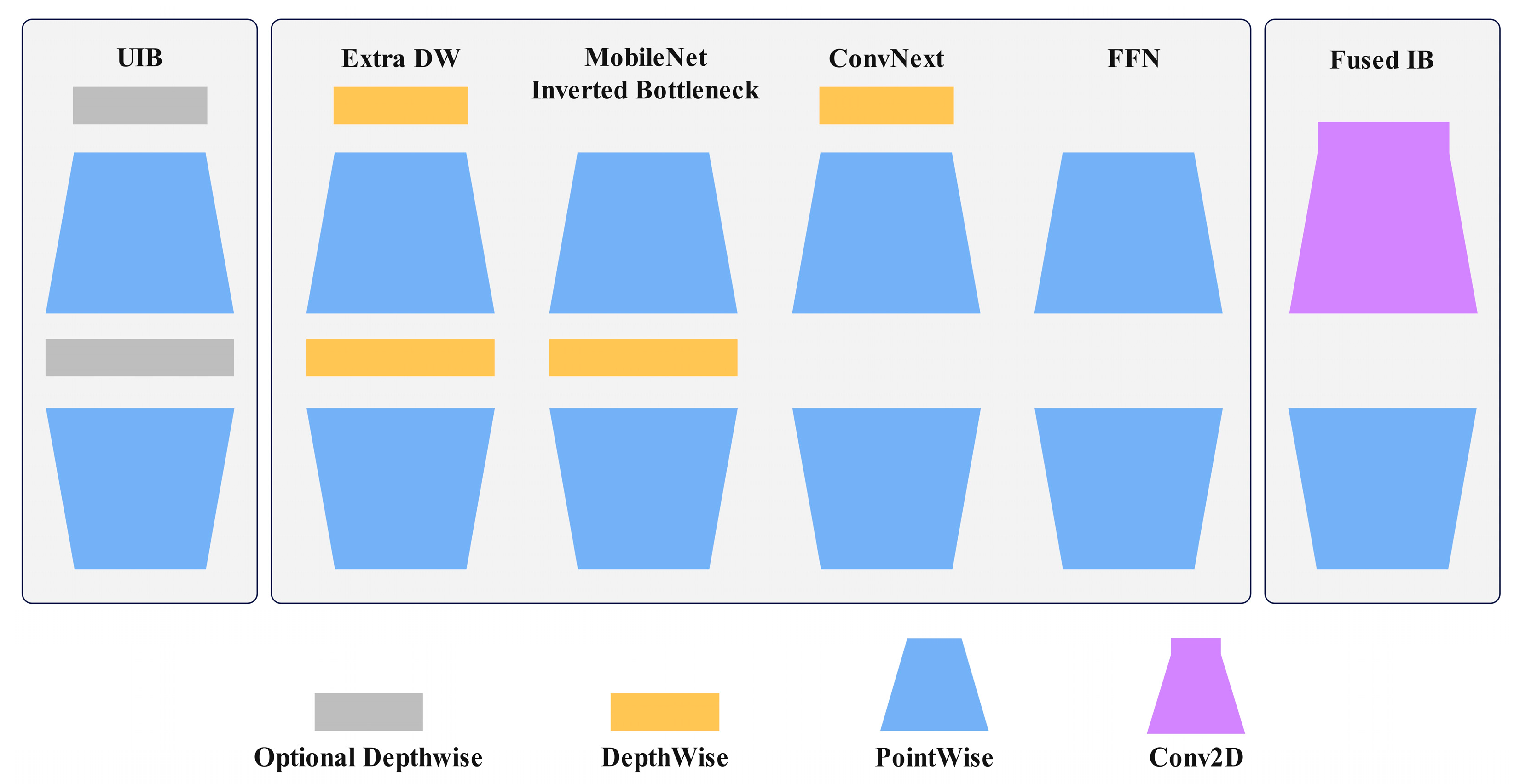

2.4.1. Target Detection Network MobileNetV4

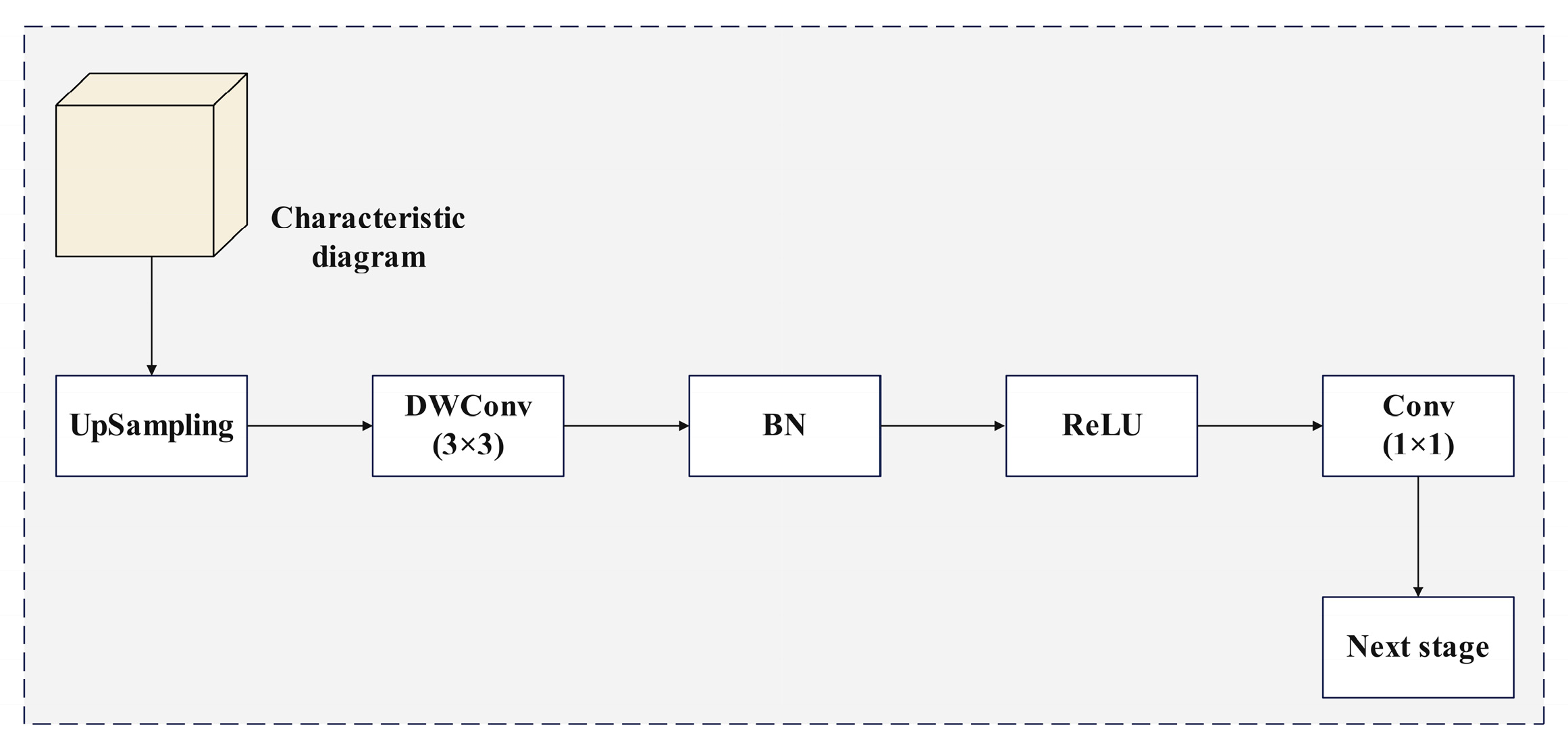

2.4.2. Efficient Up-Convolution Block

2.4.3. Inverted Residual Mobile Block

2.4.4. Lightweight Partial Convolution

2.5. Evaluation Index and Operating Environment

3. Results

3.1. Ablation Study

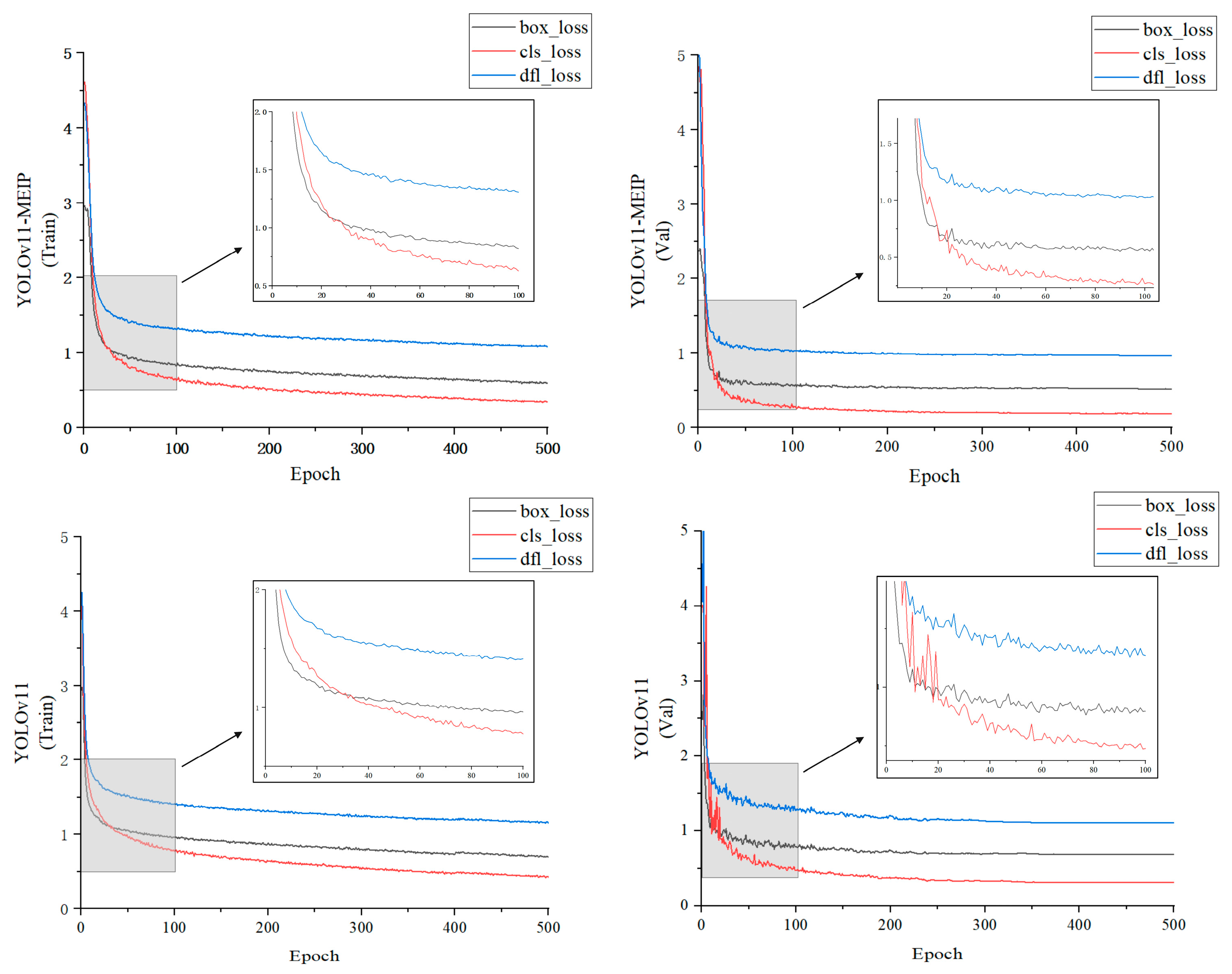

3.2. Loss Function Analysis

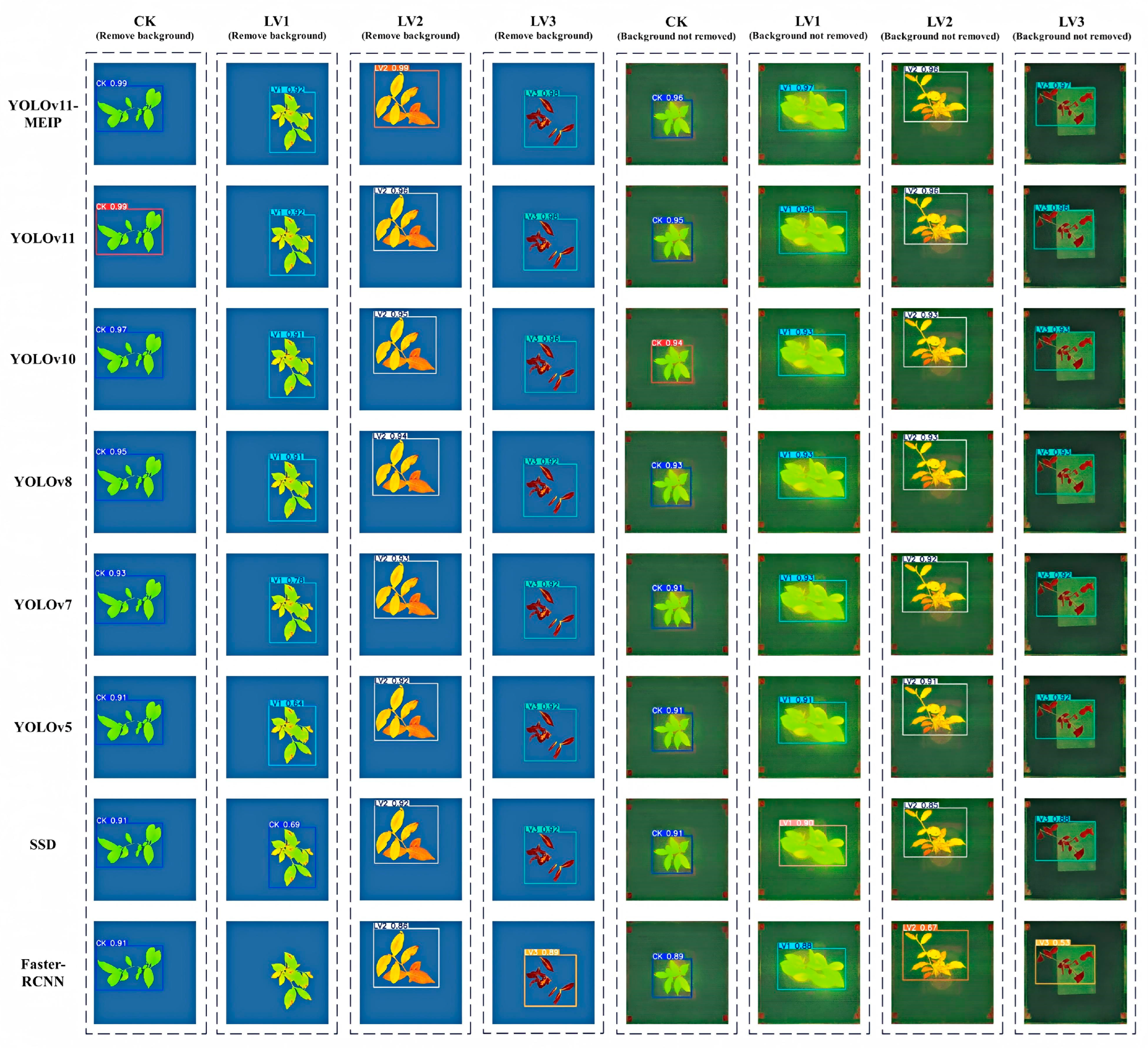

3.3. Comparative Experiments of Different Models

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Simulated Environment | Period | Time/h | Environmental Air Temperature/°C | Relative Humidity/% |
|---|---|---|---|---|
| Healthy Culture | Daytime | 16 | 25 | 80 |
| Healthy Culture | Nighttime | 8 | 15 | 80 |
| High-Temperature Stress | Daytime | 16 | 30 / 36 / 42 | 80 |
| High-Temperature Stress | Nighttime | 8 | 20 / 26 / 32 | 80 |

| Fluorescence Parameter | Meaning | Fluorescence Parameter | Meaning |
|---|---|---|---|
| Fo | Basal fluorescence | qP | Photochemical quenching, based on the “puddle model” |
| Fm | Maximum fluorescence | qN | Non-photochemical quenching coefficient |
| Fv/Fm | Maximum photochemical efficiency | qL | Photochemical quenching, based on the “lake model” |
| Fm’ | Maximum fluorescence under light adaptation | Y(NO) | Quantum yield of non-regulatory energy dissipation |
| Fq’/Fm’ | Effective photochemical quantum yield of Photosystem II | Y(NPQ) | Quantum yield of regulatory energy dissipation |
| ETR | Electron transport rate | NPQ | Non-photochemical quenching coefficient |
| Fo’ | Minimum fluorescence under light adaptation | | |

| Fluorescence Parameter | Correlation Coefficient | Significance | Fluorescence Parameter | Correlation Coefficient | Significance |
|---|---|---|---|---|---|
| Fo | 0.126 | | qP | −0.713 | ** |
| Fm | −0.486 | | qN | 0.183 | |
| Fv/Fm | −0.886 | *** | qL | −0.538 | * |
| Fm’ | −0.651 | ** | Y(NO) | 0.843 | *** |
| Fq’/Fm’ | −0.804 | *** | Y(NPQ) | 0.307 | |
| ETR | −0.804 | *** | NPQ | −0.054 | |
| Fo’ | 0.032 | | | | |
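
One way to read the correlation table is to rank the parameters by the magnitude of their coefficient. The sketch below does this using only the tabulated values. The 0.8 cut-off and the variable names are illustrative assumptions, not thresholds taken from the study.

```python
# Correlation coefficients copied from the table above.
correlations = {
    "Fo": 0.126, "Fm": -0.486, "Fv/Fm": -0.886, "Fm'": -0.651,
    "Fq'/Fm'": -0.804, "ETR": -0.804, "Fo'": 0.032,
    "qP": -0.713, "qN": 0.183, "qL": -0.538,
    "Y(NO)": 0.843, "Y(NPQ)": 0.307, "NPQ": -0.054,
}

# Hypothetical cut-off for a "strong" correlation (an assumption of this sketch).
THRESHOLD = 0.8

# Sort by absolute value so strong negative and strong positive
# correlations are treated alike.
ranked = sorted(correlations.items(), key=lambda kv: abs(kv[1]), reverse=True)
strong = [name for name, r in ranked if abs(r) >= THRESHOLD]

for name, r in ranked:
    print(f"{name:8s} {r:+.3f}")
print("Strongly correlated:", strong)
```

With this cut-off the selected parameters are Fv/Fm, Y(NO), Fq'/Fm', and ETR, which are the four entries marked `***` in the table.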

| Configuration Item | Configuration Parameter |
|---|---|
| Operating system | Windows 11 |
| CPU | Intel(R) Core(TM) i7-14650HX |
| Memory | 16 GB |
| GPU | NVIDIA GeForce RTX 4060 |
| Programming language | Python 3.8.19 |
| Software framework | PyCharm 2024 |
| CUDA | CUDA Version 11.8 |

| Model | P/% | R/% | mAP50/% | Layers | Parameters | Gradients | GFLOPs | Weight/MB |
|---|---|---|---|---|---|---|---|---|
| YOLOv11 | 95.20 | 91.33 | 96.04 | 319 | 2593740 | 2593724 | 6.5 | 5.4 |
| YOLOv11-M | 96.10 | 90.77 | 97.21 | 386 | 2173383 | 2173367 | 5.3 | 3.8 |
| YOLOv11-E | 96.14 | 86.29 | 97.54 | 333 | 2680268 | 2680252 | 6.9 | 5.4 |
| YOLOv11-I | 97.14 | 97.90 | 98.91 | 333 | 2609612 | 2609596 | 6.5 | 5.2 |
| YOLOv11-P | 96.13 | 97.59 | 98.31 | 315 | 2510460 | 2510444 | 5.9 | 5.0 |
| YOLOv11-ME | 94.78 | 94.07 | 96.77 | 400 | 1886956 | 1886940 | 4.8 | 3.9 |
| YOLOv11-MI | 97.01 | 90.81 | 96.96 | 400 | 1816300 | 1816284 | 4.3 | 3.8 |
| YOLOv11-MP | 97.08 | 94.04 | 97.75 | 384 | 1727372 | 1727356 | 4.0 | 3.6 |
| YOLOv11-EI | 98.25 | 95.91 | 97.82 | 347 | 2696140 | 2696124 | 6.9 | 5.4 |
| YOLOv11-EP | 98.04 | 94.65 | 97.68 | 329 | 2596988 | 2596972 | 6.4 | 5.2 |
| YOLOv11-IP | 97.75 | 96.34 | 97.52 | 329 | 2526332 | 2526316 | 5.9 | 5.0 |
| YOLOv11-MEI | 98.39 | 97.45 | 98.79 | 414 | 1902828 | 1902812 | 4.8 | 3.9 |
| YOLOv11-MEP | 98.76 | 98.71 | 98.77 | 398 | 1813900 | 1813884 | 4.4 | 3.7 |
| YOLOv11-MIP | 98.73 | 98.38 | 98.74 | 398 | 1743244 | 1743228 | 4.0 | 3.6 |
| YOLOv11-EIP | 99.18 | 99.00 | 99.25 | 343 | 2612860 | 2612844 | 6.4 | 5.2 |
| YOLOv11-MEIP | 99.25 | 99.19 | 99.46 | 412 | 1829772 | 1829756 | 4.5 | 3.8 |
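
The ablation results can be summarized as relative savings of the full YOLOv11-MEIP model over the YOLOv11 baseline. The following is a minimal sketch of that arithmetic using the first and last rows of the table. The helper name `relative_reduction` and the dictionary keys are illustrative, not part of the original work.

```python
# Values copied from the YOLOv11 and YOLOv11-MEIP rows of the ablation table.
baseline = {"params": 2593740, "gflops": 6.5, "weight_mb": 5.4,
            "p": 95.20, "r": 91.33, "map50": 96.04}
improved = {"params": 1829772, "gflops": 4.5, "weight_mb": 3.8,
            "p": 99.25, "r": 99.19, "map50": 99.46}


def relative_reduction(before, after):
    """Return the percentage by which `after` is smaller than `before`."""
    return (before - after) / before * 100.0


# Cost metrics: report the relative reduction in percent.
for key in ("params", "gflops", "weight_mb"):
    saving = relative_reduction(baseline[key], improved[key])
    print(f"{key}: {saving:.2f}% lower than baseline")

# Accuracy metrics are already percentages, so report the absolute
# difference in percentage points rather than a relative change.
for key in ("p", "r", "map50"):
    gain = improved[key] - baseline[key]
    print(f"{key}: +{gain:.2f} percentage points")
```

Under these numbers the parameter count, GFLOPs, and weight size each fall by roughly 30%, while mAP50 rises by about 3.4 percentage points.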

| Model | P/% | R/% | mAP50/% | GFLOPs | Parameters | Weight/MB |
|---|---|---|---|---|---|---|
| YOLOv11-MEIP | 99.25 | 99.19 | 99.46 | 4.5 | 1829772 | 3.8 |
| YOLOv11 | 95.20 | 91.33 | 96.04 | 6.5 | 2593740 | 5.4 |
| YOLOv10 | 94.49 | 91.48 | 97.20 | 8.2 | 2498168 | 5.1 |
| YOLOv8 | 93.14 | 90.59 | 92.86 | 8.2 | 3011628 | 5.9 |
| YOLOv7 | 91.36 | 88.29 | 91.17 | 105.2 | 37212738 | 71.3 |
| YOLOv5 | 90.18 | 90.79 | 93.90 | 16.0 | 7030417 | 13.7 |
| SSD | 93.71 | 92.84 | 94.52 | 34.8 | 35641826 | 136.0 |
| Faster-RCNN | 92.77 | 93.62 | 95.23 | 134.38 | 41755286 | 159.7 |
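
Precision and recall in the comparison table can be folded into a single figure with the F1 score, the harmonic mean of the two. The table itself does not report F1, so the sketch below is a derived illustration under that assumption rather than a result from the study.

```python
# (precision %, recall %) pairs copied from the comparison table.
models = {
    "YOLOv11-MEIP": (99.25, 99.19),
    "YOLOv11": (95.20, 91.33),
    "YOLOv10": (94.49, 91.48),
    "YOLOv8": (93.14, 90.59),
    "YOLOv7": (91.36, 88.29),
    "YOLOv5": (90.18, 90.79),
    "SSD": (93.71, 92.84),
    "Faster-RCNN": (92.77, 93.62),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Derived metric: not reported in the source table.
f1 = {name: f1_score(p, r) for name, (p, r) in models.items()}
best = max(f1, key=f1.get)

for name, score in sorted(f1.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:14s} F1 = {score:.2f}%")
print("Highest F1:", best)
```

Because YOLOv11-MEIP has both the highest precision and the highest recall in the table, it also has the highest F1 under this definition.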

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, C.; Wang, Z.; Chen, L.; Liu, W.; Wang, X.; Cao, Z.; Zhao, J.; Zou, M.; Li, H.; Yuan, W.; et al. Intelligent Identification of Tea Plant Seedlings Under High-Temperature Conditions via YOLOv11-MEIP Model Based on Chlorophyll Fluorescence Imaging. Plants 2025, 14, 1965. https://doi.org/10.3390/plants14131965