A Synthetic Review of Various Dimensions of Non-Destructive Plant Stress Phenotyping

Abstract

1. Introduction

2. 1D Phenotyping

2.1. Vis-IR Reflectance Spectroscopy

2.2. Chlorophyll Fluorescence (ChlF) Spectroscopy

2.3. Data Analysis of the 1D Spectral Curves

2.3.1. Vis-IR Reflectance Spectral Curve

2.3.2. Chlorophyll Fluorescence (ChlF) Kinetic Curve

3. 2D Phenotyping

3.1. Visible Imaging

3.2. Multispectral and Hyperspectral Imaging

3.3. Chlorophyll Fluorescence (ChlF) Imaging

3.4. Infrared (IR) Imaging

3.5. Thermal-IR Imaging

3.6. Image Processing of 2D Phenotyping

4. 3D Phenotyping

4.1. Light Detection and Ranging (LiDAR)

4.2. X-ray Computed Tomography (CT)

4.3. Magnetic Resonance Imaging (MRI)

4.4. Positron Emission Tomography (PET)

4.5. Data Processing of 3D Phenotyping

5. Discussion

| Dimensions | Data-Acquiring Methods | Data Types | Data Analyzing Methods/Tools | References |
|---|---|---|---|---|
| 1D Phenotyping | Vis-IR reflectance spectroscopy | S-1D | 1. Chemometric methods (principal component analysis (PCA) or partial least squares (PLS) regression), statistical methods, and tools such as SPSS and the R language. 2. For instruments that calculate parameters automatically, standard mathematical methods are then used to process the output further. | [45,55,62,155,156] |
| | ChlF spectroscopy | 1D, T-1D | | |
| 2D Phenotyping | Visible imaging | 2D, S-2D, T-2D | 1. Image preprocessing, segmentation algorithms (watershed algorithm and color segmentation methods), and image transformation (wavelet analysis). 2. Image processing tools such as PlantCV (https://plantcv.danforthcenter.org, accessed on 20 March 2023), IAP (http://iap.ipkgatersleben.de, accessed on 20 March 2023), and ImageJ (http://imagej.nih.gov/ij, accessed on 20 March 2023). 3. Machine learning and/or deep learning for the identification, classification, quantification, and prediction of stress phenotypes, e.g., support vector machines (SVM), random forest, Gaussian processes (GP), convolutional neural networks (CNN), and long short-term memory (LSTM) networks. 4. Specialized data analysis software such as ENVI, Evince, and SpecSight, as well as in-house image processing software. | [22,66,95,157,158,159] |
| | Multispectral imaging | 2D, S-2D, T-2D | | |
| | Hyperspectral imaging | 2D, S-2D, T-2D | | |
| | ChlF imaging | 2D, T-2D | | |
| | IR imaging | 2D, S-2D, T-2D | | |
| | Thermal-IR imaging | 2D, T-2D | | |
| 3D Phenotyping | LiDAR | 3D | 1. Specialized 3D software for visualizing 3D models, together with reconstruction algorithms such as structure from motion (SfM), voxel-based volume carving, and stereo correspondence algorithms. 2. Dimensionality reduction is commonly used for image processing and feature extraction, while machine learning and/or deep learning are used for data analysis. 3. To capture temporal information, optical-flow-based tracking and adaptive hierarchical segmentation methods can be used. | [143,160] |
| | X-ray CT | 3D | | |
| | MRI | 3D, T-3D | | |
| | PET | 3D, T-3D | | |
| | Multispectral LiDAR | 3D, S-3D | | |
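As a minimal sketch of the chemometric route listed for 1D data, the snippet below applies principal component analysis (PCA) to a matrix of reflectance spectra (rows are samples, columns are wavelengths) using the standard SVD formulation in NumPy. The synthetic spectra, the 400–1000 nm wavelength grid, the simulated "stress" effect, and the function name `pca_scores` are hypothetical illustrations chosen for this example; they are not data or code from the reviewed studies.

```python
import numpy as np


def pca_scores(spectra: np.ndarray, n_components: int = 2):
    """Project spectra (n_samples x n_wavelengths) onto their leading principal components.

    Hypothetical helper for illustration. Returns (scores, explained_variance_ratio).
    """
    # Mean-center each wavelength (column); PCA operates on centered data.
    centered = spectra - spectra.mean(axis=0)
    # Thin SVD: centered = U @ diag(S) @ Vt; rows of Vt are the principal axes (loadings).
    U, S, Vt = np.linalg.svd(centered, full_matrices=False)
    # Scores are the coordinates of each sample along the leading principal axes.
    scores = U[:, :n_components] * S[:n_components]
    # Fraction of the total variance captured by each component.
    explained = (S ** 2) / np.sum(S ** 2)
    return scores, explained[:n_components]


# Hypothetical example: 20 synthetic "leaf spectra" sampled every 5 nm from 400 to 1000 nm.
rng = np.random.default_rng(0)
wavelengths = np.linspace(400, 1000, 121)
# Crude vegetation-like shape: low visible reflectance, a sigmoid rise near 710 nm (red edge).
base = 0.05 + 0.45 / (1 + np.exp(-(wavelengths - 710) / 15))
# Simulated stress level (0 to 1) lowers reflectance beyond 700 nm; small noise added.
stress = rng.uniform(0, 1, size=20)
spectra = base - 0.15 * stress[:, None] * (wavelengths > 700) + rng.normal(0, 0.005, (20, 121))

scores, ratio = pca_scores(spectra, n_components=2)
print(scores.shape, ratio.round(3))
```

Because the simulated stress effect dominates the sample-to-sample variation, the first component should track the stress level closely. With real spectra and a measured response (e.g., leaf water content), PLS regression is the supervised counterpart; scikit-learn, for instance, provides `sklearn.decomposition.PCA` and `sklearn.cross_decomposition.PLSRegression`.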

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Lichtenthaler, H.K. The stress concept in plants: An introduction. Ann. N. Y. Acad. Sci. 1998, 851, 187–198.

- Sarić, R.; Nguyen, V.D.; Burge, T.; Berkowitz, O.; Trtílek, M.; Whelan, J.; Lewsey, M.G.; Čustović, E. Applications of hyperspectral imaging in plant phenotyping. Trends Plant Sci. 2022, 27, 301–315.

- Deery, D.M.; Jones, H.G. Field Phenomics: Will It Enable Crop Improvement? Plant Phenomics 2021, 2021, 9871989.

- Rivero, R.M.; Mittler, R.; Blumwald, E.; Zandalinas, S.I. Developing climate-resilient crops: Improving plant tolerance to stress combination. Plant J. 2022, 109, 373–389.

- Zandalinas, S.I.; Mittler, R. Plant responses to multifactorial stress combination. N. Phytol. 2022, 234, 1161–1167.

- Masson-Delmotte, V.; Zhai, P.; Pirani, A.; Connors, S.L.; Péan, C.; Berger, S.; Caud, N.; Chen, Y.; Goldfarb, L.; Gomis, M. Climate change 2021, The physical science basis. In Contribution of Working Group I to the Sixth Assessment Report of the Intergovernmental Panel on Climate Change; IPCC: Saint-Aubin, France, 2021; Volume 2.

- World Health Organization. The State of Food Security and Nutrition in the World 2021, Transforming Food Systems for Food Security, Improved Nutrition and Affordable Healthy Diets for All; Food & Agriculture Organization: Geneva, Switzerland, 2021; Volume 2021.

- Furbank, R.T.; Tester, M. Phenomics-technologies to relieve the phenotyping bottleneck. Trends Plant Sci. 2011, 16, 635–644.

- Araus, J.L.; Kefauver, S.C.; Zaman-Allah, M.; Olsen, M.S.; Cairns, J.E. Translating High-Throughput Phenotyping into Genetic Gain. Trends Plant Sci. 2018, 23, 451–466.

- Sun, D.; Robbins, K.; Morales, N.; Shu, Q.; Cen, H. Advances in optical phenotyping of cereal crops. Trends Plant Sci. 2022, 27, 191–208.

- Fountas, S.; Malounas, I.; Athanasakos, L.; Avgoustakis, I.; Espejo-Garcia, B. AI-Assisted Vision for Agricultural Robots. AgriEngineering 2022, 4, 674–694.

- Waiphara, P.; Bourgenot, C.; Compton, L.J.; Prashar, A. Optical Imaging Resources for Crop Phenotyping and Stress Detection. Methods Mol. Biol. 2022, 2494, 255–265.

- Sun, D.; Xu, Y.; Cen, H. Optical sensors: Deciphering plant phenomics in breeding factories. Trends Plant Sci. 2022, 27, 209–210.

- Maxwell, K.; Johnson, G.N. Chlorophyll fluorescence—A practical guide. J. Exp. Bot. 2000, 51, 659–668.

- Myneni, R.B.; Hall, F.G.; Sellers, P.J.; Marshak, A.L. The interpretation of spectral vegetation indexes. IEEE Trans. Geosci. Remote Sens. 1995, 33, 481–486.

- Buschmann, C.; Nagel, E. In vivo spectroscopy and internal optics of leaves as basis for remote sensing of vegetation. Int. J. Remote Sens. 1993, 14, 711–722.

- Zahir, S.A.D.M.; Omar, A.F.; Jamlos, M.F.; Azmi, M.A.M.; Muncan, J. A review of visible and near-infrared (Vis-NIR) spectroscopy application in plant stress detection. Sens. Actuat A-Phys. 2022, 338, 113468.

- Peñuelas, J.; Filella, I. Visible and near-infrared reflectance techniques for diagnosing plant physiological status. Trends Plant Sci. 1998, 3, 151–156.

- Moustakas, M.; Calatayud, Á.; Guidi, L. Chlorophyll fluorescence imaging analysis in biotic and abiotic stress. Front. Plant Sci. 2021, 12, 658500.

- Krause, G.; Weis, E. Chlorophyll fluorescence and photosynthesis: The basics. Annu. Rev. Plant Biol. 1991, 42, 313–349.

- Schreiber, U.; Bilger, W.; Neubauer, C. Chlorophyll fluorescence as a nonintrusive indicator for rapid assessment of in vivo photosynthesis. In Ecophysiology of Photosynthesis; Springer: Berlin/Heidelberg, Germany, 1995; pp. 49–70.

- Li, L.; Zhang, Q.; Huang, D. A review of imaging techniques for plant phenotyping. Sensors 2014, 14, 20078–20111.

- Duarte-Carvajalino, J.M.; Silva-Arero, E.A.; Goez-Vinasco, G.A.; Torres-Delgado, L.M.; Ocampo-Paez, O.D.; Castano-Marin, A.M. Estimation of Water Stress in Potato Plants Using Hyperspectral Imagery and Machine Learning Algorithms. Horticulturae 2021, 7, 176.

- Al-Tamimi, N.; Langan, P.; Bernad, V.; Walsh, J.; Mangina, E.; Negrao, S. Capturing crop adaptation to abiotic stress using image-based technologies. Open Biol. 2022, 12, 210353.

- Udayakumar, N. Visible Light Imaging. In Imaging with Electromagnetic Spectrum: Applications in Food and Agriculture; Manickavasagan, A., Jayasuriya, H., Eds.; Springer: Berlin/Heidelberg, Germany, 2014; pp. 67–86.

- Ruffing, A.M.; Anthony, S.M.; Strickland, L.M.; Lubkin, I.; Dietz, C.R. Identification of Metal Stresses in Arabidopsis thaliana Using Hyperspectral Reflectance Imaging. Front. Plant Sci. 2021, 12, 624656.

- Liu, H.; Bruning, B.; Garnett, T.; Berger, B. Hyperspectral imaging and 3D technologies for plant phenotyping: From satellite to close-range sensing. Comput. Electron. Agric. 2020, 175, 105621.

- Golbach, F.; Kootstra, G.; Damjanovic, S.; Otten, G.; van de Zedde, R. Validation of plant part measurements using a 3D reconstruction method suitable for high-throughput seedling phenotyping. Mach. Vision Appl. 2016, 27, 663–680.

- Rossi, R.; Costafreda-Aumedes, S.; Leolini, L.; Leolini, C.; Bindi, M.; Moriondo, M. Implementation of an algorithm for automated phenotyping through plant 3D-modeling: A practical application on the early detection of water stress. Comput. Electron. Agric. 2022, 197, 106937.

- Fu, P.; Montes, C.M.; Siebers, M.H.; Gomez-Casanovas, N.; McGrath, J.M.; Ainsworth, E.A.; Bernacchi, C.J. Advances in field-based high-throughput photosynthetic phenotyping. J. Exp. Bot. 2022, 73, 3157–3172.

- Atkinson, J.A.; Jackson, R.J.; Bentley, A.R.; Ober, E.; Wells, D.M. Field Phenotyping for the Future. In Annual Plant Reviews Online; Wiley Online Library: Hoboken, NJ, USA, 2018; pp. 719–736.

- Feng, L.; Chen, S.; Zhang, C.; Zhang, Y.; He, Y. A comprehensive review on recent applications of unmanned aerial vehicle remote sensing with various sensors for high-throughput plant phenotyping. Comput. Electron. Agric. 2021, 182, 106033.

- Amarasingam, N.; Ashan Salgadoe, A.S.; Powell, K.; Gonzalez, L.F.; Natarajan, S. A review of UAV platforms, sensors, and applications for monitoring of sugarcane crops. Remote Sens. Appl. Soc. Environ. 2022, 26, 100712.

- Bartley, G.E.; Scolnik, P.A. Plant carotenoids: Pigments for photoprotection, visual attraction, and human health. Plant Cell 1995, 7, 1027.

- Tokarz, D.; Cisek, R.; Garbaczewska, M.; Sandkuijl, D.; Qiu, X.; Stewart, B.; Levine, J.D.; Fekl, U.; Barzda, V. Carotenoid based bio-compatible labels for third harmonic generation microscopy. Phys. Chem. Chem. Phys. 2012, 14, 10653–10661.

- Horler, D.; Dockray, M.; Barber, J. The red edge of plant leaf reflectance. Int. J. Remote Sens. 1983, 4, 273–288.

- Gogoi, N.; Deka, B.; Bora, L. Remote sensing and its use in detection and monitoring plant diseases: A review. Agric. Rev. 2018, 39, 307–313.

- Meacham-Hensold, K.; Montes, C.M.; Wu, J.; Guan, K.; Fu, P.; Ainsworth, E.A.; Pederson, T.; Moore, C.E.; Brown, K.L.; Raines, C. High-throughput field phenotyping using hyperspectral reflectance and partial least squares regression (PLSR) reveals genetic modifications to photosynthetic capacity. Remote Sens. Environ. 2019, 231, 111176.

- Junttila, S.; Hölttä, T.; Saarinen, N.; Kankare, V.; Yrttimaa, T.; Hyyppä, J.; Vastaranta, M. Close-range hyperspectral spectroscopy reveals leaf water content dynamics. Remote Sens. Environ. 2022, 277, 113071.

- El-Hendawy, S.E.; Al-Suhaibani, N.A.; Elsayed, S.; Hassan, W.M.; Dewir, Y.H.; Refay, Y.; Abdella, K.A. Potential of the existing and novel spectral reflectance indices for estimating the leaf water status and grain yield of spring wheat exposed to different irrigation rates. Agric. Water Manag. 2019, 217, 356–373.

- El-Hendawy, S.; Al-Suhaibani, N.; Hassan, W.; Tahir, M.; Schmidhalter, U. Hyperspectral reflectance sensing to assess the growth and photosynthetic properties of wheat cultivars exposed to different irrigation rates in an irrigated arid region. PLoS ONE 2017, 12, e0183262.

- Maimaitiyiming, M.; Ghulam, A.; Bozzolo, A.; Wilkins, J.L.; Kwasniewski, M.T. Early detection of plant physiological responses to different levels of water stress using reflectance spectroscopy. Remote Sens. 2017, 9, 745.

- Yuan, M.; Couture, J.J.; Townsend, P.A.; Ruark, M.D.; Bland, W.L. Spectroscopic Determination of Leaf Nitrogen Concentration and Mass Per Area in Sweet Corn and Snap Bean. Agron. J. 2016, 108, 2519–2526.

- Gold, K.M.; Townsend, P.A.; Herrmann, I.; Gevens, A.J. Investigating potato late blight physiological differences across potato cultivars with spectroscopy and machine learning. Plant Sci. 2020, 295, 110316.

- Neto, A.J.S.; Lopes, D.C.; Pinto, F.A.; Zolnier, S. Vis/NIR spectroscopy and chemometrics for non-destructive estimation of water and chlorophyll status in sunflower leaves. Biosyst. Eng. 2017, 155, 124–133.

- Muller, P.; Li, X.-P.; Niyogi, K.K. Non-photochemical quenching. A response to excess light energy. Plant Physiol. 2001, 125, 1558–1566.

- Stirbet, A.; Govindjee. The slow phase of chlorophyll a fluorescence induction in silico: Origin of the S-M fluorescence rise. Photosynth. Res. 2016, 130, 193–213.

- Schreiber, U.; Schliwa, U.; Bilger, W. Continuous recording of photochemical and non-photochemical chlorophyll fluorescence quenching with a new type of modulation fluorometer. Photosynth. Res. 1986, 10, 51–62.

- Strasser, R.J.; Srivastava, A.; Tsimilli-Michael, M. The fluorescence transient as a tool to characterize and screen photosynthetic samples. In Probing Photosynthesis: Mechanisms, Regulation and Adaptation; CRC Press: Boca Raton, FL, USA, 2000; pp. 445–483.

- Kolber, Z.S.; Prášil, O.; Falkowski, P.G. Measurements of variable chlorophyll fluorescence using fast repetition rate techniques: Defining methodology and experimental protocols. Biochim. Et Biophys. Acta (BBA)-Bioenerg. 1998, 1367, 88–106.

- Van Kooten, O.; Snel, J.F. The use of chlorophyll fluorescence nomenclature in plant stress physiology. Photosynth. Res. 1990, 25, 147–150.

- Guo, Y.; Tan, J. Recent advances in the application of chlorophyll a fluorescence from photosystem II. Photochem. Photobiol. 2015, 91, 1–14.

- Kalaji, H.M.; Jajoo, A.; Oukarroum, A.; Brestic, M.; Zivcak, M.; Samborska, I.A.; Cetner, M.D.; Łukasik, I.; Goltsev, V.; Ladle, R.J. Chlorophyll a fluorescence as a tool to monitor physiological status of plants under abiotic stress conditions. Acta Physiol. Plant 2016, 38, 102.

- Kalaji, H.M.; Schansker, G.; Ladle, R.J.; Goltsev, V.; Bosa, K.; Allakhverdiev, S.I.; Brestic, M.; Bussotti, F.; Calatayud, A.; Dabrowski, P.; et al. Frequently asked questions about in vivo chlorophyll fluorescence: Practical issues. Photosynth. Res. 2014, 122, 121–158.

- Ryckewaert, M.; Héran, D.; Simonneau, T.; Abdelghafour, F.; Boulord, R.; Saurin, N.; Moura, D.; Mas-Garcia, S.; Bendoula, R. Physiological variable predictions using VIS–NIR spectroscopy for water stress detection on grapevine: Interest in combining climate data using multiblock method. Comput. Electron. Agric. 2022, 197, 106973.

- Glenn, E.P.; Huete, A.R.; Nagler, P.L.; Nelson, S.G. Relationship between remotely-sensed vegetation indices, canopy attributes and plant physiological processes: What vegetation indices can and cannot tell us about the landscape. Sensors 2008, 8, 2136–2160.

- Rouse, J.W., Jr.; Haas, R.H.; Schell, J.; Deering, D. Monitoring the Vernal Advancement and Retrogradation (Green Wave Effect) of Natural Vegetation; Goddard Space Flight Center: Greenbelt, MD, USA, 1973.

- Garbulsky, M.F.; Peñuelas, J.; Gamon, J.; Inoue, Y.; Filella, I. The photochemical reflectance index (PRI) and the remote sensing of leaf, canopy and ecosystem radiation use efficiencies: A review and meta-analysis. Remote Sens. Environ. 2011, 115, 281–297.

- Huang, S.; Tang, L.; Hupy, J.P.; Wang, Y.; Shao, G. A commentary review on the use of normalized difference vegetation index (NDVI) in the era of popular remote sensing. J. For. Res. 2021, 32, 1–6.

- Xue, J.; Su, B. Significant Remote Sensing Vegetation Indices: A Review of Developments and Applications. J. Sens. 2017, 2017, 1353691.

- Bannari, A.; Morin, D.; Bonn, F.; Huete, A. A review of vegetation indices. Remote Sens. Rev. 1995, 13, 95–120.

- Murchie, E.H.; Lawson, T. Chlorophyll fluorescence analysis: A guide to good practice and understanding some new applications. J. Exp. Bot. 2013, 64, 3983–3998.

- Gitelson, A.A.; Buschmann, C.; Lichtenthaler, H.K. Leaf chlorophyll fluorescence corrected for re-absorption by means of absorption and reflectance measurements. J. Plant Physiol. 1998, 152, 283–296.

- Baker, N.R.; Rosenqvist, E. Applications of chlorophyll fluorescence can improve crop production strategies: An examination of future possibilities. J. Exp. Bot. 2004, 55, 1607–1621.

- Kalaji, H.M.; Schansker, G.; Brestic, M.; Bussotti, F.; Calatayud, A.; Ferroni, L.; Goltsev, V.; Guidi, L.; Jajoo, A.; Li, P.; et al. Frequently asked questions about chlorophyll fluorescence, the sequel. Photosynth. Res. 2017, 132, 13–66.

- Rahaman, M.M.; Chen, D.; Gillani, Z.; Klukas, C.; Chen, M. Advanced phenotyping and phenotype data analysis for the study of plant growth and development. Front. Plant Sci. 2015, 6, 619.

- Tackenberg, O. A New Method for Non-destructive Measurement of Biomass, Growth Rates, Vertical Biomass Distribution and Dry Matter Content Based on Digital Image Analysis. Ann. Bot. 2007, 99, 777–783.

- Enders, T.A.S.; Dennis, S.; Oakland, J.; Callen, S.T.; Gehan, M.A.; Miller, N.D.; Spalding, E.P.; Springer, N.M.; Hirsch, C.D. Classifying cold-stress responses of inbred maize seedlings using RGB imaging. Plant Direct 2019, 3, e00104.

- Qin, J.W.; Monje, O.; Nugent, M.R.; Finn, J.R.; O’Rourke, A.E.; Fritsche, R.F.; Baek, I.; Chan, D.E.; Kim, M.S. Development of a Hyperspectral Imaging System for Plant Health Monitoring in Space Crop Production. In Proceedings of the Conference on Sensing for Agriculture and Food Quality and Safety XIV, Online, 3 April–12 June 2022.

- Moghimi, A.; Yang, C.; Marchetto, P.M. Ensemble Feature Selection for Plant Phenotyping: A Journey From Hyperspectral to Multispectral Imaging. IEEE Access 2018, 6, 56870–56884.

- Mishra, P.; Asaari, M.S.M.; Herrero-Langreo, A.; Lohumi, S.; Diezma, B.; Scheunders, P. Close range hyperspectral imaging of plants: A review. Biosyst. Eng. 2017, 164, 49–67.

- Zea, M.; Souza, A.; Yang, Y.; Lee, L.; Nemali, K.; Hoagland, L. Leveraging high-throughput hyperspectral imaging technology to detect cadmium stress in two leafy green crops and accelerate soil remediation efforts. Environ. Pollut. 2022, 292, 118405.

- Cui, L.H.; Yan, L.J.; Zhao, X.H.; Yuan, L.; Jin, J.; Zhang, J.C. Detection and Discrimination of Tea Plant Stresses Based on Hyperspectral Imaging Technique at a Canopy Level. Phyton-Int. J. Exp. Bot. 2021, 90, 621–634.

- Paulus, S.; Mahlein, A.K. Technical workflows for hyperspectral plant image assessment and processing on the greenhouse and laboratory scale. Gigascience 2020, 9, giaa090.

- Zubler, A.V.; Yoon, J.Y. Proximal Methods for Plant Stress Detection Using Optical Sensors and Machine Learning. Biosensors 2020, 10, 193.

- Bandopadhyay, S.; Rastogi, A.; Juszczak, R. Review of top-of-canopy sun-induced fluorescence (SIF) studies from ground, UAV, airborne to spaceborne observations. Sensors 2020, 20, 1144.

- Moustakas, M.; Bayçu, G.; Gevrek, N.; Moustaka, J.; Csatári, I.; Rognes, S.E. Spatiotemporal heterogeneity of photosystem II function during acclimation to zinc exposure and mineral nutrition changes in the hyperaccumulator Noccaea caerulescens. Environ. Sci. Pollut. Res. 2019, 26, 6613–6624.

- Dong, Z.; Men, Y.; Li, Z.; Zou, Q.; Ji, J. Chlorophyll fluorescence imaging as a tool for analyzing the effects of chilling injury on tomato seedlings. Sci. Hortic. 2019, 246, 490–497.

- Buschmann, C.; Lichtenthaler, H.K. Principles and characteristics of multi-colour fluorescence imaging of plants. J. Plant Physiol. 1998, 152, 297–314.

- Pérez-Bueno, M.L.; Pineda, M.; Barón, M. Phenotyping plant responses to biotic stress by chlorophyll fluorescence imaging. Front. Plant Sci. 2019, 10, 1135.

- Kolber, Z.; Klimov, D.; Ananyev, G.; Rascher, U.; Berry, J.; Osmond, B. Measuring photosynthetic parameters at a distance: Laser induced fluorescence transient (LIFT) method for remote measurements of photosynthesis in terrestrial vegetation. Photosynth. Res. 2005, 84, 121–129.

- Peng, H.; Cendrero-Mateo, M.P.; Bendig, J.; Siegmann, B.; Acebron, K.; Kneer, C.; Kataja, K.; Muller, O.; Rascher, U. HyScreen: A Ground-Based Imaging System for High-Resolution Red and Far-Red Solar-Induced Chlorophyll Fluorescence. Sensors 2022, 22, 9443.

- Sun, D.; Zhu, Y.; Xu, H.; He, Y.; Cen, H. Time-Series Chlorophyll Fluorescence Imaging Reveals Dynamic Photosynthetic Fingerprints of sos Mutants to Drought Stress. Sensors 2019, 19, 2649.

- Messina, G.; Modica, G. Applications of UAV Thermal Imagery in Precision Agriculture: State of the Art and Future Research Outlook. Remote Sens. 2020, 12, 1491.

- Briglia, N.; Montanaro, G.; Petrozza, A.; Summerer, S.; Cellini, F.; Nuzzo, V. Drought phenotyping in Vitis vinifera using RGB and NIR imaging. Sci. Hortic. 2019, 256, 108555.

- Weiping, Y.; Xuezhi, W.; Wheaton, A.; Cooley, N.; Moran, B. Automatic optical and IR image fusion for plant water stress analysis. In Proceedings of the 2009 12th International Conference on Information Fusion, Seattle, WA, USA, 6–9 July 2009; pp. 1053–1059.

- Khanal, S.; Fulton, J.; Shearer, S. An overview of current and potential applications of thermal remote sensing in precision agriculture. Comput. Electron. Agric. 2017, 139, 22–32.

- Pineda, M.; Barón, M.; Pérez-Bueno, M.-L. Thermal Imaging for Plant Stress Detection and Phenotyping. Remote Sens. 2020, 13, 68.

- Balakrishnan, G.K.; Yaw, C.T.; Koh, S.P.; Abedin, T.; Raj, A.A.; Tiong, S.K.; Chen, C.P. A Review of Infrared Thermography for Condition-Based Monitoring in Electrical Energy: Applications and Recommendations. Energies 2022, 15, 6000.

- Mastrodimos, N.; Lentzou, D.; Templalexis, C.; Tsitsigiannis, D.I.; Xanthopoulos, G. Development of thermography methodology for early diagnosis of fungal infection in table grapes: The case of Aspergillus carbonarius. Comput. Electron. Agric. 2019, 165, 104972.

- Khorsandi, A.; Hemmat, A.; Mireei, S.A.; Amirfattahi, R.; Ehsanzadeh, P. Plant temperature-based indices using infrared thermography for detecting water status in sesame under greenhouse conditions. Agric. Water Manag. 2018, 204, 222–233.

- Jordan, M.I.; Mitchell, T.M. Machine Learning; McGraw-Hill: New York, NY, USA, 1997; Volume 1.

- Singh, A.K.; Ganapathysubramanian, B.; Sarkar, S.; Singh, A. Deep learning for plant stress phenotyping: Trends and future perspectives. Trends Plant Sci. 2018, 23, 883–898.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Gao, Z.; Luo, Z.; Zhang, W.; Lv, Z.; Xu, Y. Deep Learning Application in Plant Stress Imaging: A Review. AgriEngineering 2020, 2, 430–446.

- Tausen, M.; Clausen, M.; Moeskjaer, S.; Shihavuddin, A.S.M.; Dahl, A.B.; Janss, L.; Andersen, S.U. Greenotyper: Image-Based Plant Phenotyping Using Distributed Computing and Deep Learning. Front. Plant Sci. 2020, 11, 1181.

- Dobrescu, A.; Giuffrida, M.V.; Tsaftaris, S.A. Doing More With Less: A Multitask Deep Learning Approach in Plant Phenotyping. Front. Plant Sci. 2020, 11, 141.

- Chen, J.; Zhang, D.; Nanehkaran, Y.A.; Li, D. Detection of rice plant diseases based on deep transfer learning. J. Sci. Food Agric. 2020, 100, 3246–3256.

- Kaya, A.; Keceli, A.S.; Catal, C.; Yalic, H.Y.; Temucin, H.; Tekinerdogan, B. Analysis of transfer learning for deep neural network based plant classification models. Comput. Electron. Agric. 2019, 158, 20–29.

- Barbedo, J.G.A. Impact of dataset size and variety on the effectiveness of deep learning and transfer learning for plant disease classification. Comput. Electron. Agric. 2018, 153, 46–53.

- Ghazi, M.M.; Yanikoglu, B.; Aptoula, E. Plant identification using deep neural networks via optimization of transfer learning parameters. Neurocomputing 2017, 235, 228–235.

- Weidman, S. Deep Learning from Scratch: Building with Python from First Principles; O’Reilly Media: Sebastopol, CA, USA, 2019.

- Mohanty, S.P.; Hughes, D.P.; Salathe, M. Using Deep Learning for Image-Based Plant Disease Detection. Front. Plant Sci. 2016, 7, 1419.

- Arya, S.; Sandhu, K.S.; Singh, J.; Kumar, S. Deep learning: As the new frontier in high-throughput plant phenotyping. Euphytica 2022, 218, 47.

- Rivas, P. Deep Learning for Beginners: A Beginner’s Guide to Getting Up and Running with Deep Learning from Scratch Using Python; Packt Publishing Ltd.: Mumbai, India, 2020.

- Li, Z.; Guo, R.; Li, M.; Chen, Y.; Li, G. A review of computer vision technologies for plant phenotyping. Comput. Electron. Agric. 2020, 176, 105672.

- Wu, S.; Wen, W.L.; Gou, W.B.; Lu, X.J.; Zhang, W.Q.; Zheng, C.X.; Xiang, Z.W.; Chen, L.P.; Guo, X.Y. A miniaturized phenotyping platform for individual plants using multi-view stereo 3D reconstruction. Front. Plant Sci. 2022, 13, 897746.

- Forero, M.G.; Murcia, H.F.; Mendez, D.; Betancourt-Lozano, J. LiDAR Platform for Acquisition of 3D Plant Phenotyping Database. Plants 2022, 11, 2199.

- Sampaio, G.S.; Silva, L.A.; Marengoni, M. 3D Reconstruction of Non-Rigid Plants and Sensor Data Fusion for Agriculture Phenotyping. Sensors 2021, 21, 4115.

- Kehoe, S.; Byrne, T.; Spink, J.; Barth, S.; Ng, C.K.; Tracy, S. A novel 3D X-ray computed tomography (CT) method for spatio-temporal evaluation of waterlogging-induced aerenchyma formation in barley. Plant Phenome J. 2022, 5, e20035.

- Zhou, Y.F.; Maitre, R.; Hupel, M.; Trotoux, G.; Penguilly, D.; Mariette, F.; Bousset, L.; Chevre, A.M.; Parisey, N. An automatic non-invasive classification for plant phenotyping by MRI images: An application for quality control on cauliflower at primary meristem stage. Comput. Electron. Agric. 2021, 187, 106303.

- Arino-Estrada, G.; Mitchell, G.S.; Saha, P.; Arzani, A.; Cherry, S.R.; Blumwald, E.; Kyme, A.Z. Imaging Salt Uptake Dynamics in Plants Using PET. Sci. Rep. 2019, 9, 18626.

- Jin, S.C.; Sun, X.L.; Wu, F.F.; Su, Y.J.; Li, Y.M.; Song, S.L.; Xu, K.X.; Ma, Q.; Baret, F.; Jiang, D.; et al. Lidar sheds new light on plant phenomics for plant breeding and management: Recent advances and future prospects. ISPRS J. Photogramm. Remote Sens. 2021, 171, 202–223.

- Su, Y.J.; Wu, F.F.; Ao, Z.R.; Jin, S.C.; Qin, F.; Liu, B.X.; Pang, S.X.; Liu, L.L.; Guo, Q.H. Evaluating maize phenotype dynamics under drought stress using terrestrial lidar. Plant Methods 2019, 15, 1–16.

- Perez-Sanz, F.; Navarro, P.J.; Egea-Cortines, M. Plant phenomics: An overview of image acquisition technologies and image data analysis algorithms. Gigascience 2017, 6, gix092.

- Li, Y.L.; Wen, W.L.; Miao, T.; Wu, S.; Yu, Z.T.; Wang, X.D.; Guo, X.Y.; Zhao, C.J. Automatic organ-level point cloud segmentation of maize shoots by integrating high-throughput data acquisition and deep learning. Comput. Electron. Agric. 2022, 193, 106702.

- Ao, Z.; Wu, F.; Hu, S.; Sun, Y.; Su, Y.; Guo, Q.; Xin, Q. Automatic segmentation of stem and leaf components and individual maize plants in field terrestrial LiDAR data using convolutional neural networks. Crop J. 2022, 10, 1239–1250.

- Zhu, Y.L.; Sun, G.; Ding, G.H.; Zhou, J.; Wen, M.X.; Jin, S.C.; Zhao, Q.; Colmer, J.; Ding, Y.F.; Ober, E.S.; et al. Large-scale field phenotyping using backpack LiDAR and CropQuant-3D to measure structural variation in wheat. Plant Physiol. 2021, 187, 716–738.

- Wang, Q.; Che, Y.P.; Shao, K.; Zhu, J.Y.; Wang, R.L.; Sui, Y.; Guo, Y.; Li, B.G.; Meng, L.; Ma, Y.T. Estimation of sugar content in sugar beet root based on UAV multi-sensor data. Comput. Electron. Agric. 2022, 203, 107433.

- Piovesan, A.; Vancauwenberghe, V.; Van De Looverbosch, T.; Verboven, P.; Nicolai, B. X-ray computed tomography for 3D plant imaging. Trends Plant Sci. 2021, 26, 1171–1185.

- Kotwaliwale, N.; Singh, K.; Kalne, A.; Jha, S.N.; Seth, N.; Kar, A. X-ray imaging methods for internal quality evaluation of agricultural produce. J. Food Sci. Technol. 2014, 51, 1–15.

- Okochi, T.; Hoshino, Y.; Fujii, H.; Mitsutani, T. Nondestructive tree-ring measurements for Japanese oak and Japanese beech using micro-focus X-ray computed tomography. Dendrochronologia 2007, 24, 155–164.

- Blümich, B. PT Callaghan. Principles of Nuclear Magnetic Resonance Microscopy; Wiley Online Library: Hoboken, NJ, USA, 1995.

- Borisjuk, L.; Rolletschek, H.; Neuberger, T. Surveying the plant’s world by magnetic resonance imaging. Plant J. 2012, 70, 129–146.

- Van As, H.; Scheenen, T.; Vergeldt, F.J. MRI of intact plants. Photosynth. Res. 2009, 102, 213–222.

- Van As, H. Intact plant MRI for the study of cell water relations, membrane permeability, cell-to-cell and long distance water transport. J. Exp. Bot. 2007, 58, 743–756.

- Van Dusschoten, D.; Metzner, R.; Kochs, J.; Postma, J.A.; Pflugfelder, D.; Buhler, J.; Schurr, U.; Jahnke, S. Quantitative 3D Analysis of Plant Roots Growing in Soil Using Magnetic Resonance Imaging. Plant Physiol. 2016, 170, 1176–1188.

- Pflugfelder, D.; Metzner, R.; van Dusschoten, D.; Reichel, R.; Jahnke, S.; Koller, R. Non-invasive imaging of plant roots in different soils using magnetic resonance imaging (MRI). Plant Methods 2017, 13, 1–9.

- Scheenen, T.W.J.; Vergeldt, F.J.; Heemskerk, A.M.; Van As, H. Intact plant magnetic resonance imaging to study dynamics in long-distance sap flow and flow-conducting surface area. Plant Physiol. 2007, 144, 1157–1165.

- Meixner, M.; Tomasella, M.; Foerst, P.; Windt, C.W. A small-scale MRI scanner and complementary imaging method to visualize and quantify xylem embolism formation. N. Phytol. 2020, 226, 1517–1529.

- Lambert, J.; Lampen, P.; von Bohlen, A.; Hergenroder, R. Two- and three-dimensional mapping of the iron distribution in the apoplastic fluid of plant leaf tissue by means of magnetic resonance imaging. Anal. Bioanal. Chem. 2006, 384, 231–236.

- Windt, C.W.; Soltner, H.; van Dusschoten, D.; Blumler, P. A portable Halbach magnet that can be opened and closed without force: The NMR-CUFF. J. Magn. Reson. 2011, 208, 27–33.

- Galieni, A.; D’Ascenzo, N.; Stagnari, F.; Pagnani, G.; Xie, Q.G.; Pisante, M. Past and Future of Plant Stress Detection: An Overview From Remote Sensing to Positron Emission Tomography. Front. Plant Sci. 2021, 11, 609155.

- Hubeau, M.; Steppe, K. Plant-PET Scans: In Vivo Mapping of Xylem and Phloem Functioning. Trends Plant Sci. 2015, 20, 676–685.

- Mincke, J.; Courtyn, J.; Vanhove, C.; Vandenberghe, S.; Steppe, K. Guide to Plant-PET Imaging Using (CO2)-C-11. Front. Plant Sci. 2021, 12, 602550. [Google Scholar] [CrossRef] [PubMed]

- Gao, T.; Zhu, F.Y.; Paul, P.; Sandhu, J.; Doku, H.A.; Sun, J.X.; Pan, Y.; Staswick, P.; Walia, H.; Yu, H.F. Novel 3D Imaging Systems for High-Throughput Phenotyping of Plants. Remote Sens. 2021, 13, 2113. [Google Scholar] [CrossRef]

- Wang, Y.J.; Wen, W.L.; Wu, S.; Wang, C.Y.; Yu, Z.T.; Guo, X.Y.; Zhao, C.J. Maize Plant Phenotyping: Comparing 3D Laser Scanning, Multi-View Stereo Reconstruction, and 3D Digitizing Estimates. Remote Sens. 2019, 11, 63. [Google Scholar] [CrossRef]

- Fuentes, S.; Palmer, A.R.; Taylor, D.; Zeppel, M.; Whitley, R.; Eamus, D. An automated procedure for estimating the leaf area index (LAI) of woodland ecosystems using digital imagery, MATLAB programming and its application to an examination of the relationship between remotely sensed and field measurements of LAI. Funct. Plant Biol. 2008, 35, 1070–1079. [Google Scholar] [CrossRef] [PubMed]

- Cabrera-Bosquet, L.; Fournier, C.; Brichet, N.; Welcker, C.; Suard, B.; Tardieu, F. High-throughput estimation of incident light, light interception and radiation-use efficiency of thousands of plants in a phenotyping platform. N. Phytol. 2016, 212, 269–281. [Google Scholar] [CrossRef]

- Lou, L.; Liu, Y.; Han, J.; Doonan, J.H. Accurate multi-view stereo 3D reconstruction for cost-effective plant phenotyping. In Proceedings of the Image Analysis and Recognition: 11th International Conference, ICIAR 2014, Vilamoura, Portugal, 22–24 October 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 349–356.

- Rusu, R.B.; Cousins, S. 3D is here: Point Cloud Library (PCL). In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; IEEE: New York, NY, USA, 2011; pp. 1–4.

- Qiu, Q.; Sun, N.; Bai, H.; Wang, N.; Fan, Z.; Wang, Y.; Meng, Z.; Li, B.; Cong, Y. Field-based high-throughput phenotyping for maize plant using 3D LiDAR point cloud generated with a “Phenomobile”. Front. Plant Sci. 2019, 10, 554.

- Paturkar, A.; Sen Gupta, G.; Bailey, D. Making use of 3D models for plant physiognomic analysis: A review. Remote Sens. 2021, 13, 2232.

- Elnashef, B.; Filin, S.; Lati, R.N. Tensor-based classification and segmentation of three-dimensional point clouds for organ-level plant phenotyping and growth analysis. Comput. Electron. Agric. 2019, 156, 51–61.

- Paulus, S.; Dupuis, J.; Riedel, S.; Kuhlmann, H. Automated analysis of barley organs using 3D laser scanning: An approach for high throughput phenotyping. Sensors 2014, 14, 12670–12686.

- Paulus, S. Measuring crops in 3D: Using geometry for plant phenotyping. Plant Methods 2019, 15, 1–13.

- Taiz, L.; Zeiger, E.; Møller, I.M.; Murphy, A. Plant Physiology and Development: Sinauer Associates Incorporated; Springer: Berlin/Heidelberg, Germany, 2015.

- Alscher, R.G.; Cumming, J.R. Stress Responses in Plants: Adaptation and Acclimation Mechanisms; Wiley-Liss: Hoboken, NJ, USA, 1990.

- Liu, H.; Lee, S.-H.; Chahl, J.S. Registration of multispectral 3D points for plant inspection. Precis. Agric. 2018, 19, 513–536.

- Sun, G.; Wang, X.; Sun, Y.; Ding, Y.; Lu, W. Measurement method based on multispectral three-dimensional imaging for the chlorophyll contents of greenhouse tomato plants. Sensors 2019, 19, 3345.

- Chebrolu, N.; Läbe, T.; Stachniss, C. Spatio-temporal non-rigid registration of 3D point clouds of plants. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Online, 31 May–31 August 2020; IEEE: New York, NY, USA, 2020; pp. 3112–3118.

- Schunck, D.; Magistri, F.; Rosu, R.A.; Cornelißen, A.; Chebrolu, N.; Paulus, S.; Léon, J.; Behnke, S.; Stachniss, C.; Kuhlmann, H. Pheno4D: A spatio-temporal dataset of maize and tomato plant point clouds for phenotyping and advanced plant analysis. PLoS ONE 2021, 16, e0256340.

- Van As, H. MRI of water transport in intact plants: Characteristics and dynamics. Comp. Biochem. Physiol. A-Mol. Integr. Physiol. 2006, 143, S42.

- Zwieniecki, M.A.; Melcher, P.J.; Ahrens, E.T. Analysis of spatial and temporal dynamics of xylem refilling in Acer rubrum L. using magnetic resonance imaging. Front. Plant Sci. 2013, 4, 265.

- Cozzolino, D. Use of Infrared Spectroscopy for In-Field Measurement and Phenotyping of Plant Properties: Instrumentation, Data Analysis, and Examples. Appl. Spectrosc. Rev. 2014, 49, 564–584.

- Jansen, M.; Gilmer, F.; Biskup, B.; Nagel, K.A.; Rascher, U.; Fischbach, A.; Briem, S.; Dreissen, G.; Tittmann, S.; Braun, S.; et al. Simultaneous phenotyping of leaf growth and chlorophyll fluorescence via GROWSCREEN FLUORO allows detection of stress tolerance in Arabidopsis thaliana and other rosette plants. Funct. Plant Biol. 2009, 36, 902–914.

- Nakhle, F.; Harfouche, A.L. Ready Steady Go, A.I. A practical tutorial on fundamentals of artificial intelligence and its applications in phenomics image analysis. Patterns 2021, 2, 100323.

- Gehan, M.A.; Fahlgren, N.; Abbasi, A.; Berry, J.C.; Callen, S.T.; Chavez, L.; Doust, A.N.; Feldman, M.J.; Gilbert, K.B.; Hodge, J.G.; et al. PlantCV v2, Image analysis software for high-throughput plant phenotyping. PeerJ 2017, 5, e4088.

- Koh, J.C.O.; Spangenberg, G.; Kant, S. Automated Machine Learning for High-Throughput Image-Based Plant Phenotyping. Remote Sens. 2021, 13, 858.

- Choudhury, S.D.; Samal, A.; Awada, T. Leveraging Image Analysis for High-Throughput Plant Phenotyping. Front. Plant Sci. 2019, 10, 508.

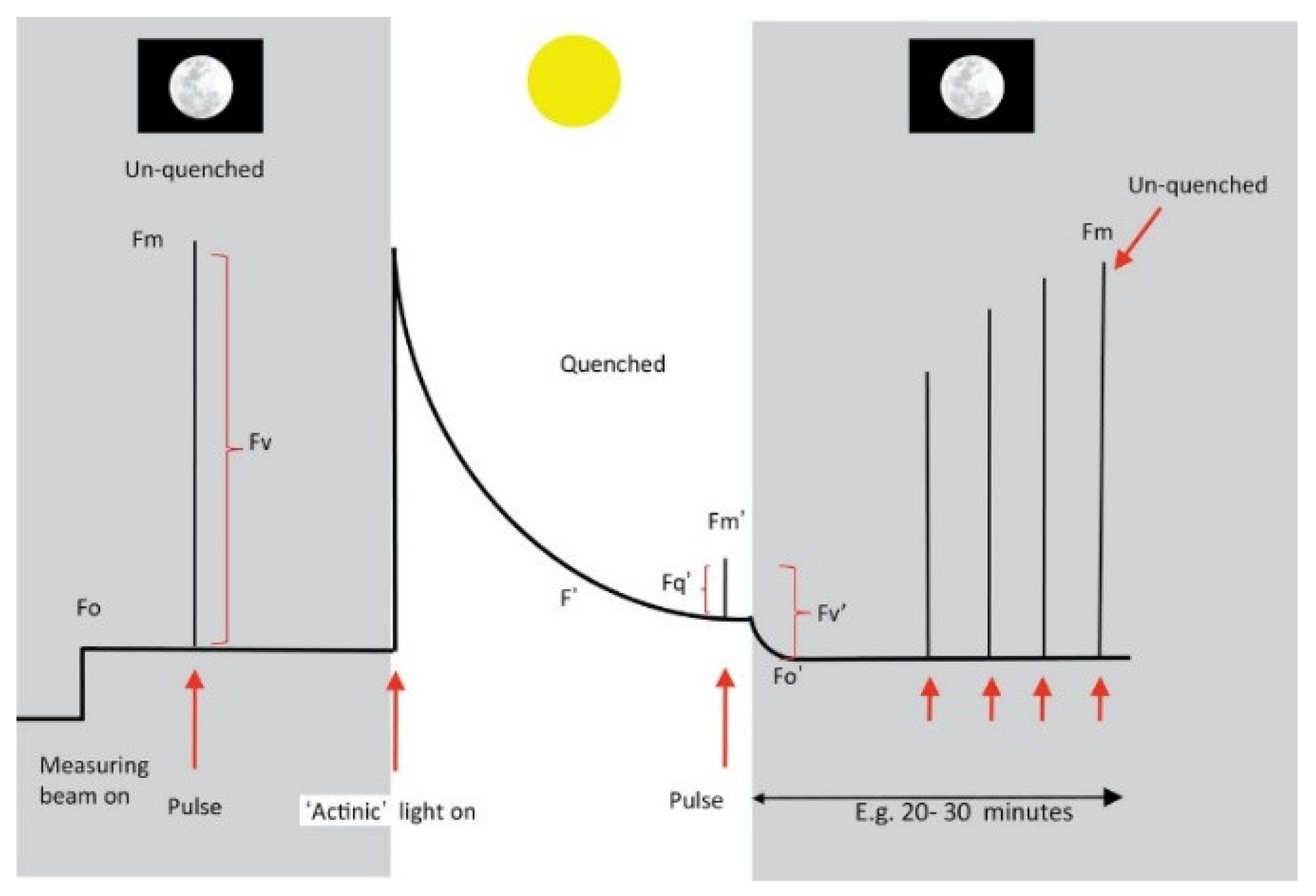

| Category | Parameter | Measurement and Calculation | Description |
|---|---|---|---|
| Preliminary parameters | Fo | Switch on the measuring light to record Fo. | Minimal fluorescence of chlorophyll a in dark-adapted leaves, indicating the baseline fluorescence of the sample. |
| | Fm | Apply a saturating light pulse to induce Fm. | Maximal fluorescence of chlorophyll a in dark-adapted leaves. |
| | F′ | Switch on the actinic light: fluorescence first rises, then quenches as photochemical and non-photochemical processes increasingly compete. The resulting state is called the light-adapted state. | Chlorophyll fluorescence yield in the light-adapted state, in the presence of actinic light. |
| | Fm′ | Apply a saturating pulse to the light-adapted leaf to record Fm′. | Maximal fluorescence of chlorophyll a in light-adapted leaves. |
| | Fo′ | Switch off the actinic light and measure immediately to record Fo′. Because accurate measurement is difficult, Fo′ is often calculated instead. | Minimal fluorescence of chlorophyll a in light-adapted leaves. |
| Deduced parameters | Fv | Fv = Fm − Fo; the difference between Fm and Fo is the variable fluorescence. | Related to the maximum quantum yield of PS-II; reflects the chlorophyll molecules associated with open, photochemically active reaction centers. |
| | Fv/Fm | Fv/Fm = (Fm − Fo)/Fm; in unstressed leaves this ratio is consistently about 0.83. | Maximum photochemical efficiency of PS-II; used as an indicator of stress or damage to the photosynthetic apparatus. |
| | ΦPSII | ΦPSII = (Fm′ − F′)/Fm′; no dark-adapted measurement is needed, so it is a commonly measured light-adapted parameter. | Operating efficiency of PS-II photochemistry. |
| | Fq′/Fv′ | Fq′/Fv′ = (Fm′ − F′)/Fv′, where Fv′ = Fm′ − Fo′; this parameter also does not need a dark-adapted measurement. | Reflects the level of photoprotective quenching of fluorescence and indicates the onset of photoinhibition. |
| | NPQ | NPQ = (Fm − Fm′)/Fm′, equivalently (Fm/Fm′) − 1. | Non-photochemical quenching coefficient; estimates the rate constant for heat loss from PS-II. |
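
The deduced parameters in the table are simple combinations of the preliminary readings, so they can be computed directly once Fo, Fm, F′ and Fm′ are available. The Python sketch below is illustrative only: the `FluorescenceReadings` container and the function names are hypothetical, and because Fo′ is not measured here it is estimated with the commonly used approximation of Oxborough and Baker (1997), Fo′ = Fo/(Fv/Fm + Fo/Fm′), which is an assumption rather than a method taken from this review.

```python
from dataclasses import dataclass


@dataclass
class FluorescenceReadings:
    """Hypothetical container for raw ChlF signals (arbitrary instrument units)."""
    fo: float        # Fo: minimal fluorescence, dark-adapted
    fm: float        # Fm: maximal fluorescence, dark-adapted (saturating pulse)
    f_prime: float   # F': fluorescence under actinic light
    fm_prime: float  # Fm': maximal fluorescence, light-adapted (saturating pulse)


def estimate_fo_prime(fo: float, fm: float, fm_prime: float) -> float:
    """Assumed Oxborough-Baker approximation: Fo' = Fo / (Fv/Fm + Fo/Fm')."""
    return fo / ((fm - fo) / fm + fo / fm_prime)


def chlf_parameters(r: FluorescenceReadings) -> dict:
    """Compute the deduced parameters listed in the table."""
    if not (0 < r.fo < r.fm) or not (0 < r.f_prime < r.fm_prime):
        raise ValueError("expected 0 < Fo < Fm and 0 < F' < Fm'")
    fv = r.fm - r.fo                    # variable fluorescence Fv
    fq_prime = r.fm_prime - r.f_prime   # Fq' = Fm' - F'
    fo_prime = estimate_fo_prime(r.fo, r.fm, r.fm_prime)
    fv_prime = r.fm_prime - fo_prime    # Fv' = Fm' - Fo'
    return {
        "Fv": fv,
        "Fv/Fm": fv / r.fm,                       # about 0.83 in unstressed leaves
        "PhiPSII": fq_prime / r.fm_prime,         # (Fm' - F') / Fm'
        "Fo'": fo_prime,
        "Fq'/Fv'": fq_prime / fv_prime,           # (Fm' - F') / Fv'
        "NPQ": (r.fm - r.fm_prime) / r.fm_prime,  # (Fm - Fm') / Fm'
    }


if __name__ == "__main__":
    # Made-up readings, chosen only to exercise the formulas.
    params = chlf_parameters(
        FluorescenceReadings(fo=300, fm=1800, f_prime=600, fm_prime=1000)
    )
    for name, value in params.items():
        print(f"{name}: {value:.3f}")
```

With these made-up readings the script prints Fv/Fm: 0.833, PhiPSII: 0.400, Fq'/Fv': 0.544 and NPQ: 0.800. Note that Fv/Fm and NPQ still require a dark-adapted Fm, whereas ΦPSII uses only light-adapted readings.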

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ye, D.; Wu, L.; Li, X.; Atoba, T.O.; Wu, W.; Weng, H. A Synthetic Review of Various Dimensions of Non-Destructive Plant Stress Phenotyping. Plants 2023, 12, 1698. https://doi.org/10.3390/plants12081698

Ye D, Wu L, Li X, Atoba TO, Wu W, Weng H. A Synthetic Review of Various Dimensions of Non-Destructive Plant Stress Phenotyping. Plants. 2023; 12(8):1698. https://doi.org/10.3390/plants12081698

Chicago/Turabian Style: Ye, Dapeng, Libin Wu, Xiaobin Li, Tolulope Opeyemi Atoba, Wenhao Wu, and Haiyong Weng. 2023. "A Synthetic Review of Various Dimensions of Non-Destructive Plant Stress Phenotyping" Plants 12, no. 8: 1698. https://doi.org/10.3390/plants12081698

APA Style: Ye, D., Wu, L., Li, X., Atoba, T. O., Wu, W., & Weng, H. (2023). A Synthetic Review of Various Dimensions of Non-Destructive Plant Stress Phenotyping. Plants, 12(8), 1698. https://doi.org/10.3390/plants12081698