Detection of Strawberry Diseases Using a Convolutional Neural Network

Abstract

1. Introduction

2. Results and Discussion

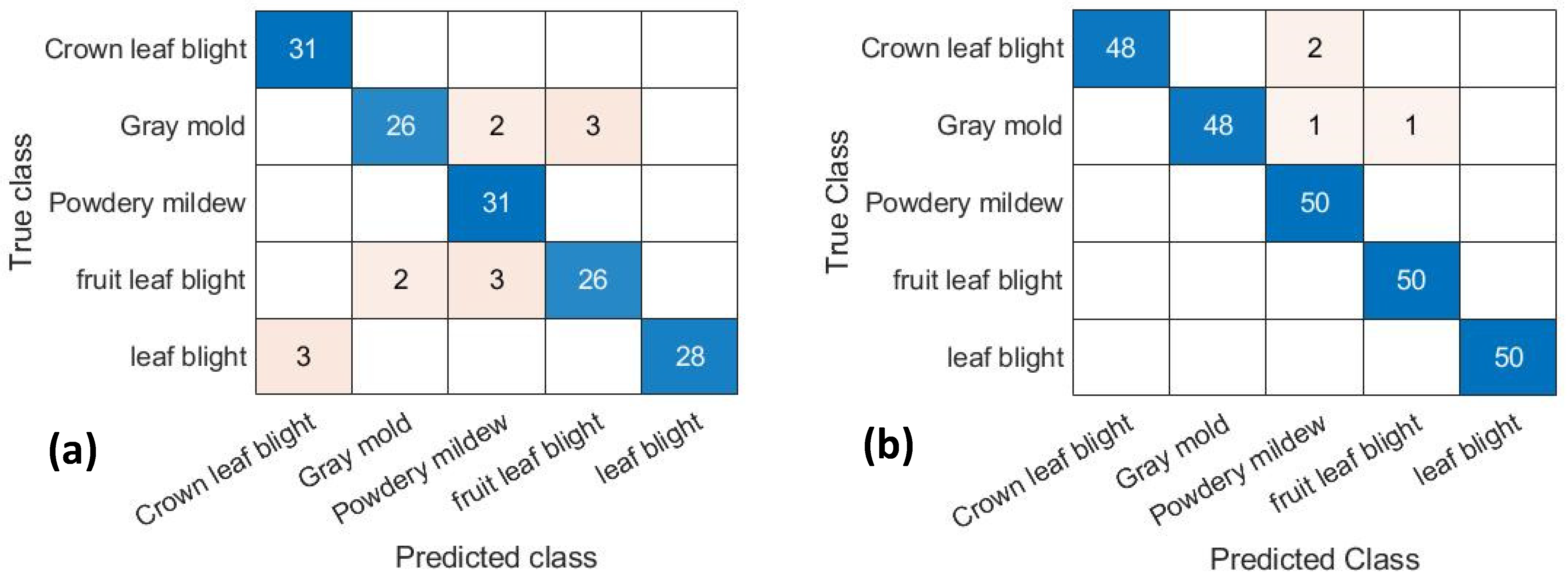

2.1. Confusion Matrix of the GoogLeNet Model

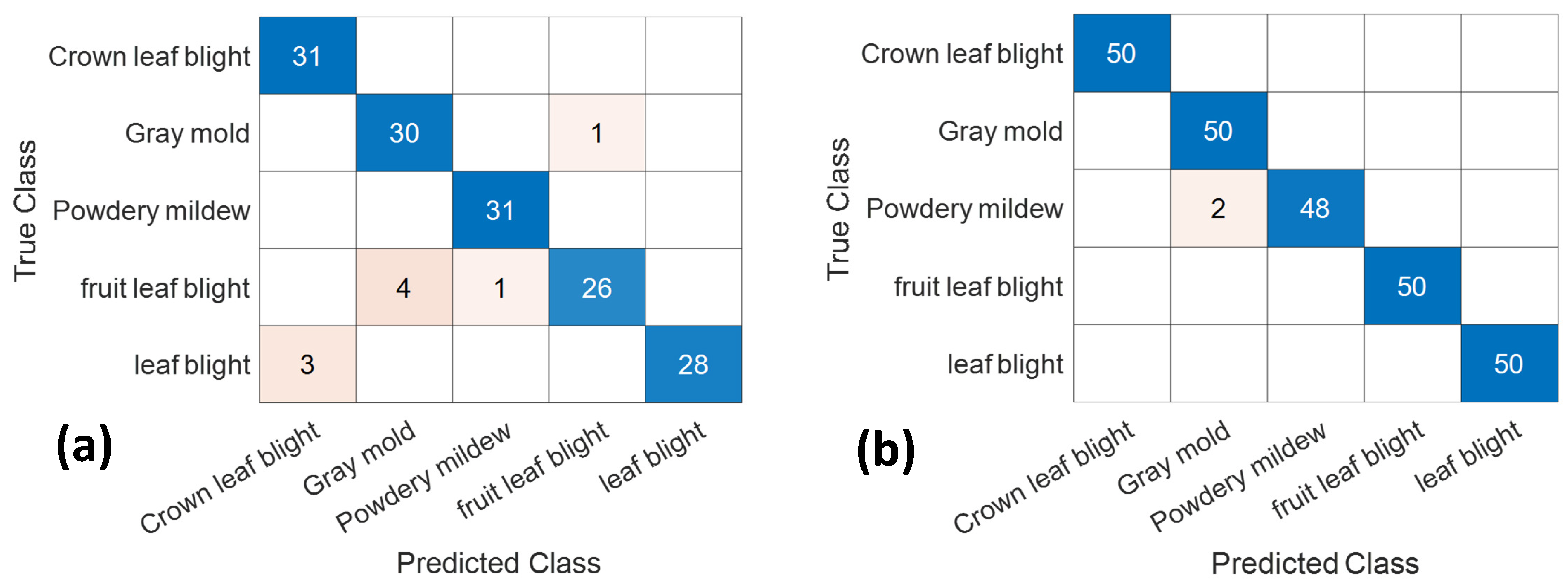

2.2. Confusion Matrix of the VGG16 Model

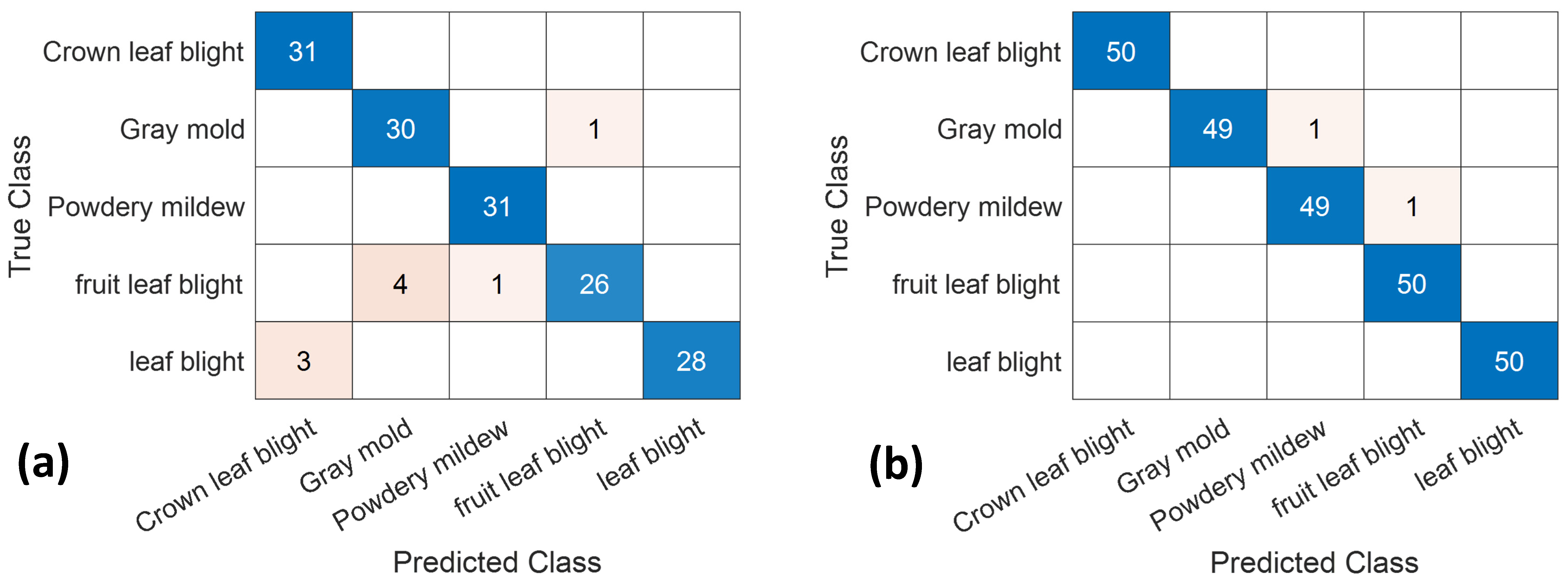

2.3. Confusion Matrix of the ResNet50 Model
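The confusion matrices for GoogLeNet, VGG16 and ResNet50 are reported as figures. A minimal sketch of how such a matrix is usually summarized follows: overall accuracy, per-class recall and per-class precision. It assumes rows hold the true classes and columns the predicted classes (a common convention that should be checked against the figures), and the 3 x 3 matrix in the example is invented for illustration; it is not a result from this study.

```python
from typing import List, Sequence

Matrix = Sequence[Sequence[int]]  # assumed layout: rows = true class, columns = predicted class

def overall_accuracy(cm: Matrix) -> float:
    """Share of all samples that lie on the diagonal (correct predictions)."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total

def per_class_recall(cm: Matrix) -> List[float]:
    """For each true class (row): correct predictions / samples of that class."""
    return [cm[i][i] / sum(cm[i]) for i in range(len(cm))]

def per_class_precision(cm: Matrix) -> List[float]:
    """For each predicted class (column): correct predictions / samples predicted as that class."""
    n = len(cm)
    return [cm[j][j] / sum(cm[i][j] for i in range(n)) for j in range(n)]

if __name__ == "__main__":
    # Hypothetical counts for three classes, not taken from the paper.
    cm = [[8, 1, 1],
          [0, 9, 1],
          [1, 0, 9]]
    print(f"accuracy  = {overall_accuracy(cm):.3f}")
    print("recall    =", [round(r, 3) for r in per_class_recall(cm)])
    print("precision =", [round(p, 3) for p in per_class_precision(cm)])
```

With these made-up counts the third class has a recall of 0.9 but a precision of 9/11, because two samples from other classes were predicted as that class. A class with an empty row or column would raise `ZeroDivisionError`, so real code should guard against it.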

3. Materials and Methods

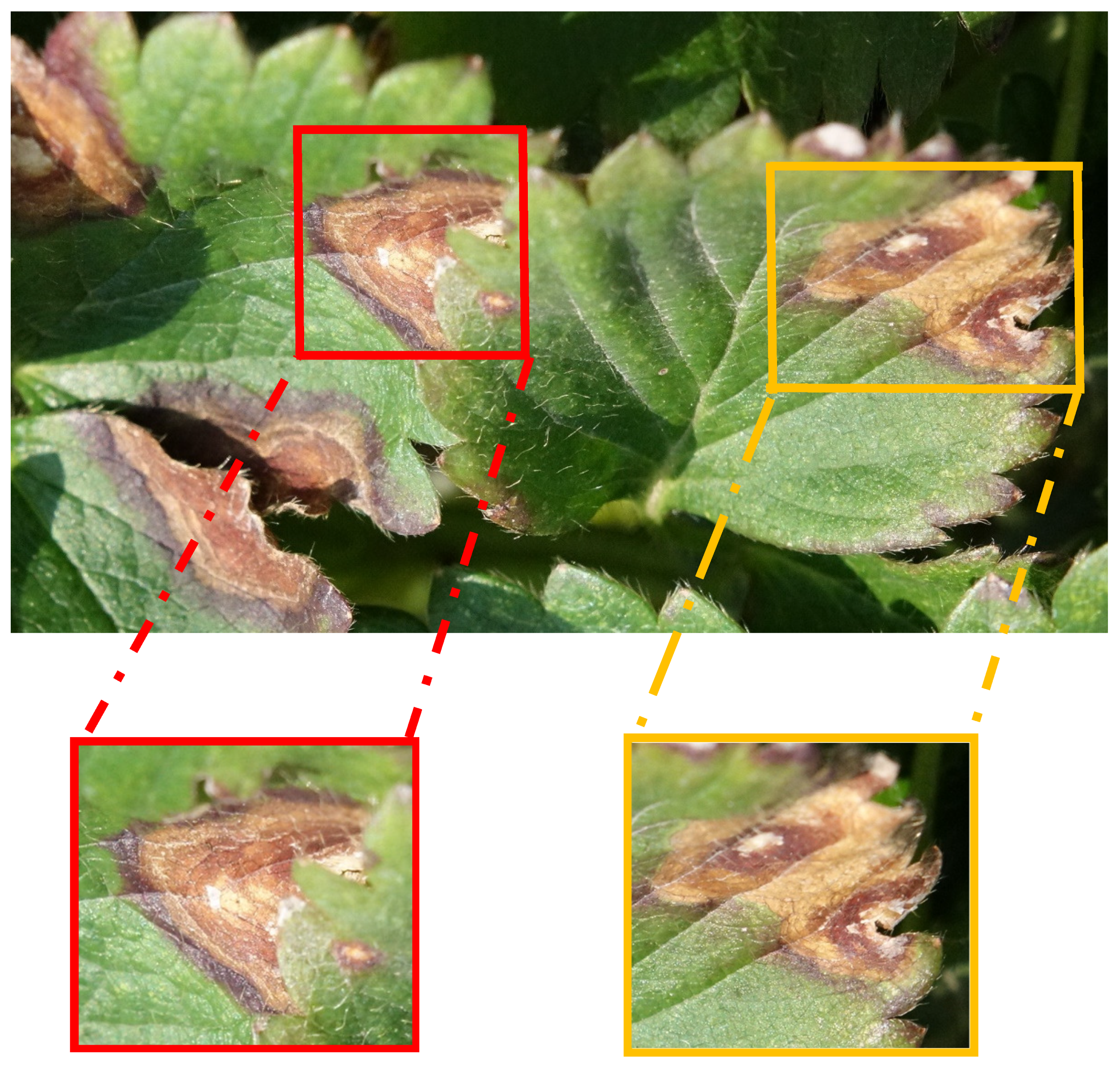

3.1. Strawberry Diseases Dataset

3.2. Convolutional Neural Network Image Recognition

3.2.1. Convolutional Neural Network Schematic

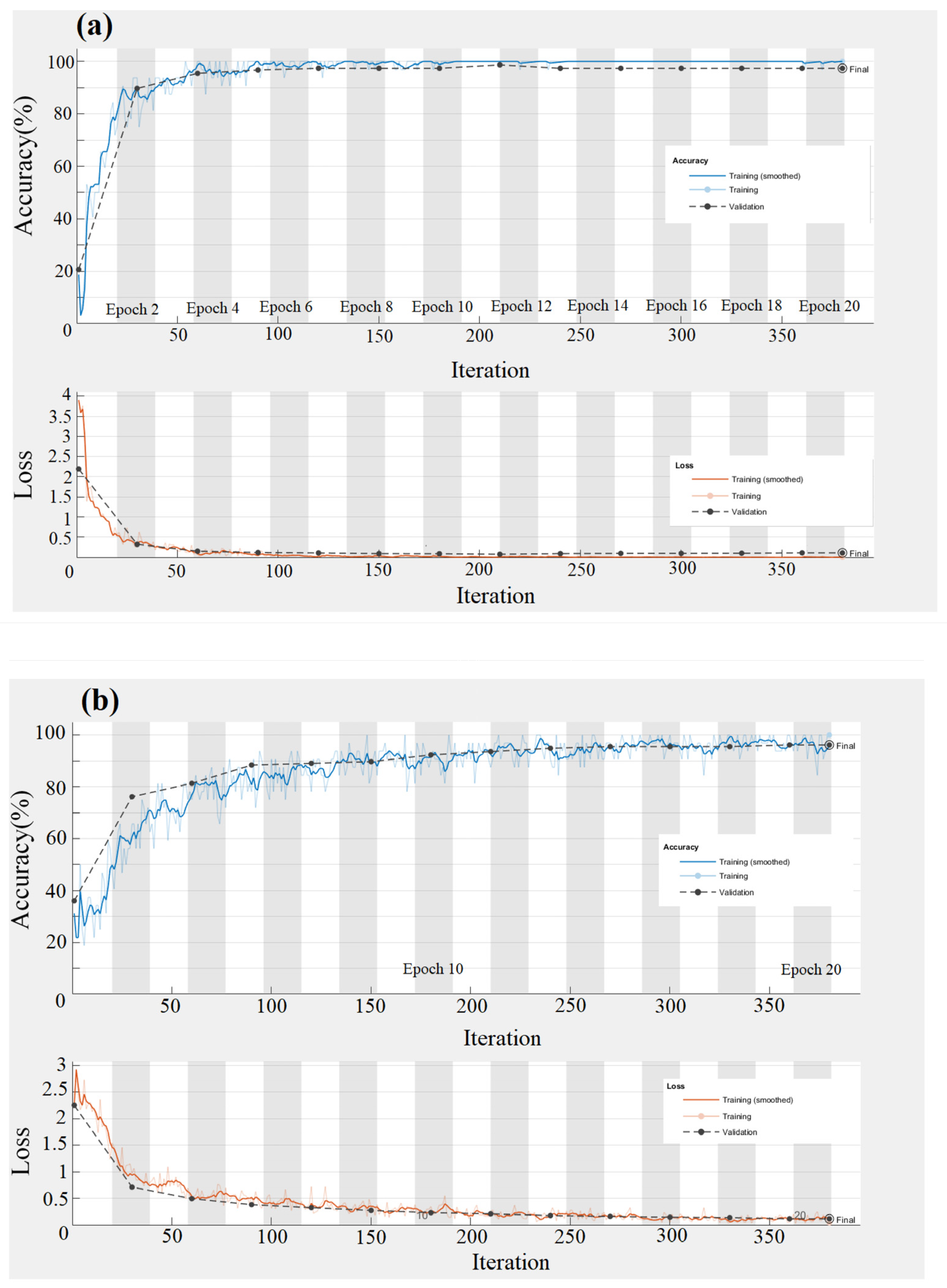
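A convolution layer, a ReLU activation and a pooling layer form the basic unit that GoogLeNet, VGG16 and ResNet50 all stack. As a minimal, framework-free sketch of those three operations, the Python below applies one filter with stride 1 and no padding (implemented as cross-correlation, as most deep-learning libraries do), then ReLU, then 2 x 2 max pooling. The 4 x 4 input and the vertical-edge filter are hypothetical toy values; real networks learn many filters per layer and work on multi-channel images.

```python
from typing import List

Matrix = List[List[float]]

def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide the kernel over the image (stride 1, no padding) and sum the products.

    Like most deep-learning libraries, this is cross-correlation: the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + u][j + v] * kernel[u][v]
                 for u in range(kh) for v in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu(x: Matrix) -> Matrix:
    """Element-wise max(0, value)."""
    return [[max(0.0, v) for v in row] for row in x]

def max_pool2x2(x: Matrix) -> Matrix:
    """2x2 max pooling with stride 2; an odd trailing row or column is dropped."""
    return [[max(x[i][j], x[i][j + 1], x[i + 1][j], x[i + 1][j + 1])
             for j in range(0, len(x[0]) - 1, 2)]
            for i in range(0, len(x) - 1, 2)]

if __name__ == "__main__":
    # Toy 4x4 "image": dark left half, bright right half.
    image = [[0, 0, 1, 1] for _ in range(4)]
    # Hand-made vertical-edge filter (a trained CNN learns its filter values).
    kernel = [[-1, 1],
              [-1, 1]]
    feature_map = conv2d_valid(image, kernel)
    print(feature_map)                     # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
    print(max_pool2x2(relu(feature_map)))  # [[2]]
```

The filter responds only where brightness changes from left to right, and pooling keeps that strongest response while halving the spatial size.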

3.2.2. GoogLeNet Structure Diagram

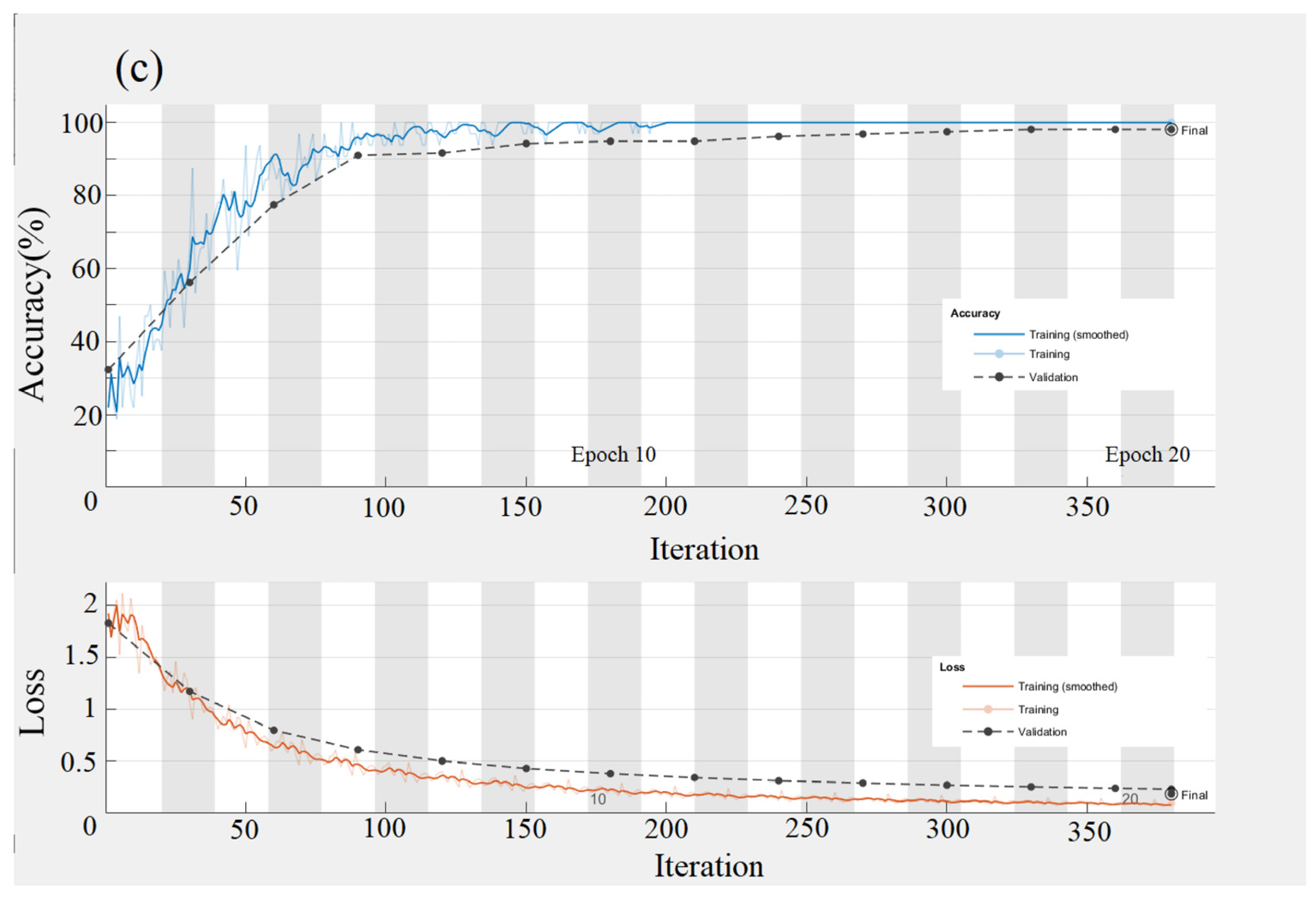
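GoogLeNet is built from Inception modules: parallel 1 x 1, 3 x 3, 5 x 5 and pooling branches whose outputs are concatenated along the channel axis, with 1 x 1 "reduce" convolutions shrinking the channel count before the larger kernels. The sketch below works through that arithmetic for the inception (3a) module, using the filter counts published for the original GoogLeNet (192 input channels). It counts convolution weights only (no biases) and does not describe the model as trained in this study.

```python
from typing import NamedTuple

class InceptionSpec(NamedTuple):
    """Filter counts of one Inception module (names follow the GoogLeNet layer table)."""
    n1x1: int
    n3x3_reduce: int
    n3x3: int
    n5x5_reduce: int
    n5x5: int
    pool_proj: int

def conv_weights(in_ch: int, out_ch: int, k: int) -> int:
    """Number of weights in a k x k convolution, bias terms ignored."""
    return in_ch * out_ch * k * k

def output_channels(spec: InceptionSpec) -> int:
    # All four branches preserve height and width, so their outputs are
    # concatenated along the channel axis.
    return spec.n1x1 + spec.n3x3 + spec.n5x5 + spec.pool_proj

def branch_5x5_weights(in_ch: int, spec: InceptionSpec, use_reduction: bool = True) -> int:
    """Weights in the 5 x 5 branch, with or without the 1 x 1 reduction layer."""
    if not use_reduction:
        return conv_weights(in_ch, spec.n5x5, 5)
    return conv_weights(in_ch, spec.n5x5_reduce, 1) + conv_weights(spec.n5x5_reduce, spec.n5x5, 5)

INCEPTION_3A = InceptionSpec(64, 96, 128, 16, 32, 32)
IN_CHANNELS_3A = 192  # channels entering inception (3a)

if __name__ == "__main__":
    print(output_channels(INCEPTION_3A))                                          # 256
    print(branch_5x5_weights(IN_CHANNELS_3A, INCEPTION_3A, use_reduction=False))  # 153600
    print(branch_5x5_weights(IN_CHANNELS_3A, INCEPTION_3A))                       # 15872
```

The 1 x 1 reduction cuts the 5 x 5 branch from 153,600 to 15,872 weights, nearly a tenfold saving, which is why GoogLeNet can be deep and wide at a modest parameter budget.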

3.2.3. VGG16 Structure Diagram

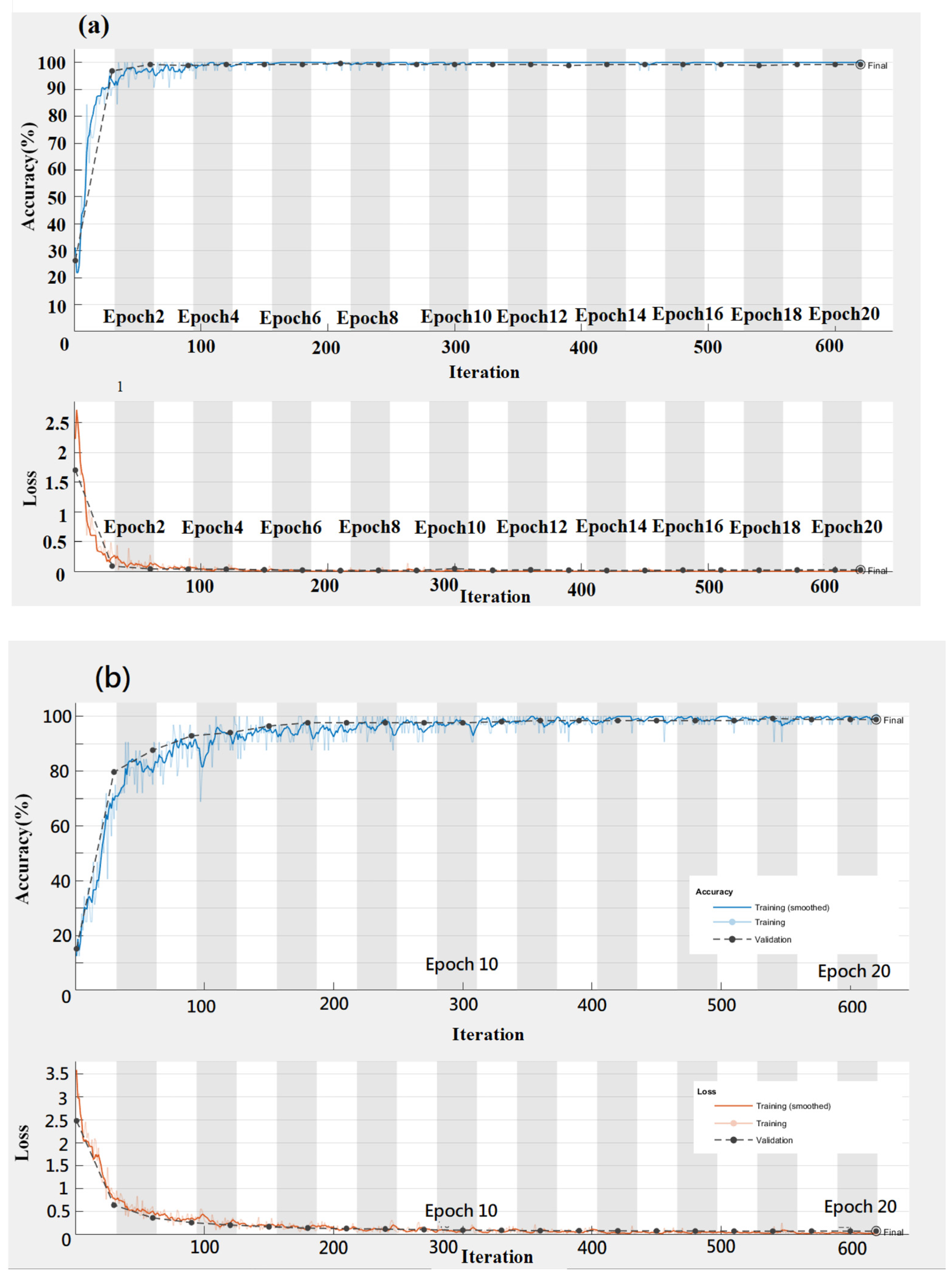

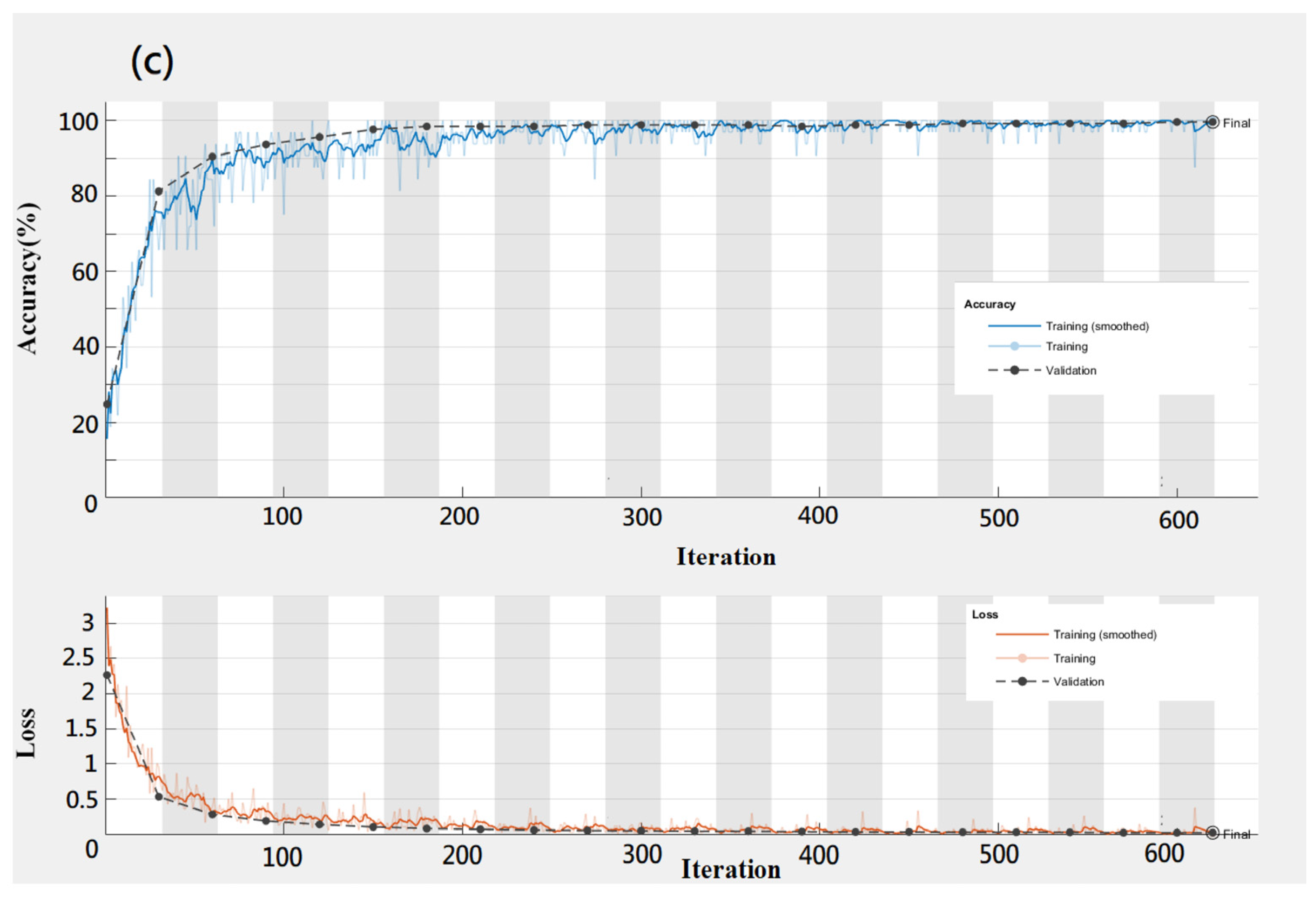
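In its original design, VGG16 stacks 13 convolutional layers (all 3 x 3, stride 1, padding 1) in five blocks separated by 2 x 2 max pooling with stride 2, followed by three fully connected layers. The sketch below applies the standard output-size formula, floor((n + 2p - k) / s) + 1, to trace a 224 x 224 input through the five blocks. The 224 x 224 input size comes from the original VGG16 design; whether the strawberry images in this study were resized to it is an assumption here.

```python
from typing import List, Tuple

# (number of 3x3 conv layers, output channels) for the five VGG16 blocks.
VGG16_BLOCKS: List[Tuple[int, int]] = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]

def out_size(n: int, k: int, s: int = 1, p: int = 0) -> int:
    """Spatial output size of a conv or pooling window: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * p - k) // s + 1

def trace_vgg16(input_size: int = 224) -> List[Tuple[int, int, int]]:
    """Return (height, width, channels) after each block's max-pooling layer."""
    size = input_size
    shapes = []
    for n_convs, channels in VGG16_BLOCKS:
        for _ in range(n_convs):
            size = out_size(size, k=3, s=1, p=1)  # 3x3 conv, padding 1: size unchanged
        size = out_size(size, k=2, s=2)           # 2x2 max pool, stride 2: size halved
        shapes.append((size, size, channels))
    return shapes

if __name__ == "__main__":
    for shape in trace_vgg16():
        print(shape)
    h, w, c = trace_vgg16()[-1]
    print("features entering the first fully connected layer:", h * w * c)  # 25088
    print("weight layers:", sum(n for n, _ in VGG16_BLOCKS) + 3)             # 16
```

Because padding 1 keeps the size fixed, only the pooling layers shrink the feature map: 224, 112, 56, 28, 14 and finally 7 x 7 x 512.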

3.2.4. ResNet50 Structure Diagram
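ResNet50 takes its name from its 50 weight layers: one 7 x 7 convolution, 16 bottleneck residual blocks of three convolutions each (3, 4, 6 and 3 blocks in its four stages), and a final fully connected layer. Each block adds its input back through a shortcut connection, y = ReLU(F(x) + x). The sketch below shows that shortcut on a plain list and the weight count that motivates the 1 x 1 / 3 x 3 / 1 x 1 bottleneck for a 256-channel input, using the layer widths of the original ResNet design. Biases and batch normalization are ignored, and the toy function F is hypothetical.

```python
from typing import Callable, List

Vector = List[float]

def residual(x: Vector, f: Callable[[Vector], Vector]) -> Vector:
    """Identity-shortcut residual unit: ReLU(F(x) + x), element by element."""
    return [max(0.0, fx + xi) for fx, xi in zip(f(x), x)]

def conv_weights(in_ch: int, out_ch: int, k: int) -> int:
    """Number of weights in a k x k convolution, bias terms ignored."""
    return in_ch * out_ch * k * k

def bottleneck_weights(channels: int, width: int) -> int:
    """1x1 reduce -> 3x3 -> 1x1 expand, as in a ResNet50 bottleneck block."""
    return (conv_weights(channels, width, 1)
            + conv_weights(width, width, 3)
            + conv_weights(width, channels, 1))

def plain_pair_weights(channels: int) -> int:
    """Two 3x3 convolutions kept at full width, for comparison."""
    return 2 * conv_weights(channels, channels, 3)

RESNET50_STAGE_BLOCKS = [3, 4, 6, 3]

def resnet50_weight_layers() -> int:
    # 7x7 stem conv + 3 convs per bottleneck block + final fully connected layer
    return 1 + 3 * sum(RESNET50_STAGE_BLOCKS) + 1

if __name__ == "__main__":
    print(residual([1.0, -2.0], lambda v: [0.5 * t for t in v]))  # [1.5, 0.0]
    print(bottleneck_weights(256, 64))   # 69632
    print(plain_pair_weights(256))       # 1179648
    print(resnet50_weight_layers())      # 50
```

The bottleneck needs about 6% of the weights of two full-width 3 x 3 layers, and the shortcut lets each block learn only a correction F(x) to its input, which is what makes a 50-layer network trainable.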

3.2.5. CNN Training

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Diseases | Tissues | Original Images | Feature Images |
|---|---|---|---|
| leaf blight | crown | 156 | 267 |
| | leaf | 166 | 262 |
| | fruit | 155 | 254 |
| gray mold | fruit | 157 | 250 |
| powdery mildew | fruit | 158 | 273 |
| Total | | 792 | 1306 |
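The table gives per-class image counts, and the ValidationFrequency entry among the training parameters implies a held-out validation set, but the split itself is not stated. As a hedged sketch, the Python below re-adds the table rows to confirm the Total row and shows one common way to split such a dataset: stratified, so that every disease/tissue class keeps the same proportion in training and validation. The 80/20 ratio, the fixed random seed, the placeholder file names, and treating each table row as a separate class are all assumptions for illustration, not the authors' protocol.

```python
import random
from typing import Dict, List, Sequence, Tuple

# (original images, feature images) per disease and tissue, from the dataset table.
DATASET_TABLE: Dict[str, Tuple[int, int]] = {
    "leaf blight / crown": (156, 267),
    "leaf blight / leaf": (166, 262),
    "leaf blight / fruit": (155, 254),
    "gray mold / fruit": (157, 250),
    "powdery mildew / fruit": (158, 273),
}

Pair = Tuple[str, str]  # (item, class label)

def stratified_split(items_by_class: Dict[str, Sequence[str]],
                     train_percent: int = 80,
                     seed: int = 0) -> Tuple[List[Pair], List[Pair]]:
    """Shuffle each class separately and put train_percent% of it in the training set."""
    rng = random.Random(seed)  # fixed seed -> reproducible split
    train: List[Pair] = []
    val: List[Pair] = []
    for label, items in items_by_class.items():
        shuffled = list(items)
        rng.shuffle(shuffled)
        n_train = len(shuffled) * train_percent // 100  # integer arithmetic, rounds down
        train.extend((item, label) for item in shuffled[:n_train])
        val.extend((item, label) for item in shuffled[n_train:])
    return train, val

if __name__ == "__main__":
    print("original:", sum(o for o, _ in DATASET_TABLE.values()))  # 792
    print("feature:", sum(f for _, f in DATASET_TABLE.values()))   # 1306
    # Placeholder names; the real image files are not part of the source.
    items = {label: [f"{label} #{i}" for i in range(n_feat)]
             for label, (_, n_feat) in DATASET_TABLE.items()}
    train, val = stratified_split(items)
    print(len(train), len(val))  # 1043 263
```

Splitting per class rather than over the pooled 1,306 images guarantees that even the smallest class is represented in the validation set in roughly the same proportion.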

| Parameter Name | Value |
|---|---|
| Optimization | SGD |
| Epochs | 20 |
| ValidationFrequency | 30 |
| Mini-batch size | 32 |
| Learning rate | 0.0001 |
| Execution environment | GPU |
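These parameters fix the batch size and epoch count but not the training-set size, so the number of weight updates depends on how many images were used for training. A minimal sketch follows, assuming that ValidationFrequency counts iterations (its meaning in MATLAB's `trainingOptions`, whose option names this table resembles) and letting an incomplete final mini-batch be either dropped or kept, since frameworks differ. The plain SGD step w ← w − η·g uses the table's learning rate with no momentum, because the table does not list one; the 1,000-image training set in the example is hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

@dataclass(frozen=True)
class TrainingConfig:
    """Values copied from the training-parameter table."""
    solver: str = "sgd"
    max_epochs: int = 20
    validation_frequency: int = 30  # assumed: measured in iterations
    mini_batch_size: int = 32
    learning_rate: float = 1e-4

def iterations_per_epoch(n_train: int, batch_size: int, drop_last: bool = True) -> int:
    # drop_last=True discards a final mini-batch smaller than batch_size.
    return n_train // batch_size if drop_last else math.ceil(n_train / batch_size)

def schedule(cfg: TrainingConfig, n_train: int, drop_last: bool = True) -> Tuple[int, int, int]:
    """Return (iterations per epoch, total iterations, validation checkpoints)."""
    per_epoch = iterations_per_epoch(n_train, cfg.mini_batch_size, drop_last)
    total = per_epoch * cfg.max_epochs
    checkpoints = total // cfg.validation_frequency  # validations at 30, 60, ... iterations
    return per_epoch, total, checkpoints

def sgd_step(weights: List[float], grads: List[float], lr: float) -> List[float]:
    """One plain SGD update, w <- w - lr * g (no momentum or weight decay)."""
    return [w - lr * g for w, g in zip(weights, grads)]

if __name__ == "__main__":
    cfg = TrainingConfig()
    print(schedule(cfg, n_train=1000))                   # (31, 620, 20)
    print(schedule(cfg, n_train=1000, drop_last=False))  # (32, 640, 21)
    print(sgd_step([1.0, 2.0], [10.0, -20.0], cfg.learning_rate))
```

With a learning rate of 0.0001 each step moves a weight by only 10⁻⁴ times its gradient, which is typical when fine-tuning pretrained networks rather than training them from scratch.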

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Xiao, J.-R.; Chung, P.-C.; Wu, H.-Y.; Phan, Q.-H.; Yeh, J.-L.A.; Hou, M.T.-K. Detection of Strawberry Diseases Using a Convolutional Neural Network. Plants 2021, 10, 31. https://doi.org/10.3390/plants10010031
