A Pedestrian Avoidance Method Considering Personal Space for a Guide Robot

Abstract

1. Introduction

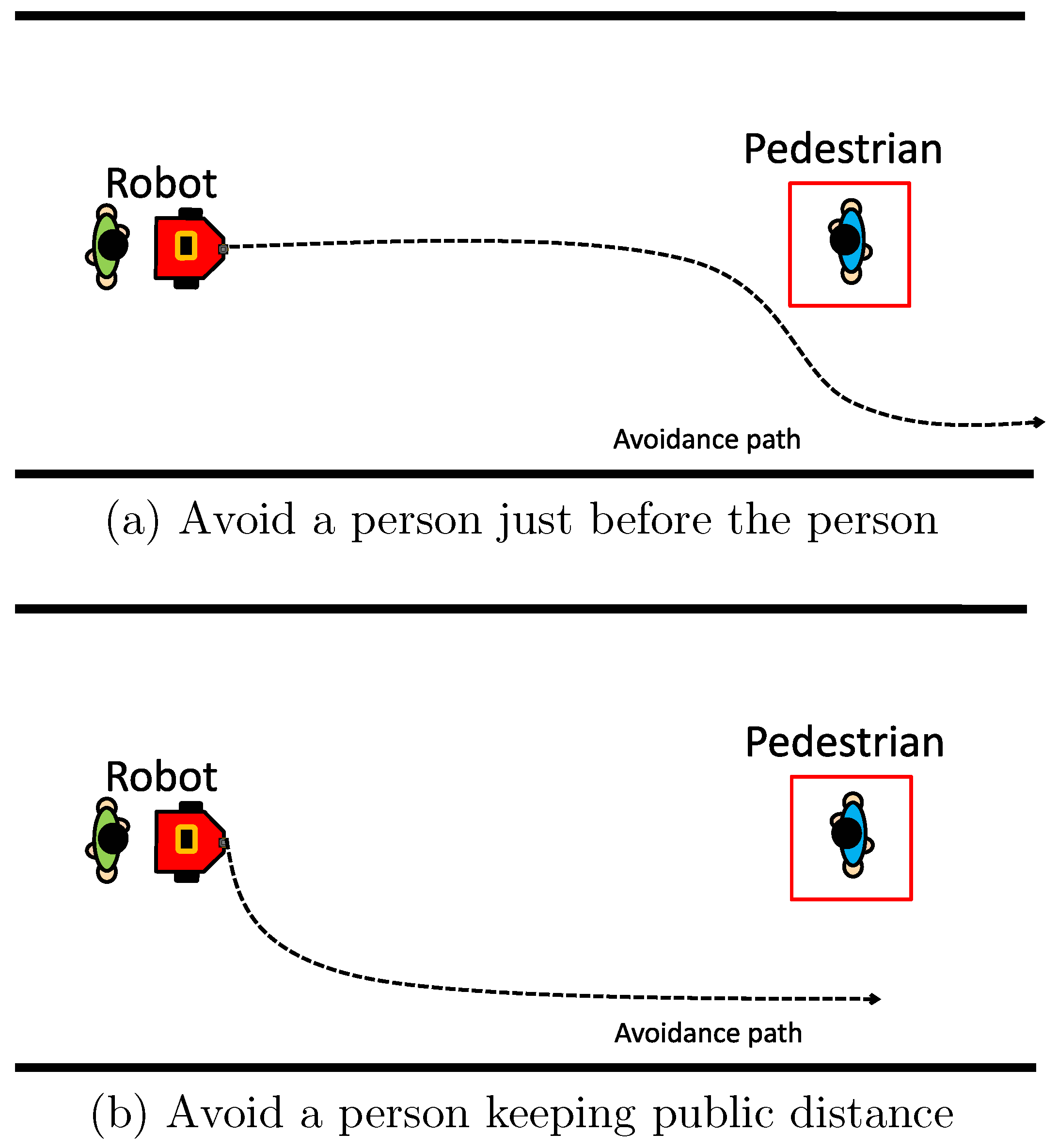

- (A) The robot should avoid a pedestrian, considering the public distance for mental safety;

- (B) The robot should avoid obstacles, based on local information;

- (C) The robot should move along the taught path to visit POIs, while avoiding obstacles and pedestrians.

2. Related Works

2.1. Pedestrian Avoidance for Collision-Free Robot Navigation

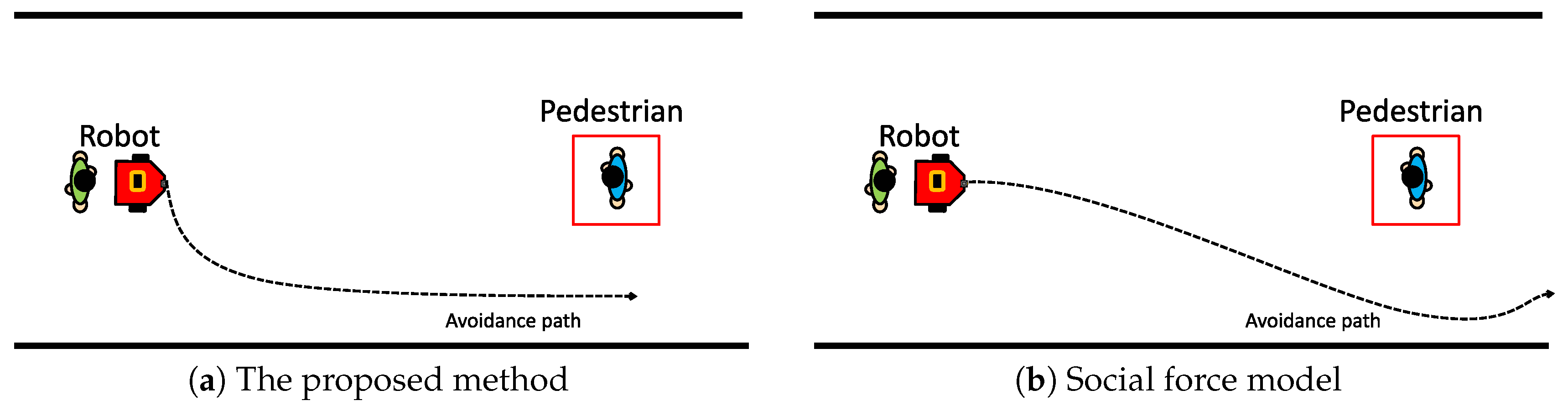

2.2. Robot Navigation Considering the Social Distance

3. Proposed Method

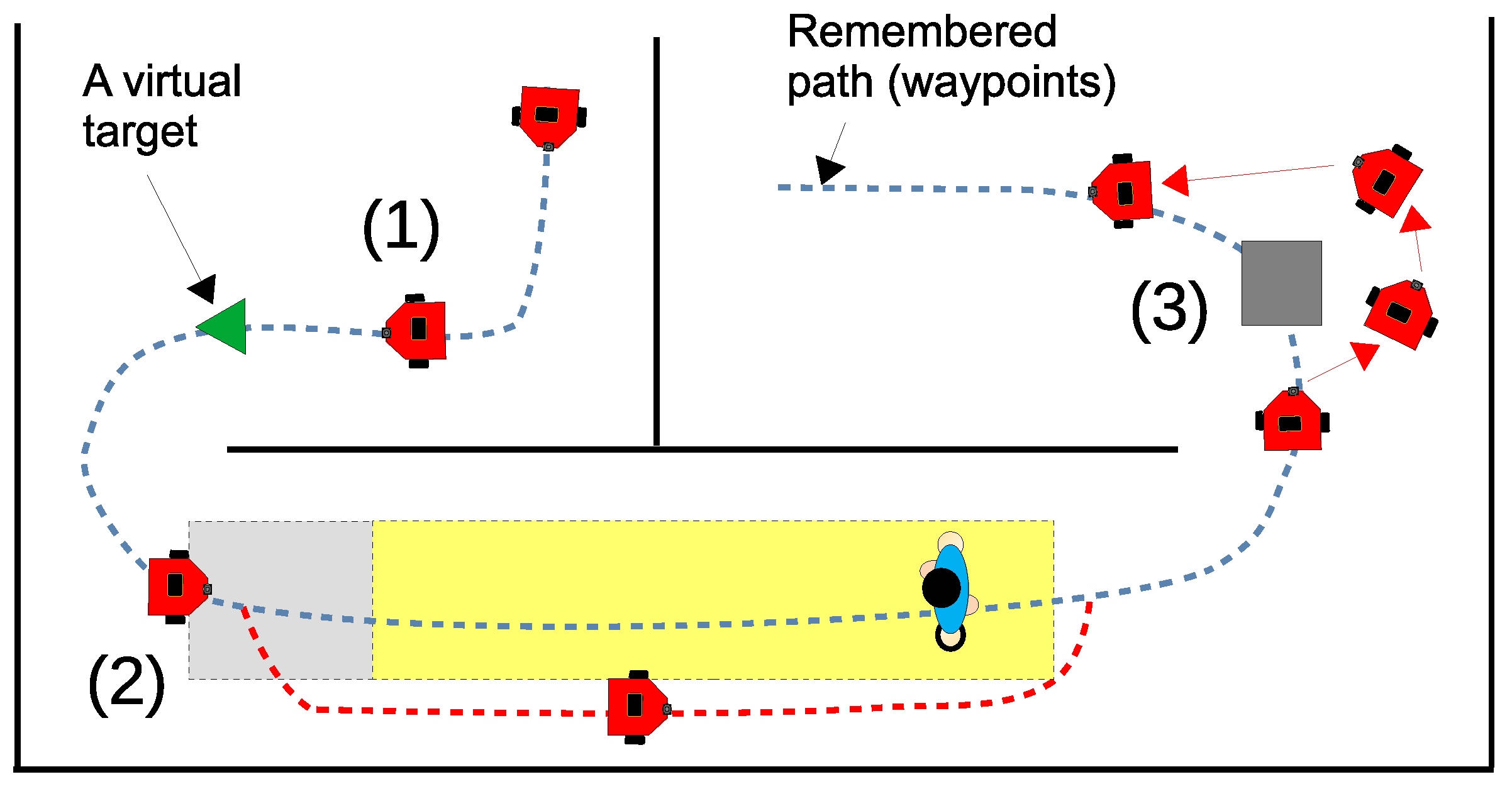

3.1. Overview

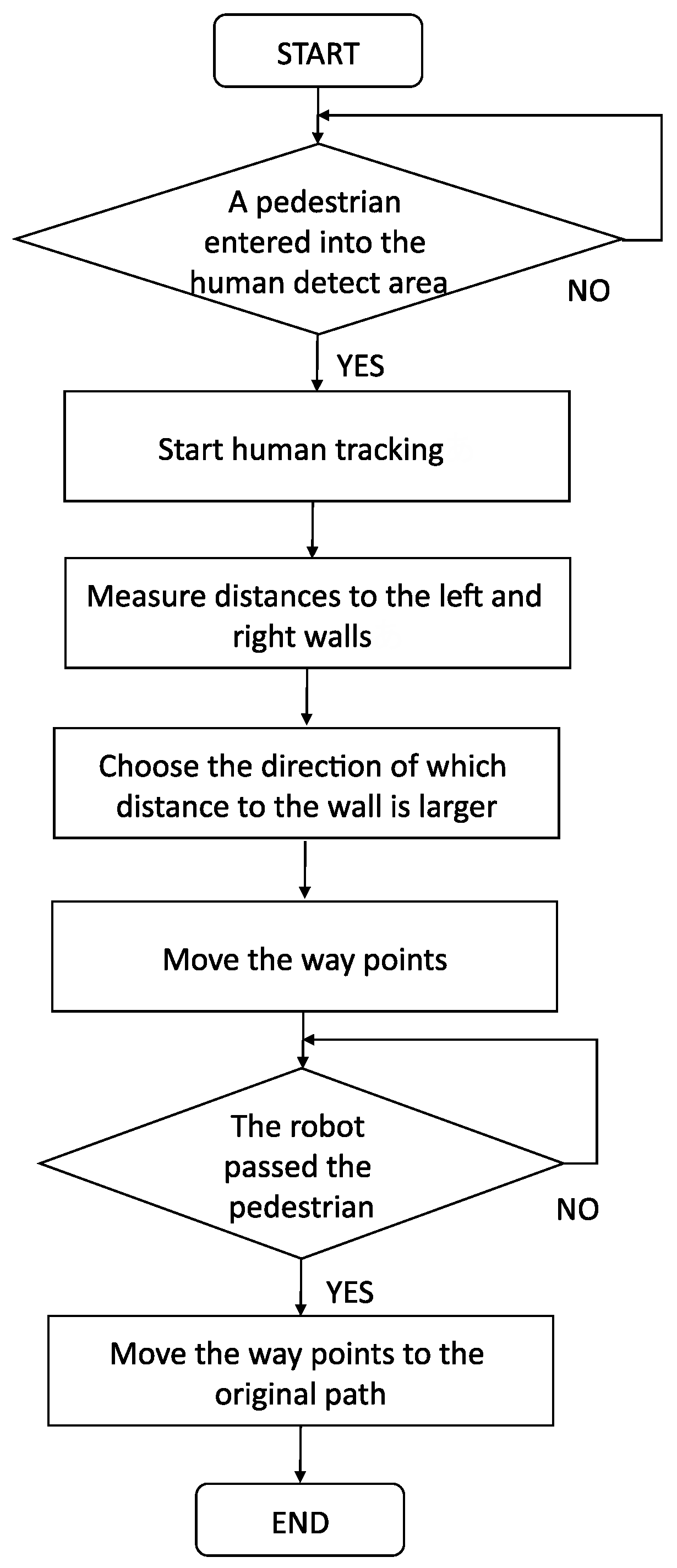

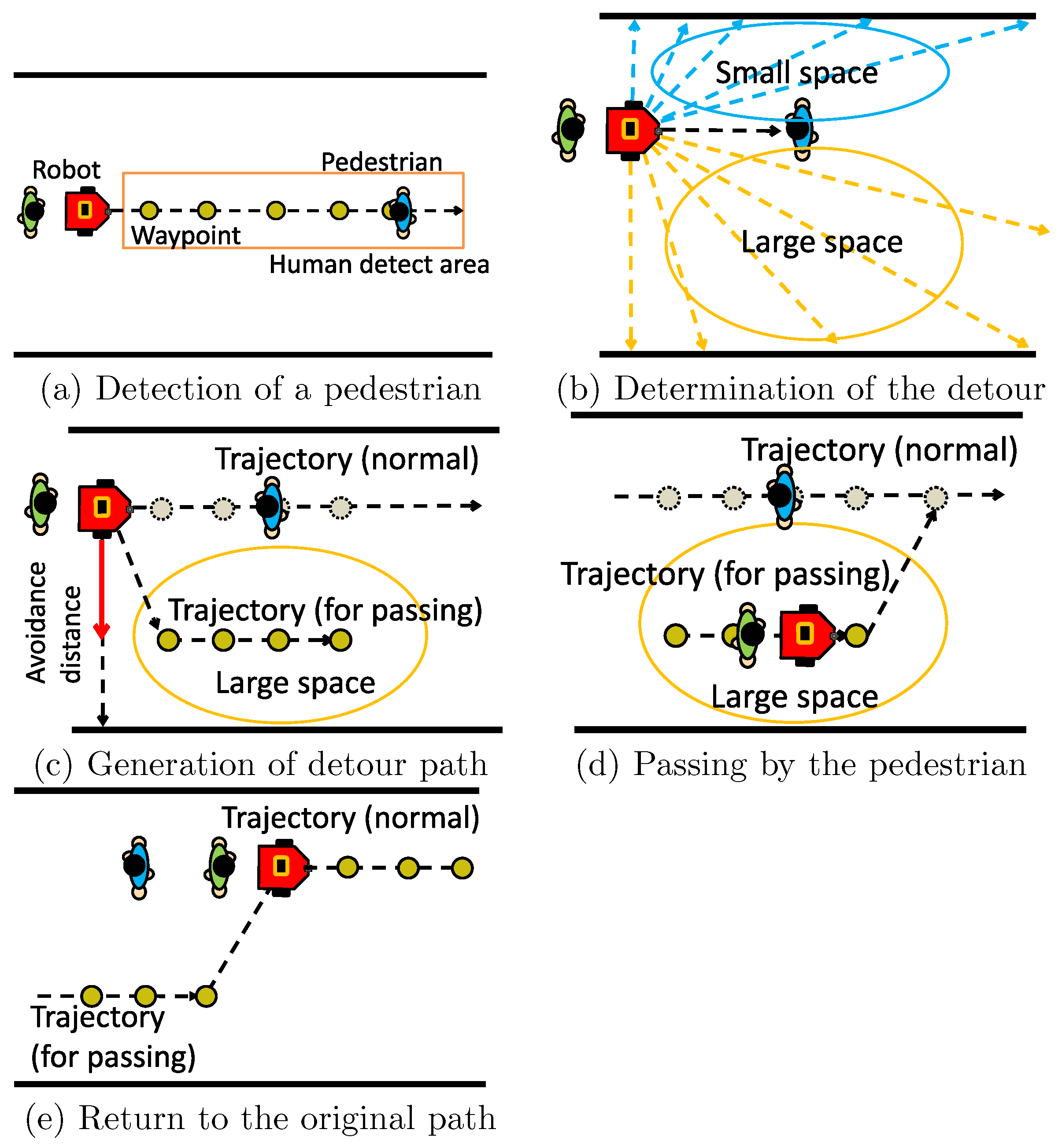

3.2. Avoiding an Oncoming Pedestrian

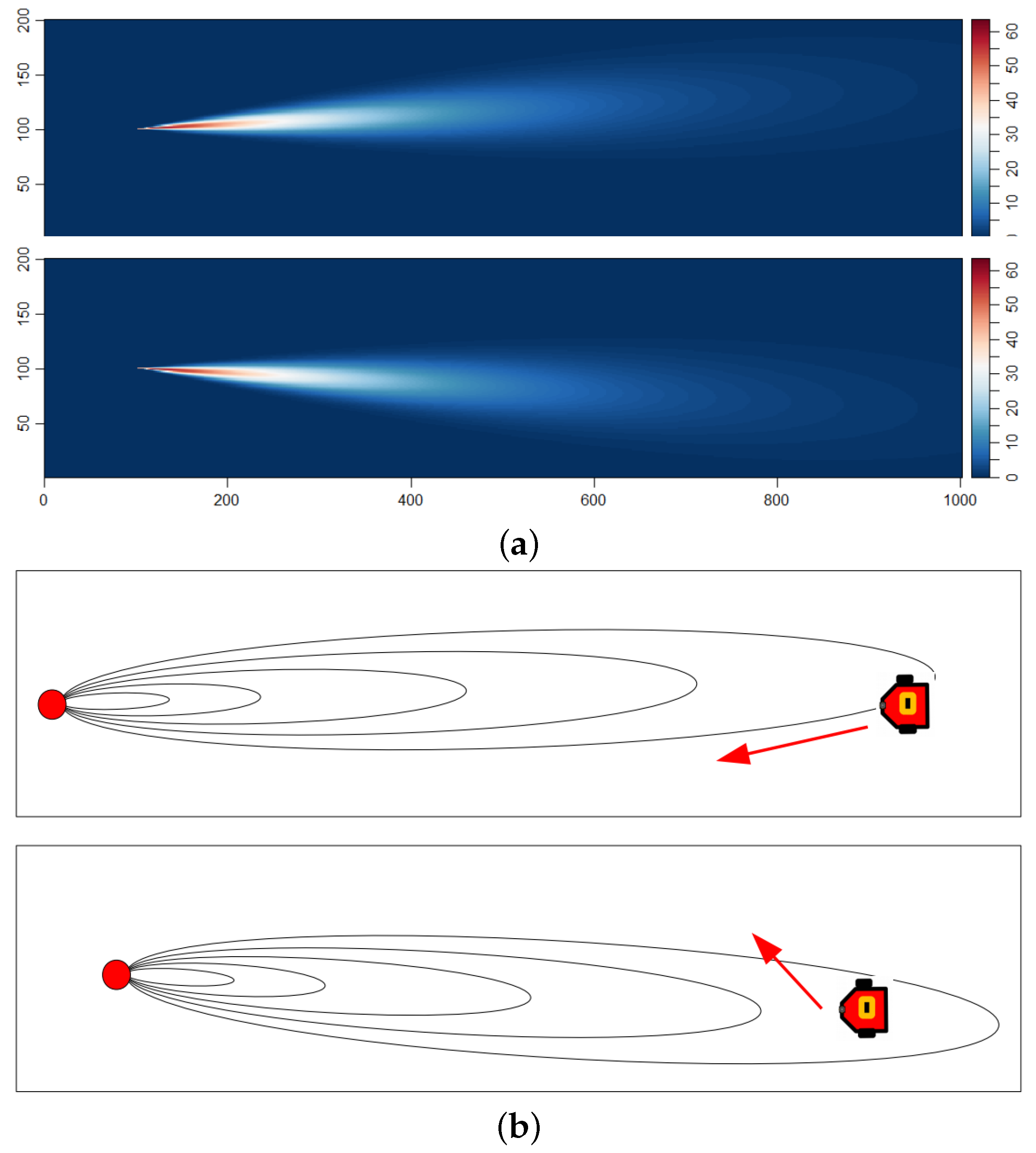

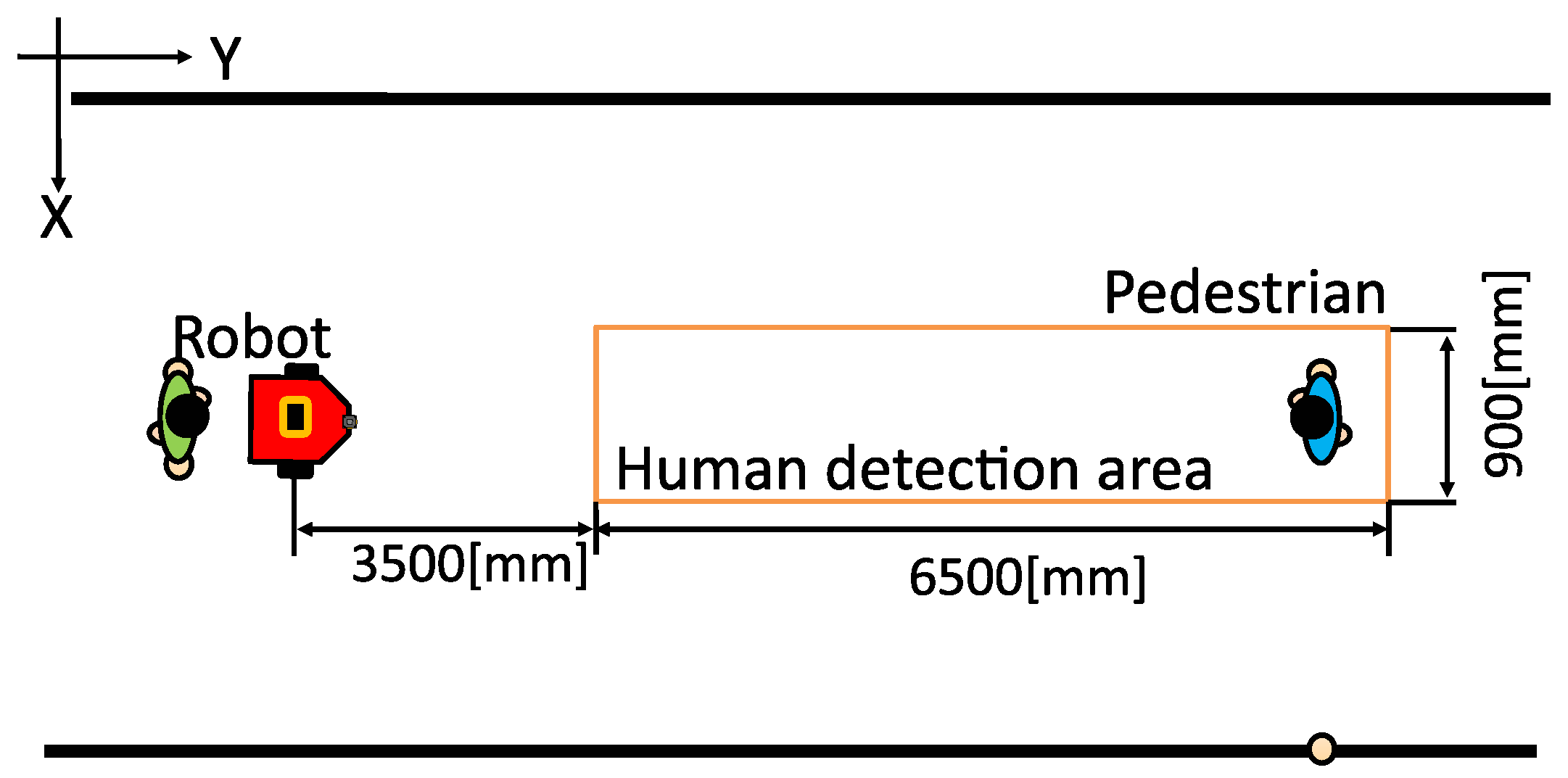

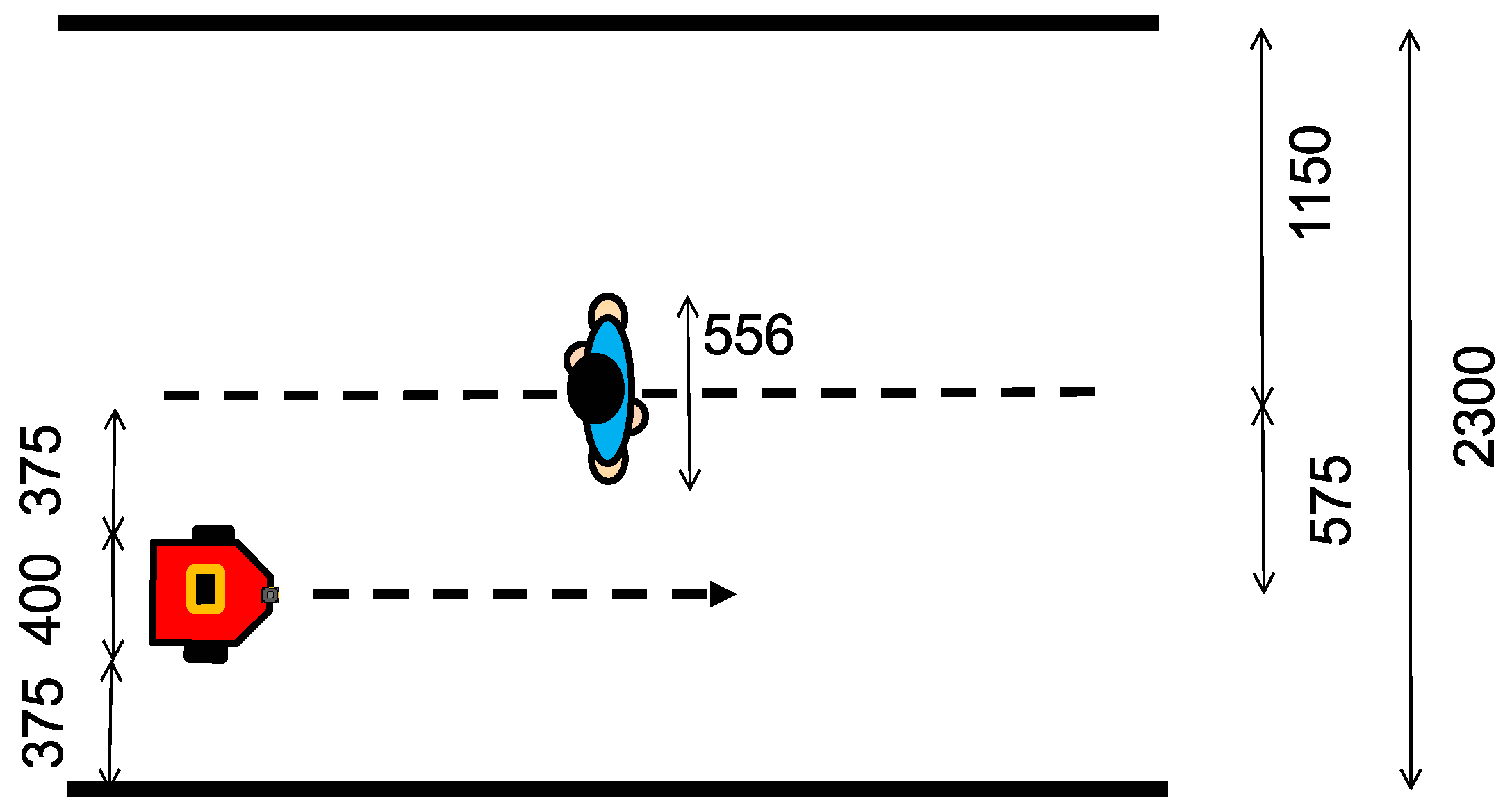

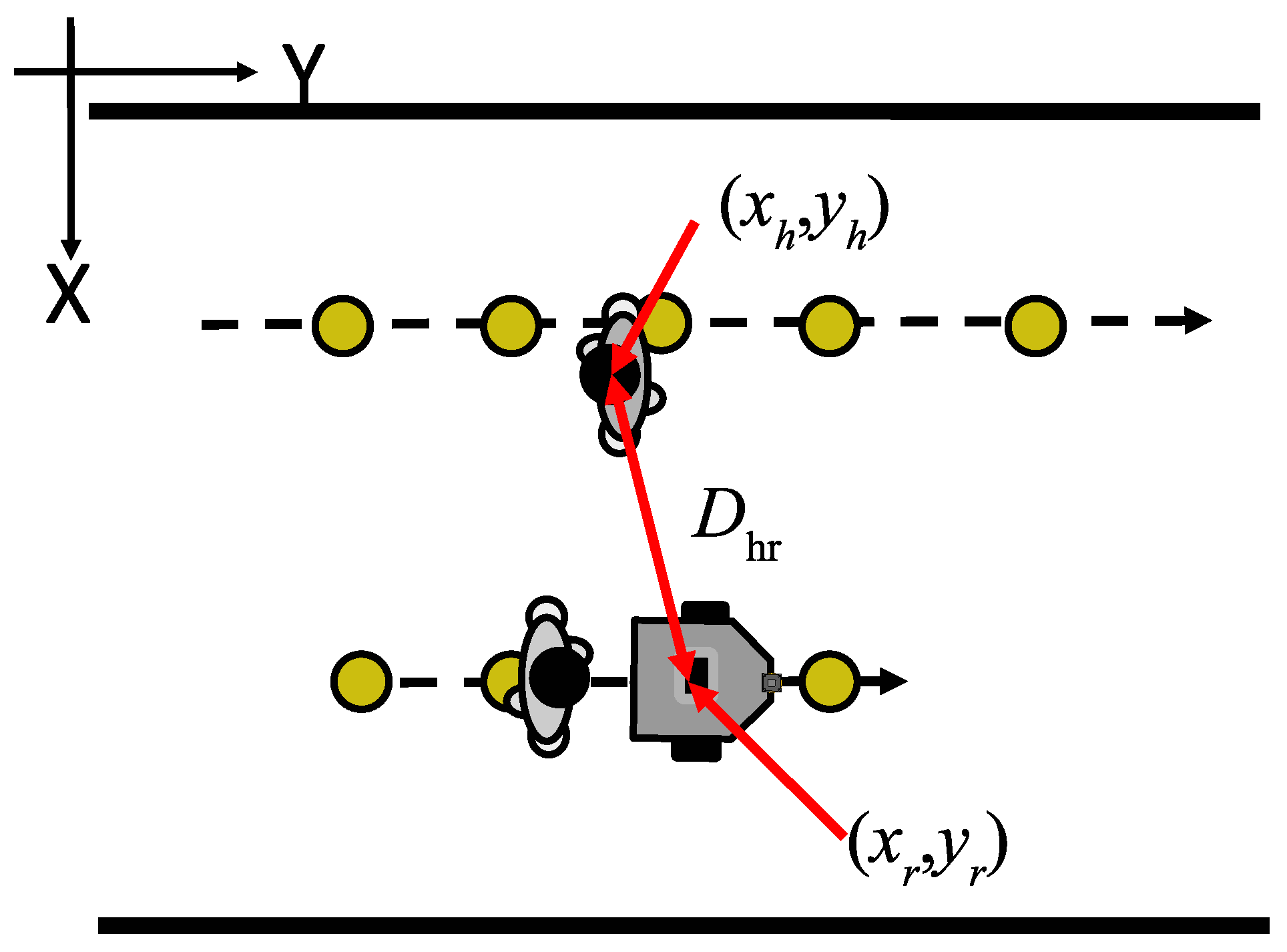

Detection of a Pedestrian

3.3. Determination of the Direction to Move

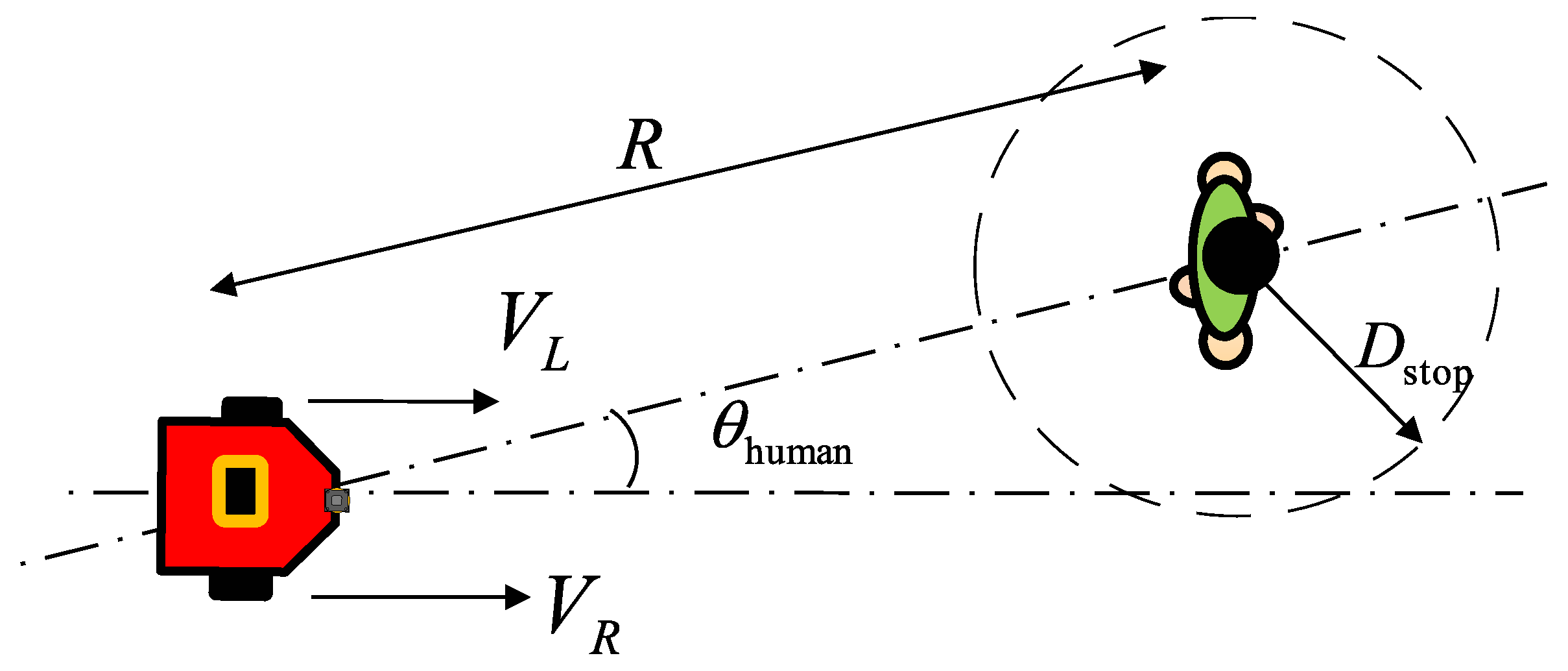

3.3.1. Calculation of the Avoiding Distance

3.3.2. Generation of the Avoidance Path

3.3.3. Detection of Passing by the Pedestrian

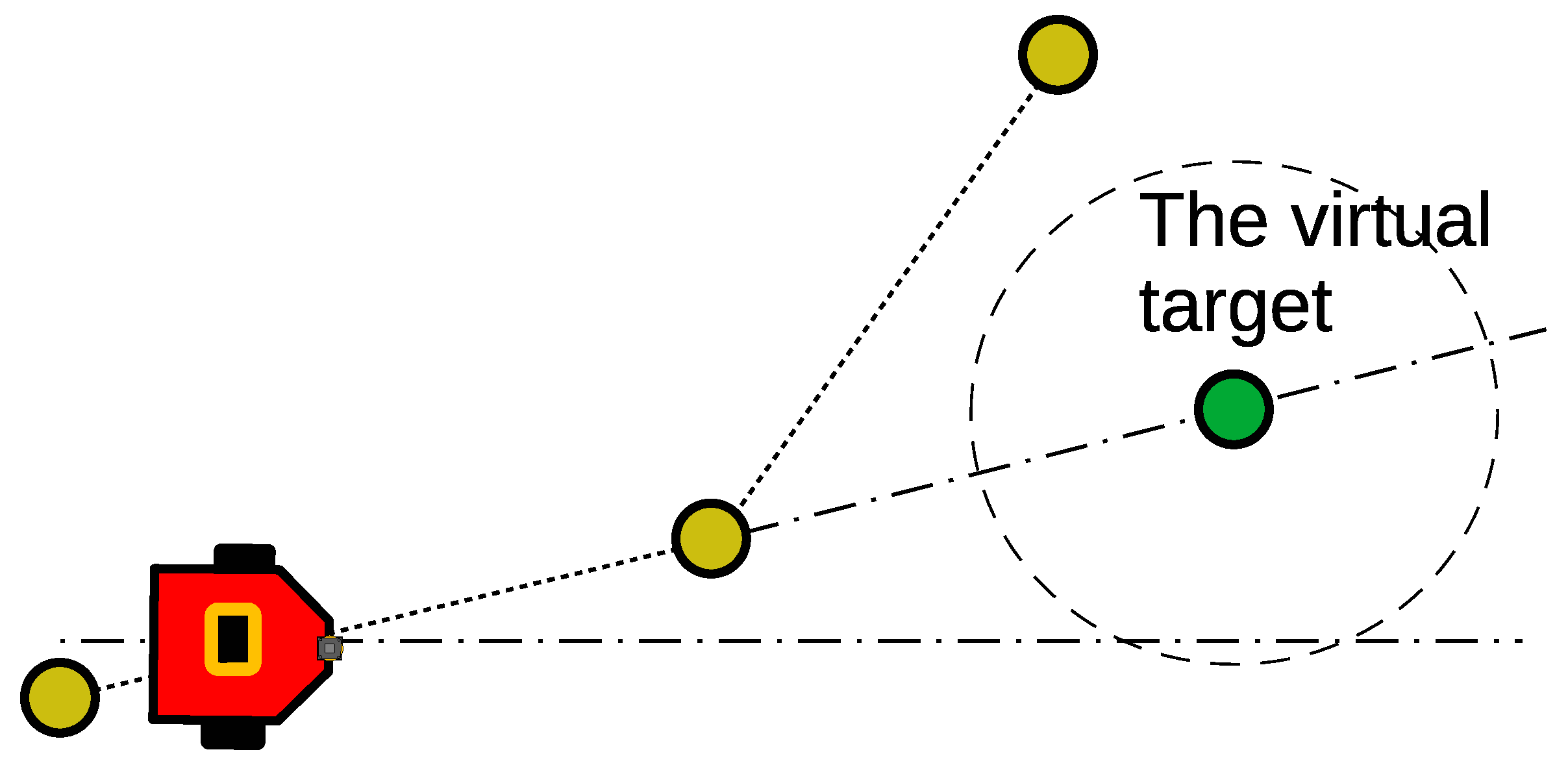

3.3.4. Returning to the Original Path

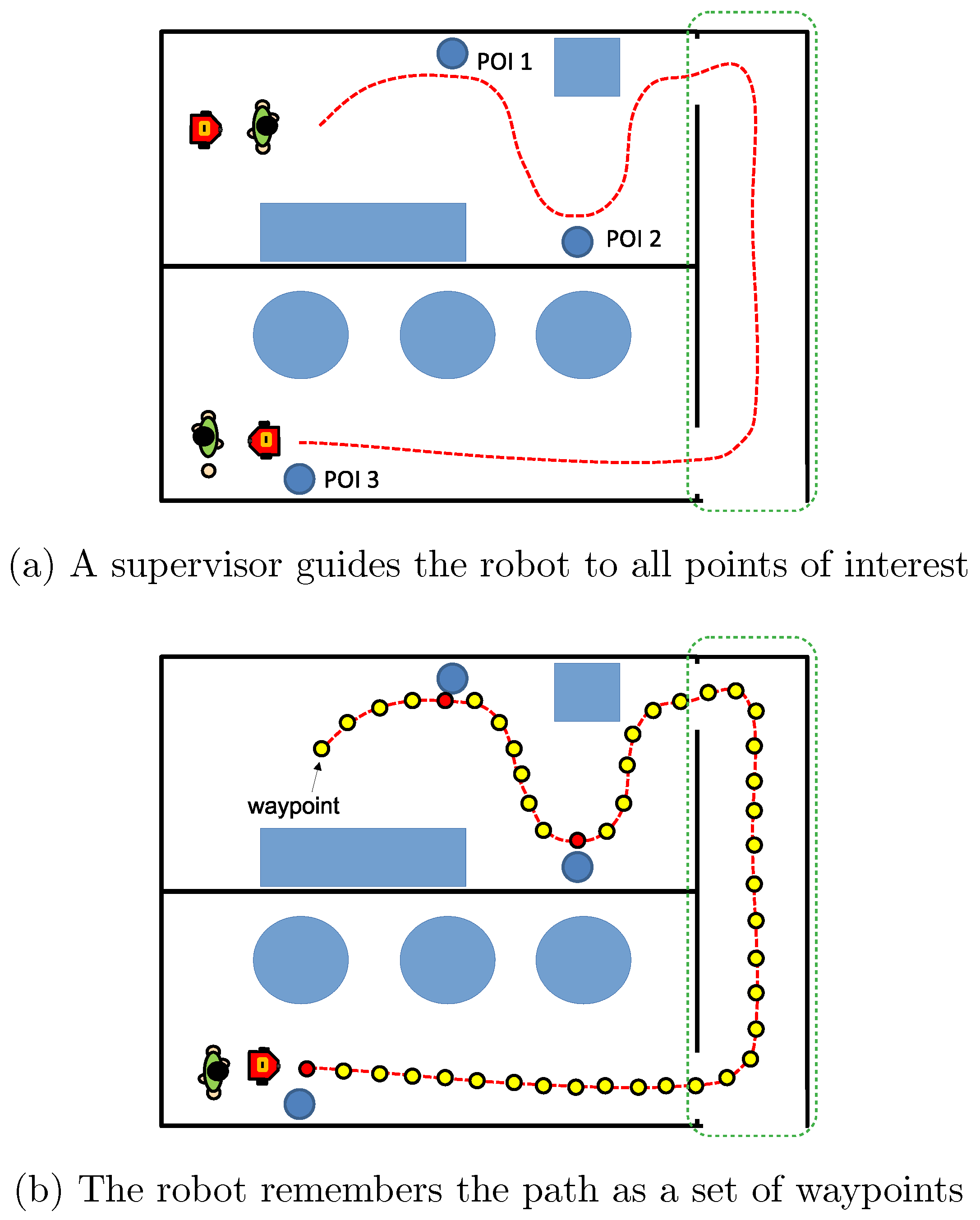

3.4. Following the Waypoints

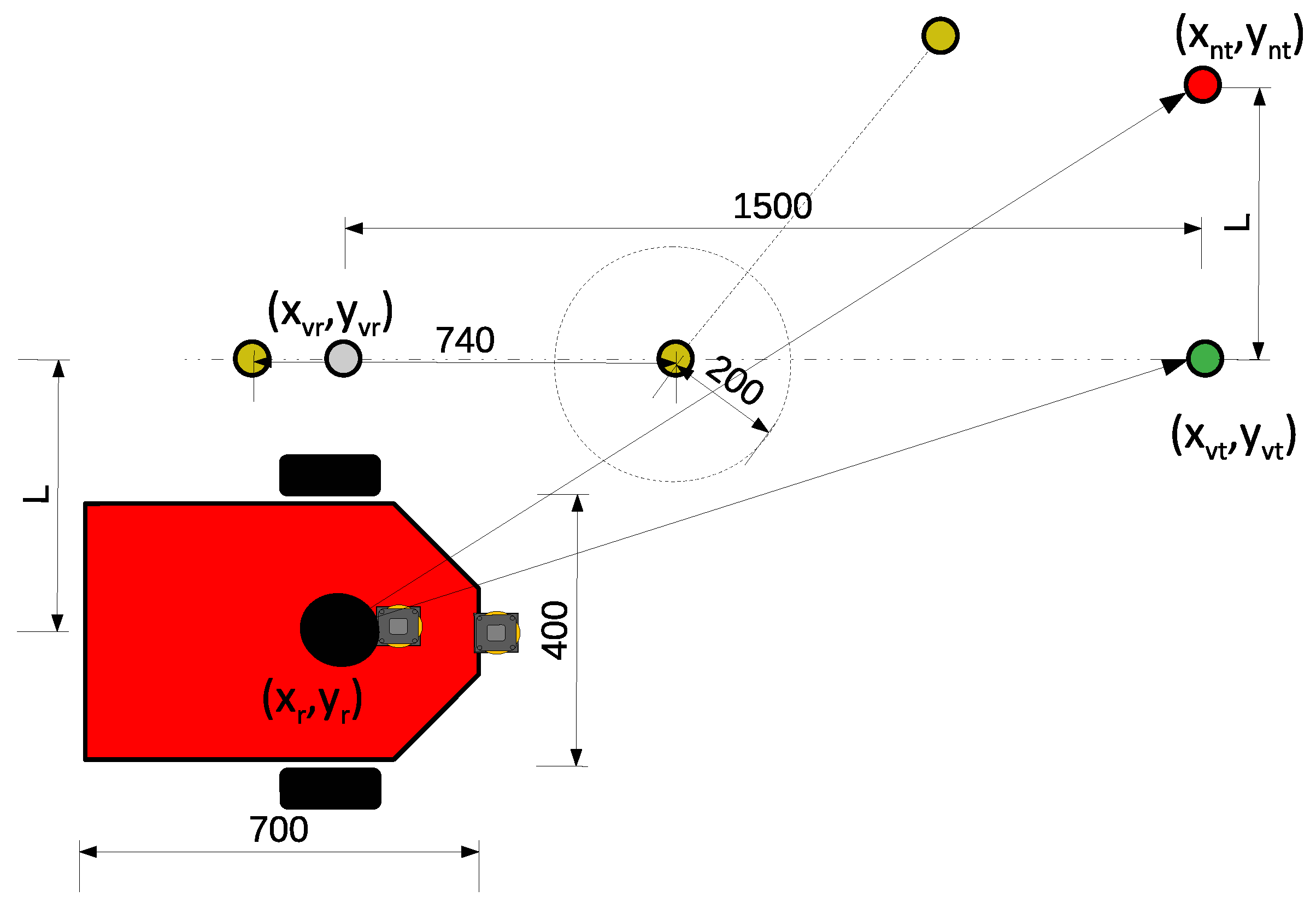

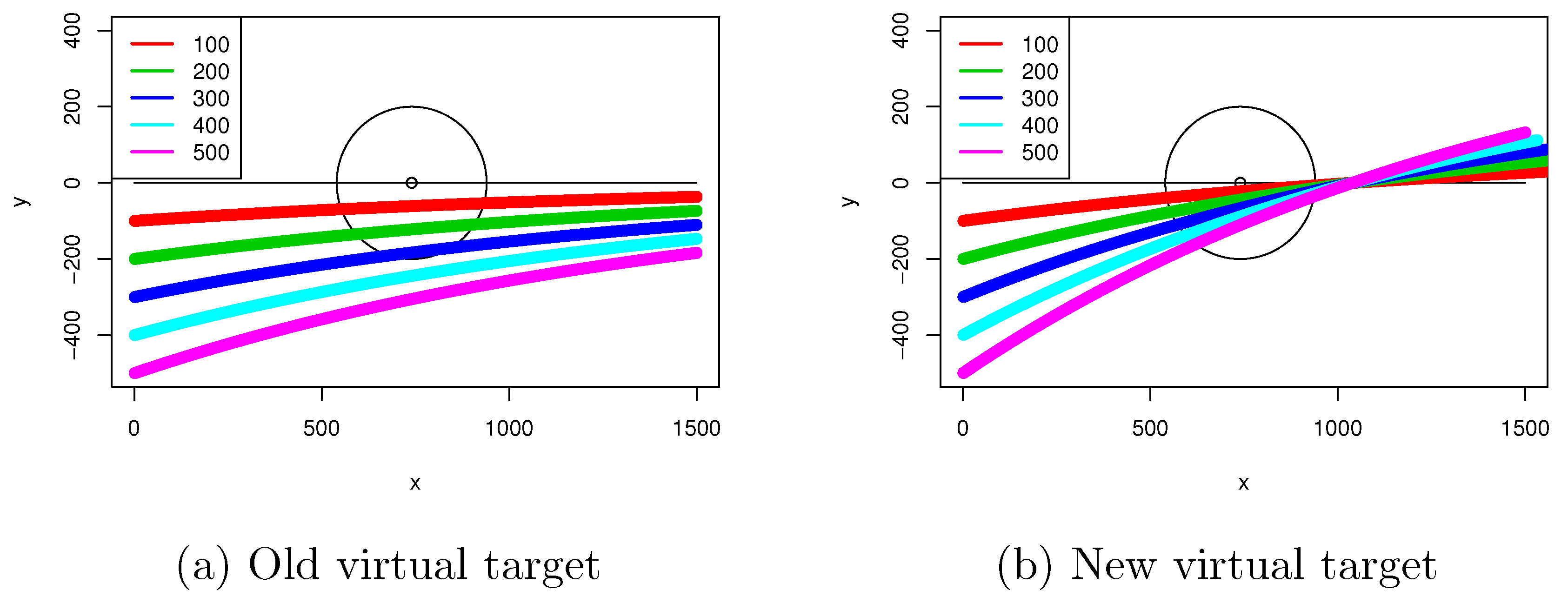

3.4.1. The Human Following Method

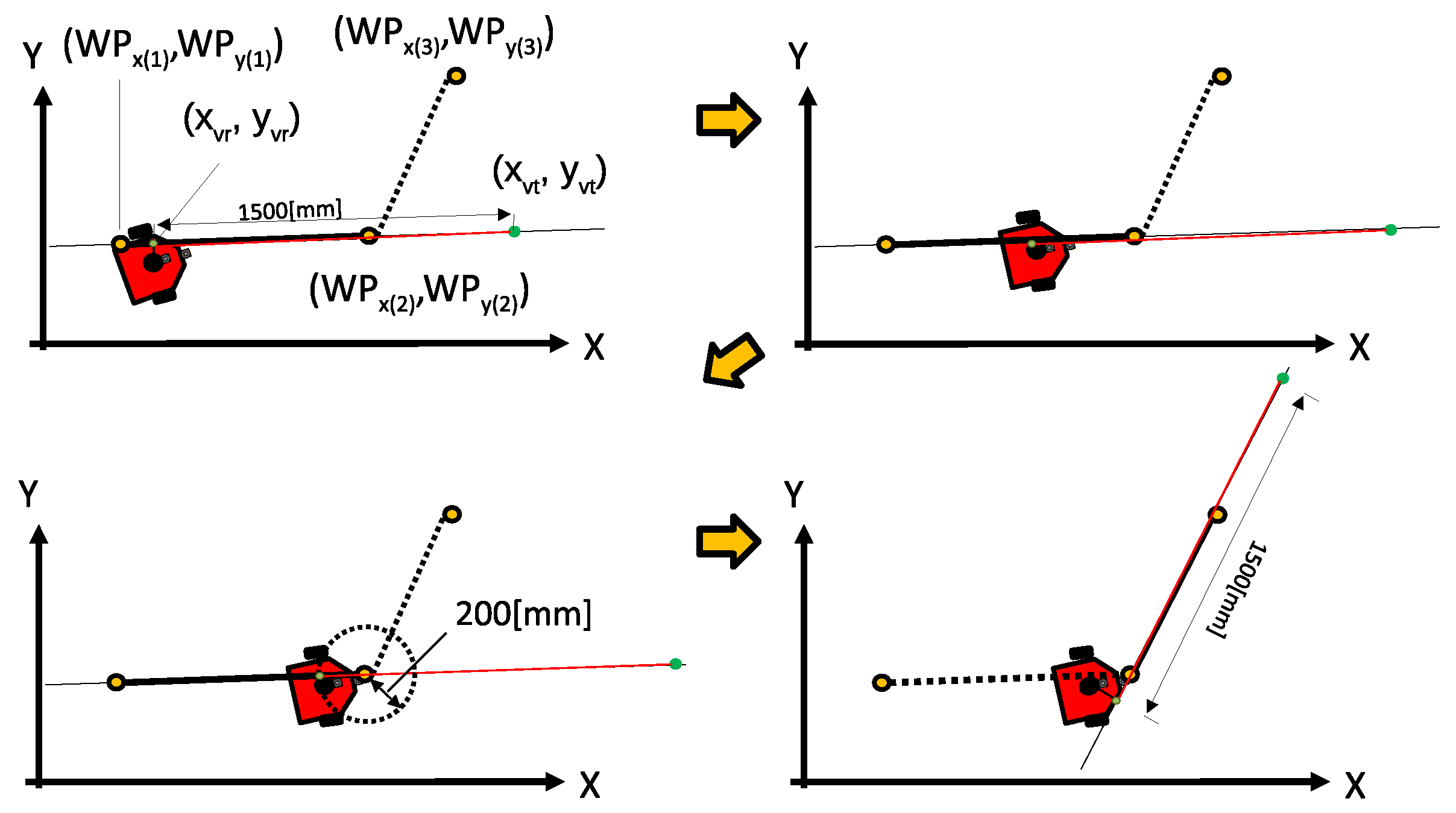

3.4.2. Following the Waypoints

3.4.3. Quick Recovery to the Original Path

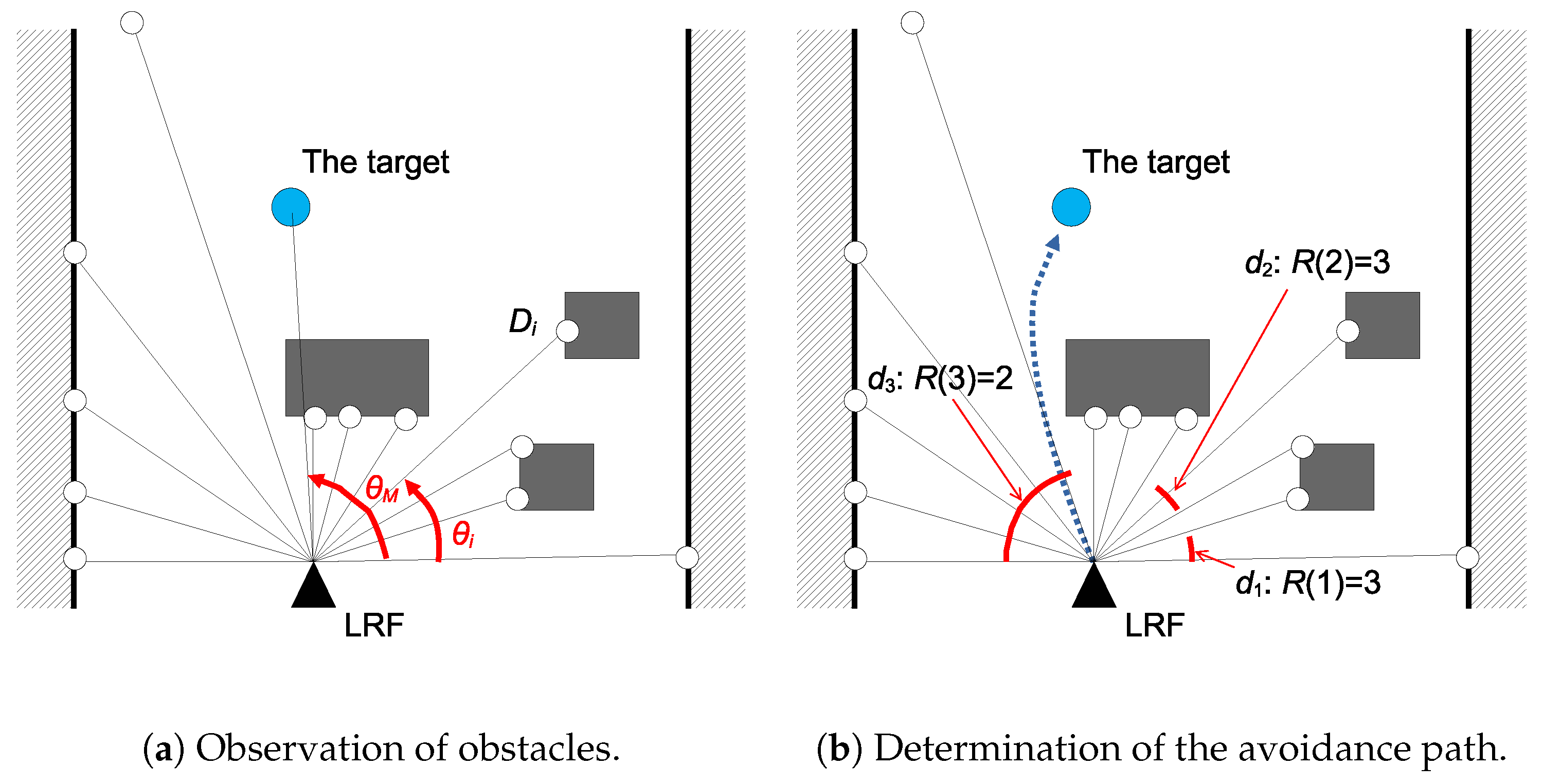

3.5. Combination with Obstacle Avoidance

- The LRF observes the space in front of the robot, and the robot calculates the regions where it would collide with an obstacle.

- For each angle observed by the LRF, take the distance to any object (the target, an obstacle, or the wall) at that angle (Figure 17a). List all regions (contiguous ranges of angles observed by the LRF) through which the robot can pass.

- Judge whether the robot needs to avoid any obstacles, considering the positions of the robot and the target. If the straight path from the LRF to the target is included in any of these regions, then there is no need to avoid an obstacle.

- Otherwise, compare each region with the angle from the LRF toward the target, and rank the regions by this angular difference in ascending order (the smallest difference has the highest rank). This rank indicates how near a region is to the target.

- For each region, compute the average distance to the objects observed within that region.

- Rank the regions by this average distance, in descending order (the largest average distance has the highest rank).

- Combine the two ranks into an overall rank for each region.

- Determine the region with the highest overall rank, and let the robot move toward it (Figure 17b). If there are ties, the region with the best value of a secondary criterion is chosen.
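The region-selection steps above can be illustrated with a minimal Python sketch. It is not the authors' implementation, and several details are assumptions made only for illustration: an angle counts as passable when its range reading exceeds a hypothetical `clearance` threshold (the robot's width is ignored), a region's nearness to the target is the smaller angular offset of its two edges, the two ranks are combined by summation, and ties are broken by the angular rank. The names `Region`, `find_regions`, `rank`, and `select_region` are likewise hypothetical.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional, Sequence


@dataclass
class Region:
    start: int  # index of the first passable angle in the scan
    end: int    # index of the last passable angle (inclusive)


def find_regions(distances: Sequence[float], clearance: float) -> List[Region]:
    """Group contiguous scan indices whose reading exceeds `clearance` (assumed test)."""
    regions: List[Region] = []
    start: Optional[int] = None
    for i, d in enumerate(distances):
        if d > clearance:
            if start is None:
                start = i
        elif start is not None:
            regions.append(Region(start, i - 1))
            start = None
    if start is not None:
        regions.append(Region(start, len(distances) - 1))
    return regions


def rank(values: Sequence[float], descending: bool = False) -> List[int]:
    """Rank 1 is best; equal values share the same rank."""
    ordered = sorted(values, reverse=descending)
    return [ordered.index(v) + 1 for v in values]


def select_region(
    angles: Sequence[float],
    distances: Sequence[float],
    target_angle: float,
    clearance: float,
) -> Optional[Region]:
    """Return the region to steer toward, or None when the direct path is free.

    `angles` must be sorted in ascending order and aligned with `distances`.
    """
    regions = find_regions(distances, clearance)
    if not regions:
        raise ValueError("no passable region in this scan")

    offsets = []
    for r in regions:
        lo, hi = angles[r.start], angles[r.end]
        if lo <= target_angle <= hi:
            return None  # target direction lies inside a free region
        # Assumed nearness measure: offset of the closer region edge.
        offsets.append(min(abs(lo - target_angle), abs(hi - target_angle)))

    mean_dist = [mean(distances[r.start:r.end + 1]) for r in regions]
    angle_rank = rank(offsets)                    # smaller offset is better
    dist_rank = rank(mean_dist, descending=True)  # larger mean distance is better

    # Assumed combination: sum of ranks, ties broken by the angular rank.
    best = min(
        range(len(regions)),
        key=lambda k: (angle_rank[k] + dist_rank[k], angle_rank[k]),
    )
    return regions[best]


if __name__ == "__main__":
    scan_angles = [-40, -30, -20, -10, 0, 10, 20, 30, 40]    # degrees
    scan_ranges = [3.0, 3.0, 0.5, 0.5, 0.5, 0.5, 2.0, 2.0, 2.0]  # metres
    print(select_region(scan_angles, scan_ranges, target_angle=0, clearance=1.0))
```

In the example scan, the left region is farther from the target direction but more open, while the right region is nearer but less open, so the summed ranks tie and the assumed tie-break prefers the region nearer to the target.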

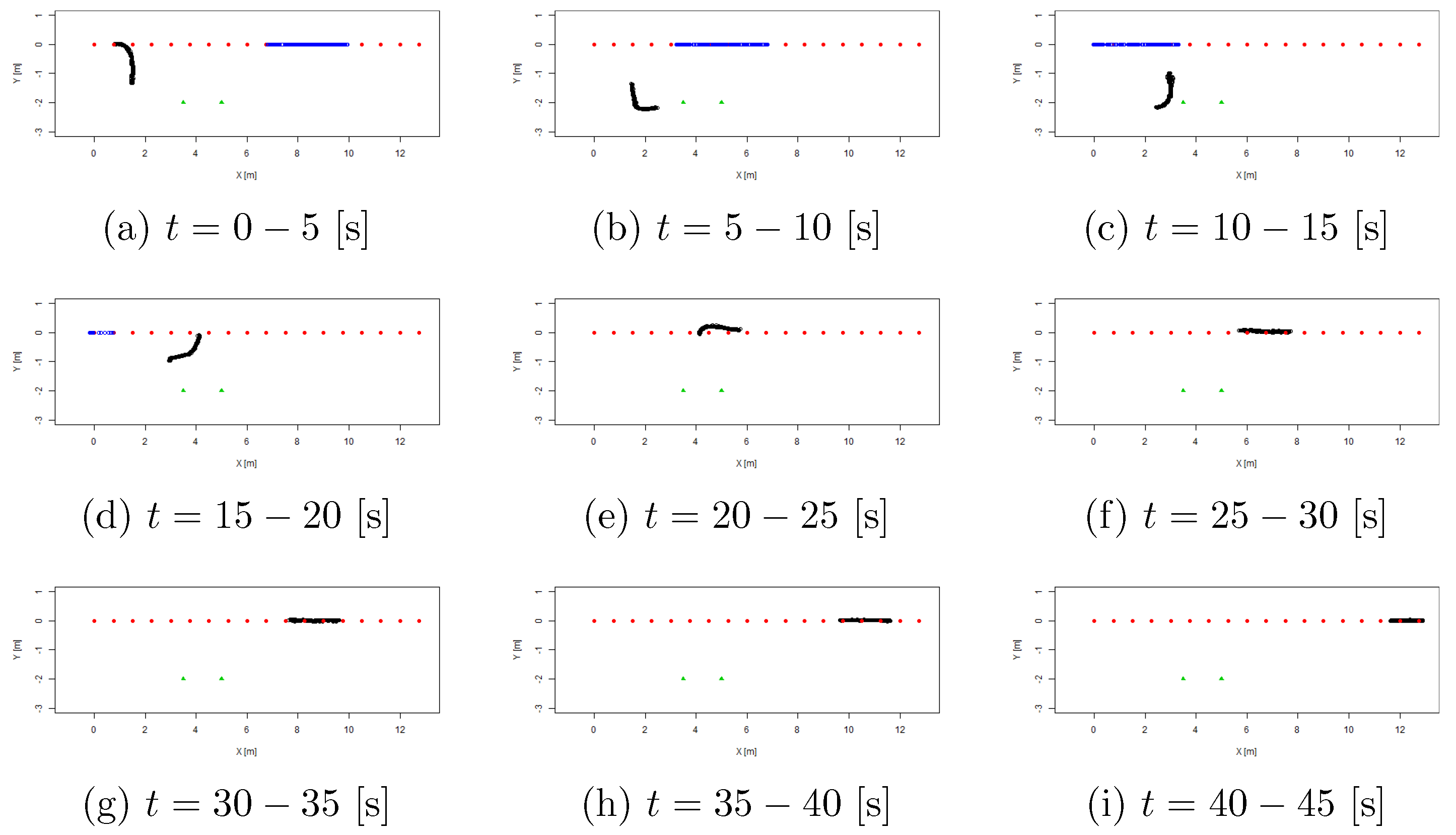

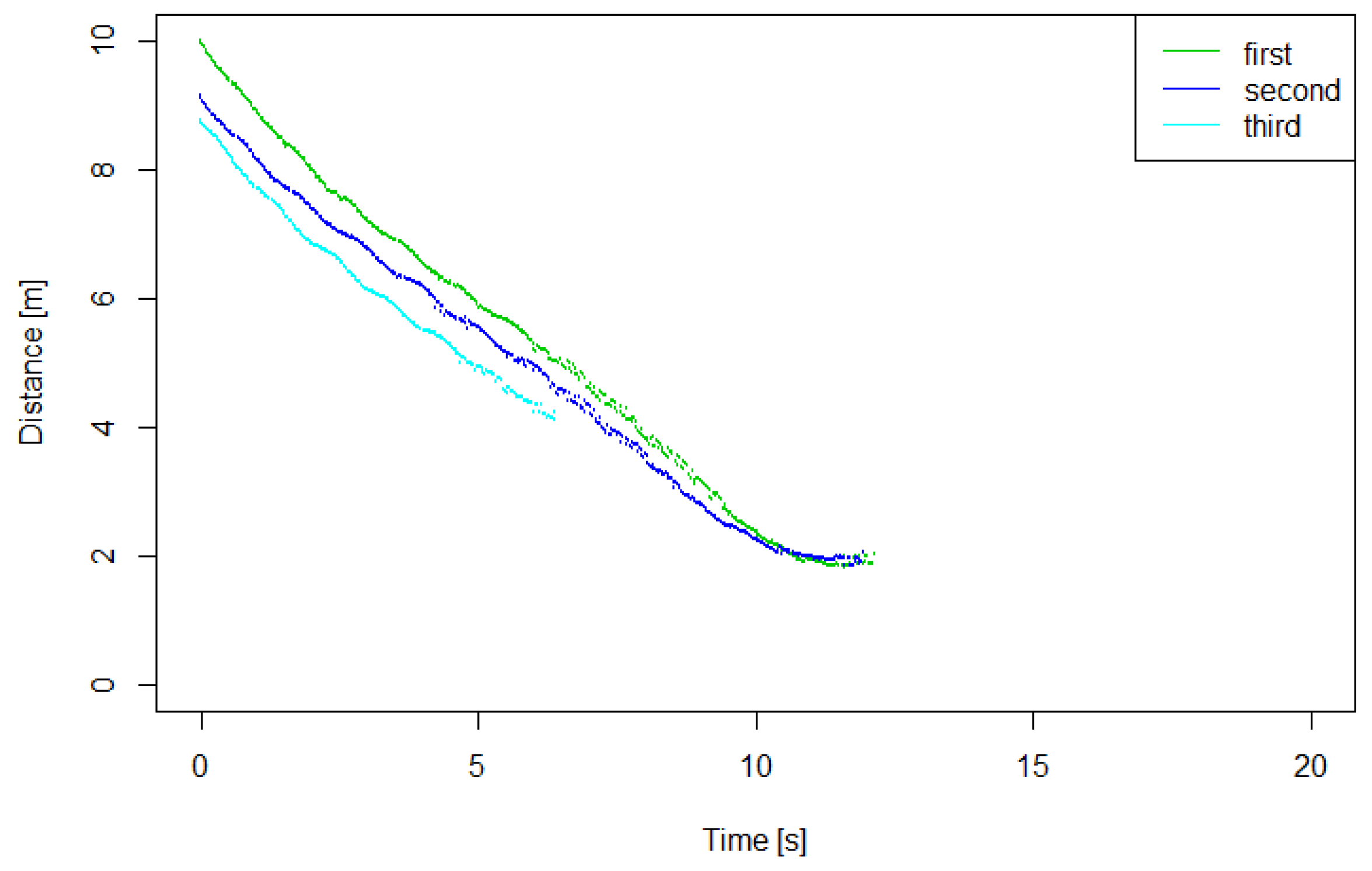

4. Experiment

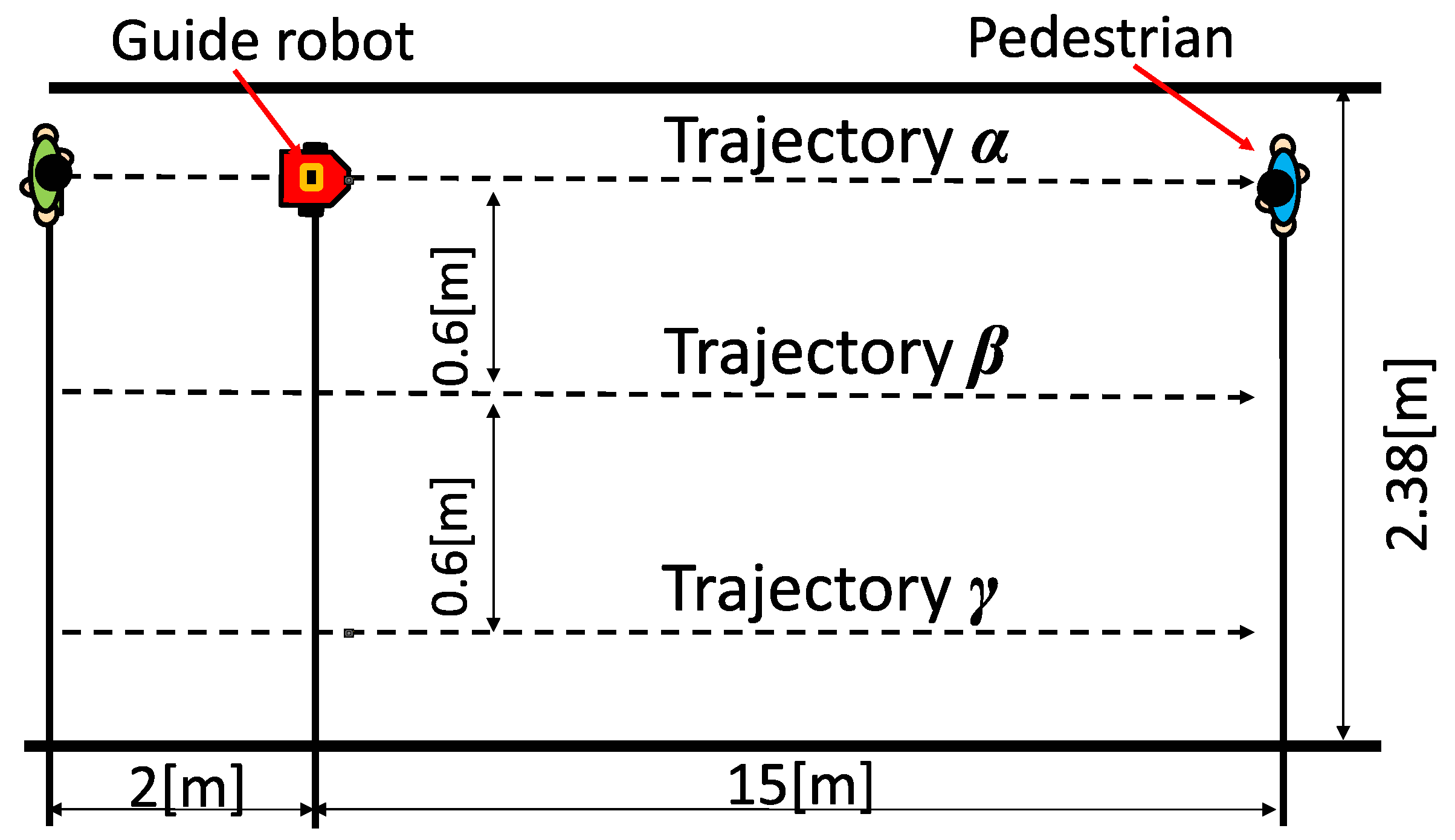

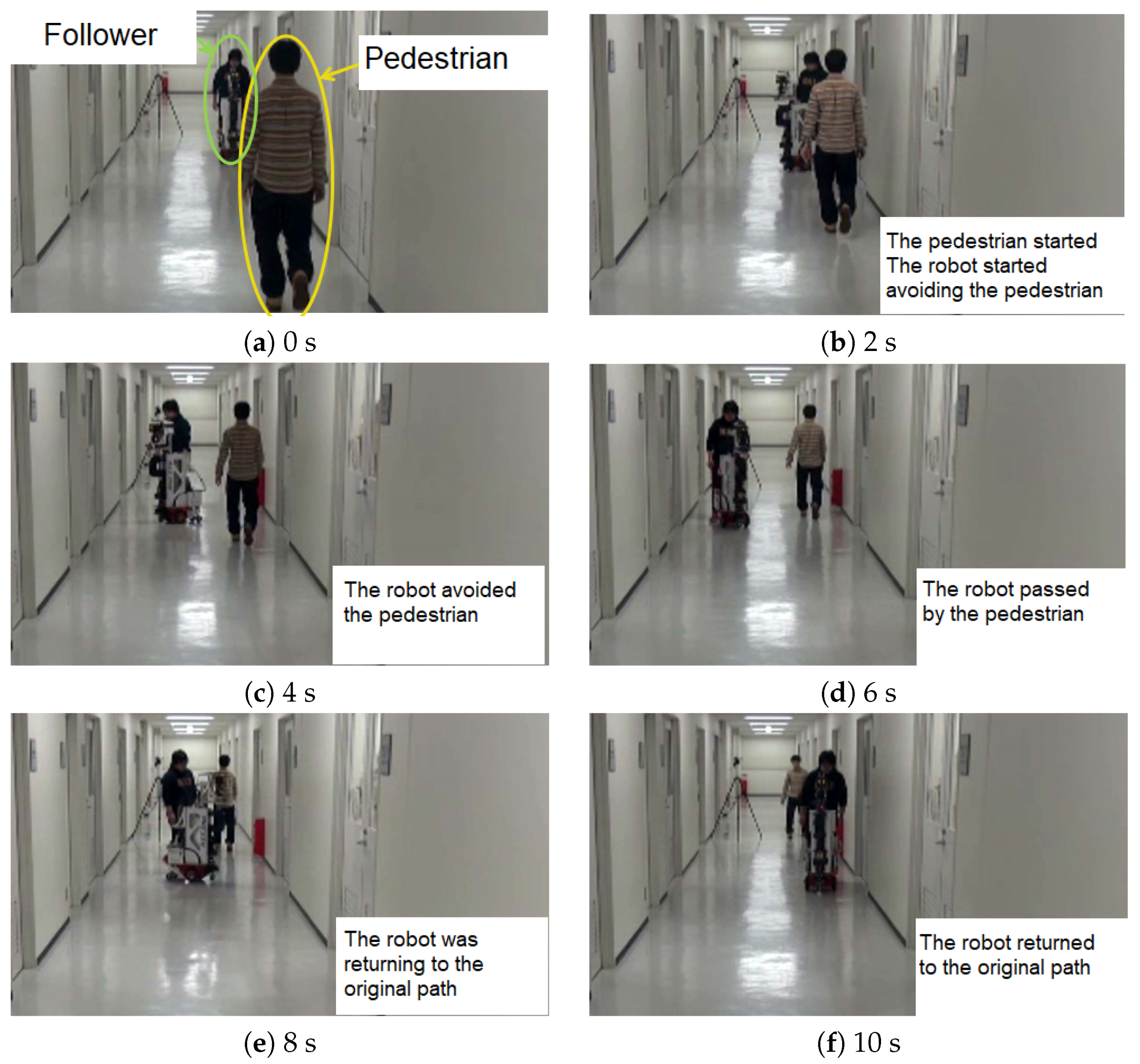

4.1. Overview and Conditions of the Experiment

- The robot detects an oncoming pedestrian walking towards it;

- The robot tracks the detected pedestrian;

- The robot determines the area for moving to avoid the pedestrian;

- The robot returns to the original path after passing by the pedestrian; and

- The robot moves in front of the person to be guided, keeping a proper distance.

4.2. Experimental Results

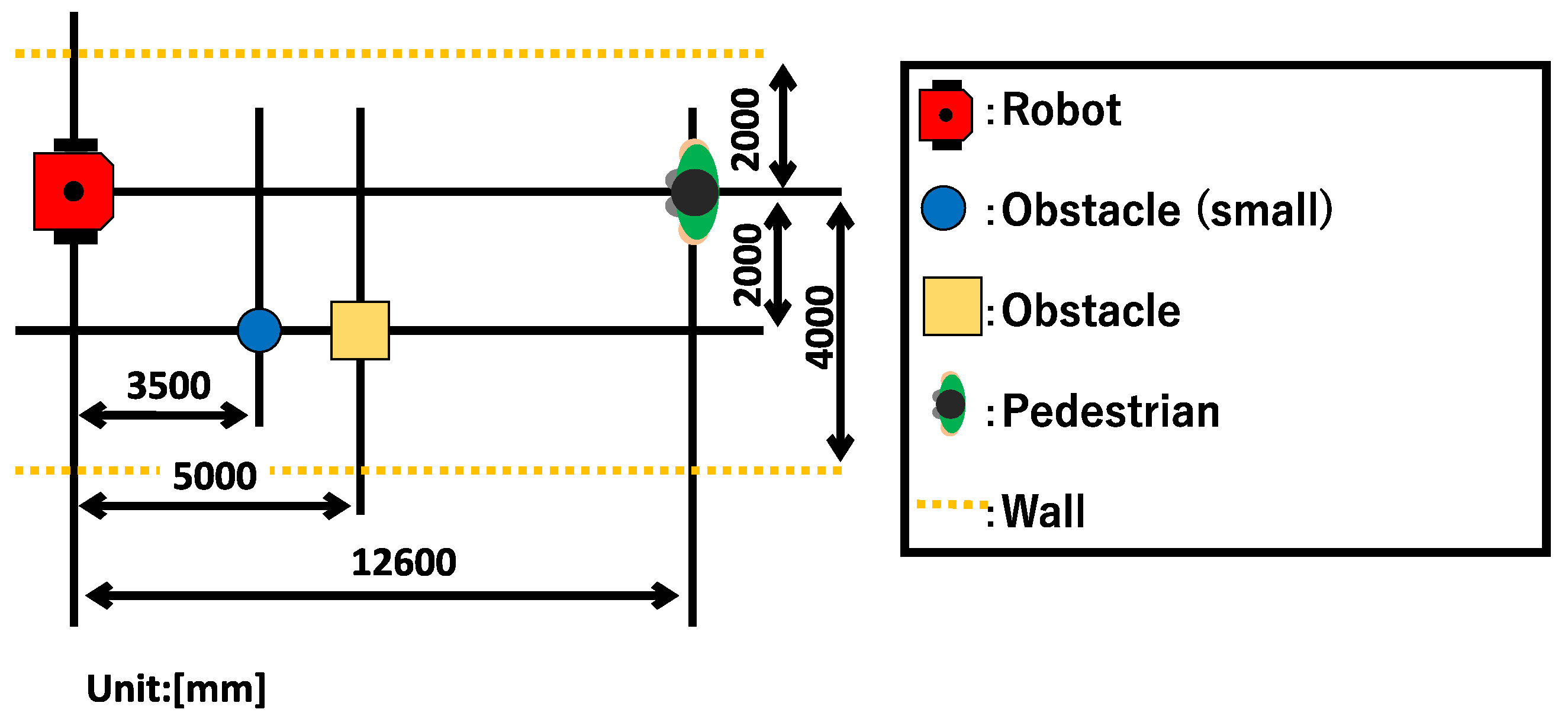

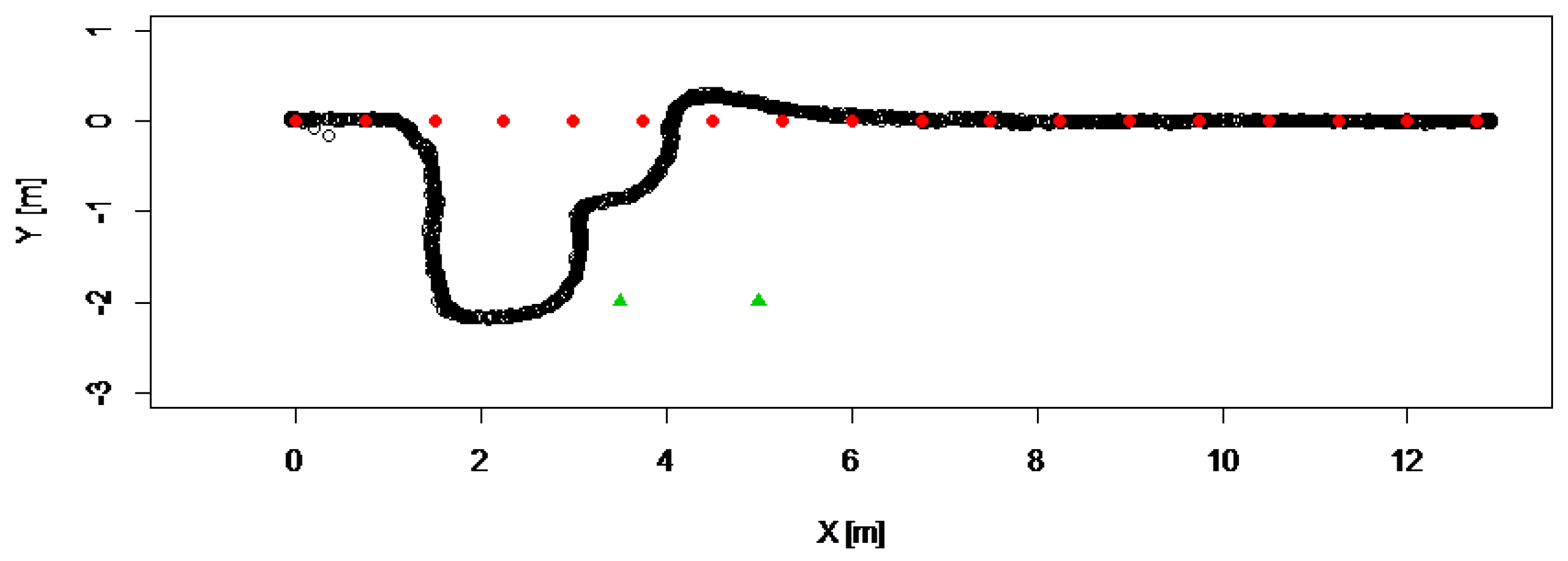

4.3. The Application Experiment

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Hiroi, Y.; Ito, A. A Pedestrian Avoidance Method Considering Personal Space for a Guide Robot. Robotics 2019, 8, 97. https://doi.org/10.3390/robotics8040097
