“Hmm, Did You Hear What I Just Said?”: Development of a Re-Engagement System for Socially Interactive Robots

Abstract

1. Introduction and Background

2. System Development

2.1. Design Principles

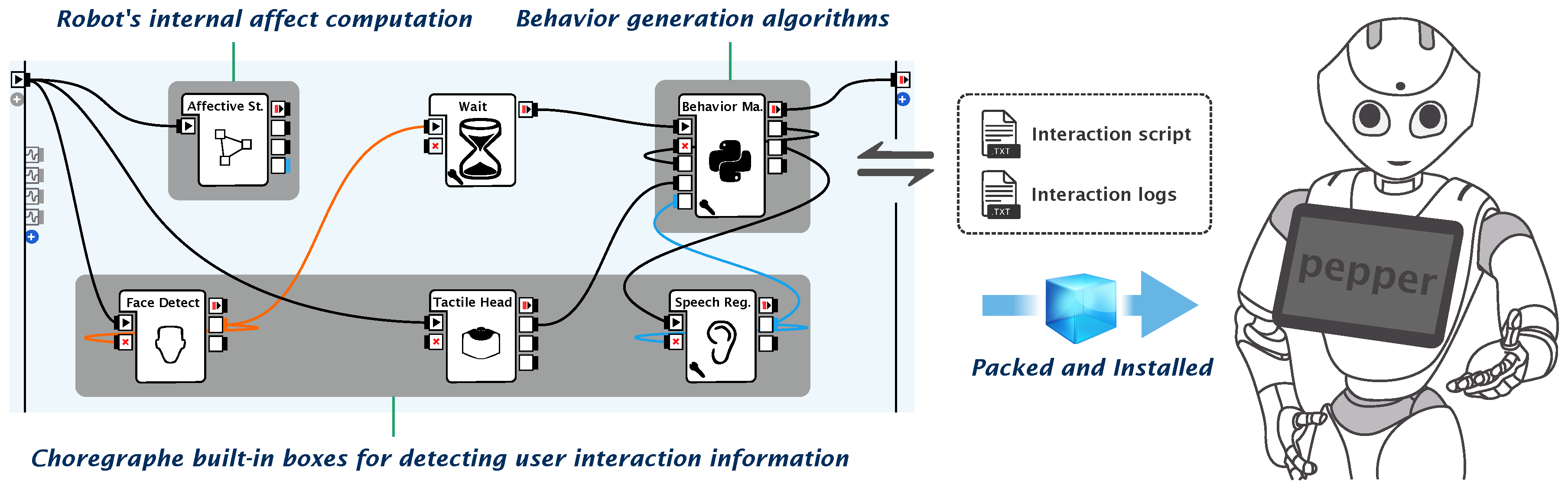

2.2. System Architecture

2.3. System Architecture Components

2.3.1. Internal Affective System

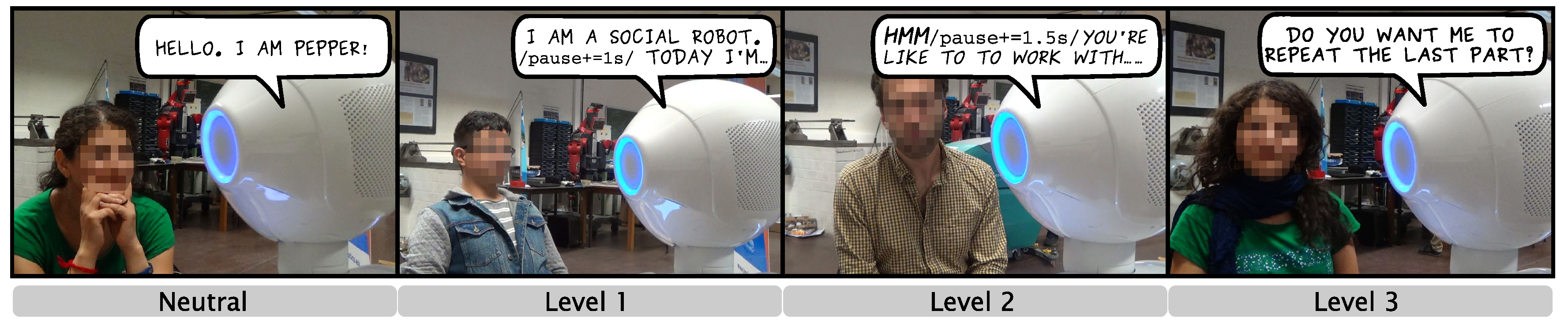

2.3.2. Behavior Generation System

- The deliberation layer generates task-based behaviors. When the user is engaged with the interaction, this layer generates behaviors following the interaction script, e.g., a story, a lesson, or guidance. When disengagement occurs, the system attempts to apply three levels of re-engagement strategies. We adopted re-engagement strategies from the human communication literature, as summarized by Richmond et al. [45]. However, since human strategies are highly abstract, previous HRI studies have translated them, often only partially, into programmable rules in different ways (e.g., [46,47,48,49,50]).

- The reflection layer evaluates the behaviors decided by the lower layers and may change the behavior plan if they are found to be inappropriate, e.g., ethically unacceptable. Because of its complexity and the scope of this system development, this layer is not implemented.
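The switching behavior of the deliberation layer described above can be illustrated with a minimal sketch. This is not the authors' Algorithm 1: the attention threshold, the class and method names, and the assumption that the three strategy levels are applied in escalating order while the user stays disengaged are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical values: the paper states that actual parameters depend on
# the robot platform and sensors, so these numbers are placeholders.
ATTENTION_THRESHOLD = 0.5  # assumed cut-off on a 0..1 attention estimate
MAX_LEVEL = 3              # the text mentions three strategy levels


@dataclass
class DeliberationLayer:
    """Toy deliberation layer: follow the script or try to re-engage."""

    script: List[str]
    step: int = 0
    level: int = 0  # 0 = user engaged, 1..3 = current re-engagement level

    def next_behavior(self, attention: float) -> str:
        if attention >= ATTENTION_THRESHOLD:
            # User is engaged: reset escalation and continue the script.
            self.level = 0
            if self.step < len(self.script):
                behavior = f"script:{self.script[self.step]}"
                self.step += 1
                return behavior
            return "script:end"
        # User is disengaged: escalate one level, capped at MAX_LEVEL
        # (escalation order is an assumption, not taken from the paper).
        self.level = min(self.level + 1, MAX_LEVEL)
        return f"re-engage:level-{self.level}"
```

For example, `DeliberationLayer(["intro", "part1"])` returns `"script:intro"` for a high attention value, then steps through `"re-engage:level-1"` to `"re-engage:level-3"` while attention stays low, and resumes the script once attention recovers.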

Algorithm 1: Behavior generation mechanism in the deliberation layer. Specific numerical values of parameters (e.g., attention levels, movement speed, and speech) are chosen based on the implemented robot platform and sensors.

2.4. System Implementation

2.4.1. Compact Implementation

2.4.2. System Parameters

3. System Usability Test

3.1. Users

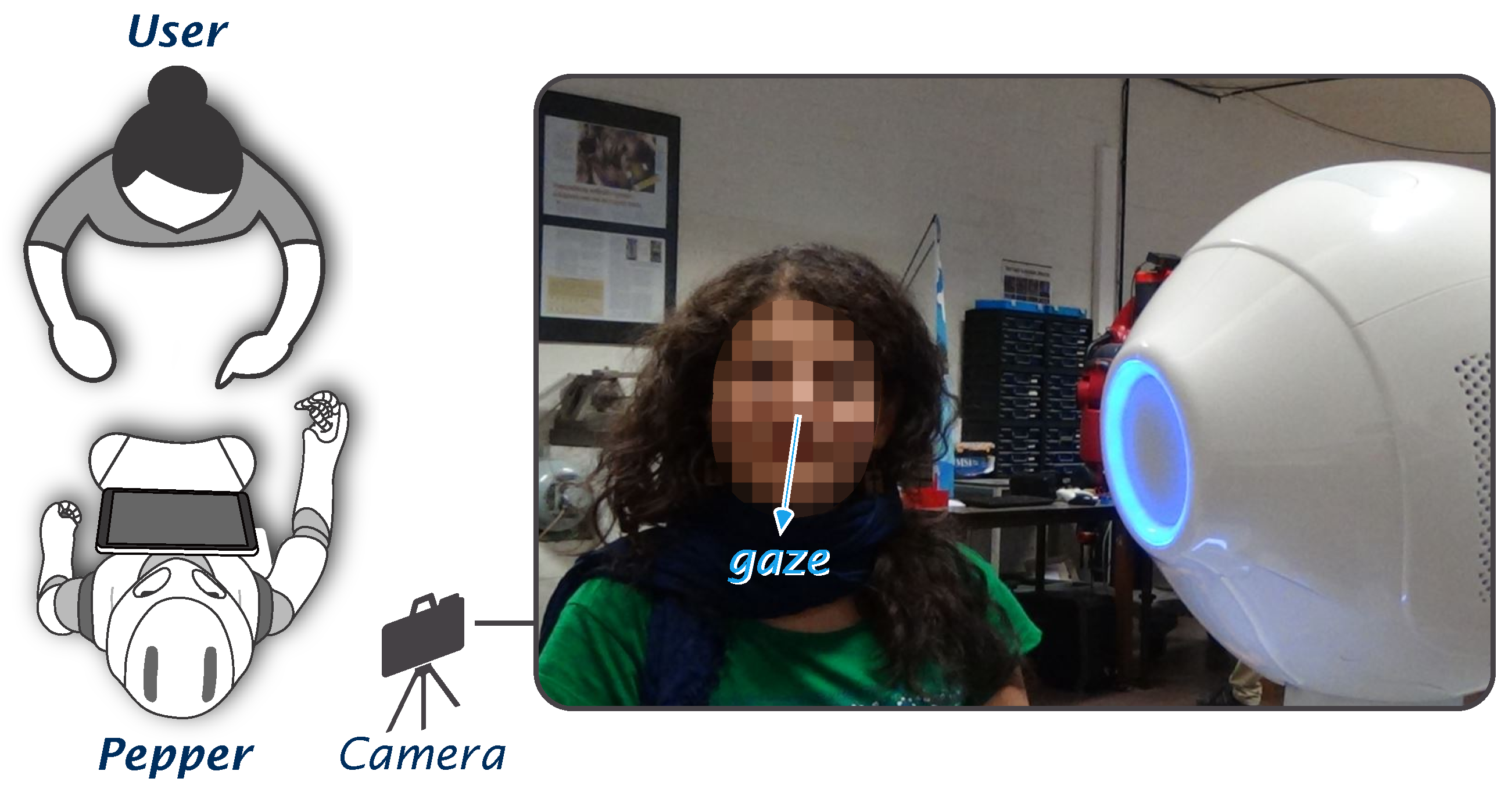

3.2. Usability Testing Design

3.3. Interaction Procedure

3.4. Open Questions and Quantitative Measurements

3.5. Results and Discussion

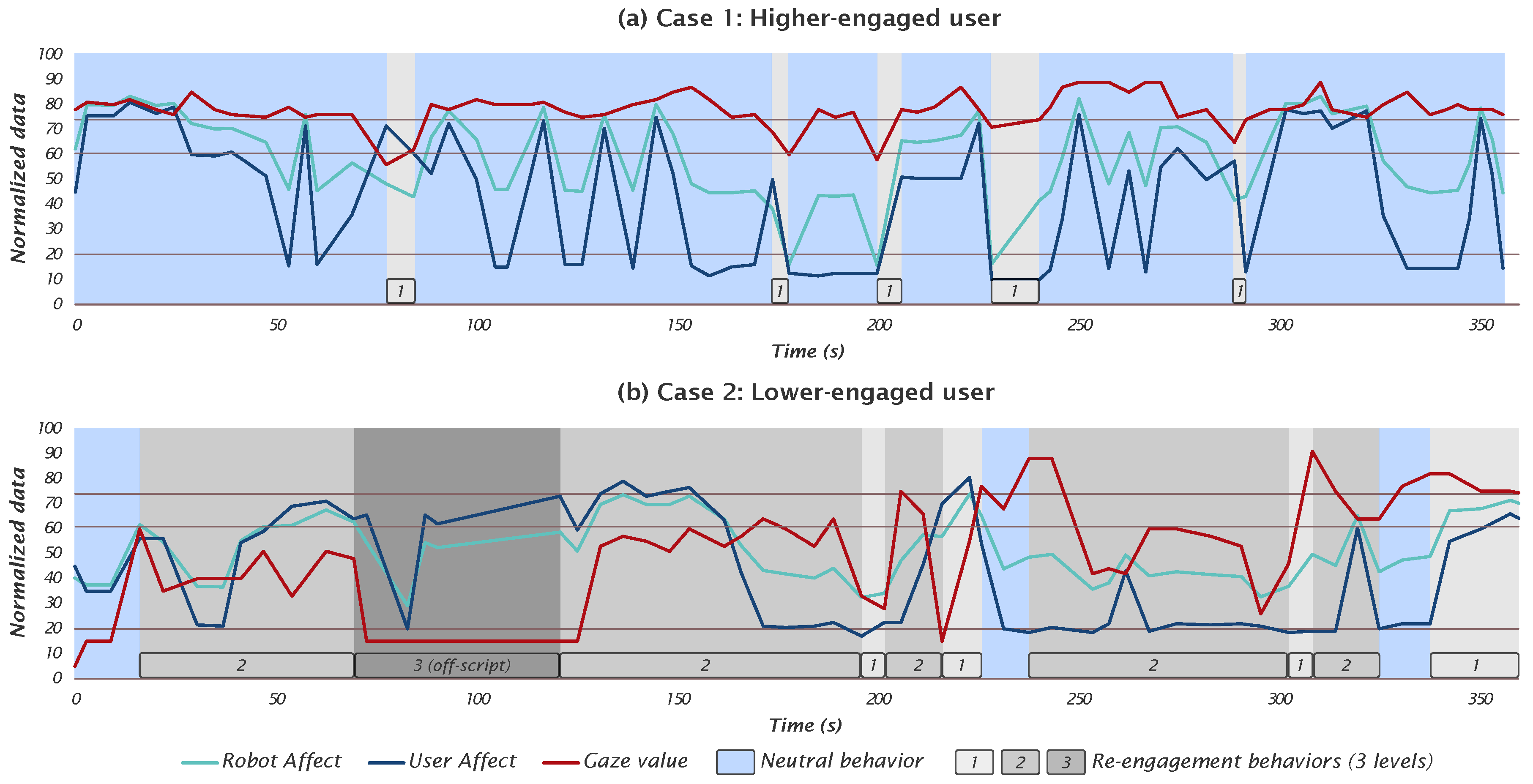

3.5.1. Technical Performance of the Re-Engagement System

3.5.2. Answers to Open Questions

3.5.3. Quantitative Assessment: User’s Engagement, Performance, and Perception

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Fong, T.; Nourbakhsh, I.; Dautenhahn, K. A survey of socially interactive robots. Robot. Auton. Syst. 2003, 42, 143–166.

- McColl, D.; Nejat, G. Recognizing emotional body language displayed by a human-like social robot. Int. J. Soc. Robot. 2014, 6, 261–280.

- Belpaeme, T.; Kennedy, J.; Baxter, P.; Vogt, P.; Krahmer, E.E.; Kopp, S.; Bergmann, K.; Leseman, P.; Küntay, A.C.; Göksun, T.; et al. L2TOR-second language tutoring using social robots. In Proceedings of the ICSR 2015 WONDER Workshop, Paris, France, 26–30 October 2015.

- Vogt, P.; De Haas, M.; De Jong, C.; Baxter, P.; Krahmer, E. Child-robot interactions for second language tutoring to preschool children. Front. Hum. Neurosci. 2017, 11, 73.

- Esteban, P.G.; Baxter, P.; Belpaeme, T.; Billing, E.; Cai, H.; Cao, H.L.; Coeckelbergh, M.; Costescu, C.; David, D.; De Beir, A.; et al. How to build a supervised autonomous system for robot-enhanced therapy for children with autism spectrum disorder. Paladyn J. Behav. Robot. 2017, 8, 18–38.

- Belpaeme, T.; Baxter, P.E.; Read, R.; Wood, R.; Cuayáhuitl, H.; Kiefer, B.; Racioppa, S.; Kruijff-Korbayová, I.; Athanasopoulos, G.; Enescu, V.; et al. Multimodal child-robot interaction: Building social bonds. J. Hum. Robot. Interact. 2012, 1, 33–53.

- Cao, H.L.; Esteban, P.G.; Bartlett, M.; Baxter, P.; Belpaeme, T.; Billing, E.; Cai, H.; Coeckelbergh, M.; Costescu, C.; David, D.; et al. Robot-enhanced therapy: Development and validation of a supervised autonomous robotic system for autism spectrum disorders therapy. IEEE Robot. Autom. Mag. 2019, 26, 49–58.

- Loza-Matovelle, D.; Verdugo, A.; Zalama, E.; Gómez-García-Bermejo, J. An Architecture for the Integration of Robots and Sensors for the Care of the Elderly in an Ambient Assisted Living Environment. Robotics 2019, 8, 76.

- Palacín, J.; Clotet, E.; Martínez, D.; Martínez, D.; Moreno, J. Extending the Application of an Assistant Personal Robot as a Walk-Helper Tool. Robotics 2019, 8, 27.

- Burgard, W.; Cremers, A.B.; Fox, D.; Hähnel, D.; Lakemeyer, G.; Schulz, D.; Steiner, W.; Thrun, S. Experiences with an interactive museum tour-guide robot. Artif. Intell. 1999, 114, 3–55.

- Yamazaki, A.; Yamazaki, K.; Ohyama, T.; Kobayashi, Y.; Kuno, Y. A techno-sociological solution for designing a museum guide robot: Regarding choosing an appropriate visitor. In Proceedings of the 2012 7th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Boston, MA, USA, 5–8 March 2012; pp. 309–316.

- Kidd, C.D.; Breazeal, C. Effect of a robot on user perceptions. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2004), Sendai, Japan, 28 September–2 October 2004; Volume 4, pp. 3559–3564.

- Xu, J. Affective Body Language of Humanoid Robots: Perception and Effects in Human Robot Interaction. Ph.D. Thesis, Delft University of Technology, TU Delft, The Netherlands, 2015.

- Mower, E.; Feil-Seifer, D.J.; Mataric, M.J.; Narayanan, S. Investigating implicit cues for user state estimation in human-robot interaction using physiological measurements. In Proceedings of the 16th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN 2007), Jeju, Korea, 26–29 August 2007; pp. 1125–1130.

- Pitsch, K.; Kuzuoka, H.; Suzuki, Y.; Sussenbach, L.; Luff, P.; Heath, C. “The first five seconds”: Contingent stepwise entry into an interaction as a means to secure sustained engagement in HRI. In Proceedings of the 18th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN 2009), Toyama, Japan, 27 September–2 October 2009; pp. 985–991.

- Ahmad, M.I.; Mubin, O.; Orlando, J. Adaptive social robot for sustaining social engagement during long-term children–robot interaction. Int. J. Hum. Comput. Interact. 2017, 33, 943–962.

- Coninx, A.; Baxter, P.; Oleari, E.; Bellini, S.; Bierman, B.; Henkemans, O.B.; Cañamero, L.; Cosi, P.; Enescu, V.; Espinoza, R.R.; et al. Towards long-term social child-robot interaction: using multi-activity switching to engage young users. J. Hum. Robot. Interact. 2016, 5, 32–67.

- Komatsubara, T.; Shiomi, M.; Kanda, T.; Ishiguro, H.; Hagita, N. Can a social robot help children’s understanding of science in classrooms? In Proceedings of the Second International Conference on Human-Agent Interaction, Tsukuba, Japan, 29–31 October 2014; pp. 83–90.

- Jimenez, F.; Yoshikawa, T.; Furuhashi, T.; Kanoh, M. An emotional expression model for educational-support robots. J. Artif. Intell. Soft Comput. Res. 2015, 5, 51–57.

- Moshkina, L.; Trickett, S.; Trafton, J.G. Social engagement in public places: A tale of one robot. In Proceedings of the 2014 ACM/IEEE international conference on Human-Robot Interaction, Bielefeld, Germany, 3–6 March 2014; pp. 382–389.

- Ivaldi, S.; Lefort, S.; Peters, J.; Chetouani, M.; Provasi, J.; Zibetti, E. Towards engagement models that consider individual factors in HRI: On the relation of extroversion and negative attitude towards robots to gaze and speech during a human–robot assembly task. Int. J. Soc. Robot. 2017, 9, 63–86.

- Kuno, Y.; Sadazuka, K.; Kawashima, M.; Yamazaki, K.; Yamazaki, A.; Kuzuoka, H. Museum guide robot based on sociological interaction analysis. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 28 April–3 May 2007; pp. 1191–1194.

- Sidner, C.L.; Lee, C.; Kidd, C.D.; Lesh, N.; Rich, C. Explorations in engagement for humans and robots. Artif. Intell. 2005, 166, 140–164.

- Yamazaki, A.; Yamazaki, K.; Burdelski, M.; Kuno, Y.; Fukushima, M. Coordination of verbal and non-verbal actions in human-robot interaction at museums and exhibitions. J. Pragmat. 2010, 42, 2398–2414.

- Corrigan, L.J.; Basedow, C.; Küster, D.; Kappas, A.; Peters, C.; Castellano, G. Perception matters! Engagement in task orientated social robotics. In Proceedings of the 2015 24th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Kobe, Japan, 31 August–4 September 2015; pp. 375–380.

- Sidner, C.L.; Kidd, C.D.; Lee, C.; Lesh, N. Where to look: A study of human-robot engagement. In Proceedings of the 9th International Conference on Intelligent User Interfaces, Funchal, Portugal, 13–16 January 2004; pp. 78–84.

- Bohus, D.; Horvitz, E. Managing human-robot engagement with forecasts and... um... hesitations. In Proceedings of the 16th International Conference on Multimodal Interaction, Istanbul, Turkey, 12–16 November 2014; pp. 2–9.

- Chan, J.; Nejat, G. Promoting engagement in cognitively stimulating activities using an intelligent socially assistive robot. In Proceedings of the 2010 IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM), Montreal, ON, Canada, 6–9 July 2010; pp. 533–538.

- Mubin, O.; Stevens, C.J.; Shahid, S.; Al Mahmud, A.; Dong, J.J. A review of the applicability of robots in education. J. Technol. Educ. Learn. 2013, 1, 13.

- Leite, I.; Castellano, G.; Pereira, A.; Martinho, C.; Paiva, A. Empathic robots for long-term interaction. Int. J. Soc. Robot. 2014, 6, 329–341.

- Pandey, A.; Gelin, R. A Mass-Produced Sociable Humanoid Robot: Pepper: The First Machine of Its Kind. IEEE Robot. Autom. Mag. 2018, 25, 40–48.

- Lewis, J.R. Sample sizes for usability studies: Additional considerations. Hum. Factors 1994, 36, 368–378.

- Nielsen, J. Usability 101: Introduction to Usability. Available online: https://www.nngroup.com/articles/usability-101-introduction-to-usability/ (accessed on 8 November 2019).

- Virzi, R.A. Refining the test phase of usability evaluation: How many subjects is enough? Hum. Factors 1992, 34, 457–468.

- Cooney, G.; Gilbert, D.T.; Wilson, T.D. The novelty penalty: Why do people like talking about new experiences but hearing about old ones? Psychol. Sci. 2017, 28, 380–394.

- Ortony, A.; Clore, G.L.; Collins, A. The Cognitive Structure of Emotions; Cambridge University Press: Cambridge, UK, 1990.

- Ortony, A.; Norman, D.; Revelle, W. Affect and Proto-Affect in Effective Functioning. In Who Needs Emotions, The Brain Meets the Robot; Oxford University Press: New York, NY, USA, 2005.

- Sloman, A. Varieties of meta-cognition in natural and artificial systems. In Metareasoning: Thinking about Thinking; MIT Press: Cambridge, MA, USA, 2011; pp. 307–323.

- Sloman, A.; Logan, B. Evolvable Architectures for Human-Like Minds. In Affective Minds; Elsevier: Amsterdam, The Netherlands, 2000; pp. 169–181.

- Mutlu, B.; Forlizzi, J.; Hodgins, J. A storytelling robot: Modeling and evaluation of human-like gaze behavior. In Proceedings of the 2006 6th IEEE-RAS International Conference on Humanoid Robots, Genova, Italy, 4–6 December 2006; pp. 518–523.

- Yoshikawa, Y.; Shinozawa, K.; Ishiguro, H.; Hagita, N.; Miyamoto, T. Responsive Robot Gaze to Interaction Partner. In Proceedings of the Robotics: Science and Systems, Philadelphia, PA, USA, 16–19 August 2006.

- Lazzeri, N.; Mazzei, D.; Zaraki, A.; De Rossi, D. Towards a believable social robot. In Biomimetic and Biohybrid Systems; Springer: Berlin/Heidelberg, Germany, 2013; pp. 393–395.

- Saldien, J.; Vanderborght, B.; Goris, K.; Van Damme, M.; Lefeber, D. A motion system for social and animated robots. Int. J. Adv. Robot. Syst. 2014, 11.

- Gómez Esteban, P.; Cao, H.L.; De Beir, A.; Van de Perre, G.; Lefeber, D.; Vanderborght, B. A multilayer reactive system for robots interacting with children with autism. In Proceedings of the 5th International Symposium on New Frontiers in Human-Robot Interaction, Sheffield, UK, 5–6 April 2016; pp. 1–4.

- Richmond, V.P.; Gorham, J.S.; McCroskey, J.C. The relationship between selected immediacy behaviors and cognitive learning. Ann. Int. Commun. Assoc. 1987, 10, 574–590.

- Shim, J.; Arkin, R.C. Other-oriented robot deception: A computational approach for deceptive action generation to benefit the mark. In Proceedings of the 2014 IEEE International Conference on Robotics and Biomimetics (ROBIO 2014), Bali, Indonesia, 5–10 December 2014; pp. 528–535.

- Szafir, D.; Mutlu, B. Pay attention!: Designing adaptive agents that monitor and improve user engagement. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Austin, TX, USA, 5–10 May 2012; pp. 11–20.

- Baroni, I.; Nalin, M.; Zelati, M.C.; Oleari, E.; Sanna, A. Designing motivational robot: How robots might motivate children to eat fruits and vegetables. In Proceedings of the 23rd IEEE International Symposium on Robot and Human Interactive Communication (2014 RO-MAN), Edinburgh, UK, 25–29 August 2014; pp. 796–801.

- Chidambaram, V.; Chiang, Y.H.; Mutlu, B. Designing persuasive robots: How robots might persuade people using vocal and nonverbal cues. In Proceedings of the Seventh Annual ACM/IEEE International Conference on Human-Robot Interaction, Boston, MA, USA, 5–8 March 2012; pp. 293–300.

- Brown, L.; Kerwin, R.; Howard, A.M. Applying behavioral strategies for student engagement using a robotic educational agent. In Proceedings of the 2013 IEEE International Conference on Systems, Man, and Cybernetics, Manchester, UK, 13–16 October 2013; pp. 4360–4365.

- Crumpton, J.; Bethel, C.L. A survey of using vocal prosody to convey emotion in robot speech. Int. J. Soc. Robot. 2016, 8, 271–285.

- Lim, A.; Okuno, H.G. The mei robot: Towards using motherese to develop multimodal emotional intelligence. IEEE Trans. Auton. Ment. Dev. 2014, 6, 126–138.

- Bennewitz, M.; Faber, F.; Joho, D.; Behnke, S. Intuitive multimodal interaction with communication robot Fritz. In Humanoid Robots, Human-Like Machines; Itech: Vienna, Austria, 2007.

- Gonsior, B.; Sosnowski, S.; Buß, M.; Wollherr, D.; Kühnlenz, K. An emotional adaption approach to increase helpfulness towards a robot. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura, Portugal, 7–12 October 2012; pp. 2429–2436.

- Bartneck, C.; Kulić, D.; Croft, E.; Zoghbi, S. Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. Int. J. Soc. Robot. 2009, 1, 71–81.

| Condition | Impression of the Robot |
|---|---|
| Activated (n = 5) | U01: I found it friendly and nice. |
| | U02: It was very lifelike, it was hard to remember it is a robot. |
| | U03: Friendly. |
| | U04: Impressive the way to show emotions, through emotions. Nevertheless, in time the movements become somehow monotonous and predictable. |
| | U05: A lively social robot with nice hand gestures. |
| Deactivated (n = 5) | U06: First surprised, then a little bit depressed because I have no conversation with him. |
| | U07: It was really trying to impress me and I could feel his try which was wonderful. But his hand motion seems too much and didn’t permit me to focus on his speaking which I prefer he pays attention and adjusts it. |
| | U08: I like the hand movements and the way it looks around curiously. |
| | U09: It was nice and good gesture. |
| | U10: [No impression] |

| Condition | Interaction Experience |
|---|---|
| Activated (n = 5) | U01: I felt comfortable and I wanted to share more, and Pepper identified my reactions super fast which was surprising for me. |
| | U02: He asked questions and understood my responses. |
| | U03: Nice and friendly. |
| | U04: It was a pleasant experience. I would have like to interact longer. |
| | U05: It feels nice to know pepper. It enlightened me about how robots are handling social interactiveness. |
| Deactivated (n = 5) | U06: Not at all. |
| | U07: It was really unique and wonderful; but a bit monologue! it was much better if i also could speak with him and see his realtime interaction abilities. |
| | U08: Good. |
| | U09: Responsive interaction. |
| | U10: Communication. |

| Measure | Deactivated (n = 5) M | Deactivated SD | Deactivated Mdn | Activated (n = 5) M | Activated SD | Activated Mdn |
|---|---|---|---|---|---|---|
| Age (years) | 28 | 4.90 | 30 | 27 | 2.00 | 27 |
| Engagement | | | | | | |
| Time gazing at the robot (%) | 43.04 | 29.50 | 56.28 | 63.13 | 18.30 | 57.95 |
| Each gaze duration (s) | 17.25 | 13.67 | 13.02 | 22.99 | 1.84 | 23.36 |
| User’s perception | | | | | | |
| Anthropomorphism | 3.72 | 0.58 | 3.60 | 3.76 | 0.62 | 3.60 |
| Animacy | 3.47 | 0.27 | 3.33 | 4.03 | 0.43 | 4.00 |
| Likability | 4.24 | 0.33 | 4.20 | 4.44 | 0.43 | 4.40 |
| Perceived intelligence | 3.88 | 0.67 | 4.0 | 4.04 | 0.59 | 4.20 |
| Perceived safety | 3.87 | 0.73 | 3.67 | 3.80 | 0.84 | 3.67 |
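As an illustration of how the two engagement measures in the table could be derived, the sketch below computes the percentage of time spent gazing at the robot and the mean duration of each gaze from a list of annotated gaze intervals, then summarizes a group with M, SD, and Mdn. The interval representation, the function names, and the use of the sample standard deviation are assumptions; the source does not state how the measures or the SD were computed.

```python
from statistics import mean, median, stdev
from typing import List, Tuple

# Hypothetical annotation format: (start_s, end_s) of one gaze at the robot.
Interval = Tuple[float, float]


def gaze_measures(intervals: List[Interval], session_s: float) -> Tuple[float, float]:
    """Return (percent of session spent gazing, mean gaze duration in s)."""
    durations = [end - start for start, end in intervals]
    percent = 100.0 * sum(durations) / session_s
    mean_duration = mean(durations) if durations else 0.0
    return percent, mean_duration


def summarize(values: List[float]) -> Tuple[float, float, float]:
    """Return (M, SD, Mdn); SD here is the sample standard deviation."""
    return mean(values), stdev(values), median(values)
```

With two gazes of 10 s and 30 s in a 100 s session, `gaze_measures([(0, 10), (20, 50)], 100)` gives 40% of the time gazing and a mean gaze duration of 20 s.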

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cao, H.-L.; Torrico Moron, P.C.; Esteban, P.G.; De Beir, A.; Bagheri, E.; Lefeber, D.; Vanderborght, B. “Hmm, Did You Hear What I Just Said?”: Development of a Re-Engagement System for Socially Interactive Robots. Robotics 2019, 8, 95. https://doi.org/10.3390/robotics8040095
