Leaf Area Estimation of Reconstructed Maize Plants Using a Time-of-Flight Camera Based on Different Scan Directions

Abstract

1. Introduction

2. Materials and Methods

2.1. Hardware and Sensors

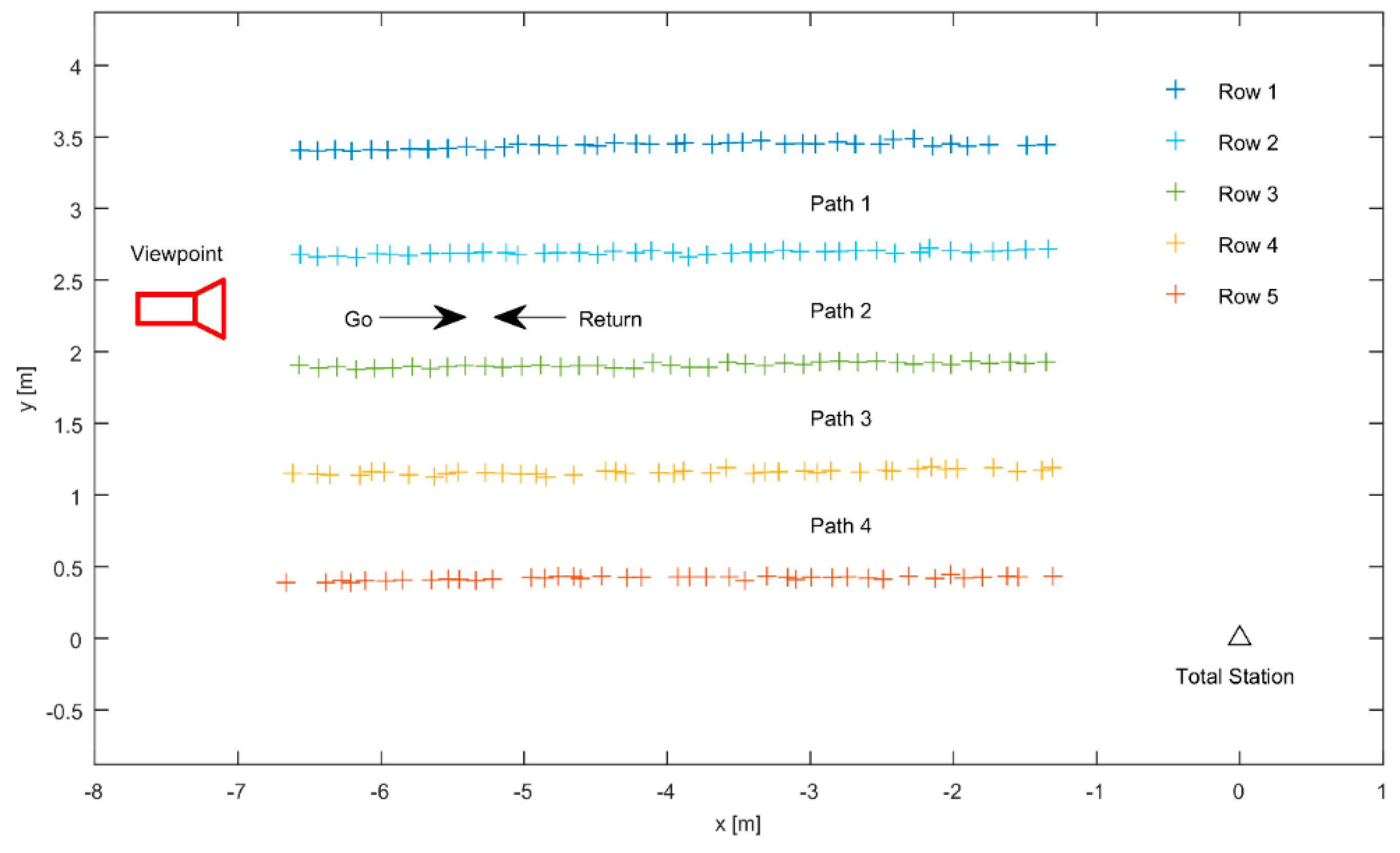

2.2. Experimental Setup

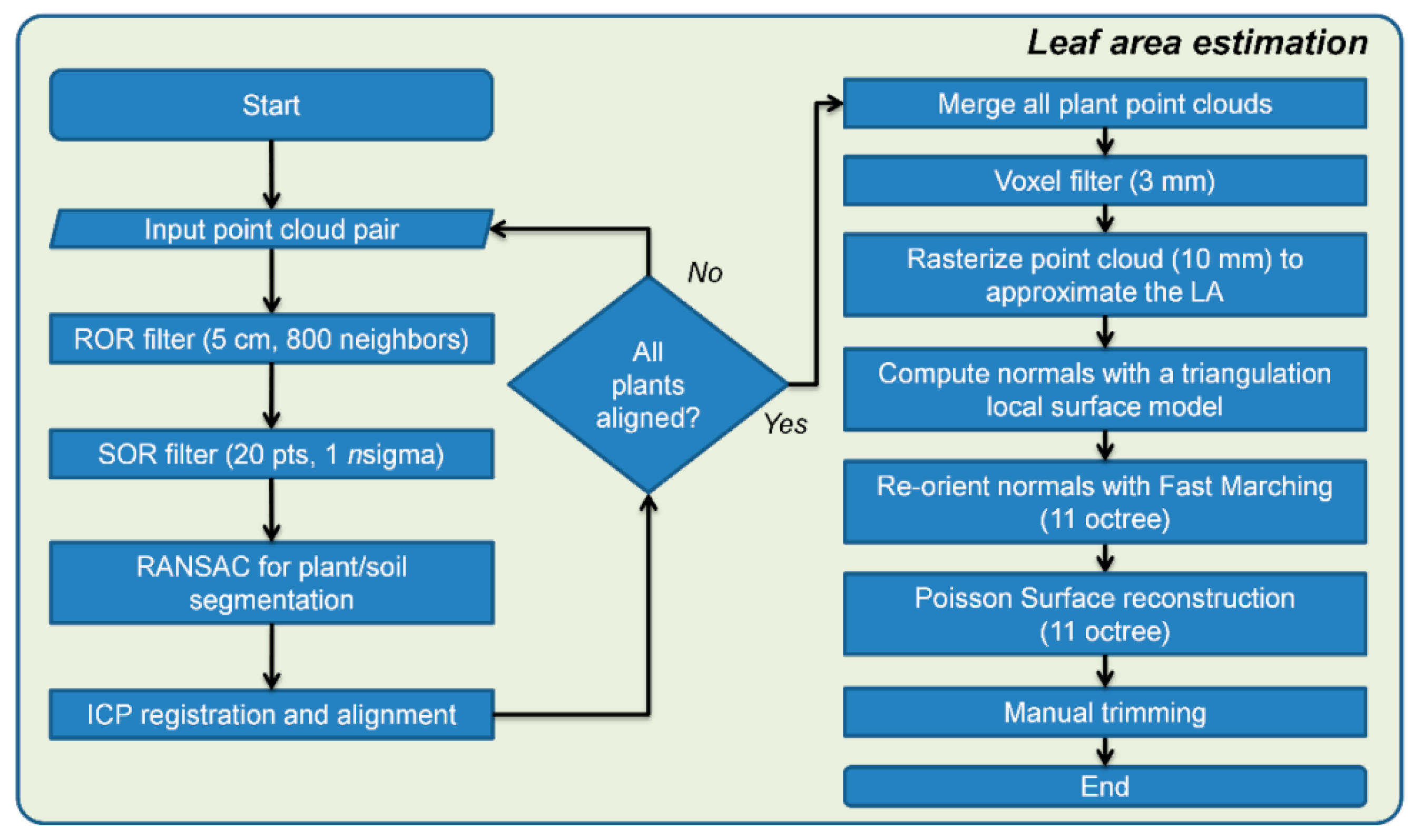

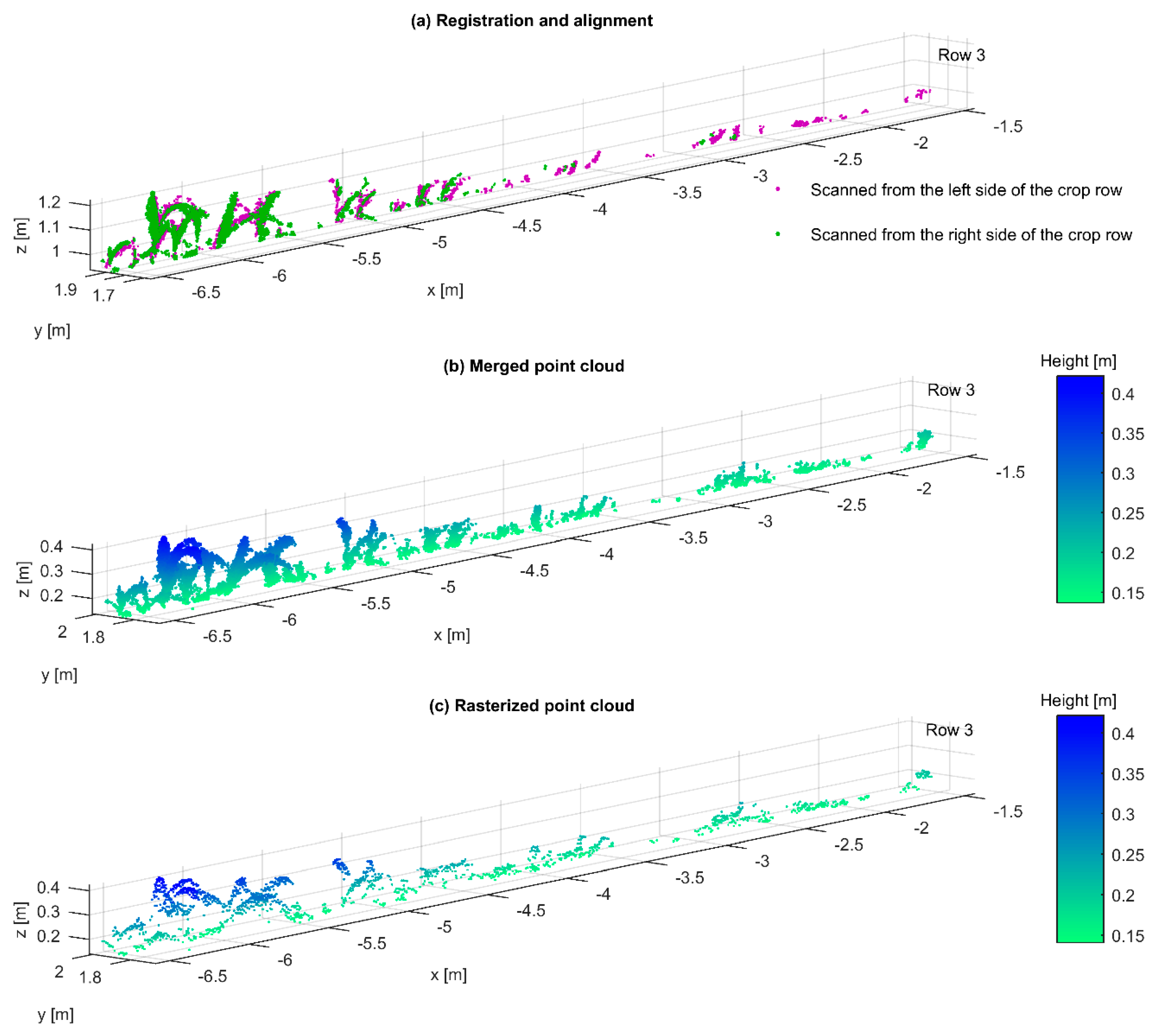

2.3. Data Processing

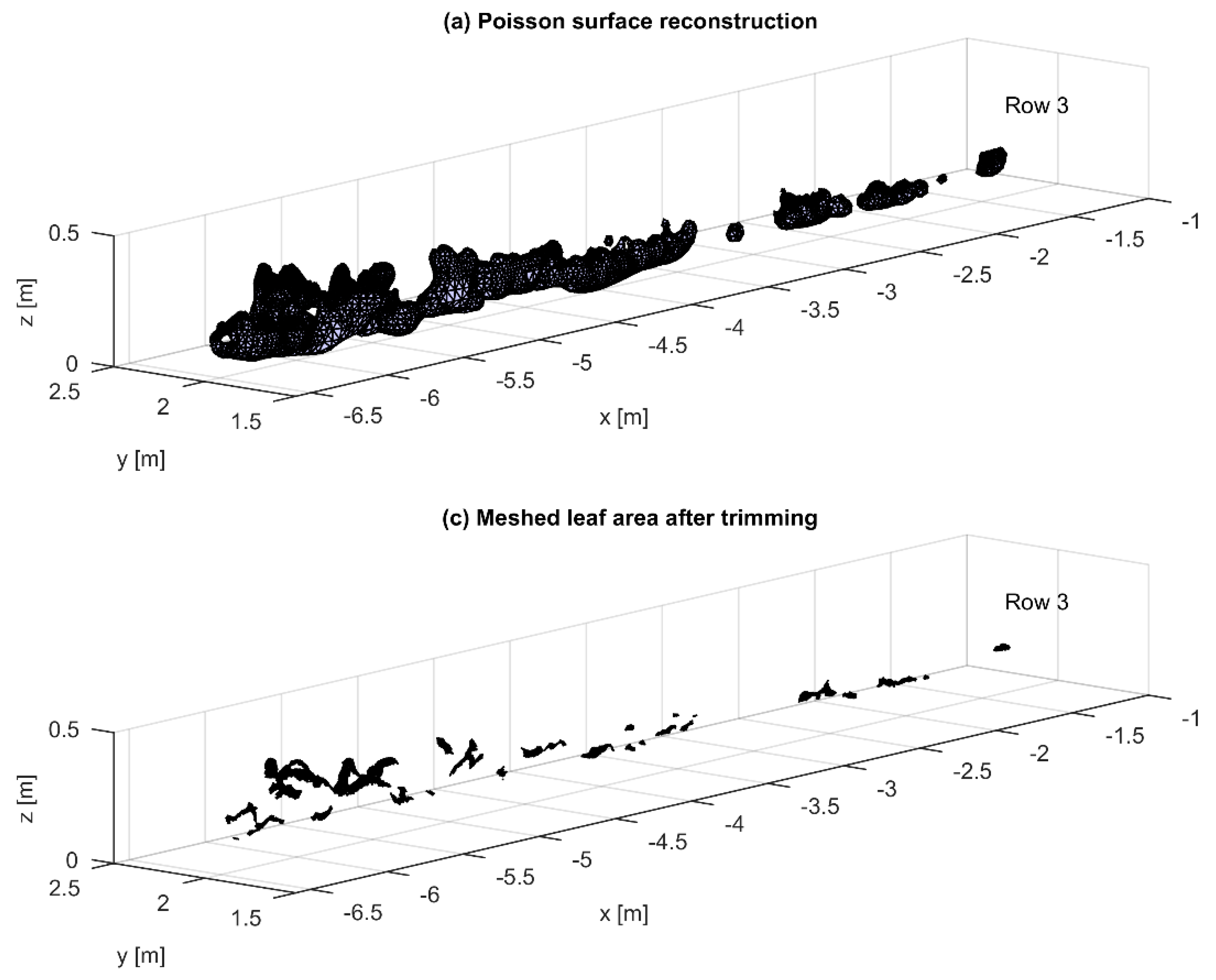

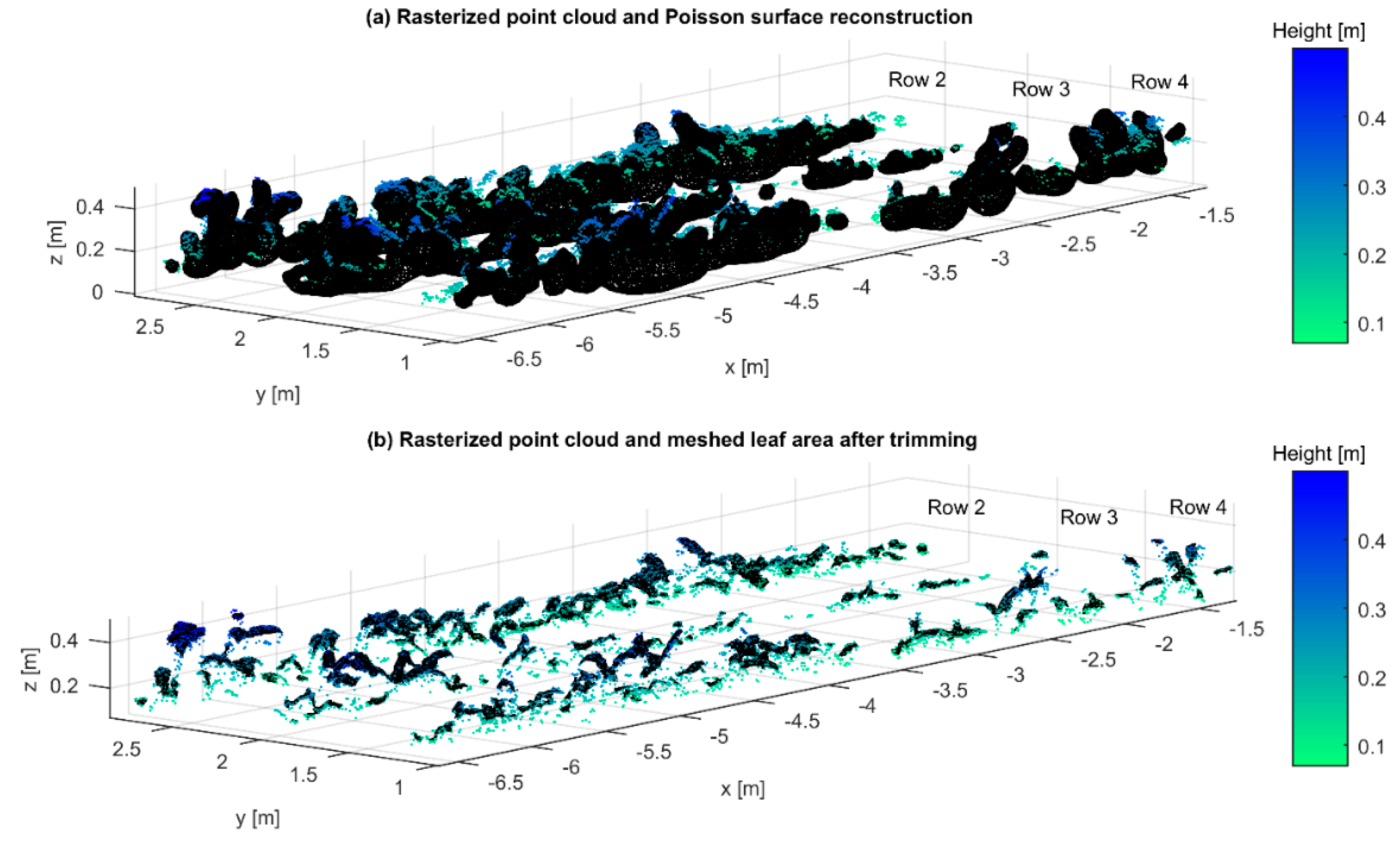

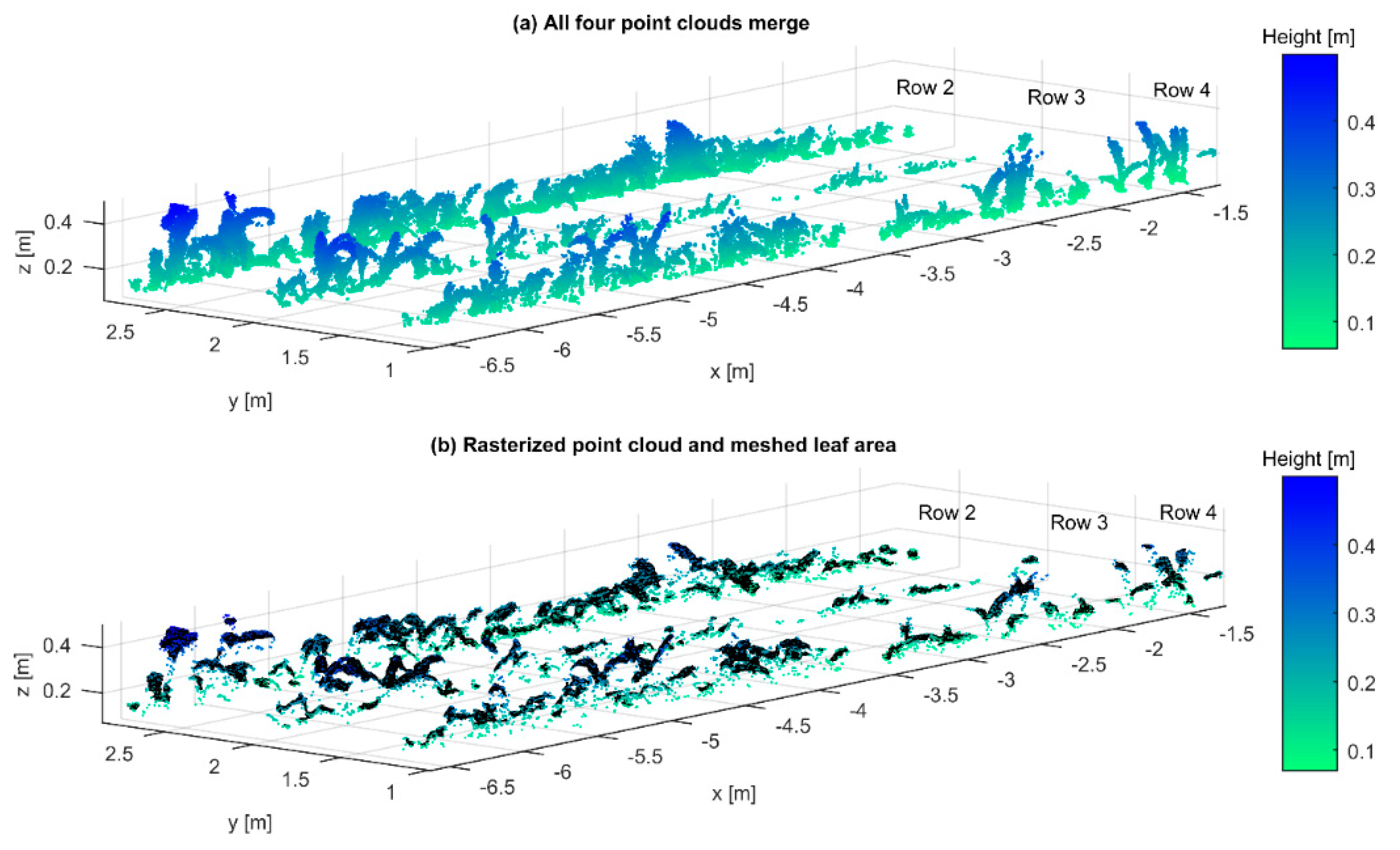

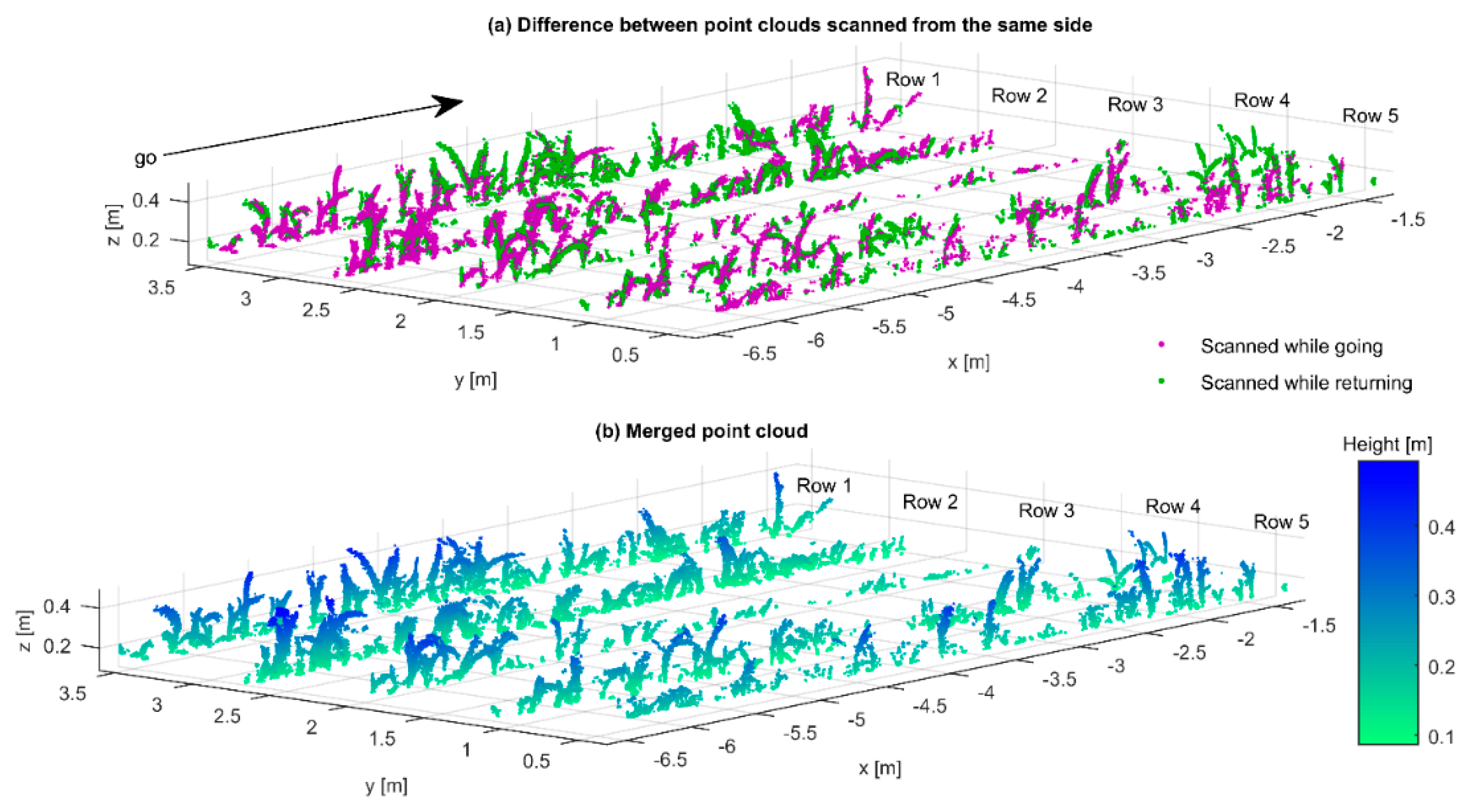

2.4. Leaf Area Estimation

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Direction | Crop Row | Rasterized Crop Height (10 mm) [cm²] | Poisson Surface Reconstruction [cm²] | Ground Truth Leaf Area [cm²] | RMSE [cm²] | MAPE |
|---|---|---|---|---|---|---|
| Go left side, return left side, go right side, and return right side | 2 | 4713 | 4580 | 4191 | 389 | 9.2% |
| Go left side, return left side, go right side, and return right side | 3 | 1781 | 1895 | 1634 | 263 | 16% |
| Go left side, return left side, go right side, and return right side | 4 | 2179 | 2777 | 2819 | 42 | 1.4% |
| Average | | 2891 | 3084 | 2881 | 231 | 8.8% |

| Direction | Crop Row | Rasterized Crop Height (10 mm) [cm²] | Poisson Surface Reconstruction [cm²] | Ground Truth Leaf Area [cm²] | RMSE [cm²] | MAPE |
|---|---|---|---|---|---|---|
| Go right side and return right side | 1 | 2685 | 2611 | 2824 | 213 | 7.5% |
| Go left side and return left side | 2 | 2680 | 4091 | 4191 | 100 | 2.3% |
| Go left side and return left side | 3 | 1077 | 1733 | 1634 | 99 | 6% |
| Go left side and return left side | 4 | 1611 | 2332 | 2819 | 487 | 17.2% |
| Go left side and return left side | 5 | 1433 | 1762 | 1879 | 117 | 6.2% |
| Average | | 1897 | 2505 | 2669 | 203 | 7.8% |
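The error columns can be read as follows, assuming (as the tabulated values suggest) that the per-row value in the column headed RMSE is the absolute difference between the Poisson surface reconstruction estimate $\hat{A}_i$ and the ground-truth leaf area $A_i$ of crop row $i$, and that the Average row is the arithmetic mean over the $n$ rows of a table:

$$
e_i = \lvert \hat{A}_i - A_i \rvert, \qquad
p_i = 100\,\% \cdot \frac{\lvert \hat{A}_i - A_i \rvert}{A_i}, \qquad
\bar{e} = \frac{1}{n}\sum_{i=1}^{n} e_i, \qquad
\mathrm{MAPE} = \frac{1}{n}\sum_{i=1}^{n} p_i
$$

For crop row 1 of the table above, $e_1 = \lvert 2611 - 2824 \rvert = 213$ cm² and $p_1 = 100\,\% \cdot 213 / 2824 \approx 7.5\,\%$; averaging the five row errors gives $\bar{e} = 1016 / 5 \approx 203$ cm², matching the Average row.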

| Direction | Crop Row | Rasterized Crop Height (10 mm) [cm²] | Poisson Surface Reconstruction [cm²] | Ground Truth Leaf Area [cm²] | RMSE [cm²] | MAPE |
|---|---|---|---|---|---|---|
| Go left side and return right side | 2 | 3047 | 3156 | 4750 | 1594 | 33.5% |
| Go left side and return right side | 3 | 1191 | 2479 | 1852 | 627 | 33.8% |
| Go left side and return right side | 4 | 1560 | 4150 | 3195 | 955 | 29.8% |
| Average | | 1932 | 3261 | 3265 | 1059 | 32.3% |
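The Average row of each table appears to be the arithmetic mean of the per-row values. The minimal Python sketch below, using the Poisson surface reconstruction and ground-truth values transcribed from the "Go left side and return right side" table, recomputes the per-row absolute and percentage errors and contrasts the mean of the row errors with a root-mean-square taken over the same rows. The helper names `abs_error` and `pct_error` are hypothetical and not taken from the paper.

```python
import math

# Values transcribed from the "Go left side and return right side" table (cm²):
# Poisson surface reconstruction (PSR) estimates and ground-truth leaf area per crop row.
psr = {2: 3156, 3: 2479, 4: 4150}
ground_truth = {2: 4750, 3: 1852, 4: 3195}


def abs_error(estimate, truth):
    """Absolute leaf-area error for one crop row, in cm² (hypothetical helper)."""
    return abs(estimate - truth)


def pct_error(estimate, truth):
    """Absolute error as a percentage of the ground truth (hypothetical helper)."""
    return 100.0 * abs(estimate - truth) / truth


rows = sorted(psr)  # crop rows 2, 3, 4
errors = [abs_error(psr[r], ground_truth[r]) for r in rows]
pct = [pct_error(psr[r], ground_truth[r]) for r in rows]

# Arithmetic mean of the row errors (what the Average row appears to report).
mean_abs_error = sum(errors) / len(errors)
# Root-mean-square over the same rows, which weights large errors more heavily.
rmse_over_rows = math.sqrt(sum(e * e for e in errors) / len(errors))
# Mean absolute percentage error over the rows.
mape = sum(pct) / len(pct)

for r, e, p in zip(rows, errors, pct):
    print(f"crop row {r}: error = {e} cm², {p:.2f}%")
print(f"mean of row errors = {mean_abs_error:.0f} cm²")
print(f"RMSE over rows     = {rmse_over_rows:.0f} cm²")
print(f"MAPE               = {mape:.2f}%")
```

The mean of the three row errors reproduces the tabulated 1059 cm², whereas a root-mean-square over the same rows gives about 1132 cm², because squaring weights the 1594 cm² error on crop row 2 more heavily.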

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Vázquez-Arellano, M.; Reiser, D.; Paraforos, D.S.; Garrido-Izard, M.; Griepentrog, H.W. Leaf Area Estimation of Reconstructed Maize Plants Using a Time-of-Flight Camera Based on Different Scan Directions. Robotics 2018, 7, 63. https://doi.org/10.3390/robotics7040063
