A Comprehensive Survey of Recent Trends in Cloud Robotics Architectures and Applications

Abstract

1. Introduction

- Cloud robotics offers robots increased computational power and storage space [4].

- Cloud-enabled robots are not required to perform complicated tasks on board; instead, they can offload computation-intensive tasks such as object recognition, pattern matching, computer vision, and speech synthesis and recognition to the cloud. By exploiting massively parallel or grid computing capabilities, the cloud can often solve these tasks much faster, in some cases in real time [4].

- Along with dynamic offloading of computational tasks, the cloud infrastructure in cloud robotics makes computational resources available elastically and on demand [2].

- Many robotics applications, such as simultaneous localization and mapping (SLAM) and other perception systems, rapidly generate enormous amounts of sensor data that are difficult to store within the limited onboard storage of most robots. Cloud-enabled robots can use the large storage capacity offered by the cloud to keep all useful information for future use [2].

- In addition, cloud robotics gives robots access to big data, such as global maps for localization and object models needed for manipulation tasks, as well as to open-source algorithms and code [5].

- Finally, cloud robotics facilitates cooperative learning among geographically distributed robots, which can share information on how to solve a complicated task. It also allows robots to access human knowledge through cloud-based crowdsourcing. Hence, with the introduction of cloud robotics, robots are no longer self-contained systems limited by their on-board capabilities; they can exploit all the advantages offered by the cloud infrastructure [5].
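The offloading of computation-intensive tasks described above pays off only when the end-to-end cloud latency beats on-board execution, that is, when RTT + D_in/B_up + C/f_cloud + D_out/B_down < C/f_robot, where C is the task's CPU cycles, D_in and D_out are the uploaded and returned data sizes, B_up and B_down are the link bandwidths, and f_robot and f_cloud are the effective processing rates. The following is a minimal sketch of that comparison, assuming the task is fully characterized by these quantities and that bandwidth and round-trip time are known in advance; all names and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Task:
    cycles: float       # CPU cycles the task needs (C)
    input_bits: float   # data uploaded to the cloud, e.g. an image (D_in)
    output_bits: float  # result returned, e.g. object labels (D_out)


@dataclass
class Link:
    uplink_bps: float    # B_up
    downlink_bps: float  # B_down
    rtt_s: float         # network round-trip time


def local_time(task: Task, robot_hz: float) -> float:
    """Seconds to run the task on the robot's own processor."""
    return task.cycles / robot_hz


def cloud_time(task: Task, cloud_hz: float, link: Link) -> float:
    """Seconds to upload the input, compute remotely and download the result."""
    transfer = task.input_bits / link.uplink_bps + task.output_bits / link.downlink_bps
    return link.rtt_s + transfer + task.cycles / cloud_hz


def should_offload(task: Task, robot_hz: float, cloud_hz: float, link: Link) -> bool:
    """Offload only when end-to-end cloud latency beats local execution."""
    return cloud_time(task, cloud_hz, link) < local_time(task, robot_hz)


# Hypothetical object-recognition task: 2e10 cycles, 1 MB image, 1 kB of labels.
task = Task(cycles=2e10, input_bits=8e6, output_bits=8e3)
fast = Link(uplink_bps=20e6, downlink_bps=50e6, rtt_s=0.05)
print(local_time(task, 1e9), cloud_time(task, 2e10, fast))  # about 20 s vs 1.45 s
```

With a fast link the cloud wins by an order of magnitude, but if the uplink drops to 100 kbit/s the upload alone takes 80 s and local execution becomes the better choice, which is why the allocation and delay issues discussed in Section 5 matter.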

2. Cloud Computing and Robotics

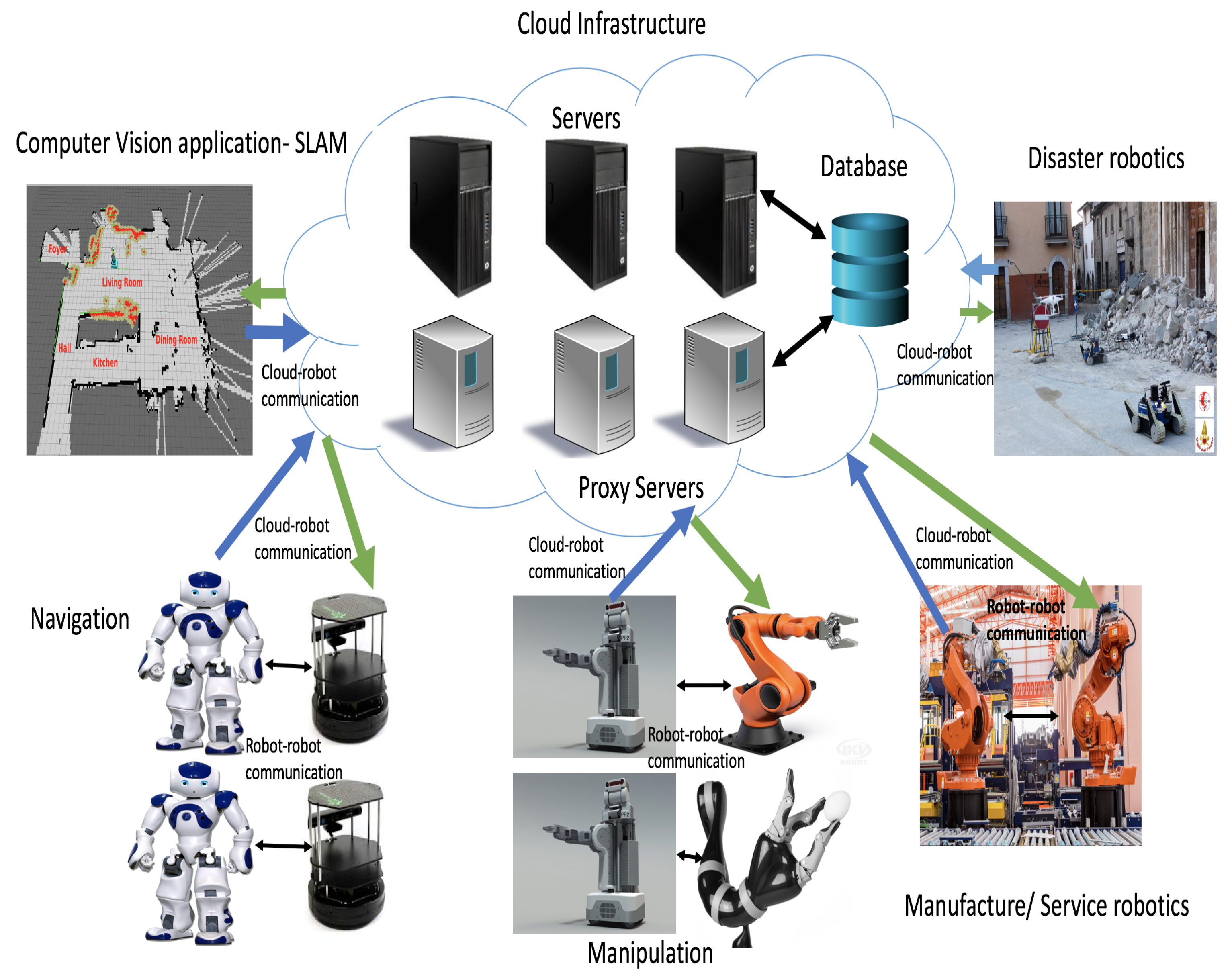

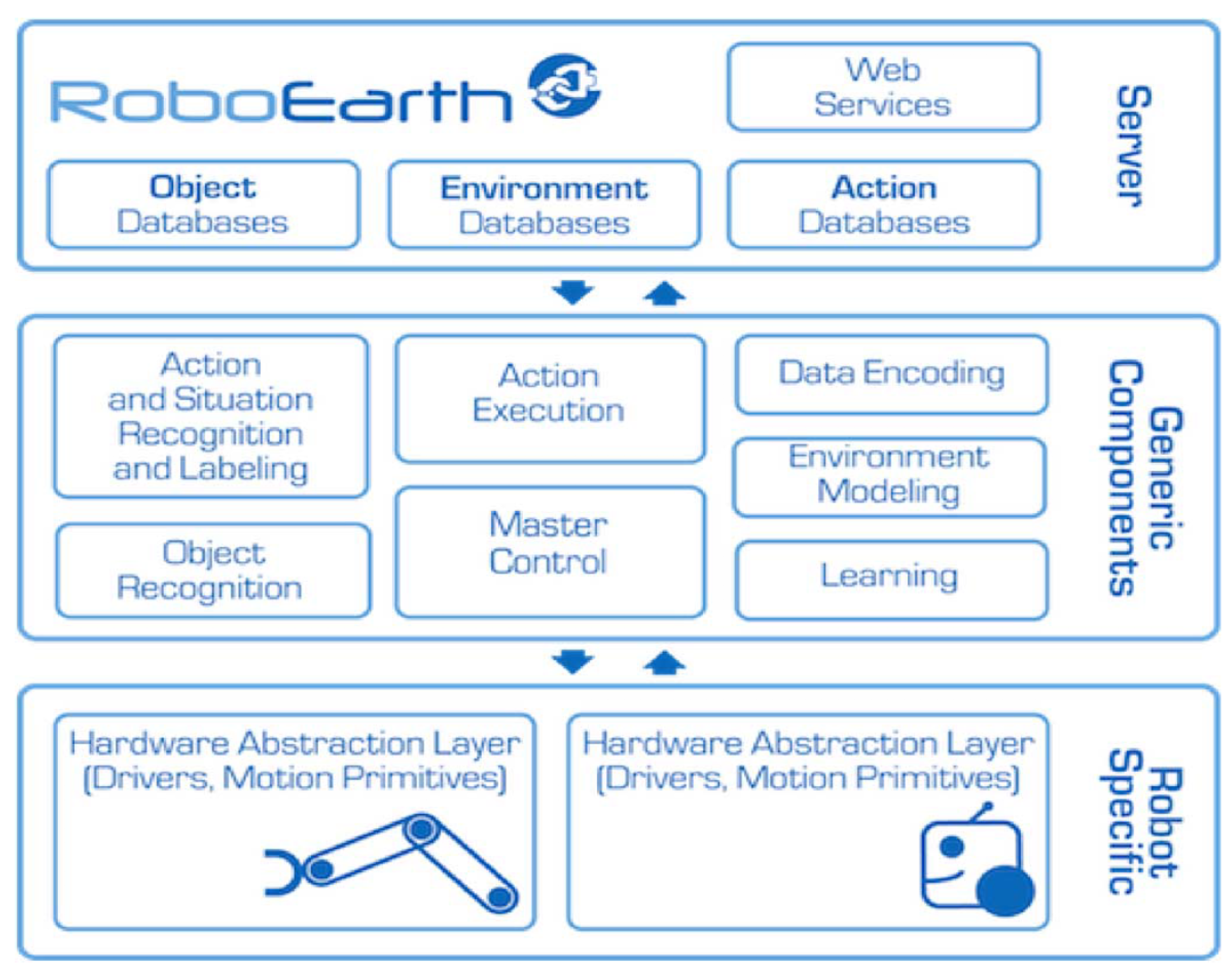

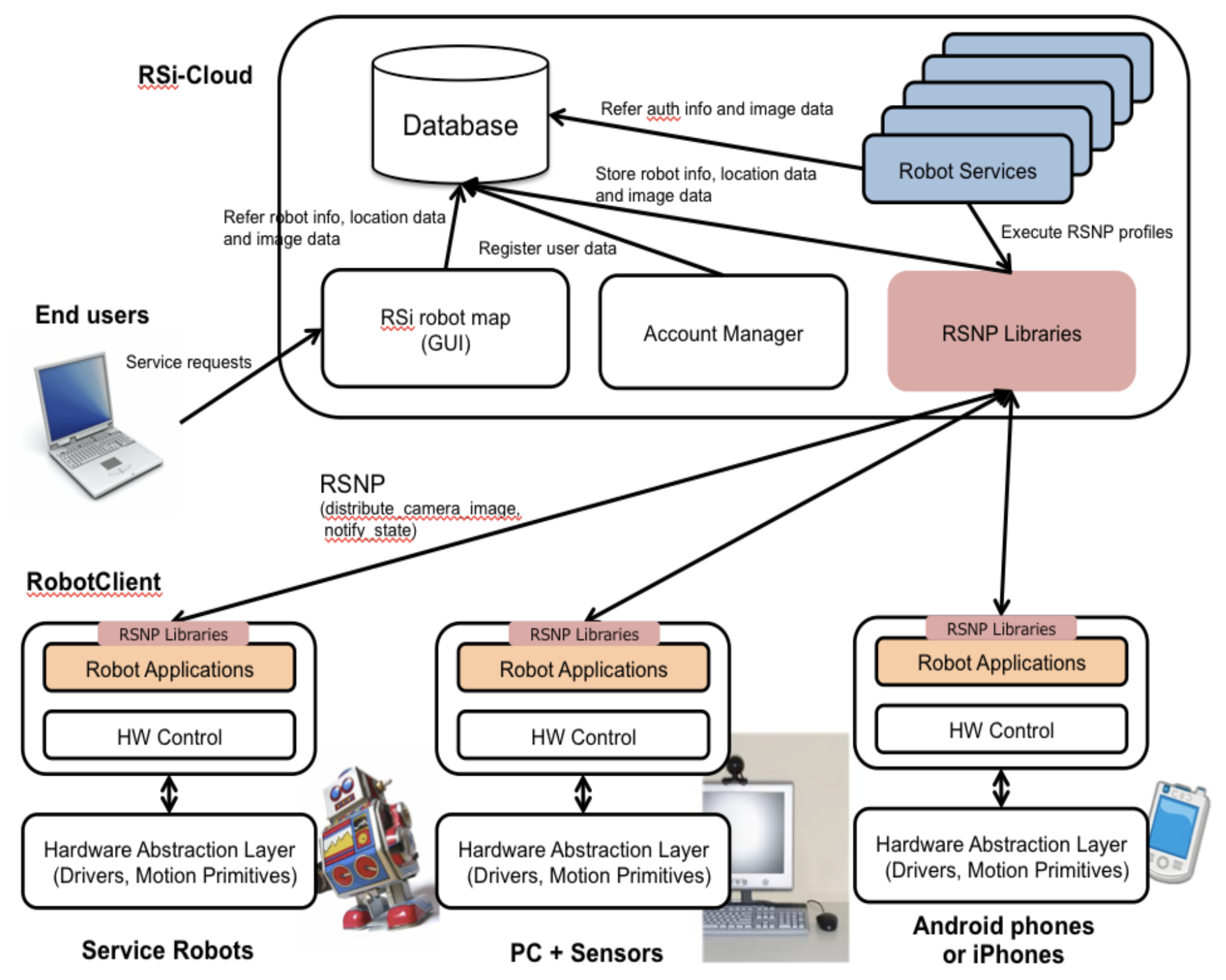

3. Cloud Robotics System Architectures

4. Cloud Robotics Applications

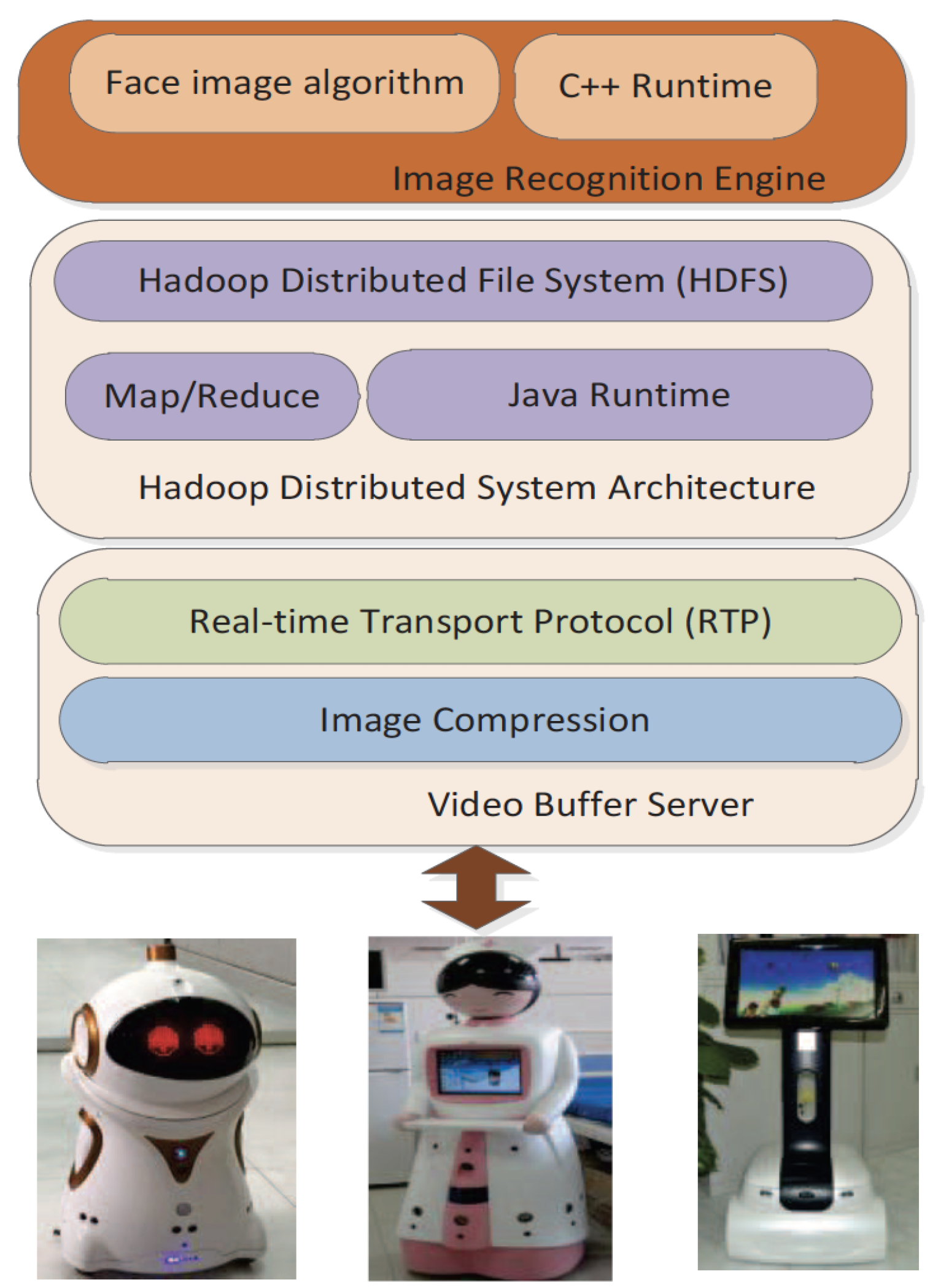

4.1. Perception and Computer Vision Applications

4.2. Navigation

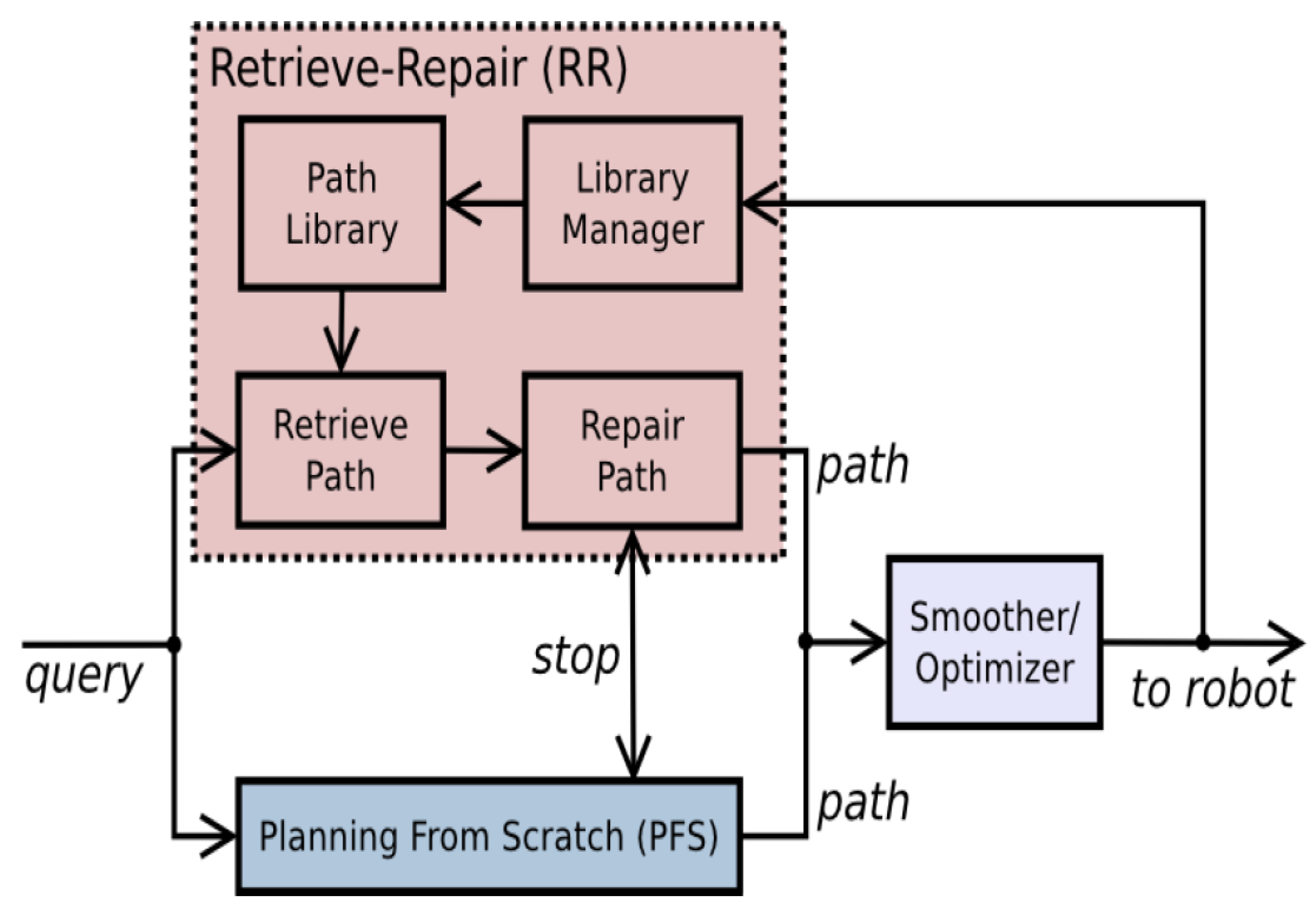

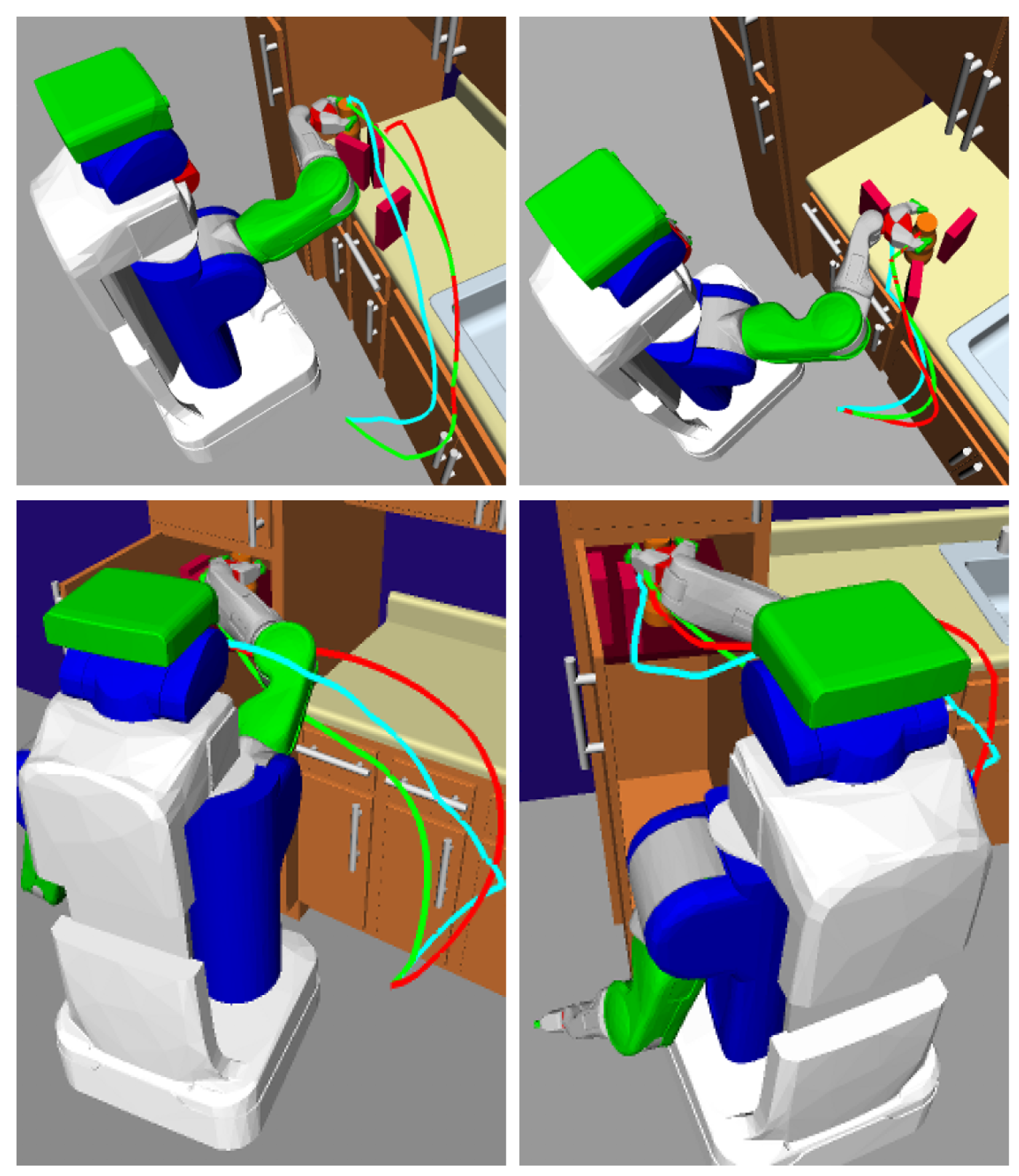

4.3. Grasping or Manipulation

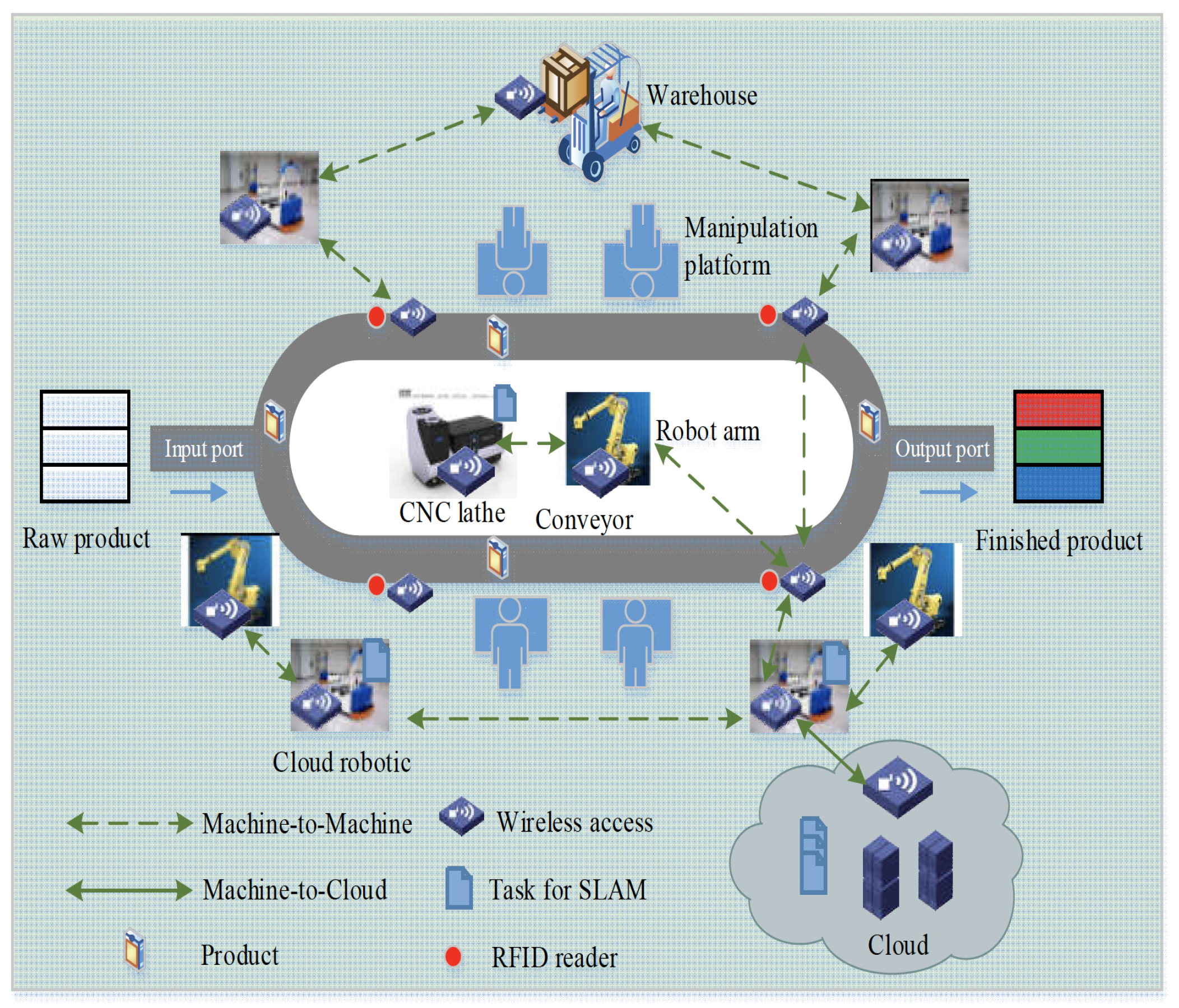

4.4. Manufacture or Service Robotics

4.5. Social, Agriculture, Medical and Disaster Robotics

4.6. Crowd-Sourcing and Human–Robot Interaction

5. Open Issues, Challenges and Future Directions

- Efficient Resource and Task Allocation Over the Cloud. A crucial determinant of the performance of cloud-based robotic systems is the decision whether to upload a task to the cloud or to process it using local resources. Recent works have proposed coordination mechanisms including auctions [100], sequential games [101] and belief propagation techniques [102] for real-time resource allocation and retrieval in cloud-based mobile robotic systems. However, the performance of these techniques is sensitive to dynamic changes in the network topology as well as to delays during the coordination process. A problem worthy of future research is to enable cloud resource allocation methods to gracefully handle network changes that occur during the allocation process itself. For auction mechanisms, related open issues are the truthful revelation of preferences through bids and the handling of bids that change dynamically. Within the game-based resource allocation strategy, auto-tuning of variables according to the available information, such as willingness to pay and the corresponding response time, is another potential future research direction. To address task allocation in the cloud, the authors of [10] proposed a communication scheduling technique called Location-based Packing Scheduling (LPS) that aggregates multiple resource or service requests from different robots and allocates them together to a single robot. As a future direction, a judicious strategy is needed for selecting the location of the data center in order to make resource and task allocation over the cloud more efficient and cost-effective. Future work should also involve designing flexible collaboration techniques that maximize the utilization of robot resources to handle challenging tasks.

- Reducing Communication Delays over the Cloud. Communication between robots and the cloud can introduce considerable delays in cloud robotic systems, and the massive amounts of data produced by many robotics applications, such as perception, SLAM and navigation, aggravate these delays. In fact, for most applications the central issue in cloud robotics is the computation–communication delay between robots and the cloud. Although wireless technology has improved steadily in recent years, delays are difficult to avoid when the network connection between the robot and the cloud services is unreliable. To overcome this limitation, novel load distribution algorithms with inherent anytime characteristics need to be designed, so that when a computation request from a robot cannot be properly uploaded to the cloud, backup mechanisms can dynamically reallocate the task and minimize the delay in the robot's operations. Network latency poses another challenge for the real-time deployment of cloud robotics applications. A service quality assurance system, together with an effects analysis dedicated to a specific bandwidth, can maintain uninterrupted network flow by striking a balance between limited resources and real-time demands. New algorithms and methods should be investigated to handle time-varying network latency and Quality of Service (QoS). In a recent work [103], the authors proposed RoboCloud, which introduces a task-specific mission cloud with controllable resources and predictable behavior. However, RoboCloud was mainly tested on a semantic mapping task based on cloud-assisted object recognition. In the future, it would be worthwhile to test this approach in other cloud robotics scenarios, such as navigation and manipulation.
Another work along this direction [87] developed a survivable cloud multi-robot framework for heterogeneous environments, which combines a virtual ad hoc network formed by robot-to-robot communication with a physical cloud infrastructure built on robot-to-cloud communication. Future directions of this research could include investigating different offloading schemes that reduce the robot's energy consumption, as well as pre-processing and compressing images before offloading them to the cloud server.

- Data Inter-Operability and Scalability of Operations between Robots in the Cloud. Data interaction between robots and a cloud platform poses another challenge in cloud robotics. Different robotics applications output data in diverse formats. Because cloud-based services can store and process data only in specific structures, the data that robots upload to the cloud must be properly preprocessed beforehand. Similarly, the data output by cloud-based services has to be transformed by the robot into a robot-specific format before the robotics application can use it. This format conversion between cloud and robot requirements creates considerable overhead, especially for the huge amounts of sensor data involved in robotic tasks. Hence, a unified and standardized data format for robot–cloud interaction would be worth exploring in the future. Refs. [104,105] described a formal language for knowledge representation between robots connected via a cloud under the RoboEarth and KnowRob projects. A limitation of that system is scalability: because RoboEarth serves many users, managing the quality of the stored information becomes difficult. To make the system scalable, new techniques similar to human crowdsourcing or feedback sharing among robots need to be investigated to manage the quality of stored information autonomously. For example, robots could be given ratings of the downloaded information, upload details of their experience after performing the task, and update the rating of that information accordingly. Another interesting future direction, which will arise as the amount of data grows substantially, is learning on the database itself, which would enable robots to learn typical object locations, context-dependent success models of plans, common execution failures, timing information, or promising plans for a given robot platform.

- Privacy and Security in Cloud–Robot Systems. The increasing use of cloud-based robotics technologies introduces privacy and security issues. Cloud-based services require robotic data to be stored and processes to be executed remotely in the cloud, making these applications vulnerable to hackers and malicious users. Remote storage of data in the cloud can lead to inappropriate access, manipulation and deletion of valuable data. Remote execution of robotic services in the cloud makes it easier for attackers to access and modify these services, thus altering the behavior of robot tasks in malicious ways. In this regard, researchers have used the term cryptorobotics to denote the unification of cyber-security and robotics [106]. To counter the security and privacy threats associated with cloud robotics, authentication techniques with a layered encryption mechanism are currently used to control an entity's access to cloud data and services [18]. In the future, more effective integrated verification algorithms should be designed to ensure the security and privacy of cloud-based robotic systems. An important consideration in developing secure cloud robotic systems is whether integrating security measures such as encrypted communication and data validation will affect their real-time performance. To reach a suitable trade-off, the architectural design of these systems should take into account the hardware, the software, the network and the specific application under consideration.
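For the auction-based coordination mentioned under Efficient Resource and Task Allocation Over the Cloud, a sealed-bid second-price (Vickrey) auction is one textbook mechanism in which bidding one's true valuation is a dominant strategy, which speaks directly to the truthful-revelation issue raised there. The sketch below allocates a single cloud resource slot per round; it is a generic illustration, not the mechanism of [100], and the robot identifiers, bid values and reserve price are hypothetical.

```python
from typing import Dict, Optional, Tuple


def vickrey_auction(bids: Dict[str, float],
                    reserve: float = 0.0) -> Optional[Tuple[str, float]]:
    """Sealed-bid second-price auction for one cloud resource slot.

    Returns (winner, price), or None if no bid meets the reserve price.
    The winner pays the second-highest valid bid (or the reserve price if
    it is the only valid bidder), so misreporting one's true value cannot
    improve a robot's outcome.
    """
    valid = {robot: bid for robot, bid in bids.items() if bid >= reserve}
    if not valid:
        return None
    # Highest bid first; ties broken by robot id so the result is deterministic.
    ranked = sorted(valid.items(), key=lambda item: (-item[1], item[0]))
    winner = ranked[0][0]
    price = ranked[1][1] if len(ranked) > 1 else reserve
    return winner, price


print(vickrey_auction({"robot_a": 5.0, "robot_b": 8.0, "robot_c": 6.5}))
# robot_b wins and pays 6.5, the second-highest bid
```

A single-slot auction like this ignores the network dynamics and changing bids that the text identifies as open problems; handling those would require re-running or extending the mechanism as conditions change.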
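The backup mechanism called for under Reducing Communication Delays over the Cloud can be approximated by a simple deadline-and-fallback pattern, a much weaker form of the anytime behavior described there: the robot submits a task to the cloud, waits at most a fixed deadline, and otherwise runs a cheaper on-board version. This is a minimal sketch using Python's standard `concurrent.futures`; `cloud_fn` and `local_fn` are hypothetical callables supplied by the application.

```python
import concurrent.futures as cf
from typing import Callable, Tuple, TypeVar

T = TypeVar("T")

# Shared pool for cloud requests. A late request keeps running in the
# background, but it no longer blocks the robot's control loop.
_POOL = cf.ThreadPoolExecutor(max_workers=4)


def run_with_fallback(cloud_fn: Callable[[], T],
                      local_fn: Callable[[], T],
                      deadline_s: float) -> Tuple[T, str]:
    """Return (result, source), where source is "cloud" or "local"."""
    future = _POOL.submit(cloud_fn)
    try:
        return future.result(timeout=deadline_s), "cloud"
    except Exception:
        # Deadline missed (TimeoutError), network failure or service error:
        # degrade gracefully to on-board computation.
        future.cancel()  # has no effect if the request is already running
        return local_fn(), "local"
```

A production system would also decide what to do with a cloud result that arrives after the deadline, for example caching it to refine later estimates.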
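To cope with the time-varying network latency discussed under Reducing Communication Delays over the Cloud, a robot can adapt its cloud deadline to measured round-trip times instead of fixing it. The sketch below borrows the smoothed round-trip-time estimator that TCP uses for its retransmission timeout (RFC 6298: gains 1/8 and 1/4, timeout equal to the smoothed RTT plus four times the RTT variation). Applying it to robot–cloud requests is an assumption of this sketch; the clock-granularity term is omitted, and the class name and timeout bounds are hypothetical.

```python
from typing import Optional


class RttEstimator:
    """Adaptive request deadline using the RFC 6298 SRTT/RTTVAR update rules."""

    ALPHA = 1 / 8  # gain for the smoothed RTT
    BETA = 1 / 4   # gain for the RTT variation
    K = 4          # variation multiplier

    def __init__(self, min_timeout_s: float = 0.05, max_timeout_s: float = 2.0):
        # Hypothetical bounds; RFC 6298 itself uses a 1 s minimum for TCP.
        self.min_timeout_s = min_timeout_s
        self.max_timeout_s = max_timeout_s
        self.srtt: Optional[float] = None
        self.rttvar: Optional[float] = None

    def update(self, sample_s: float) -> None:
        """Fold one measured round-trip time (seconds) into the estimate."""
        if self.srtt is None or self.rttvar is None:
            self.srtt = sample_s
            self.rttvar = sample_s / 2
        else:
            # RTTVAR is updated with the previous SRTT, so it comes first.
            self.rttvar = (1 - self.BETA) * self.rttvar + self.BETA * abs(self.srtt - sample_s)
            self.srtt = (1 - self.ALPHA) * self.srtt + self.ALPHA * sample_s

    def timeout(self) -> float:
        """Deadline for the next cloud request, clamped to the configured bounds."""
        if self.srtt is None or self.rttvar is None:
            return self.max_timeout_s  # no measurement yet: be conservative
        rto = self.srtt + self.K * self.rttvar
        return min(max(rto, self.min_timeout_s), self.max_timeout_s)
```

The returned value could serve as the deadline of a cloud request, so that the robot waits longer when the network is slow but stable and falls back sooner when latency becomes erratic.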
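The pre-processing and compression step suggested as future work for the survivable framework of [87] can be as simple as lossless compression of each sensor frame before upload. The following is a minimal sketch with Python's standard `zlib` module; the frame layout (a raw 8-bit grayscale buffer) is hypothetical, and camera pipelines often prefer lossy codecs such as JPEG, which trade fidelity for much higher compression.

```python
import zlib


def pack_frame(frame: bytes, level: int = 6) -> bytes:
    """Compress a raw sensor frame on the robot before sending it to the cloud."""
    return zlib.compress(frame, level)


def unpack_frame(payload: bytes) -> bytes:
    """Restore the exact original frame on the cloud side."""
    return zlib.decompress(payload)


def compression_ratio(frame: bytes, payload: bytes) -> float:
    """How many times smaller the uploaded payload is than the raw frame."""
    return len(frame) / len(payload)


# Hypothetical 640x480 grayscale frame: dark upper half, bright lower half.
frame = bytes(640 * 240) + bytes([255]) * (640 * 240)
payload = pack_frame(frame)
print(len(frame), len(payload), compression_ratio(frame, payload))
```

Real images compress far less than this synthetic frame, so whether compression saves energy depends on weighing the CPU cost of compressing against the radio energy saved by transmitting fewer bytes.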
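One way to approach the unified robot–cloud data format called for under Data Inter-Operability and Scalability is a common, self-describing envelope into which each robot converts its native messages once, so that cloud services parse only one schema. The sketch below uses JSON; the envelope fields (`schema`, `robot_id`, `stamp`, `frame`, `data`), the `laser_scan/v1` schema name and the millimetre-reporting driver adapter are all hypothetical, not an existing standard.

```python
import json
import math
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class LaserScan:
    """Common, robot-independent representation of a planar laser scan."""
    angle_min: float        # rad
    angle_increment: float  # rad between consecutive beams
    ranges: List[float]     # metres


def from_vendor_mm(ranges_mm: List[int], fov_deg: float) -> LaserScan:
    """Robot-side adapter for a hypothetical driver that reports millimetres
    over a field of view centred on the robot's heading."""
    n = len(ranges_mm)
    fov = math.radians(fov_deg)
    return LaserScan(angle_min=-fov / 2,
                     angle_increment=fov / (n - 1) if n > 1 else 0.0,
                     ranges=[r / 1000.0 for r in ranges_mm])


def to_envelope(scan: LaserScan, robot_id: str, stamp: float, frame: str) -> str:
    """Wrap a scan in a versioned envelope before uploading it."""
    return json.dumps({
        "schema": "laser_scan/v1",
        "robot_id": robot_id,
        "stamp": stamp,  # seconds since the Unix epoch
        "frame": frame,  # coordinate frame of the sensor
        "data": asdict(scan),
    })


def from_envelope(text: str) -> LaserScan:
    """Parse an envelope on the cloud side, rejecting unknown schemas."""
    msg = json.loads(text)
    if msg.get("schema") != "laser_scan/v1":
        raise ValueError(f"unsupported schema: {msg.get('schema')}")
    return LaserScan(**msg["data"])
```

Versioning the schema name lets the cloud reject or translate messages from older robots explicitly rather than misinterpreting them; a binary encoding would reduce the overhead that JSON adds for large sensor streams.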
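The rating scheme proposed under Data Inter-Operability and Scalability, in which robots rate downloaded information and update that rating after using it, can be sketched as a smoothed average of reported task outcomes per database entry. This is a hypothetical illustration rather than a RoboEarth feature; the neutral prior of 0.5 and its weight of two pseudo-observations are assumptions that keep a single report from dominating the score of a new entry.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Rating:
    total: float = 0.0  # sum of reported outcomes in [0, 1]
    count: int = 0      # number of reports


class KnowledgeRatings:
    """Crowd-sourced quality scores for entries in a shared robot database."""

    def __init__(self, prior: float = 0.5, prior_weight: float = 2.0):
        self.prior = prior
        self.prior_weight = prior_weight
        self._ratings: Dict[str, Rating] = {}

    def report(self, entry_id: str, outcome: float) -> None:
        """A robot reports how well a downloaded entry worked (1 = success)."""
        if not 0.0 <= outcome <= 1.0:
            raise ValueError("outcome must be in [0, 1]")
        rating = self._ratings.setdefault(entry_id, Rating())
        rating.total += outcome
        rating.count += 1

    def score(self, entry_id: str) -> float:
        """Smoothed mean outcome; entries without reports get the prior."""
        rating = self._ratings.get(entry_id, Rating())
        return ((self.prior * self.prior_weight + rating.total)
                / (self.prior_weight + rating.count))
```

For example, a new object model starts at 0.5, rises to about 0.67 after one successful grasp report and to 0.75 after two; robots could then prefer higher-scoring entries when several candidates exist.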
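As a small illustration of the authentication and data-validation measures discussed under Privacy and Security in Cloud–Robot Systems, each robot–cloud message can carry a keyed hash (HMAC-SHA256), so that the receiver rejects tampered or forged commands. The sketch uses Python's standard `hmac` and `hashlib` modules; it provides integrity and authenticity only, not confidentiality or replay protection, so in practice it would run over an encrypted channel such as TLS. The shared key and message fields are hypothetical.

```python
import hashlib
import hmac
import json


def _canonical(message: dict) -> bytes:
    """Deterministic JSON encoding so both sides hash identical bytes."""
    return json.dumps(message, sort_keys=True, separators=(",", ":")).encode()


def sign(message: dict, key: bytes) -> dict:
    """Attach an HMAC-SHA256 tag to a message before sending it."""
    tag = hmac.new(key, _canonical(message), hashlib.sha256).hexdigest()
    return {"body": message, "tag": tag}


def verify(envelope: dict, key: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = hmac.new(key, _canonical(envelope["body"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, envelope["tag"])


key = b"hypothetical-shared-key"
envelope = sign({"robot_id": "r1", "cmd": "move", "v": 0.2}, key)
print(verify(envelope, key))  # True
```

Keyed hashing is cheap compared with the robot's control period, which makes it a reasonable first layer when weighing security against the real-time constraints mentioned above.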

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- McKee, G.T.; Schenker, P.S. Networked robotics. Sensor Fusion and Decentralized Control in Robotic Systems III. Int. Soc. Opt. Photonics 2000, 4196, 197–210.

- Hu, G.; Tay, W.P.; Wen, Y. Cloud robotics: Architecture, challenges and applications. IEEE Netw. 2012, 26, 21–28.

- Mell, P.; Grance, T. The Nist Definition of Cloud Computing; Computer Security Division, Information Technology Laboratory, National Institute of Standards and Technology: Gaithersburg, MD, USA, 2011; pp. 1–3.

- Wan, J.; Tang, S.; Yan, H.; Li, D.; Wang, S.; Vasilakos, A.V. Cloud robotics: Current status and open issues. IEEE Access 2016, 4, 2797–2807.

- Kehoe, B.; Patil, S.; Abbeel, P.; Goldberg, K. A survey of research on cloud robotics and automation. IEEE Trans. Autom. Sci. Eng. 2015, 12, 398–409.

- Arumugam, R.; Enti, V.R.; Liu, B.; Wu, X.; Baskaran, K.; Kong, F.F.; Kumar, A.S.; Meng, K.D.; Kit, G.W. DAvinCi: A cloud computing framework for service robots. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation (ICRA), Anchorage, AK, USA, 3–7 May 2010; pp. 3084–3089.

- Bereznyak, I.; Chepin, E.; Dyumin, A. The actions language as a programming framework for cloud robotics applications. In Proceedings of the 2016 6th International Conference on Cloud System and Big Data Engineering (Confluence), Noida, India, 14–15 January 2016; pp. 119–124.

- Chen, W.; Yaguchi, Y.; Naruse, K.; Watanobe, Y.; Nakamura, K. QoS-aware Robotic Streaming Workflow Allocation in Cloud Robotics Systems. IEEE Trans. Serv. Comput. 2018.

- Bouziane, R.; Terrissa, L.S.; Ayad, S.; Brethe, J.F.; Kazar, O. A web services based solution for the NAO robot in cloud robotics environment. In Proceedings of the 2017 4th International Conference on Control, Decision and Information Technologies (CoDIT), Barcelona, Spain, 5–7 April 2017; pp. 0809–0814.

- Du, Z.; He, L.; Chen, Y.; Xiao, Y.; Gao, P.; Wang, T. Robot Cloud: Bridging the power of robotics and cloud computing. Future Gener. Comput. Syst. 2017, 74, 337–348.

- Hajjaj, S.S.H.; Sahari, K.S.M. Establishing remote networks for ROS applications via Port Forwarding: A detailed tutorial. Int. J. Adv. Robot. Syst. 2017, 14.

- Hu, B.; Wang, H.; Zhang, P.; Ding, B.; Che, H. Cloudroid: A Cloud Framework for Transparent and QoS-aware Robotic Computation Outsourcing. In Proceedings of the 2017 IEEE 10th International Conference on Cloud Computing (CLOUD), Honolulu, HI, USA, 25–30 June 2017; pp. 114–121.

- Liu, H.; Liu, S.; Zheng, K. A Reinforcement Learning-Based Resource Allocation Scheme for Cloud Robotics. IEEE Access 2018, 6, 17215–17222.

- Luo, J.; Zhang, L.; Zhang, H.Y. Design of a cloud robotics middleware based on web service technology. In Proceedings of the 2017 18th International Conference on Advanced Robotics (ICAR), Hong Kong, China, 10–12 July 2017; pp. 487–492.

- Merle, P.; Gourdin, C.; Mitton, N. Mobile cloud robotics as a service with OCCIware. In Proceedings of the 2017 IEEE International Congress on Internet of Things (ICIOT), Honolulu, HI, USA, 25–30 June 2017; pp. 50–57.

- Mohanarajah, G.; Hunziker, D.; D’Andrea, R.; Waibel, M. Rapyuta: A cloud robotics platform. IEEE Trans. Autom. Sci. Eng. 2015, 12, 481–493.

- Riazuelo, L.; Civera, J.; Montiel, J. C2tam: A cloud framework for cooperative tracking and mapping. Robot. Auton. Syst. 2014, 62, 401–413.

- Nandhini, C.; Doriya, R. Towards secured cloud-based robotic services. In Proceedings of the 2017 International Conference on IEEE Signal Processing and Communication (ICSPC), Coimbatore, India, 28–29 July 2017; pp. 165–170.

- Rahman, A.; Jin, J.; Cricenti, A.; Rahman, A.; Panda, M. Motion and Connectivity Aware Offloading in Cloud Robotics via Genetic Algorithm. In Proceedings of the IEEE GLOBECOM 2017-2017 IEEE Global Communications Conference, Singapore, 4–8 December 2017; pp. 1–6.

- Wang, L.; Liu, M.; Meng, M.Q.H. A hierarchical auction-based mechanism for real-time resource allocation in cloud robotic systems. IEEE Trans. Cybern. 2017, 47, 473–484.

- Beksi, W.J.; Spruth, J.; Papanikolopoulos, N. Core: A cloud-based object recognition engine for robotics. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 4512–4517.

- Yin, L.; Zhou, F.; Wang, Y.; Yuan, X.; Zhao, Y.; Chen, Z. Design of a Cloud Robotics Visual Platform. In Proceedings of the 2016 Sixth International Conference on IEEE Instrumentation & Measurement, Computer, Communication and Control (IMCCC), Harbin, China, 21–23 July 2016; pp. 1039–1043.

- Liu, B.; Chen, Y.; Blasch, E.; Pham, K.; Shen, D.; Chen, G. A holistic cloud-enabled robotics system for real-time video tracking application. In Future Information Technology; Springer: Berlin/Heidelberg, Germany, 2014; pp. 455–468.

- Niemueller, T.; Schiffer, S.; Lakemeyer, G.; Rezapour-Lakani, S. Life-long learning perception using cloud database technology. In Proceedings of the IROS Workshop on Cloud Robotics, Tokyo, Japan, 3–7 November 2013.

- Tian, S.; Lee, S.G. An implementation of cloud robotic platform for real time face recognition. In Proceedings of the 2015 IEEE International Conference on IEEE Information and Automation, Lijiang, China, 8–10 August 2015; pp. 1509–1514.

- Wang, L.; Liu, M.; Meng, M.Q.H. Real-time multisensor data retrieval for cloud robotic systems. IEEE Trans. Autom. Sci. Eng. 2015, 12, 507–518.

- Ansari, F.Q.; Pal, J.K.; Shukla, J.; Nandi, G.C.; Chakraborty, P. A cloud based robot localization technique. In International Conference on Contemporary Computing; Springer: Berlin/Heidelberg, Germany, 2012; pp. 347–357.

- Cieslewski, T.; Lynen, S.; Dymczyk, M.; Magnenat, S.; Siegwart, R. Map api-scalable decentralized map building for robots. In Proceedings of the 2015 IEEE International Conference on IEEE Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 6241–6247.

- Hammad, A.; Ali, S.S.; Eldien, A.S.T. A novel implementation for FastSLAM 2.0 algorithm based on cloud robotics. In Proceedings of the 2017 13th International IEEE Computer Engineering Conference (ICENCO), Cairo, Egypt, 27–28 December 2017; pp. 184–189.

- Lam, M.L.; Lam, K.Y. Path planning as a service PPaaS: Cloud-based robotic path planning. In Proceedings of the 2014 IEEE International Conference on IEEE Robotics and Biomimetics (ROBIO), Bali, Indonesia, 5–10 December 2014; pp. 1839–1844.

- Mohanarajah, G.; Usenko, V.; Singh, M.; D’Andrea, R.; Waibel, M. Cloud-based collaborative 3D mapping in real-time with low-cost robots. IEEE Trans. Autom. Sci. Eng. 2015, 12, 423–431.

- Riazuelo, L.; Tenorth, M.; Marco, D.; Salas, M.; Mosenlechner, L.; Kunze, L.; Beetz, M.; Tardos, J.; Montano, L.; Montiel, J. Roboearth web-enabled and knowledge-based active perception. In Proceedings of the IROS Workshop on AI-Based Robotics, Tokyo, Japan, 3–7 November 2013.

- Riazuelo, L.; Tenorth, M.; Di Marco, D.; Salas, M.; Gálvez-López, D.; Mösenlechner, L.; Kunze, L.; Beetz, M.; Tardós, J.D.; Montano, L.; et al. RoboEarth semantic mapping: A cloud enabled knowledge-based approach. IEEE Trans. Autom. Sci. Eng. 2015, 12, 432–443.

- Salmeron-Garcia, J.; Inigo-Blasco, P.; Diaz-del Rio, F.; Cagigas-Muniz, D. Mobile robot motion planning based on Cloud Computing stereo vision processing. In Proceedings of the 41st International Symposium on Robotics, ISR/Robotik 2014, Munich, Germany, 2–3 June 2014; pp. 1–6.

- Vick, A.; Vonásek, V.; Pěnička, R.; Krüger, J. Robot control as a service—Towards cloud-based motion planning and control for industrial robots. In Proceedings of the 2015 10th International Workshop on IEEE Robot Motion and Control (RoMoCo), Poznan, Poland, 6–8 July 2015; pp. 33–39.

- Berenson, D.; Abbeel, P.; Goldberg, K. A robot path planning framework that learns from experience. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation (ICRA), Saint Paul, MN, USA, 14–18 May 2012; pp. 3671–3678.

- Bozcuoğlu, A.K.; Kazhoyan, G.; Furuta, Y.; Stelter, S.; Beetz, M.; Okada, K.; Inaba, M. The Exchange of Knowledge Using Cloud Robotics. IEEE Robot. Autom. Lett. 2018, 3, 1072–1079.

- Kehoe, B.; Berenson, D.; Goldberg, K. Toward cloud-based grasping with uncertainty in shape: Estimating lower bounds on achieving force closure with zero-slip push grasps. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation (ICRA), Saint Paul, MN, USA, 14–18 May 2012; pp. 576–583.

- Kehoe, B.; Berenson, D.; Goldberg, K. Estimating part tolerance bounds based on adaptive cloud-based grasp planning with slip. In Proceedings of the 2012 IEEE International Conference on Automation Science and Engineering (CASE), Seoul, Korea, 20–24 August 2012; pp. 1106–1113.

- Kehoe, B.; Matsukawa, A.; Candido, S.; Kuffner, J.; Goldberg, K. Cloud-based robot grasping with the google object recognition engine. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation (ICRA), Karlsruhe, Germany, 6–10 May 2013; pp. 4263–4270.

- Kehoe, B.; Warrier, D.; Patil, S.; Goldberg, K. Cloud-based grasp analysis and planning for toleranced parts using parallelized Monte Carlo sampling. IEEE Trans. Autom. Sci. Eng. 2015, 12, 455–470.

- Levine, S.; Pastor, P.; Krizhevsky, A.; Quillen, D. Learning hand-eye coordination for robotic grasping with large-scale data collection. In International Symposium on Experimental Robotics; Springer: Cham, Switzerland, 2016; pp. 173–184.

- Mahler, J.; Pokorny, F.T.; Hou, B.; Roderick, M.; Laskey, M.; Aubry, M.; Kohlhoff, K.; Kröger, T.; Kuffner, J.; Goldberg, K. Dex-net 1.0: A cloud-based network of 3d objects for robust grasp planning using a multi-armed bandit model with correlated rewards. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1957–1964.

- Mahler, J.; Liang, J.; Niyaz, S.; Laskey, M.; Doan, R.; Liu, X.; Ojea, J.A.; Goldberg, K. Dex-net 2.0: Deep learning to plan robust grasps with synthetic point clouds and analytic grasp metrics. arXiv 2017, arXiv:1703.09312.

- Mahler, J.; Hou, B.; Niyaz, S.; Pokorny, F.T.; Chandra, R.; Goldberg, K. Privacy-preserving grasp planning in the cloud. In Proceedings of the 2016 IEEE International Conference on Automation Science and Engineering (CASE), Fort Worth, TX, USA, 21–25 August 2016; pp. 468–475.

- Wensing, P.M.; Slotine, J.J.E. Cooperative Adaptive Control for Cloud-Based Robotics. arXiv 2017, arXiv:1709.07112.

- Cardarelli, E.; Sabattini, L.; Secchi, C.; Fantuzzi, C. Cloud robotics paradigm for enhanced navigation of autonomous vehicles in real world industrial applications. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 4518–4523.

- Rahimi, R.; Shao, C.; Veeraraghavan, M.; Fumagalli, A.; Nicho, J.; Meyer, J.; Edwards, S.; Flannigan, C.; Evans, P. An industrial robotics application with cloud computing and high-speed networking. In Proceedings of the IEEE International Conference on Robotic Computing (IRC), Taichung, Taiwan, 10–12 April 2017; pp. 44–51.

- Singhal, A.; Pallav, P.; Kejriwal, N.; Choudhury, S.; Kumar, S.; Sinha, R. Managing a fleet of autonomous mobile robots (AMR) using cloud robotics platform. In Proceedings of the 2017 European Conference on Mobile Robots (ECMR), Paris, France, 6–8 September 2017; pp. 1–6.

- Wan, J.; Tang, S.; Hua, Q.; Li, D.; Liu, C.; Lloret, J. Context-aware cloud robotics for material handling in cognitive industrial internet of things. IEEE Internet Things J. 2017, 5.

- Wang, X.V.; Wang, L.; Mohammed, A.; Givehchi, M. Ubiquitous manufacturing system based on cloud: A robotics application. Robot. Comput. Integr. Manuf. 2017, 45, 116–125.

- Yan, H.; Hua, Q.; Wang, Y.; Wei, W.; Imran, M. Cloud robotics in Smart Manufacturing Environments: Challenges and countermeasures. Comput. Electr. Eng. 2017, 63, 56–65.

- Beigi, N.K.; Partov, B.; Farokhi, S. Real-time cloud robotics in practical smart city applications. In Proceedings of the 2017 IEEE 28th Annual International Symposium on Personal, Indoor, and Mobile Radio Communications (PIMRC), Montreal, QC, Canada, 8–13 October 2017; pp. 1–5.

- Bhargava, B.; Angin, P.; Duan, L. A mobile-cloud pedestrian crossing guide for the blind. In Proceedings of the International Conference on Advances in Computing & Communication, Bali Island, Indonesia, 1–3 April 2011.

- Bonaccorsi, M.; Fiorini, L.; Cavallo, F.; Saffiotti, A.; Dario, P. A cloud robotics solution to improve social assistive robots for active and healthy aging. Int. J. Soc. Robot. 2016, 8, 393–408.

- Botta, A.; Cacace, J.; Lippiello, V.; Siciliano, B.; Ventre, G. Networking for Cloud Robotics: A case study based on the Sherpa Project. In Proceedings of the 2017 International Conference on Cloud and Robotics (ICCR), Saint Quentin, France, 22–23 November 2017.

- Jangid, N.; Sharma, B. Cloud Computing and Robotics for Disaster Management. In Proceedings of the 2016 7th International Conference on Intelligent Systems, Modelling and Simulation (ISMS), Bangkok, Thailand, 25–27 January 2016; pp. 20–24.

- Marconi, L.; Melchiorri, C.; Beetz, M.; Pangercic, D.; Siegwart, R.; Leutenegger, S.; Carloni, R.; Stramigioli, S.; Bruyninckx, H.; Doherty, P.; et al. The SHERPA project: Smart collaboration between humans and ground-aerial robots for improving rescuing activities in alpine environments. In Proceedings of the 2012 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), College Station, TX, USA, 5–8 November 2012; pp. 1–4.

- Ng, M.K.; Primatesta, S.; Giuliano, L.; Lupetti, M.L.; Russo, L.O.; Farulla, G.A.; Indaco, M.; Rosa, S.; Germak, C.; Bona, B. A cloud robotics system for telepresence enabling mobility impaired people to enjoy the whole museum experience. In Proceedings of the 2015 10th International Conference on Design & Technology of Integrated Systems in Nanoscale Era (DTIS), Naples, Italy, 21–23 April 2015; pp. 1–6.

- Ramírez De La Pinta, J.; Maestre Torreblanca, J.M.; Jurado, I.; Reyes De Cozar, S. Off the shelf cloud robotics for the smart home: Empowering a wireless robot through cloud computing. Sensors 2017, 17, 525.

- Salmerón-García, J.J.; van den Dries, S.; Díaz-del Río, F.; Morgado-Estevez, A.; Sevillano-Ramos, J.L.; van de Molengraft, M. Towards a cloud-based automated surveillance system using wireless technologies. Multimedia Syst. 2017, 1–15.

- Yokoo, T.; Yamada, M.; Sakaino, S.; Abe, S.; Tsuji, T. Development of a physical therapy robot for rehabilitation databases. In Proceedings of the 2012 12th IEEE International Workshop on Advanced Motion Control (AMC), Sarajevo, Bosnia and Herzegovina, 25–27 March 2012; pp. 1–6.

- Mavridis, N.; Bourlai, T.; Ognibene, D. The human–robot cloud: Situated collective intelligence on demand. In Proceedings of the 2012 IEEE International Conference on Cyber Technology in Automation, Control, and Intelligent Systems (CYBER), Bangkok, Thailand, 27–31 May 2012; pp. 360–365.

- Sugiura, K.; Zettsu, K. Rospeex: A cloud robotics platform for human–robot spoken dialogues. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 6155–6160.

- Tan, J.T.C.; Inamura, T. Sigverse—A cloud computing architecture simulation platform for social human–robot interaction. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation (ICRA), Saint Paul, MN, USA, 14–18 May 2012; pp. 1310–1315.

- Cloud, A.E.C. Amazon Web Services. Available online: https://aws.amazon.com/ (accessed on 29 August 2018).

- Cloud, C. Amazon Elastic Compute Cloud. Available online: https://aws.amazon.com/ (accessed on 29 August 2018).

- Krishnan, S.; Gonzalez, J.L.U. Google compute engine. In Building Your Next Big Thing with Google Cloud Platform; Springer: Berlin, Germany, 2015; pp. 53–81.

- Copeland, M.; Soh, J.; Puca, A.; Manning, M.; Gollob, D. Microsoft azure and cloud computing. In Microsoft Azure; Springer: Berlin, Germany, 2015; pp. 3–26.

- Chen, Y.; Du, Z.; García-Acosta, M. Robot as a service in cloud computing. In Proceedings of the 2010 Fifth IEEE International Symposium on Service Oriented System Engineering (SOSE), Nanjing, China, 4–5 June 2010; pp. 151–158.

- Chen, Y.; Hu, H. Internet of intelligent things and robot as a service. Simul. Model. Pract. Theory 2013, 34, 159–171.

- Waibel, M.; Beetz, M.; Civera, J.; d’Andrea, R.; Elfring, J.; Galvez-Lopez, D.; Häussermann, K.; Janssen, R.; Montiel, J.; Perzylo, A.; et al. Roboearth. IEEE Robot. Autom. Mag. 2011, 18, 69–82.

- Crick, C.; Jay, G.; Osentoski, S.; Jenkins, O.C. ROS and rosbridge: Roboticists out of the loop. In Proceedings of the Seventh Annual ACM/IEEE International Conference on Human–Robot Interaction, Boston, MA, USA, 5–8 March 2012; ACM: New York, NY, USA, 2012; pp. 493–494.

- Crick, C.; Jay, G.; Osentoski, S.; Pitzer, B.; Jenkins, O.C. Rosbridge: Ros for non-ros users. In Robotics Research; Springer: Cham, Switzerland, 2017; pp. 493–504.

- Osentoski, S.; Jay, G.; Crick, C.; Pitzer, B.; DuHadway, C.; Jenkins, O.C. Robots as web services: Reproducible experimentation and application development using rosjs. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011; pp. 6078–6083.

- Lee, J. Web Applications for Robots Using Rosbridge; Brown University: Providence, RI, USA, 2012.

- Alexander, B.; Hsiao, K.; Jenkins, C.; Suay, B.; Toris, R. Robot web tools [ros topics]. IEEE Robot. Autom. Mag. 2012, 19, 20–23.

- Toris, R.; Kammerl, J.; Lu, D.V.; Lee, J.; Jenkins, O.C.; Osentoski, S.; Wills, M.; Chernova, S. Robot web tools: Efficient messaging for cloud robotics. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 4530–4537.

- Sato, M.; Kamei, K.; Nishio, S.; Hagita, N. The ubiquitous network robot platform: Common platform for continuous daily robotic services. In Proceedings of the 2011 IEEE/SICE International Symposium on System Integration (SII), Kyoto, Japan, 20–22 December 2011; pp. 318–323.

- Tenorth, M.; Kamei, K.; Satake, S.; Miyashita, T.; Hagita, N. Building knowledge-enabled cloud robotics applications using the ubiquitous network robot platform. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Tokyo, Japan, 3–7 November 2013; pp. 5716–5721.

- Gherardi, L.; Hunziker, D.; Mohanarajah, G. A software product line approach for configuring cloud robotics applications. In Proceedings of the 2014 IEEE 7th International Conference on Cloud Computing (CLOUD), Anchorage, AK, USA, 27 June–2 July 2014; pp. 745–752.

- Kato, Y.; Izui, T.; Tsuchiya, Y.; Narita, M.; Ueki, M.; Murakawa, Y.; Okabayashi, K. Rsi-cloud for integrating robot services with internet services. In Proceedings of the IEEE IECON 2011-37th Annual Conference on IEEE Industrial Electronics Society, Melbourne, Australia, 7–10 November 2011; pp. 2158–2163.

- Yuriyama, M.; Kushida, T. Sensor-cloud infrastructure-physical sensor management with virtualized sensors on cloud computing. In Proceedings of the 2010 13th International Conference on Network-Based Information Systems (NBiS), Takayama, Japan, 14–16 September 2010; pp. 1–8.

- Nakagawa, S.; Ohyama, N.; Sakaguchi, K.; Nakayama, H.; Igarashi, N.; Tsunoda, R.; Shimizu, S.; Narita, M.; Kato, Y. A distributed service framework for integrating robots with internet services. In Proceedings of the 2012 IEEE 26th International Conference on Advanced Information Networking and Applications (AINA), Fukuoka, Japan, 26–29 March 2012; pp. 31–37.

- Nakagawa, S.; Igarashi, N.; Tsuchiya, Y.; Narita, M.; Kato, Y. An implementation of a distributed service framework for cloud-based robot services. In Proceedings of the IEEE IECON 2012-38th Annual Conference on IEEE Industrial Electronics Society, Montreal, QC, Canada, 25–28 October 2012; pp. 4148–4153.

- Narita, M.; Okabe, S.; Kato, Y.; Murakawa, Y.; Okabayashi, K.; Kanda, S. Reliable cloud-based robot services. In Proceedings of the IECON 2013-39th Annual Conference of the IEEE Industrial Electronics Society, Vienna, Austria, 10–13 November 2013; pp. 8317–8322.

- Ramharuk, V. Survivable Cloud Multi-Robotics Framework for Heterogeneous Environments. Ph.D. Thesis, University of South Africa, Pretoria, South Africa, 2015.

- Koubâa, A. A service-oriented architecture for virtualizing robots in robot-as-a-service clouds. In International Conference on Architecture of Computing Systems; Springer: Berlin/Heidelberg, Germany, 2014; pp. 196–208.

- Miratabzadeh, S.A.; Gallardo, N.; Gamez, N.; Haradi, K.; Puthussery, A.R.; Rad, P.; Jamshidi, M. Cloud robotics: A software architecture: For heterogeneous large-scale autonomous robots. In Proceedings of the World Automation Congress (WAC), Rio Grande, Puerto Rico, 31 July–4 August 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–6.

- Pereira, A.B.; Bastos, G.S. ROSRemote, using ROS on cloud to access robots remotely. In Proceedings of the 2017 IEEE 18th International Conference on Advanced Robotics (ICAR), Hong Kong, China, 10–12 July 2017; pp. 284–289.

- Fette, I. The WebSocket Protocol. 2011. Available online: tools.ietf.org/html/rfc6455 (accessed on 25 August 2018).

- Rusu, R.B.; Cousins, S. 3D is here: Point Cloud Library (PCL). In Proceedings of the 2011 IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011; pp. 1–4.

- Miller, A.T.; Allen, P.K. GraspIt! A versatile simulator for robotic grasping. IEEE Robot. Autom. Mag. 2004, 11, 110–122.

- Diankov, R.; Kuffner, J. OpenRAVE: A Planning Architecture for Autonomous Robotics; Tech. Rep. CMU-RI-TR-08-34; Robotics Institute: Pittsburgh, PA, USA, 2008; Volume 79.

- Tian, N.; Matl, M.; Mahler, J.; Zhou, Y.X.; Staszak, S.; Correa, C.; Zheng, S.; Li, Q.; Zhang, R.; Goldberg, K. A cloud robot system using the Dexterity Network and Berkeley Robotics and Automation as a Service (Brass). In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 1615–1622.

- Tenorth, M.; Winkler, J.; Beßler, D.; Beetz, M. Open-EASE: A Cloud-Based Knowledge Service for Autonomous Learning. KI-Künstliche Intell. 2015, 29, 407–411.

- Gu, S.; Holly, E.; Lillicrap, T.; Levine, S. Deep reinforcement learning for robotic manipulation with asynchronous off-policy updates. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3389–3396.

- Yahya, A.; Li, A.; Kalakrishnan, M.; Chebotar, Y.; Levine, S. Collective robot reinforcement learning with distributed asynchronous guided policy search. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 79–86.

- Rahman, A.; Jin, J.; Cricenti, A.; Rahman, A.; Yuan, D. A cloud robotics framework of optimal task offloading for smart city applications. In Proceedings of the 2016 IEEE Global Communications Conference (GLOBECOM), Washington, DC, USA, 4–8 December 2016; pp. 1–7.

- Wang, L.; Liu, M.; Meng, M.Q.H. Hierarchical auction-based mechanism for real-time resource retrieval in cloud mobile robotic system. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 2164–2169.

- Wang, L.; Liu, M.; Meng, M.Q.H. A pricing mechanism for task oriented resource allocation in cloud robotics. In Robots and Sensor Clouds; Springer: Cham, Switzerland, 2016; pp. 3–31.

- Kong, Y.; Zhang, M.; Ye, D. A belief propagation-based method for task allocation in open and dynamic cloud environments. Knowl.-Based Syst. 2017, 115, 123–132.

- Li, Y.; Wang, H.; Ding, B.; Shi, P.; Liu, X. Toward QoS-aware cloud robotic applications: A hybrid architecture and its implementation. In Proceedings of the 2016 Intl IEEE Conferences Ubiquitous Intelligence & Computing, Advanced and Trusted Computing, Scalable Computing and Communications, Cloud and Big Data Computing, Internet of People, and Smart World Congress (UIC/ATC/ScalCom/CBDCom/IoP/SmartWorld), Toulouse, France, 18–21 July 2016; pp. 33–40.

- Tenorth, M.; Perzylo, A.C.; Lafrenz, R.; Beetz, M. Representation and exchange of knowledge about actions, objects, and environments in the RoboEarth framework. IEEE Trans. Autom. Sci. Eng. 2013, 10, 643–651.

- Tenorth, M.; Beetz, M. Representations for robot knowledge in the KnowRob framework. Artif. Intell. 2017, 247, 151–169.

- Morante, S.; Victores, J.G.; Balaguer, C. Cryptobotics: Why robots need cyber safety. Front. Robot. AI 2015, 2, 23.

| Category | Works | Contribution | Metrics and Evaluation Techniques |
|---|---|---|---|
| Architecture | [6,7,8,9,10,11,12,13,14,15,16,17,18,19,20] | Development of software architectures that let heterogeneous robots share sensor data with processing nodes running computationally intense algorithms. | Execution time and round-trip communication time for SLAM and robotic manipulation tasks. |
| Applications | [21,22,23,24,25,26] | (i) Computer vision tasks such as object and face recognition, mapping and video tracking. | Round-trip communication time for computer vision systems; performance analysis of biometric systems using the False Rejection Rate (FRR) and False Acceptance Rate (FAR). |
| | [27,28,29,30,31,32,33,34,35] | (ii) Navigation problems in robotics such as SLAM, motion planning and path planning. | Implemented on distributed robots; the constructed maps and paths were qualitatively examined for localization accuracy, bandwidth usage, processing speed, and map and path storage. |
| | [36,37,38,39,40,41,42,43,44,45,46] | (iii) Manipulation tasks such as grasp planning combined with deep learning on publicly available 3D object models, e.g., Dex-Net 1.0 and Dex-Net 2.0. | Empirically evaluated for grasp reliability in comparison to naive, locally computed strategies. |
| | [47,48,49,50,51,52] | (iv) Automation of industrial processes to increase manufacturing efficiency. | Navigation performance of the distributed robots. |
| | [11,53,54,55,56,57,58,59,60,61,62] | (v) Agriculture, healthcare and disaster management. | Performance gains measured with standard domain metrics such as round-trip time, data loss percentage, mean localization error and root mean square error. |
| | [63,64,65] | (vi) Incorporation of human knowledge to augment vision and speech tasks. | Approximate time for cloud communication and task completion; system feasibility analysis. |
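Several of the metrics in the table above have standard definitions that are easy to state in code. The following is a minimal sketch, not taken from any of the surveyed works, assuming a score-based biometric matcher that accepts an attempt when its score is at or above a threshold, and 2D position estimates for the localization error; the function names `far_frr` and `rmse` and all sample data are hypothetical.

```python
from __future__ import annotations

import math
from typing import Sequence


def far_frr(genuine: Sequence[float], impostor: Sequence[float],
            threshold: float) -> tuple[float, float]:
    """Return (FAR, FRR) for a matcher that accepts when score >= threshold."""
    if not genuine or not impostor:
        raise ValueError("need at least one genuine and one impostor score")
    # FAR: fraction of impostor attempts that are wrongly accepted.
    false_accepts = sum(1 for s in impostor if s >= threshold)
    # FRR: fraction of genuine attempts that are wrongly rejected.
    false_rejects = sum(1 for s in genuine if s < threshold)
    return false_accepts / len(impostor), false_rejects / len(genuine)


def rmse(estimated: Sequence[tuple[float, float]],
         truth: Sequence[tuple[float, float]]) -> float:
    """Root mean square of the 2D Euclidean position errors."""
    if len(estimated) != len(truth) or not truth:
        raise ValueError("need equal-length, non-empty trajectories")
    # Squared Euclidean error for each pose pair.
    sq_errors = [(ex - tx) ** 2 + (ey - ty) ** 2
                 for (ex, ey), (tx, ty) in zip(estimated, truth)]
    return math.sqrt(sum(sq_errors) / len(sq_errors))
```

For example, `far_frr([0.9, 0.8, 0.4], [0.1, 0.6, 0.3, 0.2], 0.5)` gives a FAR of 0.25 (one of four impostors accepted) and an FRR of 1/3 (one of three genuine users rejected). Lowering the threshold trades a lower FRR for a higher FAR, which is why biometric evaluations usually report both rates together.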

| Papers | Application Area | Experimental Setup | Results |
|---|---|---|---|
| [21] | Perception & computer vision applications | This paper presents the measured round-trip times when point cloud data is transmitted through CORE using the UDP, TCP and WebSocket protocols. | UDP provided the fastest round-trip times, although it does not guarantee that the server receives all point cloud messages in order and without duplication. |
| [23] | Perception & computer vision | To evaluate the performance of offloading image processing in video tracking tasks to the cloud, a cloud-enabled distributed robotics prototype consisting of a remote robot network and a cloud testbed was designed. Performance differences were evaluated between a local machine and three cloud instances: small, medium and large. | The medium and large instances showed higher performance than the others owing to their higher number of CPUs. |
| [28] | Robot localization and map building | To demonstrate and validate the proposed Map API system, a visual-inertial mapping application was developed. The runtime of the registration operation was evaluated on three emulated ground robots versus a centralized instance with serialized map processing. | Even with less computational power, the decentralized entities finished registering the data within 10 minutes, while the central entity took 25 minutes to finish all the processing. |
| [31] | Collaborative 3D mapping | The experimental setup consisted of two robots and the cloud-based architecture running in a data center. The analysis involved a qualitative evaluation of building and merging maps created in different environments, in addition to a quantitative evaluation of network usage, localization accuracy and global map optimization times. | The network usage experiments showed that bandwidth usage is proportional to the velocity of the robot. Empirical evaluation of map optimization revealed that cloud-based optimization significantly reduced the error, especially in the presence of loop closures. |
| [34] | Mobile robot motion planning | Extensive performance testing was done using different stereo streams, cloud states and connection technologies. Experiments evaluated scalability, communication technology and time delay. | A significant speedup was obtained in the scalability tests, which sustained an average frequency of 4 frame pairs per second. |
| [40] | Robot manipulation | Two sets of experiments were performed. The first set included six objects and end-to-end testing of object recognition, pose estimation and grasping. The second set evaluated the confidence measure for object recognition using a set of 100 objects, and for pose estimation using the first set of objects. | For the first set of experiments, higher recall was achieved for image recognition through multiple rounds of hand-selected training images. Pose estimation indicated a maximum failure rate of and grasping experiments illustrated a maximum failure rate of . The second set of experiments demonstrated a recall rate of for image recognition and a recall rate of for pose estimation. |
| [42] | Robotic grasping | The proposed approach was compared to an open-loop method that observes the scene prior to the grasp, a random baseline method and a hand-engineered grasping system. Two experimental protocols were used to evaluate the methods: grasping with and without replacement. | The success rate of the proposed continuous servoing method exceeded the baseline and prior methods in all cases. In the experiments without replacement, it removed almost all objects successfully after 30 grasps. In the experiments with replacement, the grasp success rate achieved ranged from at the beginning to at the end. |
| [44] | Robot grasp planning | Classification performance was evaluated on both real and synthetic data. Physical evaluations were performed on an ABB YuMi robot with custom silicone gripper tips. The performance of the grasp planning methods was evaluated on known and novel objects, and generalization ability was also evaluated. | The proposed approach planned grasps 3 times faster than the baseline approach for known objects and achieved a success rate of high and a precision rate of . For novel objects, the success rate achieved was a high of and the precision rate was . |
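The trade-off reported for [21] (UDP is fastest but gives no guarantee of complete, ordered, duplicate-free delivery, whereas TCP provides those guarantees at the cost of acknowledgements and retransmissions) can be quantified at the receiver by stamping each point cloud message with a sequence number. The sketch below is a hypothetical illustration, not the CORE implementation evaluated in [21]; it assumes the sender numbers its datagrams 0 to `sent - 1` and that the receiver logs the sequence numbers in arrival order. The names `DeliveryStats` and `analyze_udp_sequence` are illustrative only.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Iterable


@dataclass
class DeliveryStats:
    received: int      # datagrams that arrived, including duplicates
    lost: int          # sequence numbers in 0..sent-1 that never arrived
    duplicates: int    # repeat arrivals of an already-seen sequence number
    out_of_order: int  # arrivals after a datagram with a larger number


def analyze_udp_sequence(seqs: Iterable[int], sent: int) -> DeliveryStats:
    """Audit the arrival order of datagrams numbered 0..sent-1."""
    seen: set[int] = set()
    received = duplicates = out_of_order = 0
    highest = -1  # largest sequence number seen so far
    for s in seqs:
        received += 1
        if s in seen:
            duplicates += 1
            continue
        seen.add(s)
        if s < highest:
            # This datagram was overtaken by a later-numbered one.
            out_of_order += 1
        else:
            highest = s
    # Count only sequence numbers the sender actually assigned.
    lost = sum(1 for i in range(sent) if i not in seen)
    return DeliveryStats(received, lost, duplicates, out_of_order)
```

For an arrival log of `[0, 1, 3, 2, 3, 5]` with six datagrams sent, the audit reports one lost message (4), one duplicate (the second 3) and one out-of-order arrival (2). Over TCP all three counts would be zero by construction, which is the guarantee that costs TCP its extra round-trip latency.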

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Saha, O.; Dasgupta, P. A Comprehensive Survey of Recent Trends in Cloud Robotics Architectures and Applications. Robotics 2018, 7, 47. https://doi.org/10.3390/robotics7030047

Saha O, Dasgupta P. A Comprehensive Survey of Recent Trends in Cloud Robotics Architectures and Applications. Robotics. 2018; 7(3):47. https://doi.org/10.3390/robotics7030047

Chicago/Turabian Style: Saha, Olimpiya, and Prithviraj Dasgupta. 2018. "A Comprehensive Survey of Recent Trends in Cloud Robotics Architectures and Applications" Robotics 7, no. 3: 47. https://doi.org/10.3390/robotics7030047

APA Style: Saha, O., & Dasgupta, P. (2018). A Comprehensive Survey of Recent Trends in Cloud Robotics Architectures and Applications. Robotics, 7(3), 47. https://doi.org/10.3390/robotics7030047