Deep Reinforcement Learning for Soft, Flexible Robots: Brief Review with Impending Challenges

Abstract

1. Introduction

1.1. Soft Robotics: A New Surge in Robotics

1.2. Deep Learning for Controls in Robotics

- Data distribution: In deep learning for perception, the observations are assumed to be independent and identically distributed (i.i.d.). In control, by contrast, they are accumulated online because of the sequential nature of the task, and each observation is correlated with the previous ones [16].

- Supervision Signal: Perception receives complete supervision in the form of ground-truth labels, whereas control typically has only sparse rewards available.

- Data Collection: In perception, datasets can be collected offline; in control, data must be collected online. This limits the data we can collect, because the agent has to execute actions in the real world, which is not a trivial task.

1.3. Deep Learning in SoRo

1.4. Forthcoming Challenges

2. Brief Overview of Reinforcement Learning

2.1. Introduction

2.2. Reinforcement Learning Algorithms

- Value-based Methods: These methods estimate the value (expected return) of being in a given state, or of taking a given action in that state, and derive the control policy from these estimates. The estimates are updated recursively using the Bellman equations (the Bellman expectation equation and the Bellman optimality equation). Value-based RL algorithms include State-Action-Reward-State-Action (SARSA) and Q-Learning, which differ in their targets, that is, the values toward which the Q-values are moved by a step size at each time step. SARSA is an on-policy method: its target uses the action chosen by the policy currently being followed. Q-Learning is an off-policy method: its target uses the greedy action, so the estimates move toward the optimal policy regardless of the behavior policy. These algorithms can solve a wide range of complex problems, but computational constraints limit their use. Detailed explanations can be found in works such as Dayan [27], Kulkarni et al. [28], Barreto et al. [29], and Zhang et al. [30].

- Policy-based Methods: In contrast to value-based methods, policy-based methods update the policy directly, without relying on value estimates. Some key differences between value-based and policy-based methods are listed in Table 1. Policy-based methods tend to have better convergence properties, handle continuous high-dimensional action spaces more effectively, and can learn stochastic policies. They estimate the policy parameters in two broad ways: gradient-based and gradient-free methods [31,32]. We focus on gradient-based methods, which perform gradient ascent on the expected return $J(\theta)$ using the gradient:

$$\nabla_\theta J(\theta) = \mathbb{E}_{\pi_\theta}\left[\nabla_\theta \log \pi_\theta(a \mid s)\, f^{\pi_\theta}(\cdot)\right] \tag{1}$$

wherein the score function [33] for the policy $\pi_\theta$ is denoted $f^{\pi_\theta}(\cdot)$. Using Equation (1), we can assess how the performance of the model on the task at hand changes with $\theta$. A classic algorithm of this kind is REINFORCE [34], which simply uses the sample return $R_t$ as the score function:

$$f^{\pi_\theta}(\cdot) = R_t \tag{2}$$

A baseline term $b(s_t)$ is subtracted from the sample return to reduce the variance of the estimate, which gives:

$$f^{\pi_\theta}(\cdot) = R_t - b(s_t) \tag{3}$$

When a Q-value function is used, the policy gradient can take either the stochastic form (Equation (4)) or the deterministic form (Equation (5)) [35], where $\mu_\theta$ is a deterministic policy and $\rho^{\pi}$ is the state distribution under policy $\pi$:

$$\nabla_\theta J(\theta) = \mathbb{E}_{s \sim \rho^{\pi},\, a \sim \pi_\theta}\left[\nabla_\theta \log \pi_\theta(a \mid s)\, Q^{\pi_\theta}(s, a)\right] \tag{4}$$

$$\nabla_\theta J(\theta) = \mathbb{E}_{s \sim \rho^{\mu}}\left[\nabla_\theta \mu_\theta(s)\, \nabla_a Q^{\mu_\theta}(s, a)\big|_{a = \mu_\theta(s)}\right] \tag{5}$$

Policy-based methods are reported to outperform value-based methods in computation time and memory. Still, they are hard to extend to tasks involving interaction with continuously evolving environments that require the agent to be adaptive. It has also been noted that following a policy gradient directly on physical hardware is often impractical because of safety issues and hardware restrictions. For soft robots, which can move in many directions and at many angles and therefore have a very large action space, the deterministic policy gradient of Equation (5) is attractive: its expectation is taken over the state space only, whereas the stochastic policy gradient of Equation (4) integrates over both states and actions.

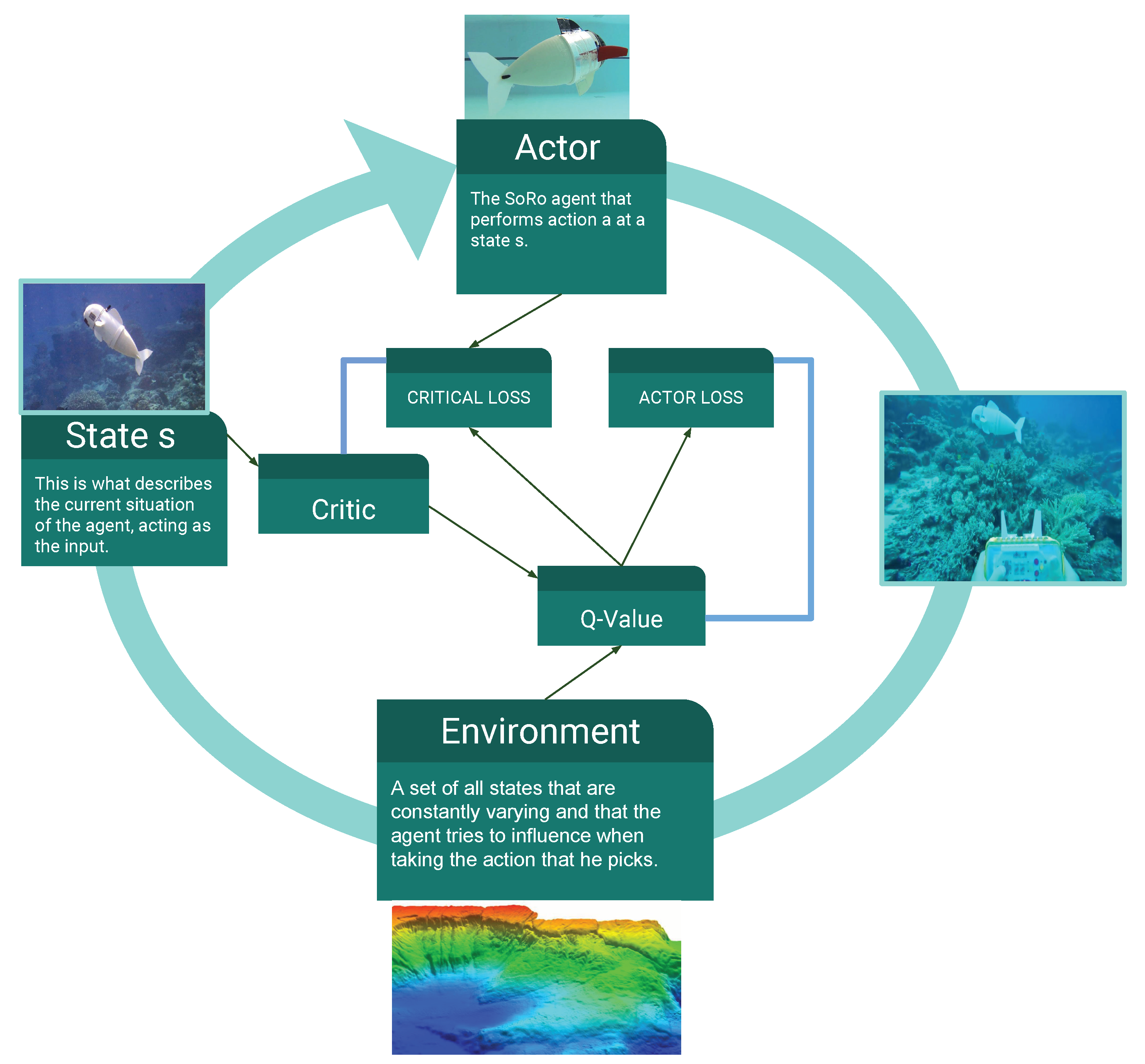
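As a minimal sketch of the REINFORCE update with a baseline described above, the snippet below trains a softmax policy on a toy two-armed bandit. The bandit, its Gaussian rewards, the running-mean baseline, and the names `reinforce_bandit` and `arm_means` are illustrative assumptions, not part of the reviewed works; each episode is a single step, so the sample return is just the observed reward.

```python
import math
import random


def softmax(prefs):
    """Turn action preferences into probabilities (shifted by the max for stability)."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(arm_means, steps=2000, lr=0.1, seed=0):
    """REINFORCE with a running-mean baseline on a hypothetical Gaussian bandit.

    Each episode is a single step, so the sample return R_t is the reward.
    Update: theta += lr * grad log pi(a) * (R_t - b).
    """
    rng = random.Random(seed)
    theta = [0.0] * len(arm_means)   # softmax policy parameters
    baseline = 0.0                   # b: running mean of all returns seen so far
    for t in range(1, steps + 1):
        probs = softmax(theta)
        a = rng.choices(range(len(theta)), weights=probs)[0]
        ret = rng.gauss(arm_means[a], 1.0)      # sampled return R_t
        score = ret - baseline                   # R_t - b, as in Equation (3)
        for k in range(len(theta)):
            # For a softmax policy, d log pi(a) / d theta_k = 1[k == a] - pi(k).
            grad_log = (1.0 if k == a else 0.0) - probs[k]
            theta[k] += lr * grad_log * score    # gradient ascent on J(theta)
        baseline += (ret - baseline) / t         # incremental mean, updated after the step
    return softmax(theta)


probs = reinforce_bandit([0.0, 1.0])
print([round(p, 3) for p in probs])
```

The baseline is updated only after the gradient step, so it never depends on the action just taken, which keeps the gradient estimate unbiased while reducing its variance. With these settings the policy should concentrate on the better arm (index 1).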

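- Actor Critic Method: These algorithms maintain explicit representations of both the policy (the actor) and the value estimates (the critic). Their score function is obtained by replacing the return $R_t$ in Equation (3) of policy-based methods with $Q^{\pi_\theta}(s_t, a_t)$ and the baseline $b(s_t)$ with $V^{\pi_\theta}(s_t)$, which results in the following equation:

$$f^{\pi_\theta}(\cdot) = Q^{\pi_\theta}(s_t, a_t) - V^{\pi_\theta}(s_t) \tag{6}$$

The right-hand side is the advantage function, given by:

$$A^{\pi_\theta}(s, a) = Q^{\pi_\theta}(s, a) - V^{\pi_\theta}(s) \tag{7}$$

Actor-critic methods can be described as the intersection of policy-based and value-based methods, since they combine the iterative learning schemes of both.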

- Integrating Planning and Learning: Some methods let the agent learn both from its own experience and from imaginary roll-outs [36]. Such methods have been extended by combining them with DRL methods [37,38]. They are essential for extending RL techniques to soft robotic systems, because the many degrees of freedom make interaction with the environment expensive and hence limit the training data available.
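To make the difference between the SARSA and Q-Learning targets concrete, along with the one-step advantage estimate used by actor-critic methods, here is a minimal tabular sketch. The transition values and function names are hypothetical; in practice the Q-values and state values would come from a table or a network.

```python
def sarsa_target(r, q_next, a_next, gamma):
    """On-policy target: bootstrap from the action the current policy actually takes in s'."""
    return r + gamma * q_next[a_next]


def q_learning_target(r, q_next, gamma):
    """Off-policy target: bootstrap from the greedy (highest-valued) action in s'."""
    return r + gamma * max(q_next)


def td_update(q_sa, target, alpha):
    """Move the current estimate Q(s, a) a step of size alpha toward the target."""
    return q_sa + alpha * (target - q_sa)


def td_advantage(r, v_s, v_next, gamma):
    """One-step estimate of A(s, a) = Q(s, a) - V(s), approximating Q(s, a) by r + gamma * V(s')."""
    return r + gamma * v_next - v_s


# Hypothetical transition: reward 1.0, Q-values of two actions in the next state.
q_next = [0.5, 2.0]
gamma, alpha, q_sa = 0.9, 0.1, 1.0

sarsa = td_update(q_sa, sarsa_target(1.0, q_next, a_next=0, gamma=gamma), alpha)
qlearn = td_update(q_sa, q_learning_target(1.0, q_next, gamma), alpha)
adv = td_advantage(1.0, v_s=1.0, v_next=1.5, gamma=gamma)
print(sarsa, qlearn, adv)
```

Here the behavior policy happened to pick the low-valued action 0 in the next state, so SARSA moves Q(s, a) less than Q-Learning, which assumes the greedy action will be taken.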

3. Deep Reinforcement Learning Algorithms Coupled with SoRo

3.1. Introduction

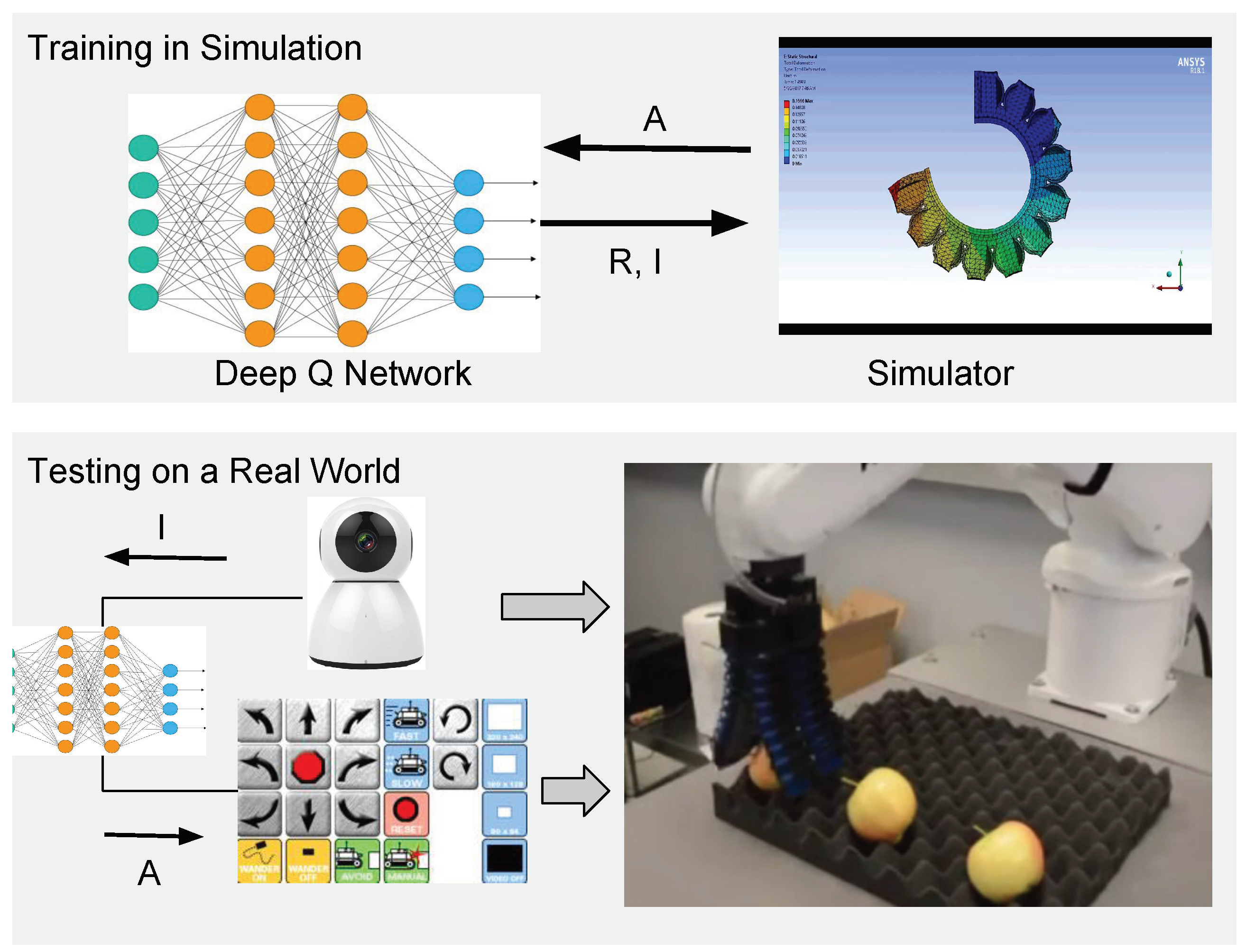

- Deep Q-Network (DQN) [45]: In this approach, the optimal Q-function is approximated with a deep neural network (generally a CNN), as in other value-based algorithms. Denoting the weights of this Q-network by $\theta^{Q}$ and those of the target network (described below) by $\theta^{Q^-}$, the error and the target are given by:

$$L(\theta^{Q}) = \mathbb{E}_{(s, a, r, s')}\left[\left(y - Q(s, a; \theta^{Q})\right)^2\right] \tag{8}$$

$$y = r + \gamma \max_{a'} Q(s', a'; \theta^{Q^-}) \tag{9}$$

The weights are updated recursively by:

$$\theta^{Q} \leftarrow \theta^{Q} - \alpha \nabla_{\theta^{Q}} L(\theta^{Q}) \tag{10}$$

that is, they move in the direction of decreasing gradient with a rate equal to the learning rate $\alpha$. DQNs can solve problems with high-dimensional state spaces but are restricted to discrete, low-dimensional action spaces. Applied to manipulation with soft robots whose structure is inspired by biological creatures, such methods let the agent interact with the environment while benefiting from the robot's additional flexibility and adaptability to changing situations. The training architecture is shown in Figure 2. Two main techniques are employed in DQN to stabilize learning:


- Target Network: The target network $Q^-$ has the same architecture as the Q-network, but during learning only the weights of the Q-network are updated; they are periodically copied into the weights of the $Q^-$ network. The target is computed from the output of the $Q^-$ network [18].


- Experience Replay: The collected data, in the form of transitions (state, action, reward, next state), are not used directly but are stored in a replay memory. During training, samples are drawn at random from this memory to form mini-batches, which breaks the correlation between consecutive observations. Learning then follows the usual steps of gradient descent to reduce the loss between the learned Q-network and the target Q-network.

- Deep Deterministic Policy Gradients (DDPG) [18]: This is a modification of DQN that incorporates actor-critic techniques to handle problems with continuous, high-dimensional action spaces. The training procedure of a DDPG agent is depicted in Figure 3. The Bellman equations for stochastic (Equation (11)) and deterministic (Equation (12)) policies are:

$$Q^{\pi}(s_t, a_t) = \mathbb{E}_{r_t, s_{t+1}}\left[r(s_t, a_t) + \gamma\, \mathbb{E}_{a_{t+1} \sim \pi}\left[Q^{\pi}(s_{t+1}, a_{t+1})\right]\right] \tag{11}$$

$$Q^{\mu}(s_t, a_t) = \mathbb{E}_{r_t, s_{t+1}}\left[r(s_t, a_t) + \gamma\, Q^{\mu}\left(s_{t+1}, \mu(s_{t+1})\right)\right] \tag{12}$$

The difference between DDPG and DQN lies in how the Q-value depends on the action: DQN outputs one value for each discrete action, whereas in DDPG the action is an input to the critic network $\theta^{Q}$. DDPG remains one of the premier algorithms in DRL applied to soft robotic systems.

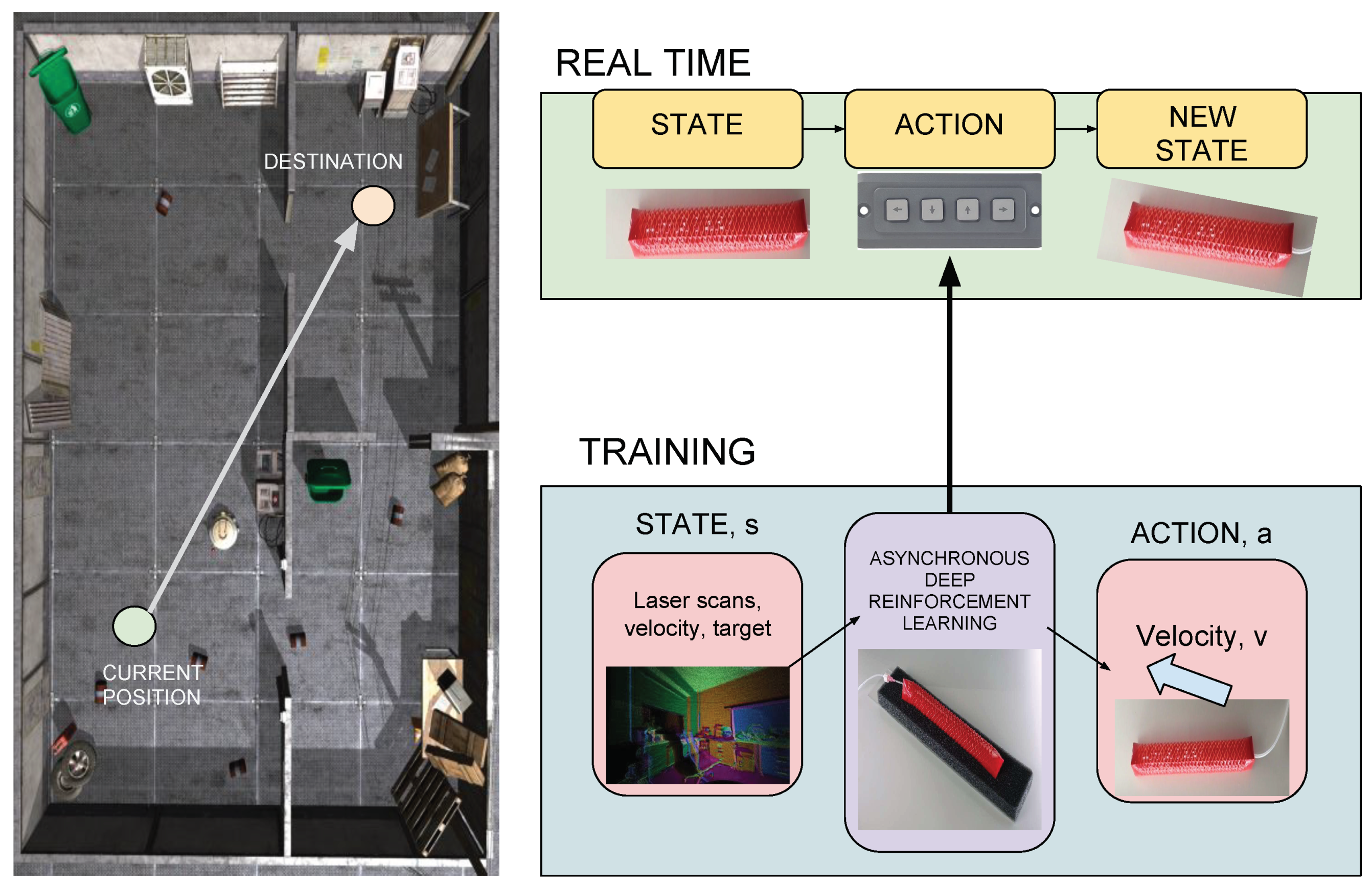
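The two DQN stabilization techniques described above, experience replay and a periodically synchronized target network, can be sketched as follows. This is a minimal illustration under stated assumptions: a Python dictionary of Q-values stands in for the Q-network, and the two-state task, `ReplayBuffer`, `dqn_targets`, and `train_step` are hypothetical names, not taken from [45] or [18].

```python
import copy
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size memory of (s, a, r, s_next, done) transitions."""

    def __init__(self, capacity, seed=0):
        self.memory = deque(maxlen=capacity)  # oldest transitions are dropped first
        self.rng = random.Random(seed)

    def push(self, s, a, r, s_next, done):
        self.memory.append((s, a, r, s_next, done))

    def sample(self, batch_size):
        # A uniform random mini-batch breaks the correlation between consecutive steps.
        return self.rng.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)


def dqn_targets(batch, target_q, gamma):
    """y = r + gamma * max_a' Q^-(s', a'), or just r for terminal transitions."""
    return [r if done else r + gamma * max(target_q[s_next])
            for (_, _, r, s_next, done) in batch]


def train_step(q, target_q, buffer, batch_size, gamma, lr):
    """One step toward the targets; a table stands in for the Q-network here."""
    batch = buffer.sample(batch_size)
    for (s, a, _, _, _), y in zip(batch, dqn_targets(batch, target_q, gamma)):
        q[s][a] += lr * (y - q[s][a])  # gradient step on 0.5 * (y - Q(s, a)) ** 2


# Hypothetical 2-state task: action 1 in state 0 leads to state 1,
# where action 0 ends the episode with reward 1.
q = {0: [0.0, 0.0], 1: [0.0, 0.0]}
target_q = copy.deepcopy(q)  # Q^-: same structure, frozen weights
buffer = ReplayBuffer(capacity=100)
for _ in range(50):
    buffer.push(0, 1, 0.0, 1, False)
    buffer.push(1, 0, 1.0, 1, True)

for step in range(1, 201):
    train_step(q, target_q, buffer, batch_size=8, gamma=0.9, lr=0.1)
    if step % 20 == 0:
        target_q = copy.deepcopy(q)  # periodically copy Q into Q^-

print(q)
```

Because the targets are computed from the frozen copy, they only change every 20 steps, so Q(0, 1) settles near 0.9 (one discounted step away from the reward) instead of chasing a target that moves with every update.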

- Normalised Advantage Function (NAF) [49]: Like DDPG, NAF uses deep learning to enable Q-learning in continuous, high-dimensional action spaces. In NAF, the Q-function $Q(s, a)$ is represented so that its maximum can be determined easily during learning. NAF differs from DQN in the network output: its last linear layer outputs $V(s \mid \theta^{V})$, $\mu(s \mid \theta^{\mu})$, and $L(s \mid \theta^{P})$, where $\mu$ and $L$ parameterize the advantage term needed for learning and $Q(s, a) = A(s, a) + V(s)$. Like DQN, it uses a target network and experience replay to reduce the correlation between observations collected over time. The advantage term in NAF is given by:

$$A(s, a \mid \theta^{A}) = -\tfrac{1}{2}\left(a - \mu(s \mid \theta^{\mu})\right)^{\top} P(s \mid \theta^{P}) \left(a - \mu(s \mid \theta^{\mu})\right) \tag{13}$$

wherein $P(s \mid \theta^{P})$ is a positive-definite matrix built from the lower-triangular matrix $L$:

$$P(s \mid \theta^{P}) = L(s \mid \theta^{P})\, L(s \mid \theta^{P})^{\top} \tag{14}$$

Because the advantage is a negative quadratic in $a$, the greedy action is simply $\mu(s \mid \theta^{\mu})$. An asynchronous NAF approach was introduced by Gu et al. [50].

- Asynchronous Advantage Actor Critic (A3C) [51]: In asynchronous DRL approaches, multiple actor-learners collect observations in parallel, and each computes gradients from its own observations that are used to update the weights of a shared network. A3C, a commonly used algorithm of this type, maintains a policy representation $\pi(a \mid s; \theta)$ and a value estimate $V(s; \theta_V)$, and uses as its score function an advantage function estimated from the observations provided by the actor-learners. Each actor-learner collects roll-outs of up to $T$ steps in its local environment and accumulates gradients from the samples in these roll-outs. The advantage function is approximated as:

$$A(s_t, a_t; \theta, \theta_V) = \underbrace{\sum_{i=0}^{k-1} \gamma^{i} r_{t+i} + \gamma^{k} V(s_{t+k}; \theta_V)}_{R_t} - V(s_t; \theta_V) \tag{15}$$

where $k \le T$. The gradients for the network parameters $\theta$ and $\theta_V$ are accumulated repeatedly according to the following equations, with $R_t$ treated as a fixed target:

$$d\theta \leftarrow d\theta + \nabla_{\theta} \log \pi(a_t \mid s_t; \theta)\, A(s_t, a_t; \theta, \theta_V) \tag{16}$$

$$d\theta_V \leftarrow d\theta_V + \frac{\partial \left(R_t - V(s_t; \theta_V)\right)^2}{\partial \theta_V} \tag{17}$$

The training architecture is shown in Figure 4. This approach does not require learning stabilization techniques such as experience replay: because the parallel actor-learners experience different parts of the environment at the same time, the updates they contribute are largely decorrelated. Moreover, the multiple actor-learners explore a wider range of the environment, which helps to learn an optimal policy. A3C has become a cornerstone of DRL research and has been reported to achieve state-of-the-art results with reduced training time and memory requirements across a wide range of problems.

- Advantage Actor-Critic (A2C) [52,53]: Asynchrony does not necessarily lead to better performance. Several papers have shown that a synchronous version of A3C performs well: each actor-learner finishes collecting its observations, their gradients are then averaged, and a single update is made.

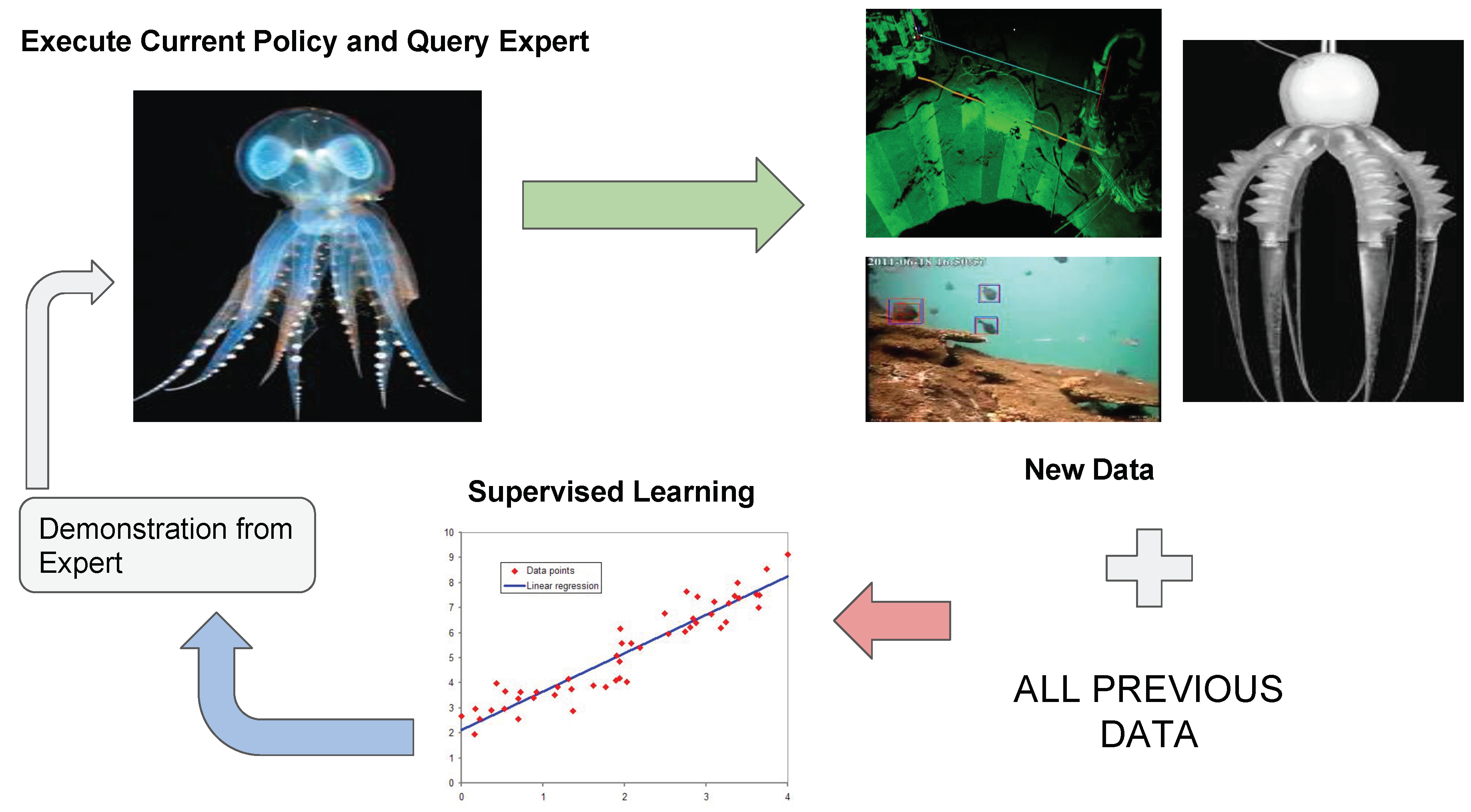
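The n-step advantage approximation used by A3C, and the gradient averaging that makes A2C synchronous, can be sketched as below. The roll-out values and the names `n_step_advantages` and `average` are hypothetical; gradients are represented as plain lists of numbers rather than network tensors.

```python
def n_step_advantages(rewards, values, bootstrap_value, gamma):
    """For each step t of a roll-out of length T, with k = T - t, compute
    R_t = sum_{i<k} gamma^i r_{t+i} + gamma^k V(s_T) and A_t = R_t - V(s_t).

    rewards[t]      : reward received after acting at step t
    values[t]       : critic estimate V(s_t)
    bootstrap_value : V(s_T), or 0.0 if the episode terminated at T
    """
    returns = []
    ret = bootstrap_value
    for r in reversed(rewards):  # accumulate discounted returns backwards in time
        ret = r + gamma * ret
        returns.append(ret)
    returns.reverse()
    advantages = [g - v for g, v in zip(returns, values)]
    return returns, advantages


def average(grads):
    """A2C-style synchronous step: average per-worker gradients before a single update."""
    return [sum(col) / len(grads) for col in zip(*grads)]


returns, adv = n_step_advantages([1.0, 0.0, 1.0], [0.5, 0.5, 0.5],
                                 bootstrap_value=2.0, gamma=0.5)
print(returns, adv)
print(average([[1.0, 2.0], [3.0, 4.0]]))
```

Computing the returns backwards lets every step of the roll-out reuse the same bootstrap value, so earlier steps use longer reward sums (larger k) and the last step falls back to a one-step estimate.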

- Guided Policy Search (GPS) [54]: This approach collects samples using the current policy and, at each iteration, generates training trajectories that are used to update the policy by supervised learning. The size of each change is bounded by adding it as a regularization term to the cost function, which prevents sudden changes in the policy that could lead to instabilities.

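- Trust Region Policy Optimization (TRPO) [55]: Schulman et al. [55] proposed an algorithm for optimizing large nonlinear policies whose theoretical version guarantees monotonic policy improvement. The discounted cost for an infinite-horizon MDP is obtained by replacing the reward function with a cost function $c$:

$$\eta(\pi) = \mathbb{E}_{s_0, a_0, \ldots}\left[\sum_{t=0}^{\infty} \gamma^{t} c(s_t)\right] \tag{18}$$

Making the same replacement in the action-value and state-value functions gives Equation (19):

$$Q_\pi(s_t, a_t) = \mathbb{E}_{s_{t+1}, a_{t+1}, \ldots}\left[\sum_{l=0}^{\infty} \gamma^{l} c(s_{t+l})\right], \qquad V_\pi(s_t) = \mathbb{E}_{a_t, s_{t+1}, \ldots}\left[\sum_{l=0}^{\infty} \gamma^{l} c(s_{t+l})\right] \tag{19}$$

This results in the advantage function:

$$A_\pi(s, a) = Q_\pi(s, a) - V_\pi(s) \tag{20}$$

Minimizing the expected cost with these quantities, while keeping each new policy within a trust region (a bound $\delta$ on the average KL divergence from the old policy), gives the following update rule for the policy:

$$\min_{\theta}\; \mathbb{E}_{s \sim \rho_{\theta_{\mathrm{old}}},\, a \sim \pi_{\theta_{\mathrm{old}}}}\left[\frac{\pi_\theta(a \mid s)}{\pi_{\theta_{\mathrm{old}}}(a \mid s)} A_{\theta_{\mathrm{old}}}(s, a)\right] \quad \text{s.t.} \quad \mathbb{E}_{s \sim \rho_{\theta_{\mathrm{old}}}}\left[D_{\mathrm{KL}}\left(\pi_{\theta_{\mathrm{old}}}(\cdot \mid s) \,\|\, \pi_\theta(\cdot \mid s)\right)\right] \le \delta \tag{21}$$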

- Proximal Policy Optimization (PPO) [57]: Instead of TRPO's hard trust-region constraint, these methods solve a soft-constrained optimization problem using standard stochastic gradient descent. Owing to its simplicity and effectiveness on control problems, PPO has been adopted by OpenAI for policy optimization. The training architecture is shown in Figure 4.

- Actor-Critic Kronecker-Factored Trust-Region (ACKTR) [53]: This method applies a form of trust-region gradient descent to an actor-critic, with the curvature estimated using a Kronecker-factored approximation.
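The soft constraint that PPO optimizes can be illustrated with its clipped surrogate objective. This is a minimal sketch assuming the clipped variant of PPO (the original paper also describes a KL-penalty variant), with log-probabilities and advantages already computed; the function name and the common choice `eps=0.2` are illustrative.

```python
import math


def ppo_clip_objective(logp_new, logp_old, advantages, eps=0.2):
    """Empirical clipped surrogate:
    mean_t min(rho_t * A_t, clip(rho_t, 1 - eps, 1 + eps) * A_t),
    where rho_t = pi_new(a_t | s_t) / pi_old(a_t | s_t). PPO maximizes this with SGD."""
    total = 0.0
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(lp_new - lp_old)                # probability ratio rho_t
        clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)  # clip to [1 - eps, 1 + eps]
        total += min(ratio * adv, clipped * adv)         # pessimistic (lower) bound
    return total / len(advantages)


# Two hypothetical samples whose probabilities moved far from the old policy.
obj = ppo_clip_objective([math.log(1.5), math.log(0.5)], [0.0, 0.0], [1.0, -1.0])
print(obj)
```

For the first sample, the gain from raising the probability beyond a ratio of 1.2 is ignored; for the second, the objective keeps the more pessimistic clipped value -0.8, so the result is about 0.2. Taking the minimum removes any incentive to move the policy far from the old one in a single update, which plays the role of TRPO's KL constraint.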

3.2. Deep Reinforcement Learning Mechanisms

- Prioritized Experience Replay [63]: Replaying stored transitions with priority according to their temporal-difference error, rather than uniformly.

- Hindsight Experience Replay [13]: Relabeling the goals, and hence the rewards, of collected observations so that failed trajectories are used effectively, which speeds up off-policy methods that learn from binary/sparse rewards.

- Curiosity-Driven Exploration [67]: Incorporating intrinsic rewards in addition to the extrinsic ones collected from observations.

- Asymmetric Self-Play for Exploration [68]: The interplay between two versions of the same learner generates curricula, thereby driving exploration.
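The goal relabeling behind hindsight replay can be sketched as follows. This is a minimal illustration assuming the "final" relabeling strategy (each transition is also stored with the goal actually achieved at the end of the episode) and a sparse reward of 0 on success and -1 otherwise; the 1-D reach task and all names are hypothetical.

```python
def reward_fn(achieved_goal, goal):
    """Sparse binary reward: 0 when the goal is reached, -1 otherwise."""
    return 0.0 if achieved_goal == goal else -1.0


def her_relabel(episode):
    """Hindsight relabeling with the 'final' strategy.

    episode: list of dicts with keys 'obs', 'action', 'achieved_goal', 'goal'.
    Returns each original transition plus a copy whose goal is replaced by the
    goal achieved at the end of the episode, with the reward recomputed.
    """
    final_goal = episode[-1]["achieved_goal"]
    transitions = []
    for step in episode:
        for goal in (step["goal"], final_goal):
            transitions.append({
                "obs": step["obs"],
                "action": step["action"],
                "goal": goal,
                "reward": reward_fn(step["achieved_goal"], goal),
            })
    return transitions


# Hypothetical 1-D reach task: the agent aimed for position 5 but ended at 3.
episode = [
    {"obs": 0, "action": +1, "achieved_goal": 1, "goal": 5},
    {"obs": 1, "action": +1, "achieved_goal": 2, "goal": 5},
    {"obs": 2, "action": +1, "achieved_goal": 3, "goal": 5},
]
data = her_relabel(episode)
print(sum(t["reward"] == 0.0 for t in data), "of", len(data), "transitions succeed")
```

Without relabeling, every transition of this failed episode carries reward -1 and provides no learning signal; after relabeling, the last transition becomes a success for the goal "reach 3", which an off-policy learner can exploit.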

3.3. Deep Reinforcement Learning for Soft Robotics Navigation

- Zhu et al. [72] gave an A3C agent its first-person view together with an image of the target, so that the goal of reaching the destination is specified by the target image, with the aid of universal function approximators. The network used for learning is a ResNet [73] trained in a simulator [74] that creates realistic environments consisting of various rooms, each treated as a different scene with its own scene-specific layers [72].

- Zhang et al. [30] implemented a deep successor representation formulation for predicting Q-value functions that learns representations transferable between navigation tasks. The successor feature representation [28,29] splits learning into two parts: learning task-specific reward functions, and learning features together with their evolution over time for completing the task at hand. This method takes its motivation from other DRL algorithms that use optimal function approximations to relate and reuse the information gained from previous tasks when solving the tasks we currently intend to perform [30]. It has been observed to transfer current policies effectively to different goal positions under varied or scaled reward functions, and to newer, more complex situations such as unseen environments.

Both of these methods address navigation for autonomous robots whose inputs are RGB images of the environment, either by being given the target image [72] or by transferring information gained in previous processes [30,75]. Such models are trained via asynchronous DDPG on a varied set of real environments and simulations of real environments.

- Mirowski et al. [58] used a DRL mechanism with additional supervision signals available in the environment (especially loop-closure and depth-prediction losses), allowing the robot to move freely between varying start and end points.

- Chen et al. [76] proposed a solution for compound problems involving dynamic environments (essentially, moving obstacles), such as navigating a path with pedestrians. They demonstrate the proposed algorithm on hardware in which LIDAR sensor readings are used to predict properties of the pedestrians (such as speed, position, and radius) that contribute to the reward/loss function. Long et al. [77] use PPO for a multi-agent obstacle avoidance task.

- Simultaneous Localisation and Mapping (SLAM) [78] has been at the heart of recent robotic advances, with many papers published in this field over the last decade. SLAM approaches that use DRL partially or completely have been shown to produce some of the best results in localization and navigation tasks.

- In an imitation learning setting, dealt with in detail later, a Cognitive Mapping and Planning (CMP) [79] model is trained with DAgger; it takes 3-channel images as input and, with the aid of Value Iteration Networks (VIN), builds a map of the environment. The work of Gupta et al. [80] further introduced a new way of combining spatial reasoning and path planning.

- Zhang et al. [65] further introduced a form of SLAM called Neural SLAM, inspired by the work of Parisotto and Salakhutdinov [81], which allows the agent to interact with a Neural Turing Machine (NTM). Graph-based SLAM [78,82] paved the way for the Neural Graph Optimiser of Parisotto et al. [83], which inserts this global pose optimiser into the network.
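The two-part decomposition that successor-feature methods such as Zhang et al. [30] build on can be written compactly. The sketch below uses the usual successor-feature notation, with $\boldsymbol{\phi}$ for features of a transition, $\mathbf{w}$ for task-specific reward weights, and $\boldsymbol{\psi}^{\pi}$ for the successor features of policy $\pi$; these symbols are our assumption rather than notation defined in this review, and $\boldsymbol{\phi}_{t+1} = \boldsymbol{\phi}(s_t, a_t, s_{t+1})$.

$$r(s, a, s') = \boldsymbol{\phi}(s, a, s')^{\top}\mathbf{w}, \qquad \boldsymbol{\psi}^{\pi}(s, a) = \mathbb{E}^{\pi}\left[\sum_{i=0}^{\infty} \gamma^{i} \boldsymbol{\phi}_{t+i+1} \,\middle|\, s_t = s,\, a_t = a\right]$$

$$Q^{\pi}(s, a) = \mathbb{E}^{\pi}\left[\sum_{i=0}^{\infty} \gamma^{i} r_{t+i+1} \,\middle|\, s_t = s,\, a_t = a\right] = \boldsymbol{\psi}^{\pi}(s, a)^{\top}\mathbf{w}$$

The last equality follows by substituting $r = \boldsymbol{\phi}^{\top}\mathbf{w}$ and pulling the constant $\mathbf{w}$ out of the expectation. A new goal changes only $\mathbf{w}$, so the successor features $\boldsymbol{\psi}^{\pi}$ can be reused, at least as a starting point, which is what makes transfer between navigation tasks cheap.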

3.4. Deep Reinforcement Learning for Soft Robotics Manipulation

- Gu et al. [50]: Proposed an asynchronous form of NAF for a door-opening task, taking state inputs such as joint angles, end-effector position, and target position. It achieved a 100% success rate on this task and learned it in only 2.5 h.

- Levine et al. [61]: Proposed a visuomotor policy model that is a deep extension of the GPS algorithm described earlier. The network consists of convolutional layers followed by a softmax, taking images of the environment as input and concatenating the extracted information with the robot's state information. The required torques are predicted by passing this concatenated input through linear layers at the end of the network. These experiments were carried out with a PR2 robot on tasks such as screwing a cap onto a bottle and inserting a block into a shape-sorting cube. Despite its good results, it is not widely used in real-world applications because it requires complete observability of the state space, which is difficult to obtain in real life.

- Riedmiller et al. [59]: Proposed a new algorithm that improved the learning procedure in terms of both training time and accuracy. It argued that sparse rewards help the model attain an optimal policy faster than the binary rewards it compared against, which led to policies whose end-effector trajectories were not the desired ones. To this end, additional policies (referred to as intentions) are learned for auxiliary tasks whose inputs are easily obtained from basic sensors [101], and a scheduling policy is learned to schedule these intention policies. Such a system achieves better results than a standard DRL algorithm on a lifting task, which took about 10 h to learn from scratch.

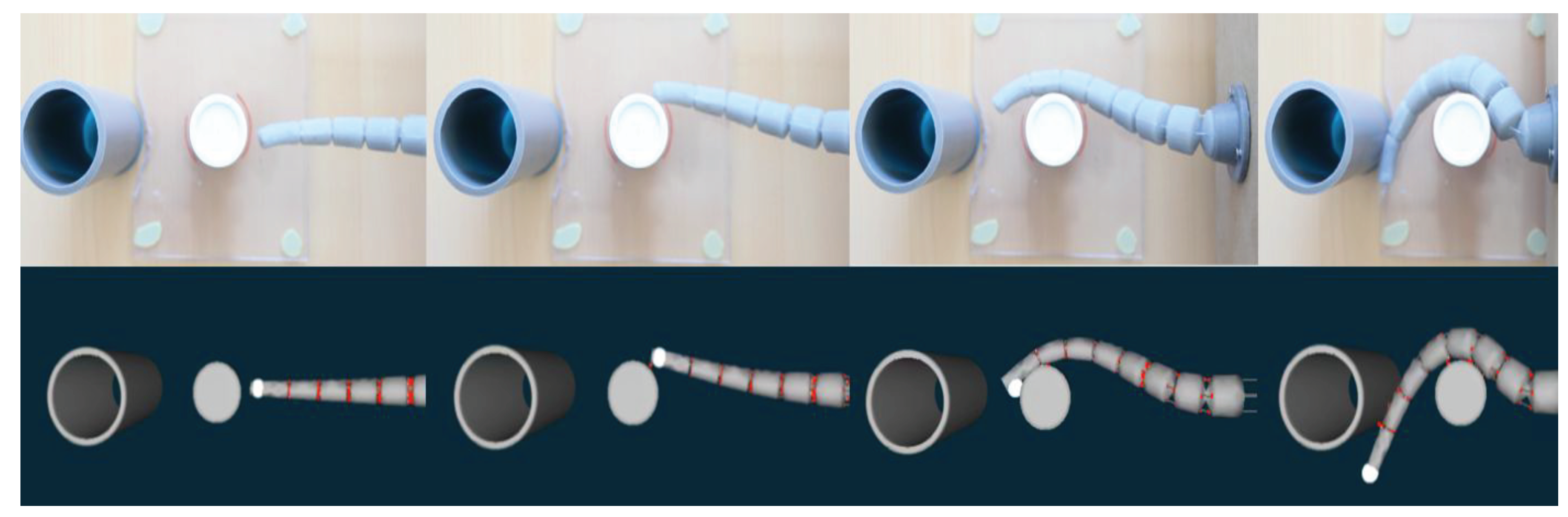

3.5. Difference between Simulation and Real World

- Domain Adaptation: This translates images from a source domain to a destination domain. The domain confusion loss, first proposed by Tzeng et al. [103], learns a representation that is invariant to changes in the domain. However, this approach requires information about both the source and destination domains before training, which is challenging. Let visual data from the two sources be represented by X (simulator) and Y (onboard sensors). When we train the model on X and test it on Y, we observe a considerable drop in performance. This reality gap is a genuine problem for soft robotic systems because the end-effector position varies constantly across numerous degrees of freedom. Hence, a system is needed that is invariant to changes in the perspective (transform) from which the agent observes key points in the environment. Domain adaptation is a basic yet effective approach that is widely used to counter the loss of accuracy caused by differences between simulation and real-world environments.

- This problem can be solved with a mapping function that maps data from one domain to the other. Such a mapping can be learned with a deep generative model called a Generative Adversarial Network (GAN) [104,105,106]. GANs have two basic components: a generator and a discriminator. The generator maps samples from the source domain into the destination domain, while the discriminator distinguishes between real and generated samples.


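- CycleGAN [85]: First proposed by Zhu et al. [85], CycleGAN works on the principle that a mapping from an input domain to an output domain can be learned by adding a cycle-consistency loss as a regulariser to the original loss, which ensures that the mapping is reversible. It combines two ordinary GANs, so two separate generators ($G: X \to Y$ and $F: Y \to X$, each with its own encoder and decoder) and two discriminators ($D_Y$ and $D_X$) are trained according to:

$$\mathcal{L}_{\mathrm{GAN}}(G, D_Y, X, Y) = \mathbb{E}_{y \sim p(y)}\left[\log D_Y(y)\right] + \mathbb{E}_{x \sim p(x)}\left[\log\left(1 - D_Y(G(x))\right)\right] \tag{22}$$

$$\mathcal{L}_{\mathrm{GAN}}(F, D_X, Y, X) = \mathbb{E}_{x \sim p(x)}\left[\log D_X(x)\right] + \mathbb{E}_{y \sim p(y)}\left[\log\left(1 - D_X(F(y))\right)\right] \tag{23}$$

After adding the cycle-consistency term for each GAN, the loss for the complete weight update becomes:

$$\mathcal{L}(G, F, D_X, D_Y) = \mathcal{L}_{\mathrm{GAN}}(G, D_Y, X, Y) + \mathcal{L}_{\mathrm{GAN}}(F, D_X, Y, X) + \lambda\left(\mathbb{E}_{x}\left[\lVert F(G(x)) - x \rVert_1\right] + \mathbb{E}_{y}\left[\lVert G(F(y)) - y \rVert_1\right]\right) \tag{24}$$

Hence, the final optimization problem is to solve Equation (24) as a min-max game, $G^*, F^* = \arg\min_{G, F} \max_{D_X, D_Y} \mathcal{L}(G, F, D_X, D_Y)$. CycleGAN is known to produce good results on scenes where relations between both domains can be drawn, but it occasionally fails in complex environments.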


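- CyCADA [107]: CyCADA, introduced by Hoffman et al. [107], resolves the problems CycleGAN faces by adding a semantic consistency loss that allows complex environments to be mapped. It uses a model trained on the source domain, which contains semantic labels, to help map images from X to Y. The equations used for the mapping are:

$$\mathcal{L}_{\mathrm{task}}(f_X, X, L_X) = -\mathbb{E}_{(x, \ell) \sim (X, L_X)}\left[\sum_{k=1}^{K} \mathbb{1}[k = \ell] \log \sigma\left(f_X^{(k)}(x)\right)\right] \tag{25}$$

$$\mathcal{L}_{\mathrm{sem}}(G, F, X, Y, f_X) = \mathcal{L}_{\mathrm{task}}\left(f_X, F(Y), p(f_X, Y)\right) + \mathcal{L}_{\mathrm{task}}\left(f_X, G(X), p(f_X, X)\right) \tag{26}$$

Here, $\mathcal{L}_{\mathrm{task}}$ represents the cross-entropy loss between the predictions of the pre-trained source model $f_X$ and the true labels $L_X$, $\sigma$ is the softmax function, and $p(f_X, \cdot) = \arg\max f_X(\cdot)$ denotes the labels predicted by $f_X$.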
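The cycle-consistency regulariser that CycleGAN adds to its two GAN losses can be checked on toy mappings. This is a minimal sketch that computes only the cycle term (not the adversarial terms), assuming small feature vectors instead of images and a per-element mean for the L1 norm, as is common in implementations; the "domains" and the mappings `G`, `F_exact`, and `F_biased` are hypothetical.

```python
def l1(u, v):
    """Mean absolute difference between two equal-length vectors."""
    return sum(abs(a - b) for a, b in zip(u, v)) / len(u)


def cycle_consistency_loss(G, F, xs, ys):
    """Empirical L_cyc = E_x ||F(G(x)) - x||_1 + E_y ||G(F(y)) - y||_1 (per-element mean)."""
    forward = sum(l1(F(G(x)), x) for x in xs) / len(xs)
    backward = sum(l1(G(F(y)), y) for y in ys) / len(ys)
    return forward + backward


# Hypothetical domains: "simulator" features X and "sensor" features Y = 2 * X + 1.
def G(x):  # X -> Y
    return [2 * v + 1 for v in x]


def F_exact(y):  # exact inverse of G
    return [(v - 1) / 2 for v in y]


def F_biased(y):  # ignores the offset, so the cycles do not close
    return [v / 2 for v in y]


xs = [[0.0, 1.0], [2.0, 3.0]]
ys = [G(x) for x in xs]
print(cycle_consistency_loss(G, F_exact, xs, ys),
      cycle_consistency_loss(G, F_biased, xs, ys))
```

The exact inverse gives zero cycle loss, while the biased inverse is penalized in both directions; in CycleGAN this term, weighted by lambda, is what pushes the two generators toward mutually reversible mappings.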

Deep learning frameworks such as GANs [104,105,106], VAEs [108], and disentangled representations [109,110] have the potential to aid the control of soft robots. These developing frameworks have widened the perspective of DRL for robotic control. The combination of two such young fields, soft robotics and deep learning frameworks (especially generative models), could be a stepping stone to major technological advances in the coming decades.

- Domain Adaptation for Visual DRL Policies: In such adaptation techniques, the policy is transferred from a source domain to the destination domain. Bousmalis et al. [111] proposed a new way to address the reality gap for policies trained in simulation and applied in real-life scenarios.

3.6. Simulation Platforms

4. Imitation Learning for Soft Robotic Actuators

- Errors (Independent or Compound): Common supervised learning assumes that the agent's actions do not affect the environment in any way; this assumption is violated in imitation control tasks with soft robotic agents. Likewise, the assumption that the collected data samples (observations) are independent and identically distributed does not hold for imitation learning, so errors propagate and even minor errors can make the system unstable.

- Time-step Decisions (Single or Multiple): Supervised learning models generally ignore the dependence between consecutive decisions, unlike classical robotic approaches. The goal of imitation learning is also different from simply mimicking the expert's actions: an agent that simply copies the expert may miss a hidden objective, such as avoiding collisions with obstacles, increasing the chances of completing a specific task, or minimizing the mobile robot's effort.

- Behaviour Cloning: This is one of the basic imitation learning approaches, in which a supervised learning agent is trained to map input states to the actions performed by the expert. Dataset Aggregation (DAgger) [141], mentioned earlier, is an algorithm that solves the problem of error propagation in such sequential systems. It resembles common supervised learning: at each iteration, the updated (current) policy is executed, and the observations it visits are labeled by the expert policy. The newly collected data are aggregated with the data already available, and the policy is retrained on the union. This technique has been readily adopted in diverse application domains owing to its simplicity and effectiveness.

Bojarski et al. [142] trained a navigation control model on data from 72 h of real driving by an expert, learning a mapping function from states (image pixels) to actions (steering commands). Similarly, Tai et al. [143] and Giusti et al. [144] developed imitation learning applications for real-life robotic control. Further reading includes Codevilla et al. [145] and Dosovitskiy et al. [119].

Imitation learning is also effective in the manipulation problems given below:


- Duan et al. [146] improved one-shot imitation learning to learn a low-dimensional state-to-action mapping, using behavioral cloning to reduce the difference between the agent's and the expert's actions. They used this method to make a robotic arm stack blocks the way the expert does, observing the relative positions of the blocks at each time step. After incorporating additional features such as temporal dropout and convolutions, the performance achieved was similar to that of DAgger.


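- Finn et al. [147] and Yu et al. [60] modified the existing Model-Agnostic Meta-Learning (MAML) algorithm [148], which trains a model on a variety of tasks so that it can solve a new, unseen task when assigned. For each task $\mathcal{T}_i$, the weights are adapted with a step very similar to ordinary gradient descent:

$$\theta_i' = \theta - \alpha \nabla_\theta \mathcal{L}_{\mathcal{T}_i}(f_\theta) \tag{27}$$

wherein $\alpha$ is the step size for gradient descent. Learning aims at the meta-objective:

$$\min_\theta \sum_{\mathcal{T}_i \sim p(\mathcal{T})} \mathcal{L}_{\mathcal{T}_i}\left(f_{\theta_i'}\right) = \sum_{\mathcal{T}_i \sim p(\mathcal{T})} \mathcal{L}_{\mathcal{T}_i}\left(f_{\theta - \alpha \nabla_\theta \mathcal{L}_{\mathcal{T}_i}(f_\theta)}\right) \tag{28}$$

which leads to the meta gradient-descent step:

$$\theta \leftarrow \theta - \beta \nabla_\theta \sum_{\mathcal{T}_i \sim p(\mathcal{T})} \mathcal{L}_{\mathcal{T}_i}\left(f_{\theta_i'}\right) \tag{29}$$

wherein $\beta$ represents the meta step size.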


- While Duan et al. [146] and Finn et al. [147] propose ways to train a model that works on new sets of samples, the method of Yu et al. [60], described earlier, is effective for domain-shift problems. Eitel et al. [149] proposed a model that takes over-segmented RGB-D images as input and outputs actions for separating objects in an environment.

- Inverse Reinforcement Learning: This method aims to recover the utility (reward) function under which the demonstrated behavior is nearly optimal. The IRL algorithm known as Maximum Entropy IRL [150] makes trajectories $\tau$ with features $\mathbf{f}_\tau$ exponentially more likely the higher their reward, and fits the reward weights $\theta$ by maximizing the likelihood of the demonstrations $\mathcal{D}$:

$$\theta^* = \arg\max_\theta \sum_{\tilde{\tau} \in \mathcal{D}} \log P(\tilde{\tau} \mid \theta), \qquad P(\tau \mid \theta) = \frac{\exp\left(\theta^{\top} \mathbf{f}_\tau\right)}{Z(\theta)} \tag{30}$$

where $Z(\theta)$ is the partition function. For a robot following a set of constraints [151,152,153], it is difficult to formulate an accurate reward function, but the desired actions are easy to demonstrate. Soft robotic systems generally have constraint issues because of the composition and configuration of the actuators' materials, which eliminate part of the action space (or sometimes the state space). This motivates the use of IRL techniques in such situations. Owing to the flexibility and adaptability of soft robots, IRL allows them to perform the task that a human expert demonstrates. The motivation for using this technique with soft robots is that their pliant movements make it difficult to formulate a cost function, which explains the reliance on such models in systems requiring resilience and robustness. Maximum Entropy IRL [154] has been used together with deep convolutional networks to learn complex representations in problems where a soft robot copies the actions of a human expert in control tasks.

- Generative Adversarial Imitation Learning: Although IRL algorithms are effective, they require large datasets and long training times. Ho and Ermon [155] therefore proposed an alternative, Generative Adversarial Imitation Learning (GAIL), which builds on a Generative Adversarial Network (GAN) [104]. Such generative models are essential for soft robotic systems, which require larger training sets because of the wide variety of possible state-action pairs, and GANs are deep networks able to learn complex representations in the latent space.

Like other GANs, GAIL consists of two alternately trained components: a generator (the policy $\pi$), which generates state-action pairs close to those of the expert policy $\pi_E$, and a discriminator $D$, which learns to distinguish between generated and real samples. The objective function of the model is:

$$\min_{\pi} \max_{D}\; \mathbb{E}_{\pi}\left[\log D(s, a)\right] + \mathbb{E}_{\pi_E}\left[\log\left(1 - D(s, a)\right)\right] - \lambda H(\pi) \tag{31}$$

where $H(\pi)$ is the causal entropy of the policy and $\lambda \ge 0$ its weight. Extensions of GAIL have been proposed in recent works, including Baram et al. [156] and Wang et al. [157]. GAIL has solved imitation learning problems in navigation (Li et al. [158] and Tai et al. [159] applied it to find socially compliant policies) as well as manipulation (Stadie et al. [160] used GAIL to mimic an expert's actions through domain-agnostic representations). This presents an opportunity to apply GAIL, with its distinctive learning technique, to systems with soft actuators and their composite structure.
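The DAgger loop described under Behaviour Cloning can be sketched on a toy problem where plain behaviour cloning fails. This is a minimal sketch under stated assumptions: a hypothetical expert on a deterministic 1-D line, a lookup table standing in for the supervised learner, and the simplest mixing schedule (the expert acts only in the first iteration and the learner acts afterwards). None of these names come from [141].

```python
def expert_policy(state):
    """Hypothetical expert for a toy 1-D task: always step toward position 0."""
    if state > 0:
        return -1
    if state < 0:
        return 1
    return 0


def rollout(policy, start, horizon=10):
    """Run a policy in a deterministic 1-D world and return the visited states."""
    states, s = [], start
    for _ in range(horizon):
        states.append(s)
        s += policy(s)
    return states


def fit_lookup(dataset):
    """Stand-in 'supervised learner': memorize the expert label for each seen state."""
    table = dict(dataset)

    def policy(s):
        return table.get(s, 0)  # unseen states -> action 0 (do nothing)

    return policy


def dagger(starts):
    """Run the current policy, label the visited states with the expert,
    aggregate with all earlier data, and retrain on the union."""
    dataset = []
    policy = expert_policy  # first iteration collects pure expert demonstrations
    for start in starts:
        visited = rollout(policy, start)
        dataset += [(s, expert_policy(s)) for s in visited]  # expert relabels every state
        policy = fit_lookup(dataset)
    return policy


cloned = fit_lookup([(s, expert_policy(s)) for s in rollout(expert_policy, 3)])
learned = dagger(starts=[3, -4, -4, -4, -4])
print(rollout(cloned, -4)[-1], rollout(learned, -4)[-1])
```

The behaviour-cloned policy only saw states 3 to 0, so from -4 it never moves; each DAgger iteration visits the state where the learner gets stuck, asks the expert for a label there, and retrains, until the learned policy reaches the goal from -4 as well.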

5. Future Scope

- Sample Efficiency: Collecting observations for training by making agents interact with the environment takes effort and resources, especially for soft robotic systems because of the large number of actions possible in each state. The biomimetic motions [10,173] of flexible bio-inspired actuators open the way for further research on efficient systems that can collect experience without this expense.

- Strong Real-time Requirements: Training networks with millions of neurons and a very large number of tunable parameters requires special hardware and considerable computation time. Current policies need more compact representations to avoid wasting time and hardware resources in training. The action and state spaces of soft robotic systems are considerably larger than those of their rigid counterparts, which increases the number of neurons in the deep network.

- Safety Concerns: The control policies need to be precise in their decision-making. As in factories producing food items, soft robots are required to operate in environments where even a small error could cause loss of life and property.

- Stability, Robustness, and Interpretability [174]: Slight changes in the configuration of parameters or robotic hardware, or changes over time in the concentration or composition of the soft robot's materials, can affect the agent's performance and make it unstable. Learned representations that can detect adversarial scenarios are a topic of interest for researchers aiming to improve the performance of DRL agents on soft robotic systems.

- Lifelong Learning: The environment can look very different when observed at different moments, and the composition and configuration of soft robotic systems vary with time; both can degrade the performance of learned policies. This calls for systems that keep evolving and learning from changes in conditions, caused for example by bending, twisting, warping and other deformations or by variations in the soft robot's chemical composition, while keeping the learned policies intact.

- Generalization between tasks: A fully trained model performs well on the tasks it was trained on but poorly on new tasks and situations. Soft robotic systems are required to perform a varied set of correlated tasks, so methods are needed that transfer what was learned in one training procedure to the tasks encountered at test time. There is therefore a need for fully autonomous systems that require minimal training resources yet are broadly applicable. This challenge is particularly significant for soft robots because of the high cost of letting them interact with the environment and the variability of their own structure and composition, which increases adaptation concerns.

- Unifying Reinforcement Learning and Imitation Learning: Several works [175,176,177,178] aim to combine the two approaches and reap the benefits of both, so that the agent learns from the expert's actions while also interacting with the environment and collecting its own experience. Learning from an expert's actions (generally a person, for soft robotic manipulation problems) can sometimes lead to suboptimal solutions, while training a reinforcement learning agent with deep neural networks can be expensive because of the high-dimensional action space of soft robots. Current research in this domain focuses on models in which a soft robotic agent first learns from expert demonstrations and then, as time progresses, moves to DRL-based exploration, interacting with the continuously evolving environment to collect observations. In the near future, we could see fully self-determining soft robotic systems that have the best of both worlds: they learn from the expert at the beginning and are equipped to learn on their own when necessary, thereby exploiting the benefits of their mechanical structure.

- Meta-Learning: The methods proposed by Finn et al. [148] and by Nichol and Schulman [179] learn parameters from which a model can adapt using relatively few data samples, and they produce better results on new tasks that the model has not been trained on. These developments could be stepping stones toward robust and universal policies, and meta-learning could become a milestone in combining deep learning with soft robotics. Large datasets are generally hard to collect for soft robotic systems because of the cost of letting them interact with their environment. Soft robots are also generally harder to control than their rigid counterparts, so such learning procedures could help them perform satisfactorily.
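The shift described in the "Unifying Reinforcement Learning and Imitation Learning" item, from relying on a demonstrator early on to DRL-based exploration later, can be illustrated by a behavior policy that mixes expert and learner with a weight that decays over training. The sketch below borrows the β-mixing idea of DAgger (Ross et al. [141]) but is not the method of any cited work: the exponential schedule, the blended loss, the parameter values, and all function names are hypothetical, and the expert and learner are arbitrary callables standing in for a human teleoperator and a neural-network policy.

```python
from __future__ import annotations

import math
import random
from typing import Callable, Sequence

# Hypothetical type aliases: states and actions are plain float vectors here.
State = Sequence[float]
Action = Sequence[float]
Policy = Callable[[State], Action]


def expert_weight(step: int, beta0: float = 1.0, decay: float = 1e-3) -> float:
    """Probability of executing the expert's action at a given training step.

    Assumed exponential schedule: starts at beta0 and decays toward 0, so
    control shifts from the demonstrator to the learned (DRL) policy.
    """
    return beta0 * math.exp(-decay * step)


def behavior_action(
    state: State,
    step: int,
    expert: Policy,
    learner: Policy,
    rng: random.Random,
) -> tuple[Action, bool]:
    """Choose the action actually sent to the robot by mixing two policies.

    Returns the action and a flag telling whether it came from the expert,
    so the caller can route the transition to an imitation or an RL buffer.
    """
    if rng.random() < expert_weight(step):
        return expert(state), True
    return learner(state), False


def blended_loss(step: int, imitation_loss: float, rl_loss: float) -> float:
    """Weight a supervised imitation loss against an RL loss with the same schedule."""
    w = expert_weight(step)
    return w * imitation_loss + (1.0 - w) * rl_loss
```

An exponential schedule is only one option; a linear or step schedule, or one driven by the learner's measured performance, would fit the same interface.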
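The meta-learning item cites Nichol and Schulman [179], whose Reptile algorithm is a first-order method: unlike the approach of Finn et al. [148], it needs no second derivatives. Reptile nudges the meta-parameters θ toward the weights φ obtained after a few gradient steps on a sampled task, θ ← θ + ε(φ − θ). The sketch below is illustrative only and rests on strong assumptions: each hypothetical "task" is a one-dimensional linear regression y = a·x + b standing in for a family of related soft-robot tasks (a supervised problem, not an RL one), the model has just two parameters, and the task ranges, step sizes, and function names are illustrative choices rather than values from the cited papers.

```python
import random
from typing import List, Tuple

Params = Tuple[float, float]       # (slope w, intercept c) of a 1-D linear model
Batch = List[Tuple[float, float]]  # (x, y) pairs


def sample_task(rng: random.Random) -> Params:
    """Hypothetical task family: y = a*x + b with a in [3, 5], b in [2, 4]."""
    return rng.uniform(3.0, 5.0), rng.uniform(2.0, 4.0)


def sample_batch(task: Params, n: int, rng: random.Random) -> Batch:
    """Draw n noise-free samples of one task with x in [-1, 1]."""
    a, b = task
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, a * x + b) for x in xs]


def mse(params: Params, batch: Batch) -> float:
    """Mean squared error of the linear model on a batch."""
    w, c = params
    return sum((w * x + c - y) ** 2 for x, y in batch) / len(batch)


def sgd_adapt(params: Params, batch: Batch, lr: float = 0.1, steps: int = 5) -> Params:
    """Inner loop: a few full-batch gradient-descent steps on one task's data."""
    w, c = params
    n = len(batch)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to w and c.
        gw = sum(2.0 * (w * x + c - y) * x for x, y in batch) / n
        gc = sum(2.0 * (w * x + c - y) for x, y in batch) / n
        w, c = w - lr * gw, c - lr * gc
    return w, c


def reptile(meta_iters: int = 2000, meta_lr: float = 0.1, shots: int = 5, seed: int = 0) -> Params:
    """Reptile outer loop: theta <- theta + meta_lr * (phi - theta)."""
    rng = random.Random(seed)
    theta: Params = (0.0, 0.0)
    for _ in range(meta_iters):
        task = sample_task(rng)
        phi = sgd_adapt(theta, sample_batch(task, shots, rng))
        theta = (
            theta[0] + meta_lr * (phi[0] - theta[0]),
            theta[1] + meta_lr * (phi[1] - theta[1]),
        )
    return theta
```

With these settings the learned initialization should settle near the mean task parameters (a ≈ 4, b ≈ 3), so five samples and five gradient steps from it typically leave a much smaller held-out error on a new task than the same budget spent starting from zero. This is the data-efficiency argument of the item above, in miniature.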

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Trimmer, B. A Confluence of Technology: Putting Biology into Robotics. Soft Robot. 2014, 1, 159–160.

- Banerjee, H.; Tse, Z.T.H.; Ren, H. Soft Robotics with Compliance and Adaptation for Biomedical Applications and Forthcoming Challenges. Int. J. Robot. Autom. 2018, 33.

- Trivedi, D.; Rahn, C.D.; Kier, W.M.; Walker, I.D. Soft robotics: Biological inspiration, state of the art, and future research. Appl. Bionics Biomech. 2008, 5, 99–117.

- Banerjee, H.; Ren, H. Electromagnetically responsive soft-flexible robots and sensors for biomedical applications and impending challenges. In Electromagnetic Actuation and Sensing in Medical Robotics; Springer: Berlin, Germany, 2018; pp. 43–72.

- Banerjee, H.; Aaron, O.Y.W.; Yeow, B.S.; Ren, H. Fabrication and Initial Cadaveric Trials of Bi-directional Soft Hydrogel Robotic Benders Aiming for Biocompatible Robot-Tissue Interactions. In Proceedings of the IEEE ICARM 2018, Singapore, 18–20 July 2018.

- Banerjee, H.; Roy, B.; Chaudhury, K.; Srinivasan, B.; Chakraborty, S.; Ren, H. Frequency-induced morphology alterations in microconfined biological cells. Med. Biol. Eng. Comput. 2018.

- Kim, S.; Laschi, C.; Trimmer, B. Soft robotics: A bioinspired evolution in robotics. Trends Biotechnol. 2013, 31, 287–294.

- Ren, H.; Banerjee, H. A Preface in Electromagnetic Robotic Actuation and Sensing in Medicine. In Electromagnetic Actuation and Sensing in Medical Robotics; Springer: Berlin, Germany, 2018; pp. 1–10.

- Banerjee, H.; Shen, S.; Ren, H. Magnetically Actuated Minimally Invasive Microbots for Biomedical Applications. In Electromagnetic Actuation and Sensing in Medical Robotics; Springer: Berlin, Germany, 2018; pp. 11–41.

- Banerjee, H.; Suhail, M.; Ren, H. Hydrogel Actuators and Sensors for Biomedical Soft Robots: Brief Overview with Impending Challenges. Biomimetics 2018, 3, 15.

- Iida, F.; Laschi, C. Soft robotics: Challenges and perspectives. Proc. Comput. Sci. 2011, 7, 99–102.

- Schmidhuber, J. Deep learning in neural networks: An overview. Neural Netw. 2015, 61, 85–117.

- Andrychowicz, M.; Wolski, F.; Ray, A.; Schneider, J.; Fong, R.; Welinder, P.; McGrew, B.; Tobin, J.; Abbeel, P.; Zaremba, W. Hindsight experience replay. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5048–5058.

- Deng, L. A tutorial survey of architectures, algorithms, and applications for deep learning. APSIPA Trans. Signal Inf. Process. 2014, 3.

- Guo, Y.; Liu, Y.; Oerlemans, A.; Lao, S.; Wu, S.; Lew, M.S. Deep learning for visual understanding: A review. Neurocomputing 2016, 187, 27–48.

- Bagnell, J.A. An Invitation to Imitation; Technical Report; Carnegie-Mellon Univ Pittsburgh Pa Robotics Inst: Pittsburgh, PA, USA, 2015.

- Levine, S. Exploring Deep and Recurrent Architectures for Optimal Control. arXiv 2013, arXiv:1311.1761.

- Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous Control with Deep Reinforcement Learning. arXiv 2015, arXiv:1509.02971.

- Spielberg, S.; Gopaluni, R.B.; Loewen, P.D. Deep reinforcement learning approaches for process control. In Proceedings of the 2017 6th International Symposium on Advanced Control of Industrial Processes (AdCONIP), Taipei, Taiwan, 28–31 May 2017; pp. 201–206.

- Khanbareh, H.; de Boom, K.; Schelen, B.; Scharff, R.B.N.; Wang, C.C.L.; van der Zwaag, S.; Groen, P. Large area and flexible micro-porous piezoelectric materials for soft robotic skin. Sens. Actuators A Phys. 2017, 263, 554–562.

- Zhao, H.; O’Brien, K.; Li, S.; Shepherd, R.F. Optoelectronically innervated soft prosthetic hand via stretchable optical waveguides. Sci. Robot. 2016, 1, eaai7529.

- Li, S.; Vogt, D.M.; Rus, D.; Wood, R.J. Fluid-driven origami-inspired artificial muscles. Proc. Natl. Acad. Sci. USA 2017, 114, 13132–13137.

- Ho, S.; Banerjee, H.; Foo, Y.Y.; Godaba, H.; Aye, W.M.M.; Zhu, J.; Yap, C.H. Experimental characterization of a dielectric elastomer fluid pump and optimizing performance via composite materials. J. Intell. Mater. Syst. Struct. 2017, 28, 3054–3065.

- Shepherd, R.F.; Ilievski, F.; Choi, W.; Morin, S.A.; Stokes, A.A.; Mazzeo, A.D.; Chen, X.; Wang, M.; Whitesides, G.M. Multigait soft robot. Proc. Natl. Acad. Sci. USA 2011, 108, 20400–20403.

- Banerjee, H.; Pusalkar, N.; Ren, H. Single-Motor Controlled Tendon-Driven Peristaltic Soft Origami Robot. J. Mech. Robot. 2018, 10, 064501.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 1998.

- Dayan, P. Improving generalization for temporal difference learning: The successor representation. Neural Comput. 1993, 5, 613–624.

- Kulkarni, T.D.; Saeedi, A.; Gautam, S.; Gershman, S.J. Deep Successor Reinforcement Learning. arXiv 2016, arXiv:1606.02396.

- Barreto, A.; Dabney, W.; Munos, R.; Hunt, J.J.; Schaul, T.; van Hasselt, H.P.; Silver, D. Successor features for transfer in reinforcement learning. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 4055–4065.

- Zhang, J.; Springenberg, J.T.; Boedecker, J.; Burgard, W. Deep reinforcement learning with successor features for navigation across similar environments. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 2371–2378.

- Fu, M.C.; Glover, F.W.; April, J. Simulation optimization: A review, new developments, and applications. In Proceedings of the 37th Conference on Winter Simulation, Orlando, FL, USA, 4–7 December 2005; pp. 83–95.

- Szita, I.; Lörincz, A. Learning Tetris using the noisy cross-entropy method. Neural Comput. 2006, 18, 2936–2941.

- Schulman, J.; Moritz, P.; Levine, S.; Jordan, M.; Abbeel, P. High-Dimensional Continuous Control Using Generalized Advantage Estimation. arXiv 2015, arXiv:1506.02438.

- Williams, R.J. Simple statistical gradient-following algorithms for connectionist reinforcement learning. Mach. Learn. 1992, 8, 229–256.

- Silver, D.; Lever, G.; Heess, N.; Degris, T.; Wierstra, D.; Riedmiller, M. Deterministic policy gradient algorithms. In Proceedings of the ICML, Beijing, China, 21–26 June 2014.

- Sutton, R.S. Dyna, an integrated architecture for learning, planning, and reacting. ACM SIGART Bull. 1991, 2, 160–163.

- Weber, T.; Racanière, S.; Reichert, D.P.; Buesing, L.; Guez, A.; Rezende, D.J.; Badia, A.P.; Vinyals, O.; Heess, N.; Li, Y.; et al. Imagination-Augmented Agents for Deep Reinforcement Learning. arXiv 2017, arXiv:1707.06203.

- Kalweit, G.; Boedecker, J. Uncertainty-driven imagination for continuous deep reinforcement learning. In Proceedings of the Conference on Robot Learning, Mountain View, CA, USA, 13–15 November 2017; pp. 195–206.

- Banerjee, H.; Pusalkar, N.; Ren, H. Preliminary Design and Performance Test of Tendon-Driven Origami-Inspired Soft Peristaltic Robot. In Proceedings of the 2018 IEEE International Conference on Robotics and Biomimetics (IEEE ROBIO 2018), Kuala Lumpur, Malaysia, 12–15 December 2018.

- Cianchetti, M.; Ranzani, T.; Gerboni, G.; Nanayakkara, T.; Althoefer, K.; Dasgupta, P.; Menciassi, A. Soft Robotics Technologies to Address Shortcomings in Today’s Minimally Invasive Surgery: The STIFF-FLOP Approach. Soft Robot. 2014, 1, 122–131.

- Hawkes, E.W.; Blumenschein, L.H.; Greer, J.D.; Okamura, A.M. A soft robot that navigates its environment through growth. Sci. Robot. 2017, 2, eaan3028.

- Atalay, O.; Atalay, A.; Gafford, J.; Walsh, C. A Highly Sensitive Capacitive-Based Soft Pressure Sensor Based on a Conductive Fabric and a Microporous Dielectric Layer. Adv. Mater. 2017.

- Truby, R.L.; Wehner, M.J.; Grosskopf, A.K.; Vogt, D.M.; Uzel, S.G.M.; Wood, R.J.; Lewis, J.A. Soft Somatosensitive Actuators via Embedded 3D Printing. Adv. Mater. 2018, 30, e1706383.

- Bishop-Moser, J.; Kota, S. Design and Modeling of Generalized Fiber-Reinforced Pneumatic Soft Actuators. IEEE Trans. Robot. 2015, 31, 536–545.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529.

- Katzschmann, R.K.; DelPreto, J.; MacCurdy, R.; Rus, D. Exploration of underwater life with an acoustically controlled soft robotic fish. Sci. Robot. 2018, 3, eaar3449.

- Van Hasselt, H.; Guez, A.; Silver, D. Deep Reinforcement Learning with Double Q-Learning. In Proceedings of the AAAI, Phoenix, AZ, USA, 12–17 February 2016; Volume 2, p. 5.

- Wang, Z.; Schaul, T.; Hessel, M.; Van Hasselt, H.; Lanctot, M.; De Freitas, N. Dueling Network Architectures for Deep Reinforcement Learning. arXiv 2015, arXiv:1511.06581.

- Gu, S.; Lillicrap, T.; Sutskever, I.; Levine, S. Continuous deep Q-learning with model-based acceleration. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 2829–2838.

- Gu, S.; Holly, E.; Lillicrap, T.; Levine, S. Deep reinforcement learning for robotic manipulation with asynchronous off-policy updates. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3389–3396.

- Mnih, V.; Badia, A.P.; Mirza, M.; Graves, A.; Lillicrap, T.; Harley, T.; Silver, D.; Kavukcuoglu, K. Asynchronous methods for deep reinforcement learning. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 1928–1937.

- Wang, J.X.; Kurth-Nelson, Z.; Tirumala, D.; Soyer, H.; Leibo, J.Z.; Munos, R.; Blundell, C.; Kumaran, D.; Botvinick, M. Learning to Reinforcement Learn. arXiv 2016, arXiv:1611.05763.

- Wu, Y.; Mansimov, E.; Grosse, R.B.; Liao, S.; Ba, J. Scalable trust-region method for deep reinforcement learning using Kronecker-factored approximation. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5279–5288.

- Levine, S.; Koltun, V. Guided policy search. In Proceedings of the International Conference on Machine Learning, Atlanta, GA, USA, 16 June–21 June 2013; pp. 1–9.

- Schulman, J.; Levine, S.; Abbeel, P.; Jordan, M.; Moritz, P. Trust region policy optimization. In Proceedings of the International Conference on Machine Learning, Lille, France, 6 July–11 July 2015; pp. 1889–1897.

- Kakade, S.; Langford, J. Approximately optimal approximate reinforcement learning. In Proceedings of the ICML, Sydney, Australia, 8–12 July 2002; Volume 2, pp. 267–274.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal Policy Optimization Algorithms. arXiv 2017, arXiv:1707.06347.

- Mirowski, P.; Pascanu, R.; Viola, F.; Soyer, H.; Ballard, A.J.; Banino, A.; Denil, M.; Goroshin, R.; Sifre, L.; Kavukcuoglu, K.; et al. Learning to Navigate in Complex Environments. arXiv 2016, arXiv:1611.03673.

- Riedmiller, M.; Hafner, R.; Lampe, T.; Neunert, M.; Degrave, J.; Van de Wiele, T.; Mnih, V.; Heess, N.; Springenberg, J.T. Learning by Playing-Solving Sparse Reward Tasks from Scratch. arXiv 2018, arXiv:1802.10567.

- Yu, T.; Finn, C.; Xie, A.; Dasari, S.; Zhang, T.; Abbeel, P.; Levine, S. One-Shot Imitation from Observing Humans via Domain-Adaptive Meta-Learning. arXiv 2018, arXiv:1802.01557.

- Levine, S.; Finn, C.; Darrell, T.; Abbeel, P. End-to-end training of deep visuomotor policies. J. Mach. Learn. Res. 2016, 17, 1334–1373.

- Jaderberg, M.; Mnih, V.; Czarnecki, W.M.; Schaul, T.; Leibo, J.Z.; Silver, D.; Kavukcuoglu, K. Reinforcement Learning with Unsupervised Auxiliary Tasks. arXiv 2016, arXiv:1611.05397.

- Schaul, T.; Quan, J.; Antonoglou, I.; Silver, D. Prioritized Experience Replay. arXiv 2015, arXiv:1511.05952.

- Bengio, Y.; Louradour, J.; Collobert, R.; Weston, J. Curriculum learning. In Proceedings of the 26th Annual International Conference on Machine Learning, Montreal, QC, Canada, 14–18 June 2009; pp. 41–48.

- Zhang, J.; Tai, L.; Boedecker, J.; Burgard, W.; Liu, M. Neural SLAM. arXiv 2017, arXiv:1706.09520.

- Florensa, C.; Held, D.; Wulfmeier, M.; Zhang, M.; Abbeel, P. Reverse Curriculum Generation for Reinforcement Learning. arXiv 2017, arXiv:1707.05300.

- Pathak, D.; Agrawal, P.; Efros, A.A.; Darrell, T. Curiosity-driven exploration by self-supervised prediction. In Proceedings of the International Conference on Machine Learning (ICML), Sydney, Australia, 6–11 August 2017; Volume 2017.

- Sukhbaatar, S.; Lin, Z.; Kostrikov, I.; Synnaeve, G.; Szlam, A.; Fergus, R. Intrinsic Motivation and Automatic Curricula via Asymmetric Self-Play. arXiv 2017, arXiv:1703.05407.

- Fortunato, M.; Azar, M.G.; Piot, B.; Menick, J.; Osband, I.; Graves, A.; Mnih, V.; Munos, R.; Hassabis, D.; Pietquin, O.; et al. Noisy Networks for Exploration. arXiv 2017, arXiv:1706.10295.

- Plappert, M.; Houthooft, R.; Dhariwal, P.; Sidor, S.; Chen, R.Y.; Chen, X.; Asfour, T.; Abbeel, P.; Andrychowicz, M. Parameter Space Noise for Exploration. arXiv 2017, arXiv:1706.01905.

- Rafsanjani, A.; Zhang, Y.; Liu, B.; Rubinstein, S.M.; Bertoldi, K. Kirigami skins make a simple soft actuator crawl. Sci. Robot. 2018.

- Zhu, Y.; Mottaghi, R.; Kolve, E.; Lim, J.J.; Gupta, A.; Fei-Fei, L.; Farhadi, A. Target-driven visual navigation in indoor scenes using deep reinforcement learning. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3357–3364.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Kolve, E.; Mottaghi, R.; Gordon, D.; Zhu, Y.; Gupta, A.; Farhadi, A. AI2-THOR: An Interactive 3d Environment for Visual AI. arXiv 2017, arXiv:1712.05474.

- Tai, L.; Paolo, G.; Liu, M. Virtual-to-real deep reinforcement learning: Continuous control of mobile robots for mapless navigation. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 31–36.

- Chen, Y.F.; Everett, M.; Liu, M.; How, J.P. Socially aware motion planning with deep reinforcement learning. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 1343–1350.

- Long, P.; Fan, T.; Liao, X.; Liu, W.; Zhang, H.; Pan, J. Towards Optimally Decentralized Multi-Robot Collision Avoidance via Deep Reinforcement Learning. arXiv 2017, arXiv:1709.10082.

- Thrun, S.; Burgard, W.; Fox, D. Probabilistic Robotics (Intelligent Robotics and Autonomous Agents); The MIT Press: Cambridge, MA, USA, 2001.

- Gupta, S.; Davidson, J.; Levine, S.; Sukthankar, R.; Malik, J. Cognitive Mapping and Planning for Visual Navigation. arXiv 2017, arXiv:1702.03920.

- Gupta, S.; Fouhey, D.; Levine, S.; Malik, J. Unifying Map and Landmark Based Representations for Visual Navigation. arXiv 2017, arXiv:1712.08125.

- Parisotto, E.; Salakhutdinov, R. Neural Map: Structured Memory for Deep Reinforcement Learning. arXiv 2017, arXiv:1702.08360.

- Kümmerle, R.; Grisetti, G.; Strasdat, H.; Konolige, K.; Burgard, W. g2o: A general framework for graph optimization. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011; pp. 3607–3613.

- Parisotto, E.; Chaplot, D.S.; Zhang, J.; Salakhutdinov, R. Global Pose Estimation with an Attention-Based Recurrent Network. arXiv 2018, arXiv:1802.06857.

- Schaul, T.; Horgan, D.; Gregor, K.; Silver, D. Universal value function approximators. In Proceedings of the International Conference on Machine Learning, Lille, France, 6 July–11 July 2015; pp. 1312–1320.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. arXiv 2017, arXiv:1703.10593.

- Khan, A.; Zhang, C.; Atanasov, N.; Karydis, K.; Kumar, V.; Lee, D.D. Memory Augmented Control Networks. arXiv 2017, arXiv:1709.05706.

- Bruce, J.; Sünderhauf, N.; Mirowski, P.; Hadsell, R.; Milford, M. One-Shot Reinforcement Learning for Robot Navigation with Interactive Replay. arXiv 2017, arXiv:1711.10137.

- Chaplot, D.S.; Parisotto, E.; Salakhutdinov, R. Active Neural Localization. arXiv 2018, arXiv:1801.08214.

- Savinov, N.; Dosovitskiy, A.; Koltun, V. Semi-Parametric Topological Memory for Navigation. arXiv 2018, arXiv:1803.00653.

- Heess, N.; Sriram, S.; Lemmon, J.; Merel, J.; Wayne, G.; Tassa, Y.; Erez, T.; Wang, Z.; Eslami, A.; Riedmiller, M.; et al. Emergence of Locomotion Behaviours in Rich Environments. arXiv 2017, arXiv:1707.02286.

- Calisti, M.; Giorelli, M.; Levy, G.; Mazzolai, B.; Hochner, B.; Laschi, C.; Dario, P. An octopus-bioinspired solution to movement and manipulation for soft robots. Bioinspir. Biomim. 2011, 6, 036002.

- Martinez, R.V.; Branch, J.L.; Fish, C.R.; Jin, L.; Shepherd, R.F.; Nunes, R.M.D.; Suo, Z.; Whitesides, G.M. Robotic tentacles with three-dimensional mobility based on flexible elastomers. Adv. Mater. 2013, 25, 205–212.

- Caldera, S. Review of Deep Learning Methods in Robotic Grasp Detection. Multimodal Technol. Interact. 2018, 2, 57.

- Zhou, J.; Chen, S.; Wang, Z. A Soft-Robotic Gripper with Enhanced Object Adaptation and Grasping Reliability. IEEE Robot. Autom. Lett. 2017, 2, 2287–2293.

- Finn, C.; Tan, X.Y.; Duan, Y.; Darrell, T.; Levine, S.; Abbeel, P. Deep Spatial Autoencoders for Visuomotor Learning. arXiv 2015, arXiv:1509.06113.

- Tzeng, E.; Devin, C.; Hoffman, J.; Finn, C.; Peng, X.; Levine, S.; Saenko, K.; Darrell, T. Towards Adapting Deep Visuomotor Representations from Simulated to Real Environments. arXiv 2015, arXiv:1511.07111v3.

- Fu, J.; Levine, S.; Abbeel, P. One-shot learning of manipulation skills with online dynamics adaptation and neural network priors. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 4019–4026.

- Kumar, V.; Todorov, E.; Levine, S. Optimal control with learned local models: Application to dexterous manipulation. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–20 May 2016; pp. 378–383.

- Gupta, A.; Eppner, C.; Levine, S.; Abbeel, P. Learning dexterous manipulation for a soft robotic hand from human demonstrations. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 3786–3793.

- Popov, I.; Heess, N.; Lillicrap, T.; Hafner, R.; Barth-Maron, G.; Vecerik, M.; Lampe, T.; Tassa, Y.; Erez, T.; Riedmiller, M. Data-Efficient Deep Reinforcement Learning for Dexterous manipulation. arXiv 2017, arXiv:1704.03073.

- Prituja, A.; Banerjee, H.; Ren, H. Electromagnetically Enhanced Soft and Flexible Bend Sensor: A Quantitative Analysis with Different Cores. IEEE Sens. J. 2018, 18, 3580–3589.

- Sun, J.Y.; Zhao, X.; Illeperuma, W.R.; Chaudhuri, O.; Oh, K.H.; Mooney, D.J.; Vlassak, J.J.; Suo, Z. Highly stretchable and tough hydrogels. Nature 2012, 489, 133–136.

- Tzeng, E.; Hoffman, J.; Zhang, N.; Saenko, K.; Darrell, T. Deep Domain Confusion: Maximizing for Domain Invariance. arXiv 2014, arXiv:1412.3474.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems (NIPS 2014), Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

- Radford, A.; Metz, L.; Chintala, S. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. arXiv 2015, arXiv:1511.06434.

- Arjovsky, M.; Chintala, S.; Bottou, L. Wasserstein GAN. arXiv 2017, arXiv:1701.07875.

- Hoffman, J.; Tzeng, E.; Park, T.; Zhu, J.Y.; Isola, P.; Saenko, K.; Efros, A.A.; Darrell, T. CyCADA: Cycle-Consistent Adversarial Domain Adaptation. arXiv 2017, arXiv:1711.03213.

- Doersch, C. Tutorial on Variational Autoencoders. arXiv 2016, arXiv:1606.05908v2.

- Szabó, A.; Hu, Q.; Portenier, T.; Zwicker, M.; Favaro, P. Challenges in Disentangling Independent Factors of Variation. arXiv 2017, arXiv:1711.02245v1.

- Mathieu, M.; Zhao, J.J.; Sprechmann, P.; Ramesh, A.; LeCun, Y. Disentangling factors of variation in deep representations using adversarial training. In Proceedings of the NIPS 2016, Barcelona, Spain, 5–10 December 2016.

- Bousmalis, K.; Irpan, A.; Wohlhart, P.; Bai, Y.; Kelcey, M.; Kalakrishnan, M.; Downs, L.; Ibarz, J.; Pastor, P.; Konolige, K.; et al. Using Simulation and Domain Adaptation to Improve Efficiency of Deep Robotic Grasping. arXiv 2017, arXiv:1709.07857.

- Tobin, J.; Fong, R.; Ray, A.; Schneider, J.; Zaremba, W.; Abbeel, P. Domain randomization for transferring deep neural networks from simulation to the real world. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 23–30.

- Peng, X.B.; Andrychowicz, M.; Zaremba, W.; Abbeel, P. Sim-to-Real Transfer of Robotic Control with Dynamics Randomization. arXiv 2017, arXiv:1710.06537.

- Rusu, A.A.; Vecerik, M.; Rothörl, T.; Heess, N.; Pascanu, R.; Hadsell, R. Sim-to-Real Robot Learning from Pixels with Progressive Nets. arXiv 2016, arXiv:1610.04286.

- Zhang, J.; Tai, L.; Xiong, Y.; Liu, M.; Boedecker, J.; Burgard, W. VR Goggles for Robots: Real-to-Sim Domain Adaptation for Visual Control. arXiv 2018, arXiv:1802.00265.

- Ruder, M.; Dosovitskiy, A.; Brox, T. Artistic style transfer for videos and spherical images. Int. J. Comput. Vis. 2018, 126, 1199–1219.

- Koenig, N.P.; Howard, A. Design and use paradigms for Gazebo, an open-source multi-robot simulator. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2004; Volume 4, pp. 2149–2154.

- Maddern, W.; Pascoe, G.; Linegar, C.; Newman, P. 1 year, 1000 km: The Oxford RobotCar dataset. Int. J. Robot. Res. 2017, 36, 3–15.

- Dosovitskiy, A.; Ros, G.; Codevilla, F.; Lopez, A.; Koltun, V. CARLA: An Open Urban Driving Simulator. arXiv 2017, arXiv:1711.03938.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Semantic Image Segmentation with Deep Convolutional Nets and Fully Connected CRFs. arXiv 2014, arXiv:1412.7062.

- Yang, L.; Liang, X.; Xing, E. Unsupervised Real-to-Virtual Domain Unification for End-to-End Highway Driving. arXiv 2018, arXiv:1801.03458.

- Uesugi, K.; Shimizu, K.; Akiyama, Y.; Hoshino, T.; Iwabuchi, K.; Morishima, K. Contractile performance and controllability of insect muscle-powered bioactuator with different stimulation strategies for soft robotics. Soft Robot. 2016, 3, 13–22.

- Niiyama, R.; Sun, X.; Sung, C.; An, B.; Rus, D.; Kim, S. Pouch Motors: Printable Soft Actuators Integrated with Computational Design. Soft Robot. 2015, 2, 59–70.

- Gul, J.Z.; Sajid, M.; Rehman, M.M.; Siddiqui, G.U.; Shah, I.; Kim, K.C.; Lee, J.W.; Choi, K.H. 3D printing for soft robotics—A review. Sci. Technol. Adv. Mater. 2018, 19, 243–262.

- Umedachi, T.; Vikas, V.; Trimmer, B. Highly deformable 3-D printed soft robot generating inching and crawling locomotions with variable friction legs. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 4590–4595.

- Mutlu, R.; Tawk, C.; Alici, G.; Sariyildiz, E. A 3D printed monolithic soft gripper with adjustable stiffness. In Proceedings of the IECON 2017—43rd Annual Conference of the IEEE Industrial Electronics Society, Beijing, China, 29 October–1 November 2017; pp. 6235–6240.

- Lu, N.; Hyeong Kim, D. Flexible and Stretchable Electronics Paving the Way for Soft Robotics. Soft Robot. 2014, 1, 53–62.

- Rohmer, E.; Singh, S.P.; Freese, M. V-REP: A versatile and scalable robot simulation framework. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Tokyo, Japan, 3–8 November 2013; pp. 1321–1326.

- Shah, S.; Dey, D.; Lovett, C.; Kapoor, A. AirSim: High-fidelity visual and physical simulation for autonomous vehicles. In Field and Service Robotics; Springer: Berlin, Germany, 2018; pp. 621–635.

- Pan, X.; You, Y.; Wang, Z.; Lu, C. Virtual to Real Reinforcement Learning for Autonomous Driving. arXiv 2017, arXiv:1704.03952.

- Savva, M.; Chang, A.X.; Dosovitskiy, A.; Funkhouser, T.; Koltun, V. MINOS: Multimodal Indoor Simulator for Navigation in Complex Environments. arXiv 2017, arXiv:1712.03931.

- Wu, Y.; Wu, Y.; Gkioxari, G.; Tian, Y. Building Generalizable Agents with a Realistic and Rich 3D Environment. arXiv 2018, arXiv:1801.02209.

- Coevoet, E.; Bieze, T.M.; Largilliere, F.; Zhang, Z.; Thieffry, M.; Sanz-Lopez, M.; Carrez, B.; Marchal, D.; Goury, O.; Dequidt, J.; et al. Software toolkit for modeling, simulation, and control of soft robots. Adv. Robot. 2017, 31, 1208–1224.

- Duriez, C.; Coevoet, E.; Largilliere, F.; Bieze, T.M.; Zhang, Z.; Sanz-Lopez, M.; Carrez, B.; Marchal, D.; Goury, O.; Dequidt, J. Framework for online simulation of soft robots with optimization-based inverse model. In Proceedings of the 2016 IEEE International Conference on Simulation, Modeling, and Programming for Autonomous Robots (SIMPAR), San Francisco, CA, USA, 13–16 December 2016; pp. 111–118.

- Olaya, J.; Pintor, N.; Avilés, O.F.; Chaparro, J. Analysis of 3 RPS Robotic Platform Motion in SimScape and MATLAB GUI Environment. Int. J. Appl. Eng. Res. 2017, 12, 1460–1468.

- Coevoet, E.; Escande, A.; Duriez, C. Optimization-based inverse model of soft robots with contact handling. IEEE Robot. Autom. Lett. 2017, 2, 1413–1419.

- Yekutieli, Y.; Sagiv-Zohar, R.; Aharonov, R.; Engel, Y.; Hochner, B.; Flash, T. Dynamic model of the octopus arm. I. Biomechanics of the octopus reaching movement. J. Neurophysiol. 2005, 94, 1443–1458.

- Zatopa, A.; Walker, S.; Menguc, Y. Fully soft 3D-printed electroactive fluidic valve for soft hydraulic robots. Soft Robot. 2018, 5, 258–271.

- Ratliff, N.D.; Bagnell, J.A.; Srinivasa, S.S. Imitation learning for locomotion and manipulation. In Proceedings of the 2007 7th IEEE-RAS International Conference on Humanoid Robots, Pittsburgh, PA, USA, 29 November–1 December 2007; pp. 392–397.

- Langsfeld, J.D.; Kaipa, K.N.; Gentili, R.J.; Reggia, J.A.; Gupta, S.K. Towards Imitation Learning of Dynamic Manipulation Tasks: A Framework to Learn from Failures. Available online: https://pdfs.semanticscholar.org/5e1a/d502aeb5a800f458390ad1a13478d0fbd39b.pdf (accessed on 18 January 2019).

- Ross, S.; Gordon, G.; Bagnell, D. A reduction of imitation learning and structured prediction to no-regret online learning. In Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 11–13 April 2011; pp. 627–635.

- Bojarski, M.; Del Testa, D.; Dworakowski, D.; Firner, B.; Flepp, B.; Goyal, P.; Jackel, L.D.; Monfort, M.; Muller, U.; Zhang, J.; et al. End to end Learning for Self-Driving Cars. arXiv 2016, arXiv:1604.07316.

- Tai, L.; Li, S.; Liu, M. A deep-network solution towards model-less obstacle avoidance. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 2759–2764.

- Giusti, A.; Guzzi, J.; Ciresan, D.C.; He, F.L.; Rodríguez, J.P.; Fontana, F.; Faessler, M.; Forster, C.; Schmidhuber, J.; Di Caro, G.; et al. A Machine Learning Approach to Visual Perception of Forest Trails for Mobile Robots. IEEE Robot. Autom. Lett. 2016, 1, 661–667.

- Codevilla, F.; Müller, M.; Dosovitskiy, A.; López, A.; Koltun, V. End-to-End Driving via Conditional Imitation Learning. arXiv 2017, arXiv:1710.02410.

- Duan, Y.; Andrychowicz, M.; Stadie, B.C.; Ho, J.; Schneider, J.; Sutskever, I.; Abbeel, P.; Zaremba, W. One-Shot Imitation Learning. In Proceedings of the NIPS, Long Beach, CA, USA, 4–9 December 2017.

- Finn, C.; Yu, T.; Zhang, T.; Abbeel, P.; Levine, S. One-Shot Visual Imitation Learning via Meta-Learning. arXiv 2017, arXiv:1709.04905.

- Finn, C.; Abbeel, P.; Levine, S. Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks. arXiv 2017, arXiv:1703.03400.

- Eitel, A.; Hauff, N.; Burgard, W. Learning to Singulate Objects Using a Push Proposal Network. arXiv 2017, arXiv:1707.08101.

- Ziebart, B.D.; Maas, A.L.; Bagnell, J.A.; Dey, A.K. Maximum Entropy Inverse Reinforcement Learning. In Proceedings of the AAAI, Chicago, IL, USA, 13–17 July 2008; Volume 8, pp. 1433–1438.

- Okal, B.; Arras, K.O. Learning socially normative robot navigation behaviors with bayesian inverse reinforcement learning. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–20 May 2016; pp. 2889–2895. [Google Scholar]

- Pfeiffer, M.; Schwesinger, U.; Sommer, H.; Galceran, E.; Siegwart, R. Predicting actions to act predictably: Cooperative partial motion planning with maximum entropy models. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 2096–2101.

- Kretzschmar, H.; Spies, M.; Sprunk, C.; Burgard, W. Socially compliant mobile robot navigation via inverse reinforcement learning. Int. J. Robot. Res. 2016, 35, 1289–1307.

- Wulfmeier, M.; Ondruska, P.; Posner, I. Maximum Entropy Deep Inverse Reinforcement Learning. arXiv 2015, arXiv:1507.04888.

- Ho, J.; Ermon, S. Generative adversarial imitation learning. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 4565–4573.

- Baram, N.; Anschel, O.; Mannor, S. Model-Based Adversarial Imitation Learning. arXiv 2016, arXiv:1612.02179.

- Wang, Z.; Merel, J.S.; Reed, S.E.; de Freitas, N.; Wayne, G.; Heess, N. Robust imitation of diverse behaviors. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5320–5329.

- Li, Y.; Song, J.; Ermon, S. Inferring the Latent Structure of Human Decision-Making from Raw Visual Inputs. arXiv 2017, arXiv:1703.08840.

- Tai, L.; Zhang, J.; Liu, M.; Burgard, W. Socially-Compliant Navigation through Raw Depth Inputs with Generative Adversarial Imitation Learning. arXiv 2017, arXiv:1710.02543.

- Stadie, B.C.; Abbeel, P.; Sutskever, I. Third-Person Imitation Learning. arXiv 2017, arXiv:1703.01703.

- Wehner, M.; Truby, R.L.; Fitzgerald, D.J.; Mosadegh, B.; Whitesides, G.M.; Lewis, J.A.; Wood, R.J. An integrated design and fabrication strategy for entirely soft, autonomous robots. Nature 2016, 536.

- Sanan, S.; Moidel, J.B.; Atkeson, C.G. Physical human interaction for an inflatable manipulator. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Boston, MA, USA, 30 August–3 September 2011; pp. 7401–7404.

- Rogóż, M.; Zeng, H.; Xuan, C.; Wiersma, D.S.; Wasylczyk, P. Light-driven soft robot mimics caterpillar locomotion in natural scale. Adv. Opt. Mater. 2016, 4.

- Katzschmann, R.K.; Marchese, A.D.; Rus, D. Hydraulic Autonomous Soft Robotic Fish for 3D Swimming. In Proceedings of the ISER, Marrakech and Essaouira, Morocco, 15–18 June 2014.

- Katzschmann, R.K.; de Maille, A.; Dorhout, D.L.; Rus, D. Cyclic hydraulic actuation for soft robotic devices. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 3048–3055.

- DelPreto, J.; Katzschmann, R.K.; MacCurdy, R.B.; Rus, D. A Compact Acoustic Communication Module for Remote Control Underwater. In Proceedings of the WUWNet, Washington, DC, USA, 22–24 October 2015.

- Marchese, A.D.; Onal, C.D.; Rus, D. Towards a Self-contained Soft Robotic Fish: On-Board Pressure Generation and Embedded Electro-permanent Magnet Valves. In Proceedings of the ISER, Quebec City, QC, Canada, 17–21 June 2012.

- Narang, Y.S.; Degirmenci, A.; Vlassak, J.J.; Howe, R.D. Transforming the Dynamic Response of Robotic Structures and Systems Through Laminar Jamming. IEEE Robot. Autom. Lett. 2018, 3, 688–695.

- Narang, Y.S.; Vlassak, J.J.; Howe, R.D. Mechanically Versatile Soft Machines Through Laminar Jamming. Adv. Funct. Mater. 2017, 28, 1707136.

- Kim, T.; Yoon, S.J.; Park, Y.L. Soft Inflatable Sensing Modules for Safe and Interactive Robots. IEEE Robot. Autom. Lett. 2018, 3, 3216–3223.

- Qi, R.; Lam, T.L.; Xu, Y. Mechanical design and implementation of a soft inflatable robot arm for safe human-robot interaction. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 3490–3495.

- Zeng, H.; Wani, O.M.; Wasylczyk, P.; Priimagi, A. Light-Driven, Caterpillar-Inspired Miniature Inching Robot. Macromol. Rapid Commun. 2018, 39, 1700224.

- Banerjee, H.; Ren, H. Optimizing double-network hydrogel for biomedical soft robots. Soft Robot. 2017, 4, 191–201.

- Henderson, P.; Islam, R.; Bachman, P.; Pineau, J.; Precup, D.; Meger, D. Deep Reinforcement Learning that Matters. arXiv 2017, arXiv:1709.06560.

- Večerík, M.; Hester, T.; Scholz, J.; Wang, F.; Pietquin, O.; Piot, B.; Heess, N.; Rothörl, T.; Lampe, T.; Riedmiller, M.A. Leveraging Demonstrations for Deep Reinforcement Learning on Robotics Problems with Sparse Rewards. arXiv 2017, arXiv:1707.08817v1.

- Nair, A.; McGrew, B.; Andrychowicz, M.; Zaremba, W.; Abbeel, P. Overcoming Exploration in Reinforcement Learning with Demonstrations. arXiv 2017, arXiv:1709.10089.

- Gao, Y.; Lin, J.; Yu, F.; Levine, S.; Darrell, T. Reinforcement Learning from Imperfect Demonstrations. arXiv 2018, arXiv:1802.05313.

- Zhu, Y.; Wang, Z.; Merel, J.; Rusu, A.; Erez, T.; Cabi, S.; Tunyasuvunakool, S.; Kramár, J.; Hadsell, R.; de Freitas, N.; et al. Reinforcement and Imitation Learning for Diverse Visuomotor Skills. arXiv 2018, arXiv:1802.09564.

- Nichol, A.; Schulman, J. Reptile: A Scalable Metalearning Algorithm. arXiv 2018, arXiv:1803.02999.

| Algorithm | Value-Based Methods | Policy-Based Methods (and Actor-Critic Methods) |
|---|---|---|
| Examples | Q-Learning, SARSA, Value Iteration | Advantage Actor-Critic, Cross-Entropy Method |
| Steps Involved | Find the optimal value function, then derive the policy from it (policy extraction) | Policy evaluation and policy improvement |
| Iteration | The two steps are performed once, in sequence, and not repeated | The two steps are repeated alternately until convergence |
| Convergence | Relatively slower | Relatively faster |
| Type of Problem Solved | Relatively harder control problems | Relatively basic control problems |
| Method of Learning | Explicit exploration | Innate exploration and stochasticity |
| Advantages | Simple to train off-policy | Blends well with supervised learning |
| Process Basis | Based on the Bellman optimality operator (a non-linear operator) | Based on the Bellman (expectation) operator |
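
The step structure described in the "Steps Involved", "Iteration" and "Process Basis" rows can be illustrated with a small tabular sketch. It is not taken from the reviewed works: the three-state MDP (`P`, `R`, `GAMMA`) is hypothetical, and classic value iteration and policy iteration stand in for the two columns because they are the simplest algorithms with exactly these structures (policy iteration is a dynamic-programming method, not a policy-gradient one). Value iteration applies the non-linear Bellman optimality operator until the values stop changing and extracts the policy once at the end; policy iteration alternates exact policy evaluation, which solves the linear Bellman expectation equation, with greedy policy improvement until the policy no longer changes.

```python
import numpy as np

# Hypothetical toy MDP (illustration only): 3 states in a row, 2 actions.
# Action 0 = "stay", action 1 = "move right"; state 2 is the rightmost state.
# P[a, s, s2] is the probability of landing in s2 after taking action a in s.
N_STATES, GAMMA = 3, 0.9
P = np.array([
    [[1.0, 0.0, 0.0],   # action 0: "stay"
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]],
    [[0.0, 1.0, 0.0],   # action 1: "move right" (state 2 cannot move further)
     [0.0, 0.0, 1.0],
     [0.0, 0.0, 1.0]],
])
# R[s, a]: the only reward is 1 for staying in state 2.
R = np.array([[0.0, 0.0],
              [0.0, 0.0],
              [1.0, 0.0]])


def q_from_v(v):
    """Q[s, a] = R[s, a] + GAMMA * sum_s2 P[a, s, s2] * v[s2]."""
    return R + GAMMA * np.einsum("ast,t->sa", P, v)


def value_iteration(tol=1e-8):
    v = np.zeros(N_STATES)
    while True:
        # Bellman optimality operator: non-linear because of the max over actions.
        v_new = q_from_v(v).max(axis=1)
        if np.max(np.abs(v_new - v)) < tol:
            break
        v = v_new
    # Policy extraction happens once, after the value function has converged.
    return v_new, q_from_v(v_new).argmax(axis=1)


def policy_iteration():
    policy = np.zeros(N_STATES, dtype=int)  # start with "stay" everywhere
    while True:
        # Policy evaluation: solve the linear Bellman expectation equation
        # V = R_pi + GAMMA * P_pi V exactly for the current policy.
        P_pi = P[policy, np.arange(N_STATES)]  # shape (S, S)
        R_pi = R[np.arange(N_STATES), policy]  # shape (S,)
        v = np.linalg.solve(np.eye(N_STATES) - GAMMA * P_pi, R_pi)
        # Policy improvement: act greedily with respect to the evaluated values.
        new_policy = q_from_v(v).argmax(axis=1)
        if np.array_equal(new_policy, policy):
            return v, policy
        policy = new_policy


v_vi, pi_vi = value_iteration()
v_pi, pi_pi = policy_iteration()
print("value iteration: ", v_vi.round(3), pi_vi)
print("policy iteration:", v_pi.round(3), pi_pi)
```

On this toy problem both routines should return the same policy (move right in states 0 and 1, stay in state 2) with V close to (8.1, 9, 10). Policy iteration settles after three evaluation/improvement rounds, whereas value iteration needs well over a hundred sweeps to meet the `1e-8` tolerance; on a toy model this only illustrates the "Convergence" row rather than proving it.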

| Domain of Application | Basic Applications | Methods in Which DRL/Imitation Learning Algorithms Can Be Incorporated |
|---|---|---|
| Biomedical [42] |  |  |
| Manipulation [43] |  |  |
| Mobile Robotics [44] |  |  |

| Simulator | Modalities | Special Purpose of Use |
|---|---|---|
| Gazebo (Koenig et al. [117]) | Sensor Plugins | General Purpose |
| V-REP (Rohmer et al. [128]) | Sensor Plugins | General Purpose |
| AirSim (Shah et al. [129]) | Depth/Color/Semantics | Autonomous Driving |
| CARLA (Dosovitskiy et al. [119]) | Depth/Color/Semantics | Autonomous Driving |
| TORCS (Pan et al. [130]) | Color/Semantics | Autonomous Driving |
| AI2-THOR (Kolve et al. [74]) | Color | Indoor Navigation |
| MINOS (Savva et al. [131]) | Depth/Color/Semantics | Indoor Navigation |
| House3D (Wu et al. [132]) | Depth/Color/Semantics | Indoor Navigation |

| Type of Soft Bio-Inspired Robot | Features of Soft Physical Structure | Applications of DRL/Imitation Learning Algorithms |
|---|---|---|
| MIT’s Soft Robotic Fish (SoFi) [46,164,165,166,167] |  |  |
| Harvard’s Soft Octopus (Octobot) [168,169] |  |  |
| CMU’s Inflatable Soft Robotic Arm [170,171] |  |  |
| Soft Caterpillar Micro-Robot [172] |  |  |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Bhagat, S.; Banerjee, H.; Ho Tse, Z.T.; Ren, H. Deep Reinforcement Learning for Soft, Flexible Robots: Brief Review with Impending Challenges. Robotics 2019, 8, 4. https://doi.org/10.3390/robotics8010004
