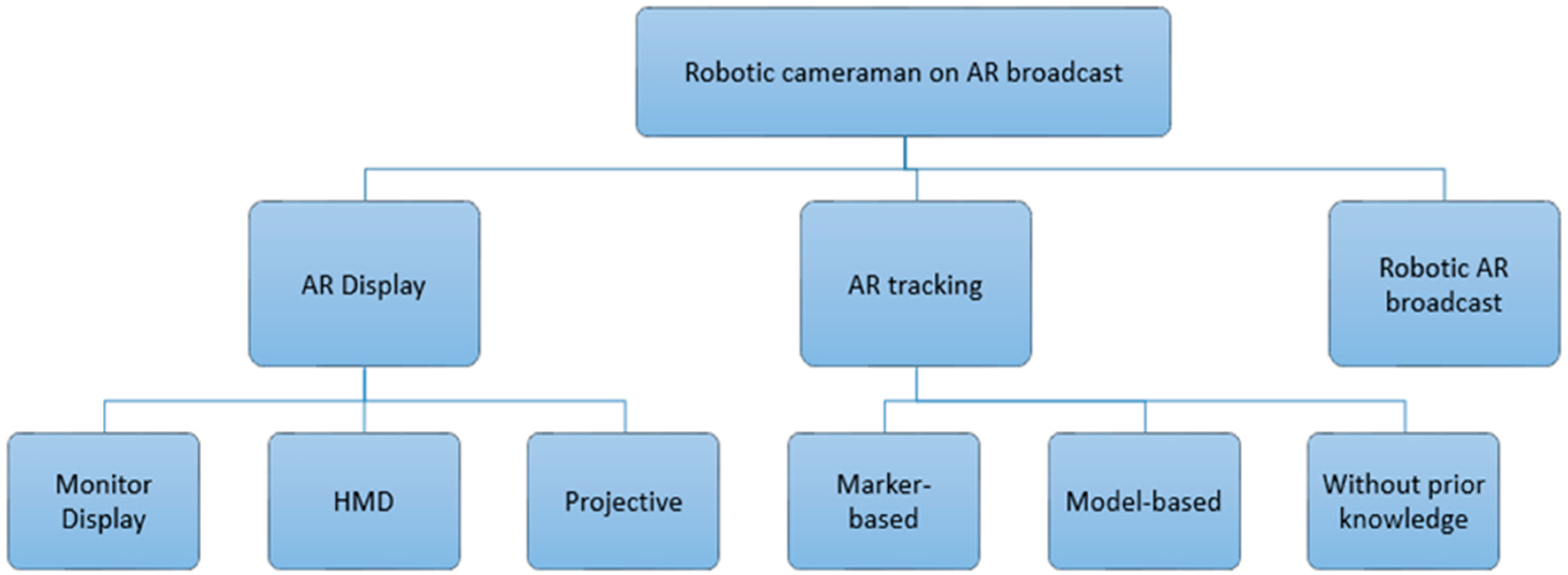

Application of Augmented Reality and Robotic Technology in Broadcasting: A Survey

Abstract

1. Introduction

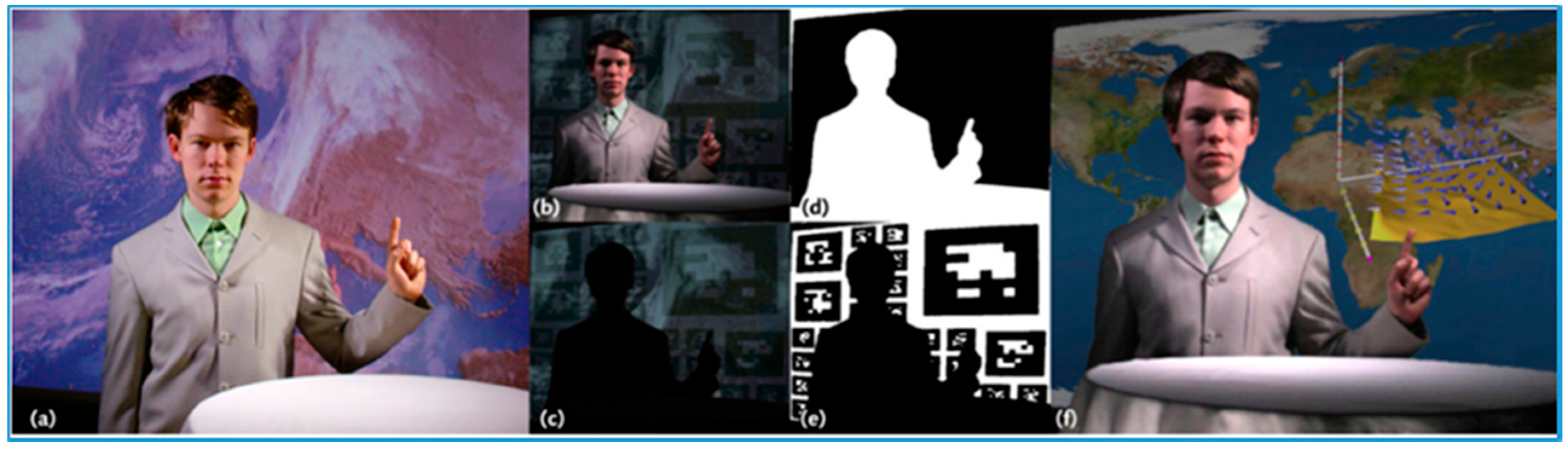

2. Display of AR Broadcast

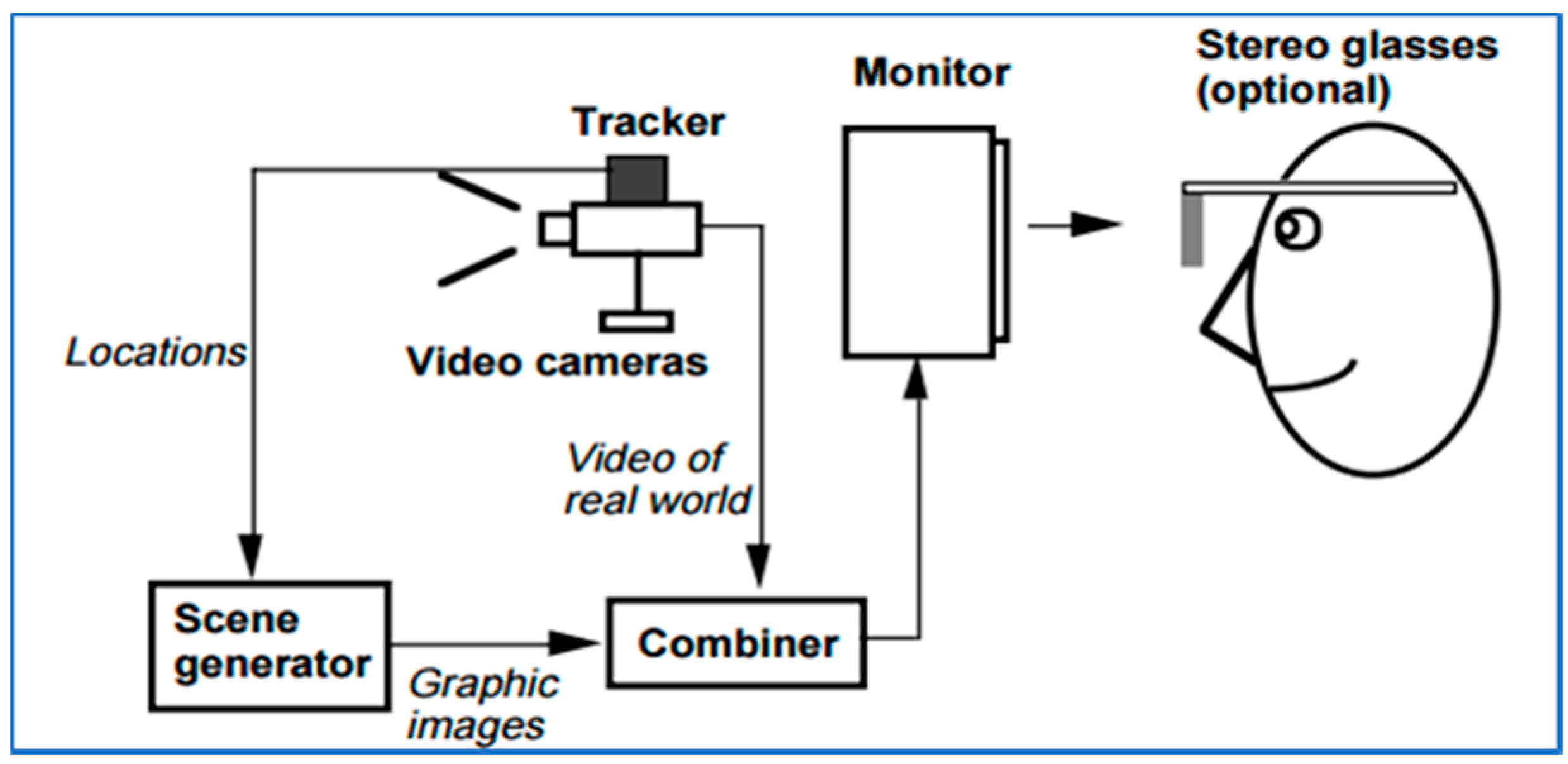

2.1. Monitor-Based Applications

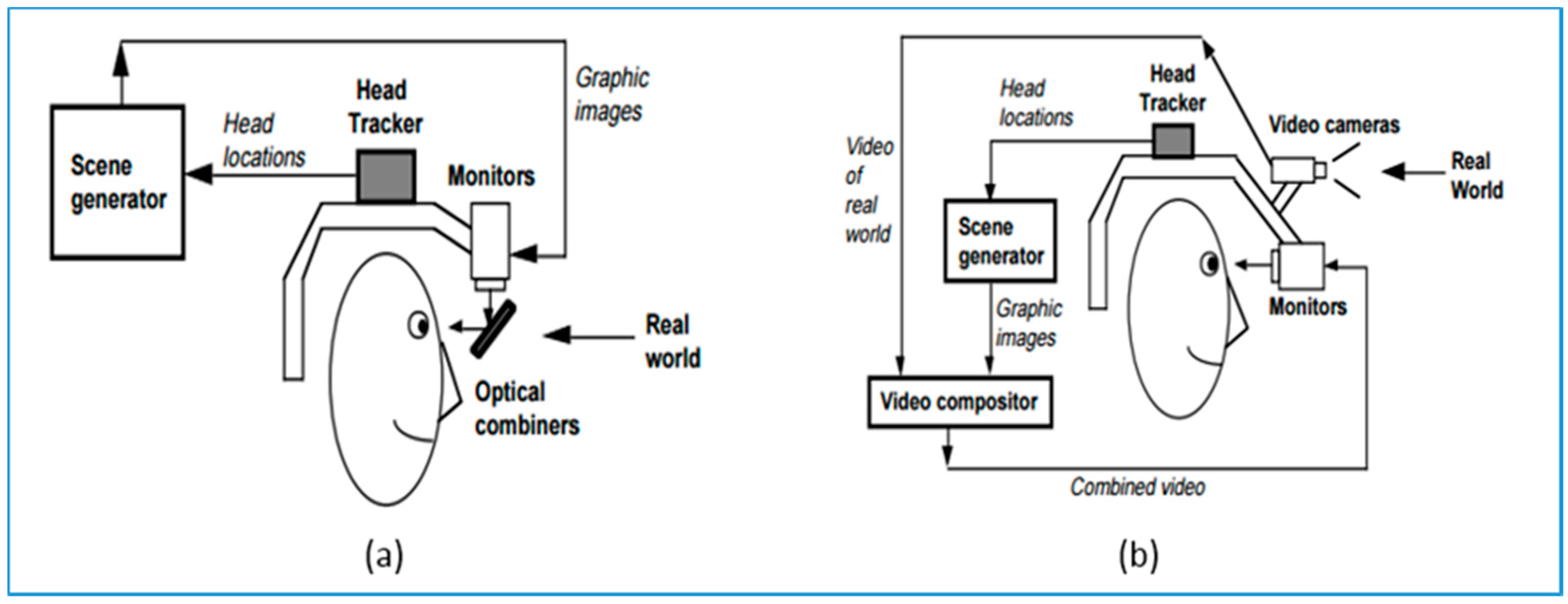

2.2. HMD (Head-Mounted Display)

2.3. Projector-Based Augmented Reality System

2.4. Summary

3. AR Tracking in Broadcasting

3.1. Sensors in AR Broadcasting

3.1.1. Camera

3.1.2. IMU

3.1.3. Infrared Sensor

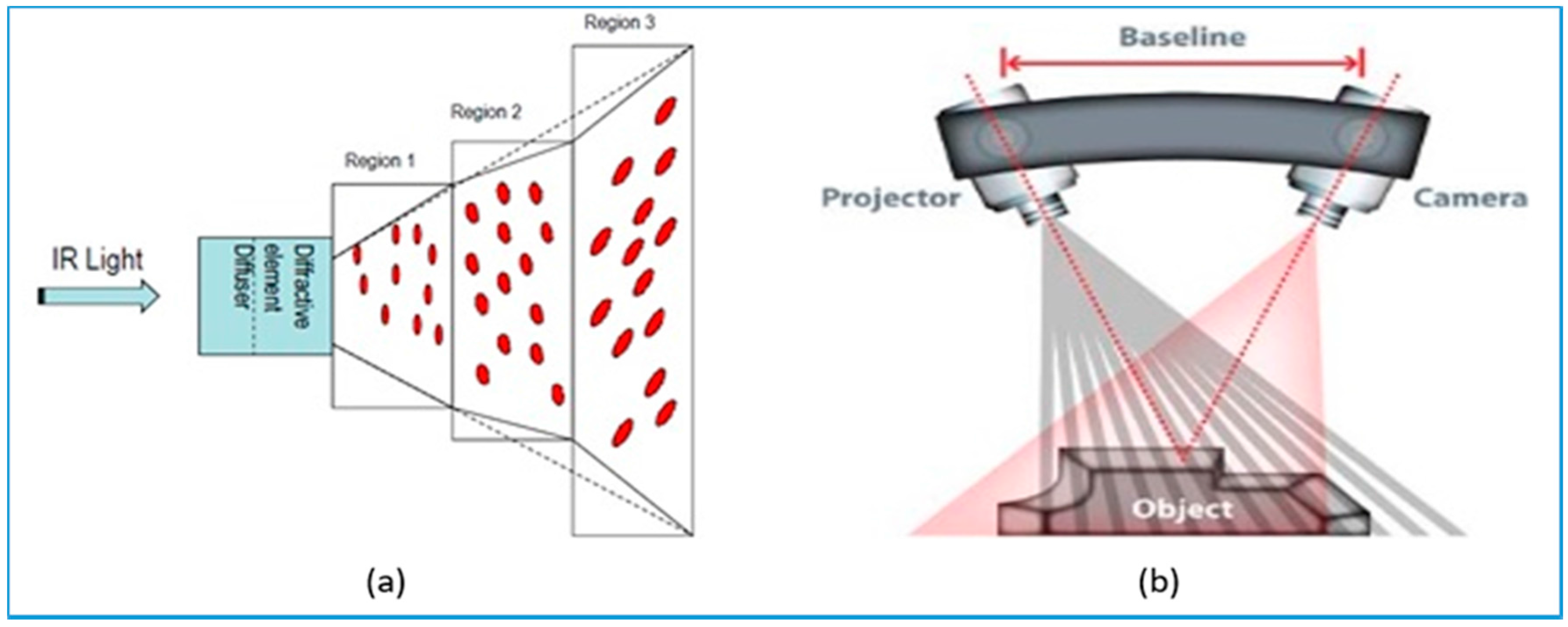

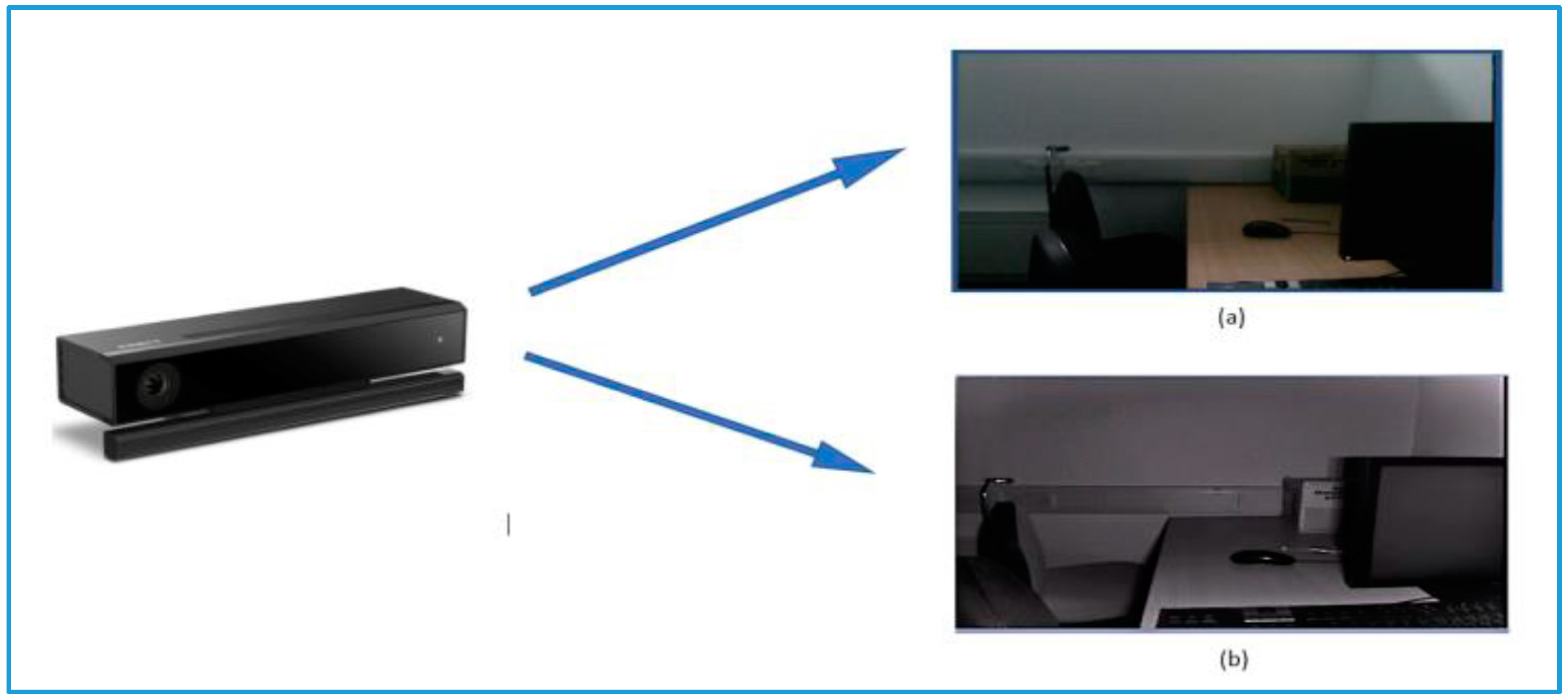

- (i) The structured-light projection technique for obtaining depth information, e.g., Kinect V1 in Figure 4a. It projects light patterns onto the object surface with an LCD projector or another light source, and then calculates the distance of each point by analyzing the deformation of the projected patterns. Structured-light projection is also popular for calibrating the intrinsic and extrinsic parameters of camera-projector systems [30,31].

- (ii) The time-of-flight (TOF) technique for obtaining depth information, as shown in Figure 4b. A TOF-based 3D camera projects laser light onto the target surface and measures the time taken for the light to be reflected back, from which the distance of each point is calculated [23]. It works over a long range with high accuracy. The SwissRanger SR4000/SR4500 and Kinect V2 are two popular sensors of this type.
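Both techniques reduce to simple geometry. The sketch below is a minimal illustration under idealized assumptions, not the processing pipeline of any particular sensor: a pulsed TOF camera halves the round-trip path of the light (d = c·t/2), and a rectified camera-projector pair triangulates depth from the pixel shift (disparity) of a projected pattern feature (z = f·b/d). The function names and example numbers are hypothetical. Many commercial TOF cameras measure the phase shift of amplitude-modulated light rather than timing a single pulse, and real devices add calibration and correction steps that are omitted here.

```python
# Idealized depth-from-sensor models (illustrative sketch, not any device's firmware).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_depth(round_trip_time_s: float) -> float:
    """Pulsed time-of-flight: the light travels to the surface and back,
    so the one-way distance is half of the round-trip path length."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def structured_light_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Triangulation for an idealized, rectified camera-projector pair:
    a pattern feature shifted by `disparity_px` pixels lies at depth f * b / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical readings chosen only to illustrate the two formulas.
    print(f"TOF: {tof_depth(20e-9):.3f} m")  # prints "TOF: 2.998 m"
    print(f"Structured light: {structured_light_depth(580.0, 0.075, 29.0):.3f} m")  # prints "Structured light: 1.500 m"
```

Because structured-light depth varies as 1/d, its resolution degrades with distance; for TOF, 1 mm of depth corresponds to only about 6.7 ps of round-trip time, which is why TOF sensors need very precise timing or phase measurement.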

3.1.4. Hybrid Sensors

3.2. Marker-Based Approaches

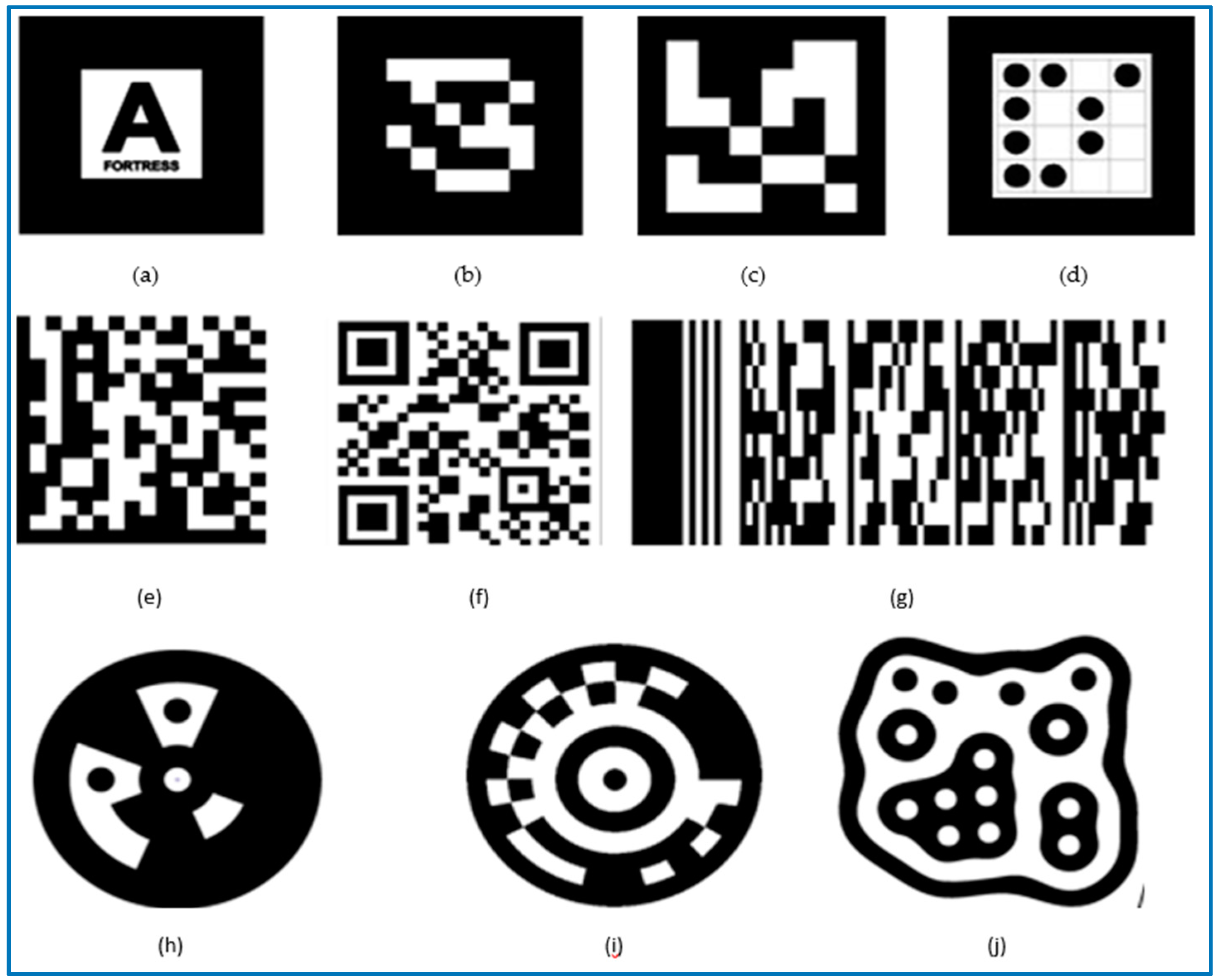

3.2.1. 2D Marker

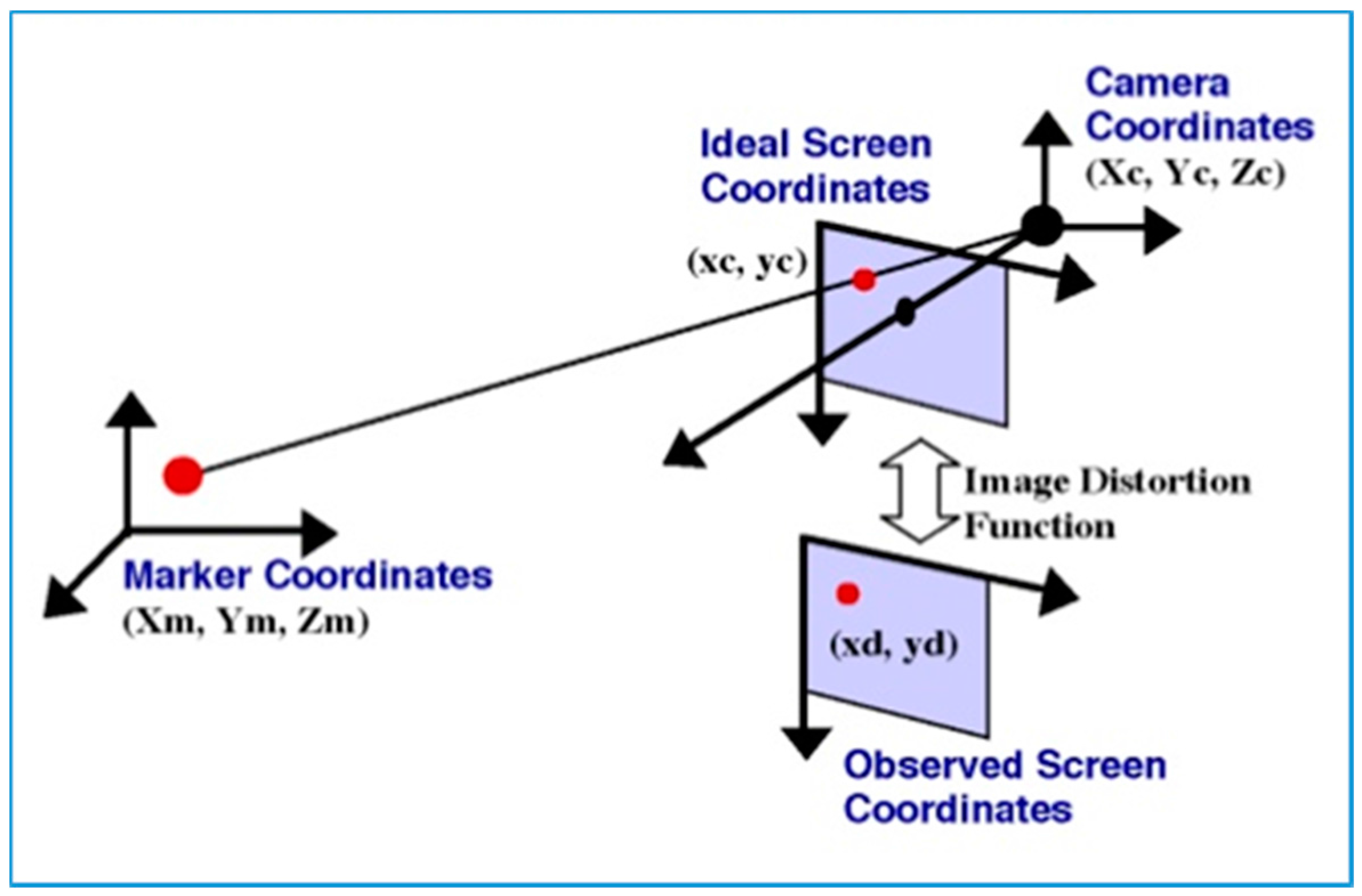

3.2.2. 2D Marker-Based Tracking Algorithm

3.2.3. 3D Marker-based Tracking

3.3. Model-Based Approach

3.4. Tracking without Prior Knowledge

3.5. Summary

- Marker tracking is computationally inexpensive and remains efficient even with poor-quality cameras, but it requires the camera to keep the whole 2D marker inside its FOV throughout the tracking process.

- As an improvement, the model-based approach first reconstructs a suitable model by scanning the working environment, and then tracks the camera pose by matching the current frame against reference frames. Because it does not rely on visual markers, this approach avoids instrumenting the environment and offers better robustness and accuracy. It is preferred by the recent AR broadcasting industry.

- The most recent camera pose tracking methods eliminate the reliance on prior knowledge (a 2D marker or a 3D model), but they are less practical for AR applications with consumer-level equipment.
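Both the low cost of marker tracking and its need to keep the whole marker in view can be seen in its core computation. Below is a minimal sketch, assuming a square planar marker whose four corners have already been detected in the image (detection itself is not shown): the Direct Linear Transform (DLT) estimates the 3×3 homography between the marker plane and the image from four or more point correspondences with one small SVD. With fewer than four visible corners the system is underdetermined, which is consistent with the requirement that the marker stay inside the FOV. The function names and corner coordinates are hypothetical; recovering the full camera pose additionally requires the camera intrinsics (for example by decomposing H or using a PnP solver), which is omitted. Production code would usually normalize the coordinates first or call a library routine such as OpenCV's `cv2.findHomography`.

```python
import numpy as np


def homography_dlt(src, dst):
    """Estimate H (3x3) such that dst ~ H @ src in homogeneous coordinates,
    using the Direct Linear Transform on N >= 4 point pairs."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    if src.shape != dst.shape or src.ndim != 2 or src.shape[1] != 2 or src.shape[0] < 4:
        raise ValueError("need two matching (N, 2) arrays with N >= 4")
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence contributes two linear equations in the 9 entries of H.
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    a = np.array(rows)
    # H (up to scale) is the right singular vector with the smallest singular value;
    # the default full_matrices=True keeps that vector even when N == 4.
    _, _, vt = np.linalg.svd(a)
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]  # fix the arbitrary scale so that H[2, 2] == 1


def project(h, pts):
    """Map (N, 2) points through the homography h."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((pts.shape[0], 1))])
    mapped = homog @ h.T
    return mapped[:, :2] / mapped[:, 2:3]


if __name__ == "__main__":
    # Hypothetical 100 mm square marker (marker-plane coordinates, mm).
    marker_corners = [(0, 0), (100, 0), (100, 100), (0, 100)]
    # Hypothetical detected corner positions in the image (pixels).
    image_corners = [(320, 240), (420, 250), (410, 350), (310, 340)]
    H = homography_dlt(marker_corners, image_corners)
    # Any other point on the marker, e.g. its centre, can now be placed in the image,
    # which is how a virtual graphic is anchored to the marker.
    print(project(H, [(50, 50)]))
```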

4. Recent Robotic Cameraman Systems

4.1. PTZ

4.2. Truck and Dolly

4.3. Robot Arm

4.4. JIB

4.5. Bracket

4.6. Ground Moving Robot

4.7. Summary

5. Conclusions

- The first challenge is how to deploy AR techniques widely and successfully in the broadcasting industry. Although many AR broadcasting concepts and prototypes have been proposed recently, only monitor-displayed AR has a relatively mature framework, and it provides only a limited immersive AR experience. Therefore, it remains to be seen whether more advanced AR broadcasting equipment can provide a better immersive experience and be accepted by audiences of all ages.

- The second challenge is how to improve the performance of AR tracking and modeling in terms of robustness and accuracy. More advanced AR techniques are still to be developed, including techniques that give the broadcaster more realistic AR experiences and remove the dependence on models in AR broadcasting.

- The third challenge is how to combine AR applications with a wider range of broadcasting programs. Currently, AR is mainly applied in news reporting and sports broadcasting. It is therefore necessary to develop potential AR applications for a wide range of broadcasting programs and make AR an indispensable part of broadcasting.

- Last but not least, a very important research topic for the future AR broadcasting industry is how to make robotic cameramen completely autonomous, so that no human involvement is required and system accuracy can be greatly improved.

Author Contributions

Conflicts of Interest

References

- Castle, R.O.; Klein, G.; Murray, D.W. Wide-area augmented reality using camera tracking and mapping in multiple regions. Comput. Vis. Image Underst. 2011, 115, 854–867.

- Azuma, R.T. A survey of augmented reality. Presence Teleoper. Virtual Environ. 1997, 6, 355–385.

- Sports Open House—Vizrt.com. Available online: http://www.vizrt.com/incoming/44949/Sports_Open_House (accessed on 5 May 2017).

- BBC Adds Augmented Reality to Euro 2012 Programs—Vizrt.com. Available online: http://www.vizrt.com/news/newsgrid/35393/BBC_adds_Augmented_Reality_to_Euro_2012_programs (accessed on 10 July 2017).

- Zhang, Z.; Rebecq, H.; Forster, C.; Scaramuzza, D. Benefit of large field-of-view cameras for visual odometry. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation, Stockholm, Sweden, 16–21 May 2016; pp. 801–808.

- Hayashi, T.; Uchiyama, H.; Pilet, J.; Saito, H. An augmented reality setup with an omnidirectional camera based on multiple object detection. In Proceedings of the 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 3171–3174.

- Li, M.; Mourikis, A.I. Improving the accuracy of EKF-based visual-inertial odometry. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012; pp. 828–835.

- Pavlik, J.V. Digital Technology and the Future of Broadcasting: Global Perspectives; Routledge: Abingdon, UK, 2015.

- Schmalstieg, D.; Hollerer, T. Augmented Reality: Principles and Practice; Addison-Wesley Professional: Boston, MA, USA, 2016.

- Steptoe, W.; Julier, S.; Steed, A. Presence and discernability in conventional and non-photorealistic immersive augmented reality. In Proceedings of the 2014 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Munich, Germany, 10–12 September 2014; pp. 213–218.

- Cakmakci, O.; Rolland, J. Head-worn displays: A review. J. Disp. Technol. 2006, 2, 199–216.

- Kress, B.; Starner, T. A review of head-mounted displays (HMD) technologies and applications for consumer electronics. In SPIE Defense, Security, and Sensing; International Society for Optics and Photonics: Bellingham, WA, USA, 2013.

- Raskar, R.; Welch, G.; Fuchs, H. Spatially augmented reality. In Proceedings of the First IEEE Workshop on Augmented Reality, San Francisco, CA, USA, 1 November 1998; pp. 11–20.

- Bimber, O.; Raskar, R. Spatial Augmented Reality: Merging Real and Virtual Worlds; CRC Press: Boca Raton, FL, USA, 2005.

- Kipper, G.; Rampolla, J. Augmented Reality: An Emerging Technologies Guide to AR; Elsevier: Amsterdam, The Netherlands, 2012.

- Harrison, C. How Panasonic Can Use Stadium Suite Windows for Augmented Reality Projection During Games. Available online: https://www.sporttechie.com/panasonic-unveils-augmented-reality-projection-prototype-for-suite-level-windows/ (accessed on 20 January 2017).

- Moemeni, A. Hybrid Marker-Less Camera Pose Tracking with Integrated Sensor Fusion; De Montfort University: Leicester, UK, 2014.

- Engel, J.; Sturm, J.; Cremers, D. Semi-Dense Visual Odometry for a Monocular Camera. In Proceedings of the 2013 IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 1449–1456.

- Liu, H.; Zhang, G.; Bao, H. Robust Keyframe-based Monocular SLAM for Augmented Reality. In Proceedings of the 2016 IEEE International Symposium on Mixed and Augmented Reality, Merida, Mexico, 19–23 September 2016; pp. 1–10.

- Scaramuzza, D.; Fraundorfer, F. Visual odometry [tutorial]. IEEE Robot. Autom. Mag. 2011, 18, 80–92.

- Moravec, H.P. Obstacle Avoidance and Navigation in the Real World by a Seeing Robot Rover; DTIC Document No. STAN-CS-80-813; Stanford University California Department Computer Science: Stanford, CA, USA, 1980.

- Tomono, M. Robust 3D SLAM with a stereo camera based on an edge-point ICP algorithm. In Proceedings of the IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 4306–4311.

- Park, J.; Seo, B.-K.; Park, J.-I. Binocular mobile augmented reality based on stereo camera tracking. J. Real Time Image Process. 2016, 1–10.

- Wu, W.; Tošić, I.; Berkner, K.; Balram, N. Depth-disparity calibration for augmented reality on binocular optical see-through displays. In Proceedings of the 6th ACM Multimedia Systems Conference, Portland, OR, USA, 18–20 March 2015; pp. 120–129.

- Streckel, B.; Koch, R. Lens model selection for visual tracking. In Joint Pattern Recognition Symposium; Springer: Berlin, Germany, 2005; pp. 41–48.

- Inertial Navigation Systems Information. Engineering360. Available online: http://www.globalspec.com/learnmore/sensors_transducers_detectors/tilt_sensing/inertial_gyros (accessed on 20 May 2017).

- Scaramuzza, D.; Achtelik, M.C.; Doitsidis, L.; Friedrich, F.; Kosmatopoulos, E.; Martinelli, A.; Achtelik, M.W.; Chli, M.; Chatzichristofis, S.; Kneip, L. Vision-controlled micro flying robots: From system design to autonomous navigation and mapping in GPS-denied environments. IEEE Robot. Autom. Mag. 2014, 21, 26–40.

- Corke, P.; Lobo, J.; Dias, J. An Introduction to Inertial and Visual Sensing; Sage Publications: London, UK, 2007.

- Deyle, T. Low-Cost Depth Cameras (Aka Ranging Cameras or RGB-D Cameras) to Emerge in 2010? Hizook, 2010. Available online: http://www.hizook.com/blog/2010/03/28/low-cost-depth-cameras-aka-ranging-cameras-or-rgb-d-cameras-emerge-2010 (accessed on 29 April 2017).

- VizrtVideos. The Vizrt Public Show at IBC 2013 Featuring Viz Virtual Studio: YouTube. San Bruno, CA, USA. Available online: https://www.youtube.com/watch?v=F89pxcyRbe8 (accessed on 30 September 2013).

- Gennery, D.B. Visual tracking of known three-dimensional objects. Int. J. Comput. Vis. 1992, 7, 243–270.

- Centre for Sports Engineering Research. How the Kinect Works Depth Biomechanics. Sheffield Hallam University: Sheffield, UK. Available online: http://www.depthbiomechanics.co.uk/?p=100 (accessed on 22 May 2017).

- NeoMetrix. What You Need to Know about 3D Scanning—NeoMetrix. NeoMetrix Technologies Inc.: Lake Mary, FL, USA. Available online: http://3dscanningservices.net/blog/need-know-3d-scanning/ (accessed on 21 July 2016).

- Jianjun, G.; Dongbing, G. A direct visual-inertial sensor fusion approach in multi-state constraint Kalman filter. In Proceedings of the 34th Chinese Control Conference, Hangzhou, China, 28–30 July 2015; pp. 6105–6110.

- Viéville, T.; Faugeras, O.D. Cooperation of the inertial and visual systems. In Traditional and Non-Traditional Robotic Sensors; Springer: Berlin, Germany, 1990; pp. 339–350.

- Oskiper, T.; Samarasekera, S.; Kumar, R. Multi-sensor navigation algorithm using monocular camera, IMU and GPS for large scale augmented reality. In Proceedings of the 2012 IEEE International Symposium on Mixed and Augmented Reality, Atlanta, GA, USA, 5–8 November 2012; pp. 71–80.

- Gui, J.; Gu, D.; Wang, S.; Hu, H. A review of visual inertial odometry from filtering and optimisation perspectives. Adv. Robot. 2015, 29, 1289–1301.

- Tykkälä, T.; Hartikainen, H.; Comport, A.I.; Kämäräinen, J.-K. RGB-D Tracking and Reconstruction for TV Broadcasts. In Proceedings of the International Conference on Computer Vision Theory and Applications (VISAPP) (2), Barcelona, Spain, 21–24 February 2013; pp. 247–252.

- Li, R.; Liu, Q.; Gui, J.; Gu, D.; Hu, H. Indoor relocalization in challenging environments with dual-stream convolutional neural networks. IEEE Trans. Autom. Sci. Eng. 2017.

- Wilson, A.D.; Benko, H. Projected Augmented Reality with the RoomAlive Toolkit. In Proceedings of the 2016 ACM International Conference on Interactive Surfaces and Spaces (ISS 2016), Niagara Falls, ON, Canada, 6–9 November 2016; pp. 517–520.

- Dong, Z.; Zhang, G.; Jia, J.; Bao, H. Keyframe-based real-time camera tracking. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 1538–1545.

- De Gaspari, T.; Sementille, A.C.; Vielmas, D.Z.; Aguilar, I.A.; Marar, J.F. ARSTUDIO: A virtual studio system with augmented reality features. In Proceedings of the 13th ACM SIGGRAPH International Conference on Virtual-Reality Continuum and Its Applications in Industry, Shenzhen, China, 30 November–2 December 2014; pp. 17–25.

- Rohs, M. Marker-based embodied interaction for handheld augmented reality games. J. Virtual Real. Broadcast. 2007, 4, 1860–2037.

- Naimark, L.; Foxlin, E. Circular data matrix fiducial system and robust image processing for a wearable vision-inertial self-tracker. In Proceedings of the International Symposium on Mixed and Augmented Reality, Darmstadt, Germany, 1 October 2002; pp. 27–36.

- Matsushita, N.; Hihara, D.; Ushiro, T.; Yoshimura, S.; Rekimoto, J.; Yamamoto, Y. ID CAM: A smart camera for scene capturing and ID recognition. In Proceedings of the Second IEEE and ACM International Symposium on Mixed and Augmented Reality, Tokyo, Japan, 10 October 2003; pp. 227–236.

- Grundhöfer, A.; Seeger, M.; Hantsch, F.; Bimber, O. Dynamic adaptation of projected imperceptible codes. In Proceedings of the 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007; IEEE Computer Society: Washington, DC, USA, 2007.

- Bruce Thomas, M.B. COMP 4010 Lecture10: AR Tracking; University of South Australia: Adelaide, Australia, 2016.

- Seungjun, K.; Jongeun, C.; Jongphil, K.; Jeha, R.; Seongeun, E.; Mahalik, N.P.; Byungha, A. A novel test-bed for immersive and interactive broadcasting production using augmented reality and haptics. IEICE Trans. Inf. Syst. 2006, 89, 106–110.

- Khairnar, K.; Khairnar, K.; Mane, S.; Chaudhari, R.; Professor, U. Furniture Layout Application Based on Marker Detection and Using Augmented Reality. Int. Res. J. Eng. Technol. 2015, 2, 540–544.

- Celozzi, C.; Paravati, G.; Sanna, A.; Lamberti, F. A 6-DOF ARTag-based tracking system. IEEE Trans. Consum. Electron. 2010, 56.

- Kawakita, H.; Nakagawa, T. Augmented TV: An augmented reality system for TV programs beyond the TV screen. In Proceedings of the 2014 International Conference on Multimedia Computing and Systems, Marrakech, Morocco, 14–16 April 2014; pp. 955–960.

- Harris, C. Tracking with rigid models. In Active Vision; MIT Press: Cambridge, MA, USA, 1993.

- Kermen, A.; Aydin, T.; Ercan, A.O.; Erdem, T. A multi-sensor integrated head-mounted display setup for augmented reality applications. In Proceedings of the 3DTV-Conference: The True Vision-Capture, Transmission and Display of 3D Video (3DTV-CON), Lisbon, Portugal, 8–10 July 2015; pp. 1–4.

- Brown, J.A.; Capson, D.W. A framework for 3D model-based visual tracking using a GPU-accelerated particle filter. IEEE Trans. Vis. Comput. Gr. 2012, 18, 68–80.

- Zisserman, M.A.A. Robust object tracking. In Proceedings of the Asian Conference on Computer Vision, Singapore, 5–8 December 1995; pp. 58–61.

- Comport, A.I.; Marchand, E.; Pressigout, M.; Chaumette, F. Real-time markerless tracking for augmented reality: The virtual visual servoing framework. IEEE Trans. Vis. Comput. Gr. 2006, 12, 615–628.

- Agarwal, S.; Furukawa, Y.; Snavely, N.; Simon, I.; Curless, B.; Seitz, S.M.; Szeliski, R. Building Rome in a day. Commun. ACM 2011, 54, 105–112.

- Cornelis, K. From Uncalibrated Video to Augmented Reality; ESAT-PSI, Processing Speech and Images; K.U.Leuven: Leuven, Belgium, 2004.

- Klein, G.; Murray, D. Parallel tracking and mapping for small AR workspaces. In Proceedings of the 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007; pp. 225–234.

- Bleser, G.; Wuest, H.; Stricker, D. Online camera pose estimation in partially known and dynamic scenes. In Proceedings of the IEEE/ACM International Symposium on Mixed and Augmented Reality, Santa Barbara, CA, USA, 22–25 October 2006; pp. 56–65.

- Bleser, G. Towards Visual-Inertial Slam for Mobile Augmented Reality; Verlag Dr. Hut: München, Germany, 2009.

- D’Ippolito, F.; Massaro, M.; Sferlazza, A. An adaptive multi-rate system for visual tracking in augmented reality applications. In Proceedings of the 2016 IEEE 25th International Symposium on Industrial Electronics (ISIE), Santa Clara, CA, USA, 8–10 June 2016; pp. 355–361.

- Chandaria, J.; Thomas, G.; Bartczak, B.; Koeser, K.; Koch, R.; Becker, M.; Bleser, G.; Stricker, D.; Wohlleber, C.; Felsberg, M. Realtime camera tracking in the MATRIS project. SMPTE Motion Imaging J. 2007, 116, 266–271.

- Sa, I.; Ahn, H.S. Visual 3D model-based tracking toward autonomous live sports broadcasting using a VTOL unmanned aerial vehicle in GPS-impaired environments. Int. J. Comput. Appl. 2015, 122.

- Salas-Moreno, R.F.; Glocker, B.; Kelly, P.H.J.; Davison, A.J. Dense Planar SLAM. In Proceedings of the 2014 IEEE International Symposium on Mixed and Augmented Reality (ISMAR)—Science and Technology, Munich, Germany, 10–12 September 2014; pp. 157–164.

- Cho, H.; Jung, S.-U.; Jee, H.-K. Real-time interactive AR system for broadcasting. In Proceedings of the Virtual Reality (VR), Los Angeles, CA, USA, 18–22 March 2017; pp. 353–354.

- Newcombe, R.A.; Lovegrove, S.J.; Davison, A.J. DTAM: Dense tracking and mapping in real-time. In Proceedings of the 2011 IEEE International Conference on Computer Vision (ICCV), Barcelona, Spain, 6–13 November 2011; pp. 2320–2327.

- Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-scale direct monocular SLAM. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 834–849.

- Schöps, T.; Engel, J.; Cremers, D. Semi-dense visual odometry for AR on a smartphone. In Proceedings of the 2014 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Munich, Germany, 10–12 September 2014; pp. 145–150.

- Strasdat, H.; Montiel, J.; Davison, A.J. Real-time monocular SLAM: Why filter? In Proceedings of the 2010 IEEE International Conference on Robotics and Automation (ICRA), Anchorage, AK, USA, 3–7 May 2010; pp. 2657–2664.

- Strasdat, H.; Montiel, J.M.; Davison, A.J. Visual SLAM: Why Filter? Image Vis. Comput. 2012, 30, 65–77.

- Li, M.; Mourikis, A.I. High-precision, consistent EKF-based visual-inertial odometry. Int. J. Robot. Res. 2013, 32, 690–711.

- Zhu, Z.; Branzoi, V.; Wolverton, M.; Murray, G.; Vitovitch, N.; Yarnall, L.; Acharya, G.; Samarasekera, S.; Kumar, R. AR-mentor: Augmented reality based mentoring system. In Proceedings of the 2014 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Munich, Germany, 10–12 September 2014; pp. 17–22.

- Jeong, C.-J.; Park, G.-M. Real-time Auto Tracking System using PTZ Camera with DSP. Int. J. Adv. Smart Converg. 2013, 2, 32–35.

- Ltd, C.C. Camera Corps Announces Q3 Advanced Compact Robotic Camera. Camera Corps Ltd.: Byfleet, Surrey, UK. Available online: http://www.cameracorps.co.uk/news/camera-corps-announces-q3-advanced-compact-robotic-camera (accessed on 3 May 2017).

- Vinten. Vinten Vantage—Revolutionary Compact Robotic Head. Richmond, UK. Available online: http://vantage.vinten.com (accessed on 23 May 2017).

- Patidar, V.; Tiwari, R. Survey of robotic arm and parameters. In Proceedings of the 2016 International Conference on Computer Communication and Informatics (ICCCI), Coimbatore, India, 7–9 January 2016; pp. 1–6.

- Inc, E.F. Short Presentation—Electricfriends. Bergen, Norway. Available online: http://www.electricfriends.net/portfolio/short-presentation/ (accessed on 27 March 2017).

- Grip, S. Technical Specifications—Stype Grip. Available online: http://www.stypegrip.com/technical-specifications/ (accessed on 20 May 2017).

- As, S.N. 3D Virtual Studio/Augmented Reality System—The Stype Kit. Stype Norway AS. Available online: https://www.youtube.com/watch?v=d8ktVGkHAes (accessed on 20 May 2013).

- Inc T. Elevating Wall Mount Camera Robot-Telemetrics Inc.: Jacksonville, FL, USA. Available online: http://www.telemetricsinc.com/products/camera-robotics/elevating-wall-mount-system/elevating-wall-mount-system/elevating-wall-mount (accessed on 10 May 2017).

- Beacham, F. Cost-Cutting Boosts the Use of Robots in Television Studios—The Broadcast Bridge—Connecting IT to Broadcast. Banbury, UK. Available online: https://www.thebroadcastbridge.com/content/entry/823/cost-cutting-boosts-the-use-of-robots-in-television-studios (accessed on 25 May 2017).

- Ltd, D.P.S. TVB Upgrade Studios with Vinten Radamec and Power Plus VR Camera Tracking. Digital Precision Systems Ltd. Available online: http://www.dpshk.com/news/tvb-upgrade-studios-vinten-radamec-power-plus-vr-camera-tracking (accessed on 2 March 2017).

| Display Methods | Monitor-Based | HMD (Video-Based) | HMD (Optical-Based) | Projector |
|---|---|---|---|---|
| Devices | TV screens, tablet monitors, etc. | Glass-shaped screen | Optical combiner | Projector |
| Image Quality | High | Normal | High | Low |
| FOV | Limited | Wide | Wide | Wide |
| No. of Viewers | Single | Single | Single | Multiple |
| Advantages | Powerful; widespread; relatively mature | Portable; full visualization; immersive experience | Natural perception of the real world; immersive and realistic experience | Multiple views; can change the appearance of objects; no need for external devices; no program accidents |
| Limitations | Limited view; limited immersive experience | High computing cost; requires wearable devices; unnatural view | High computing cost; requires wearable devices; technically immature | Technically immature; relatively low quality |

| Sensor Category | Vision Sensor | Vision Sensor | Vision Sensor | Laser | Laser | IMU | Hybrid Sensor | Hybrid Sensor | Hybrid Sensor |
|---|---|---|---|---|---|---|---|---|---|
| Sensor Type | Monocular | Binocular | Omni-directional | 2D Laser | 3D Laser | IMU | Camera + 2D Laser | Camera + 3D Laser | Camera + IMU |
| Sensitivity | Vision | Vision | Vision | Light | Light | Friction | Vision + Light | Vision + Light | Vision + Friction |
| Accuracy | Less accurate | Accurate | Less accurate | Accurate | Less accurate | Less accurate | Accurate | Less accurate | Accurate |
| Flexibility | High | High | High | High | Low | High | High | Low | High |
| DOF | 3/6 DOF | 3/6 DOF | 3/6 DOF | 3 DOF | 6 DOF | 6 DOF | 6 DOF | 6 DOF | 6 DOF |
| FOV | High | High | High | High | Low | High | High | Low | High |
| Price | Low | Low | High | Low | High | Low | Low | High | Low |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yan, D.; Hu, H. Application of Augmented Reality and Robotic Technology in Broadcasting: A Survey. Robotics 2017, 6, 18. https://doi.org/10.3390/robotics6030018
