A Matlab-Based Testbed for Integration, Evaluation and Comparison of Heterogeneous Stereo Vision Matching Algorithms

Abstract

1. Introduction

2. Revision of Methods

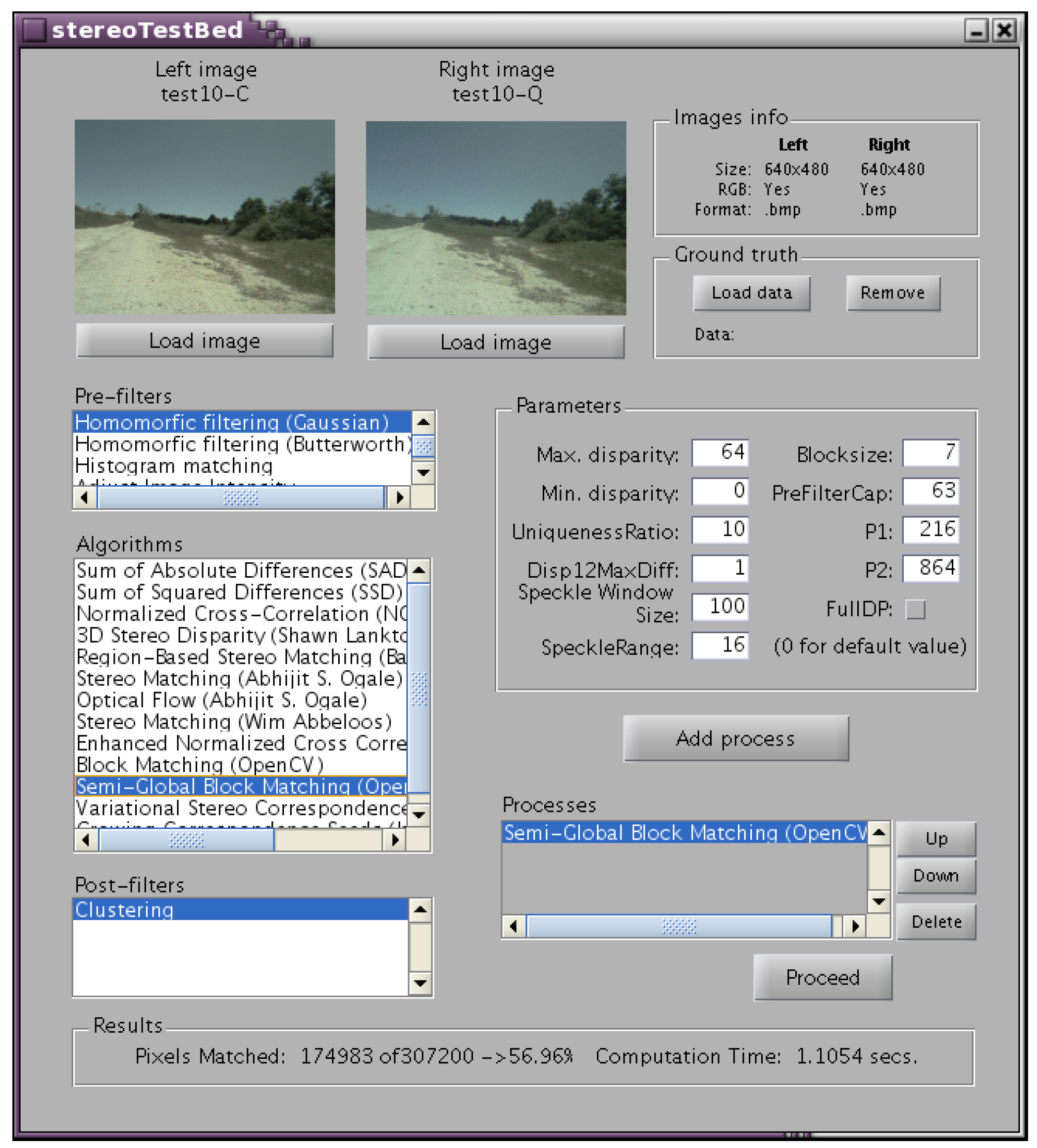

3. Stereo Testbed

3.1. Requirements

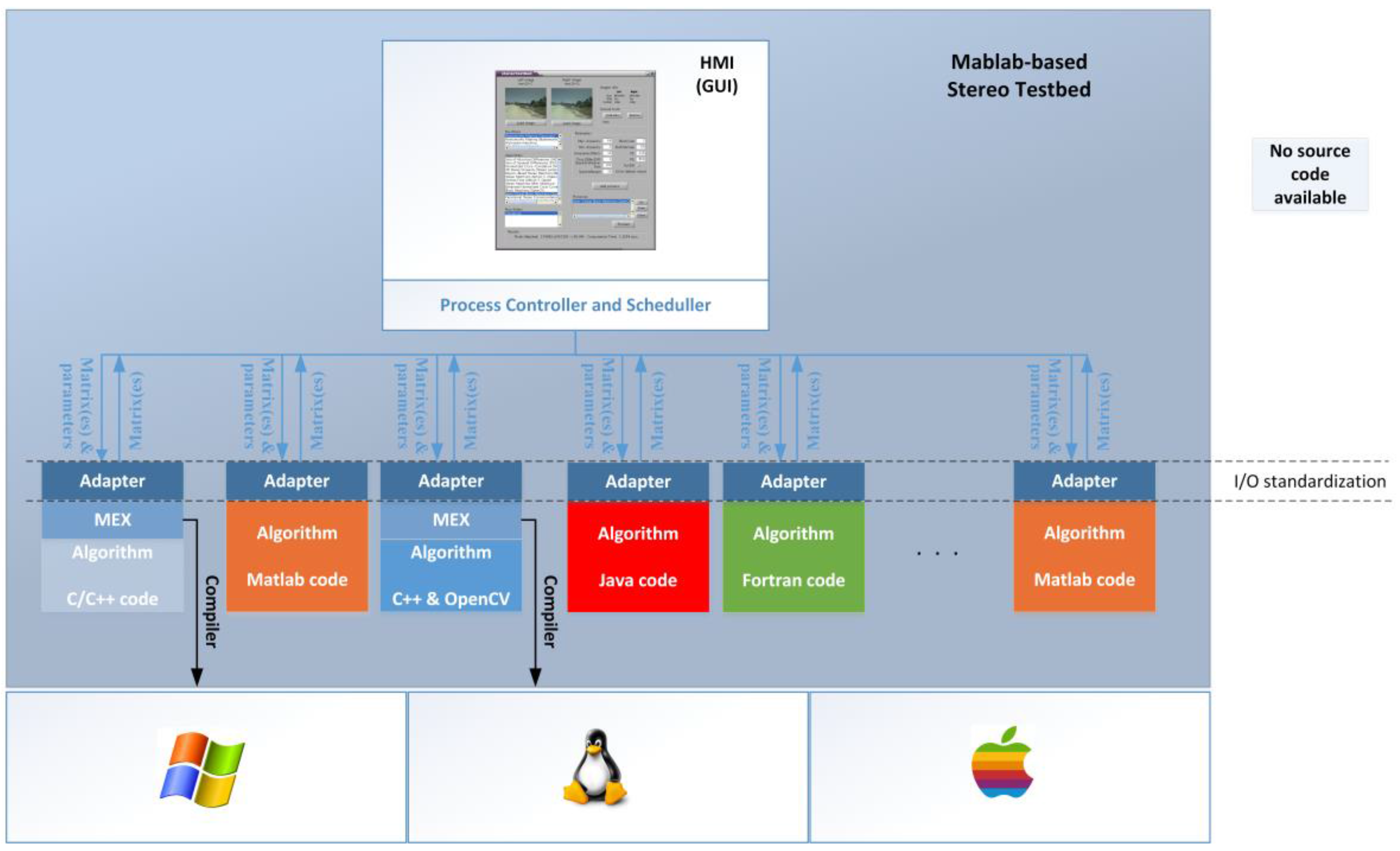

3.2. Design

4. Integrating Algorithms within the Testbed

- Homomorphic filtering [15,16]: this is based on the assumption that an image can be expressed as the product of an illumination component and a reflectance component. Illumination is associated with the low frequencies of the Fourier transform and reflectance with the high frequencies. Taking logarithms turns the product into a sum, so a subsequent high-pass filter can suppress the illumination component. This retains only the reflectance, which is associated with intrinsic properties of the objects in the scene. Two filters are available in this implementation: a Butterworth high-pass filter [17] and a Gaussian filter. They can be applied in the RGB or HSV color models.

- Adjust image intensity: this adjusts the intensity values such that 1% of data is saturated at low and high intensities, increasing the contrast of each image of the stereo pair.
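The intensity adjustment described above can be sketched as a percentile-based linear stretch. This is a minimal illustration and not the testbed's own code: the function name `stretch_intensity`, the flat list of pixel values and the output range [0, 1] are assumptions made for the example.

```python
def stretch_intensity(pixels, saturate=0.01):
    """Rescale values linearly to [0, 1], clipping the lowest and highest
    `saturate` fraction of samples (hypothetical helper for illustration)."""
    ordered = sorted(pixels)
    n = len(ordered)
    # Intensities below `lo` or above `hi` are saturated to 0 or 1.
    lo = ordered[round(saturate * (n - 1))]
    hi = ordered[round((1.0 - saturate) * (n - 1))]
    if hi == lo:
        # Flat image: there is no contrast to stretch.
        return [0.0 for _ in pixels]
    return [min(1.0, max(0.0, (p - lo) / (hi - lo))) for p in pixels]


# Toy "image" with intensities 0..100.
stretched = stretch_intensity(list(range(101)))
```

Applying the same stretch to each image of the stereo pair independently increases their contrast before matching.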

- Sum of absolute differences, SAD [20]: this is a classical approach. It measures similarity between image blocks by taking the absolute difference between each pixel in the original block and the corresponding pixel in the block being used for comparison. These differences are summed to create a simple metric of block similarity. In this implementation, three similarity measures can be applied: SAD, zero-mean SAD or locally scaled SAD.

- Sum of squared differences, SSD [21]: this measures similarity between image blocks by squaring the differences between each pixel in the original block and the corresponding pixel in the block being used for comparison. In this implementation, three similarity measures can be applied: SSD, zero-mean SSD or locally scaled SSD.

- Normalized cross correlation, NCC [22]: this is a common matching technique to tolerate radiometric differences between stereo images. In this implementation, two similarity measures can be applied: NCC or zero-mean NCC.

- 3D stereo disparity [23]: this estimates pixel disparity by sliding an image over another one used as a reference, subtracting their intensity values and gradient information (spatial derivatives). It also performs a post filtering phase, segmenting the reference image using a “mean shift segmentation” technique, creating clusters with the same disparity value, computed as the median disparity of all the pixels within that segment.

- Region-based stereo matching [24]: this provides two different algorithms based on region growing: (a) a Global Error Energy Minimization by Smoothing Functions method, which uses a block-matching technique to construct an error energy matrix for every disparity, and (b) a line growing method, which locates starting points from which regions will grow and then expands them according to a predefined rule.

- Stereo matching [25,26]: this develops a compositional matching algorithm using binary local evidence which can match images containing slanted surfaces and images having different contrast. It finds connected components and for each pixel, picks the shift which corresponds to the largest connected component containing that pixel, while respecting the uniqueness constraint.

- Optical flow [25,26]: this is similar to the previous stereo matching algorithm. The main difference is that it treats the shifts as two-dimensional, whereas the previous algorithm considers only horizontal shifts, and that connections across edges parallel to the flow being considered must be severed.

- Stereo matching [27]: this implements a fast algorithm based on sum of absolute differences (SAD), matching two rectified and undistorted stereo images with subpixel accuracy.

- Enhanced normalized cross correlation [28]: this implements a local (window-based) algorithm that estimates disparity using a linear kernel embedded in the normalized cross correlation function, which yields a continuous function of the subpixel correction parameter for each candidate right window. It offers two modes: (a) a typical local mode, where the disparity decision concerns only the central pixel of each investigated left window, and (b) a shiftable-window mode, where each pixel is matched using the best window it belongs to, regardless of its position inside that window.

- Block-matching [29]: this is based on the sum of absolute differences between pixels of the left and right images. The algorithm is optimized by applying the epipolar constraint, searching for matches only along the same horizontal lines across the two images, which requires that the two images of the stereo pair have been rectified beforehand. The OpenCV 2.3.0 [12] implementation is used for this process.

- Semi-global block-matching [30]: this is a dense hybrid approach that divides the image into windows or blocks of pixels and looks for matches trying to minimize a global energy function, based on the content of the window, instead of looking for similarities between individual pixels. It includes two parameters that control the penalty for disparity changes between neighboring pixels, regulating the “softness” in the changes of disparity. The OpenCV 2.3.0 [12] implementation is used for this process.

- Variational stereo correspondence: this implements a modification of the variational stereo correspondence algorithm described in [31], consisting of a multi-grid approach combined with a multi-level adaptive technique that refines the grid only at peculiarities in the solution, allowing the variational algorithm to achieve real-time performance. It also includes a technique that adapts the regularizer used in the variational approach as a function of the current state of the optimization. The OpenCV 2.3.0 [12] implementation is used for this process.

- Growing correspondence seeds [32]: this is a fast matching algorithm for binocular stereo suitable for large images. It avoids visiting the entire disparity space by growing high-similarity components. The final decision is made by Confidently Stable Matching, which selects among competing correspondence hypotheses. The algorithm is not a direct combination of unconstrained growth followed by a filtration step. Instead, the growing procedure is designed to fit the final matching algorithm, in the sense that growth is stopped whenever the correspondence hypothesis cannot win the final matching competition.
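The window-based costs named in the list above (SAD, SSD, NCC and their zero-mean variants) can be illustrated with a short sketch. It is not taken from any of the integrated implementations: the function names are hypothetical, the windows are one-dimensional slices of a rectified scanline rather than two-dimensional blocks, and the locally scaled variants are omitted.

```python
import math


def sad(a, b):
    """Sum of absolute differences between two equally sized windows."""
    return sum(abs(x - y) for x, y in zip(a, b))


def ssd(a, b):
    """Sum of squared differences between two equally sized windows."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def zero_mean(window):
    """Subtract the window mean, as used by the zero-mean variants."""
    mean = sum(window) / len(window)
    return [x - mean for x in window]


def ncc(a, b):
    """Normalized cross correlation (a similarity: higher is better)."""
    num = sum(x * y for x, y in zip(a, b))
    den = math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))
    return num / den if den else 0.0


def best_disparity(left_row, right_row, x, half, max_disp, cost=sad):
    """Winner-take-all search along one scanline: return the shift d in
    [0, max_disp] whose right window (centred at x - d) has the lowest cost
    against the left window centred at x."""
    ref = left_row[x - half:x + half + 1]
    best_d, best_cost = 0, float("inf")
    for d in range(max_disp + 1):
        start = x - d - half
        if start < 0:
            break  # the candidate window would leave the image
        current = cost(ref, right_row[start:start + 2 * half + 1])
        if current < best_cost:
            best_d, best_cost = d, current
    return best_d


right = [0, 0, 5, 9, 5, 0, 0, 0, 0, 0]
left = [0, 0, 0, 0, 5, 9, 5, 0, 0, 0]  # same pattern shifted by 2 pixels
disparity = best_disparity(left, right, x=5, half=1, max_disp=3)
```

Because NCC is a similarity rather than a cost, it can be plugged into the same search by negating it, for example `cost=lambda a, b: -ncc(a, b)`. A zero-mean variant is obtained by wrapping the inputs, for example `cost=lambda a, b: sad(zero_mean(a), zero_mean(b))`.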

- Clustering: this removes false matches after disparity computation. The algorithm groups pixels into connected components based on the principle of spatial continuity, where every pixel in a region is reachable from any other pixel of that region, either by direct contact or at a very short distance. Once the clusters have been computed, they are filtered out according to configurable criteria, such as cluster size or density.
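The clustering filter can be sketched as connected-component labelling followed by a size threshold. This is an illustrative simplification and not the testbed's code: the function name, the use of 4-connectivity (direct contact only, without the short-distance tolerance mentioned above), the `invalid` marker value and the size-only criterion are all assumptions.

```python
from collections import deque


def filter_small_clusters(disp, min_size, invalid=0):
    """Return a copy of the disparity map in which every 4-connected group
    of valid pixels smaller than `min_size` is marked as invalid."""
    rows, cols = len(disp), len(disp[0])
    out = [row[:] for row in disp]
    seen = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or disp[r][c] == invalid:
                continue
            # Breadth-first search collects one connected cluster.
            queue, cluster = deque([(r, c)]), []
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                cluster.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and disp[ny][nx] != invalid):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(cluster) < min_size:
                for y, x in cluster:
                    out[y][x] = invalid
    return out


disparity_map = [
    [5, 5, 0, 0],
    [5, 0, 0, 7],
    [0, 0, 0, 0],
]
cleaned = filter_small_clusters(disparity_map, min_size=2)
```

In this toy map the isolated pixel with disparity 7 is discarded as a likely false match, while the three-pixel cluster is kept. A density criterion could be added by comparing the cluster size with the area of its bounding box.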

5. Usage of the Testbed

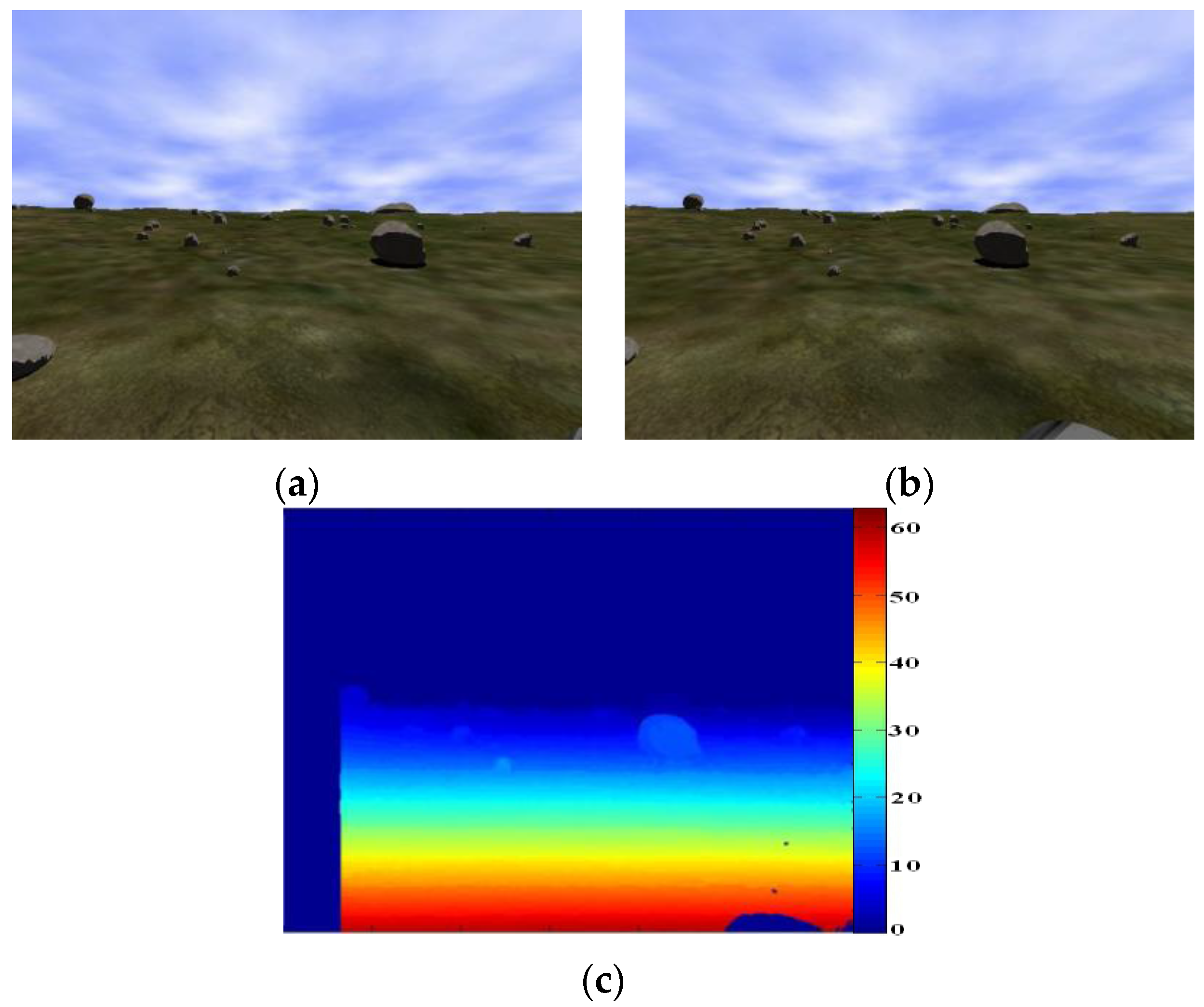

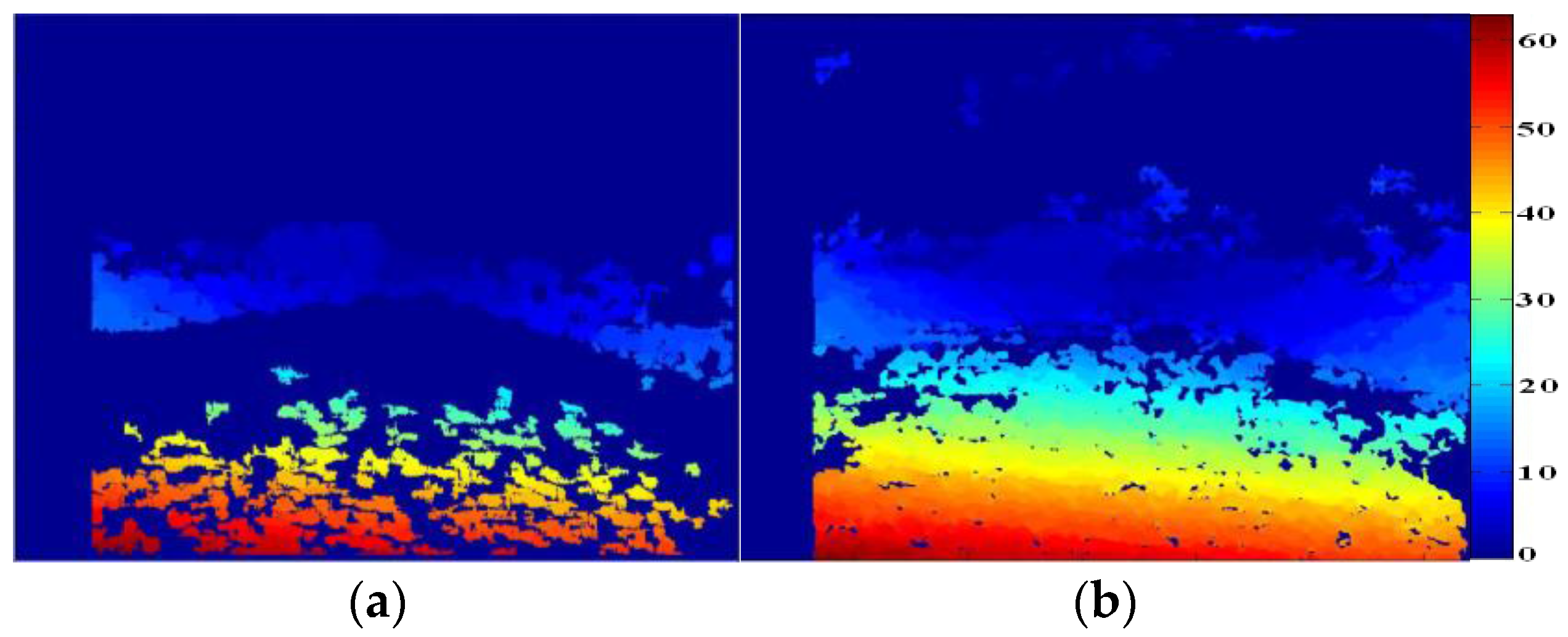

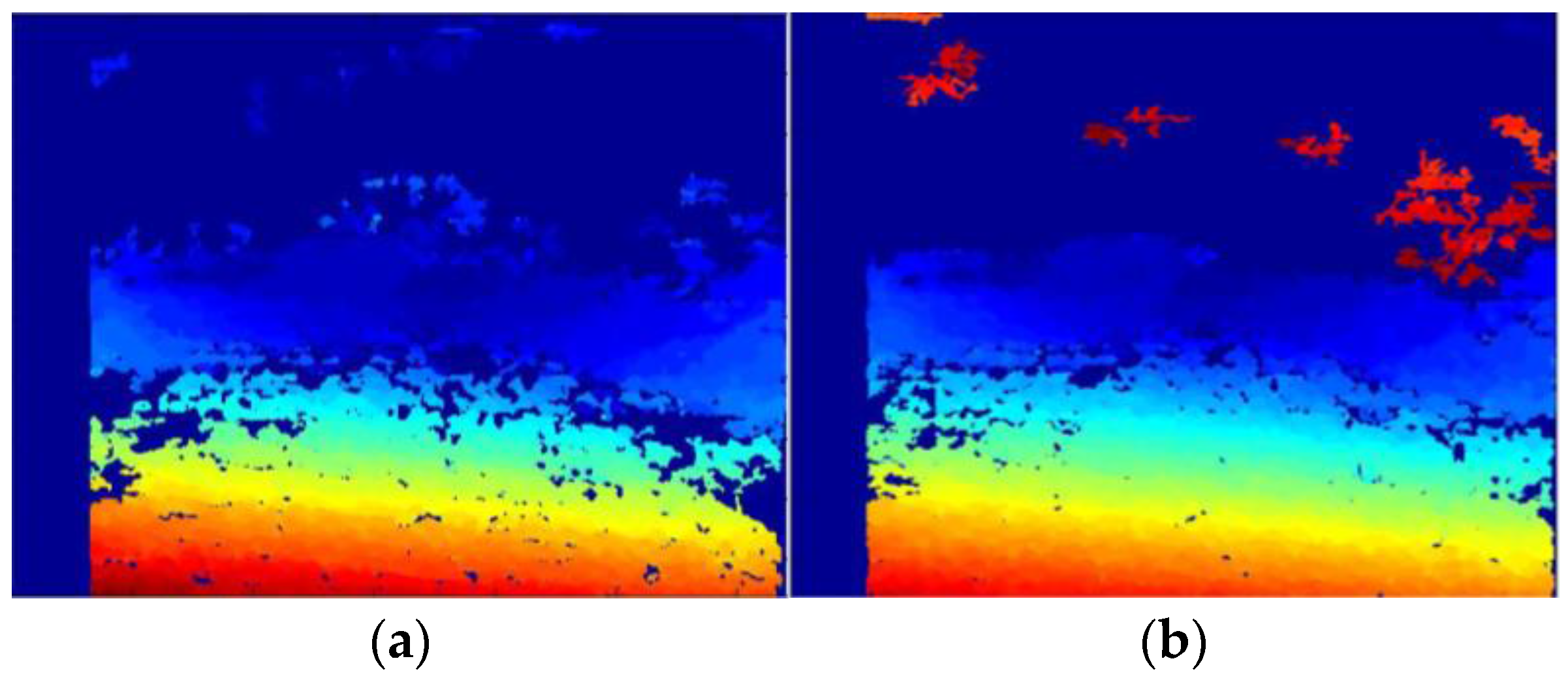

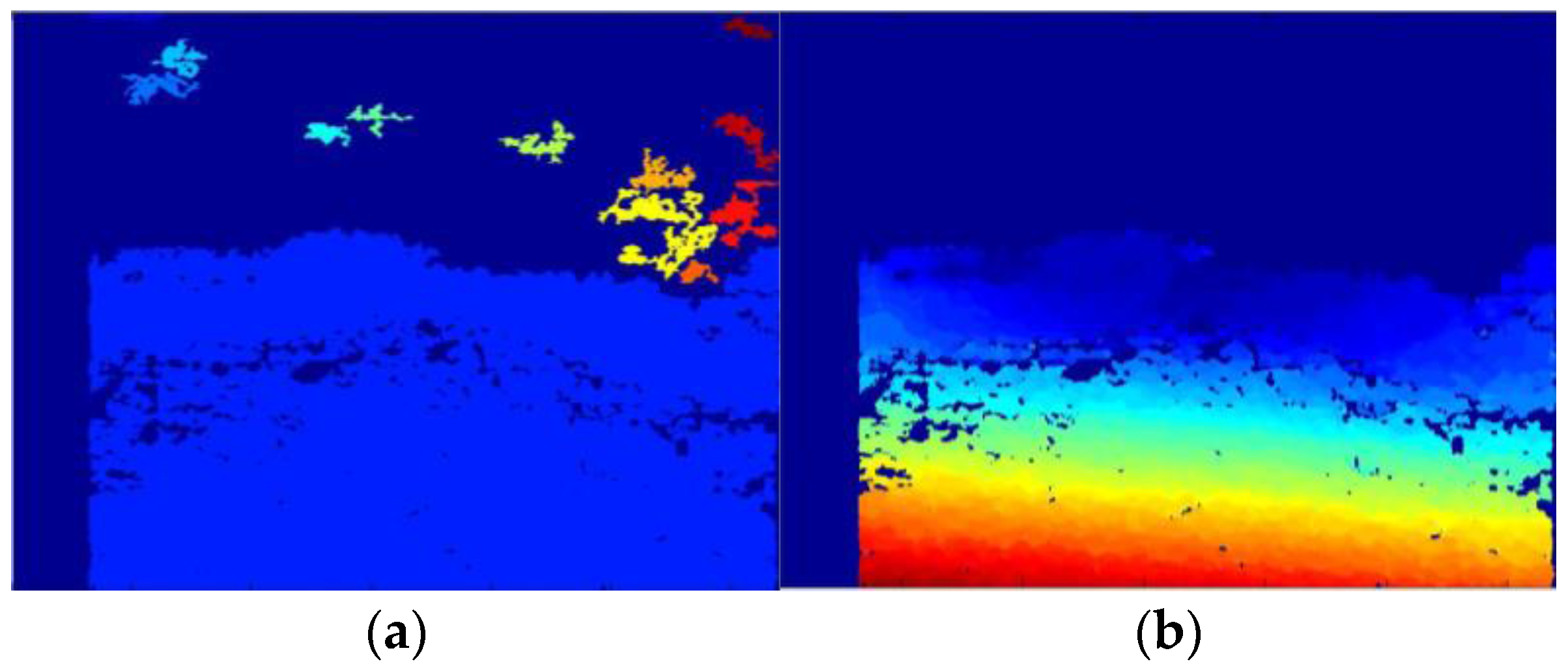

Use Case: Experiments and Results

6. Conclusions and Future Work

Author Contributions

Conflicts of Interest

References

1. Barnard, S.; Fischler, M. Computational stereo. ACM Comput. Surv. 1982, 14, 553–572.

2. Cochran, S.D.; Medioni, G. 3-D surface description from binocular stereo. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 981–994.

3. Correal, R. Matlab Central. 2012. Available online: http://www.mathworks.com/matlabcentral/fileexchange/36433 (accessed on 8 November 2016).

4. Scharstein, D.; Szeliski, R. A taxonomy and evaluation of dense two-frame stereo correspondence algorithms. Int. J. Comput. Vis. 2002, 47, 7–42.

5. Tombari, F.; Gori, F.; Di Stefano, L. Evaluation of Stereo Algorithms for 3D Object Recognition. In Proceedings of the 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops), Barcelona, Spain, 6–13 November 2011.

6. Ozanian, T. Approaches for Stereo Matching—A Review. Model. Identif. Control 1995, 16, 65–94.

7. Grimson, W.E.L. Computational Experiments with a Feature-based Stereo Algorithm. IEEE Trans. Pattern Anal. Mach. Intell. 1985, 7, 17–34.

8. Ayache, N.; Faverjon, B. Efficient Registration of Stereo Images by Matching Graph Descriptions of Edge Segments. Int. J. Comput. Vis. 1987, 1, 107–131.

9. Pajares, G.; Cruz, J.M.; López-Orozco, J.A. Relaxation Labeling in Stereo Image Matching. Pattern Recognit. 2000, 33, 53–68.

10. Pajares, G.; Cruz, J.M. Fuzzy Cognitive Maps for Stereovision Matching. Pattern Recognit. 2006, 39, 2101–2114.

11. Baker, H.H. Building and Using Scene Representations in Image Understanding. In Machine Perception; Prentice-Hall: Englewood Cliffs, NJ, USA, 1982; pp. 1–11.

12. Bradski, G.; Kaehler, A. Learning OpenCV: Computer Vision with the OpenCV Library; O’Reilly Media: Sebastopol, CA, USA, 2008.

13. Darabiha, A.; MacLean, W.J.; Rose, J. Reconfigurable hardware implementation of a phase-correlation stereo algorithm. Mach. Vis. Appl. 2006, 17, 116–132.

14. Lim, Y.K.; Kleeman, L.; Drummond, T. Algorithmic methodologies for FPGA-based vision. Mach. Vis. Appl. 2013, 24, 1197–1211.

15. Gonzalez, R.C.; Woods, R.E. Digital Image Processing; Prentice-Hall: Englewood Cliffs, NJ, USA, 2008.

16. Pajares, G.; de la Cruz, J.M. Visión Por Computador: Imágenes Digitales y Aplicaciones; RA-Ma: Madrid, Spain, 2007; pp. 102–105.

17. Butterworth, S. On the Theory of Filter Amplifiers. Wirel. Eng. 1930, 7, 536–541.

18. Jensen, J.R. Introductory Digital Image Processing; Prentice-Hall: Englewood Cliffs, NJ, USA, 1982.

19. Gonzalez, R.C.; Wintz, P. Digital Image Processing; Addison-Wesley: Reading, MA, USA, 1987.

20. Barnea, D.I.; Silverman, H.F. A Class of algorithms for fast digital image registration. IEEE Trans. Comput. 1972, 21, 179–186.

21. Shirai, Y. Three-Dimensional Computer Vision; Springer: Berlin, Germany, 1987.

22. Faugeras, O.; Keriven, R. Variational Principles, Surface Evolution, PDE’s, Level Set Methods and the Stereo Problem. IEEE Trans. Image Process. 1998, 7, 336–344.

23. Lankton, S. 3D Vision with Stereo Disparity. 2007. Available online: http://www.shawnlankton.com/2007/12/3d-vision-with-stereo-disparity (accessed on 8 November 2016).

24. Alagoz, B. Obtaining Depth Maps from Color Images by Region Based Stereo Matching Algorithms. OncuBilim Algorithm Syst. Labs 2008, 8, 1–13.

25. Ogale, A.S.; Aloimonos, Y. Shape and the Stereo Correspondence Problem. Int. J. Comput. Vis. 2005, 65, 147–162.

26. Ogale, A.S.; Aloimonos, Y. A Roadmap to the Integration of Early Visual Modules. Int. J. Comput. Vis. 2007, 72, 9–25.

27. Abbeloos, W. Stereo Matching. 2010. Available online: http://www.mathworks.com/matlabcentral/fileexchange/28522-stereo-matching (accessed on 8 November 2016).

28. Psarakis, E.Z.; Evangelidis, G.D. An enhanced correlation-based method for stereo correspondence with subpixel accuracy. In Proceedings of the Tenth IEEE International Conference on Computer Vision, Beijing, China, 17–21 October 2005.

29. Konolige, K. Small vision system: Hardware and Implementation. In Proceedings of the International Symposium on Robotics Research, Hayama, Japan, 19–22 October 1997; pp. 111–116.

30. Hirschmüller, H. Accurate and Efficient Stereo Processing by Semi-Global Matching and Mutual Information. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Diego, CA, USA, 20–25 June 2005.

31. Kosov, S.; Thormaehlen, T.; Seidel, H.P. Accurate Real-Time Disparity Estimation with Variational Methods. In Proceedings of the International Symposium on Visual Computing, Las Vegas, NV, USA, 30 November–2 December 2009.

32. Cech, J.; Sara, R. Efficient Sampling of Disparity Space for Fast and Accurate Matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Minneapolis, MN, USA, 17–22 June 2007.

33. Scharstein, D.; Blasiak, A. Middlebury stereo evaluation site. 2014. Available online: http://vision.middlebury.edu/stereo/eval/ (accessed on 8 November 2016).

34. Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. The KITTI Vision Benchmark Suite. 2014. Available online: http://www.cvlibs.net/datasets/kitti/eval_stereo_flow.php?benchmark=stereo (accessed on 8 November 2016).

35. Correal, R.; Pajares, G. Modeling, Simulation and Onboard Autonomy Software for Robotic Exploration on Planetary Environments. In Proceedings of the 2011 International Conference on DAta Systems in Aerospace, San Anton, Malta, 17–20 May 2011.

36. Correal, R.; Pajares, G. Onboard Autonomous Navigation Architecture for a Planetary Surface Exploration Rover and Functional Validation Using Open-Source Tools. In Proceedings of the ESA International Conference on Advanced Space Technologies in Robotics and Automation, Noordwijk, The Netherlands, 12–14 April 2011.

37. Correal, R.; Pajares, G.; Ruz, J.J. Stereo Images Matching Process Enhancement by Homomorphic Filtering and Disparity Clustering. Rev. Iberoam. Autom. Inform. Ind. 2013, 10, 178–184.

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Correal, R.; Pajares, G.; Ruz, J.J. A Matlab-Based Testbed for Integration, Evaluation and Comparison of Heterogeneous Stereo Vision Matching Algorithms. Robotics 2016, 5, 24. https://doi.org/10.3390/robotics5040024