Deployment Environment for a Swarm of Heterogeneous Robots

Abstract

1. Introduction

2. Related Work

3. Methodology

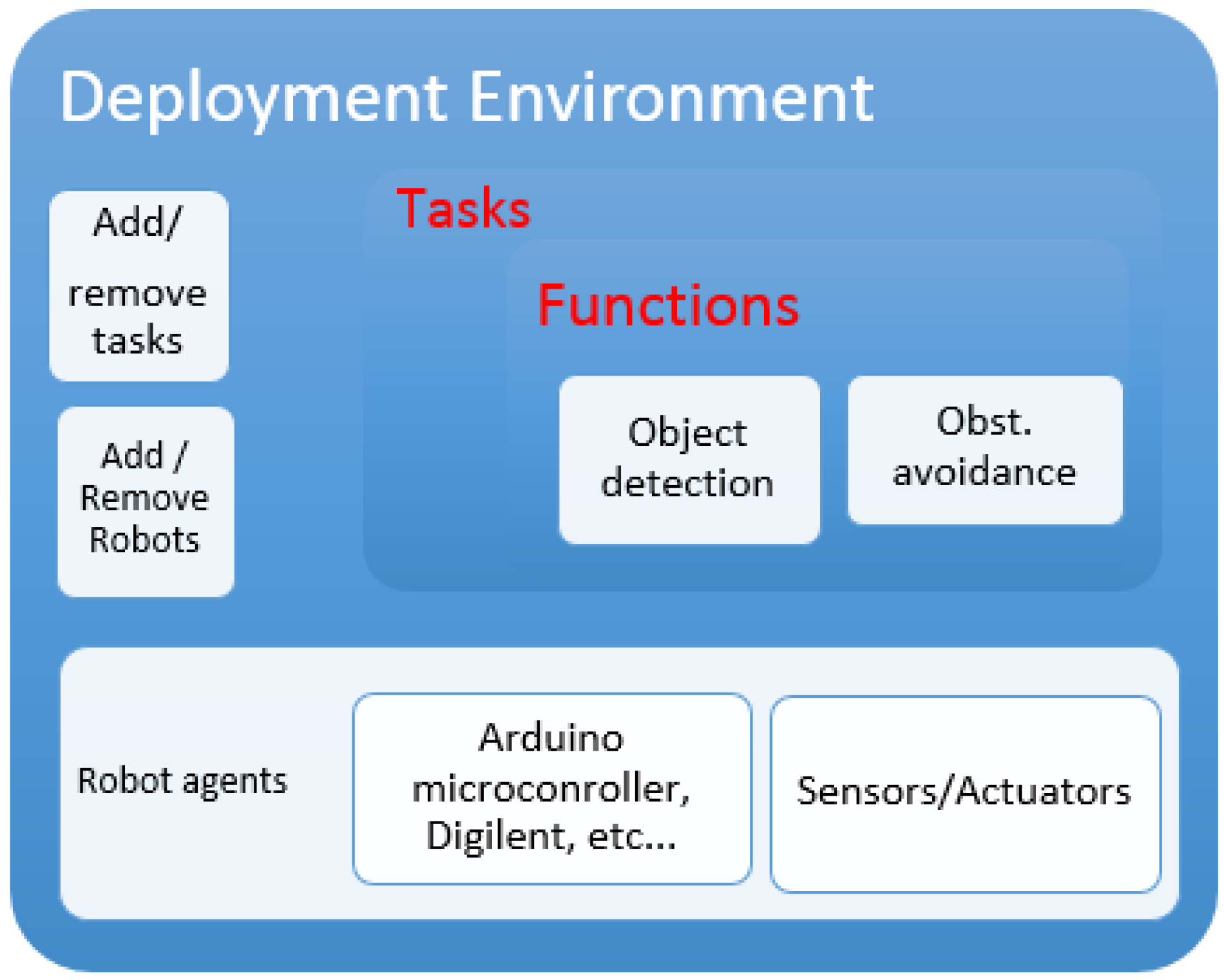

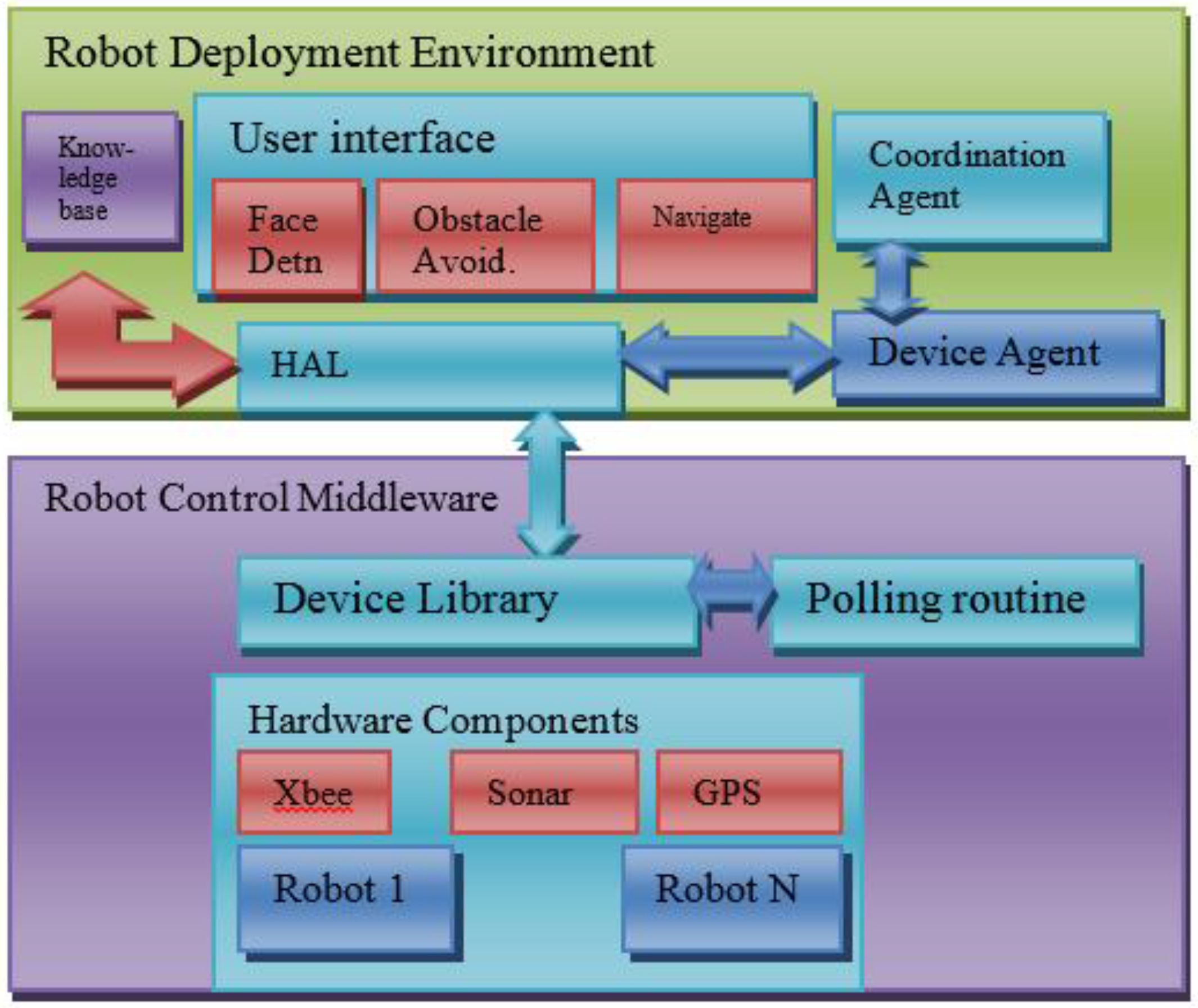

4. System Architecture

- Configure the system by picking the available agents, their onboard features (sensors, motors, etc.), and the services needed to accomplish each task.

- Run the system using saved configurations and add/remove agents.
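The configure-then-run workflow above could be represented with a small, serializable configuration object. The following Python sketch is only an illustration under stated assumptions: the class names `AgentConfig` and `SwarmConfig`, the JSON storage format, and the example agent names, features, and services are all hypothetical and are not taken from the deployment environment itself.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List


@dataclass
class AgentConfig:
    """One agent with its onboard features and the services it provides (hypothetical)."""
    name: str
    features: List[str] = field(default_factory=list)
    services: List[str] = field(default_factory=list)


@dataclass
class SwarmConfig:
    """A saved system configuration: the set of agents picked by the user (hypothetical)."""
    agents: Dict[str, AgentConfig] = field(default_factory=dict)

    def add_agent(self, agent: AgentConfig) -> None:
        self.agents[agent.name] = agent

    def remove_agent(self, name: str) -> None:
        # Removing an unknown agent is treated as a no-op.
        self.agents.pop(name, None)

    def to_json(self) -> str:
        """Serialize the configuration so it can be saved and reused later."""
        return json.dumps({n: asdict(a) for n, a in self.agents.items()}, sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "SwarmConfig":
        """Rebuild a configuration from its saved JSON form."""
        raw = json.loads(text)
        return cls(agents={n: AgentConfig(**a) for n, a in raw.items()})


# Configure: pick agents, their features, and the services they need.
config = SwarmConfig()
config.add_agent(AgentConfig("R1", ["ultrasonic", "wheel_encoder"], ["mapping"]))
config.add_agent(AgentConfig("R2", ["servo"], ["pulling"]))
saved = config.to_json()

# Run: load the saved configuration, then remove an agent at run time.
restored = SwarmConfig.from_json(saved)
restored.remove_agent("R2")
print(sorted(restored.agents))
```

Keeping the configuration as plain data makes the save/load step and the add/remove step independent of each other, which is one plausible way to support both bullets.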

4.1. Robot Deployment Environment

4.1.1. User Interface

4.1.2. Coordination Agent

- $T$ is the task to be accomplished, which is a set of $m$ subtasks, each composed of motor, sensor, and communication devices that need to be activated in certain ways in order to accomplish the task. It is denoted as $T = \{t_1, t_2, \ldots, t_m\}$, where $t_j$ is the subtask $j$ performed by robot $r_i$ and $1 \le j \le m$, $r_i \in R$.

- A subset of $T$ can be allocated to robots concurrently if its subtasks do not have ordering constraints.

- To accomplish the task $t_j$ on robot $r_i$, a collection of $n$ plans (solutions), denoted $P_i = \{p_{i1}, p_{i2}, \ldots, p_{in}\}$, needs to be generated based on the task requirements and the robot capabilities.

- A robot-specific cost determines the robot's particular cost (e.g., in terms of energy consumption or computational requirements) of using particular capabilities on the robot (such as a camera or a sonar sensor) to accomplish a task. We denote this cost by $\text{robot\_cost}(r_i, t_j)$.

- The cost of a plan $p_{ik}$ performing a task is the sum of the individual costs of robot $r_i$ performing the subtasks that are in the plan, which is denoted by $\text{cost}(p_{ik}) = \sum_{j} \text{robot\_cost}(r_i, t_j)$, where $t_j \in p_{ik}$.
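The cost model described in the list above can be illustrated with a short Python sketch. It is not the authors' implementation: the function names `robot_cost`, `plan_cost`, and `cheapest_plan`, the capability names, and every numeric cost are hypothetical, and picking the lowest-cost plan is an assumption about how the plan costs might be used.

```python
from typing import Dict, List, Tuple

# Hypothetical per-capability costs for a single robot (arbitrary units,
# e.g. energy); the values are illustrative and not taken from the paper.
ROBOT_COSTS: Dict[str, float] = {
    "camera": 5.0,
    "sonar": 1.5,
    "wheel_encoder": 0.5,
}


def robot_cost(costs: Dict[str, float], capability: str) -> float:
    """Robot-specific cost of using one capability to carry out a subtask."""
    return costs[capability]


def plan_cost(costs: Dict[str, float], plan: List[str]) -> float:
    """Cost of a plan: the sum of the robot's costs over the plan's subtasks."""
    return sum(robot_cost(costs, capability) for capability in plan)


def cheapest_plan(
    costs: Dict[str, float], plans: List[List[str]]
) -> Tuple[List[str], float]:
    """Return the plan with the lowest total cost (assumed selection rule)."""
    best = min(plans, key=lambda plan: plan_cost(costs, plan))
    return best, plan_cost(costs, best)


# Two candidate plans for the same task: one camera-based, one sonar-based.
PLANS = [["camera", "wheel_encoder"], ["sonar", "wheel_encoder"]]
best_plan, best_cost = cheapest_plan(ROBOT_COSTS, PLANS)
print(best_plan, best_cost)
```

Each plan is represented here simply as the list of capabilities its subtasks activate, so the plan cost is a direct sum over that list.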

Algorithm 1. Input: (T, R, M, N)

Algorithm 2. Input: (R, N)

4.1.3. Runtime Interpreter

4.1.4. Knowledge Base (Registry)

4.2. Robot Control Middleware

4.2.1. Device Library

- Serial (PINs 0 (RX) and 1 (TX)): Used to receive (RX) and transmit (TX) TTL serial data. For example, on the Arduino Diecimila, these pins are connected to the corresponding pins of the FTDI USB-to-TTL serial chip.

- External interrupts (PINs 2 and 3): These pins can be configured to trigger an interrupt on a low value, a rising or falling edge, or a change in value.

- PWM (pulse-width modulation) pins (PINs 4 to 24): These pins provide 8-bit PWM output.

- Analog pins (PINs 25 and higher): These analog input pins support 10-bit analog-to-digital conversion (ADC).
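The pin ranges listed above amount to a lookup from pin number to pin role. A minimal Python sketch of that lookup follows; the function name `pin_role` and the role labels are hypothetical, and the ranges are copied from the list rather than from any particular board's datasheet.

```python
def pin_role(pin: int) -> str:
    """Map a pin number to its role, using the ranges given in the list above."""
    if pin < 0:
        raise ValueError(f"invalid pin number: {pin}")
    if pin in (0, 1):
        return "serial"              # 0 = RX, 1 = TX
    if pin in (2, 3):
        return "external_interrupt"  # low value, edge, or change triggers
    if 4 <= pin <= 24:
        return "pwm"                 # 8-bit PWM output
    return "analog"                  # 25 and higher: 10-bit ADC input


for example_pin in (0, 3, 10, 25):
    print(example_pin, pin_role(example_pin))
```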

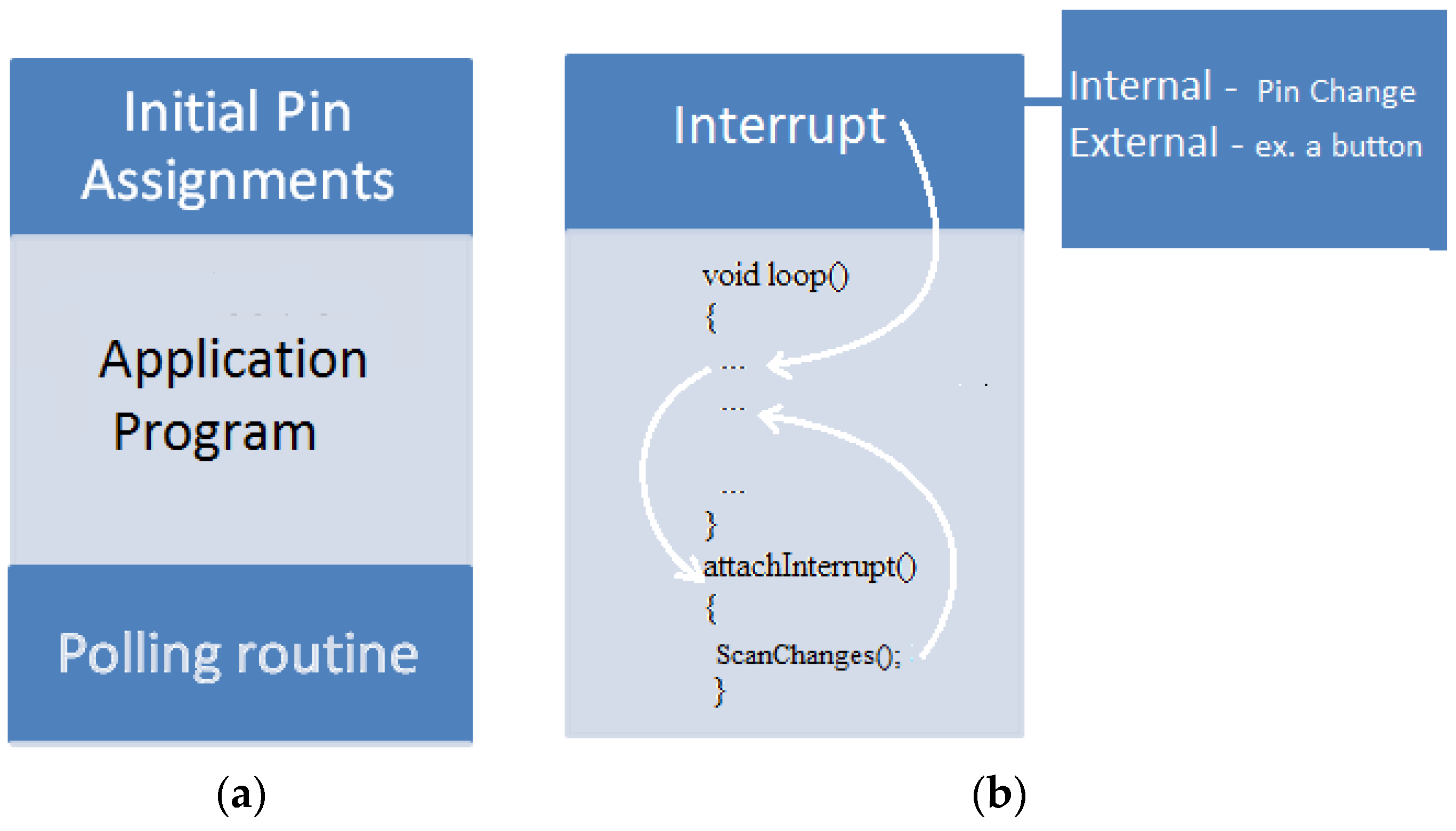

4.2.2. Controlling Program

4.2.3. Polling Routine

- Digital PWM pins can only be connected to ultrasonic sensors or servo motors.

- Analog pins can only be connected to infrared or sonar sensors.
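These two wiring rules can be checked mechanically before a configuration is accepted. The sketch below shows one possible check in Python; the names `ALLOWED_DEVICES`, `can_connect`, and `invalid_connections`, as well as the device labels, are hypothetical, and how the polling routine itself enforces the rules is not specified here.

```python
from typing import Dict, List, Set, Tuple

# Allowed device types per pin type, taken from the two rules above.
ALLOWED_DEVICES: Dict[str, Set[str]] = {
    "pwm": {"ultrasonic", "servo"},
    "analog": {"infrared", "sonar"},
}


def can_connect(pin_type: str, device: str) -> bool:
    """Return True if the device type may be attached to this pin type."""
    return device in ALLOWED_DEVICES.get(pin_type, set())


def invalid_connections(wiring: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Return every (pin_type, device) pair that violates the rules."""
    return [(pin, dev) for pin, dev in wiring if not can_connect(pin, dev)]


# A hypothetical wiring with one violation: a servo on an analog pin.
wiring = [("pwm", "ultrasonic"), ("analog", "infrared"), ("analog", "servo")]
print(invalid_connections(wiring))
```

Pin types without an entry in the table are rejected by default, which is a conservative assumption rather than something the rules state.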

4.2.4. Hardware Abstraction Layer

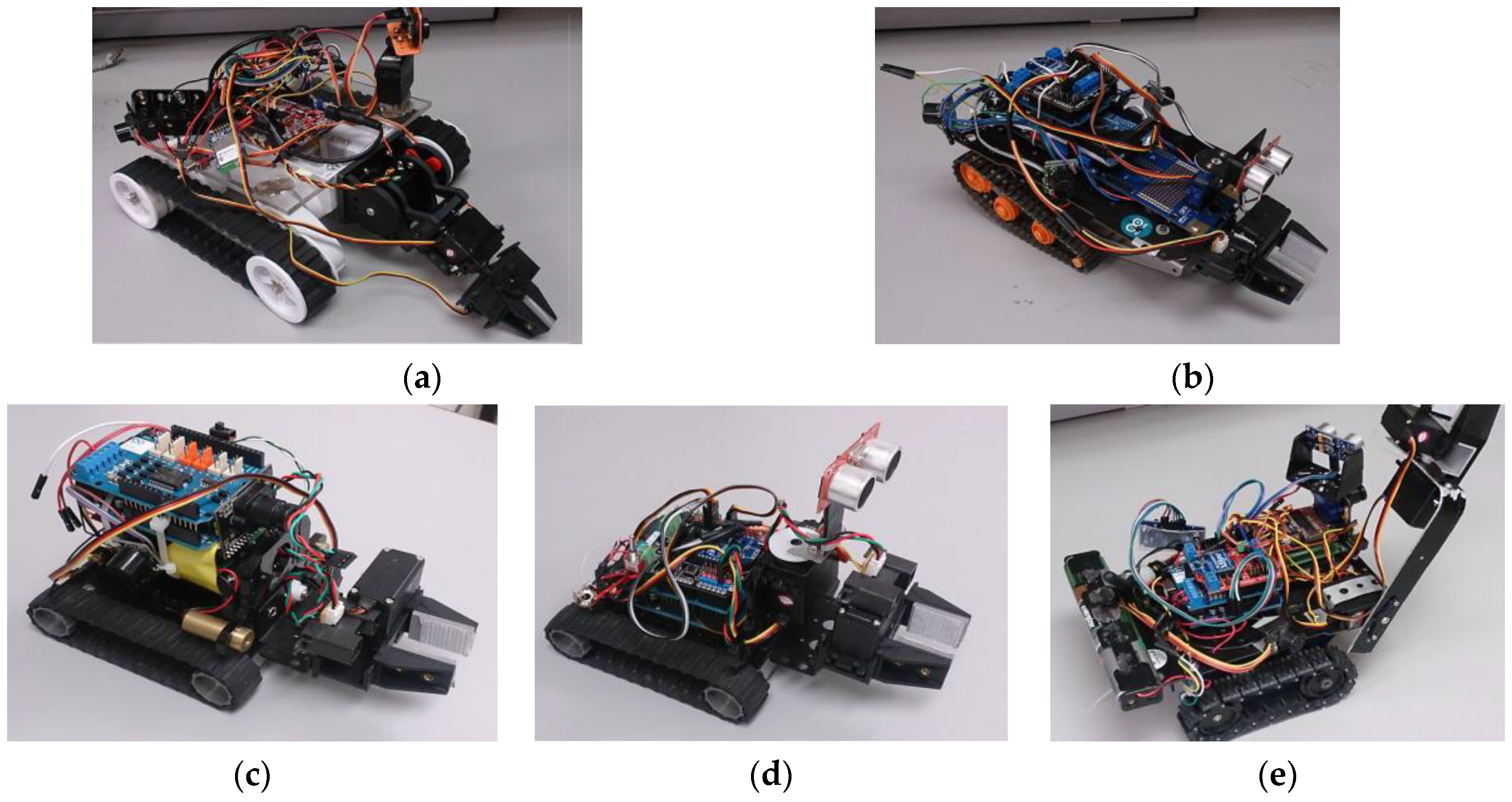

5. Experimental Results

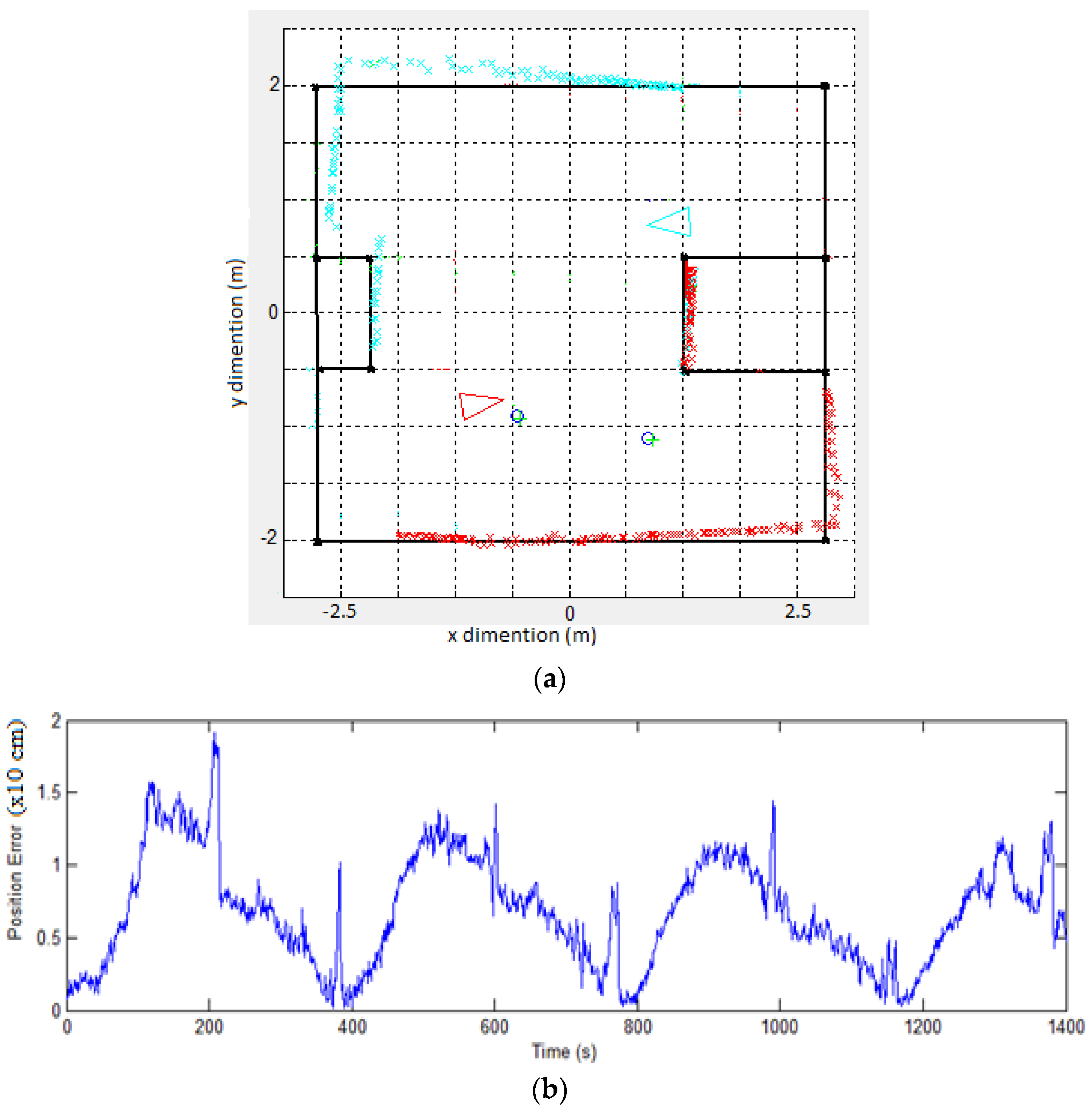

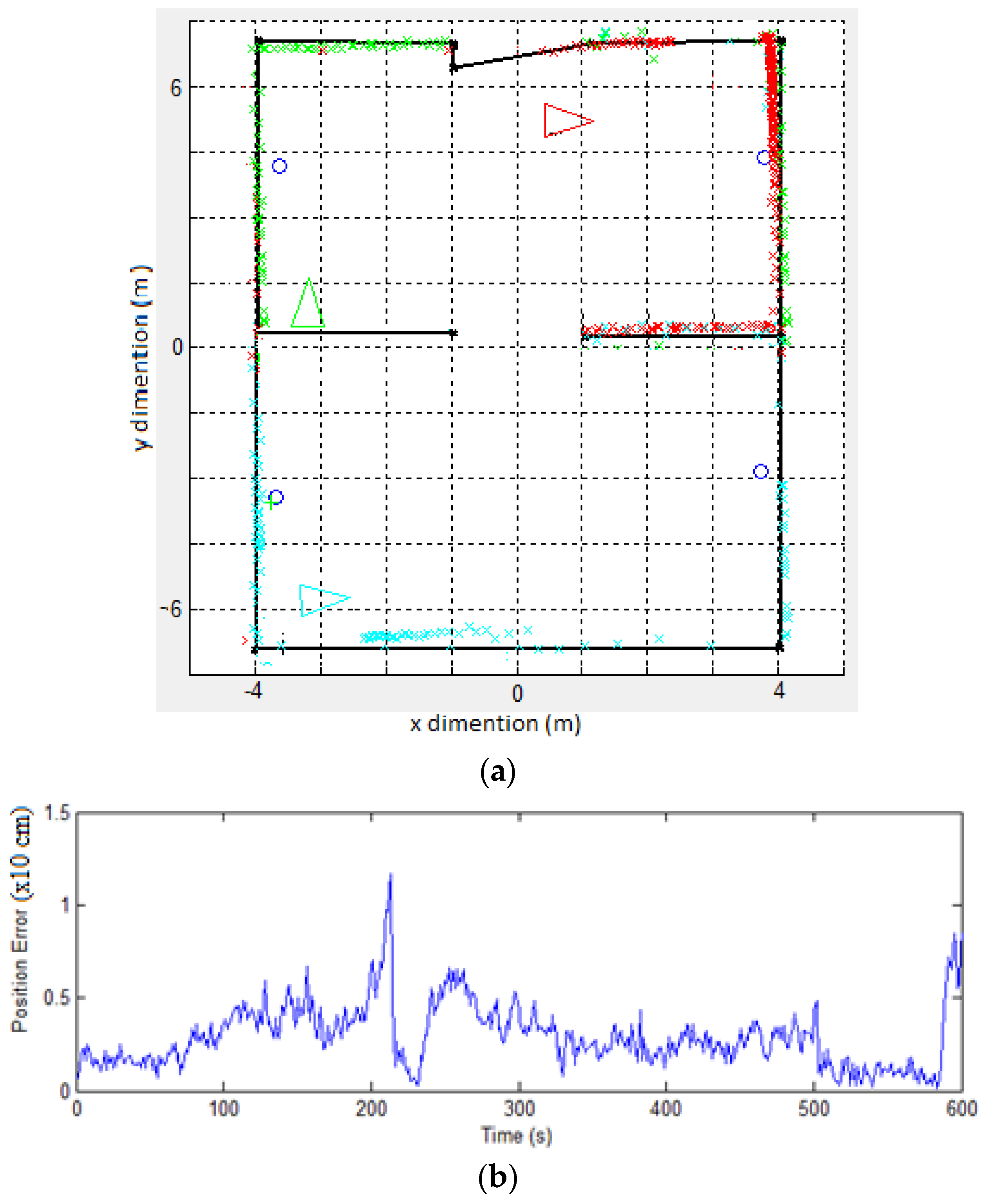

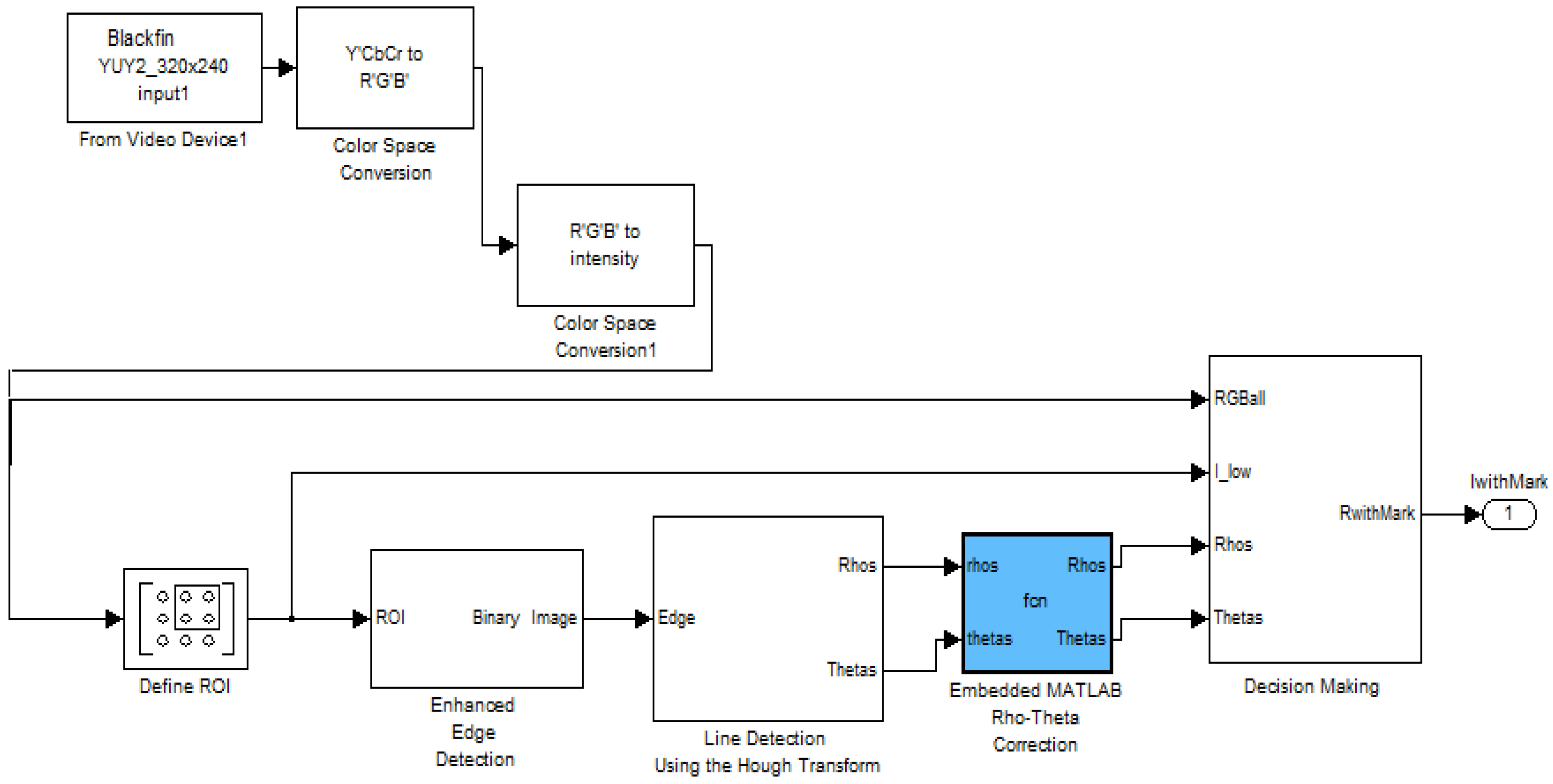

5.1. Mapping Task

- Experiment 1 uses two robots; each one has wheel encoders and one onboard ultrasonic range finder.

- Experiment 2 uses three robots, each of which has the same configuration as the robots in Experiment 1 plus two more sonar sensors mounted on the sides.

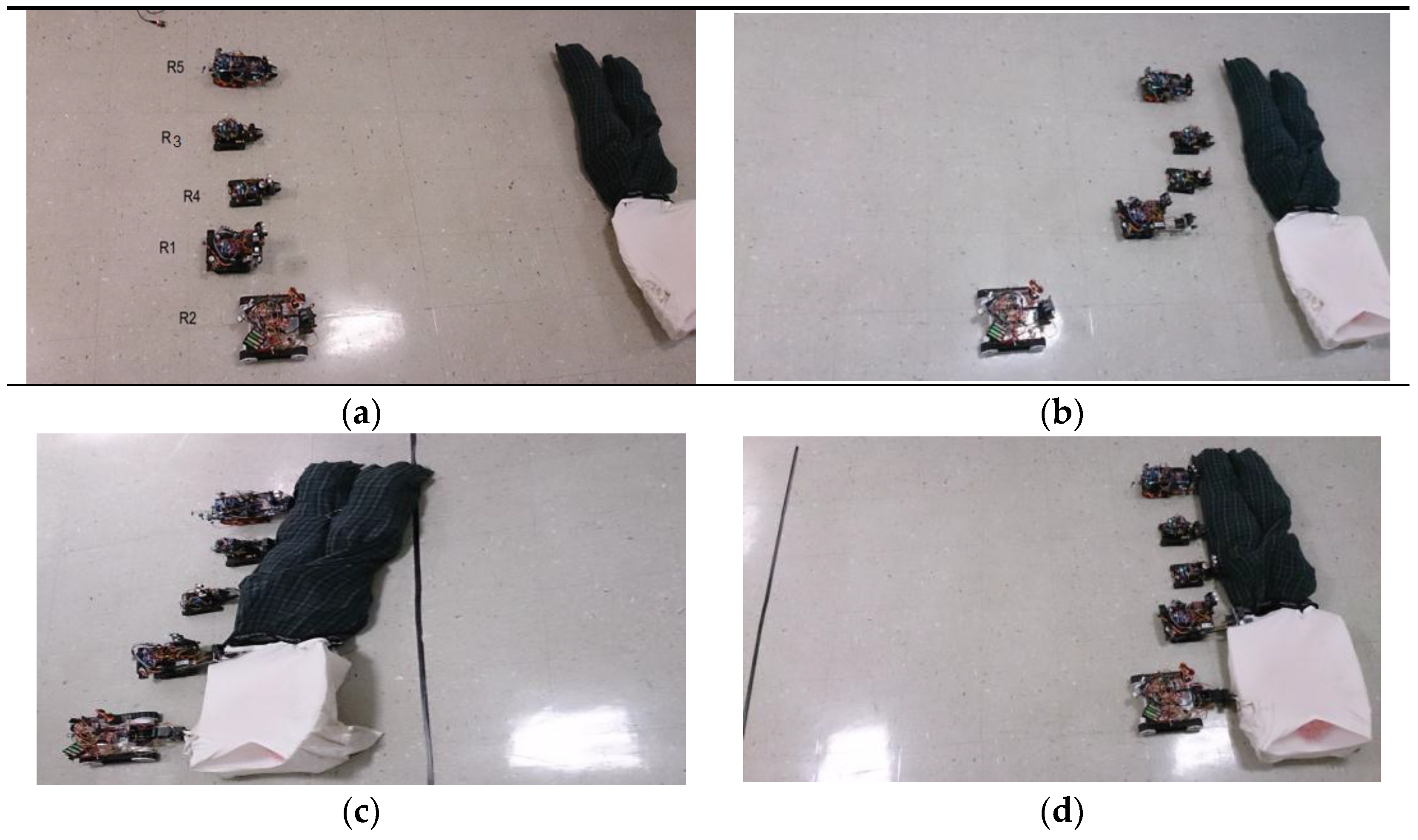

5.2. Human Rescue Task

5.2.1. Execution Example

5.2.2. Optimal Solution

6. Conclusions

Author Contributions

Conflicts of Interest

| Team Size | Weight of Body | Average Pulling Distance (m) | Average Time (s) |
|---|---|---|---|
| 1 | 300 g | 1.6 | 196 |
| 2 | 800 g | 1.3 | 240 |
| 3 | 1200 g | 2.5 | 201 |
| 4 | 1200 g | 2.0 | 210 |
| 5 | 1200 g | 1.6 | 400 |

| Team Composition | Centralized Utility Value | Centralized Time (s) | Decentralized Utility Value | Decentralized Time (s) |
|---|---|---|---|---|
| (R1, R3, R4, R5) | 8.82 | 210 | 6.62 | 299 |
| (R1, R3, R4) | 9.63 | 201 | 6.91 | 277 |
| (R2, R3, R4, R1, R5) | 8.43 | 400 | 6.66 | 405 |
| (R2, R5) | 8.16 | 240 | 6.34 | 310 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Abukhalil, T.; Patil, M.; Patel, S.; Sobh, T. Deployment Environment for a Swarm of Heterogeneous Robots. Robotics 2016, 5, 22. https://doi.org/10.3390/robotics5040022