Terrain Perception in a Shape Shifting Rolling-Crawling Robot

Abstract

1. Introduction

2. Scorpio Robot: System Overview

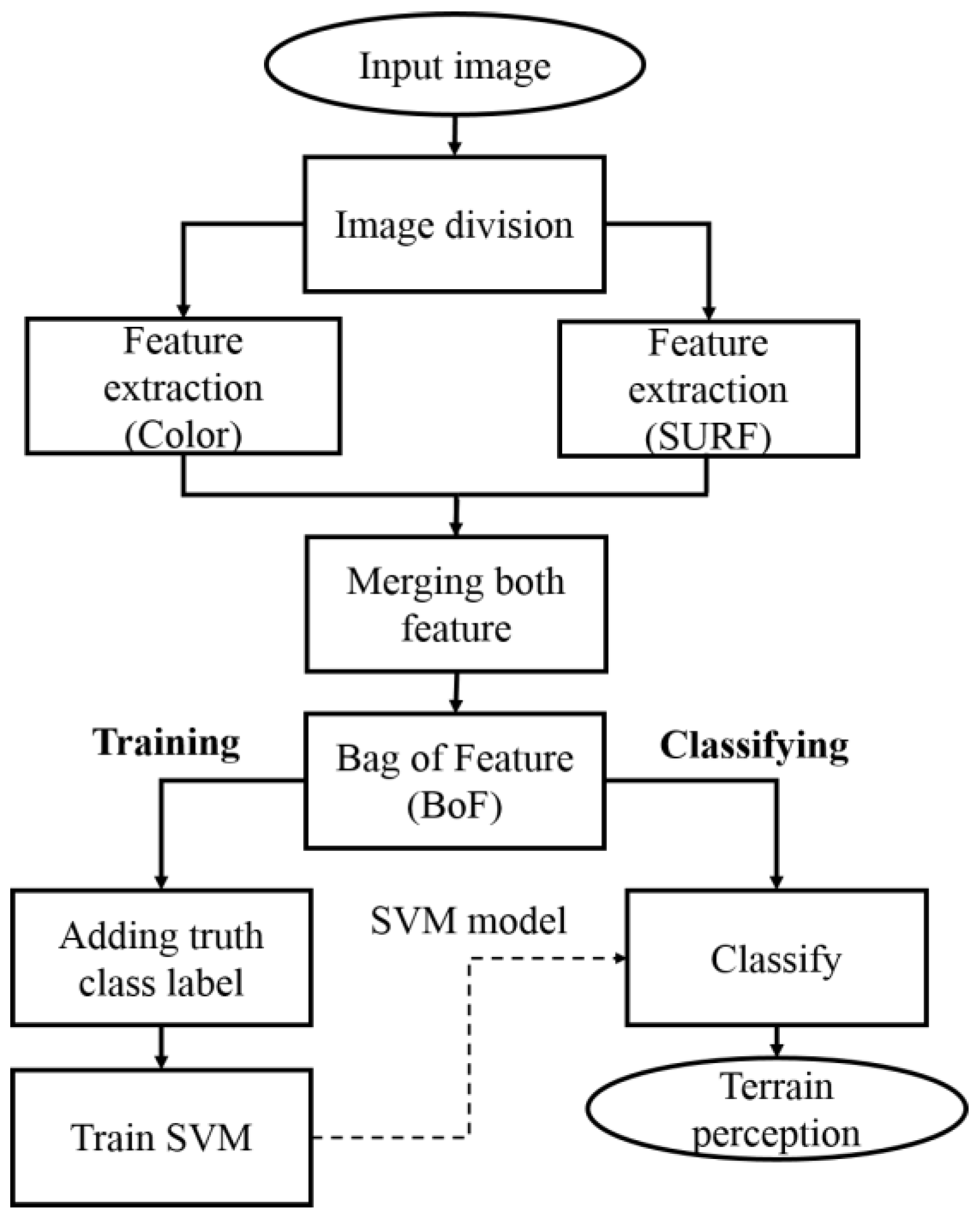

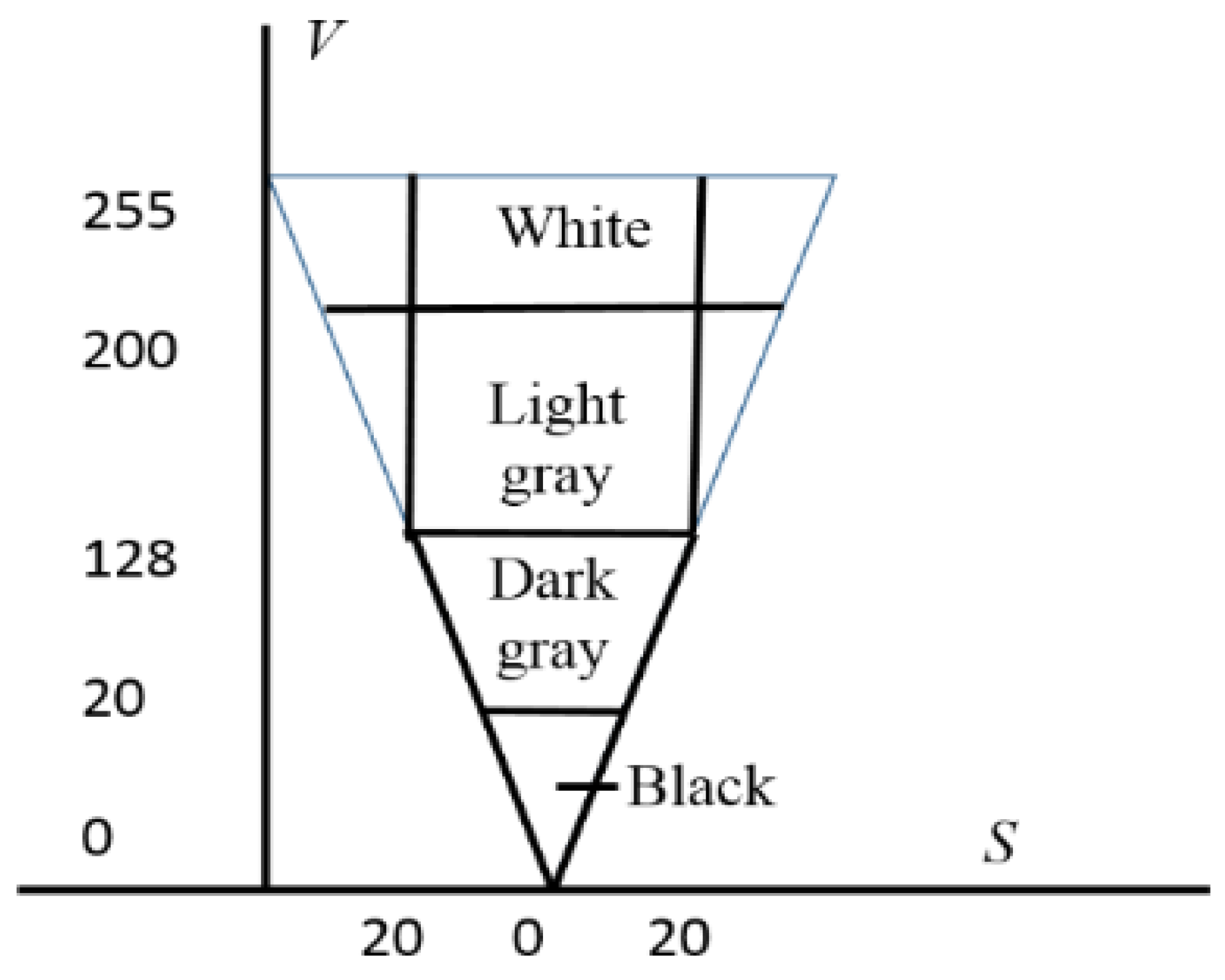

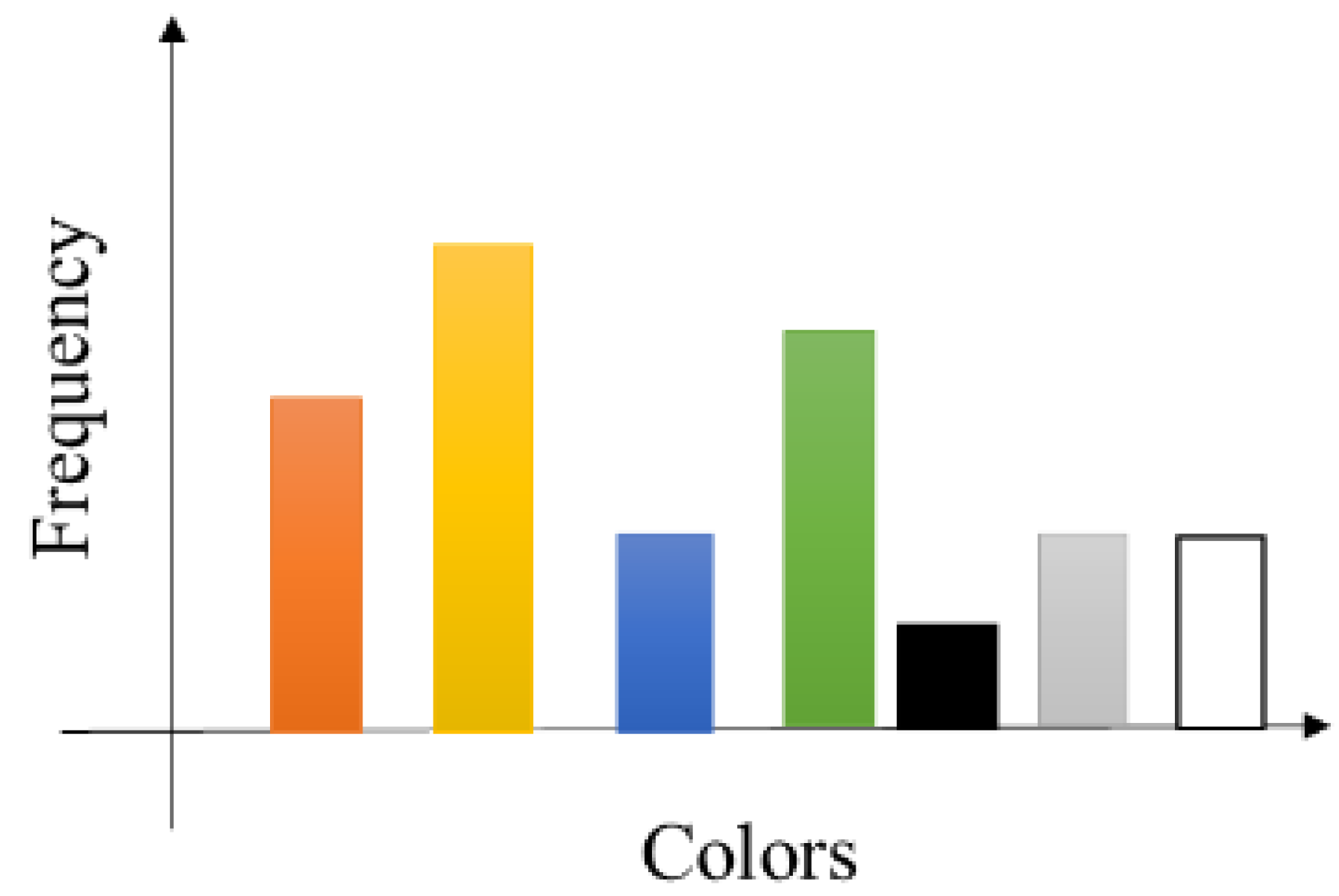

3. Terrain Perception

3.1. System Overview

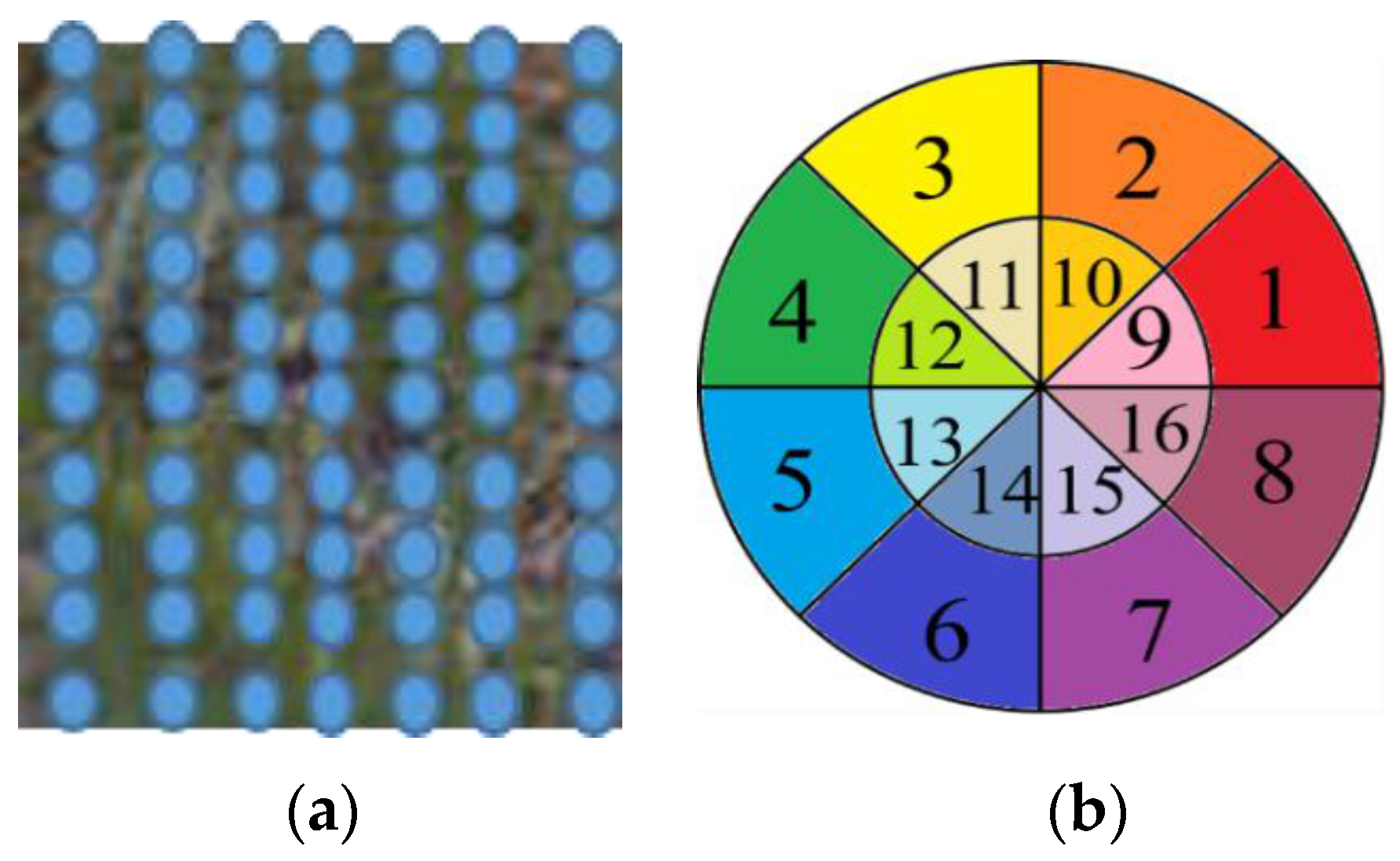

3.2. SURF (Speeded-Up Robust Features) Descriptor

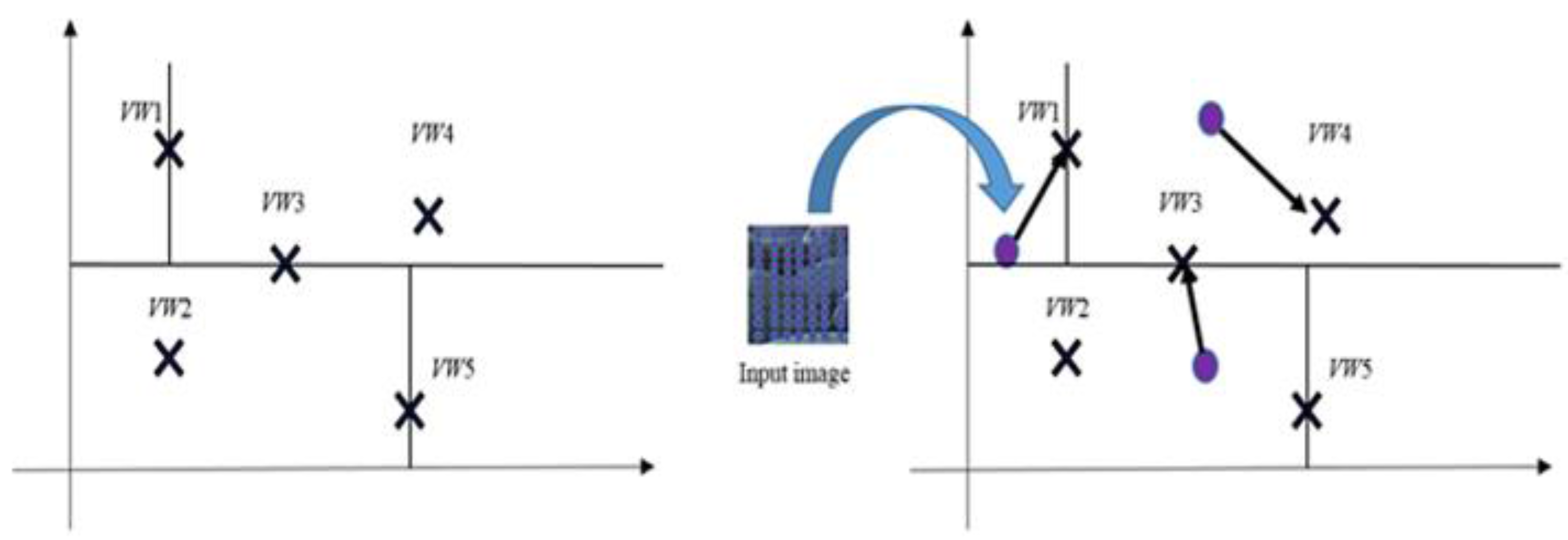

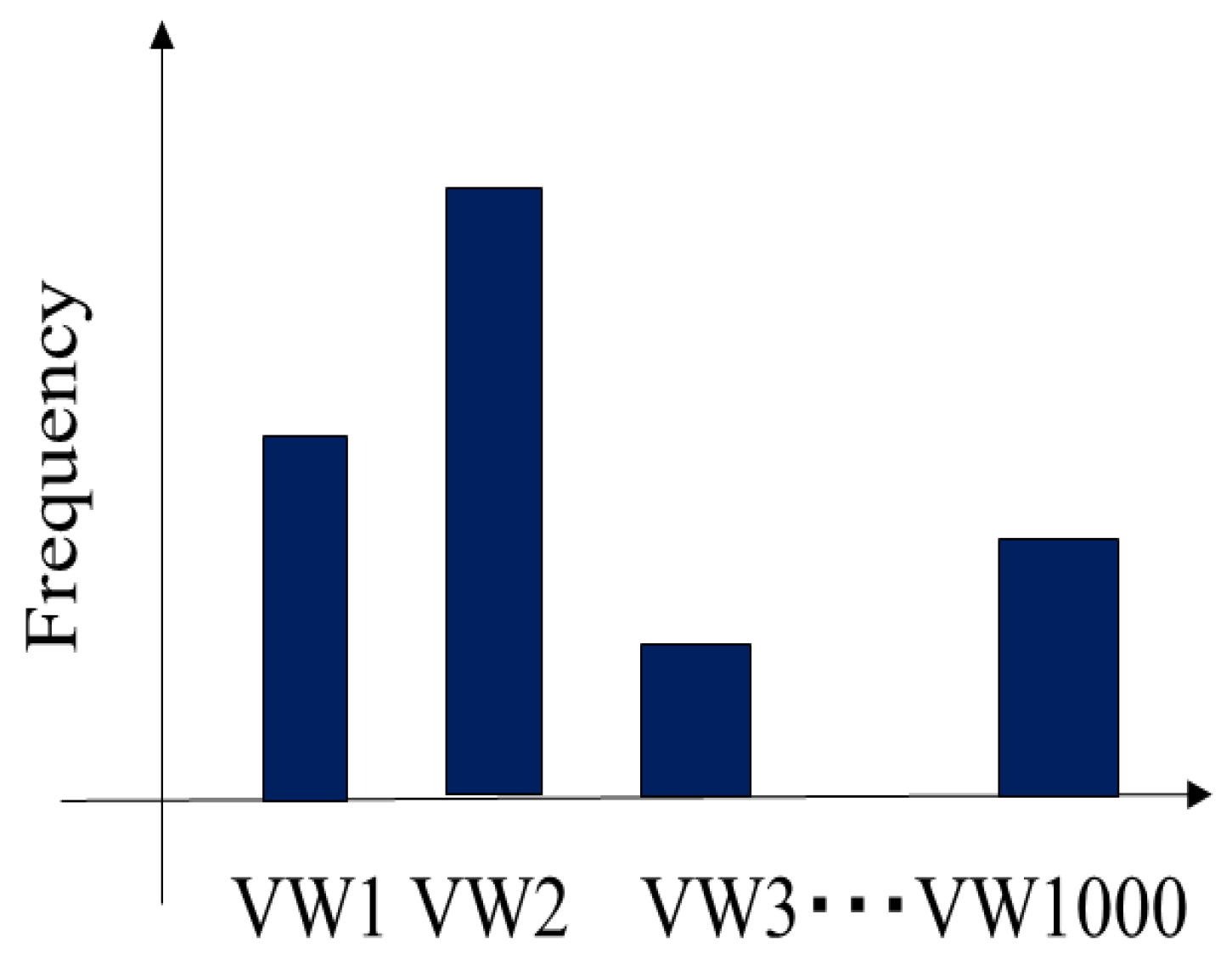
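SURF (Bay et al.) approximates Gaussian second-derivative filters and Haar wavelet responses with box filters, and it evaluates each of these in constant time from an integral image. The sketch below shows only that building block. It assumes a grayscale image given as a list of rows of pixel intensities, and the helper names `integral_image`, `box_sum` and `haar_x` are hypothetical. It is not the full SURF detector or descriptor, and it is not the implementation used on the robot.

```python
from typing import List


def integral_image(img: List[List[float]]) -> List[List[float]]:
    """Return an (h+1) x (w+1) summed-area table with a zero first row and column.

    ii[y][x] holds the sum of img[0..y-1][0..x-1].
    """
    h = len(img)
    w = len(img[0]) if h else 0
    ii = [[0.0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0.0
        for x in range(w):
            row_sum += img[y][x]
            # Everything above (previous rows) plus the running sum of this row.
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def box_sum(ii: List[List[float]], x0: int, y0: int, x1: int, y1: int) -> float:
    """Sum of img over columns x0..x1-1 and rows y0..y1-1 using four lookups."""
    return ii[y1][x1] - ii[y0][x1] - ii[y1][x0] + ii[y0][x0]


def haar_x(ii: List[List[float]], x: int, y: int, s: int) -> float:
    """Horizontal Haar response over the s x s square with top-left corner (x, y).

    Computed as the right half minus the left half; s is assumed to be even.
    """
    half = s // 2
    left = box_sum(ii, x, y, x + half, y + s)
    right = box_sum(ii, x + half, y, x + s, y + s)
    return right - left
```

A vertical `haar_y` would follow the same pattern with the halves stacked top and bottom. The SURF descriptor sums such responses (dx, dy, |dx|, |dy|) over a 4 × 4 grid of subregions around each keypoint, which gives 64 values per keypoint.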

3.3. BoW (Bag of Words)

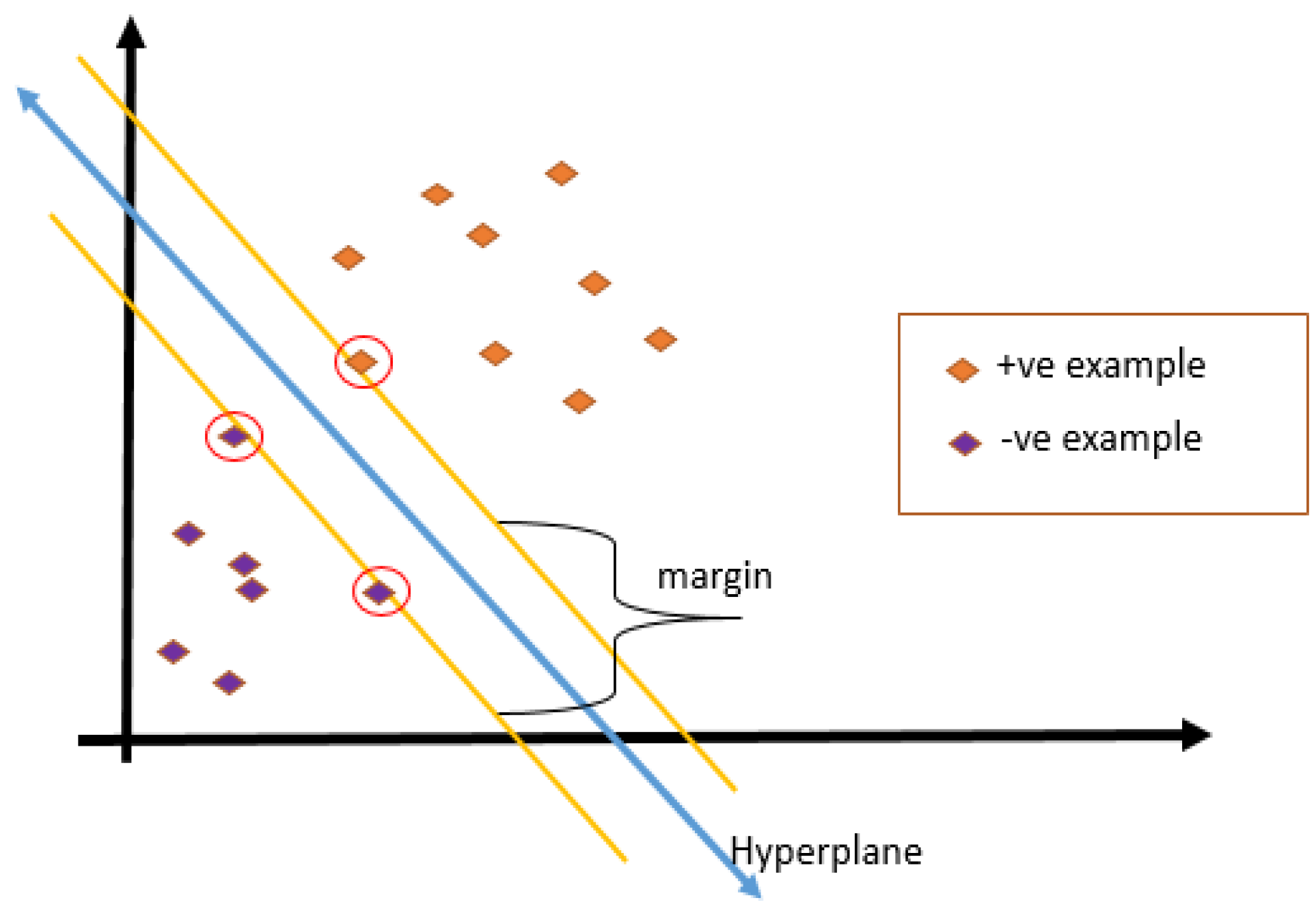
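A bag-of-words pipeline (Csurka et al.) first clusters local descriptors from the training images into a vocabulary of "visual words". It then represents each image as a normalised histogram of word occurrences, and that histogram is the input vector for the classifier. The sketch below makes several assumptions. It uses plain Lloyd's k-means with centres seeded from the first k descriptors, and it finds the nearest word by brute-force search. Descriptors are treated as generic numeric vectors (64-dimensional for standard SURF). The vocabulary size, the seeding and the function names are illustrative only.

```python
from typing import List, Sequence

Vector = Sequence[float]


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two descriptors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def nearest_word(desc: Vector, vocab: List[Vector]) -> int:
    """Index of the visual word (cluster centre) closest to a descriptor."""
    return min(range(len(vocab)), key=lambda k: sq_dist(desc, vocab[k]))


def kmeans(points: List[Vector], k: int, iters: int = 20) -> List[List[float]]:
    """Lloyd's k-means; centres are seeded from the first k points (a simplification)."""
    centres = [list(p) for p in points[:k]]
    for _ in range(iters):
        clusters: List[List[Vector]] = [[] for _ in range(k)]
        for p in points:
            clusters[nearest_word(p, centres)].append(p)
        for j, members in enumerate(clusters):
            if members:  # keep the old centre if a cluster becomes empty
                dim = len(members[0])
                centres[j] = [sum(m[d] for m in members) / len(members) for d in range(dim)]
    return centres


def bow_histogram(descriptors: List[Vector], vocab: List[Vector]) -> List[float]:
    """L1-normalised histogram of visual-word occurrences for one image."""
    counts = [0.0] * len(vocab)
    for d in descriptors:
        counts[nearest_word(d, vocab)] += 1
    total = sum(counts)
    return [c / total for c in counts] if total else counts
```

For large vocabularies, a k-d tree (Bentley) or another nearest-neighbour index could replace the linear scan in `nearest_word`.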

3.4. SVM (Support Vector Machine) Classifier
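The cited multi-class SVM report (Weston and Watkins) describes a joint multi-class formulation. The sketch below uses the simpler one-vs-rest decomposition instead: it trains one linear soft-margin SVM per class and picks the class with the largest decision value. Each binary SVM is trained by full-batch subgradient descent on the hinge loss. The linear kernel, the hyperparameters `lam`, `epochs` and `lr`, and all function names are illustrative assumptions, not the settings used for Scorpio.

```python
from typing import Dict, Hashable, List, Sequence, Tuple

Model = Tuple[List[float], float]  # (weights, bias)


def dot(w: Sequence[float], x: Sequence[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))


def train_binary_svm(X: List[Sequence[float]], y: List[int],
                     lam: float = 0.01, epochs: int = 500, lr: float = 0.1) -> Model:
    """Linear soft-margin SVM with labels y in {-1, +1}.

    Minimises lam/2 * ||w||^2 + mean(max(0, 1 - y * (w.x + b)))
    by full-batch subgradient descent with a constant step size.
    """
    n, dim = len(X), len(X[0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w = [lam * wi for wi in w]  # gradient of the L2 term (bias not regularised)
        grad_b = 0.0
        for xi, yi in zip(X, y):
            if yi * (dot(w, xi) + b) < 1:  # hinge term is active for this sample
                for j in range(dim):
                    grad_w[j] -= yi * xi[j] / n
                grad_b -= yi / n
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def train_one_vs_rest(X: List[Sequence[float]], labels: List[Hashable],
                      **kwargs) -> Dict[Hashable, Model]:
    """Train one binary SVM per class: that class (+1) against all others (-1)."""
    classes = sorted(set(labels), key=str)
    return {c: train_binary_svm(X, [1 if lab == c else -1 for lab in labels], **kwargs)
            for c in classes}


def predict(models: Dict[Hashable, Model], x: Sequence[float]) -> Hashable:
    """Return the class whose binary SVM gives the largest decision value."""
    return max(models, key=lambda c: dot(models[c][0], x) + models[c][1])
```

In a BoW pipeline, each row of `X` would be one image's word histogram. A nonlinear kernel would need a dual (kernelised) solver instead of this primal update.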

3.5. Database Establishment

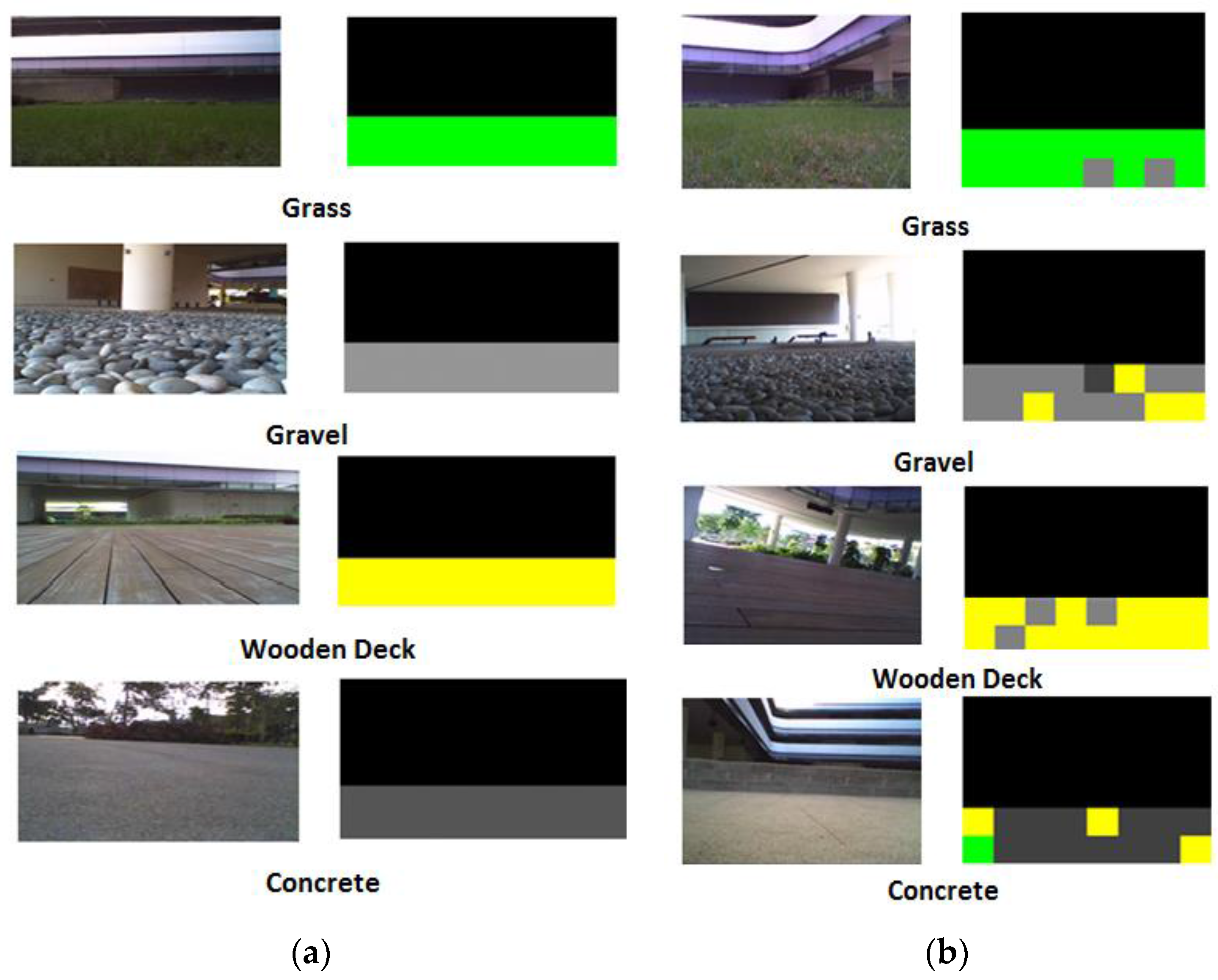

4. Experiments and Results

4.1. Conditions for the Experiment

4.2. Terrain Classification Results

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Weiss, C.; Frohlich, H.; Zell, A. Vibration-based terrain classification using support vector machines. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 4429–4434.

- Best, G.; Moghadam, P.; Kottege, N.; Kleeman, L. Terrain classification using a hexapod robot. In Proceedings of the Australasian Conference on Robotics and Automation, Sydney, Australia, 2–4 December 2013.

- Takizawa, H.; Yamaguchi, S.; Aoyagi, M.; Ezaki, N.; Mizuno, S. Kinect cane: An assistive system for the visually impaired based on three-dimensional object recognition. In Proceedings of the 2012 IEEE/SICE International Symposium on System Integration (SII), Fukuoka, Japan, 16–18 December 2012; pp. 740–745.

- Zenker, S.; Aksoy, E.E.; Goldschmidt, D.; Worgotter, F.; Manoonpong, P. Visual terrain classification for selecting energy efficient gaits of a hexapod robot. In Proceedings of the 2013 IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM), Wollongong, Australia, 9–12 July 2013; pp. 577–584.

- Lu, L.; Ordonez, C.; Collins, E.G., Jr.; DuPont, E.M. Terrain surface classification for autonomous ground vehicles using a 2D laser stripe-based structured light sensor. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, IROS 2009, St. Louis, MO, USA, 10–15 October 2009; pp. 2174–2181.

- Ascari, L.; Ziegenmeyer, M.; Corradi, P.; Gaßmann, B.; Zöllner, M.; Dillmann, R.; Dario, P. Can statistics help walking robots in assessing terrain roughness? Platform description and preliminary considerations. In Proceedings of the 9th ESA Workshop on Advanced Space Technologies for Robotics and Automation ASTRA 2006, ESTEC, Noordwijk, The Netherlands, 28–30 November 2006.

- Kim, J.R.; Lin, S.Y.; Muller, J.P.; Warner, N.H.; Gupta, S. Multi-resolution digital terrain models and their potential for Mars landing site assessments. Planet. Space Sci. 2013, 85, 89–105.

- Filitchkin, P.; Byl, K. Feature-based terrain classification for LittleDog. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vilamoura, Portugal, 7–12 October 2012; pp. 1387–1392.

- Moghadam, P.; Wijesoma, W.S. Online, self-supervised vision-based terrain classification in unstructured environments. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics, 2009, SMC 2009, San Antonio, TX, USA, 11–14 October 2009; pp. 3100–3105.

- Otte, S.; Laible, S.; Hanten, R.; Liwicki, M.; Zell, A. Robust Visual Terrain Classification with Recurrent Neural Networks. In Proceedings; Presses Universitaires de Louvain: Bruges, Belgium, 2015; pp. 451–456.

- Thrun, S.; Montemerlo, M.; Aron, A. Probabilistic Terrain Analysis for High-Speed Desert Driving. In Proceedings of the Robotics: Science and Systems, Philadelphia, PA, USA, 16–19 August 2006; pp. 16–19.

- Bajracharya, M.; Tang, B.; Howard, A.; Turmon, M. Learning long-range terrain classification for autonomous navigation. In Proceedings of the IEEE International Conference on Robotics and Automation, 2008, ICRA 2008, Pasadena, CA, USA, 19–23 May 2008; pp. 4018–4024.

- Sugiyama, Y.; Hirai, S. Crawling and jumping by a deformable robot. Int. J. Robot. Res. 2006, 25, 603–620.

- Chen, S.C.; Huang, K.J.; Chen, W.H.; Shen, S.Y.; Li, C.H.; Lin, P.C. Quattroped: A Leg–Wheel Transformable Robot. IEEE/ASME Trans. Mechatron. 2014, 19, 730–742.

- Ijspeert, A.J.; Crespi, A.; Ryczko, D.; Cabelguen, J.M. From swimming to walking with a salamander robot driven by a spinal cord model. Science 2007, 315, 1416–1420.

- Sinha, A.; Tan, N.; Mohan, R.E. Terrain perception for a reconfigurable biomimetic robot using monocular vision. Robot. Biomim. 2014, 1, 1–11.

- Tan, N.; Mohan, R.E.; Elangovan, K. A Bio-inspired Reconfigurable Robot. In Advances in Reconfigurable Mechanisms and Robots II; Springer: Gewerbestrasse, Switzerland, 2016; pp. 483–493.

- Kapilavai, A.; Mohan, R.E.; Tan, N. Bioinspired design: A case study of reconfigurable crawling-rolling robot. In Proceedings of the DS80-2 20th International Conference on Engineering Design (ICED 15) Vol. 2: Design Theory and Research Methodology Design Processes, Milan, Italy, 27–30 July 2015; pp. 23–34.

- Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-up robust features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

- Juan, L.; Gwun, O. A comparison of SIFT, PCA-SIFT and SURF. Int. J. Image Process. (IJIP) 2009, 3, 143–152.

- Csurka, G.; Dance, C.; Fan, L.; Willamowski, J.; Bray, C. Visual categorization with bags of keypoints. In Proceedings of the ECCV International Workshop on Statistical Learning in Computer Vision, Prague, Czech Republic, 11–14 May 2004; pp. 59–74.

- Weston, J.; Watkins, C. Multi-Class Support Vector Machines; Technical Report CSD-TR-98-04; University of London: London, UK, 1998.

- Tuytelaars, T. Dense interest points. In Proceedings of the 2010 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 13–18 June 2010; pp. 2281–2288.

- Bentley, J.L. K-d trees for semidynamic point sets. In Proceedings of the Sixth Annual Symposium on Computational Geometry, Berkeley, CA, USA, 7–9 June 1990; pp. 187–197.

| Components | Specifications |
|---|---|
| Controller | Arduino Pro Mini 328 |
| Servo Motor | JR ES 376 |
| Servo Controller | Pololu micro Maestro 18-Channel |
| Sensors | Wi-Fi Ai-Ball camera; MinIMU-9 v2 |
| Battery | LiPo 1200 mAh |
| Communication | XBee Pro S1 |
| Full body material | Polylactic acid, or polylactide (PLA) |
| Diameter (rolling form) | 168 mm |
| L × W × H (crawling form) | 230 mm × 230 mm × 175 mm |
| Weight | 430 g |

| Predicted (rows) / Actual (columns) | Grass | Gravel | Wood deck | Concrete | Precision (%) |
|---|---|---|---|---|---|
| Grass | 1579 | 2 | 0 | 18 | 98.7 |
| Gravel | 11 | 1544 | 49 | 67 | 92.4 |
| Wood deck | 1 | 30 | 1530 | 74 | 93.6 |
| Concrete | 9 | 24 | 21 | 1441 | 96.4 |
| Recall (%) | 98.7 | 96.5 | 95.6 | 90.1 | |
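Read with rows as the predicted terrain and columns as the ground truth, each column sums to 1600 test images. Under that reading, the listed precision values are row-wise ratios of the diagonal and the recall values are column-wise ratios. The overall accuracy works out to 6094/6400 ≈ 95.2%. The short check below recomputes these figures from the counts in the table. The variable and function names are illustrative.

```python
# Confusion matrix copied from the table: rows = predicted class, columns = actual class.
CLASSES = ["Grass", "Gravel", "Wood deck", "Concrete"]
CONFUSION = [
    [1579, 2, 0, 18],
    [11, 1544, 49, 67],
    [1, 30, 1530, 74],
    [9, 24, 21, 1441],
]


def precision_recall(cm):
    """Per-class precision (diagonal / row sum) and recall (diagonal / column sum)."""
    n = len(cm)
    precision = [cm[i][i] / sum(cm[i]) for i in range(n)]
    recall = [cm[j][j] / sum(cm[i][j] for i in range(n)) for j in range(n)]
    return precision, recall


def accuracy(cm):
    """Fraction of all test images that lie on the diagonal."""
    return sum(cm[i][i] for i in range(len(cm))) / sum(map(sum, cm))


p, r = precision_recall(CONFUSION)
for name, pi, ri in zip(CLASSES, p, r):
    print(f"{name:10s} precision {100 * pi:.1f}%  recall {100 * ri:.1f}%")
print(f"overall accuracy {100 * accuracy(CONFUSION):.1f}%")
```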

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Masataka, F.; Mohan, R.E.; Tan, N.; Nakamura, A.; Pathmakumar, T. Terrain Perception in a Shape Shifting Rolling-Crawling Robot. Robotics 2016, 5, 19. https://doi.org/10.3390/robotics5040019
