Room Volume Estimation Based on Ambiguity of Short-Term Interaural Phase Differences Using Humanoid Robot Head †

Abstract

1. Introduction

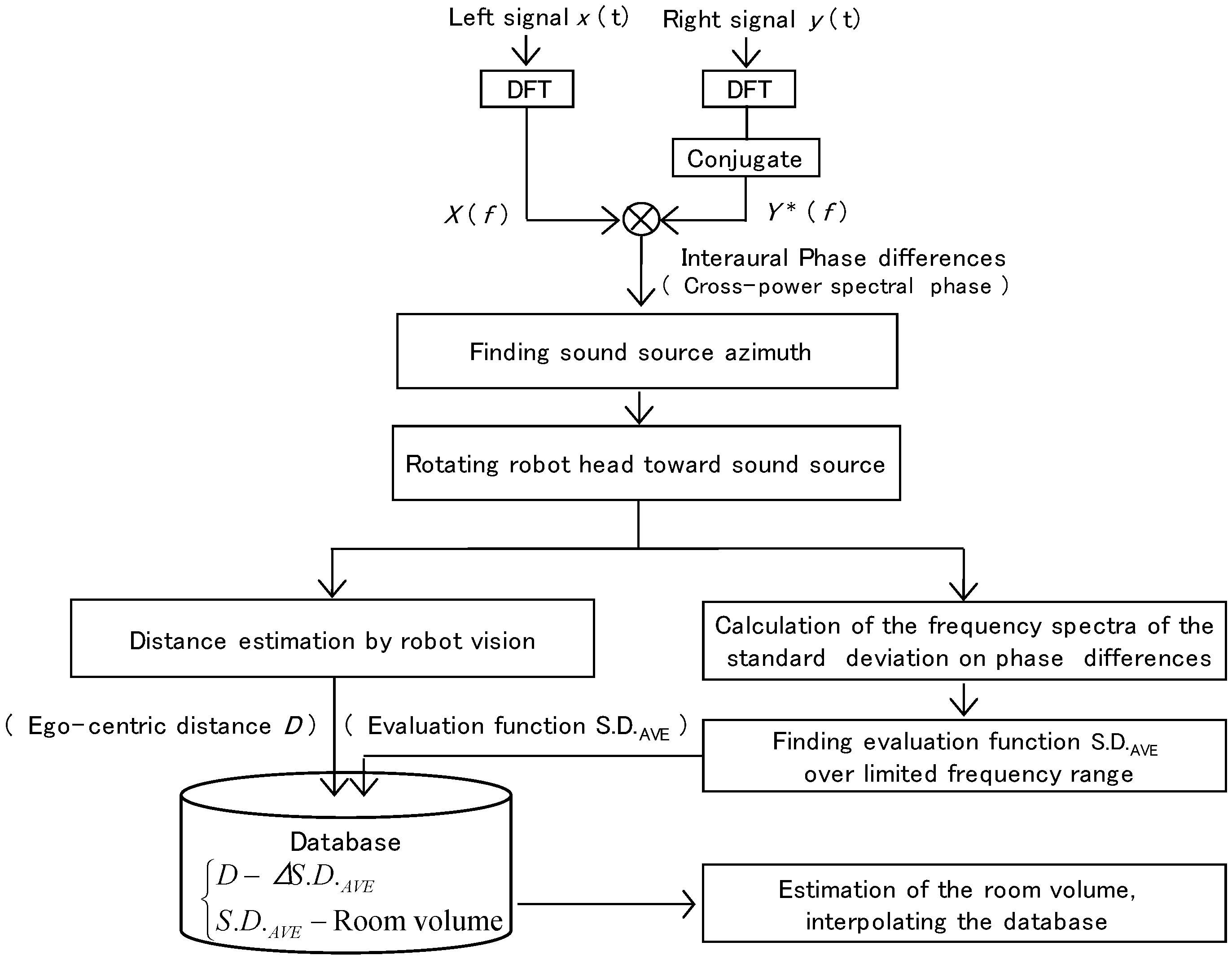

2. Methods and Procedures

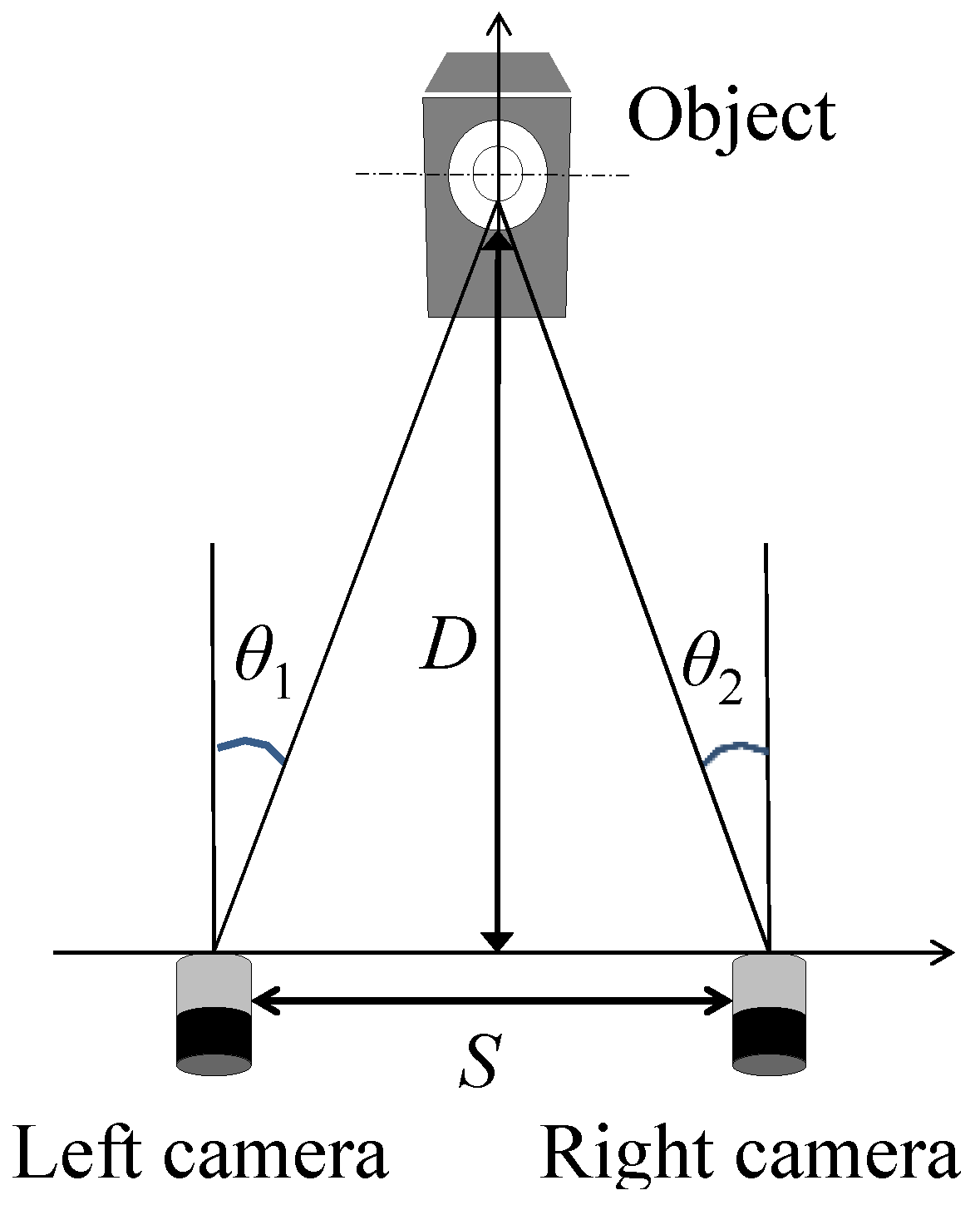

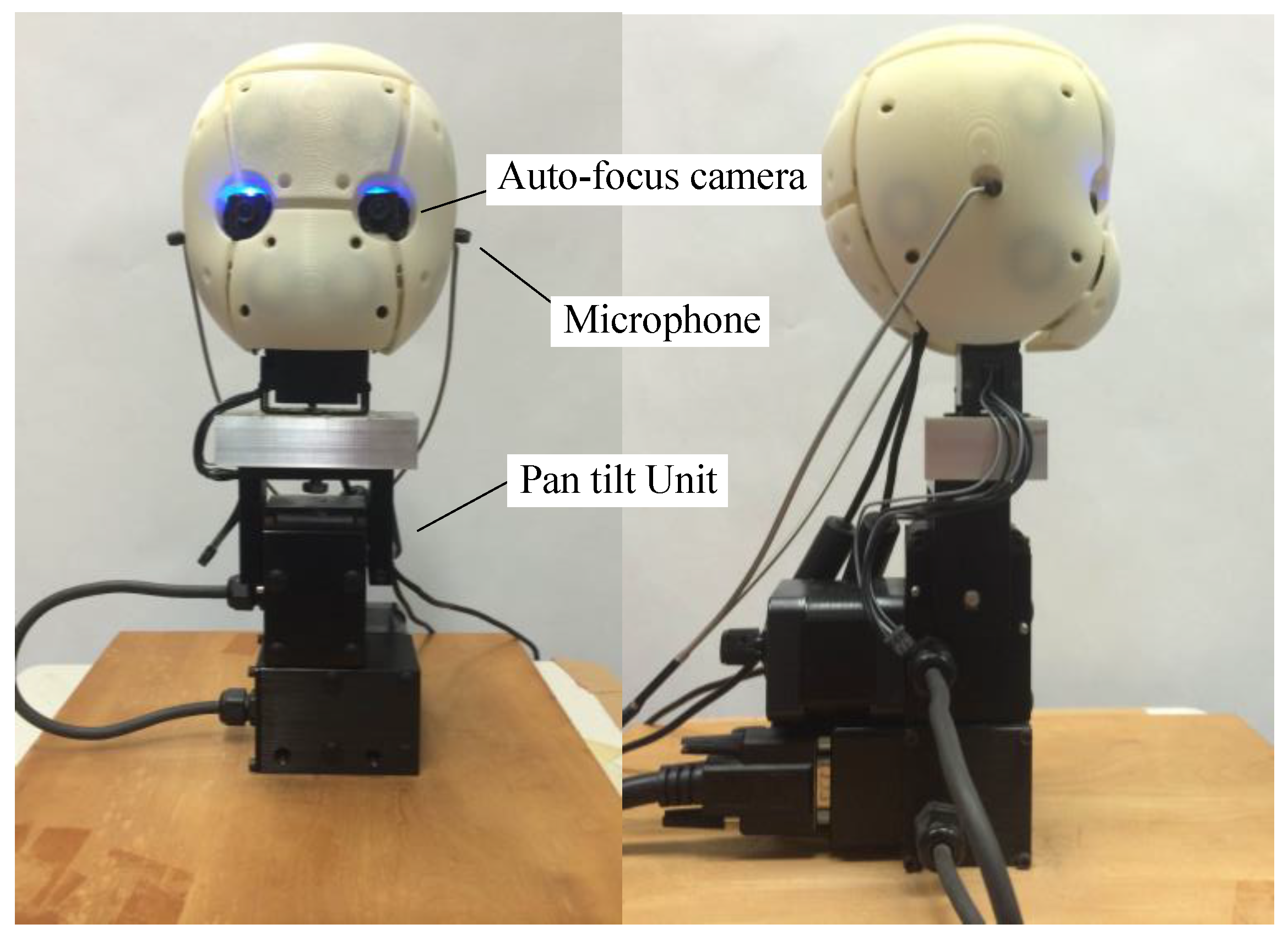

2.1. Ego-Centric Distance Estimation by Robot Vision

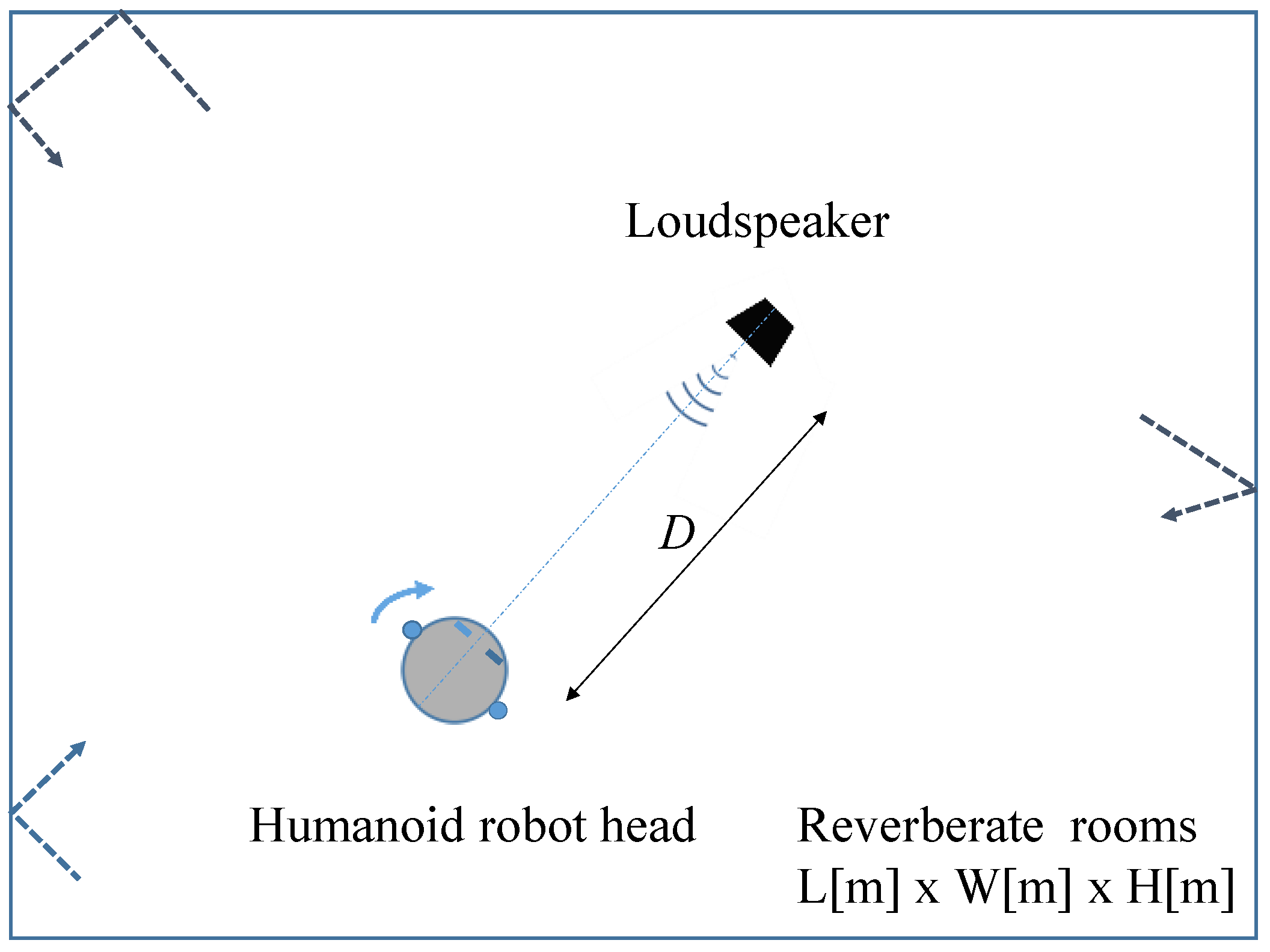

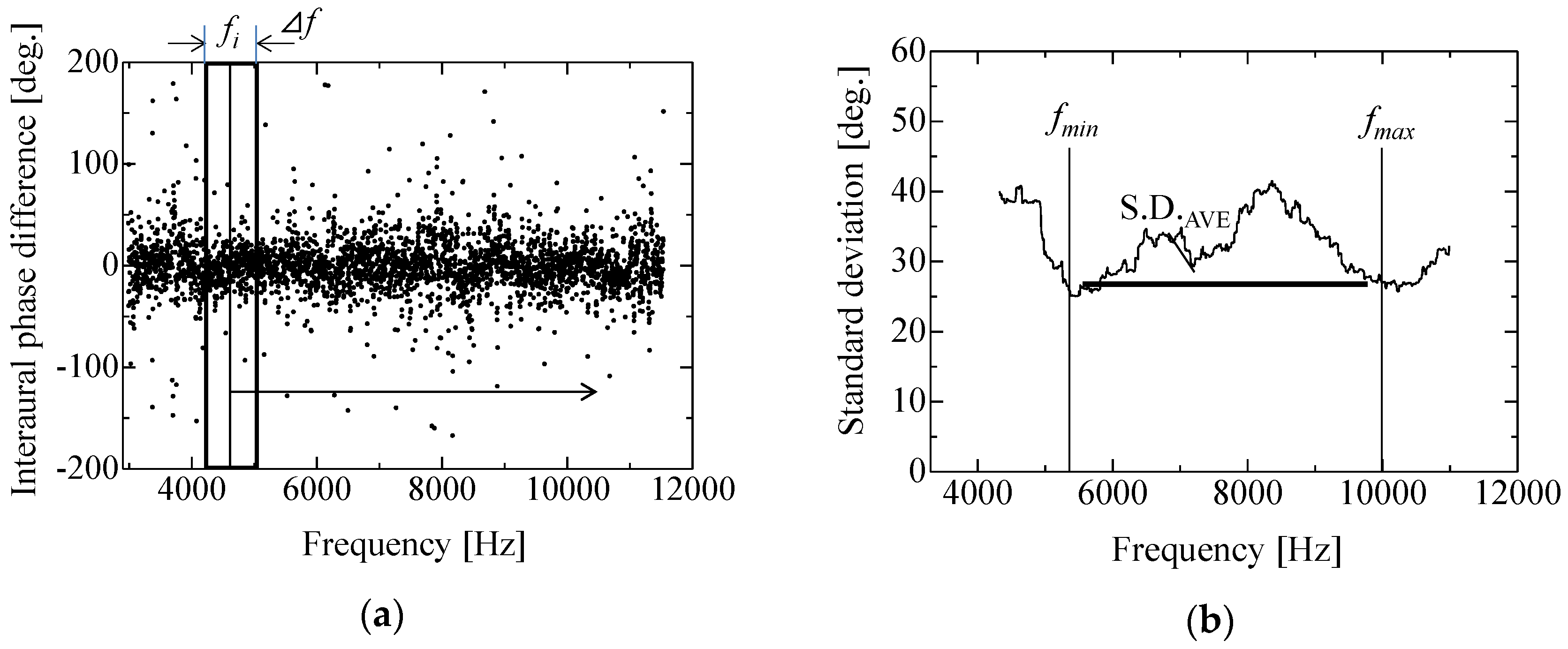

2.2. Estimation of Statistical Properties of Sound by Binaural Audition

2.3. Procedures

- Sound is generated in the room.

- The sound source is localized with binaural signals by using the localization algorithm CAVSPAC [21]. The robot head is rotated toward the sound source. Though there is ambiguity in the sound source’s position in terms of front or back, the robot can distinguish front from back by rotating its head several times.

- The object is recognized by robot vision. The robot adjusts its head angle horizontally and vertically to satisfy ε < 0.5 degrees in Equation (2). This threshold value was based on the results of preliminary measurements.

- The ego-centric distance is calculated by Equation (1). The error of the estimated ego-centric distance is below 0.02 m over the range from 0.5 m to 1.5 m after correction.

- Binaural sound signals are measured during the measuring time frame. The cross-power spectral phase is calculated by Equations (3) and (4).

- The frequency spectrum of the standard deviation is obtained by Equation (5).

- The average standard deviation, S.D.AVE, is calculated by Equation (6) to evaluate the reverberation.

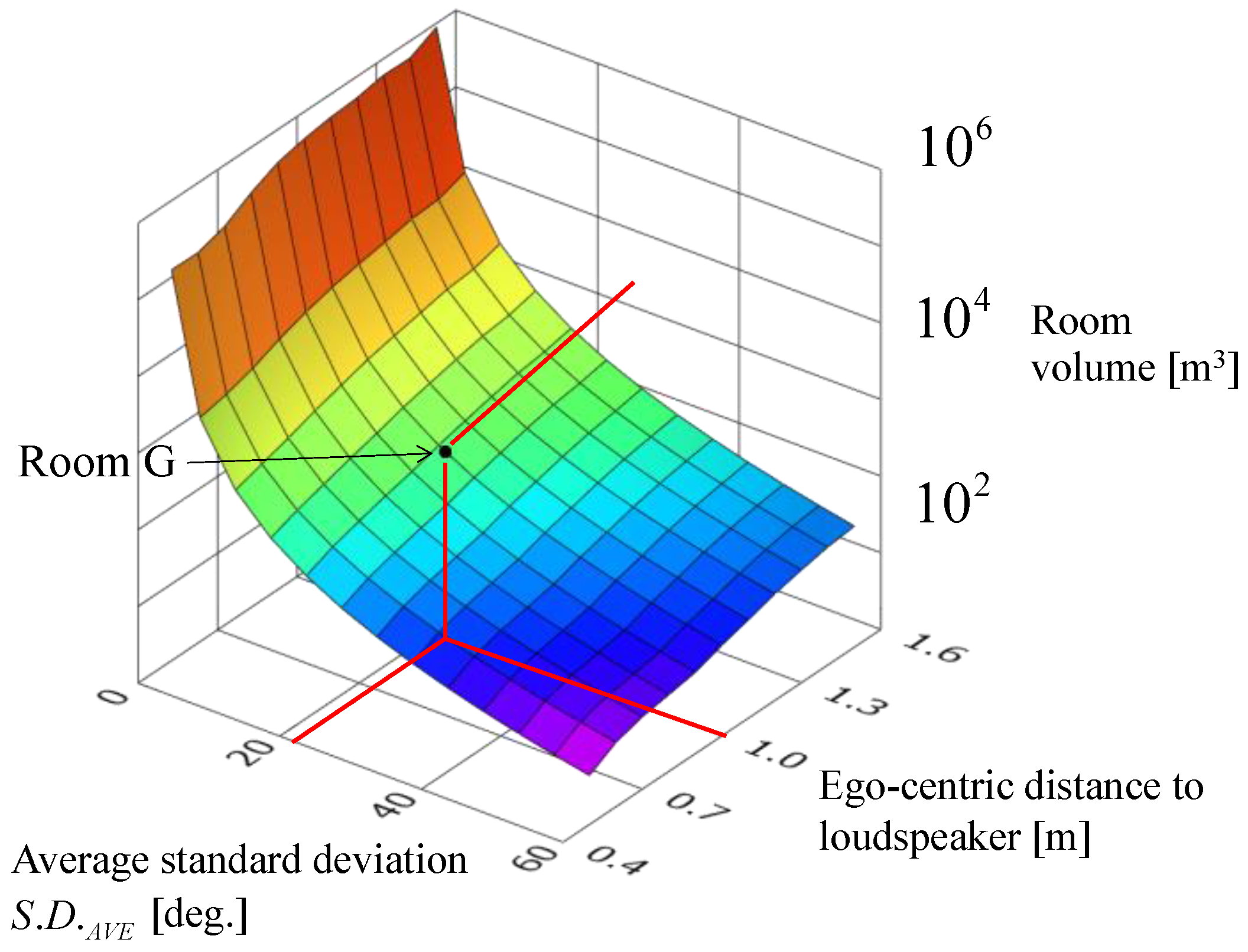

- The room volume is estimated by referring to the database, which contains the relations between the average standard deviation, the ego-centric distance, and the room volume, experimentally defined in advance.
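The audio steps of this procedure can be sketched in a few lines. Equations (3) to (6) are not reproduced in this text, so the sketch below assumes common definitions: the short-term IPD is the phase of the cross-power spectrum of the left and right signals in each analysis frame, the standard deviation is taken across frames for each frequency bin, and S.D.AVE is the mean of that spectrum over a frequency band. The function name `sd_ave`, the band limits, the frame length, and the hop size are all hypothetical choices, not values taken from the paper.

```python
import numpy as np


def sd_ave(left, right, fs, f_lo=200.0, f_hi=4000.0, frame_len=1024, hop=512):
    """Average standard deviation of short-term IPDs over a frequency band.

    Assumed definitions; every default parameter here is hypothetical.
    """
    left = np.asarray(left, dtype=float)
    right = np.asarray(right, dtype=float)
    window = np.hanning(frame_len)
    n_frames = 1 + (len(left) - frame_len) // hop
    ipd = np.empty((n_frames, frame_len // 2 + 1))
    for i in range(n_frames):
        seg = slice(i * hop, i * hop + frame_len)
        spec_l = np.fft.rfft(window * left[seg])
        spec_r = np.fft.rfft(window * right[seg])
        # Phase of the cross-power spectrum: the short-term IPD of this frame,
        # wrapped to (-pi, pi].
        ipd[i] = np.angle(spec_l * np.conj(spec_r))
    # Standard deviation across frames, one value per frequency bin.
    sd = np.std(ipd, axis=0)
    # Average the standard-deviation spectrum over the chosen band.
    freqs = np.fft.rfftfreq(frame_len, d=1.0 / fs)
    band = (freqs >= f_lo) & (freqs <= f_hi)
    return float(sd[band].mean())
```

With identical left and right signals the IPD is zero in every frame, so the result is close to zero; with two independent noise signals the phase is scattered over the whole wrapped range and the result is large. Note that this sketch takes a plain standard deviation of wrapped phase values, which is a simplification.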

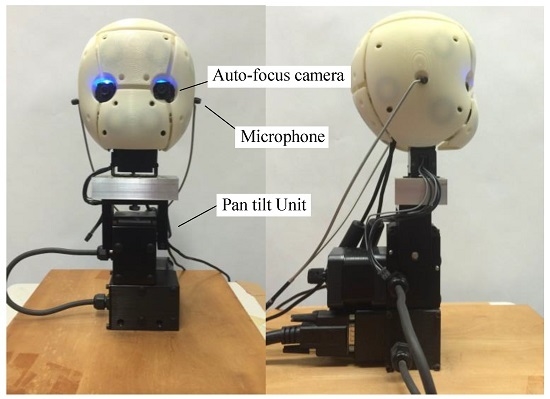

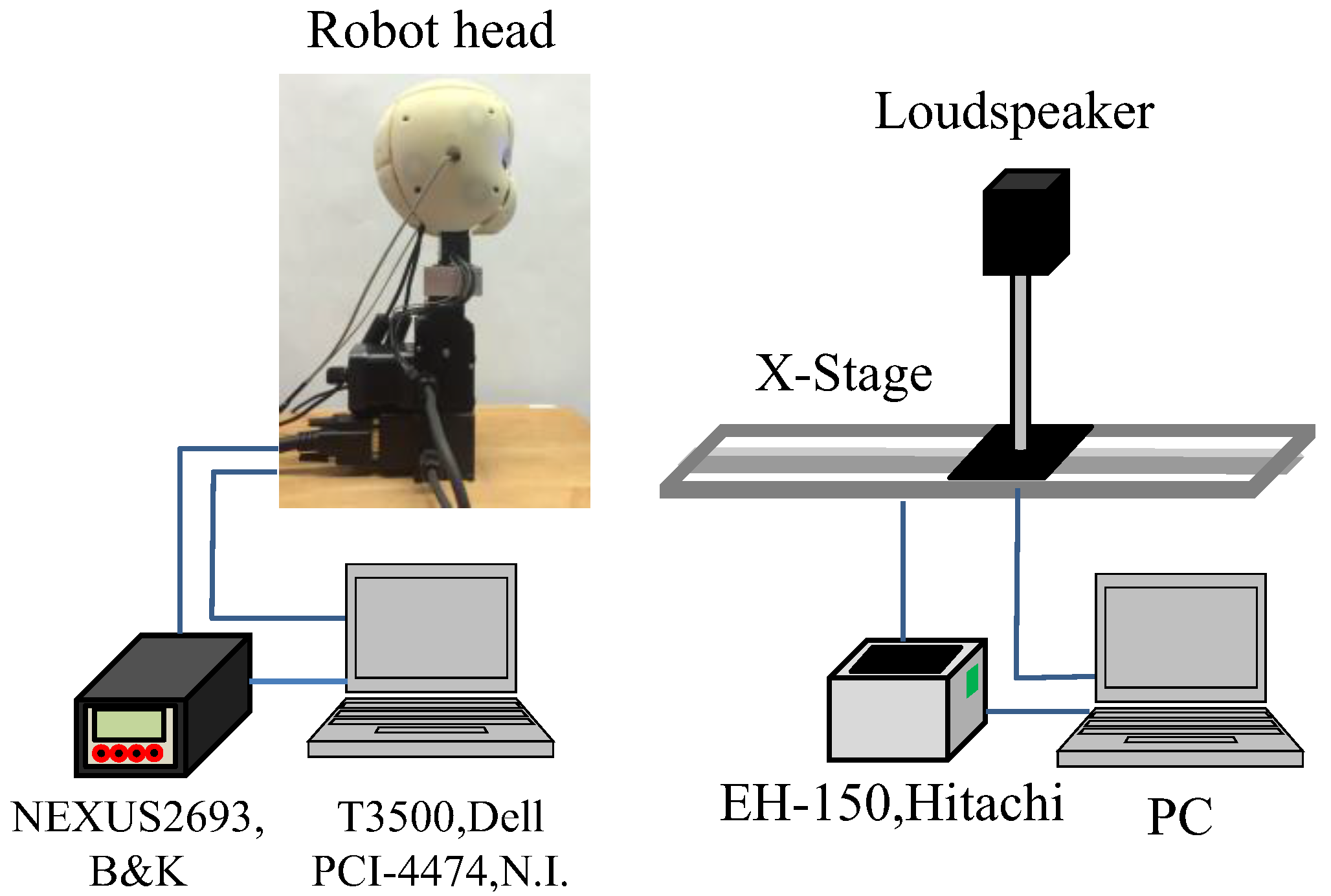

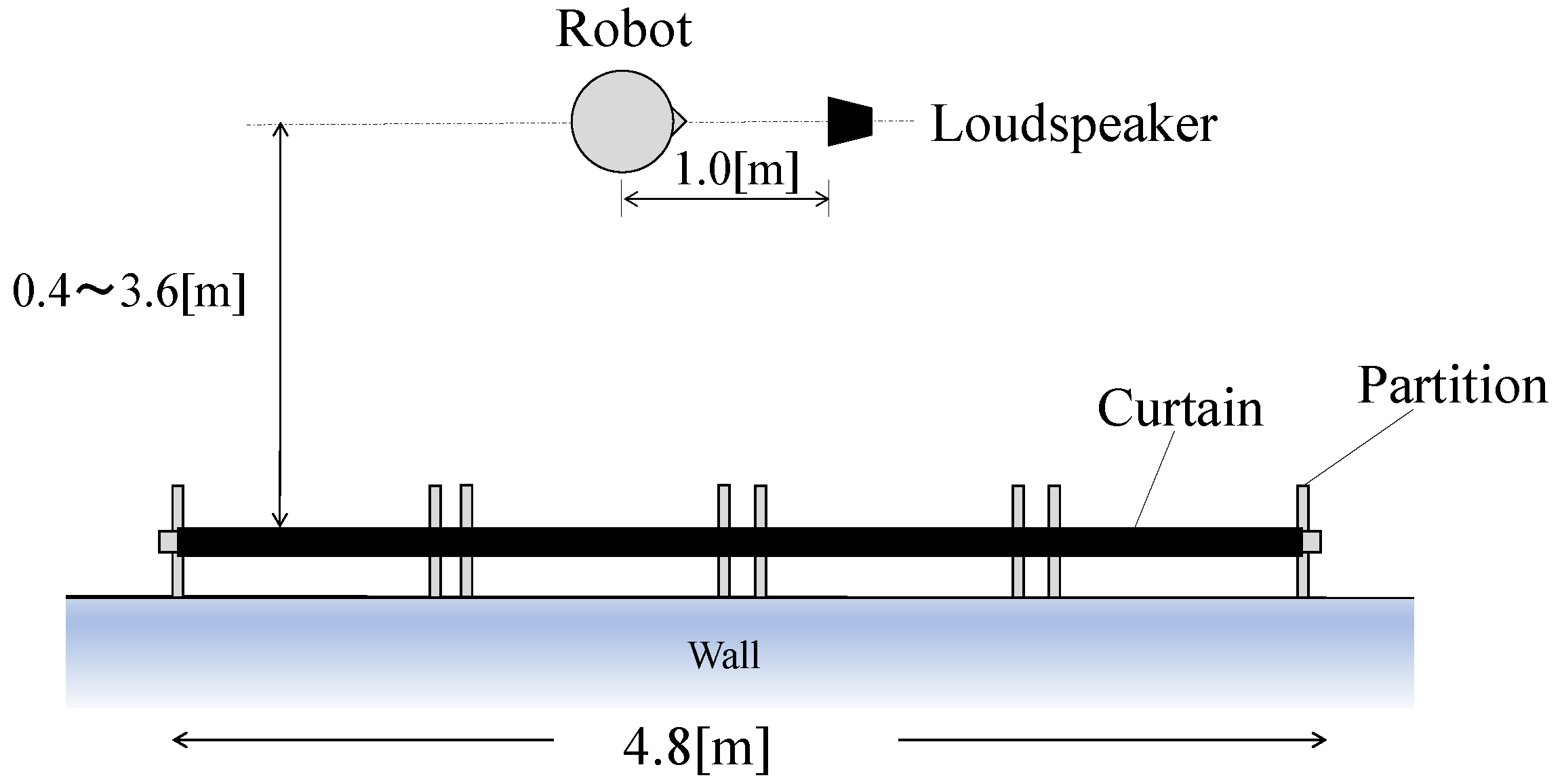

2.4. Experimental Measuring System

3. Experimental Results

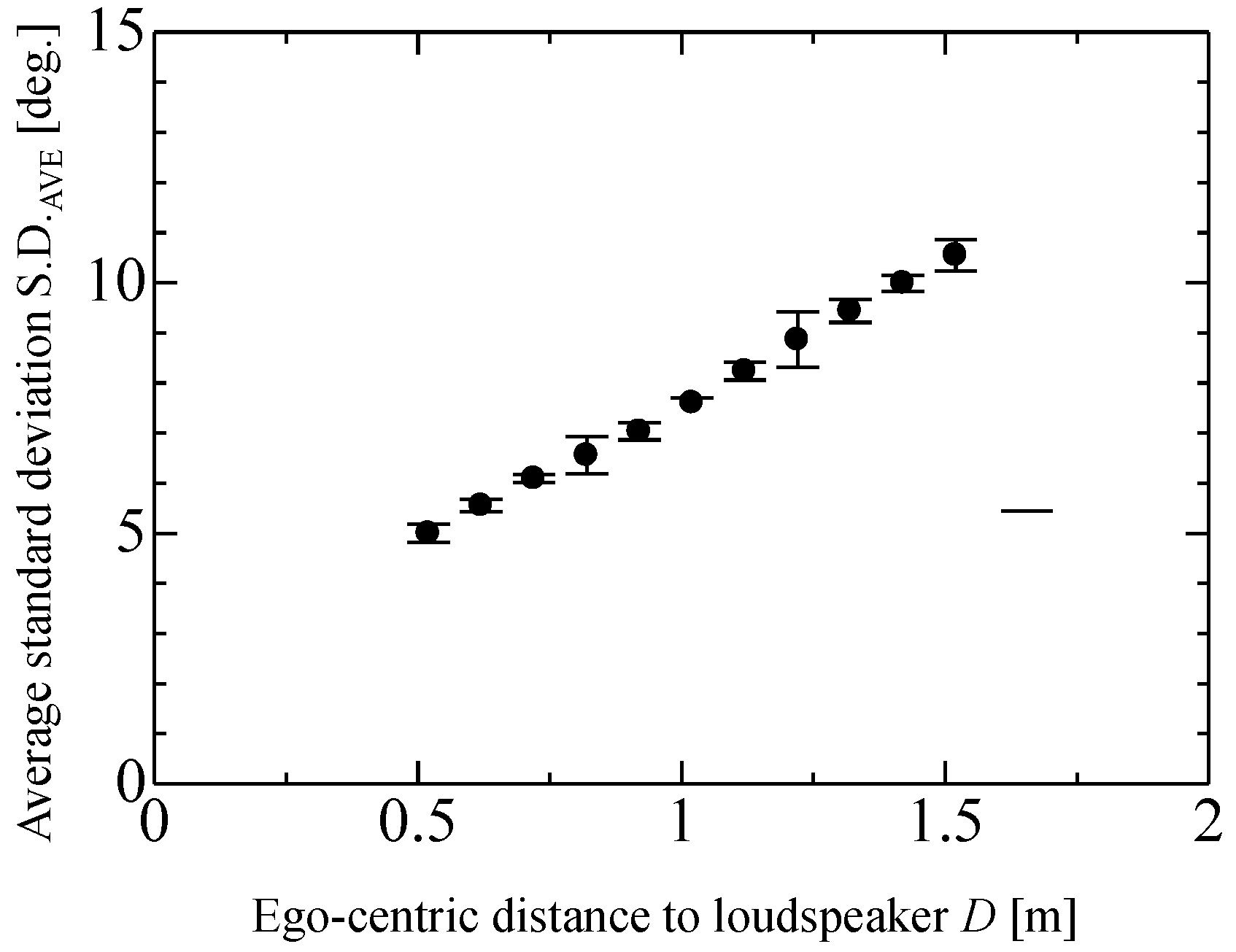

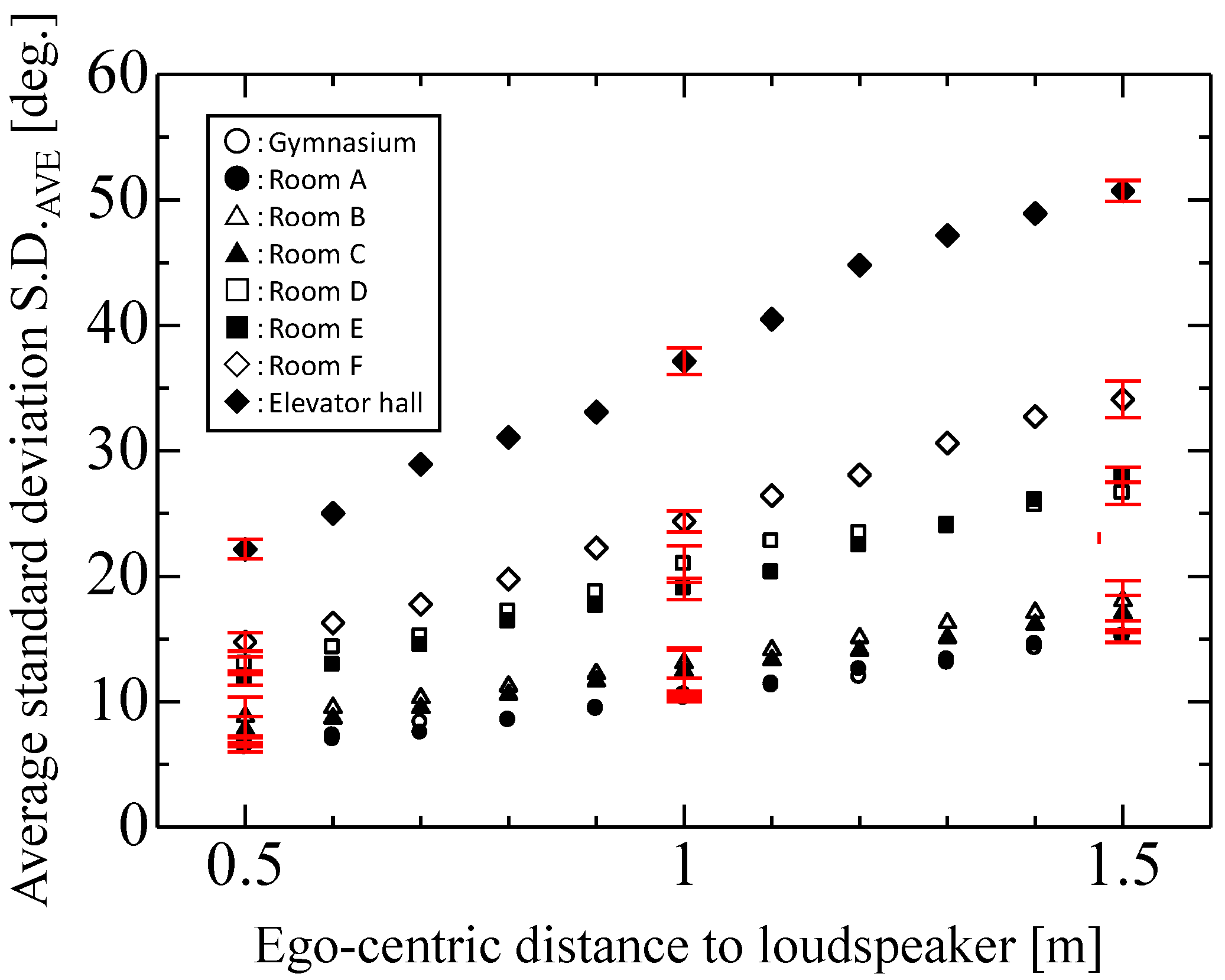

3.1. Statistical Properties of IPDs in Short-Term Frequency Analysis

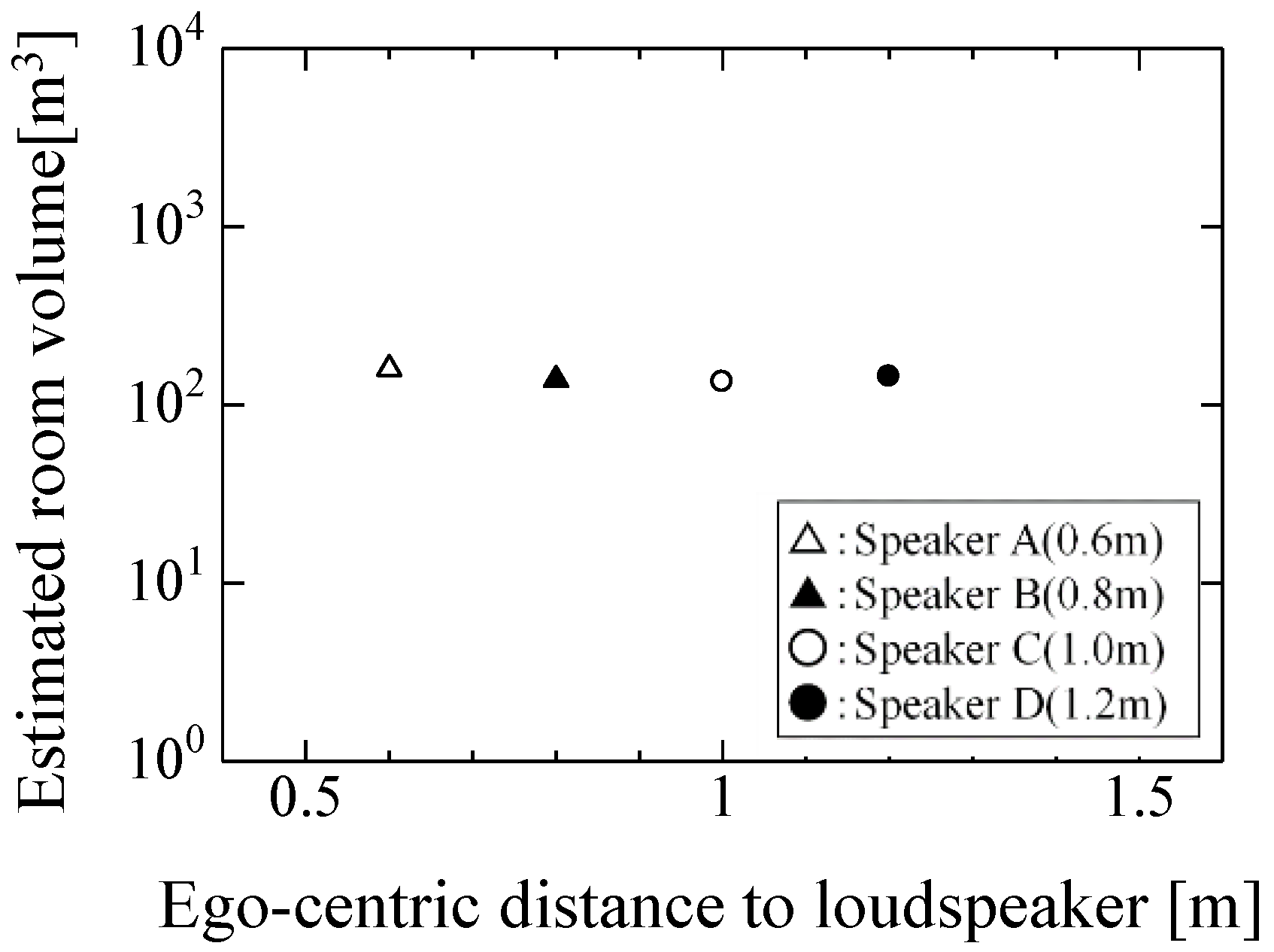

3.2. Estimation of Test Room Volume

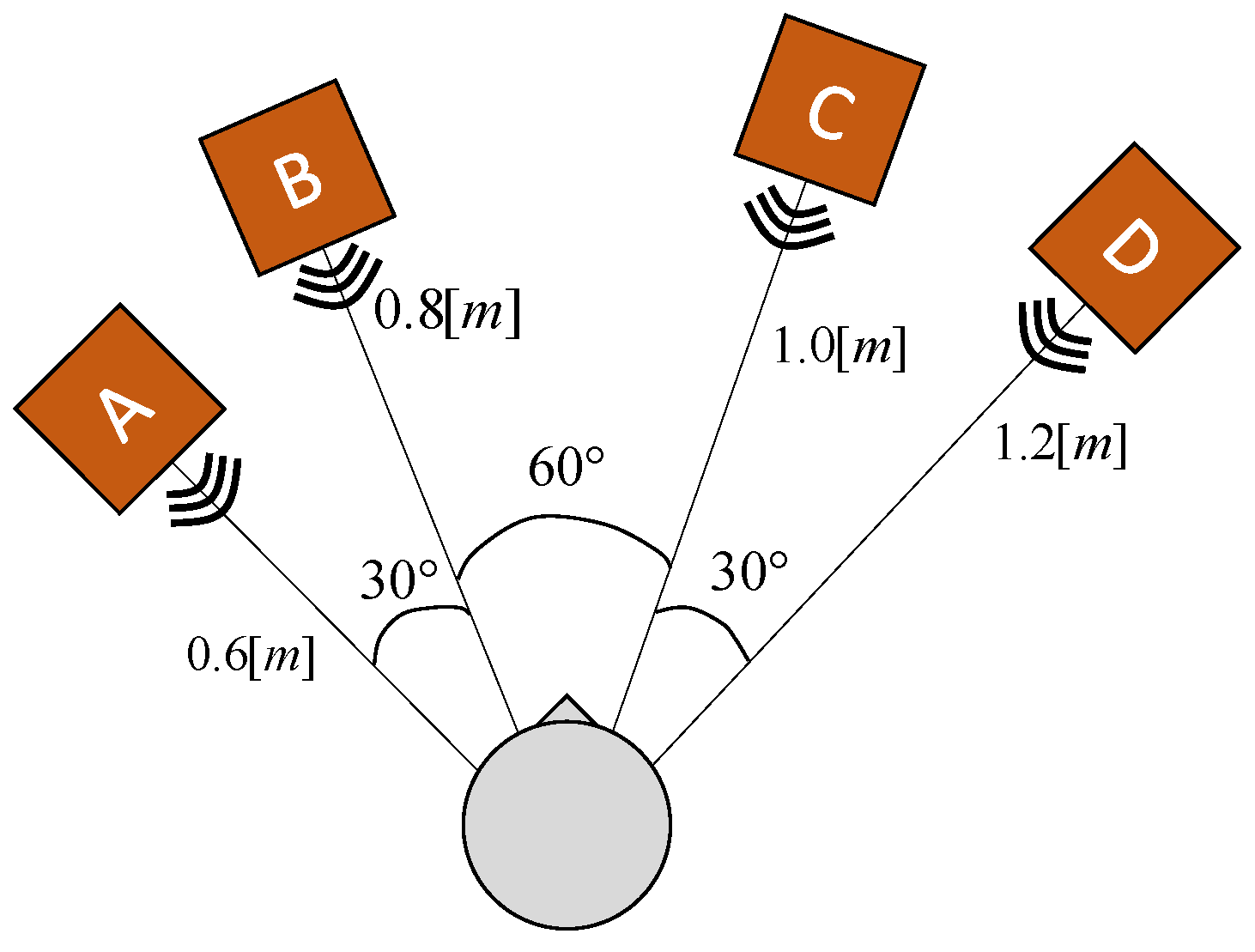

3.3. Effect of Surrounding Obstacles on the Room Volume Estimation

4. Discussion

5. Conclusions

- (1) Short-term IPDs are ambiguous, unrepeatable, and unreliable for distant sound sources. The average standard deviation of short-term IPDs (S.D.AVE) is more repeatable and reliable than the IPDs themselves, even in reverberant rooms.

- (2) The proposed average standard deviation is proportional to the ego-centric distance to the sound source. The slope of S.D.AVE with respect to the ego-centric distance depends on the room volume.

- (3) The average standard deviation of short-term IPDs depends on the volume of the room. Sound reflected not only from the floor and the ceiling but also from the far wall may have a non-negligible effect on the average standard deviation.

- (4) The average standard deviation of short-term IPDs increases as the robot gets closer to surrounding obstacles, such as a side wall, partitions, or a curtain. Thus, the room volume is underestimated near such obstacles.

- (5) For eight rooms with different volumes, the robot could categorize them into four sizes, namely, small, middle, intermediate, and large, using the average standard deviation values of short-term IPDs.
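Conclusions (2) and (5) together suggest a simple lookup: fit the slope of S.D.AVE against ego-centric distance, then pick the room category whose reference slope is closest. The sketch below illustrates that idea only. The names `fit_slope`, `classify_room`, and `REFERENCE_SLOPES` are hypothetical, the reference slopes are made-up placeholders rather than measured values, and the actual database format used by the authors is not described in this text.

```python
# Placeholder reference slopes (S.D.AVE per metre) for the four categories.
# These numbers are NOT from the paper; they only make the example runnable.
REFERENCE_SLOPES = {
    "small": 0.8,
    "middle": 0.6,
    "intermediate": 0.4,
    "large": 0.2,
}


def fit_slope(distances_m, sd_ave_values):
    """Least-squares slope of a line through the origin (S.D.AVE = k * d)."""
    num = sum(d * s for d, s in zip(distances_m, sd_ave_values))
    den = sum(d * d for d in distances_m)
    return num / den


def classify_room(distances_m, sd_ave_values, reference=REFERENCE_SLOPES):
    """Return the category whose reference slope is nearest the fitted slope."""
    slope = fit_slope(distances_m, sd_ave_values)
    return min(reference, key=lambda name: abs(reference[name] - slope))
```

Fitting through the origin reflects the stated proportionality between S.D.AVE and distance; a real database would presumably be built from measurements in rooms of known volume, as described in Section 2.3.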

Author Contributions

Conflicts of Interest

References

- Nakamura, K.; Nakadai, K.; Asano, F.; Hasegawa, Y.; Tsujino, H. Intelligent sound source localization for dynamic environments. In Proceedings of the 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MO, USA, 10–15 October 2009; pp. 664–669.

- Asano, F.; Asoh, H.; Nakadai, K. Sound source localization using joint Bayesian estimation with a hierarchical noise model. IEEE Trans. Audio Speech Lang. Proc. 2013, 21, 1953–1965.

- Shabtai, N.R.; Zigel, Y.; Rafaely, B. Estimating the room volume from room impulse response via hypothesis verification approach. In Proceedings of the 2009 IEEE/SP 15th Workshop on Statistical Signal Processing, Cardiff, UK, 31 August–3 September 2009; pp. 717–720.

- Kuster, M. Reliability of estimating the room volume from a single room impulse response. J. Acoust. Soc. Am. 2008, 124, 982–993.

- Kearney, G.; Masterson, C.; Adams, S.; Boland, F. Approximation of binaural room impulse responses. Proc. ISSC 2009, 2009, 1–6.

- Jeub, M.; Schafer, M.; Vary, P. A binaural room impulse response database for the evaluation of dereverberation algorithms. In Proceedings of the 2009 16th International Conference on Digital Signal Processing, Santorini, Greece, 5–7 July 2009; pp. 1–5.

- Larsen, E.; Schmitz, C.D. Acoustic scene analysis using estimated impulse responses. Proc. Signals Syst. Comp. 2004, 1, 725–729.

- Shinn-Cunningham, B.G.; Kopco, N.; Martin, T.J. Localizing nearby sound sources in a classroom: Binaural room impulse responses. J. Acoust. Soc. Am. 2005, 117, 3100–3115.

- Shabtai, N.R.; Zigel, Y.; Rafaely, B. Feature selection for room volume identification from room impulse response. In Proceedings of the 2009 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics, New Paltz, NY, USA, 18–21 October 2009.

- Vesa, S. Binaural sound source distance learning in rooms. IEEE Trans. Audio Speech Lang. Proc. 2009, 17, 1498–1507.

- Georganti, E.; May, T.; Par, S.V.D.; Mourjopoulos, J. Sound source distance estimation in rooms based on statistical properties of binaural signals. IEEE Trans. Audio Speech Lang. Proc. 2013, 21, 1727–1741.

- Jetzt, J.J. Critical distance measurement of rooms from the sound energy spectral response. J. Acoust. Soc. Am. 1979, 65, 1204–1211.

- Bronkhorst, A.W.; Houtgast, T. Auditory distance perception in rooms. Nature 1999, 397, 517–520.

- Kuster, M. Estimating the direct-to-reverberant energy ratio from the coherence between coincident pressure and particle velocity. J. Acoust. Soc. Am. 2011, 130, 3781–3787.

- Georganti, E.; Mourjopoulos, J.; Par, S.V.D. Room statistics and direct-to-reverberant ratio estimation from dual-channel signals. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 42–47.

- Eaton, J.; Moore, A.H.; Naylor, P.A.; Skoglund, J. Direct-to-reverberant ratio estimation using a null-steered beamformer. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, Australia, 19–24 April 2015; pp. 46–50.

- Hioka, Y.; Niwa, K. Estimating direct-to-reverberant ratio mapped from power spectral density using deep neural network. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 149–152.

- Hu, J.S.; Liu, W.H. Location classification of nonstationary sound sources using binaural room distribution patterns. IEEE Trans. Audio Speech Lang. Proc. 2009, 17, 682–692.

- Nix, J.; Hohmann, V. Sound source localization in real sound fields based on empirical statistics of interaural parameters. J. Acoust. Soc. Am. 2006, 119, 463–479.

- Kido, K.; Suzuki, H.; Ono, T. Deformation of impulse response estimates by time window in cross spectral technique. J. Acoust. Soc. Jpn. (E) 1998, 19, 249–361.

- Shimoyama, R.; Yamazaki, K. Computational acoustic vision by solving phase ambiguity confusion. Acoust. Sci. Tech. 2009, 30, 199–208.

- Hartmann, W.M.; Rakerd, B.; Koller, A. Binaural coherence in rooms. Acta Acust. United Acust. 2005, 91, 451–462.

- Shimoyama, R.; Fukuda, R. Room volume estimation based on statistical properties of binaural signals using humanoid robot. In Proceedings of the 23rd International Conference on Robotics in Alpe-Adria-Danube Region (RAAD), Smolenice, Slovakia, 3–5 September 2014; pp. 1–6.

| Room | Room Dimensions L [m] × W [m] × H [m] | Room Volume [m³] |
|---|---|---|
| Gymnasium | 26.0 × 39.0 × 11.7 | 119 × 10² |
| Lecture room A | 20.1 × 14.7 × 3.3 | 971 |
| Lecture room B | 14.3 × 17.6 × 3.0 | 755 |
| Lecture room C | 14.1 × 17.1 × 3.0 | 726 |
| Lecture room D | 9.0 × 13.6 × 3.0 | 367 |
| Lecture room E | 8.5 × 13.6 × 3.0 | 347 |
| Lecture room F | 5.7 × 7.6 × 2.6 | 113 |
| Elevator hall | 7.8 × 3.6 × 3.0 | 70 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Shimoyama, R.; Fukuda, R. Room Volume Estimation Based on Ambiguity of Short-Term Interaural Phase Differences Using Humanoid Robot Head. Robotics 2016, 5, 16. https://doi.org/10.3390/robotics5030016