Design and Evaluation of a Sound-Driven Robot Quiz System with Fair First-Responder Detection and Gamified Multimodal Feedback

Abstract

1. Introduction

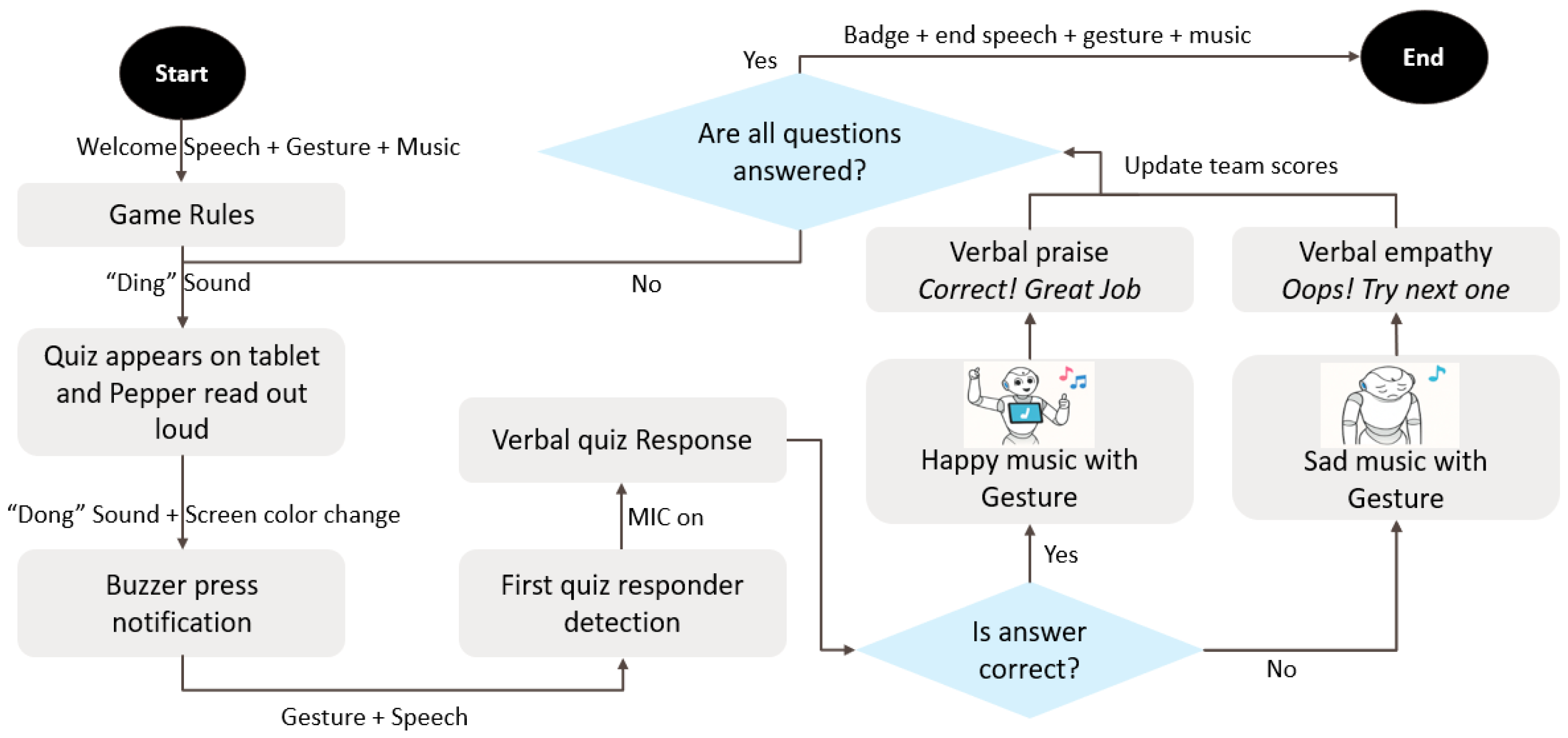

- Artefact A (Experimental Prototype): A robot-led quiz system featuring sound-driven first responder detection (using cross-correlation), multimodal feedback (gesture, music, speech), and gamification elements (points, badges).

- Artefact B (Baseline Prototype): A robot-led quiz system with sequential turn-taking, verbal-only feedback, and no gamification.

- RQ: How does gamified multimodal feedback combined with sound-based first responder detection compare with verbal-only feedback with sequential response during robot-led quiz activities involving two competing teams in terms of perceived usefulness, ease of use, motivation, social presence, and behavioral intention?

2. Related Work

2.1. Educational Robots in Learning Environments

2.2. Multimodal Interaction and Feedback in HRI

2.3. Fairness and First Responder Detection in Group-Based HRI

2.4. Gamification and the Octalysis Framework

2.5. Evaluation Through Multiscale HRI Instruments

3. System Design

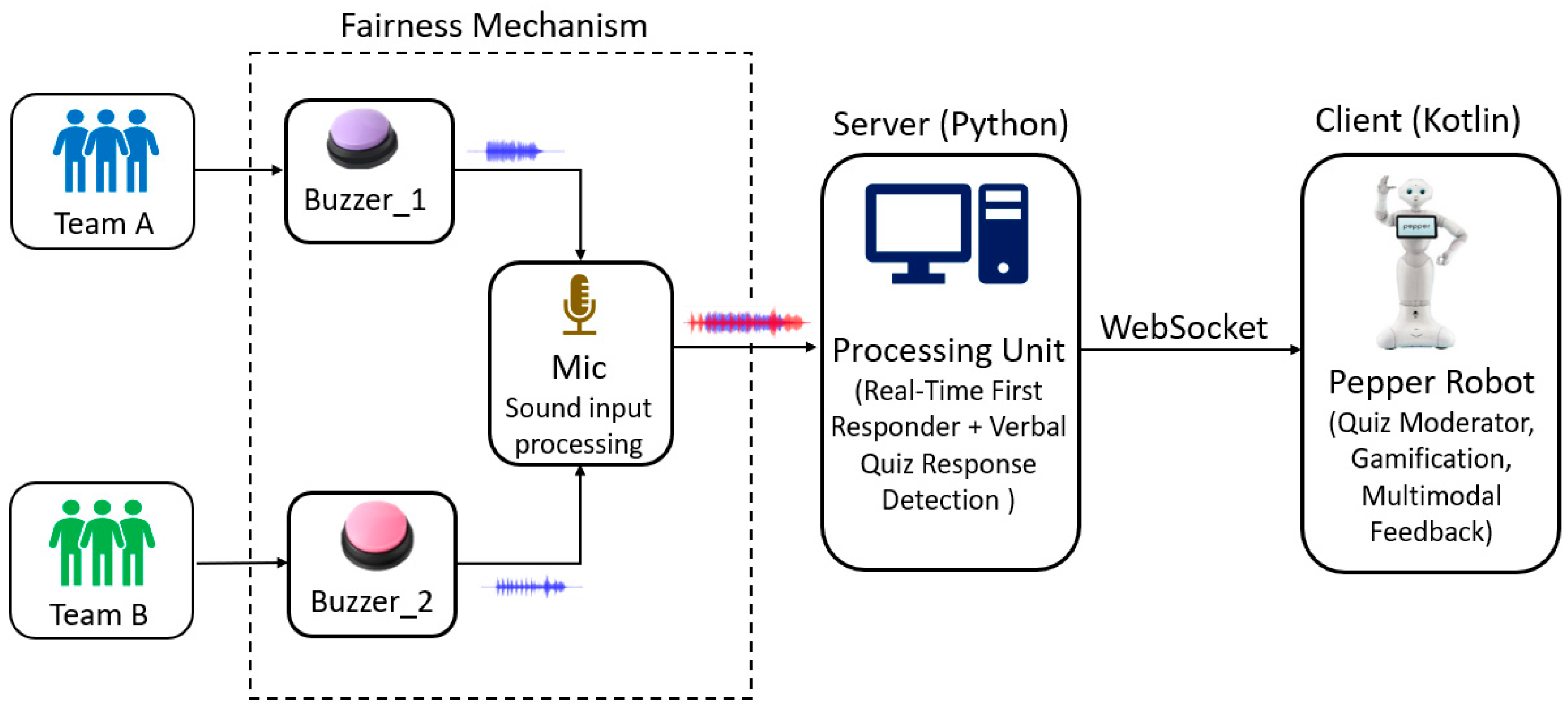

3.1. System Architecture

- A Python (3.12)-based backend responsible for sound order detection, template matching, and interaction logic

- A Kotlin (2.1)-based Pepper application using QiSDK ASR for speech recognition, gesture control, and verbal output
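The sound order detection and template matching handled by the Python backend can be illustrated with a minimal sketch. It assumes each team has a short recorded buzzer template and that the first responder is the team whose template reaches its normalized cross-correlation peak earliest in the recording. The function names `normalized_xcorr` and `first_responder`, the 0.8 threshold, and the toy signals are hypothetical and are not taken from the authors' implementation. A real backend would more likely operate on sampled audio with a numerical library such as NumPy or SciPy.

```python
import math


def normalized_xcorr(signal, template):
    """Normalized cross-correlation score at every lag where the template fits."""
    m = len(template)
    t_mean = sum(template) / m
    t_centered = [x - t_mean for x in template]
    t_norm = math.sqrt(sum(x * x for x in t_centered))
    scores = []
    for lag in range(len(signal) - m + 1):
        window = signal[lag:lag + m]
        w_mean = sum(window) / m
        w_centered = [x - w_mean for x in window]
        w_norm = math.sqrt(sum(x * x for x in w_centered))
        if t_norm == 0 or w_norm == 0:
            # A flat window or flat template carries no shape information.
            scores.append(0.0)
        else:
            dot = sum(a * b for a, b in zip(w_centered, t_centered))
            scores.append(dot / (w_norm * t_norm))
    return scores


def first_responder(signal, templates, threshold=0.8):
    """Return (team, onsets): the team whose template peak occurs earliest.

    `threshold` is an assumed minimum peak score for accepting a match.
    Onsets are sample indices; divide by the sample rate to get seconds.
    """
    onsets = {}
    for team, template in templates.items():
        scores = normalized_xcorr(signal, template)
        if not scores:
            continue
        best_lag = max(range(len(scores)), key=scores.__getitem__)
        if scores[best_lag] >= threshold:
            onsets[team] = best_lag
    if not onsets:
        return None, onsets
    return min(onsets, key=onsets.get), onsets


# Toy example: team_b's buzzer starts at sample 10, team_a's at sample 20.
demo_templates = {
    "team_a": [0, 1, 0, -1, 0, 1, 0, -1],
    "team_b": [1, 1, -1, -1, 1, 1, -1, -1],
}
demo_signal = [0.0] * 50
demo_signal[10:18] = demo_templates["team_b"]
demo_signal[20:28] = demo_templates["team_a"]
demo_winner, demo_onsets = first_responder(demo_signal, demo_templates)
```

In this toy recording `demo_winner` is `"team_b"`, because its template peaks at sample 10 while team A's peaks at sample 20. Normalizing each window makes the score insensitive to loudness, which is one plausible way to keep detection from favoring the louder team.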

3.2. Sound-Based First Responder Detection

3.3. Gamification via Octalysis Integration

3.4. Feedback and Interaction Modalities

4. Experimental Design

4.1. Study Design and Conditions

4.2. Participants

4.3. Procedure and Evaluation Criteria

4.4. Data Analysis

5. Results

6. Discussion

6.1. Fairness Validation by Participants

6.2. Design Implications and Technical Considerations

7. Conclusions, Limitations, and Future Work

7.1. Limitations

7.2. Future Work

- Robust multimodal fusion combining sound, Bluetooth signals, and gesture input to reduce dependency on microphone placement.

- Adaptive ASR tuning, particularly to improve recognition of softer voices and female participants, thereby ensuring inclusivity.

- Standardized GUI design principles to minimize bias and improve usability across diverse learner groups.

- Involve larger and more diverse populations, including younger students, neurodivergent learners, and cross-cultural cohorts.

- Conduct longitudinal evaluations to measure learning outcomes, motivation, retention, and long-term system acceptance.

- Explore extended gamification strategies, such as progressive difficulty, storytelling, or cooperative challenges, to sustain engagement over repeated sessions.

- Investigate the role of fairness perception more systematically, integrating synchronized audiovisual logging to formally validate responder detection accuracy alongside user perception.

7.3. Final Remark

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| HRI | Human–Robot Interaction |
| TAM | Technology Acceptance Model |
| IMI | Intrinsic Motivation Inventory |
| QiSDK | QI Software Development Kit (SDK) for NAO and Pepper robots by SoftBank Robotics |
| ASR | Automatic Speech Recognition |

Appendix A

Appendix A.1. Technology Acceptance Model (TAM) Subscales and Items

| Subscales | Items |
|---|---|
| Perceived Usefulness (PU) | |
| Perceived Ease of Use (PEOU) | |
| Behavioral Intention (BI) | |

Appendix A.2. Intrinsic Motivation Inventory (IMI) Subscales and Items

| Subscales | Items |
|---|---|
| Interest/Enjoyment | |
| Perceived Competence | |

Appendix A.3. Godspeed Social Presence Subscales and Items

| Subscales | Items |
|---|---|
| Likeability | |
| Anthropomorphism | |


| Core Drive | Description | Score (A) * | Score (B) * | Δ | Justification |
|---|---|---|---|---|---|
| Epic Meaning & Calling | Feeling of contributing to a bigger goal | 3 | 2 | +1 | Both systems use team competition, but only Artefact A provides team badges and verbal recognition. |
| Development & Accomplishment | Progress through points and achievements | 5 | 1 | +4 | Artefact A awards real-time points and badges; Artefact B offers no visible achievement. |
| Empowerment of Creativity & Feedback | Making meaningful choices or expressing individuality | 4 | 1 | +3 | Artefact A lets users record custom buzzer sounds; Artefact B has no personalization. |
| Ownership & Possession | Emotional investment via team identity or rewards | 4 | 1 | +3 | Artefact A reinforces team identity through badges and scores. |
| Social Influence & Relatedness | Peer collaboration or recognition | 3 | 2 | +1 | Artefact A enables simultaneous team interaction; Artefact B is sequential and less social. |
| Scarcity & Impatience | Urgency or time pressure to act quickly | 5 | 0 | +5 | Artefact A rewards the fastest responder; Artefact B lacks time-based interaction. |
| Unpredictability & Curiosity | Surprise elements, randomness | 3 | 1 | +2 | Artefact A offers variable feedback (music, gesture); Artefact B does not. |
| Loss & Avoidance | Avoiding failure or missing rewards | 3 | 1 | +2 | Artefact A uses sad music and gestures for incorrect answers; Artefact B gives neutral feedback. |

| Subscale | Control Mean (SD) | Experimental Mean (SD) | t(df) | p-Value | Cohen’s d | Result Summary |
|---|---|---|---|---|---|---|
| Perceived Usefulness | 3.05 (0.81) | 4.32 (0.83) | 6.05 | 0.01 | 2.14 | Significant, large effect |
| Perceived Ease of Use | 3.17 (0.71) | 4.03 (0.92) | 4.07 | <0.001 | 1.43 | Significant, large effect |
| Motivation | 3.39 (0.36) | 4.48 (0.34) | 6.96 | <0.001 | 3.11 | Significant, very large effect |
| Social Presence | 3.36 (0.70) | 3.70 (0.62) | 2.17 | 0.03 | 0.48 | Significant, medium effect |
| Behavioral Intention | 3.28 (0.80) | 4.24 (0.83) | 4.58 | <0.001 | 1.62 | Significant, large effect |
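As a reading aid for the Cohen’s d column, the sketch below computes a standardized mean difference from the reported means and standard deviations. It assumes equal group sizes and the simple pooled-SD form, neither of which is stated in the table, and the function name `cohens_d` is hypothetical. Under that assumption the Motivation row gives a value of about 3.11, in line with the table; other rows may not reproduce exactly, since the group sizes and the formula the authors used are not given here.

```python
import math


def cohens_d(mean_control, sd_control, mean_experimental, sd_experimental):
    """Cohen's d using a pooled SD, ASSUMING both groups have the same size."""
    pooled_sd = math.sqrt((sd_control ** 2 + sd_experimental ** 2) / 2)
    return (mean_experimental - mean_control) / pooled_sd


# Motivation row: control 3.39 (0.36), experimental 4.48 (0.34).
motivation_d = cohens_d(3.39, 0.36, 4.48, 0.34)
```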

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tutul, R.; Pinkwart, N. Design and Evaluation of a Sound-Driven Robot Quiz System with Fair First-Responder Detection and Gamified Multimodal Feedback. Robotics 2025, 14, 123. https://doi.org/10.3390/robotics14090123
