1. Introduction

Machine learning (ML) is a rapidly growing branch of artificial intelligence. ML algorithms typically learn from large datasets, which must be appropriately categorized and processed. For example, the authors in [1,2,3] discussed the classification of machine learning-based schemes in healthcare, categorizing them according to data preprocessing and learning methods. They emphasized that ML has potential; however, selecting appropriate data from which the algorithms learn remains an open issue. The paper of [4] argued that building medical databases relies on many hundreds of images (for example) of a single case, and noted that this will stimulate collaboration between scientists even more than it does today. The authors stated that “Machine learning fuelled by the right data has the power to transform the development of breakthrough, new medicines and optimise their use in patient care”. Another example [5] is a review of articles on the use of artificial intelligence as a tool in higher education. In automation, machine learning is used especially in robotics. An interesting example of combining machine learning with inverse kinematics is the work on the motion simulation of an industrial robot, whose results clearly showed a reduction in development time and investment [6,7].

This paper focuses on the applications of machine learning methods in mobile robots. A very interesting overview of these techniques was presented in [8], where ML was applied to the classification of defects in wheeled mobile robots. Several techniques, i.e., random forest, support vector machine, artificial neural network, and recurrent neural network, were investigated in that research. The authors stated that machine learning can be applied to improve the performance of mobile robots, thereby allowing them to save energy by waiting close to the point where work orders arrive most often. An algorithm based on reinforcement learning was proposed for mobile robots in [9]. This algorithm discretizes obstacle information and target direction information into a finite set of states, uses a continuous reward function, and improves training performance. The algorithm was tested in a simulation environment and on a real robot [10]. Another study used deep reinforcement learning to train primitive skills, such as go-to-ball and shoot-the-goalie, on real robots [11]. The study presented the state and action representations, the reward, and the network architecture, and showed good performance on real robots. An improved genetic algorithm was used for path planning in mobile robots, addressing problems such as path smoothness and large control angles [12]. Finally, a binary classifier and positioning error compensation model combining the genetic algorithm and the extreme learning machine was proposed for the indoor positioning of mobile robots.

Many authors report very satisfactory results when applying ML methods to mobile robot problems. For example, in the work of [13], the best results for mobile robots were obtained using Central Moments as a feature extractor and Optimum Path Forest as a classifier, with an accuracy of 96.61%. The authors of [9] stated that the developed algorithm for autonomous mobile robots in industrial areas enabled the execution of work orders with 100% accuracy. In [14], the proposed model was based on the Extreme Learning Machine-based Genetic Algorithm (GA-ELM), which achieved a 71.32% reduction in the positioning error of mobile robots without signal interference. These are just a few examples of recent research applying different ML approaches in robotics.

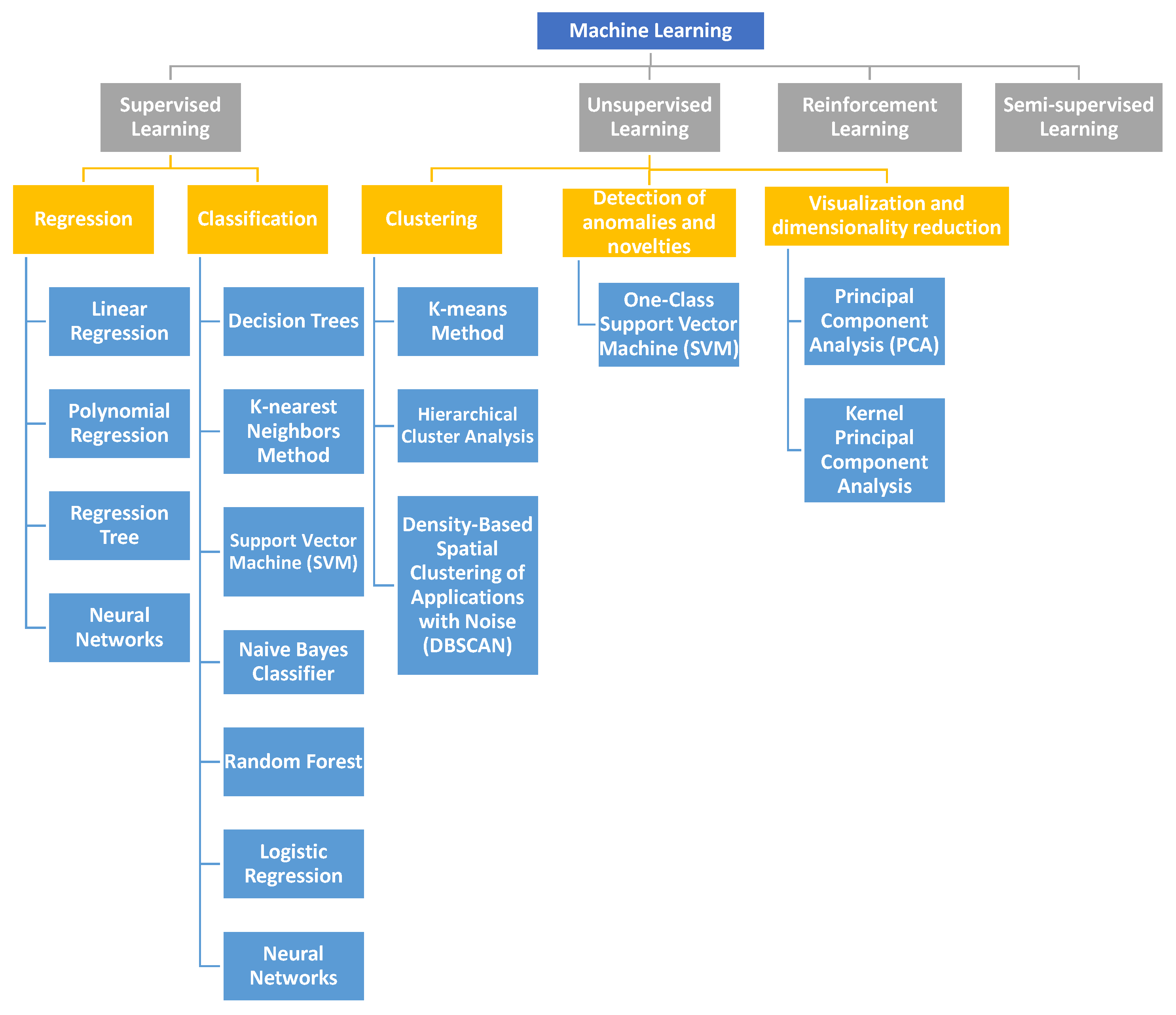

As shown in Figure 1, machine learning methods are divided into supervised learning, unsupervised learning, reinforcement learning, and semi-supervised learning.

Robotics is one of the vital, dynamically growing branches of science and technology. It is therefore very difficult to track the development of every topic in this area. Surveys of works on specific aspects of the field can be found in the recent literature. In [15], the authors concentrated on heuristic methods applied to robot path planning in the years 1965–2012. Their study included neural networks (NN), fuzzy logic (FL), and nature-inspired algorithms such as genetic algorithms (GA), particle swarm optimization (PSO), and ant colony optimization (ACO). In [16], the authors presented approaches utilizing artificial intelligence (AI), machine learning (ML), and deep learning (DL) in different tasks of advanced robotics, such as autonomous navigation, object recognition and manipulation, natural language processing, and predictive maintenance. They presented applications of AI, ML, and DL in industrial robots, advanced transportation systems, drones, ship navigation, the aeronautical industry, aviation management, and taxi services. An overview of ML algorithms applied to the control of bipedal robots was presented in [17]. In the work of [18], the authors reviewed Visual SLAM methods based on DL.

Mobile robots can be classified by the type of their motion system, as shown in [19], which distinguishes wheeled mobile robots and walking robots. Examples of mobile robots with both types of motion systems are shown in Figure 2 and Figure 3.

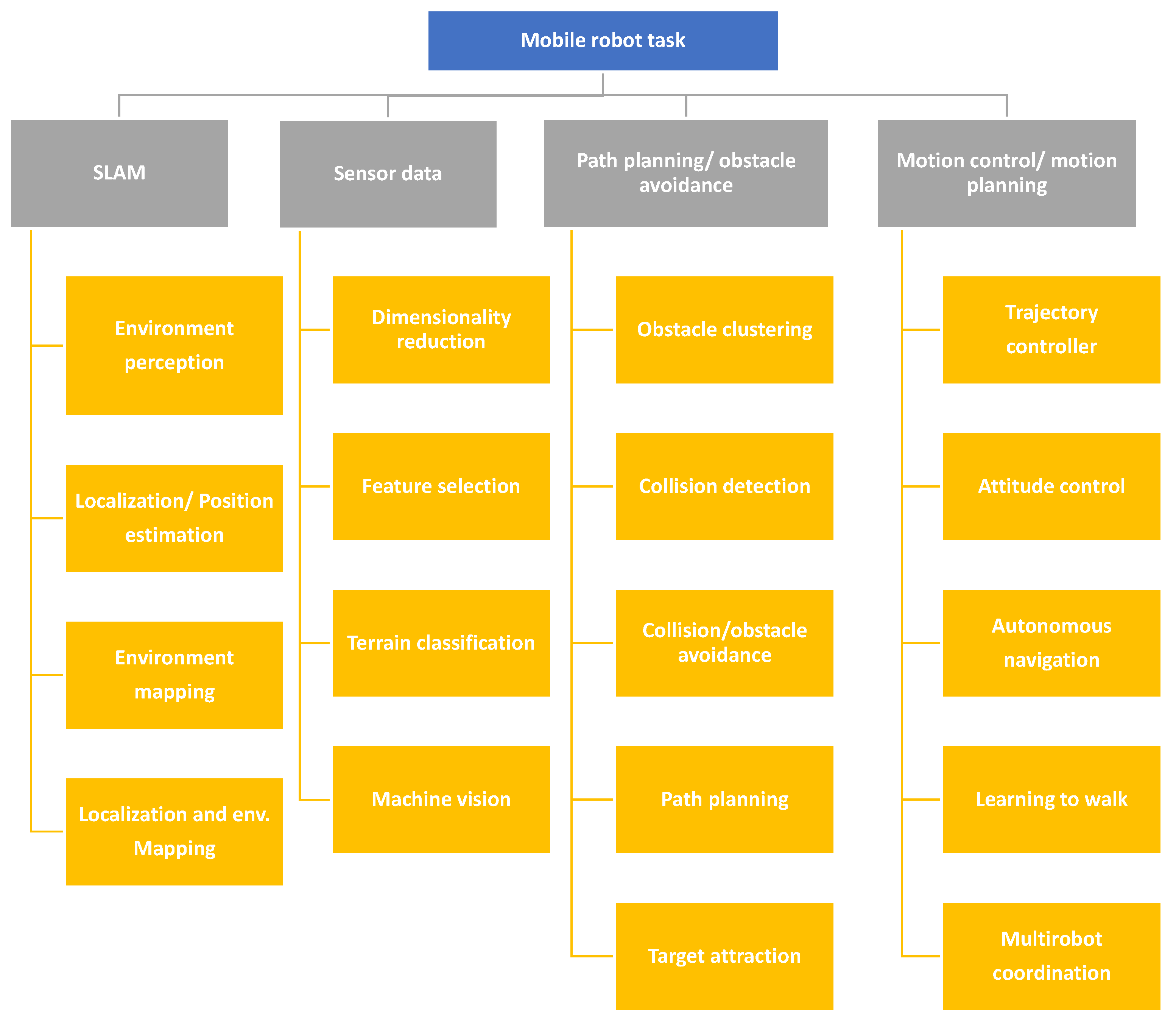

The main issues concerning mobile robots can be classified into navigation, control, and remote sensing, as stated in [20].

One of the specific tasks associated with mobile robot navigation is localization and environment mapping, also known as Simultaneous Localization and Mapping (SLAM). SLAM algorithms build a map of the environment while simultaneously tracking the robot’s current position. They perceive the environment using sensors such as cameras, lidars, and radars. The subtasks of SLAM are environment perception, robot localization/position estimation, and environment mapping.

Remote sensing in mobile robots deals with the use of different sensors to perceive the robot’s surroundings. Commonly used sensors are cameras, lidars (Light Detection and Ranging), radars (Radio Detection and Ranging), ultrasonic sensors, infrared sensors, and GPS (Global Positioning System) receivers. The specific research tasks associated with remote sensing and sensor data collection include data dimensionality reduction, feature selection, terrain classification, and machine vision solutions.

Path planning and obstacle avoidance are inherent in the navigation of mobile robots. Path planning algorithms calculate a feasible, optimal, or near-optimal path from the robot’s current position to a defined goal position. The most commonly applied optimization criteria are the shortest distance and the minimal energy consumption. The path planning process has to account for the avoidance of static and dynamic obstacles. Obstacle avoidance is also related to obstacle detection and clustering. Another connected issue is target attraction, which aims to lead the mobile robot toward a specific target or goal position.
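To make the shortest-distance criterion concrete, the following minimal Python sketch runs the classical A* search on a 4-connected occupancy grid. It assumes unit move costs, static obstacles only, and a hypothetical grid encoding (`#` for occupied, `.` for free); the function name `astar` is illustrative and is not taken from any of the surveyed works.

```python
import heapq

def astar(grid, start, goal):
    """Shortest 4-connected path from start to goal on a grid, or None.

    grid: list of equal-length strings; '#' is occupied, '.' is free.
    start, goal: (row, col) tuples.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance never overestimates the cost of unit 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries: (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # outdated heap entry; a cheaper route was found later
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != '#':
                new_g = g + 1
                if new_g < g_cost.get(nxt, float('inf')):
                    g_cost[nxt] = new_g
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None

grid = [
    "....",
    ".##.",
    "....",
]
path = astar(grid, (0, 0), (2, 3))
print(path)
```

Replacing the unit step cost with a per-move energy estimate would steer the same search toward the minimal-energy criterion, provided the heuristic remains a lower bound on that cost.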

Mobile robotics research also concentrates on motion control, which includes attitude control, heading control, speed control, and steering along a path; the last of these requires the development of a trajectory controller. Other topics related to mobile robot motion control include learning to walk (for walking robots), multirobot coordination, and autonomous navigation.

The mobile robotics tasks are classified into four main categories: SLAM, sensor data, path planning, and motion control, as presented in Figure 4.

The subsequent sections compare the ML techniques recently proposed for different robot tasks, such as positioning, path planning, path following, and environment mapping.

2. Supervised Learning Approaches

This section provides an overview of the supervised machine learning (SL) methods used in mobile robotics in the years 2003–2023. In supervised learning, as the name suggests, the training data are accompanied by the desired solutions of the considered problem in the form of labels or classes. The main application areas of supervised learning methods are regression and classification problems, as shown in Figure 1.

The aim of a regression algorithm is to predict the value of an output variable. An example of a regression problem is forecasting the value of a car based on features such as the model, the brand, the year of production, and the engine capacity. A similar case is predicting house prices based on features such as the number of bedrooms, the neighborhood’s crime rate, and the proximity to schools. An example of an SL application in a different domain is estimating a person’s age from facial features. Common regression algorithms include linear regression, polynomial regression, regression trees, and neural networks.

The goal of a classification algorithm is to assign input data to predefined classes or categories. An example of a classification problem is labeling a message as “spam” or “non-spam”. Other classification tasks include image classification, sentiment analysis, and disease diagnosis. Common classification algorithms include decision trees, the k-nearest neighbors method, support vector machines (SVM), the Naive Bayes classifier, random forests, logistic regression, and neural networks.

2.1. Regression Methods

As mentioned above, supervised learning methods are grouped into two main categories: regression and classification. This subsection presents a literature review of the regression approaches applied in mobile robotics.

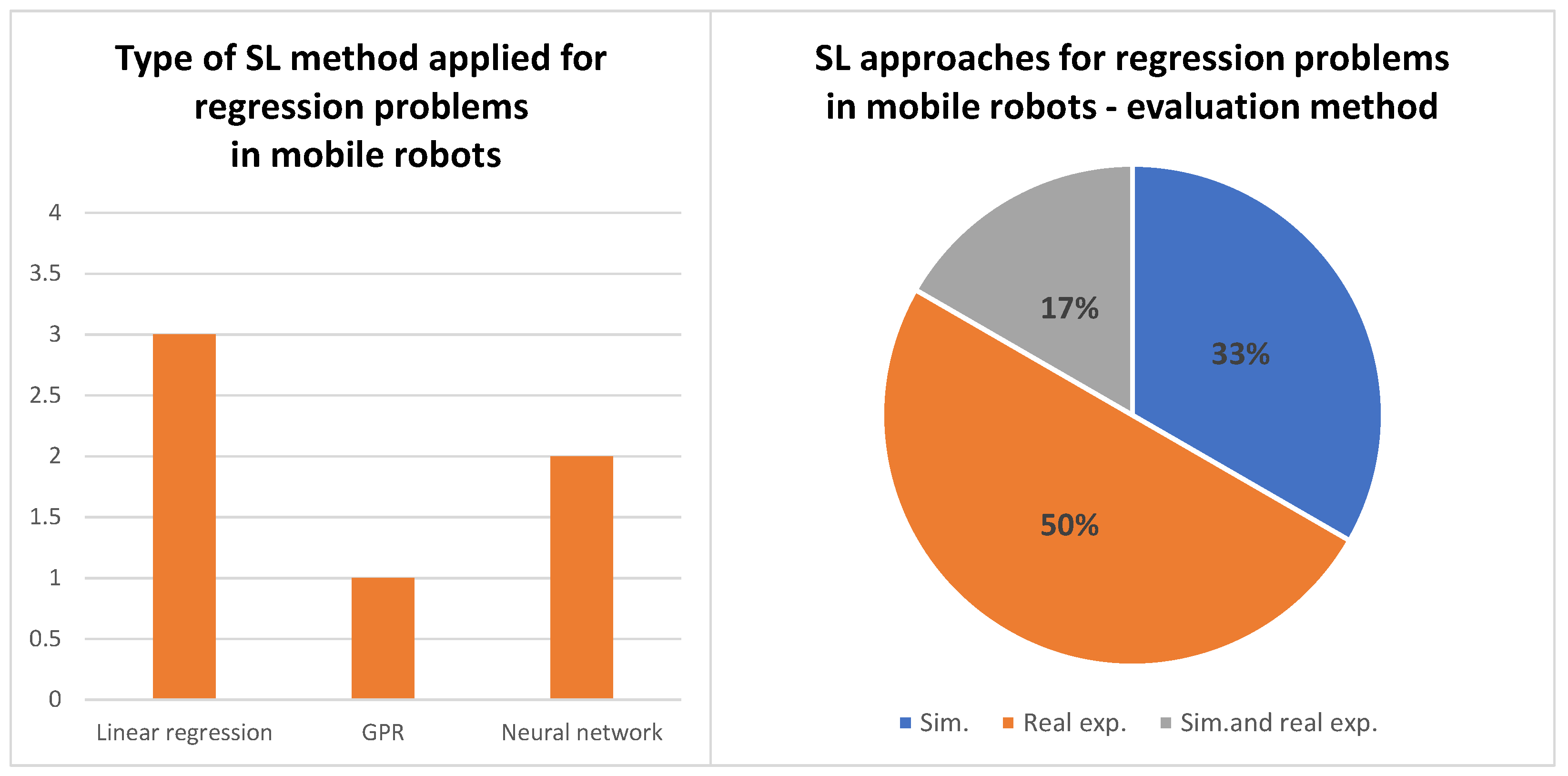

Table 1 shows a comparative analysis of recent SL algorithms proposed for solving regression problems in mobile robots.

SL regression methods are applied in mobile robots to tasks such as localization, obstacle detection and avoidance, path planning, and slippage prediction.

Charts presenting statistics of the SL regression algorithms applied in mobile robotics are shown in Figure 5.

2.1.1. Regression Methods for Robot Localization

According to Figure 4, one of the tasks of mobile robots is robot localization. In [21], the authors proposed a method for identifying the optimal value of the wheel speed. The approach was used for the relative localization of a differentially driven robot moving along circular and straight paths. Linear regression analysis was performed to find the relationship between the wheel speed and the odometry of the two-wheeled differential drive mobile robot. An Analysis of Variance (ANOVA) test was carried out using the statistical tools available in the MINITAB software. The V-Rep 3.2.1 simulation software was used to validate the proposed method.
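The kind of relationship fitted in [21] can be illustrated with ordinary least squares. The sketch below fits a straight line between a wheel-speed variable and a travelled distance and reports the coefficient of determination R²; the data are made up for illustration, the function name `fit_line` is hypothetical, and the full ANOVA table produced by MINITAB is not reproduced.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit y = a + b*x; returns (a, b, r_squared)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx                      # slope
    a = mean_y - b * mean_x            # intercept
    ss_res = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    r_squared = 1.0 - ss_res / ss_tot  # share of variance explained by the line
    return a, b, r_squared

# Synthetic (made-up) data: commanded wheel speed [rad/s] vs. distance
# travelled in a fixed time window [m].
speeds = [1.0, 2.0, 3.0, 4.0, 5.0]
distances = [0.21, 0.39, 0.62, 0.80, 1.01]
a, b, r2 = fit_line(speeds, distances)
print(f"distance ~ {a:.3f} + {b:.3f} * speed, R^2 = {r2:.4f}")
```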

In [26], the authors applied a convolutional neural network (CNN) to the robot localization problem using a hierarchical approach. In the first stage, a classification CNN determined the room in which the robot was located. In the second stage, a regression CNN estimated the exact position of the robot (the X and Y coordinates). The approach was tested on a dataset of sensor data recorded by a mobile robot under real operating conditions.
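The two-stage idea of [26] can be sketched without a neural network library. In the hypothetical example below, a nearest-centroid classifier stands in for the classification CNN (stage 1: which room) and k-nearest-neighbor regression stands in for the regression CNN (stage 2: X and Y within that room); the feature vectors, room names, and coordinates are invented for illustration.

```python
import math

def nearest_centroid(features, centroids):
    """Stage 1 stand-in: label of the closest class centroid."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))

def knn_regress(features, samples, k=2):
    """Stage 2 stand-in: mean (x, y) of the k closest reference samples."""
    nearest = sorted(samples, key=lambda s: math.dist(features, s[0]))[:k]
    x = sum(pos[0] for _, pos in nearest) / len(nearest)
    y = sum(pos[1] for _, pos in nearest) / len(nearest)
    return x, y

def localize(features, rooms):
    """Hierarchical localization: choose the room, then estimate (x, y) inside it.

    rooms: {room_name: [(feature_vector, (x, y)), ...]} reference samples.
    """
    centroids = {
        name: [sum(col) / len(col) for col in zip(*(f for f, _ in samples))]
        for name, samples in rooms.items()
    }
    room = nearest_centroid(features, centroids)
    return room, knn_regress(features, rooms[room])

# Hypothetical reference samples: (sensor feature vector, (x, y) in metres)
rooms = {
    "kitchen": [([0.90, 0.10], (1.0, 1.0)),
                ([0.80, 0.20], (2.0, 1.0)),
                ([0.85, 0.15], (1.5, 2.0))],
    "lab": [([0.10, 0.90], (10.0, 5.0)),
            ([0.20, 0.80], (11.0, 5.0))],
}
room, (x, y) = localize([0.88, 0.12], rooms)
print(room, x, y)
```

Restricting stage 2 to the samples of the predicted room prevents a spurious match in another room from pulling the position estimate; the trade-off is that a stage-1 error cannot be corrected in stage 2.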

2.1.2. Regression Methods for Obstacle Detection

In [25], the authors applied artificial neural networks (ANNs) to obstacle detection using data from infrared (IR) sensors and a camera. The ANN was developed and trained in Matlab/Simulink, and simulation tests were carried out in the RobotinoSIM virtual environment. An obstacle detection accuracy of 85.56% was achieved. The authors stated that greater accuracy would require a larger dataset, which would lengthen the learning and data processing times and would also require hardware with higher computational capabilities.

2.1.3. Regression Methods for Obstacle Avoidance and Other Tasks

A linear regression approach for the collision avoidance and path planning of an autonomous mobile robot (AMR) was proposed in [22]. The authors developed the adaptive stochastic gradient descent linear regression (ASGDLR) algorithm for this task. The velocities of the right and left wheels and the distance from an obstacle were measured by two infrared sensors and one ultrasonic sensor. The stochastic gradient descent (SGD) optimization technique was applied to iteratively update the ASGDLR model weights, with the difference between the actual velocity and the model output velocity used as the error signal. The ASGDLR algorithm was implemented on the NodeMCU ESP8266 controller. The method was verified in simulation tests using the Python IDLE platform and Matlab, as well as in real experiments with an AMR. The approach was also compared with four other algorithms: VFH, VFH*, FLC, and A*. The authors stated that the advantages of the algorithm are “the effectiveness of memory utilization and less time requirement for each command obtained as a command signal from the NodeMCU to the DC motor module compared to other Linear Regression algorithms”.
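The SGD weight update described for ASGDLR can be sketched as follows. This is plain SGD linear regression with a fixed learning rate; the adaptive element of ASGDLR in [22] is not reproduced, and the single normalized obstacle-distance feature, the synthetic target relation, and the name `sgd_linear_regression` are assumptions made for the example.

```python
import random

def sgd_linear_regression(samples, lr=0.05, epochs=200, seed=0):
    """Fit y ~ w0 + w1*x1 + ... with plain stochastic gradient descent.

    samples: list of (features, target) pairs; features is a list of floats.
    The error signal is (target - prediction), as in the description above.
    """
    rng = random.Random(seed)
    n_features = len(samples[0][0])
    w = [0.0] * (n_features + 1)  # w[0] is the bias term
    data = list(samples)
    for _ in range(epochs):
        rng.shuffle(data)  # visit samples in a new random order each epoch
        for x, y in data:
            y_hat = w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))
            err = y - y_hat
            w[0] += lr * err
            for i, xi in enumerate(x):
                w[i + 1] += lr * err * xi
    return w

# Synthetic example (made-up relation): a normalized wheel-speed command that
# grows with the normalized distance to the nearest obstacle.
samples = [([d / 10], 0.2 + 0.6 * d / 10) for d in range(11)]
w = sgd_linear_regression(samples)
print([round(v, 3) for v in w])  # approaches [0.2, 0.6]
```

Each update touches a single sample and a handful of weights, so the memory footprint stays small, which is the kind of property that matters on a microcontroller-class board.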

In [23], the authors proposed a linear regression approach for mobile robot obstacle avoidance. The task was carried out by predicting the wheel velocities of a differential drive robot. Input data were obtained from ultrasonic sensors (distance) and IR sensors (wheel velocity measurements). The robot control platform used in this research was the ATmega328 microcontroller.

In [24], a slippage prediction method based on Gaussian process regression (GPR) was introduced. The approach was validated on the MIT single-wheel testbed equipped with an MSL spare wheel. This solution can be useful for off-road mobile robots. According to the authors, the results showed an appropriate trade-off between accuracy and computation time. The algorithm returned the variance associated with every prediction, which might be useful for route planning and control tasks.
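The property highlighted in [24], a variance returned with every prediction, follows directly from the Gaussian process posterior. The sketch below implements zero-mean GP regression with a squared-exponential kernel in pure Python, assuming fixed (not optimized) hyperparameters and made-up slope-versus-slip data; the function names are hypothetical.

```python
import math

def rbf(a, b, length=1.0, variance=1.0):
    """Squared-exponential covariance between two scalar inputs."""
    return variance * math.exp(-0.5 * ((a - b) / length) ** 2)

def cholesky(m):
    """Lower-triangular L such that L * L^T equals the SPD matrix m."""
    n = len(m)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(m[i][i] - s)
            else:
                L[i][j] = (m[i][j] - s) / L[j][j]
    return L

def solve_lower(L, b):
    """Forward substitution: solve L y = b."""
    y = []
    for i in range(len(b)):
        y.append((b[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i])
    return y

def solve_upper_t(L, y):
    """Back substitution with L^T: solve L^T x = y."""
    n = len(y)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x

def gp_predict(xs, ys, x_star, noise=1e-2, length=1.0, variance=1.0):
    """Posterior mean and variance of a zero-mean GP at one test input."""
    n = len(xs)
    K = [[rbf(xs[i], xs[j], length, variance) + (noise if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    L = cholesky(K)
    alpha = solve_upper_t(L, solve_lower(L, ys))   # alpha = K^-1 y
    k_star = [rbf(x, x_star, length, variance) for x in xs]
    mean = sum(k * a for k, a in zip(k_star, alpha))
    v = solve_lower(L, k_star)                     # v = L^-1 k_star
    var = rbf(x_star, x_star, length, variance) - sum(vi * vi for vi in v)
    return mean, max(var, 0.0)  # clip tiny negative round-off

# Made-up training data: terrain slope [deg] -> observed slip ratio
slopes = [0.0, 5.0, 10.0, 15.0, 20.0]
slips = [0.00, 0.05, 0.12, 0.22, 0.35]
mean_in, var_in = gp_predict(slopes, slips, 12.5, length=5.0)
mean_out, var_out = gp_predict(slopes, slips, 40.0, length=5.0)
print(f"12.5 deg: {mean_in:.3f} +/- {var_in ** 0.5:.3f}")
print(f"40.0 deg: {mean_out:.3f} +/- {var_out ** 0.5:.3f}")
```

Far from the training inputs the variance returns to the prior variance (1.0 here), which is the signal a planner could use to avoid terrain where the slip model is uncertain.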

2.2. Classification Methods

The second category of supervised learning is classification. This section presents recent approaches to classification problems in mobile robotics.

Table 2 presents a comparative analysis of the recent SL classification algorithms applied in mobile robotics.

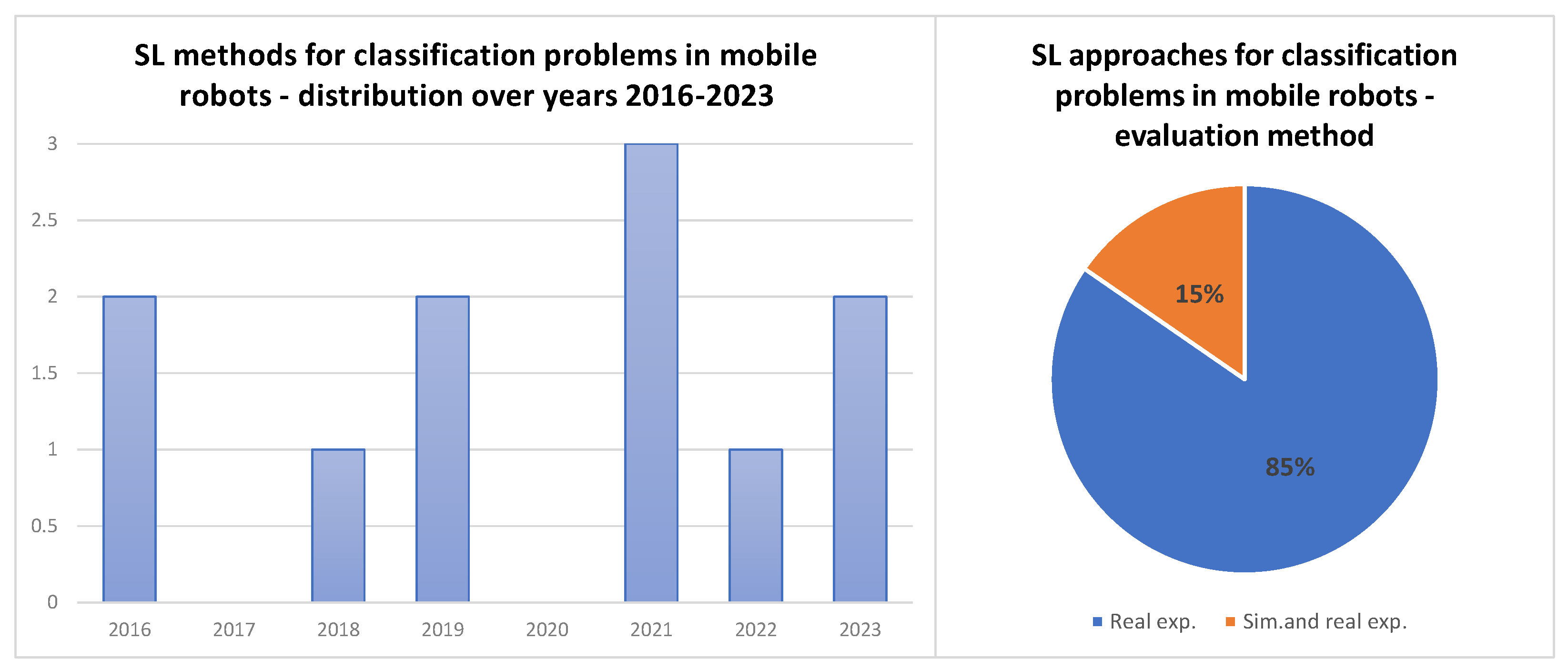

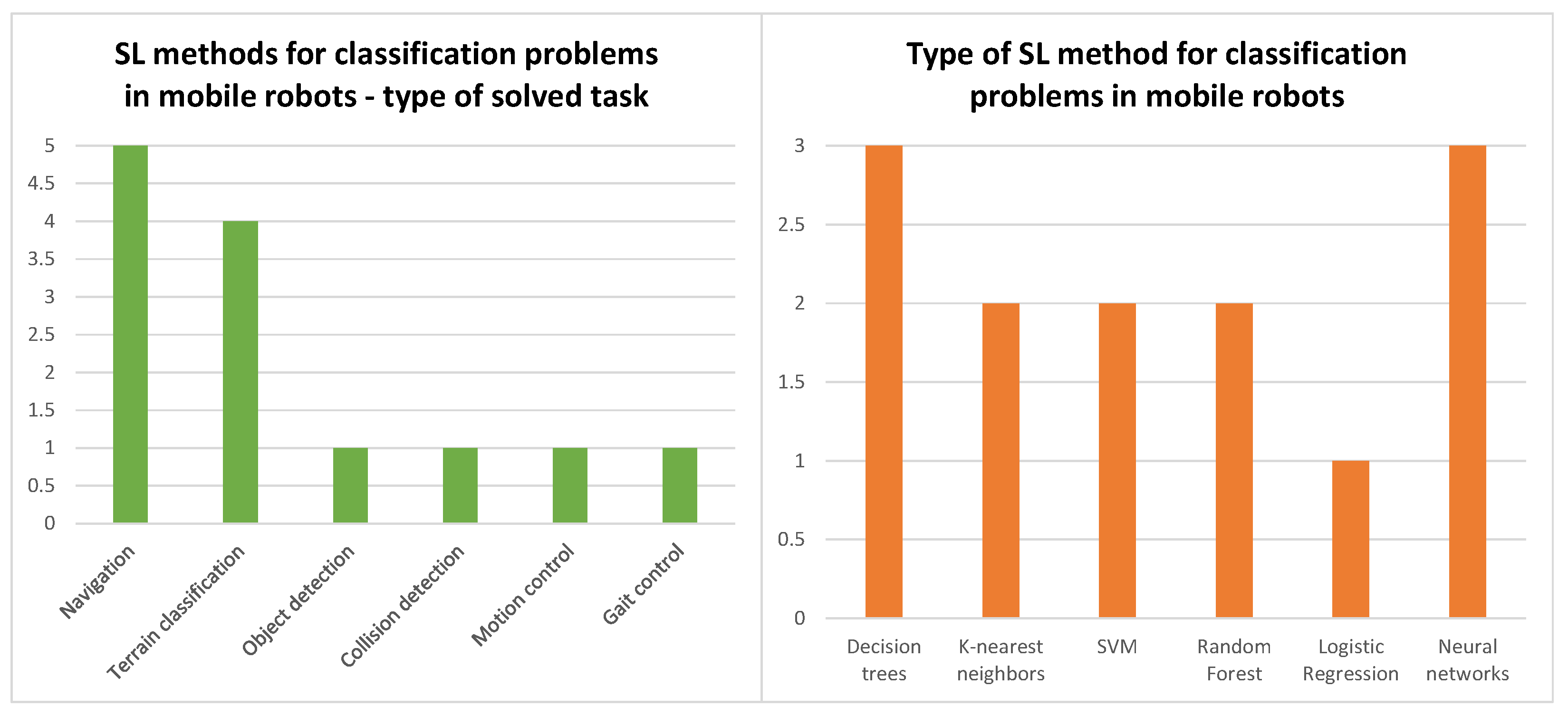

Charts presenting statistics of the SL classification algorithms applied in mobile robotics are shown in Figure 6 and Figure 7.

2.2.1. Classification Methods for Terrain Type Recognition

Terrain classification is an important issue for mobile robots used in rescue operations or inspection tasks. Correct recognition of the terrain type enables the robot to adapt its behavior to the environment and to reach the defined target faster and more effectively. Various supervised classification algorithms have proved suitable for this task. A random forest classifier was proposed in [35] for evaluating terrain traversability.

In [34], the authors introduced a random forest classifier optimized by a genetic algorithm for the classification of ground types. This approach overcame a limitation of the traditional random forest algorithm, namely the lack of a formula for determining the optimal combination of its many initial parameters. The method achieved a recognition accuracy of 93%, significantly higher than that obtained with the traditional random forest algorithm.

An artificial neural network (ANN) was applied to terrain classification in [37]. The ANN was implemented on a Raspberry Pi 4B to process vibration data in real time. A 9-DOF inertial measurement unit (IMU), including an accelerometer, a gyroscope, and a magnetometer, was used for data acquisition, and an Arduino Mega board served as the control unit. The experiments yielded online terrain classification prediction results above 93%.

In the work of [39], a deep neural network (DNN) was applied to terrain recognition. The model input was vision data from an RGB-D sensor, which provided a depth map and an infrared image in addition to the standard RGB data.

2.2.2. Classification Methods for Mobile Robot Navigation

In [27], the authors applied a decision tree algorithm to mobile robot navigation. The developed learning system was designed to perform incremental learning in real time using limited memory, so that it could run on an embedded system. The algorithm was developed in Matlab and tested on the Talrik II mobile robot, equipped with 12 infrared sensors and sonar transmitters and receivers. The controller was implemented on the ARM Evaluator-7T development board running the eCos real-time operating system. This research was continued in [28], where the proposed method was based on an incremental decision tree in which the feature vectors were kept in the tree. Experiments with a mobile robot performing real-time navigation showed that “the calculation time is typically an order of magnitude less than that of an incremental generation of an ITI decision tree”.

In the work of [29], an expert policy based on deep reinforcement learning was used to compute a collision-free trajectory for a mobile robot in a dense, dynamic environment. To enhance the system’s reliability, the expert policy was combined with a policy extraction technique, and the resulting policy was converted into a decision tree. The decision trees were intended to improve the solutions obtained by the algorithm in terms of trajectory smoothness, the frequency of oscillations, the frequency of immobilization, and obstacle avoidance. The method was tested in simulations and on the Clearpath Jackal robot navigating among moving pedestrians.

In the work of [38], the authors introduced a motion planner based on a convolutional neural network (CNN) with long short-term memory (LSTM). Imitation learning was used to train the motion planner’s policy. In the experiments, the robot moved autonomously toward the destination while avoiding standing and walking persons.

In the work of [32], a method based on a support vector machine (SVM) was proposed for the precise motion control of a mobile robot. The control algorithm was implemented on an ARM9 control board. The experimental results confirmed the feasibility of the approach for precise position control: the maximum error was less than 32 cm over a 10 m linear movement.

In [30], the authors considered different classification algorithms for the wall-following navigation task of a mobile robot. They introduced a k-nearest neighbors approach and compared it with other methods, such as decision trees, neural networks, Naïve Bayes, JRipper, and support vector machines.
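A k-nearest neighbors classifier of the kind compared in [30] reduces to a distance sort and a majority vote. The sketch below assumes two hypothetical range readings (front and right, in metres) and three illustrative action labels; the data are invented and do not come from the cited study.

```python
import math
from collections import Counter

def knn_classify(train, query, k=3):
    """Majority vote among the k training samples closest to `query`.

    train: list of (feature_vector, label) pairs.
    """
    neighbors = sorted(train, key=lambda s: math.dist(s[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]

# Hypothetical readings from two range sensors (front, right), in metres,
# labelled with the action a wall-following controller should take.
train = [
    ([2.0, 0.5], "forward"), ([1.8, 0.6], "forward"), ([2.2, 0.4], "forward"),
    ([0.3, 0.5], "turn_left"), ([0.4, 0.6], "turn_left"), ([0.2, 0.4], "turn_left"),
    ([2.0, 2.0], "turn_right"), ([1.9, 2.2], "turn_right"), ([2.1, 1.8], "turn_right"),
]
print(knn_classify(train, [0.35, 0.5]))
```

Because k-NN has no training phase, new labelled readings can simply be appended to `train`; the cost is a distance computation against every stored sample for each query.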

2.2.3. Classification Methods for Other Tasks

In [31], the authors applied the k-nearest neighbors method to image classification. The approach was used for object detection and recognition in a machine vision-based mobile robotic system.

In [36], the authors applied logistic regression to collision detection. The training data were obtained from an acceleration sensor during the movement of a small mobile robot; the accelerometer data and the motor commands were then combined in the logistic regression model. The Dagu T’Rex Tank chassis was used in the experiments. The robot was driven by two motors via a Sabertooth motor controller, and an Arduino Mega 2560 microcontroller board served as its control unit. The trained model detected 13 out of 14 collisions with no false positives.
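The collision detector of [36] combined accelerometer data and motor commands in a logistic regression model. A minimal sketch of such a model, trained by batch gradient descent on the cross-entropy loss, is given below; the two features (peak deceleration in g and a normalized motor command), the training samples, and the function names are hypothetical.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_logistic(X, y, lr=0.5, epochs=5000):
    """Fit weights (bias first) by batch gradient descent on mean cross-entropy."""
    w = [0.0] * (len(X[0]) + 1)
    n = len(X)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for xi, yi in zip(X, y):
            p = sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], xi)))
            err = p - yi  # derivative of the cross-entropy w.r.t. the logit
            grad[0] += err / n
            for j, xj in enumerate(xi):
                grad[j + 1] += err * xj / n
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w

def is_collision(w, x, threshold=0.5):
    """Flag a collision when the predicted probability reaches the threshold."""
    return sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], x))) >= threshold

# Hypothetical samples: [peak deceleration (g), motor command (0..1)]
X = [[0.10, 0.8], [0.20, 0.5], [0.15, 0.9], [0.05, 0.3],
     [1.20, 0.8], [1.50, 0.9], [1.10, 0.6], [1.40, 0.7]]
y = [0, 0, 0, 0, 1, 1, 1, 1]
w = train_logistic(X, y)
print(is_collision(w, [1.3, 0.8]), is_collision(w, [0.1, 0.6]))
```

Raising `threshold` trades missed collisions for fewer false alarms; the choice depends on how costly each error type is for the robot.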

Learning to walk is an essential task for walking robots. In [33], the authors presented a gait control method based on a support vector machine (SVM) with a mixed kernel function (MKF). The ankle and hip trajectories were used as inputs and the corresponding trunk trajectories as outputs. The SVM training yielded the dynamic kinematic relationships between the legs and the trunk of the biped robot. The authors stated that their method performed better than SVMs with radial basis function (RBF) kernels and polynomial kernels.
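A mixed kernel function is commonly built as a convex combination of a local kernel (RBF) and a global kernel (polynomial), which remains a valid positive semidefinite kernel. The exact MKF and parameters used in [33] are not given here, so the weight `lam`, `gamma`, `degree`, and `c` below are assumptions, and the two-dimensional inputs merely stand in for ankle and hip trajectory samples.

```python
import math

def rbf_kernel(x, z, gamma=0.5):
    """Gaussian (RBF) kernel: large only for nearby inputs (local behavior)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))

def poly_kernel(x, z, degree=2, c=1.0):
    """Polynomial kernel: depends on the inner product (global behavior)."""
    return (sum(a * b for a, b in zip(x, z)) + c) ** degree

def mixed_kernel(x, z, lam=0.7, gamma=0.5, degree=2, c=1.0):
    """lam * RBF + (1 - lam) * polynomial, with 0 <= lam <= 1."""
    return lam * rbf_kernel(x, z, gamma) + (1.0 - lam) * poly_kernel(x, z, degree, c)

def gram_matrix(samples, kernel):
    """Kernel (Gram) matrix that an SVM solver would consume."""
    return [[kernel(a, b) for b in samples] for a in samples]

# Hypothetical normalized (ankle, hip) trajectory samples
samples = [[0.1, 0.2], [0.4, 0.1], [0.3, 0.5]]
K = gram_matrix(samples, mixed_kernel)
for row in K:
    print([round(v, 4) for v in row])
```

Setting `lam` to 1 or 0 recovers the pure RBF or pure polynomial kernel, which are the two baselines the authors compared against. A precomputed Gram matrix of this kind can be passed to, for example, scikit-learn's `SVR` with `kernel="precomputed"`.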

The analysis of recent supervised learning approaches for mobile robots shows that these methods were applied to the following:

The path control;

The robot navigation;

The environment mapping;

The robot’s position and orientation estimations;

The collision detection;

The clustering of the data registered with the use of the different robot’s sensors;

The recognition of the different terrain types;

The classification of the robot’s images;

The exploration and the path planning in unknown or partially known environments.

3. Unsupervised Learning Approaches

This section provides an overview of the unsupervised machine learning methods that were used in mobile robotics in the years 2009–2023.

Unsupervised learning approaches use unlabeled training data; in other words, raw data are fed into the algorithm, which is responsible for finding the relationships within these data. This type of machine learning is also called learning without a teacher. Three common types of tasks solved with unsupervised learning approaches are clustering, anomaly and novelty detection, and visualization and dimensionality reduction. Unsupervised methods applied to clustering problems include the k-means method, hierarchical cluster analysis, and Density-Based Spatial Clustering of Applications with Noise (DBSCAN). The one-class support vector machine is applied to anomaly and novelty detection. Visualization and dimensionality reduction problems are solved with principal component analysis (PCA) and kernel principal component analysis (KPCA).

Table 3 and Table 4 present a comparative analysis of recent unsupervised learning (UL) algorithms proposed for solving problems in mobile robotics.

3.1. Unsupervised Learning for SLAM

This subsection presents recent unsupervised learning approaches applied to the SLAM subtasks: environment perception, robot localization/position estimation, and environment mapping.

Clustering is the problem of grouping similar data together. In [52], the authors proposed an algorithm based on the k-means method for the indoor mapping of a wheeled mobile robot using a laser distance sensor. Results for three different numbers of clusters (20, 25, and 30) were compared in the paper. The authors stated that satisfactory results require filtering of the dataset recorded by the sensor. A limitation of the method is that the number of clusters needed for good results must be chosen in advance.
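The k-means procedure used in [52] alternates between assigning points to the nearest centroid and moving each centroid to the mean of its points (Lloyd's algorithm). The sketch below applies it to a handful of made-up 2D laser scan endpoints; note that `k` must be supplied up front, which is exactly the limitation reported above. The function name and data are hypothetical.

```python
import math
import random

def kmeans(points, k, iters=100, seed=0):
    """Lloyd's algorithm on 2D points; returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initial centroids picked from the data
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: index of the nearest centroid for every point
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j]))
                  for p in points]
        # Update step: move each centroid to the mean of its assigned points
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centroids.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centroids.append(centroids[j])  # keep an empty cluster in place
        if new_centroids == centroids:
            break  # assignments can no longer change
        centroids = new_centroids
    return centroids, labels

# Made-up scan endpoints (x, y) in metres: one wall segment and one object
scan = [(0.0, 0.0), (0.1, 0.0), (0.2, 0.0), (0.3, 0.0),
        (5.0, 5.0), (5.0, 5.1), (5.0, 5.2), (5.0, 5.3)]
centroids, labels = kmeans(scan, k=2)
print(centroids, labels)
```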

In [50], the authors applied k-means clustering to the task allocation problem in the exploration and mapping of unknown environments by a team of mobile robots. In this approach, the k-means algorithm assigned frontiers to the different robots; frontiers are the boundaries between known and unknown space in the exploration process. The concept was evaluated in both simulations and real-world experiments with TurtleBot3 robots, and the results were compared with two other methods. In the first method, each robot explored the map separately without any coordination. In the second method, the robots shared their information to build a global map of the environment. The proposed k-means method outperformed both methods in terms of exploration time and traveled distance.
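Frontier cells, as defined above, can be extracted from an occupancy grid with a simple neighborhood test. The sketch below assumes a hypothetical character encoding (`.` free, `#` occupied, `?` unknown) and 4-connectivity; the clustering of frontiers and their assignment to robots with k-means, as done in [50], is not reproduced.

```python
def find_frontiers(grid):
    """Return free cells ('.') that touch at least one unknown cell ('?')."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != '.':
                continue  # only known-free cells can be frontier cells
            for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == '?':
                    frontiers.append((r, c))
                    break  # one unknown neighbor is enough
    return frontiers

# Hypothetical partially explored map: the right-hand columns are still unknown
grid = [
    "#######??",
    "#......??",
    "#.##...??",
    "#......??",
    "#######??",
]
print(find_frontiers(grid))
```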

In the work of [51], the authors developed Sparse-Map, a system for compact 3D map representation based on point-cloud spatial clustering. The paper presented results obtained with three clustering algorithms (k-means, k-means++, and LBG) and compared their partition quality and runtime. The k-means algorithm running on the GPU was the fastest, and the LBG algorithm was the slowest. The authors stated that their system produced environment maps suitable for computing high-quality paths for domestic service robots.

In [49], the authors applied the GNG (Growing Neural Gas) neural network to this task. This approach is classified as an unsupervised incremental clustering algorithm. Data about the environment were obtained with an ultrasonic sensor mounted on a Lego Mindstorms NXT robot. The recorded data were used to construct a connected graph composed of nodes, representing the robot’s states, and links between the nodes, which stored the actions required to make a transition between them. The GNG approach was compared with a different method proposed by the authors in [48], based on the FuzzyART (Adaptive Resonance Theory) neural network. Both algorithms achieved similar accuracy of position estimation, with the FuzzyART network performing slightly better.

In the work of [56], a UL approach based on the Louvain community detection algorithm (LCDA) was proposed for semantic mapping and localization. The authors compared their method with two other UL approaches: the Single-Linkage (SLINK) agglomerative algorithm presented in [63] and the self-organizing map (SOM) introduced in [66]. The self-organizing map is a type of artificial neural network that does not use labeled data during training; it is applied to clustering as well as to visualization and dimensionality reduction.

Machine learning methods for legged robots are much less explored. A recently introduced method is the enhanced self-organizing incremental neural network (ESOINN), which was applied to environment perception in [46]. The approach was tested on a hexapod robot in both indoor and outdoor environments.

In the work of [60], the authors proposed an indoor positioning system (IPS) based on the robust principal component analysis-extreme learning machine (RPCA-ELM). The method was verified on a mobile robotic platform controlled by an ARM microcontroller.

3.2. Unsupervised Learning for Remote Sensing

In [40], the authors proposed an unsupervised approach to clustering sensor data. It was applied to environment perception, specifically to identifying the terrain type. The introduced approach was a single-stage batch method aimed at finding a global description of each cluster. It was evaluated on two types of mobile robots: a hexapod robot and a differential drive robot. The hexapod robot was equipped with three accelerometers, three rate gyroscopes, six leg angle encoders, and six motor current estimators, and principal component analysis (PCA) was applied to reduce the dimensionality of the collected data. The differential drive robot used a tactile sensor for terrain identification: a metallic rod with an accelerometer at its tip. PCA was also applied to reduce the dimensionality of that dataset. Comparative tests showed that the proposed method outperformed window-based clustering and a hidden Markov model trained using expectation–maximization (EM-HMM).
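The PCA step used in [40] projects high-dimensional sensor readings onto the directions of largest variance. The sketch below finds only the first principal component, using power iteration on the sample covariance matrix; the three-channel data and the function name are hypothetical, and a practical implementation would rather call an eigen-solver such as NumPy's `numpy.linalg.eigh` to obtain several components.

```python
import math

def pca_first_component(data, iters=500):
    """Leading principal direction (unit vector) and 1D projections of the data."""
    n, d = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(d)]
    centred = [[row[j] - means[j] for j in range(d)] for row in data]
    # Sample covariance matrix (d x d)
    cov = [[sum(r[i] * r[j] for r in centred) / (n - 1) for j in range(d)]
           for i in range(d)]
    # Power iteration: repeated multiplication converges to the top eigenvector
    v = [1.0] * d
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    projections = [sum(r[j] * v[j] for j in range(d)) for r in centred]
    return v, projections

# Hypothetical 3-channel readings that vary mostly along one direction
ts = [0, 1, 2, 3, 4]
noise = [0.01, -0.02, 0.0, 0.02, -0.01]
data = [[t, 2 * t + e, -t - e] for t, e in zip(ts, noise)]
v, projections = pca_first_component(data)
print([round(x, 3) for x in v], [round(p, 3) for p in projections])
```

Here three correlated channels are reduced to one coordinate per sample, which is the kind of compression that makes subsequent clustering cheaper.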

In [55], the authors introduced LatentSLAM, a UL approach for generating compact latent representations of sensor data. This method extends RatSLAM, a SLAM system based on the navigational processes in a rat’s hippocampus, which is composed of pose cells, local view cells, and an experience map. The authors demonstrated that their approach can be used with data from different sensors, such as camera images, radar range-Doppler maps, and lidar scans. A dataset of over 60 GB of camera, lidar, and radar recordings was used in the verification tests.

In the work of [54], kernel principal component analysis (KPCA) was applied to reduce the dimensionality of the dataset obtained with the SICK LMS 200-30106 laser range sensor, which was used to record the robot’s surroundings. In [53], KPCA was coupled with an online radial basis function (RBF) neural network algorithm to identify a mobile robot’s position. KPCA served as a preprocessing step that reduced the feature dimensionality of the dataset subsequently fed into the RBF neural network.

In [58], the authors applied a one-class SVM to select target feature points, an approach applicable to vision-based mobile robots. The authors also applied a SOM to create visual words and histograms from the selected features.

3.3. Unsupervised Learning for Obstacle Avoidance and Path Planning

The density-based spatial clustering of applications with noise (DBSCAN) method was applied to mobile robots in [43,44] for vanishing point estimation based on data registered with an omnidirectional camera installed onboard a wheeled mobile robot. The heading angle was estimated from the determined vanishing point and was then used by the robot control system, which relied on a fuzzy logic controller. In the work of [42], DBSCAN was used to detect mobile robots: up to four mobile robots were detected with a stationary camera, and a single k-dimensional tree-based technique called IDTrack was applied to track them. The performance of the developed system was evaluated experimentally using precision, recall, mean absolute error, and multi-object tracking accuracy. The mobile robots used in this study had two differentially driven treads and a 9-degree-of-freedom inertial measurement unit (IMU) with an accelerometer, a gyroscope, and a magnetometer. In [41], DBSCAN was applied to obstacle clustering on a grid map. The method was combined with a graph search path-planning algorithm and was evaluated in simulation tests using MATLAB and the C# language on the Visual Studio Ultimate 2012 platform.
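To illustrate the obstacle-clustering step described for [41], the following is a minimal textbook DBSCAN in plain Python applied to the occupied cells of a small grid map. The cell coordinates, `eps`, and `min_pts` are illustrative assumptions, and the cited works may implement the method differently (for example, with a spatial index instead of the quadratic neighbour search used here).

```python
import math


def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or -1 for noise."""
    UNVISITED, NOISE = None, -1
    labels = [UNVISITED] * len(points)

    def neighbours(i):
        # All points (including i itself) within radius eps of point i
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = 0
    for i in range(len(points)):
        if labels[i] is not UNVISITED:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE          # may later become a border point
            continue
        labels[i] = cluster            # i is a core point: start a new cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster    # border point reached from a core point
            if labels[j] is not UNVISITED:
                continue
            labels[j] = cluster
            j_neighbours = neighbours(j)
            if len(j_neighbours) >= min_pts:   # j is also a core point: expand
                queue.extend(j_neighbours)
        cluster += 1
    return labels


# Hypothetical occupied cells of a grid map: two obstacles and one isolated cell
occupied = [(0, 0), (0, 1), (1, 0), (1, 1), (5, 5), (5, 6), (6, 5), (10, 0)]
labels = dbscan(occupied, eps=1.5, min_pts=3)
```

With `eps=1.5`, diagonally adjacent cells count as neighbours, so each obstacle becomes one cluster and the isolated cell is labelled as noise; a path planner could then treat each cluster as a single obstacle.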

The one-class support vector machine (SVM) algorithm was proposed for a cloud-based mobile robotic system in [57]. The presented system was composed of a robot local station and a cloud-based station. The robot local station was implemented on four Raspberry Pi 4B microcomputers, and a computer with an Intel Core i9 CPU, 64 GB RAM, and an NVIDIA GeForce RTX 3090 GPU served as the cloud-based station. The robot local station registered camera images and sent them to the cloud-based station, which processed the images with machine learning algorithms in order to generate actions for the mobile robots. The one-class SVM was applied to detect unknown objects and to carry out incremental learning. Because thousands of features were extracted from each image by a CNN model, principal component analysis (PCA) was applied to reduce their dimensionality, which sped up the training of the one-class SVM model.
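The PCA step can be sketched as follows: the feature vectors are centred, their sample covariance matrix is formed, and its leading eigenvectors are found by power iteration with deflation. This is a minimal plain-Python sketch on made-up 3-D features, assuming nothing about the CNN features of [57]; in practice an SVD routine from a numerical library would normally be used, and the function name `pca` is illustrative.

```python
import math


def pca(features, k, iters=500):
    """Return (components, projections) for the top-k principal components."""
    n, d = len(features), len(features[0])
    mean = [sum(row[c] for row in features) / n for c in range(d)]
    X = [[row[c] - mean[c] for c in range(d)] for row in features]
    # Sample covariance matrix C = X^T X / (n - 1)
    C = [[sum(X[r][a] * X[r][b] for r in range(n)) / (n - 1) for b in range(d)]
         for a in range(d)]
    components = []
    for _ in range(k):
        v = [1.0 / math.sqrt(d)] * d
        for _ in range(iters):
            w = [sum(C[a][b] * v[b] for b in range(d)) for a in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:            # no variance left in the deflated matrix
                break
            v = [x / norm for x in w]
        lam = sum(v[a] * sum(C[a][b] * v[b] for b in range(d)) for a in range(d))
        components.append(v)
        # Deflation: remove the found direction so the next one can be extracted
        C = [[C[a][b] - lam * v[a] * v[b] for b in range(d)] for a in range(d)]
    # Reduced features: coordinates of each centred sample along the components
    projections = [[sum(x[c] * comp[c] for c in range(d)) for comp in components]
                   for x in X]
    return components, projections


# Made-up 3-D features that vary almost only along the direction (1, 1, 0)
feats = [[float(t), float(t), 0.01 if t % 2 else -0.01] for t in range(10)]
comps, reduced = pca(feats, k=1)
```

Here three features collapse to one coordinate per sample with almost no loss of variance, which is the kind of reduction that makes training the downstream classifier cheaper.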

A self-organizing map (SOM) was also proposed for solving the path-planning problem of a group of cooperating mobile robots in [65].
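The formulation used in [65] is not detailed here. One common way of applying a SOM to path planning, shown below purely as an assumption, is an elastic ring of neurons that is pulled toward the waypoints a robot must visit; reading the waypoints in the order of their best-matching neurons along the ring yields a visiting sequence. This single-robot sketch, with made-up waypoints and decay schedules, does not reproduce the multi-robot coordination of [65].

```python
import math
import random


def som_visit_order(targets, n_neurons=None, epochs=2000, lr=0.8, seed=0):
    """Order 2-D waypoints with a ring-shaped (elastic) self-organizing map."""
    rng = random.Random(seed)
    m = n_neurons or 3 * len(targets)
    # Neurons start on a small circle around the centroid of the waypoints
    cx = sum(p[0] for p in targets) / len(targets)
    cy = sum(p[1] for p in targets) / len(targets)
    w = [[cx + 0.1 * math.cos(2 * math.pi * k / m),
          cy + 0.1 * math.sin(2 * math.pi * k / m)] for k in range(m)]
    radius = m / 4
    for _ in range(epochs):
        p = rng.choice(targets)
        # Best-matching unit (BMU): the neuron closest to the sampled waypoint
        bmu = min(range(m), key=lambda k: math.dist(w[k], p))
        for k in range(m):
            ring_d = min(abs(k - bmu), m - abs(k - bmu))      # distance along the ring
            h = math.exp(-(ring_d ** 2) / (2 * radius ** 2))  # Gaussian neighbourhood
            w[k][0] += lr * h * (p[0] - w[k][0])
            w[k][1] += lr * h * (p[1] - w[k][1])
        # Shrink the learning rate and the neighbourhood radius over time
        lr = max(0.01, lr * 0.9997)
        radius = max(0.5, radius * 0.997)
    # Visit the waypoints in the order of their BMUs along the ring
    winner = [min(range(m), key=lambda k: math.dist(w[k], p)) for p in targets]
    return sorted(range(len(targets)), key=lambda i: winner[i])


# Hypothetical inspection waypoints
waypoints = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (0.0, 3.0), (2.0, 1.5)]
order = som_visit_order(waypoints)
```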

PCA was also applied to the localization of radiation sources [59]. A mobile robot equipped with an imaging gamma-ray detector was used in this approach, and a path surrounding the radiation sources was generated with PCA from the results of previous measurements. The approach was verified with simulation tests and with real experiments using the Pioneer-3DX mobile robot and an all-around-view Compton camera.

3.4. Unsupervised Learning for Motion Control

Another task associated with mobile robots is the design of the trajectory controller, which controls the robot's motion while it follows a predefined path. For this purpose, the authors in [47] presented a UL algorithm called the enhanced self-organizing incremental neural network (ESOINN), which was introduced by Furao et al. in 2007 [70]. The method was proposed for controlling the movement of a four-wheel skid-steer mobile robot developed for use on oil palm plantations, whose control system was based on the Arduino Mega microcontroller. The method also applied incremental learning, which allowed the robot to adapt to new environments and to eliminate noise. The designed controller did not need kinematic or dynamic models of the robot, as it built its own model of the robot during the training phase from the registered motion data. During the incremental learning stage, previously measured trajectory data and simulated data were added to the system in order to improve path-following accuracy. The ESOINN approach was compared with two other UL algorithms, the self-organizing map (SOM) method [71] and the k-means clustering method [72], and with one SL approach based on a feed-forward neural network with adaptive weight learning (adaptive NN) [73]. The authors stated that their method achieved the best path-following accuracy and the shortest processing time during the incremental learning phase.

In the works of [68,69], the authors proposed applying the spike timing-dependent plasticity (STDP) algorithm to the autonomous navigation of a hybrid mini-robot. A spiking neural network (SNN) was used to implement the robot's navigation control algorithm. An SNN is a type of artificial neural network inspired by the spiking activity of neurons in the brain: it models neuron behavior with spikes, which represent the discrete electrical pulses that neurons generate when transmitting information. The hybrid mini-robot contained two wheel-leg modules that improved its ability to walk on rough terrain and to avoid obstacles. It was also equipped with a manipulator for grasping objects and climbing obstacles. Two ATmega128 8-bit microcontrollers were used for robot control, together with a PC that communicated with the robot through an XBee RF wireless module.

In the work of [67], the STDP algorithm was proposed for attracting the robot toward a target. The approach was tested in simulations with a mobile robot and a robotic arm.
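The core of STDP is a synaptic weight update that depends on the relative timing of pre- and postsynaptic spikes: a presynaptic spike shortly before a postsynaptic one strengthens the synapse, while the reverse order weakens it. The sketch below shows the widely used pair-based exponential form. The amplitudes, time constants, weight bounds, and all-to-all spike pairing are illustrative assumptions, and the works [67,68,69] may use a different variant.

```python
import math


def stdp_dw(t_pre, t_post, a_plus=0.01, a_minus=0.012,
            tau_plus=20.0, tau_minus=20.0):
    """Weight change for one pre/post spike pair (spike times in ms)."""
    dt = t_post - t_pre
    if dt > 0:    # pre fires before post: potentiation (causal pairing)
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:    # post fires before pre: depression (anti-causal pairing)
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def apply_stdp(w, pre_spikes, post_spikes, w_min=0.0, w_max=1.0):
    """Sum the contributions of all spike pairs and keep the weight in bounds."""
    dw = sum(stdp_dw(tp, tq) for tp in pre_spikes for tq in post_spikes)
    return min(w_max, max(w_min, w + dw))


# A sensory neuron spiking just before a motor neuron strengthens their synapse
w_new = apply_stdp(0.5, pre_spikes=[10.0, 40.0], post_spikes=[15.0])
```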

In [64], the authors proposed a SOM-based method for error compensation in the attitude control of a quadruped robot. A model-free, adaptive, unsupervised nonlinear controller, called the motor-map-based nonlinear controller (MMC), was introduced to act as a feed-forward error compensator. The approach was verified by extensive simulation tests.

In the work of [61], an improved PCA was applied to fault detection in a system that measures the mobile robot's attitude with five gyroscopes.

3.5. Unsupervised Learning for Other Tasks

In the work of [62], PCA was applied to coordinate creative tasks performed by mobile robotic platforms. The developed system was verified on flower pattern detection and on painting on a canvas with mobile robots. Another task was detecting a person's identity and mood, after which the mobile robots created art intended to improve that mood.

In [45], the authors introduced a UL approach to the task recognition problem. This unsupervised contextual task learning and recognition approach consisted of two phases. First, a Dirichlet process Gaussian mixture model (DPGMM) was used to cluster the human motion patterns observed during task executions. In the post-clustering phase, a sparse online Gaussian process (SOGP) classified a query point against the learned motion patterns. The approach was evaluated on a holonomic mobile robot that used a 2D laser range finder for environment perception. The evaluation data were collected during four contextual task types: doorway crossing, object inspection, wall following, and object bypass. The results showed that the proposed approach was able to detect unknown motion patterns that differed from those in the training set.

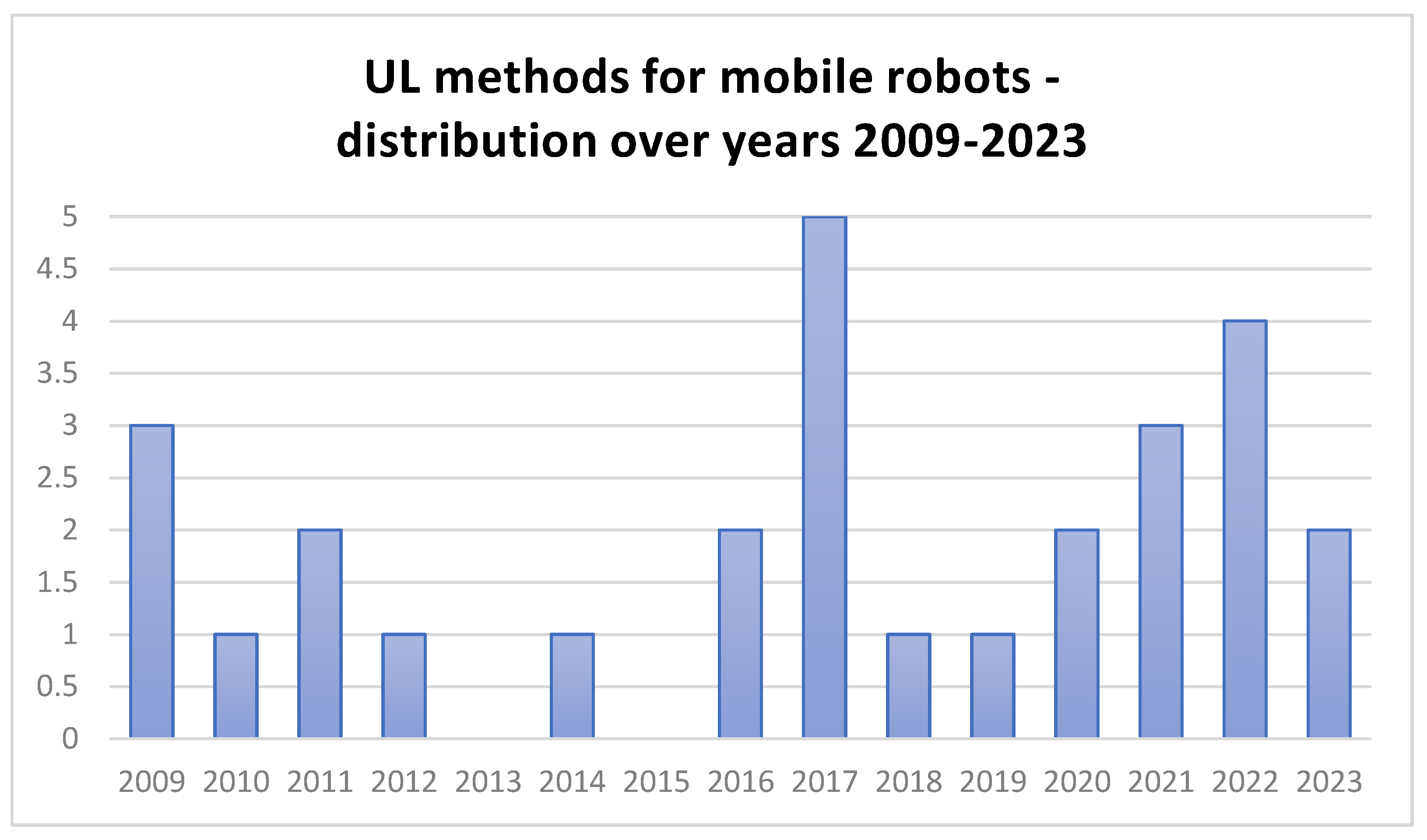

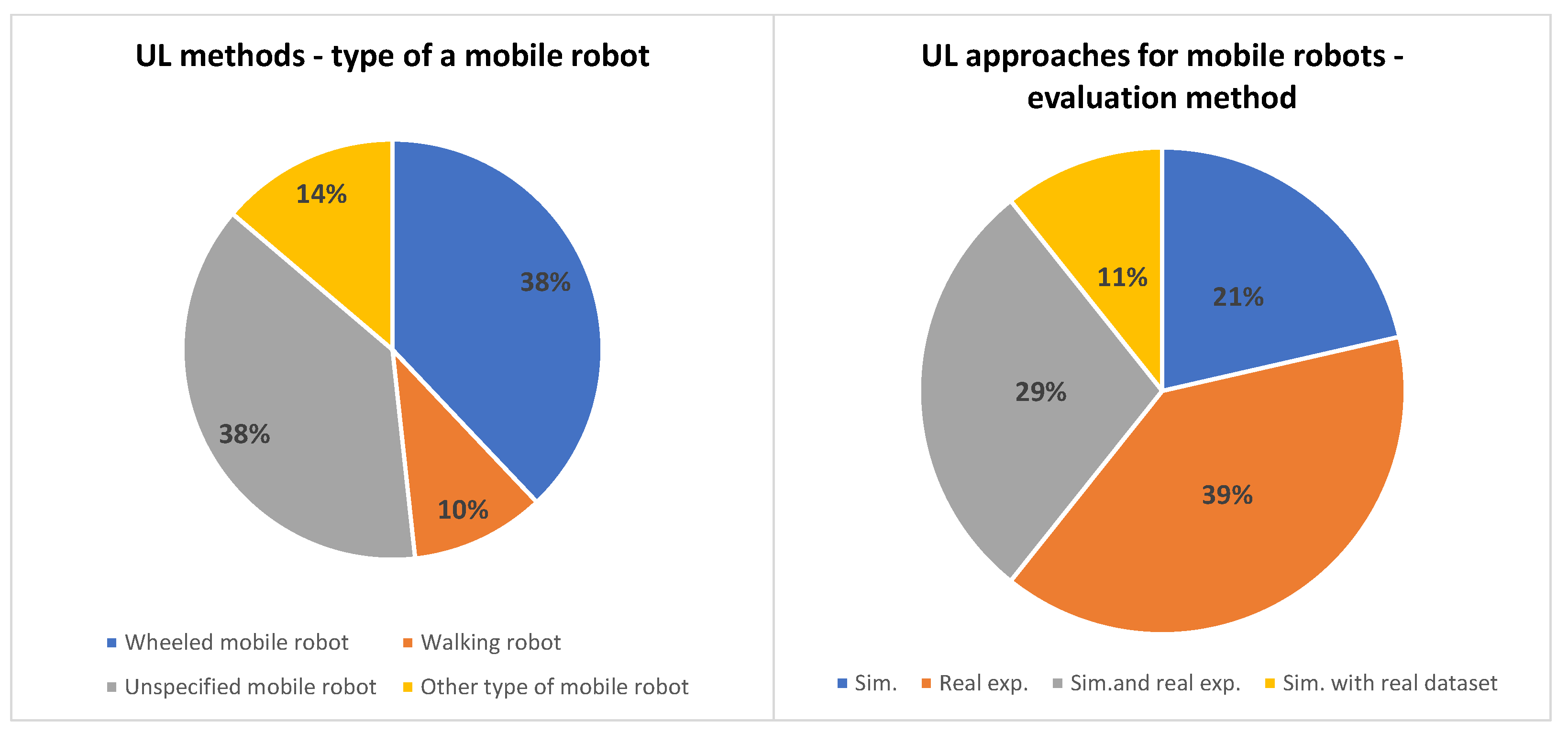

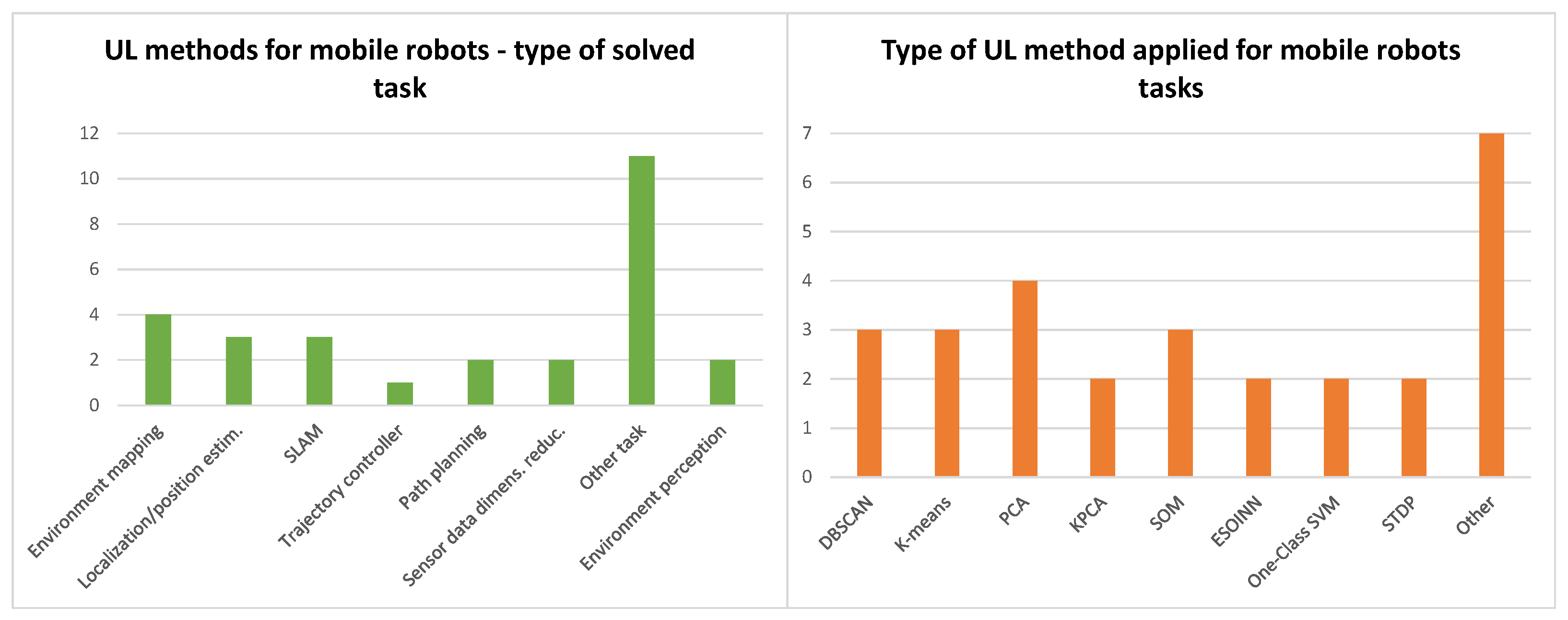

The charts presenting the statistics of the UL algorithms applied in mobile robotics are shown in Figure 8, Figure 9, and Figure 10.

The analysis of recent UL-based approaches for mobile robots showed that these methods were applied for the following:

Environment mapping;

Estimation of the robot's position and orientation;

Simultaneous Localization and Mapping (SLAM);

Data compression, that is, reducing the amount of data registered by the robot's sensors;

Detection of anomalies and unusual patterns in the data registered by the robot's sensors;

Clustering of data registered by the robot's different sensors;

Recognition of different terrain types;

Feature extraction from the data registered by the robot's sensors;

Exploration and path planning in unknown or partially known environments;

Autonomous learning, that is, the continuous refinement of the applied models and environment representations based on newly acquired experience.

4. Reinforcement Learning Approaches

This section provides an overview of the reinforcement learning methods used in mobile robotics in the years 2012–2023.

Reinforcement learning (RL) does not rely on a fixed, pre-collected dataset; instead, the data are generated by an agent that interacts with the environment and learns which interactions are most beneficial. Reward signals shape the agent's action policy, and the agent has to discover which actions lead to the highest cumulative reward.

RL approaches can be classified into value-based, policy-based, and actor–critic methods. In addition, the following types of RL algorithms are distinguished: multi-agent RL, hierarchical RL, inverse RL, and state representation learning. In the reviewed works, the value-based approaches include algorithms based on Q-learning, the deep Q-network (DQN), and the double deep Q-network (double DQN); the policy-based approaches are represented by proximal policy optimization (PPO); and the actor–critic approaches use advantage actor–critic (A2C), asynchronous advantage actor–critic (A3C), and deep deterministic policy gradient (DDPG). Model-based RL approaches, which learn or use a model of the environment, are represented by Dyna-Q and model predictive control (MPC).

4.1. Reinforcement Learning for Obstacle Avoidance and Path Planning

The Q-learning algorithm has been applied to path planning and obstacle avoidance for mobile robots. Because Q-learning analyzes all states of the robot, its main disadvantage is its high computational cost. Therefore, an improved Q-learning model was proposed in [74], which reduced the computational time by limiting the impassable areas. In [75], the authors introduced an approach that considers eight optional path directions for the robot and applies a dynamic exploration factor to speed up the algorithm's convergence. In [76], the authors also proposed applying Q-learning to mobile robot path planning and collision avoidance.
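A minimal tabular Q-learning sketch on a hypothetical 4×4 occupancy grid illustrates the update Q(s, a) ← Q(s, a) + α[r + γ max Q(s', a') − Q(s, a)] used by the approaches above. The grid, the reward values (−1 per move, −5 for a collision, +10 at the goal), and the hyperparameters are illustrative assumptions, not those of [74,75,76].

```python
import random

GRID = ["S..#",
        ".#..",
        "...#",
        "#..G"]
ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right


def step(state, a):
    """Deterministic grid dynamics returning (next_state, reward, done)."""
    r, c = state[0] + ACTIONS[a][0], state[1] + ACTIONS[a][1]
    if not (0 <= r < len(GRID) and 0 <= c < len(GRID[0])) or GRID[r][c] == "#":
        return state, -5.0, False      # collision: stay in place, penalty
    if GRID[r][c] == "G":
        return (r, c), 10.0, True      # goal reached
    return (r, c), -1.0, False         # ordinary move costs one step


def q_learning(episodes=500, alpha=0.5, gamma=0.95, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    Q = {}

    def q(s, a):
        return Q.get((s, a), 0.0)

    for _ in range(episodes):
        s = (0, 0)
        for _ in range(100):           # step limit per episode
            if rng.random() < epsilon:  # epsilon-greedy exploration
                a = rng.randrange(4)
            else:
                a = max(range(4), key=lambda b: q(s, b))
            s2, reward, done = step(s, a)
            target = reward if done else reward + gamma * max(q(s2, b) for b in range(4))
            Q[(s, a)] = q(s, a) + alpha * (target - q(s, a))
            s = s2
            if done:
                break
    return Q


def greedy_path(Q, start=(0, 0), max_len=20):
    """Follow the learned greedy policy from the start cell."""
    path, s = [start], start
    for _ in range(max_len):
        a = max(range(4), key=lambda b: Q.get((s, b), 0.0))
        s, _, done = step(s, a)
        path.append(s)
        if done:
            break
    return path


path = greedy_path(q_learning())
```

The table grows with the number of states times the number of actions, which is the computational burden that motivates the restricted or improved variants discussed above.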

Reinforcement learning combined with the deep Q-network (DQN) has been applied to training mobile robots in different environments. In [77], the authors proposed an approach for the autonomous navigation of a mobile robot from its current position to a desired final position using visual observations and no pre-built map of the environment. The authors trained the DQN in a simulation environment and then transferred the developed solution to a real-world mobile robot navigation scenario.

Another example of deep RL applied to mobile robot navigation, in a storage environment, was presented in [78]. The survey data were supplemented by information obtained from LIDAR sensors; the algorithm detected obstacles and performed actions in order to reach the target area. DQN-based path planning for a mobile robot was presented in [79], where information about the environment was extracted from RGB images. In [80], a double DQN model was used for mobile robot collision avoidance.
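The difference between the DQN and double DQN learning targets can be shown with a few lines that operate on the Q-value vectors the online and target networks would output for the next state. The networks themselves are omitted and the numbers are made up; this is a sketch of the standard target formulas, not of the model in [80].

```python
def dqn_target(reward, done, q_target_next, gamma=0.99):
    """DQN: the target network both selects and evaluates the next action."""
    if done:
        return reward
    return reward + gamma * max(q_target_next)


def double_dqn_target(reward, done, q_online_next, q_target_next, gamma=0.99):
    """Double DQN: the online network selects, the target network evaluates."""
    if done:
        return reward
    best = max(range(len(q_online_next)), key=lambda a: q_online_next[a])
    return reward + gamma * q_target_next[best]


# Made-up next-state Q-values for three actions
q_online_next = [1.0, 2.0, 0.5]
q_target_next = [3.0, 1.5, 0.0]
y_dqn = dqn_target(0.0, False, q_target_next, gamma=0.9)
y_ddqn = double_dqn_target(0.0, False, q_online_next, q_target_next, gamma=0.9)
```

Decoupling action selection from evaluation reduces the overestimation bias caused by taking the maximum over noisy estimates: here the plain DQN target uses the (possibly overestimated) value 3.0, while double DQN evaluates the action preferred by the online network.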

In [81], the authors applied the asynchronous advantage actor–critic (A3C) method to mobile robot motion planning in a dynamically changing environment, namely a crowd. The proposed method was evaluated in simulated pedestrian crowds and finally demonstrated in real pedestrian traffic.

In [82], the A2C algorithm proved to be the best choice for robot navigation in terms of learning efficiency and generalization. The state representation learned through A2C achieved satisfactory performance in laboratory experiments on mobile robot navigation.
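In A2C, the critic's value estimates V(s_t) turn sampled returns into advantages A_t = R_t − V(s_t); the actor's loss is then −log π(a_t|s_t)·A_t and the critic is trained to reduce A_t². The sketch below computes bootstrapped n-step returns and advantages for one rollout; the networks are omitted, and the rollout values are made up for illustration.

```python
def discounted_returns(rewards, dones, bootstrap_value, gamma=0.99):
    """n-step returns R_t = r_t + gamma * R_{t+1}, bootstrapped with V(s_T).

    dones[t] is True if the episode ended after step t (no bootstrapping past it).
    """
    returns, running = [], bootstrap_value
    for r, done in zip(reversed(rewards), reversed(dones)):
        running = r + gamma * running * (0.0 if done else 1.0)
        returns.append(running)
    return returns[::-1]


def advantages(rewards, dones, values, bootstrap_value, gamma=0.99):
    """Advantage estimates A_t = R_t - V(s_t) from the critic's predictions."""
    R = discounted_returns(rewards, dones, bootstrap_value, gamma)
    return [ret - v for ret, v in zip(R, values)]


adv = advantages([1.0, 1.0, 1.0], [False, False, False],
                 values=[1.0, 1.0, 1.0], bootstrap_value=0.0, gamma=0.5)
```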

Deep deterministic policy gradient (DDPG) is an RL method applied to controlling the robot's movement in different navigation scenarios. In [83,84], it was applied to the avoidance of dynamic obstacles in complex environments.
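Two ingredients that distinguish DDPG from value-based methods are the slowly tracking target networks, updated by Polyak averaging, and explicit exploration noise added to the deterministic actor's continuous output; the original DDPG formulation used an Ornstein-Uhlenbeck process for this noise. The sketch below shows both on plain lists of parameters. The actor and critic networks are omitted, and `tau`, the noise parameters, and the action limits are illustrative assumptions rather than the settings of [83,84].

```python
import math
import random


def soft_update(target_params, online_params, tau=0.005):
    """Polyak averaging of target networks: theta' <- tau*theta + (1 - tau)*theta'."""
    return [tau * w + (1.0 - tau) * wt for w, wt in zip(online_params, target_params)]


class OUNoise:
    """Ornstein-Uhlenbeck process: temporally correlated exploration noise."""

    def __init__(self, mu=0.0, theta=0.15, sigma=0.2, dt=1.0, x0=0.0, seed=0):
        self.mu, self.theta, self.sigma, self.dt = mu, theta, sigma, dt
        self.x = x0
        self.rng = random.Random(seed)

    def sample(self):
        # dx = theta * (mu - x) * dt + sigma * sqrt(dt) * N(0, 1)
        self.x += (self.theta * (self.mu - self.x) * self.dt
                   + self.sigma * math.sqrt(self.dt) * self.rng.gauss(0.0, 1.0))
        return self.x


def explore(actor_output, noise, low=-1.0, high=1.0):
    """Deterministic actor output plus noise, clipped to the actuator limits."""
    return min(high, max(low, actor_output + noise.sample()))


target = soft_update([0.0, 1.0], [1.0, 1.0], tau=0.1)
```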

Dyna-Q is an RL algorithm used for mobile robot path planning in unfamiliar environments. In the approach presented in [85], it incorporated heuristic search strategies and reactive navigation rules to enhance exploration and learning performance, and the mobile robot was able to find a collision-free path, thus fulfilling the task of autonomous navigation in the real world.
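Plain Dyna-Q interleaves three steps: a direct Q-learning update from each real transition, storing that transition in a learned model, and several extra "planning" updates replayed from the model. The sketch below shows these steps on a hypothetical 3×4 grid with a deterministic tabular model; the grid, rewards, and hyperparameters are assumptions, and the heuristic search and reactive rules of [85] are not reproduced.

```python
import random

GRID = ["S.#.",
        "..#.",
        "...G"]
ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right


def step(s, a):
    """Deterministic dynamics: -1 per move or collision, 0 on reaching the goal."""
    r, c = s[0] + ACTIONS[a][0], s[1] + ACTIONS[a][1]
    if not (0 <= r < len(GRID) and 0 <= c < len(GRID[0])) or GRID[r][c] == "#":
        return s, -1.0, False
    if GRID[r][c] == "G":
        return (r, c), 0.0, True
    return (r, c), -1.0, False


def dyna_q(episodes=30, n_planning=20, alpha=0.5, gamma=0.95, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    Q, model = {}, {}

    def q(s, a):
        return Q.get((s, a), 0.0)

    def update(s, a, r, s2, done):
        target = r if done else r + gamma * max(q(s2, b) for b in range(4))
        Q[(s, a)] = q(s, a) + alpha * (target - q(s, a))

    for _ in range(episodes):
        s = (0, 0)
        for _ in range(200):
            if rng.random() < epsilon:
                a = rng.randrange(4)
            else:
                a = max(range(4), key=lambda b: q(s, b))
            s2, r, done = step(s, a)
            update(s, a, r, s2, done)        # (1) direct RL from real experience
            model[(s, a)] = (r, s2, done)    # (2) model learning
            for _ in range(n_planning):      # (3) planning with simulated experience
                (ps, pa), (pr, ps2, pdone) = rng.choice(list(model.items()))
                update(ps, pa, pr, ps2, pdone)
            s = s2
            if done:
                break
    return Q


def greedy_path(Q, start=(0, 0), max_len=20):
    path, s = [start], start
    for _ in range(max_len):
        a = max(range(4), key=lambda b: Q.get((s, b), 0.0))
        s, _, done = step(s, a)
        path.append(s)
        if done:
            break
    return path


path = greedy_path(dyna_q())
```

The planning loop lets each real interaction be reused many times, which is why Dyna-style methods typically need fewer real episodes than plain Q-learning; that property matters when every real trial costs robot time.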

4.2. Reinforcement Learning for Motion Control and Other Tasks

In [86], the authors presented a lightweight RL method, called the mean-asynchronous advantage actor–critic (M-A3C), for real-time gait planning of bipedal robots. In this approach, the robot interacts with the environment, evaluates its control actions, and adjusts its control strategy based on its walking state. The proposed method achieved continuous and stable gait planning for a bipedal robot, as demonstrated in various experiments.

In [87], the authors proposed a convolutional proximal policy optimization network for mapless mobile robot navigation. In [88], the PPO algorithm was applied to accelerate the convergence of the training process and to reduce vibrations in a mobile robotic arm.
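What PPO optimizes can be illustrated with its clipped surrogate objective, which limits how far the probability ratio between the new and old policies can move in one update. The sketch below evaluates the objective from log-probabilities and advantages; the policy network, optimizer, and `clip_eps=0.2` are assumptions, not details of [87,88].

```python
import math


def ppo_clip_objective(new_logp, old_logp, advantages, clip_eps=0.2):
    """Mean clipped surrogate L = E[min(r * A, clip(r, 1 - eps, 1 + eps) * A)]."""
    total = 0.0
    for lp_new, lp_old, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(lp_new - lp_old)          # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        total += min(ratio * adv, clipped * adv)   # pessimistic (lower) bound
    return total / len(advantages)


# A ratio of 1.5 with a positive advantage is capped at 1.2 by the clipping
gain = ppo_clip_objective([math.log(1.5)], [0.0], [1.0])
```

Taking the minimum means a large policy change is never rewarded beyond the clip range, while a change that makes a bad action more likely is still fully penalized.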

In [89], the authors successfully implemented the DDPG algorithm for trajectory tracking of a skid-steered wheeled robot, training the agent with RL without supervision. The effectiveness of the trained policy was demonstrated in dynamic model simulations that included ground force interactions, and the trained system met the requirement of keeping within a specified distance of the reference paths. This research demonstrated the effectiveness of DDPG in attaining collision-free trajectories, reducing path distance, and improving movement time in various robotic applications.

Model-based RL has been applied to learning models of the world and to achieving long-term goals. Guided Dyna-Q was developed to reason about action knowledge and to improve exploration strategies [90]. The deep Dyna-Q algorithm was applied to formation control in a cooperative multi-agent system, where it accelerated the learning process and improved the formation achievement rate; this approach was verified in simulations and in real-world conditions [91].

Model predictive control (MPC) is a control strategy increasingly used in robotics, especially for mobile robots. MPC enables optimal control under constraints, for example, in real-time collision avoidance. It can be applied to control the movement of mobile robots in real time while taking their dynamics and kinematics into account [92]. MPC-based motion-planning solutions were evaluated using various models, including holonomic and non-holonomic kinematic and dynamic models, both in simulations and in real-world experiments [93]. MPC was also applied to dynamic object tracking, allowing the mobile robot to track and respond to moving objects while ensuring the safety of the process [94]. In addition, MPC was integrated with trajectory planning and control of autonomous mobile robots in workspaces shared with humans, subject to energy efficiency, safety, and human-aware proximity constraints.
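At every control step, MPC solves a finite-horizon optimal control problem from the current state, applies only the first control input, and repeats the optimization at the next step (receding horizon). The sketch below shows this loop in a deliberately simple form, assuming a holonomic single-integrator robot, one circular obstacle treated as a hard constraint, and a small set of discrete velocity commands enumerated exhaustively over a three-step horizon. Practical MPC implementations such as those in [92,93,94] typically solve a continuous constrained optimization problem instead; all names and numbers here are illustrative.

```python
import itertools
import math

DT = 0.5
CONTROLS = [(vx, vy) for vx in (-1.0, 0.0, 1.0) for vy in (-1.0, 0.0, 1.0)]


def mpc_step(x, goal, obstacle, radius, horizon=3, w_u=0.01):
    """Return the first control of the cheapest feasible sequence over the horizon."""
    best_cost, best_u = math.inf, (0.0, 0.0)
    for seq in itertools.product(CONTROLS, repeat=horizon):
        px, py, cost, feasible = x[0], x[1], 0.0, True
        for vx, vy in seq:
            px, py = px + DT * vx, py + DT * vy        # holonomic kinematics
            if math.dist((px, py), obstacle) < radius:  # hard collision constraint
                feasible = False
                break
            # Stage cost: squared distance to goal plus a small control penalty
            cost += math.dist((px, py), goal) ** 2 + w_u * (vx * vx + vy * vy)
        if feasible and cost < best_cost:
            best_cost, best_u = cost, seq[0]
    return best_u


def run(start, goal, obstacle, radius, steps=30):
    x, traj = start, [start]
    for _ in range(steps):
        u = mpc_step(x, goal, obstacle, radius)    # re-plan at every step
        x = (x[0] + DT * u[0], x[1] + DT * u[1])   # apply only the first control
        traj.append(x)
    return traj


# The obstacle sits on the straight line to the goal, so the robot must detour
traj = run(start=(0.0, 0.0), goal=(4.0, 4.0), obstacle=(2.0, 2.0), radius=0.6)
```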

During model training, reusing the experience gathered in previous trials is very important. Experience replay, in which past transitions are stored and sampled again for training, is used in RL to improve learning performance, and its hindsight variant also addresses the problem of sparse rewards. In [95], the authors presented a method combining simple reward engineering and hindsight experience replay (HER) to overcome the sparse reward problem in the autonomous driving of a mobile robot. Another example was the use of HER and curriculum learning (CL) to learn optimal navigation rules in dense crowds without additional demonstration data [96]. In [97], the authors demonstrated an accelerated RL algorithm based on experience replay, which improved RL performance in the mobile robot navigation task.
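The idea behind HER can be sketched in a few lines: a failed episode is stored once with its original goal and again with goals replaced by positions the robot actually reached later in the same episode (the "future" relabelling strategy), so some stored transitions carry a success reward even though the true goal was never reached. The 2-D positions, the reward tolerance, and `k` are illustrative assumptions, not settings from [95,96].

```python
import math
import random


def sparse_reward(achieved, goal, tol=0.2):
    """Sparse goal reward: 0 if within tol of the goal, otherwise -1."""
    return 0.0 if math.dist(achieved, goal) <= tol else -1.0


def her_relabel(episode, k=2, seed=0):
    """Hindsight relabelling with the 'future' strategy.

    episode: list of (state, action, next_state, goal) tuples with 2-D positions.
    Returns (state, action, next_state, goal, reward) tuples for the replay buffer.
    """
    rng = random.Random(seed)
    buffer = []
    for t, (s, a, s_next, goal) in enumerate(episode):
        buffer.append((s, a, s_next, goal, sparse_reward(s_next, goal)))
        for _ in range(k):
            # Pretend a position reached at this or a later step was the goal
            new_goal = episode[rng.randrange(t, len(episode))][2]
            buffer.append((s, a, s_next, new_goal, sparse_reward(s_next, new_goal)))
    return buffer


# A failed episode: the robot drives along x and never reaches the goal (5, 5)
positions = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
episode = [(positions[i], "forward", positions[i + 1], (5.0, 5.0)) for i in range(3)]
replay = her_relabel(episode)
```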

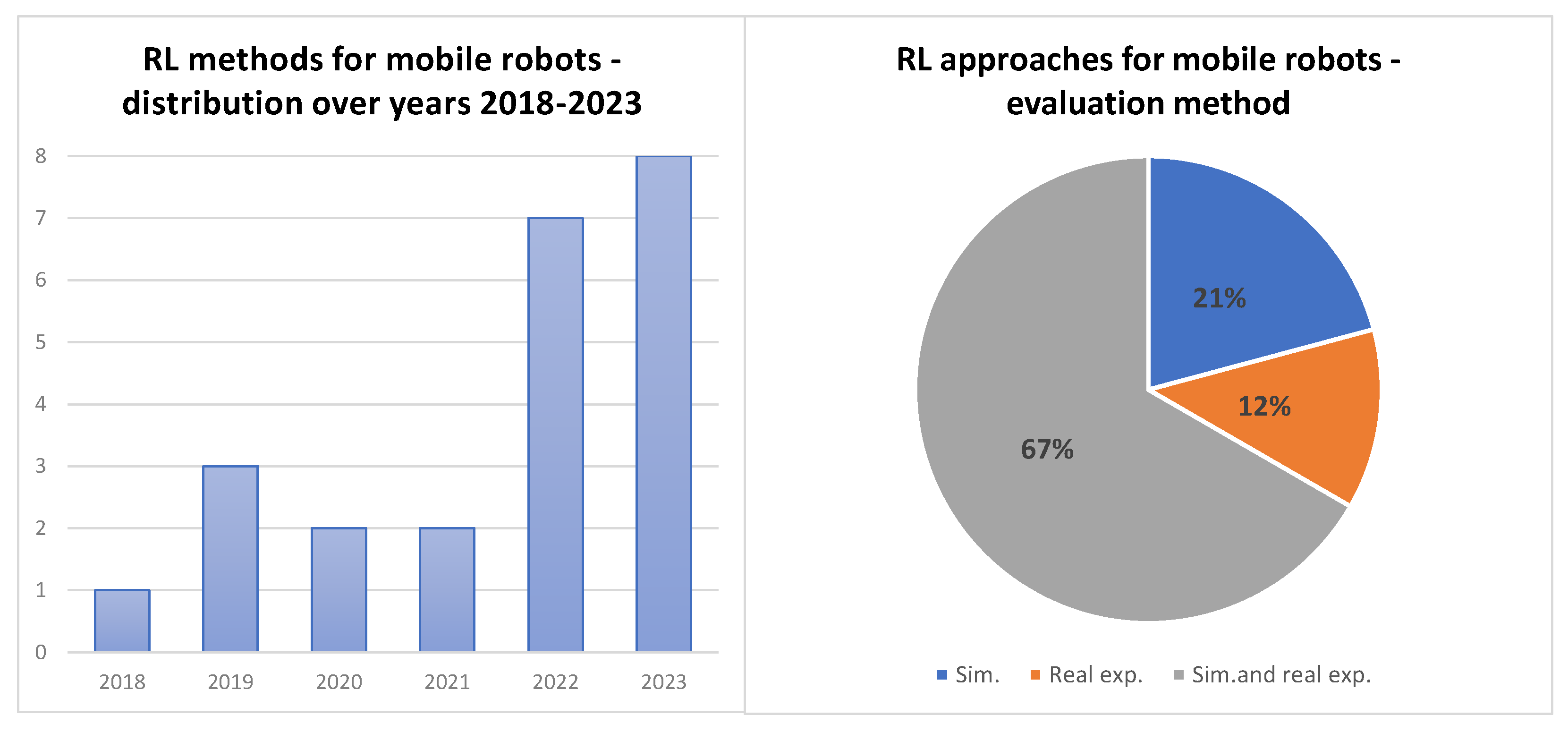

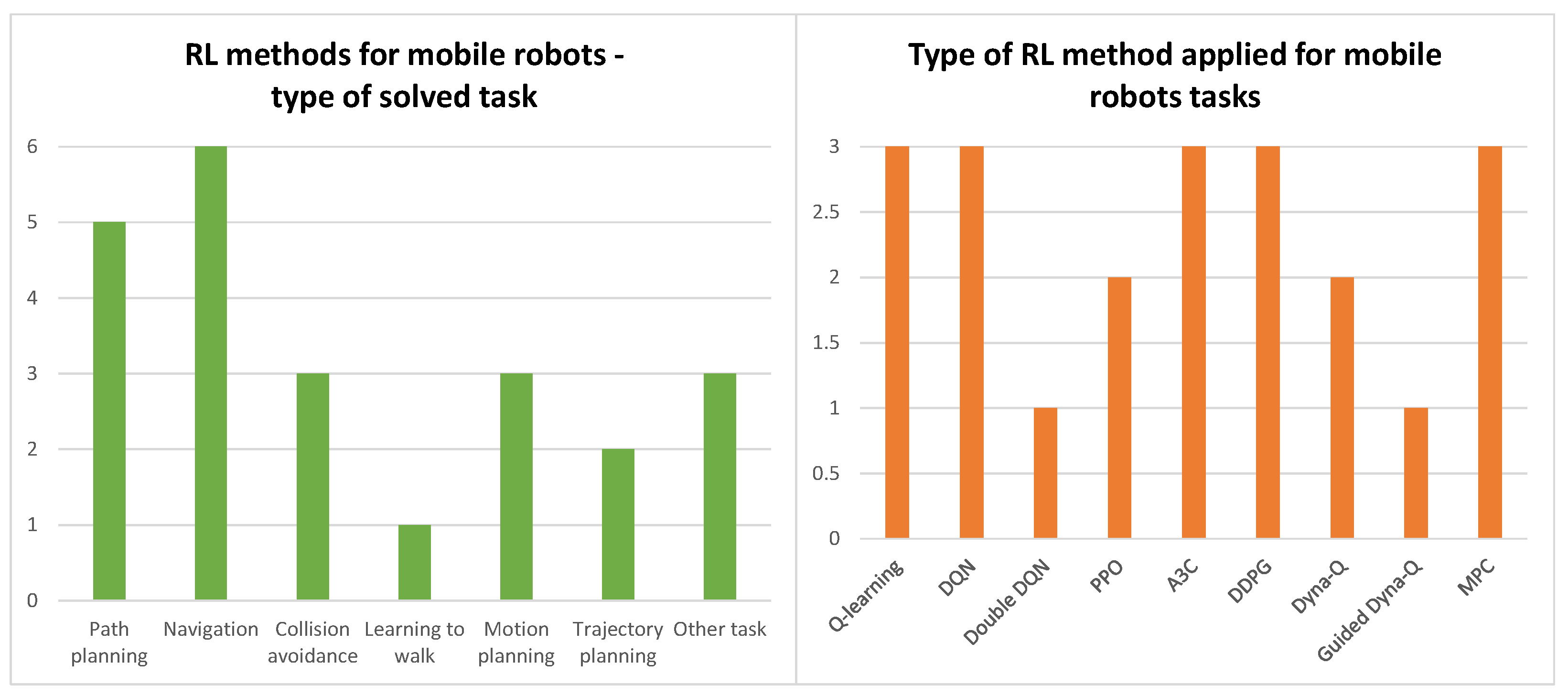

The charts presenting the statistics of the RL algorithms applied in mobile robotics are shown in Figure 11 and Figure 12.

The approaches listed in Table 5 treat the learned model differently from those in the previous sections, because of the interaction that occurs between the agent, the policy it follows to learn the environment, and the dataset it generates. In the RL architecture, the model is trained in a way intended to avoid overtraining.

The analysis of recent RL-based approaches for mobile robots showed that these methods were applied for the following:

5. Semi-Supervised Learning Approaches

This section provides an overview of the semi-supervised learning methods used in mobile robotics in the years 2003–2021.

Semi-supervised learning (SSL) combines the assumptions of supervised and unsupervised learning and works on datasets containing both labeled and unlabeled data. Its main advantage is that the model's performance can be improved by combining the labeled data with additional information extracted from the unlabeled data. Semi-supervised learning is applied in areas such as natural language processing, computer vision, and speech recognition, and it relies on different techniques. In self-training, the model is first trained on the labeled data; it then predicts labels for the unlabeled data, and the most confident predictions (pseudo-labels) are added to the labeled dataset before the model is retrained. Co-training involves training multiple models on different feature sets or subsets of the data: each model is trained on the labeled data and then predicts labels of the unlabeled data, which are used to extend the training data of the other models. There also exist multi-view learning methods, which use multiple perspectives of the data, such as different feature sets or data representations, to improve the performance of the developed model.
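The self-training loop described above can be sketched with a deliberately simple base model. Here a nearest-centroid classifier stands in for the model, and the gap between the distances to the two nearest centroids serves as the confidence measure; the base model, the confidence threshold, the class names, and the 2-D toy features are all illustrative assumptions.

```python
import math


def fit_centroids(X, y):
    """Nearest-centroid 'model': the mean feature vector of every class."""
    groups = {}
    for x, label in zip(X, y):
        groups.setdefault(label, []).append(x)
    return {c: [sum(col) / len(pts) for col in zip(*pts)] for c, pts in groups.items()}


def predict(centroids, x):
    """Return (label, margin); margin = gap between the two nearest centroids."""
    d = sorted((math.dist(x, m), c) for c, m in centroids.items())
    margin = d[1][0] - d[0][0] if len(d) > 1 else math.inf
    return d[0][1], margin


def self_train(X_lab, y_lab, X_unlab, threshold=1.0, max_rounds=10):
    X, y, pool = list(X_lab), list(y_lab), list(X_unlab)
    for _ in range(max_rounds):
        model = fit_centroids(X, y)                  # (1) train on the labeled set
        preds = [(x, *predict(model, x)) for x in pool]
        confident = [(x, c) for x, c, m in preds if m >= threshold]
        if not confident:
            break
        for x, c in confident:                       # (2) accept confident pseudo-labels
            X.append(x)
            y.append(c)
        pool = [x for x, _, m in preds if m < threshold]  # (3) keep the rest unlabeled
    return fit_centroids(X, y), list(zip(X, y))[len(X_lab):]


labeled = [(0.0, 0.0), (10.0, 10.0)]
labels = ["grass", "gravel"]
unlabeled = [(1.0, 0.0), (0.0, 1.0), (9.0, 10.0), (10.0, 9.0), (5.0, 5.2)]
model, pseudo = self_train(labeled, labels, unlabeled)
```

The ambiguous sample halfway between the classes never passes the confidence threshold and stays unlabeled, which limits the error propagation that is the main risk of self-training.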

Semi-supervised learning methods are considerably less common in the current mobile robotics literature than the supervised, unsupervised, and reinforcement learning approaches.

Table 6 presents a comparative analysis of recent semi-supervised learning algorithms proposed for solving different problems in mobile robotics.

5.1. Semi-Supervised Learning for Robot Localization

One of the tasks to which SSL methods are applied is robot localization. In [100], the authors proposed a deep learning-based semi-supervised model built on a variational autoencoder (VAE) for indoor localization. The approach was verified on two real-world datasets, and the results showed that the VAE-based model outperformed other conventional machine learning and deep learning methods.

In the work of [102], the authors proposed an SSL algorithm for indoor localization. In this approach, Laplacian embedded regression least square (LapERLS) was first applied for pseudo-labeling. Next, time-series regularization was applied to sort the dataset in chronological order. Then, Laplacian least square (LapLS), which combines manifold regularization and the transductive support vector machine (TSVM) method, was applied. The approach was verified by real experiments with a smartphone-based mobile robot for the estimation of unknown locations.

In [103], the authors introduced the kernel principal component analysis (KPCA)-regularized least-squares (RLS) algorithm for robot localization with uncalibrated monocular visual information. The approach also utilized semi-supervised learning. The method was verified with a wheeled mobile robot equipped with a stereo camera and a wireless adapter: one computer tracked the robot and registered its positions, while another controlled the robot's movement along a defined path. The authors stated that their "online localization algorithm outperformed the state-of-the-art appearance-based SLAM algorithms at a processing rate of 30 Hz for new data on a standard PC with a camera".

5.2. Semi-Supervised Learning for Terrain Classification

Another task solved with semi-supervised learning is terrain classification. In [99], the authors proposed semi-supervised gated recurrent neural networks (RNN) for terrain classification with a quadruped robot. The method was verified in experiments on real-world datasets and compared with a supervised learning approach; the semi-supervised model outperformed the supervised model when only small amounts of labeled data were available. In [101], the authors proposed a terrain classification approach based on visual image processing and a semi-supervised multimodal deep network (SMMDN). The presented simulation results showed that the SMMDN improved the perception and recognition abilities of mobile robots in complex outdoor environments.

5.3. Semi-Supervised Learning for Motion Control and Other Tasks

In [98], a semi-supervised approach called semi-supervised imitation learning (SSIL) was proposed for the development of a trajectory controller and was tested on an RC car. In [104], the authors presented a semi-supervised method for clustering the robot's experiences using dynamic time warping (DTW). The method was evaluated in real-world experiments with the Pioneer 2 robot, and the authors reported 91% accuracy in the classification of high-dimensional robot time series.
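DTW measures the similarity of two time series that may differ in speed or length by finding the cheapest monotonic alignment between them, which makes it suitable for comparing robot experiences recorded at different paces. Below is the standard dynamic-programming formulation for 1-D series, plus a hypothetical nearest-neighbour labelling helper; the semi-supervised clustering procedure of [104] itself is not reproduced, and the sensor traces and labels are made up.

```python
import math


def dtw_distance(a, b, dist=lambda x, y: abs(x - y)):
    """Dynamic time warping distance between sequences a and b."""
    n, m = len(a), len(b)
    D = [[math.inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = dist(a[i - 1], b[j - 1])
            # Extend the cheapest of: insertion, deletion, or match
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    return D[n][m]


def nearest_label(series, labeled):
    """Hypothetical helper: label of the DTW-nearest labeled example."""
    return min(labeled, key=lambda item: dtw_distance(series, item[0]))[1]


# Made-up sensor traces; the query is a slowed-down version of the "rising" one
examples = [([0, 0, 1, 1], "rising"), ([1, 1, 0, 0], "falling")]
label = nearest_label([0, 0, 0, 1, 1, 1], examples)
```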

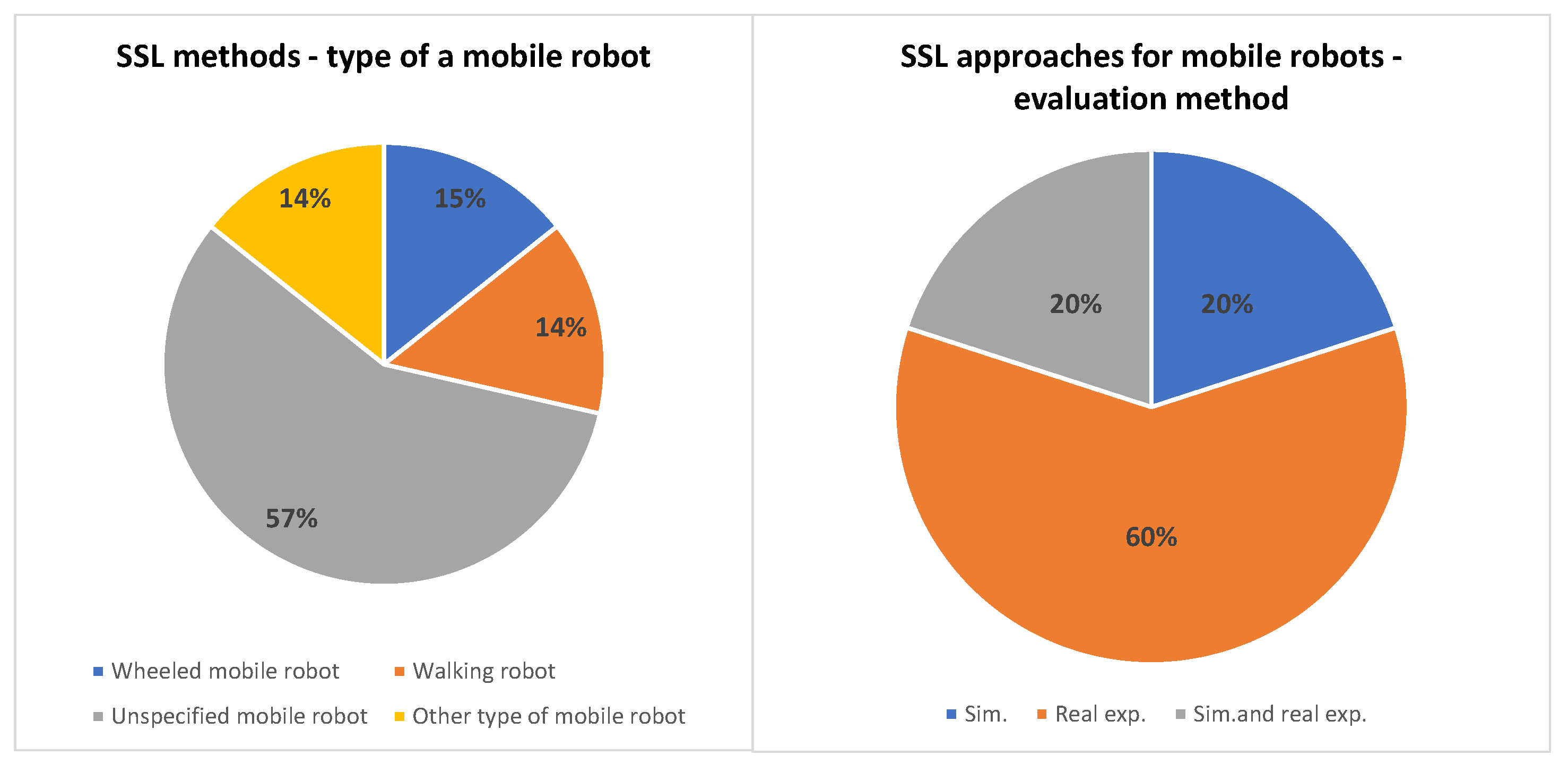

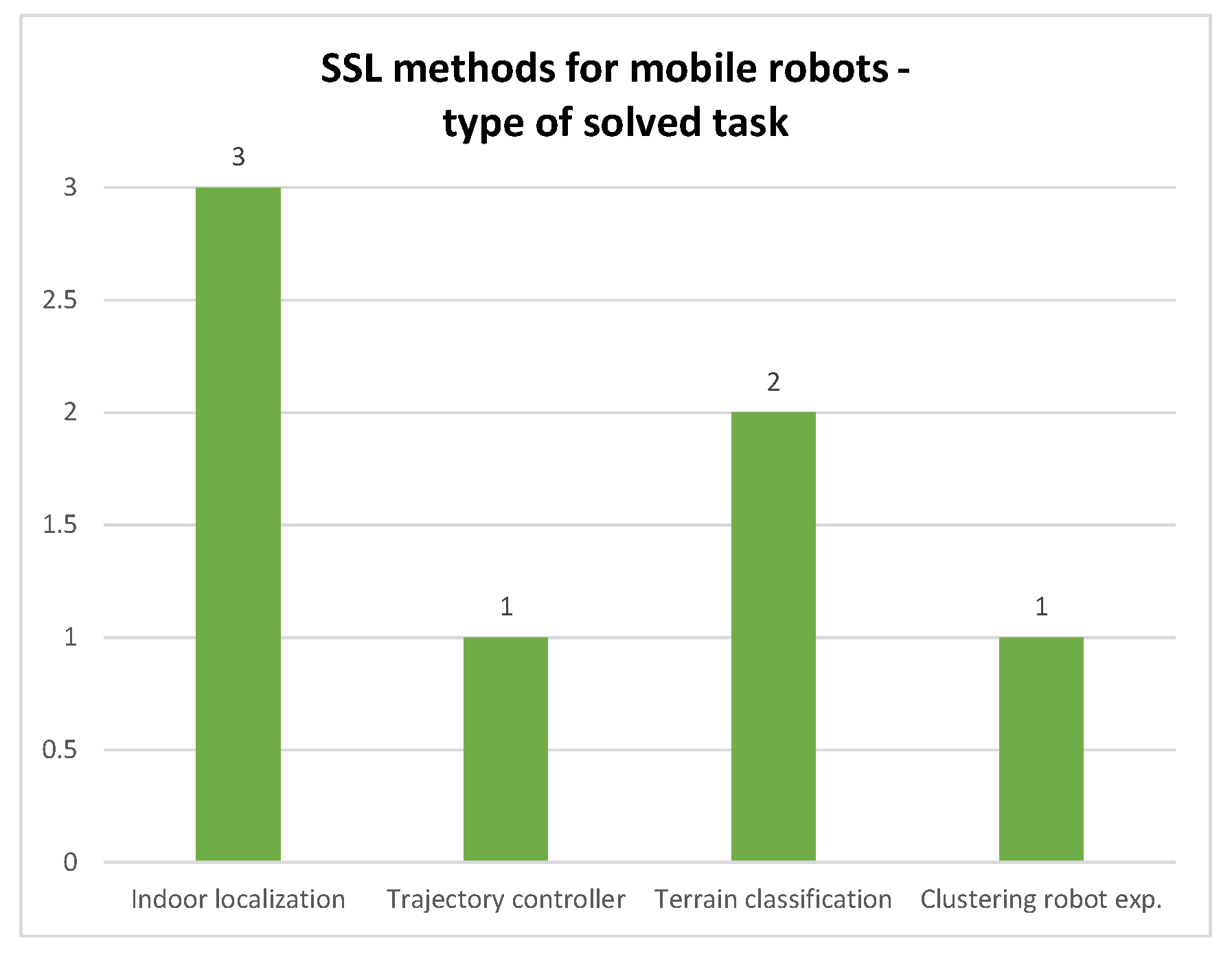

The charts presenting the statistics of the SSL algorithms applied in mobile robotics are shown in Figure 13 and Figure 14.

The analysis of recent approaches for mobile robots based on semi-supervised learning allowed us to conclude that these methods were applied for the following:

Object detection and recognition, specifically terrain classification;

Simultaneous Localization and Mapping (SLAM), specifically indoor and outdoor localization;

Motion control, namely the development of a trajectory controller;

Clustering of the robot's experiences in order to construct a model of its interaction with the environment.

6. Open Issues and Future Prospects

The review of machine learning methods applied to different tasks associated with the control of mobile robots showed that this topic covers a wide range of issues and proposed approaches.

The main challenges and open issues related to this topic are stated below. Reconciling complex machine learning algorithms with the limited computational resources of the onboard control system of a mobile robot constitutes a challenge. Applying machine learning algorithms that enable the mobile robot to make decisions and control its motion in real time is also challenging. The adaptability of the algorithms to dynamic, changing environments is another very demanding open issue.

Many machine learning algorithms need a large amount of data to achieve satisfactory performance, and acquiring large volumes of valuable training data can be difficult. In such cases, a solution might be to develop effective algorithms that can learn from limited or unlabeled data. Another issue is the robustness and generalization of the models, which are essential for the reliable operation of mobile robots.

Another challenge, especially for self-learning algorithms, is ensuring the safety and reliability of the robot's operation in various environments and situations. This is particularly important when the robot interacts with humans.

The review revealed relationships between specific machine learning methods or groups of methods and the associated mobile robotics tasks. One of the most noticeable is the application of reinforcement learning methods to mobile robot path planning. However, this does not mean that RL is the ideal method for this task or that other approaches are useless.

According to the authors, one of the significant future prospects is the development of self-learning algorithms for mobile robots performing different tasks, especially robots replacing humans in dangerous and/or physically exhausting activities. An important aspect to be considered in future research is ensuring safe and reliable operation. The development of nature-inspired robots, for example, walking robots, that implement ML methods also constitutes a demanding open research task.

7. Conclusions

The paper presents a comprehensive review of different machine learning methods applied in mobile robotics. The survey is divided into sections dedicated to the subgroups of ML approaches: supervised learning (SL), unsupervised learning (UL), reinforcement learning (RL), and semi-supervised learning (SSL).

The reviewed literature covered the years 2003–2023 and was drawn from the following scientific databases: Scopus, Web of Science, IEEE Xplore, and ScienceDirect. The works were primarily evaluated in terms of the applied method (SL, UL, RL, or SSL) and the considered task, where the main problems solved were environment mapping, robot localization, SLAM, sensor data dimensionality reduction, fault detection, trajectory controller design, obstacle clustering, and path planning.

Each approach was also classified by the control object for which the problem was solved. In this criterion, mobile robots were distinguished as wheeled mobile robots or legged robots, such as quadruped and hexapod robots. In some works, the exact mobile platform was not specified; in such cases, the control object was defined as a mobile robot without further specification. The overview of the ML approaches also included the verification method: simulations, real experiments, simulations with real-world datasets, or both simulations and real experiments.

The above-described analysis allowed the authors to formulate the following concluding remarks:

Supervised machine learning is often used in mobile robotics for a variety of tasks; the literature review shows that both regression and classification methods were applied to navigation, terrain recognition, and obstacle avoidance tasks;

In the authors' view, supervised learning algorithms are less useful than other methods for learning to walk and for path planning;

An interesting study was that of Zhang [35,37], which used random forest and ANN algorithms to examine the terrain on which the mobile robot was moving; the results showed that such terrain classification is useful for making decisions during the robot's movement;

Reinforcement learning provides many examples of approaches used for learning the environment;

Mobile robotics approaches utilizing semi-supervised learning methods are less common than those based on other ML algorithms;

Most of the works considered problems related to wheeled mobile robots, while solutions for walking robots are less common;

Many methods concentrated on tasks such as position estimation or environment mapping; a less extensively explored application area is the development of ML-based methods for self-learning robots that enhance their autonomy;

To sum up, ML is characterized by significant implementation potential in many aspects of robotic tasks and problems.