A Meteor Detection Algorithm for GWAC System

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset

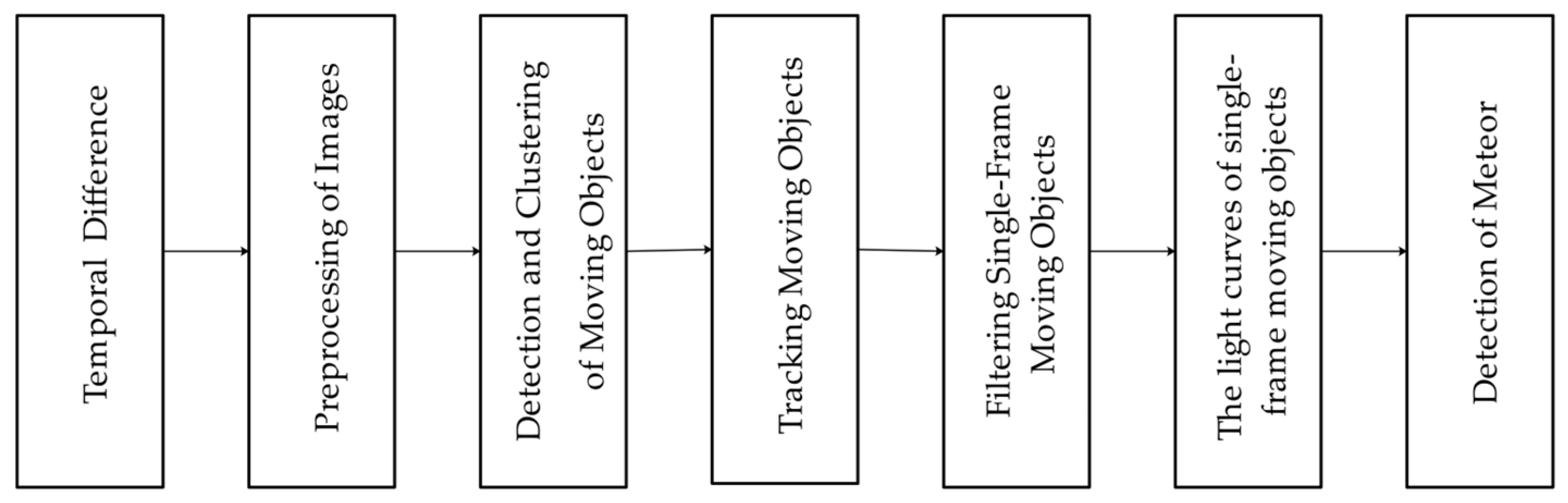

2.2. Meteor Detection Model

2.2.1. Temporal Difference

2.2.2. Preprocessing of Images

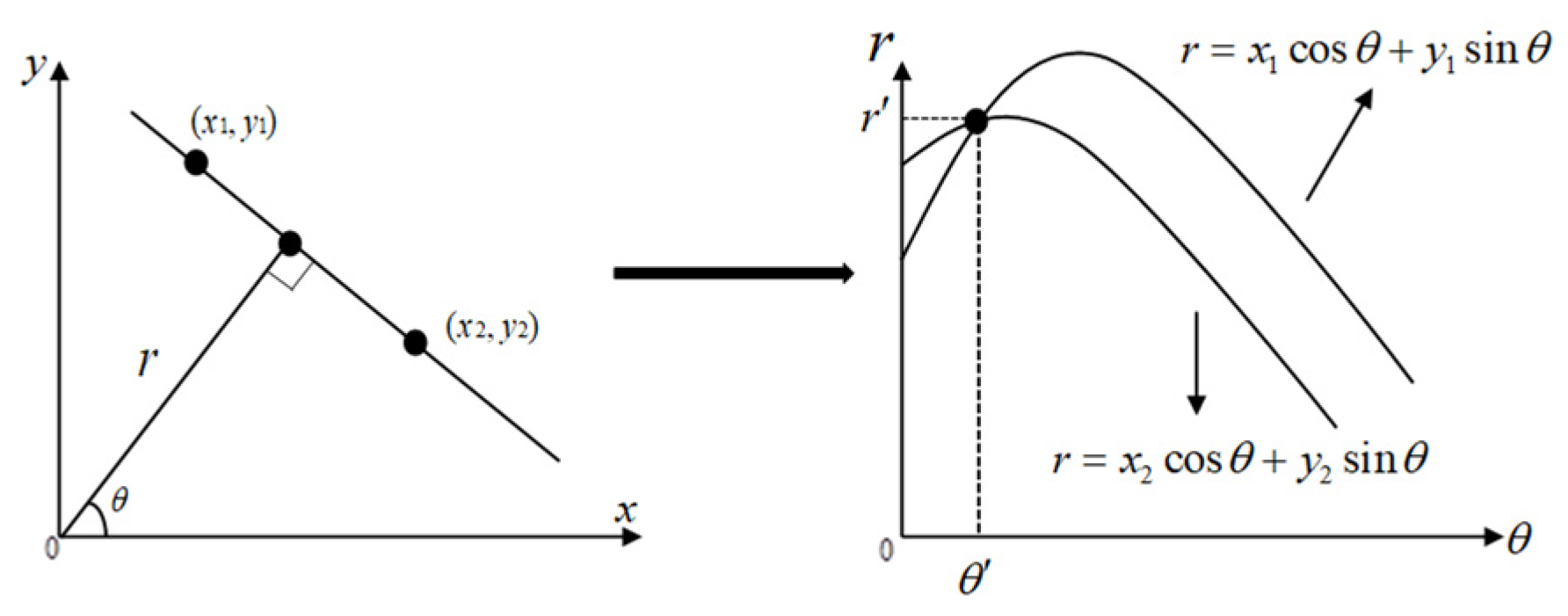

2.2.3. Detection and Clustering of Moving Objects

1. A new clustering object set is initialized from the first line segment in the line segment set, or from a line segment that has not been matched. The segment supplies the set's origin moment, average origin moment, two position coordinates, inclination angle, average inclination angle and inclination-angle variance.

2. For each line segment in the line segment set, the distance between its origin moment and the average origin moment of each clustering object set is calculated in turn. If the distance is less than the maximum distance error (MDE), the line segment is assigned to that clustering object set and the set's statistics are updated. If the line segment matches no clustering object set, step 1 is executed to add a new clustering object set.

3. When all the line segments in the line segment set have been clustered, the reliability of each clustering object set is judged by the variance of the inclination angles of its line segments.

4. If the variance of the inclination angles of a clustering object set is less than the maximum inclination angle error (MIAE), the set is accepted as a successful cluster. Otherwise, the clustering fails. For each line segment of a failed clustering object set, the error between its inclination angle and the average inclination angle of each clustering object set is then calculated in turn. If this error is less than MIAE, the line segment is assigned to that clustering object set. Otherwise, step 1 is executed to add a new clustering object set.

5. Each clustering object set represents one moving object. The longest line segment in the set is taken as the longest trajectory of that moving object, and its two position coordinates are recorded as the object's position.
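
The five clustering steps can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. The section does not define the origin moment, so the sketch assumes it is the perpendicular distance from the image origin to the line through the segment. Three further assumptions apply:

- Inclination angles are measured in degrees and folded into [0, 180).
- Segments from failed sets are reassigned only against accepted sets and against sets created during reassignment.
- Following the text literally, the inclination-angle variance is compared directly with MIAE.

The names `Segment`, `Cluster` and `cluster_segments` are hypothetical.

```python
from __future__ import annotations

import math
import statistics
from dataclasses import dataclass


@dataclass
class Segment:
    """A detected line segment given by its two position (endpoint) coordinates."""
    x1: float
    y1: float
    x2: float
    y2: float

    @property
    def length(self) -> float:
        return math.hypot(self.x2 - self.x1, self.y2 - self.y1)

    @property
    def angle(self) -> float:
        # Inclination angle in degrees, folded into [0, 180) (assumed convention).
        return math.degrees(math.atan2(self.y2 - self.y1, self.x2 - self.x1)) % 180.0

    @property
    def origin_moment(self) -> float:
        # ASSUMPTION: "origin moment" is taken as the perpendicular distance from
        # the image origin (0, 0) to the line through a non-degenerate segment.
        return abs(self.x1 * self.y2 - self.y1 * self.x2) / self.length


@dataclass
class Cluster:
    """A clustering object set; its statistics are recomputed from its members."""
    segments: list[Segment]

    @property
    def mean_moment(self) -> float:
        return statistics.fmean(s.origin_moment for s in self.segments)

    @property
    def mean_angle(self) -> float:
        # Plain arithmetic mean; angles straddling the 0/180 wrap are not handled.
        return statistics.fmean(s.angle for s in self.segments)

    @property
    def angle_variance(self) -> float:
        return statistics.pvariance([s.angle for s in self.segments])


def cluster_segments(segments: list[Segment], mde: float, miae: float) -> list[Segment]:
    """Return the longest segment (trajectory) of each clustered moving object."""
    clusters: list[Cluster] = []
    # Steps 1-2: join the first set whose average origin moment is within MDE,
    # otherwise open a new set.
    for seg in segments:
        for c in clusters:
            if abs(seg.origin_moment - c.mean_moment) < mde:
                c.segments.append(seg)
                break
        else:
            clusters.append(Cluster([seg]))

    # Steps 3-4: accept sets whose angle variance is below MIAE; reassign the
    # members of failed sets by inclination angle alone.
    accepted = [c for c in clusters if c.angle_variance < miae]
    failed = [c for c in clusters if c.angle_variance >= miae]
    for c in failed:
        for seg in c.segments:
            for target in accepted:
                if abs(seg.angle - target.mean_angle) < miae:
                    target.segments.append(seg)
                    break
            else:
                accepted.append(Cluster([seg]))

    # Step 5: the longest segment stands for each moving object's trajectory.
    return [max(c.segments, key=lambda s: s.length) for c in accepted]
```

With `mde=5.0` and `miae=5.0`, the input `[Segment(0, 10, 20, 10), Segment(30, 10, 60, 10), Segment(50, 0, 50, 40)]` puts the two collinear fragments on y = 10 into one set. The vertical segment at x = 50 forms a second set, so two trajectories are returned.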

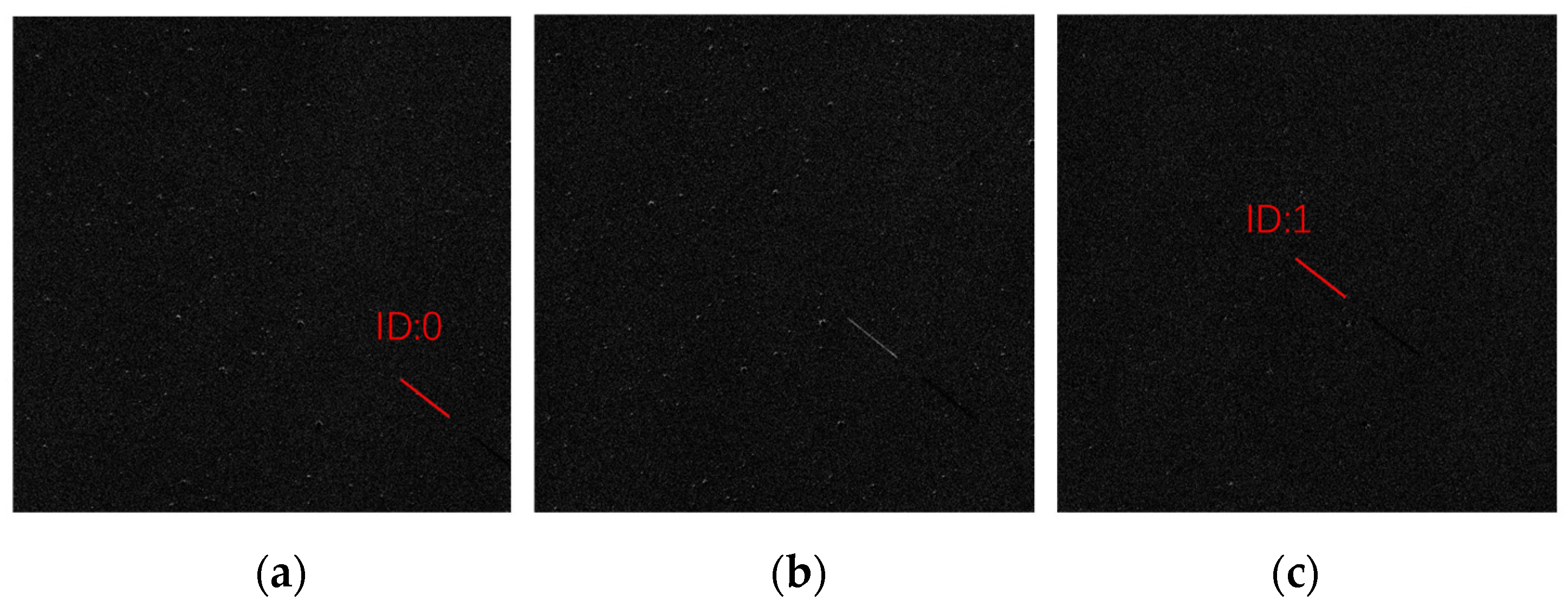

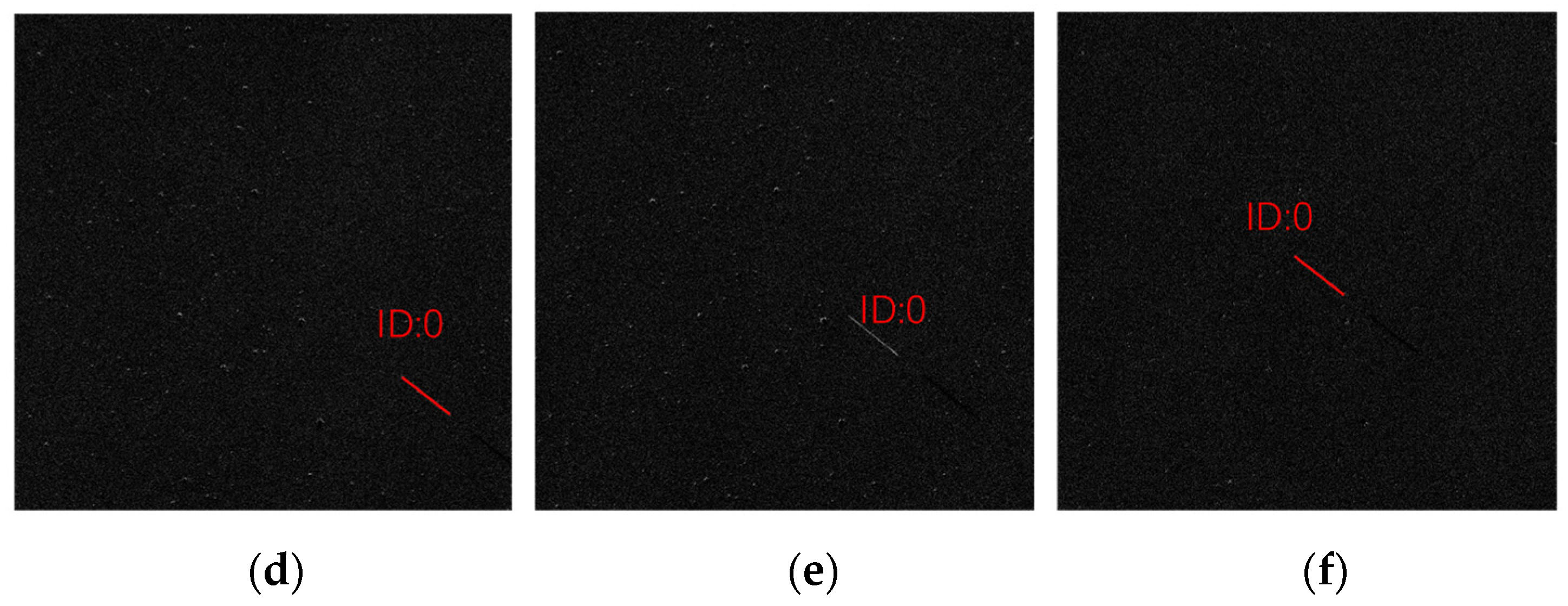

2.2.4. Tracking Moving Objects and Filtering Single-Frame Moving Objects

6. The position coordinates, the inclination angle and the midpoint coordinate of each moving object are obtained in every frame. In the first frame, each moving object is assigned an initial ID.

7. It is first necessary to determine whether the moving objects in the current frame match those in the previous frames.

8. We calculate the inclination angles of the line segments formed by pairing the midpoint of each moving object in the current frame with the midpoint of each moving object in the tracking object set, and arrange these angles in a matrix of inclination angles.

9. Each row of this matrix corresponds to a moving object in the current frame, and each column corresponds to a moving object in the tracking object set. The matrix therefore has as many rows as there are moving objects in the current frame and as many columns as there are moving objects in the tracking object set.

10. We then update the IDs and position coordinates of the moving objects in the current frame. The inclination angle of a moving object in the tracking object set indicates its movement direction in the next frame. The inclination angles in that object's column of the matrix give its actual movement directions toward each moving object in the current frame. We find the moving object in the current frame whose angle in this column is nearest to the tracked object's inclination angle and calculate the inclination-angle error between the two. If this error is less than MIAE, the moving object in the current frame is successfully matched with the moving object in the tracking object set. The two are regarded as the same object and assigned the same ID, and the appearance count of this moving object is incremented by 1.

11. Moving objects in the tracking object set that are not matched are marked as disappeared. When the number of frames in which an object has been missing exceeds the maximum disappearance frame (MDF), it is removed from the tracking object set.

12. Moving objects in the current frame that are not matched are marked as newly appeared and assigned new IDs. They are added to the tracking object set along with their position coordinates and inclination angles, and their appearance count is incremented by 1.

13. Finally, single-frame moving objects are filtered. When a moving object is marked as disappeared and the number of frames it has been missing exceeds MDF, it is regarded as a single-frame moving object if its appearance count is 1.
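
Steps 6 to 13 can be sketched in Python as a small tracker. The sketch assumes each moving object is summarized by the midpoint of its trajectory and its inclination angle in degrees. `Tracker`, `Track`, `line_angle` and `angle_error` are hypothetical names, and three details not specified in the text are assumptions:

- Inclination angles are treated as undirected and compared modulo 180°.
- A detection already matched to one tracked object is not offered to another.
- Tracks are processed in list order.

As in the text, matching uses the angle alone, not the distance between midpoints.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


def line_angle(p: tuple[float, float], q: tuple[float, float]) -> float:
    """Inclination (degrees, folded into [0, 180)) of the line from p to q."""
    return math.degrees(math.atan2(q[1] - p[1], q[0] - p[0])) % 180.0


def angle_error(a: float, b: float) -> float:
    """Smallest difference between two undirected inclination angles (assumed)."""
    d = abs(a - b) % 180.0
    return min(d, 180.0 - d)


@dataclass
class Track:
    tid: int                  # object ID
    mid: tuple[float, float]  # midpoint of the object's trajectory
    angle: float              # inclination angle = predicted movement direction
    count: int = 1            # appearance count
    missing: int = 0          # consecutive frames without a match


class Tracker:
    def __init__(self, miae: float, mdf: int) -> None:
        self.miae, self.mdf = miae, mdf
        self.tracks: list[Track] = []       # the tracking object set
        self.next_id = 0
        self.single_frame: list[int] = []   # IDs judged to be single-frame objects

    def update(self, detections: list[tuple[tuple[float, float], float]]) -> None:
        """Process one frame; each detection is (midpoint, inclination angle)."""
        # Step 8: A[i][j] = inclination of the line from track j's midpoint
        # to detection i's midpoint (rows: detections, columns: tracks).
        A = [[line_angle(t.mid, mid) for t in self.tracks] for mid, _ in detections]
        used_det: set[int] = set()
        used_trk: set[int] = set()
        # Step 10: in column j, pick the unused detection whose angle is nearest
        # to track j's own inclination angle; accept it if the error < MIAE.
        for j, t in enumerate(self.tracks):
            candidates = [i for i in range(len(detections)) if i not in used_det]
            if not candidates:
                continue
            best = min(candidates, key=lambda i: angle_error(A[i][j], t.angle))
            if angle_error(A[best][j], t.angle) < self.miae:
                used_det.add(best)
                used_trk.add(j)
                t.mid, t.angle = detections[best]
                t.count += 1
                t.missing = 0
        # Steps 11 and 13: age unmatched tracks, drop them once missing > MDF,
        # and flag those that appeared only once as single-frame objects.
        kept: list[Track] = []
        for j, t in enumerate(self.tracks):
            if j not in used_trk:
                t.missing += 1
                if t.missing > self.mdf:
                    if t.count == 1:
                        self.single_frame.append(t.tid)
                    continue
            kept.append(t)
        # Steps 6 and 12: unmatched detections start new tracks with new IDs.
        for i, (mid, ang) in enumerate(detections):
            if i not in used_det:
                kept.append(Track(self.next_id, mid, ang))
                self.next_id += 1
        self.tracks = kept
```

In this sketch, an object that keeps moving along its own trail direction (for example, a satellite) accumulates an appearance count above 1 and is never flagged. An object seen in only one frame is flagged once it has been missing for more than `mdf` frames. Tracks still alive when the image sequence ends are not flagged, which is a limitation of the sketch.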

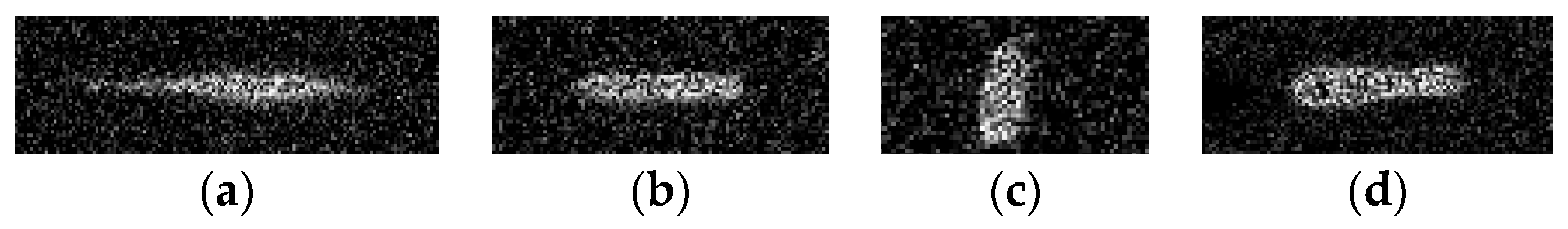

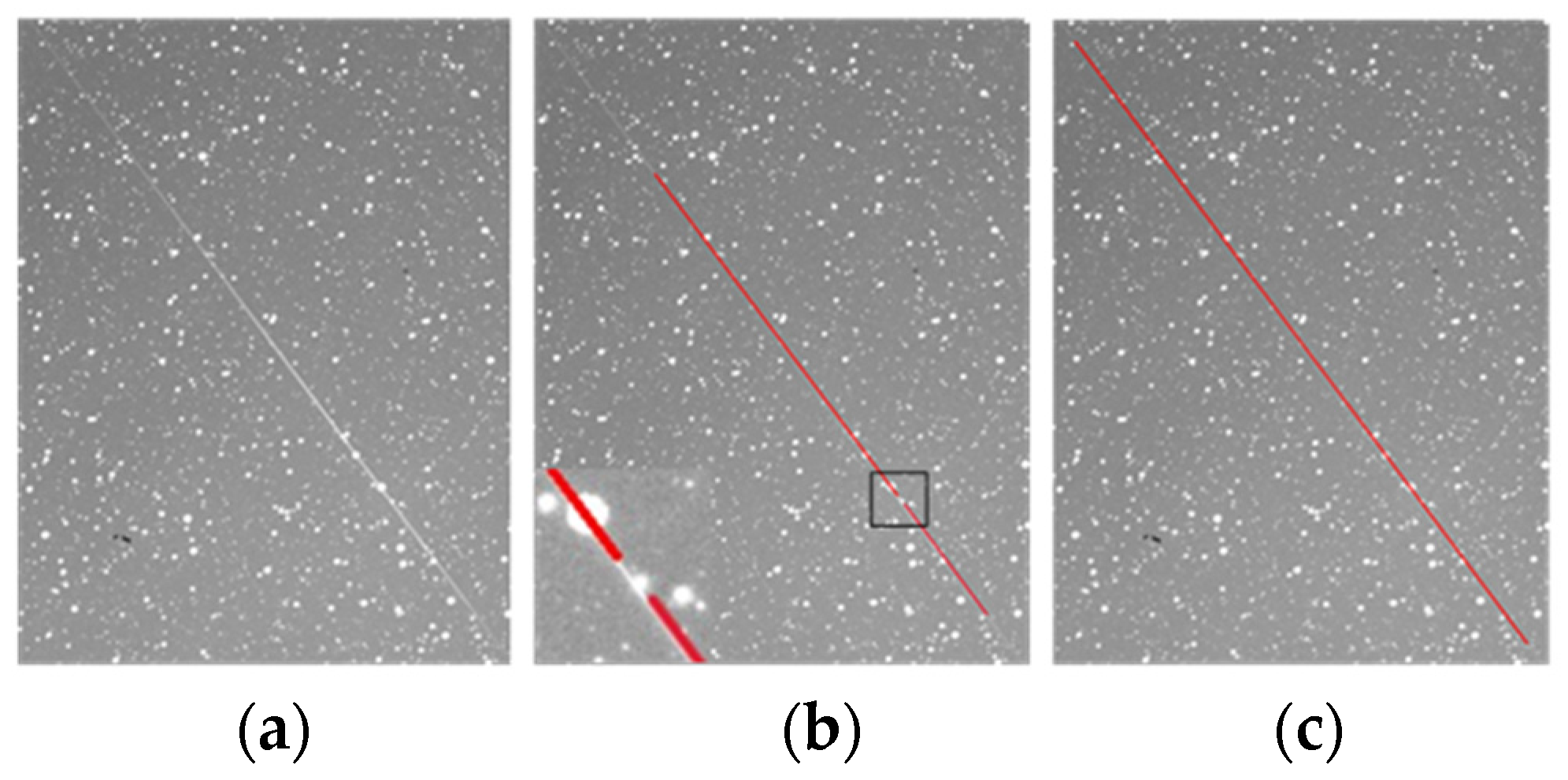

2.2.5. Meteor Detection

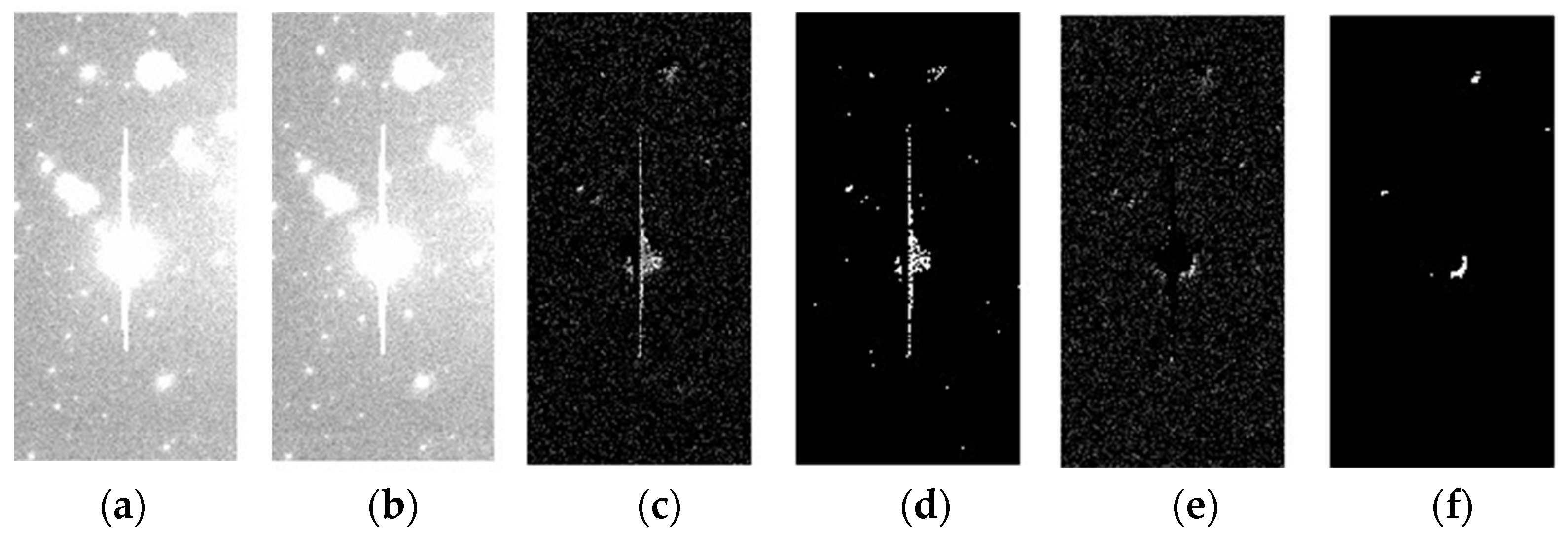

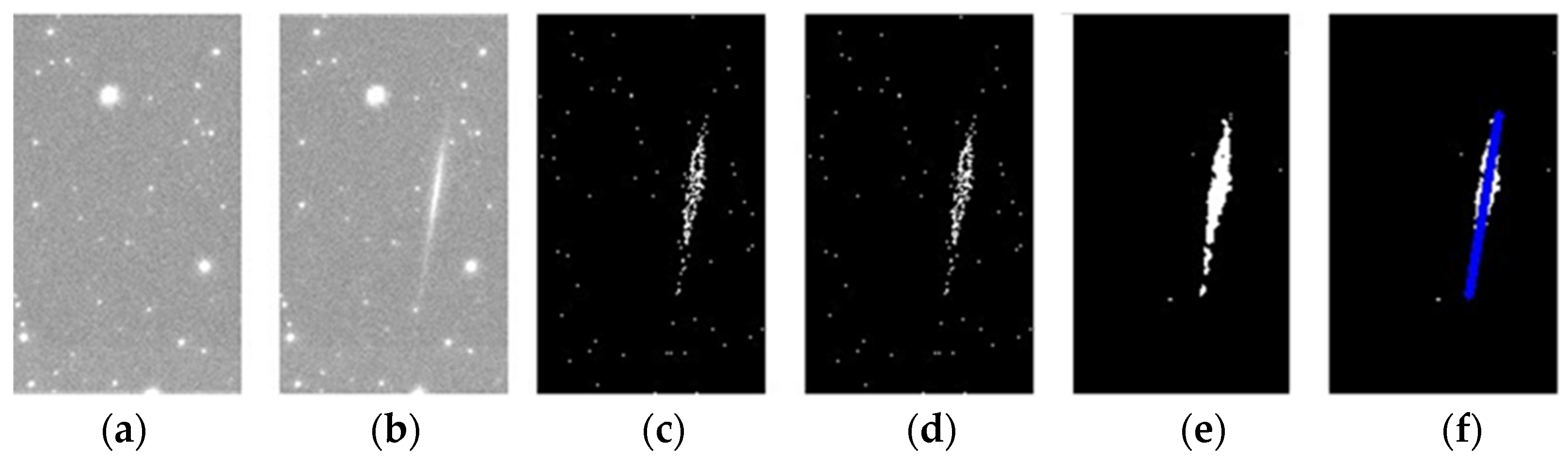

- Preprocessing of single-frame moving objects

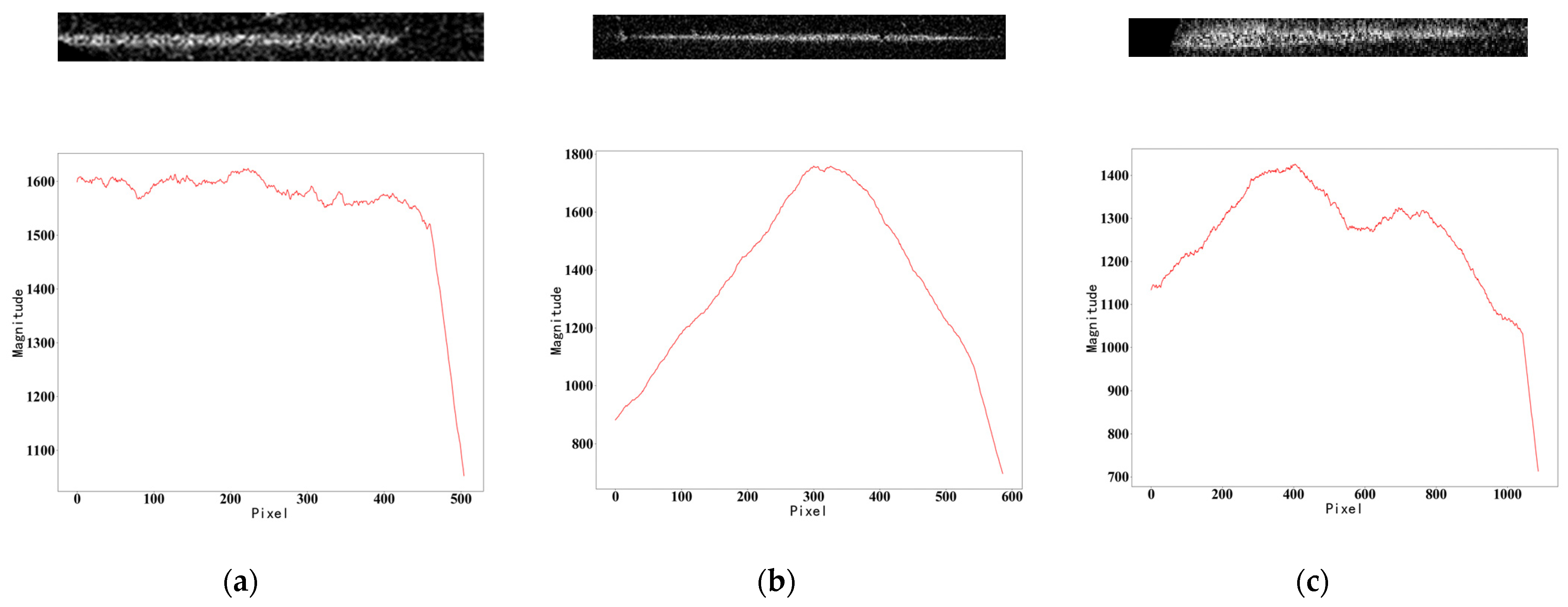

- The light curves of single-frame moving objects

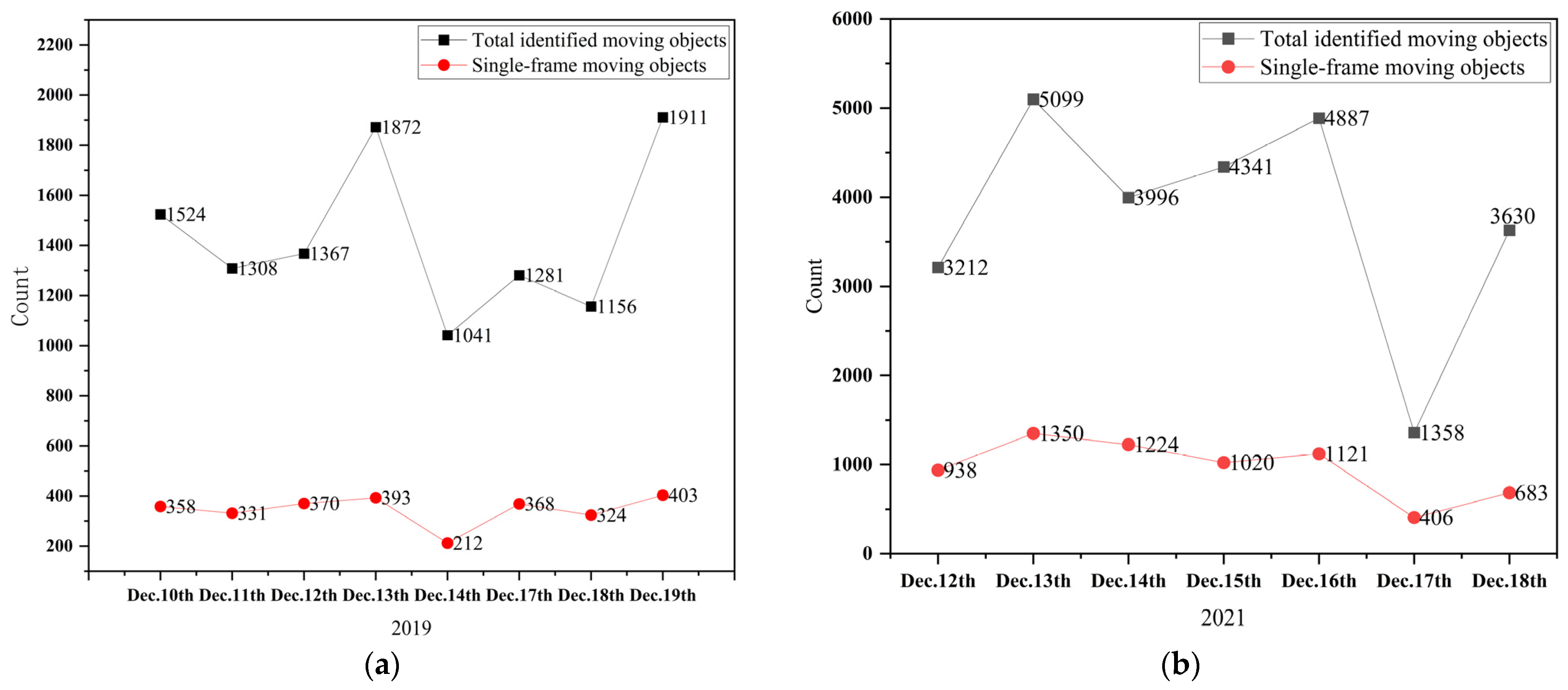

3. Results and Discussions

3.1. Implementation Details

3.2. Experimental Results

3.2.1. Comparative Experiments

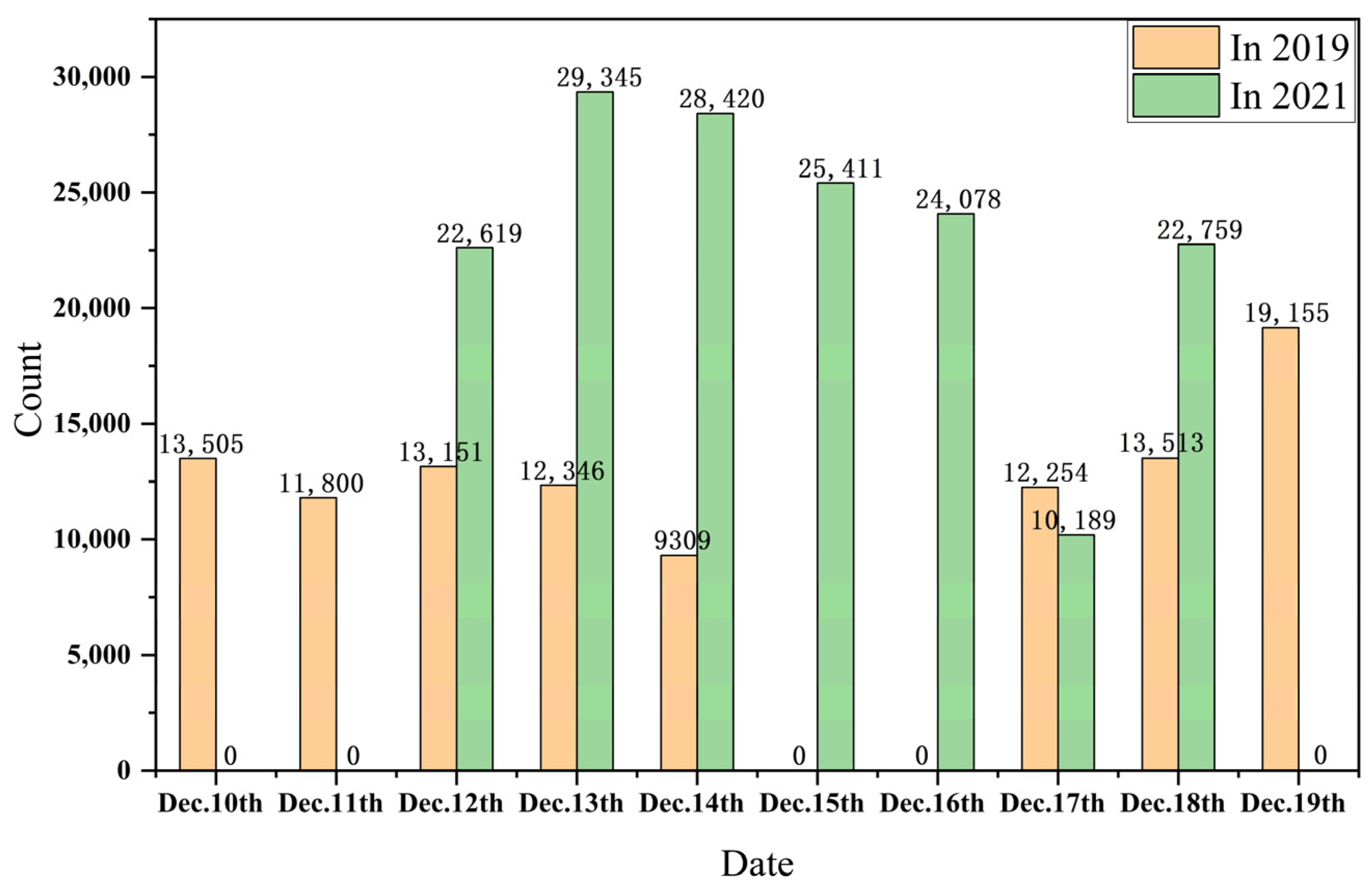

3.2.2. Meteor Detection for the GWAC Data

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Brownlee, D.; Joswiak, D.; Matrajt, G. Overview of the rocky component of Wild 2 comet samples: Insight into the early solar system, relationship with meteoritic materials and the differences between comets and asteroids. Meteorit. Planet. Sci. 2012, 47, 453–470.

2. Hu, Z. Some progress in meteor physics. Prog. Astron. 1996, 14, 19–28.

3. Nascimento, J.C.; Abrantes, A.J.; Marques, J.S. An algorithm for centroid-based tracking of moving objects. In Proceedings of the 1999 IEEE International Conference on Acoustics, Speech, and Signal Processing, ICASSP99 (Cat. No.99CH36258), Phoenix, AZ, USA, 15–19 March 1999; Volume 3306, pp. 3305–3308.

4. Gural, P.S. Advances in the meteor image processing chain using fast algorithms, deep learning, and empirical fitting. Planet. Space Sci. 2020, 182, 104847.

5. Gural, P. A fast meteor detection algorithm. In Proceedings of the International Meteor Conference, Egmond, The Netherlands, 2–5 June 2016; p. 96.

6. Gural, P.S. MeteorScan Documentation and User’s Guide; Sterling, VA, USA, 1999.

7. Molau, S. The Meteor Detection Software METREC. In Proceedings of the International Meteor Conference, 17th IMC, Stara Lesna, Slovakia, 20–23 August 1998; pp. 9–16.

8. Fujiwara, Y.; Nakamura, T.; Ejiri, M.; Suzuki, H. An automatic video meteor observation using UFO Capture at the Showa Station. In Proceedings of the Asteroids, Comets, Meteors 2012, Niigata, Japan, 16–20 May 2012; p. 6096.

9. Brown, P.; Weryk, R.; Kohut, S.; Edwards, W.; Krzeminski, Z. Development of an All-Sky video meteor network in Southern Ontario, Canada: The ASGARD system. WGN J. Int. Meteor Organ. 2010, 38, 25–30.

10. Blaauw, R.; Cruse, K.S. Comparison of ASGARD and UFOCapture. In Proceedings of the International Meteor Conference, 30th IMC, Sibiu, Romania, 15–18 September 2011; pp. 44–46.

11. Molau, S. The AKM video meteor network. In Proceedings of the Meteoroids 2001 Conference, Kiruna, Sweden, 6–10 August 2001; pp. 315–318.

12. Jenniskens, P.; Gural, P.S.; Dynneson, L.; Grigsby, B.J.; Newman, K.E.; Borden, M.; Koop, M.; Holman, D. CAMS: Cameras for All sky Meteor Surveillance to establish minor meteor showers. Icarus 2011, 216, 40–61.

13. Kornoš, L.; Koukal, J.; Piffl, R.; Tóth, J. EDMOND Meteor Database. In Proceedings of the International Meteor Conference, Poznan, Poland, 22–25 August 2013; pp. 23–25.

14. Karpov, S.; Orekhova, N.; Beskin, G.; Biryukov, A.; Bondar, S.; Ivanov, E.; Katkova, E.; Perkov, A.; Sasyuk, V. Meteor observations with Mini-Mega-TORTORA wide-field monitoring system. arXiv 2016, arXiv:1602.07977.

15. Weryk, R.J.; Campbell-Brown, M.D.; Wiegert, P.A.; Brown, P.G.; Krzeminski, Z.; Musci, R. The Canadian Automated Meteor Observatory (CAMO): System overview. Icarus 2013, 225, 614–622.

16. Feng, T.; Du, Z.; Sun, Y.; Wei, J.; Bi, J.; Liu, J. Real-time anomaly detection of short-time-scale GWAC survey light curves. In Proceedings of the 2017 IEEE International Congress on Big Data (BigData Congress), Honolulu, HI, USA, 25–30 June 2017; pp. 224–231.

17. Wan, M.; Wu, C.; Zhang, Y.; Xu, Y.; Wei, J. A pre-research on GWAC massive catalog data storage and processing system. Astron. Res. Technol. 2016, 20, 373–381.

18. Yang, C.; Weng, Z.; Meng, X.; Ren, W.; Xin, R.; Wang, C.; Du, Z.; Wan, M.; Wei, J. Data management challenges and real-time processing technologies in astronomy. J. Comput. Res. Dev. 2017, 54, 248–257.

19. Götz, D.; Collaboration, T. SVOM: A new mission for Gamma-Ray Bursts studies. Mem. Della Soc. Astron. Ital. Suppl. 2012, 21, 162.

20. Bi, J.; Feng, T.; Yuan, H. Real-time and short-term anomaly detection for GWAC light curves. Comput. Ind. 2018, 97, 76–84.

21. Gural, P.S. Algorithms and software for meteor detection. In Advances in Meteoroid and Meteor Science; Trigo-Rodríguez, J.M., Rietmeijer, F.J.M., Llorca, J., Janches, D., Eds.; Springer: New York, NY, USA, 2008; pp. 269–275.

22. Xu, Y.; Wang, J.; Huang, M.; Wei, J. An algorithm of selection of meteor candidates in GWAC system. Astron. Res. Technol. 2019, 16, 478–487.

23. Yang, Q.; Feng, X.; Li, Z.; Sun, X.; Lin, X.; Wang, J. Dynamic object detection algorithm based on improved inter frame difference. J. Heilongjiang Univ. Sci. Technol. 2022, 32, 779–783.

24. Romanengo, C.; Falcidieno, B.; Biasotti, S. Hough transform based recognition of space curves. J. Comput. Appl. Math. 2022, 415, 114504.

25. Fernandes, L.A.F.; Oliveira, M.M. Real-time line detection through an improved Hough transform voting scheme. Pattern Recognit. 2008, 41, 299–314.

26. Zheng, F.; Luo, S.; Song, K.; Yan, C.; Wang, M. Improved lane line detection algorithm based on Hough transform. Pattern Recognit. Image Anal. 2018, 28, 254–260.

27. Bednář, J.; Krauz, L.; Páta, P.; Koten, P. Meteor cluster event indication in variable-length astronomical video sequences. Mon. Not. R. Astron. Soc. 2023, 523, 2710–2720.

28. Yang, Y.; Yin, C.; Liu, B.; Wang, D.; Zhang, D.; Liang, Q. Automatic recognition method research of pointer meter based on Hough transform and feature clustering. Mach. Des. Res. 2019, 35, 7–11.

29. Zhu, S.; Gao, J.; Li, Z. Video object tracking based on improved gradient vector flow snake and intra-frame centroids tracking method. Comput. Electr. Eng. 2014, 40, 174–185.

30. Subasinghe, D.; Campbell-Brown, M.D.; Stokan, E. Physical characteristics of faint meteors by light curve and high-resolution observations, and the implications for parent bodies. Mon. Not. R. Astron. Soc. 2016, 457, 1289–1298.

| Method | Filtering Single-Frame Moving Objects: Precision | Filtering Single-Frame Moving Objects: Recall | Detection of Meteors: Precision | Detection of Meteors: Recall |
|---|---|---|---|---|
| Reference [22] | 0.781 | 0.759 | 0.814 | 0.793 |
| Our algorithm | 0.914 | 0.880 | 0.898 | 0.835 |

| Method | Reference [22] | Our Algorithm |
|---|---|---|
| Time (s) | 6965 | 2280 |

| Date | Number of Single-Frame Moving Objects (2019/2021) | Number of Meteors (2019/2021) | Manually Verified Number of Meteors (2019/2021) |
|---|---|---|---|
| 10 December | 358/0 | 208/0 | 179/0 |
| 11 December | 331/0 | 228/0 | 197/0 |
| 12 December | 370/938 | 216/621 | 201/562 |
| 13 December | 393/1350 | 291/857 | 267/785 |
| 14 December | 212/1224 | 126/833 | 111/747 |
| 15 December | 0/1020 | 0/711 | 0/657 |
| 16 December | 0/1121 | 0/633 | 0/569 |
| 17 December | 368/406 | 206/323 | 194/298 |
| 18 December | 324/683 | 195/317 | 172/279 |
| 19 December | 403/0 | 249/0 | 223/0 |
| Total | 2759/6742 | 1719/4295 | 1544/3897 |
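
The Total row can be re-derived from the daily counts, and two ratios follow directly from the totals:

- the fraction of single-frame moving objects that the algorithm reports as meteors;
- the fraction of reported meteors confirmed by manual verification.

These ratios are computed here only for illustration. This section does not state how the precision and recall values in the comparison table were obtained, so no equivalence with them is implied. The helper name `totals` is hypothetical.

```python
# Daily counts from the table as (2019, 2021) pairs, 10 to 19 December.
single_frame = [(358, 0), (331, 0), (370, 938), (393, 1350), (212, 1224),
                (0, 1020), (0, 1121), (368, 406), (324, 683), (403, 0)]
meteors = [(208, 0), (228, 0), (216, 621), (291, 857), (126, 833),
           (0, 711), (0, 633), (206, 323), (195, 317), (249, 0)]
verified = [(179, 0), (197, 0), (201, 562), (267, 785), (111, 747),
            (0, 657), (0, 569), (194, 298), (172, 279), (223, 0)]


def totals(rows):
    """Column sums for the 2019 and 2021 observing campaigns."""
    return tuple(sum(col) for col in zip(*rows))


sf, met, ver = totals(single_frame), totals(meteors), totals(verified)
for k, year in enumerate(("2019", "2021")):
    print(year, sf[k], met[k], ver[k],
          f"meteor/single-frame={met[k] / sf[k]:.3f}",
          f"verified/reported={ver[k] / met[k]:.3f}")
# 2019 2759 1719 1544 meteor/single-frame=0.623 verified/reported=0.898
# 2021 6742 4295 3897 meteor/single-frame=0.637 verified/reported=0.907
```

The recomputed sums agree with the Total row for both years.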

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, Y.; Li, G.; Liu, C.; Qiu, B.; Shan, Q.; Li, M. A Meteor Detection Algorithm for GWAC System. Universe 2023, 9, 468. https://doi.org/10.3390/universe9110468
