1. Introduction

Neutrino oscillations have provided the first signal for physics beyond the standard model (SM). They were first proposed to explain the deficit in the solar neutrino flux observed by the pioneering Homestake experiment [1]. Oscillations between two neutrino flavours require them to mix and form two mass eigenstates. The survival probability of a neutrino with energy $E$ and given flavour $\nu_\alpha$, after propagation over a distance $L$ in vacuum, is given by:

$$P(\nu_\alpha \to \nu_\alpha) = 1 - \sin^2 2\theta \, \sin^2\left(1.27\, \frac{\Delta m^2 L}{E}\right), \qquad (1)$$

where $\Delta m^2$ is the difference between the squares of the neutrino masses and $\theta$ is the mixing angle. In Equation (1), the units are chosen such that $\Delta m^2$ should be specified in eV$^2$, $L$ in meters, and $E$ in MeV. The solar neutrino deficit was confirmed by the water Cerenkov detector Kamiokande [2], which detected the solar neutrinos in real time. Radio-chemical Gallium experiments, GALLEX [3], SAGE [4], and GNO [5], which were mostly sensitive to the low energy $pp$ solar neutrinos, also observed a deficit. The high statistics water Cerenkov detector Super-Kamiokande [6], the heavy water Cerenkov detector SNO [7], and Borexino [8] have also made detailed spectral measurements of the solar neutrino fluxes. Analysing the solar neutrino data in a two-flavour oscillation framework gives the oscillation parameters:

Observation of proton decay is one of the main physics motivations for the construction of water Cerenkov detectors, IMB [

9,

10] and Kamiokande [

11,

12]. The interactions of atmospheric neutrinos in the detector, especially those of

and

, could mimic the proton decay signal. Hence, these experiments made a detailed study of the atmospheric neutrino interactions. They did not find any signal for proton decay, but instead observed a deficit of the up-going atmospheric $\nu_\mu$ flux, relative to the down-going flux. It was proposed that the up-going neutrinos, which travel thousands of kilometres inside the Earth, oscillate into another flavour whereas the down-going neutrinos, which travel tens of kilometres, do not. The Super-Kamiokande experiment [

13] observed a zenith angle dependence of the deficit, which is expected from neutrino oscillations. An analysis of the atmospheric neutrino data in a two-flavour oscillation framework gives the oscillation parameters:

We note that $\Delta m^2_{\rm sol} \ll \Delta m^2_{\rm atm}$.
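The up-going versus down-going argument above can be checked numerically with the two-flavour survival probability of Equation (1). The sketch below is only illustrative: the mass-squared difference, the mixing, the path lengths, and the energy window are assumed round numbers chosen for the example, not fit results quoted in this article.

```python
import math

def survival_probability(delta_m2_ev2, sin2_2theta, length_km, energy_gev):
    """Two-flavour vacuum survival probability of Equation (1).

    The factor 1.27 applies for delta_m2 in eV^2 and L/E in km/GeV,
    which is numerically identical to m/MeV.
    """
    phase = 1.27 * delta_m2_ev2 * length_km / energy_gev
    return 1.0 - sin2_2theta * math.sin(phase) ** 2

# Assumed, illustrative atmospheric-scale parameters (not fit values).
DM2 = 2.5e-3        # eV^2
SIN2_2THETA = 1.0   # maximal mixing

# Down-going neutrino: tens of kilometres of path length, E = 1 GeV.
p_down = survival_probability(DM2, SIN2_2THETA, 20.0, 1.0)

# Up-going neutrino: ~10,000 km through the Earth, averaged over 0.5-5 GeV.
energies = [0.5 + 4.5 * k / 1999 for k in range(2000)]
p_up_avg = sum(
    survival_probability(DM2, SIN2_2THETA, 10000.0, e) for e in energies
) / len(energies)

print(f"down-going: {p_down:.3f}, up-going (energy-averaged): {p_up_avg:.3f}")
```

With these assumptions the down-going flux is essentially unoscillated, while the energy-averaged up-going survival probability is close to one half, which is the qualitative pattern described in the text.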

It is known that there are three flavours of neutrinos, $\nu_e$, $\nu_\mu$, and $\nu_\tau$ [14]. They mix to form three mass eigenstates, $\nu_1$, $\nu_2$, and $\nu_3$, with mass eigenvalues $m_1$, $m_2$, and $m_3$. The $3 \times 3$ unitary matrix $U$, connecting the flavour basis to the mass basis, is called the Pontecorvo–Maki–Nakagawa–Sakata (PMNS) matrix [15,16]. Naively, it seems desirable to label the lightest mass $m_1$, the middle mass $m_2$, and the heaviest mass $m_3$. However, no method exists at present to directly measure these masses. What can be measured in oscillation experiments are the mixing matrix elements $U_{\alpha j}$, where $\alpha$ is a flavour index and $j$ is a mass index. In particular, the three elements of the first row, $U_{e1}$, $U_{e2}$, and $U_{e3}$, are well measured. The labels 1, 2, and 3 are chosen such that $|U_{e1}| > |U_{e2}| > |U_{e3}|$.

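Given the three masses $m_1$, $m_2$, and $m_3$, it is possible to define two independent mass-squared differences, $\Delta m^2_{21} = m_2^2 - m_1^2$ and $\Delta m^2_{31} = m_3^2 - m_1^2$. The third mass-squared difference is then $\Delta m^2_{32} = \Delta m^2_{31} - \Delta m^2_{21}$. Without loss of generality, we can choose $\Delta m^2_{21} = \Delta m^2_{\rm sol}$ and $\Delta m^2_{31} = \Delta m^2_{\rm atm}$. Since $\Delta m^2_{\rm sol} \ll \Delta m^2_{\rm atm}$, we find that $\Delta m^2_{32} \approx \Delta m^2_{31}$. As a result of the way the labels 1, 2, and 3 are chosen, these mass-squared differences, in principle, can be either positive or negative. Their signs have to be determined by experiments. The solar neutrinos, produced at the core of the sun, undergo forward elastic scattering as they travel through the solar matter. This scattering leads to the matter effect [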

17,

18], which modifies the solar electron neutrino survival probability $P_{ee}$. Super-Kamiokande [

19] and SNO [

7] have measured

as a function of neutrino energy for

MeV and found it to be of a constant value ≃0.3. SNO has also measured [

7] the neutral current interaction rate of solar neutrinos to be consistent with predictions of the standard solar model [

20]. The measurements of the Gallium experiments imply that

for neutrino energies

MeV. This increase in $P_{ee}$ at lower solar neutrino energies can be explained only if $\Delta m^2_{21}$ is positive. At present, there is no definite experimental evidence for either a positive or negative sign of $\Delta m^2_{31}$.

The PMNS matrix is similar to the quark mixing matrix introduced by Kobayashi and Maskawa [

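21]. It can be parameterised in terms of three mixing angles, $\theta_{12}$, $\theta_{13}$, and $\theta_{23}$, and one charge-parity (CP)-violating phase $\delta_{\rm CP}$. The following parameterisation of the PMNS matrix is found to be the most convenient to analyse the neutrino data:

$$U = \begin{pmatrix} c_{12} c_{13} & s_{12} c_{13} & s_{13} e^{-i\delta_{\rm CP}} \\ -s_{12} c_{23} - c_{12} s_{23} s_{13} e^{i\delta_{\rm CP}} & c_{12} c_{23} - s_{12} s_{23} s_{13} e^{i\delta_{\rm CP}} & s_{23} c_{13} \\ s_{12} s_{23} - c_{12} c_{23} s_{13} e^{i\delta_{\rm CP}} & -c_{12} s_{23} - s_{12} c_{23} s_{13} e^{i\delta_{\rm CP}} & c_{23} c_{13} \end{pmatrix},$$

where $c_{ij} = \cos\theta_{ij}$ and $s_{ij} = \sin\theta_{ij}$. Among these mixing angles, the CHOOZ experiment sets a strong upper bound on the middle angle $\theta_{13}$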

[

22]:

By combining this limit with the solar and atmospheric neutrino data, it can be shown that

[

23].

The mixing angles and the mass-squared differences have been determined in a series of precision experiments with man-made neutrino sources, which we briefly describe below.

The long-baseline reactor neutrino experiment KamLAND [

24] has

km. At this long distance, it can observe oscillations due to the small mass-squared difference, $\Delta m^2_{21}$. In the limit of neglecting $\theta_{13}$ in the three-flavour oscillation, the expression for the anti-neutrino survival probability $P(\bar\nu_e \to \bar\nu_e)$ reduces to an effective two-flavour expression:

$$P(\bar\nu_e \to \bar\nu_e) = 1 - \sin^2 2\theta_{12} \, \sin^2\left(1.27\, \frac{\Delta m^2_{21} L}{E}\right).$$

KamLAND measured the spectral distortion precisely. A combined analysis of KamLAND and solar neutrino data yields the results:

The short baseline reactor neutrino experiments, Daya Bay [

25], RENO [

26], and DoubleCHOOZ [

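27], have baselines of the order of 1 km. At this distance, the oscillating term in $P(\bar\nu_e \to \bar\nu_e)$ containing $\Delta m^2_{21}$ is negligibly small. In this approximation, $P(\bar\nu_e \to \bar\nu_e)$ again reduces to an effective two-flavour expression:

$$P(\bar\nu_e \to \bar\nu_e) = 1 - \sin^2 2\theta_{13} \, \sin^2\left(1.27\, \frac{\Delta m^2_{31} L}{E}\right).$$

The high-statistics measurement from Daya Bay gives: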

The long-baseline accelerator experiment MINOS [

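28] has a baseline of 730 km and it measured the survival probability of the accelerator $\nu_\mu$ beam. For this baseline and for accelerator neutrino energies, the oscillating term in $P(\nu_\mu \to \nu_\mu)$ due to $\Delta m^2_{21}$ is negligibly small. Setting this term and $\theta_{13}$ to zero in the three-flavour expression for $P(\nu_\mu \to \nu_\mu)$, once again we obtain an effective two-flavour expression:

$$P(\nu_\mu \to \nu_\mu) = 1 - \sin^2 2\theta_{23} \, \sin^2\left(1.27\, \frac{\Delta m^2_{31} L}{E}\right).$$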

MINOS data gives the results:

Note that the short-baseline reactor neutrino experiments, the long-baseline accelerator experiments, and the atmospheric neutrino data determine only the magnitude of $\Delta m^2_{31}$ but not its sign. Hence, we must consider both positive and negative sign possibilities in the data analysis. The case of positive $\Delta m^2_{31}$ is called normal hierarchy (NH) and that of negative $\Delta m^2_{31}$ is called inverted hierarchy (IH). Both atmospheric neutrino data and accelerator neutrino data are functions of $\sin^2 2\theta_{23}$ and they prefer $\sin^2 2\theta_{23} \simeq 1$. For such values, there are two possibilities: $\sin^2\theta_{23} < 0.5$, which is called lower octant (LO), and $\sin^2\theta_{23} > 0.5$, which is called higher octant (HO). At present, the data are not able to distinguish between these two cases.

A number of groups have done global analysis of neutrino oscillation data from all the available sources: solar, atmospheric, reactor, and accelerator [

29,

30]. In

Table 1, we present the latest results obtained by the nu-fit collaboration [

29].

Among the neutrino oscillation parameters, the mass-squared differences and the mixing angles (except for $\theta_{23}$) are measured to a precision of a few percent. On the other hand, the CP-violating phase $\delta_{\rm CP}$ still eludes measurement. In addition, we also need to resolve the issues of the sign of $\Delta m^2_{31}$ (also called the problem of neutrino mass hierarchy) and the octant of $\theta_{23}$. Thus, there are currently three main unknowns in the three-flavour neutrino oscillation paradigm.

It can be shown that the survival probability of neutrinos, $P(\nu_\alpha \to \nu_\alpha)$, is equal to that of the anti-neutrinos, $P(\bar\nu_\alpha \to \bar\nu_\alpha)$, due to charge-parity-time (CPT) invariance. However, the oscillation probabilities $P(\nu_\alpha \to \nu_\beta)$ and $P(\bar\nu_\alpha \to \bar\nu_\beta)$ are not equal if there is CP violation. A measurement of the difference between these two probabilities will establish CP violation in neutrino oscillations and will also determine $\delta_{\rm CP}$. While considering oscillation probabilities, in principle, we can enumerate six possibilities, with $\alpha$ taking any of the three flavours and $\beta$ taking the two possible values other than $\alpha$. It can be shown that, in all six cases, the difference:

is proportional to:

where

J is the Jarlskog invariant of the PMNS matrix [

31].
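As a small numerical illustration of the Jarlskog invariant, the sketch below builds the PMNS matrix in the standard three-angle, one-phase parameterisation, checks its unitarity, and compares $J$ computed from matrix elements with the closed form $c_{12} s_{12} c_{23} s_{23} c_{13}^2 s_{13} \sin\delta_{\rm CP}$. The angle values are assumed, illustrative inputs, and the particular combination of matrix elements used for $J$ is one common convention; neither is taken from this article.

```python
import cmath
import math

def pmns(theta12, theta13, theta23, delta_cp):
    """PMNS matrix in the standard parameterisation (angles in radians)."""
    s12, c12 = math.sin(theta12), math.cos(theta12)
    s13, c13 = math.sin(theta13), math.cos(theta13)
    s23, c23 = math.sin(theta23), math.cos(theta23)
    e = cmath.exp(1j * delta_cp)  # e^{+i delta}; s13 / e is s13 * e^{-i delta}
    return [
        [complex(c12 * c13), complex(s12 * c13), s13 / e],
        [-s12 * c23 - c12 * s23 * s13 * e, c12 * c23 - s12 * s23 * s13 * e, complex(s23 * c13)],
        [s12 * s23 - c12 * c23 * s13 * e, -c12 * s23 - s12 * c23 * s13 * e, complex(c23 * c13)],
    ]

def unitarity_deviation(u):
    """Largest element of |U U^dagger - 1|."""
    worst = 0.0
    for a in range(3):
        for b in range(3):
            entry = sum(u[a][j] * u[b][j].conjugate() for j in range(3))
            worst = max(worst, abs(entry - (1.0 if a == b else 0.0)))
    return worst

def jarlskog(u):
    """J = Im(U_e1 U_mu2 U_e2^* U_mu1^*), one common convention."""
    return (u[0][0] * u[1][1] * u[0][1].conjugate() * u[1][0].conjugate()).imag

# Assumed, illustrative angles (degrees); not the fit values of Table 1.
t12, t13, t23, dcp = (math.radians(x) for x in (33.4, 8.6, 45.0, -90.0))
U = pmns(t12, t13, t23, dcp)

j_matrix = jarlskog(U)
j_closed = (math.cos(t12) * math.sin(t12) * math.cos(t23) * math.sin(t23)
            * math.cos(t13) ** 2 * math.sin(t13) * math.sin(dcp))
print(f"J (matrix) = {j_matrix:.5f}, J (closed form) = {j_closed:.5f}")
```

Since $J$ is proportional to $\sin\delta_{\rm CP}$, it vanishes for the CP-conserving values of the phase and changes sign between the two half planes of $\delta_{\rm CP}$.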

Practically speaking, $\nu_\tau$ or $\bar\nu_\tau$ at production are not possible because there are no intense sources of these neutrinos. Nuclear reactors do produce $\bar\nu_e$ copiously but their energies are in the range of a few MeV. When such $\bar\nu_e$ oscillate into $\bar\nu_\mu$ or $\bar\nu_\tau$, the resultant anti-neutrinos are not energetic enough to produce $\mu^+$ or $\tau^+$ by their interactions in the detector. Hence, oscillations starting from $\bar\nu_e$ are not practical choices. The neutrino beams produced by accelerators yield intense fluxes of $\nu_\mu$ and $\bar\nu_\mu$. In principle, it is possible to search for CP violation by contrasting $P(\nu_\mu \to \nu_e)$ with $P(\bar\nu_\mu \to \bar\nu_e)$ or by contrasting $P(\nu_\mu \to \nu_\tau)$ with $P(\bar\nu_\mu \to \bar\nu_\tau)$. However, the second option is much more difficult than the first for the reasons listed below.

To produce $\tau^\pm$ in the detector, due to the interactions of $\nu_\tau$/$\bar\nu_\tau$, the neutrinos should have energies of tens of GeV. At these energies, the oscillation probabilities are quite small.

Even when we have energetic-enough beams to produce $\tau^\pm$ in the detector, the efficiency of reconstructing these particles is very poor. Thus, the event numbers will be very limited.

From Equation (

14), we see that the CP-violating asymmetry:

will be very small because the numerator is a product of two small quantities

and

, whereas the denominator is close to 1. Hence a measurement of this CP asymmetry requires high statistics.

Thus, the most feasible method to establish CP violation in neutrino oscillations and to determine $\delta_{\rm CP}$ is to measure the difference between $P_{\mu e} \equiv P(\nu_\mu \to \nu_e)$ and $P_{\bar\mu\bar e} \equiv P(\bar\nu_\mu \to \bar\nu_e)$. The neutrinos do not require large energies to produce electrons/positrons on interacting in the detector. Thus, the neutrino beam energy can be tuned to the oscillation maximum. The produced electrons and positrons are relatively easy to identify in the detector. The dominant term in the expression for $P_{\mu e}$ is proportional to $\sin^2 2\theta_{13}$ [32]. The expression for the CP asymmetry in $\nu_\mu \to \nu_e$ oscillations has the form:

which is much larger than

. Thus, CP violation in these oscillations can be established with moderate statistics.

There are, however, some other difficulties to overcome before the goal of establishing CP violation in neutrino oscillations can be achieved. The matter effect, which modifies the solar neutrino oscillation probabilities, also modifies $P_{\mu e}$ and $P_{\bar\mu\bar e}$ [33,34]. These modifications depend on the sign of $\Delta m^2_{31}$. Since the dominant terms in these oscillation probabilities are proportional to $\sin^2\theta_{23}$, they are also subject to the octant ambiguity of $\theta_{23}$. That is, the two oscillation probabilities, $P_{\mu e}$ and $P_{\bar\mu\bar e}$, depend on all three unknowns of the three-flavour neutrino oscillation parameters. In such a situation, the change in the probabilities induced by changing one of the unknowns can be compensated by changing another unknown. This leads to degenerate solutions that can explain a given set of measurements. Unravelling these degeneracies and making a distinction between the degenerate solutions requires a number of careful measurements and moderately high statistics.

In this review article, we analyse the theory of oscillation probability and parameter degeneracy in Section 2. Details of the analysis are discussed in Section 3. In Section 4, we outline the chronology of NOνA and T2K data. In the same section, we also use parameter degeneracy to explain the results of the analyses of both the past and the present NOνA and T2K data, and describe the cause of the tension between the data of the two experiments. The resolution of the tension in terms of BSM physics is discussed in Section 5. A summary of the article is given in Section 6.

2. Oscillation Probability and Parameter Degeneracy

Two accelerator experiments, T2K [35] and NOνA [36], take data with the aim of establishing CP violation as well as determining the neutrino mass hierarchy and the octant of $\theta_{23}$. Both experiments share the following common features.

They aim a beam of $\nu_\mu$/$\bar\nu_\mu$ at a far detector a few hundred kilometres away, which is at an off-axis location.

The off-axis location leads to a sharp peak in the neutrino spectrum [37], which is crucial to suppress the $\pi^0$ events, produced via the neutral current reaction $\nu N \to \nu N \pi^0$, that form a large background for the $\nu_\mu \to \nu_e$ oscillation signal.

They have a near detector, a few hundred meters from the accelerator, which measures the neutrino flux accurately.

The energy of the neutrino beam is tuned to be close to the oscillation maximum.

They measure the two survival probabilities, $P_{\mu\mu}$ and $P_{\bar\mu\bar\mu}$, and the oscillation probabilities $P_{\mu e}$ and $P_{\bar\mu\bar e}$.

The survival probabilities lead to further improvement in the precision of $|\Delta m^2_{31}|$ and $\sin^2 2\theta_{23}$. A careful analysis of the oscillation probabilities can lead to the determination of the three unknowns of the neutrino oscillation parameters. The crucial parameters of the T2K and NOνA experiments are summarised in Table 2. Note that the integrated flux of accelerator neutrinos is specified in units of protons on target (POT).
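The statement that the beam energy is tuned to the oscillation maximum can be made concrete. In the two-flavour form of Equation (1), the first maximum occurs when the phase $1.27\,\Delta m^2 L/E$ equals $\pi/2$. The sketch below evaluates the corresponding energy; the value of $|\Delta m^2_{31}|$ and the 295 km T2K baseline are assumptions introduced for this example (the 810 km NOνA baseline is the one quoted in this article).

```python
import math

def first_oscillation_maximum_gev(delta_m2_ev2, baseline_km):
    """Energy (GeV) at which 1.27 * dm2 * L / E = pi / 2 in vacuum."""
    return 2.0 * 1.27 * delta_m2_ev2 * baseline_km / math.pi

ABS_DM31 = 2.5e-3  # eV^2, assumed illustrative value

e_nova = first_oscillation_maximum_gev(ABS_DM31, 810.0)  # baseline from the text
e_t2k = first_oscillation_maximum_gev(ABS_DM31, 295.0)   # assumed T2K baseline

print(f"NOvA: {e_nova:.2f} GeV, T2K: {e_t2k:.2f} GeV")
```

Because the energy at the maximum scales linearly with the baseline, the shorter-baseline experiment operates at a proportionally lower energy under these assumptions.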

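We begin with a discussion of the oscillation probabilities, $P_{\mu e}$ and $P_{\bar\mu\bar e}$, and describe how they vary with each of the unknown neutrino oscillation parameters. The three-flavour $\nu_\mu \to \nu_e$ oscillation probability in the presence of matter effect with constant matter density can be written as [32]:

$$P_{\mu e} = \sin^2 2\theta_{13} \sin^2\theta_{23} \frac{\sin^2[\hat\Delta(1-\hat A)]}{(1-\hat A)^2} + \alpha \cos\theta_{13} \sin 2\theta_{12} \sin 2\theta_{13} \sin 2\theta_{23} \cos(\hat\Delta + \delta_{\rm CP}) \frac{\sin(\hat\Delta \hat A)}{\hat A} \frac{\sin[\hat\Delta(1-\hat A)]}{1-\hat A} + \alpha^2 \sin^2 2\theta_{12} \cos^2\theta_{13} \cos^2\theta_{23} \frac{\sin^2(\hat\Delta \hat A)}{\hat A^2}, \qquad (16)$$

where $\hat\Delta = 1.27\,\Delta m^2_{31} L/E$, $\hat A = A/\Delta m^2_{31}$, and $\alpha = \Delta m^2_{21}/\Delta m^2_{31}$, with $E$ being the energy of the neutrino and $L$ being the length of the baseline. The parameter $A$ is the Wolfenstein matter term [17], given by $A = 2\sqrt{2}\, G_F N_e E$, where $G_F$ is the Fermi coupling constant and $N_e$ is the number density of the electrons in the matter. The anti-neutrino oscillation probability $P_{\bar\mu\bar e}$ can be obtained by changing the sign of $A$ and $\delta_{\rm CP}$ in Equation (16). The oscillation probability mainly depends on hierarchy (sign of $\Delta m^2_{31}$), octant of $\theta_{23}$ and $\delta_{\rm CP}$, and precision in the value of $\theta_{13}$. $P_{\mu e}$ is enhanced if $\delta_{\rm CP}$ is in the lower half plane (LHP, $-180^\circ < \delta_{\rm CP} < 0$), and it is suppressed if $\delta_{\rm CP}$ is in the upper half plane (UHP, $0 < \delta_{\rm CP} < 180^\circ$), compared to the CP conserving $\delta_{\rm CP}$ values. In the following paragraph, for the sake of discussion, we will treat $\delta_{\rm CP}$ as a binary variable that either increases or decreases oscillation probability.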

The dominant term in $P_{\mu e}$ is proportional to $\sin^2 2\theta_{13}$. Therefore, the oscillation probability is rather small. It can be enhanced (suppressed), by different amounts for T2K and for NOνA, due to the matter effect if $\Delta m^2_{31}$ is positive (negative). This dominant term is also proportional to $\sin^2\theta_{23}$. If $\sin^2 2\theta_{23} < 1$, there can be two possible cases: (i) $\sin^2\theta_{23} < 0.5$, which will suppress $P_{\mu e}$, and (ii) $\sin^2\theta_{23} > 0.5$, which will enhance $P_{\mu e}$ relative to the maximal $\theta_{23}$ case. Since each of the unknowns can take 2 possible values, there are 8 different combinations of the three unknowns. Any given value of $P_{\mu e}$ can be reproduced by any of these eight combinations with the appropriate choice of the $\theta_{13}$ value. Thus, if the value of $\theta_{13}$ is not known precisely, it will lead to an eight-fold degeneracy in $P_{\mu e}$. Given that $\theta_{13}$ has been measured quite precisely, this degeneracy becomes less severe.

2.1. Hierarchy-$\delta_{\rm CP}$ Degeneracy

To start with, we assume that $\theta_{23}$ is maximal and the values of $\theta_{13}$ and $|\Delta m^2_{31}|$ are precisely known. With these assumptions, the only two unknowns are hierarchy and $\delta_{\rm CP}$. From

Table 1, we see that, according to the current measurements,

whereas

. Therefore, the first term in the expression of $P_{\mu e}$ (and in $P_{\bar\mu\bar e}$) in Equation (16) has the maximum matter effect contribution. This term is much larger than the second term and the third term is extremely small. We will neglect the third term in all further discussions.

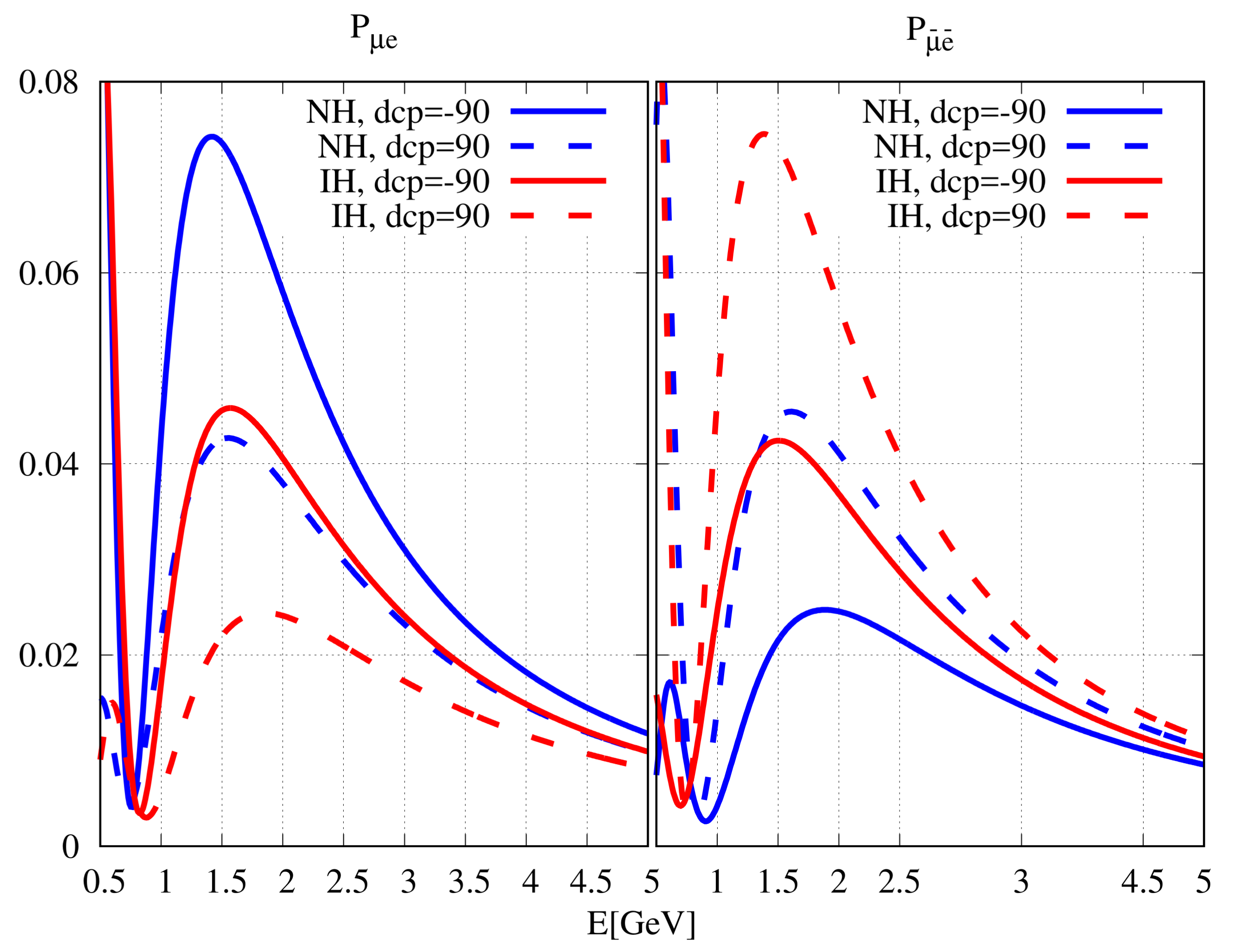

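For NH (IH), the first term in $P_{\mu e}$ becomes larger (smaller). For $P_{\bar\mu\bar e}$, the situation is reversed. These changes in $P_{\mu e}$ and in $P_{\bar\mu\bar e}$ can be amplified or cancelled by the second term, depending on the value of $\delta_{\rm CP}$. As a result of the dependence on the $\hat A$ term, $P_{\mu e}$ ($P_{\bar\mu\bar e}$) for NH is always larger (smaller) than that for IH. At the oscillation maxima, $\hat\Delta \simeq 90^\circ$. Thus for $P_{\mu e}$ ($P_{\bar\mu\bar e}$), the $\delta_{\rm CP}$-dependent factor $\cos(\hat\Delta + \delta_{\rm CP})$ [$\cos(\hat\Delta - \delta_{\rm CP})$] is maximum (minimum) for $\delta_{\rm CP} = -90^\circ$ and it is minimum (maximum) for $\delta_{\rm CP} = +90^\circ$. Therefore, for NH and $\delta_{\rm CP} = -90^\circ$, $P_{\mu e}$ ($P_{\bar\mu\bar e}$) is maximum (minimum) and for IH and $\delta_{\rm CP} = +90^\circ$, it is minimum (maximum). These two hierarchy-$\delta_{\rm CP}$ combinations, for both $P_{\mu e}$ and $P_{\bar\mu\bar e}$, are well separated from each other. It can be shown that the oscillation probability, for the NH and $\delta_{\rm CP}$ in the LHP, is well separated from that for the IH and $\delta_{\rm CP}$ in the UHP, for both neutrino and anti-neutrino. However, for the other two hierarchy-$\delta_{\rm CP}$ combinations, NH and $\delta_{\rm CP}$ in the UHP, and IH and $\delta_{\rm CP}$ in the LHP, $P_{\mu e}$ and $P_{\bar\mu\bar e}$ are quite close to each other, leading to hierarchy-$\delta_{\rm CP}$ degeneracy. This is illustrated in Figure 1, where $P_{\mu e}$ and $P_{\bar\mu\bar e}$ are plotted for the NOνA experiment baseline. For these plots, we have used maximal $\theta_{23}$, i.e., $\sin^2\theta_{23} = 0.5$. The other mixing angle values are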

and

. For the mass-squared differences, we have used

and

.

is related with

by the following equation [

38]:

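$\Delta m^2_{\mu\mu}$ is positive (negative) for NH (IH). For the NH and $\delta_{\rm CP}$ in the LHP, the values of $P_{\mu e}$ ($P_{\bar\mu\bar e}$) are reasonably greater (lower) than the values of $P_{\mu e}$ ($P_{\bar\mu\bar e}$) for the IH and any value of $\delta_{\rm CP}$. Similarly, for the IH and $\delta_{\rm CP}$ in the UHP, the values of $P_{\mu e}$ ($P_{\bar\mu\bar e}$) are reasonably lower (greater) than the values of $P_{\mu e}$ ($P_{\bar\mu\bar e}$) for the NH and any value of $\delta_{\rm CP}$. Hence, for these favourable combinations, NOνA is capable of determining the hierarchy at a confidence level (C.L.) of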

or better, with a 3-year run each for $\nu$ and $\bar\nu$. However, as mentioned above, the change in the first term in Equation (16) can be cancelled by the second term for unfavourable values of $\delta_{\rm CP}$. This leads to hierarchy-$\delta_{\rm CP}$ degeneracy [39,40,41]. From Figure 1, it can be seen that $P_{\mu e}$ and $P_{\bar\mu\bar e}$ for NH and $\delta_{\rm CP}$ in the UHP are very close to or degenerate with those of IH and $\delta_{\rm CP}$ in the LHP. For these unfavourable combinations, NOνA has no hierarchy sensitivity [42]. Since the neutrino energy of T2K is only one third of the energy of NOνA, the matter effect of T2K is correspondingly smaller. Therefore, T2K has very little hierarchy sensitivity. The cancellation of the change due to matter effect occurs for different values of $\delta_{\rm CP}$ in the case of NOνA and T2K. Therefore, combining the data of NOνA and T2K leads to a small hierarchy discrimination capability for unfavourable hierarchy-$\delta_{\rm CP}$ combinations [41,42,43].
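The hierarchy-$\delta_{\rm CP}$ degeneracy described above can be reproduced with a few lines of code. The sketch below evaluates the standard three-term constant-density expansion of $P_{\mu e}$ (the form we understand Equation (16) to take) at the NOνA baseline of 810 km and peak energy of 2 GeV. All oscillation parameter values, the matter density, and the numerical constant in the matter term are assumptions made for this illustration; they are not the inputs used for Figure 1.

```python
import math

def prob_mu_e(delta_cp_deg, hierarchy=+1, antineutrino=False,
              baseline_km=810.0, energy_gev=2.0,
              sin2_th12=0.31, sin2_2th13=0.089, sin2_th23=0.5,
              dm21=7.5e-5, abs_dm31=2.5e-3, density_gcc=2.8):
    """Three-term expansion of P(nu_mu -> nu_e) in constant-density matter.

    hierarchy = +1 (NH) or -1 (IH). All default values are illustrative.
    """
    dm31 = hierarchy * abs_dm31
    # Matter term A in eV^2, assuming an electron fraction of 0.5.
    matter = 0.76e-4 * density_gcc * energy_gev
    delta = math.radians(delta_cp_deg)
    if antineutrino:  # anti-neutrinos: flip the signs of A and delta_CP
        matter, delta = -matter, -delta

    d_hat = 1.27 * dm31 * baseline_km / energy_gev
    a_hat = matter / dm31          # non-zero as long as density_gcc > 0
    alpha = dm21 / dm31

    th13 = 0.5 * math.asin(math.sqrt(sin2_2th13))
    sin_2th12 = 2.0 * math.sqrt(sin2_th12 * (1.0 - sin2_th12))
    sin_2th23 = 2.0 * math.sqrt(sin2_th23 * (1.0 - sin2_th23))

    f_atm = math.sin((1.0 - a_hat) * d_hat) / (1.0 - a_hat)
    f_sol = math.sin(a_hat * d_hat) / a_hat

    term1 = sin2_2th13 * sin2_th23 * f_atm ** 2
    term2 = (alpha * math.cos(th13) * sin_2th12 * math.sqrt(sin2_2th13)
             * sin_2th23 * math.cos(d_hat + delta) * f_sol * f_atm)
    term3 = (alpha ** 2 * sin_2th12 ** 2 * math.cos(th13) ** 2
             * (1.0 - sin2_th23) * f_sol ** 2)
    return term1 + term2 + term3

p_nh_lhp = prob_mu_e(-90.0, hierarchy=+1)  # favourable: largest P
p_ih_uhp = prob_mu_e(+90.0, hierarchy=-1)  # favourable: smallest P
p_nh_uhp = prob_mu_e(+90.0, hierarchy=+1)  # unfavourable
p_ih_lhp = prob_mu_e(-90.0, hierarchy=-1)  # unfavourable

for name, p in [("NH, LHP", p_nh_lhp), ("IH, UHP", p_ih_uhp),
                ("NH, UHP", p_nh_uhp), ("IH, LHP", p_ih_lhp)]:
    print(f"{name}: P_mu_e = {p:.4f}")
```

Under these assumptions the two favourable combinations are separated by several percent in probability, while the two unfavourable combinations nearly coincide, which is the degeneracy discussed in this subsection.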

2.2. Octant-Hierarchy Degeneracy

Even though the atmospheric neutrino experiments prefer maximal $\theta_{23}$ ($\sin^2\theta_{23} = 0.5$), the MINOS experiment prefers non-maximal values,

[

44]. The global fits, before the NOνA and T2K experiments began taking data, also favoured a non-maximal value of $\theta_{23}$ [45,46,47], leading to two degenerate solutions: $\theta_{23}$ in the lower octant (LO) ($\sin^2\theta_{23} < 0.5$) and $\theta_{23}$ in the higher octant (HO) ($\sin^2\theta_{23} > 0.5$). Given the two hierarchy and two octant possibilities, there are four possible octant-hierarchy combinations: LO-NH, HO-NH, LO-IH, and HO-IH. The first term of $P_{\mu e}$ in Equation (16) becomes larger (smaller) for NH (IH). The same term also becomes smaller (larger) for LO (HO). If HO-NH (LO-IH) is the true octant-hierarchy combination, then the values of $P_{\mu e}$ are significantly higher (smaller) than those for IH (NH) and for any octant. For these two cases, $\nu$ data alone has good hierarchy determination capability. However, the situation is very different for the two cases LO-NH and HO-IH. The increase (decrease) in the first term of $P_{\mu e}$ due to NH (IH) is cancelled (compensated) by the decrease (increase) for LO (HO). Therefore the two octant-hierarchy combinations, LO-NH and HO-IH, have degenerate values for $P_{\mu e}$. However, this degeneracy is not present in $P_{\bar\mu\bar e}$, which receives a double boost (suppression) for the case of HO-IH (LO-NH). Thus, the octant-hierarchy degeneracy in $P_{\mu e}$ is broken by $P_{\bar\mu\bar e}$ (and vice versa). Therefore $\nu$-only data has no hierarchy sensitivity if the cases LO-NH or HO-IH are true, but a combination of $\nu$ and $\bar\nu$ data will have a good sensitivity.

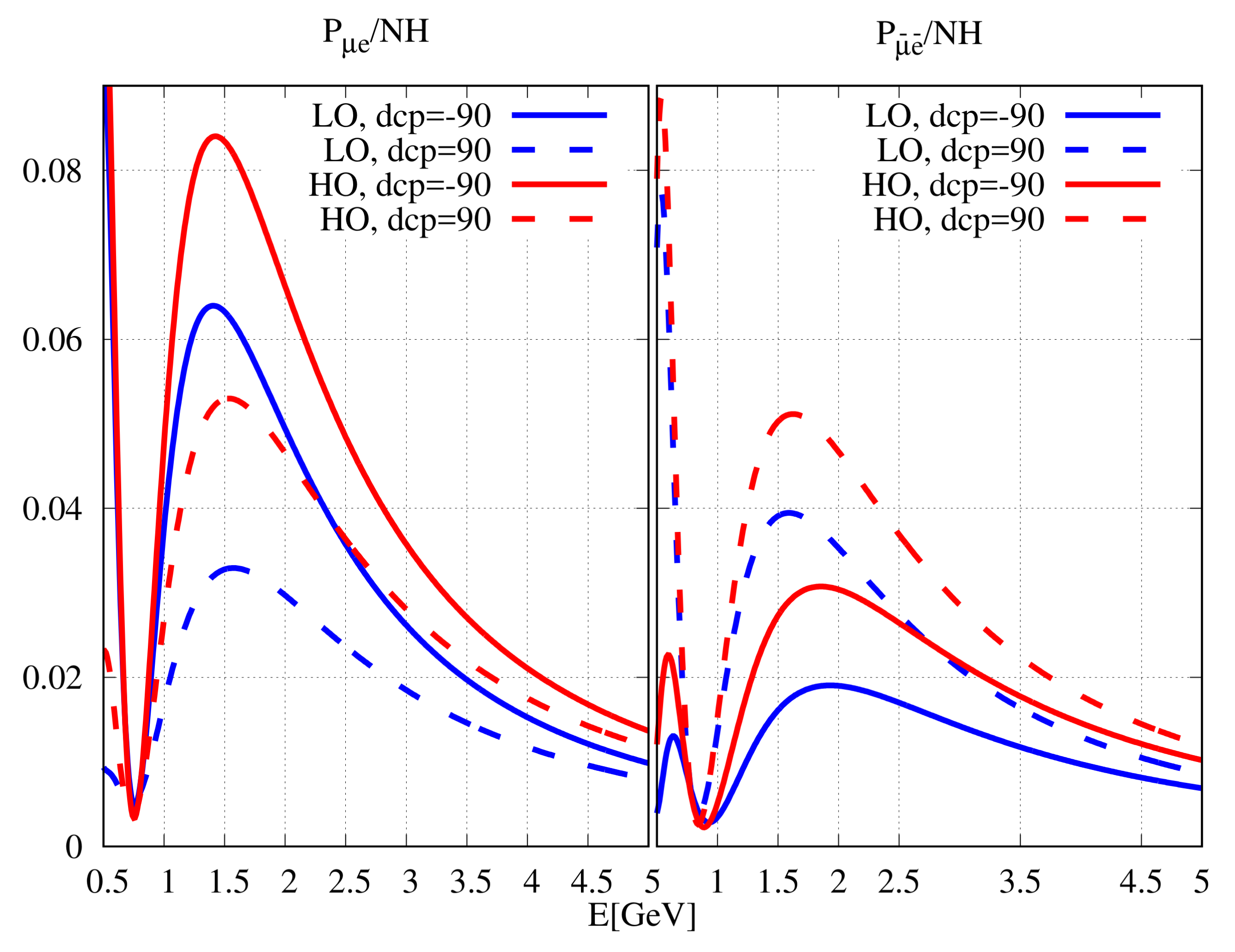

This is illustrated in Figure 2. From the figure, we can see that $P_{\mu e}$ has degeneracy for the octant-hierarchy combinations LO-NH and HO-IH. This degeneracy does not exist in the case of $P_{\bar\mu\bar e}$ [48].
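The cancellation between the hierarchy and octant effects can be seen from the leading term of the appearance probability alone. The sketch below evaluates only that term, $\sin^2 2\theta_{13} \sin^2\theta_{23} \sin^2[\hat\Delta(1-\hat A)]/(1-\hat A)^2$, at the NOνA baseline and peak energy. The octant values $\sin^2\theta_{23} = 0.41$ (LO) and $0.59$ (HO), the mass-squared difference, and the matter density are assumed for illustration only.

```python
import math

def leading_term(sin2_th23, hierarchy, antineutrino=False,
                 baseline_km=810.0, energy_gev=2.0,
                 sin2_2th13=0.089, abs_dm31=2.5e-3, density_gcc=2.8):
    """Leading (first) term of the nu_mu -> nu_e appearance probability."""
    dm31 = hierarchy * abs_dm31
    matter = 0.76e-4 * density_gcc * energy_gev  # eV^2, electron fraction 0.5
    if antineutrino:
        matter = -matter
    d_hat = 1.27 * dm31 * baseline_km / energy_gev
    a_hat = matter / dm31
    return sin2_2th13 * sin2_th23 * (math.sin((1.0 - a_hat) * d_hat) / (1.0 - a_hat)) ** 2

LO, HO = 0.41, 0.59  # assumed illustrative octant values of sin^2(theta23)

nu = {(o, h): leading_term(s, sign)
      for o, s in (("LO", LO), ("HO", HO))
      for h, sign in (("NH", +1), ("IH", -1))}
nubar = {(o, h): leading_term(s, sign, antineutrino=True)
         for o, s in (("LO", LO), ("HO", HO))
         for h, sign in (("NH", +1), ("IH", -1))}

for key in nu:
    print(f"{key[0]}-{key[1]}: nu = {nu[key]:.4f}, nubar = {nubar[key]:.4f}")
```

With these inputs, LO-NH and HO-IH give nearly the same neutrino probability, whereas the anti-neutrino probabilities for the same two combinations differ by roughly a factor of two, in line with the argument above.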

2.3. Octant-$\delta_{\rm CP}$ Degeneracy

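The possibility of two octants of $\theta_{23}$ also leads to octant-$\delta_{\rm CP}$ degeneracy. To highlight this degeneracy, we rewrite the expression for $P_{\mu e}$ in Equation (16) as [49]:

$$P_{\mu e} = \beta_1 \sin^2\theta_{23} + \beta_2 \cos(\hat\Delta + \delta_{\rm CP}) + \beta_3 \cos^2\theta_{23}, \qquad (18)$$

where

$$\beta_1 = \sin^2 2\theta_{13} \frac{\sin^2[\hat\Delta(1-\hat A)]}{(1-\hat A)^2}, \quad \beta_2 = \alpha \cos\theta_{13} \sin 2\theta_{12} \sin 2\theta_{13} \sin 2\theta_{23} \frac{\sin(\hat\Delta \hat A)}{\hat A} \frac{\sin[\hat\Delta(1-\hat A)]}{1-\hat A}, \quad \beta_3 = \alpha^2 \sin^2 2\theta_{12} \cos^2\theta_{13} \frac{\sin^2(\hat\Delta \hat A)}{\hat A^2}.$$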

In Figure 3, $P_{\mu e}$ ($P_{\bar\mu\bar e}$) is plotted for the NOνA experiment as a function of neutrino energy $E$, for normal hierarchy and for different values of $\delta_{\rm CP}$. In our calculation,

, when $\theta_{23}$ is in the LO and

, when $\theta_{23}$ is in the HO. The value of $\sin^2 2\theta_{13}$ has been taken equal to 0.089.

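From the left panel of the figure, we can see that when $\theta_{23}$ is in the LO and $\delta_{\rm CP}$ is in the UHP, $P_{\mu e}$ is quite distinct from the probability values with other $\theta_{23}$ and $\delta_{\rm CP}$ combinations. Similar arguments hold for $P_{\mu e}$ with $\theta_{23}$ in the HO and $\delta_{\rm CP}$ in the LHP. Therefore, $\theta_{23}$ in the LO (HO) and $\delta_{\rm CP}$ in the UHP (LHP) is a favourable octant-$\delta_{\rm CP}$ combination to determine the octant of $\theta_{23}$. However, $P_{\mu e}$ for $\theta_{23}$ in the LO and $\delta_{\rm CP}$ in the LHP overlaps with that for $\theta_{23}$ in the HO and $\delta_{\rm CP}$ in the UHP. Therefore, $\theta_{23}$ in the LO (HO) and $\delta_{\rm CP}$ in the LHP (UHP) is an unfavourable combination to determine the octant.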

However, these unfavourable combinations become favourable for $P_{\bar\mu\bar e}$ and vice versa, as can be seen from the right panel of Figure 3. Thus, the octant-$\delta_{\rm CP}$ degeneracy, present in neutrino data, can be removed by anti-neutrino data and vice versa. This aspect is different from the hierarchy-$\delta_{\rm CP}$ degeneracy.

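The above features can also be understood by following an algebraic analysis of Equation (18). From that equation, we see that $P_{\mu e}$ increases with the increase in $\theta_{23}$. However, $P_{\mu e}$ can increase or decrease with a change in $\delta_{\rm CP}$. In the case of octant-$\delta_{\rm CP}$ degeneracy, for different $\theta_{23}$ and $\delta_{\rm CP}$, we can have $P_{\mu e}(\theta_{23}^{\rm LO}, \delta_{\rm CP}^{\rm LO}) = P_{\mu e}(\theta_{23}^{\rm HO}, \delta_{\rm CP}^{\rm HO})$. It leads to: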

The NOνA experiment has a baseline of 810 km and the flux peaks at an energy of 2 GeV. Now, for the NH and neutrinos,

This equation can have solutions only if:

From the above equation, we have the ranges of

as:

Therefore, for the NOνA experiment, for NH and neutrinos,

is close to

[

49]. Similar equations for $P_{\bar\mu\bar e}$ show that this degeneracy can be removed by an anti-neutrino run.

3. Details of Data Analysis

In this section, we describe the methodology used for the analyses of T2K and NOνA data in Section 4 and Section 5. We have computed $\chi^2$ and $\Delta\chi^2$ between the data and a given theoretical model. In Section 4, we discuss the evolution of NOνA and T2K data with time. To do so, we present the analysis of the latest data from both experiments in the standard 3-flavour oscillation scenario. In Section 5, we discuss how different BSM scenarios alleviate the tension between the two experiments. This is done by analysing the latest data from NOνA and T2K in each of the different BSM frameworks. In both Section 4 and Section 5, the results are presented in the form of $\Delta\chi^2$.

The observed and the expected numbers of events in the $i$-th energy bin of a given experiment are denoted by $N_i^{\rm exp}$ and $N_i^{\rm th}$, respectively. The $\chi^2$ between these two distributions is calculated as:

where

i stands for the bins for which the observed event numbers are non-zero,

j stands for bins for which the observed event numbers are zero, and

z is the parameter defining systematic uncertainties.
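As an illustration of a binned $\chi^2$ of this kind, the sketch below implements one common Poissonian form with a single normalisation nuisance parameter $z$, treating bins with zero observed events separately, and adds a Gaussian penalty for $z$. The exact expression, the penalty width `sigma_z`, and the simple grid scan over $z$ are assumptions made for this example; they are not claimed to be the formula or the minimisation used in the analysis described here.

```python
import math

def poisson_chi2(observed, expected, z=0.0, sigma_z=0.1):
    """Poisson chi^2 with a normalisation nuisance parameter z (assumed form).

    Bins with zero observed events contribute 2 * (1 + z) * expected;
    all other bins contribute the usual log-likelihood-ratio term.
    """
    chi2 = 0.0
    for n_obs, n_th in zip(observed, expected):
        mu = (1.0 + z) * n_th
        if n_obs > 0:
            chi2 += 2.0 * (mu - n_obs + n_obs * math.log(n_obs / mu))
        else:
            chi2 += 2.0 * mu
    return chi2 + (z / sigma_z) ** 2  # Gaussian penalty (pull) term

def profiled_chi2(observed, expected, sigma_z=0.1, steps=601):
    """Minimise over z with a plain grid scan in [-3 sigma_z, +3 sigma_z]."""
    grid = [(-3.0 + 6.0 * k / (steps - 1)) * sigma_z for k in range(steps)]
    return min(poisson_chi2(observed, expected, z, sigma_z) for z in grid)

# Toy event numbers, invented purely for illustration.
toy_observed = [4, 9, 0, 6]
toy_expected = [3.2, 7.5, 0.4, 6.8]

chi2_fixed = poisson_chi2(toy_observed, toy_expected)
chi2_profiled = profiled_chi2(toy_observed, toy_expected)
print(f"chi2 (z = 0): {chi2_fixed:.3f}, chi2 (profiled over z): {chi2_profiled:.3f}")
```

Profiling over the nuisance parameter can only lower the $\chi^2$ relative to fixing $z = 0$, since $z = 0$ is one of the scanned grid points.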

The theoretical expected event numbers for each energy bin and the $\chi^2$ between theory and experiment have been calculated using the software GLoBES [

50,

51]. To do so, we fixed the signal and background efficiencies of each energy bin according to the Monte–Carlo simulations provided by the experimental collaborations [

52,

53,

54,

55]. We kept

and

at their best-fit values

and

, respectively. We varied

in its

range around its central value

with

uncertainty [

56].

has been varied in its

range [0.41:0.62] (with

uncertainty on

[

57]). We varied

in its

range around the MINOS best-fit value

with

uncertainty [

44]. The CP-violating phase $\delta_{\rm CP}$ has been varied in its complete range $[-180^\circ:180^\circ]$. In the case of BSM physics, we modified the software to include new physics. The ranges of different new parameters for each of the BSM scenarios have been discussed in

Section 5.

Automatic bin-based energy smearing for the generated theoretical events has been implemented within GLoBES [

50,

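51] using a Gaussian smearing function:

$$R(E, E') = \frac{1}{\sigma(E)\sqrt{2\pi}} \exp\left[-\frac{(E - E')^2}{2\sigma^2(E)}\right],$$

where $E'$ is the reconstructed energy. The energy resolution function is given by:

$$\sigma(E) = \alpha E + \beta \sqrt{E} + \gamma.$$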

For NOνA, we used

,

for electron- (muon) like events [

58,

59]. For T2K, we used

,

,

for both electron- and muon-like events. For T2K, the relevant systematic uncertainties are

A total of normalisation and energy calibration systematics uncertainty for e-like events, and

A total of normalisation and energy calibration systematics uncertainty for -like events.

For NOνA, we used

normalisation and

energy calibration systematic uncertainties for both the $e$-like and $\mu$-like events [

58]. Details of systematic uncertainties have been discussed in the GLoBES manual [

50,

51].
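The Gaussian energy smearing described above can be sketched as follows. This is a stand-alone illustration, not GLoBES code: each true-energy bin is represented by its centre, the Gaussian is integrated over every reconstructed-energy bin with the error function, and the resolution is taken to be $\sigma(E) = \alpha E + \beta\sqrt{E} + \gamma$. The binning and the resolution parameters are hypothetical values chosen only for the example.

```python
import math

def sigma_e(energy, alpha, beta, gamma):
    """Energy resolution sigma(E) = alpha * E + beta * sqrt(E) + gamma."""
    return alpha * energy + beta * math.sqrt(energy) + gamma

def smear(true_counts, bin_edges, alpha, beta, gamma):
    """Redistribute true-energy bin contents into reconstructed-energy bins."""
    n_bins = len(true_counts)
    reco = [0.0] * n_bins
    for i, count in enumerate(true_counts):
        e_true = 0.5 * (bin_edges[i] + bin_edges[i + 1])  # bin centre
        width = sigma_e(e_true, alpha, beta, gamma) * math.sqrt(2.0)
        for j in range(n_bins):
            lo, hi = bin_edges[j], bin_edges[j + 1]
            # Fraction of the Gaussian centred at e_true that falls in [lo, hi].
            frac = 0.5 * (math.erf((hi - e_true) / width)
                          - math.erf((lo - e_true) / width))
            reco[j] += count * frac
    return reco

# Hypothetical binning: 20 bins of 0.25 GeV between 0 and 5 GeV.
edges = [0.25 * k for k in range(21)]
true_spectrum = [0.0] * 20
true_spectrum[8] = 100.0  # all events in the 2.00-2.25 GeV bin

# Hypothetical resolution parameters (alpha, beta, gamma).
reco_spectrum = smear(true_spectrum, edges, alpha=0.0, beta=0.1, gamma=0.0)
print([round(x, 1) for x in reco_spectrum[5:12]])
```

Events that start in a single true-energy bin leak into the neighbouring reconstructed bins, while the total number of events is preserved as long as the binned range is wide compared with $\sigma(E)$.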

During the calculation of $\chi^2$, we added (for the older, pre-2020 data) priors on $\sin^2 2\theta_{13}$, $\sin^2\theta_{23}$, and $|\Delta m^2_{\mu\mu}|$, in cases where we have not included muon disappearance data. In all other cases, priors have been added on $\sin^2 2\theta_{13}$ only (to account for electron disappearance data from reactor neutrino experiments).

We calculated the $\chi^2$ for both the hierarchies. Once the $\chi^2$ values had been calculated, we subtracted the minimum $\chi^2$ from them to calculate the $\Delta\chi^2$. The set of parameter values and hierarchy for which $\Delta\chi^2 = 0$ is called the best-fit point.
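The last step can be written compactly. In the sketch below, the $\chi^2$ values are stored in a dictionary keyed by (hierarchy, $\delta_{\rm CP}$ in degrees); the global minimum over both hierarchies is subtracted, and the key with $\Delta\chi^2 = 0$ is the best-fit point. The grid and the $\chi^2$ numbers are toy values invented for the example, not results of any fit.

```python
def delta_chi2(chi2_by_point):
    """Subtract the global minimum chi^2 from every scanned point."""
    chi2_min = min(chi2_by_point.values())
    return {point: value - chi2_min for point, value in chi2_by_point.items()}

def best_fit(chi2_by_point):
    """Return the scanned point with the smallest chi^2."""
    return min(chi2_by_point, key=chi2_by_point.get)

# Toy chi^2 values on a coarse (hierarchy, delta_CP) grid; illustration only.
toy_chi2 = {
    ("NH", -90): 52.1, ("NH", 0): 55.4, ("NH", 90): 58.9,
    ("IH", -90): 53.0, ("IH", 0): 57.2, ("IH", 90): 61.5,
}

dchi2 = delta_chi2(toy_chi2)
print(best_fit(toy_chi2), dchi2[best_fit(toy_chi2)])
```

Taking the minimum over both hierarchies, rather than separately for each, is what allows the $\Delta\chi^2$ of the disfavoured hierarchy to be read off directly.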

6. Summary and Discussion

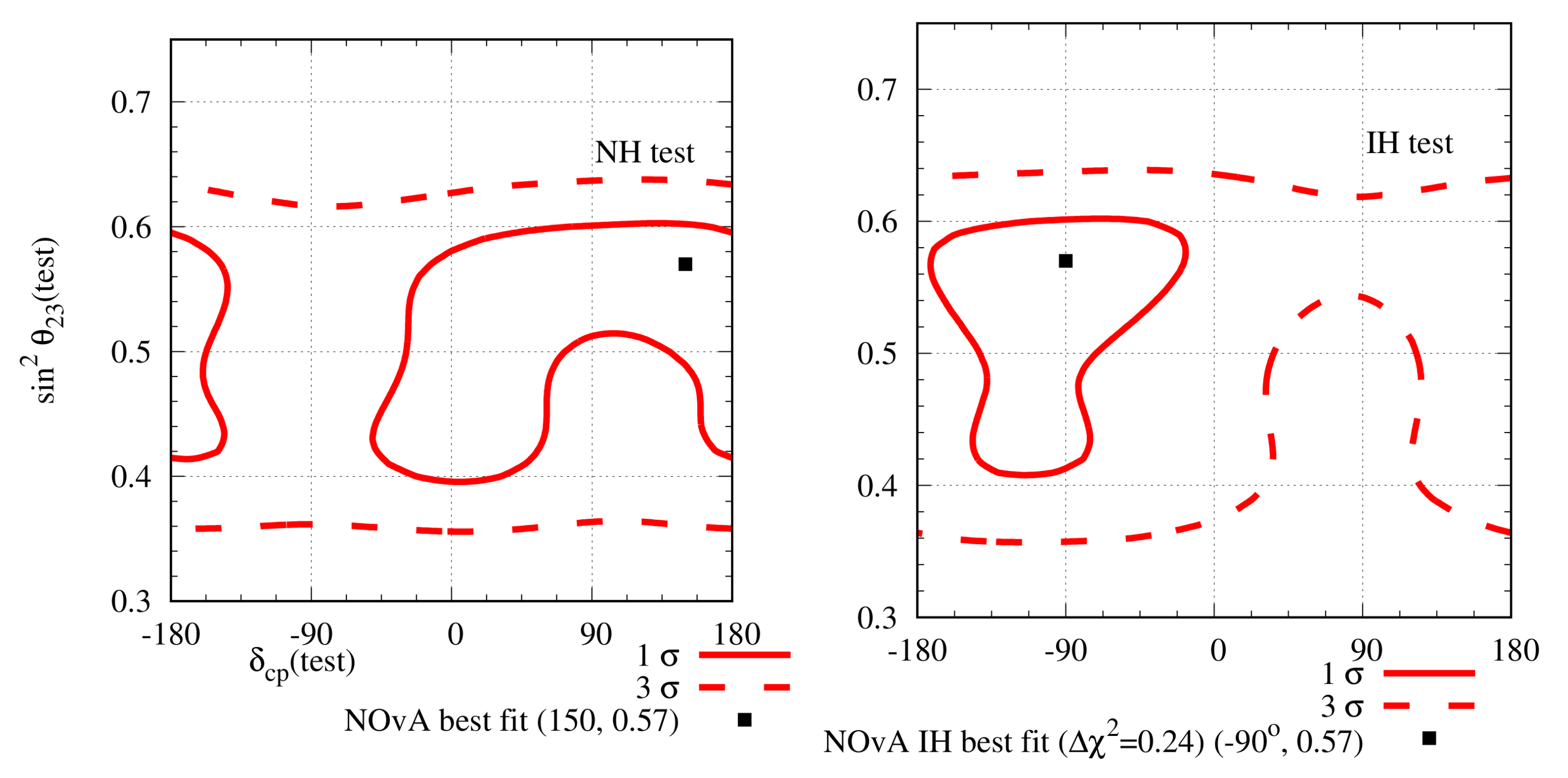

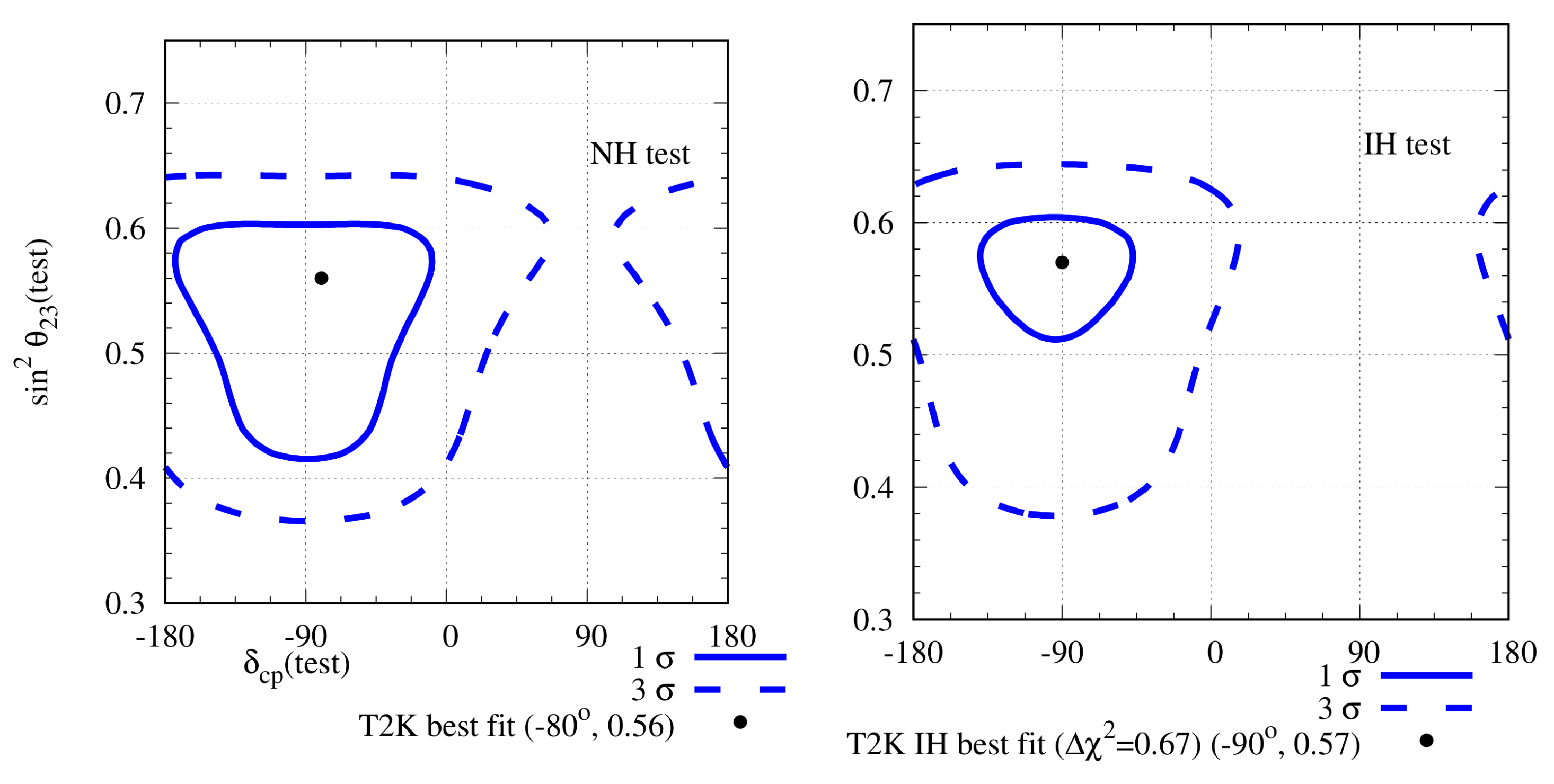

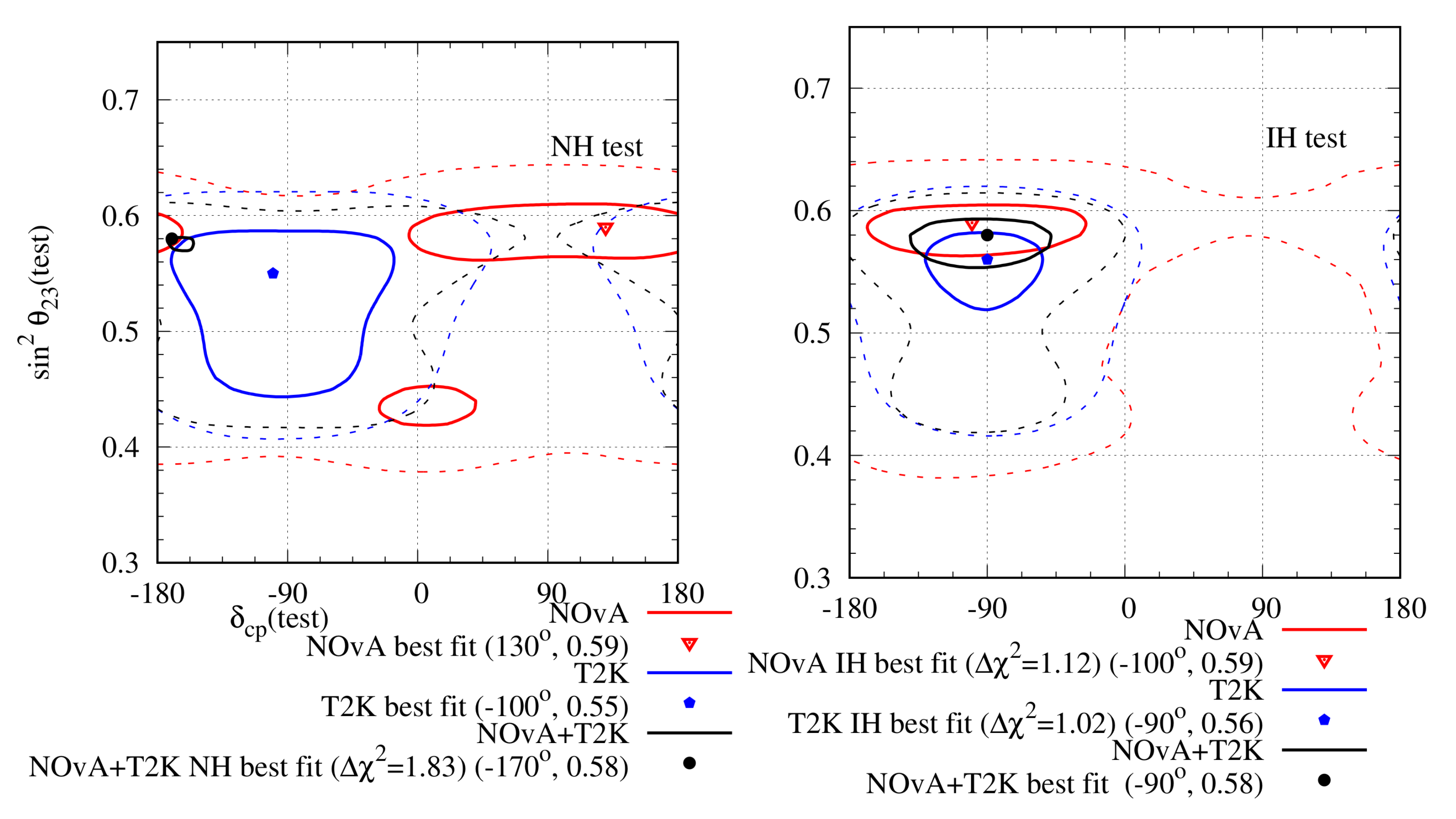

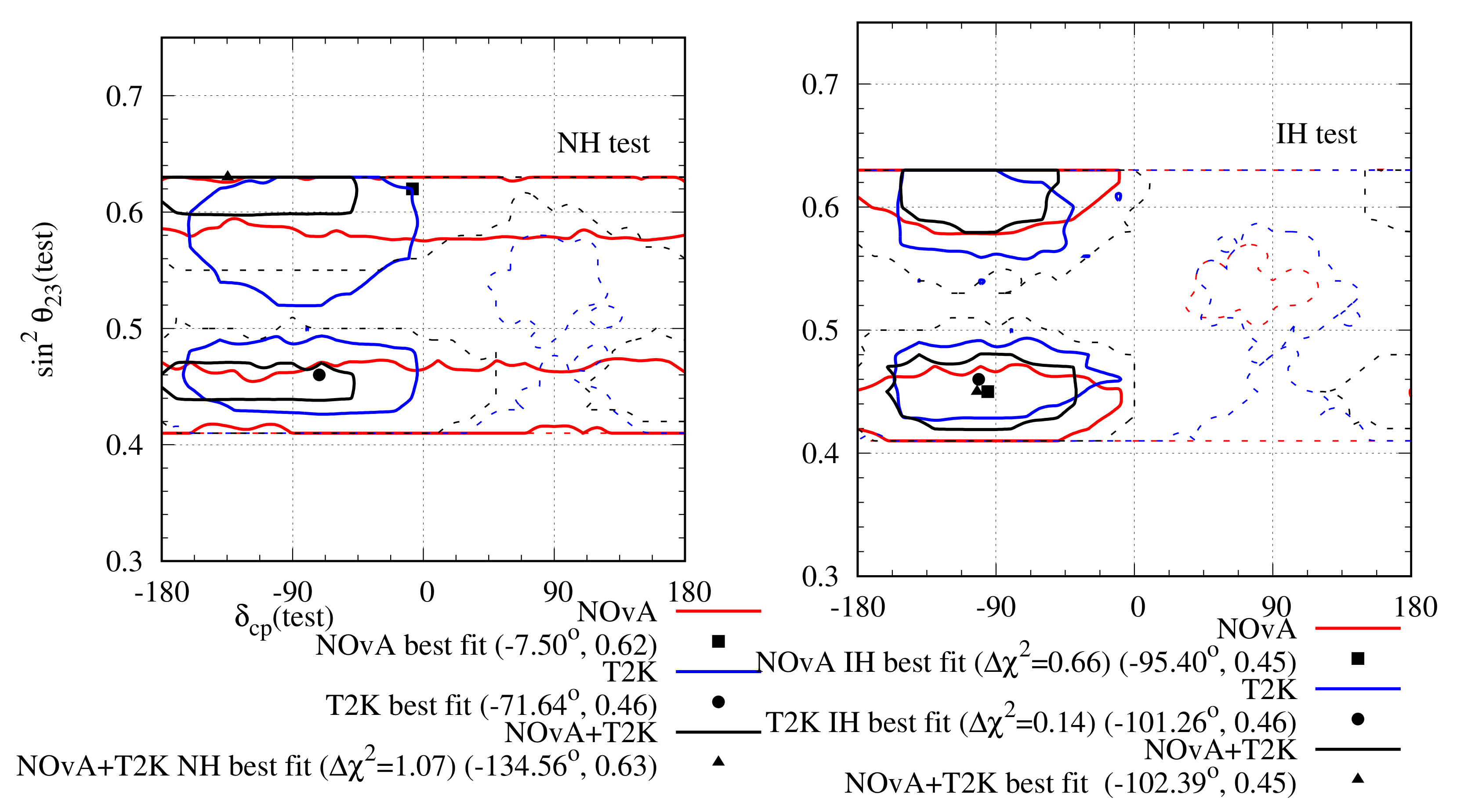

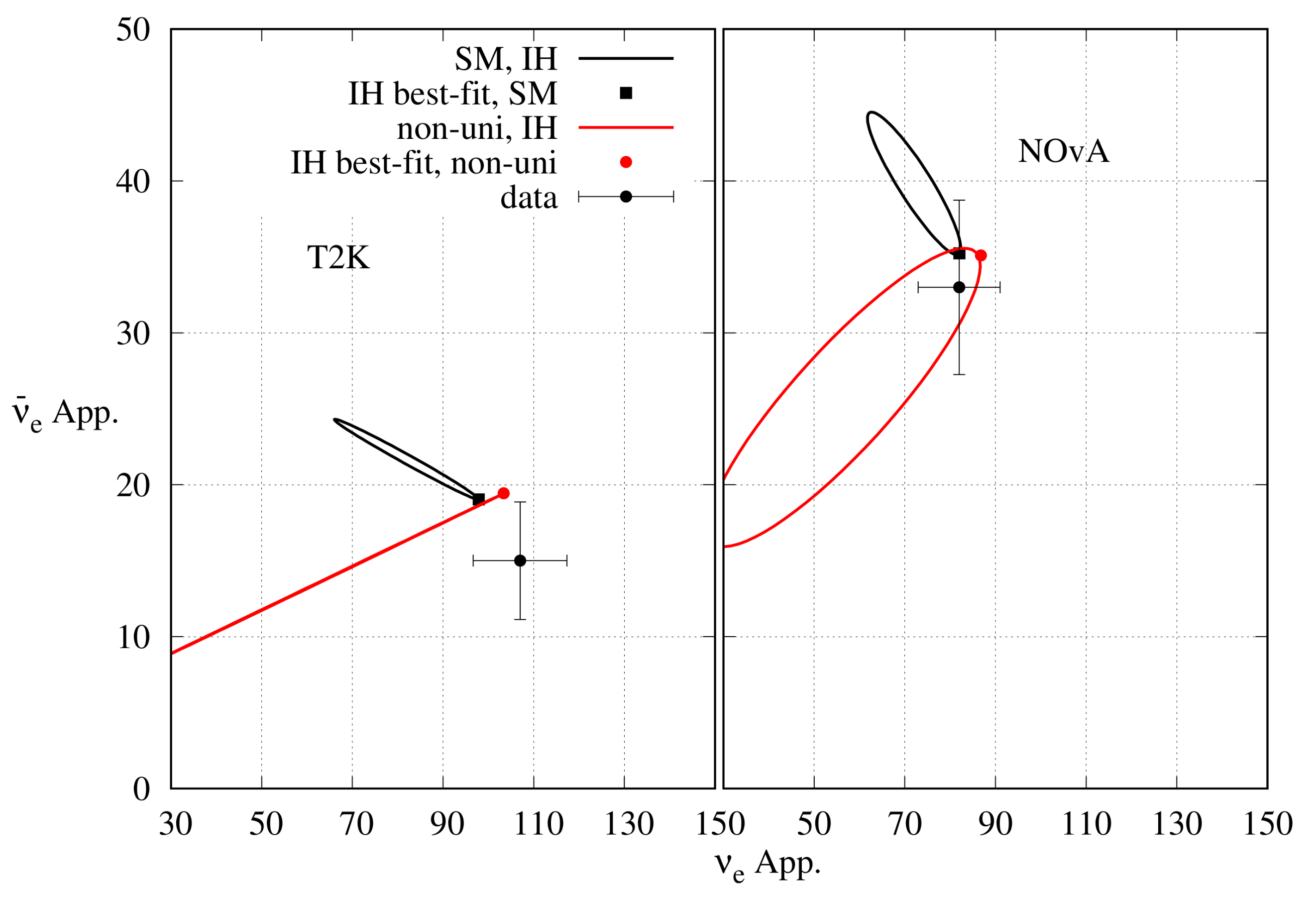

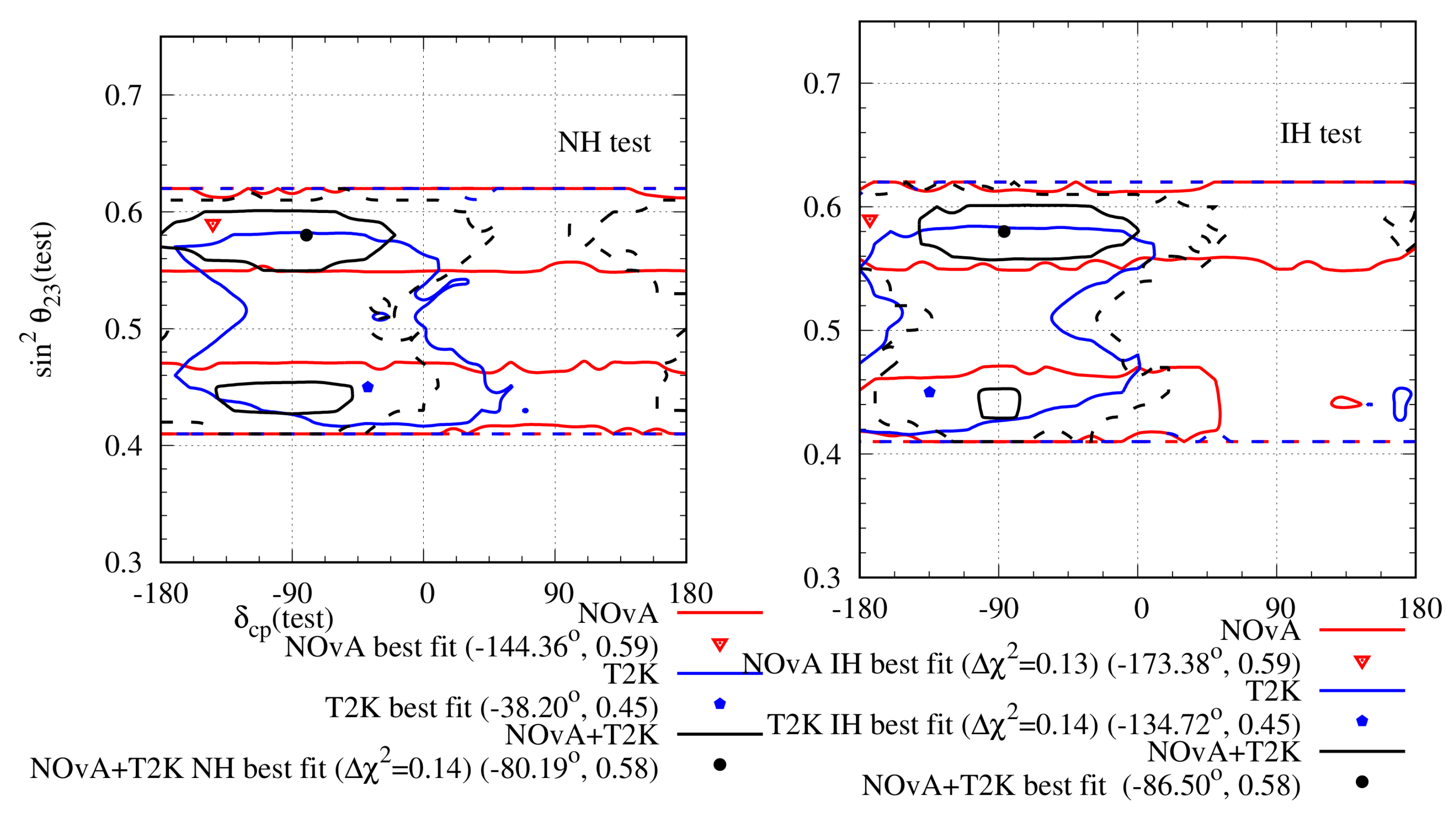

A tension between the best-fit points of T2K and NOνA existed from the very beginning, and it has only become stronger with time. This tension arises mostly from the appearance data of the two experiments. T2K observes a large excess in the $\nu_e$ appearance events compared to the expected event number at the reference point of vacuum oscillation, maximal $\theta_{23}$, and $\delta_{\rm CP} = 0$ (referred to as 000). This large excess dictates that $\delta_{\rm CP}$ be anchored around $-90^\circ$ and that $\theta_{23}$ be in HO, for both the hierarchies (‘’ and ‘’, with the former being the best-fit point). The appearance events observed by NOνA show a very different pattern. They are moderately larger than the expectation from the reference point in the $\nu_e$ channel and are consistent with it in the $\bar\nu_e$ channel. These two facts, when combined together, lead to two possible degenerate solutions for NOνA: A. NH-$\theta_{23}$ in HO-$\delta_{\rm CP}$ in UHP (‘’), and B. IH-$\theta_{23}$ in HO-$\delta_{\rm CP}$ in LHP (‘’). A fit of the combined T2K + NOνA data to a standard three-flavour oscillation framework has the best-fit point as IH-$\theta_{23}$ in HO-$\delta_{\rm CP}$ in LHP, which is reasonably close to the IH best-fit points of T2K and NOνA. If NH is assumed to be the true hierarchy, there is almost no allowed region within , despite the best-fit point of each experiment picking NH. This is the essential tension between the two experiments.

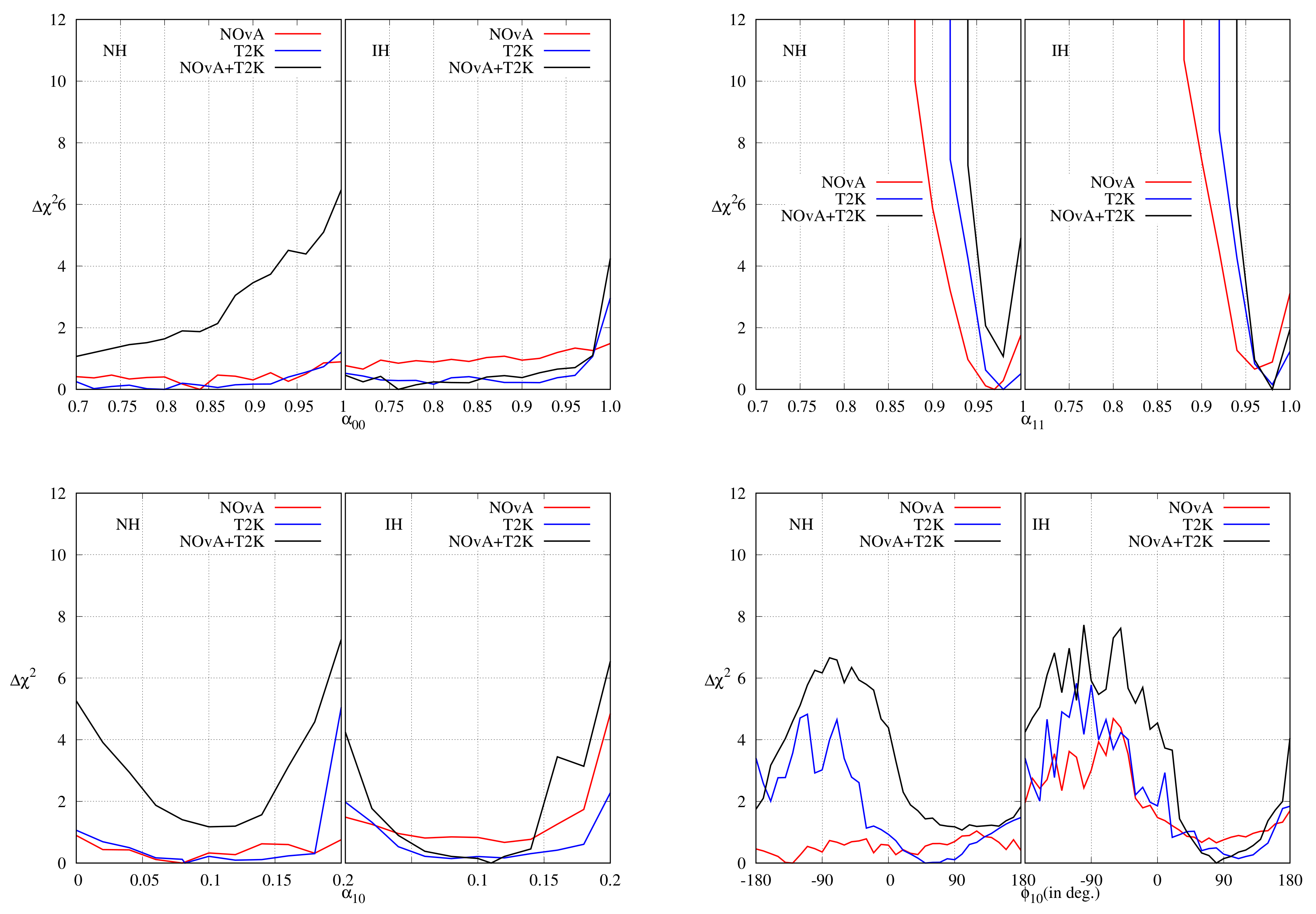

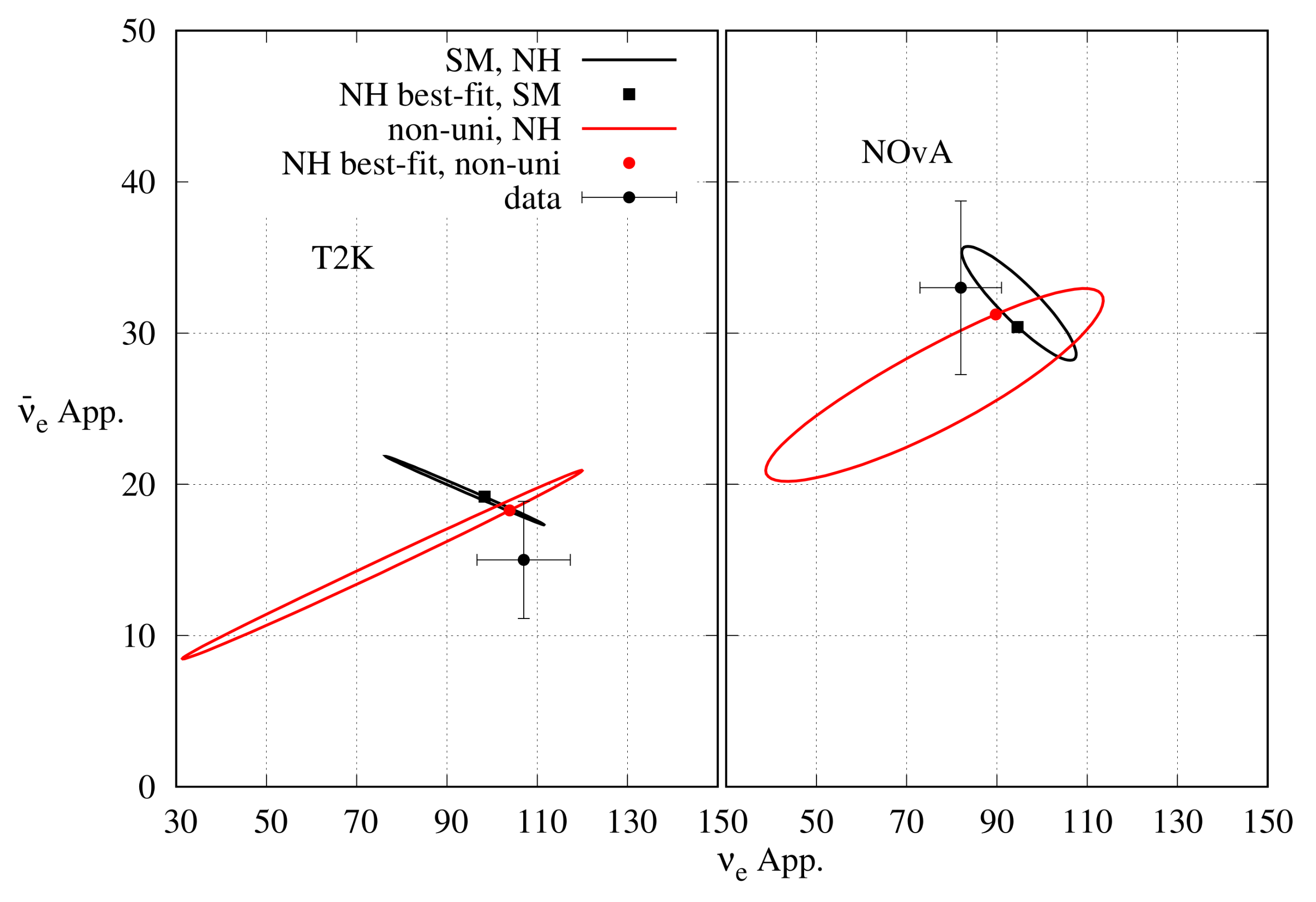

Several studies have been done to resolve this tension with BSM physics. Three different BSM scenarios have been considered in the literature: (1) Non-unitary mixing, (2) Lorentz invariance violation, and (3) non-standard interaction during neutrino propagation. All three scenarios bring the expected event numbers at the combined best-fit point at NH closer to the observed , and event numbers of both the experiments, and thus reduce the tension between them.

T2K and NOνA individually prefer non-unitary mixing over unitary mixing at C.L. Combined data from both of them prefer non-unitary mixing at C.L. Both the experiments lose hierarchy and octant sensitivity when analysed with non-unitary mixing. There is a large overlap between the -allowed regions on the plane of the two experiments.

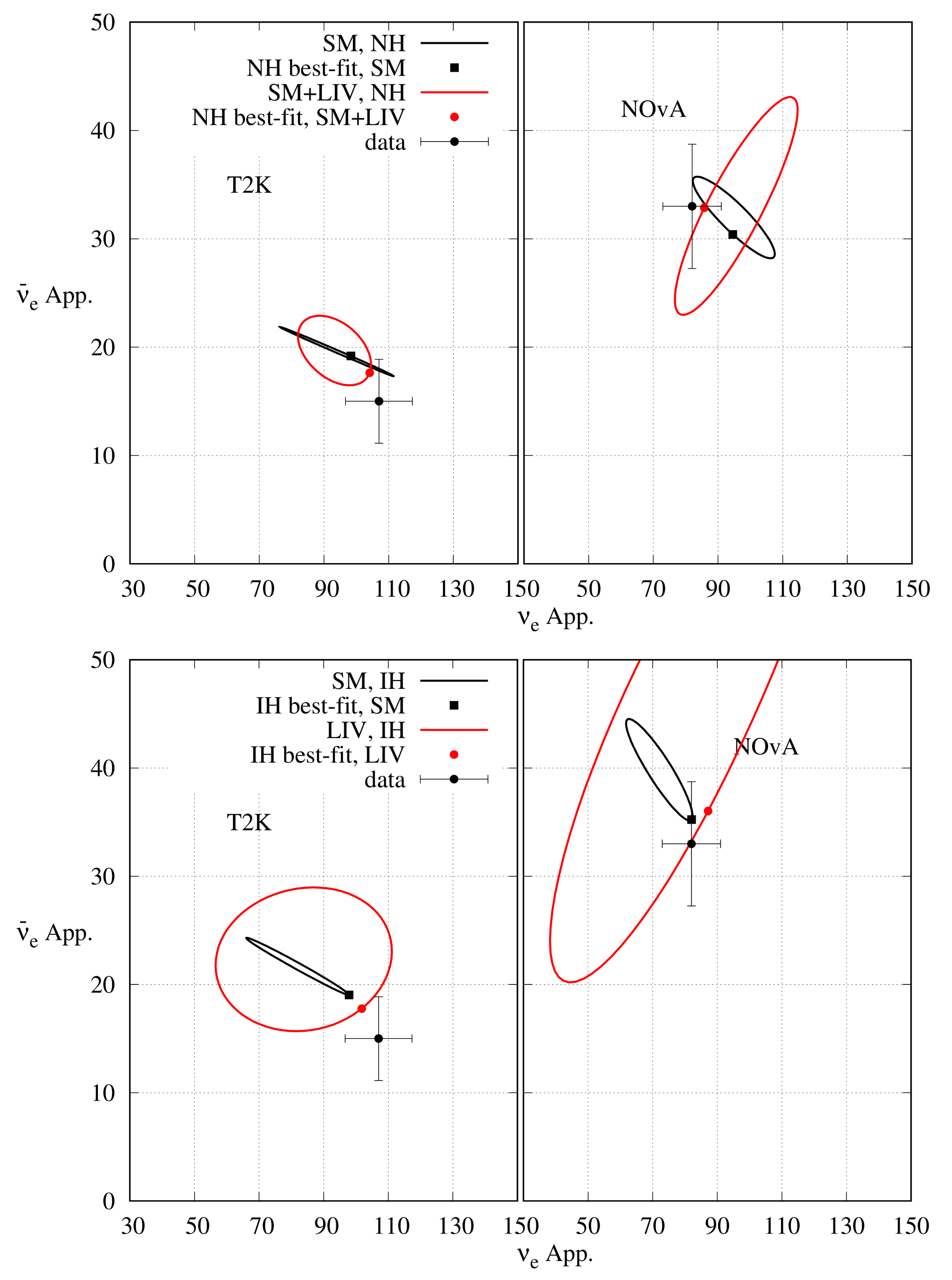

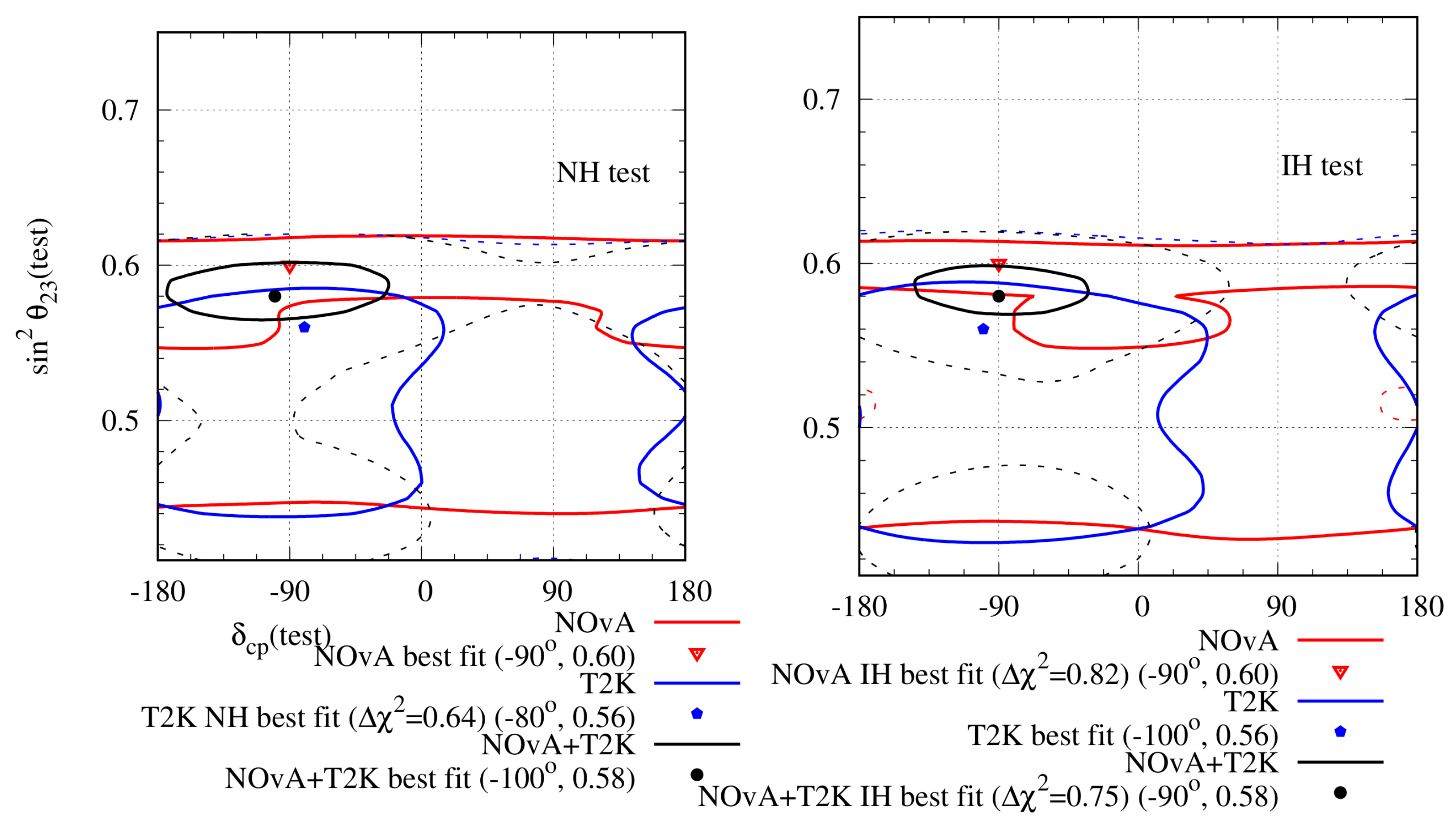

In the case of Lorentz invariance violation (LIV), T2K data prefer LIV over standard 3-flavour oscillation at . NOνA data cannot discriminate between the two hypotheses at . The combined analysis rules out standard oscillation at C.L. Similar to non-unitary mixing, both experiments lose hierarchy and octant sensitivity in the case of LIV too. In this case, there is also a large overlap between the -allowed regions on the plane of the two experiments.

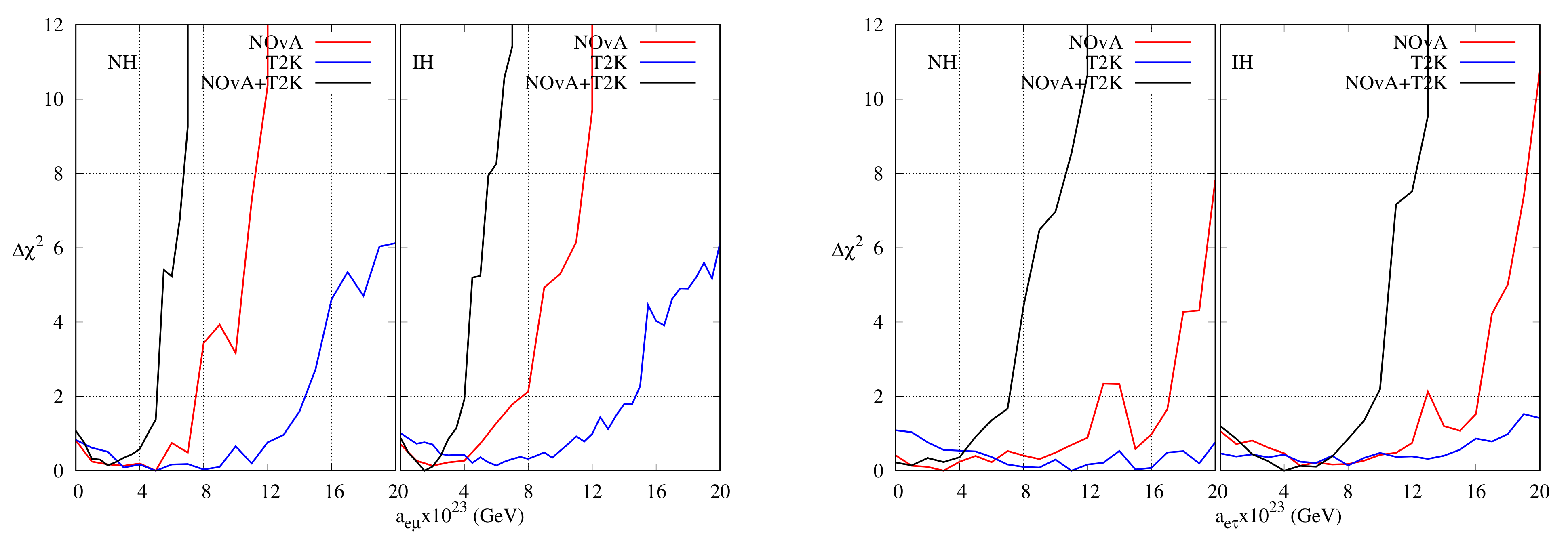

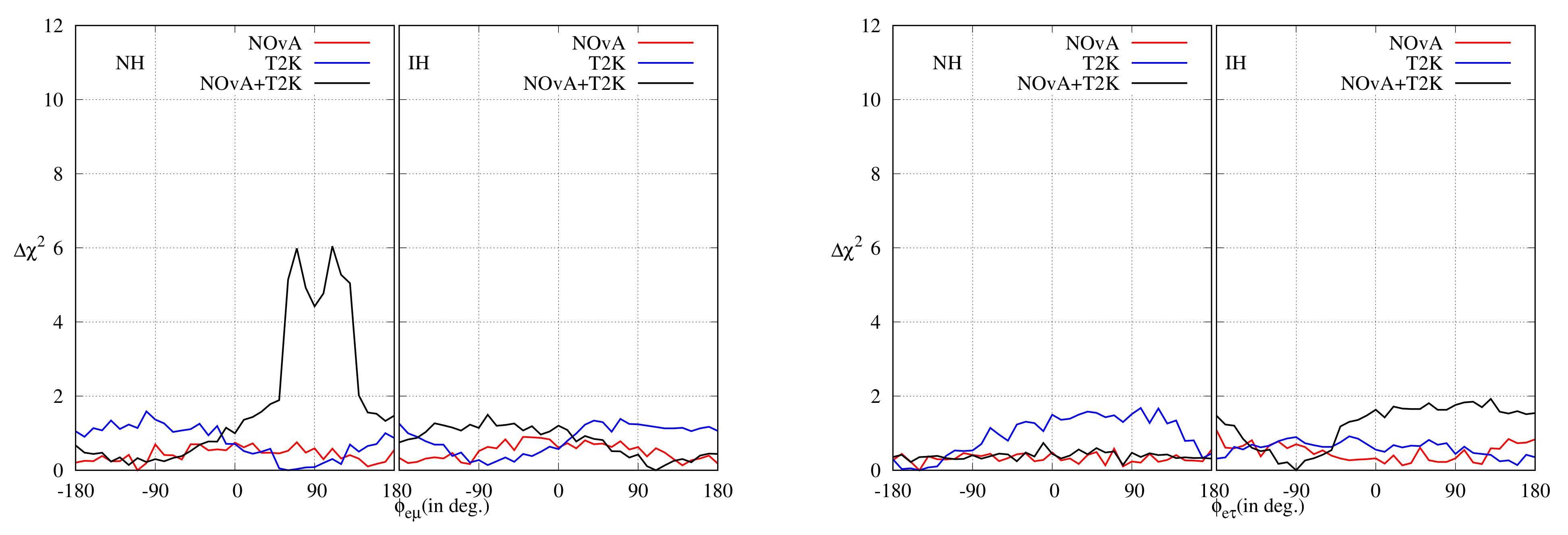

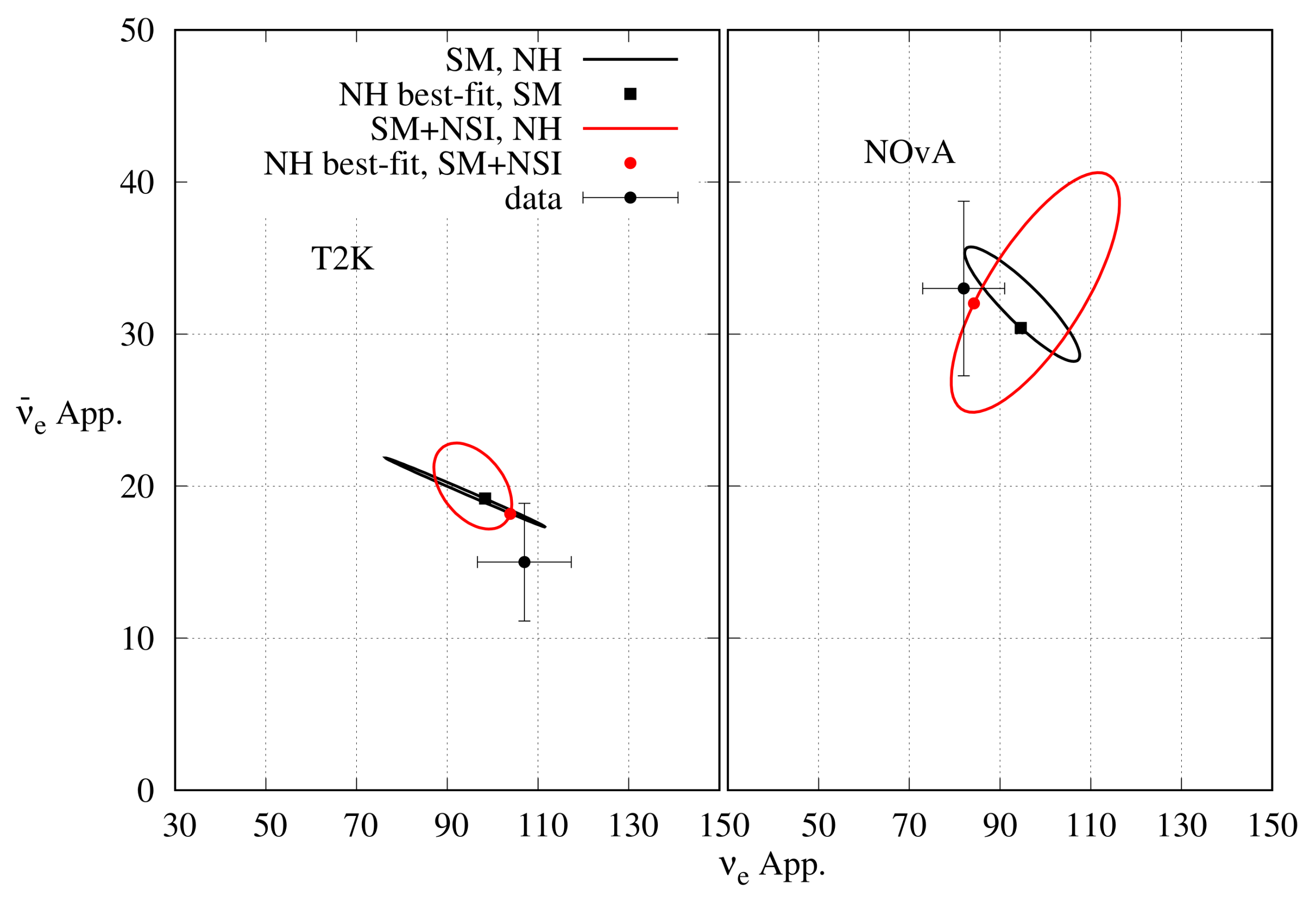

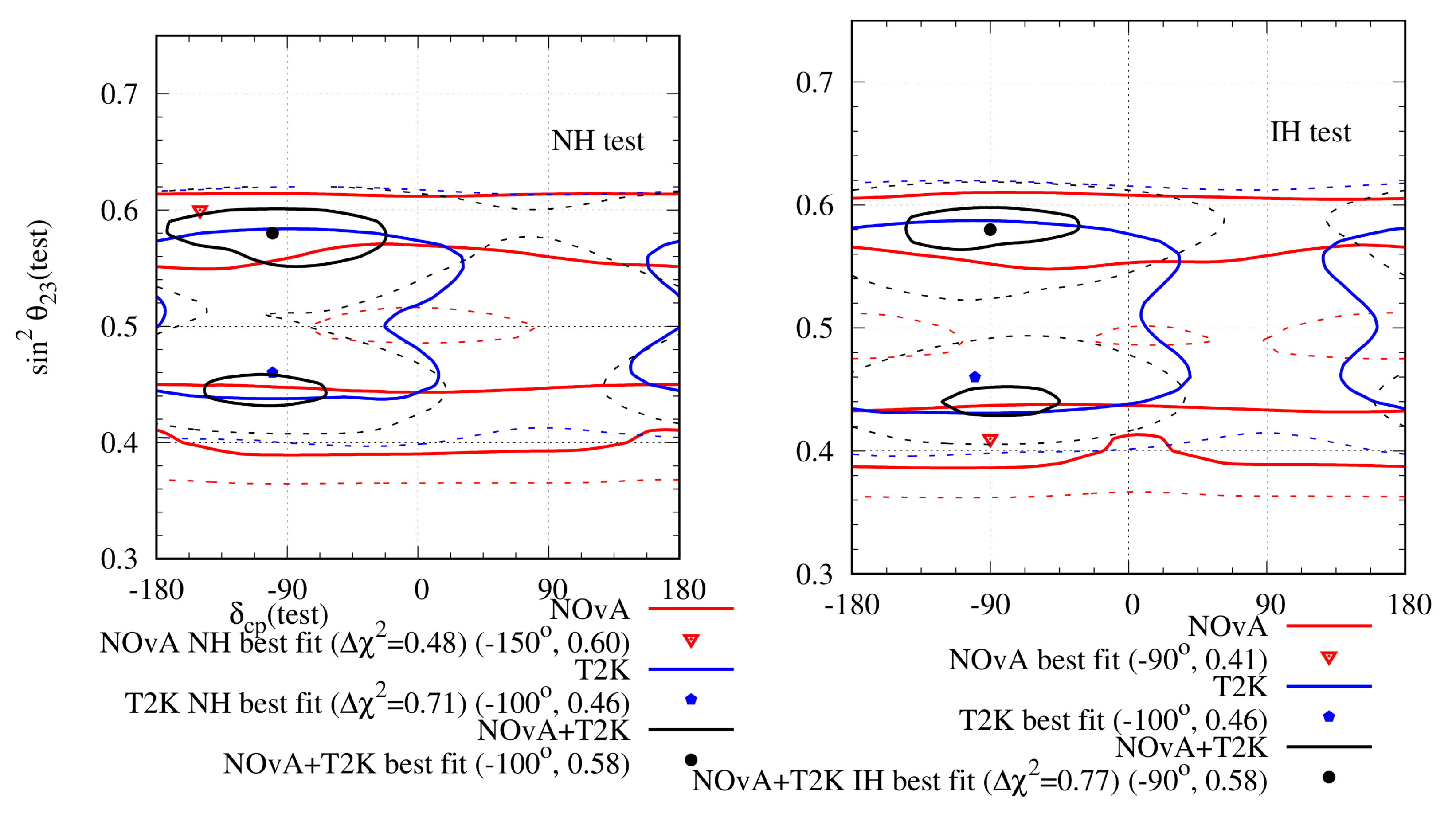

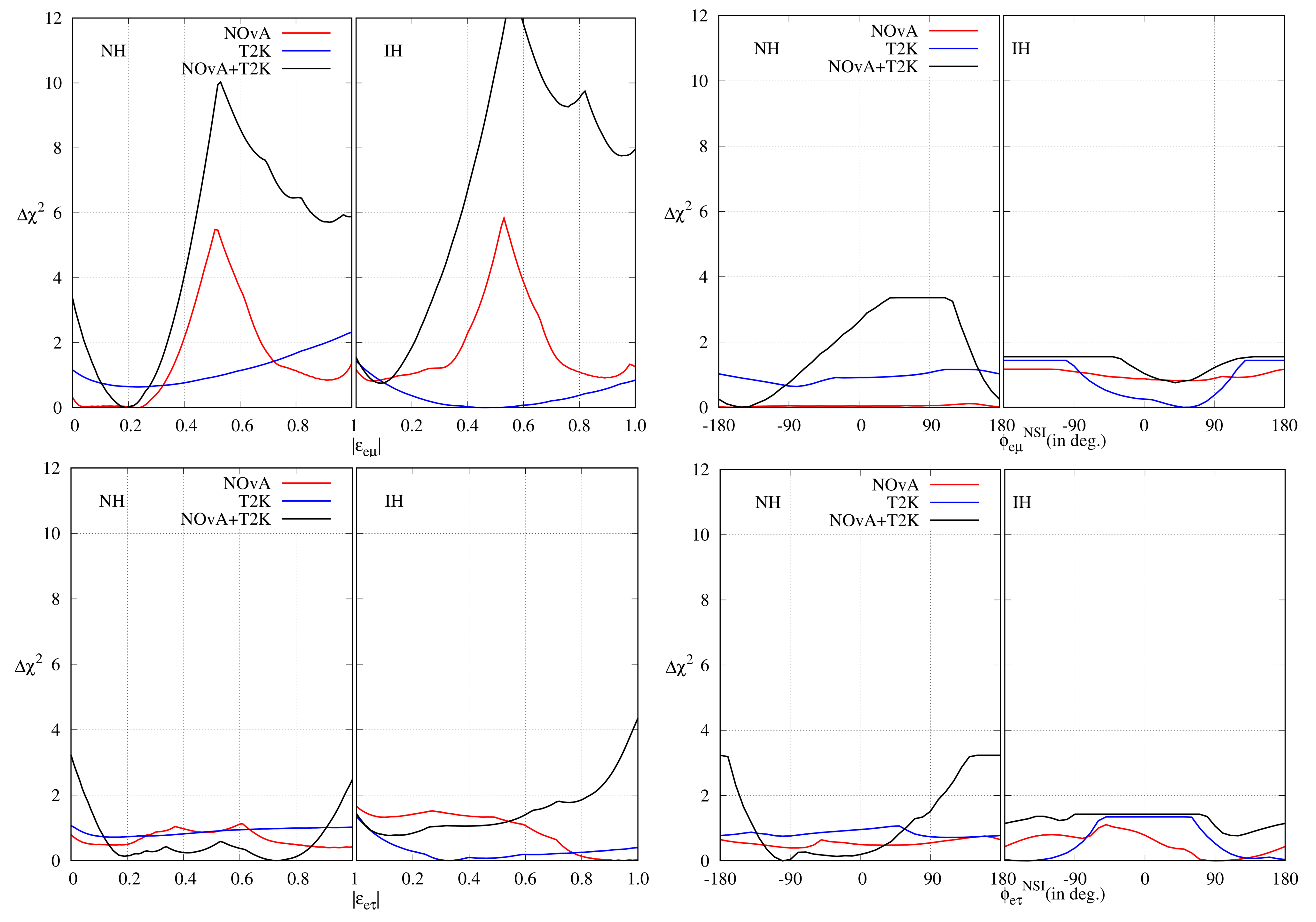

In the case of non-standard interactions (NSI), we considered the effects of , and one at a time. In case of , T2K data rule out standard oscillation at C.L., whereas NOνA data cannot discriminate between the two hypotheses. The combined data from NOνA and T2K rule out standard oscillation at more than C.L. In the case of , data from both NOνA and T2K rule out standard oscillation at , whereas their combined data rule out standard oscillation at C.L. As before, in the case of NSI also, each of the two experiments loses its hierarchy and octant sensitivity. A large overlap between the -allowed regions on the plane of the two experiments exists.

T2K and NOνA continue to take data. The additional data may either sharpen or reduce the tension. If the tension becomes sharper, then we need to explore which new physics scenario can best relieve this tension. We also need to test the predictions of the preferred new physics scenario in future neutrino oscillation experiments, such as T2HK [

151] and DUNE [

152,

153,

154].