1. Introduction

A basic and well-accepted fact is that space-time in the solar system can be approximately described by a Lorentz (Minkowski) metric $\eta_{\mu\nu} = \mathrm{diag}(1,-1,-1,-1)$ (Greek indices belong to the set $\{0,1,2,3\}$), and therefore physical theories are invariant under the Lorentz symmetry group.

Ref. [1] informs us that in the general theory of relativity (GR), the metric is locally $\eta_{\mu\nu}$, but not globally, due to curvature. This is dictated by the principle of equivalence. Other requirements of GR, including diffeomorphism invariance and the Newtonian limit for weak gravity and slowly changing density profiles, lead to the equations of Einstein:

$$G_{\mu\nu} = -\frac{8\pi G}{c^4}\, T_{\mu\nu} \qquad (1)$$

Here $G_{\mu\nu}$ stands for the Einstein tensor, $T_{\mu\nu}$ symbolizes the stress-energy tensor, $G$ is the Newtonian universal gravitational constant, and $c$ is the speed of light in vacuum.

Does one need to postulate that space-time is locally Lorentzian based on an empirical (unexplained) fact? The answer is no. This property can be derived from the field equations based on the stability of the Minkowskian solution [2]. Other, unstable flat solutions of GR, which are of a non-Minkowskian type, such as a Euclidean metric, can exist in a limited region of space-time, in which the physical equations will have a Euclidean symmetry group. In a Euclidean metric there are no speed limitations, and thus a particle can travel faster than the speed of light [3].
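The absence of a speed limit can be illustrated with the line element of a free particle moving with velocity $\vec{v}$. This is a minimal sketch, assuming $x^0 = ct$ and the signature conventions $(1,-1,-1,-1)$ for the Lorentzian case and $(-1,-1,-1,-1)$ for the Euclidean case; the labels $ds^2_{L}$ and $ds^2_{E}$ are introduced here for illustration only:

```latex
ds^2_{L} = c^2 dt^2 - d\vec{x}^{\,2} = c^2 dt^2 \left(1 - \frac{v^2}{c^2}\right),
\qquad
ds^2_{E} = -c^2 dt^2 - d\vec{x}^{\,2} = -c^2 dt^2 \left(1 + \frac{v^2}{c^2}\right)
```

In the Lorentzian case the sign of $ds^2$ changes at $v = c$, which separates subluminal from superluminal trajectories. In the Euclidean case $ds^2$ keeps the same sign for every $v$, so no particular velocity is singled out.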

Eddington ([4], p. 25) has already studied the possibility that the universe contains different domains, some of which are locally Lorentzian while others have some other local metric. The stability of those domains was not discussed by Eddington.

One can find in the literature other explanations for the signature of the space-time metric [5,6,7,8]; however, all other metric justifications rely on theoretical structures and assumptions that are external to GR and thus should be abandoned according to Occam's razor principle. Previous works [2,9,10,11] have shown that the GR equations and a linear stability analysis of the Lorentzian metric suffice to obtain a unique choice, the Lorentzian metric being the only one that is stable in an empty or almost empty space-time. Other allowed metrics are unstable and can thus exist in only a limited region of empty space-time. In the case of high density (such as the very early universe or near a black hole singularity) the situation is less certain [12]. The existence of the intuitive partition of a four-dimensional space into space and time is conjectured to be a feature of an (almost) empty space-time. Such a partition is not a property of a general solution of Einstein's equations, such as the one discovered by Gödel [13]. However, this problem is not a characteristic of exotic space-times, but rather a property of standard cosmological models. We mention that the choice of coordinates in the Fisher approach to physics can also be justified using the above stability analysis [14]. Although the stability analysis of previous work is linear, we also note the nonlinear stability analysis of the Lorentzian metric in Ref. [15]. We also point out that the nonlinear instability of a constant metric of different signatures remains an open question at this time.

Cosmological theory encounters many difficulties related to the horizon, flatness, entropy, and monopole problems [16]. A solution to these problems was introduced by Alan Guth using his famous cosmological inflation [17]. Entropy difficulties that remained in the original inflation model have led to a new cosmological inflation put forward by Linde [18], which solves the entropy problem at the price of fine-tuned parameters. The same criticism is also relevant to chaotic inflation, also conjectured by Linde [19]. On 17 March 2014, astrophysicists of the BICEP2 group reported the detection of inflationary gravitational waves in the B-mode power spectrum, providing experimental evidence for the theory of inflation [20]. However, on 19 June 2014, the collaboration lowered its confidence in the findings.

There is a basic deficiency relevant to all inflation models, which require one or more ad hoc scalar fields. Those conjectured physical fields have no function, implication, or purpose in nature except for their use in the inflation model, which is in gross violation of Occam's razor principle. Occam's razor principle demands that a minimum number of assumptions explain a maximum number of phenomena (and not the other way around). Postulating a field to explain every phenomenon does not serve the purpose of theoretical physics. As Einstein stated: “Everything should be made as simple as possible, but not simpler”.

The idea of a Euclidean metric in the early universe has attracted the attention of a long and distinguished list of authors including Sakharov [21,22], Hawking [23], and Ellis [24]. Sakharov's work on changes in the metric signature was authored during an era in which quantum cosmology was a vibrant topic of research. It was influenced by the papers of Vilenkin [25], Hartle and Hawking [23], and others. Sakharov conjectured that the early beginning of the universe is a result of a quantum transition from a space-time with a Euclidean signature to one with a Lorentzian signature. However, Sakharov did not give a detailed mathematical theory of this transition. As mentioned in Ref. [26], the idea became popular later. It was actively studied in the 1990s (see, e.g., Ref. [27] and references mentioned therein). Different approaches exist to the change of metric; some are classical [28], while others are quantum [29,30]. Here we will not concern ourselves with the mechanism of metric change (however, the interested reader can refer to Ref. [3] in Section 2), but rather with the dynamics and statistical physics of the particles within a portion of space-time in which a metric with a specific signature prevails.

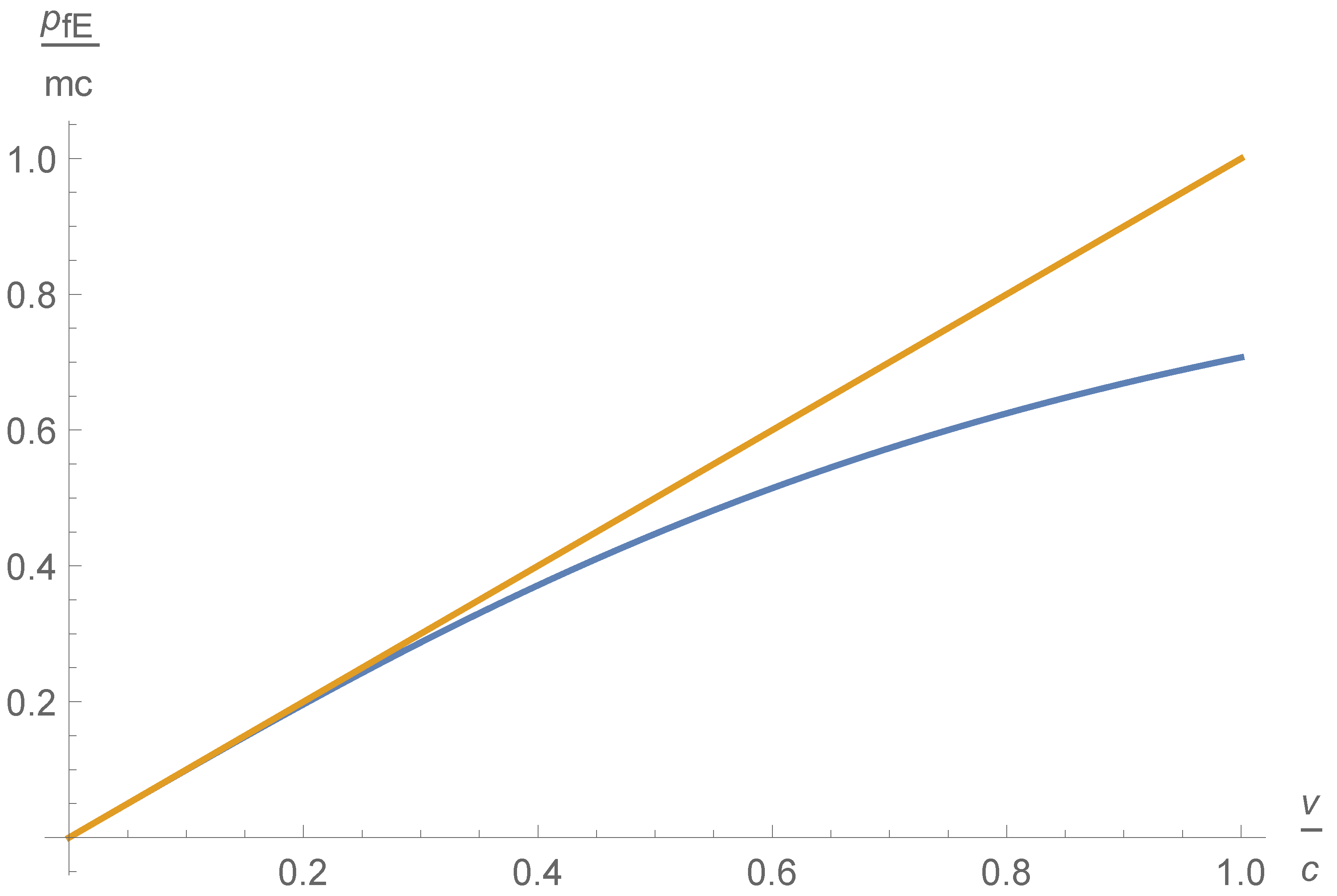

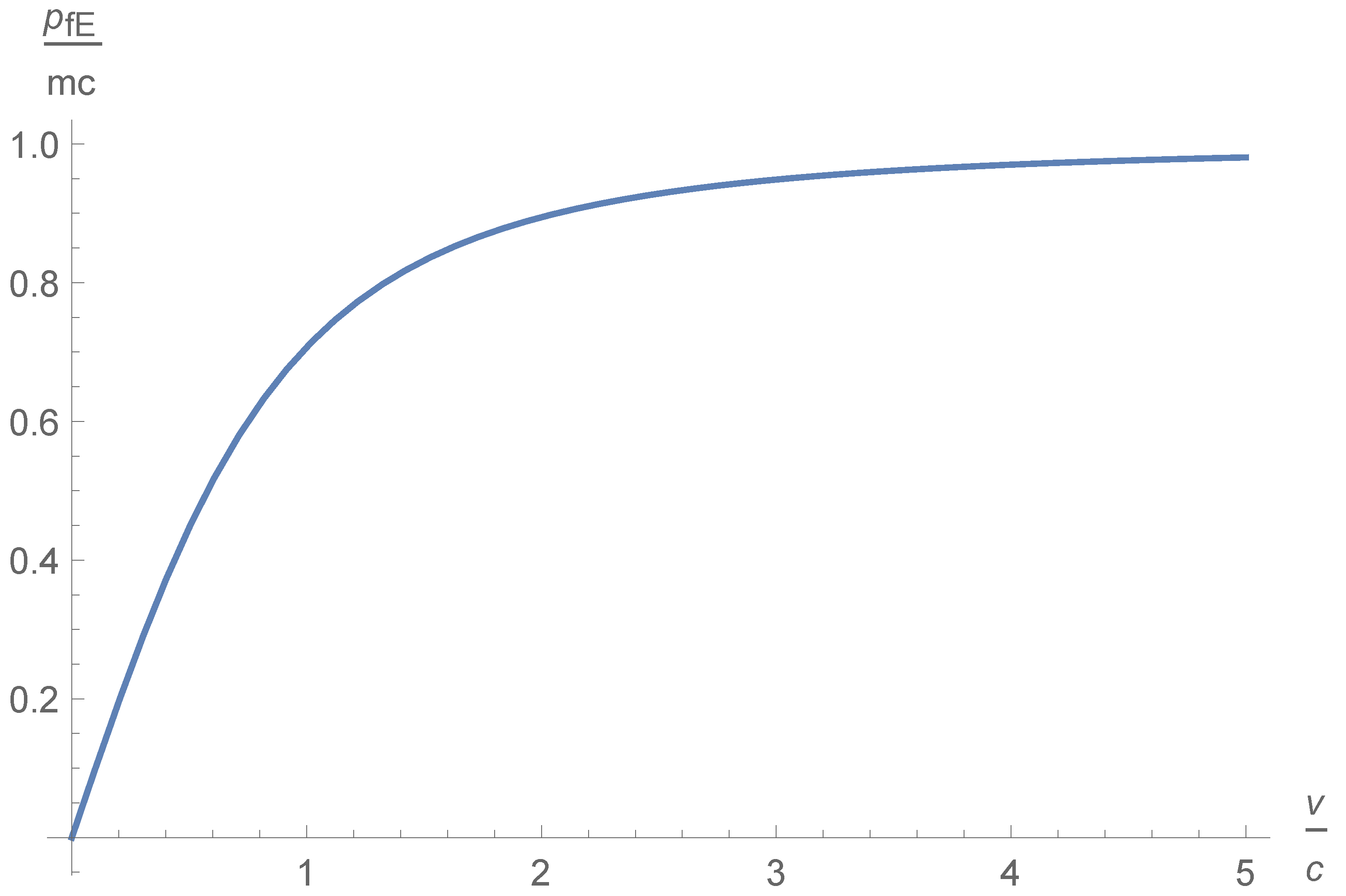

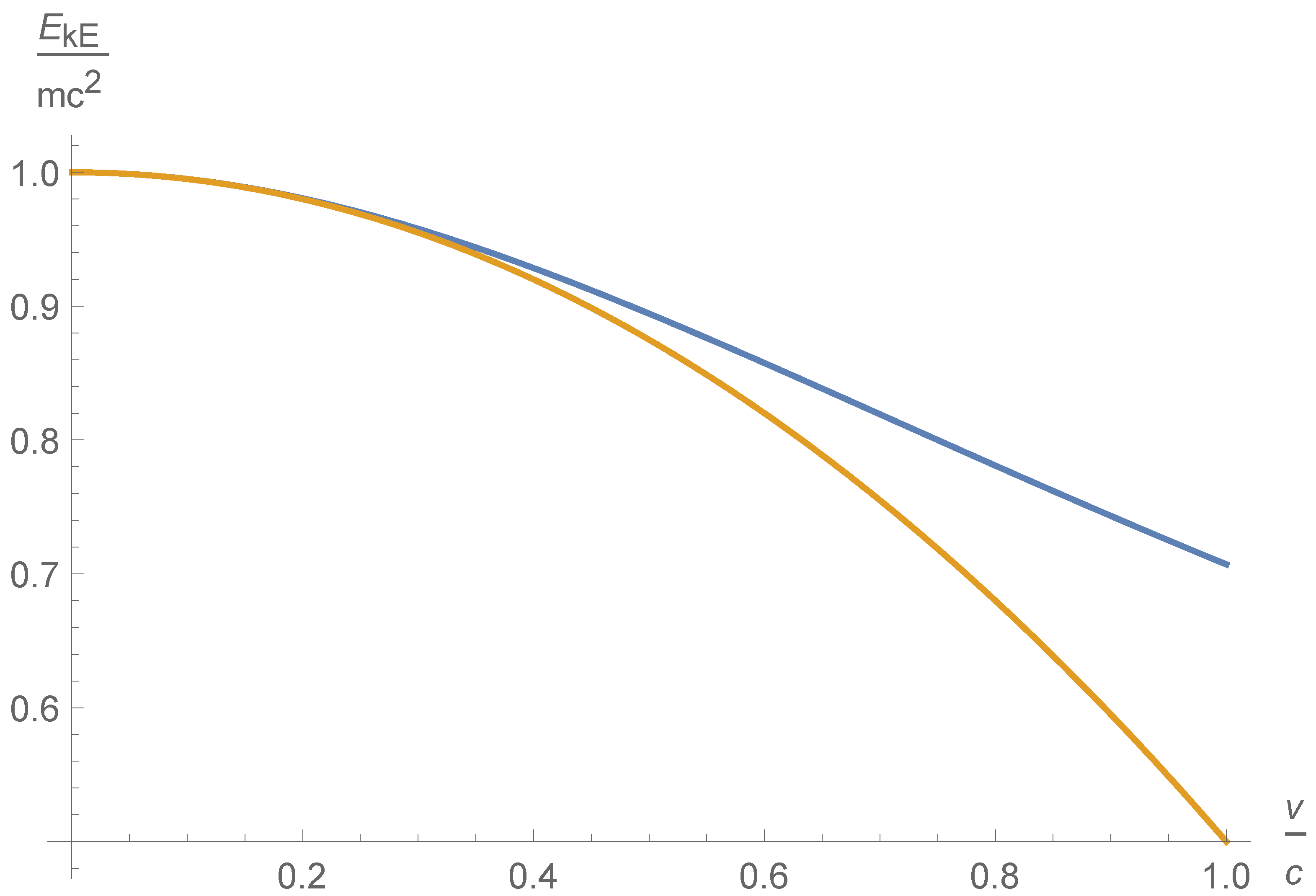

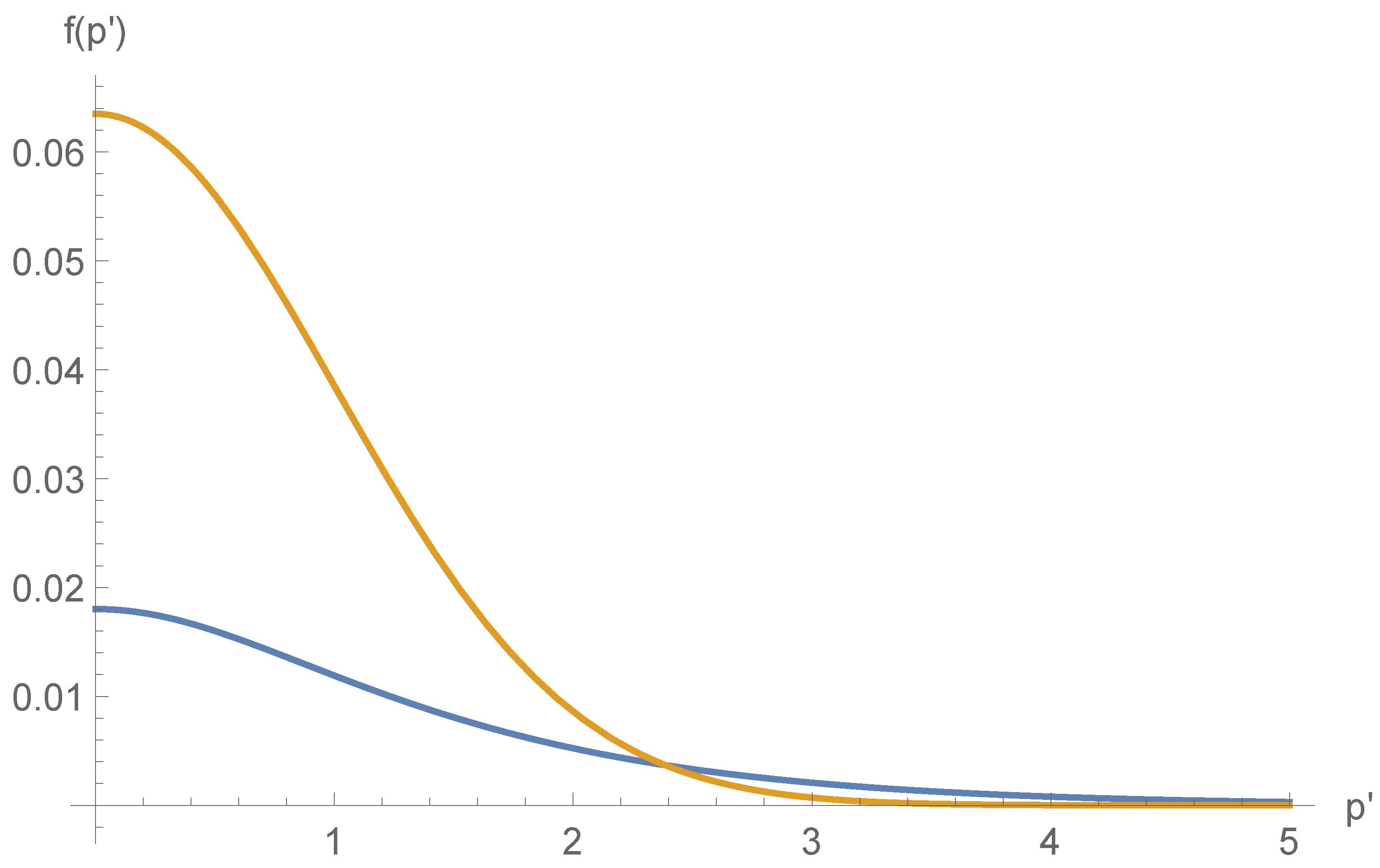

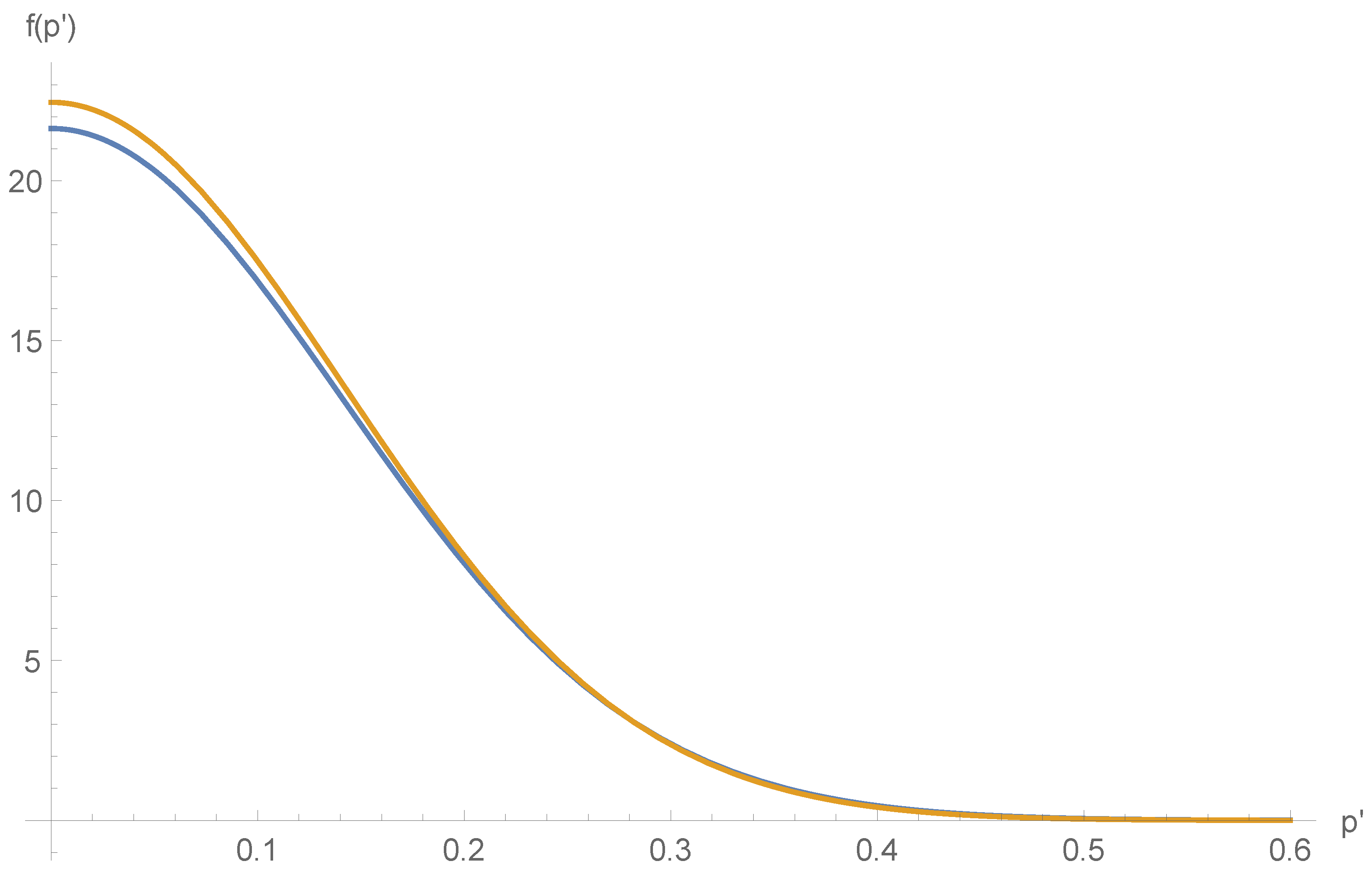

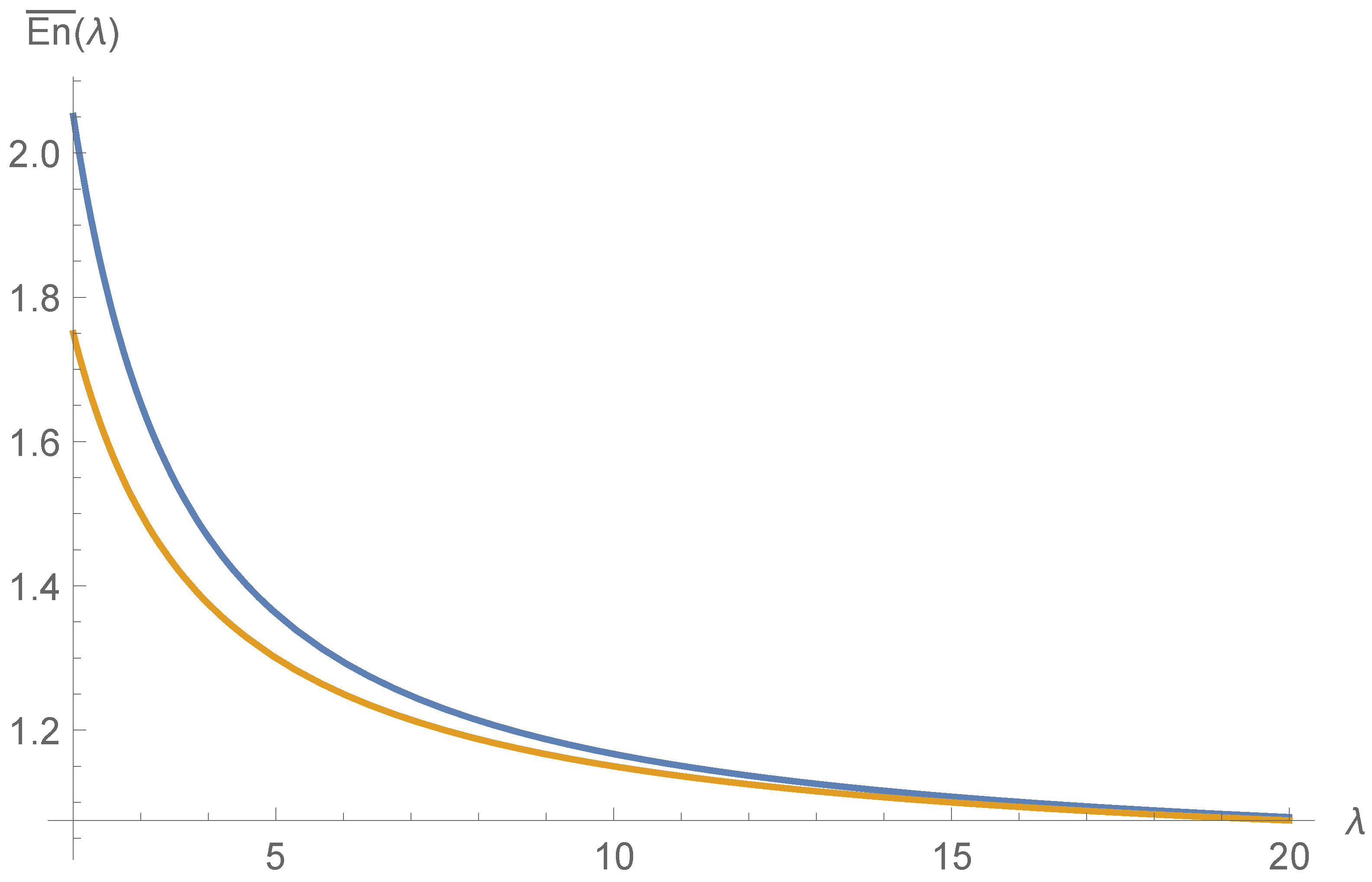

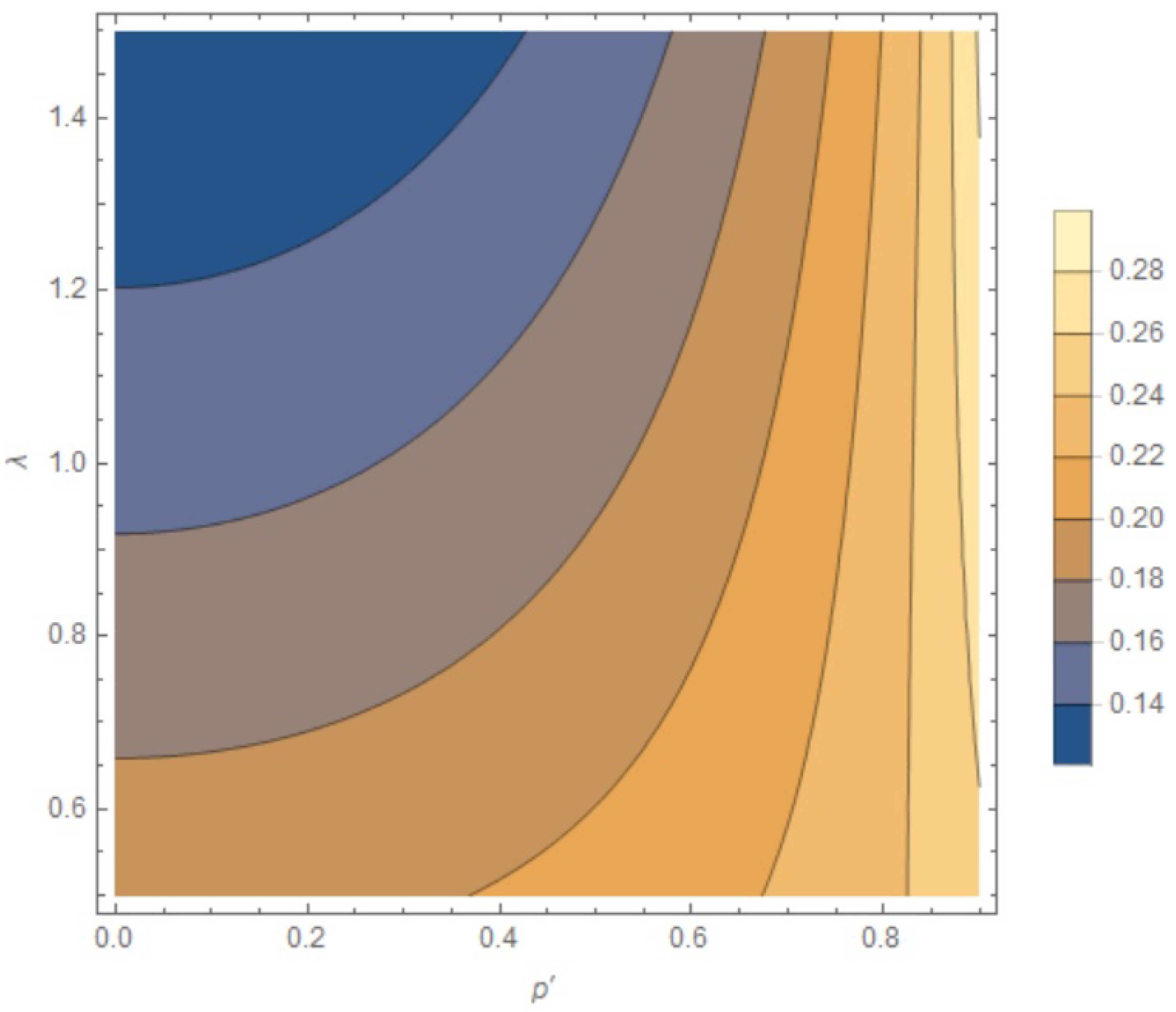

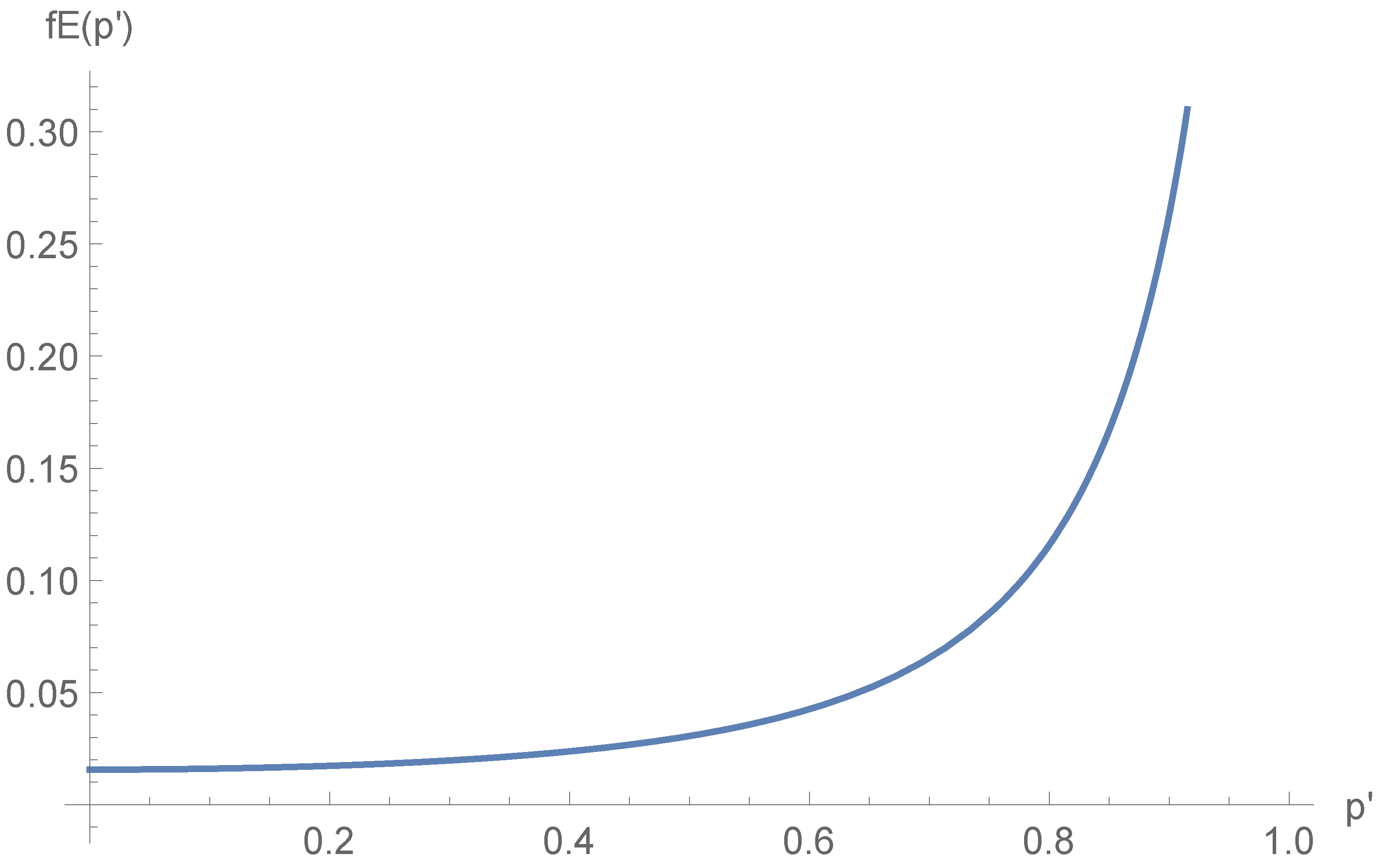

The plan of this paper is as follows: First we make a comment about the definition of time in a Euclidean space, after which we describe a particle trajectory in a general flat space. Then we analyze particle trajectories in Lorentz space-time for the standard subluminal and superluminal cases. The next section discusses Euclidean metric dynamics. The following section is devoted to a statistical analysis of free particles of the three different types (Euclidean, subluminal Lorentzian, and superluminal Lorentzian), in which we attempt to describe an equilibrium probability density function of a canonical ensemble of free particles. The possible physical implications of the current theory are then described. Finally, we make some concluding remarks.

2. The Definition of the Temporal Coordinate

Obviously, for the Minkowski metric $\eta_{\mu\nu} = \mathrm{diag}(1,-1,-1,-1)$, the underlying symmetry is Lorentz symmetry, and the plus sign (different from the three minus signs) singles out the time coordinate. A basic problem for a Euclidean metric is how to define time. If the underlying symmetry is Euclidean, then all coordinates have the same meaning, so the first thing we need to do is to define time.

Notice, however, that if the universe initially had a metric of signature (−1, −1, −1, −1), this type of solution would become unstable as the universe expands and the density decreases [2,9,11,12], eventually evolving into a solution with a Minkowski signature (1, −1, −1, −1). This involves a spontaneous symmetry breaking in an arbitrary direction, which will become the “time” coordinate. This resembles the situation of a pencil well balanced on its tip on a table. The pencil will eventually fall in some direction, but one cannot tell in advance in which direction it will fall. After it falls, the initial cylindrical symmetry is obviously broken, and the direction of falling can be taken as the reference axis for the azimuthal angle.

Thus, in hindsight, we can distinguish one specific direction as a temporal direction, which we designate as the time axis. This is the axis along which the symmetry has been broken. Thus, we can also designate as temporal the same direction that initially could not be distinguished from any other direction, only because we know that after the universe reaches a certain expansion it will be recognized as different from the other directions.

5. Some Possible Cosmological and Physical Implications

Suppose that the universe is initially Euclidean. Once it starts to expand, the temperature drops and the Euclidean particles become faster, thus increasing the rate of the universe's expansion and thermalization; obviously there is no horizon (homogeneity) problem for the Euclidean particles. As the universe expands further, the temperature continues to drop, making the particles even faster, thus creating a positive feedback loop. This increased expansion is cosmological inflation, but without an ad hoc inflationary field [35]. This is the primordial particle accelerator of the cosmos. We notice that Higgs-type fields do not give the correct density perturbation spectrum [35]; hence one is forced to postulate a new field that is not a part of any particle model and is thus a possible but inelegant solution of the homogeneity problem. Alternatively, one can speculate that homogeneity is achieved by ordinary matter, which can become superluminal as the current analysis shows.

However, as the universe expands to a certain limit, the density drops, the Euclidean metric becomes unstable [2], and a Lorentzian metric develops instead. In a Lorentzian space-time we have two distinct particle species that cannot mix: the subluminal particles that we are familiar with, and the superluminal particles, which tend to reach higher and higher velocities and thus move to the farther reaches of the universe, quite beyond our observational reach. The details of this process are left for future work. Those particles may be what is perceived as dark energy [36], which affects the velocities of very distant supernovae and the CMB spectrum.

The standard cosmological model predicts that the majority of the universe is made of an unexplained “dark energy” component; obviously, Occam's razor principle will vindicate a model in which such an ad hoc component is not needed. Notice, however, that the effect of superluminal particles on the CMB spectrum should be elaborated. This is beyond the scope of the current paper.

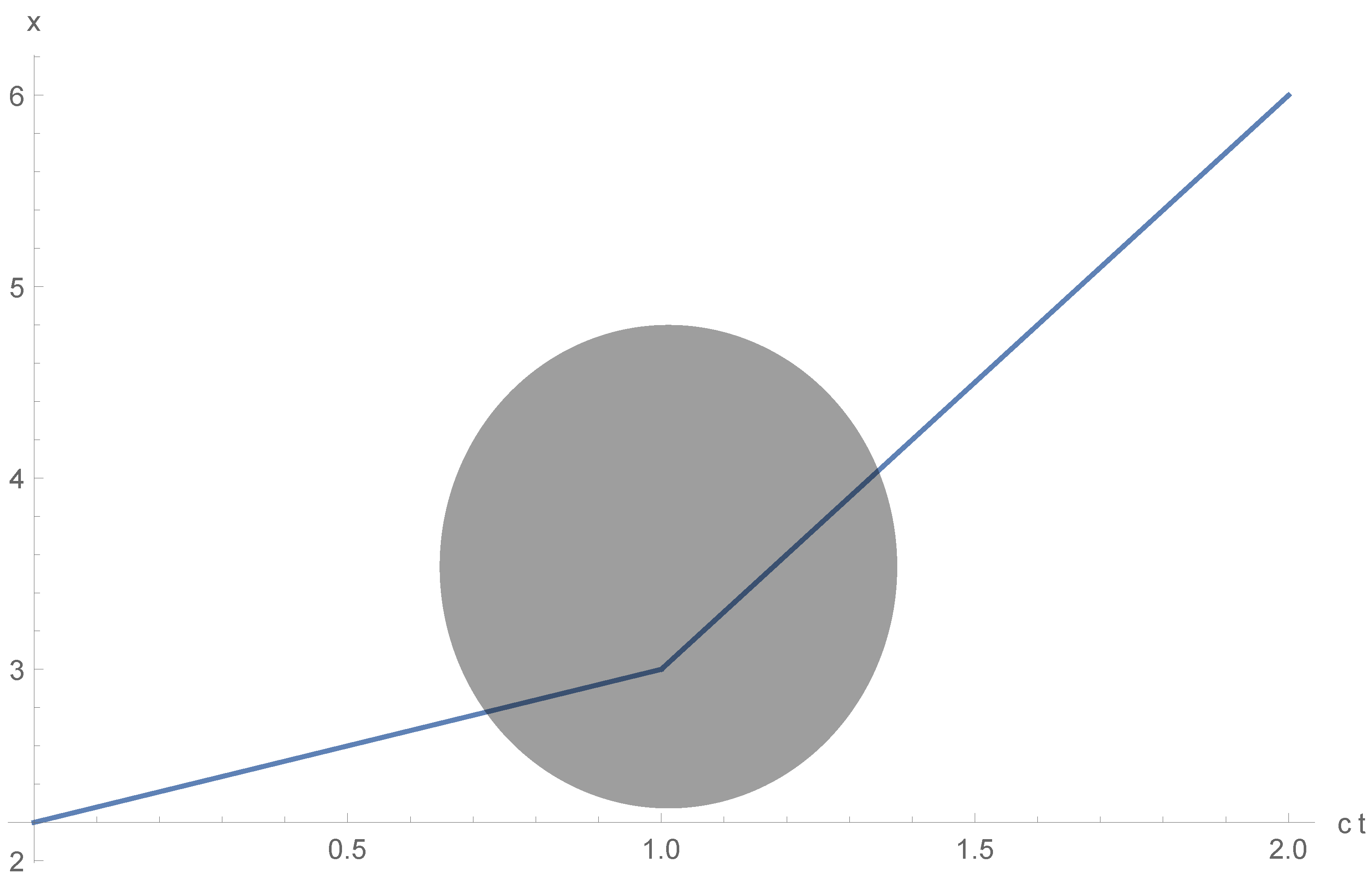

Another obvious physical implication of the previous analysis involves a far-fetched technological scenario, in which a particle is accelerated to a velocity close to the velocity $c$ in a Lorentz space-time, enters an artificially created Euclidean space-time, is accelerated further in this region to velocities above the speed $c$, and finally emerges in a Lorentz space in which it will remain above the speed $c$ forever unless it is decelerated in a Euclidean space again (see Figure 24). This may happen to a particle that travels radially in a Friedman–Lemaitre–Robertson–Walker metric, passing outwards beyond the critical radius and then coming back at superluminal velocities. However, this will be very difficult to do artificially. Obviously, a metric change will require a significant stress-energy tensor $T_{\mu\nu}$ according to Equation (1). Taking into account that the largest deviation from the Lorentzian metric in the solar system occurs on the surface of the sun, and that even there it is small [37], it does not seem conceivable that such a metric change can indeed be implemented.
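As a rough order-of-magnitude illustration of how small this deviation is, one may assume (this measure is not stated in the text above) that it is characterized by the dimensionless Newtonian potential $GM/(Rc^2)$. Using standard rounded SI values for the gravitational constant, the solar mass $M_\odot$, the solar radius $R_\odot$, and the speed of light, which are not taken from Ref. [37]:

```latex
\frac{G M_\odot}{R_\odot c^2}
\approx \frac{\left(6.67\times10^{-11}\right)\left(1.99\times10^{30}\right)}
             {\left(6.96\times10^{8}\right)\left(3.00\times10^{8}\right)^2}
\approx 2\times10^{-6}
```

On this estimate, a change of signature, which alters a metric component by an amount of order unity, would require a deviation roughly six orders of magnitude larger than the one found at the solar surface.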

Finally, one should take into account that although classical physics is assumed to occur in a Lorentzian metric, quantum field theory calculations are frequently done in a Euclidean background using the Wick rotation. This is explained through the concept of analytic continuation. However, this procedure is a mathematical technique that has no physical justification in a Lorentzian space-time, but it may make perfect sense if part of space-time is Euclidean, in particular the part that is very near to the particle in question. Hence, one may speculate that each elementary particle carries with it a “bubble” of microscopic Euclidean space-time, which may be considered as a one-dimensional string or vortex when viewed from a four-dimensional space-time perspective.
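The Wick rotation mentioned above can be sketched for the flat line element as follows. This is a minimal illustration, assuming the signature convention $(1,-1,-1,-1)$ with $x^0 = ct$ and introducing an imaginary-time variable $\tau$, a symbol that is not used elsewhere in the text:

```latex
t = -i\tau \quad\Rightarrow\quad dt^2 = -d\tau^2,
\qquad
ds^2 = c^2 dt^2 - d\vec{x}^{\,2}
\;\longrightarrow\;
ds^2 = -\left(c^2 d\tau^2 + d\vec{x}^{\,2}\right)
```

In the rotated variables all four coordinates enter with the same sign, which corresponds to the Euclidean signature (−1, −1, −1, −1).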

6. Conclusions

GR allows for non-Lorentzian space-times; in particular, this is allowed in part of the Friedman–Lemaitre–Robertson–Walker universe. Thus, superluminal particles can exist in such a cosmology. Some of the cosmological consequences of superluminal particles for the homogeneity and dark energy problems are briefly described. Some implications of non-Lorentzian metrics that are not connected to superluminality, but that may result from non-Euclidean metrics, are suggested. Much more detailed analysis is needed to reach a definite conclusion regarding each of the above physical problems. However, the existence of non-Lorentzian space-times and, as a consequence (not an additional assumption), superluminal particles, suggests a possible solution. Of course, just having a rapid expansion in this case does not make inflation in the early universe redundant, and much more detailed and rigorous studies are needed, in particular with respect to the CMB spatial spectrum and the evolution of large-scale structure in the universe.

Within the scope of the current paper we have only considered canonical ensembles, in which the number of particles is fixed; however, at high energies pair creation from the vacuum is possible. Hence, a grand canonical ensemble should be studied. Quantum mechanical effects were also outside the scope of the current paper, which concentrated on classical effects only.

Finally, a Euclidean metric will affect the energy-momentum tensor, thus affecting the allowed solutions of the Friedman–Lemaitre–Robertson–Walker universe. A derivation of an exact mathematical model describing the transition from the Euclidean universe to the Lorentzian universe in which we live is left for future studies.