Abstract

A century after the formulation of the general theory of relativity, gravitational lensing is still an extremely powerful tool for investigation in astrophysics and cosmology. Indeed, it is used to study the distribution of the stellar component in the Milky Way, to probe dark matter and dark energy on very large scales and even to discover exoplanets. Moreover, thanks to technological developments, it will allow the measurement of the physical parameters (mass, angular momentum and electric charge) of supermassive black holes in the center of our own and nearby galaxies.

Keywords: gravitational lensing

1. Introduction

In 1911, while he was still involved in the development of the general theory of relativity (subsequently published in 1916), Einstein made the first calculation of light deflection by the Sun [1]. He correctly understood that a massive body may act as a gravitational lens deflecting light rays passing close to the body surface. However, his calculation, based on Newtonian mechanics, gave a deflection angle wrong by a factor of two. On 14 October 1913, Einstein wrote to Hale, the renowned astronomer, inquiring whether it was possible to measure a deflection angle of about 0.84″ for light passing close to the Sun. The answer was negative, but Einstein did not give up, and when, in 1915, he made the calculation again using the general theory of relativity, he found the right value $\alpha = 2 r_S / b$ (where $r_S = 2GM/c^2$ is the Schwarzschild radius and $b$ is the light rays’ impact parameter), which corresponds to an angle of about 1.75″ in the case of the Sun. That result was resoundingly confirmed during the solar eclipse of 1919 [2].
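The two values quoted above can be checked with a few lines of arithmetic. The following is a minimal sketch, assuming the relativistic deflection $\alpha = 2 r_S / b$ for a ray grazing the solar limb and taking the Newtonian-type estimate as exactly half of it. The constants are rounded modern values, so the halved result (about 0.87″) differs slightly from the 0.84″ quoted from the historical correspondence.

```python
import math

# Rounded physical constants (SI units); values are assumptions of this sketch.
G = 6.674e-11       # gravitational constant
C = 2.998e8         # speed of light
M_SUN = 1.989e30    # solar mass
R_SUN = 6.957e8     # solar radius, used as the impact parameter b
RAD_TO_ARCSEC = 180.0 / math.pi * 3600.0


def deflection_arcsec(mass_kg, impact_m, relativistic=True):
    """Deflection angle in arcsec: 2 r_S / b (general relativity) or r_S / b."""
    r_s = 2.0 * G * mass_kg / C**2
    factor = 2.0 if relativistic else 1.0
    return factor * r_s / impact_m * RAD_TO_ARCSEC


gr_value = deflection_arcsec(M_SUN, R_SUN)
half_value = deflection_arcsec(M_SUN, R_SUN, relativistic=False)
print(f"general relativity: {gr_value:.2f} arcsec, half value: {half_value:.2f} arcsec")
```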

In 1924, Chwolson [3] considered the particular case in which the source, the lens and the observer are aligned and noticed the possibility of observing a luminous ring when a distant source is lensed by a massive star. In 1936, at the insistence of Rudi Mandl, Einstein published a paper in Science [4] describing the gravitational lensing effect of one star on another and the formation of the luminous ring, today called the Einstein ring, and giving the expression for the source amplification. However, Einstein considered this effect exceedingly curious and useless, since in his opinion, there was no hope of actually observing it.

On this issue, however, Einstein was wrong: he underestimated technological progress and did not foresee the motivations that today lead to the wide use of the gravitational lensing phenomenon. Indeed, Zwicky promptly understood that galaxies were gravitational lenses more powerful than stars and might give rise to images with a detectable angular separation. In two letters published in 1937 [5,6], Zwicky noticed that the observation of galaxy lensing, in addition to giving a further proof of the general theory of relativity, might allow observing sources otherwise invisible, thanks to the gravitational amplification of light, and obtaining a more direct and accurate estimate of the lens galaxy dynamical mass. He also found that the probability of observing lensed galaxies was much larger than that of star-on-star lensing. This shows the foresight of this eclectic scientist, since the first strong lensing event was discovered only in 1979: the double quasar QSO 0957+561 A/B [7], shortly followed by the observation of tens of other gravitational lenses, Einstein rings and gravitational arcs. All of that plays today an extremely relevant role in understanding the evolution of structures and in measuring the parameters of the so-called cosmological standard model.

Actually, there are different scales in gravitational lensing, on which we shall briefly concentrate in the next sections, after a short introduction to the basics of the theory of gravitational lensing (Section 2). Generally speaking, gravitational lens images separated by more than a few tenths of an arcsec are clearly seen as distinct images by the observer. This was the case considered by Zwicky, and the gravitational lensing in this regime is called strong (or macro) lensing (see Section 3), which also includes distorted galaxy images, like Einstein rings or arcs. If, instead, the distortions induced by the gravitational fields on background objects are much smaller, we have the weak lensing effect (see Section 4). On the other hand, if one considers the phenomenology of star-on-star lensing (as Einstein did), the resulting angular distance between the images is of the order of a few μas, generally not separable by telescopes. Gravitational lensing in this regime is called, following Paczyński [8], microlensing, and the observable is an achromatic change in the brightness of the source star over time, due to the relative motion of the lens and the source with respect to the line of sight of the source (see Section 5). In all of these regimes, the gravitational field can be treated in the weak field approximation. Another scale on which gravitational lensing applies is that involving black holes. In particular, when light rays come very close to the event horizon, they are subject to strong gravitational field effects, and the deflection angles are therefore large. This effect is called retro-lensing, and we shall discuss it in Section 6. The observation of retro-lensing events is of great importance also because the general theory of relativity still stands practically untested in the strong gravitational field regime (see [9] for a very recent review), apart from gravitational waves [10]. A short final discussion is then offered in Section 7.

2. Basics of Gravitational Lensing

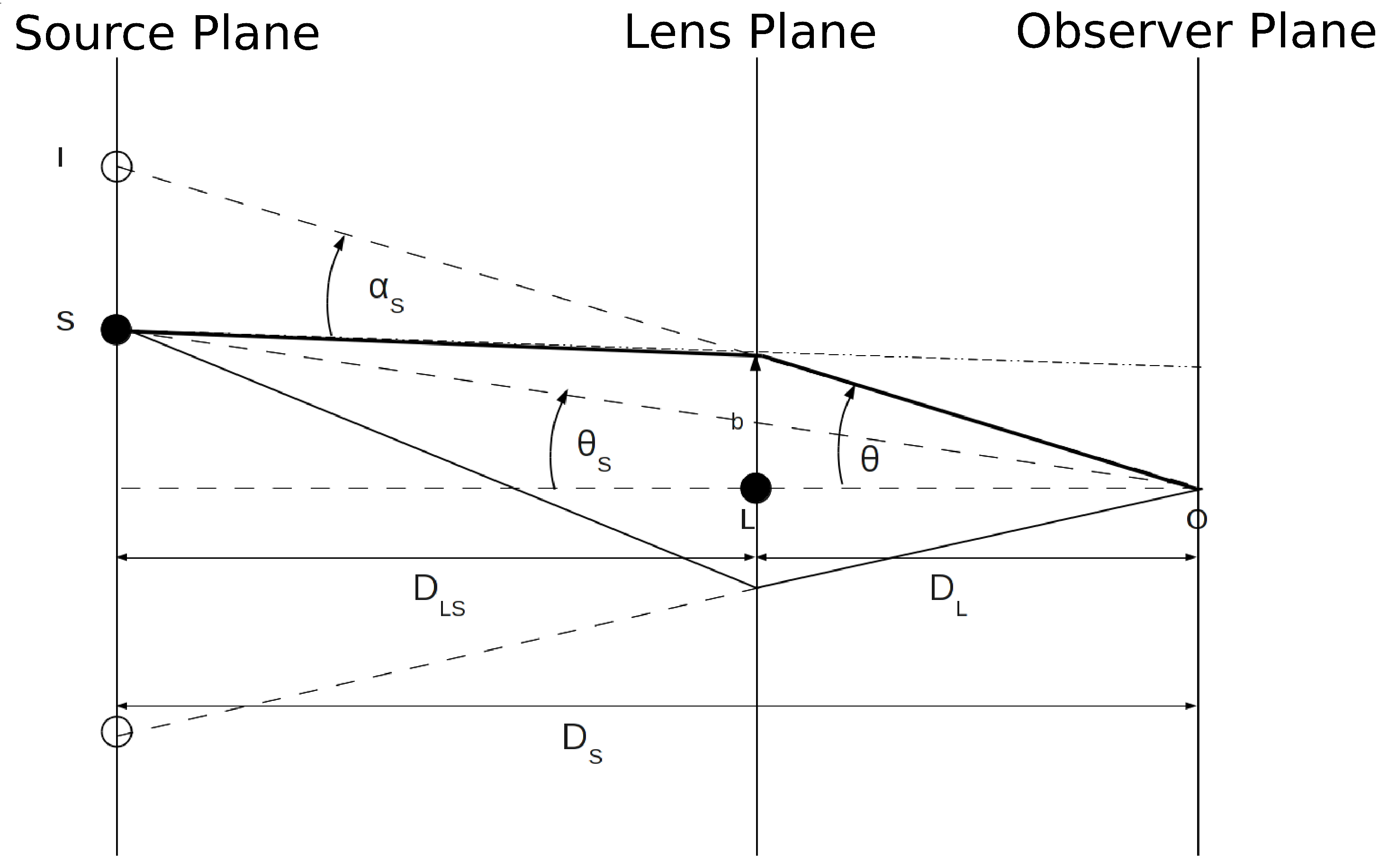

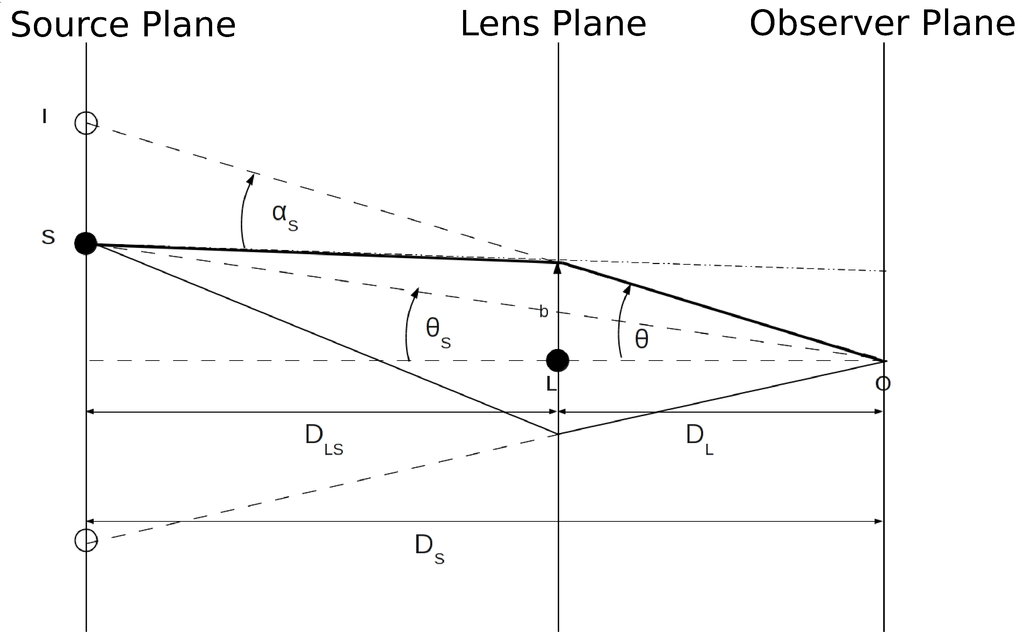

In the general theory of relativity, light rays follow null geodesics, i.e., the minimum distance paths in a curved space-time. Therefore, when a light ray from a far source interacts with the gravitational field due to a massive body, it is bent by an angle approximately equal to $\alpha = 2 r_S / b$. By looking at Figure 1, assuming the ideal case of a thin lens and noting that $b = D_L \theta$, one can easily derive the so-called lens equation:

$$\theta^2 - \theta_S\,\theta - \theta_E^2 = 0, \tag{1}$$

where $\theta_S$ indicates the source position and:

$$\theta_E = \sqrt{\frac{4GM}{c^2}\,\frac{D_{LS}}{D_L D_S}} \tag{2}$$

is the Einstein ring radius, which is the angular radius of the image when lens and source are perfectly aligned ($\theta_S = 0$). Here, $D_L$, $D_S$ and $D_{LS}$ are the observer-lens, observer-source and lens-source distances, respectively. Since Equation (1) is quadratic in $\theta$, one can see that two images appear in the lens plane, whose positions can be obtained by solving Equation (1).
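As an illustration of the point-lens geometry, the sketch below evaluates the Einstein radius and the two image positions. It assumes the standard quadratic form of the lens equation, $\theta^2 - \theta_S\theta - \theta_E^2 = 0$, with $\theta_E = \sqrt{(4GM/c^2)\,D_{LS}/(D_L D_S)}$. The lens mass and distances (a solar-mass lens halfway to a source at 8 kpc) are hypothetical values chosen only for the example, and $D_{LS} = D_S - D_L$ holds only at non-cosmological distances.

```python
import math

G = 6.674e-11       # SI units
C = 2.998e8
M_SUN = 1.989e30
KPC = 3.0857e19     # metres per kiloparsec
RAD_TO_MAS = 180.0 / math.pi * 3600.0 * 1000.0


def einstein_radius_rad(mass_kg, d_l, d_s):
    """Einstein ring radius in radians for a point lens (Euclidean distances)."""
    d_ls = d_s - d_l  # only valid for nearby (non-cosmological) lenses
    return math.sqrt(4.0 * G * mass_kg / C**2 * d_ls / (d_l * d_s))


def image_positions(theta_s, theta_e):
    """Roots of theta^2 - theta_s*theta - theta_e^2 = 0 (primary, secondary)."""
    root = math.sqrt(theta_s**2 + 4.0 * theta_e**2)
    return 0.5 * (theta_s + root), 0.5 * (theta_s - root)


# Hypothetical configuration: solar-mass lens at 4 kpc, source at 8 kpc.
theta_e = einstein_radius_rad(M_SUN, 4.0 * KPC, 8.0 * KPC)
theta_s = 0.5 * theta_e
theta_plus, theta_minus = image_positions(theta_s, theta_e)
print(f"theta_E = {theta_e * RAD_TO_MAS:.3f} mas")
print(f"images at {theta_plus / theta_e:.3f} and {theta_minus / theta_e:.3f} theta_E")
```

The primary image always lies outside the Einstein ring and the secondary one inside it, on the opposite side of the lens (hence its negative sign).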

Figure 1.

Schematics of the lensing phenomenon.

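More generally (see [11] for details), the light deflection between the two-dimensional position of the source $\boldsymbol{\theta}_S$ and the position of the image $\boldsymbol{\theta}$ is given by the lens mapping equation:

$$\boldsymbol{\theta}_S = \boldsymbol{\theta} - \nabla\psi(\boldsymbol{\theta}), \qquad \psi = \frac{2}{c^2}\,\frac{D_{LS}}{D_L D_S}\,\Phi, \tag{3}$$

where $\psi$ is the so-called lensing potential and $\Phi$ is the two-dimensional Newtonian projected gravitational potential of the lens. We also note, in turn, that the ratio $D_{LS}/D_S$ depends on the redshift of the source and the lens, as well as on the cosmological parameters $\Omega_M = \rho_M/\rho_c$ and $\Omega_\Lambda = \rho_\Lambda/\rho_c$, with $\rho_c$, $\rho_M$ and $\rho_\Lambda$ being the critical, the matter and the dark energy densities, respectively. The transformation above is thus a mapping between the image plane and the source plane, and the Jacobian of the transformation is given by:

$$J = \frac{\partial \boldsymbol{\theta}_S}{\partial \boldsymbol{\theta}} = \delta_{ij} - \psi_{,ij} = \begin{pmatrix} 1-\kappa-\gamma_1 & -\gamma_2 \\ -\gamma_2 & 1-\kappa+\gamma_1 \end{pmatrix} = M^{-1}, \tag{4}$$

where the commas are the partial derivatives with respect to the two components of $\boldsymbol{\theta}$. Here, $\kappa$ is the convergence, which turns out to be equal to $\Sigma/\Sigma_{cr}$, where $\Sigma$ is the surface mass density of the lens and:

$$\Sigma_{cr} = \frac{c^2}{4\pi G}\,\frac{D_S}{D_L D_{LS}} \tag{5}$$

is the critical surface density, $\gamma = (\gamma_1, \gamma_2)$ is the shear and $M$ is the magnification matrix. Thus, the previous equations define the convergence and shear as second derivatives of the potential, i.e.,

$$\kappa = \frac{1}{2}\left(\psi_{,11} + \psi_{,22}\right), \qquad \gamma_1 = \frac{1}{2}\left(\psi_{,11} - \psi_{,22}\right), \qquad \gamma_2 = \psi_{,12}. \tag{6}$$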

From the above discussion, it is clear that gravitational lensing may allow one to probe the total mass distribution within the lens system, which reproduces the observed image configurations and distortions. This, in turn, may allow one to constrain the cosmological parameters, although this is a second order effect.
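To make the link between the lensing potential and the observables concrete, the sketch below evaluates the convergence and shear as second derivatives of a potential, using the standard definitions $\kappa = (\psi_{,11}+\psi_{,22})/2$, $\gamma_1 = (\psi_{,11}-\psi_{,22})/2$ and $\gamma_2 = \psi_{,12}$. The point-mass potential $\psi = \theta_E^2 \ln|\boldsymbol{\theta}|$, the finite-difference step and the evaluation point are assumptions made for illustration only.

```python
import math


def psi_point_mass(x, y, theta_e=1.0):
    """Lensing potential of a point mass, with angles in units of theta_E."""
    return theta_e**2 * math.log(math.hypot(x, y))


def second_derivatives(psi, x, y, h=1e-4):
    """Central finite-difference estimates of psi_,11, psi_,22 and psi_,12."""
    p_xx = (psi(x + h, y) - 2.0 * psi(x, y) + psi(x - h, y)) / h**2
    p_yy = (psi(x, y + h) - 2.0 * psi(x, y) + psi(x, y - h)) / h**2
    p_xy = (psi(x + h, y + h) - psi(x + h, y - h)
            - psi(x - h, y + h) + psi(x - h, y - h)) / (4.0 * h**2)
    return p_xx, p_yy, p_xy


def convergence_and_shear(psi, x, y):
    """Return (kappa, gamma_1, gamma_2) at the image position (x, y)."""
    p_xx, p_yy, p_xy = second_derivatives(psi, x, y)
    return 0.5 * (p_xx + p_yy), 0.5 * (p_xx - p_yy), p_xy


kappa, gamma_1, gamma_2 = convergence_and_shear(psi_point_mass, 1.5, 0.8)
gamma = math.hypot(gamma_1, gamma_2)
# Magnification = 1 / det(J), with det(J) = (1 - kappa)^2 - |gamma|^2.
magnification = 1.0 / ((1.0 - kappa) ** 2 - gamma**2)
print(f"kappa = {kappa:.2e}, |gamma| = {gamma:.4f}, magnification = {magnification:.4f}")
```

Away from the lens position, a point mass has vanishing convergence and a shear of modulus $\theta_E^2/\theta^2$, so the image distortion is entirely due to the shear.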

3. Strong Lensing

Quasars are the brightest astronomical objects, visible even at a distance of billions of parsecs. After the identification of the first quasar in 1963 [12], these objects remained a mystery for quite a long time, but today, we know that they are powered by mass accretion on a supermassive black hole, with a mass billions of times that of the Sun. The first strong gravitational lens, discovered in 1979, was indeed linked to a quasar (QSO 0957+561 [7]), and although the phenomenon was expected on theoretical grounds, it left the astronomers astonished. The existence of two objects separated by about 6″ and characterized by an identical spectrum led to the conclusion that they were the doubled image of the same quasar, clearly showing that Zwicky was perfectly right and that galaxies may act as gravitational lenses. Afterwards, also the lens galaxy was identified, and it was established that its dynamical mass, responsible for the light deflection, was at least ten-times larger than the visible mass. This double quasar was also the first object for which the time delay (about 420 days) between the two images [13], due to the different paths of the photons forming the two images, has been measured. This has also allowed obtaining an independent estimate of the lens galaxy dynamical mass. Observations can also show four images of the same quasar, as in the case of the so-called Einstein Cross, or when the lens and the source are closely aligned, one can observe the Einstein ring, e.g., in the case of MG1654-1346 [14]. The macroscopic effect of multiple images’ formation is generally called strong lensing, which also consists of the formation of arcs, as those clearly visible in the deep sky field images by the Sloan Digital Sky Survey (SDSS; see, e.g., [15]). The sources of strong lensing events are often quasars, galaxies, galaxy clusters and supernovae, whereas the lenses are usually galaxies or galaxy clusters. 
The image separation is generally larger than a few tenths of an arcsec, often up to a few arcsecs.

Over the years, many strong lensing events have been found in deep surveys of the sky, such as the CLASS [16], the Sloan Lens ACS Survey (SLACS) [17], the SDSS, one of the most successful surveys in the history of astronomy (see, e.g., [18] and references therein), the SQLS (the Sloan Digital Sky Survey Quasar Lens Search) [19], and so on.

Strong gravitational lensing is nowadays a powerful tool for investigation in astrophysics and cosmology (see, e.g., [20,21]). As already mentioned in the previous section, strong lensing gives a unique opportunity to measure the dynamical mass of the lens object using, for example, the mass estimator $M(<\theta_E) = \pi\,\Sigma_{cr}\,(D_L\theta_E)^2$, which directly gives the mass within $\theta_E$, using Equation (5) in this regime. The result is that masses obtained in this way are almost always larger than the visible mass of the lensing object, showing that galaxy and galaxy cluster masses are dominated by dark matter. In any case, accurately constraining the mass distribution of the lens system (e.g., a galaxy cluster) is generally a degenerate problem, in the sense that there are several mass distributions that can fit the observables; thus, the best way to solve it is to use multiple images (see, e.g., [22]).
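The order of magnitude of such a mass estimate can be sketched as follows. The example assumes the estimator in the form $M(<\theta_E) = \pi\,\Sigma_{cr}\,(D_L\theta_E)^2$ with $\Sigma_{cr} = c^2 D_S / (4\pi G D_L D_{LS})$, which is equivalent to $M = c^2\theta_E^2 D_L D_S/(4 G D_{LS})$. The Einstein radius and the three angular diameter distances are hypothetical inputs, not values taken from a real lens system.

```python
import math

G = 6.674e-11       # SI units
C = 2.998e8
M_SUN = 1.989e30
GPC = 3.0857e25     # metres per gigaparsec
ARCSEC = math.pi / (180.0 * 3600.0)  # radians per arcsec


def critical_surface_density(d_l, d_s, d_ls):
    """Sigma_cr in kg m^-2 from angular diameter distances in metres."""
    return C**2 / (4.0 * math.pi * G) * d_s / (d_l * d_ls)


def mass_within_einstein_radius(theta_e_rad, d_l, d_s, d_ls):
    """Projected mass (kg) enclosed by the Einstein ring."""
    sigma_cr = critical_surface_density(d_l, d_s, d_ls)
    return math.pi * sigma_cr * (d_l * theta_e_rad) ** 2


# Hypothetical galaxy-scale lens: theta_E = 1 arcsec, distances in Gpc.
d_l, d_s, d_ls = 1.0 * GPC, 2.0 * GPC, 1.2 * GPC
mass_kg = mass_within_einstein_radius(1.0 * ARCSEC, d_l, d_s, d_ls)
mass_msun = mass_kg / M_SUN
print(f"M(<theta_E) = {mass_msun:.2e} solar masses")
```

With these inputs the enclosed mass comes out at the scale of a massive galaxy, which is the kind of number that is then compared with the visible mass.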

Another important application of strong lensing is the study of dark matter halo substructures. Indeed, sometimes flux ratio anomalies in the lensed quasar images are detected (see, e.g., [23,24]), and while smooth mass models of the lensing galaxy may generally explain the observed image positions, the prediction of such models of the corresponding fluxes is frequently violated. Especially in the radio band observations, since the quasar radio emitting region is quite large, the observed radio flux anomalies are explained as being due to the presence of substructures of about along the line of sight. After some controversy regarding whether ΛCDM (cold dark matter plus Cosmological Constant) simulations predict enough dark matter substructures to account for the observations (for example, in [25], some indication is found of an excess of massive galaxy satellites), more recent analysis, taking also into account the uncertainty in the lens system ellipticity, finds results consistent with those predicted by the standard cosmological model [26,27]. However, at present, the list of multiply-imaged quasars observed in the radio and mid-IR bands is quite short, and further observational and theoretical work would be very helpful in this respect. Another indication of dark matter halo substructures comes from detailed analysis of galaxy-galaxy lensing. Although the results obtained are generally consistent with ΛCDM simulations, more data should be analyzed in order to get strong constraints [28,29]. Strongly lensed quasars have been observed to show a certain variability of one image with respect to the others. This can be often attributed to microlensing (see Section 5) by the stars throughout the lens galaxy. 
This effect, and in particular its variation with respect to the wavelength, has provided an opportunity to study in detail the central engine of the source quasar, and the magnitude of the microlensing variability has allowed astrophysicists to constrain the stellar density in the lens galaxy [30,31,32].

Strong gravitational lensing may be used as a natural telescope that magnifies dim galaxies, making them easier to study in detail. For this reason, mass concentrations, like galaxies and clusters of galaxies, can be effectively used as cosmic telescopes to study faint sources that would be impossible to detect in the absence of gravitational lensing (see, e.g., [33,34]). At present, there is also the case of a highly magnified supernova that was multiply imaged and also seen exploding again, being lensed by a galaxy in the cluster MACS J1149.6+2223 [35,36].

The ultimate goal of strong lensing is not only to get information on the large-scale structure of the Universe, but also to constrain the cosmological parameters. For instance, by analyzing the time delay among the lensed source images, it is also possible to estimate the value of the Hubble constant $H_0$. Indeed, the time delay is given by the difference of the light paths from the images and is inversely proportional to $H_0$, as first understood by Refsdal [37] (see also the review in [38]). At present, one of the most accurate measurements of the Hubble constant using a gravitational lens is provided in [39]. There is also a project (COSMOGRAIL) particularly devoted to the time delay measurements of doubly- or multiply-lensed quasars (see [40] and the references therein). Moreover, the measurement of both the frequency of occurrence and the redshift of multiple images in deep sky surveys may allow one to constrain the values of $\Omega_M$ and $\Omega_\Lambda$ independently of other methods, such as those based on SN Ia or the CMB (Cosmic Microwave Background) power spectrum.

4. Weak Lensing

In addition to the macroscopic deformations discussed in the previous section, in the deep field surveys of the sky, also arclets (i.e., single distorted images with an elliptical shape) and weakly distorted images of galaxies, with an almost invisible individual elongation, have been detected. This effect is known as weak lensing and is playing an increasingly important role in cosmology.

The weak lensing’s main feature is the shape deformation of background galaxies, whose light crosses a mass distribution (e.g., a galaxy or a galaxy cluster) that acts as a gravitational lens. Actually, as discussed in Section 2, gravitational lensing gives rise to two distinct effects on a source image: convergence, which is isotropic, and shear, which is anisotropic. In the weak lensing regime, the observer makes use of the shear, that is the image deformation (sometimes related to the galaxy orientation), while the convergence effect is not used, since the intrinsic luminosity and the size of the lensed objects are unknown. For a complete and in-depth review on the basics of weak gravitational lensing, with full mathematical details of all the most important concepts, we refer the reader to [41].

The first weak lensing event was detected in 1990 as a statistical tangential alignment of galaxies behind massive clusters [42], but only in 2000 were coherent galaxy distortions measured in blind fields, showing the existence of the cosmic shear (see, e.g., [43,44]). Here, we remark that weak lensing cannot be measured from a single galaxy; its observation relies on the statistical analysis of the shape and alignment of a large number of galaxies in a certain direction.
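The statistical nature of the measurement can be illustrated with a toy simulation. The sketch below assumes the usual weak-lensing approximation in which the observed ellipticity of each galaxy is its intrinsic ellipticity plus the shear, and it draws randomly oriented intrinsic ellipticities with a dispersion of 0.3 per component. Both the dispersion and the input shear are hypothetical values chosen for the example.

```python
import random


def estimate_shear(n_galaxies, true_shear, sigma_e=0.3, seed=1):
    """Average the observed ellipticities of n_galaxies to estimate the shear.

    Assumes e_obs = e_intrinsic + shear, with Gaussian intrinsic ellipticity
    components of zero mean and dispersion sigma_e (toy model).
    """
    rng = random.Random(seed)
    g1, g2 = true_shear
    sum_1 = 0.0
    sum_2 = 0.0
    for _ in range(n_galaxies):
        sum_1 += rng.gauss(0.0, sigma_e) + g1
        sum_2 += rng.gauss(0.0, sigma_e) + g2
    return sum_1 / n_galaxies, sum_2 / n_galaxies


true_shear = (0.02, -0.01)
few = estimate_shear(10, true_shear)
many = estimate_shear(200_000, true_shear)
print("10 galaxies:     ", few)
print("200000 galaxies: ", many)
```

In this toy model, the shape noise on the averaged ellipticity scales as $\sigma_e/\sqrt{N}$, which is why a shear of a few percent is invisible in a handful of galaxies but emerges clearly from a very large sample.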

Therefore, the game is to measure the galaxy ellipticities and orientations and to relate them to the surface mass density distribution of the lens system (generally a galaxy cluster placed in between). There are at least two major issues in weak lensing studies, one mainly relying on the theory, the other one on observations: the former concerns finding the best way to reconstruct the intervening mass distribution from the shear field $\gamma$, the latter concerns finding the best way to determine the true ellipticity of a faint galaxy, which is smeared out by the instrumental point spread function (PSF). To solve these issues, several approaches have been proposed, which can be divided into two broad families: direct and inverse methods. On the theoretical side, the direct approaches are: the integral method, which consists of expressing the projected mass density distribution as the convolution of $\gamma$ by a kernel (see, e.g., [45]), and the local inversion method, which instead starts from the gradient of $\gamma$ (see, e.g., [46] and the references therein). The inverse approaches work on the lensing potential (see Equation (3)), and they include the use of the maximum likelihood [47,48] or the maximum entropy methods [49] to determine the most likely projected mass distribution that reproduces the shear field. The inverse methods are particularly useful since they make it possible to quantify the errors in the resultant lensing mass estimates, for instance, the errors deriving from the assumption of a spherical mass model when fitting a non-spherical system [50,51].

The inverse methods also allow one to derive constraints from external observations, such as X-ray data on galaxy clusters’ strong lensing or CMB lensing. In particular, one can compare mass measurements from weak lensing and X-ray observations for large samples of galaxy clusters [52]. In this respect, the authors of [53] used a large sample of nearby clusters with good weak lensing and X-ray measurements to investigate the agreement between mass estimates based on weak lensing and X-ray data, and studied the potential sources of errors in both methods. Moreover, a combination of weak lensing and CMB data may provide powerful constraints on the cosmological parameters, especially on the Hubble constant $H_0$, the amplitude of fluctuations $\sigma_8$ and the matter cosmic density $\Omega_M$ [54,55]. We also mention, in this respect, that one way to determine the fluid-mechanical properties of dark energy, characterized by its sound speed and its viscosity apart from its equation of state, is to combine Planck data with galaxy clustering and weak lensing observations by Euclid, yielding one percent sensitivity on the dark energy sound speed and viscosity [56] (see the end of this section).

On the observational side, the first priority is to use a telescope with a wide field of view, appropriate to probe the large-scale structure distribution at least of a galaxy cluster. On the other hand, it is also necessary to minimize the source of noise in the determination of the ellipticity of very faint galaxies, so that the best-seeing conditions for a ground-based telescope or, better, a space-based instrument, are extremely useful.

Very promising results have been obtained with the weak lensing technique so far, as, for example, the best measure, until today, of the existence and distribution of dark matter within the famous Bullet cluster [57] (actually constituted by a pair of galaxy clusters observed in the act of colliding). Astronomers found that the shocked plasma was almost entirely in the region between the two clusters, separated from the galaxies. However, weak lensing observations showed that the mass was largely concentrated around the galaxies themselves, and this enabled a clear, independent measurement of the amount of dark matter.

With the major aim to map, through the weak lensing effect, the mass distribution in the Universe and the dark energy contribution by measuring the shape and redshift of billions of very far away galaxies (for a review, see [58]), the European Space Agency (ESA) is planning to launch the Euclid satellite in the near future. Ground-based telescopes will also allow one to detect an enormous number of weak and strong lensing events. An example is given by the LSST (Large Synoptic Survey Telescope) project, located on the Cerro Pachón ridge in north-central Chile, which will become operative in 2022. Its 8.4-meter telescope uses a special three-mirror design, creating an exceptionally wide field of view, and has the ability to survey the entire sky in only three nights. The effective number density $n_{\rm eff}$ of weak lensing galaxies (which is a measure of the statistical power of a weak lensing survey) that will be discovered by LSST is, conservatively, in the range of 18–24 arcmin$^{-2}$ (see Table 4 in [59]). The very large (about $1.5 \times 10^4$ square degrees) and deep survey of the sky that will be performed by Euclid will allow astrophysicists to address fundamental questions in physics and cosmology about the nature and the properties of dark matter and dark energy, as well as in the physics of the early Universe and the initial conditions that provided the seeds for the formation of cosmic structure. Before closing this section, we also mention that strong systematics may be present in weak lensing surveys. For example, the intrinsic alignment of background sources may mimic to an extent the effects of shear and may contaminate the weak lensing signal. However, these systematics may be controlled if the galaxy redshifts are also acquired, and this fully removes the unknown intrinsic alignment errors from weak lensing detections (for further details, see [60,61]).

5. Microlensing

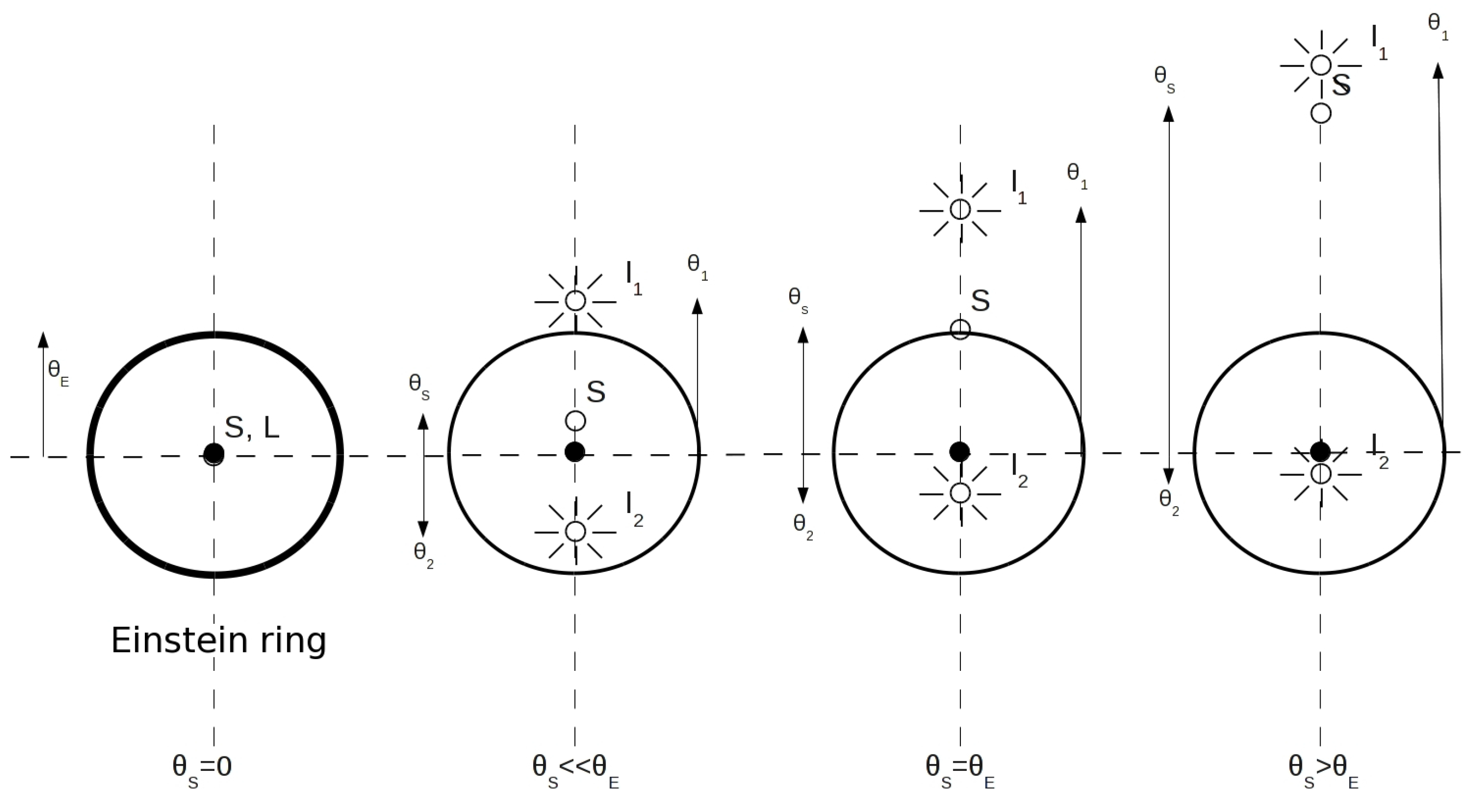

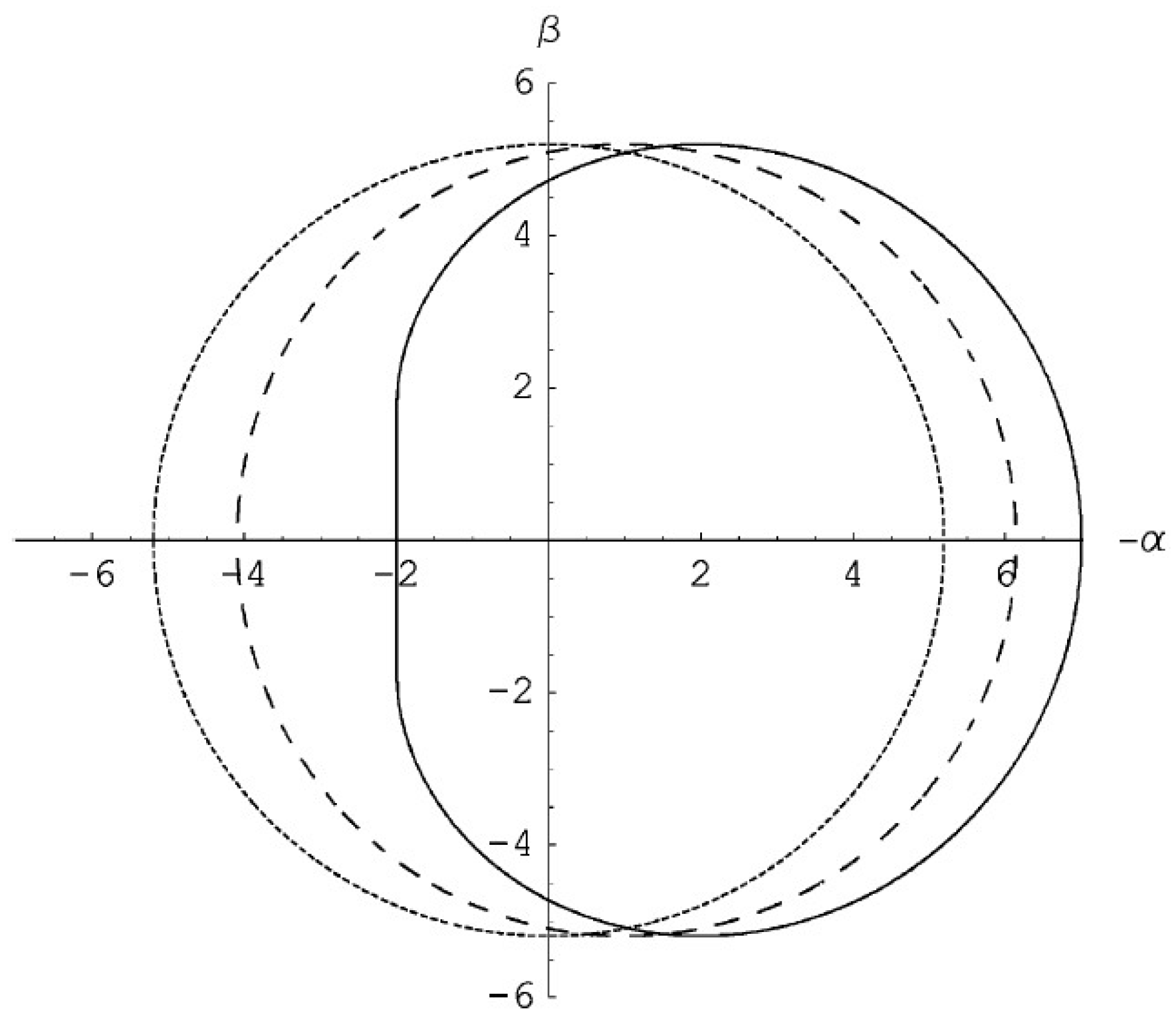

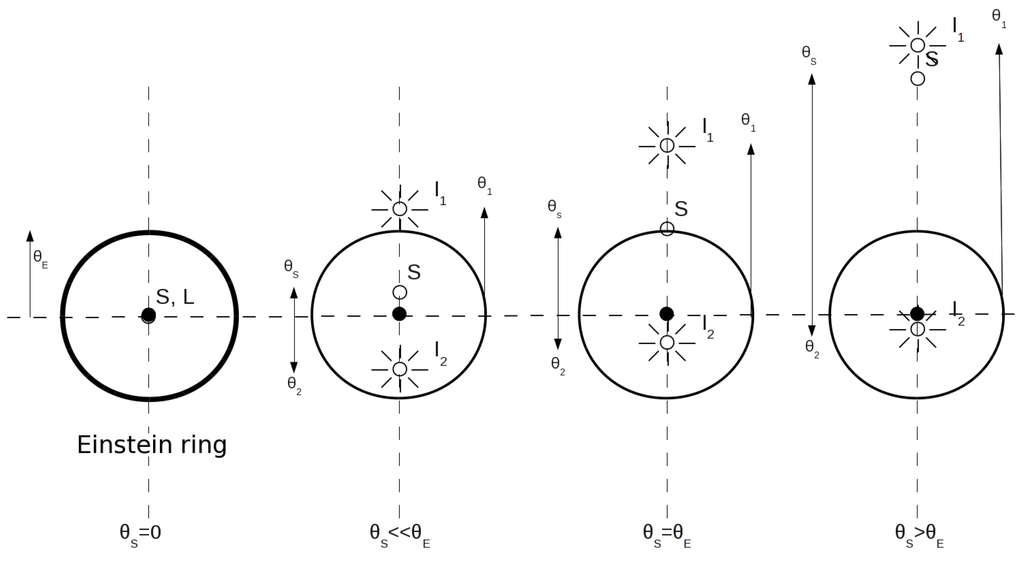

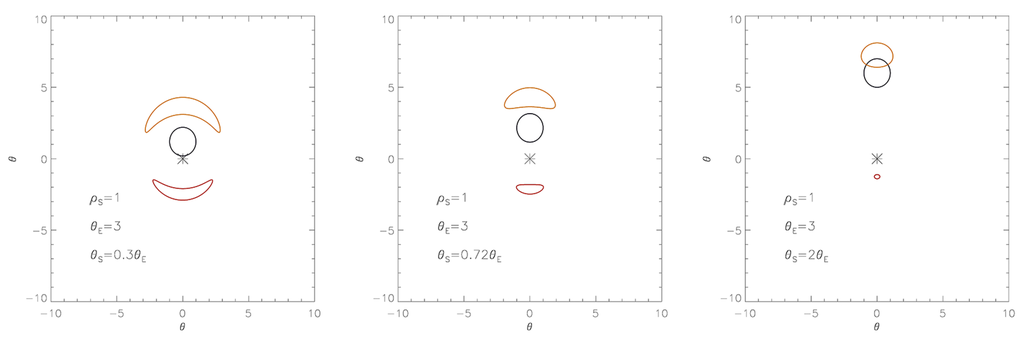

Let us consider now the microlensing scale of the lensing phenomenon, which occurs when $\theta_E$ is smaller than the typical telescope angular resolution, as in the case of stars lensing the light from background stars (for a review on gravitational microlensing and its astrophysical applications, we refer to, e.g., [62]). As is clear from the discussion in Section 2, by solving the lens Equation (1), one can determine the angular positions of the primary ($\theta_+$) and secondary ($\theta_-$) images. In Figure 2, these positions are shown for four different values of the impact parameter $\theta_S$ in the case of a point-like source. If the source and the lens are aligned (first panel on the left), the circular symmetry of the problem leads to the formation of a luminous annulus having radius $\theta_E$ around the lens position. Otherwise, increasing the $\theta_S$ value, the secondary image gets closer to the lens position, while the primary image drifts apart from it, and in the limit of $\theta_S \gg \theta_E$, the microlensing phenomenon tends to disappear. However, observing multiple images during a microlensing event is practically impossible with the present technology. For instance, in the case in which the phenomenon is maximized, corresponding to the perfect alignment, for a star in the galactic bulge (about 8 kpc away), the resulting $\theta_E$ is well below the angular resolving power, even of the Hubble Space Telescope (about 43 mas at 500 nm); see, e.g., http://www.coseti.org/9008-065.htm.

Figure 2.

Angular positions of the primary ($\theta_+$) and secondary ($\theta_-$) images for four different values of the source impact parameter $\theta_S$ in the case of a point-like source.

When a source is microlensed, its images do not have the same luminosity; therefore, the observer receives a total flux (or magnitude) different from that of the unlensed source. The flux difference can be described very simply in terms of the light magnification and the law of the conservation of the specific intensity I, which represents the energy, with frequency in the range crossing the surface during the time interval in the solid angle around the direction orthogonal to the surface. Indeed, the light specific intensity turns out to be conserved in the absence of absorption phenomena, interstellar scattering or Doppler shifts. This is also a consequence of Liouville’s theorem, which claims that the density of states in the phase space remains constant if the interacting forces are non-collisional (and gravitation fulfills this condition due to its weak coupling constant), and the propagating medium is approximately transparent (as is the case for interstellar space). This effect can produce a magnification or a de-magnification of the images of an extended light source (see Figure 3). If the image is magnified, it means that it certainly subtends a wider angle with respect to that subtended by the source in the absence of the lens. In microlensing, the source disk size should not be neglected in general. Within the framework of the finite source approximation for a source with flux and assuming , one can show that the magnification A of an image at angular position θ is given by . As a consequence, the observed flux corresponds to . Of course, when the source star disk gradually moves away from the line of sight, the magnification decreases, and the unlensed flux is then recovered.

Figure 3.

As in Figure 2, but with a conformal transformation of the source boundary, considered extended and with radius , by a point-like lens. Each point of the source disk behaves as a point-like source. The black circle represents the source disk, while the red and yellow arcs are the deformed primary and secondary images.

As already anticipated, in the microlensing case the observer cannot see well-separated images but, instead, detects a single image made by the overlapping of the primary and the secondary images. In this case, one can easily obtain the classical magnification factor A by summing up the individual magnifications, i.e., $A = A_+ + A_- = (u^2+2)/(u\sqrt{u^2+4})$, where $u = \theta_S/\theta_E$ is the impact factor. If there is a relative movement between the lens and the source, u changes with time, and a standard Paczyński curve [8] emerges.
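A light curve of this kind can be generated in a few lines. The sketch below assumes the standard point-source, point-lens magnification $A(u) = (u^2+2)/(u\sqrt{u^2+4})$ and a rectilinear relative motion, $u(t) = \sqrt{u_0^2 + ((t-t_0)/t_E)^2}$. The event parameters ($t_0$, $t_E$, $u_0$) are hypothetical values chosen for the example.

```python
import math


def magnification(u):
    """Total magnification of a point source at separation u (Einstein units)."""
    return (u * u + 2.0) / (u * math.sqrt(u * u + 4.0))


def paczynski_curve(times, t0, t_e, u0):
    """Magnification at each time for uniform rectilinear lens-source motion."""
    return [magnification(math.hypot(u0, (t - t0) / t_e)) for t in times]


# Hypothetical event: peak at day 50, Einstein time of 20 days, u0 = 0.3.
times = [float(day) for day in range(0, 101, 10)]
curve = paczynski_curve(times, t0=50.0, t_e=20.0, u0=0.3)
for day, amp in zip(times, curve):
    print(f"t = {day:5.1f} d   A = {amp:.3f}")
```

The curve is symmetric around $t_0$ and is the same at all wavelengths, which is what distinguishes a microlensing event from most kinds of intrinsic stellar variability.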

An important role in gravitational microlensing is played by the caustics, the geometric loci of the points belonging to the source plane where the light magnification of a point-like source becomes infinite, and by the corresponding critical curves in the lens plane. In the case of a single lens, the caustic is a point coinciding with the lens position; therefore, the magnification diverges when the impact parameter approaches zero. However, real sources are not point-like, so we always have finite magnifications that can be calculated by an average procedure:

$$\langle A \rangle = \frac{\int A(\mathbf{x})\, I(\mathbf{x})\, d^2x}{\int I(\mathbf{x})\, d^2x}, \tag{7}$$

where $A(\mathbf{x})$ is the point-like source magnification, $I(\mathbf{x})$ is the brightness profile of the stellar disk (the limb darkening profile) and the integral is extended over the source star disk.
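The averaging procedure can be sketched numerically as follows. The example assumes the point-source magnification $A(u) = (u^2+2)/(u\sqrt{u^2+4})$ and integrates it over the source disk with a simple midpoint rule in polar coordinates. The function names, the grid sizes and the optional `brightness` profile (uniform by default, i.e., no limb darkening) are choices made for this illustration.

```python
import math


def point_magnification(u):
    """Point-source, point-lens magnification at separation u (Einstein units)."""
    return (u * u + 2.0) / (u * math.sqrt(u * u + 4.0))


def finite_source_magnification(u_center, rho, brightness=lambda r_frac: 1.0,
                                n_r=200, n_phi=200):
    """Brightness-weighted average of the magnification over a source disk.

    u_center: lens-source centre separation; rho: source radius (both in
    Einstein units). brightness(r_frac) is the surface brightness at the
    fractional radius r_frac in [0, 1]; the default is a uniform disk.
    """
    total = 0.0
    weight = 0.0
    for i in range(n_r):
        r = (i + 0.5) * rho / n_r          # midpoint of the radial cell
        for j in range(n_phi):
            phi = (j + 0.5) * 2.0 * math.pi / n_phi
            x = u_center + r * math.cos(phi)
            y = r * math.sin(phi)
            w = r * brightness(r / rho)    # area element times brightness
            total += w * point_magnification(math.hypot(x, y))
            weight += w
    return total / weight


rho = 0.1
peak = finite_source_magnification(0.0, rho)
far = finite_source_magnification(2.0, 0.01)
print(f"perfect alignment, rho = {rho}: <A> = {peak:.3f}")
print(f"u = 2, rho = 0.01: <A> = {far:.5f} (point source: {point_magnification(2.0):.5f})")
```

For a uniform disk perfectly aligned with the lens, the average has the closed form $\sqrt{\rho^2+4}/\rho$, which stays finite and provides a convenient check of the integration. When the lens lies inside the source disk but off-centre, this simple grid converges slowly and a finer or adaptive scheme would be preferable.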

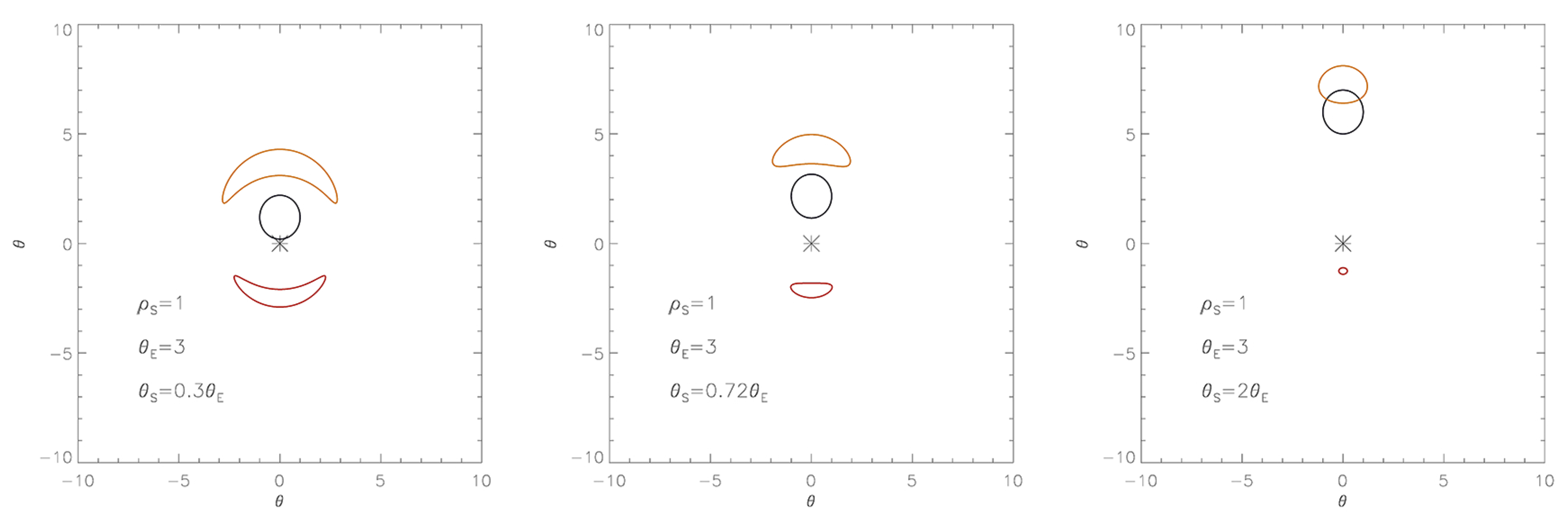

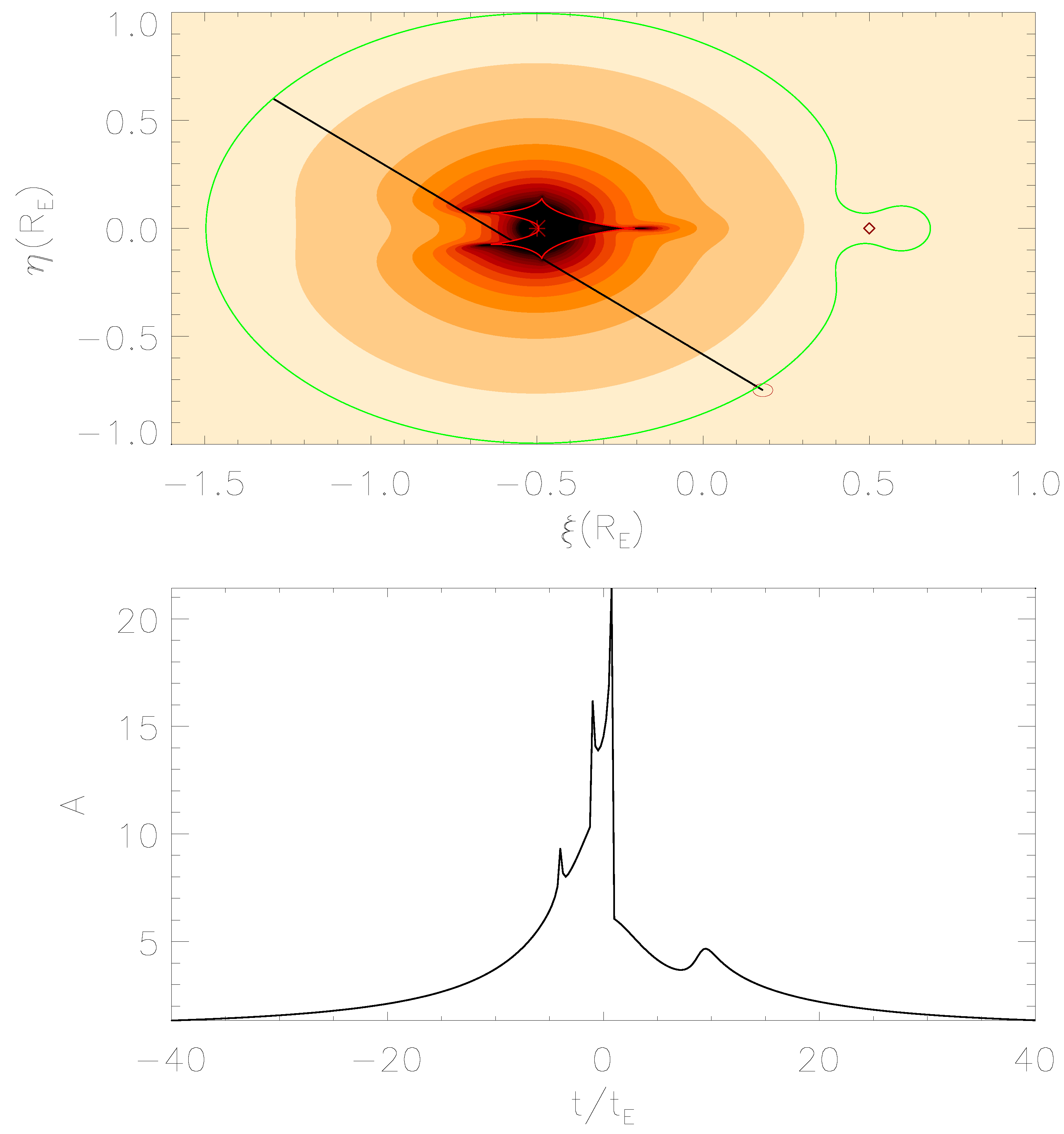

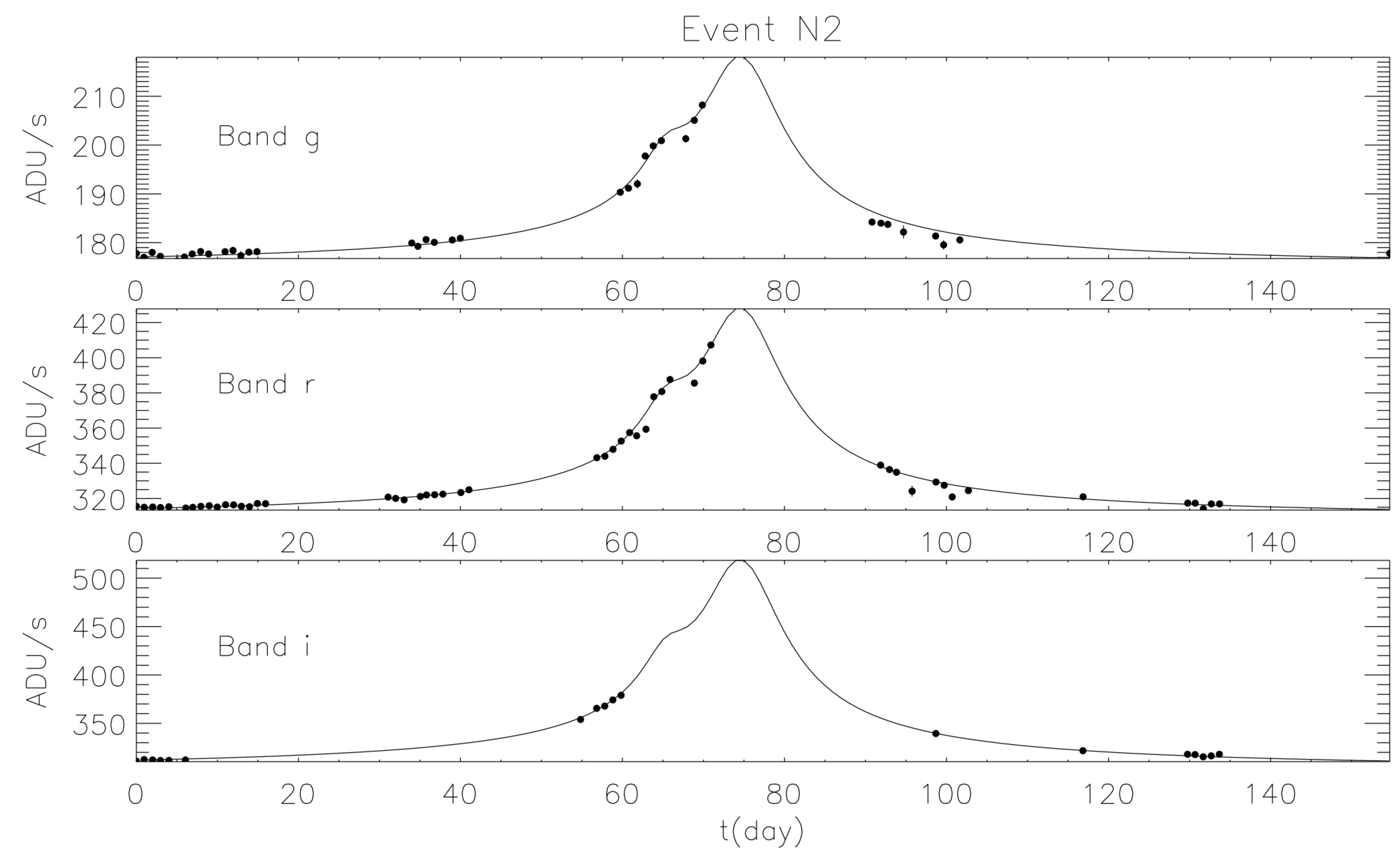

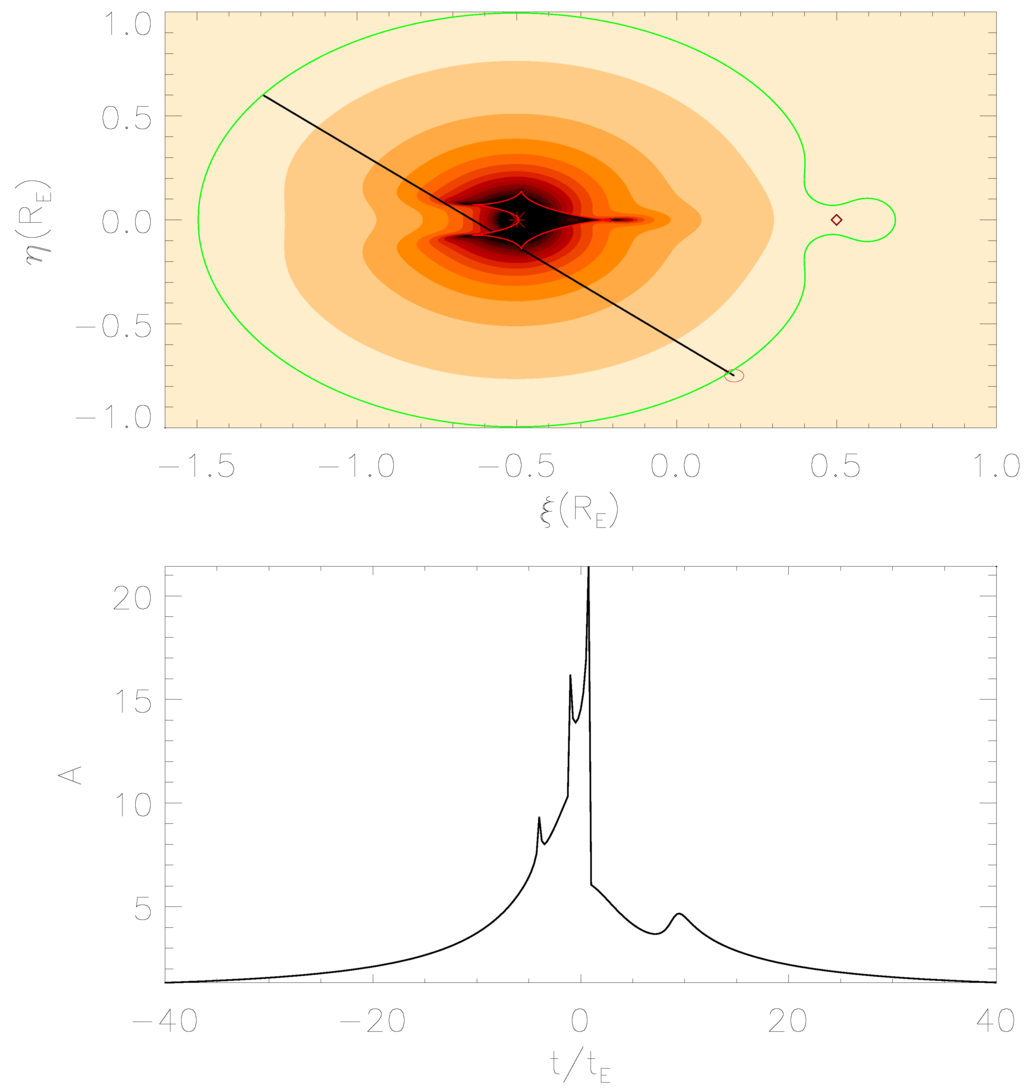

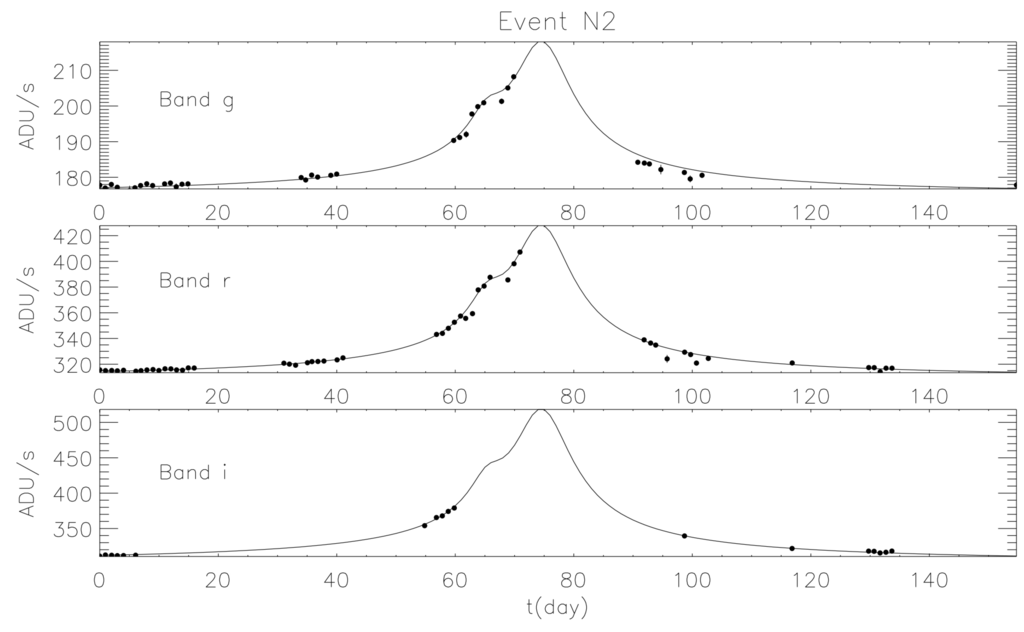

Observations show that about half of all stars are in binary systems, and moreover, thousands of exoplanets are being discovered around their host stars by different techniques and instruments. Therefore, it is worth considering binary and multiple systems as lenses in microlensing observations. In this case, the lens equation obviously becomes more complicated, but it can still be solved by numerical methods in order to obtain the magnification map, where caustics take on distinctive shapes depending on the specific geometry of the system. In Figure 4, we show the magnification map and the resulting light curve for a simulated microlensing event due to a binary lens with mass ratio $q \simeq 10^{-3}$ (e.g., a solar mass star as the primary component and a Jupiter-like planet as the secondary one). In these cases, the resulting light curve may be rather different from the typical Paczyński one, depending on the system parameters. The study of these anomalies in the behavior of microlensing light curves is becoming more and more important nowadays, since it allows one to estimate some of the parameters of the lensing system (see, e.g., [63]). The main advantage of this technique, compared to the other methods adopted by exoplanet hunters (e.g., radial velocity, direct imaging, transits), is the possibility to detect even very small planets orbiting their own star at enormous distances from Earth. It also allows one to discover the so-called free-floating planets (FFPs), otherwise hardly detectable [64]. By studying the PA99-N2 microlensing event, detected in 1999 by the French-British collaboration POINT-AGAPE [66], Ingrosso et al. [65] revealed in 2009 that the anomaly observed was compatible with the presence of a super-Jupiter around a star lying in the Andromeda galaxy (see Figure 5), thus finding the first putative exoplanet in another galaxy.

Figure 4.

Magnification map for a binary lens system characterized by two objects separated by a projected distance of and mass ratio . The green and red closed lines indicate the critical and caustic curves obtained by solving the lens equation in Equation (1). The black line indicates the trajectory of the source star, which has a radius of . The simulated light curve is shown in the lower panel.

Figure 5.

Light curve in three different bands (g, r and i) of the PA99-N2 event detected in 1999 toward the Andromeda galaxy.

5.1. Astrometric Microlensing

During an ongoing microlensing event, the centroid of the multiple images and the source star positions move in the lens plane, giving rise to a phenomenon known as astrometric microlensing (see, e.g., [67,68] and the references therein). In the simplest case of a point-like lens, for a source at angular distance $\theta_S$, the position $\theta$ of the images with respect to the lens can be obtained by solving the lens Equation (1). Since the Einstein radius $R_E = D_L\theta_E$ defines the scale length on the lens plane, the lens equation reads:

$$d^2 - d_S\,d - R_E^2 = 0, \tag{8}$$

where $d_S$ and $d$ are the linear distances, in the lens plane, of the source and images from the gravitational lens, respectively. Moreover, using the dimensionless source-lens and image-lens distances $u = d_S/R_E$ and $\tilde{d} = d/R_E$, the previous relation can be further simplified as:

$$\tilde{d}^2 - u\,\tilde{d} - 1 = 0. \tag{9}$$

Denoting with $\tilde{d}_+$ and $\tilde{d}_-$ the solutions of this equation, one notes that, in the lens plane, the + image always resides outside the circular ring centered on the lens position with radius equal to the Einstein angle, while the − image is always within the ring. As the source-lens distance increases, the + image approaches the source position, while the − one (becoming fainter) moves towards the lens location. For a source moving in the lens plane with transverse velocity $v_\perp$ directed along the $\xi$ axis ($\eta$ is perpendicular to it), the projected coordinates of the source result in being:

$$\xi(t) = \frac{t - t_0}{t_E}, \qquad \eta(t) = u_0, \tag{10}$$

where $t_E = R_E/v_\perp$ is the Einstein time, $t_0$ is the time of closest approach and $u_0$ is the impact parameter (in this case, lying on the $\eta$ axis). Since $u^2 = \xi^2 + \eta^2$ is time dependent, the two images move in the lens plane during the gravitational lensing event.

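By weighting the + and − image positions with the associated magnifications $\mu_\pm$ [69], one gets the centroid position:

$$\tilde{d}_c = \frac{\tilde{d}_+\mu_+ + \tilde{d}_-\mu_-}{\mu_+ + \mu_-} = \frac{u\,(u^2+3)}{u^2+2}. \tag{11}$$

Finally, the observable is defined as the displacement of the centroid with respect to the source,

$$\Delta = \tilde{d}_c - u = \frac{u}{u^2+2}. \tag{12}$$

Note that the centroid shift may be viewed as a vector:

$$\boldsymbol{\Delta} = \frac{\mathbf{u}}{u^2+2}, \tag{13}$$

with components along the axes:

$$\Delta_\xi = \frac{\xi}{u^2+2}, \qquad \Delta_\eta = \frac{u_0}{u^2+2}. \tag{14}$$

Here, we recall that all of the angular quantities are given in units of the Einstein angle $\theta_E$, which, for a source at distance $D_S \gg D_L$, results in being:

$$\theta_E \simeq 2.85\ {\rm mas} \left(\frac{M}{M_\odot}\right)^{1/2} \left(\frac{D_L}{1\ {\rm kpc}}\right)^{-1/2}, \tag{15}$$

which fixes the scale of the phenomenon.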

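It is straightforward to show (see [69]) that during a microlensing event, the centroid shift Δ traces (in the $\Delta_\xi$, $\Delta_\eta$ plane) an ellipse centered in the point $(0, b)$. The ellipse semi-major axis a (along $\Delta_\xi$) and semi-minor axis b (along $\Delta_\eta$) are:

$$a = \frac{1}{2\sqrt{u_0^2 + 2}}, \qquad b = \frac{u_0}{2\,(u_0^2 + 2)}. \tag{16}$$

Then, for $u_0 \gg \sqrt{2}$, the ellipse becomes a circle with radius $1/(2u_0)$, while it degenerates into a straight line of length $1/\sqrt{2}$ for $u_0$ approaching zero. Note also that Equation (16) implies:

$$u_0^2 = \frac{2\,(b/a)^2}{1 - (b/a)^2},$$

so that by measuring a and b, one can determine the event impact parameter $u_0$.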
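As a numerical check of the ellipse geometry, the sketch below assumes the standard point-lens results $a = 1/(2\sqrt{u_0^2+2})$, $b = u_0/[2(u_0^2+2)]$ and centroid-shift components $\xi/(u^2+2)$ and $u_0/(u^2+2)$. It verifies that points along the track satisfy the ellipse equation and recovers the impact parameter from the axis ratio. The function names and the value 0.7 for the impact parameter are illustrative assumptions.

```python
import math

def ellipse_axes(u0):
    """Semi-major and semi-minor axes of the centroid-shift ellipse (Einstein units)."""
    a = 0.5 / math.sqrt(u0 * u0 + 2.0)
    b = 0.5 * u0 / (u0 * u0 + 2.0)
    return a, b

def centroid_shift_components(xi, u0):
    """Centroid shift along (xi) and perpendicular to (eta) the source motion."""
    denom = xi * xi + u0 * u0 + 2.0
    return xi / denom, u0 / denom

def impact_parameter_from_axes(a, b):
    """Invert the axis ratio: u0**2 = 2 (b/a)**2 / (1 - (b/a)**2)."""
    ratio_sq = (b / a) ** 2
    return math.sqrt(2.0 * ratio_sq / (1.0 - ratio_sq))

u0 = 0.7   # hypothetical impact parameter
a, b = ellipse_axes(u0)
for xi in (-3.0, -0.5, 0.0, 1.0, 10.0):
    dx, dy = centroid_shift_components(xi, u0)
    # Ellipse centred on (0, b): the residual should vanish along the whole track.
    residual = (dx / a) ** 2 + ((dy - b) / b) ** 2 - 1.0
    print(f"xi={xi:+5.1f}  residual={residual:+.1e}")
print("recovered u0 =", impact_parameter_from_axes(a, b))
```

Because only the ratio b/a enters the inversion, the impact parameter can be estimated without knowing the Einstein angle itself.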

As observed in [67], Δ falls more slowly than the magnification, implying that the centroid shift may be an interesting observable also for large source-lens distances, i.e., far from the light curve peak. In fact, in astrometric microlensing, the threshold impact parameter (i.e., the value of the impact parameter that gives an astrometric centroid signal larger than a certain quantity ) is given by , where is the observing time and the relative velocity of the source with respect to the lens. For example, the Gaia satellite should reach an astrometric precision (for objects with visual magnitude ) in five years of observation [70]. Then, assuming a threshold centroid shift , one has for a lens at a distance of 0.1 kpc and transverse velocity km s−1. For comparison, the threshold impact parameter for a ground-based photometric observation is ≃ 1. Consequently, the cross-section for an astrometric microlensing measurement is much larger than the photometric one, since it scales as . Hence, in the absence of finite-source and blending effects, by measuring a and b, one can directly estimate the impact parameter $u_0$.
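The slower fall-off of the centroid shift can be illustrated with a short comparison. The sketch assumes the standard point-lens expressions for the total magnification, $A(u) = (u^2+2)/(u\sqrt{u^2+4})$, and for the centroid shift, $\Delta(u) = u/(u^2+2)$ in units of the Einstein angle. The sampled values of u are arbitrary.

```python
import math

def magnification_excess(u):
    """Photometric signal A(u) - 1 for a point lens and point source."""
    return (u * u + 2.0) / (u * math.sqrt(u * u + 4.0)) - 1.0

def centroid_shift(u):
    """Astrometric signal in units of the Einstein angle."""
    return u / (u * u + 2.0)

print("    u      A-1      shift")
for u in (0.5, 1.0, math.sqrt(2.0), 3.0, 10.0, 30.0):
    print(f"{u:5.2f}  {magnification_excess(u):9.2e}  {centroid_shift(u):9.2e}")
```

At large u the photometric excess drops roughly as $2/u^4$, while the centroid shift drops only as $1/u$. The shift is largest at $u = \sqrt{2}$, not at the photometric peak.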

A further advantage of astrometric microlensing is that some events can be predicted in advance [71]. In fact, by studying in detail the characteristics of stars with large proper motions, Proft et al. [72] identified tens of candidates for astrometric microlensing measurements with the Gaia satellite, a European Space Agency (ESA) mission that will perform photometry, spectroscopy and high-precision astrometry (see [70]).

5.2. Polarization and Orbital Motion Effects in Microlensing Events

Gravitational microlensing observations may also offer a unique tool to study the atmospheres of distant stars by detecting a characteristic polarization signal [73]. In fact, it is well known that the light received from stars is linearly polarized by the photon scattering occurring in the stellar atmospheres. The mechanism is particularly effective for hot stars (of A or B type), which have a free-electron atmosphere giving rise to a polarization degree increasing from the center to the stellar limb [74]. To a lesser extent, polarization may also be induced in main sequence F or G stars by the scattering of starlight off atoms and molecules, and in evolved, cool giant stars by photon scattering on dust grains contained in their extended envelopes.

Following the approach in [74], the polarization P in the direction making an angle $\chi = \arccos\mu$ with the normal to the star surface is $P(\mu) = [I_r(\mu) - I_l(\mu)]/[I_r(\mu) + I_l(\mu)]$, where $I_l(\mu)$ is the intensity in the plane containing the line of sight and the normal, and $I_r(\mu)$ is the intensity in the direction perpendicular to this plane. Here, $\mu = \sqrt{1 - (r/R)^2}$, where r is the distance of a star disk element from the center and R the star radius, and we are assuming that light propagates in the direction .

For isolated stars, a polarization signal has been measured only for the Sun, for which, due to its proximity, the projected disk is spatially resolved. Instead, when a star is far enough away to be considered point-like, only the polarization averaged over the stellar disk can be measured, and this average usually vanishes, since the flux from each stellar disk element is the same. A net polarization of the light appears if a suitable asymmetry in the stellar disk is present (caused by, e.g., eclipses, tidal distortions, stellar spots, fast rotation, magnetic fields). In the microlensing context, the polarization arises because different regions of the source star disk are magnified differently during the event. Indeed, during an ongoing microlensing event, the gravitational lens scans the disk of the background star, giving rise not only to a time-dependent light magnification, but also to a time-dependent polarization.
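The symmetry argument above can be illustrated with a toy numerical model. The sketch below is not the model of [74]: it assumes a uniformly bright disk, a purely illustrative local polarization degree growing towards the limb as $p_{\rm limb}(1-\mu)$ with tangential orientation, and the point-lens magnification $A(z) = (z^2+2)/(z\sqrt{z^2+4})$ for a disk element at distance z from the lens. All names and parameter values (disk radius 0.1 and lens offset 0.15 in Einstein radii) are hypothetical.

```python
import math

def point_lens_magnification(z):
    """Magnification of a disk element at distance z (Einstein radii) from the lens."""
    return (z * z + 2.0) / (z * math.sqrt(z * z + 4.0))

def disk_polarization(rho, lens_x=None, p_limb=0.1, n_r=200, n_phi=360):
    """Disk-averaged polarization degree for a star of radius rho centred at the origin.

    lens_x=None means no lensing; otherwise the lens sits at (lens_x, 0).
    """
    flux = stokes_q = stokes_u = 0.0
    for i in range(n_r):
        r = (i + 0.5) * rho / n_r                  # midpoint radial grid
        mu = math.sqrt(1.0 - (r / rho) ** 2)
        local_pol = p_limb * (1.0 - mu)            # toy limb polarization profile
        for j in range(n_phi):
            phi = (j + 0.5) * 2.0 * math.pi / n_phi
            if lens_x is None:
                amp = 1.0
            else:
                z = math.sqrt(r * r + lens_x * lens_x - 2.0 * r * lens_x * math.cos(phi))
                amp = point_lens_magnification(z)
            weight = amp * r                       # area element r dr dphi (constants cancel)
            flux += weight
            # Tangential polarization: position angle phi + pi/2, so the sign flips.
            stokes_q -= weight * local_pol * math.cos(2.0 * phi)
            stokes_u -= weight * local_pol * math.sin(2.0 * phi)
    return math.hypot(stokes_q, stokes_u) / flux

print("unlensed:", disk_polarization(0.1))
print("lensed  :", disk_polarization(0.1, lens_x=0.15))
```

Without a lens, the Stokes parameters cancel over the symmetric disk. With the lens placed off-center, the side of the disk closer to the lens is magnified more and a net polarization survives.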

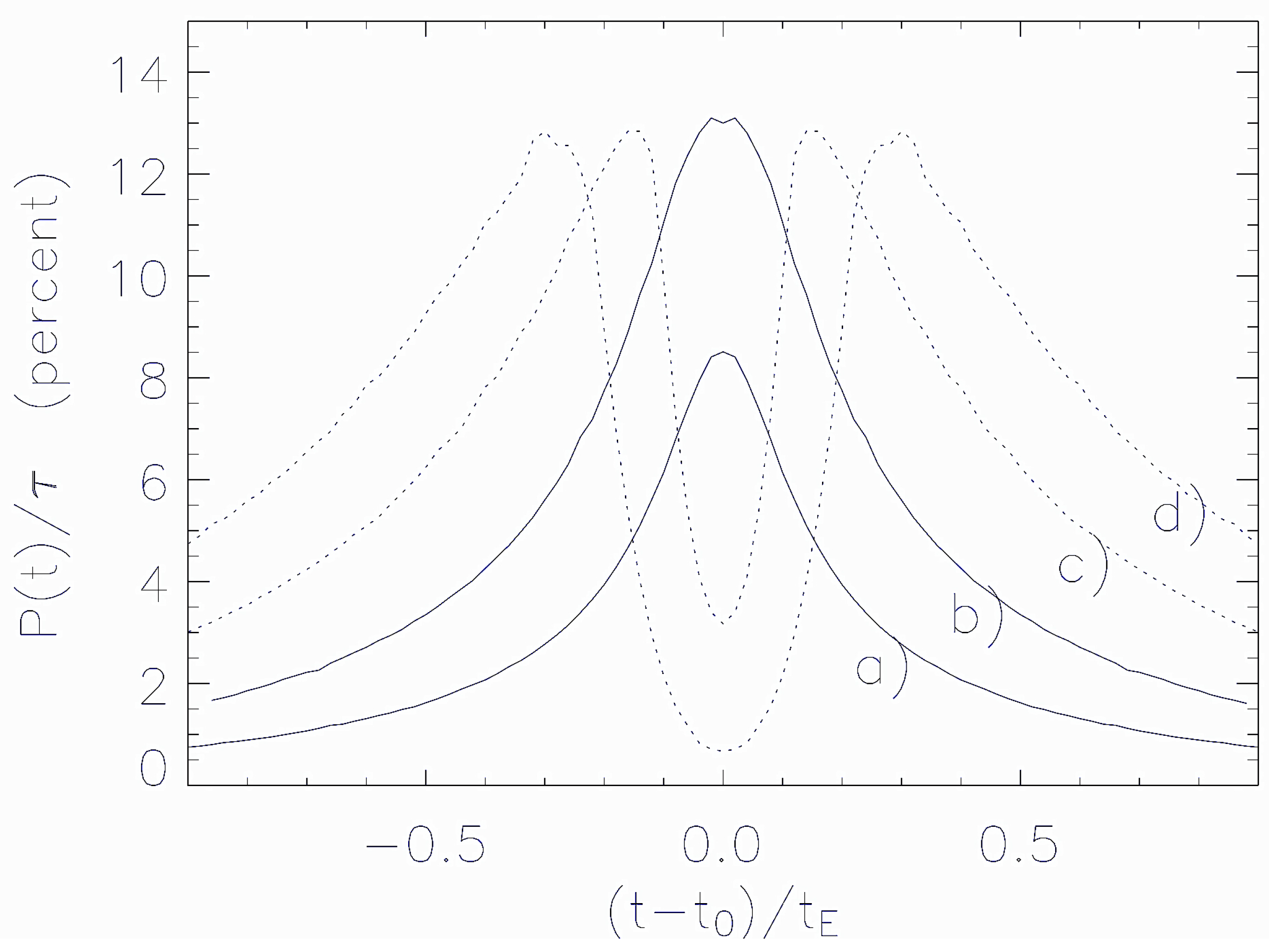

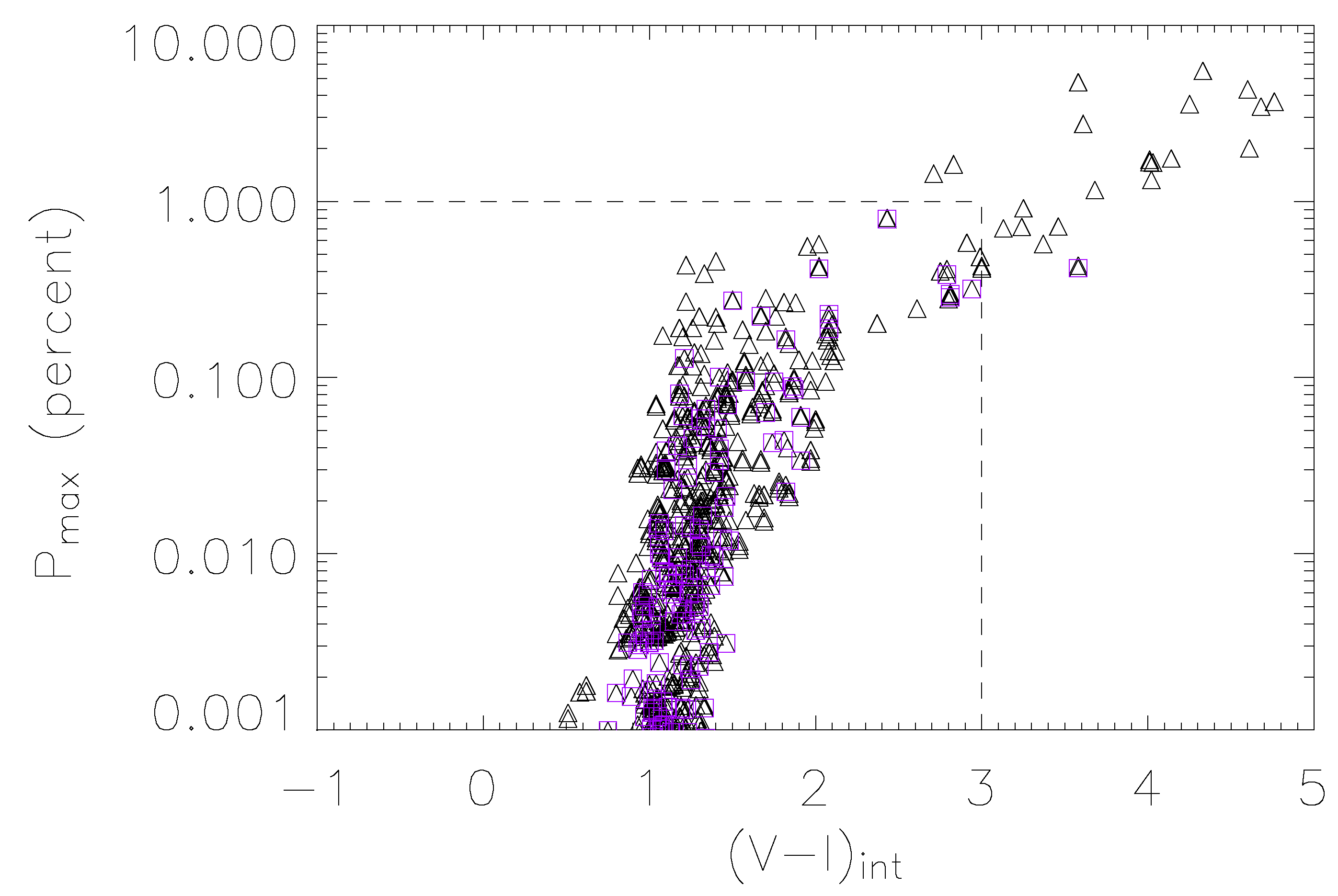

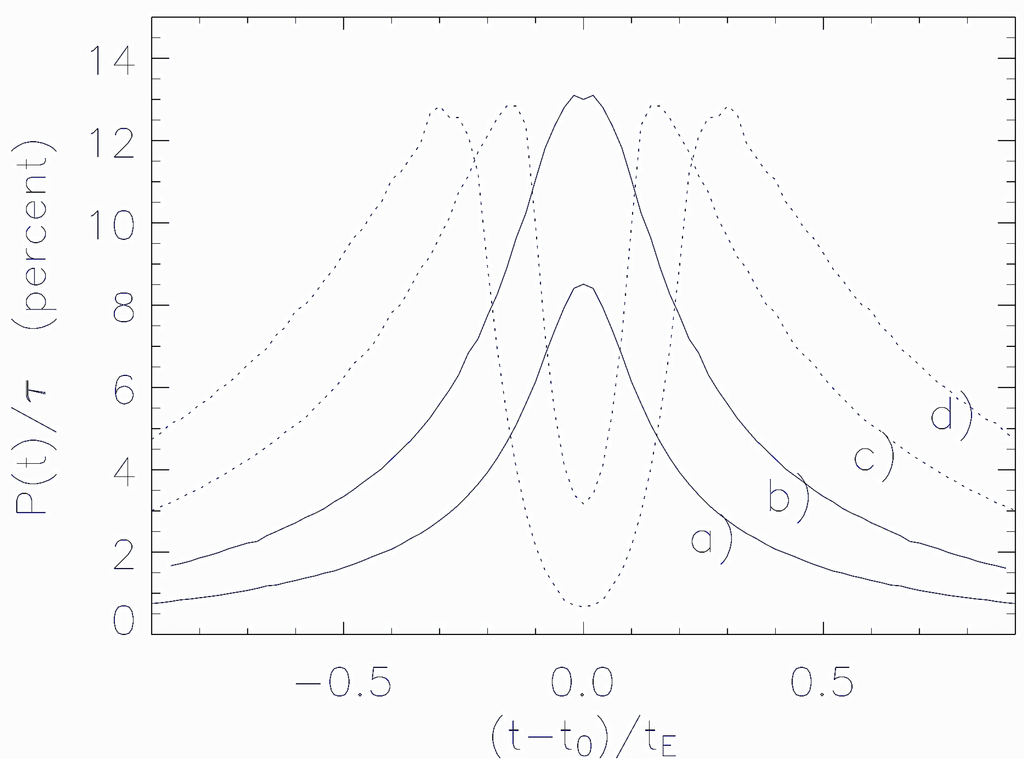

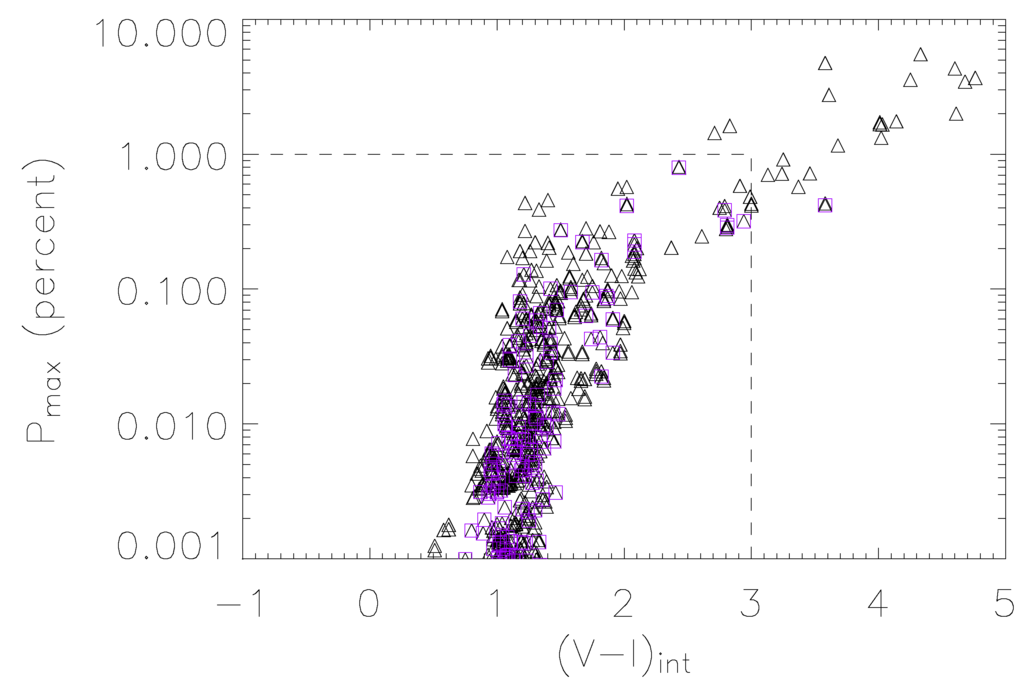

This effect (see also [75]) is particularly relevant in the microlensing events where: (1) the magnification turns out to be significant; (2) the source star radius and the lens impact parameter are comparable; (3) the source star is a red giant, characterized by a rather low surface temperature (), around which the formation of dust grains is possible. This occurs beyond the distance from the star center at which the gas temperature in the stellar wind becomes lower than the grain sublimation temperature (≃ 1400 K). The intensity of the expected polarization signal depends on the dust grain optical depth τ and can reach values of 0.1%–1%, which could reasonably be observed using, for example, the ESO VLT telescope (see [76]). In Figure 6, we show some typical polarization curves, expected in bypass (continuous curves) and transit events (dashed curves), in which the lens trajectory approaches or passes through the source regions where the dust grains are present. In Figure 7, the distribution of the peak polarization values (given in percent) as a function of the intrinsic source star color index (i.e., the de-reddened color of the unlensed source star) is shown for a sample of OGLE-type microlensing events generated by a synthetic stellar catalog simulating the bulge stellar population. As one can see, red giants with , which corresponds to the events inside the regions delimited by dashed lines, have percent values. These are the typical events observed by the OGLE-III microlensing campaign. There are, however, a few events with percent, characterized by , corresponding to source stars in the AGB phase. These stars, which are rather rare in the galactic bulge, have not been sources of microlensing events observed in the OGLE-III campaign, but they are expected to exist in the galactic bulge.
In this respect, the significant increase in the event rate expected from the forthcoming generation of microlensing surveys towards the galactic bulge, both ground-based, like KMTNet [77], and space-based, like EUCLID [78] and WFIRST [79], opens the possibility of developing an alert system able to trigger polarization measurements in ongoing microlensing events.

Figure 6.

Assuming parameter values , day and , the polarization curves are shown as a function of , for increasing values of , corresponding to the curves labeled (a), (b), (c) and (d), respectively. Continuous curves, (a) and (b), are bypass events; dotted lines, (c) and (d), are transit events. Here, is the minimum distance for the formation of dust grains, and is the source star radius.

Figure 7.

Distribution of the peak value of the polarization signal as a function of the intrinsic color index of the source star for simulated transit (triangles) and bypass (purple squares) events. The dashed lines indicate the region in which the events observed by the OGLE Collaboration are expected to lie.

Another way to study the atmosphere of the source star is to analyze the amplification curve and look for dips and peaks, typically due to the presence of stellar spots on the photosphere of the star [80,81]. These features may be easily confused, however, with the signatures of a binary lensing system. When the source star rotates significantly during the lensing event, it becomes possible to actually detect the stellar spots on the source’s surface and to estimate the rotation period of the star [82]. A new generation of networks of telescopes dedicated to microlensing surveys, like KMTNet [83], will provide high-precision and high-cadence photometry that will enable us to observe spots on the source’s surface. We remark that multicolor observations of the event would also help to disentangle the aforementioned degeneracy, as the ratio between the brightness of the spot and the surrounding photosphere strongly depends on the frequency of the observation. It has been shown that stellar spots can also be detected through polarimetric observations of microlensing caustic-crossing events [84].

Under certain circumstances, binary lens systems are characterized by the close-wide degeneracy: if the two objects are separated by a projected distance s or 1/s, the resulting caustics have the same structure, and the observed light curves also appear the same [85,86]. This happens, for example, in systems with small mass ratio q, like planetary systems [87]. It is possible to resolve this degeneracy in the case of short-period binary lenses, the so-called rapidly rotating lenses, as the orbital motion induces repeating features in the amplification curve that can be exploited to estimate important physical parameters of the lensing system, including the orbital period, the projected separation and the mass [88,89].

6. Retro-Lensing: Measuring the Black Hole Features

Gravitational lensing at the scales considered in the previous sections can be treated in the weak gravitational field approximation of the general theory of relativity, since in those cases, photons are deflected by very small angles. This is not the case when one considers black holes, for which it may happen that photons get very close to the event horizon of these compact objects.

Black holes are relatively simple objects. The no-hair theorem states that they are completely described by only three parameters: mass, angular momentum (generally indicated by the spin parameter a) and electric charge; any other information (for which hair is a metaphor) disappears behind the event horizon and is therefore inaccessible to external observers. Depending on the values of these parameters, black holes can be classified into Schwarzschild black holes (non-rotating and non-charged), Kerr black holes (rotating and non-charged), Reissner–Nordström black holes (non-rotating and charged) and Kerr–Newman black holes (rotating and charged).

Even though they appear so simple, black holes are mathematically complicated to describe (see, e.g., [90]). Nowadays, we know that black holes reside at the center of the majority of galaxies, active or not, and in many binary systems emitting X-rays. Moreover, they are the engine of gamma-ray bursts (GRBs) and play an essential role in better understanding stellar evolution, galaxy formation and evolution, jets and, in the end, the nature of space and time. One goal astrophysicists have been pursuing for a long time is to probe the immediate vicinity of a black hole with an angular resolution as close as possible to the size of the event horizon. This kind of observation would give a new opportunity to study strong gravitational fields, and as we will see at the end of this section, we think we are very close to reaching this goal.

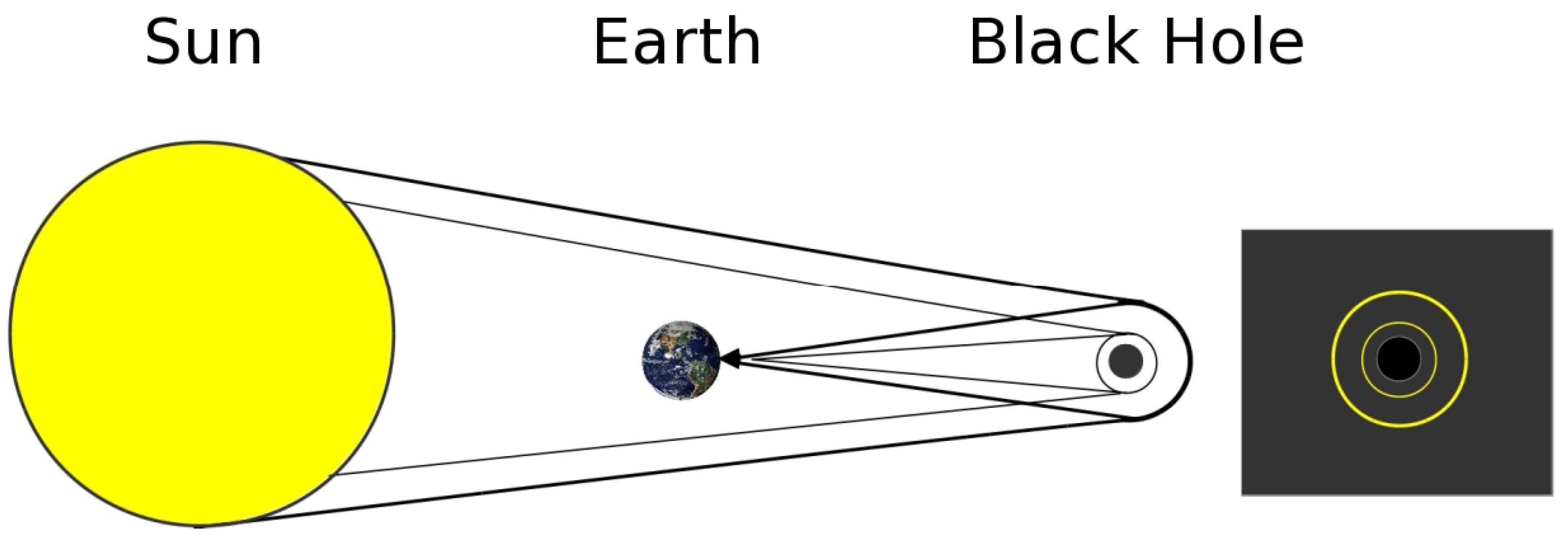

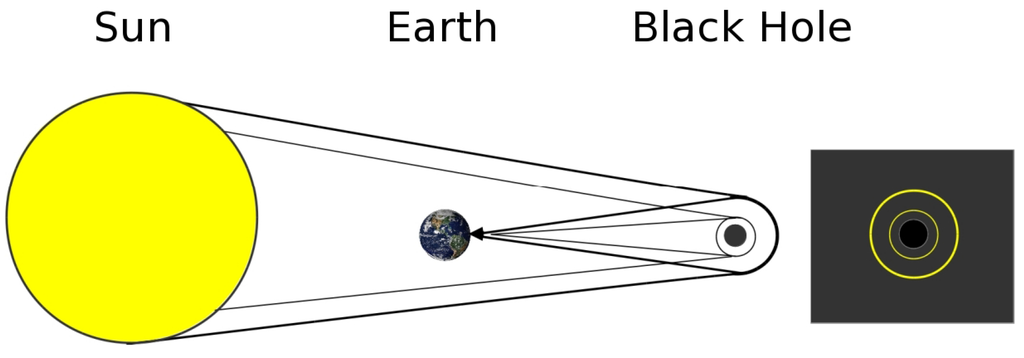

How do we measure the mass, angular momentum and electric charge of a black hole? One possibility, rich with interesting consequences, was suggested by Holz and Wheeler [91], who considered a phenomenon that was already known to be possible around black holes. They used the Sun as the source of light rays and a black hole far from the solar system. As shown in Figure 8, some photons would have the right impact parameter to turn around the black hole and come back to Earth. Other photons, with a slightly smaller impact parameter, can even rotate twice around the black hole, and so on. A series of concentric rings should then appear if the observer, the Sun and the black hole are perfectly aligned. The two authors also suggested carrying out a survey to look for concentric rings in the sky in order to discover black holes. Unfortunately, there are two problems with this idea. First, it is unlikely that the Sun, Earth and a black hole are perfectly aligned, and in any case, Earth moves around the Sun, so that the alignment can occur only for a short time interval. The second and most important problem is that the retro-image of the Sun is so dim that, even using the Hubble Space Telescope (HST), only a black hole with a mass larger than within 0.01 pc from the Earth could be observed with the proposed technique. Moreover, we already know that such an object cannot be so close to the solar system without causing observable perturbations in the planetary orbits.

Figure 8.

Retro-lensing of sunlight by a black hole as seen from Earth. On the right-hand side, the series of rings around the black hole’s event horizon that an observer would see in the case of perfect alignment. For clarity, only two rings are shown.

A better approach to test the idea proposed by Holz and Wheeler is to consider a well-known supermassive black hole and a bright star around it. Of course, the brighter the source star, the brighter the retro-image will be. Some of us [92] soon proposed to consider retro-lensing around the black hole at the galactic center, and in particular, the retro-lensing image of the closest star orbiting around it. Indeed, it is known that at the center of our galaxy, there is a supermassive black hole, with mass about , identified by studying the orbits of several bright stars orbiting around it (see [93,94] and the references therein). A method to determine the mass and the angular momentum of this black hole could then be to measure the periastron or apoastron shifts of some of the stars orbiting around it. Another method to estimate the black hole spin a is based on the analysis of the quasi-periodic oscillations towards Sgr A*. Recently, the analysis of the data in the X-ray and IR bands has allowed some astrophysicists to find that [94,95]. However, there is a drawback in this approach: periastron and apoastron shifts of orbits depend not only on the black hole parameters, but also on how stars are distributed around the black hole and on the mass density profile of the dark matter possibly present in the region surrounding the black hole. It is possible to understand the difficulty of the measurement by noting that the difference of the periastron shift of the S2 star (the closest one to the black hole at the center of our galaxy) induced by a Schwarzschild black hole or a Kerr black hole with spin parameter (and the same mass as the Schwarzschild one) is only of as (for the dependence of the periastron shift on the black hole spin orientation see [96]). Then, even if one had succeeded in measuring the periastron shift of the closest star to the central black hole, it would be unlikely to derive the amount of the black hole angular momentum.
Our goal could be achieved anyway by measuring the periastron shift of many stars orbiting around the center of the Galaxy. The measurement of the periastron shift could, in turn, also give an estimate of the parameters of the dark matter concentration expected to lie towards the Sgr A* region [97], as well as allow one to test different modifications of the general theory of relativity [98,99,100] (see also [101] for the constraints on theories by Solar System data). However, this is anything but easy [102]. An important step forward in this direction has been provided recently by near-infrared astrometric observations of many stars around Sgr A* with a precision of about 170 μas in position and ≃ 0.07 mas yr$^{-1}$ in velocity [103]. A further improvement, hopefully in the near future, would make possible the direct detection of relativistic effects in the orbits of stars orbiting the central black hole.

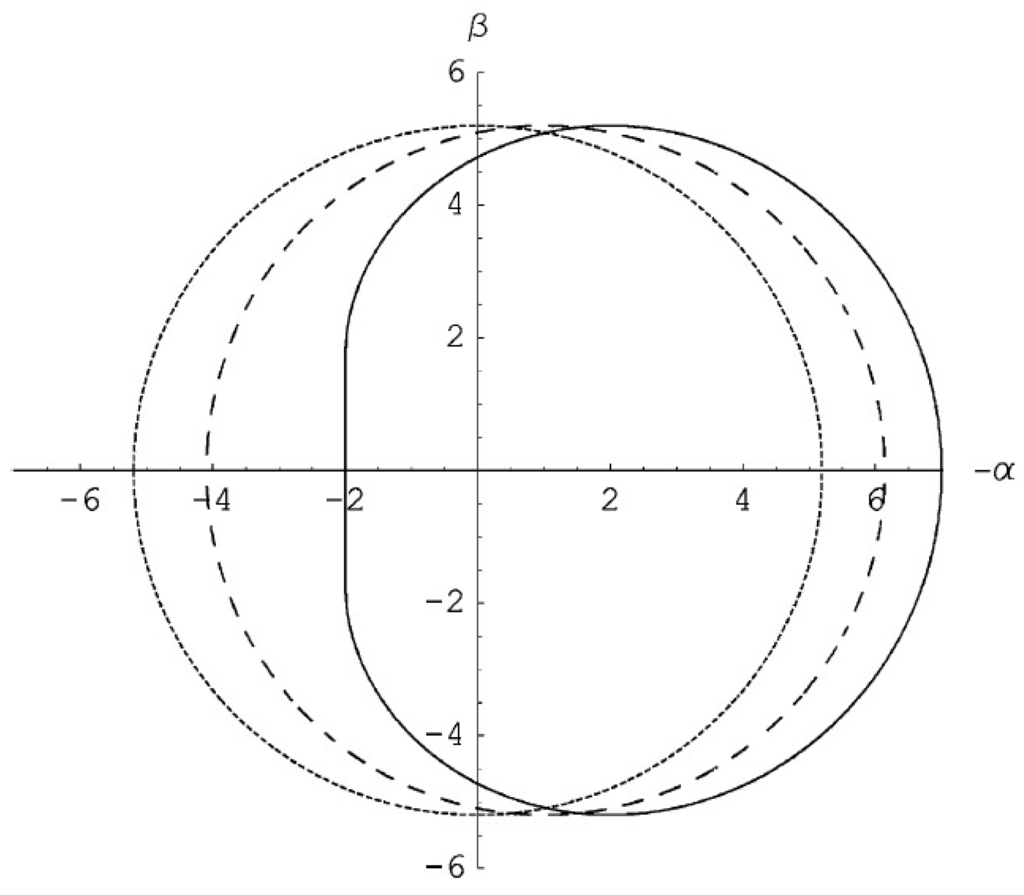

Retro-lensing images of bright stars retro-lensed by the black hole at the galactic center might give an alternative method to estimate the Sgr A* black hole parameters. Even though in general it is difficult to calculate the retro-lensing images, since this requires integrating with high precision the trajectories followed by the light, it is possible to carry out these calculations numerically not only for a Schwarzschild black hole, but also for Kerr and Reissner–Nordström black holes. As discussed in several papers (see, e.g., [104] and the references therein), one finds that the shape of the retro-lensing image depends on the black hole spin (see Figure 9), and then, in principle, a single sufficiently precise observation of the retro-lensing image of a star could allow one to unambiguously estimate the parameters of the black hole in Sgr A*. It is possible to show that also the electric charge of a Reissner–Nordström black hole can be obtained [105]. In fact, although the formation of a Reissner–Nordström black hole may be problematic, charged black holes are the subject of intensive investigation, and the black hole charge can be estimated by using the size of the retro-lensing images that can be revealed by future astrometric missions. The shape of the retro-lensing (or shadow) image depends in fact also on the electric charge of the black hole, and it becomes smaller as the electric charge increases. The mirage size difference between the extreme charged black hole and the Schwarzschild black hole case is about 30%, and in the case of the black hole in Sgr A*, the shadow typical angular sizes are about 52 μas for the Schwarzschild case and about 40 μas for a maximally charged Reissner–Nordström black hole. Therefore, a charged black hole could be, in principle, distinguished from a Schwarzschild black hole with RADIOASTRON, at least if its charge is close to the maximal value.
We also mention that the black hole spin gives rise to chromatic effects (while for non-rotating lenses, the gravitational lensing effect is always achromatic), making one side of the image bluer than the other side [104].
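The quoted shadow sizes can be checked to order of magnitude with a short calculation. The sketch below assumes the standard critical impact parameter of a Reissner–Nordström black hole, obtained from the photon-sphere radius $r_{\rm ph} = [3 + \sqrt{9 - 8q^2}]/2$ (in units of $GM/c^2$, with q the charge-to-mass ratio in geometrized units) as $b_c = r_{\rm ph}/\sqrt{1 - 2/r_{\rm ph} + q^2/r_{\rm ph}^2}$. The mass ($4.3 \times 10^6$ solar masses) and distance (8.3 kpc) adopted for Sgr A* are assumptions made here for illustration and are not taken from the text.

```python
import math

G = 6.674e-11       # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8         # speed of light, m s^-1
M_SUN = 1.989e30    # solar mass, kg
KPC = 3.0857e19     # kiloparsec, m
RAD_TO_MUAS = math.degrees(1.0) * 3600.0 * 1.0e6

def rn_shadow_radius(q):
    """Critical impact parameter in units of GM/c^2 for charge-to-mass ratio 0 <= q <= 1."""
    r_ph = 0.5 * (3.0 + math.sqrt(9.0 - 8.0 * q * q))      # photon-sphere radius
    f = 1.0 - 2.0 / r_ph + q * q / (r_ph * r_ph)           # metric function at r_ph
    return r_ph / math.sqrt(f)

def shadow_diameter_muas(mass_msun, distance_kpc, q=0.0):
    """Angular diameter of the shadow in microarcseconds."""
    r_g = G * mass_msun * M_SUN / C**2                      # gravitational radius, m
    return 2.0 * rn_shadow_radius(q) * r_g / (distance_kpc * KPC) * RAD_TO_MUAS

# Assumed Sgr A* parameters (hypothetical inputs for this sketch).
mass, distance = 4.3e6, 8.3
print(f"Schwarzschild (q=0): {shadow_diameter_muas(mass, distance, 0.0):.1f} muas")
print(f"extreme RN    (q=1): {shadow_diameter_muas(mass, distance, 1.0):.1f} muas")
```

With these inputs the two diameters come out close to the values of about 52 μas and about 40 μas quoted above; the exact numbers shift with the adopted mass and distance.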

Figure 9.

Retro-lensing images of a source by a Schwarzschild black hole (dotted circle), a Kerr black hole with spin parameter (dashed line) and a maximally spinning black hole with (continuous line). The line of sight of the observer is perpendicular to the spin axis of the black hole.

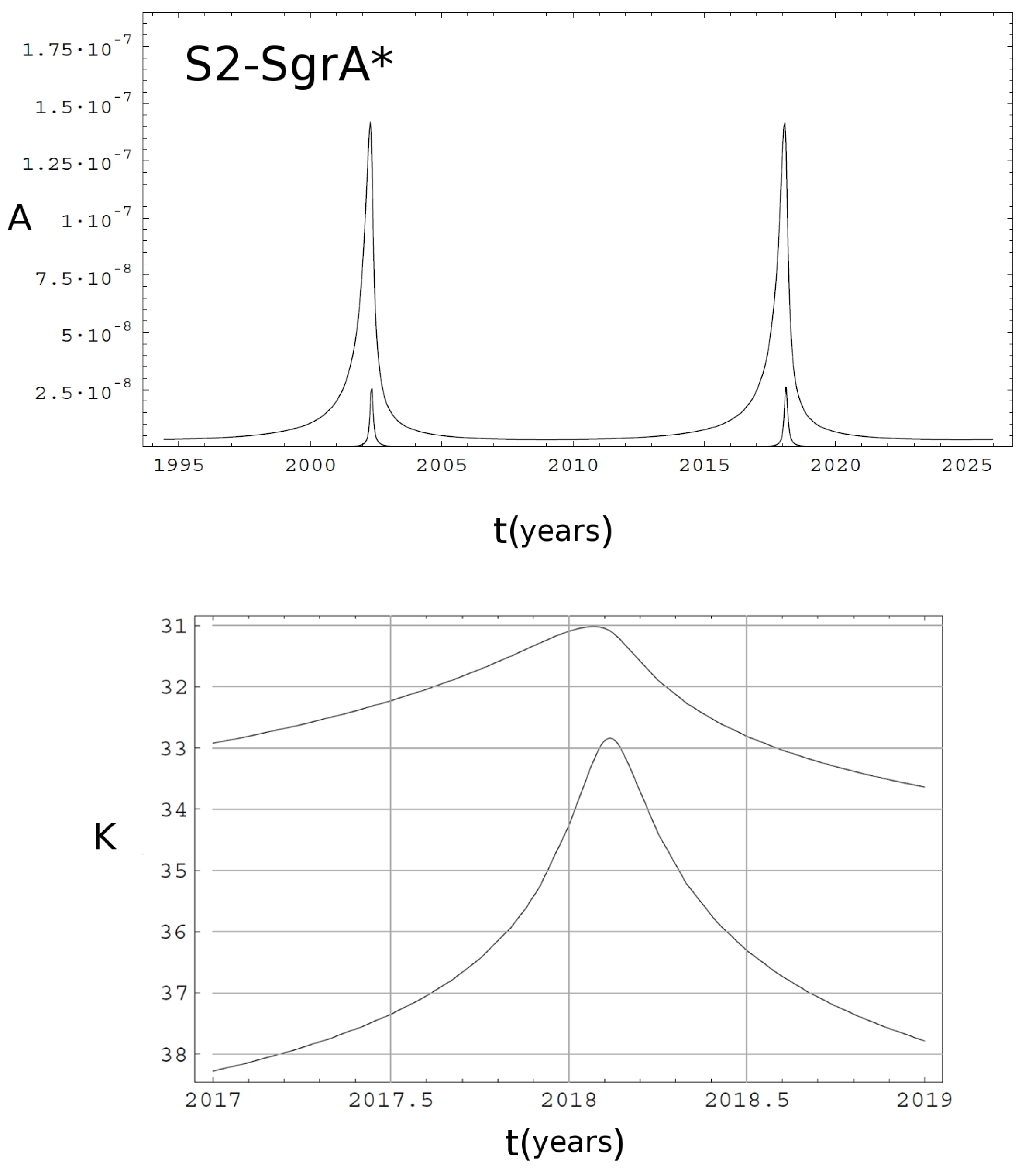

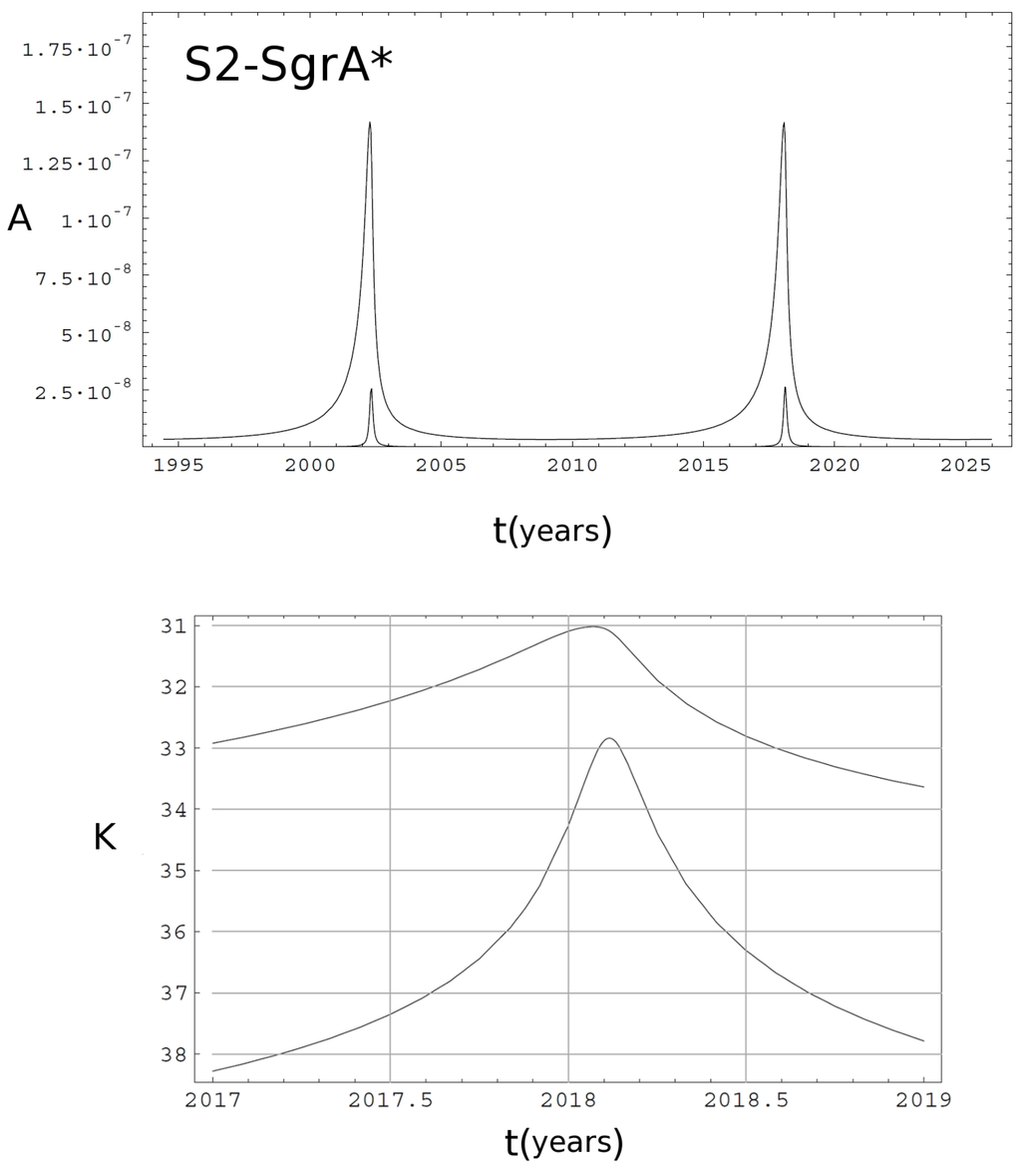

Can we really hope to observe these retro-lensing images towards Sgr A*? Despite what one could think, we are not so far from this goal. The successor of the Hubble Space Telescope, the James Webb Space Telescope (JWST), scheduled for launch in October 2018, has the sensitivity to observe the retro-lensing image of the S2 star produced by the black hole at the galactic center with an exposure time of about thirty hours. In Figure 10, we show the magnification (upper panel) and the magnitude (bottom panel) light curves (in K band) of the retro-lensing image of the S2 star produced by the black hole at the galactic center (see also [106]). Unfortunately, JWST does not have the angular resolution necessary to provide information about the shape of the retro-lensing image. The required angular resolution could be reached with the next generation of radio interferometers. In fact, the diameter of the retro-lensing image around the central black hole should be about 30 μas, and already in 2008, Doeleman and his collaborators [107] managed to achieve an angular resolution of about 37 μas, very close to the required one, by combining different radio telescopes interferometrically with a baseline of about 4500 km. Progress in this field is so fast that it is not hard to think we can eventually reach this aim in the near future by, e.g., the EHT (Event Horizon Telescope) project, or by the planned Russian space observatory, Millimetron (the spectrum-M project), or by combined observations with different interferometers, such as the Very Large Array (VLA) and ALMA (Atacama Large Millimeter Array).

Figure 10.

Upper panel: amplification as a function of time for the primary (upper curve) and secondary (lower curve) retro-lensing images of the S2 star by the black hole in the Galaxy center. Lower panel: light curve in K-band magnitude of the two retro-lensing images (adapted from [106]). The standard interstellar absorption coefficient towards the Galaxy center has been assumed.
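The two panels of Figure 10 are related by the standard magnitude relation $m = m_0 - 2.5 \log_{10} A + A_K$, where A is the amplification of the retro-lensing image and $A_K$ the interstellar absorption. A minimal sketch of this conversion follows. The unlensed K-band magnitude, the amplification values and the absorption coefficient used here are hypothetical placeholders, not the values adopted for the figure.

```python
import math

def lensed_magnitude(m_unlensed, amplification, extinction=0.0):
    """Apparent magnitude of an image amplified by `amplification`, plus extinction."""
    return m_unlensed - 2.5 * math.log10(amplification) + extinction

# Hypothetical inputs: unlensed K magnitude 14, extinction of 3 mag.
for amplification in (1.0e-6, 1.0e-5, 1.0e-4):
    m = lensed_magnitude(14.0, amplification, extinction=3.0)
    print(f"A={amplification:.0e}  K={m:.1f}")
```

Each factor of 100 in amplification corresponds to a change of 5 magnitudes, which is why retro-lensing images with amplifications far below unity are so faint.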

7. Conclusions

In this paper, we have discussed the various scales on which gravitational lensing manifests itself and which may lead us to obtain valuable information about a great variety of astronomical issues, ranging from the star distribution in the Milky Way, the study of stellar atmospheres and the discovery of exoplanets in the Milky Way and in nearby galaxies, to the study of distant galaxies, galaxy clusters and black holes. Gravitational lensing, in particular in the strong and weak lensing regimes, may also allow scientists to answer, in the near future, fundamental questions in cosmology related to the nature of dark matter, why the Universe is accelerating and what the nature is of the source responsible for the acceleration, which physicists refer to as dark energy.

Acknowledgments

The authors acknowledge the support of the TAsP (Theoretical Astroparticle Physics) Project funded by INFN. Mosè Giordano acknowledges the support of the Max Planck Institute for Astronomy, Heidelberg, where part of this work was done. We thank Asghar Qadir and Alexander Zakharov for the valuable discussions on the subject of the paper over many years.

Author Contributions

All authors have equally contributed to this paper and have read and approved the final version.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Einstein, A. On the Influence of Gravitation on the Propagation of Light. Ann. Phys. 1911, 340, 898–908.

- Dyson, F.W.; Eddington, A.; Davidson, C. A determination of the deflection of light by the sun’s gravitational field from observations made at the total eclipse of May 29, 1919. Phil. Trans. Roy. Soc. A 1920, 220, 291–333.

- Chwolson, O. Über eine mögliche Form fiktiver Doppelsterne. Astron. Nachr. 1924, 221, 329–330.

- Einstein, A. Lens-Like Action of a Star by the Deviation of Light in the Gravitational Field. Science 1936, 84, 506–507.

- Zwicky, F. Nebulae as Gravitational Lenses. Phys. Rev. 1937, 51, 290.

- Zwicky, F. On the Probability of Detecting Nebulae which Act as Gravitational Lenses. Phys. Rev. 1937, 51, 679.

- Walsh, D.; Carswell, R.F.; Weymann, R.J. 0957 + 561 A,B: Twin quasistellar objects or gravitational lens? Nature 1979, 279, 381–384.

- Paczyński, B. Gravitational microlensing by the galactic halo. Astrophys. J. 1986, 304, 1–5.

- Johannsen, T. Sgr A* and general relativity. 2015; arXiv:1512.03818.

- Abbott, B.P.; Abbott, R.; Abbott, T.D.; Abernathy, M.R.; Acernese, F.; Ackley, K.; Adams, C.; Adams, T.; Addesso, P.; Adhikari, R.X.; et al. Observation of Gravitational Waves from a Binary Black Hole Merger. Phys. Rev. Lett. 2016, 116, 061102.

- Schneider, P.; Ehlers, J.; Falco, E.E. Gravitational Lenses; Springer-Verlag: Berlin, Germany, 1992.

- Schmidt, M. 3C 273: A star-like object with large red-shift. Nature 1963, 197, 1040.

- Pelt, J.; Kayser, R.; Refsdal, S.; Schramm, T. The light curve and the time delay of QSO 0957+561. Astron. Astrophys. 1996, 306, 97–106.

- Chen, G.H.; Kochanek, C.S.; Hewitt, J.N. The Mass Distribution of the Lens Galaxy in MG 1131+0456. Astrophys. J. 1995, 447, 62–81.

- Hennawi, J.F.; Gladders, M.D.; Oguri, M.; Dalal, N.; Koester, B.; Natarajan, P.; Strauss, M.A.; Inada, N.; Kayo, I.; Lin, H. A New Survey for Giant Arcs. Astron. J. 2008, 135, 664–681.

- Browne, I.W.A.; Wilkinson, P.N.; Jackson, N.J.F.; Myers, S.T.; Fassnacht, C.D.; Koopmans, L.V.E.; Marlow, D.R.; Norbury, M.; Rusin, D.; Sykes, C.M.; et al. The Cosmic Lens All-Sky Survey - II. Gravitational lens candidate selection and follow-up. Mon. Not. R. Astron. Soc. 2003, 341, 13–32.

- Bolton, A.S.; Burles, S.; Koopmans, L.V.E.; Treu, T.; Moustakas, L.A. The Sloan Lens ACS Survey. I. A Large Spectroscopically Selected Sample of Massive Early-Type Lens Galaxies. Astrophys. J. 2006, 638, 703–724.

- Alam, S.; Albareti, F.D.; Allende, P.; Anders, F.; Anderson, S.F.; Anderton, T.; Andrews, B.H.; Armengaud, E.; Aubourg, E.; Bailey, S.; et al. The Eleventh and Twelfth Data Releases of the Sloan Digital Sky Survey: Final Data from SDSS-III. Astrophys. J. Suppl. Ser. 2015, 219, 12.

- Oguri, M.; Inada, N.; Pindor, B.; Strauss, M.A.; Richards, G.T.; Hennawi, J.F.; Turner, E.L.; Lupton, R.H.; Schneider, D.P.; Fukugita, M. The Sloan Digital Sky Survey Quasar Lens Search. I. Candidate Selection Algorithm. Astron. J. 2006, 132, 999–1013.

- Blandford, R.D.; Narayan, R. Cosmological applications of gravitational lensing. Annu. Rev. Astron. Astrophys. 1992, 30, 311–358.

- Treu, T. Strong lensing by galaxies. Annu. Rev. Astron. Astrophys. 2010, 48, 87–125.

- Kneib, J.-P.; Ellis, R.S.; Smail, I.; Couch, W.J.; Sharples, R.M. Hubble Space Telescope Observations of the Lensing Cluster Abell 2218. Astrophys. J. 1996, 471, 643–656.

- Mao, S.; Schneider, P. Evidence for substructure in lens galaxies? Mon. Not. R. Astron. Soc. 1998, 295, 587–594.

- Metcalf, R.B.; Madau, P. Compound Gravitational Lensing as a Probe of Dark Matter Substructure within Galaxy Halos. Astrophys. J. 2001, 563, 9–20.

- Xu, D.D.; Mao, S.; Wang, J.; Springel, V.; Gao, L.; White, S.D.M.; Frenk, C.S.; Jenkins, A.; Li, G.; Navarro, J.F. Effects of dark matter substructures on gravitational lensing: results from the Aquarius simulations. Mon. Not. R. Astron. Soc. 2009, 398, 1235–1253.

- Metcalf, R.B.; Amara, A. Small-scale structures of dark matter and flux anomalies in quasar gravitational lenses. Mon. Not. R. Astron. Soc. 2012, 419, 3414–3425.

- Xu, D.D.; Sluse, D.; Gao, L.; Wang, J.; Frenk, C.; Mao, S.; Schneider, P.; Springel, V. How well can cold dark matter substructures account for the observed radio flux-ratio anomalies. Mon. Not. R. Astron. Soc. 2015, 447, 3189–3206.

- Vegetti, S.; Lagattuta, D.J.; McKean, J.P.; Auger, M.W.; Fassnacht, C.D.; Koopmans, L.V.E. Gravitational detection of a low-mass dark satellite galaxy at cosmological distance. Nature 2012, 481, 341–343.

- Vegetti, S.; Koopmans, L.V.E.; Auger, M.W.; Treu, T.; Bolton, A.S. Inference of the cold dark matter substructure mass function at z = 0.2 using strong gravitational lenses. Mon. Not. R. Astron. Soc. 2014, 442, 2017–2035.

- Kochanek, C.S. Quantitative Interpretation of Quasar Microlensing Light Curves. Astrophys. J. 2004, 605, 58–77.

- Mosquera, A.M.; Kochanek, C.S. The Microlensing Properties of a Sample of 87 Lensed Quasars. Astrophys. J. 2011, 738, 96.

- Pooley, D.; Rappaport, S.; Blackburne, J.A.; Schechter, P.L.; Wambsganss, J. X-Ray and Optical Flux Ratio Anomalies in Quadruply Lensed Quasars. II. Mapping the Dark Matter Content in Elliptical Galaxies. Astrophys. J. 2012, 744, 111.

- Soucail, G.; Fort, B.; Mellier, Y.; Picat, J.P. A blue ring-like structure, in the center of the A 370 cluster of galaxies. Astron. Astrophys. 1987, 172, L14–L16.

- Stark, D.P.; Swinbank, A.M.; Ellis, R.S.; Dye, S.; Smail, I.R.; Richard, J. The formation and assembly of a typical star-forming galaxy at redshift z∼3. Nature 2008, 455, 775–777.

- Kelly, P.L.; Rodney, S.A.; Treu, T.; Foley, R.J.; Brammer, G.; Schmidt, K.B.; Zitrin, A.; Sonnenfeld, A.; Strolger, L.-G.; Graur, O.; et al. Multiple images of a highly magnified supernova formed by an early-type cluster galaxy lens. Science 2015, 347, 1123–1126.

- Treu, T.; Brammer, G.; Diego, J.M.; Grillo, C.; Kelly, P.L.; Oguri, M.; Rodney, A.; Rosati, P.; Sharon, K.; Zitrin, A. “Refsdal” Meets Popper: Comparing Predictions of the Re-appearance of the Multiply Imaged Supernova Behind MACSJ1149.5+2223. Astrophys. J. 2016, 817, 60.

- Refsdal, S. On the possibility of determining Hubble’s parameter and the masses of galaxies from the gravitational lens effect. Mon. Not. R. Astron. Soc. 1964, 128, 307–310.

- Jackson, N. The Hubble Constant. Living Rev. Relativ. 2007, 10, 4.

- Suyu, S.H.; Marshall, P.J.; Auger, M.W.; Hilbert, S.; Blandford, R.D.; Koopmans, L.V.E.; Fassnacht, C.D.; Treu, T.; et al. Dissecting the Gravitational lens B1608+656. II. Precision Measurements of the Hubble Constant, Spatial Curvature, and the Dark Energy Equation of State. Astrophys. J. 2010, 711, 201–221.

- Bonvin, V.; Tewes, M.; Courbin, F.; Kuntzer, T.; Sluse, D.; Meylan, G. COSMOGRAIL: the COSmological MOnitoring of GRAvItational Lenses. XV. Assessing the achievability and precision of time-delay measurements. Astron. Astrophys. 2016, 585, A88.

- Bartelmann, M.; Schneider, P. Weak gravitational lensing. Phys. Rep. 2001, 340, 291–472.

- Tyson, J.A.; Wenk, R.A.; Valdes, F. Detection of systematic gravitational lens galaxy image alignments—Mapping dark matter in galaxy clusters. Astrophys. J. 1990, 349, L1–L4.

- Bacon, D.J.; Refregier, A.R.; Ellis, R.S. Detection of weak gravitational lensing by large-scale structure. Mon. Not. R. Astron. Soc. 2000, 318, 625–640.

- Kaiser, N. A New Shear Estimator for Weak-Lensing Observations. Astrophys. J. 2000, 537, 555–577.

- Seitz, S.; Schneider, P. Cluster lens reconstruction using only observed local data: An improved finite-field inversion technique. Astron. Astrophys. 1996, 305, 383–401.

- Lombardi, M.; Bertin, G. A fast direct method of mass reconstruction for gravitational lenses. Astron. Astrophys. 1999, 348, 38–42.

- Schneider, P.; King, L.; Erben, T. Cluster mass profiles from weak lensing: Constraints from shear and magnification information. Astron. Astrophys. 2000, 353, 41–56.

- Han, J.; Eke, V.R.; Frenk, C.S.; Mandelbaum, R.; Norberg, P.; Schneider, M.D.; Peacock, J.A.; Jing, Y.; Baldry, I.; Bland-Hawthorn, J.; et al. Galaxy And Mass Assembly (GAMA): the halo mass of galaxy groups from maximum-likelihood weak lensing. Mon. Not. R. Astron. Soc. 2015, 446, 1356–1379.

- Marshall, P.J.; Hobson, M.P.; Gull, S.F.; Bridle, S.L. Maximum-entropy weak lens reconstruction: improved methods and application to data. Mon. Not. R. Astron. Soc. 2002, 335, 1037–1048. [Google Scholar] [CrossRef]

- Clowe, D.; De Lucia, G.; King, L. Effects of asphericity and substructure on the determination of cluster mass with weak gravitational lensing. Mon. Not. R. Astron. Soc. 2004, 350, 1038–1048. [Google Scholar] [CrossRef]

- Corless, V.L.; King, L.J. A statistical study of weak lensing by triaxial dark matter haloes: consequences for parameter estimation. Mon. Not. R. Astron. Soc. 2007, 380, 149–161. [Google Scholar] [CrossRef]

- Hoekstra, H. A comparison of weak-lensing masses and X-ray properties of galaxy clusters. Mon. Not. R. Astron. Soc. 2007, 379, 317–330. [Google Scholar] [CrossRef]

- Zhang, Y.-Y.; Finoguenov, A.; Böhringer, H.; Kneib, J.-P.; Smith, G.P.; Kneissl, R.; Okabe, N.; Dahle, H. LoCuSS: Comparison of observed X-ray and lensing galaxy cluster scaling relations with simulations. Astron. Astrophys. 2008, 482, 451–472. [Google Scholar] [CrossRef]

- Contaldi, C.R.; Hoekstra, H.; Lewis, A. Joint Cosmic Microwave Background and Weak Lensing Analysis: Constraints on Cosmological Parameters. Phys. Rev. Lett. 2003, 22, 221303. [Google Scholar] [CrossRef] [PubMed]

- Hollenstein, L.; Sapone, D.; Crittenden, R.; Schäfer, B.M. Constraints on early dark energy from CMB lensing and weak lensing tomography. J. Cosmol. Astropart. Phys. 2009, 04, 012. [Google Scholar] [CrossRef]

- Majerotto, E.; Sapone, D.; Schäfer, B.M. Combined constraints on deviations of dark energy from an ideal fluid from Euclid and Planck. Mon. Not. R. Astron. Soc. 2016, 456, 109–118. [Google Scholar] [CrossRef]

- Clowe, D.; Bradač, M.; Gonzalez, A.H.; Markevitch, M.; Randall, S.W.; Jones, C.; Zaritsky, D. A Direct Empirical Proof of the Existence of Dark Matter. Astrophys. J. 2006, 648, L109–L113. [Google Scholar] [CrossRef]

- Amendola, L.; Appleby, S.; Bacon, D.; Baker, T.; Baldi, M.; Bartolo, N.; Blanchard, A.; Bonvin, C.; Borgani, S.; Branchini, E.; et al. Cosmology and Fundamental Physics with the Euclid Satellite. Living Rev. Relat. 2013, 16, 6. [Google Scholar]

- Chang, C.; Jarvis, M.; Jain, B.; Kahn, S.M.; Kirkby, D.; Connolly, A.; Krughoff, S.; Peng, E.-H.; Peterson, J.R. The effective number density of galaxies for weak lensing measurements in the LSST project. Mon. Not. R. Astron. Soc. 2013, 434, 2121–2135. [Google Scholar] [CrossRef]

- Heymans, C.; Heavens, A. Weak gravitational lensing: reducing the contamination by intrinsic alignments. Mon. Not. R. Astron. Soc. 2003, 339, 711–720. [Google Scholar] [CrossRef]

- Valageas, P. Source-lens clustering and intrinsic-alignment bias of weak-lensing estimators. Astron. Astrophys. 2014, 561, A53. [Google Scholar] [CrossRef]

- Mao, S. Astrophysical applications of gravitational microlensing. Res. Astron. Astrophys. 2012, 12, 947–972. [Google Scholar] [CrossRef]

- Perryman, M. The Exoplanet Handbook; Cambridge University Press: Cambridge, UK, 2014. [Google Scholar]

- Sumi, T.; Kamiya, K.; Udalski, A.; Bennett, D.P.; Bond, I.A.; Abe, F.; Botzler, C.S.; Fukui, A.; Furusawa, K.; Hearnshaw, J.B.; Itow, Y.; et al. Unbound or distant planetary mass population detected by gravitational microlensing. Nature 2011, 473, 349–352. [Google Scholar] [CrossRef] [PubMed]

- Ingrosso, G.; Calchi Novati, S.; De Paolis, F.; Jetzer, P.; Nucita, A.A.; Strafella, F. Pixel lensing as a way to detect extrasolar planets in M31. Mon. Not. R. Astron. Soc. 2009, 399, 219–228. [Google Scholar] [CrossRef]

- An, J.H.; Evans, N.W.; Kerins, E.; Baillon, P.; Calchi-Novati, S.; Carr, B.J.; Creze, M.; Giraud-Heraud, Y.; Gould, A.; Hewett, P.; et al. The Anomaly in the Candidate Microlensing Event PA-99-N2. Astrophys. J. 2004, 601, 845–857. [Google Scholar] [CrossRef]

- Dominik, M.; Sahu, K.C. Astrometric Microlensing of Stars. Astrophys. J. 2000, 534, 213–226. [Google Scholar] [CrossRef]

- Lee, C.-H.; Seitz, S.; Riffeser, A.; Bender, R. Finite-source and finite-lens effects in astrometric microlensing. Mon. Not. R. Astron. Soc. 2010, 407, 1597–1608. [Google Scholar] [CrossRef]

- Walker, M.A. Microlensed Image Motions. Astrophys. J. 1995, 453, 37–39. [Google Scholar] [CrossRef]

- Eyer, L.; Holl, B.; Pourbaix, D.; Mowlavi, N.; Siopis, C.; Barblan, F.; Evans, D.W.; North, P. The Gaia Mission. Cent. Eur. Astrophys. Bull. (CEAB) 2013, 37, 115–126. [Google Scholar]

- Paczyński, B. The Masses of Nearby Dwarfs and Brown Dwarfs with the HST. Acta Astron. 1996, 46, 291–296. [Google Scholar]

- Proft, S.; Demleitner, M.; Wambsganss, J. Prediction of astrometric microlensing events during the Gaia mission. Astron. Astrophys. 2011, 536, A50. [Google Scholar] [CrossRef]

- Ingrosso, G.; Calchi Novati, S.; De Paolis, F.; Jetzer, P.; Nucita, A.A.; Strafella, F.; Zakharov, A.F. Polarization in microlensing events towards the Galactic bulge. Mon. Not. R. Astron. Soc. 2012, 426, 1496–1506. [Google Scholar] [CrossRef]

- Chandrasekhar, S. Radiative Transfer; Clarendon Press: Oxford, UK, 1950. [Google Scholar]

- Simmons, J.F.L.; Bjorkman, J.E.; Ignace, R.; Coleman, I.J. Polarization from microlensing of spherical circumstellar envelopes by a point lens. Mon. Not. R. Astron. Soc. 2002, 336, 501–510. [Google Scholar] [CrossRef]

- Ingrosso, G.; Calchi Novati, S.; De Paolis, F.; Jetzer, P.; Nucita, A.A.; Strafella, F. Measuring polarization in microlensing events. Mon. Not. R. Astron. Soc. 2015, 446, 1090–1097. [Google Scholar] [CrossRef]

- Henderson, C.B.; Gaudi, B.S.; Han, C.; Skowron, J.; Penny, M.T.; Nataf, D.; Gould, A.P. Optimal Survey Strategies and Predicted Planet Yields for the Korean Microlensing Telescope Network. Astrophys. J. 2014, 794, 52. [Google Scholar] [CrossRef]

- Penny, M.T.; Kerins, E.; Rattenbury, N.; Beaulieu, J.-P.; Robin, A.C.; Mao, S.; Batista, V.; Calchi Novati, S.; Cassan, A.; Fouque, P.; et al. ExELS: An exoplanet legacy science proposal for the ESA Euclid mission - I. Cold exoplanets. Mon. Not. R. Astron. Soc. 2013, 434, 2–22. [Google Scholar] [CrossRef]

- Yee, J.C.; Albrow, M.; Barry, R.K.; Bennett, D.; Bryden, G.; Chung, S.-J.; Gaudi, B.S.; Gehrels, N.; Gould, A.; Penny, M.T.; et al. NASA ExoPAG Study Analysis Group 11: Preparing for the WFIRST Microlensing Survey. 2014; arXiv:1409.2759. [Google Scholar]

- Heyrovský, D.; Sasselov, D. Detecting Stellar Spots by Gravitational Microlensing. Astrophys. J. 2000, 529, 69–76. [Google Scholar] [CrossRef]

- Hendry, M.A.; Bryce, H.M.; Valls-Gabaud, D. The microlensing signatures of photospheric starspots. Mon. Not. R. Astron. Soc. 2002, 335, 539–549. [Google Scholar] [CrossRef]

- Giordano, M.; Nucita, A.A.; De Paolis, F.; Ingrosso, G. Starspot induced effects in microlensing events with rotating source star. Mon. Not. R. Astron. Soc. 2015, 453, 2017–2021. [Google Scholar] [CrossRef]

- Kim, S.-L.; Park, B.-G.; Lee, C.-U.; Yuk, I.-S.; Han, C.; O’Brien, T.; Gould, A.; Lee, J.W.; Kim, D.-J. Technical specifications of the KMTNet observation system. Proc. SPIE 2010. [Google Scholar] [CrossRef]

- Sajadian, S. Detecting stellar spots through polarimetric observations of microlensing events in caustic-crossing. Mon. Not. R. Astron. Soc. 2015, 452, 2587–2596. [Google Scholar] [CrossRef]

- Dominik, M. The binary gravitational lens and its extreme cases. Astron. Astrophys. 1999, 349, 108–125. [Google Scholar]

- An, J.H. Gravitational lens under perturbations: symmetry of perturbing potentials with invariant caustics. Mon. Not. R. Astron. Soc. 2005, 356, 1409–1428. [Google Scholar] [CrossRef]

- Griest, K.; Safizadeh, N. The Use of High-Magnification Microlensing Events in Discovering Extrasolar Planets. Astrophys. J. 1998, 500, 37–50. [Google Scholar] [CrossRef]

- Penny, M.T.; Kerins, E.; Mao, S. Rapidly rotating lenses: Repeating features in the light curves of short-period binary microlenses. Mon. Not. R. Astron. Soc. 2011, 417, 2216–2229. [Google Scholar] [CrossRef]

- Nucita, A.A.; Giordano, M.; De Paolis, F.; Ingrosso, G. Signatures of rotating binaries in microlensing experiments. Mon. Not. R. Astron. Soc. 2014, 438, 2466–2473. [Google Scholar] [CrossRef]

- Chandrasekhar, S. The Mathematical Theory of Black Holes; Oxford University Press: Oxford, UK, 1983. [Google Scholar]

- Holz, D.E.; Wheeler, J.A. Retro-MACHOs: pi in the Sky. Astrophys. J. 2002, 578, 330–334. [Google Scholar] [CrossRef]

- De Paolis, F.; Geralico, A.; Ingrosso, G.; Nucita, A.A. The black hole at the galactic center as a possible retro-lens for the S2 orbiting star. Astron. Astrophys. 2003, 409, 809–812. [Google Scholar] [CrossRef]

- Gillessen, S.; Eisenhauer, F.; Trippe, S.; Alexander, T.; Genzel, R.; Martins, F.; Ott, T. Monitoring Stellar Orbits Around the Massive Black Hole in the Galactic Center. Astrophys. J. 2009, 692, 1075–1109. [Google Scholar] [CrossRef]

- Dokuchaev, V.I. Spin and mass of the nearest supermassive black hole. Gen. Rel. Gravit. 2014, 46, 1832. [Google Scholar] [CrossRef]

- Dokuchaev, V.I.; Eroshenko, Y.N. Physical Laboratory at the center of the Galaxy. Phys. Uspekhi 2015, 58, 772–784. [Google Scholar] [CrossRef]

- Iorio, L. Perturbed stellar motions around the rotating black hole in Sgr A* for a generic orientation of its spin axis. Phys. Rev. D 2011, 84, 124001. [Google Scholar] [CrossRef]

- Zakharov, A.F.; Nucita, A.A.; De Paolis, F.; Ingrosso, G. Apoastron shift constraints on dark matter distribution at the Galactic Center. Phys. Rev. D 2007, 76, 062001. [Google Scholar] [CrossRef]

- Zakharov, A.F.; De Paolis, F.; Ingrosso, G.; Nucita, A.A. Shadows as a tool to evaluate the black hole parameters and a dimension of spacetime. New Astron. Rev. 2012, 56, 64–73. [Google Scholar] [CrossRef]

- Falcke, H.; Markoff, S.B. Toward the event horizon—The supermassive black hole in the galactic center. Class. Quantum Gravity 2013, 30, 244003. [Google Scholar] [CrossRef]

- Zakharov, A.F.; Borka, D.; Borka Jovanović, V.; Jovanović, P. Constraints on R^n gravity from precession of orbits of S2-like stars: A case of a bulk distribution of mass. Adv. Space Res. 2014, 54, 1108–1112. [Google Scholar] [CrossRef]

- Zakharov, A.F.; Nucita, A.A.; De Paolis, F.; Ingrosso, G. Solar system constraints on R^n gravity. Phys. Rev. D 2006, 74, 107101. [Google Scholar] [CrossRef]

- Nucita, A.A.; De Paolis, F.; Ingrosso, G.; Qadir, A.; Zakharov, A.F. Sgr A*: A Laboratory to Measure the Central Black Hole and Stellar Cluster Parameters. Publ. Astron. Soc. Pac. 2007, 119, 349–359. [Google Scholar] [CrossRef]

- Plewa, P.M.; Gillessen, S.; Eisenhauer, F.; Ott, T.; Pfuhl, O.; George, E.; Dexter, J.; Habibi, M.; Genzel, R.; Reid, M.J.; et al. Pinpointing the near-infrared location of Sgr A* by correcting optical distortion in the NACO imager. Mon. Not. R. Astron. Soc. 2015, 453, 3234–3244. [Google Scholar] [CrossRef]

- De Paolis, F.; Ingrosso, G.; Nucita, A.A.; Qadir, A.; Zakharov, A.F. Estimating the parameters of the Sgr A* black hole. Gen. Rel. Gravit. 2011, 43, 977–988. [Google Scholar] [CrossRef]

- Zakharov, A.F.; De Paolis, F.; Ingrosso, G.; Nucita, A.A. Direct measurements of black hole charge with future astrometrical missions. Astron. Astrophys. 2005, 442, 795–799. [Google Scholar] [CrossRef]

- Bozza, V.; Mancini, L. Gravitational Lensing by Black Holes: A Comprehensive Treatment and the Case of the Star S2. Astrophys. J. 2004, 611, 1045–1053. [Google Scholar] [CrossRef]

- Doeleman, S.S.; Weintroub, J.; Rogers, A.E.E.; Plambeck, R.; Freund, R.; Tilanus, R.P.J.; Friberg, P.; Ziurys, L.M.; Moran, J.M.; Corey, B.; et al. Event-horizon-scale structure in the supermassive black hole candidate at the Galactic Centre. Nature 2008, 455, 78–80. [Google Scholar] [CrossRef] [PubMed]

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).