1. Introduction

The Hubble constant ($H_0$) is one of the most fundamental parameters in cosmology, determining the expansion rate of the Universe today and serving as a key calibrator for cosmic distance measurements, e.g., [1]. It is a local quantity measured at redshift $z = 0$, establishing the size and age scale of the Universe and linking redshift to time and distance. During the past decade, a significant and intriguing discrepancy has emerged, commonly referred to as the “Hubble tension”. This tension lies between the predictions for $H_0$ derived from early Universe probes, based on the standard Lambda Cold Dark Matter (ΛCDM) cosmological model, and those obtained directly from local late Universe observations [2, 3, 4]. This discrepancy has persisted despite continuous improvements in observational techniques and has reached a level of statistical significance that challenges the ΛCDM model [5, 6, 7].

In this work, we specifically focus on the persistent discrepancy in measurements of $H_0$ obtained from early Universe probes, exemplified by the Cosmic Microwave Background (CMB) data from the Planck collaboration, and those derived from local late Universe observations, primarily through Type Ia Supernovae (SNIa) distance ladder measurements from the SH0ES collaboration. These two leading methods provide the primary observational input for our analysis of the Hubble tension, allowing us to investigate its underlying components.

Currently, the most precise predictions for $H_0$ from the CMB by the Planck collaboration in combination with the South Pole and Atacama Cosmology Telescopes yield

km/s/Mpc [

8], while distance ladder measurements based on Type Ia supernovae (SNIa) calibrated with Cepheid variables from the SH0ES collaboration obtain

km/s/Mpc [

5]. This difference of ∼5.94 km/s/Mpc corresponds to a statistical significance of approximately

when uncertainties are taken at face value [

9]. The robustness of this tension is further highlighted by various other independent probes that provide measurements across this spectrum: Baryon Acoustic Oscillations (BAO) combined with Big Bang Nucleosynthesis yield

km/s/Mpc [

10], largely consistent with CMB values. Megamaser-based measurements give

km/s/Mpc [

11], aligned with the distance ladder. Meanwhile, cosmic chronometers report intermediate values around

km/s/Mpc [

12].

The persistent nature of this tension has led to numerous investigations of potential systematic errors in early or late universe measurements [

13,

14]. Recent analyses using alternative distance calibrators such as the Tip of the Red Giant Branch (TRGB) have yielded intermediate values of

km/s/Mpc [

15], with more recent works like Jensen et al. (2025) [

16] providing additional constraints from TRGB and Surface Brightness Fluctuation (SBF) methods. These results suggest possible systematics in the Cepheid calibration. In contrast, studies exploring modifications to the CMB analysis find that standard extensions to ΛCDM do not readily resolve the tension [17]. The launch of the James Webb Space Telescope (JWST) has opened a new chapter in the measurement of extragalactic distances and $H_0$, offering new capabilities to test the strongest observational evidence for the tension, with results indicating that errors in the photometric measurements of Cepheids along the distance ladder do not contribute significantly to it [18].

Beyond purely systematic considerations, the tension may be affected by what we term “information loss errors”, subtle biases arising from the simplified models used to interpret complex cosmological data. When observations are compressed into a single $H_0$ value, assumptions about other cosmological parameters can introduce model-dependent biases that are not fully captured in the reported uncertainties [

19,

20]. Such effects may artificially inflate the apparent tension between different probes. Furthermore, various theoretical models have been proposed as potential solutions, including early dark energy [

21], modified gravity [

22], and new physics in the neutrino sector [

23]. However, many of these models struggle to simultaneously accommodate all cosmological observations without introducing new tensions in other parameters [

24]. Thus, a critical question emerges: How much of the observed Hubble tension represents a genuine physical discrepancy requiring new physics, and how much might be attributed to systematic errors or information loss in the analysis pipeline? Addressing this question requires a framework that can decompose the observed tension into its constituent components while accounting for the possibility that quoted uncertainties may be underestimated. The broader landscape of cosmological tensions and proposed solutions is comprehensively reviewed in the recent literature, including the CosmoVerse white paper (2025) [

25], which provides a valuable context for understanding the challenges posed to the standard cosmological model.

In this paper, we develop a Bayesian hierarchical model that explicitly parameterizes three contributions to the observed tension: (1) standard measurement errors, (2) information loss errors arising from model simplifications, and (3) real physical tension that might indicate new physics beyond ΛCDM. By simultaneously analyzing multiple cosmological probes, CMB, SNIa, BAO, and H(z) measurements, we can assess the robustness of the Hubble tension and quantify the probability that it represents a genuine challenge to the standard cosmological model. Our approach builds on previous Bayesian treatments of cosmological tensions [

26,

27,

28] but extends them by explicitly modeling information loss and allowing probe-specific inflation factors that account for potential underestimation of uncertainties. This methodology enables us to assess the statistical significance of the Hubble tension in a framework that accommodates realistic assessments of both statistical and systematic uncertainties. The framework does not propose new physics solutions; instead, it serves as a diagnostic tool that decomposes the discrepancy into measurement error, information loss arising from parameter-space projection, and genuine physical tension, highlighting in particular the portion that cannot be explained by known statistical and information-theoretic effects.

3. The Total Constraining Information

In statistical analysis, information loss refers to the reduction in the ability to make precise inferences about parameters of interest due to factors in the data collection, processing, or analysis stages. In cosmology, it is a critical concern because of the limited observational data available and the complexity of cosmological models. For instance, in the analysis of the Cosmic Microwave Background (CMB), the compression of full-sky maps into power spectra results in some information loss, particularly regarding non-Gaussian features. A concept related to information loss (IL) is total constraining information (TCI), which refers to the total amount of information available in a dataset that can be used to constrain or determine model parameters. The concept is closely related to the Fisher information matrix, with the determinant of the Fisher matrix serving as a measure of total constraining information. Mathematically, it can be understood as the volume of parameter space excluded by observations: the greater the constraining information, the smaller the allowed volume in the parameter space. The Fisher matrix represents the total information theoretically available:

$$F_{ij} = -\left\langle \frac{\partial^2 \ln L}{\partial \theta_i \, \partial \theta_j} \right\rangle$$

where $L$ is the likelihood function and $\theta_i$ are the cosmological parameters. We modify the Fisher matrix to include information loss effects:

$$\tilde{F}_{ij} = \alpha_{ij} F_{ij}$$

This equation introduces the concept of information loss directly within the Fisher Information Matrix (FIM) framework. Here, $\alpha_{ij}$ are the loss coefficients, which are elements of a matrix $\mathbf{A}$ ($0 \le \alpha_{ij} \le 1$). They represent the fraction of information effectively extracted or preserved from the observations for a given pair of parameters $(\theta_i, \theta_j)$. The choice of direct multiplication, where each element $F_{ij}$ of the original FIM is scaled by a corresponding $\alpha_{ij}$, allows for a precise, element-wise attenuation of information. This functional form directly models the idea that not all information contained within the theoretical FIM might be realized or extracted from observational data. These loss coefficients are inversely related to the variance inflation factors ($f$) introduced in Section 2.3. The physical reason for this inverse relationship stems from the fundamental principle that information and uncertainty (variance) are inversely proportional in statistical estimation. If $\alpha$ quantifies the fraction of information retained, then a factor of $1/\alpha$ naturally represents the inflation of variance due to this information reduction. Specifically, for a single parameter, $f = 1/\alpha$, indicating that a reduction in information (smaller $\alpha$) leads to a proportional increase in uncertainty (larger $f$).

The TCI is a fundamental concept in our analysis, defined as the logarithm of the determinant of the Fisher matrix. With the introduction of loss coefficients, the TCI is expressed as:

$$\mathrm{TCI} = \log \det \tilde{\mathbf{F}}, \qquad \tilde{F}_{ij} = \alpha_{ij} F_{ij}$$

where $\alpha_{ij}$ are the elements of the matrix $\mathbf{A}$ of loss coefficients and $\mathbf{F}$ is the original Fisher matrix. If the loss coefficients $\alpha_{ij}$ are assumed to primarily affect the diagonal elements of the Fisher matrix, or if $\mathbf{A}$ is a diagonal matrix whose non-zero elements are the $\alpha_{ii}$ loss coefficients applied to the corresponding diagonal elements of $\mathbf{F}$, the expression can be approximated as:

$$\mathrm{TCI} \approx \mathrm{TCI}_0 + \sum_i \log \alpha_{ii}$$

Here, $\mathrm{TCI}_0 = \log \det \mathbf{F}$ represents the “ideal” or theoretical TCI without considering information loss. The term $\sum_i \log \alpha_{ii}$ represents the reduction in TCI due to information loss, specifically from the diagonal components. As $0 < \alpha_{ii} \le 1$, the term $\sum_i \log \alpha_{ii}$ is always non-positive, effectively reducing the TCI compared to the ideal case. We clarify that this decomposition is strictly valid if the scaling matrix $\mathbf{A}$ is diagonal, which is a common simplification when modeling total information, or if the off-diagonal effects on the determinant are negligible for practical purposes. Our MCMC framework directly estimates the $\mathbf{A}$ matrix elements, allowing for more general cases.
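As a numerical illustration of the TCI expressions above, the following sketch computes the log-determinant of a Fisher matrix before and after element-wise scaling by loss coefficients (called `alpha` here). The 2×2 Fisher matrix, the coefficient values, and the separable mask $\alpha_{ij} = \sqrt{\alpha_{ii}\alpha_{jj}}$ are assumptions chosen for illustration and are not taken from the analysis. With this separable mask the element-wise product equals $\mathbf{D}\mathbf{F}\mathbf{D}$ with $\mathbf{D} = \mathrm{diag}(\sqrt{\alpha_{ii}})$, so the decomposition into an ideal term plus $\sum_i \log \alpha_{ii}$ holds exactly; for a general mask it is only an approximation.

```python
import numpy as np

def tci(fisher):
    """Total constraining information: log-determinant of a Fisher matrix."""
    sign, logdet = np.linalg.slogdet(fisher)
    if sign <= 0:
        raise ValueError("Fisher matrix must be positive definite")
    return logdet

# Hypothetical 2x2 Fisher matrix for two correlated parameters.
F = np.array([[4.0, 1.0],
              [1.0, 2.0]])

# Hypothetical diagonal loss coefficients (fraction of information kept).
alpha_diag = np.array([0.8, 0.5])

# Assumed separable mask: alpha_ij = sqrt(alpha_ii * alpha_jj).
s = np.sqrt(alpha_diag)
A = np.outer(s, s)

tci_ideal = tci(F)                 # log det F
tci_lossy = tci(A * F)             # '*' is the element-wise (Hadamard) product
tci_approx = tci_ideal + np.sum(np.log(alpha_diag))

print(f"ideal TCI  = {tci_ideal:.4f}")
print(f"lossy TCI  = {tci_lossy:.4f}")
print(f"decomposed = {tci_approx:.4f}")
```

Because every coefficient is at most 1, the correction term is non-positive and the lossy TCI never exceeds the ideal one in this setup.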

Relating cosmological parameters to information loss coefficients is a crucial aspect of this analysis. The starting point is the Fisher Information Matrix (FIM). For cosmological parameters $\theta_i$, the FIM elements are given by:

$$F_{ij} = -\left\langle \frac{\partial^2 \ln L}{\partial \theta_i \, \partial \theta_j} \right\rangle$$

where $\ln L$ is the log-likelihood of the data given the parameters. The inverse of the FIM provides a lower bound on the covariance matrix of the parameter estimates (Cramér–Rao bound). This indicates the best possible constraints on cosmological parameters. For a combined analysis, we construct a total Fisher matrix:

$$\mathbf{F}_{\mathrm{total}} = \sum_k \mathbf{F}_k$$

where each $\mathbf{F}_k$ is the Fisher matrix for the respective probe $k$. We can now introduce loss coefficients for each probe and parameter:

$$\tilde{\mathbf{F}}_{\mathrm{total}} = \sum_k \mathbf{A}_k \circ \mathbf{F}_k$$

where ∘ denotes the Hadamard product (element-wise). This element-wise multiplication is specifically chosen because the matrices $\mathbf{A}_k$ are designed to act as “attenuation masks” on the original Fisher matrices. Each element $\alpha_{k,ij}$ within $\mathbf{A}_k$ directly scales the corresponding $(i,j)$-th element of the Fisher matrix $\mathbf{F}_k$, allowing for a granular, heterogeneous reduction of information across different parameter correlations. This contrasts with standard matrix multiplication, which would imply a linear transformation of the parameter space, and is not what our model intends to represent for information loss.
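The combination of probe-level Fisher matrices with attenuation masks can be sketched numerically as follows. All matrices below are hypothetical two-parameter examples (the first parameter standing in for $H_0$), not values from the analysis. The masks were chosen to be positive semi-definite, which by the Schur product theorem keeps each masked Fisher matrix positive semi-definite.

```python
import numpy as np

# Hypothetical Fisher matrices for two probes over (H0, one nuisance parameter).
F_cmb = np.array([[9.0, 3.0],
                  [3.0, 4.0]])
F_sn = np.array([[1.0, 0.2],
                 [0.2, 0.5]])

# Hypothetical attenuation masks with entries in (0, 1].
A_cmb = np.array([[0.9, 0.7],
                  [0.7, 0.7]])
A_sn = np.array([[0.6, 0.5],
                 [0.5, 0.9]])

# Ideal combination: Fisher matrices of independent probes add.
F_total = F_cmb + F_sn

# Lossy combination: element-wise (Hadamard) masking before summation.
F_lossy = A_cmb * F_cmb + A_sn * F_sn

# Marginalized variance of the first parameter is the (0, 0) entry of the inverse.
var_ideal = np.linalg.inv(F_total)[0, 0]
var_lossy = np.linalg.inv(F_lossy)[0, 0]

# Ratio of marginalized variances, i.e. an effective variance inflation factor.
inflation = var_lossy / var_ideal
print(f"effective variance inflation for parameter 0: {inflation:.3f}")
```

In this example the masked combination yields a larger marginalized variance than the ideal one, which is the behavior the loss coefficients are meant to encode.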

This approach allows us to maximize the information content from diverse observational probes while quantifying and accounting for potential information loss. By carefully modeling the interplay between different datasets and their associated systematics, we can obtain robust constraints on cosmological parameters and gain insights into the nature of information degradation in cosmology. In particular, the Hubble tension refers to the discrepancy between measurements of the Hubble constant ($H_0$) derived from early Universe probes and those from late Universe probes. Understanding this division is crucial for analyzing the tension and applying our Fisher matrix approach with information loss coefficients.

3.1. Generation of Information Loss Coefficients

The likelihood function is modified to include the information loss coefficients $\alpha_{ij}$, whose priors are Beta distributions, Beta($a$, $b$), where $a$ and $b$ are hyperparameters controlling the shape of the distribution. The coefficients $\alpha_{ij}$ represent the fraction of theoretical information effectively extracted from the observations. They range from 0 to 1, where 1 means no information loss and 0 means total information loss. We use a Markov chain Monte Carlo (MCMC) algorithm (e.g., Metropolis–Hastings or Hamiltonian Monte Carlo) to estimate the $\alpha_{ij}$ values. The MCMC explores the parameter space, including both the cosmological parameters and $\alpha_{ij}$.

The sampling process follows two basic steps. First, we start with initial values for $\alpha_{ij}$ (these can be prior values or arbitrary values between 0 and 1). Then, at each MCMC iteration:

Propose new values for $\alpha_{ij}$.

Calculate the modified Fisher matrix $\tilde{F}_{ij} = \alpha_{ij} F_{ij}$.

Calculate the likelihood using this modified matrix.

Accept or reject the new values according to the MCMC acceptance ratio.

It is important to note that the likelihood function is modified to include $\alpha$:

$$\mathcal{L}(\theta, \alpha) \propto \exp\left[-\frac{1}{2}\,(\theta - \theta_{\mathrm{fid}})^{T}\,\tilde{\mathbf{F}}(\alpha)\,(\theta - \theta_{\mathrm{fid}})\right]\pi(\alpha)$$

where $\theta$ are the cosmological parameters, $\theta_{\mathrm{fid}}$ are fiducial values, $\tilde{\mathbf{F}}(\alpha)$ is the modified Fisher matrix, and $\pi(\alpha)$ is the prior on $\alpha$. After MCMC, we analyze the posterior distribution of $\alpha$ and calculate means, medians, and credible intervals for each $\alpha_{ij}$.
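The four sampling steps described above can be sketched with a random-walk Metropolis–Hastings loop. This is a minimal illustration under stated assumptions, not the sampler used in the analysis: the Fisher matrix, the parameter offset from the fiducial point, the Beta hyperparameters, and the separable form of the loss mask are all hypothetical. The cosmological parameters are held fixed so that only the loss coefficients are sampled, and the Gaussian likelihood is assumed to include its normalization term (half the log-determinant of the modified Fisher matrix).

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical inputs (not from the analysis).
F = np.array([[4.0, 1.0],
              [1.0, 2.0]])          # theoretical Fisher matrix
delta = np.array([0.6, -0.4])       # theta - theta_fid, held fixed here
a, b = 5.0, 2.0                     # Beta(a, b) prior hyperparameters

def log_posterior(alpha):
    """Unnormalized log posterior of the diagonal loss coefficients."""
    if np.any(alpha <= 0.0) or np.any(alpha >= 1.0):
        return -np.inf              # outside the support of the Beta prior
    s = np.sqrt(alpha)
    F_mod = np.outer(s, s) * F      # assumed separable element-wise mask
    _, logdet = np.linalg.slogdet(F_mod)
    log_like = 0.5 * logdet - 0.5 * delta @ F_mod @ delta
    log_prior = np.sum((a - 1.0) * np.log(alpha) + (b - 1.0) * np.log1p(-alpha))
    return log_like + log_prior

def metropolis(n_steps=2000, step=0.1):
    alpha = np.array([0.5, 0.5])    # arbitrary start inside (0, 1)
    lp = log_posterior(alpha)
    samples = np.empty((n_steps, alpha.size))
    accepted = 0
    for t in range(n_steps):
        proposal = alpha + step * rng.normal(size=alpha.size)   # 1. propose
        lp_new = log_posterior(proposal)                        # 2-3. modified Fisher + likelihood
        if np.log(rng.uniform()) < lp_new - lp:                 # 4. accept or reject
            alpha, lp = proposal, lp_new
            accepted += 1
        samples[t] = alpha
    return samples, accepted / n_steps

samples, rate = metropolis()
print("posterior mean of alpha:", samples[500:].mean(axis=0))
print("acceptance rate:", rate)
```

The symmetric Gaussian proposal means the acceptance ratio reduces to the ratio of posterior densities; proposals outside the unit interval are rejected automatically because their log posterior is minus infinity.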

3.2. Separating Measurement Error and Information Loss in Hubble Tension

We propose a Bayesian hierarchical model to separate the contributions of measurement error and information loss to the observed Hubble tension, as defined in Equation (1). To illustrate our methodology, in this work we focus on the well-known tension between the CMB and SNIa measurements of $H_0$. Future work will extend this framework to incorporate a broader range of cosmological datasets.

The variance inflation factors $f_{\mathrm{CMB}}$ and $f_{\mathrm{SN}}$ are defined as:

$$f_{\mathrm{CMB}} = \frac{\sigma^2_{\mathrm{total,CMB}}}{\sigma^2_{\mathrm{meas,CMB}}}, \qquad f_{\mathrm{SN}} = \frac{\sigma^2_{\mathrm{total,SN}}}{\sigma^2_{\mathrm{meas,SN}}}$$

These equations define the variance inflation factor for the CMB and SNIa measurements. The functional form, a simple ratio of variances, directly quantifies the extent to which the total uncertainty ($\sigma_{\mathrm{total}}$) exceeds the uncertainty attributed solely to conventional measurement errors ($\sigma_{\mathrm{meas}}$). This empirical definition captures any additional variance, including that arising from information loss due to parameter marginalization or unmodeled systematic effects, that is not captured by the reported measurement uncertainty. In other words, the variance inflation factors quantify how much the variance of $H_0$ increases when we account for the full parameter space compared to considering $H_0$ in isolation.

The information loss component ($\sigma_{\mathrm{IL}}$) is calculated as the standard deviation of the additional variance not accounted for by measurement errors, consistent with the rigorous separation in Section 2.4:

$$\sigma_{\mathrm{IL}} = \sqrt{(f_{\mathrm{CMB}} - 1)\,\sigma^2_{\mathrm{meas,CMB}} + (f_{\mathrm{SN}} - 1)\,\sigma^2_{\mathrm{meas,SN}}}$$

This equation calculates the standard deviation of the information loss component $\sigma_{\mathrm{IL}}$. Its functional form is derived directly from the variance decomposition presented in Section 2.4. The term $(f - 1)\,\sigma^2_{\mathrm{meas}}$ represents the additional variance beyond the reported measurement error that is attributable to information loss for each probe. By summing these additional variance contributions for CMB and SNIa and taking the square root, we obtain the combined standard deviation of the information loss. This quadrature sum is appropriate because it assumes that the information loss contributions from the two distinct probes are independent sources of variance, so that their variances add.
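The quadrature sum described above can be written as a short function. The numerical inputs in the usage lines are placeholders chosen only to exercise the arithmetic; they are not the measurement uncertainties or inflation factors estimated in this work, and the function and argument names are illustrative.

```python
import math

def information_loss_sigma(sigma_meas_cmb, sigma_meas_sn, f_cmb, f_sn):
    """Standard deviation of the information loss component.

    Each probe contributes the extra variance (f - 1) * sigma_meas**2,
    and the two independent contributions are added in quadrature.
    """
    if f_cmb < 1.0 or f_sn < 1.0:
        raise ValueError("variance inflation factors must be >= 1")
    extra_variance = ((f_cmb - 1.0) * sigma_meas_cmb ** 2
                      + (f_sn - 1.0) * sigma_meas_sn ** 2)
    return math.sqrt(extra_variance)

# Placeholder inputs (km/s/Mpc for the sigmas), for illustration only.
sigma_il = information_loss_sigma(0.5, 1.0, f_cmb=1.5, f_sn=1.2)
print(f"sigma_IL = {sigma_il:.3f} km/s/Mpc")

# With no inflation (f = 1 for both probes) the component vanishes.
print(information_loss_sigma(0.5, 1.0, f_cmb=1.0, f_sn=1.0))
```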

The real tension $T_{\mathrm{real}}$ is then estimated as a parameter within the Bayesian framework, representing the true underlying discrepancy. Consistent with the observational data outlined in Section 1, our analysis uses the following key measurements of the Hubble constant:

km/s/Mpc from Planck 2018 data.

km/s/Mpc from the SH0ES (Supernovae, $H_0$, for the Equation of State of dark energy) team.

The combination of these values yields an observed tension of km/s/Mpc. Our aim is to verify how much uncertainty and loss of information contribute to this value.

3.3. Model Specification

Bayesian analysis provides a natural framework for decomposing the Hubble tension, allowing for the explicit incorporation of uncertainties at multiple levels of the problem and direct quantification of the relative contributions from different sources of variance. Our Bayesian hierarchical model formally characterizes the observed tension between the CMB and SNIa datasets as arising from three fundamental components: the real physical tension ($T_{\mathrm{real}}$), measurement error ($\sigma_{\mathrm{meas}}$), and information loss due to projection from multidimensional parameter spaces ($\sigma_{\mathrm{IL}}$). The hierarchical structure of the model recognizes that these components are not directly observed but emerge from a generative process involving variance inflation factors for each method ($f_{\mathrm{CMB}}$ and $f_{\mathrm{SN}}$).

At the top level of the hierarchy, we model the observed difference between the $H_0$ estimates as a quantity arising from an underlying generative process. At the intermediate level, this difference is decomposed into components with distinct physical and statistical meanings. At the lower level, the variance inflation factors, which capture the covariance structure of the full parameter spaces, are treated as parameters to be estimated from the data rather than as fixed values. A crucial advantage of this approach is that uncertainty in the estimation of variance inflation factors is automatically propagated to our conclusions about the magnitude of the real tension. Additionally, the Bayesian model allows for a direct interpretation of credible intervals for the real tension and provides a foundation for formal model comparisons should different structures for tension decomposition be considered.

The complete mathematical formulation of the model begins with the specification of prior distributions for all parameters. These priors represent our knowledge or beliefs about the parameters before observing the specific data in this analysis. The choice of these prior distributions is guided by both theoretical considerations and previous empirical results related to the structure of cosmological parameter spaces.

3.3.1. Priors

The choice of prior distributions is a critical step in Bayesian analysis, as they encode our prior knowledge or assumptions about the parameters before observing the data. We carefully selected our priors to balance weak informativeness with physical consistency:

For the true values of the Hubble constant, $H_{0,\mathrm{CMB}}^{\mathrm{true}}$ and $H_{0,\mathrm{SN}}^{\mathrm{true}}$, we employ Normal distributions ($\mathcal{N}$):

This choice is standard for continuous parameters that are expected to be centered on a specific value and possess a quantifiable uncertainty. The Normal distribution is the maximum-entropy distribution for a given mean and variance, making it a flexible choice. We set these as weakly informative priors by centering them on the observed values of

(e.g.,

km/s/Mpc) but assigning sufficiently wide standard deviations (e.g.,

much larger than the observed uncertainty) to ensure that the data primarily drives the posterior inference, rather than the prior. This approach allows the MCMC sampler to efficiently explore the parameter space while maintaining physical realism.

For the information loss coefficients, we adopt Beta priors. The Beta distribution is a continuous probability distribution defined on the interval $[0, 1]$, which makes it the natural choice for parameters that represent proportions, fractions, or probabilities. Since the coefficients $\alpha$ represent the “fraction of theoretical information effectively extracted from observations” (i.e., $0 \le \alpha \le 1$), the Beta distribution is well suited. Its two positive shape parameters, $a$ and $b$, provide significant flexibility to model various prior beliefs about the distribution of information loss, ranging from uniform (e.g., Beta(1,1)) to skewed toward higher (e.g., Beta(5,1)) or lower (e.g., Beta(1,5)) values. This flexibility allows us to specify informative but relatively wide priors, as discussed in detail in the Bayesian implementation section (Section 3.5), allowing the data to primarily inform the magnitude of information loss.
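To make the three example shapes concrete, the following sketch evaluates the closed-form mean and standard deviation of a Beta($a$, $b$) distribution for the cases named above. The helper names are illustrative; the formulas are the standard Beta moments.

```python
def beta_mean(a, b):
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)

def beta_std(a, b):
    """Standard deviation of a Beta(a, b) distribution."""
    return (a * b / ((a + b) ** 2 * (a + b + 1))) ** 0.5

for a, b in [(1, 1), (5, 1), (1, 5)]:
    print(f"Beta({a},{b}): mean={beta_mean(a, b):.3f}, std={beta_std(a, b):.3f}")
# Beta(1,1): mean=0.500, std=0.289
# Beta(5,1): mean=0.833, std=0.141
# Beta(1,5): mean=0.167, std=0.141
```

A prior such as Beta(5,1) therefore concentrates mass near 1 (little information loss) while still leaving substantial spread for the data to move the posterior.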

3.3.2. Likelihood

The likelihood function is built from the generative model for our observations, that is, how the observed measurements of $H_0$ are generated as a function of the true values and their respective uncertainties:

$$H_{0,\mathrm{CMB}}^{\mathrm{obs}} \sim \mathcal{N}\!\left(H_{0,\mathrm{CMB}}^{\mathrm{true}},\ \frac{\sigma_{\mathrm{CMB}}^2}{\alpha_{\mathrm{CMB}}}\right), \qquad H_{0,\mathrm{SN}}^{\mathrm{obs}} \sim \mathcal{N}\!\left(H_{0,\mathrm{SN}}^{\mathrm{true}},\ \frac{\sigma_{\mathrm{SN}}^2}{\alpha_{\mathrm{SN}}}\right)$$

These equations specify the generative model for the observed CMB and SNIa measurements of $H_0$. The use of a Normal (Gaussian) distribution is a standard assumption for modeling observational errors, often justified by the Central Limit Theorem when multiple small error sources contribute to the total uncertainty. The important aspect of its functional form lies in the variance term, which explicitly incorporates the information loss coefficients $\alpha_{\mathrm{CMB}}$ and $\alpha_{\mathrm{SN}}$ into the precision of the observed data. Since $\alpha$ is a fraction ($0 < \alpha \le 1$), dividing by it inflates the reported variances $\sigma_{\mathrm{CMB}}^2$ and $\sigma_{\mathrm{SN}}^2$. A smaller $\alpha$ (indicating greater information loss) leads to a larger variance, implying a less precise observed measurement. This directly reflects how the “loss of information” manifests in the observational data, making the observed value effectively less constrained.
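The effect of dividing the reported variance by the loss coefficient can be illustrated with a small log-likelihood function. This is a sketch of the Gaussian generative model described above for a single probe; the function names and the numerical inputs are hypothetical and are not the measurements used in the analysis.

```python
import math

def inflated_sigma(sigma_reported, alpha):
    """Effective standard deviation after dividing the variance by alpha."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    return sigma_reported / math.sqrt(alpha)

def log_likelihood(h0_obs, h0_true, sigma_reported, alpha):
    """Gaussian log-likelihood of one observed H0 value with inflated variance."""
    var = inflated_sigma(sigma_reported, alpha) ** 2
    return -0.5 * (math.log(2.0 * math.pi * var) + (h0_obs - h0_true) ** 2 / var)

# Placeholder numbers: a reported 1.0 km/s/Mpc error with 25% of the
# information retained behaves like a 2.0 km/s/Mpc error.
print(inflated_sigma(1.0, 0.25))

# The same 3 km/s/Mpc offset is penalized less once the variance is inflated.
print(log_likelihood(73.0, 70.0, 1.0, alpha=1.0))
print(log_likelihood(73.0, 70.0, 1.0, alpha=0.25))
```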

Expanding these expressions using probabilistic models, we have the following:

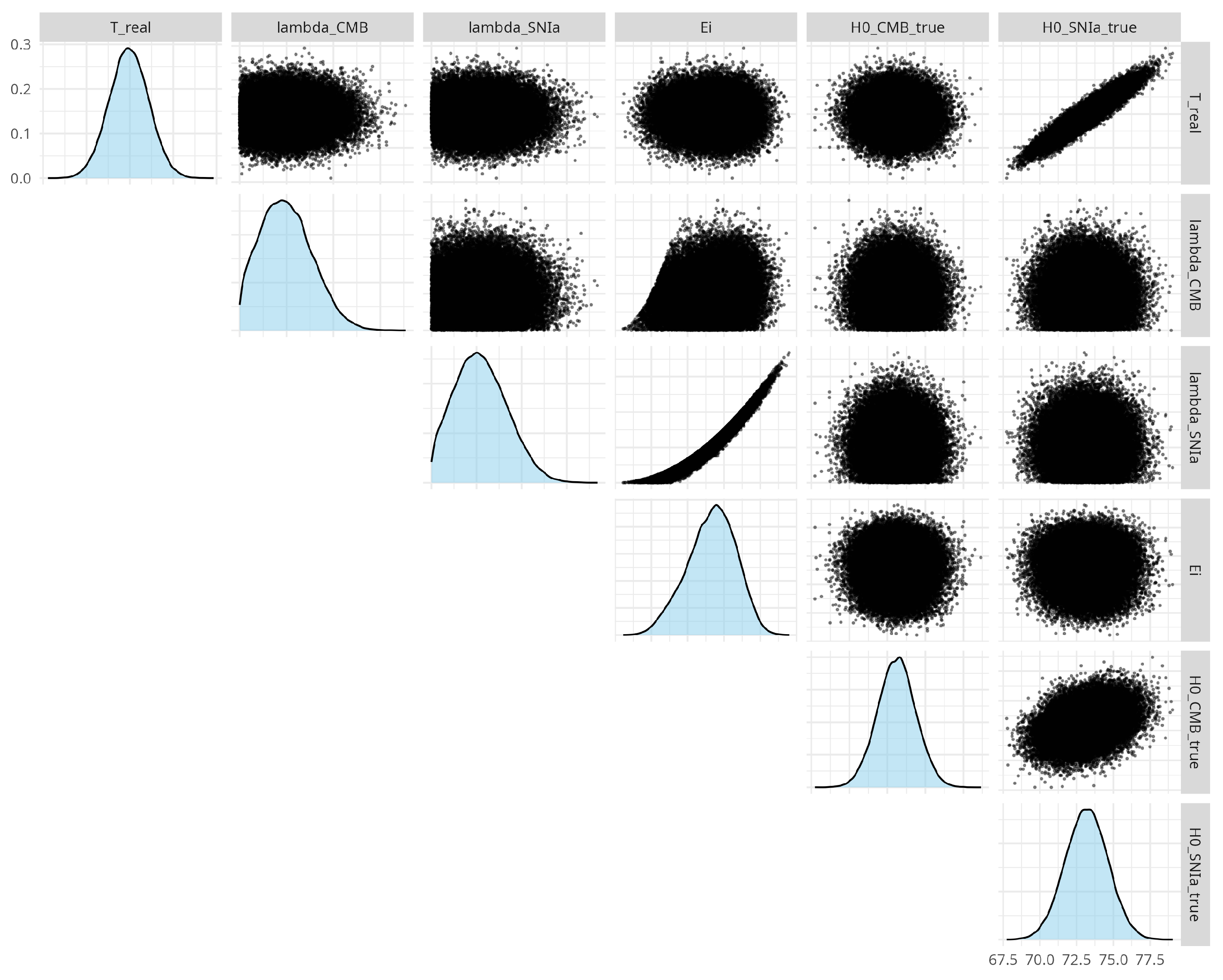

3.5. Bayesian Implementation

The Bayesian analysis was implemented using the Stan probabilistic programming language, which employs a No-U-Turn Sampler (NUTS), a highly efficient variant of Hamiltonian Monte Carlo (HMC). This choice is particularly advantageous for complex, high-dimensional models as it navigates the parameter space effectively, reducing issues like random walk behavior and improving sampling efficiency.

We ran 4 independent MCMC chains, each initialized from different random starting points to ensure robust exploration of the posterior distribution and to facilitate convergence diagnostics. Each chain consisted of 5000 iterations. The first 2000 iterations of each chain were designated as warm-up (or burn-in) and discarded. This warm-up phase allows the sampler to adapt its parameters and converge to the target posterior distribution, ensuring that the subsequent samples are representative. This configuration resulted in a total of 12,000 post-warm-up posterior draws (4 chains × (5000 − 2000) draws/chain) used for subsequent inference.

The prior distributions for all the model parameters were carefully specified. For the true Hubble constant values ($H_{0,\mathrm{CMB}}^{\mathrm{true}}$ and $H_{0,\mathrm{SN}}^{\mathrm{true}}$), we used weakly informative normal priors, centered on the observed values, but with sufficiently wide standard deviations to allow the data to primarily drive the inference. For the information loss coefficients ($\alpha_{\mathrm{CMB}}$ and $\alpha_{\mathrm{SN}}$), Beta priors were used. These were set to be informative but relatively wide, reflecting the expectation that some information loss might occur, but avoiding overly strong assumptions about its magnitude. This choice aligns with the goal of allowing the data to inform the extent of information loss, while respecting the physical bounds of the parameters (0 to 1). The prior for the real tension component ($T_{\mathrm{real}}$) was chosen to be non-informative (e.g., a very wide normal distribution or a flat prior), ensuring that the data primarily drive its posterior distribution.

The convergence of the MCMC chains was rigorously assessed to ensure that the samples accurately represent the target posterior distribution. We monitored the R-hat statistic for all parameters, aiming for values close to 1.0 (typically below 1.01–1.05), which indicates good mixing and convergence across chains. Furthermore, the effective sample size (ESS) was checked to ensure a sufficient number of independent samples for reliable inference, generally targeting ESS values greater than 400–1000 for each parameter. These diagnostics confirmed that the chains had adequately explored the parameter space.
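For reference, the idea behind the R-hat diagnostic can be sketched with the classic Gelman–Rubin formula, which compares between-chain and within-chain variance. This is an illustration only: Stan reports a more refined rank-normalized split version, and the synthetic chains below are random draws generated here, not output of the analysis.

```python
import numpy as np

def gelman_rubin_rhat(chains):
    """Classic (non-split) Gelman-Rubin R-hat for an array of shape (m, n)."""
    chains = np.asarray(chains, dtype=float)
    m, n = chains.shape
    within = chains.var(axis=1, ddof=1).mean()       # W: mean within-chain variance
    between = n * chains.mean(axis=1).var(ddof=1)    # B: between-chain variance
    var_hat = (n - 1) / n * within + between / n     # pooled variance estimate
    return float(np.sqrt(var_hat / within))

rng = np.random.default_rng(1)

# Four synthetic chains of 3000 draws that sample the same distribution.
mixed = rng.normal(size=(4, 3000))

# The same chains shifted apart, mimicking chains stuck in different regions.
stuck = mixed + np.arange(4)[:, None]

print(f"well-mixed chains: R-hat = {gelman_rubin_rhat(mixed):.3f}")
print(f"separated chains:  R-hat = {gelman_rubin_rhat(stuck):.3f}")
```

Values near 1 indicate that the chains agree; values well above 1, as for the shifted chains, signal that the chains have not converged to a common distribution.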

From the converged posterior samples, summary statistics (mean, median, standard deviation) were calculated for all model parameters and derived quantities. Posterior credible intervals (e.g., 95% credible intervals) were calculated directly from the percentiles of the MCMC samples, providing a robust measure of uncertainty for each parameter. The full Stan model code and data used for this analysis are available upon request or in a supplementary repository to ensure reproducibility.

5. Discussion

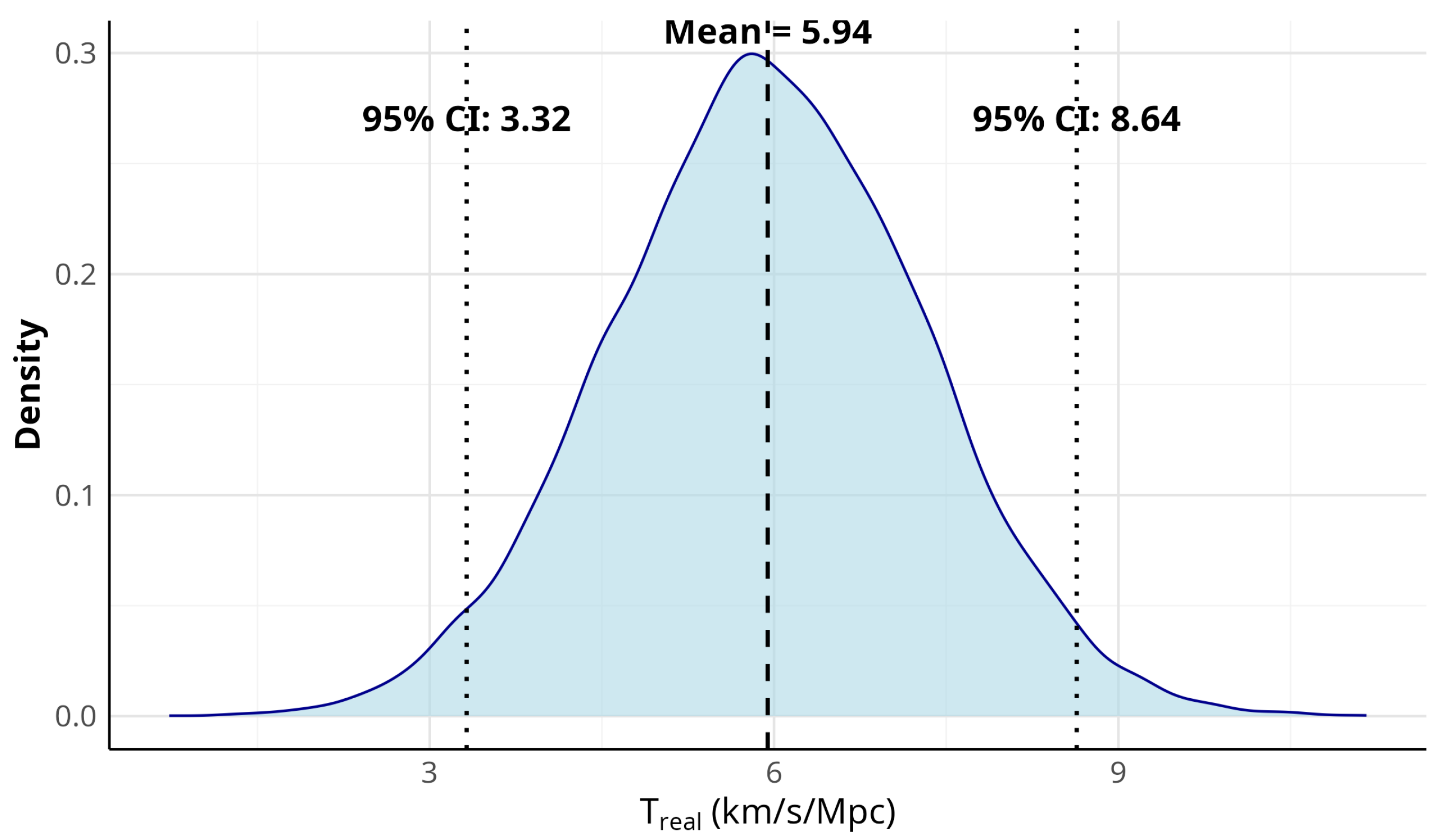

Our analysis provides compelling evidence that the Hubble tension remains significant even after accounting for both measurement errors and information loss due to parameter-space projection. The posterior distribution of the real tension component ($T_{\mathrm{real}}$) shows a mean value of 5.94 km/s/Mpc with a 95% credible interval of [3.32, 8.64] km/s/Mpc, clearly excluding zero from the range of plausible values. It is important to note that $T_{\mathrm{real}}$ represents the discrepancy that persists after accounting for the statistical measurement uncertainties and the inherent loss of information from the marginalization of parameters within the standard cosmological model framework. Therefore, a significant $T_{\mathrm{real}}$ strongly suggests that the observed discrepancy between CMB and SNIa measurements likely reflects a genuine physical phenomenon beyond what can be explained by these statistical and information-processing effects alone. This finding does not preclude the possibility that new physics beyond ΛCDM could ultimately resolve the tension; rather, it provides statistical evidence that such a resolution would require going beyond the standard model’s current observational and data analysis interpretations. This finding aligns with the prevailing consensus in the cosmological community that the Hubble tension is a robust discrepancy, often reported at a significance level exceeding 4–5$\sigma$ in various independent analyses [5, 6, 29, 30]. Our work reinforces this by demonstrating that even when explicitly modeling and quantifying potential sources of uncertainty and information degradation, the core tension persists.

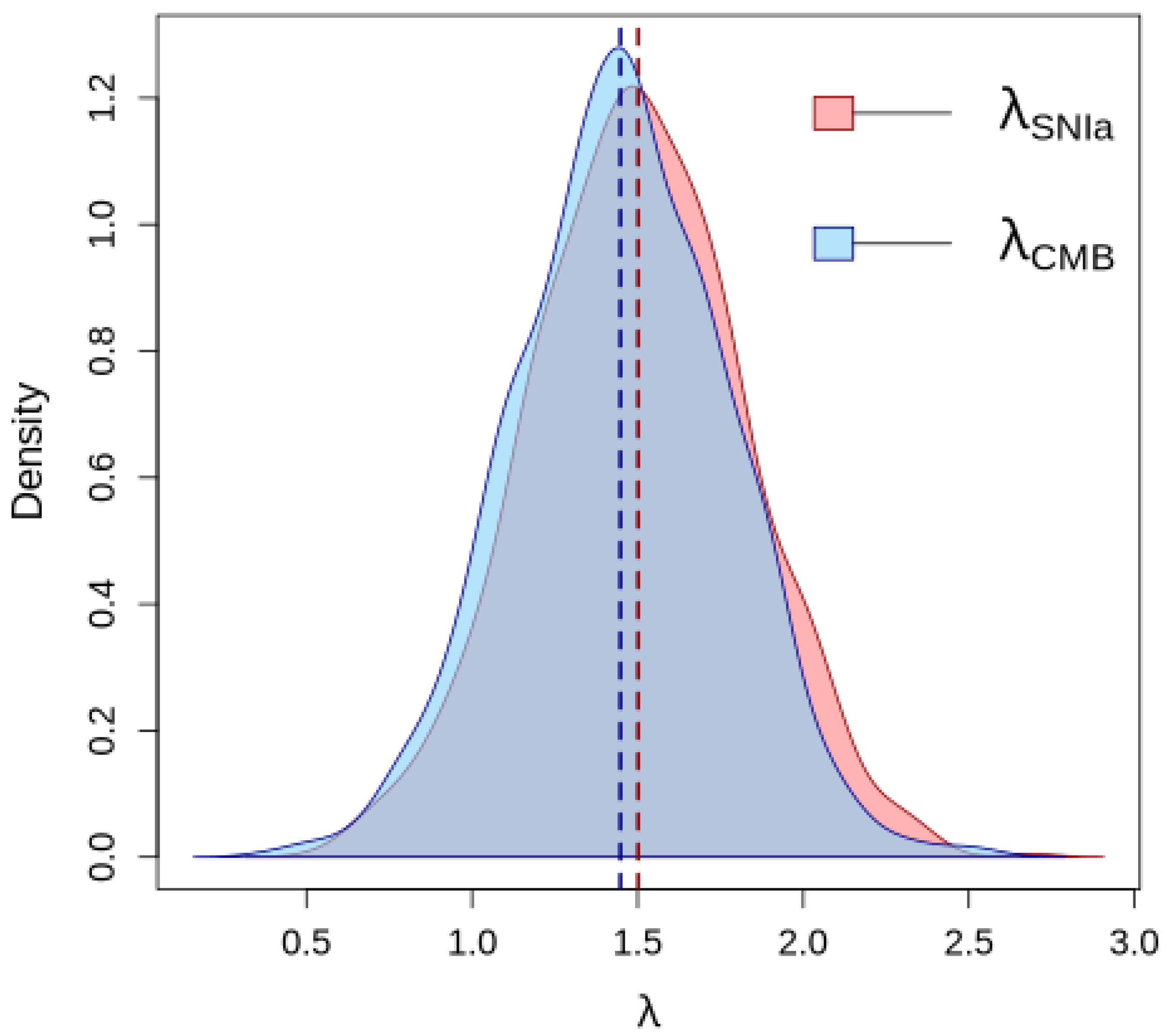

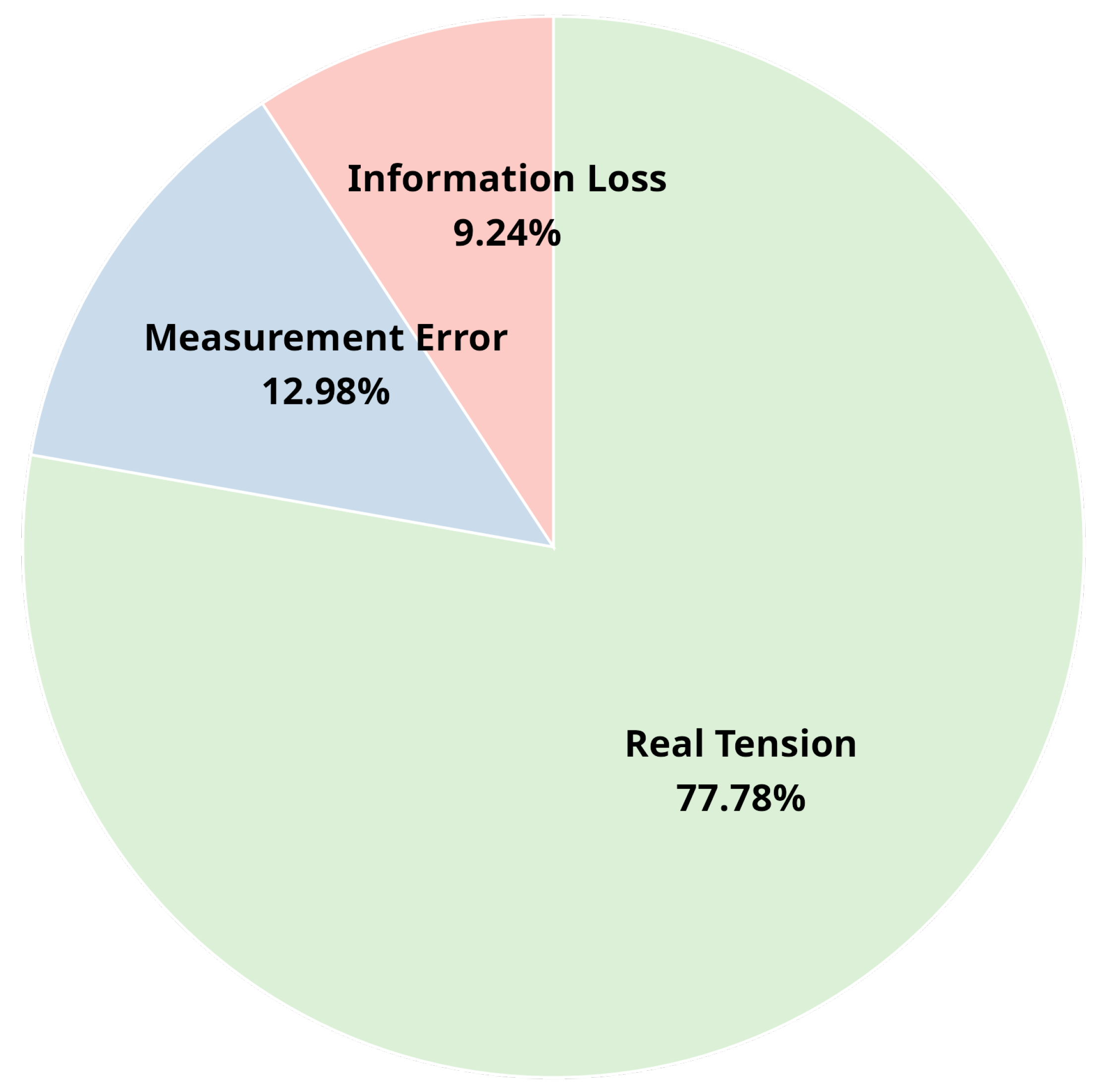

A key distinguishing feature of our approach, which builds on previous Bayesian treatments of cosmological tensions [26, 27, 28], is the explicit parameterization and decomposition of the observed tension into three distinct components: standard measurement errors, information loss errors arising from model simplifications and parameter-space projection, and the real physical tension. For example, while Feeney et al. [26] applied a Bayesian hierarchical model to clarify tension within the local distance ladder, our framework extends this by specifically isolating and quantifying the contribution of information loss ($\sigma_{\mathrm{IL}}$) through the introduction of variance inflation factors ($f$). Our results indicate that approximately 78% of the variance of the observed tension is attributed to the real tension, with measurement error accounting for 14% and information loss for 9%. The estimated variance inflation factors ($f_{\mathrm{CMB}}$ and $f_{\mathrm{SN}}$) being significantly greater than unity further underscores the importance of accounting for these effects, as they suggest that reported uncertainties might be underestimated or that parameter correlations lead to non-trivial information loss when marginalizing. This figure, therefore, represents the magnitude of the tension remaining after explicitly modeling identifiable error sources; while strongly suggestive of new physics, it is formally an upper bound for direct evidence of such physics, as it inherently absorbs any unquantified systematic effects not accounted for by our variance inflation parameters. This decomposition provides a more nuanced understanding of the tension’s origins, allowing us to assert that the majority of the discrepancy is not an artifact of our analytical pipeline.

Future studies should extend our variance decomposition framework to incorporate a broader observational landscape. Particularly valuable would be the inclusion of probes that sample intermediate redshifts between the recombination epoch probed by the CMB and the relatively local Universe explored by SNIa. The addition of such complementary datasets is expected to have a significant impact on the information loss component. By breaking existing parameter degeneracies, these new data sources should reduce the correlations between $H_0$ and other cosmological parameters. Consequently, our methodology would predict a decrease in the information loss component ($\sigma_{\mathrm{IL}}$) and a convergence of the variance inflation factors ($f$) toward unity. This reduction in information loss would further strengthen the robustness of the real tension, should it persist, by demonstrating that it is not an artifact of unresolved correlations or projection effects. Conversely, if the information loss component were to remain substantial or even increase with the inclusion of more data, it would point toward more complex or as yet unidentified systematic issues in the combined dataset analysis. Furthermore, a multiprobe analysis would allow cross-validation of the estimated variance inflation factors. If similar values of $f$ emerge from independent dataset combinations, this would strengthen confidence in our quantification of information loss. In contrast, significant variations in these factors across different probe combinations might indicate probe-specific systematics or modeling assumptions that warrant further investigation.

Although our current results indicate that the Hubble tension remains robust even after accounting for information loss, we cannot exclude the possibility that more complex projection effects, perhaps involving higher-order moments of parameter distributions or nonlinear parameter degeneracies, might emerge in a more diverse dataset combination. The Bayesian hierarchical framework we have developed is well-suited for such extensions, as it naturally accommodates additional complexity through appropriate prior specifications and model comparison tools.

In conclusion, while our analysis provides important insights into the nature of the Hubble tension using two foundational cosmological probes, a definitive assessment of the contribution of information loss to this tension will require a more comprehensive observational foundation. This represents a promising direction for future research that could further illuminate whether the Hubble tension ultimately demands new physics beyond the standard cosmological model, as discussed in recent works [3, 4, 7].