Assessing Galaxy Rotation Kinematics: Insights from Convolutional Neural Networks on Velocity Variations

Abstract

1. Introduction

2. Dataset and Machine Learning Methods

2.1. Convolutional Neural Network Architecture

2.2. Data Preprocessing

2.3. Loss Function

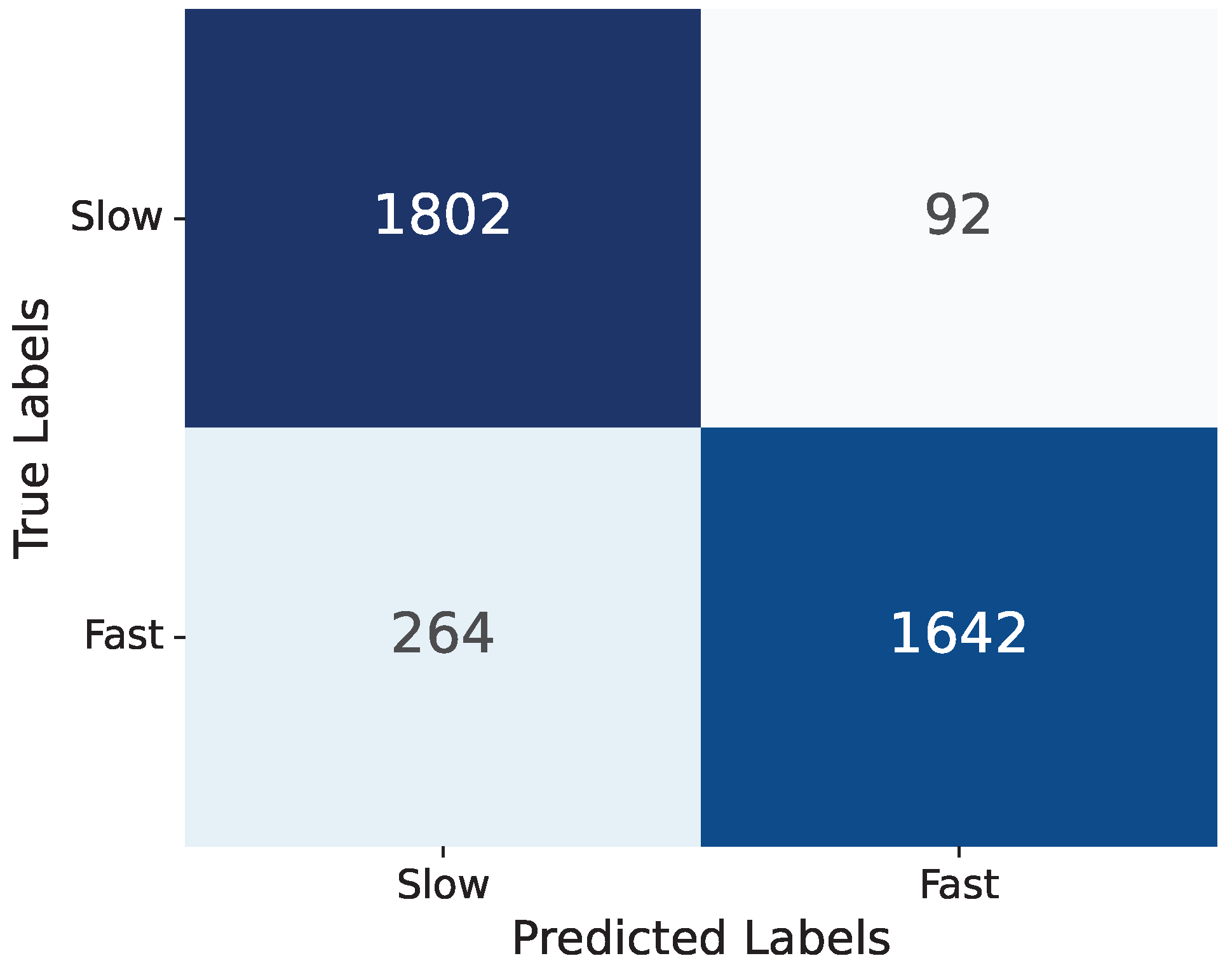

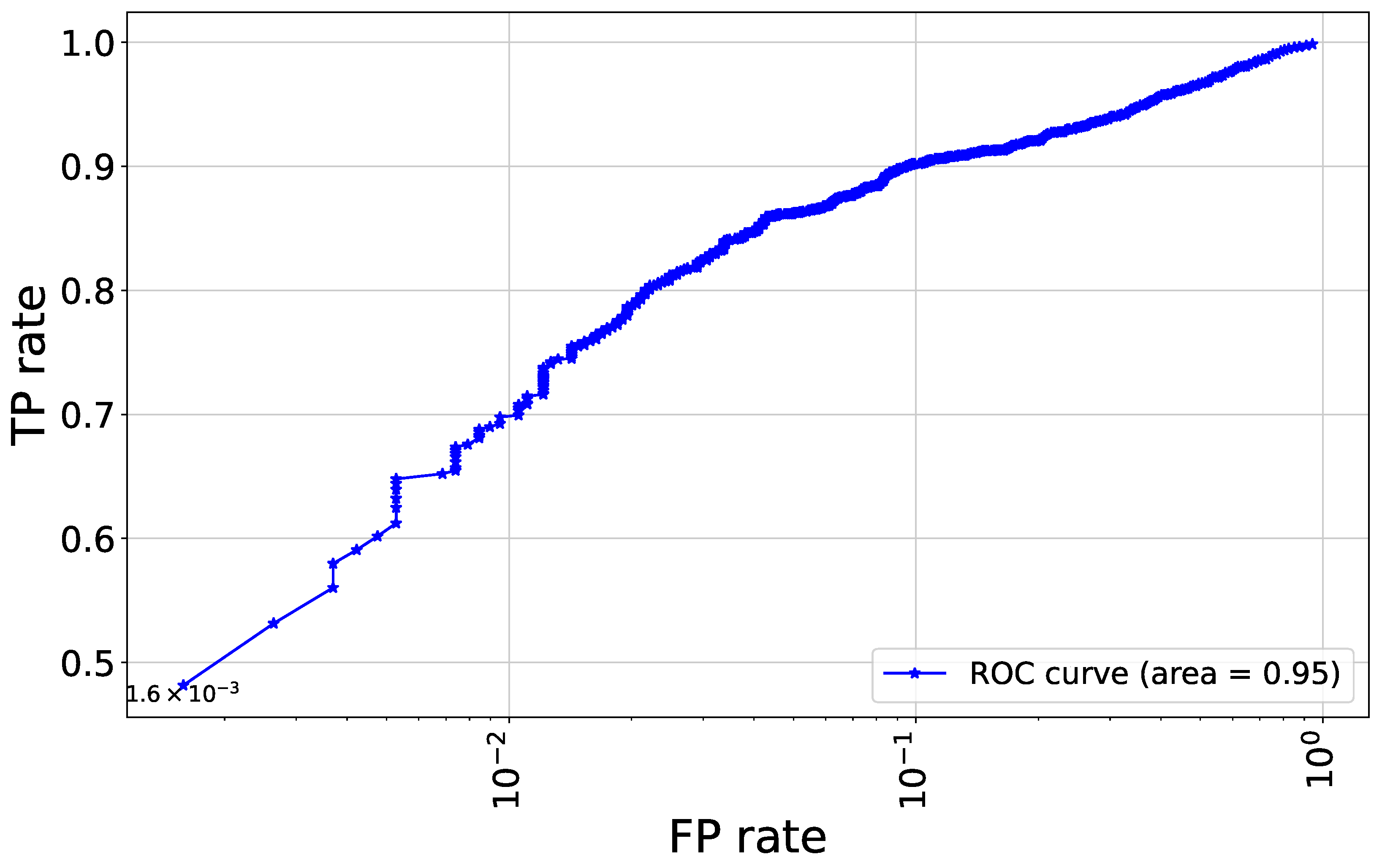

2.4. Evaluation Criteria

3. Results

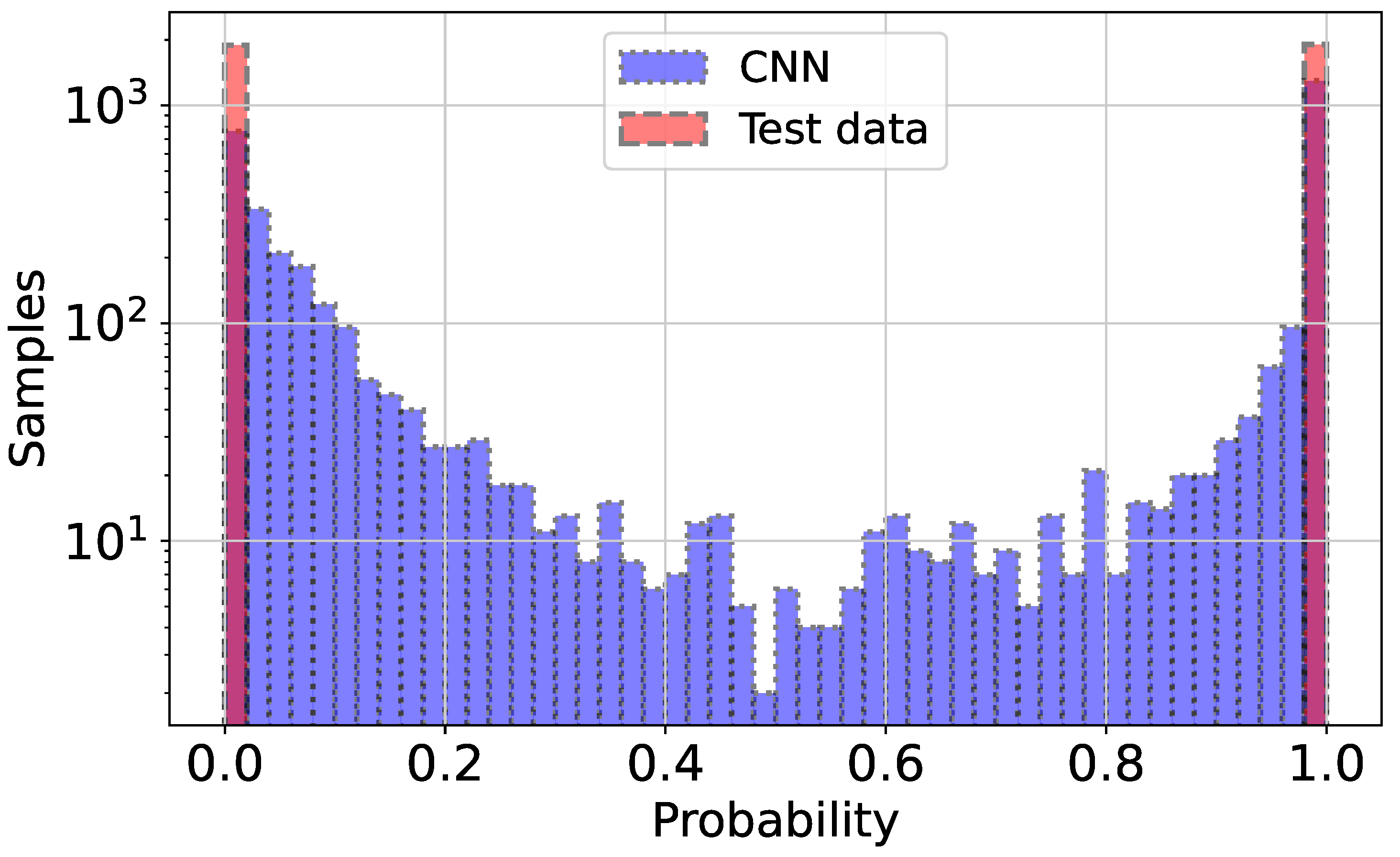

3.1. Training CNN on Galaxies with Known Fast/Slow Rotation

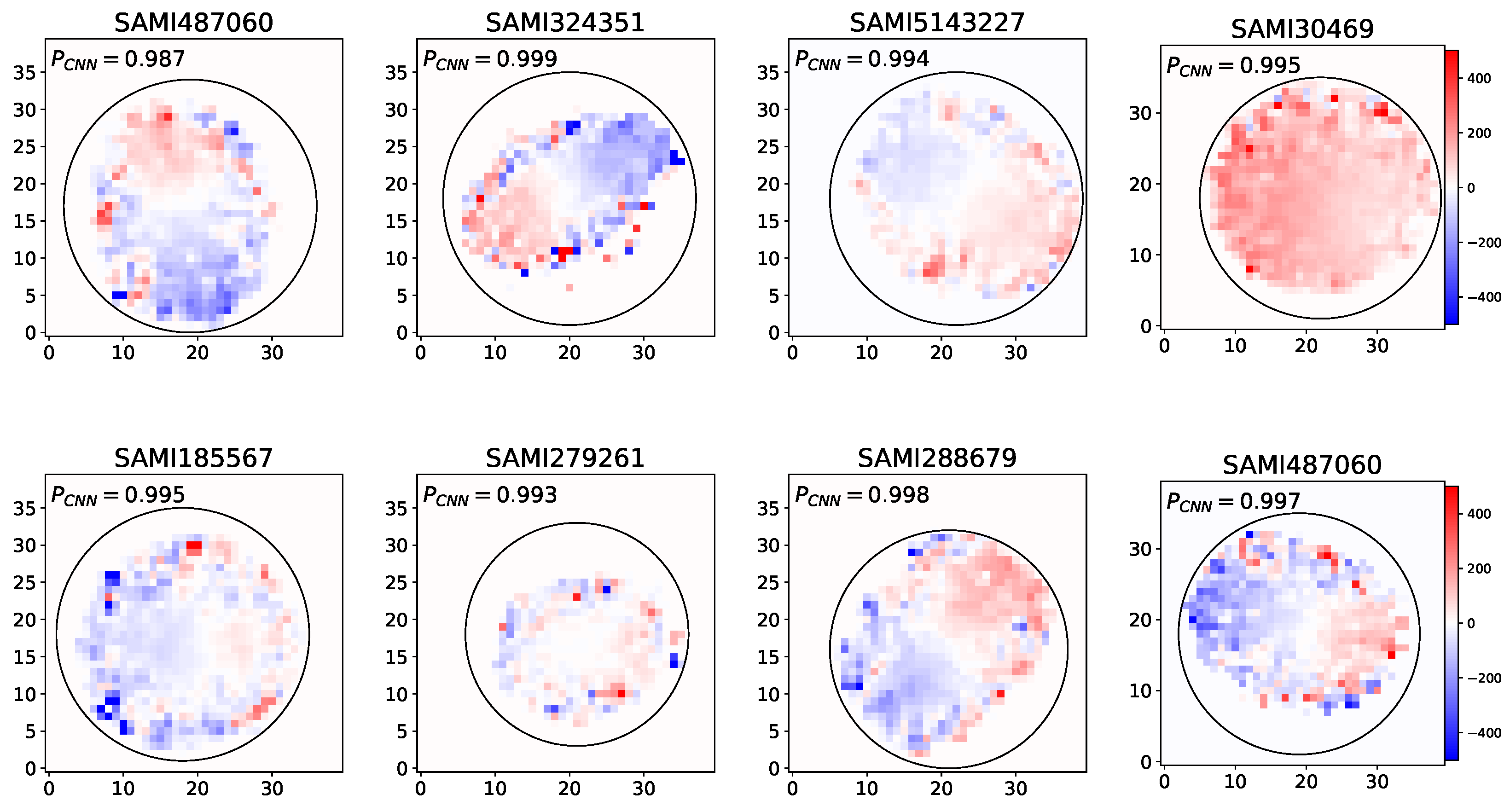

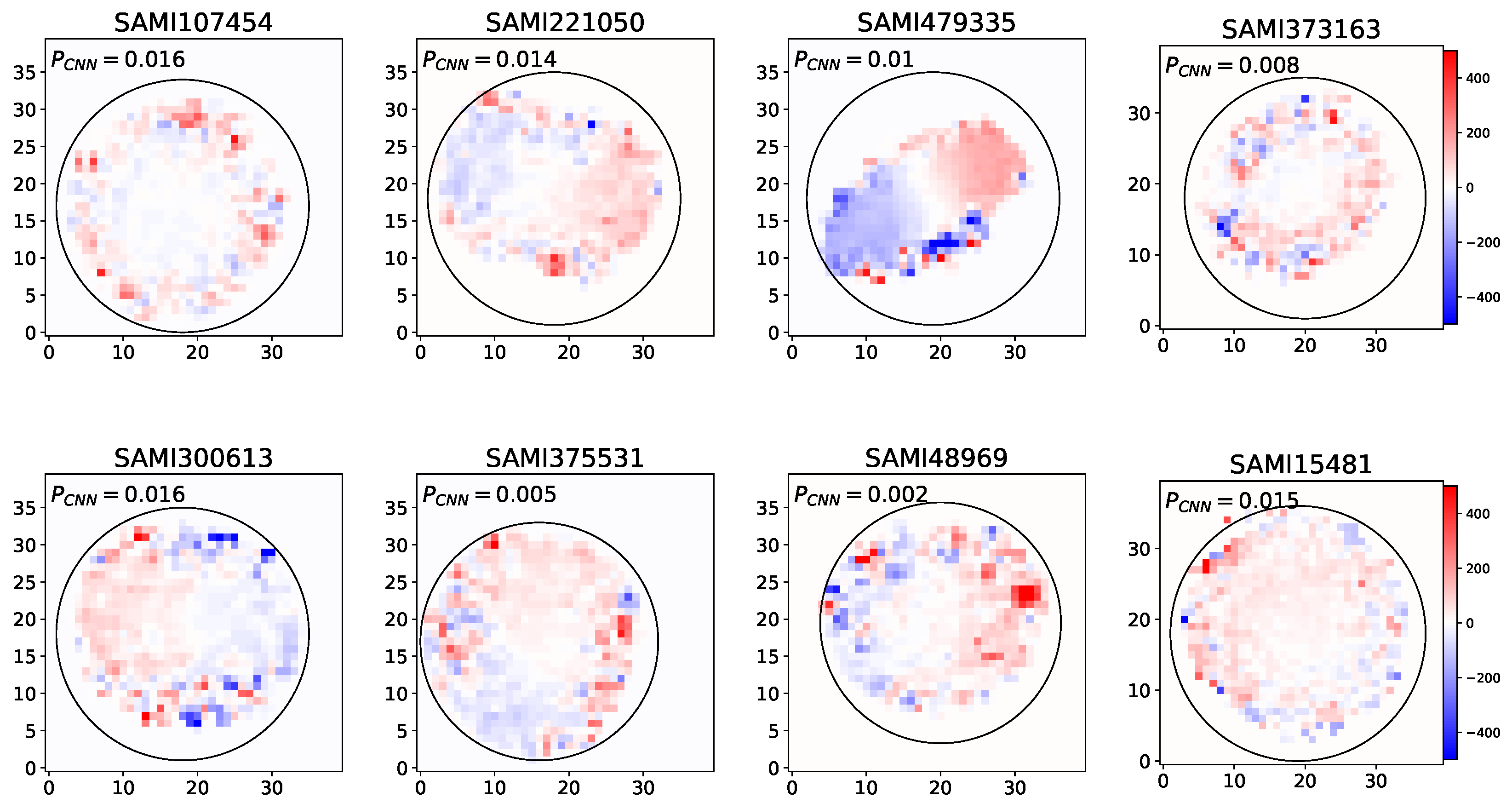

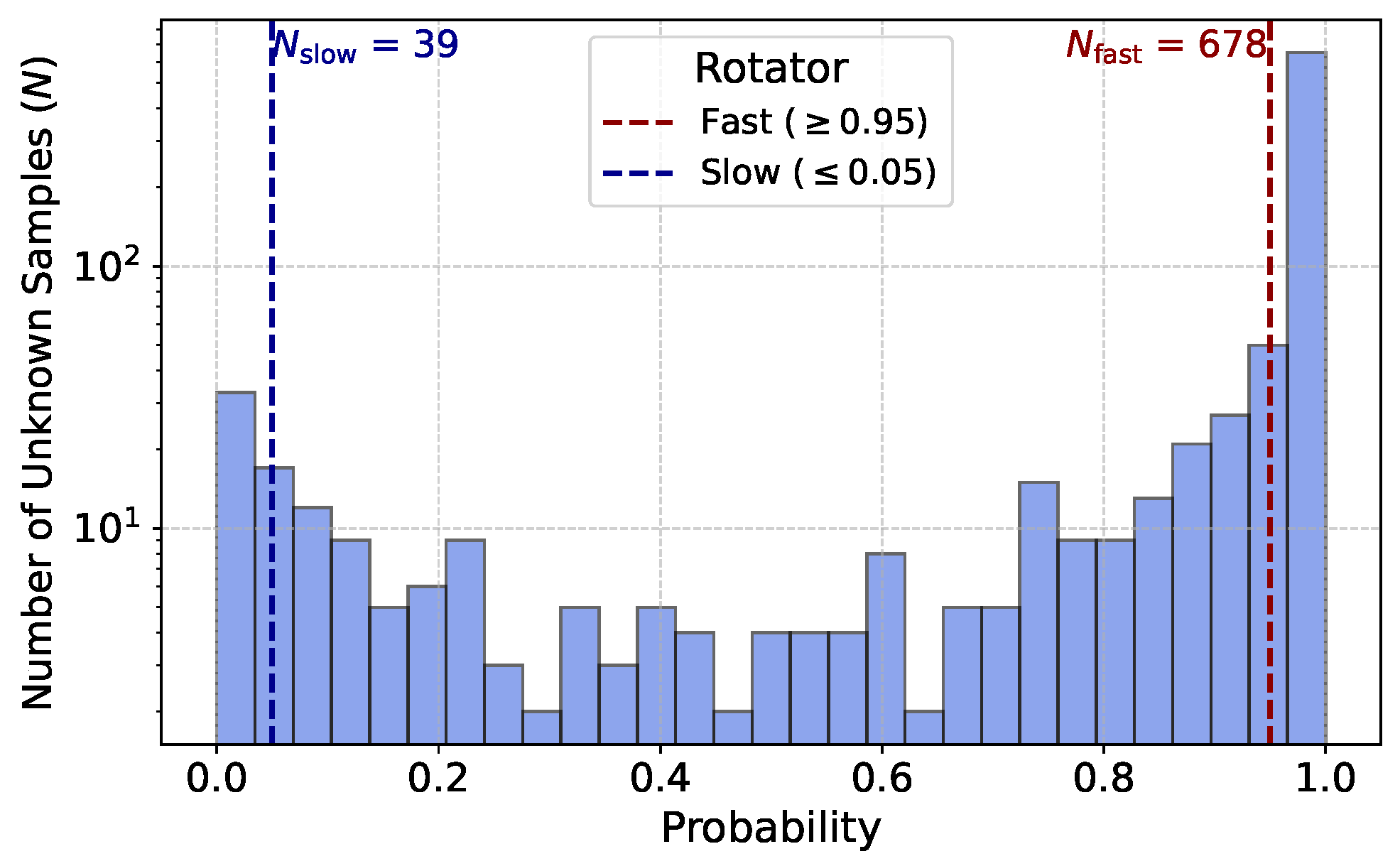

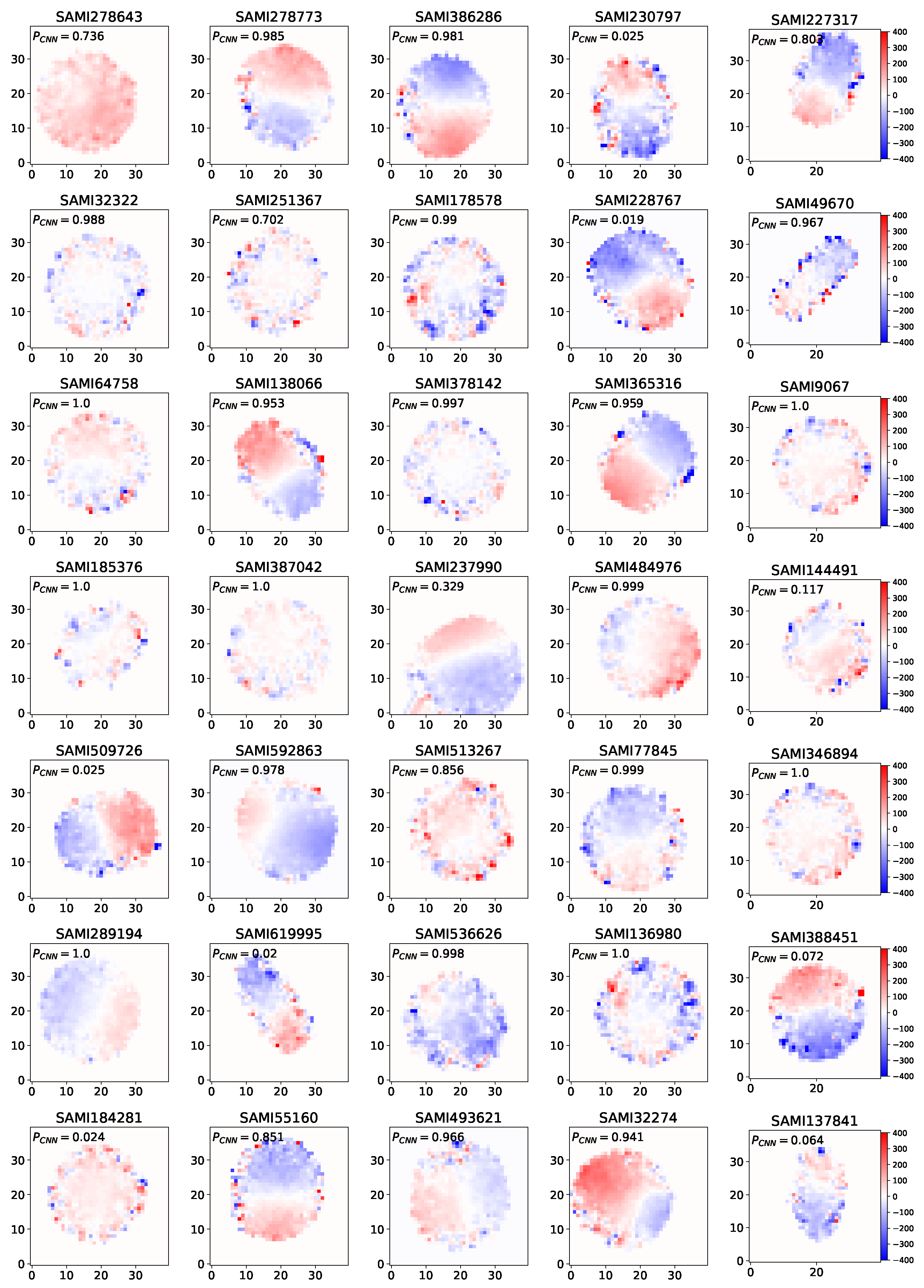

3.2. Testing the CNN on Unknown Rotators

3.3. Interpretability of the Model’s Classifications

- (i)

- (ii) Utilizing Integrated Gradients (IGs) [64] to highlight the regions of the input images that contribute most to the fast or slow classification.
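As a rough illustration of how Integrated Gradients assigns attributions, the sketch below applies the method to a toy logistic model rather than to the CNN used in this work. The weights, bias, input, and all-zero baseline are hypothetical values chosen only for the example, and the path integral is approximated with a midpoint Riemann sum. In practice the gradients would come from an automatic-differentiation framework.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def model(x, weights, bias):
    """Toy stand-in for a classifier: logistic regression over flattened pixels."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def model_grad(x, weights, bias):
    """Analytic gradient of the toy model output with respect to each input."""
    p = model(x, weights, bias)
    return [p * (1.0 - p) * w for w in weights]


def integrated_gradients(x, baseline, weights, bias, steps=200):
    """Approximate IG_i = (x_i - x'_i) * integral of dF/dx_i along the straight
    path from the baseline x' to the input x, using a midpoint Riemann sum."""
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [b0 + alpha * (xi - b0) for xi, b0 in zip(x, baseline)]
        grad = model_grad(point, weights, bias)
        total = [t + g for t, g in zip(total, grad)]
    return [(xi - b0) * t / steps for xi, b0, t in zip(x, baseline, total)]


# Hypothetical four-pixel "image" and model parameters (illustration only).
x = [0.8, -0.5, 0.3, 0.0]
baseline = [0.0, 0.0, 0.0, 0.0]
weights = [2.0, -1.0, 0.0, 1.5]
bias = -0.2

attributions = integrated_gradients(x, baseline, weights, bias)
output_change = model(x, weights, bias) - model(baseline, weights, bias)
print(attributions, sum(attributions), output_change)
```

The attributions sum, up to numerical error, to the change in model output between the baseline and the input, which is the completeness property of the method. A pixel with zero weight, or one equal to its baseline value, receives zero attribution.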

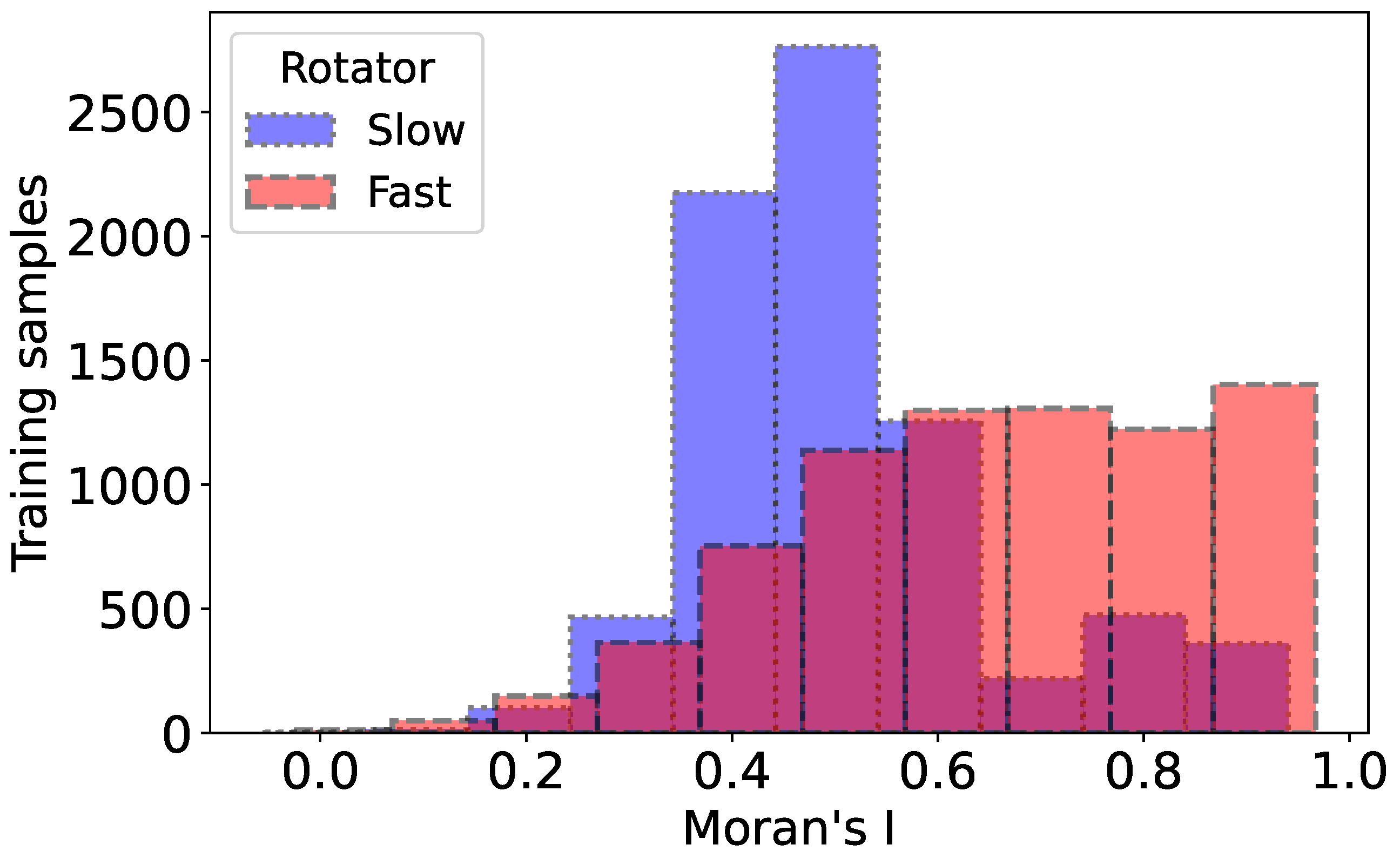

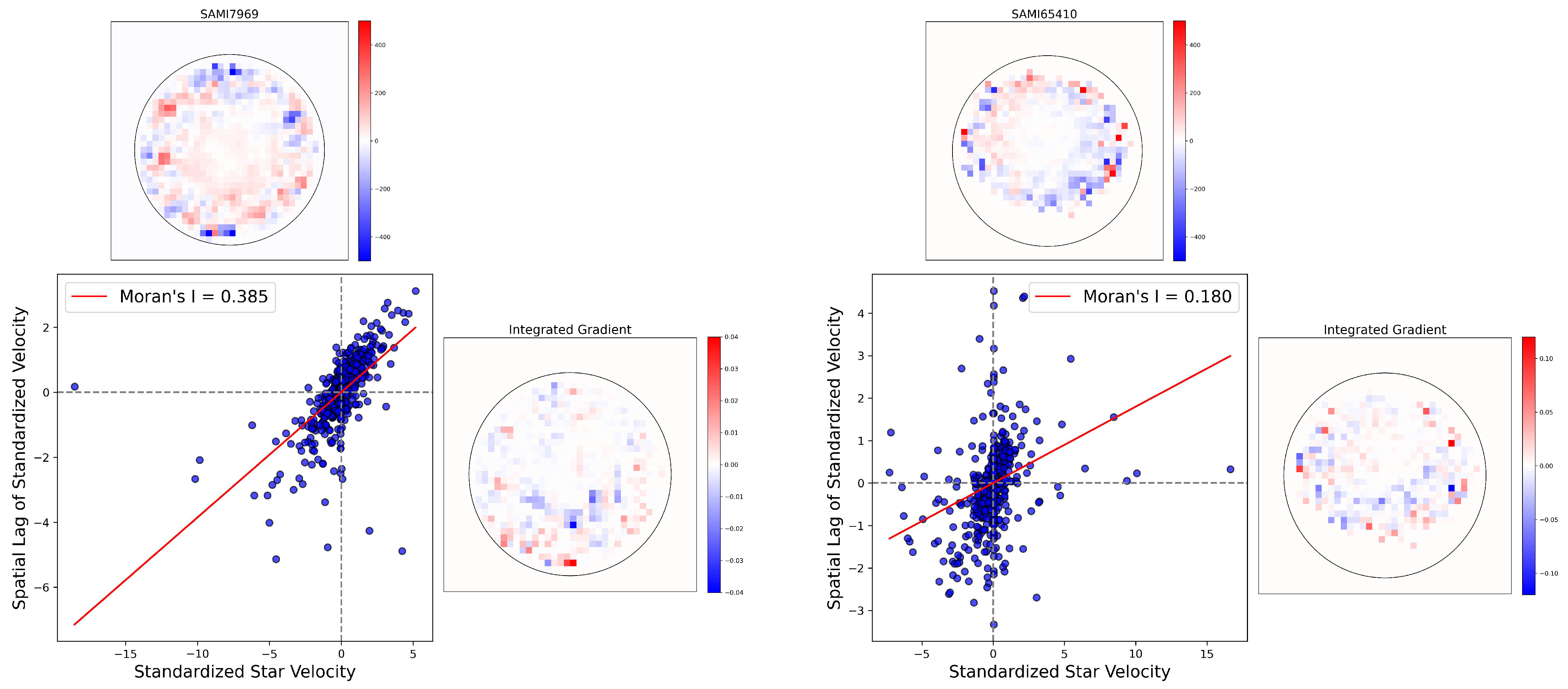

3.3.1. Clustering of High- and Low-Velocity Stars

- (i) A positive GMI index (close to +1) indicates clustering or positive spatial autocorrelation, meaning similar values (either high or low) tend to be close to each other;

- (ii) A negative GMI index (close to −1) indicates dispersion or negative spatial autocorrelation, suggesting that high and low values are intermixed and tend to avoid clustering;

- (iii) A zero or near-zero GMI index suggests a random spatial pattern with no clear clustering or dispersion.
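The three regimes above can be illustrated with a minimal sketch of the global Moran's I statistic on a small two-dimensional map. The binary rook (four-neighbour) weighting and the toy grids are assumptions made for this example and are not necessarily the weighting scheme applied to the velocity maps in this work.

```python
def global_morans_i(grid):
    """Global Moran's I on a 2D grid with binary rook (4-neighbour) weights."""
    rows, cols = len(grid), len(grid[0])
    n = rows * cols
    mean = sum(v for row in grid for v in row) / n
    dev = [[v - mean for v in row] for row in grid]
    denom = sum(d * d for row in dev for d in row)
    if denom == 0:
        raise ValueError("Moran's I is undefined for a constant map")

    cross = 0.0   # sum over neighbour pairs of w_ij * dev_i * dev_j
    w_sum = 0     # sum of all weights w_ij
    for r in range(rows):
        for c in range(cols):
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < rows and 0 <= cc < cols:
                    cross += dev[r][c] * dev[rr][cc]
                    w_sum += 1
    return (n / w_sum) * (cross / denom)


# Toy maps: high values on one side, low on the other (clustered),
# and alternating high/low values (dispersed).
clustered = [[1, 1, -1, -1] for _ in range(4)]
checkerboard = [[1 if (r + c) % 2 == 0 else -1 for c in range(4)] for r in range(4)]

i_clustered = global_morans_i(clustered)
i_checkerboard = global_morans_i(checkerboard)
print(i_clustered, i_checkerboard)
```

For these grids the clustered map gives a value of about 0.67 and the checkerboard gives −1, matching cases (i) and (ii).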

3.3.2. Integrated Gradients

4. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| 1 | The angle brackets indicate a sky average weighted by surface brightness. |

| 2 | We obtained similar results with the MaNGA dataset; however, this study focuses on the SAMI survey, which offers higher signal-to-noise ratios in the stellar kinematics of galaxies. We did apply our method to a small sample of MaNGA galaxies with known λR and ellipticity values, but the limited sample size and lower signal-to-noise ratio made those results less valuable for inclusion at this stage. |

References

1. Emsellem, E.; Cappellari, M.; Krajnović, D.; Van De Ven, G.; Bacon, R.; Bureau, M.; Davies, R.L.; De Zeeuw, P.; Falcón-Barroso, J.; Kuntschner, H.; et al. The SAURON project—IX. A kinematic classification for early-type galaxies. Mon. Not. R. Astron. Soc. 2007, 379, 401–417.

2. Raouf, M.; Smith, R.; Khosroshahi, H.G.; Sande, J.v.d.; Bryant, J.J.; Cortese, L.; Brough, S.; Croom, S.M.; Hwang, H.S.; Driver, S.; et al. The SAMI Galaxy Survey: Kinematics of Stars and Gas in Brightest Group Galaxies—The Role of Group Dynamics. Astrophys. J. 2021, 908, 123.

3. Raouf, M.; Viti, S.; García-Burillo, S.; Richings, A.J.; Schaye, J.; Bemis, A.; Nobels, F.S.J.; Guainazzi, M.; Huang, K.Y.; Schaller, M.; et al. Hydrodynamic simulations of the disc of gas around supermassive black holes (HDGAS)—I. Molecular gas dynamics. Mon. Not. R. Astron. Soc. 2023, 524, 786–800.

4. Raouf, M.; Purabbas, M.H.; Fazel Hesar, F. Impact of Active Galactic Nuclei Feedback on the Dynamics of Gas: A Review across Diverse Environments. Galaxies 2024, 12, 16.

5. Cappellari, M.; Emsellem, E.; Bacon, R.; Bureau, M.; Davies, R.L.; De Zeeuw, P.; Falcón-Barroso, J.; Krajnović, D.; Kuntschner, H.; McDermid, R.M.; et al. The SAURON project—X. The orbital anisotropy of elliptical and lenticular galaxies: Revisiting the (V/σ, ε) diagram with integral-field stellar kinematics. Mon. Not. R. Astron. Soc. 2007, 379, 418–444.

6. Hernquist, L. Structure of merger remnants. II-Progenitors with rotating bulges. Astrophys. J. 1993, 409, 548–562.

7. Burkert, A.; Naab, T.; Johansson, P.H.; Jesseit, R. SAURON’s Challenge for the Major Merger Scenario of Elliptical Galaxy Formation. Astrophys. J. 2008, 685, 897.

8. Blanton, M.R.; Moustakas, J. Physical properties and environments of nearby galaxies. Annu. Rev. Astron. Astrophys. 2009, 47, 159–210.

9. Naab, T.; Oser, L.; Emsellem, E.; Cappellari, M.; Krajnović, D.; McDermid, R.M.; Alatalo, K.; Bayet, E.; Blitz, L.; Bois, M.; et al. The ATLAS3D project—XXV. Two-dimensional kinematic analysis of simulated galaxies and the cosmological origin of fast and slow rotators. Mon. Not. R. Astron. Soc. 2014, 444, 3357–3387.

10. Raouf, M.; Khosroshahi, H.G.; Ponman, T.J.; Dariush, A.A.; Molaeinezhad, A.; Tavasoli, S. Ultimate age-dating method for galaxy groups; clues from the Millennium Simulations. Mon. Not. R. Astron. Soc. 2014, 442, 1578–1585.

11. Raouf, M.; Khosroshahi, H.G.; Dariush, A. Evolution of Galaxy Groups in the Illustris Simulation. Astrophys. J. 2016, 824, 140.

12. Raouf, M.; Khosroshahi, H.G.; Mamon, G.A.; Croton, D.J.; Hashemizadeh, A.; Dariush, A.A. Merger History of Central Galaxies in Semi-analytic Models of Galaxy Formation. Astrophys. J. 2018, 863, 40.

13. Raouf, M.; Smith, R.; Khosroshahi, H.G.; Dariush, A.A.; Driver, S.; Ko, J.; Hwang, H.S. The Impact of the Dynamical State of Galaxy Groups on the Stellar Populations of Central Galaxies. Astrophys. J. 2019, 887, 264.

14. Illingworth, G. Rotation in 13 elliptical galaxies. Astrophys. J. 1977, 218, L43–L47.

15. Binney, J. Rotation and anisotropy of galaxies revisited. Mon. Not. R. Astron. Soc. 2005, 363, 937–942.

16. Vitvitska, M.; Klypin, A.A.; Kravtsov, A.V.; Wechsler, R.H.; Primack, J.R.; Bullock, J.S. The origin of angular momentum in dark matter halos. Astrophys. J. 2002, 581, 799.

17. Prieto, J.; Jimenez, R.; Haiman, Z.; González, R.E. The origin of spin in galaxies: Clues from simulations of atomic cooling haloes. Mon. Not. R. Astron. Soc. 2015, 452, 784–802.

18. Bryant, J.J.; Bland-Hawthorn, J.; Lawrence, J.; Norris, B.; Min, S.S.; Brown, R.; Wang, A.; Bhatia, G.S.; Saunders, W.; Content, R.; et al. Hector: A new multi-object integral field spectrograph instrument for the Anglo-Australian Telescope. In Proceedings of the Ground-Based and Airborne Instrumentation for Astronomy VIII, Online Conference, 14–18 December 2020; Volume 11447, pp. 201–207.

19. Bryant, J.J.; Oh, S.; Gunawardhana, M.; Quattropani, G.; Bhatia, G.S.; Bland-Hawthorn, J.; Broderick, D.; Brown, R.; Content, R.; Croom, S.; et al. Hector: Performance of the new integral field spectrograph instrument for the Anglo-Australian Telescope. In Proceedings of the Ground-Based and Airborne Instrumentation for Astronomy X, Yokohama, Japan, 16–21 June 2024; Volume 13096, pp. 88–98.

20. Mainieri, V.; Anderson, R.I.; Brinchmann, J.; Cimatti, A.; Ellis, R.S.; Hill, V.; Kneib, J.P.; McLeod, A.F.; Opitom, C.; Roth, M.M.; et al. The Wide-field Spectroscopic Telescope (WST) Science White Paper. arXiv 2024, arXiv:2403.05398.

21. Koushik, J. Understanding Convolutional Neural Networks. arXiv 2016, arXiv:1605.09081.

22. Valizadegan, H.; Martinho, M.J.; Wilkens, L.S.; Jenkins, J.M.; Smith, J.C.; Caldwell, D.A.; Twicken, J.D.; Gerum, P.C.; Walia, N.; Hausknecht, K.; et al. ExoMiner: A highly accurate and explainable deep learning classifier that validates 301 new exoplanets. Astrophys. J. 2022, 926, 120.

23. Cuéllar, S.; Granados, P.; Fabregas, E.; Curé, M.; Vargas, H.; Dormido-Canto, S.; Farias, G. Deep learning exoplanets detection by combining real and synthetic data. PLoS ONE 2022, 17, e0268199.

24. Rezaei, S.; McKean, J.P.; Biehl, M.; Javadpour, A. DECORAS: Detection and characterization of radio-astronomical sources using deep learning. Mon. Not. R. Astron. Soc. 2022, 510, 5891–5907.

25. Cuoco, E.; Powell, J.; Cavaglià, M.; Ackley, K.; Bejger, M.; Chatterjee, C.; Coughlin, M.; Coughlin, S.; Easter, P.; Essick, R.; et al. Enhancing gravitational-wave science with machine learning. Mach. Learn. Sci. Technol. 2020, 2, 011002.

26. Chang, Y.Y.; Hsieh, B.C.; Wang, W.H.; Lin, Y.T.; Lim, C.F.; Toba, Y.; Zhong, Y.; Chang, S.Y. Identifying AGN host galaxies by machine learning with HSC+ WISE. Astrophys. J. 2021, 920, 68.

27. He, Z.; Er, X.; Long, Q.; Liu, D.; Liu, X.; Li, Z.; Liu, Y.; Deng, W.; Fan, Z. Deep learning for strong lensing search: Tests of the convolutional neural networks and new candidates from KiDS DR3. Mon. Not. R. Astron. Soc. 2020, 497, 556–571.

28. Cheng, T.Y.; Li, N.; Conselice, C.J.; Aragón-Salamanca, A.; Dye, S.; Metcalf, R.B. Identifying strong lenses with unsupervised machine learning using convolutional autoencoder. Mon. Not. R. Astron. Soc. 2020, 494, 3750–3765.

29. Rezaei, S.; Chegeni, A.; Nagam, B.C.; McKean, J.P.; Baratchi, M.; Kuijken, K.; Koopmans, L.V.E. Reducing false positives in strong lens detection through effective augmentation and ensemble learning. Mon. Not. R. Astron. Soc. 2025, staf327.

30. Chegeni, A.; Hassani, F.; Sadr, A.V.; Khosravi, N.; Kunz, M. Clusternets: A deep learning approach to probe clustering dark energy. arXiv 2023, arXiv:2308.03517.

31. Escamilla-Rivera, C.; Quintero, M.A.C.; Capozziello, S. A deep learning approach to cosmological dark energy models. J. Cosmol. Astropart. Phys. 2020, 2020, 008.

32. Goh, L.; Ocampo, I.; Nesseris, S.; Pettorino, V. Distinguishing coupled dark energy models with neural networks. Astron. Astrophys. 2024, 692, A101.

33. Khosa, C.K.; Mars, L.; Richards, J.; Sanz, V. Convolutional neural networks for direct detection of dark matter. J. Phys. G Nucl. Part. Phys. 2020, 47, 095201.

34. Lucie-Smith, L.; Peiris, H.V.; Pontzen, A. An interpretable machine-learning framework for dark matter halo formation. Mon. Not. R. Astron. Soc. 2019, 490, 331–342.

35. Herrero-Garcia, J.; Patrick, R.; Scaffidi, A. A semi-supervised approach to dark matter searches in direct detection data with machine learning. J. Cosmol. Astropart. Phys. 2022, 2022, 039.

36. Sadr, A.V.; Farsian, F. Filling in Cosmic Microwave Background map missing regions via Generative Adversarial Networks. J. Cosmol. Astropart. Phys. 2021, 2021, 012.

37. Ni, S.; Li, Y.; Zhang, X. CMB delensing with deep learning. arXiv 2023, arXiv:2310.07358.

38. Mishra, A.; Reddy, P.; Nigam, R. Cmb-gan: Fast simulations of cosmic microwave background anisotropy maps using deep learning. arXiv 2019, arXiv:1908.04682.

39. Razzano, M.; Cuoco, E. Image-based deep learning for classification of noise transients in gravitational wave detectors. Class. Quantum Gravity 2018, 35, 095016.

40. Vajente, G.; Huang, Y.; Isi, M.; Driggers, J.C.; Kissel, J.S.; Szczepańczyk, M.; Vitale, S. Machine-learning nonstationary noise out of gravitational-wave detectors. Phys. Rev. D 2020, 101, 042003.

41. Rezaei, S.; Chegeni, A.; Javadpour, A.; VafaeiSadr, A.; Cao, L.; Röttgering, H.; Staring, M. Bridging gaps with computer vision: AI in (bio)medical imaging and astronomy. Astron. Comput. 2025, 51, 100921.

42. Baron, D. Machine learning in astronomy: A practical overview. arXiv 2019, arXiv:1904.07248.

43. Ntampaka, M.; Avestruz, C.; Boada, S.; Caldeira, J.; Cisewski-Kehe, J.; Di Stefano, R.; Dvorkin, C.; Evrard, A.E.; Farahi, A.; Finkbeiner, D.; et al. The role of machine learning in the next decade of cosmology. arXiv 2019, arXiv:1902.10159.

44. Croom, S.M.; Lawrence, J.S.; Bland-Hawthorn, J.; Bryant, J.J.; Fogarty, L.; Richards, S.; Goodwin, M.; Farrell, T.; Miziarski, S.; Heald, R.; et al. The Sydney-AAO multi-object integral field spectrograph. Mon. Not. R. Astron. Soc. 2012, 421, 872–893.

45. Bryant, J.J.; Owers, M.S.; Robotham, A.; Croom, S.M.; Driver, S.P.; Drinkwater, M.J.; Lorente, N.P.; Cortese, L.; Scott, N.; Colless, M.; et al. The SAMI Galaxy Survey: Instrument specification and target selection. Mon. Not. R. Astron. Soc. 2015, 447, 2857–2879.

46. Sharp, R.; Saunders, W.; Smith, G.; Churilov, V.; Correll, D.; Dawson, J.; Farrel, T.; Frost, G.; Haynes, R.; Heald, R.; et al. Performance of AAOmega: The AAT multi-purpose fiber-fed spectrograph. In Proceedings of the Ground-Based and Airborne Instrumentation for Astronomy, Orlando, FL, USA, 24–31 May 2006; Volume 6269, pp. 152–164.

47. Sharp, R.; Allen, J.T.; Fogarty, L.M.; Croom, S.M.; Cortese, L.; Green, A.W.; Nielsen, J.; Richards, S.; Scott, N.; Taylor, E.N.; et al. The SAMI Galaxy Survey: Cubism and covariance, putting round pegs into square holes. Mon. Not. R. Astron. Soc. 2015, 446, 1551–1566.

48. Bland-Hawthorn, J.; Bryant, J.; Robertson, G.; Gillingham, P.; O’Byrne, J.; Cecil, G.; Haynes, R.; Croom, S.; Ellis, S.; Maack, M.; et al. Hexabundles: Imaging fiber arrays for low-light astronomical applications. Opt. Express 2011, 19, 2649–2661.

49. Allen, J.T.; Croom, S.M.; Konstantopoulos, I.S.; Bryant, J.J.; Sharp, R.; Cecil, G.; Fogarty, L.M.; Foster, C.; Green, A.W.; Ho, I.T.; et al. The SAMI Galaxy Survey: Early data release. Mon. Not. R. Astron. Soc. 2015, 446, 1567–1583.

50. Green, A.W.; Croom, S.M.; Scott, N.; Cortese, L.; Medling, A.M.; D’eugenio, F.; Bryant, J.J.; Bland-Hawthorn, J.; Allen, J.T.; Sharp, R.; et al. The SAMI Galaxy Survey: Data Release One with emission-line physics value-added products. Mon. Not. R. Astron. Soc. 2018, 475, 716–734.

51. Scott, N.; van de Sande, J.; Croom, S.M.; Groves, B.; Owers, M.S.; Poetrodjojo, H.; D’Eugenio, F.; Medling, A.M.; Barat, D.; Barone, T.M.; et al. The SAMI Galaxy Survey: Data Release Two with absorption-line physics value-added products. Mon. Not. R. Astron. Soc. 2018, 481, 2299–2319.

52. Van De Sande, J.; Bland-Hawthorn, J.; Fogarty, L.M.; Cortese, L.; d’Eugenio, F.; Croom, S.M.; Scott, N.; Allen, J.T.; Brough, S.; Bryant, J.J.; et al. The SAMI Galaxy Survey: Revisiting galaxy classification through high-order stellar kinematics. Astrophys. J. 2017, 835, 104.

53. Emsellem, E.; Cappellari, M.; Krajnović, D.; Alatalo, K.; Blitz, L.; Bois, M.; Bournaud, F.; Bureau, M.; Davies, R.L.; Davis, T.A.; et al. The ATLAS3D project—III. A census of the stellar angular momentum within the effective radius of early-type galaxies: Unveiling the distribution of fast and slow rotators. Mon. Not. R. Astron. Soc. 2011, 414, 888–912.

54. Zhao, Z.Q.; Zheng, P.; Xu, S.t.; Wu, X. Object detection with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

55. Wersing, H.; Körner, E. Learning optimized features for hierarchical models of invariant object recognition. Neural Comput. 2003, 15, 1559–1588.

56. O’Malley, T.; Bursztein, E.; Long, J.; Chollet, F.; Jin, H.; Invernizzi, L.; et al. KerasTuner. 2019. Available online: https://github.com/keras-team/keras-tuner (accessed on 29 January 2025).

57. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

58. Mao, A.; Mohri, M.; Zhong, Y. Cross-entropy loss functions: Theoretical analysis and applications. In Proceedings of the International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; pp. 23803–23828.

59. Liang, J. Confusion matrix: Machine learning. Pogil Act. Clgh. 2022, 3, 4. Available online: https://pac.pogil.org/index.php/pac/article/view/304 (accessed on 12 December 2022).

60. Hanley, J.A. Receiver operating characteristic (ROC) methodology: The state of the art. Crit. Rev. Diagn. Imaging 1989, 29, 307–335.

61. Harborne, K.E.; Power, C.; Robotham, A.S.G.; Cortese, L.; Taranu, D.S. A numerical twist on the spin parameter, λR. Mon. Not. R. Astron. Soc. 2019, 483, 249–262.

62. Moran, P.A. The interpretation of statistical maps. J. R. Stat. Soc. Ser. B (Methodol.) 1948, 10, 243–251.

63. Chen, Y. New approaches for calculating Moran’s index of spatial autocorrelation. PLoS ONE 2013, 8, e68336.

64. Sundararajan, M.; Taly, A.; Yan, Q. Axiomatic attribution for deep networks. In Proceedings of the International Conference on Machine Learning, PMLR, Sydney, Australia, 6–11 August 2017; pp. 3319–3328.

65. Wilde, J.; Serjeant, S.; Bromley, J.M.; Dickinson, H.; Koopmans, L.V.; Metcalf, R.B. Detecting gravitational lenses using machine learning: Exploring interpretability and sensitivity to rare lensing configurations. Mon. Not. R. Astron. Soc. 2022, 512, 3464–3479.

66. Matilla, J.M.Z.; Sharma, M.; Hsu, D.; Haiman, Z. Interpreting deep learning models for weak lensing. Phys. Rev. D 2020, 102, 123506.

67. Cordonnier, J.B.; Loukas, A.; Jaggi, M. On the relationship between self-attention and convolutional layers. arXiv 2019, arXiv:1911.03584.

68. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

69. Suthaharan, S. Support vector machine. In Machine Learning Models and Algorithms for Big Data Classification: Thinking with Examples for Effective Learning; Springer: Boston, MA, USA, 2016; pp. 207–235.

70. Webb, G.I.; Keogh, E.; Miikkulainen, R. Naïve Bayes. Encycl. Mach. Learn. 2010, 15, 713–714.

| Layer Type | Output Shape | Parameters |
|---|---|---|
| Conv2D | (None, 38, 38, 64) | 640 |
| MaxPooling2D | (None, 19, 19, 64) | 0 |
| BatchNormalization | (None, 19, 19, 64) | 256 |
| Conv2D | (None, 17, 17, 128) | 73,856 |
| MaxPooling2D | (None, 8, 8, 128) | 0 |
| BatchNormalization | (None, 8, 8, 128) | 512 |
| Conv2D | (None, 6, 6, 256) | 295,168 |
| MaxPooling2D | (None, 3, 3, 256) | 0 |
| BatchNormalization | (None, 3, 3, 256) | 1024 |
| Flatten | (None, 2304) | 0 |
| Dense | (None, 96) | 221,280 |
| Dropout | (None, 96) | 0 |
| Dense | (None, 32) | 3104 |
| Dropout | (None, 32) | 0 |
| Dense | (None, 1) | 33 |
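The output shapes and parameter counts in the table can be cross-checked with a short calculation. The sketch below assumes a 40 × 40 single-channel input, 3 × 3 convolution kernels without padding, and 2 × 2 max pooling. These settings are inferred from the listed shapes and counts rather than stated in the table, and the function names are hypothetical. Dropout layers are omitted because they change neither the shape nor the parameter count.

```python
def conv2d_params(kernel, in_channels, filters):
    # One kernel x kernel x in_channels filter plus one bias per output channel.
    return kernel * kernel * in_channels * filters + filters


def batchnorm_params(channels):
    # Scale, shift, moving mean, and moving variance per channel.
    return 4 * channels


def dense_params(inputs, units):
    # Weight matrix plus one bias per unit.
    return inputs * units + units


def trace_network(input_size=40, in_channels=1, kernel=3,
                  filters=(64, 128, 256), dense_units=(96, 32, 1)):
    """Return (layer, output shape, parameter count) rows for the assumed layout."""
    rows = []
    size, channels = input_size, in_channels
    for f in filters:
        size = size - kernel + 1          # unpadded ("valid") convolution
        rows.append(("Conv2D", (size, size, f), conv2d_params(kernel, channels, f)))
        size //= 2                        # 2 x 2 max pooling, stride 2
        rows.append(("MaxPooling2D", (size, size, f), 0))
        rows.append(("BatchNormalization", (size, size, f), batchnorm_params(f)))
        channels = f
    width = size * size * channels
    rows.append(("Flatten", (width,), 0))
    for units in dense_units:
        rows.append(("Dense", (units,), dense_params(width, units)))
        width = units
    return rows


layers = trace_network()
for name, shape, params in layers:
    print(f"{name:20s} {str(shape):16s} {params:>8,d}")
total_params = sum(params for _, _, params in layers)
print("total parameters:", total_params)
```

Under these assumptions every row reproduces the corresponding entry in the table, which suggests that the listed architecture is internally consistent.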

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chegeni, A.; Hesar, F.F.; Raouf, M.; Foing, B.; Verbeek, F.J. Assessing Galaxy Rotation Kinematics: Insights from Convolutional Neural Networks on Velocity Variations. Universe 2025, 11, 92. https://doi.org/10.3390/universe11030092
