Artificial Intelligence Revolutionizing Time-Domain Astronomy

Abstract

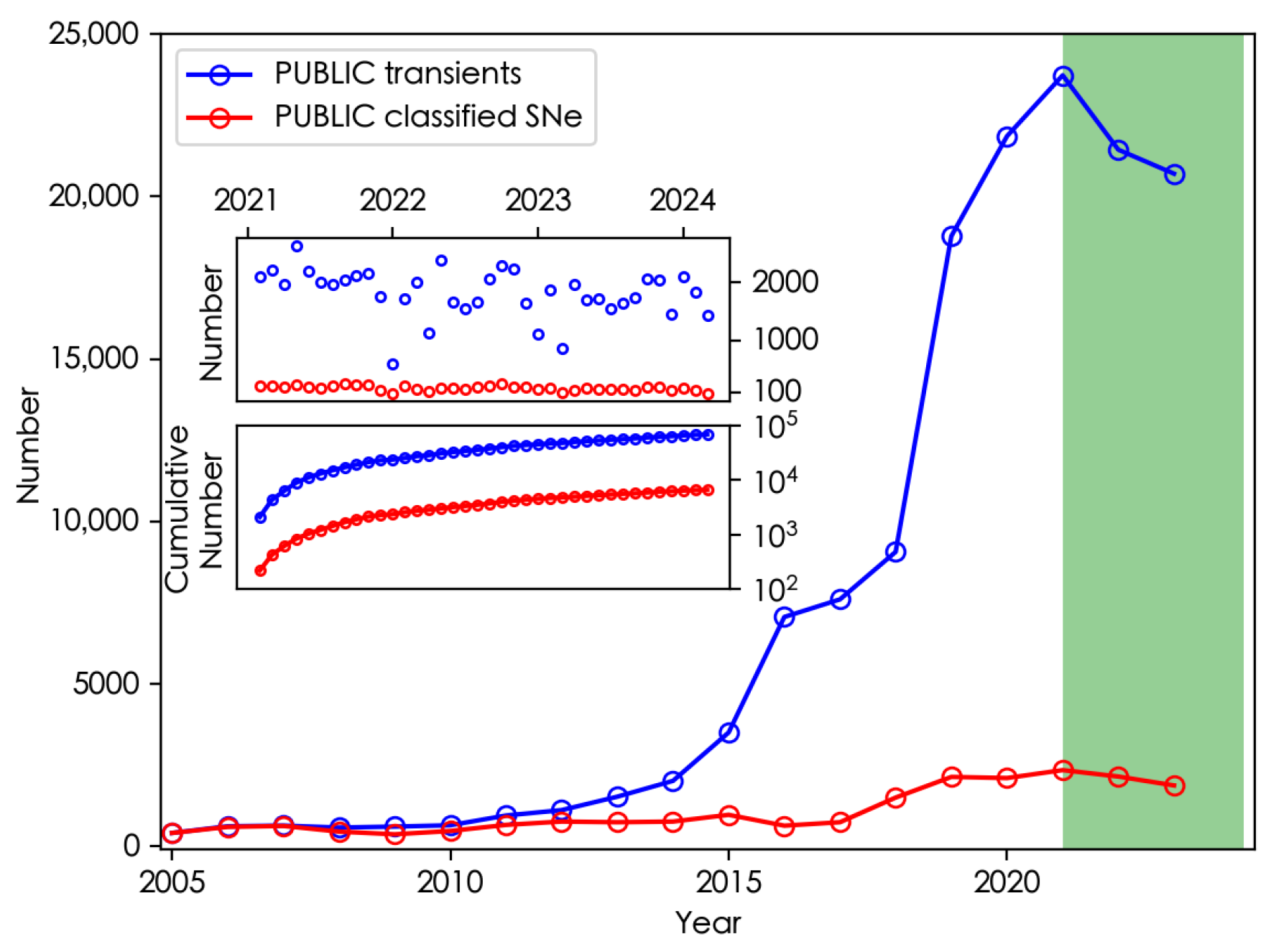

1. Introduction

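- Exoplanet Discovery: AI algorithms analyze stellar light curves, such as those from the Kepler and TESS missions, to identify patterns indicative of exoplanets orbiting distant stars, for example the periodic dips in brightness caused by transits. This has contributed to the discovery of numerous exoplanets, expanding our understanding of planetary systems beyond our own.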

- Galaxy Morphology Classification: AI techniques, such as deep learning, are used to classify the shapes and structures of galaxies in large-scale surveys. This helps astronomers study galaxy evolution and formation.

- Gravitational Wave Detection: AI algorithms analyze data from gravitational wave observatories like LIGO and Virgo to detect and characterize gravitational wave signals emitted by cataclysmic cosmic events such as black hole mergers.

- Transient Detection and Classification: AI is used to automatically detect and classify transient events such as supernovae, gamma-ray bursts, and fast radio bursts in astronomical surveys, enabling rapid follow-up observations.

- Data Analysis and Interpretation: AI techniques are employed to process the large datasets produced by telescopes and satellites, extracting information about the properties and behavior of celestial objects at a scale that would be impractical for manual inspection.

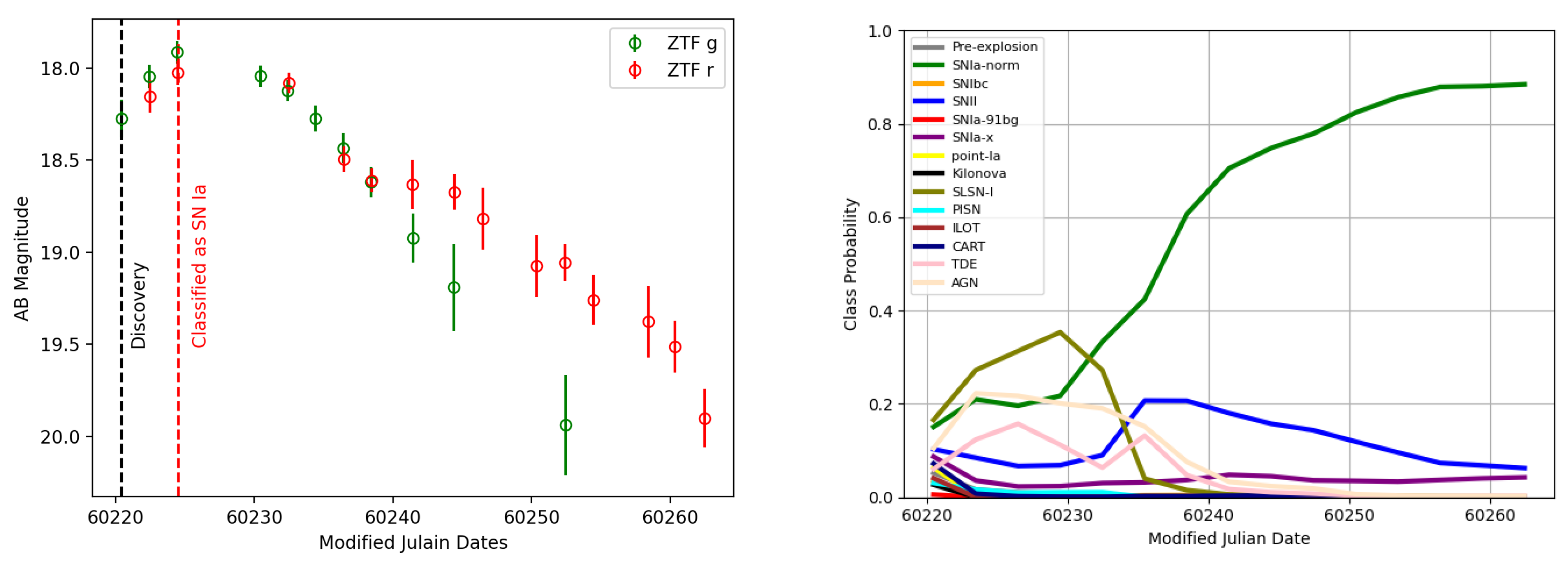

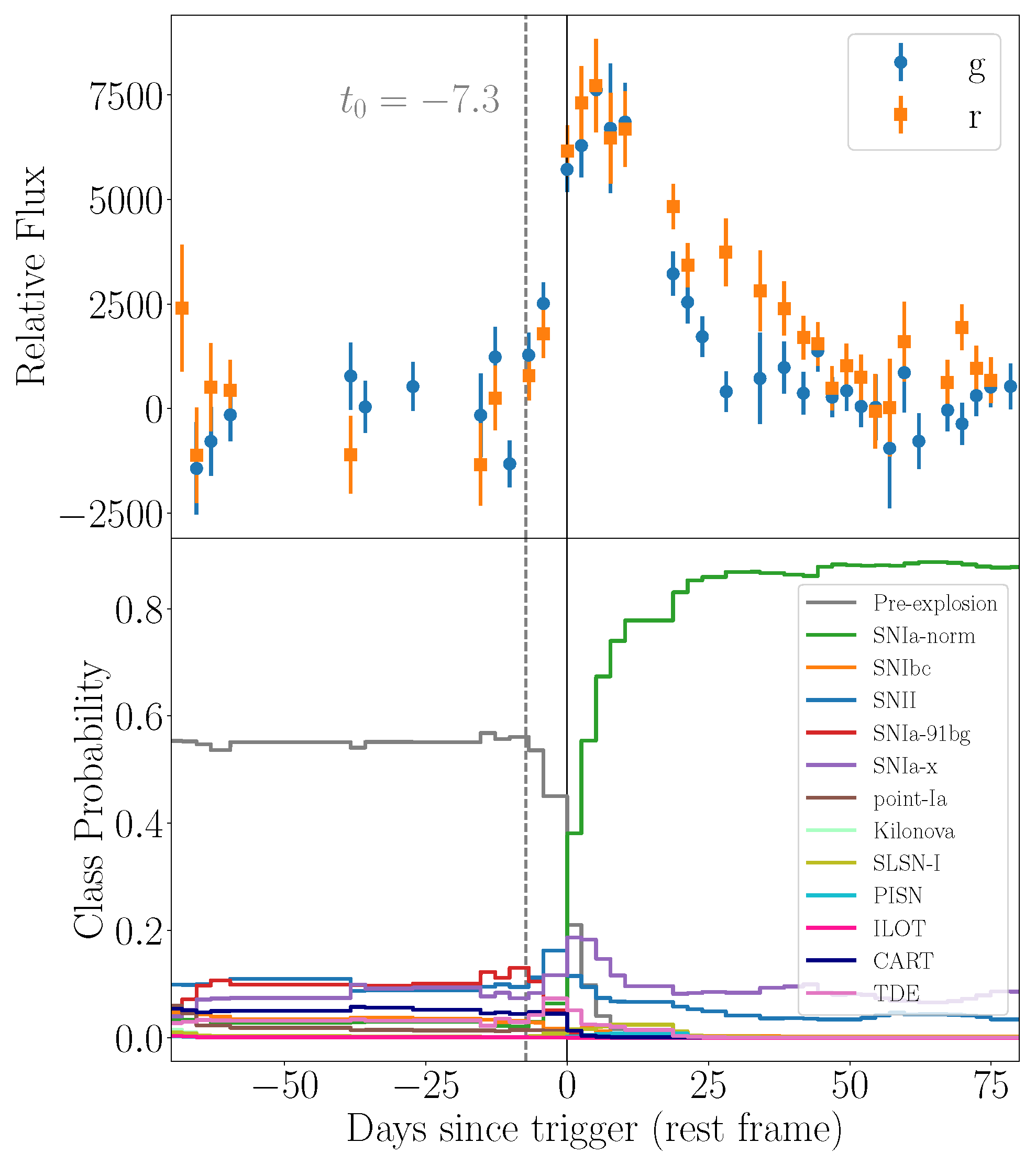

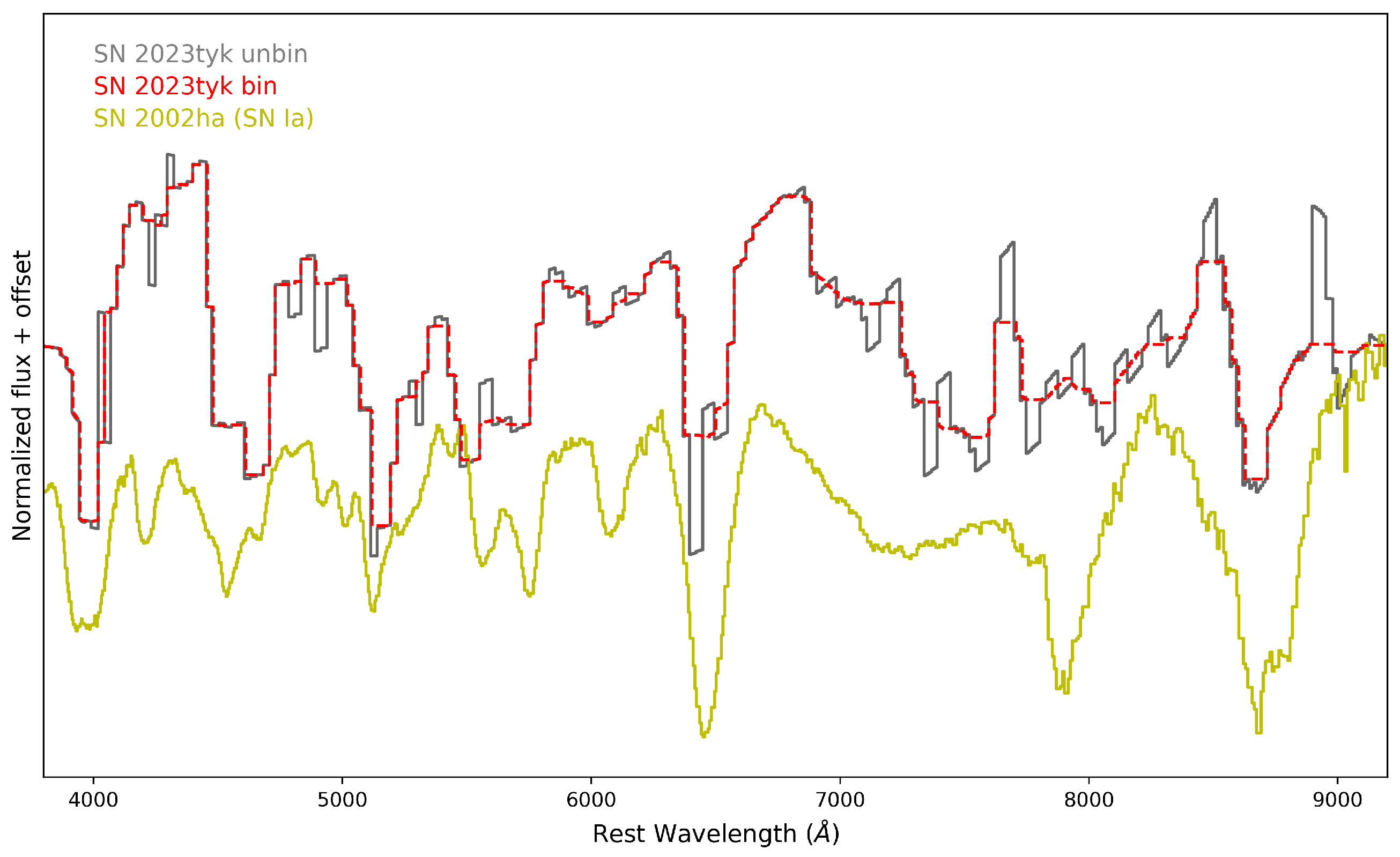

2. SN 2023tyk as a Case Illustrating the Application of AI in Time-Domain Astronomy

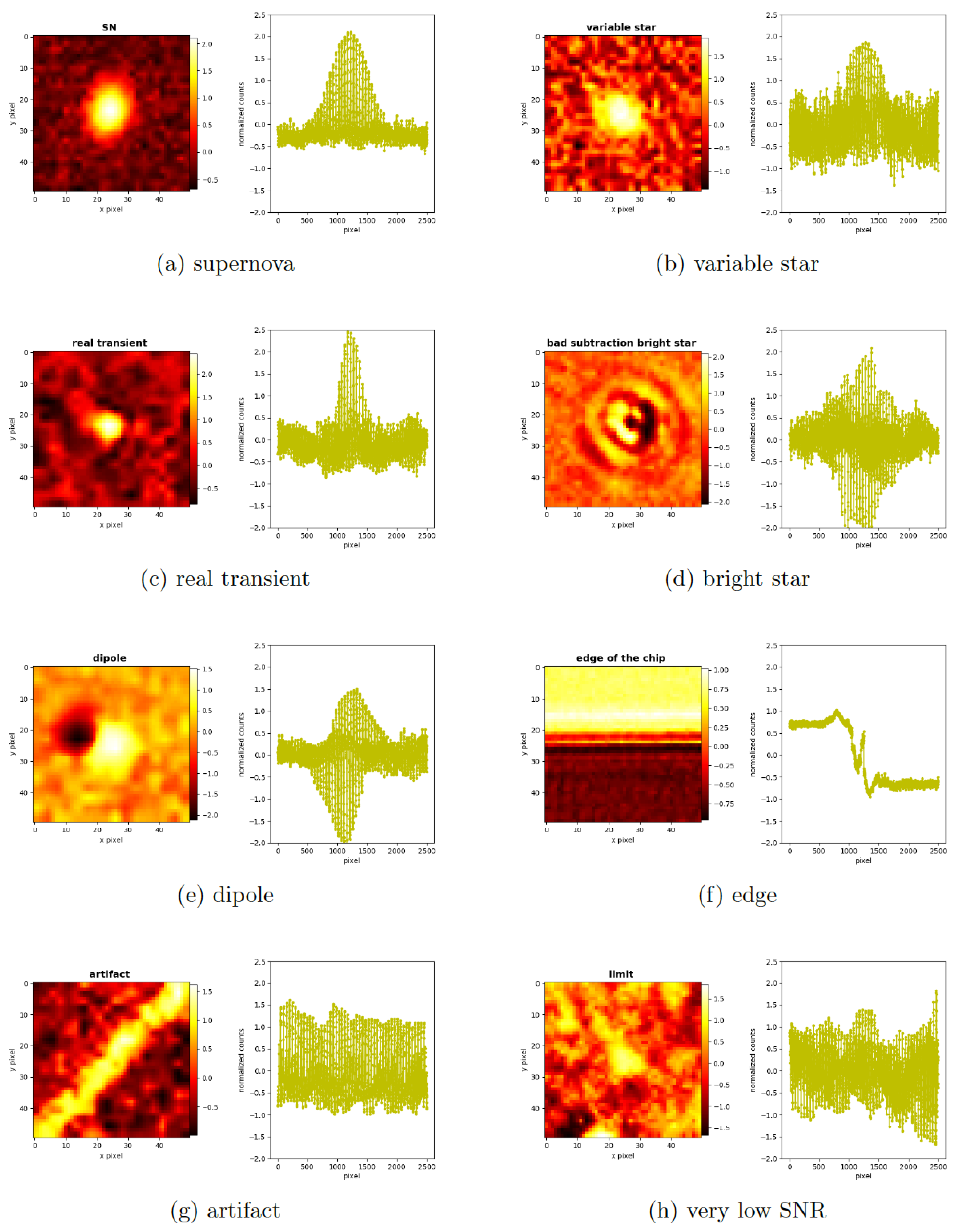

2.1. Real–Bogus Classification

2.2. Multi-Band Photometric Lightcurves

2.3. Spectral Energy Distributions

3. Machine Learning Algorithms and Criteria

3.1. Machine Learning Concepts and Evaluation Standards

- Model: A model is a computational representation, typically an algorithm or mathematical function with adjustable parameters, that processes and analyzes data to make decisions or predictions. It can be seen as a decision center that learns patterns and rules from data to perform tasks such as prediction or classification. In time-domain astronomy, models are applied to tabular data, time series data (e.g., light curves), and image data to explore and understand astronomical phenomena.

- Dataset: A dataset is a collection of data used to train and test models. In time-domain astronomy, datasets can include astronomical images, light curves, and observational flux distributions. Typically, a dataset is divided into a training set and a test set. The training set is used to train the model, providing a large number of samples from which it learns the features and patterns of the data. The test set is withheld during training and used to evaluate the model’s performance and generalization ability on unseen data, verifying whether the model has learned patterns that carry over to new data rather than memorizing the training samples.

- Features and Labels: Features are attributes or characteristics used to describe data, such as color, shape, and size. In time-domain astronomy, features are typically numerical quantities extracted from observational data, such as the peak magnitude or rise time of a light curve. Labels are the categories or target values assigned to the data, such as the spectroscopic class of a transient. By learning the associations between features and labels, models can classify or identify new observational data.

- Supervised Learning [103]: In this learning mode, models are trained on a set of input–output pairs, where the input consists of features and the output is the target variable (the label). The goal of supervised learning is to learn the mapping from features to the target variable. Common algorithms include Decision Trees, Support Vector Machines (SVMs), and Random Forests (RFs). Typical applications include classification (e.g., classifying sources as stars, galaxies, or quasars [104]) and regression (e.g., estimating redshift from photometric measurements [105]).

- Unsupervised Learning [106]: Unsupervised learning is used to discover the intrinsic structure of unlabeled data. It does not rely on labels provided by humans but instead reveals patterns and relationships in the data through techniques such as clustering, dimensionality reduction, and anomaly detection. Unsupervised learning is particularly important in scientific research because it can extract new knowledge from existing datasets and drive new discoveries. Common unsupervised learning methods include clustering algorithms (e.g., K-means, HDBSCAN, DBSCAN) [107,108,109], dimensionality reduction techniques (e.g., PCA, t-SNE, UMAP) [110,111,112], and anomaly detection algorithms [113,114,115,116].

- Reinforcement learning (RL) [117]: RL centers on an agent that interacts with an environment, balancing exploration of untried actions against exploitation of actions already known to work, and optimizes its decision-making through trial and error guided by the rewards it receives. The goal is to learn how to take effective actions through this interaction. Unlike supervised and unsupervised learning, which learn from a fixed dataset, RL turns learning directly into a policy for choosing actions. In astronomy, RL has been applied to telescope control [118,119,120,121,122] and to hyperparameter tuning in radio astronomical data processing pipelines [123,124].

- ROC (Receiver Operating Characteristic) Curve: The ROC curve displays the performance of a binary classifier as its decision threshold varies, with the horizontal axis showing the false positive rate (FPR) and the vertical axis showing the true positive rate (TPR, equivalent to recall). The closer the curve lies to the top-left corner (point (0,1)), the better the classification performance. The AUC, the area under the ROC curve, ranges from 0 to 1: a value of 0.5 corresponds to random guessing, and the closer the AUC is to 1, the better the model separates the two classes.

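- F1 Score: The F1 score is the harmonic mean of precision (P) and recall (R), calculated as follows:

  $$F_1 = \frac{2PR}{P + R},$$

  where $P = \mathrm{TP}/(\mathrm{TP} + \mathrm{FP})$ and $R = \mathrm{TP}/(\mathrm{TP} + \mathrm{FN})$, with TP, FP, and FN the numbers of true positives, false positives, and false negatives. F1 is the $\beta = 1$ case of the general $F_\beta$ score, $F_\beta = (1 + \beta^2)PR/(\beta^2 P + R)$, in which $\beta > 1$ favors recall and $\beta < 1$ favors precision.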

- F2 Score: The F2 score weights recall more heavily than precision and is calculated as follows:

  $$F_2 = \frac{(1 + 2^2)PR}{2^2 P + R} = \frac{5PR}{4P + R}$$

- F1/2 Score: The F1/2 score emphasizes precision while still accounting for recall and is calculated as follows:

  $$F_{1/2} = \frac{(1 + 1/4)PR}{P/4 + R} = \frac{5PR}{P + 4R}$$

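- F1/3 Score: The F1/3 score ($\beta = 1/3$) places even greater emphasis on precision than F1/2 and is calculated as follows:

  $$F_{1/3} = \frac{(1 + 1/9)PR}{P/9 + R} = \frac{10PR}{P + 9R}$$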

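- Accuracy: Accuracy measures the proportion of correct predictions (both true positives and true negatives) out of the total predictions made. It is calculated as follows:

  $$\mathrm{Accuracy} = \frac{\mathrm{TP} + \mathrm{TN}}{\mathrm{TP} + \mathrm{TN} + \mathrm{FP} + \mathrm{FN}},$$

  where TP, TN, FP, and FN are the numbers of true positives, true negatives, false positives, and false negatives.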
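The dataset split and the supervised feature-to-label mapping described in the list above can be illustrated with a minimal, standard-library Python sketch. It is not a method from the works cited here: the nearest-centroid classifier, the helper names (`split_train_test`, `fit_centroids`, `predict`, `make_synthetic_dataset`), and the synthetic two-feature data with hypothetical classes "A" and "B" are all assumptions chosen for brevity. Real applications would more likely use one of the algorithms named in the text, such as Random Forests or SVMs.

```python
import random


def split_train_test(samples, test_fraction=0.25, seed=0):
    """Shuffle (features, label) pairs and split them into training and test sets."""
    rng = random.Random(seed)
    shuffled = list(samples)
    rng.shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def fit_centroids(train):
    """Supervised 'training': average the feature vectors of each labeled class."""
    sums, counts = {}, {}
    for features, label in train:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, x in enumerate(features):
            acc[i] += x
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def predict(centroids, features):
    """Return the label whose class centroid is nearest (squared Euclidean distance)."""
    return min(
        centroids,
        key=lambda label: sum((x - c) ** 2 for x, c in zip(features, centroids[label])),
    )


def make_synthetic_dataset(n_per_class=20, seed=42):
    """Hypothetical two-class data: Gaussian blobs around (1, 1) and (4, 4)."""
    rng = random.Random(seed)
    data = []
    for label, (mx, my) in (("A", (1.0, 1.0)), ("B", (4.0, 4.0))):
        for _ in range(n_per_class):
            data.append(((rng.gauss(mx, 0.5), rng.gauss(my, 0.5)), label))
    return data


if __name__ == "__main__":
    train, test = split_train_test(make_synthetic_dataset())
    centroids = fit_centroids(train)
    # Evaluate only on held-out samples the model never saw during training.
    correct = sum(predict(centroids, f) == label for f, label in test)
    print(f"train={len(train)} test={len(test)} accuracy={correct / len(test):.2f}")
```

Accuracy is computed only on the held-out test set, which is what makes it an estimate of generalization rather than of memorization.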
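Clustering, one of the unsupervised techniques listed above, can be sketched with K-means (Lloyd's algorithm) in plain Python. This is an illustrative sketch under stated assumptions: the function names are hypothetical, the points are toy coordinates with no physical meaning, and the initial centroids are chosen with a simple farthest-point heuristic rather than the randomized k-means++ seeding that libraries such as scikit-learn use by default. No labels enter the algorithm; the grouping emerges from the coordinates alone.

```python
import random


def squared_distance(p, q):
    """Squared Euclidean distance between two equal-length points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points, k, max_iter=100, seed=0):
    """Lloyd's algorithm: alternate nearest-centroid assignment and centroid update.

    Returns (centroids, labels), where labels[i] is the cluster index of points[i].
    """
    rng = random.Random(seed)
    centroids = [list(rng.choice(points))]
    while len(centroids) < k:
        # Farthest-point seeding: next centroid is the point farthest from all chosen ones.
        far = max(points, key=lambda p: min(squared_distance(p, c) for c in centroids))
        centroids.append(list(far))
    labels = None
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster of its nearest centroid.
        new_labels = [
            min(range(k), key=lambda j: squared_distance(p, centroids[j])) for p in points
        ]
        if new_labels == labels:  # converged: assignments no longer change
            break
        labels = new_labels
        # Update step: move each centroid to the mean of its member points.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the previous centroid if a cluster becomes empty
                centroids[j] = [sum(coord) / len(members) for coord in zip(*members)]
    return centroids, labels


if __name__ == "__main__":
    pts = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (11, 11)]
    centers, labels = kmeans(pts, k=2)
    print(sorted(centers), labels)
```

Lloyd's algorithm only finds a local optimum, so in practice it is usually restarted from several initializations; the farthest-point seeding here is one cheap way to avoid placing two initial centroids in the same group.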
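The exploration-exploitation trade-off at the heart of RL can be illustrated with its simplest setting, a multi-armed bandit, in which the agent repeatedly chooses one of several actions and learns only from the rewards it receives. The sketch below is a hypothetical toy, not a model of the telescope-control or pipeline-tuning applications cited above: the three "arms" and their success probabilities are invented, and an ε-greedy rule stands in for the more elaborate policies used in practice.

```python
import random


def epsilon_greedy_bandit(reward_probs, n_steps=5000, epsilon=0.1, seed=0):
    """Run an epsilon-greedy agent on a Bernoulli multi-armed bandit.

    reward_probs: hypothetical probability that each arm (action) yields reward 1.
    Returns (estimated value per arm, pull count per arm, mean reward per step).
    """
    rng = random.Random(seed)
    k = len(reward_probs)
    counts = [0] * k
    values = [0.0] * k  # running mean of observed rewards for each arm
    total_reward = 0.0
    for _ in range(n_steps):
        if rng.random() < epsilon:
            arm = rng.randrange(k)  # explore: try a random action
        else:
            arm = max(range(k), key=lambda a: values[a])  # exploit: best estimate so far
        reward = 1.0 if rng.random() < reward_probs[arm] else 0.0  # environment response
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]  # incremental mean update
        total_reward += reward
    return values, counts, total_reward / n_steps


if __name__ == "__main__":
    values, counts, mean_reward = epsilon_greedy_bandit([0.2, 0.5, 0.8])
    print("estimated values:", [round(v, 2) for v in values])
    print("pulls per arm:", counts, "mean reward:", round(mean_reward, 3))
```

With ε = 0.1, about one step in ten is spent exploring at random; the remaining steps exploit the current best estimate, so the agent settles on the arm with the highest success probability while still occasionally re-testing the others.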
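The ROC curve and AUC defined above can be computed directly from a classifier's scores by sweeping the decision threshold. The following stdlib-only sketch assumes binary labels (1 = positive) with at least one positive and one negative example; the function names and the six-sample example are hypothetical. Tied scores are processed together so that they form a single point on the curve.

```python
def roc_curve(labels, scores):
    """Return (FPR, TPR) points for every distinct threshold, from strictest to loosest.

    Assumes binary labels (1 = positive, 0 = negative) with both classes present.
    """
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    pairs = sorted(zip(scores, labels), key=lambda sl: sl[0], reverse=True)
    points = [(0.0, 0.0)]  # threshold above every score: nothing classified positive
    tp = fp = 0
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Lower the threshold to this score, admitting all samples tied at it.
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    return points


def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))


if __name__ == "__main__":
    labels = [1, 1, 0, 1, 0, 0]
    scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4]
    curve = roc_curve(labels, scores)
    print(curve)
    print("AUC =", auc(curve))
```

For the example scores, 8 of the 9 positive-negative pairs are ranked correctly and the trapezoidal AUC equals 8/9, consistent with reading the AUC as the probability that a randomly chosen positive is scored above a randomly chosen negative.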
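The precision, recall, Fβ, and accuracy formulas above can be checked with a short stdlib-only sketch that builds them from confusion-matrix counts. The function names and the ten-sample example are hypothetical; the `f_beta` helper implements the general form (1 + β²)PR/(β²P + R), of which F1, F2, F1/2, and F1/3 are special cases.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, FP, FN, TN for binary labels (1 = positive, 0 = negative)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp, fp, fn, tn


def f_beta(precision, recall, beta):
    """F_beta = (1 + beta^2) P R / (beta^2 P + R); beta > 1 favors recall, beta < 1 precision."""
    b2 = beta ** 2
    denom = b2 * precision + recall
    return (1 + b2) * precision * recall / denom if denom > 0 else 0.0


def classification_metrics(y_true, y_pred):
    """Precision, recall, the F-scores used in the text, and accuracy."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {
        "precision": precision,
        "recall": recall,
        "F1": f_beta(precision, recall, 1.0),
        "F2": f_beta(precision, recall, 2.0),
        "F1/2": f_beta(precision, recall, 0.5),
        "F1/3": f_beta(precision, recall, 1 / 3),
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
    }


if __name__ == "__main__":
    y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
    y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
    for name, value in classification_metrics(y_true, y_pred).items():
        print(f"{name}: {value:.3f}")
```

In this example TP = 2, FP = 1, FN = 2, and TN = 5, so P = 2/3 and R = 1/2. Because precision exceeds recall, the precision-weighted scores (F1/2 = 5/8, F1/3 = 20/31) are higher than F1 = 4/7, while the recall-weighted F2 = 10/19 is lower; accuracy is 7/10.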

3.2. Photometric Classification for Optical Transient Studies

3.3. Beyond the Optical: Classification of Transients Across the Spectrum

4. Future Directions and Challenges

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| 1 | https://chatgpt.com/. Note that all webpage links throughout this paper were accessed on 25 October 2025. |

| 9 | Check the detailed description and full list of LSST brokers at https://rubinobservatory.org/for-scientists/data-products/alerts-and-brokers. |

References

- OpenAI; Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Leoni Aleman, F.; Almeida, D.; Altenschmidt, J.; Altman, S.; et al. GPT-4 Technical Report. arXiv 2023, arXiv:2303.08774.

- Alsabti, A.W.; Murdin, P. Handbook of Supernovae; Springer: Cham, Switzerland, 2017.

- Bambi, C.; Santangelo, A. (Eds.) Handbook of X-Ray and Gamma-Ray Astrophysics; Springer: Singapore, 2024.

- Warner, B. Cataclysmic Variable Stars; Cambridge University Press: Cambridge, UK, 1995.

- Casares, J.; Jonker, P.G.; Israelian, G. X-Ray Binaries. In Handbook of Supernovae; Alsabti, A.W., Murdin, P., Eds.; Springer: Cham, Switzerland, 2017.

- Beckmann, V.; Shrader, C.R. Active Galactic Nuclei; Wiley-VCH: Weinheim, Germany, 2012.

- Luppino, G.A.; Tonry, J.L.; Stubbs, C.W. CCD mosaics–past, present, and future: A review. Opt. Astron. Instrum. 1998, 3355, 469–476.

- Luppino, G.A.; Tonry, J.; Kaiser, N. The current state of the art in large CCD mosaic cameras, and a new strategy for wide field, high resolution optical imaging. In Optical Detectors for Astronomy II. Astrophysics and Space Science Library; Amico, P., Beletic, J.W., Eds.; Springer: Dordrecht, The Netherlands, 2000; Volume 252, p. 119.

- Platais, I.; Kozhurina-Platais, V.; Girard, T.M.; van Altena, W.F.; Klemola, A.R.; Stauffer, J.R.; Armandroff, T.E.; Mighell, K.J.; Dell’Antonio, I.P.; Falco, E.E.; et al. WIYN Open Cluster Study. VIII. The Geometry and Stability of the NOAO CCD Mosaic Imager. Astron. J. 2002, 124, 601–611.

- Chambers, K.C.; Magnier, E.A.; Metcalfe, N.; Flewelling, H.A.; Huber, M.E.; Waters, C.Z.; Denneau, L.; Draper, P.W.; Farrow, D.; Finkbeiner, D.P.; et al. The Pan-STARRS1 Surveys. arXiv 2016, arXiv:1612.05560.

- Bellm, E.C.; Kulkarni, S.R.; Graham, M.J.; Dekany, R.; Smith, R.M.; Riddle, R.; Masci, F.J.; Helou, G.; Prince, T.A.; Adams, S.M.; et al. The Zwicky Transient Facility: System Overview, Performance, and First Results. Publ. Astron. Soc. Pac. 2018, 131, 018002.

- Dekany, R.; Smith, R.M.; Riddle, R.; Feeney, M.; Porter, M.; Hale, D.; Zolkower, J.; Belicki, J.; Kaye, S.; Henning, J.; et al. The Zwicky Transient Facility: Observing System. Publ. Astron. Soc. Pac. 2020, 132, 038001.

- Masci, F.J.; Laher, R.R.; Rusholme, B.; Shupe, D.L.; Groom, S.; Surace, J.; Jackson, E.; Monkewitz, S.; Beck, R.; Flynn, D.; et al. The Zwicky Transient Facility: Data Processing, Products, and Archive. Publ. Astron. Soc. Pac. 2019, 131, 018003.

- Graham, M.J.; Kulkarni, S.R.; Bellm, E.C.; Adams, S.M.; Barbarino, C.; Blagorodnova, N.; Bodewits, D.; Bolin, B.; Brady, P.R.; Cenko, S.B.; et al. The Zwicky Transient Facility: Science Objectives. Publ. Astron. Soc. Pac. 2019, 131, 078001.

- Li, L.X.; Paczyński, B. Transient Events from Neutron Star Mergers. Astrophys. J. Lett. 1998, 507, L59–L62.

- Metzger, B.D.; Fernández, R. Red or blue? A potential kilonova imprint of the delay until black hole formation following a neutron star merger. Mon. Not. R. Astron. Soc. 2014, 441, 3444–3453.

- Abbott, B.P.; Abbott, R.; Abbott, T.D.; Acernese, F.; Ackley, K.; Adams, C.; Adams, T.; Addesso, P.; Adhikari, R.X.; Adya, V.B.; et al. Multi-messenger Observations of a Binary Neutron Star Merger. Astrophys. J. Lett. 2017, 848, L12.

- Coulter, D.A.; Foley, R.J.; Kilpatrick, C.D.; Drout, M.R.; Piro, A.L.; Shappee, B.J.; Siebert, M.R.; Simon, J.D.; Ulloa, N.; Kasen, D.; et al. Swope Supernova Survey 2017a (SSS17a), the optical counterpart to a gravitational wave source. Science 2017, 358, 1556–1558.

- Valenti, S.; Sand, D.J.; Yang, S.; Cappellaro, E.; Tartaglia, L.; Corsi, A.; Jha, S.W.; Reichart, D.E.; Haislip, J.; Kouprianov, V. The Discovery of the Electromagnetic Counterpart of GW170817: Kilonova AT 2017gfo/DLT17ck. Astrophys. J. Lett. 2017, 848, L24.

- Tanvir, N.R.; Levan, A.J.; González-Fernández, C.; Korobkin, O.; Mandel, I.; Rosswog, S.; Hjorth, J.; D’Avanzo, P.; Fruchter, A.S.; Fryer, C.L.; et al. The Emergence of a Lanthanide-rich Kilonova Following the Merger of Two Neutron Stars. Astrophys. J. Lett. 2017, 848, L27.

- Lipunov, V.M.; Gorbovskoy, E.; Kornilov, V.G.; Tyurina, N.; Balanutsa, P.; Kuznetsov, A.; Vlasenko, D.; Kuvshinov, D.; Gorbunov, I.; Buckley, D.A.H.; et al. MASTER Optical Detection of the First LIGO/Virgo Neutron Star Binary Merger GW170817. Astrophys. J. Lett. 2017, 850, L1.

- Soares-Santos, M.; Holz, D.E.; Annis, J.; Chornock, R.; Herner, K.; Berger, E.; Brout, D.; Chen, H.Y.; Kessler, R.; Sako, M.; et al. The Electromagnetic Counterpart of the Binary Neutron Star Merger LIGO/Virgo GW170817. I. Discovery of the Optical Counterpart Using the Dark Energy Camera. Astrophys. J. Lett. 2017, 848, L16.

- Arcavi, I.; Hosseinzadeh, G.; Howell, D.A.; McCully, C.; Poznanski, D.; Kasen, D.; Barnes, J.; Zaltzman, M.; Vasylyev, S.; Maoz, D.; et al. Optical emission from a kilonova following a gravitational-wave-detected neutron-star merger. Nature 2017, 551, 64–66.

- Kasliwal, M.M.; Nakar, E.; Singer, L.P.; Kaplan, D.L.; Cook, D.O.; Van Sistine, A.; Lau, R.M.; Fremling, C.; Gottlieb, O.; Jencson, J.E.; et al. Illuminating gravitational waves: A concordant picture of photons from a neutron star merger. Science 2017, 358, 1559–1565.

- Yang, S.; Valenti, S.; Cappellaro, E.; Sand, D.J.; Tartaglia, L.; Corsi, A.; Reichart, D.E.; Haislip, J.; Kouprianov, V. An Empirical Limit on the Kilonova Rate from the DLT40 One Day Cadence Supernova Survey. Astrophys. J. Lett. 2017, 851, L48.

- Goldstein, A.; Veres, P.; Burns, E.; Briggs, M.S.; Hamburg, R.; Kocevski, D.; Wilson-Hodge, C.A.; Preece, R.D.; Poolakkil, S.; Roberts, O.J.; et al. An Ordinary Short Gamma-Ray Burst with Extraordinary Implications: Fermi-GBM Detection of GRB 170817A. Astrophys. J. Lett. 2017, 848, L14.

- Savchenko, V.; Ferrigno, C.; Kuulkers, E.; Bazzano, A.; Bozzo, E.; Brandt, S.; Chenevez, J.; Courvoisier, T.J.L.; Diehl, R.; Domingo, A.; et al. INTEGRAL Detection of the First Prompt Gamma-Ray Signal Coincident with the Gravitational-wave Event GW170817. Astrophys. J. Lett. 2017, 848, L15.

- Haggard, D.; Nynka, M.; Ruan, J.J.; Kalogera, V.; Cenko, S.B.; Evans, P.; Kennea, J.A. A Deep Chandra X-Ray Study of Neutron Star Coalescence GW170817. Astrophys. J. Lett. 2017, 848, L25.

- Troja, E.; Piro, L.; van Eerten, H.; Wollaeger, R.T.; Im, M.; Fox, O.D.; Butler, N.R.; Cenko, S.B.; Sakamoto, T.; Fryer, C.L.; et al. The X-ray counterpart to the gravitational-wave event GW170817. Nature 2017, 551, 71–74.

- Hallinan, G.; Corsi, A.; Mooley, K.P.; Hotokezaka, K.; Nakar, E.; Kasliwal, M.M.; Kaplan, D.L.; Frail, D.A.; Myers, S.T.; Murphy, T.; et al. A radio counterpart to a neutron star merger. Science 2017, 358, 1579–1583.

- Margutti, R.; Berger, E.; Fong, W.; Guidorzi, C.; Alexander, K.D.; Metzger, B.D.; Blanchard, P.K.; Cowperthwaite, P.S.; Chornock, R.; Eftekhari, T.; et al. The Electromagnetic Counterpart of the Binary Neutron Star Merger LIGO/Virgo GW170817. V. Rising X-Ray Emission from an Off-axis Jet. Astrophys. J. Lett. 2017, 848, L20.

- Yang, S.; Sand, D.J.; Valenti, S.; Cappellaro, E.; Tartaglia, L.; Wyatt, S.; Corsi, A.; Reichart, D.E.; Haislip, J.; Kouprianov, V.; et al. Optical Follow-up of Gravitational-wave Events during the Second Advanced LIGO/VIRGO Observing Run with the DLT40 Survey. Astrophys. J. 2019, 875, 59.

- Drout, M.R.; Chornock, R.; Soderberg, A.M.; Sanders, N.E.; McKinnon, R.; Rest, A.; Foley, R.J.; Milisavljevic, D.; Margutti, R.; Berger, E.; et al. Rapidly Evolving and Luminous Transients from Pan-STARRS1. Astrophys. J. 2014, 794, 23.

- Prentice, S.J.; Maguire, K.; Smartt, S.J.; Magee, M.R.; Schady, P.; Sim, S.; Chen, T.W.; Clark, P.; Colin, C.; Fulton, M.; et al. The Cow: Discovery of a Luminous, Hot, and Rapidly Evolving Transient. Astrophys. J. Lett. 2018, 865, L3.

- Ho, A.Y.Q.; Perley, D.A.; Gal-Yam, A.; Lunnan, R.; Sollerman, J.; Schulze, S.; Das, K.K.; Dobie, D.; Yao, Y.; Fremling, C.; et al. A Search for Extragalactic Fast Blue Optical Transients in ZTF and the Rate of AT2018cow-like Transients. Astrophys. J. 2023, 949, 120.

- Perley, D.A.; Mazzali, P.A.; Yan, L.; Cenko, S.B.; Gezari, S.; Taggart, K.; Blagorodnova, N.; Fremling, C.; Mockler, B.; Singh, A.; et al. The fast, luminous ultraviolet transient AT2018cow: Extreme supernova, or disruption of a star by an intermediate-mass black hole? Mon. Not. R. Astron. Soc. 2019, 484, 1031–1049.

- Margutti, R.; Metzger, B.D.; Chornock, R.; Vurm, I.; Roth, N.; Grefenstette, B.W.; Savchenko, V.; Cartier, R.; Steiner, J.F.; Terreran, G.; et al. An Embedded X-Ray Source Shines through the Aspherical AT 2018cow: Revealing the Inner Workings of the Most Luminous Fast-evolving Optical Transients. Astrophys. J. 2019, 872, 18.

- Thompson, T.A.; Burrows, A.; Pinto, P.A. Shock Breakout in Core-Collapse Supernovae and Its Neutrino Signature. Astrophys. J. 2003, 592, 434–456.

- Piro, A.L.; Chang, P.; Weinberg, N.N. Shock Breakout from Type Ia Supernova. Astrophys. J. 2010, 708, 598–604.

- Basri, G.; Borucki, W.J.; Koch, D. The Kepler Mission: A wide-field transit search for terrestrial planets. New Astron. Rev. 2005, 49, 478–485.

- Borucki, W.J. KEPLER Mission: Development and overview. Rep. Prog. Phys. 2016, 79, 036901.

- Borucki, W.J.; Koch, D.; Basri, G.; Batalha, N.; Brown, T.; Caldwell, D.; Caldwell, J.; Christensen-Dalsgaard, J.; Cochran, W.D.; DeVore, E.; et al. Kepler Planet-Detection Mission: Introduction and First Results. Science 2010, 327, 977.

- Ricker, G.R.; Winn, J.N.; Vanderspek, R.; Latham, D.W.; Bakos, G.Á.; Bean, J.L.; Berta-Thompson, Z.K.; Brown, T.M.; Buchhave, L.; Butler, N.R.; et al. Transiting Exoplanet Survey Satellite (TESS). J. Astron. Telesc. Instrum. Syst. 2015, 1, 014003.

- Stassun, K.G.; Oelkers, R.J.; Paegert, M.; Torres, G.; Pepper, J.; De Lee, N.; Collins, K.; Latham, D.W.; Muirhead, P.S.; Chittidi, J.; et al. The Revised TESS Input Catalog and Candidate Target List. Astron. J. 2019, 158, 138.

- Stassun, K.G.; Oelkers, R.J.; Pepper, J.; Paegert, M.; De Lee, N.; Torres, G.; Latham, D.W.; Charpinet, S.; Dressing, C.D.; Huber, D.; et al. The TESS Input Catalog and Candidate Target List. Astron. J. 2018, 156, 102.

- Kempton, E.M.R.; Bean, J.L.; Louie, D.R.; Deming, D.; Koll, D.D.B.; Mansfield, M.; Christiansen, J.L.; López-Morales, M.; Swain, M.R.; Zellem, R.T.; et al. A Framework for Prioritizing the TESS Planetary Candidates Most Amenable to Atmospheric Characterization. Publ. Astron. Soc. Pac. 2018, 130, 114401.

- Gaia Collaboration; Prusti, T.; de Bruijne, J.H.J.; Brown, A.G.A.; Vallenari, A.; Babusiaux, C.; Bailer-Jones, C.A.L.; Bastian, U.; Biermann, M.; Evans, D.W.; et al. The Gaia mission. Astron. Astrophys. 2016, 595, A1.

- Foreman-Mackey, D.; Hogg, D.W.; Lang, D.; Goodman, J. emcee: The MCMC Hammer. Publ. Astron. Soc. Pac. 2013, 125, 306.

- Smith, K.W.; Smartt, S.J.; Young, D.R.; Tonry, J.L.; Denneau, L.; Flewelling, H.; Heinze, A.N.; Weiland, H.J.; Stalder, B.; Rest, A.; et al. Design and Operation of the ATLAS Transient Science Server. Publ. Astron. Soc. Pac. 2020, 132, 085002.

- Tonry, J.L. An Early Warning System for Asteroid Impact. Publ. Astron. Soc. Pac. 2011, 123, 58.

- Tonry, J.L.; Denneau, L.; Heinze, A.N.; Stalder, B.; Smith, K.W.; Smartt, S.J.; Stubbs, C.W.; Weiland, H.J.; Rest, A. ATLAS: A High-cadence All-sky Survey System. Publ. Astron. Soc. Pac. 2018, 130, 064505.

- Shappee, B.J.; Prieto, J.L.; Grupe, D.; Kochanek, C.S.; Stanek, K.Z.; Rosa, G.D.; Mathur, S.; Zu, Y.; Peterson, B.M.; Pogge, R.W.; et al. The man behind the curtain: X-rays drive the UV through NIR variability in the 2013 active galactic nucleus outburst in NGC 2617. Astrophys. J. 2014, 788, 48.

- Sand, D. Highlights from the D<40 Mpc Sub-Day Cadence Supernova Survey, DLT40. Am. Astron. Soc. Meet. Abstr. 2023, 241, 447.07. [Google Scholar]

- Rehemtulla, N.; Miller, A.A.; Jegou Du Laz, T.; Coughlin, M.W.; Fremling, C.; Perley, D.A.; Qin, Y.J.; Sollerman, J.; Mahabal, A.A.; Laher, R.R.; et al. The Zwicky Transient Facility Bright Transient Survey. III. BTSbot: Automated Identification and Follow-up of Bright Transients with Deep Learning. Astrophys. J. 2024, 972, 7. [Google Scholar] [CrossRef]

- Rehemtulla, N.; Miller, A.; Fremling, C.; Perley, D.A.; Qin, Y.; Sollerman, J.; Mahabal, A.; Neill, J.D.; Laz, T.J.D.; Coughlin, M. SN 2023tyk: Discovery to spectroscopic classification performed fully automatically. Transient Name Serv. AstroNote 2023, 265. [Google Scholar]

- Rehemtulla, N.; Miller, A.A.; Coughlin, M.W.; Jegou du Laz, T. BTSbot: A Multi-input Convolutional Neural Network to Automate and Expedite Bright Transient Identification for the Zwicky Transient Facility. arXiv 2023, arXiv:2307.07618. [Google Scholar] [CrossRef]

- Fremling, C.; Miller, A.A.; Sharma, Y.; Dugas, A.; Perley, D.A.; Taggart, K.; Sollerman, J.; Goobar, A.; Graham, M.L.; Neill, J.D.; et al. The Zwicky Transient Facility Bright Transient Survey. I. Spectroscopic Classification and the Redshift Completeness of Local Galaxy Catalogs. Astrophys. J. 2020, 895, 32. [Google Scholar] [CrossRef]

- Perley, D.A.; Fremling, C.; Sollerman, J.; Miller, A.A.; Dahiwale, A.S.; Sharma, Y.; Bellm, E.C.; Biswas, R.; Brink, T.G.; Bruch, R.J.; et al. The Zwicky Transient Facility Bright Transient Survey. II. A Public Statistical Sample for Exploring Supernova Demographics. Astrophys. J. 2020, 904, 35. [Google Scholar] [CrossRef]

- Blagorodnova, N.; Neill, J.D.; Walters, R.; Kulkarni, S.R.; Fremling, C.; Ben-Ami, S.; Dekany, R.G.; Fucik, J.R.; Konidaris, N.; Nash, R.; et al. The SED Machine: A Robotic Spectrograph for Fast Transient Classification. Publ. Astron. Soc. Pac. 2018, 130, 035003. [Google Scholar] [CrossRef]

- Rigault, M.; Neill, J.D.; Blagorodnova, N.; Dugas, A.; Feeney, M.; Walters, R.; Brinnel, V.; Copin, Y.; Fremling, C.; Nordin, J.; et al. Fully automated integral field spectrograph pipeline for the SEDMachine: Pysedm. Astron. Astrophys. 2019, 627, A115. [Google Scholar] [CrossRef]

- Fremling, C.; Hall, X.J.; Coughlin, M.W.; Dahiwale, A.S.; Duev, D.A.; Graham, M.J.; Kasliwal, M.M.; Kool, E.C.; Mahabal, A.A.; Miller, A.A.; et al. SNIascore: Deep-learning Classification of Low-resolution Supernova Spectra. Astrophys. J. Lett. 2021, 917, L2. [Google Scholar] [CrossRef]

- Alard, C.; Lupton, R. ISIS: A method for optimal image subtraction. Astrophysics Source Code Library, record ascl:9909.003. 1999. [Google Scholar]

- Becker, A. HOTPANTS: High Order Transform of PSF ANd Template Subtraction. Astrophysics Source Code Library. 2015. [Google Scholar]

- Zackay, B.; Ofek, E.O.; Gal-Yam, A. Proper Image Subtraction—Optimal Transient Detection, Photometry, and Hypothesis Testing. Astrophys. J. 2016, 830, 27. [Google Scholar] [CrossRef]

- Bertin, E.; Arnouts, S. SExtractor: Software for source extraction. Astron. Astrophys. Suppl. Ser. 1996, 117, 393–404. [Google Scholar] [CrossRef]

- Wright, D.E.; Smartt, S.J.; Smith, K.W.; Miller, P.; Kotak, R.; Rest, A.; Burgett, W.S.; Chambers, K.C.; Flewelling, H.; Hodapp, K.W.; et al. Machine learning for transient discovery in Pan-STARRS1 difference imaging. Mon. Not. R. Astron. Soc. 2015, 449, 451–466. [Google Scholar] [CrossRef]

- Brocato, E.; Branchesi, M.; Cappellaro, E.; Covino, S.; Grado, A.; Greco, G.; Limatola, L.; Stratta, G.; Yang, S.; Campana, S.; et al. GRAWITA: VLT Survey Telescope observations of the gravitational wave sources GW150914 and GW151226. Mon. Not. R. Astron. Soc. 2018, 474, 411–426. [Google Scholar] [CrossRef]

- Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: New York, NY, USA, 2012. [Google Scholar] [CrossRef]

- Ivezić, Ž.; Kahn, S.M.; Tyson, J.A.; Abel, B.; Acosta, E.; Allsman, R.; Alonso, D.; AlSayyad, Y.; Anderson, S.F.; Andrew, J.; et al. LSST: From Science Drivers to Reference Design and Anticipated Data Products. Astrophys. J. 2019, 873, 111. [Google Scholar] [CrossRef]

- Kreps, J. Kafka: A Distributed Messaging System for Log Processing. 2011. Available online: https://notes.stephenholiday.com/Kafka.pdf (accessed on 23 September 2025).

- Förster, F.; Cabrera-Vives, G.; Castillo-Navarrete, E.; Estévez, P.A.; Sánchez-Sáez, P.; Arredondo, J.; Bauer, F.E.; Carrasco-Davis, R.; Catelan, M.; Elorrieta, F.; et al. The Automatic Learning for the Rapid Classification of Events (ALeRCE) Alert Broker. Astron. J. 2021, 161, 242. [Google Scholar] [CrossRef]

- Smith, K.W.; Williams, R.D.; Young, D.R.; Ibsen, A.; Smartt, S.J.; Lawrence, A.; Morris, D.; Voutsinas, S.; Nicholl, M. Lasair: The Transient Alert Broker for LSST:UK. Res. Notes Am. Astron. Soc. 2019, 3, 26. [Google Scholar] [CrossRef]

- Matheson, T.; Stubens, C.; Wolf, N.; Lee, C.H.; Narayan, G.; Saha, A.; Scott, A.; Soraisam, M.; Bolton, A.S.; Hauger, B.; et al. The ANTARES Astronomical Time-domain Event Broker. Astron. J. 2021, 161, 107. [Google Scholar] [CrossRef]

- Leoni, M.; Ishida, E.E.O.; Peloton, J.; Möller, A. Fink: Early supernovae Ia classification using active learning. Astron. Astrophys. 2022, 663, A13. [Google Scholar] [CrossRef]

- Nordin, J.; Brinnel, V.; van Santen, J.; Bulla, M.; Feindt, U.; Franckowiak, A.; Fremling, C.; Gal-Yam, A.; Giomi, M.; Kowalski, M.; et al. Transient processing and analysis using AMPEL: Alert management, photometry, and evaluation of light curves. Astron. Astrophys. 2019, 631, A147. [Google Scholar] [CrossRef]

- Patterson, M.T.; Bellm, E.C.; Rusholme, B.; Masci, F.J.; Juric, M.; Krughoff, K.S.; Golkhou, V.Z.; Graham, M.J.; Kulkarni, S.R.; Helou, G.; et al. The Zwicky Transient Facility Alert Distribution System. Publ. Astron. Soc. Pac. 2019, 131, 018001. [Google Scholar] [CrossRef]

- Li, G.; Hu, M.; Li, W.; Yang, Y.; Wang, X.; Yan, S.; Hu, L.; Zhang, J.; Mao, Y.; Riise, H.; et al. A shock flash breaking out of a dusty red supergiant. Nature 2024, 627, 754–758. [Google Scholar] [CrossRef]

- Chen, T.W.; Yang, S.; Srivastav, S.; Moriya, T.J.; Smartt, S.J.; Rest, S.; Rest, A.; Lin, H.W.; Miao, H.Y.; Cheng, Y.C.; et al. Discovery and Extensive Follow-up of SN 2024ggi, a Nearby Type IIP Supernova in NGC 3621. Astrophys. J. 2025, 983, 86. [Google Scholar] [CrossRef]

- Zhao, G.; Zhao, Y.H.; Chu, Y.Q.; Jing, Y.P.; Deng, L.C. LAMOST spectral survey—An overview. Res. Astron. Astrophys. 2012, 12, 723–734. [Google Scholar] [CrossRef]

- Kim, J.H.; Im, M.; Lee, H.M.; Chang, S.W.; Choi, H.; Paek, G.S.H. Introduction to the 7-Dimensional Telescope: Commissioning Procedures and Data Characteristics. arXiv 2024, arXiv:2406.16462. [Google Scholar] [CrossRef]

- Kessler, R.; Conley, A.; Jha, S.; Kuhlmann, S. Supernova Photometric Classification Challenge. arXiv 2010, arXiv:1001.5210. [Google Scholar] [CrossRef]

- Kessler, R.; Bassett, B.; Belov, P.; Bhatnagar, V.; Campbell, H.; Conley, A.; Frieman, J.A.; Glazov, A.; González-Gaitán, S.; Hlozek, R.; et al. Results from the Supernova Photometric Classification Challenge. Publ. Astron. Soc. Pac. 2010, 122, 1415. [Google Scholar] [CrossRef]

- Dark Energy Survey Collaboration; Abbott, T.; Abdalla, F.B.; Aleksić, J.; Allam, S.; Amara, A.; Bacon, D.; Balbinot, E.; Banerji, M.; Bechtol, K.; et al. The Dark Energy Survey: More than dark energy—an overview. Mon. Not. R. Astron. Soc. 2016, 460, 1270–1299. [Google Scholar] [CrossRef]

- Bazin, G.; Palanque-Delabrouille, N.; Rich, J.; Ruhlmann-Kleider, V.; Aubourg, E.; Le Guillou, L.; Astier, P.; Balland, C.; Basa, S.; Carlberg, R.G.; et al. The core-collapse rate from the Supernova Legacy Survey. Astron. Astrophys. 2009, 499, 653–660. [Google Scholar] [CrossRef]

- Villar, V.A.; Berger, E.; Miller, G.; Chornock, R.; Rest, A.; Jones, D.O.; Drout, M.R.; Foley, R.J.; Kirshner, R.; Lunnan, R.; et al. Supernova Photometric Classification Pipelines Trained on Spectroscopically Classified Supernovae from the Pan-STARRS1 Medium-deep Survey. Astrophys. J. 2019, 884, 83. [Google Scholar] [CrossRef]

- Ambikasaran, S.; Foreman-Mackey, D.; Greengard, L.; Hogg, D.W.; O’Neil, M. Fast Direct Methods for Gaussian Processes. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 252. [Google Scholar] [CrossRef]

- Yang, S.; Sollerman, J. HAFFET: Hybrid Analytic Flux FittEr for Transients. Astrophys. J. Supp. 2023, 269, 40. [Google Scholar] [CrossRef]

- Müller-Bravo, T.E.; Sullivan, M.; Smith, M.; Frohmaier, C.; Gutiérrez, C.P.; Wiseman, P.; Zontou, Z. PISCOLA: A data-driven transient light-curve fitter. Mon. Not. R. Astron. Soc. 2022, 512, 3266–3283. [Google Scholar] [CrossRef]

- Guillochon, J.; Nicholl, M.; Villar, V.A.; Mockler, B.; Narayan, G.; Mandel, K.S.; Berger, E.; Williams, P.K.G. MOSFiT: Modular Open Source Fitter for Transients. Astrophys. J. Supp. 2018, 236, 6. [Google Scholar] [CrossRef]

- Kennamer, N.; Ishida, E.E.O.; Gonzalez-Gaitan, S.; de Souza, R.S.; Ihler, A.; Ponder, K.; Vilalta, R.; Moller, A.; Jones, D.O.; Dai, M.; et al. Active learning with RESSPECT: Resource allocation for extragalactic astronomical transients. arXiv 2020, arXiv:2010.05941. [Google Scholar] [CrossRef]

- Liu, L.D.; Zhang, Y.H.; Yu, Y.W.; Du, Z.X.; Li, J.Y.; Wu, G.L.; Dai, Z.G. TransFit: An Efficient Framework for Transient Light-Curve Fitting with Time-Dependent Radiative Diffusion. arXiv 2025, arXiv:2505.13825. [Google Scholar] [CrossRef]

- Kessler, R.; Narayan, G.; Avelino, A.; Bachelet, E.; Biswas, R.; Brown, P.J.; Chernoff, D.F.; Connolly, A.J.; Dai, M.; Daniel, S.; et al. Models and Simulations for the Photometric LSST Astronomical Time Series Classification Challenge (PLAsTiCC). Publ. Astron. Soc. Pac. 2019, 131, 094501. [Google Scholar] [CrossRef]

- Kessler, R.; Bernstein, J.P.; Cinabro, D.; Dilday, B.; Frieman, J.A.; Jha, S.; Kuhlmann, S.; Miknaitis, G.; Sako, M.; Taylor, M.; et al. SNANA: A Public Software Package for Supernova Analysis. Publ. Astron. Soc. Pac. 2009, 121, 1028. [Google Scholar] [CrossRef]

- Sharma, Y.; Mahabal, A.A.; Sollerman, J.; Fremling, C.; Kulkarni, S.R.; Rehemtulla, N.; Miller, A.A.; Aubert, M.; Chen, T.X.; Coughlin, M.W.; et al. CCSNscore: A multi-input deep learning tool for classification of core-collapse supernovae using SED-Machine spectra. arXiv 2024, arXiv:2412.08601. [Google Scholar] [CrossRef]

- Turatto, M. Classification of Supernovae. In Supernovae and Gamma-Ray Bursters; Weiler, K., Ed.; Springer: Berlin, Germany, 2003; Volume 598, pp. 21–36. [Google Scholar] [CrossRef]

- Blondin, S.; Tonry, J.L. Determining the Type, Redshift, and Age of a Supernova Spectrum. Astrophys. J. 2007, 666, 1024–1047. [Google Scholar] [CrossRef]

- Martin-Brualla, R.; Pandey, R.; Bouaziz, S.; Brown, M.; Goldman, D.B. GeLaTO: Generative Latent Textured Objects. arXiv 2020, arXiv:2008.04852. [Google Scholar] [CrossRef]

- Muthukrishna, D.; Parkinson, D.; Tucker, B.E. DASH: Deep Learning for the Automated Spectral Classification of Supernovae and Their Hosts. Astrophys. J. 2019, 885, 85. [Google Scholar] [CrossRef]

- Goldwasser, S.; Yaron, O.; Sass, A.; Irani, I.; Gal-Yam, A.; Howell, D.A. The Next Generation SuperFit (NGSF) tool is now available for online execution on WISeREP. Transient Name Serv. AstroNote 2022, 191. [Google Scholar]

- Yaron, O.; Gal-Yam, A. WISeREP—An Interactive Supernova Data Repository. Publ. Astron. Soc. Pac. 2012, 124, 668. [Google Scholar] [CrossRef]

- Sollerman, J.; Yang, S.; Perley, D.; Schulze, S.; Fremling, C.; Kasliwal, M.; Shin, K.; Racine, B. Maximum luminosities of normal stripped-envelope supernovae are brighter than explosion models allow. Astron. Astrophys. 2022, 657, A64. [Google Scholar] [CrossRef]

- Cunningham, P.; Cord, M.; Delany, S.J. Supervised Learning. In Machine Learning Techniques for Multimedia: Case Studies on Organization and Retrieval; Cord, M., Cunningham, P., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 21–49.

- Clarke, A.O.; Scaife, A.M.M.; Greenhalgh, R.; Griguta, V. Identifying galaxies, quasars, and stars with machine learning: A new catalogue of classifications for 111 million SDSS sources without spectra. Astron. Astrophys. 2020, 639, A84.

- Bilicki, M.; Dvornik, A.; Hoekstra, H.; Wright, A.H.; Chisari, N.E.; Vakili, M.; Asgari, M.; Giblin, B.; Heymans, C.; Hildebrandt, H.; et al. Bright galaxy sample in the Kilo-Degree Survey Data Release 4. Selection, photometric redshifts, and physical properties. Astron. Astrophys. 2021, 653, A82.

- Ghahramani, Z. Unsupervised Learning. In Advanced Lectures on Machine Learning: ML Summer Schools 2003, Canberra, Australia, 2–14 February 2003, Tübingen, Germany, 4–16 August 2003, Revised Lectures; Bousquet, O., von Luxburg, U., Rätsch, G., Eds.; Springer: Berlin/Heidelberg, Germany, 2004; pp. 72–112.

- Ikotun, A.M.; Ezugwu, A.E.; Abualigah, L.; Abuhaija, B.; Heming, J. K-means clustering algorithms: A comprehensive review, variants analysis, and advances in the era of big data. Inform. Sci. 2023, 622, 178–210.

- McInnes, L.; Healy, J.; Astels, S. hdbscan: Hierarchical density based clustering. J. Open Source Softw. 2017, 2, 205.

- Ester, M.; Kriegel, H.P.; Sander, J.; Xu, X. A density-based algorithm for discovering clusters in large spatial databases with noise. In Proceedings of the Second International Conference on Knowledge Discovery and Data Mining (KDD’96); AAAI Press: Washington, DC, USA, 1996; pp. 226–231.

- Maćkiewicz, A.; Ratajczak, W. Principal components analysis (PCA). Comput. Geosci. 1993, 19, 303–342.

- Van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

- McInnes, L.; Healy, J.; Melville, J. UMAP: Uniform Manifold Approximation and Projection for Dimension Reduction. arXiv 2018, arXiv:1802.03426.

- Chandola, V.; Banerjee, A.; Kumar, V. Anomaly detection: A survey. ACM Comput. Surv. 2009, 41, 1–58.

- Abraham, B.; Chuang, A. Outlier detection and time series modeling. Technometrics 1989, 31, 241–248.

- Pruzhinskaya, M.V.; Malanchev, K.L.; Kornilov, M.V.; Ishida, E.E.O.; Mondon, F.; Volnova, A.A.; Korolev, V.S. Anomaly detection in the Open Supernova Catalog. Mon. Not. R. Astron. Soc. 2019, 489, 3591–3608.

- Ishida, E.E.O.; Kornilov, M.V.; Malanchev, K.L.; Pruzhinskaya, M.V.; Volnova, A.A.; Korolev, V.S.; Mondon, F.; Sreejith, S.; Malancheva, A.A.; Das, S. Active anomaly detection for time-domain discoveries. Astron. Astrophys. 2021, 650, A195.

- Sutton, R.; Barto, A. Reinforcement Learning: An Introduction. IEEE Trans. Neural Netw. 1998, 9, 1054.

- Nousiainen, J.; Rajani, C.; Kasper, M.; Helin, T.; Haffert, S.Y.; Vérinaud, C.; Males, J.R.; Van Gorkom, K.; Close, L.M.; Long, J.D.; et al. Toward on-sky adaptive optics control using reinforcement learning. Model-based policy optimization for adaptive optics. Astron. Astrophys. 2022, 664, A71.

- Landman, R.; Haffert, S.Y.; Radhakrishnan, V.M.; Keller, C.U. Self-optimizing adaptive optics control with reinforcement learning for high-contrast imaging. J. Astron. Telesc. Instrum. Syst. 2021, 7, 039002.

- Nousiainen, J.; Rajani, C.; Kasper, M.; Helin, T. Adaptive optics control using model-based reinforcement learning. Opt. Express 2021, 29, 15327.

- Jia, P.; Jia, Q.; Jiang, T.; Liu, J. Observation Strategy Optimization for Distributed Telescope Arrays with Deep Reinforcement Learning. Astron. J. 2023, 165, 233.

- Jia, P.; Jia, Q.; Jiang, T.; Yang, Z. A simulation framework for telescope array and its application in distributed reinforcement learning-based scheduling of telescope arrays. Astron. Comput. 2023, 44, 100732.

- Yatawatta, S.; Avruch, I.M. Deep reinforcement learning for smart calibration of radio telescopes. Mon. Not. R. Astron. Soc. 2021, 505, 2141–2150.

- Yatawatta, S. Hint assisted reinforcement learning: An application in radio astronomy. arXiv 2023, arXiv:2301.03933.

- Baron, D. Machine Learning in Astronomy: A practical overview. arXiv 2019, arXiv:1904.07248.

- Chen, S.; Kargaltsev, O.; Yang, H.; Hare, J.; Volkov, I.; Rangelov, B.; Tomsick, J. Population of X-Ray Sources in the Intermediate-age Cluster NGC 3532: A Test Bed for Machine-learning Classification. Astrophys. J. 2023, 948, 59.

- Neira, M.; Gómez, C.; Suárez-Pérez, J.F.; Gómez, D.A.; Reyes, J.P.; Hoyos, M.H.; Arbeláez, P.; Forero-Romero, J.E. MANTRA: A Machine-learning Reference Light-curve Data Set for Astronomical Transient Event Recognition. Astrophys. J. Suppl. Ser. 2020, 250, 11.

- de Beurs, Z.L.; Islam, N.; Gopalan, G.; Vrtilek, S.D. A Comparative Study of Machine-learning Methods for X-Ray Binary Classification. Astrophys. J. 2022, 933, 116.

- Debosscher, J.; Sarro, L.M.; Aerts, C.; Cuypers, J.; Vandenbussche, B.; Garrido, R.; Solano, E. Automated supervised classification of variable stars. I. Methodology. Astron. Astrophys. 2007, 475, 1159–1183.

- Richards, J.W.; Starr, D.L.; Butler, N.R.; Bloom, J.S.; Brewer, J.M.; Crellin-Quick, A.; Higgins, J.; Kennedy, R.; Rischard, M. On machine-learned classification of variable stars with sparse and noisy time-series data. Astrophys. J. 2011, 733, 10.

- Kim, D.W.; Protopapas, P.; Byun, Y.I.; Alcock, C.; Khardon, R.; Trichas, M. Quasi-stellar object selection algorithm using time variability and machine learning: Selection of 1620 quasi-stellar object candidates from MACHO Large Magellanic Cloud database. Astrophys. J. 2011, 735, 68.

- Razzano, M.; Cuoco, E. Image-based deep learning for classification of noise transients in gravitational wave detectors. Class. Quantum Gravity 2018, 35, 095016.

- Flamary, R. Astronomical image reconstruction with convolutional neural networks. arXiv 2016, arXiv:1612.04526.

- Martinazzo, A.; Espadoto, M.; Hirata, N.S.T. Self-supervised Learning for Astronomical Image Classification. arXiv 2020, arXiv:2004.11336.

- Qu, H.; Sako, M. Photometric Classification of Early-time Supernova Light Curves with SCONE. Astron. J. 2022, 163, 57.

- Charnock, T.; Moss, A. Supernova Photometric Classification with Deep Recurrent Neural Networks. arXiv 2017, arXiv:1706.01849.

- Gupta, R.; Muthukrishna, D.; Lochner, M. A classifier-based approach to multiclass anomaly detection for astronomical transients. RAS Tech. Instrum. 2025, 4, rzae054.

- Fraga, B.M.O.; Bom, C.R.; Santos, A.; Russeil, E.; Leoni, M.; Peloton, J.; Ishida, E.E.O.; Möller, A.; Blondin, S. Transient Classifiers for Fink: Benchmarks for LSST. arXiv 2024, arXiv:2404.08798.

- Webb, S.; Lochner, M.; Muthukrishna, D.; Cooke, J.; Flynn, C.; Mahabal, A.; Goode, S.; Andreoni, I.; Pritchard, T.; Abbott, T.M.C. Unsupervised machine learning for transient discovery in deeper, wider, faster light curves. Mon. Not. R. Astron. Soc. 2020, 498, 3077–3094.

- Mahabal, A.; Sheth, K.; Gieseke, F.; Pai, A.; Djorgovski, S.G.; Drake, A.; Graham, M.; the CSS/CRTS/PTF Collaboration. Deep-Learnt Classification of Light Curves. arXiv 2017, arXiv:1709.06257.

- Turner, R.E. An Introduction to Transformers. arXiv 2023, arXiv:2304.10557.

- Allam, T.; McEwen, J.D. Paying attention to astronomical transients: Introducing the time-series transformer for photometric classification. RAS Tech. Instrum. 2024, 3, 209–223.

- Cabrera-Vives, G.; Moreno-Cartagena, D.; Astorga, N.; Reyes-Jainaga, I.; Förster, F.; Huijse, P.; Arredondo, J.; Muñoz Arancibia, A.M.; Bayo, A.; Catelan, M.; et al. ATAT: Astronomical Transformer for time series and Tabular data. arXiv 2024, arXiv:2405.03078.

- Nun, I.; Protopapas, P.; Sim, B.; Zhu, M.; Dave, R.; Castro, N.; Pichara, K. FATS: Feature Analysis for Time Series. arXiv 2015, arXiv:1506.00010.

- Cuoco, E.; Powell, J.; Cavaglià, M.; Ackley, K.; Bejger, M.; Chatterjee, C.; Coughlin, M.; Coughlin, S.; Easter, P.; Essick, R.; et al. Enhancing gravitational-wave science with machine learning. Mach. Learn. Sci. Technol. 2020, 2, 011002.

- Malik, A.; Moster, B.P.; Obermeier, C. Exoplanet detection using machine learning. Mon. Not. R. Astron. Soc. 2022, 513, 5505–5516.

- de la Calleja, J.; Fuentes, O. Machine learning and image analysis for morphological galaxy classification. Mon. Not. R. Astron. Soc. 2004, 349, 87–93.

- Wagstaff, K.L.; Tang, B.; Thompson, D.R.; Khudikyan, S.; Wyngaard, J.; Deller, A.T.; Palaniswamy, D.; Tingay, S.J.; Wayth, R.B. A Machine Learning Classifier for Fast Radio Burst Detection at the VLBA. Publ. Astron. Soc. Pac. 2016, 128, 084503.

- Zhang, Y.G.; Gajjar, V.; Foster, G.; Siemion, A.; Cordes, J.; Law, C.; Wang, Y. Fast Radio Burst 121102 Pulse Detection and Periodicity: A Machine Learning Approach. Astrophys. J. 2018, 866, 149.

- Wu, D.; Cao, H.; Lv, N.; Fan, J.; Tan, X.; Yang, S. Feature Matching Conditional GAN for Fast Radio Burst Localization with Cluster-fed Telescope. Astrophys. J. Lett. 2019, 887, L10.

- Yang, X.; Zhang, S.B.; Wang, J.S.; Hobbs, G.; Sun, T.R.; Manchester, R.N.; Geng, J.J.; Russell, C.J.; Luo, R.; Tang, Z.F.; et al. 81 New candidate fast radio bursts in Parkes archive. Mon. Not. R. Astron. Soc. 2021, 507, 3238–3245.

- Adámek, K.; Armour, W. Single-pulse Detection Algorithms for Real-time Fast Radio Burst Searches Using GPUs. Astrophys. J. Suppl. Ser. 2020, 247, 56.

- Agarwal, D.; Aggarwal, K.; Burke-Spolaor, S.; Lorimer, D.R.; Garver-Daniels, N. FETCH: A deep-learning based classifier for fast transient classification. Mon. Not. R. Astron. Soc. 2020, 497, 1661–1674.

- Bhatporia, S.; Walters, A.; Murugan, J.; Weltman, A. A Topological Data Analysis of the CHIME/FRB Catalogues. arXiv 2023, arXiv:2311.03456.

- Chen, B.H.; Hashimoto, T.; Goto, T.; Kim, S.J.; Santos, D.J.D.; On, A.Y.L.; Lu, T.Y.; Hsiao, T.Y.Y. Uncloaking hidden repeating fast radio bursts with unsupervised machine learning. Mon. Not. R. Astron. Soc. 2021, 509, 1227–1236.

- Zhu-Ge, J.M.; Luo, J.W.; Zhang, B. Machine learning classification of CHIME fast radio bursts-II. Unsupervised methods. Mon. Not. R. Astron. Soc. 2023, 519, 1823–1836.

- Yang, X.; Zhang, S.B.; Wang, J.S.; Wu, X.F. Classifying FRB spectrograms using nonlinear dimensionality reduction techniques. Mon. Not. R. Astron. Soc. 2023, 522, 4342–4351.

- Luo, J.W.; Zhu-Ge, J.M.; Zhang, B. Machine learning classification of CHIME fast radio bursts-I. Supervised methods. Mon. Not. R. Astron. Soc. 2022, 518, 1629–1641.

- Sun, W.P.; Zhang, J.G.; Li, Y.; Hou, W.T.; Zhang, F.W.; Zhang, J.F.; Zhang, X. Exploring the Key Features of Repeating Fast Radio Bursts with Machine Learning. Astrophys. J. 2025, 980, 185.

- Qiang, D.C.; Zheng, J.; You, Z.Q.; Yang, S. Unsupervised Machine Learning for Classifying CHIME Fast Radio Bursts and Investigating Empirical Relations. Astrophys. J. 2025, 982, 16.

- Raquel, B.J.R.; Hashimoto, T.; Goto, T.; Chen, B.H.; Uno, Y.; Hsiao, T.Y.Y.; Kim, S.J.; Ho, S.C.C. Machine learning classification of repeating FRBs from FRB 121102. Mon. Not. R. Astron. Soc. 2023, 524, 1668–1691.

- Chen, B.H.; Hashimoto, T.; Goto, T.; Raquel, B.J.R.; Uno, Y.; Kim, S.J.; Hsiao, T.Y.Y.; Ho, S.C.C. Classifying a frequently repeating fast radio burst, FRB 20201124A, with unsupervised machine learning. Mon. Not. R. Astron. Soc. 2023, 521, 5738–5745.

- Ghirlanda, G.; Nava, L.; Ghisellini, G.; Celotti, A.; Firmani, C. Short versus long gamma-ray bursts: Spectra, energetics, and luminosities. Astron. Astrophys. 2009, 496, 585–595.

- Rastinejad, J.C.; Gompertz, B.P.; Levan, A.J.; Fong, W.f.; Nicholl, M.; Lamb, G.P.; Malesani, D.B.; Nugent, A.E.; Oates, S.R.; Tanvir, N.R.; et al. A kilonova following a long-duration gamma-ray burst at 350 Mpc. Nature 2022, 612, 223–227.

- Troja, E.; Fryer, C.L.; O’Connor, B.; Ryan, G.; Dichiara, S.; Kumar, A.; Ito, N.; Gupta, R.; Wollaeger, R.T.; Norris, J.P.; et al. A nearby long gamma-ray burst from a merger of compact objects. Nature 2022, 612, 228–231.

- Levan, A.J.; Gompertz, B.P.; Salafia, O.S.; Bulla, M.; Burns, E.; Hotokezaka, K.; Izzo, L.; Lamb, G.P.; Malesani, D.B.; Oates, S.R.; et al. Heavy-element production in a compact object merger observed by JWST. Nature 2024, 626, 737–741.

- Zhu, S.Y.; Sun, W.P.; Ma, D.L.; Zhang, F.W. Classification of Fermi gamma-ray bursts based on machine learning. Mon. Not. R. Astron. Soc. 2024, 532, 1434–1443.

- Yang, J.; Ai, S.; Zhang, B.B.; Zhang, B.; Liu, Z.K.; Wang, X.I.; Yang, Y.H.; Yin, Y.H.; Li, Y.; Lü, H.J. A long-duration gamma-ray burst with a peculiar origin. Nature 2022, 612, 232–235.

- Du, Z.; Lü, H.; Yuan, Y.; Yang, X.; Liang, E. The Progenitor and Central Engine of a Peculiar GRB 230307A. Astrophys. J. Lett. 2024, 962, L27.

- Garcia-Cifuentes, K.; Becerra, R.; De Colle, F. ClassiPyGRB: Machine Learning-Based Classification and Visualization of Gamma Ray Bursts using t-SNE. J. Open Source Softw. 2024, 9, 5923.

- Junell, A.; Sasli, A.; Fontinele Nunes, F.; Xu, M.; Border, B.; Rehemtulla, N.; Rizhko, M.; Qin, Y.J.; Jegou Du Laz, T.; Le Calloch, A.; et al. Applying multimodal learning to Classify transient Detections Early (AppleCiDEr) I: Data set, methods, and infrastructure. arXiv 2025, arXiv:2507.16088.

- Aleo, P.D.; Engel, A.W.; Narayan, G.; Angus, C.R.; Malanchev, K.; Auchettl, K.; Baldassare, V.F.; Berres, A.; de Boer, T.J.L.; Boyd, B.M.; et al. Anomaly Detection and Approximate Similarity Searches of Transients in Real-time Data Streams. Astrophys. J. 2024, 974, 172.

- Biswas, B.; Ishida, E.E.O.; Peloton, J.; Möller, A.; Pruzhinskaya, M.V.; de Souza, R.S.; Muthukrishna, D. Enabling the discovery of fast transients. A kilonova science module for the Fink broker. Astron. Astrophys. 2023, 677, A77.

- Dillmann, S.; Martínez-Galarza, J.R.; Soria, R.; Di Stefano, R.; Kashyap, V.L. Representation learning for time-domain high-energy astrophysics: Discovery of extragalactic fast X-ray transient XRT 200515. Mon. Not. R. Astron. Soc. 2025, 537, 931–955.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Z.-N.; Qiang, D.-C.; Yang, S. Artificial Intelligence Revolutionizing Time-Domain Astronomy. Universe 2025, 11, 355. https://doi.org/10.3390/universe11110355

Wang Z-N, Qiang D-C, Yang S. Artificial Intelligence Revolutionizing Time-Domain Astronomy. Universe. 2025; 11(11):355. https://doi.org/10.3390/universe11110355

Chicago/Turabian Style: Wang, Ze-Ning, Da-Chun Qiang, and Sheng Yang. 2025. "Artificial Intelligence Revolutionizing Time-Domain Astronomy" Universe 11, no. 11: 355. https://doi.org/10.3390/universe11110355

APA Style: Wang, Z.-N., Qiang, D.-C., & Yang, S. (2025). Artificial Intelligence Revolutionizing Time-Domain Astronomy. Universe, 11(11), 355. https://doi.org/10.3390/universe11110355