Comparing Data-Driven Methods for Extracting Knowledge from User Generated Content

Abstract

1. Introduction

2. Literature Review

3. Materials and Methods

3.1. Data Sampling

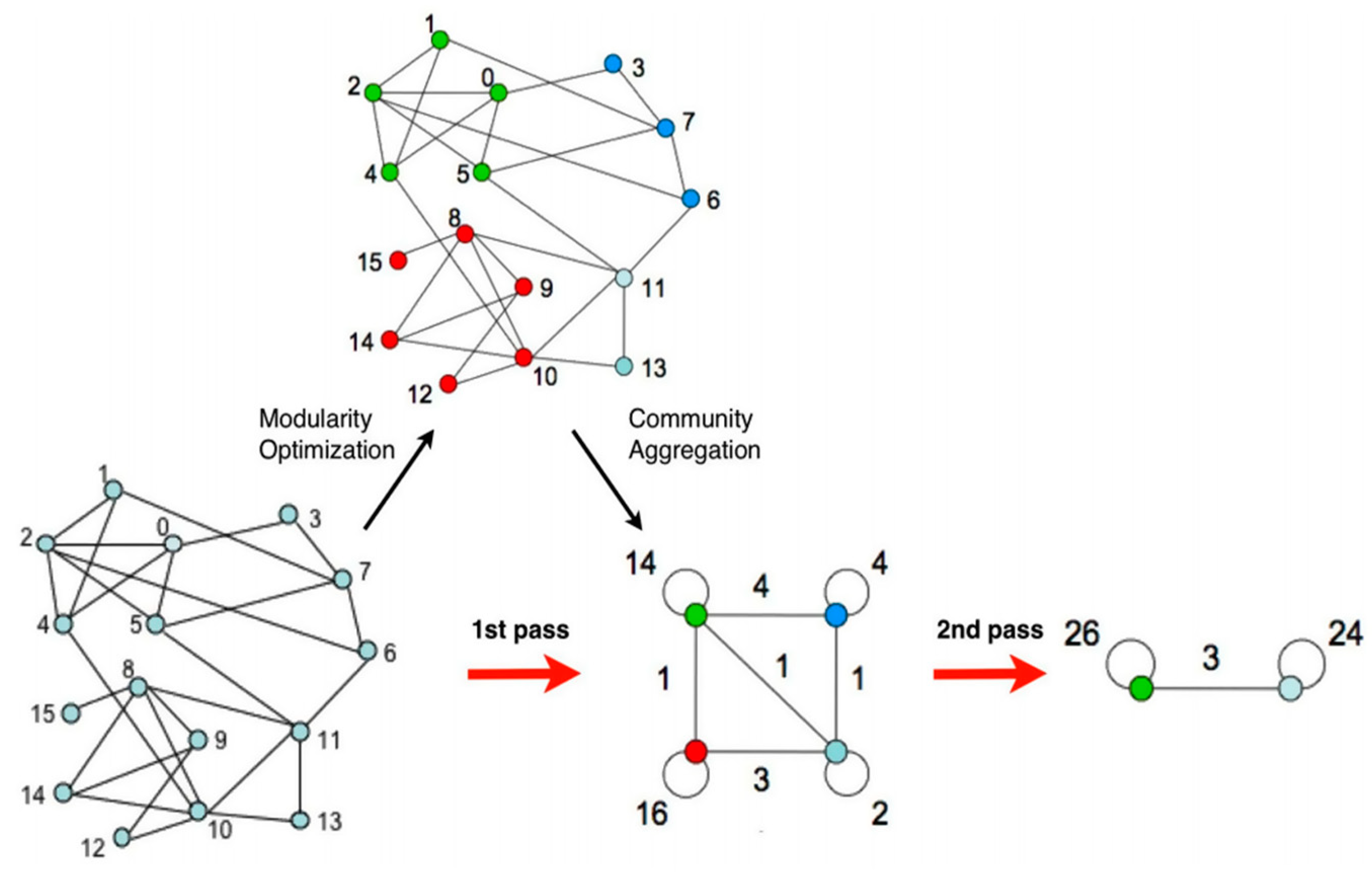

3.2. Method 1: Community Identification in Complex Networks

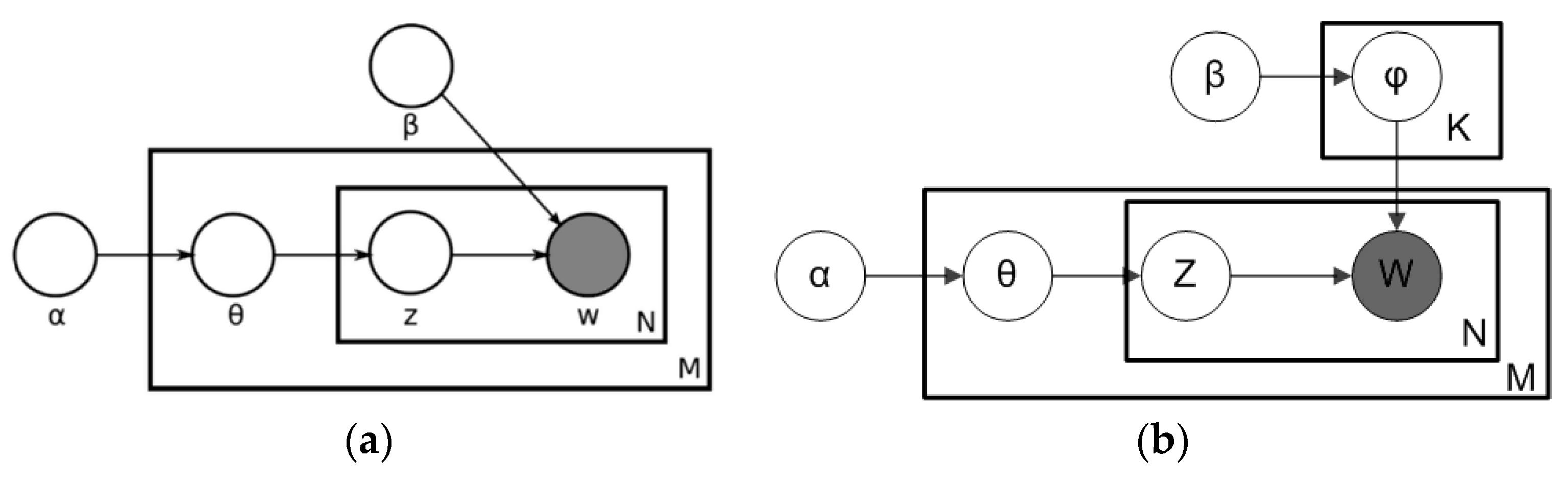

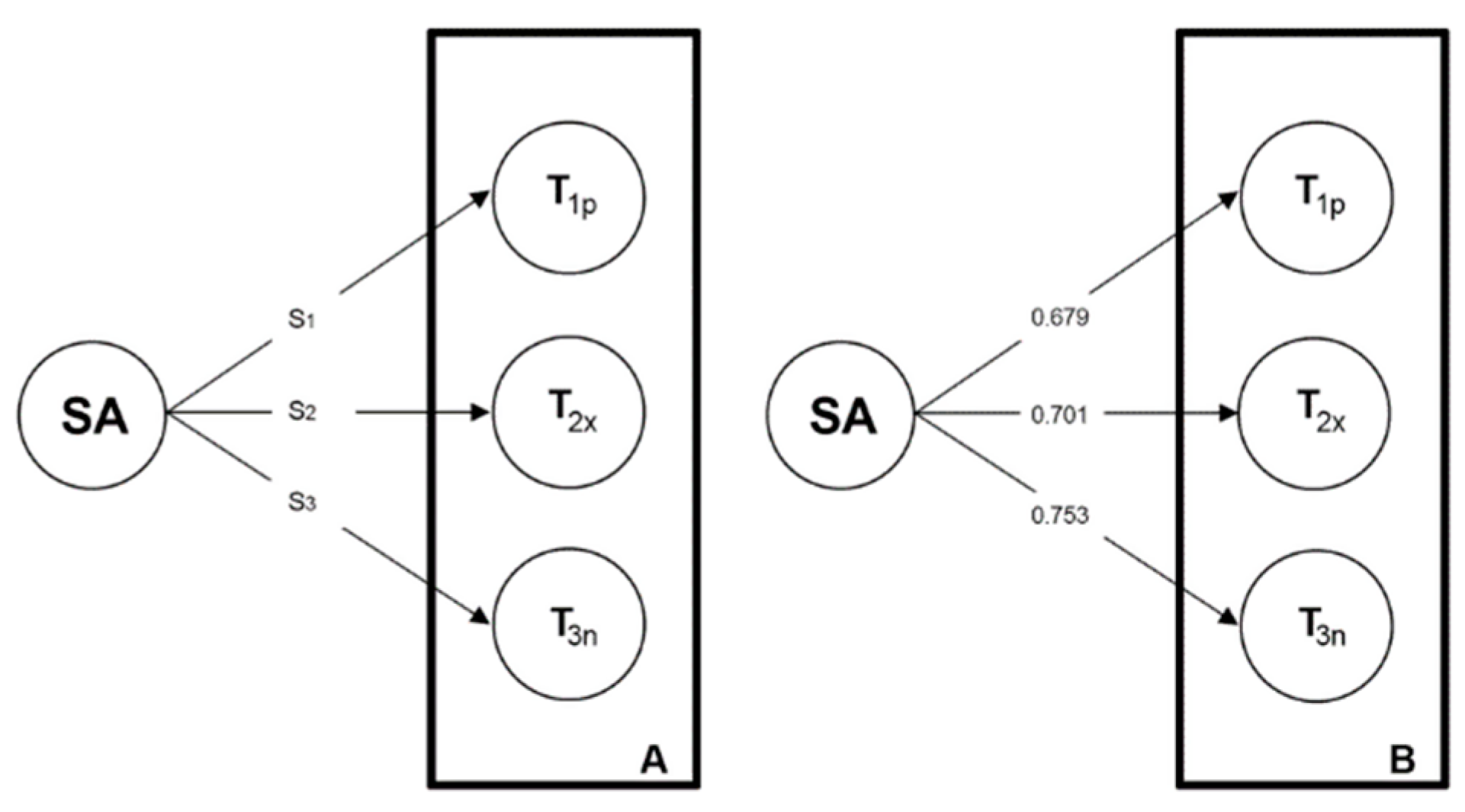

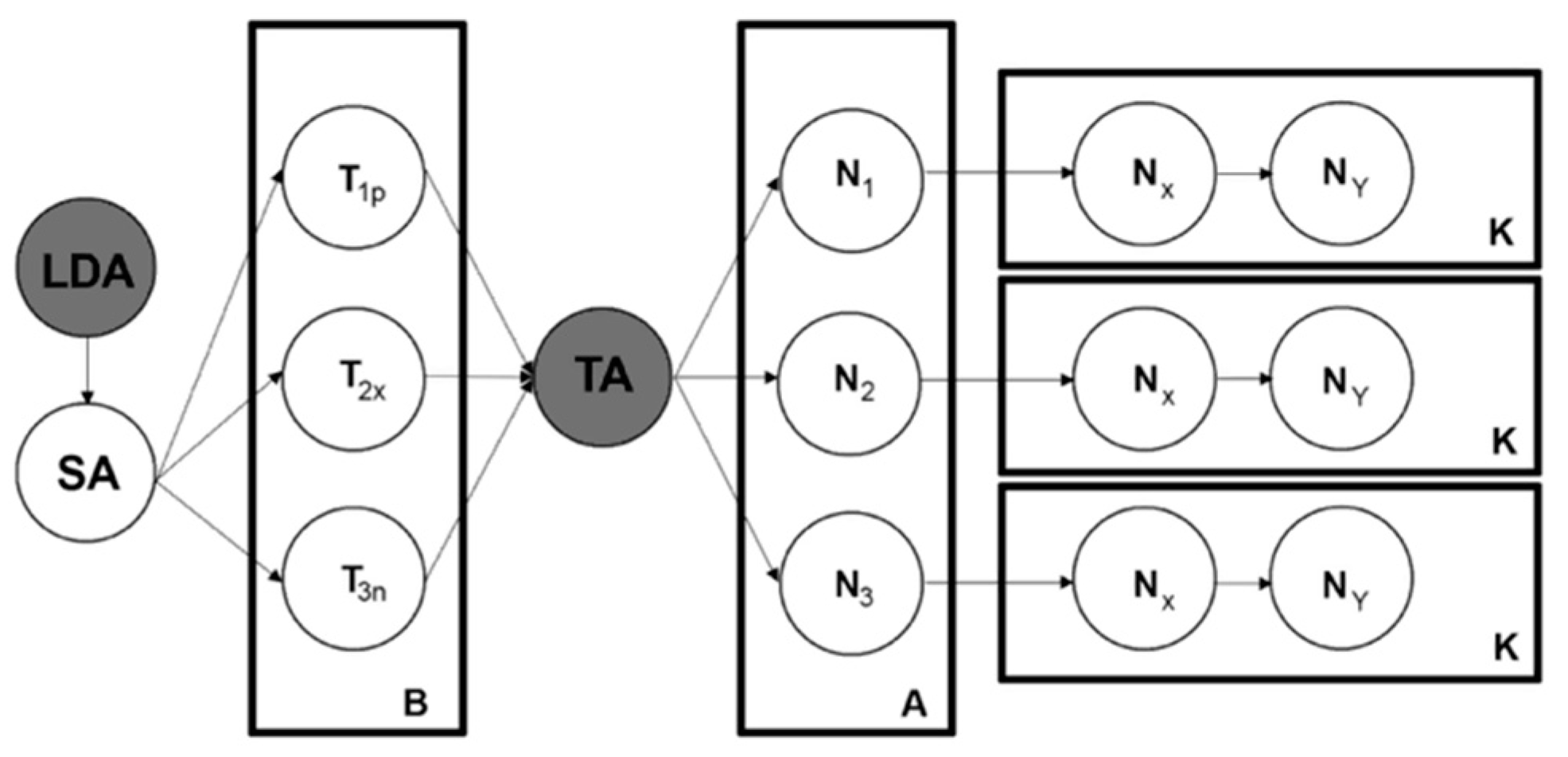

3.3. Method 2: A Three-Stage Method for Data Text Mining

4. Analysis of Results

4.1. Results of Method 1

4.2. Results of Method 2

5. Discussion

6. Conclusions

6.1. Theoretical Implications

6.2. Managerial Implications

6.3. Limitations and Future Research

Author Contributions

Funding

Conflicts of Interest

| Test | Communities | Modularity | MR | Resolution | MMC | MiMC |

|---|---|---|---|---|---|---|

| 1 (a) | 71 | 0.246 | 0.280 | 1.0 | 70 | 0 |

| 2 (b) | 147 | 0.122 | −0.017 | 0.1 | 156 | 0 |

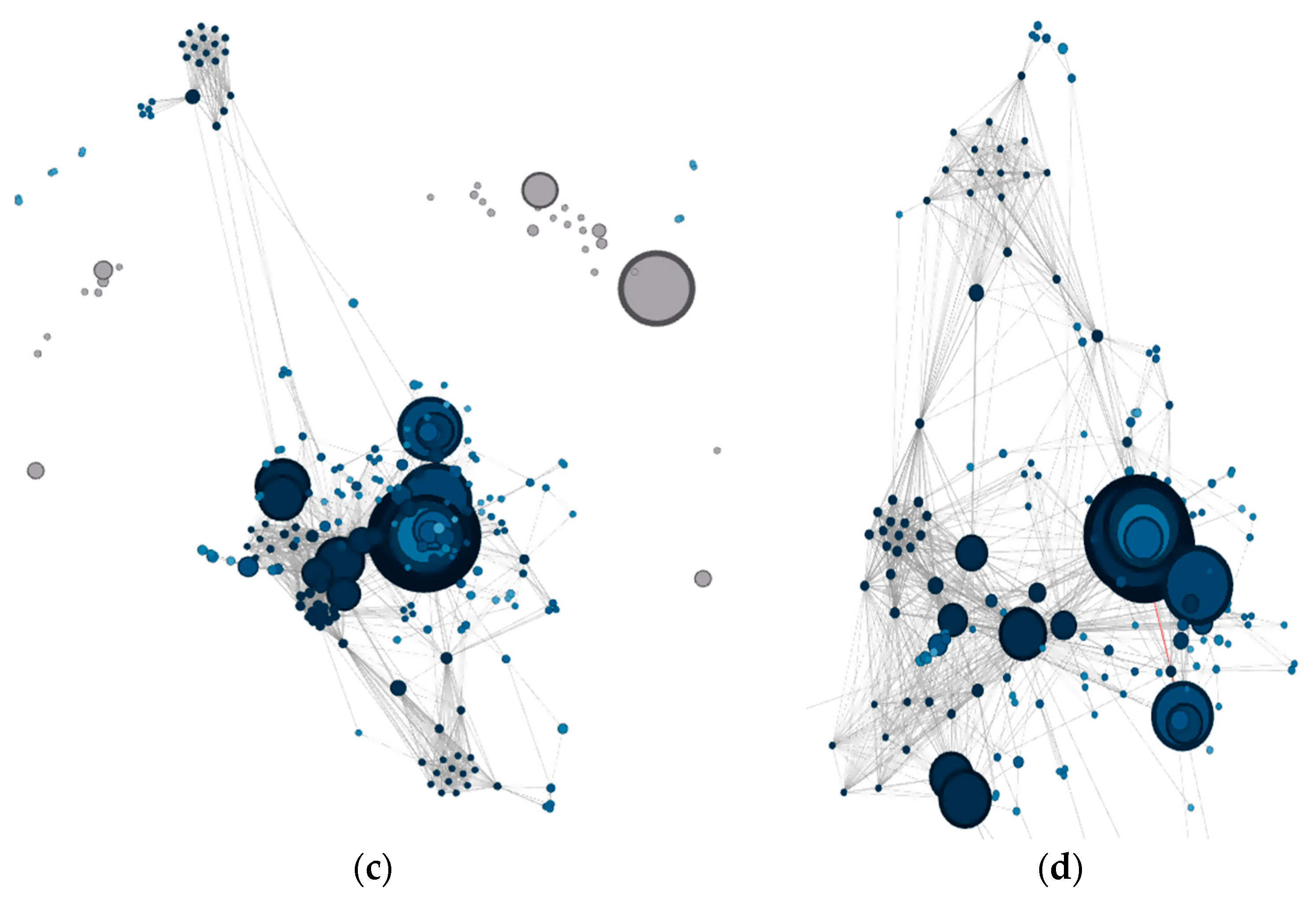

| 3 (c) | 519 | 0.053 | −0.021 | 0.01 | 518 | 0 |

| 4 (d) | 1073 | 0.011 | −0.021 | 0.001 | 1072 | 0 |
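The table above reports the modularity of the partitions found at different resolution settings. As a minimal sketch (not the authors' pipeline), the function below computes modularity with a resolution parameter γ for an undirected, unweighted graph, using Q_γ = Σ_c [L_c/m − γ(d_c/2m)²], where m is the number of edges, L_c the number of edges inside community c and d_c the total degree of its nodes. The toy graph, the function name `modularity` and the γ convention are illustrative assumptions; the sketch covers only the modularity score, not the MR, MMC or MiMC columns.

```python
from collections import defaultdict


def modularity(edges, partition, gamma=1.0):
    """Modularity of a partition of an undirected, unweighted graph.

    Q_gamma = sum over communities c of L_c / m - gamma * (d_c / (2m))**2,
    where m is the number of edges, L_c the number of edges inside c and
    d_c the total degree of the nodes in c. gamma = 1 gives the standard
    Newman-Girvan modularity.
    """
    m = len(edges)
    if m == 0:
        raise ValueError("modularity is undefined for a graph without edges")
    internal = defaultdict(int)    # L_c: edges with both endpoints in c
    degree_sum = defaultdict(int)  # d_c: sum of node degrees in c
    for u, v in edges:
        cu, cv = partition[u], partition[v]
        # Each edge contributes one unit of degree to each endpoint.
        degree_sum[cu] += 1
        degree_sum[cv] += 1
        if cu == cv:
            internal[cu] += 1
    return sum(
        internal[c] / m - gamma * (degree_sum[c] / (2 * m)) ** 2
        for c in degree_sum
    )


# Hypothetical toy graph: two triangles joined by a single bridge edge.
EDGES = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (2, 3)]
PARTITION = {0: "A", 1: "A", 2: "A", 3: "B", 4: "B", 5: "B"}

if __name__ == "__main__":
    for gamma in (2.0, 1.0, 0.5):
        print(f"gamma={gamma}: Q={modularity(EDGES, PARTITION, gamma):.3f}")
```

For this toy partition, Q is about 0.607, 0.357 and −0.143 for γ = 0.5, 1 and 2. In this γ convention, larger values reward finer partitions. The table instead shows more communities as Resolution decreases, so the tool used there presumably applies its resolution parameter in the inverse sense.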

| Topic Name | Topic Description | Sentiment | KAV |
|---|---|---|---|
| SEO | Search engine optimization: a strategy that optimizes a website's position in the search engine result pages (SERPs). | Positive | 0.824 |
| Content Marketing | Social media marketing strategy that aims to generate quality content. | Positive | 0.801 |
| Startups | Small companies that base their strategies on technology and innovation. | Neutral | 0.799 |
| Tools | Main tools and online platforms used to carry out digital marketing strategies. | Neutral | 0.793 |
| Blackhat Strategies | Positioning strategies based on practices that can be penalized by search engines. | Negative | 0.791 |
| JavaScript | Scripting programming language used in web development. | Negative | 0.788 |
| Social Media Marketing (SMM) | Strategy that applies digital marketing strategies to social networks to generate engagement. | Positive | 0.779 |
| Influencers Marketing | Digital marketing technique in which influencers generate content and share it on their main networks. | Neutral | 0.680 |
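The KAV column is not expanded in this text; assuming it stands for Krippendorff's alpha value, i.e. the agreement among coders who labeled the topics and sentiments, the sketch below shows how alpha can be computed for nominal labels (such as Positive, Neutral, Negative) with the coincidence-matrix method, α = 1 − D_o/D_e. The function name, the data layout (one row per coded unit, one entry per coder, `None` for a missing label) and the ratings are illustrative assumptions, not data from this study.

```python
from collections import Counter
from itertools import permutations
import math


def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal labels.

    `units` is a list of units (e.g. text fragments); each unit is a list with
    one entry per coder, holding that coder's label or None if missing.
    alpha = 1 - D_o / D_e, computed from the coincidence matrix.
    """
    coincidences = Counter()
    for labels in units:
        values = [v for v in labels if v is not None]
        m_u = len(values)
        if m_u < 2:
            continue  # a unit needs at least two labels to be pairable
        # Every ordered pair of labels from different coders adds 1 / (m_u - 1).
        for a, b in permutations(values, 2):
            coincidences[(a, b)] += 1.0 / (m_u - 1)

    n_c = Counter()  # marginal totals of the coincidence matrix
    for (a, _), weight in coincidences.items():
        n_c[a] += weight
    n = sum(n_c.values())

    # Nominal metric: any two different labels count as full disagreement.
    observed = sum(w for (a, b), w in coincidences.items() if a != b)
    expected = n * n - sum(count * count for count in n_c.values())
    if expected == 0:
        return math.nan  # fewer than two distinct labels: alpha is undefined
    return 1.0 - (n - 1) * observed / expected


# Illustrative reliability data (not from this study): 12 units x 4 coders.
RATINGS = [
    [1, 1, None, 1], [2, 2, 3, 2], [3, 3, 3, 3], [3, 3, 3, 3],
    [2, 2, 2, 2], [1, 2, 3, 4], [4, 4, 4, 4], [1, 1, 2, 1],
    [2, 2, 2, 2], [None, 5, 5, 5], [None, None, 1, 1], [None, 3, None, None],
]

if __name__ == "__main__":
    print(round(krippendorff_alpha_nominal(RATINGS), 3))  # 0.743
```

Units labeled by only one coder (the last row) are skipped because they carry no information about agreement. For ordinal or interval labels, the nominal disagreement weight used here would have to be replaced by the corresponding distance metric.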

| N1 | Positive Insights | WP | Count |
|---|---|---|---|
| SEO | SEO is one of the main digital marketing strategies; consultants, agencies, and freelancers share tutorials and tricks for ranking in search engines. | 4.51 | 691 |
| SMM | SMM is one of the main pillars of digital marketing because of the high level of interaction within communities and the engagement it generates when carried out correctly. | 4.08 | 603 |
| Content Marketing | Content marketing is the main technique companies use to generate original content that attracts their clients' interest; this content is published to enhance user interaction on social networks. | 3.91 | 593 |

| N2 | Neutral Insights | WP | Count |
|---|---|---|---|
| Influencers Marketing | The credibility of influencers and of their follower communities on social networks is sometimes questioned, although influencers have an impact and presence in digital marketing. | 3.42 | 572 |
| Tools | There are many tools, each with specific functions, for developing digital marketing strategies and applying them correctly. | 3.71 | 583 |
| Startups | Startups use digital marketing to develop their strategies in digital environments; it is one of the main techniques this type of company uses to promote its products. | 2.54 | 460 |

| N3 | Negative Insights | WP | Count |
|---|---|---|---|
| Blackhat Strategies | Strategies that position companies quickly and efficiently but, in the medium and long term, are penalized by search engines, which de-index the affected web pages from the SERPs. | 3.01 | 361 |
| JavaScript | Implementing this type of scripting code raises issues for search positioning and digital marketing; it is one of the main obstacles discussed on social networks regarding the technical implementation of digital marketing strategies. | 1.91 | 297 |

| Method | Sentiments Identification | Topics Identification | Communities Analysis | UGC Analysis | Node Analysis | Data Visualization |
|---|---|---|---|---|---|---|
| Method 1 | - | ✓ | ✓ | ✓ | ✓ | ✓ |
| Method 2 | ✓ | ✓ | - | ✓ | ✓ | - |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Saura, J.R.; Reyes-Menendez, A.; Filipe, F. Comparing Data-Driven Methods for Extracting Knowledge from User Generated Content. J. Open Innov. Technol. Mark. Complex. 2019, 5, 74. https://doi.org/10.3390/joitmc5040074
