Python TensorFlow Big Data Analysis for the Security of Korean Nuclear Power Plants

Abstract

1. Introduction

2. Related Work

3. Python TensorFlow Big Data Analysis for the Security of Korean Nuclear Power Plants

3.1. Define Data Features

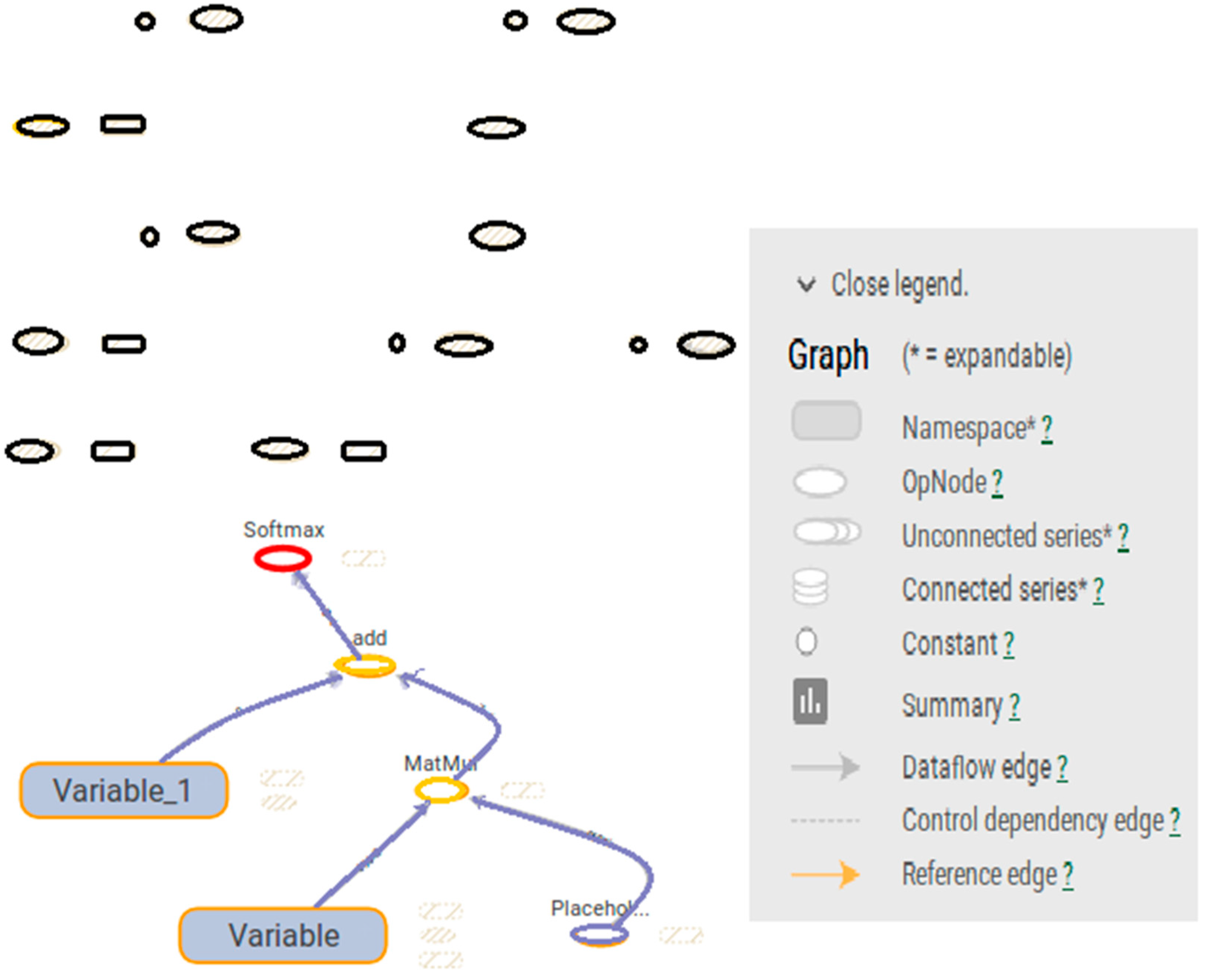

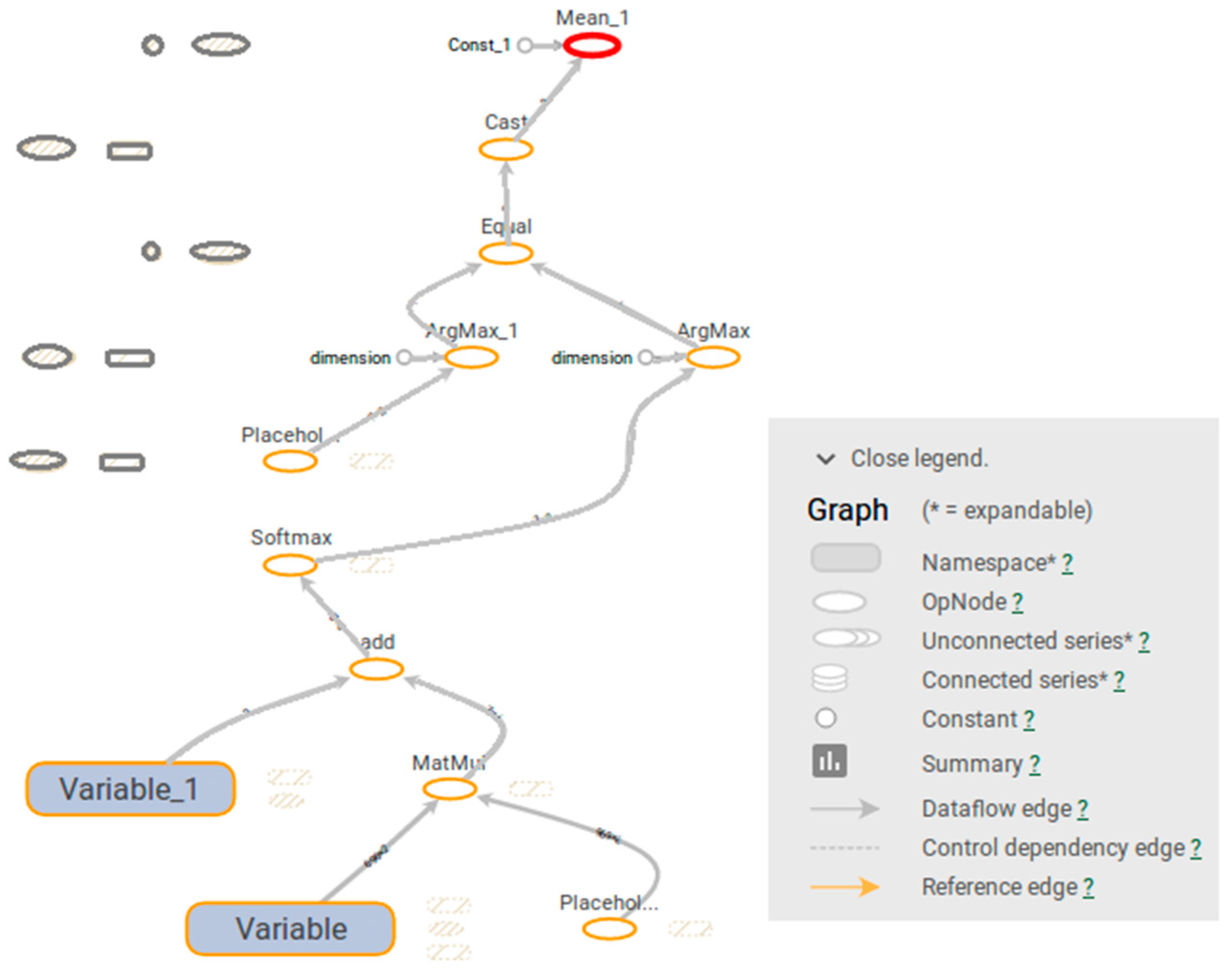

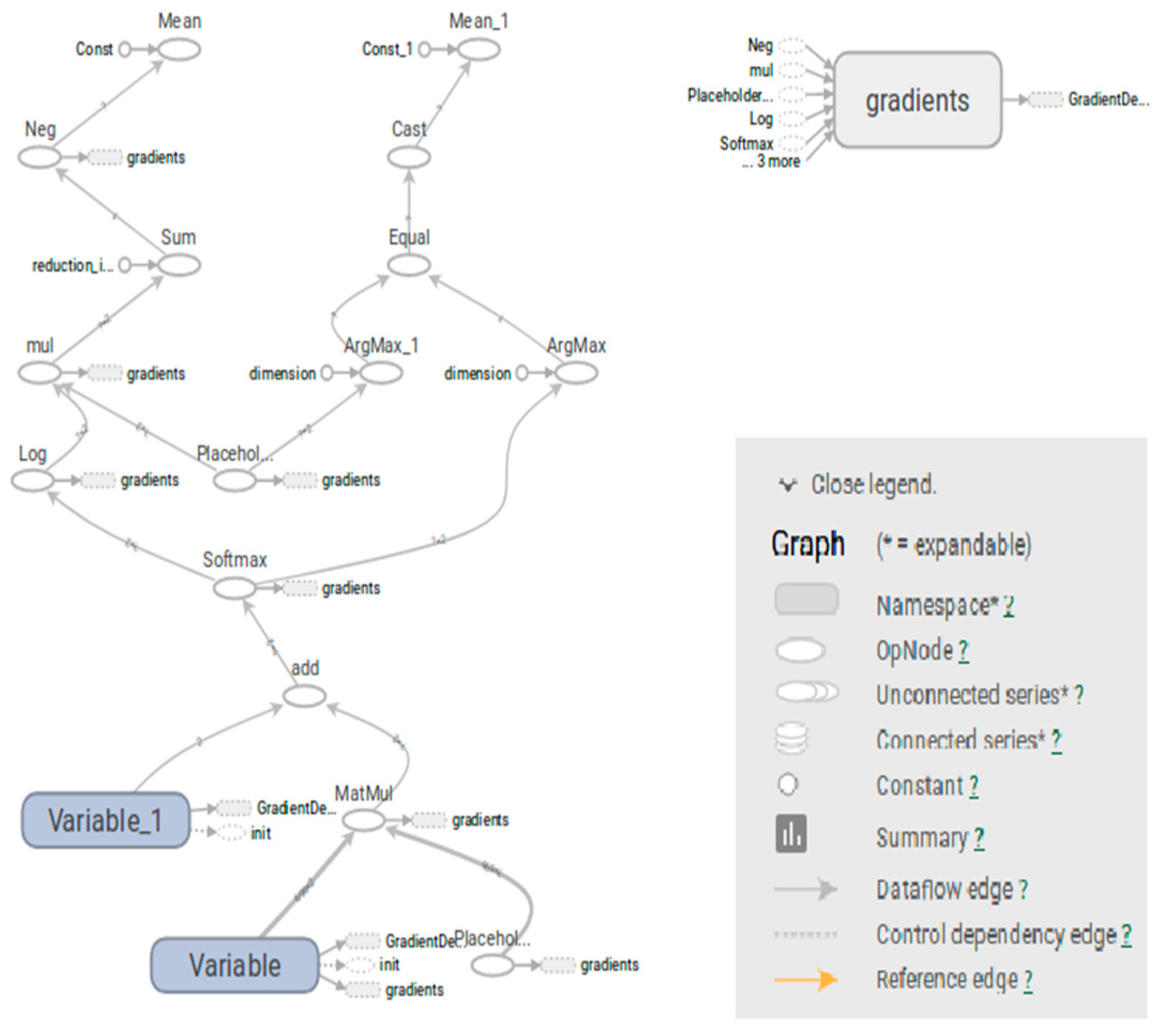

3.2. Data Learning

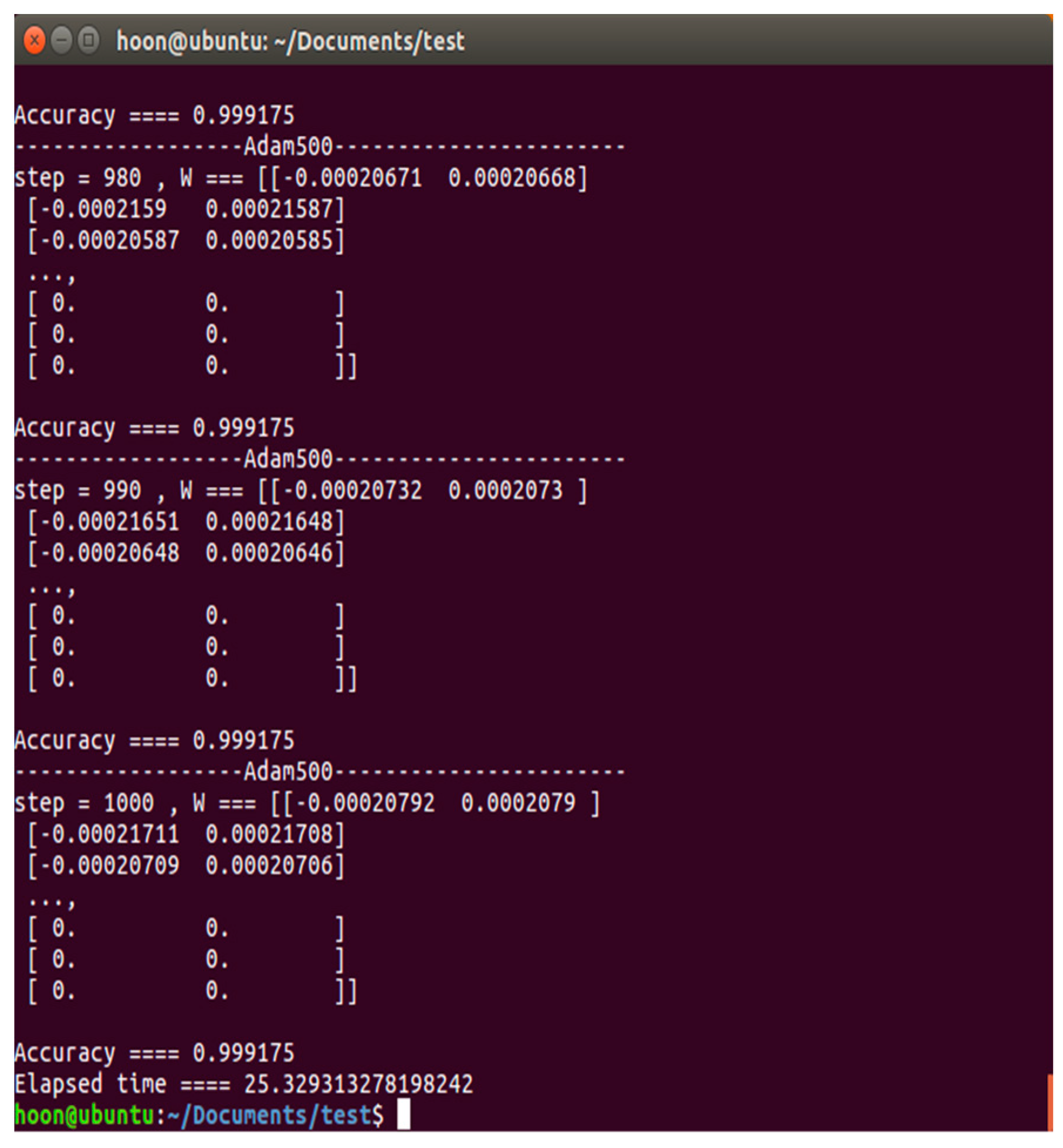

3.3. Analysis of Experiment Results and Performance Evaluation

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Feature | # of Planes | Description |
|---|---|---|
| Stone color | 3 | Player stone/opponent stone/empty |
| Ones | 1 | A constant plane filled with 1 |
| Turns since | 8 | How many turns since a move was played |
| Liberties | 8 | Number of liberties (empty adjacent points) |
| Capture size | 8 | How many opponent stones would be captured |
| Self-atari size | 8 | How many of own stones would be captured |
| Liberties after move | 8 | Number of liberties after this move is played |
| Ladder capture | 1 | Whether a move at this point is a successful ladder capture |
| Ladder escape | 1 | Whether a move at this point is a successful ladder escape |
| Sensibleness | 1 | Whether a move is legal and does not fill its own eyes |
| Zeros | 1 | A constant plane filled with 0 |
| Player color | 1 | Whether current player is black |

| Log | Contents |
|---|---|
| Log of common information | WRK_IDX: “xxxxxx” SERVER_FLAG: “0” USBSERIAL: “AAFxxxxxxxx” MNUM: “xxxxxxxxxx-0108” USER_ID: “xxxxxxxx” IP_ADDR: “xxx.xxx.xxx.xxx” ONOFF_FLAG: “xx” WRKLOG_FILENAME: “xxxxxxxx_setup.exe” WRKLOG_SRC: “C:\Users\User\Desktop” WRKLOG_DST: “USB” WRKLOG_TYPE: “2” LOG_DT: “2017-09-17 03:24:01.0” WORK_DT: “2017-09-17 18:12:43.0” GROUP_CD: “xxxxxxxx” DEL_FLAG: “x” WORK_IP: “xxx.xxx.xxx.xxx” WORK_USER_NM: “null” WORK_GROUP_CD: “null” WORK_GROUP_NM: “null” WORK_SERVER_IP: “xxx.xxx.xxx.xxx” WORK_SERVER_NM: “nProtect Solution” USB_CMT: “” |
| Log of information leakage | WRK_IDX: “xxxxxx” SERVER_FLAG: “1” USBSERIAL: “AAFxxxxxxxx” MNUM: “xxxxxxxxxxxx-0001” USER_ID: “xxxxxxx” IP_ADDR: “xxx.xxx.xxx.xxx” ONOFF_FLAG: “xx” WRKLOG_FILENAME: “signCert.der” WRKLOG_SRC: “USB” WRKLOG_DST: “USB” WRKLOG_TYPE: “x” LOG_DT: “2017-09-19 09:19:04.0” WORK_DT: “2017-09-19 09:19:06.0” GROUP_CD: “xxxxxxxx” USB_CMT: “” DEL_FLAG: “x” WORK_IP: “xxx.xxx.xxx.xxx” WORK_USER_NM: “null” WORK_GROUP_CD: “null” WORK_GROUP_NM: “null” WORK_SERVER_IP: “xxx.xxx.xxx.xxx” WORK_SERVER_NM: “nProtect Solution” |
| Log of Sniper | [SNIPER-1821] [Attack_Name=(xxxxx)TCP DRDOS Attack], [Time=2017/09/13 09:03:38], [Hacker=xxx.xxx.xxx.xxx], [Victim=xxx.xxx.xxx.xxx], [Protocol=tcp/xxxxx], [Risk=xxx], [Handling=xxx], [Information=], [SrcPort=xxx], [HackType=xxxxx] |
| Log of Exploit-Malware | DETECT\|WAF\|2017-09-12 09:36:57\|10.221.0.97\|v4\|xxx.xxx.xxx.xxx\|xxxxxx\|xxx.xxx.xxx.xxx\|xxxx\|메인홈페이지 (main homepage)\|Error Page Cloaking_404\|중간 (medium)\|탐지 (detected)\|xxx\|에러페이지 클로킹 (error page cloaking)\|status code 404\|http\|www.xxx.xx.xx\|478\|GET/gate/js/write.js HTTP/1.1 Accept: application/javascript, */*;q=0.8 Referer: http://www.xxx.xx.xx/gate/xxxxxxxx.do Accept-Language: ko-KR User-Agent: Mozilla/5.0 Accept-Encoding: xxxx, deflate Host: www.xxxx.xx.xx DNT: xxx Connection: Keep-Alive Cookie: WCN_KHNPHOME=xxxxxxxxxxxxxxxxxxxx WCN_GATE=xxxxxxxxxxxxxxxxx |
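The bracketed `[key=value]` layout of the Sniper row lends itself to a simple field extractor. The following is a minimal sketch, assuming the layout shown in the sample row holds for other Sniper entries; the function name `parse_sniper_log` and the returned dictionary are hypothetical and are not taken from the source.

```python
import re

# Hypothetical helper: the [key=value] layout is copied from the sample
# "Log of Sniper" row; the function name and return type are assumptions.
FIELD_RE = re.compile(r"\[([A-Za-z_]+)=([^\]]*)\]")

def parse_sniper_log(line: str) -> dict:
    """Return the key=value fields found inside square brackets."""
    return {key: value for key, value in FIELD_RE.findall(line)}

sample = (
    "[SNIPER-1821] [Attack_Name=(xxxxx)TCP DRDOS Attack], "
    "[Time=2017/09/13 09:03:38], [Hacker=xxx.xxx.xxx.xxx], "
    "[Victim=xxx.xxx.xxx.xxx], [Protocol=tcp/xxxxx], [Risk=xxx], "
    "[Handling=xxx], [Information=], [SrcPort=xxx], [HackType=xxxxx]"
)

fields = parse_sniper_log(sample)
print(fields["Attack_Name"])  # (xxxxx)TCP DRDOS Attack
print(sorted(fields))         # field names, without the [SNIPER-1821] tag
```

The leading `[SNIPER-1821]` tag carries no `=` and is therefore skipped; an empty value such as `[Information=]` is kept as an empty string.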

| Feature | # of Planes | Description |
|---|---|---|
| Information leakage | 23 | Value of each item without a date (more detail is included in the patent, which has been excluded) |
| Printout security | 23 | |
| ExploitMalware | 10 | |
| Local Worm Detected | 10 | |
| SniperIPS | 10 | |
| Virusspware | 5 | |
| Total | 81 | |
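The plane counts in the table can be checked against the stated total with a few lines of Python. This is a sketch only: the counts are copied from the table, while laying the groups out as contiguous slices of one flat input vector (and the helper name `plane_slices`) is an assumption made for illustration.

```python
# Plane counts copied from the feature table above. The group order and
# the contiguous-slice layout are assumptions, not stated in the source.
FEATURE_PLANES = {
    "Information leakage": 23,
    "Printout security": 23,
    "ExploitMalware": 10,
    "Local Worm Detected": 10,
    "SniperIPS": 10,
    "Virusspware": 5,
}

def plane_slices(planes: dict) -> dict:
    """Map each feature group to its (start, stop) range in one flat vector."""
    slices, start = {}, 0
    for name, count in planes.items():
        slices[name] = (start, start + count)
        start += count
    return slices

slices = plane_slices(FEATURE_PLANES)
total = sum(FEATURE_PLANES.values())
print(total)                # 81
print(slices["SniperIPS"])  # (66, 76)
```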

| No. | Order | Algorithm | Learning Method | Accuracy | Time (s) | Comparison Result |
|---|---|---|---|---|---|---|
| 1 | 1st | Adam algorithm | Single learning | 0.9989 | 15.86 | Faster than G-Descent (16.83) |
| 2 | 1st | G-Descent algorithm | Single learning | 0.9989 | 32.69 | Accuracy is the same as that of Adam |
| 3 | 1st | CNN algorithm | Single learning | 0.9510 | 370.06 | Exceeded time |
| 1 | 2nd | Adam algorithm | Noise insertion | 0.9991 | 44.62 | Faster than G-Descent (0.68) |
| 2 | 2nd | G-Descent algorithm | Noise insertion | 0.9994 | 45.30 | Accuracy is higher than Adam (0.0003) |
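To make the Adam versus gradient descent comparison concrete, the sketch below implements both update rules in plain Python and reports accuracy and wall-clock time for each. It is not the experiment from the table: the data are synthetic and linearly separable, the model is a three-weight logistic regression, and the learning rates, step counts, and all function names are assumptions chosen for illustration.

```python
import math
import random
import time

def make_data(n=200, seed=0):
    """Synthetic toy data (NOT the plant logs): label is 1 when x1 + x2 > 0."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x1, x2 = rng.uniform(-1, 1), rng.uniform(-1, 1)
        data.append(((x1, x2, 1.0), 1.0 if x1 + x2 > 0 else 0.0))  # 1.0 = bias input
    return data

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gradient(w, data):
    """Mean gradient of the logistic loss with respect to the weights."""
    g = [0.0] * len(w)
    for x, y in data:
        err = sigmoid(sum(wi * xi for wi, xi in zip(w, x))) - y
        for i, xi in enumerate(x):
            g[i] += err * xi / len(data)
    return g

def accuracy(w, data):
    hits = sum((sum(wi * xi for wi, xi in zip(w, x)) > 0) == (y == 1.0)
               for x, y in data)
    return hits / len(data)

def train_gd(data, steps=500, lr=0.5):
    """Plain gradient descent: w <- w - lr * g."""
    w = [0.0, 0.0, 0.0]
    for _ in range(steps):
        g = gradient(w, data)
        w = [wi - lr * gi for wi, gi in zip(w, g)]
    return w

def train_adam(data, steps=500, lr=0.05, b1=0.9, b2=0.999, eps=1e-8):
    """Adam: bias-corrected first and second moment estimates of the gradient."""
    w, m, v = [0.0] * 3, [0.0] * 3, [0.0] * 3
    for t in range(1, steps + 1):
        g = gradient(w, data)
        m = [b1 * mi + (1 - b1) * gi for mi, gi in zip(m, g)]
        v = [b2 * vi + (1 - b2) * gi * gi for vi, gi in zip(v, g)]
        m_hat = [mi / (1 - b1 ** t) for mi in m]
        v_hat = [vi / (1 - b2 ** t) for vi in v]
        w = [wi - lr * mh / (math.sqrt(vh) + eps)
             for wi, mh, vh in zip(w, m_hat, v_hat)]
    return w

data = make_data()
for name, train in (("G-Descent", train_gd), ("Adam", train_adam)):
    start = time.perf_counter()
    weights = train(data)
    elapsed = time.perf_counter() - start
    print(f"{name}: accuracy={accuracy(weights, data):.4f} time={elapsed:.3f}s")
```

Timings from a toy problem like this say nothing about the figures in the table; the sketch only shows how the two update rules differ and how accuracy and elapsed time can be collected side by side.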

| No. | Order of Experiment Scenario | Learning Count | Remarks |
|---|---|---|---|
| 1 | G-Descent algorithm ⇒ Adam algorithm | 500:500 | Single learning |
| 2 | Adam algorithm ⇒ G-Descent algorithm | 500:500 | Single learning |
| 3 | G-Descent algorithm ⇒ Adam algorithm | 500:500 | Noise insertion |
| 4 | Adam algorithm ⇒ G-Descent algorithm | 500:500 | Noise insertion |
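The table does not say how noise is inserted in scenarios 3 and 4. One common reading, shown here purely as an assumption, is to perturb copies of the feature rows with zero-mean Gaussian noise and train on the clean and noisy rows together. The helper name `insert_noise`, the noise scale `sigma`, and the sample rows are all hypothetical.

```python
import random

def insert_noise(rows, sigma=0.05, seed=0):
    """Return a noisy copy of `rows`; the original rows are left untouched.

    Assumption: zero-mean Gaussian noise added to every feature value.
    The source does not specify the noise model that was actually used.
    """
    rng = random.Random(seed)
    return [[x + rng.gauss(0.0, sigma) for x in row] for row in rows]

clean = [[0.0, 1.0, 0.0], [1.0, 0.0, 1.0]]  # made-up feature rows
noisy = insert_noise(clean)
augmented = clean + noisy                   # clean rows plus perturbed copies
print(len(augmented))  # 4
```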

| No. | Scenario | Learning Method | Accuracy | Time (s) | Result |
|---|---|---|---|---|---|
| 1 | Adam algorithm ⇒ G-Descent algorithm | Single learning | 0.998351 | 14.46075 | Faster than G-D ⇒ Adam (10.86856) |
| 2 | G-Descent algorithm ⇒ Adam algorithm | Single learning | 0.999175 | 25.32931 | Accuracy is higher than Adam ⇒ G-D (0.000824) |
| 3 | Adam algorithm ⇒ G-Descent algorithm | Noise insertion | 0.999133 | 49.86963 | The two algorithms have the same accuracy |
| 4 | G-Descent algorithm ⇒ Adam algorithm | Noise insertion | 0.999133 | 44.9327 | Faster than Adam ⇒ G-D (4.93693) |
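The parenthesised figures in the Result column are differences between the paired rows, and they can be reproduced directly from the Accuracy and Time columns. The short check below uses only values copied from the table; the dictionary keys and the helper name `deltas` are illustrative.

```python
# (accuracy, time in seconds) copied from the results table above.
results = {
    ("single", "Adam=>GD"): (0.998351, 14.46075),
    ("single", "GD=>Adam"): (0.999175, 25.32931),
    ("noise", "Adam=>GD"): (0.999133, 49.86963),
    ("noise", "GD=>Adam"): (0.999133, 44.9327),
}

def deltas(method):
    """Return (accuracy gap, time gap) of GD=>Adam minus Adam=>GD."""
    acc_a, t_a = results[(method, "Adam=>GD")]
    acc_g, t_g = results[(method, "GD=>Adam")]
    return round(acc_g - acc_a, 6), round(t_g - t_a, 5)

print(deltas("single"))  # (0.000824, 10.86856)
print(deltas("noise"))   # (0.0, -4.93693)
```

A positive time gap means the Adam ⇒ G-Descent order finished sooner; the negative gap under noise insertion matches row 4, where G-Descent ⇒ Adam was the faster order.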

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lee, S.; Huh, J.-H.; Kim, Y. Python TensorFlow Big Data Analysis for the Security of Korean Nuclear Power Plants. Electronics 2020, 9, 1467. https://doi.org/10.3390/electronics9091467