Tomographic Feature-Based Map Merging for Multi-Robot Systems

Abstract

1. Introduction

2. Problem Description

2.1. Map Merging in Multi-Robot Systems

2.2. The Accuracy of Map Merging

3. Proposed Method

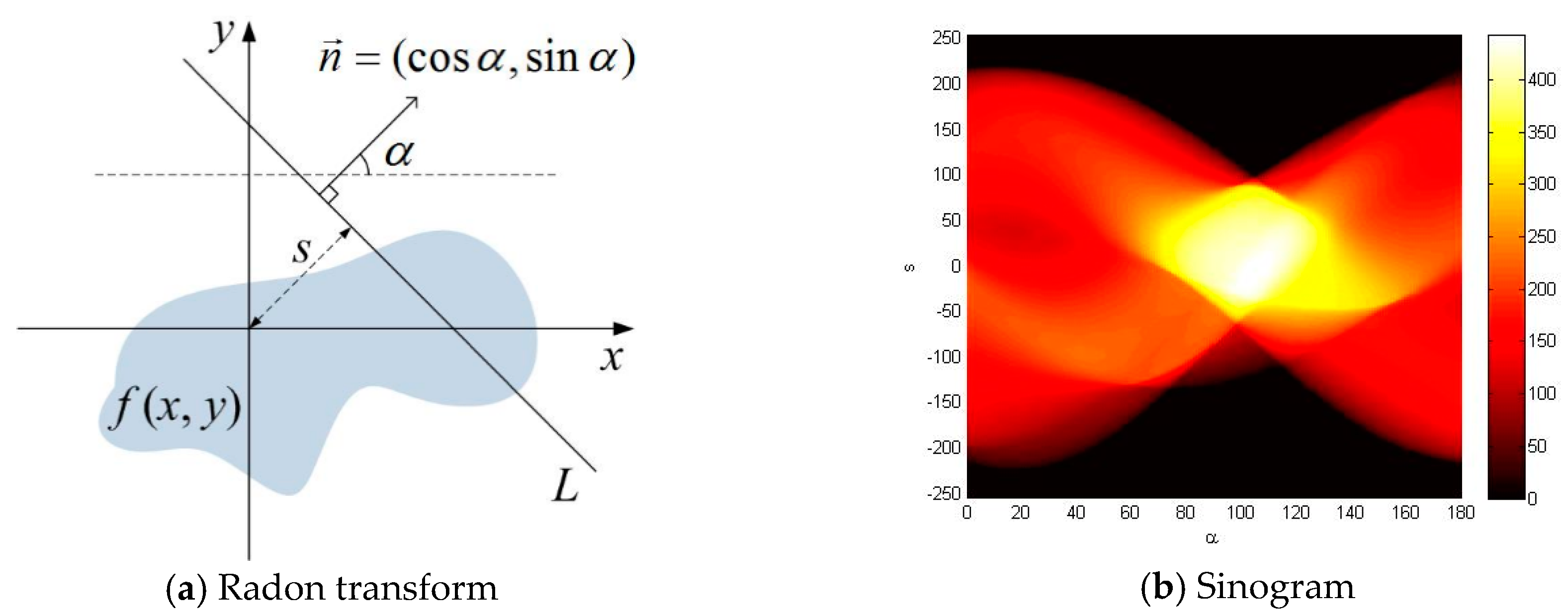

3.1. Estimation of the MTM Using Tomographic Features

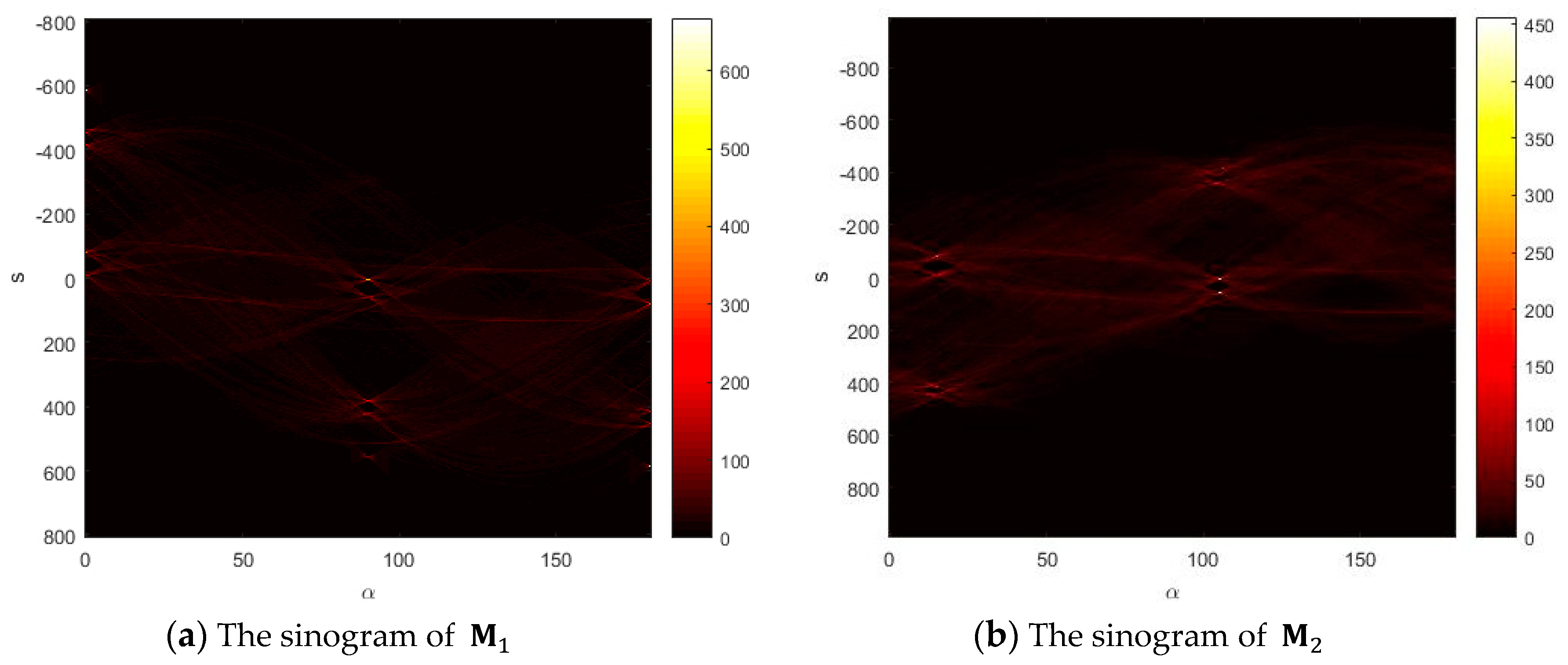

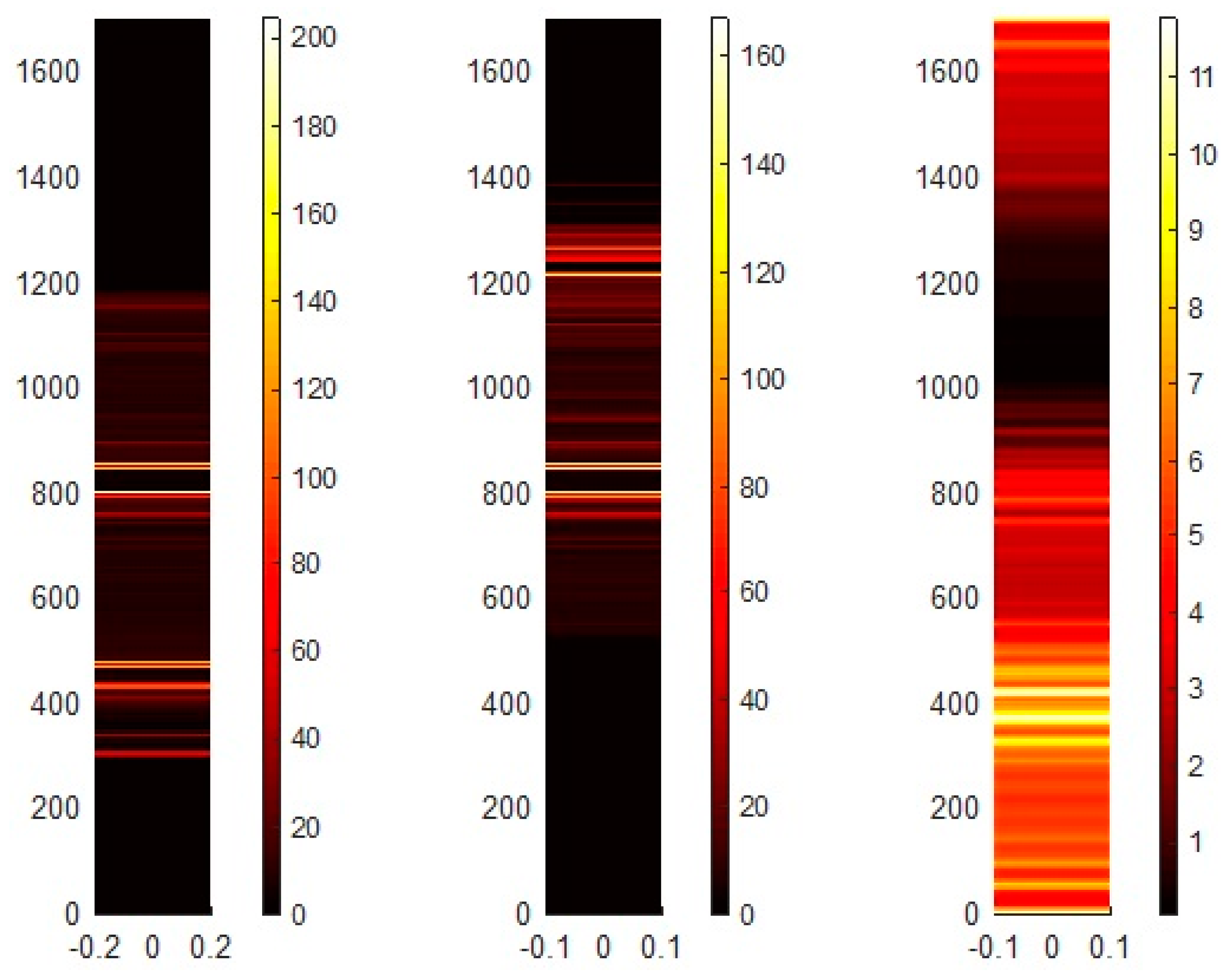

3.1.1. Estimation of a Rotation Angle

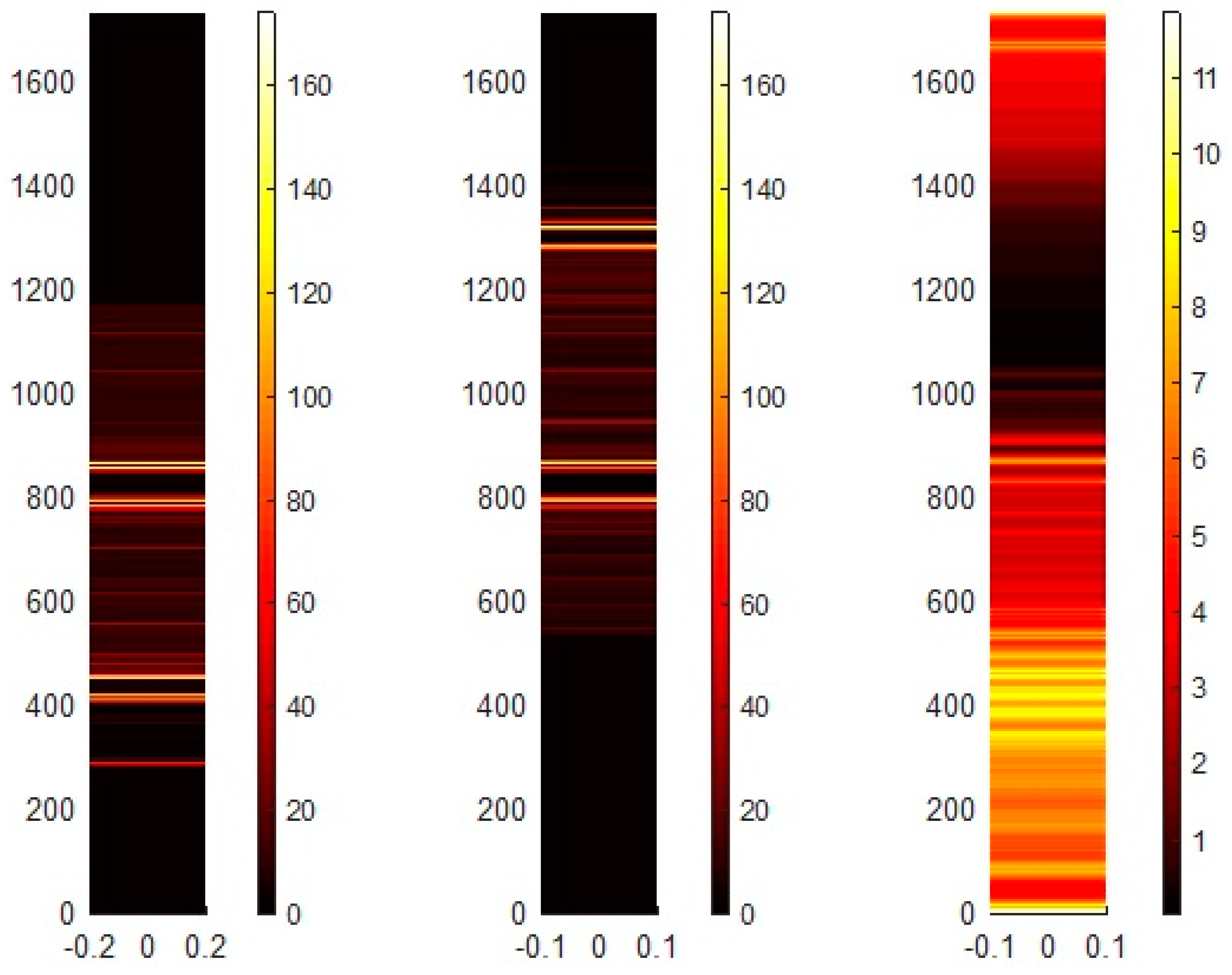

3.1.2. Estimation of - Translations

3.2. Search Space Determination with Gaussian Mixture Models

3.3. Optimization for the More Accurate MTM

4. Evaluation Results

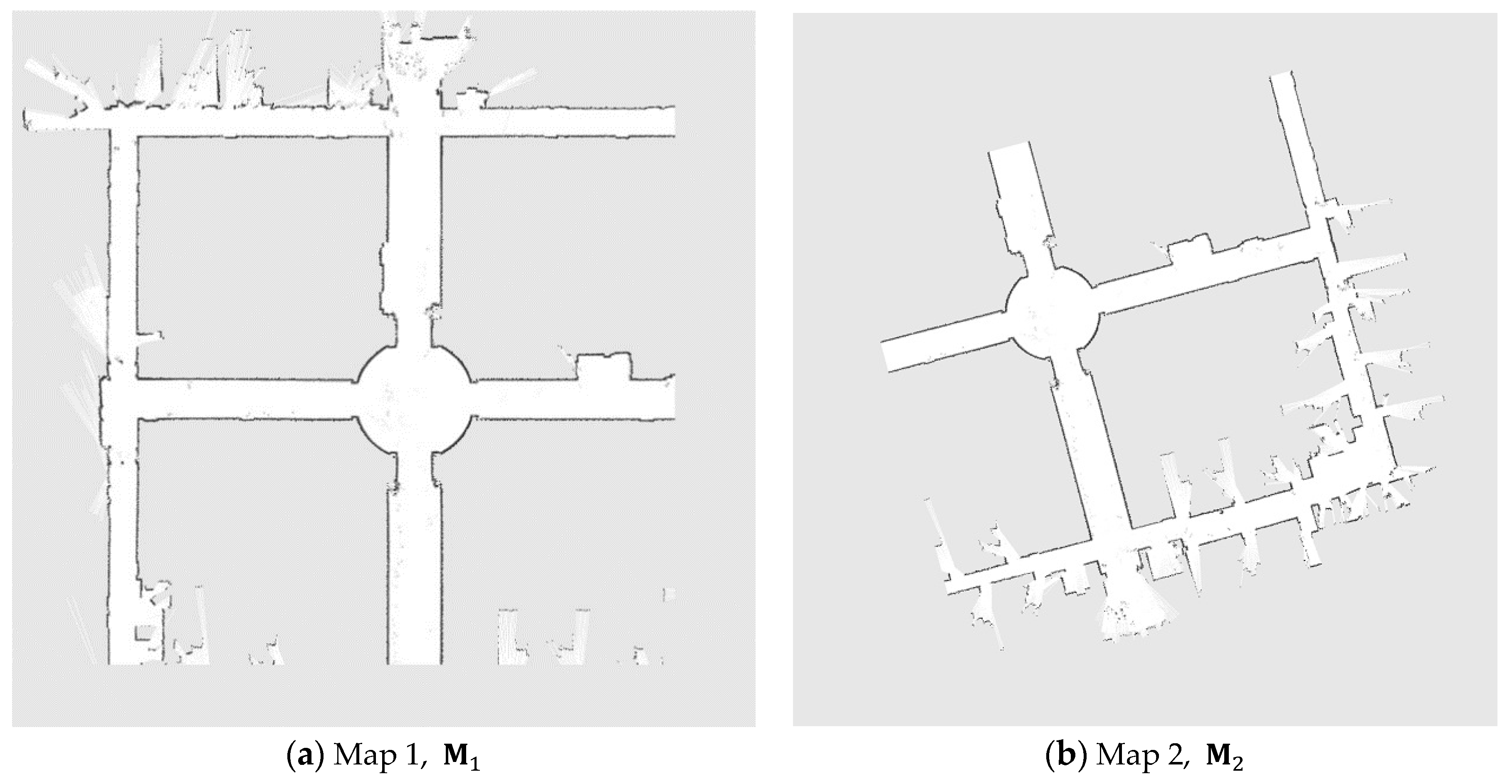

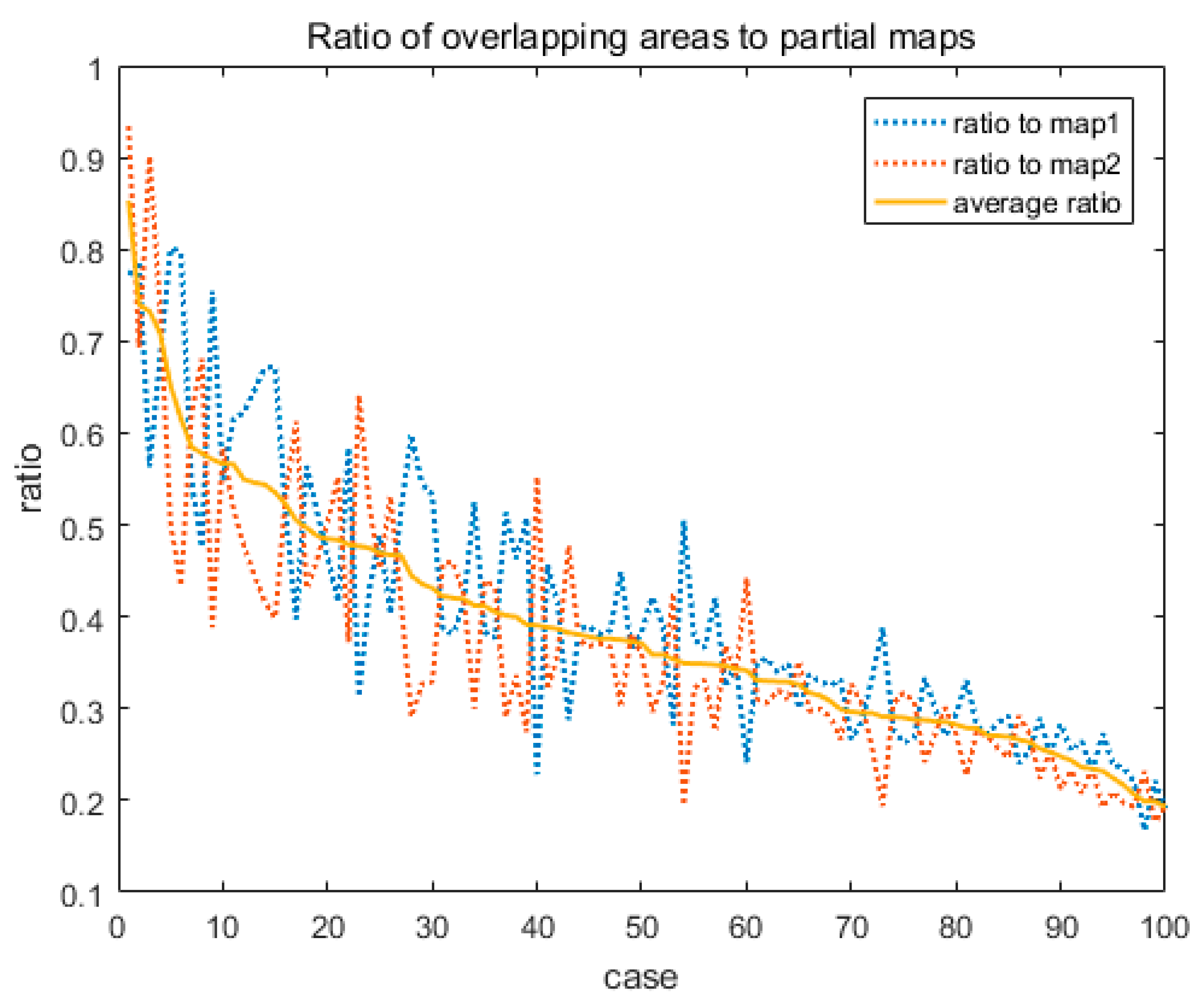

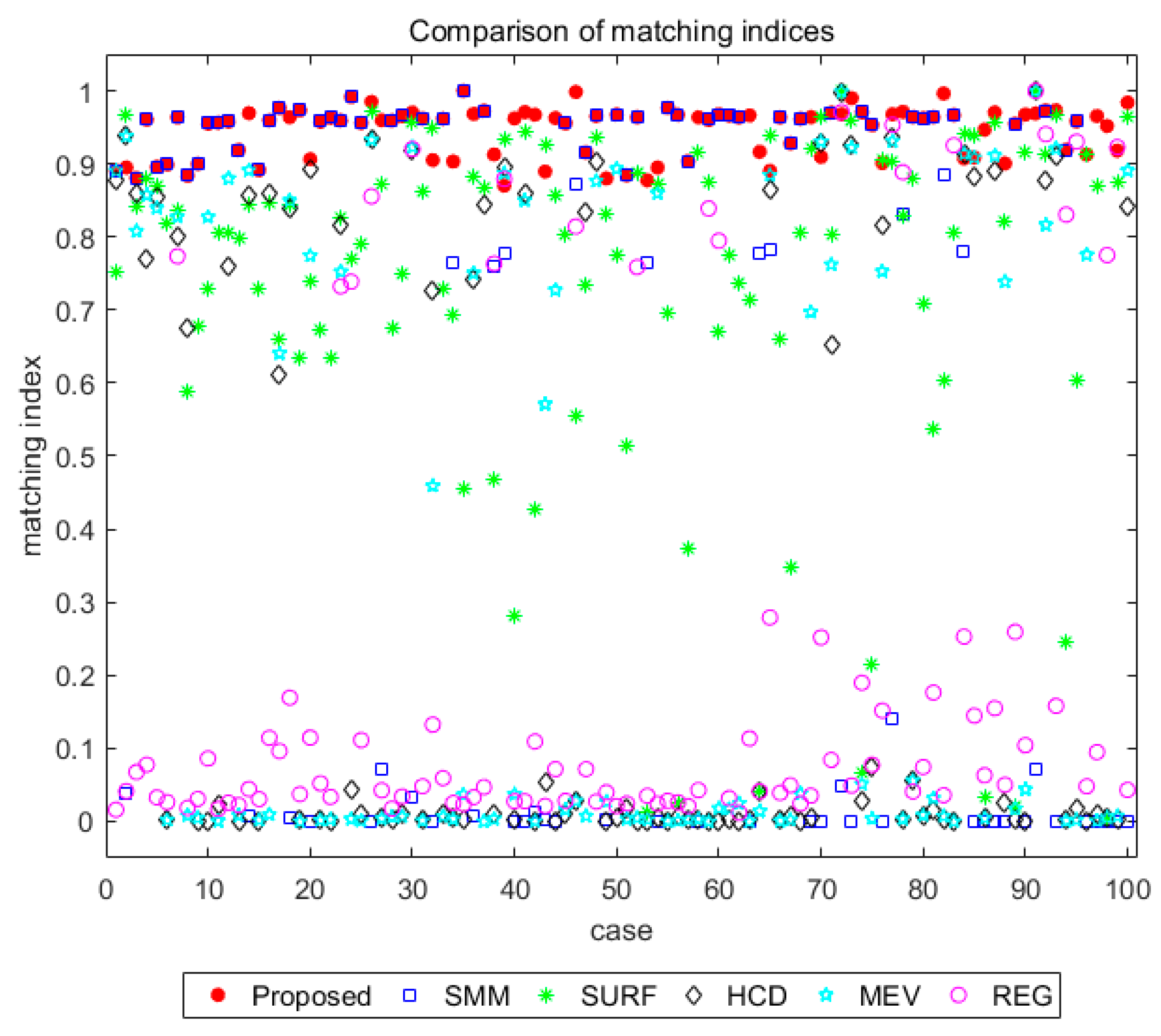

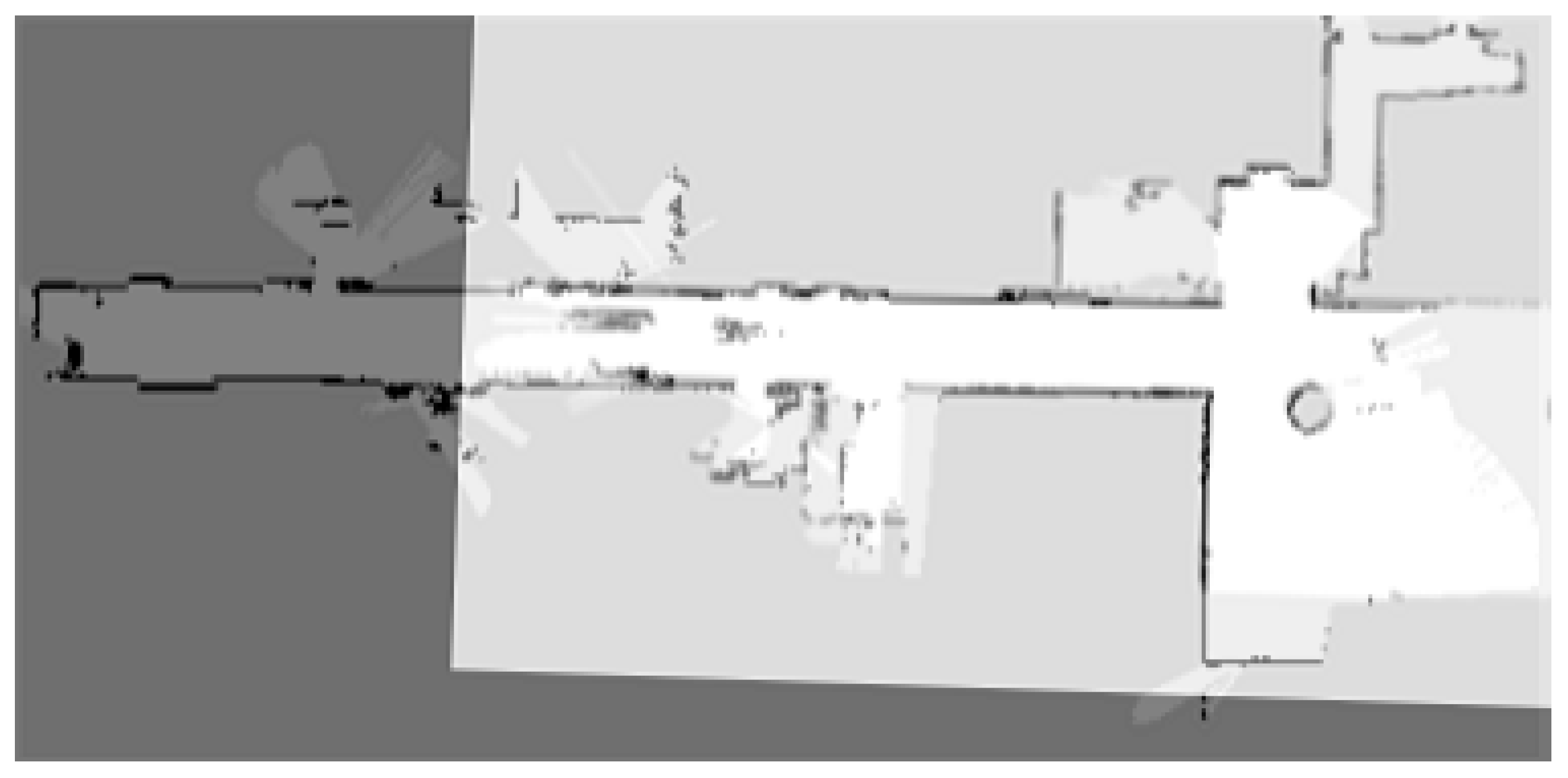

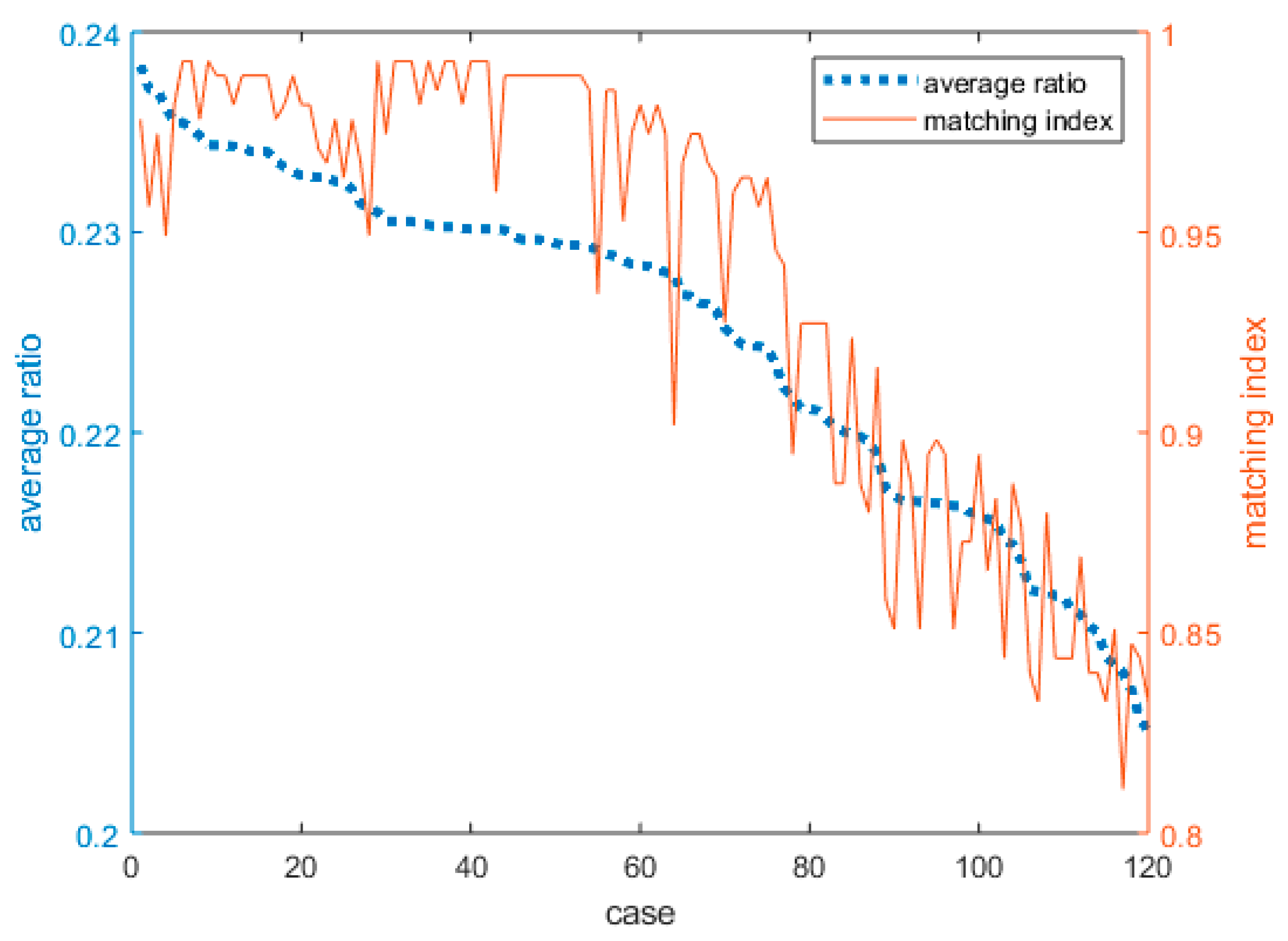

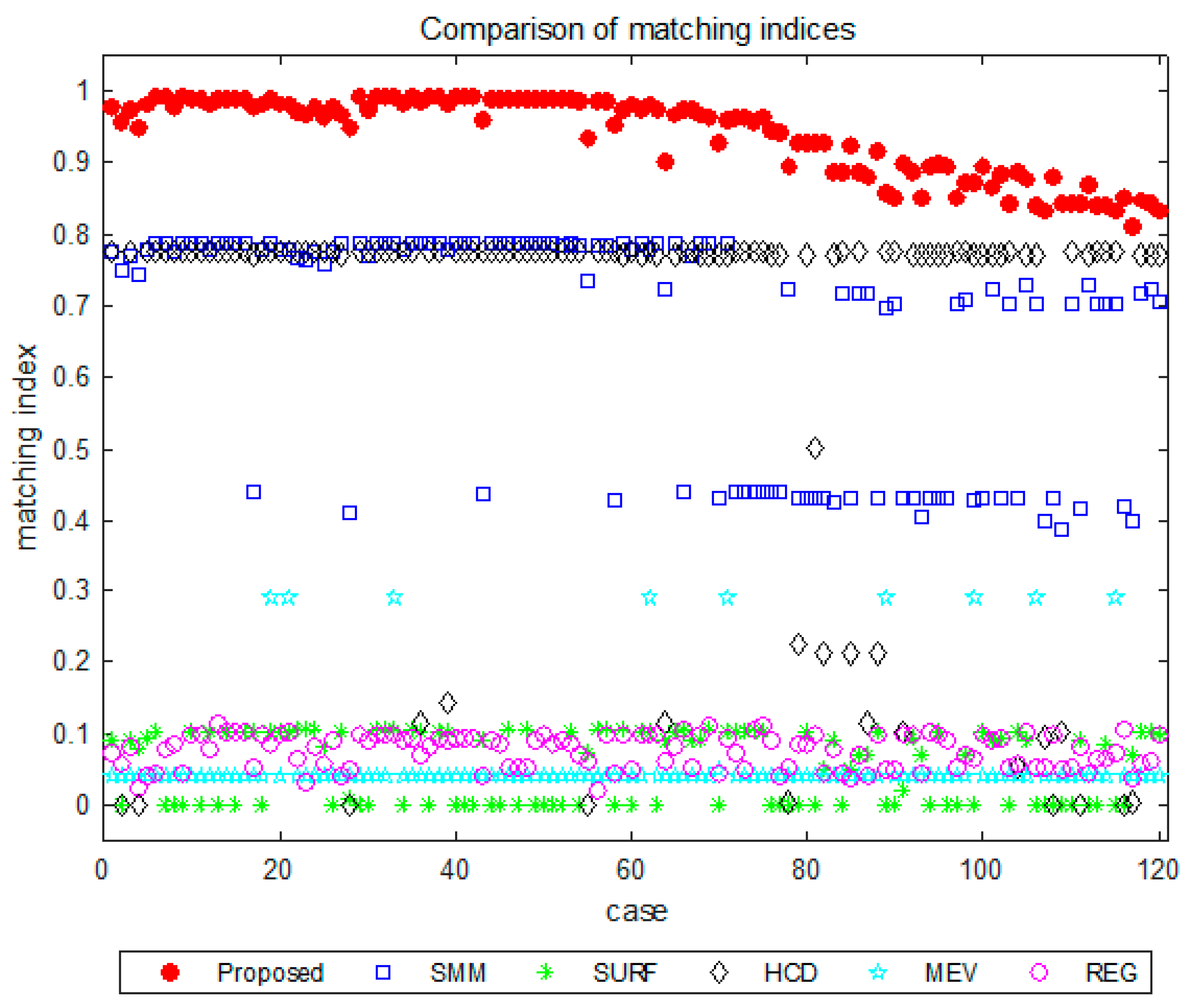

4.1. Simulation Results

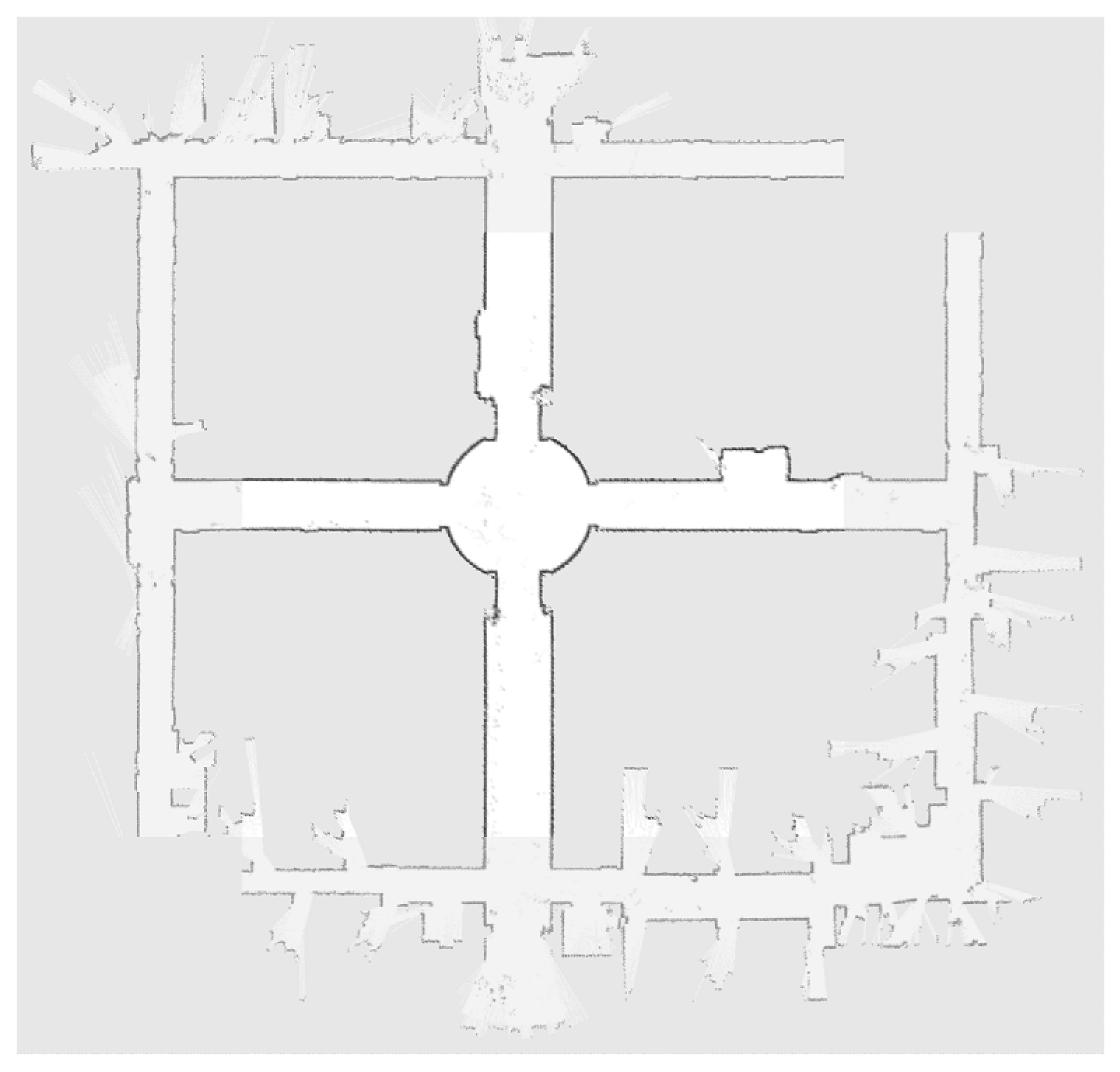

4.2. Experimental Results

5. Conclusions

Acknowledgments

Conflicts of Interest

References

- Lee, H.C.; Lee, B.H. Enhanced-spectrum-based map merging for multi-robot systems. Adv. Robot. 2013, 2, 1285–1300. [Google Scholar] [CrossRef]

- Konolige, K.; Fox, D.; Limketkai, B.; Ko, J.; Stewart, B. Map merging for distributed robot navigation. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Las Vegas, NV, USA, 27–31 October 2003. [Google Scholar]

- Zhou, X.S.; Roumeliotis, S.I. Robot-to-robot relative pose estimation from range measurements. IEEE Trans. Robot. 2008, 24, 1379–1393. [Google Scholar] [CrossRef]

- Tungadi, F.; Lui, W.L.D.; Kleeman, L.; Jarvis, R. Robust online map merging system using laser scan matching and omnidirectional vision. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010. [Google Scholar]

- Kim, B.; Kaess, M.; Fletcher, L.; Leonard, J.; Bachrach, A.; Roy, N.; Teller, S. Multiple relative pose graphs for robust cooperative mapping. In Proceedings of the IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–8 May 2010. [Google Scholar]

- Li, H.; Tsukada, M.; Nashashibi, F.; Parent, M. Multivehicle cooperative local mapping: A methodology based on occupancy grid map merging. IEEE Trans. Intell. Transp. Syst. 2014, 15, 2089–2100. [Google Scholar] [CrossRef]

- Dinnissen, P.; Givigi, S.N.; Schwartz, H.M. Map merging of multi-robot SLAM using reinforcement learning. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics, Seoul, Korea, 14–17 October 2012. [Google Scholar]

- Garcia-Cruz, X.M.; Sergiyenko, O.Y.; Tyrsa, V.; Rivas-Lopez, M.; Hernandez-Balbuena, D.; Rodriguez-Quiñonez, J.C.; Basaca-Preciado, L.C.; Mercorelli, P. Optimization of 3D laser scanning speed by use of combined variable step. Opt. Lasers Eng. 2014, 54, 141–151. [Google Scholar] [CrossRef]

- Lindner, L.; Sergiyenko, O.; Rivas-López, M.; Ivanov, M.; Rodríguez-Quiñonez, J.C.; Hernández-Balbuena, D.; Flores-Fuentes, W.; Tyrsa, V.; Muerrieta-Rico, F.N.; Mercorelli, P. Machine vision system errors for unmanned aerial vehicle navigation. In Proceedings of the IEEE International Symposium on Industrial Electronics, Edinburgh, UK, 19–21 June 2017; pp. 1615–1620. [Google Scholar]

- Lee, H.C.; Lee, S.H.; Choi, M.H.; Lee, B.H. Probabilistic map merging for multi-robot RBPF-SLAM with unknown initial poses. Robotica 2012, 30, 205–220. [Google Scholar] [CrossRef]

- Lee, H.C.; Cho, Y.J.; Lee, B.H. Accurate map merging with virtual emphasis for multi-robot systems. Electron. Lett. 2013, 49, 932–934. [Google Scholar] [CrossRef]

- Birk, A.; Carpin, S. Merging occupancy grid maps from multiple robots. Proc. IEEE 2006, 94, 1384–1397. [Google Scholar] [CrossRef]

- León, A.; Barea, R.; Bergasa, L.M.; López, E.; Ocaña, M.; Schleicher, D. SLAM and map merging. J. Phys. Agents 2009, 3, 13–23. [Google Scholar]

- Wang, K.; Jia, S.; Li, Y.; Li, X.; Guo, B. Research on map merging for multi-robotic system based on RTM. In Proceedings of the IEEE International Conference on Information and Automation, Shenyang, China, 6–8 June 2012. [Google Scholar]

- Saeedi, S.; Paull, L.; Trentini, M.; Li, H. Multiple robot simultaneous localization and mapping. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011. [Google Scholar]

- Saeedi, S.; Paull, L.; Trentini, M.; Li, H. A neural network-based multiple robot simultaneous localization and mapping. IEEE Trans. Neural Netw. 2011, 22, 2376–2387. [Google Scholar] [CrossRef] [PubMed]

- Howard, A. Multi-robot simultaneous localization and mapping using particle filters. Int. J. Robot. Res. 2006, 25, 1243–1256. [Google Scholar] [CrossRef]

- Carpin, S. Fast and accurate map merging for multi-robot systems. Auton. Robots 2008, 25, 305–316. [Google Scholar] [CrossRef]

- Lee, H.C.; Lee, B.H. Improved feature map merging using virtual supporting lines for multi-robot systems. Adv. Robot. 2012, 25, 1675–1696. [Google Scholar] [CrossRef]

- Lee, H.; Kim, K.; Kwon, Y.; Hong, E. Real-time particle swarm optimization on FPGA for the optimal message-chain structure. Electronics 2018, 7, 274. [Google Scholar] [CrossRef]

- Lee, H.; Kim, K.; Kwon, Y. Efficient and reliable bus controller operation with particle swarm optimization in MIL-STD-1553B. In Proceedings of the Asia-Pacific International Symposium on Aerospace Technology, Seoul, Korea, 16–18 October 2017. [Google Scholar]

- Resnick, J. The radon transforms and some of its applications. IEEE Trans. Acoust. Speech Signal Process. 1985, 33, 338–339. [Google Scholar] [CrossRef]

- Stachniss, C. Robotics 2D-Laser Datasets. Available online: http://www.ipb.uni-bonn.de/datasets (accessed on 12 December 2019).

- Bay, H.; Tuytelaars, T.; Gool, L.V. SURF: Speeded up robust features. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2006. [Google Scholar]

- Harris, C.; Stephens, M. A combined corner and edge detector. In Proceedings of the Alvey Vision Conference, Roke Manor, UK, 1 January 1988. [Google Scholar]

- Shi, J.; Tomasi, C. Good features to track. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 1994. [Google Scholar]

- Zhang, C.; Weingartner, S.; Moeller, S.; Uğurbil, K.; Akçakaya, M. Fast GPU Implementation of a scan-specific deep learning reconstruction for accelerated magnetic resonance imaging. In Proceedings of the IEEE International Conference on Electro/Information Technology, Rochester, MI, USA, 3–5 May 2018; pp. 399–403. [Google Scholar]

| Algorithm: | Monte-Carlo optimization for the more accurate MTM |

| Input: | Given individual maps: and |

| The mean set and variance set of the UGMM: and | |

| The mean vector set and covariance set of the MGMM: , , and | |

| Output: | The best MTM in |

| 1: | Determine the number of samples and , where |

| 2: | Initialize the objective function |

| 3: | Sample one-dimensional candidates for the rotation angle in Eq. (27) |

| 4: | Sample two-dimensional candidates for the translation amounts in Eq. (28) |

| 5: | Obtain the set of three-dimensional combined candidates, by combining and |

| 6: | Define the normal distribution with and |

| 7: | for= 1 todo |

| 8: | Pick the -the candiate from |

| 9: | Obtain by transforming using |

| 10: | Calculate the -th value of the objective function in Eq. (29) |

| 11: | end for |

| 12: | Take indicating the maximum of the objective function in Eq. (30) |

| 13: | return |

| Type | Proposed. | SMM | SURF | HCD | MEV | REG | |

|---|---|---|---|---|---|---|---|

| Sim. | Avg. | 0.9457 | 0.5970 | 0.7244 | 0.3209 | 0.3487 | 0.2351 |

| Std. Dev. | 0.0334 | 0.4442 | 0.2553 | 0.4122 | 0.4140 | 0.3292 | |

| Exp. | Avg. | 0.9393 | 0.6665 | 0.0543 | 0.6507 | 0.0596 | 0.0756 |

| Std. Dev. | 0.0552 | 0.1557 | 0.0483 | 0.2668 | 0.0658 | 0.0232 | |

| Type | Proposed. | Proposed. w/o MCO | SMM | SURF | HCD | MEV | REG | |

|---|---|---|---|---|---|---|---|---|

| Sim. | Avg. | 7.6219 | 3.3047 | 3.1527 | 5.3756 | 4.7475 | 4.8870 | 17.3969 |

| Std. Dev. | 1.1282 | 0.3013 | 0.4235 | 0.3948 | 0.4463 | 0.3995 | 1.2543 | |

| Exp. | Avg. | 0.3766 | 0.1781 | 0.1531 | 0.2198 | 0.4297 | 0.3017 | 0.6509 |

| Std. Dev. | 0.0432 | 0.0223 | 0.1263 | 0.0480 | 0.0907 | 0.0613 | 0.1208 | |

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lee, H. Tomographic Feature-Based Map Merging for Multi-Robot Systems. Electronics 2020, 9, 107. https://doi.org/10.3390/electronics9010107

Lee H. Tomographic Feature-Based Map Merging for Multi-Robot Systems. Electronics. 2020; 9(1):107. https://doi.org/10.3390/electronics9010107

Chicago/Turabian StyleLee, Heoncheol. 2020. "Tomographic Feature-Based Map Merging for Multi-Robot Systems" Electronics 9, no. 1: 107. https://doi.org/10.3390/electronics9010107

APA StyleLee, H. (2020). Tomographic Feature-Based Map Merging for Multi-Robot Systems. Electronics, 9(1), 107. https://doi.org/10.3390/electronics9010107