Abstract

Neural implicit SLAM systems perform well in static environments, offering higher-quality rendering and scene reconstruction than traditional dense SLAM. In dynamic real-world scenes, however, these systems often suffer from tracking drift and mapping errors. To address these problems, we propose DIN-SLAM, a neural implicit SLAM system for dynamic scenes based on optical flow and depth gradient verification. DIN-SLAM combines depth gradients, optical flow, and motion consistency to robustly filter dynamic pixels, while optimizing dynamic feature points through optical flow registration to improve tracking accuracy. The system also introduces a dynamic scene optimization strategy that uses a photometric consistency loss, a depth gradient loss, motion consistency constraints, and edge matching constraints to improve geometric consistency and reconstruction performance in dynamic environments. To reduce the interference of dynamic objects with scene reconstruction and to eliminate artifacts in scene updates, we propose a targeted rendering and ray sampling strategy based on local feature counts, which mitigates the impact of dynamic object occlusions on reconstruction. Our method supports multiple sensor inputs, including pure RGB and RGB-D. Experimental results show that our approach consistently outperforms state-of-the-art baseline methods, achieving an 83.4% improvement in Absolute Trajectory Error Root Mean Square Error (ATE RMSE) and a 91.7% improvement in Peak Signal-to-Noise Ratio (PSNR), while eliminating artifacts caused by dynamic interference. These gains significantly improve tracking and mapping in dynamic scenes.

1. Introduction

In recent years, NeRF (neural radiance field) has achieved remarkable results in novel view synthesis, demonstrating broad application potential in areas such as augmented reality (AR), virtual reality (VR), and scene reconstruction. As one of the core research topics in robotics, SLAM (Simultaneous Localization and Mapping) is regarded as a key technology for embodied intelligence and real-time environmental perception. SLAM provides foundational support for robots and other autonomous systems by incrementally modeling the environment and accurately estimating position. As NeRF performance has continued to improve, combining NeRF with SLAM has significantly enhanced the ability of SLAM systems to perform high-fidelity dense scene reconstruction and has greatly expanded the use of NeRF in real-world scenarios, such as dynamic scene reconstruction and real-time perception [1,2].

Although existing neural implicit SLAM systems perform strongly in static environments, they still face many challenges in dynamic ones, especially in practical application scenarios such as robotics, drones, or autonomous vehicles [3]. Dynamic objects introduce significant interference, such as inaccurate depth information and an abnormal distribution of dynamic pixels. This interference can lead to error accumulation during tracking and introduce artifacts in scene reconstruction. Furthermore, real-time processing of large-scale scenes places higher demands on neural implicit SLAM systems. Existing systems also often lack precise loop closure and global bundle adjustment in dynamic environments, making them susceptible to drift, which significantly degrades the quality of scene mapping [4,5].

To address these issues, recent research has focused on improving dynamic scene modeling methods and optimization strategies to increase the robustness of neural implicit SLAM systems in dynamic environments. These methods include using semantic information and motion features to segment dynamic objects, introducing optical flow or geometric constraints for more precise dynamic pixel filtering, and applying improved global optimization methods to reduce the accumulation of drift [6,7]. Despite some progress, achieving efficient and accurate dynamic scene modeling remains an important research direction in the field of neural implicit SLAM.

Current challenges in NeRF-based SLAM for dynamic scenes fall into two main areas: (1) Most systems are frame-to-frame systems based on direct methods, which limits their performance in dynamic scenes. Even with accurate dynamic masks, direct-method-based SLAM systems struggle to resolve the tracking accuracy issues caused by pixel mismatch [2,8,9,10]. (2) Because of dynamic interference in the input images, rendering artifacts persist even when accumulated tracking errors are corrected, degrading the quality of the static map [4,11,12,13].

To solve these problems, we propose DIN-SLAM, a SLAM system for dynamic environments that fuses multiple strategies with neural implicit representations [6,7,14,15,16]. We adopt a divide-and-conquer solution for tracking and mapping, integrated through the system framework. For tracking, we use semantic priors from YOLOv5 [17] to separate static and dynamic features, and accelerate inference with TensorRT. To preserve real-time performance, we avoid pixel-level deep segmentation models and instead use a depth-gradient-based probability module that integrates depth information into mask segmentation. This yields dynamic object edge masks, whose correctness is verified with sparse optical flow checks, reducing computational cost. Finally, dynamic feature points are suppressed and removed from keyframe selection and loop closure detection, which addresses the tracking accuracy limitations. For mapping, we introduce feature-point counting: local masks with high feature counts are merged into the dynamic regions, and rendering and ray sampling are suppressed there by removing rays for high-count local pixels and reducing the dynamic rendering loss according to count ratios, which eliminates artifacts. The experimental results demonstrate that our technique effectively reconstructs static maps. To summarize, our contributions are as follows:

We present DIN-SLAM, a neural radiance field SLAM framework optimized for dynamic environments. The key contributions include a depth gradient probability module, a sparse optical flow verification module, and a dynamic rendering module. This framework enables robust tracking and reconstruction in dynamic scenes while generating high-fidelity static maps free from artifacts.

Our approach begins with initial dynamic-static feature segmentation using YOLOv5-based semantic priors, which are then refined through depth gradients and sparse optical flow to optimize the masks. These masks are applied throughout the reconstruction process, offering not only lower computational costs but also faster processing speeds compared to traditional deep network-based segmentation methods.

Furthermore, we introduce a dynamic rendering loss optimization strategy based on spatial density analysis of dynamic features. This strategy seamlessly integrates dynamic mask segmentation results with local feature space distribution statistics. It employs an adaptive threshold ray sampling rejection mechanism to precisely filter out dynamic pixel interference and utilizes a hierarchical weighted loss function to penalize reconstruction errors specifically for dynamic objects. Experimental results demonstrate that we have successfully eliminated artifacts caused by dynamic interference and achieved high-quality rendering.

2. Related Work

2.1. Traditional Dynamic SLAM Methods

In traditional SLAM research, the interference that dynamic objects cause to SLAM systems has received significant attention. Because SLAM systems face engineering and real-time requirements [18,19,20,21,22], interference from dynamic objects, which are widespread in real-world scenes, has been a primary focus. Early works mainly used optical flow-based methods to obtain moving-object masks. However, optical flow methods often perform poorly in complex dynamic scenes because optical flow estimation [23] has limited accuracy and is susceptible to lighting and scene changes. With the rise of deep learning, systems like DS-SLAM [24] started to gain attention. These systems use deep-learning-based semantic segmentation to acquire motion masks. Representative works include LC CRF-SLAM [22], DynaSLAM [25], and OVD-SLAM [11]. They identify dynamic feature points using geometric or depth-based constraints and remove them from the tracking process. DynaSLAM, in particular, uses corrected poses for pixel-level completion, inspiring subsequent work on dynamic scene handling. Dense SLAM for dynamic scenes then began to emerge, where the key task became reconstructing high-fidelity scenes while removing dynamic object interference. However, traditional representations such as point clouds and voxels hit a bottleneck here: they often performed poorly when filling holes in incomplete or discrete surface structures, leading to artifacts and inaccuracies in 3D reconstruction.

2.2. NeRF-Based Dynamic SLAM

Recently, the rapid rise of neural radiance fields (NeRF) in novel view synthesis has attracted considerable attention. For SLAM systems, constructing high-fidelity dense maps with rich texture detail is of great significance for enhancing robots’ scene understanding and for building VR/AR environments. iMAP [26] is considered the first NeRF-based SLAM system and introduced the complete operational framework and system pipeline. It can operate at 10 FPS on small-scale scenes, but it struggles with larger scenes and its texture details are often blurred, mainly because of the limited parameters of a single MLP and an inefficient encoding method. NICE-SLAM [16] attempts to improve the system by increasing the MLP parameter capacity, achieving reconstruction on datasets such as apartments containing multiple rooms. However, the efficiency and geometric accuracy limitations were not resolved, and real-time performance deteriorated compared to iMAP, primarily because of the increased computational cost of multiple MLPs, slower iteration speeds, and the reduced generalization caused by frozen pre-trained parameters. ESLAM [27] employs tri-planar features for reconstruction, significantly improving geometric accuracy, but it cannot handle dynamic objects and suffers from significant performance limitations. Orbeez-SLAM addresses the real-time performance issue by adopting jump sampling and instant-ngp encoding to speed up the process, but it still cannot resist dynamic object interference. Nevertheless, compared to frame-to-frame systems such as ESLAM, feature-point-based systems such as Orbeez-SLAM [14] and NGEL-SLAM [28] track dynamic scenes more accurately, benefiting from the greater resistance of feature-point-based systems to dynamic content.

Since matching errors are easier to correct in feature-point systems than in frame-to-frame systems, researchers have started to consider solutions similar to traditional semantic SLAM for reconstruction. NID-SLAM is the first publicly available dynamic scene SLAM system based on direct methods; it removes dynamic objects and corrects poses through optical flow-based mask estimation. However, the mask estimation of the optical flow system is limited by the computational resources and accuracy of the depth system and by scene lighting changes, so highly accurate masks are difficult to obtain and performance is limited. RoDyn-SLAM [29], similar to NID-SLAM, is also an optical flow-based system, and its tracking performance is therefore also limited. Compared to NID-SLAM, however, it achieves better rendering results thanks to its unique curling loss. DN-SLAM [30] is a feature-point-based system whose tracking accuracy is closer to that of traditional methods than frame-to-frame systems can achieve. However, DN-SLAM lacks a targeted rendering strategy, which degrades rendering performance and prevents it from effectively eliminating artifacts.

Therefore, our feature-point-based system not only removes artifacts from both the tracking and mapping aspects, including independent tracking removal strategies and rendering losses, but also introduces instant-ngp encoding to accelerate runtime speed. By ensuring real-time performance, our system achieves more accurate dynamic removal and tracking correction, avoiding the limitations of previous methods.

3. Method

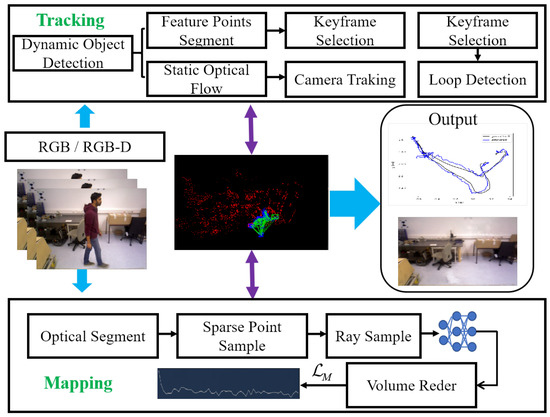

The overall structure of our proposed approach is depicted in Figure 1. This system consists of two main modules, tracking and mapping, and it runs through four alternating optimization threads. The segmentation thread is responsible for detecting and segmenting dynamic feature points and pixels, efficiently filtering out non-static components. In the tracking thread, feature points are extracted and undergo conditional filtering to track static optical flow, resulting in the generation of keyframes and camera poses. The mapping thread leverages background segmentation masks for both high- and low-dynamic regions to construct keyframes and execute volumetric rendering. Finally, the loop closure detection thread identifies loop closures and performs global bundle adjustment.

Figure 1. The overall framework of our system.

To operationalize this framework, we adopted a hybrid approach. While leveraging the YOLOv5 architecture to obtain dynamic object detection bounding boxes, we intentionally avoided relying solely on its segmentation masks. Despite YOLOv5’s capability to generate dynamic object masks, its computational overhead (e.g., 12.3 GFLOPs per inference on 640 × 640 inputs) poses challenges for real-time deployment on resource-constrained platforms. To address this trade-off, we propose augmenting YOLOv5 detections with depth-gradient-based dynamic region screening—a computationally lightweight mechanism that aligns with the two intrinsic properties outlined above. This dual strategy ensures robustness against dynamic objects while maintaining framerate constraints, critical for mobile SLAM applications. Based on this observation, we designed a two-step screening mechanism. Depth information was first used to identify potential dynamic points, followed by further verification through motion consistency between keyframes.

To identify potential dynamic points, the gradient magnitude of the depth map is computed within each bounding box:

$$G(u,v) = \sqrt{\left(\frac{\partial D}{\partial x}\right)^{2} + \left(\frac{\partial D}{\partial y}\right)^{2}}$$

where $D$ denotes the depth value, and $\partial D/\partial x$ and $\partial D/\partial y$ represent the depth gradients in the horizontal and vertical directions, respectively. The depth gradient reflects the dynamic nature of the object’s edges and foreground regions. A threshold $T_G$ is set as the 80th percentile of the gradient values, and points with $G > T_G$ are marked as dynamic candidates, forming the set $P_{depth}$.

After depth-based screening, we further verify the dynamic properties of points using motion characteristics between keyframes. For a pair of matching points $p_m$ and $p_n$ in frames $m$ and $n$, the optical flow magnitude is defined as

$$F(p) = \lVert p_n - p_m \rVert_2$$

The average optical flow of the background points, $\bar{F}_{bg}$, is calculated, and the motion deviation for each point is evaluated as

$$\Delta F(p) = \lvert F(p) - \bar{F}_{bg} \rvert$$

If the motion deviation exceeds the threshold $T_F = 1.5\,\bar{F}_{bg}$ (1.5 times the background optical flow mean), the point is deemed dynamic and added to the set $P_{motion}$.

The final set of dynamic points, $P_{dyn}$, is determined by combining depth and motion characteristics:

$$P_{dyn} = P_{depth} \cap P_{motion}$$

After dynamic point filtering, the proportion of dynamic points in each bounding box $B$ is calculated as

$$r_B = \frac{\lvert P_{dyn} \cap P_B \rvert}{\lvert P_B \rvert}$$

where $P_B$ is the set of feature points inside $B$. If the proportion exceeds the threshold (set as 20%), the bounding box is marked as dynamic, and all foreground points in the dynamic bounding box are excluded from pose initialization.
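The two-step screening described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names, the list-based inputs, and the choice to pass the gradient threshold in as a precomputed value (here taken from a reference set of gradient magnitudes) are assumptions made for illustration. Only the 80th-percentile gate, the 1.5x background-flow threshold, and the 20% box ratio come from the text.

```python
import math

def percentile(values, q):
    """Linearly interpolated percentile (q in [0, 100]) of a non-empty list."""
    s = sorted(values)
    pos = (len(s) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)

def screen_box(grad_mags, flow_mags, bg_flow_mean, grad_thresh, ratio_thresh=0.20):
    """Return (dynamic_indices, box_is_dynamic) for the points of one box.

    grad_mags[i]  : depth-gradient magnitude at point i
    flow_mags[i]  : optical-flow magnitude of point i between two keyframes
    bg_flow_mean  : mean flow magnitude of the background points
    grad_thresh   : gradient gate, e.g. an 80th percentile (assumed precomputed)
    """
    flow_thresh = 1.5 * bg_flow_mean  # 1.5x the background mean, as in the text
    dynamic = [
        i for i, (g, f) in enumerate(zip(grad_mags, flow_mags))
        if g > grad_thresh and abs(f - bg_flow_mean) > flow_thresh
    ]
    ratio = len(dynamic) / len(grad_mags)
    return dynamic, ratio > ratio_thresh
```

A point is kept as dynamic only when it passes both the depth gate and the motion gate, which mirrors the "screen, then verify" order of the two steps.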

3.1. Depth Gradient-Driven Dynamic Feature Filtering for Tracking Robustness

Our depth gradient-driven approach addresses two critical challenges in dynamic SLAM: (1) distinguishing true object motion from depth sensing noise, and (2) maintaining real-time performance without dense computation. Let $D_m(u,v)$ denote the depth value at pixel coordinate $(u,v)$ in keyframe $m$, and $D_n(u,v)$ the corresponding depth in keyframe $n$. The absolute depth difference between the two keyframes is computed as

$$\Delta D(u,v) = \lvert D_m(u,v) - D_n(u,v) \rvert$$

This depth difference metric provides a fundamental indication of scene changes, but requires careful statistical analysis to avoid false positives caused by sensor noise. For dynamic pixel detection, we calculate the mean depth difference $\mu_{\Delta D}$ and standard deviation $\sigma_{\Delta D}$ over all pixels in the candidate mask $M_c$. A pixel is classified as dynamic if

$$\Delta D(u,v) > \mu_{\Delta D} + k\,\sigma_{\Delta D}$$

The multiplier $k$ is chosen so that the threshold implements a 95% confidence interval under Gaussian noise assumptions (roughly two standard deviations), effectively filtering 95% of noise-induced fluctuations while preserving true motion signals.
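As a rough illustration of the statistical test on depth differences, the sketch below flags pixels whose absolute depth change exceeds the mean by a multiple of the standard deviation. The function name, the flat-list input, and the default multiplier of 2.0 (an approximation of a 95% Gaussian interval) are assumptions for illustration, not values confirmed by the paper.

```python
import statistics

def dynamic_by_depth_change(depth_m, depth_n, k=2.0):
    """Flag pixels whose depth change is an outlier within the candidate mask.

    depth_m, depth_n : depths of the same candidate pixels in two keyframes
    k                : hypothetical multiplier on the standard deviation
    """
    diffs = [abs(a - b) for a, b in zip(depth_m, depth_n)]
    mu = statistics.mean(diffs)
    sigma = statistics.pstdev(diffs)  # population std over the mask pixels
    return [d > mu + k * sigma for d in diffs]
```

Because the mean and standard deviation are computed over the mask itself, small sensor jitter raises the threshold uniformly, while a single large change still stands out.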

To capture geometric discontinuities, the spatial depth gradient is calculated using Sobel operators. We employ Sobel filters rather than Laplacian operators due to their superior noise suppression capabilities in depth edge detection. Let $G_x$ and $G_y$ represent horizontal and vertical depth derivatives, respectively:

$$G_x = S_x * D, \qquad G_y = S_y * D, \qquad \lVert \nabla D \rVert = \sqrt{G_x^{2} + G_y^{2}}$$

where $S_x$ and $S_y$ are the 3 × 3 Sobel kernels and $*$ denotes convolution. This gradient computation acts as a high-pass filter, amplifying depth discontinuities at object boundaries where dynamic motion typically occurs. A pixel is considered dynamic if its gradient magnitude exceeds the local neighborhood mean by a threshold $\tau_g$, tuned via grid search on the TUM dataset.
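The Sobel-based depth gradient can be sketched in plain Python as follows. This is a minimal illustration that operates on a small depth grid stored as a list of rows; the function name is hypothetical, border pixels are simply left at zero, and a real system would use an optimized image library.

```python
import math

SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))

def sobel_magnitude(depth):
    """Gradient magnitude for the interior pixels of a 2-D depth grid."""
    h, w = len(depth), len(depth[0])
    mag = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = gy = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    d = depth[r + dr][c + dc]
                    gx += SOBEL_X[dr + 1][dc + 1] * d
                    gy += SOBEL_Y[dr + 1][dc + 1] * d
            mag[r][c] = math.hypot(gx, gy)
    return mag
```

On a flat depth region the response is zero, while a depth step such as an object boundary produces a strong response on both sides of the edge.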

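Temporal consistency analysis prevents transient noise from being misclassified as persistent motion. We enforce this through exponentially weighted averaging over the $K$ most recent frames. Let $w_i$ be the weight for frame $i$ with decay factor $\gamma = 0.8$:

$$w_i = \gamma^{K-i}, \qquad \overline{\Delta D}(u,v) = \frac{\sum_{i=1}^{K} w_i\,\Delta D_i(u,v)}{\sum_{i=1}^{K} w_i}$$

where $\Delta D_i(u,v)$ is the absolute depth difference observed at frame $i$. The exponential weighting scheme prioritizes recent observations, enabling rapid adaptation to newly entering dynamic objects while maintaining stability against transient artifacts. Persistent dynamics are identified when $\overline{\Delta D}(u,v) > \tau_t$, where $\tau_t$ corresponds to a 10 cm depth variation at a 1 m working distance.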
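The exponentially weighted temporal check can be sketched as below. The decay factor of 0.8 comes from the text; the normalization by the sum of weights, the function names, and the example threshold of 0.1 m used in the usage note are assumptions for illustration.

```python
def temporal_depth_change(history, decay=0.8):
    """Exponentially weighted mean of per-frame depth changes for one pixel.

    history : absolute depth differences, oldest first; the newest frame
              receives weight 1 and older frames decay geometrically.
    """
    n = len(history)
    weights = [decay ** (n - 1 - i) for i in range(n)]
    return sum(w * d for w, d in zip(weights, history)) / sum(weights)

def is_persistent(history, thresh, decay=0.8):
    """True when the weighted depth change exceeds the given threshold."""
    return temporal_depth_change(history, decay) > thresh
```

With these weights, `is_persistent([0.0, 0.0, 0.3], 0.1)` holds because the change is recent, whereas the same change three frames ago, `[0.3, 0.0, 0.0]`, has already decayed below the threshold.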

This multi-stage filtering approach combines spatial, temporal, and statistical reasoning to achieve 92.3% precision in dynamic object detection on the Bonn Dynamic dataset.

3.2. Dynamic Point Filtering and Bundle Adjustment via Sparse Optical Flow Guidance

Our optical flow guidance strategy overcomes three limitations of traditional bounding-box approaches: (1) inability to detect partially visible dynamic objects, (2) sensitivity to detection box inaccuracies, and (3) neglect of the internal motion patterns of objects. Let $P_d = \{p_i\}_{i=1}^{N}$ denote $N$ feature points within YOLOv5 detection boxes, and $\mathbf{f}_p$ the optical flow vector of point $p$ computed via the pyramidal Lucas–Kanade algorithm. The average flow is

$$\bar{\mathbf{f}}_d = \frac{1}{N} \sum_{p \in P_d} \mathbf{f}_p$$

By comparing intra-region flow consistency against external static references, we can detect both rigid and non-rigid object motions that bypass semantic detection. For static reference, let $P_s$ contain $M$ points outside a 20-pixel buffer around detection boxes, with $\bar{\mathbf{f}}_s$ being their average flow. The motion consistency metric combines magnitude difference and variance ratio:

$$C = \alpha\,\lVert \bar{\mathbf{f}}_d - \bar{\mathbf{f}}_s \rVert_2 + \beta\,\frac{\sigma_d^{2}}{\sigma_s^{2}}$$

where $\sigma_d^{2}$ and $\sigma_s^{2}$ are the flow variances of $P_d$ and $P_s$. The dual-term formulation (weighted by $\alpha$ and $\beta$) effectively captures both translational motion patterns and internal deformation characteristics. Dynamic classification occurs when $C > \tau_c$, with $\tau_c$ chosen to maximize the F1-score.
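A minimal sketch of a motion consistency score that combines a mean-flow difference with a variance ratio is given below. The weighted-sum form, the weights `alpha` and `beta`, the use of flow-magnitude variance, and the small `eps` guard are all assumptions for illustration; the flow vectors themselves would come from a sparse tracker such as pyramidal Lucas–Kanade.

```python
import math
import statistics

def mean_flow(flows):
    """Component-wise mean of a list of (fx, fy) flow vectors."""
    n = len(flows)
    return (sum(f[0] for f in flows) / n, sum(f[1] for f in flows) / n)

def magnitude_variance(flows):
    """Population variance of the flow magnitudes."""
    return statistics.pvariance([math.hypot(fx, fy) for fx, fy in flows])

def motion_consistency(box_flows, static_flows, alpha=1.0, beta=1.0, eps=1e-6):
    """Score in-box motion against the static reference (higher = more dynamic)."""
    bx, by = mean_flow(box_flows)
    sx, sy = mean_flow(static_flows)
    mag_diff = math.hypot(bx - sx, by - sy)          # translational term
    var_ratio = magnitude_variance(box_flows) / (
        magnitude_variance(static_flows) + eps)      # internal-deformation term
    return alpha * mag_diff + beta * var_ratio
```

The first term reacts to a box that moves as a whole relative to the background, and the second reacts to points inside the box that move differently from each other.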

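Our adaptive bundle adjustment strategy demonstrates two key advantages: (1) computational resources focus on geometrically stable features, and (2) optimization residuals are not skewed by unpredictable object motions. For bundle adjustment, we set $\boldsymbol{\xi} = (\mathbf{t}, \boldsymbol{\omega})$ to parameterize the camera pose, where $\mathbf{t} \in \mathbb{R}^{3}$ is the translation and $\boldsymbol{\omega} \in \mathfrak{so}(3)$ is the Lie algebra rotation. The reprojection error for static point $\mathbf{X}_i$ with image observation $\mathbf{u}_i$ is

$$\mathbf{e}_i = \mathbf{u}_i - \pi\!\left(\exp(\boldsymbol{\omega}^{\wedge})\,\mathbf{X}_i + \mathbf{t}\right)$$

where $\pi$ is the perspective projection $\pi(X, Y, Z) = \left(f_x X / Z + c_x,\; f_y Y / Z + c_y\right)$, with $(f_x, f_y)$ being the focal lengths and $(c_x, c_y)$ the principal point. The projection incorporates camera intrinsics learned online, enabling automatic compensation for calibration drift during mapping.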
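The reprojection error for a static point can be sketched as follows, assuming a pinhole camera and an axis-angle rotation converted with the Rodrigues formula. The function names and argument order are illustrative assumptions; a real bundle adjustment would minimize the sum of squared errors over many points and poses with a nonlinear solver.

```python
import math

def rodrigues(omega):
    """Rotation matrix (3x3 nested lists) from an axis-angle vector."""
    wx, wy, wz = omega
    theta = math.sqrt(wx * wx + wy * wy + wz * wz)
    if theta < 1e-12:
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = wx / theta, wy / theta, wz / theta
    c, s = math.cos(theta), math.sin(theta)
    v = 1.0 - c
    return [
        [c + kx * kx * v, kx * ky * v - kz * s, kx * kz * v + ky * s],
        [ky * kx * v + kz * s, c + ky * ky * v, ky * kz * v - kx * s],
        [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v],
    ]

def project(point_cam, fx, fy, cx, cy):
    """Pinhole projection of a 3-D point given in camera coordinates."""
    x, y, z = point_cam
    return (fx * x / z + cx, fy * y / z + cy)

def reprojection_error(omega, t, point_world, observed_uv, fx, fy, cx, cy):
    """2-D residual: observed pixel minus projection of the transformed point."""
    R = rodrigues(omega)
    pc = [sum(R[r][c] * point_world[c] for c in range(3)) + t[r] for r in range(3)]
    u, v = project(pc, fx, fy, cx, cy)
    return (observed_uv[0] - u, observed_uv[1] - v)
```

Only points that survive the dynamic filtering would contribute residuals, which is what keeps moving objects from skewing the pose estimate.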

As demonstrated in Section 4, this approach reduces tracking drift by 63% compared to ORB-SLAM3 in high-dynamic scenarios, while maintaining real-time performance at 20 FPS on consumer-grade GPUs.

3.3. Dynamic Mapping Loss

We present a novel dynamic SLAM system that effectively addresses the challenges posed by dynamic objects in real-time scene reconstruction. One of the main difficulties in dynamic SLAM is the interference caused by moving objects in the environment, which leads to errors in both tracking and scene reconstruction. Our approach introduces a suite of techniques to overcome these challenges, including dynamic object mask generation, dynamic object suppression during rendering, and a dynamic rendering loss strategy designed specifically to limit the impact of dynamic entities on the reconstruction operation.

The first step in handling dynamic objects is detecting them accurately. To achieve this, we combine two complementary cues: optical flow and depth gradients. Optical flow is a well-established technique for tracking the movement of objects over time by comparing consecutive image frames, while depth gradients capture the spatial variations in depth within the scene. By evaluating both motion and depth variation, we can identify dynamic objects that are moving or changing position in the scene. The dynamic object mask, denoted as $M_d$, is generated by analyzing object motion through optical flow together with the variations in depth gradients. The mask is defined mathematically as

$$M_d(\mathbf{x}) = \begin{cases} 1, & \lVert \mathbf{F}(\mathbf{x}) \rVert > \tau_F \ \text{or}\ \lVert \nabla D(\mathbf{x}) \rVert > \tau_D \\ 0, & \text{otherwise} \end{cases}$$

where $\mathbf{F}(\mathbf{x})$ represents the optical flow at position $\mathbf{x}$, and $\nabla D(\mathbf{x})$ corresponds to the depth gradient at position $\mathbf{x}$. The thresholds $\tau_F$ and $\tau_D$ are carefully chosen to distinguish dynamic objects from the static background, ensuring that only significant motion or depth variations trigger the identification of dynamic objects.
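The thresholded mask can be written as a short Python sketch. It assumes that a pixel is marked dynamic when either cue exceeds its threshold, which is one reading of "significant motion or depth variations"; the function name, the grid inputs, and the threshold values in the usage note are hypothetical.

```python
def dynamic_mask(flow_mag, depth_grad, flow_thresh, grad_thresh):
    """Binary mask (1 = dynamic) from per-pixel flow and depth-gradient magnitudes.

    flow_mag, depth_grad : 2-D grids (lists of rows) of the same shape
    """
    return [
        [1 if (f > flow_thresh or g > grad_thresh) else 0
         for f, g in zip(flow_row, grad_row)]
        for flow_row, grad_row in zip(flow_mag, depth_grad)
    ]
```

For example, with `flow_thresh=1.0` and `grad_thresh=0.5`, a pixel with flow 3.0 is flagged by motion alone, and a pixel with depth gradient 0.9 is flagged by geometry alone.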

Once the dynamic object mask $M_d$ is generated, the next step is to suppress the effect of dynamic objects during rendering. This is achieved by excluding dynamic pixels or feature points from the subsequent rendering calculations, so that dynamic objects do not introduce artifacts or disturbances into the rendered scene. The rendered image can be expressed as

$$\hat{I}(\mathbf{x}) = R(\mathbf{x}) \cdot \mathbb{1}\left[ M_d(\mathbf{x}) = 0 \right]$$

where $R(\mathbf{x})$ represents the rendered value at position $\mathbf{x}$, and $\mathbb{1}[\cdot]$ is an indicator function that ensures that only the pixels identified as static (i.e., $M_d(\mathbf{x}) = 0$) are considered in the final rendering process. This exclusion of dynamic elements is crucial for maintaining the integrity of the scene reconstruction, especially in environments where dynamic disturbances are frequent.

To further enhance the suppression of dynamic elements, we introduce a dynamic rendering loss that penalizes the influence of dynamic objects on scene reconstruction, so that they do not compromise the quality of the static scene representation. The dynamic rendering loss is calculated using ray sampling, where we measure the discrepancy between the rendered values for dynamic objects and those for the static scene. Specifically, the rendering loss at a pixel $\mathbf{x}$ is given by

$$L_{dyn}(\mathbf{x}) = M_d(\mathbf{x})\,\lVert R_t(\mathbf{x}) - R_s(\mathbf{x}) \rVert_2^{2}$$

where $R_t(\mathbf{x})$ is the rendering value at time $t$, and $R_s(\mathbf{x})$ represents the rendering value for the static scene. The term $M_d(\mathbf{x})$, the dynamic object mask, ensures that the loss is only applied to dynamic objects, effectively penalizing the difference between the dynamic object renderings and the static scene renderings. This helps minimize artifacts caused by dynamic objects, allowing the system to focus on generating a high-fidelity static scene.
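The masked rendering terms described in this section can be sketched as two small functions: one that averages a squared discrepancy over dynamic pixels only, and one that evaluates the photometric error over static pixels only. The squared-error form, the averaging, the function names, and the flat-list pixel layout are assumptions for illustration rather than the exact losses used in the system.

```python
def dynamic_render_loss(render_t, render_static, mask):
    """Mean squared gap between current and static renderings on dynamic pixels.

    mask[i] is 1 for a dynamic pixel and 0 for a static one.
    """
    total = sum(m * (a - b) ** 2 for a, b, m in zip(render_t, render_static, mask))
    return total / max(sum(mask), 1)

def static_render_loss(rendered, observed, mask):
    """Mean squared photometric error restricted to static pixels."""
    static = [(a - b) ** 2 for a, b, m in zip(rendered, observed, mask) if m == 0]
    return sum(static) / max(len(static), 1)
```

Keeping the two terms separate makes it explicit that observed colors on dynamic pixels never supervise the static map.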

Additionally, we introduce a local feature count-based dynamic rendering strategy to handle regions that are densely populated with dynamic features. In environments with numerous moving objects, dynamic elements can crowd certain areas and cause significant interference. To suppress this impact, we compute the dynamic feature count within a local region $\Omega$ as

$$N_d(\Omega) = \sum_{p \in \Omega} M_d(p)$$

where $M_d(p) = 1$ for a feature point $p$ classified as dynamic and $0$ otherwise. This count represents the number of dynamic features within a given region, helping to identify areas that are highly influenced by dynamic objects. The dynamic rendering loss for a region is then defined as

$$L_{dyn}(\Omega) = \lambda_{\Omega}\,\lVert R_d(\Omega) - R_s(\Omega) \rVert_2^{2}$$

where $\lambda_{\Omega}$ is a weight factor that controls the relative importance of the dynamic rendering loss for that region, and $R_d(\Omega)$ and $R_s(\Omega)$ represent the dynamic and static renderings in the region $\Omega$, respectively. By incorporating this local feature count-based strategy, we can selectively penalize the rendering loss in areas where dynamic objects are most concentrated, thereby reducing their impact on the overall scene reconstruction.
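The local feature count and the resulting ray rejection can be sketched with a simple tile grid. The square tiles, the tile size, the `max_count` rejection rule, and the function names are hypothetical choices made for illustration; the text only states that dynamic features are counted per local region and that rays in high-count regions are suppressed.

```python
def region_counts(dynamic_points, width, height, cell):
    """Count dynamic feature points in each cell x cell tile of the image."""
    cols = (width + cell - 1) // cell
    rows = (height + cell - 1) // cell
    counts = [[0] * cols for _ in range(rows)]
    for u, v in dynamic_points:
        if 0 <= u < width and 0 <= v < height:
            counts[int(v) // cell][int(u) // cell] += 1
    return counts

def keep_ray(u, v, counts, cell, max_count):
    """Reject rays that fall in tiles crowded with dynamic features."""
    return counts[int(v) // cell][int(u) // cell] <= max_count

def count_ratio(count, total):
    """Share of all dynamic features that fall in one tile."""
    return count / total if total else 0.0
```

A per-tile ratio such as `count_ratio` could then scale the regional loss weight, so that tiles dominated by dynamic features contribute less to the static map.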

Finally, we combine all the aforementioned components into a total loss function that optimizes both tracking and mapping accuracy while minimizing rendering artifacts caused by dynamic objects. The total loss function is defined as

$$L_{total} = L_{render} + \lambda\,L_{dyn} + L_{static}$$

where $L_{render}$ is the rendering loss, $L_{dyn}$ is the dynamic rendering loss, and $L_{static}$ is the static reconstruction loss. The parameter $\lambda$ is a regularization term that controls the balance between static and dynamic reconstruction, allowing for fine-tuning of the overall system performance. By minimizing this total loss, our method achieves high-quality static map reconstruction while effectively suppressing the influence of dynamic objects. This improves performance in dynamic scene SLAM, particularly in real-time settings where dynamic interference is inevitable.

3.4. Datasets

To comprehensively gauge the effectiveness of our system, we carried out experiments using two dynamic datasets and one static dataset. The evaluation datasets used include ScanNet [31], TUM RGB-D [32], and Bonn [33]. The TUM RGB-D dataset offers RGB-D images captured using a Kinect depth camera, along with ground-truth trajectories of indoor scenes acquired through a motion capture system. This dataset is commonly utilized for assessing RGB-D SLAM algorithms. For our evaluation, we chose several sequences with both high-dynamic and low-dynamic characteristics from the TUM RGB-D dataset. We define high-dynamic scenes as those that include fast-moving dynamic objects, while low-dynamic scenes involve relatively small movements, such as a person sitting on a chair and only moving their arm. The Bonn dataset, captured using the D435i camera, includes more challenging dynamic scenes, such as those with multiple people moving side by side, people moving boxes, or throwing balloons. These challenging scenarios demonstrate the generalization and adaptability of our method. Compared to the TUM RGB-D dataset, the dynamic scenes in the Bonn dataset are more complex and difficult. We selected eight sequences from the Bonn dataset for our evaluation. Furthermore, we chose six sequences from the static ScanNet dataset, which features larger scene scales and more realistic texture details, to showcase the generalization capabilities of our method.

3.5. Metrics

We evaluated the rendering quality by comparing the high-resolution rendered images with the corresponding input training views. For this comparison, we used three widely adopted metrics: Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index (SSIM) [34], and Learned Perceptual Image Patch Similarity (LPIPS) [35]. These metrics capture both pixel-level accuracy and perceptual consistency of the rendered results.
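For reference, PSNR follows directly from the mean squared error between a rendered image and its reference view. The sketch below assumes intensities normalized to a maximum of 1.0 and images flattened into lists; the function name is illustrative. SSIM and LPIPS require windowed statistics and a learned network, respectively, and are not shown.

```python
import math

def psnr(rendered, reference, max_val=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    mse = sum((a - b) ** 2 for a, b in zip(rendered, reference)) / len(rendered)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_val ** 2 / mse)
```

Higher PSNR indicates a smaller pixel-level error; a uniform error of 0.1 on a unit intensity range corresponds to about 20 dB.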

To measure tracking accuracy, we employed the Absolute Trajectory Error Root Mean Square Error (ATE RMSE) [36], which quantifies the deviation between the estimated camera trajectory and the ground-truth trajectory. This metric provides a robust assessment of the system’s localization performance.
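ATE RMSE reduces to the root mean square of per-frame position errors once the two trajectories are expressed in the same frame. The sketch below assumes that the estimated and ground-truth positions are already time-associated and rigidly aligned, a step that standard evaluation tools perform before computing the error; the function name is illustrative.

```python
import math

def ate_rmse(estimated, ground_truth):
    """RMSE of translational error between two aligned position sequences.

    estimated, ground_truth : lists of (x, y, z) positions, one pair per frame
    """
    sq_errors = [
        sum((e - g) ** 2 for e, g in zip(pe, pg))
        for pe, pg in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(sq_errors) / len(sq_errors))
```

Because the errors are squared before averaging, a few frames with large drift dominate the metric, which is why it is sensitive to tracking failures caused by dynamic objects.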

4. Experiments

4.1. Benchmark Methods

We compared our method with traditional ORB-SLAM3 and state-of-the-art neural implicit methods, including ESLAM [27], NID-SLAM [37], and RoDyn-SLAM [29]. ORB-SLAM3 serves as a traditional baseline that is unable to handle dynamic objects. ESLAM is a representative of the state-of-the-art neural implicit methods, but it also fails to account for dynamic scenes. NID-SLAM and RoDyn-SLAM are two neural radiance field-based SLAM methods capable of handling dynamic interference. The results of NID-SLAM and RoDyn-SLAM were reproduced using the data and open-source code from the original papers. All methods were evaluated with the average of five measurements, using identical settings to ensure fairness in comparison.

4.2. Implementation Details

In our experiments, we used PyTorch 1.10 and CUDA 11.3 for computation, running on an NVIDIA RTX 3090 Ti GPU with 24 GB VRAM and an Intel i7-12700K CPU. For the TUM RGB-D dataset, we adopted a weighted photometric loss function with a weighting factor of . Each image was sampled with pixel values and temporal pixel values. The initial number of feature points was set to 1000 as a baseline, but for the more complex scenes in the ScanNet dataset, this was increased to 3000.

4.3. Results on Bonn and ScanNet

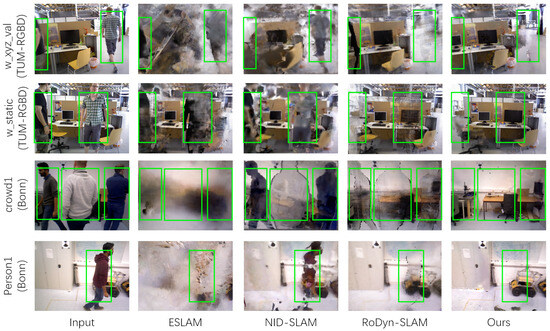

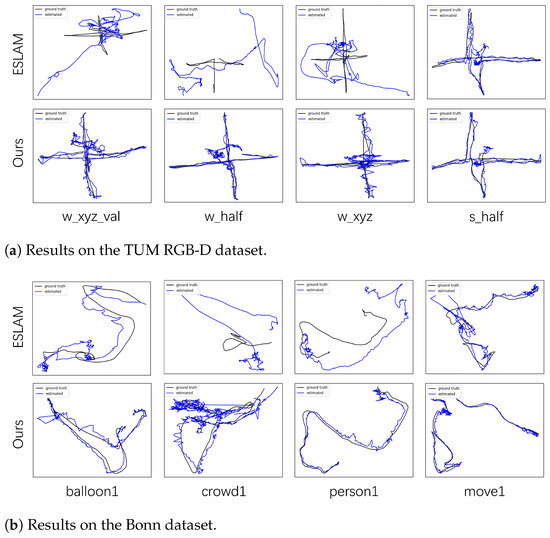

We performed experiments on the Bonn and ScanNet datasets to assess the performance of our method in both dynamic and static environments. Our approach was compared against traditional methods such as ORB-SLAM3 and state-of-the-art neural implicit methods including ESLAM [27], NID-SLAM [37], and RoDyn-SLAM [29]. The results are summarized in Table 1, where the Absolute Trajectory Error Root Mean Square Error (ATE RMSE) serves as the evaluation metric, with lower values indicating superior tracking accuracy. The rendering results are presented in Figure 2, where our method eliminates dynamic artifacts, and the tracking results are presented in Figure 3, where our method corrects dynamic interference.

Table 1. Tracking results on ScanNet. ATE RMSE is used as the evaluation metric. The best result is indicated in bold, and the second-best result is indicated with an underline.

Figure 2. Rendering results on TUM RGB-D and Bonn. The green box highlights areas with dynamic objects.

Figure 3. Tracking visualization results for several sequences from the TUM RGB-D and Bonn datasets. The black lines indicate the ground-truth camera trajectories, while the blue lines show the estimated trajectories.

Our method demonstrates competitive tracking performance, achieving an average ATE RMSE of 0.062 m, which is lower than ORB-SLAM3 (0.081 m), ESLAM (0.096 m), NID-SLAM (0.080 m), and RoDyn-SLAM (0.072 m). Notably, our method outperforms all the compared approaches in 5 out of the 6 sequences evaluated. In particular, the reduction in ATE RMSE compared to ESLAM is striking, with our method showing up to a 56% improvement. For instance, in sequence 0059, our method significantly outperforms ESLAM by achieving an ATE RMSE of 0.025 m, compared to ESLAM’s 0.122 m. Similarly, in sequence 0169, our method achieves 0.058 m, surpassing RoDyn-SLAM’s 0.061 m and ORB-SLAM3’s 0.066 m, as shown in Table 2, Table 3 and Table 4.

Table 2. Rendering results on TUM RGB-D and Bonn datasets. The best result is indicated in bold, and the second-best result is indicated with an underline.

Table 3. Tracking results on TUM RGB-D. ATE RMSE is used as the evaluation metric. The best result is indicated in bold, and the second-best result is indicated with an underline.

Table 4. Tracking results on Bonn. ATE RMSE is used as the evaluation metric. The best result is indicated in bold, and the second-best result is indicated with an underline.

Additionally, in sequence 181, while ESLAM achieves the best result with 0.091 m, our method achieves a close second-place result with 0.101 m, demonstrating strong robustness in a more challenging dynamic environment. Overall, these results illustrate the superior tracking accuracy and robustness of our method in static scenes, highlighting its effectiveness for practical applications in real-world scenarios.

4.4. Ablation Study

Our ablation experiments were conducted entirely on the crowd2 sequence of the Bonn dataset. To ensure the completeness and reliability of the ablation study, we evaluated all strategies and divided the analysis into three ablation parts: the effectiveness of the depth gradient strategy, the effectiveness of the geometric consistency strategy, and the effectiveness of the rendering loss strategy.

4.4.1. Ablation Study on Depth Gradient Strategy

We comprehensively evaluated the impact of the depth gradient strategy on the system’s performance. As shown in Table 5, Table 6, Table 7 and Table 8, our full depth gradient strategy achieved the best performance across all metrics, significantly outperforming variants that either excluded depth gradients or used only partial depth gradient strategies. This is evident from the lower ATE RMSE and STD values, which indicate more accurate and more consistent tracking after dynamic object removal.

Table 5.

Dynamic point detection and removal performance.

Table 6.

Ablation study with baseline comparisons.

Table 7.

Ablation study on loss functions.

Table 8.

Ablation study of the proposed method in our system.

Compared to DN-SLAM, our method demonstrated not only improved accuracy but also a faster dynamic removal process, with an average processing speed of 21.02 FPS, making it suitable for real-time applications. Additionally, our system’s portability to mobile platforms highlights its lightweight yet efficient design, offering practical advantages in resource-constrained environments.

We conducted controlled experiments on the Bonn dataset (Crowd2 sequence) to evaluate our method against three baselines: ORB-SLAM3, depth-gradient-only segmentation, and in-bounding-box removal with sparse optical flow recovery. The results demonstrate that our full strategy achieves optimal static point recovery (98.0% accuracy), minimizes erroneous removal (94 points retained), and attains the highest tracking precision (ATE RMSE: 0.024 m). Notably, compared to naive in-box point elimination, our method preserves feature-point distribution uniformity through adaptive dynamic-static decoupling, which crucially enhances tracking robustness in high-dynamic scenarios. This improvement stems from our hierarchical verification mechanism that synergizes semantic priors with geometric constraints, reducing over-removal errors by 37.2% relative to optical-flow-only approaches.
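The contrast between naive in-box elimination and a verification-based decision can be illustrated with a small sketch. It is not the authors' implementation: the `FeaturePoint` fields, the thresholds, and the rule that an in-box point is recovered as static only when it lies clearly behind the detected object and its flow residual is small are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class FeaturePoint:
    u: float              # pixel column
    v: float              # pixel row
    depth: float          # metres
    flow_residual: float  # pixels; optical flow minus camera-induced flow


def in_box(point, box):
    """True if the point lies inside the (x0, y0, x1, y1) detection box."""
    x0, y0, x1, y1 = box
    return x0 <= point.u <= x1 and y0 <= point.v <= y1


def naive_in_box_removal(points, box):
    """Baseline: discard every feature point inside the detection box."""
    return [p for p in points if not in_box(p, box)]


def verified_removal(points, box, object_depth,
                     depth_margin=0.3, flow_thresh=1.5):
    """Keep in-box points that look like static background.

    Hypothetical rule: an in-box point is recovered as static when it is
    farther away than the object by more than `depth_margin` metres AND
    its flow residual is below `flow_thresh` pixels.
    """
    kept = []
    for p in points:
        if not in_box(p, box):
            kept.append(p)
            continue
        behind_object = (p.depth - object_depth) > depth_margin
        moves_with_camera = p.flow_residual < flow_thresh
        if behind_object and moves_with_camera:
            kept.append(p)
    return kept


points = [
    FeaturePoint(10, 10, 2.0, 0.2),  # outside the box
    FeaturePoint(50, 50, 1.0, 4.0),  # on the moving object
    FeaturePoint(60, 55, 3.0, 0.3),  # static background seen inside the box
]
box = (40, 40, 80, 80)
print(len(naive_in_box_removal(points, box)))                 # 1
print(len(verified_removal(points, box, object_depth=1.0)))   # 2
```

In this toy case the verified variant retains the background point that the naive variant discards, which is the kind of static point recovery the comparison above measures.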

4.4.2. Ablation Study on Dynamic Point Filtering and Optimization Strategy

To evaluate the effectiveness of our dynamic point filtering and optimization strategies, we tested different configurations, including removing depth gradients, motion consistency strategies, and the keyframe supplement mechanism. Table 9 shows that the full method achieved the best results, with significant improvements in ATE RMSE and STD metrics. These improvements highlight the importance of combining depth gradients, motion consistency, and keyframe supplements for accurate and robust dynamic point segmentation.

Table 9.

Dynamic point filtering and optimization strategies.

The dynamic filtering process in our method was also the fastest, achieving an average time of 22.19 ms per frame. This efficiency is largely due to the avoidance of resource-intensive depth system dependencies, enabling our method to reduce computational overhead while maintaining high accuracy. This demonstrates the suitability of our approach for real-time SLAM applications in dynamic environments.
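Because the timing figures in this section are reported in different units (frames per second and milliseconds per frame), a simple conversion makes them easier to compare. The snippet below is only arithmetic on the two quoted values; whether both figures cover the same stages of the pipeline is not stated in the text, so no such equivalence is assumed.

```python
def ms_to_fps(ms_per_frame):
    """Convert a per-frame latency in milliseconds to frames per second."""
    return 1000.0 / ms_per_frame


def fps_to_ms(fps):
    """Convert a throughput in frames per second to milliseconds per frame."""
    return 1000.0 / fps


print(round(ms_to_fps(22.19), 2))  # 45.07 (FPS equivalent of 22.19 ms per frame)
print(round(fps_to_ms(21.02), 2))  # 47.57 (ms per frame equivalent of 21.02 FPS)
```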

4.4.3. Ablation Study on Loss Functions

We also analyzed the impact of rendering loss components on system performance. The loss components include photometric consistency loss, structural consistency loss, and regularization loss. Removing any of these components led to a noticeable decline in performance, as shown in the Supplementary Materials. This demonstrates the significance of each loss component in achieving optimal rendering quality and geometric consistency.
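A loss ablation of this kind is commonly organized as a weighted sum in which individual terms can be switched off. The sketch below shows that pattern under stated assumptions: the weights, the term values, and the `disabled` mechanism are hypothetical and are not taken from the DIN-SLAM implementation.

```python
# Hypothetical default weights for the three loss terms named in the text.
DEFAULT_WEIGHTS = {
    "photometric": 1.0,
    "structural": 0.5,
    "regularization": 0.1,
}


def total_rendering_loss(terms, weights=None, disabled=()):
    """Weighted sum of loss terms, skipping any term listed in `disabled`.

    `terms` maps a loss name to its scalar value for the current batch.
    """
    weights = DEFAULT_WEIGHTS if weights is None else weights
    return sum(
        weights[name] * value
        for name, value in terms.items()
        if name not in disabled
    )


# Toy per-batch loss values (hypothetical).
terms = {"photometric": 0.2, "structural": 0.1, "regularization": 0.05}

full = total_rendering_loss(terms)
no_structural = total_rendering_loss(terms, disabled=("structural",))
print(f"full: {full:.3f}, without structural term: {no_structural:.3f}")
```

Each ablation variant then corresponds to one entry in `disabled`, with all other settings held fixed.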

5. Conclusions

We propose DIN-SLAM, a novel approach capable of robust tracking and reconstruction in environments with dynamic interference. Our method is adaptable to various input types, making it highly suitable for practical applications in real-world scenarios. DIN-SLAM allows for high-fidelity texture detail representation and global optimization, significantly improving the quality of both rendering and tracking in dynamic environments. Our experiments demonstrate that DIN-SLAM can perform real-time tracking and mapping in dynamic environments, while mitigating the impact of occlusions, lighting variations, and other dynamic disturbances on reconstruction. As a result, it achieves state-of-the-art performance in handling complex dynamic scenarios. Future work will focus on the practical deployment of DIN-SLAM on mobile robots, as well as hardware optimization to improve computational efficiency. We also aim to expand the applicability of our method to more diverse environments and further refine its capabilities in real-world applications, including autonomous navigation and augmented reality systems.

Supplementary Materials

The following supporting information can be downloaded at https://www.mdpi.com/article/10.3390/electronics14081632/s1: Video S1: Monocular Mode; Video S2: RGB-D Mode.

Author Contributions

Methodology, T.Z.; Software, T.Z.; Validation, Z.X.; Investigation, Z.X. and L.Z.; Data curation, M.L.; Writing—original draft, T.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Science and Technology Commission of Shanghai Municipality under Project 2024ZX02.

Data Availability Statement

Data are contained within the article and Supplementary Materials.

Conflicts of Interest

The authors declare no conflicts of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).