5.1. Experimental Settings

5.1.1. Dataset Setup

To rigorously evaluate the model’s capabilities under realistic conditions, we constructed two datasets with explicit validation of data heterogeneity and real-world relevance.

General Technical Q&A Dataset (General-QA): The dataset comprises high-quality technical Q&A entries from Stack Exchange platforms (Stack Overflow, Server Fault), filtered to posts from 2020 to 2023 with at least 10 votes to ensure content quality. The domain distribution spans programming (40%), system operations (30%), network engineering (20%), and security (10%), with contributions from over 15,000 unique users across more than 150 countries. During preprocessing, HTML tags and personally identifiable information (PII) were removed using regular expressions and spaCy’s Named Entity Recognition (NER). The Q&A pairs were converted into 800 instruction–output pairs and split into training (640 pairs, 80%), validation (80 pairs, 10%), and test (80 pairs, 10%) sets. Manual review of 20% of the labeled data achieved an inter-annotator agreement of Cohen’s κ = .
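The 80/10/10 partition can be reproduced with a seeded shuffle. The sketch below assumes a plain random split with a fixed seed; the helper name `split_80_10_10` and the seed value are hypothetical, and the text does not say whether the split was stratified by domain. The same helper also yields the IoT-Native sizes reported next.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_80_10_10(items: Sequence[T], seed: int = 42) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle a copy of `items` with a fixed seed and cut it 80/10/10.

    Hypothetical helper: only the resulting split sizes come from the text.
    Integer arithmetic avoids floating-point rounding in the cut points.
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n = len(shuffled)
    n_train = n * 8 // 10
    n_val = n // 10
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # remainder, so no item is dropped
    return train, val, test


for total in (800, 1200):  # General-QA and IoT-Native sizes
    train, val, test = split_80_10_10(range(total))
    print(total, len(train), len(val), len(test))
```

Giving the remainder to the test set guarantees that every pair lands in exactly one split even when the total is not divisible by 10.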

IoT-Native Dataset: This dataset integrates multimodal IoT device logs from three industrial partners and public MQTT/CoAP protocol traces, with 60% of the data from smart factory sensors and 40% from public repositories (e.g., UCI). The task distribution covers fault diagnosis (40%), protocol configuration (25%), data analysis (20%), and security tasks (15%). Preprocessing involved anonymizing device IDs and geolocation data, followed by structuring the data into 1200 instruction pairs through rule-based parsing and GPT-4-assisted summarization. The dataset was partitioned into training (960 pairs, 80%), validation (120 pairs, 10%), and test (120 pairs, 10%) sets. Both datasets were validated through domain distribution statistics and industrial partner verification to ensure real-world representativeness.
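Device-ID and geolocation anonymization can be sketched as keyed pseudonymization plus coordinate coarsening. The device-ID regex, the secret key, and the two-decimal rounding below are illustrative assumptions, not the rules used by the authors or their industrial partners.

```python
import hashlib
import hmac
import re

# Hypothetical device-ID format, e.g. "DEV-00A1F3"; real logs will differ.
DEVICE_ID = re.compile(r"\bDEV-[0-9A-F]{6}\b")
# Decimal latitude/longitude pairs such as "48.137154,11.576124".
LAT_LON = re.compile(r"(-?\d{1,2}\.\d+),\s*(-?\d{1,3}\.\d+)")

SECRET_KEY = b"replace-with-a-managed-secret"  # assumption: key stored outside the dataset


def pseudonymize_id(match: re.Match) -> str:
    """Map a device ID to a stable keyed hash so one device keeps one pseudonym."""
    digest = hmac.new(SECRET_KEY, match.group(0).encode(), hashlib.sha256).hexdigest()
    return f"DEV-{digest[:10]}"


def coarsen_location(match: re.Match) -> str:
    """Round coordinates to two decimals (roughly 1 km) to remove precise positions."""
    lat, lon = float(match.group(1)), float(match.group(2))
    return f"{lat:.2f},{lon:.2f}"


def anonymize(line: str) -> str:
    line = DEVICE_ID.sub(pseudonymize_id, line)
    return LAT_LON.sub(coarsen_location, line)


print(anonymize("DEV-00A1F3 at 48.137154,11.576124 reported temp=71.2"))
```

A keyed HMAC rather than a bare hash keeps pseudonyms consistent across logs (so per-device fault histories survive) while preventing anyone without the key from re-deriving them by hashing candidate IDs.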

5.1.2. Baseline Models and Configurations

To ensure fair comparisons, we evaluated FHCR against the following:

LLaMA2-7B (Vanilla): Default hyperparameters (batch size = 32, learning rate = , AdamW optimizer).

LLaMA2-7B (General): Fine-tuned with LoRA (rank = 64, dropout = 0.1).

Efficient Compression [38]: Pruning (sparsity = 50%), 8-bit quantization, distillation (temperature = 2.0).

Collaborative Edge Training [39]: Federated averaging with DP (, , ).

Intelligence-based RL Offloading [39]: Proximal Policy Optimization (PPO) with reward shaping for latency–accuracy trade-offs.

Edge-Optimized Models:

- TinyLLaMA-1.1B: LoRA rank = 32, gradient checkpointing.
- Mistral-7B: sliding window attention (4k-token window), grouped-query attention.
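For reference, one round of federated averaging with differential privacy, as used by the Collaborative Edge Training baseline, can be sketched as per-client L2 clipping, averaging, and Gaussian noise on the aggregate. This follows the common DP-FedAvg pattern rather than the exact configuration of [39]; the clipping bound, noise multiplier, and function names are hypothetical placeholders.

```python
import math
import random
from typing import List

Vector = List[float]


def clip_l2(update: Vector, clip_norm: float) -> Vector:
    """Scale an update so that its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(x * x for x in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in update]


def dp_fedavg_round(updates: List[Vector], clip_norm: float,
                    noise_multiplier: float, rng: random.Random) -> Vector:
    """Average clipped client updates and add Gaussian noise to the mean.

    Each clipped client changes the sum by at most clip_norm, so the noise
    std on the mean is noise_multiplier * clip_norm / n_clients.
    """
    n = len(updates)
    clipped = [clip_l2(u, clip_norm) for u in updates]
    mean = [sum(col) / n for col in zip(*clipped)]
    sigma = noise_multiplier * clip_norm / n
    return [m + rng.gauss(0.0, sigma) for m in mean]


rng = random.Random(0)
client_updates = [[3.0, 4.0], [0.3, 0.4], [-1.0, 0.0]]
print(dp_fedavg_round(client_updates, clip_norm=1.0, noise_multiplier=0.5, rng=rng))
```

Clipping before averaging is what bounds each client's influence; without it, the added noise would carry no formal privacy guarantee.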

5.1.3. Privacy Metrics

The privacy evaluation framework quantifies two dimensions of security risk, the data leakage rate and the Membership Inference Risk, by combining adversarial simulation tools with systematic parameter optimization. To assess the data leakage rate, the framework uses Microsoft Presidio, an open-source toolkit for detecting and anonymizing sensitive data, augmented with detection rules tailored to IoT environments. Custom regular expressions and a spaCy-based Named Entity Recognition (NER) model fine-tuned on industrial sensor logs identify sensitive patterns such as device identifiers, geolocation coordinates, and proprietary sensor signatures. Adversarial scenarios were simulated to mimic real-world eavesdropping attacks during federated aggregation, in which attackers intercept gradient updates or inference outputs and attempt to reconstruct raw data using inversion techniques, including variants of the Deep Leakage from Gradients (DLG) attack. The leakage rate is the proportion of requests that expose sensitive information over the total number of interactions:

$$\mathrm{LeakageRate} = \frac{1}{N}\sum_{i=1}^{N}\mathbb{I}(\mathrm{leak}_i),$$

where N is the total number of requests and the indicator function $\mathbb{I}(\mathrm{leak}_i)$ returns 1 if the i-th interaction exposes sensitive attributes and 0 otherwise.
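A minimal sketch of the leakage-rate computation: each logged response is scanned by a detector, an indicator records whether at least one sensitive match occurs, and the rate is the mean of these indicators over all N interactions. The two regular expressions are hypothetical stand-ins for the Presidio recognizers and fine-tuned spaCy NER model described above; the sketch does not call either library.

```python
import re
from typing import Iterable

# Hypothetical detectors standing in for Presidio + custom IoT recognizers.
SENSITIVE_PATTERNS = [
    re.compile(r"\bDEV-[0-9A-F]{6}\b"),                    # raw device identifier
    re.compile(r"-?\d{1,2}\.\d{4,},\s*-?\d{1,3}\.\d{4,}"),  # precise lat/lon pair
]


def leaks(response: str) -> int:
    """Indicator: 1 if any sensitive pattern appears in the response, else 0."""
    return int(any(p.search(response) for p in SENSITIVE_PATTERNS))


def leakage_rate(responses: Iterable[str]) -> float:
    """Fraction of logged interactions whose indicator is 1."""
    flags = [leaks(r) for r in responses]
    return sum(flags) / len(flags) if flags else 0.0


logged = [
    "Restart the MQTT broker and check QoS settings.",
    "Sensor DEV-00A1F3 reported overheating.",
    "Node located at 48.1371,11.5761 is offline.",
    "Increase the CoAP retransmission timeout.",
]
print(leakage_rate(logged))  # 0.5
```

Multiplying the rate by 1000 gives the "incidents per 1000 requests" unit used in the calibration discussion of this subsection.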

For Membership Inference Risk (MIR), the evaluation employs a shadow-model-based attack. A five-layer Multi-Layer Perceptron (MLP), trained on auxiliary IoT datasets, acts as the adversarial model and distinguishes member from non-member samples by analyzing confidence scores derived from the shared retrieval layer (SRL) gradients. The attack assumes a black-box setting in which adversaries exploit subtle overfitting artifacts in the model’s outputs to infer training-data membership. The risk is quantified as the success rate of such inferences across a validation set:

$$\mathrm{MIR} = \frac{1}{M}\sum_{j=1}^{M}\mathbb{I}(\mathrm{member}_j),$$

where M is the total number of samples tested and $\mathbb{I}(\mathrm{member}_j)$ indicates whether the j-th sample is correctly identified as part of the training dataset.
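The membership-inference success rate can be illustrated with a simplified attack. To keep the example short, the sketch replaces the five-layer shadow MLP with a single confidence threshold (an assumption), and it counts a sample as a success when the inferred membership matches the ground truth. The threshold and the toy confidence scores are hypothetical.

```python
from typing import List


def threshold_attack(confidences: List[float], threshold: float) -> List[bool]:
    """Predict 'member' when the model is unusually confident (an overfitting signal)."""
    return [c >= threshold for c in confidences]


def membership_inference_risk(predicted: List[bool], is_member: List[bool]) -> float:
    """Mean over M samples of an indicator that is 1 when membership is inferred correctly."""
    if len(predicted) != len(is_member):
        raise ValueError("prediction and label lists must have equal length")
    correct = sum(p == m for p, m in zip(predicted, is_member))
    return correct / len(predicted)


# Toy validation set: the first three samples are training members, the last three are not.
conf = [0.99, 0.97, 0.80, 0.95, 0.60, 0.55]
truth = [True, True, True, False, False, False]
preds = threshold_attack(conf, threshold=0.9)
print(membership_inference_risk(preds, truth))
```

On a balanced validation set, a value near 0.5 means the attack does no better than random guessing, so lower is better for the defender.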

To balance privacy and utility, the differential privacy parameters, namely the privacy budget ε and the noise scale σ, were calibrated through a systematic grid search. For varying privacy budgets ε, noise levels σ were adjusted within the range 0.1 to 1.0 to minimize an empirical risk function . The weighting factor was set dynamically to prioritize privacy () under strict budgets () or utility () when . For instance, at , a noise scale of achieves a leakage rate of 2.3 incidents per 1000 requests, well below the 8.1 incidents per 1000 requests observed with , while incurring only a 1.8% accuracy degradation. To stabilize convergence under noise injection, gradient norms were clipped to a bound , keeping each update within a controlled magnitude. This adaptive calibration provides strong privacy protection at a small cost in model performance, in line with the requirements of AIoT deployments in adversarial environments.
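The calibration loop can be sketched as a grid search over the noise scale σ ∈ {0.1, …, 1.0} that minimizes a weighted risk. The linear form R(σ) = λ·leak(σ) + (1 − λ)·acc_drop(σ), the λ values, the budget cutoff, and the two toy measurement curves are all assumptions for illustration; the paper’s actual risk function and weights are not recoverable from this text.

```python
from typing import Callable, Sequence, Tuple


def calibrate_noise(
    sigmas: Sequence[float],
    leak_rate: Callable[[float], float],  # measured leakage rate at noise sigma
    acc_drop: Callable[[float], float],   # measured accuracy degradation at sigma
    lam: float,                           # weight on privacy, 0 <= lam <= 1
) -> Tuple[float, float]:
    """Return (best_sigma, best_risk) minimizing lam*leak + (1 - lam)*acc_drop."""
    def risk(s: float) -> float:
        return lam * leak_rate(s) + (1 - lam) * acc_drop(s)
    best = min(sigmas, key=risk)
    return best, risk(best)


def weight_for_budget(epsilon: float, strict_threshold: float = 1.0) -> float:
    """Hypothetical rule: favor privacy under strict budgets, utility otherwise."""
    return 0.8 if epsilon < strict_threshold else 0.3


# Toy monotone curves: more noise means less leakage but more accuracy loss.
def leak(s: float) -> float:
    return 0.01 / s


def drop(s: float) -> float:
    return 0.02 * s


grid = [round(0.1 * k, 1) for k in range(1, 11)]  # 0.1, 0.2, ..., 1.0
for eps in (0.5, 2.0):
    sigma, r = calibrate_noise(grid, leak, drop, weight_for_budget(eps))
    print(eps, sigma, round(r, 4))
```

With these toy curves, the strict budget selects the largest noise on the grid while the relaxed budget settles on an intermediate value, mirroring the qualitative behavior described above.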

5.1.4. Experimental Environment

A hybrid computing architecture was adopted to replicate real-world IoT conditions. Training used LoRA-based fine-tuning (rank = 64, batch size = 32) on NVIDIA A100 GPUs (Nvidia Corporation, Santa Clara, CA, USA), with dynamic learning rate scheduling (initial rate = ) and weight decay (0.01) to reduce overfitting. Edge deployment used NVIDIA Jetson Xavier NX hardware, with FP16 reduced-precision inference via TensorRT and gRPC compression for bandwidth-constrained (10 Mbps) communication.
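As a back-of-the-envelope check on the 10 Mbps link, the ideal transfer time of a payload is its size in bits divided by the link rate. The sketch assumes 1 KB = 1000 bytes and ignores protocol headers, gRPC compression, retransmissions, and propagation delay; the payload sizes are the communication overheads reported later in this section.

```python
def transfer_time_ms(payload_kb: float, link_mbps: float = 10.0) -> float:
    """Ideal transfer time in milliseconds: size in bits / link rate.

    Assumes 1 KB = 1000 bytes and 1 Mbps = 10**6 bit/s.
    """
    bits = payload_kb * 1000 * 8
    return bits / (link_mbps * 1e6) * 1000


for kb in (50, 84, 1200):
    print(f"{kb} KB -> {transfer_time_ms(kb):.1f} ms")
```

At this rate a 1200 KB exchange alone takes close to a second, whereas 50 to 84 KB payloads stay in the tens of milliseconds, which is why payload size matters on constrained links.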

5.2. Experimental Results and Analysis

To validate the FHCR framework’s capability for cross-scenario inference, scalability, and security in large-scale AIoT environments, we conducted a series of experiments on both the General-QA and IoT-Native datasets. The evaluation covers accuracy, latency, privacy–communication trade-offs, and security metrics. In particular, we extended the experiments with large-scale node scalability tests, comparisons with existing frameworks, quantitative tables of key metrics, false positive rates in anomaly detection, and an ablation study of critical components.

The experimental results are shown in Figure 4a. In the General-QA scenario, FHCR achieved an 87.3% global accuracy, outperforming existing lightweight methods while remaining comparable to large-scale models.

For the IoT-Native task set (Figure 4b), FHCR further highlighted the importance of domain-specific design, achieving 82% task completion accuracy, significantly above the lightweight baselines and competitive with state-of-the-art domain-specific methods. Notably, the performance of the general-purpose LLaMA2-7B model degraded by 13% in this scenario, underscoring the need for domain adaptation mechanisms. Together, the two experimental phases show that FHCR balances generality and domain adaptability through its dynamic module selection mechanism.

To assess FHCR’s scalability in AIoT scenarios with thousands to millions of nodes, we varied the number of simulated federated nodes from 1000 to 1,000,000. Node data followed a non-IID distribution, and each node’s computational capability was drawn at random from the range 0.5 to 2.0 TOPS.

Table 1 reports the results. FHCR maintains high accuracy (85.7%) even with one million nodes, and both communication overhead and aggregation latency grow sublinearly as the number of nodes increases, demonstrating strong scalability for large-scale AIoT deployments.
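The non-IID node setup can be simulated in several ways. A common choice, assumed here because the text does not name the scheme, is Dirichlet label skew, combined with per-node capabilities drawn uniformly from 0.5 to 2.0 TOPS (the uniform distribution is also an assumption). The concentration parameter `alpha` and the use of four classes, matching the four IoT-Native task types, are hypothetical; smaller `alpha` gives more skewed nodes.

```python
import random
from typing import Dict, List


def dirichlet(alpha: float, k: int, rng: random.Random) -> List[float]:
    """Sample a k-dimensional symmetric Dirichlet(alpha) vector via normalized Gamma draws."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(k)]
    total = sum(draws)
    return [d / total for d in draws]


def make_nodes(n_nodes: int, n_classes: int, alpha: float, seed: int = 0) -> List[Dict]:
    """Create simulated nodes with skewed label mixes and random compute budgets."""
    rng = random.Random(seed)
    nodes = []
    for node_id in range(n_nodes):
        nodes.append({
            "id": node_id,
            "label_mix": dirichlet(alpha, n_classes, rng),  # per-node class proportions
            "tops": rng.uniform(0.5, 2.0),                  # compute capability in TOPS
        })
    return nodes


nodes = make_nodes(n_nodes=1000, n_classes=4, alpha=0.3)
print(nodes[0]["label_mix"], round(nodes[0]["tops"], 2))
```

The same generator scales to the 1,000,000-node setting by changing `n_nodes`, although at that size one would typically store label mixes in an array rather than a list of dictionaries.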

Next, Figure 5 compares the end-to-end latency of FHCR’s resource-aware Top-K routing strategy with that of the baseline methods under varying device computational capabilities. As shown in Table 2, FHCR achieves adaptive computation–communication trade-offs through dynamic sparsity thresholds (). In sparse mode (), the number of activated modules drops by 40% (from 15 to 9 on C4 devices) and gradient traffic falls by 43% (details in Section 5.1.3), yielding a latency of 40 ms: 25.6% lower than FedAvg (54.2 ms) and also below TinyLLaMA-1.1B (48.9 ms) and Mistral-7B (52.5 ms). In balanced () and dense () modes, FHCR progressively activates more modules to meet higher precision requirements while retaining its latency advantage (46.2 ms and 49.1 ms, respectively). FHCR’s latency improvements over EdgeMoE (50.5 ms) and Mistral-7B (52.5 ms), also evident in Table 2, indicate that Algorithm 2 and hardware-constrained module activation strike an effective balance between latency and resource consumption. These results highlight FHCR’s adaptability across heterogeneous edge devices.
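Resource-aware Top-K routing can be sketched as ranking modules by gate score, dropping those below a sparsity threshold, and capping the count by what the device can afford. This is an illustrative sketch, not FHCR’s Algorithm 2: the softmax gating, the threshold values, the `max_modules` budget, and the function name are assumptions, and the gate scores are contrived so that the counts mirror the reported 15 to 9 reduction.

```python
import math
from typing import List, Sequence


def route_top_k(gate_scores: Sequence[float], threshold: float, max_modules: int) -> List[int]:
    """Select module indices for one request (illustrative, not FHCR's Algorithm 2).

    1. Softmax the gate scores into routing weights.
    2. Keep modules whose weight is at least `threshold`; a higher threshold
       gives sparser activation.
    3. Cap the count at `max_modules`, a hypothetical hardware budget derived
       from the device's compute capability, keeping the highest weights.
    """
    peak = max(gate_scores)
    exps = [math.exp(s - peak) for s in gate_scores]  # subtract max for numerical stability
    total = sum(exps)
    weights = [e / total for e in exps]
    ranked = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)
    eligible = [i for i in ranked if weights[i] >= threshold]
    return eligible[:max_modules]


# Contrived gate scores: nine strong modules and seven weak ones (16 in total).
scores = [2.0] * 9 + [0.0] * 7
dense = route_top_k(scores, threshold=0.0, max_modules=15)    # budget-limited
sparse = route_top_k(scores, threshold=0.05, max_modules=15)  # threshold-limited
print(len(dense), len(sparse))  # 15 9
```

Separating the threshold (accuracy-driven) from the cap (hardware-driven) lets the same router serve weak and strong devices by changing only `max_modules`.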

Figure 6 analyzes the privacy–communication trade-off under different privacy budgets ε, where a smaller ε indicates stronger privacy protection. As detailed in Table 3, FHCR jointly optimizes dynamic noise scaling (σ values) and sparsity control (). Under the strict privacy constraint (), FHCR achieves a communication overhead of 84 KB, 14% lower than the traditional method, while maintaining 87.3% accuracy. When the privacy requirement is relaxed (), FHCR reduces the noise to , further decreasing the overhead to 50 KB. By contrast, LLaMA2-7B incurs a prohibitive overhead (1200 KB) because of its large parameter count, highlighting FHCR’s efficiency advantage. These results support the joint optimization framework (Equation (21)), showing improved communication efficiency while respecting the specified privacy-budget constraints.
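The inverse relation between the privacy budget ε and the noise scale σ can be made concrete with the classical Gaussian-mechanism calibration σ = Δ·√(2 ln(1.25/δ))/ε, which yields (ε, δ)-differential privacy for 0 < ε < 1. This textbook bound is shown only to illustrate the trend; it is not necessarily the calibration or accounting FHCR uses, and the sensitivity Δ and δ below are hypothetical.

```python
import math


def gaussian_sigma(epsilon: float, delta: float, sensitivity: float = 1.0) -> float:
    """Noise scale at the boundary of the classical Gaussian-mechanism bound.

    Valid for 0 < epsilon < 1; larger budgets need a tighter analysis
    (e.g. the analytic Gaussian mechanism or a privacy accountant).
    """
    if not 0 < epsilon < 1:
        raise ValueError("the classical bound requires 0 < epsilon < 1")
    if not 0 < delta < 1:
        raise ValueError("delta must lie in (0, 1)")
    return sensitivity * math.sqrt(2 * math.log(1.25 / delta)) / epsilon


for eps in (0.1, 0.5, 0.9):
    print(eps, round(gaussian_sigma(eps, delta=1e-5), 2))
```

Halving ε doubles the required σ, which is why the strict-budget settings above carry more noise than the relaxed ones.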

Figure 7 evaluates FHCR’s robustness against malicious devices (20–80% penetration rate) through joint analysis of the false positive rate (FPR) and true positive rate (TPR). As quantified in Table 4, our dynamic RLMI detection (τ = 1.5× or 2.0× IQR) outperforms all baselines across attack intensities. At 80% malicious devices, FHCR reduces the FPR by 20% on average relative to the baselines, a benefit of our dual detection mechanism (statistical consensus screening and parameter consistency validation).

The dynamic threshold optimization in Equation (12) enables FHCR to keep the FPR below 20% up to 60% attack intensity while preserving a TPR above 94%, outperforming Trimmed Mean by 2.4× in FPR–TPR balance at 60% penetration. As shown in Figure 7, FHCR’s security margin widens with attack severity, reaching an AUC of 0.93 versus 0.81 for Krum in the ROC analysis.
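A minimal sketch of IQR-based screening with a fence of k × IQR (k = 1.5 or 2.0, matching the τ values above), applied here to the L2 norms of client updates, together with FPR/TPR scoring. Screening update norms, and the toy values, are assumptions; the exact statistic of Equation (12) and the full RLMI pipeline are not reproduced.

```python
import statistics
from typing import List, Sequence, Tuple


def iqr_flags(values: Sequence[float], k: float = 1.5) -> List[bool]:
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lo, hi = q1 - k * iqr, q3 + k * iqr
    return [v < lo or v > hi for v in values]


def fpr_tpr(flags: Sequence[bool], is_malicious: Sequence[bool]) -> Tuple[float, float]:
    """FPR = FP / (FP + TN) over benign nodes; TPR = TP / (TP + FN) over malicious nodes."""
    fp = sum(f and not m for f, m in zip(flags, is_malicious))
    tn = sum(not f and not m for f, m in zip(flags, is_malicious))
    tp = sum(f and m for f, m in zip(flags, is_malicious))
    fn = sum(not f and m for f, m in zip(flags, is_malicious))
    fpr = fp / (fp + tn) if fp + tn else 0.0
    tpr = tp / (tp + fn) if tp + fn else 0.0
    return fpr, tpr


benign = [0.90, 0.95, 0.97, 1.00, 1.01, 1.02, 1.03, 1.05, 1.08, 1.10]
attack = [6.0, 7.5]  # scaled-up malicious updates
norms = benign + attack
malicious = [False] * len(benign) + [True] * len(attack)
flags = iqr_flags(norms, k=1.5)
print(fpr_tpr(flags, malicious))
```

Quartile-based fences only work while benign nodes dominate the quartiles; at high penetration rates the quartiles themselves are contaminated, which motivates the dynamic thresholds and second-stage checks described in the text.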

In the gradient inversion experiments, we introduce malicious nodes that run inversion algorithms such as Deep Leakage from Gradients (DLG) into federated training on the IoT-Native and other multimodal datasets. These nodes aim to recover other participants’ private data or to destabilize the global model during federated training. We compare the defense effectiveness of mainstream baseline methods with that of the proposed FHCR framework. By varying the proportion of malicious nodes (typically from 20% to 80%), we simulate gradient inversion attacks of different intensities and evaluate each method in terms of data leakage rate, attack success rate, global model accuracy, and the additional overhead of detection and defense.
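Why shared gradients leak data can be seen in the simplest case. For a single sample passed through a linear layer with bias and a squared-error loss, the weight gradient equals the bias gradient times the input, so the input can be read off exactly as their ratio. This analytic inversion is a simplified illustration, not DLG itself, which instead optimizes dummy inputs and labels to match observed gradients and applies to deeper models; the layer, loss, and values are assumptions.

```python
from typing import List, Tuple


def linear_grads(w: List[float], b: float, x: List[float], y: float) -> Tuple[List[float], float]:
    """Gradients of 0.5 * (w.x + b - y)**2 with respect to w and b for one sample."""
    residual = sum(wi * xi for wi, xi in zip(w, x)) + b - y
    grad_w = [residual * xi for xi in x]  # dL/dw = residual * x
    grad_b = residual                     # dL/db = residual
    return grad_w, grad_b


def invert_input(grad_w: List[float], grad_b: float) -> List[float]:
    """Recover x = (dL/dw) / (dL/db); impossible only when the residual is exactly zero."""
    if grad_b == 0:
        raise ValueError("zero bias gradient: input not recoverable this way")
    return [g / grad_b for g in grad_w]


private_x = [0.25, -1.5, 3.0]  # e.g. a normalized sensor reading
gw, gb = linear_grads(w=[0.1, 0.2, -0.3], b=0.05, x=private_x, y=1.0)
print(invert_input(gw, gb))  # matches private_x up to float rounding
```

An eavesdropper who sees only `gw` and `gb` reconstructs the private reading exactly, which is the threat the defenses compared below must contain.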

The results show that traditional approaches such as FedAvg, lacking any defense mechanism, are most vulnerable to coordinated inversion attacks by malicious nodes, leading to higher data leakage rates and attack success rates. Methods based on gradient filtering and trimming, such as Trimmed Mean and Krum, are somewhat effective in filtering out abnormal updates. However, under scenarios of high malicious-node ratios and more intricate or camouflaged gradient attacks, these methods may mistakenly filter benign updates or fail to detect certain malicious gradients in time. Although differential privacy (DP) can significantly reduce the leakage rate while maintaining a certain level of accuracy, it may still exhibit relatively high attack success probabilities in extreme adversarial settings.
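For reference, the two robust aggregators named above can be sketched in a few lines: coordinate-wise trimmed mean, which discards the β smallest and β largest values per coordinate, and Krum, which selects the single update with the smallest sum of squared distances to its n − f − 2 nearest neighbours. These are simplified versions of the published rules; β, f, and the toy updates are hypothetical.

```python
from typing import List, Sequence

Vector = List[float]


def trimmed_mean(updates: Sequence[Vector], beta: int) -> Vector:
    """Coordinate-wise mean after dropping the beta smallest and beta largest values."""
    n = len(updates)
    if 2 * beta >= n:
        raise ValueError("need more than 2 * beta updates")
    result = []
    for coords in zip(*updates):
        kept = sorted(coords)[beta:n - beta]
        result.append(sum(kept) / len(kept))
    return result


def krum(updates: Sequence[Vector], f: int) -> Vector:
    """Return the update whose n - f - 2 nearest neighbours are closest (Krum)."""
    n = len(updates)
    if n <= 2 * f + 2:
        raise ValueError("Krum requires n > 2f + 2")

    def sq_dist(a: Vector, b: Vector) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))

    scores = []
    for i, u in enumerate(updates):
        dists = sorted(sq_dist(u, v) for j, v in enumerate(updates) if j != i)
        scores.append(sum(dists[: n - f - 2]))
    best = min(range(n), key=scores.__getitem__)
    return list(updates[best])


updates = [[1.0, 1.0], [1.1, 0.9], [0.9, 1.1], [1.05, 1.0], [1.0, 0.9], [10.0, -10.0]]
print(trimmed_mean(updates, beta=1))
print(krum(updates, f=1))
```

Both rules assume a bounded fraction of attackers (β per side, or f < (n − 2)/2 for Krum), which is consistent with their degradation at high malicious-node ratios noted above.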

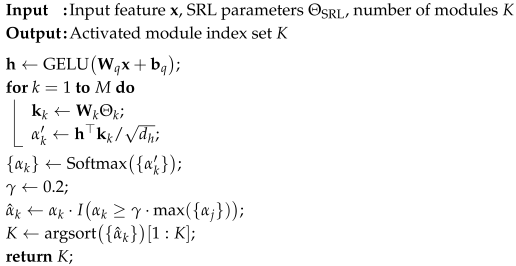

By comparison, FHCR combines statistical detection in the SRL with a two-stage RLMI verification mechanism to identify and restrict inverted or fabricated gradient updates. With dynamic threshold adjustment and multi-layer, multi-module verification, FHCR further reduces data leakage and model performance loss.
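A second-stage consistency check can be illustrated, under assumptions, as comparing each update’s direction with a robust reference. The sketch uses the coordinate-wise median as the reference and a cosine-similarity cutoff; both choices, the cutoff value, and the function names are hypothetical stand-ins for the RLMI parameter consistency validation, whose exact form is not given here.

```python
import math
import statistics
from typing import List, Sequence

Vector = List[float]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity, defined as 0.0 when either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def consistency_filter(updates: Sequence[Vector], min_cos: float = 0.5) -> List[int]:
    """Keep indices of updates whose direction agrees with the coordinate-wise median."""
    reference = [statistics.median(col) for col in zip(*updates)]
    return [i for i, u in enumerate(updates) if cosine(u, reference) >= min_cos]


updates = [[0.5, 0.4, 0.1], [0.45, 0.5, 0.05], [0.6, 0.35, 0.0],
           [-0.5, -0.4, -0.1]]  # last one: sign-flipped (fabricated) update
print(consistency_filter(updates))  # [0, 1, 2]
```

The sign-flipped update has exactly the same norm as the first benign one, so a norm-based screen alone would pass it; the direction check is what rejects it, which illustrates why a two-stage design can catch camouflaged updates.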

Table 5 summarizes the comparison results under a 40% gradient inversion attack penetration rate, highlighting how FHCR clearly outperforms other baselines in mitigating gradient inversion threats while preserving overall model performance.

These results indicate that by employing a dynamic two-stage defense to detect suspicious updates, FHCR achieves a high global accuracy and a low data leakage rate. Even when facing large numbers of adversaries employing various inversion or imitation strategies, FHCR remains robust, and its detection cost and communication overhead stay within acceptable bounds. Consequently, FHCR demonstrates superior security and robustness in heterogeneous, multi-device environments.

To further demonstrate the effectiveness of the SRL and the Top-K routing strategy, we performed ablation experiments, whose results are shown in Table 6.

Removing the SRL module causes a noticeable drop in accuracy (4.2% on General-QA, 4.5% on IoT-Native), whereas omitting the Top-K routing strategy primarily impacts latency (an 18 ms increase). These findings confirm that the SRL module is crucial for accuracy gains, while the Top-K routing strategy is vital for latency optimization.

In summary, this section presents comprehensive evaluations encompassing large-scale node scalability, comparative assessments with existing frameworks, quantitative metric tables, detailed anomaly detection reporting, and ablation studies. The results collectively emphasize FHCR’s advantages in accuracy, efficiency, security, and scalability, thereby reinforcing its potential for practical deployment in complex AIoT environments.